
VxRail cluster network planning


Integrating the VxRail cluster physical and virtual networks with your data center networks involves several options and design decisions. VxRail networking decisions cannot be easily modified after the cluster is deployed and supporting Cloud Foundation, so make them before deploying a VxRail cluster.

Each VxRail node has an on-board, integrated network card. Depending on the VxRail models selected and supported options, you can configure the on-board Ethernet ports as 2x10Gb, 4x10Gb, 2x25Gb, or 4x25Gb. You can support your Cloud Foundation on VxRail workload using only the on-board Ethernet ports, or deploy with both on-board Ethernet ports and Ethernet ports from PCIe adapter cards. If NIC-level redundancy is a business requirement, you can install optional Ethernet adapter cards into each VxRail node for this purpose. Depending on the VxRail nodes selected for the cluster, the adapter cards can support 10 Gb, 25 Gb, and 100 Gb ports.

Selecting a VxRail network profile

VxRail supports both pre-defined network profiles and custom network profiles when deploying the cluster to support Cloud Foundation VI workload domains. The best practice is to select the network profile that aligns with the number of on-board ports and expansion ports selected per node to support Cloud Foundation on VxRail networking. This ensures that VxRail and Cloud Builder configure the supporting virtual networks by following the guidance designed into these network profiles.

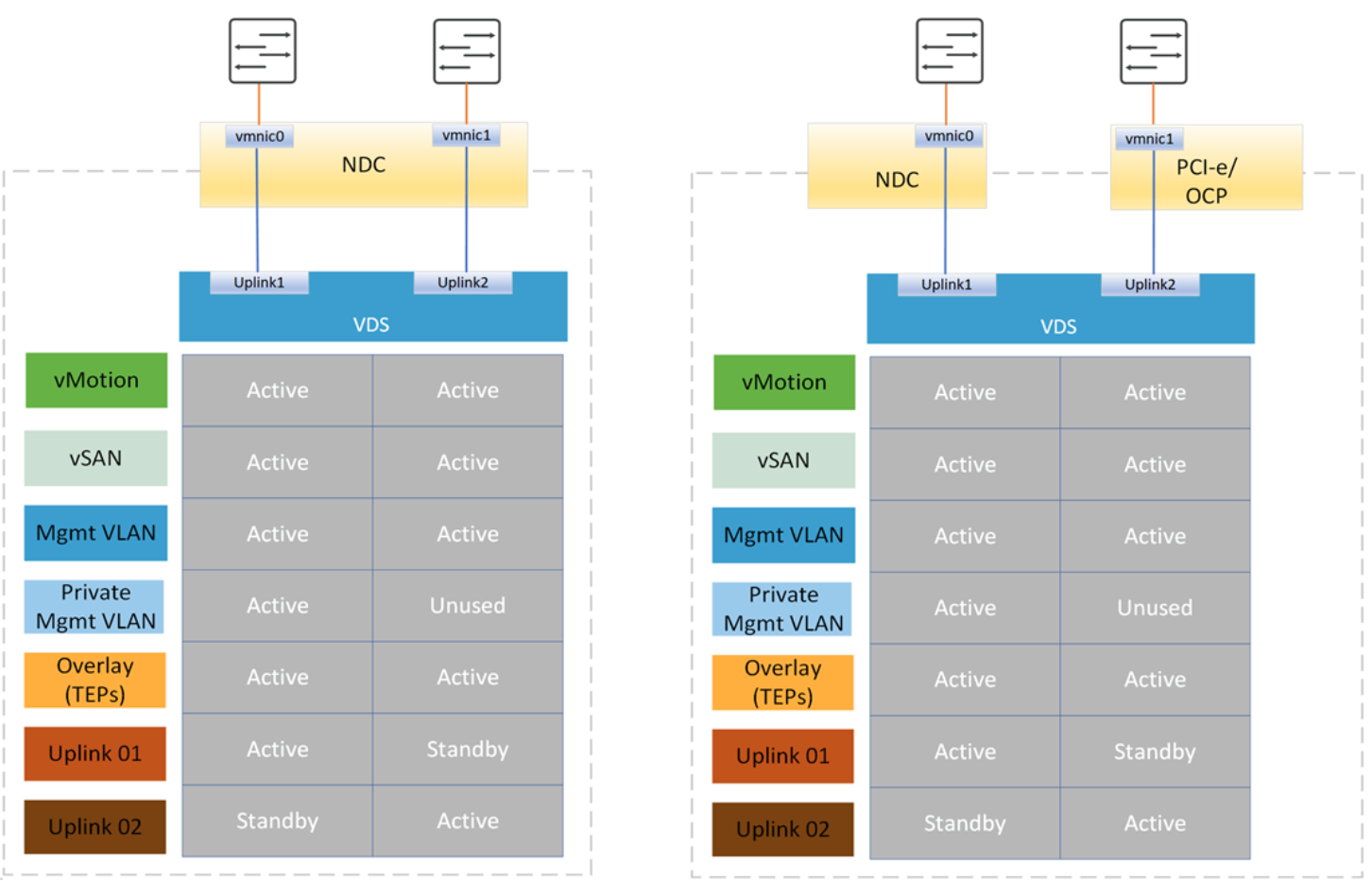

If VCF on VxRail networking will be configured on two node ports, you must decide whether or not to add an expansion card into each VxRail node to eliminate the on-board port as a single point of failure:

- If only the on-board ports are present, the first two ports on each VxRail node will be reserved to support VCF on VxRail networking using a predefined network profile.

- If both on-board and expansion ports are present, you can configure a custom network profile, with the option to select an on-board port and expansion port to reserve for VCF on VxRail networking.

In both two-port instances, the NSX and VxRail networks are configured to share the bandwidth capacity of the two Ethernet ports.

Figure 37. Two ports reserved for VCF on VxRail networking

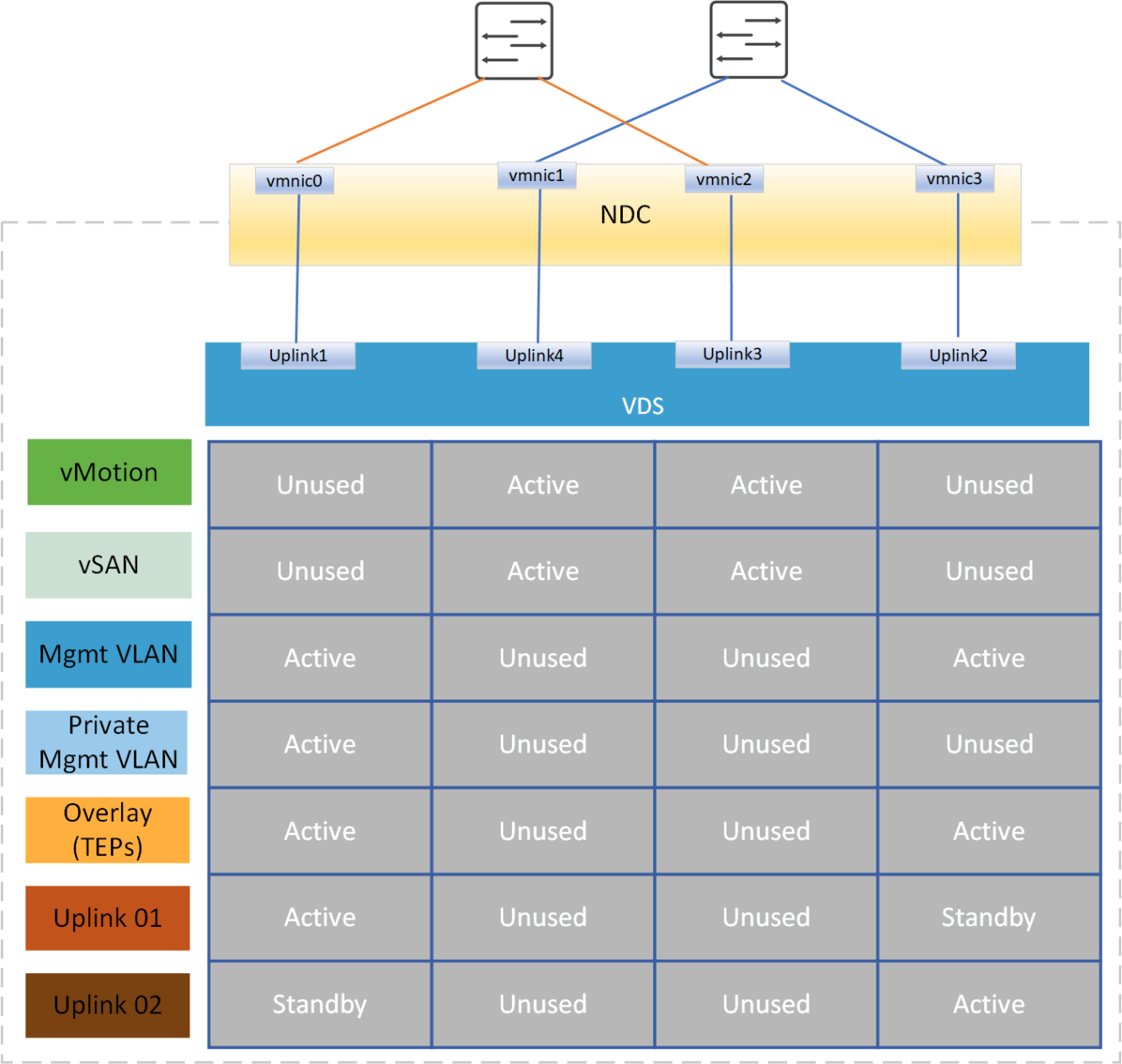

If VCF on VxRail networking is configured with four ports, either all four on-board ports can be used, or the workload can be spread across on-board and expansion ports. The option to use only on-board ports uses a predefined network profile, with automatic assignment of the VMnics to the uplinks. Configuring with both on-board and expansion ports is preferred because it enables resiliency across the node devices and across the pair of switches.

Figure 38. Four ports reserved for VCF on VxRail networking in a pre-defined network profile

A custom network profile offers more flexibility and choice than a pre-defined network profile, and is the preferred choice for Cloud Foundation on VxRail deployments.

| Network Profile | Pre-Defined | Custom |
| --- | --- | --- |
| Assign any NDC/OCP/PCIe NIC to any VxRail network | No | Yes |
| Custom MTU | No | Yes |
| Custom teaming and failover policy | No | Yes |
| Configure more than one Virtual Distributed Switch | No | Yes |
| Assign two, four, six, or eight NICs to support VxRail networking | No | Yes |

- A custom network profile enables resiliency by assigning an Ethernet port from the NDC/OCP card and an Ethernet port from a PCIe card to a Cloud Foundation on VxRail network. A pre-defined network profile does not support this selection process.

- A custom network profile supports configuring an MTU larger than the 1500 default on any VxRail network. A pre-defined network profile configures the default MTU of 1500 on all VxRail networks.

- A custom network profile supports configuring either active/standby ports or active/active ports in the teaming and failover policy for a VxRail network. The teaming and failover policies are preset in a pre-defined network profile. The initial deployment of Cloud Foundation on VxRail sets the teaming and failover policies to active/active as a best practice, but those policies can be updated post-deployment.

- A custom network profile supports configuring one or two virtual distributed switches for VxRail clusters, while a pre-defined network profile deploys just a single virtual distributed switch.

- A custom network profile supports selecting two, four, six, or eight NICs per VxRail node for assignment to VxRail networks. A pre-defined network profile only supports two or four NICs.
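The comparison above can be turned into a simple planning check. The following is a minimal sketch, not a Dell or VMware tool: the `NetworkRequirements` class, its field names, and the `reasons_for_custom_profile` function are hypothetical names introduced for illustration, and the rules encode only what the table and bullets above state.

```python
from dataclasses import dataclass

# Values taken from the comparison above; all names here are illustrative only.
PREDEFINED_NIC_COUNTS = (2, 4)
CUSTOM_NIC_COUNTS = (2, 4, 6, 8)
DEFAULT_MTU = 1500


@dataclass(frozen=True)
class NetworkRequirements:
    """Hypothetical per-node planning inputs for a VxRail cluster."""
    nic_count: int = 2                   # NICs reserved for VxRail networking
    mix_onboard_and_pcie: bool = False   # NDC/OCP port paired with a PCIe port
    mtu: int = DEFAULT_MTU               # largest MTU wanted on any VxRail network
    custom_teaming: bool = False         # teaming/failover other than the preset
    vxrail_vds_count: int = 1            # VDS created for VxRail networks


def reasons_for_custom_profile(req: NetworkRequirements) -> list[str]:
    """Return the requirements that a pre-defined profile cannot satisfy.

    An empty list means a pre-defined profile is sufficient for these inputs.
    """
    if req.nic_count not in CUSTOM_NIC_COUNTS:
        raise ValueError("NIC count must be two, four, six, or eight")
    if req.vxrail_vds_count not in (1, 2):
        raise ValueError("a VxRail cluster is built with one or two VDS")
    if req.mtu < DEFAULT_MTU:
        # Assumption: MTUs below the default are outside the scope of this sketch.
        raise ValueError("MTU below the 1500 default is not covered here")

    reasons = []
    if req.nic_count not in PREDEFINED_NIC_COUNTS:
        reasons.append("six or eight NICs")
    if req.mix_onboard_and_pcie:
        reasons.append("NIC selection across NDC/OCP and PCIe")
    if req.mtu > DEFAULT_MTU:
        reasons.append("MTU larger than 1500")
    if req.custom_teaming:
        reasons.append("custom teaming and failover policy")
    if req.vxrail_vds_count > 1:
        reasons.append("more than one virtual distributed switch")
    return reasons


if __name__ == "__main__":
    plan = NetworkRequirements(nic_count=6, mtu=9000)
    print(reasons_for_custom_profile(plan))
```

An empty result suggests that a pre-defined profile could meet the stated requirements, although the guidance above still prefers a custom profile for the resiliency it enables.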

Cloud Foundation on VxRail supports not only selecting the Ethernet ports on each node for physical network connectivity, but also assigning the Ethernet ports to be configured as uplinks for the supporting virtual distributed switches in the virtual infrastructure. This enables a more efficient bandwidth resource consumption model. It also enables the physical segmentation of VxRail and Cloud Foundation network traffic onto dedicated Ethernet ports, and enables segmentation onto separate virtual distributed switches.

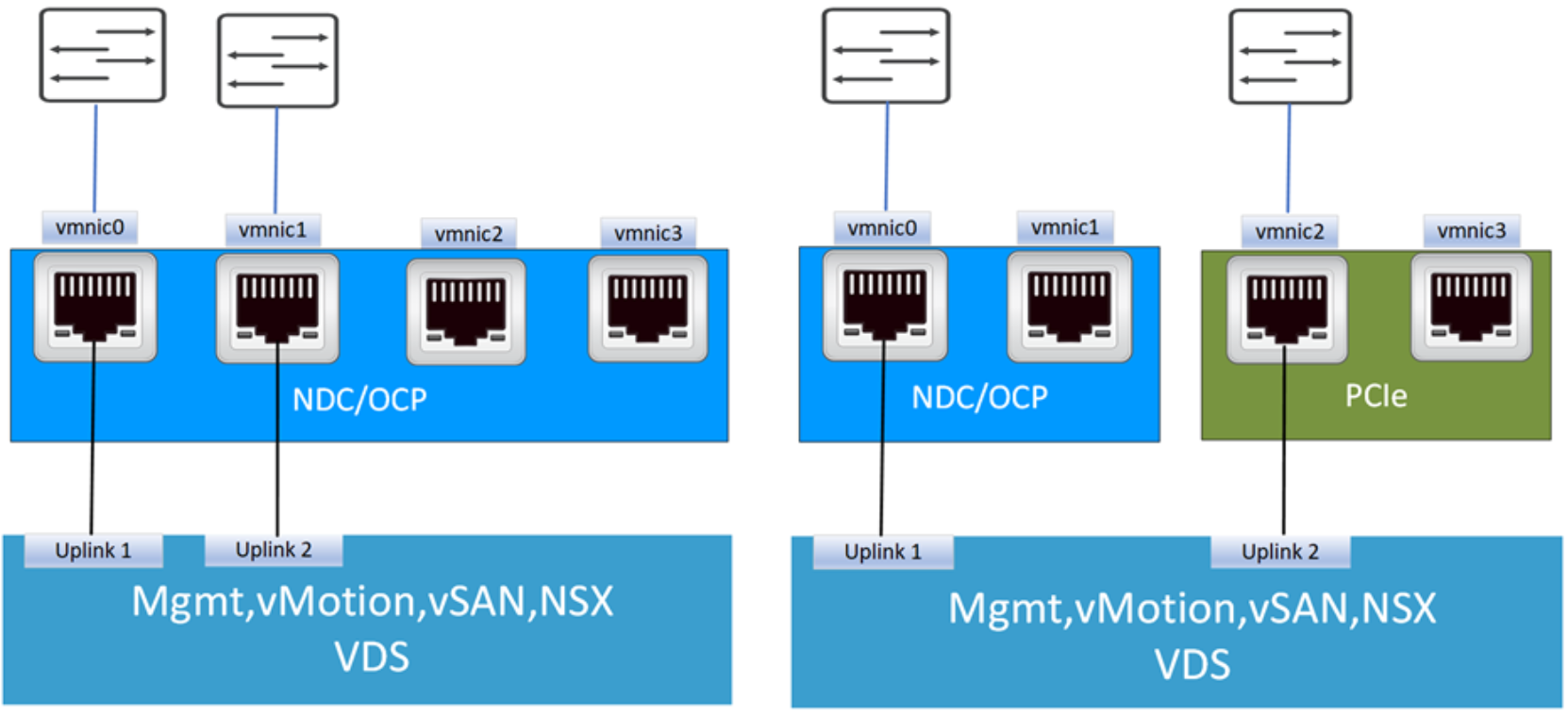

If the VxRail nodes are configured with two Ethernet ports for networking purposes, all the VxRail network traffic and Cloud Foundation/NSX traffic is consolidated onto those two ports. Additional virtual distributed switches are not supported for the two-port connectivity option, so all VxRail and Cloud Foundation/NSX traffic flows through a single virtual distributed switch.

Figure 39. Two connectivity options for VxRail nodes with two NICs

With the option of deploying four NICs to a single VDS, you can use all four ports on the NDC/OCP, or you can add resiliency by using a custom network profile to select NICs from both the NDC/OCP and PCIe devices. With this option, you can reserve two uplinks to support NSX traffic, or the NSX traffic can share uplinks with the other networks.

Figure 40. Two connectivity options for VxRail nodes with four NICs with a single VDS

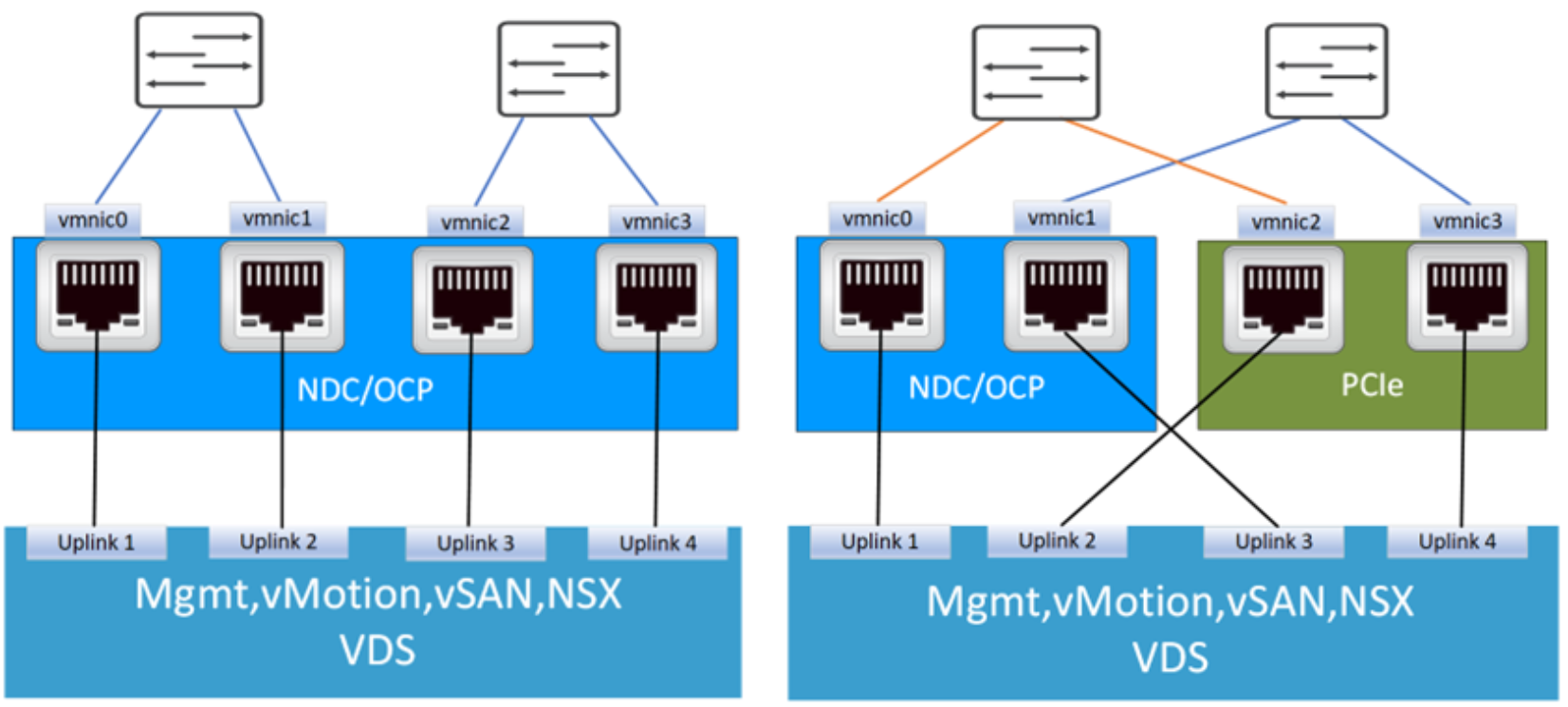

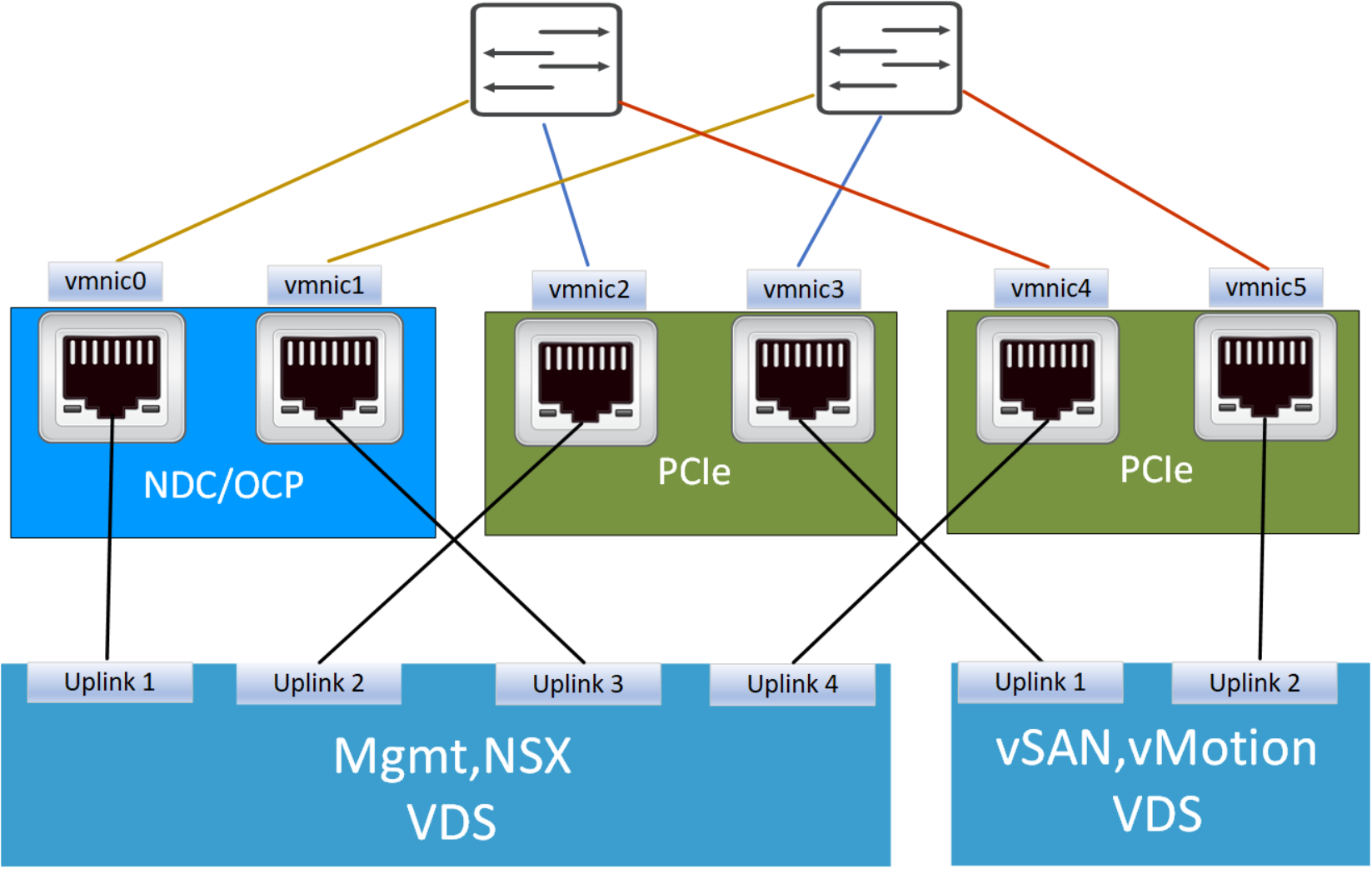

Deploying Cloud Foundation on VxRail with four NICs enables the option to deploy a second virtual distributed switch to isolate network traffic. One option is to separate non-management VxRail networks, such as vSAN and vMotion, away from the VxRail management network with two virtual distributed switches.

Another option is to deploy NSX on a second virtual distributed switch to enable separation from all VxRail network traffic. This specific virtual distributed switch is solely to support NSX, and is not used for VxRail networking. If you select this option, you must reserve two unused NICs as uplinks for this specific virtual distributed switch.

Figure 41. Two connectivity options for VxRail nodes with four NICs and two VDS

For planned workloads with high network demands or strict network separation requirements, you can use six or eight NICs across the NDC/OCP and PCIe adapter cards to support Cloud Foundation on VxRail.

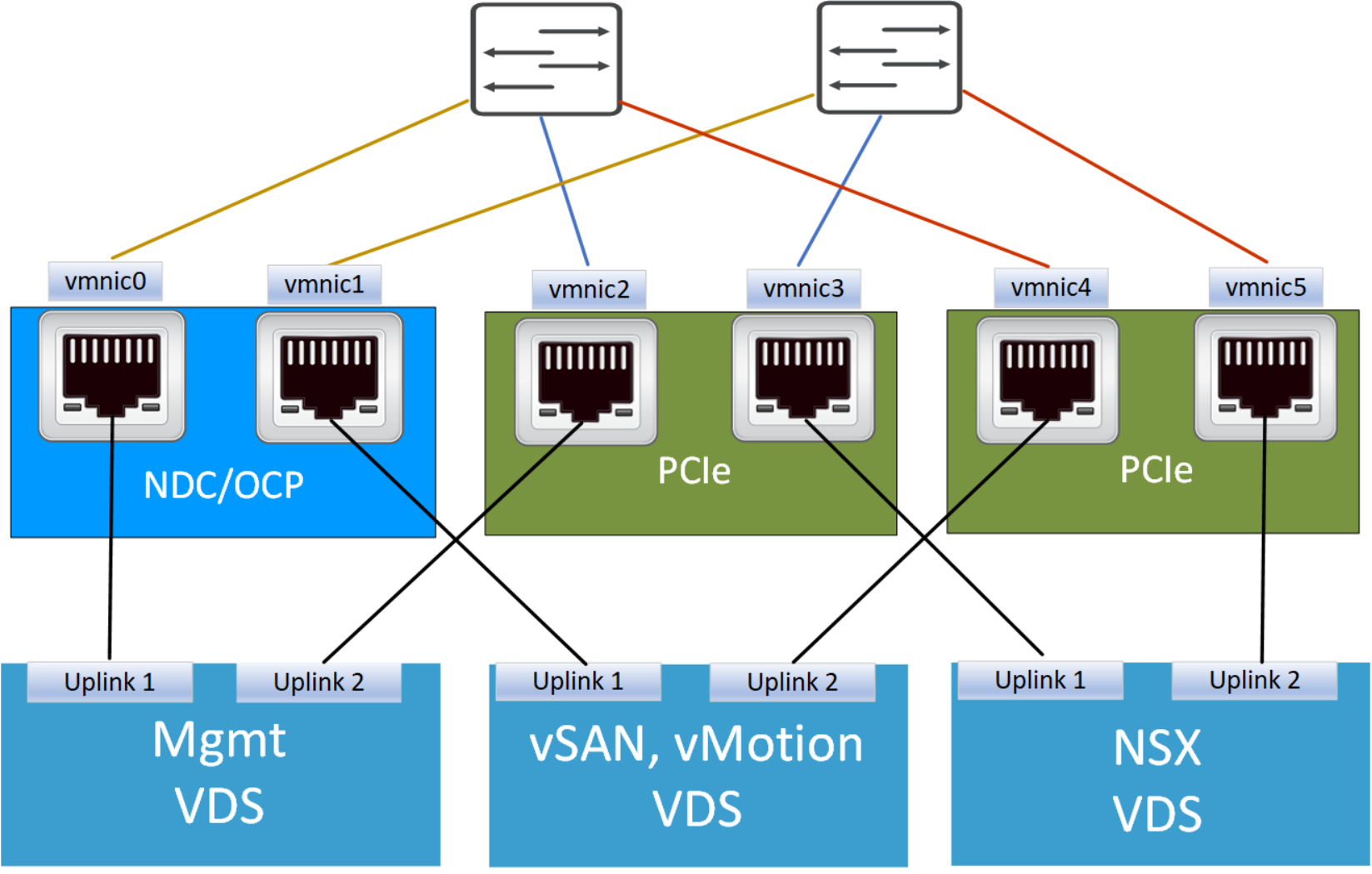

With the six-NIC option, you can deploy up to three virtual distributed switches to support the Cloud Foundation on VxRail environment. If the desired outcome is to separate non-management VxRail networks and VxRail management networks, you can deploy a second virtual distributed switch at the time the VxRail cluster is built. With this option, you can reserve two uplinks to support NSX traffic, or the NSX traffic can share uplinks with the other networks.

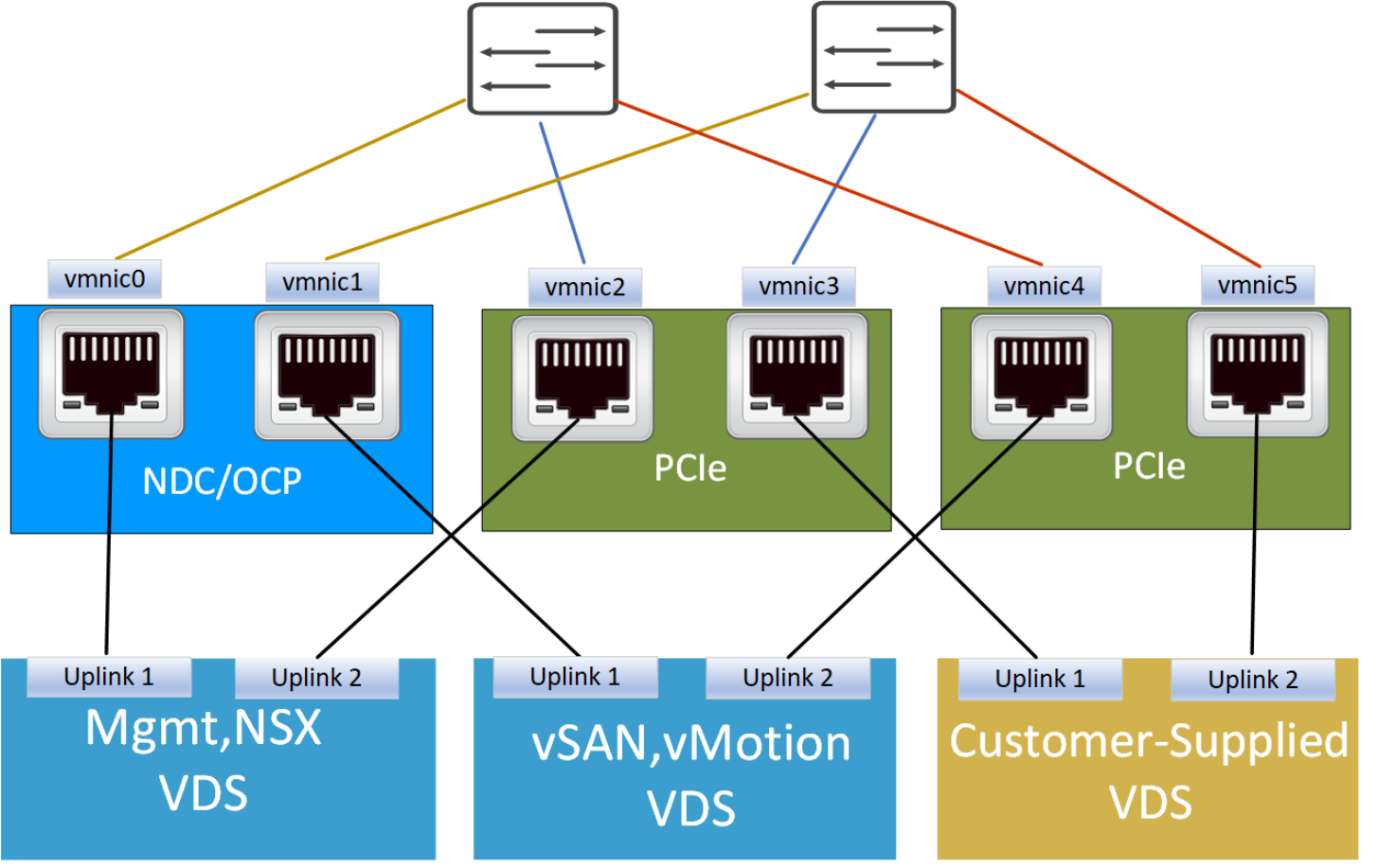

Figure 42. Connectivity option for VxRail nodes with six NICs and two VDS

If the desired outcome is to further separate non-management VxRail networks, VxRail management networks, and NSX network traffic, you can deploy a third virtual distributed switch. This third virtual distributed switch is solely for NSX traffic, so the underlying VxRail cluster is built with four NICs to reserve two unused NICs for NSX.

Figure 43. Connectivity option for VxRail nodes with six NICs and three VDS

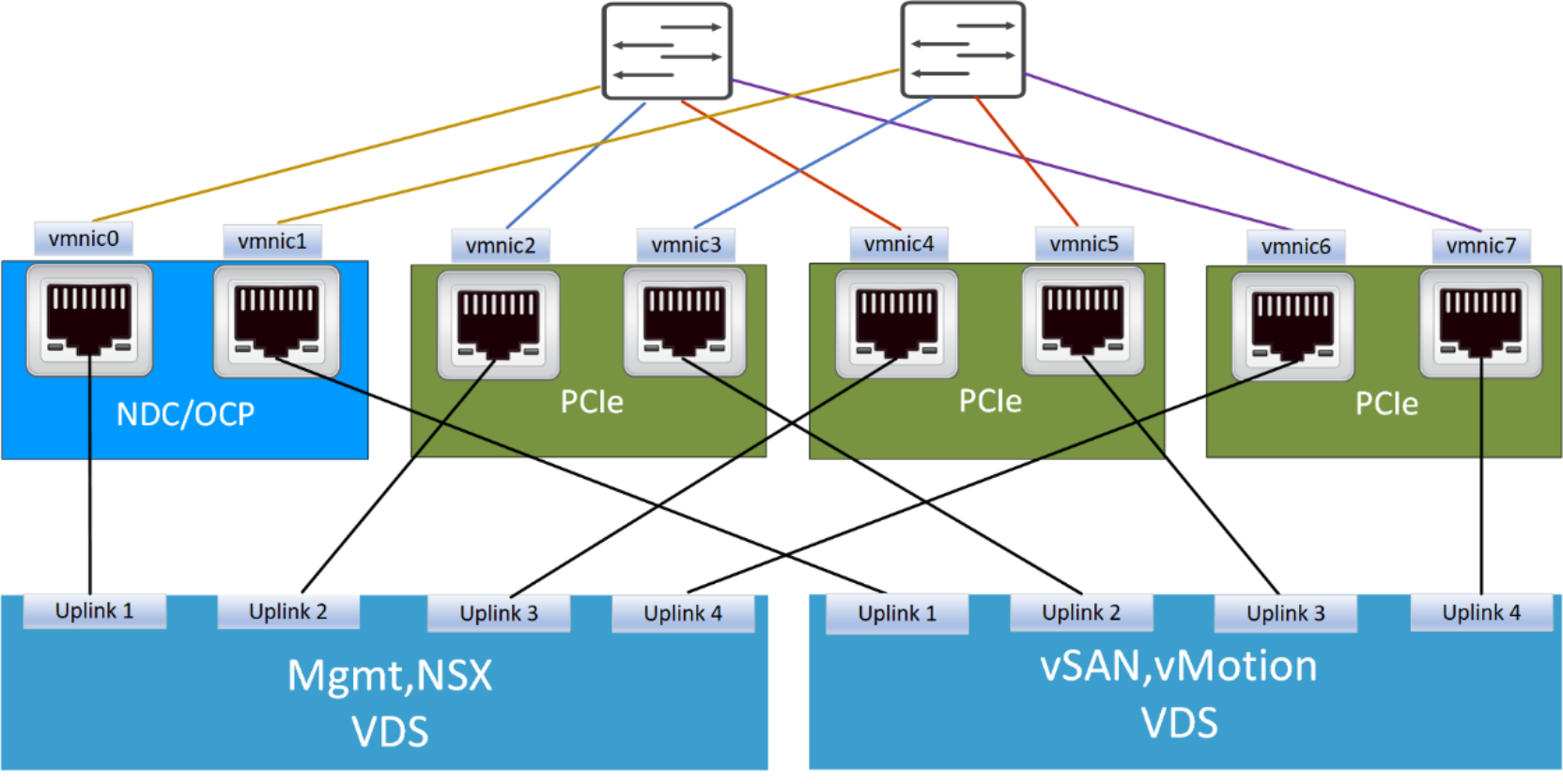

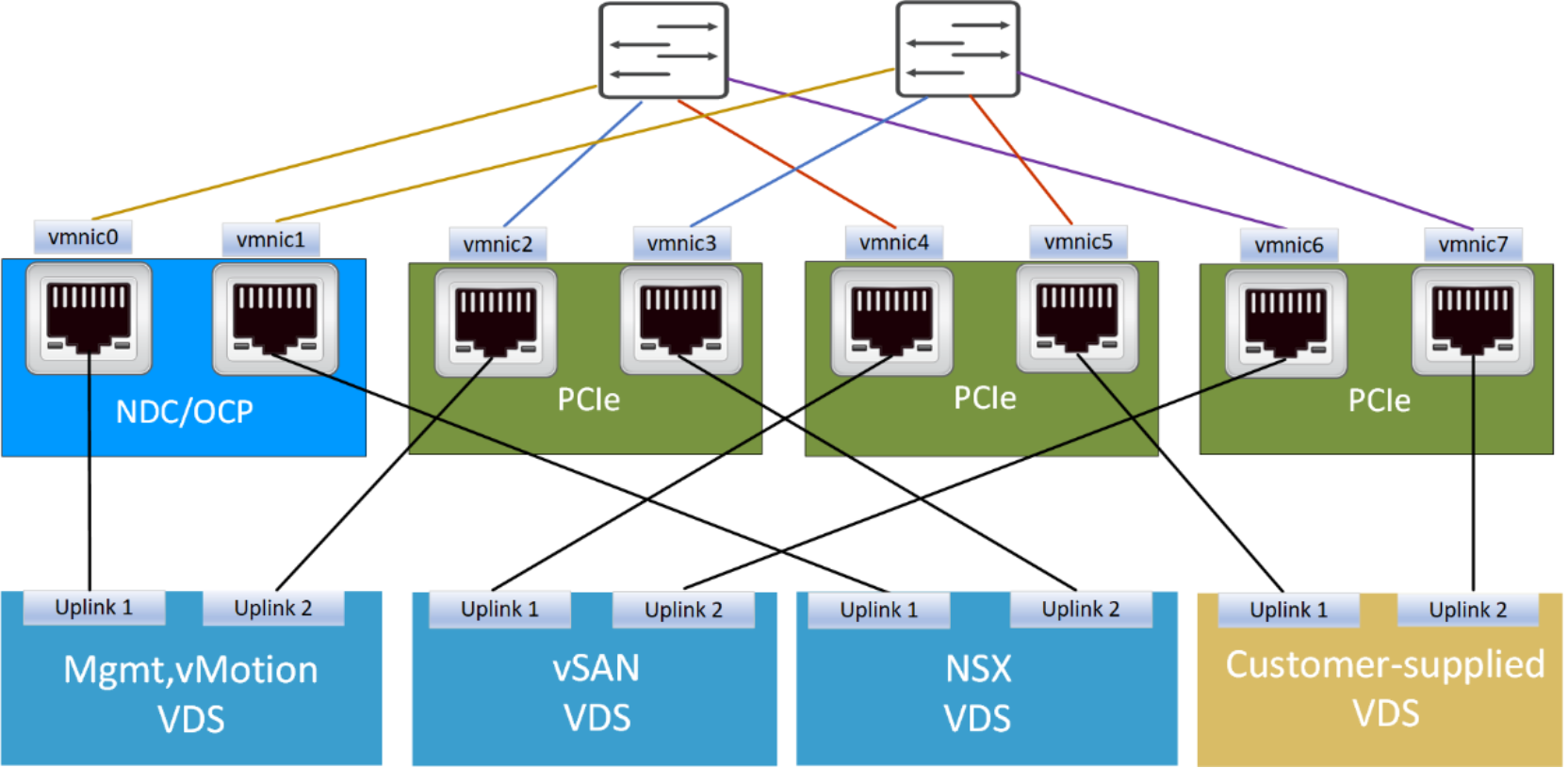

In extreme cases in which you want to have dedicated uplinks assigned to the vMotion and vSAN networks, you can deploy Cloud Foundation on VxRail with eight NICs. The 8-NIC option supports up to three virtual distributed switches to support VxRail and NSX networking. If you prefer two virtual distributed switches, then the NSX networking shares a virtual distributed switch with VxRail management traffic.

Figure 44. Connectivity option for VxRail nodes with eight NICs and two VDS

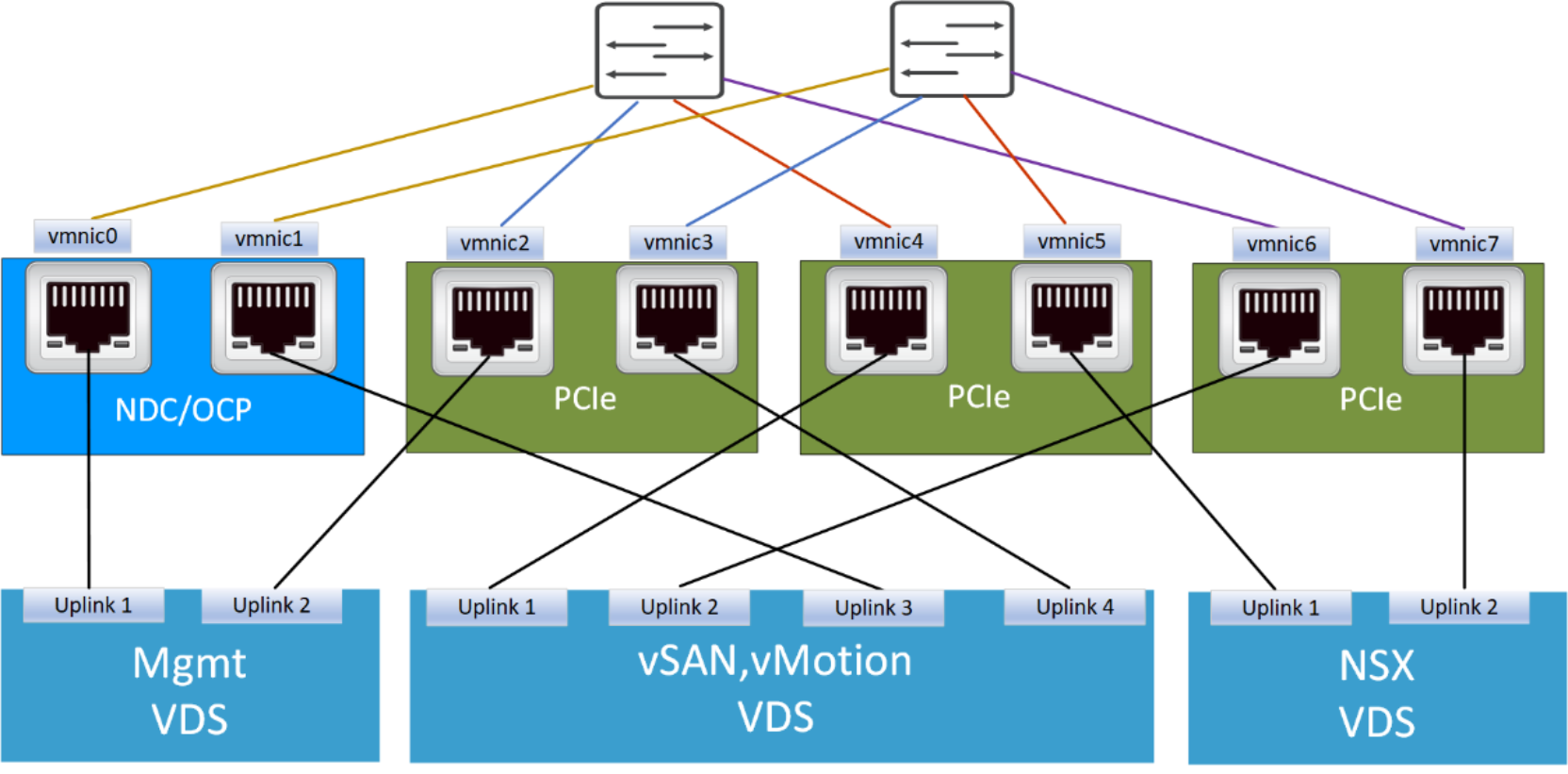

If you want further segmentation of the VxRail management networks, the VxRail non-management networks, and NSX, you can deploy a third virtual distributed switch to support NSX traffic. With this option, two unused NICs are required for the third virtual distributed switch dedicated to NSX network traffic.

Figure 45. Connectivity options for VxRail nodes with eight NICs and three VDS
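The NIC and virtual distributed switch combinations described in this section can be summarized as a small arithmetic check. This is a hedged sketch under stated assumptions: `plan_uplinks` and the constant names are hypothetical, the limits come only from the text above (one VDS with two NICs, up to two with four NICs, up to three with six or eight NICs, and two unused NICs reserved for a VDS dedicated to NSX), and it is not a substitute for the official support matrix.

```python
# Maximum number of virtual distributed switches per NIC count, as described
# in this section. Names are illustrative, not part of any Dell or VMware API.
MAX_VDS_BY_NIC_COUNT = {2: 1, 4: 2, 6: 3, 8: 3}
NSX_DEDICATED_NICS = 2   # unused NICs reserved for a VDS dedicated to NSX
MAX_VXRAIL_VDS = 2       # a VxRail cluster is built with one or two VDS


def plan_uplinks(total_nics: int, vds_count: int,
                 dedicated_nsx_vds: bool = False) -> dict:
    """Split a node's NICs between the VxRail cluster build and NSX."""
    if total_nics not in MAX_VDS_BY_NIC_COUNT:
        raise ValueError("NIC count must be two, four, six, or eight")
    if not 1 <= vds_count <= MAX_VDS_BY_NIC_COUNT[total_nics]:
        raise ValueError(
            f"{total_nics} NICs support at most "
            f"{MAX_VDS_BY_NIC_COUNT[total_nics]} virtual distributed switches"
        )
    if dedicated_nsx_vds and vds_count < 2:
        raise ValueError("a dedicated NSX VDS requires at least two VDS")

    nsx_nics = NSX_DEDICATED_NICS if dedicated_nsx_vds else 0
    vxrail_vds = vds_count - (1 if dedicated_nsx_vds else 0)
    if vxrail_vds > MAX_VXRAIL_VDS:
        raise ValueError("a third VDS is only for dedicated NSX traffic")

    return {
        "vxrail_nics": total_nics - nsx_nics,  # NICs used to build the cluster
        "nsx_dedicated_nics": nsx_nics,        # NICs left unused for NSX
        "vxrail_vds": vxrail_vds,              # VDS carrying VxRail networks
    }


if __name__ == "__main__":
    # Six NICs, three VDS: the cluster is built with four NICs.
    print(plan_uplinks(6, 3, dedicated_nsx_vds=True))
```

For the six-NIC, three-VDS case, the sketch reports four NICs for the VxRail cluster build and two reserved for NSX, matching the description of Figure 43.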

Cloud Foundation on VxRail does not support adding another virtual distributed switch during the automated deployment process for customer networks. If there are other networks you want to integrate into the Cloud Foundation on VxRail instance post-deployment, the best practice is to reserve a minimum of two NICs for this purpose.

Figure 46. Customer-supplied VDS with four NICs reserved for Cloud Foundation on VxRail networking

Figure 47. Customer-supplied VDS with six NICs reserved for Cloud Foundation on VxRail networking

The physical ports on the VxRail nodes are reserved and assigned to Cloud Foundation on VxRail networking during the initial deployment of the VxRail cluster. Dell Technologies recommends planning carefully so that the overall design includes sufficient network capacity to support planned workloads. If possible, Dell Technologies recommends an overcapacity of physical networking resources to support future workload growth.