
Everflow


Everflow is a network telemetry system that provides scalable and flexible access to packet-level information in large data centers. It uses a “match and mirror” approach: commodity switches match packets against flexible patterns over packet headers or payloads and then mirror the matching packets to analysis servers.

Everflow uses the ability of commodity data center network (DCN) switches to match packets against predefined rules and then execute actions such as mirroring and encapsulation, which reduces tracing overhead. The tracing rules designed to handle DCN faults are as follows:

- In a DCN, the flow size distribution is highly skewed, and even small flows are often associated with customer-facing interactive services that have strict performance requirements. Everflow therefore traces every flow in the DCN by installing rules that match on the TCP SYN, FIN, and RST flags, which mark the start and end of each TCP connection.

- To permit flexible tracing, packets can be marked with an additional “debug” bit in the header, and a rule can be installed that traces any packet with the “debug” bit set.

- Protocol traffic is traced in addition to regular data traffic, because it is critical to the health and performance of the DCN.
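The three tracing rules can be modeled as a single predicate. This is a minimal Python sketch, not Everflow's switch implementation: the `Packet` fields, the `should_trace` name, and the choice of BGP (TCP port 179) as the example of protocol traffic are assumptions. Where the debug bit lives in the header is not specified here, so it is modeled as a plain boolean.

```python
from dataclasses import dataclass

# TCP flag bit values as defined in the TCP header (RFC 9293).
TCP_FIN = 0x01
TCP_SYN = 0x02
TCP_RST = 0x04

IPPROTO_TCP = 6
BGP_PORT = 179  # assumption: BGP stands in for "protocol traffic" in this sketch


@dataclass
class Packet:
    ip_protocol: int
    src_port: int = 0
    dst_port: int = 0
    tcp_flags: int = 0
    debug_bit: bool = False  # hypothetical: header location of the debug bit not specified


def should_trace(pkt: Packet) -> bool:
    """Return True if any of the three Everflow-style tracing rules matches."""
    if pkt.ip_protocol == IPPROTO_TCP:
        # Rule 1: SYN, FIN, or RST marks the start or end of every TCP flow.
        if pkt.tcp_flags & (TCP_SYN | TCP_FIN | TCP_RST):
            return True
        # Rule 3: protocol traffic, here BGP over TCP port 179.
        if BGP_PORT in (pkt.src_port, pkt.dst_port):
            return True
    # Rule 2: any packet explicitly marked for debugging.
    return pkt.debug_bit
```

Because only connection setup and teardown packets match rule 1, every flow is traced without mirroring every data packet, which is what keeps the overhead low.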

Everflow components and operation

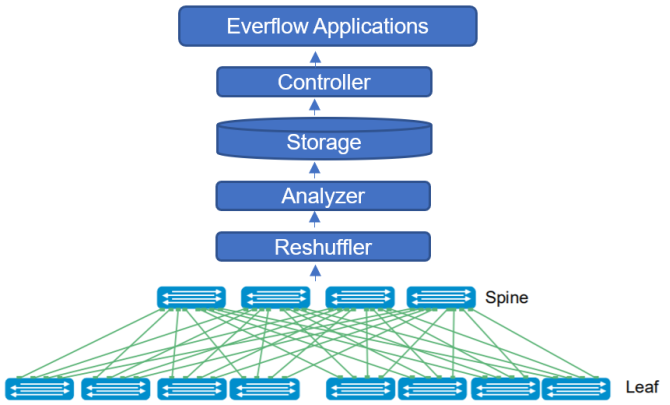

Everflow consists of four key components: the controller, the reshufflers, the analyzers, and storage.

Applications use the packet-level information provided by Everflow to debug network faults. The Everflow controller configures the match rules on the switches. Packets that match these rules are encapsulated in a GRE tunnel and mirrored to the reshufflers, which direct them to the analyzers; the analyzers write their results to storage.
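How the reshufflers choose an analyzer is not detailed in this guide. A common approach, assumed here, is to hash each mirrored packet's 5-tuple so that every copy of the same flow, from every hop, reaches the same analyzer, which can then assemble the complete trace. The function and analyzer names below are hypothetical.

```python
import hashlib
from typing import Sequence, Tuple

# (src_ip, dst_ip, ip_protocol, src_port, dst_port)
FiveTuple = Tuple[str, str, int, int, int]


def pick_analyzer(flow: FiveTuple, analyzers: Sequence[str]) -> str:
    """Map a flow to one analyzer so all mirrored copies of it land together."""
    key = "|".join(str(field) for field in flow).encode()
    # A stable digest (unlike Python's per-process salted hash()) gives the
    # same answer on every reshuffler and across restarts.
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(analyzers)
    return analyzers[index]


if __name__ == "__main__":
    analyzers = ["analyzer-1", "analyzer-2", "analyzer-3"]
    flow = ("172.16.11.10", "172.16.12.10", 6, 40000, 443)
    # Copies mirrored by different switches carry the same 5-tuple,
    # so they are all sent to the same analyzer.
    print(pick_analyzer(flow, analyzers))
```

Plain modulo hashing remaps most flows when an analyzer is added or removed; consistent hashing would limit that churn at the cost of more code.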

The analyzers are a distributed set of servers, each of which processes a portion of the tracing traffic. To complete a packet trace, an analyzer checks for loops and drops. A loop occurs when the same device appears more than once in the trace. A drop is detected when the last hop in the trace differs from the expected last hop, which is computed from the topology.
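The loop and drop checks reduce to simple operations on the ordered list of devices at which a packet was mirrored. This is a minimal Python sketch, assuming the analyzer has already ordered the hops and computed the expected path from the topology; the function names and the device name `Leaf2` are hypothetical.

```python
from typing import List, Optional


def detect_loop(hops: List[str]) -> Optional[str]:
    """Return the first device that appears more than once in the trace, else None."""
    seen = set()
    for device in hops:
        if device in seen:
            return device  # same device observed twice: the packet looped
        seen.add(device)
    return None


def detect_drop(hops: List[str], expected_path: List[str]) -> Optional[str]:
    """Return the last device that saw the packet if the trace ends early, else None."""
    if not hops or not expected_path:
        return None  # nothing to compare
    if hops[-1] != expected_path[-1]:
        return hops[-1]  # the drop happened at or after this device
    return None


if __name__ == "__main__":
    expected = ["Leaf1", "Spine1", "Leaf2"]
    print(detect_loop(["Leaf1", "Spine1", "Leaf1"]))  # Leaf1
    print(detect_drop(["Leaf1", "Spine1"], expected))  # Spine1
    print(detect_drop(expected, expected))  # None
```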

Everflow applications interact with the controller through several APIs to debug various network faults. With these APIs, applications can query packet traces, install load counters, and trace traffic by marking the debug bit.

Figure 19: Everflow architecture

Everflow use case and example

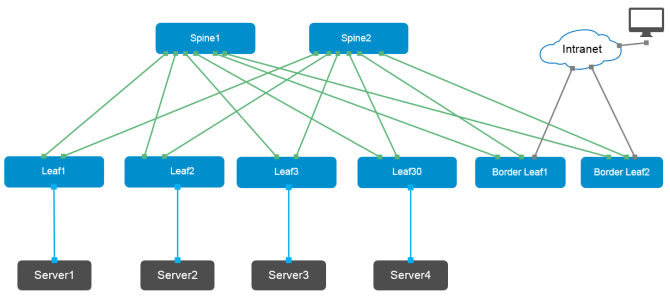

Everflow can be enabled on demand on selected production switches to debug live incidents. The topology used for the configuration example is shown in Figure 20.

In the topology example, traffic flows from Server1 (172.16.11.10) to Server2 (172.16.12.10) through switch Leaf1. The gateway IP address for Server1, configured on Leaf1, is 172.16.11.253. The mirror session on Leaf1 sends mirrored traffic to the collector at destination IP address 12.0.0.2 using GRE protocol type 0x88be (ERSPAN Type II). Interface Ethernet8 on Leaf1 connects to Server1, and Ethernet16 connects to Spine1. A route to the collector Linux VM is added for all mirrored traffic. The collected data is then used for filtering and visualization.
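On the collector, each mirrored packet arrives wrapped in an outer IP/GRE header whose protocol type field is 0x88be. This Python sketch builds and parses only the GRE header defined in RFC 2784, with the optional fields from RFC 2890; it does not decode the ERSPAN header that follows the GRE header in real ERSPAN traffic, and the function names are hypothetical.

```python
import struct
from typing import Optional, Tuple

# GRE flag bits in the first 16-bit word (RFC 2784 / RFC 2890).
GRE_FLAG_CHECKSUM = 0x8000  # C bit: Checksum + Reserved1 present (4 bytes)
GRE_FLAG_KEY = 0x2000       # K bit: Key present (4 bytes)
GRE_FLAG_SEQ = 0x1000       # S bit: Sequence Number present (4 bytes)

ERSPAN_TYPE_II = 0x88BE     # protocol type configured in the mirror session


def build_gre_header(protocol_type: int, seq: Optional[int] = None) -> bytes:
    """Build a GRE header with no checksum or key and an optional sequence number."""
    flags = GRE_FLAG_SEQ if seq is not None else 0
    header = struct.pack("!HH", flags, protocol_type)
    if seq is not None:
        header += struct.pack("!I", seq)
    return header


def parse_gre_header(data: bytes) -> Tuple[int, Optional[int], int]:
    """Return (protocol_type, sequence number or None, GRE header length in bytes)."""
    flags, protocol_type = struct.unpack_from("!HH", data, 0)
    offset = 4
    if flags & GRE_FLAG_CHECKSUM:
        offset += 4  # skip Checksum + Reserved1
    if flags & GRE_FLAG_KEY:
        offset += 4  # skip Key
    seq = None
    if flags & GRE_FLAG_SEQ:
        (seq,) = struct.unpack_from("!I", data, offset)
        offset += 4
    return protocol_type, seq, offset
```

The returned header length tells the collector where the GRE payload begins, so it can skip past the tunnel header before decoding the mirrored frame.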

Figure 20: Topology for Everflow configuration example

Everflow configuration can be carried out using the config_db.json file on the Enterprise SONiC Distribution by Dell Technologies NOS. The high-level steps for configuring Leaf1 are as follows:

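Configure the mirror session. The positional arguments are the session name, source IP address, destination IP address, DSCP, TTL, GRE type, and queue:

```shell
config mirror_session add Mirror_Ses 172.16.11.253 12.0.0.2 50 100 0x88be 0
```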

Define the ACL table MIRROR_ACL, which lists the ports it is bound to, in a temporary JSON file:

```shell
printf '{"ACL_TABLE": {"MIRROR_ACL": {"stage": "INGRESS", "type": "mirror", "policy_desc": "Mirror_ACLV4_CREATION", "ports": ["Ethernet8", "Ethernet16"]}}}\n' > /tmp/apply_json2.json
```

Load the ACL table into the config DB:

```shell
config load -y /tmp/apply_json2.json
```

Define the ACL rule Mirror_Rule, which matches the source and destination of the L3 packets to capture and sends matching packets to the Mirror_Ses session, in a temporary JSON file:

```shell
printf '{"ACL_RULE": {"MIRROR_ACL|Mirror_Rule": {"PRIORITY": "999", "IP_PROTOCOL": "61", "MIRROR_ACTION": "Mirror_Ses", "SRC_IP": "172.16.11.10/24", "DST_IP": "172.16.12.10/24"}}}\n' > /tmp/apply_json2.json
```

Load the ACL rule into the config DB:

```shell
config load -y /tmp/apply_json2.json
```

Save the running configuration to the config DB file (/etc/sonic/config_db.json):

```shell
config save -y
```

The relevant entries in the saved config_db.json are as follows:

```json
{
  "ACL_RULE": {
    "MIRROR_ACL|Mirror_Rule": {
      "DST_IP": "172.16.12.10/24",
      "IP_PROTOCOL": "61",
      "MIRROR_ACTION": "Mirror_Ses",
      "PRIORITY": "999",
      "SRC_IP": "172.16.11.10/24"
    }
  },
  "ACL_TABLE": {
    "MIRROR_ACL": {
      "policy_desc": "Mirror_ACLV4_CREATION",
      "ports": [
        "Ethernet8",
        "Ethernet16"
      ],
      "stage": "INGRESS",
      "type": "mirror"
    }
  },
  "MIRROR_SESSION": {
    "Mirror_Ses": {
      "dscp": "50",
      "dst_ip": "12.0.0.2",
      "gre_type": "0x88be",
      "queue": "0",
      "src_ip": "172.16.11.253",
      "ttl": "100"
    }
  }
}
```
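The `printf` commands in the configuration steps are easy to break with a misplaced quote. As an alternative, the same JSON payloads can be generated with Python's `json` module and then passed to `config load -y`. This is a minimal sketch, assuming Python 3 is available where the files are prepared; `write_json` and `rule_matches` are hypothetical helpers, and `rule_matches` models only the intended match semantics (prefix-length masking of `SRC_IP` and `DST_IP`, exact `IP_PROTOCOL`), not how SONiC programs the ASIC.

```python
import ipaddress
import json

# Values copied from the Leaf1 example.
ACL_TABLE = {
    "ACL_TABLE": {
        "MIRROR_ACL": {
            "stage": "INGRESS",
            "type": "mirror",
            "policy_desc": "Mirror_ACLV4_CREATION",
            "ports": ["Ethernet8", "Ethernet16"],
        }
    }
}
ACL_RULE = {
    "ACL_RULE": {
        "MIRROR_ACL|Mirror_Rule": {
            "PRIORITY": "999",
            "IP_PROTOCOL": "61",
            "MIRROR_ACTION": "Mirror_Ses",
            "SRC_IP": "172.16.11.10/24",
            "DST_IP": "172.16.12.10/24",
        }
    }
}


def write_json(path: str, payload: dict) -> None:
    """Write a payload for `config load -y <path>`; json.dump handles all quoting."""
    with open(path, "w") as f:
        json.dump(payload, f, indent=2)
        f.write("\n")


def rule_matches(rule: dict, src_ip: str, dst_ip: str, ip_protocol: int) -> bool:
    """Check a packet against the rule, applying each prefix length as a mask."""
    # strict=False accepts host bits (172.16.11.10/24 -> 172.16.11.0/24).
    src_net = ipaddress.ip_network(rule["SRC_IP"], strict=False)
    dst_net = ipaddress.ip_network(rule["DST_IP"], strict=False)
    return (
        ipaddress.ip_address(src_ip) in src_net
        and ipaddress.ip_address(dst_ip) in dst_net
        and ip_protocol == int(rule["IP_PROTOCOL"])
    )


if __name__ == "__main__":
    write_json("/tmp/acl_table.json", ACL_TABLE)
    write_json("/tmp/acl_rule.json", ACL_RULE)
    rule = ACL_RULE["ACL_RULE"]["MIRROR_ACL|Mirror_Rule"]
    print(rule_matches(rule, "172.16.11.99", "172.16.12.10", 61))  # True
```

Under these semantics, a /24 prefix mirrors matching traffic from any host in 172.16.11.0/24 to any host in 172.16.12.0/24, not only between Server1 and Server2; a /32 prefix would restrict the rule to the two servers.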