Cnvrg.io on Data Lakehouse using Symworld Cloud Native Platform


The cnvrg.io MLOps platform is delivered on the Validated Design for Analytics—Data Lakehouse platform that incorporates Dell Technologies infrastructure, the Symworld Cloud Native Platform, NVIDIA GPUs, and NVIDIA GPU Operator. This validated design addresses the needs of organizations deploying advanced analytics. It incorporates a lakehouse architecture together with a container platform using decoupled compute and storage.

The Symworld Cloud Native Platform provides the ability to deploy applications to a Kubernetes cluster using custom application bundles containing all the specifications and resources necessary for application deployment. When the Symworld Cloud Native Platform deploys the application, it takes responsibility for creating all relevant Kubernetes resources, such as StatefulSets, PersistentVolumeClaims, and so on. Symworld applications are automatically handled by the Symworld platform framework. This approach enables DevOps engineers to rapidly deploy applications to their Symworld Cloud Native Platforms, without requiring a deep understanding of Kubernetes concepts.

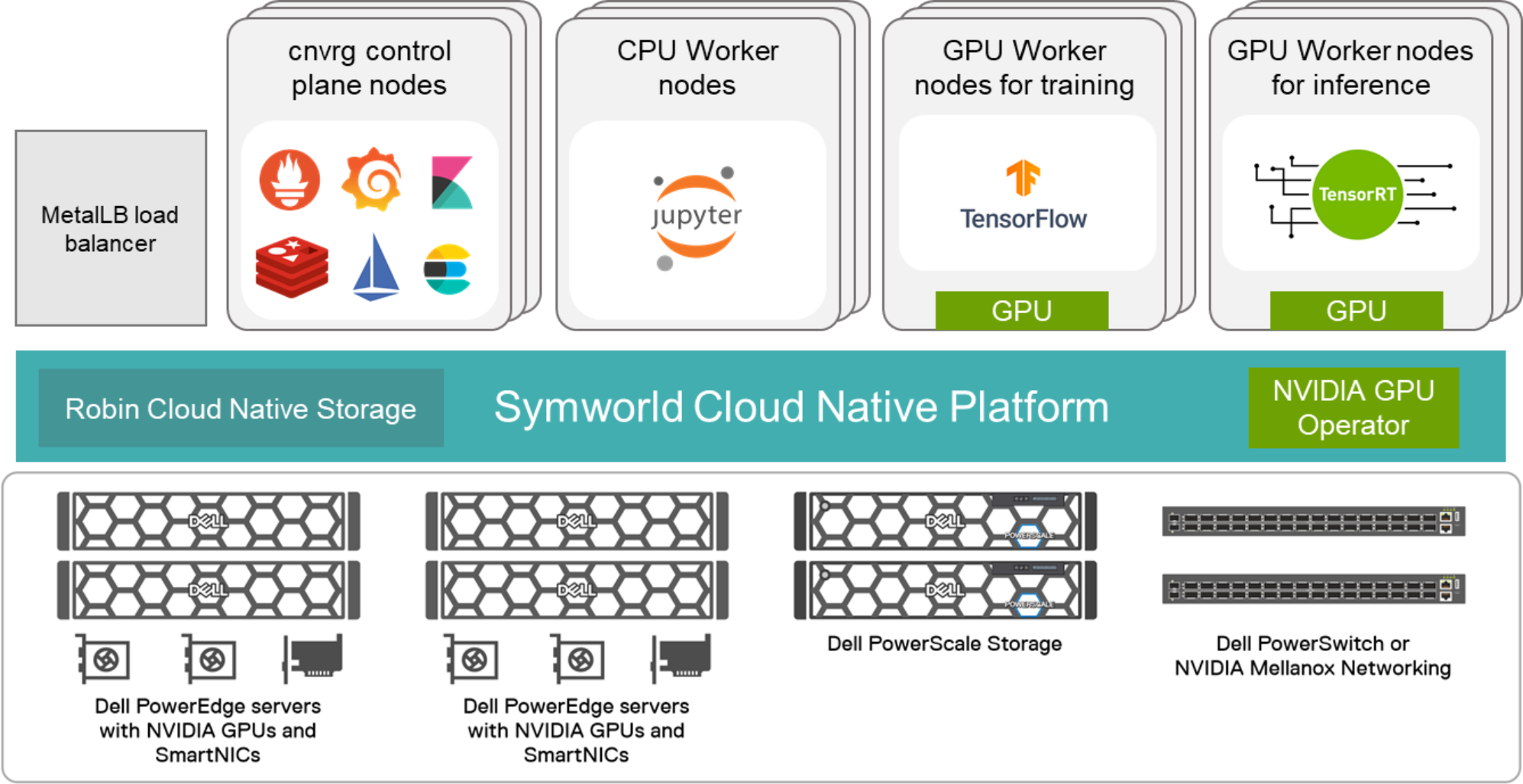

See the Design Guide for Dell Validated Design for Analytics—Data Lakehouse for information about the Symworld Cloud Native Platform reference architecture on Dell Technologies infrastructure, as shown in the following figure:

Figure 6. cnvrg.io solution architecture on the Symworld Cloud Native Platform

PowerEdge servers provide compute resources for the AI workloads and pipelines deployed on cnvrg.io. The PowerEdge servers can optionally be configured with NVIDIA GPUs to accelerate neural network training and inference. PowerSwitch switches provide both network connectivity and out-of-band (OOB) connectivity.

These PowerEdge servers are configured with the Symworld Cloud Native Platform, which creates and manages the Kubernetes clusters. The Symworld Cloud Native Platform discovers disks attached to the nodes (servers) and uses them to provide storage to applications.

NVIDIA GPU

Kubernetes worker nodes can be equipped with NVIDIA GPUs, and these GPUs can be enabled with Multi-Instance GPU (MIG) capability. The NVIDIA GPU Operator automates the deployment of the NVIDIA software components that the GPUs require. Using cnvrg.io, these nodes can run various workloads such as TensorFlow for training, Jupyter notebooks for interactive model development, and TensorRT for inference. Selecting the Compute Template with the correct MIG partition ensures that each workload is allocated the appropriate GPU resource.
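As a rough illustration of what selecting a MIG partition amounts to at the Kubernetes level, the following sketch builds a pod manifest that requests one MIG slice. It assumes the GPU Operator uses the mixed MIG strategy, which advertises each profile as an extended resource such as `nvidia.com/mig-1g.5gb`. The function name, pod name, image, and profile are placeholders, and this guide does not describe how cnvrg.io Compute Templates map to such requests internally.

```python
import json


def mig_pod_manifest(name: str, image: str, mig_profile: str, count: int = 1) -> dict:
    """Build a pod manifest that requests `count` MIG slices of one profile.

    Assumption: the mixed MIG strategy is in use, so each profile is
    advertised as the extended resource nvidia.com/mig-<profile>.
    """
    if count < 1:
        raise ValueError("count must be at least 1")
    resource = f"nvidia.com/mig-{mig_profile}"
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "workload",
                    "image": image,
                    # Extended resources are requested through limits.
                    "resources": {"limits": {resource: count}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Placeholder values; use a profile that is enabled on your GPUs.
    manifest = mig_pod_manifest(
        "tf-train", "tensorflow/tensorflow:latest-gpu", "1g.5gb"
    )
    print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and applied with `kubectl apply -f`, which is a convenient way to confirm that a given MIG profile is schedulable on the cluster before relying on it in a Compute Template.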

Network configuration

The Symworld Cloud Native Platform extends Kubernetes networking using both Calico and SR-IOV/Open vSwitch-based Container Network Interface (CNI) drivers. This dual support offers the flexibility to use either overlay networks, which create flexible L3 subnets that span multiple data centers or cloud environments, or bridged networking, which gives high-performance applications wire-speed network access. In either mode, the Symworld Cloud Native Platform enhances the CNI driver so that a pod retains its IP address when it is restarted or relocated. This behavior provides additional flexibility for application life cycle management operations such as scaling and migration, and helps ensure high availability. For more information about managing networking, see the Symworld Platform user guide.

We have validated this solution with the MetalLB load balancer to provide external access to cnvrg.io. MetalLB comes packaged and can be deployed during the installation of the Symworld Cloud Native Platform. However, customers can deploy other load balancers.
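For readers unfamiliar with MetalLB, the sketch below generates the two resources that a layer 2 setup typically needs: an `IPAddressPool` and an `L2Advertisement`. It assumes a MetalLB release that is configured through custom resources (`metallb.io/v1beta1`). The pool name, namespace, and address range are hypothetical, and this guide does not state which MetalLB mode or version the Symworld installer deploys.

```python
import ipaddress
import json


def metallb_l2_config(pool_name: str, first_ip: str, last_ip: str,
                      namespace: str = "metallb-system") -> list:
    """Return IPAddressPool and L2Advertisement manifests for one range."""
    start = ipaddress.ip_address(first_ip)
    end = ipaddress.ip_address(last_ip)
    # Check the IP versions first so the ordering comparison is always valid.
    if start.version != end.version or start > end:
        raise ValueError("first_ip and last_ip must form a valid range")

    pool = {
        "apiVersion": "metallb.io/v1beta1",
        "kind": "IPAddressPool",
        "metadata": {"name": pool_name, "namespace": namespace},
        "spec": {"addresses": [f"{start}-{end}"]},
    }
    advertisement = {
        "apiVersion": "metallb.io/v1beta1",
        "kind": "L2Advertisement",
        "metadata": {"name": f"{pool_name}-l2", "namespace": namespace},
        # Announce only the addresses that belong to this pool.
        "spec": {"ipAddressPools": [pool_name]},
    }
    return [pool, advertisement]


if __name__ == "__main__":
    # Hypothetical range; pick unused addresses on the cluster's external network.
    for doc in metallb_l2_config("cnvrg-pool", "192.168.10.240", "192.168.10.250"):
        print(json.dumps(doc, indent=2))
```

A Kubernetes `Service` of type `LoadBalancer` would then receive an external address from this pool.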

Storage architecture

Symworld Cloud Native Storage discovers disks attached to the PowerEdge servers and creates a Kubernetes Storage Class, which provides storage to applications that require Persistent Volumes. This approach applies to local disks, cloud volumes, and SAN storage that are available to nodes that have the storage role assigned to them. During discovery, the Symworld Cloud Native Platform collects all the required metadata about the disks and identifies them as HDD, SSD, or NVMe drives. All eligible disks (that is, disks that have a WWN and no partitions) are marked as Symworld Cloud Native Storage. When cnvrg.io is deployed, Symworld Cloud Native Storage is used for pod storage and Docker images. cnvrg.io application data is stored as persistent volumes in Symworld Cloud Native Storage. Datasets imported to cnvrg.io are also hosted on Symworld Cloud Native Storage.
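The eligibility rule described above (skip disks that have partitions or lack a WWN, then classify the rest by media type) can be sketched as follows. This is an illustrative model only, not the Symworld implementation; the `Disk` fields and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Disk:
    device: str          # for example "/dev/sdb"
    media: str           # "HDD", "SSD", or "NVMe"
    wwn: Optional[str]   # World Wide Name; None if the disk reports none
    partitions: int      # number of existing partitions


def eligible_disks(disks: List[Disk]) -> List[Disk]:
    """Keep only disks that have a WWN and no partitions."""
    return [d for d in disks if d.wwn and d.partitions == 0]


def group_by_media(disks: List[Disk]) -> Dict[str, List[str]]:
    """Group device names by media type."""
    groups: Dict[str, List[str]] = {"HDD": [], "SSD": [], "NVMe": []}
    for d in disks:
        groups.setdefault(d.media, []).append(d.device)
    return groups


if __name__ == "__main__":
    # Made-up inventory for demonstration.
    inventory = [
        Disk("/dev/sda", "SSD", "0x5000c500a1b2c3d4", 2),   # OS disk, partitioned
        Disk("/dev/sdb", "HDD", "0x5000c500a1b2c3d5", 0),
        Disk("/dev/sdc", "SSD", None, 0),                    # no WWN
        Disk("/dev/nvme0n1", "NVMe", "eui.0025385b71b00001", 0),
    ]
    print(group_by_media(eligible_disks(inventory)))
```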

We recommend PowerScale storage for data lake storage, that is, for storing the data required for neural network training. PowerScale storage can also serve as an NFS cache for datasets. A local NFS cache can save time when working with large datasets pulled from external object storage: the data is saved to an NFS server that is accessible to the Kubernetes cluster.
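One generic way to make an NFS export reachable from the Kubernetes cluster is a `PersistentVolume` backed by NFS. The sketch below builds such a manifest. The server address, export path, volume name, and size are hypothetical, and the way cnvrg.io itself is pointed at its NFS cache is not covered in this guide.

```python
import json


def nfs_cache_pv(name: str, server: str, export_path: str, size_gi: int) -> dict:
    """Build a PersistentVolume manifest backed by an NFS export."""
    if not export_path.startswith("/"):
        raise ValueError("NFS export path must be absolute")
    if size_gi <= 0:
        raise ValueError("size_gi must be positive")
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": name},
        "spec": {
            "capacity": {"storage": f"{size_gi}Gi"},
            # NFS allows many pods on different nodes to mount the same data.
            "accessModes": ["ReadWriteMany"],
            # Keep cached datasets when a claim on this volume is deleted.
            "persistentVolumeReclaimPolicy": "Retain",
            "nfs": {"server": server, "path": export_path},
        },
    }


if __name__ == "__main__":
    # Placeholder endpoint; substitute the NFS export of your storage system.
    pv = nfs_cache_pv("dataset-cache", "nfs.example.internal", "/ifs/cnvrg-cache", 500)
    print(json.dumps(pv, indent=2))
```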