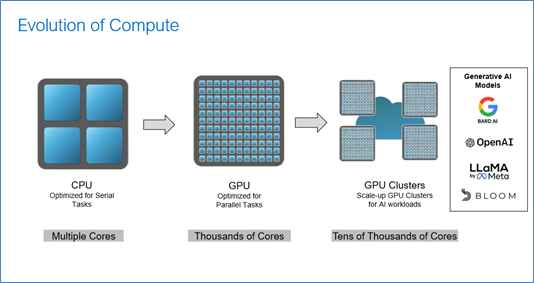

The saying “necessity breeds innovation” understates what has taken place in the evolution of the compute cluster. The creation of the computer central processing unit (CPU) set off an evolutionary path toward newer, more efficient, and potentially groundbreaking processing architectures.

The CPU is the brain of a computer or server, its core computational component. It can perform billions of operations per second, cutting the time needed for data analysis from days to hours or minutes. It also makes it possible to build complex analytical models for weather prediction, financial market forecasting, and more.

However, while efficient and powerful, the CPU is limited in how many data points or calculations it can process at once. For example, rendering computer graphics and animation at life-like resolution was among the first compute-intensive workloads that the standard CPU was not designed to handle.

To address this challenge, hardware manufacturers looked for ways to offload work from the CPU and increase performance by architecting a new type of processing unit: the graphics processing unit (GPU).

One of the main challenges of the standard CPU is its inherently serial processing model. A standard CPU is built around a small number of powerful cores that work through instructions largely in sequence, while a GPU spreads the same work across thousands of simpler cores that run in parallel.

This parallel architecture allows GPUs to be clustered and scaled up to tens of thousands of cores while continuing to process work in parallel.
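The contrast between serial and parallel processing can be illustrated with a minimal Python sketch. It is a structural illustration only, not GPU code: the function names (`scale_pixel`, `process_serial`, `process_parallel`) and the pixel-brightening task are hypothetical, and Python threads do not provide true hardware parallelism for compute-bound work. The point is the shape of the problem: each element is processed independently, so the data can be split into chunks and handed to many workers at once.

```python
from concurrent.futures import ThreadPoolExecutor


def scale_pixel(value: int) -> int:
    """Brighten one pixel value, clamped to 255. Each call is independent."""
    return min(255, value * 2)


def process_serial(pixels: list[int]) -> list[int]:
    # Serial style: a single worker walks the data one element at a time.
    result = []
    for p in pixels:
        result.append(scale_pixel(p))
    return result


def process_parallel(pixels: list[int], workers: int = 4) -> list[int]:
    # Data-parallel style: split the data into chunks and give each
    # chunk to a separate worker. `workers` is an illustrative default.
    chunk = max(1, -(-len(pixels) // workers))  # ceiling division
    chunks = [pixels[i:i + chunk] for i in range(0, len(pixels), chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in the same order as the input chunks.
        parts = list(pool.map(process_serial, chunks))
    return [p for part in parts for p in part]


pixels = [10, 100, 200, 50, 128, 255, 0, 7]
# Both approaches produce the same output; only the work distribution differs.
assert process_serial(pixels) == process_parallel(pixels)
```

Because no element depends on any other, adding workers only changes how the chunks are divided, which is the property that lets this kind of workload spread across many cores.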

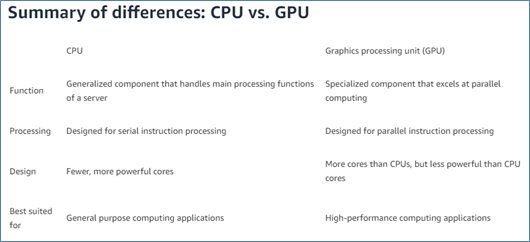

When comparing a CPU to a GPU, four categories stand out:

- Function

- Processing

- Design

- Applicability

Figure 5 summarizes these categories:

These advantages make GPUs an ideal candidate for AI applications, specifically generative AI (GenAI). In addition, clustering thousands of GPUs into a single entity requires a specialized fabric that is:

- Lossless

- High-performance

- Scalable