VxRail physical network interfaces

Overview

VxRail can be deployed with one of the predefined network profiles: 2x10/2x25 GbE, 4x10 GbE, or 4x25 GbE. Beginning with VxRail version 7.0.130, custom profiles can also be used. The nodes must have the network hardware that the chosen profile requires for the initial deployment. The following table lists physical network connectivity options with a single system vDS, or with an additional dedicated NSX vDS, each with and without NIC-level redundancy. For this table, a standard wiring configuration can be used for connectivity: odd-numbered uplinks cabled to Fabric A and even-numbered uplinks cabled to Fabric B.
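
The standard wiring rule can be written as a small helper. This is a minimal sketch that takes the rule literally as stated above; the function name `fabric_for_uplink` is hypothetical and is not part of any VxRail or VCF tooling.

```python
def fabric_for_uplink(uplink_number: int) -> str:
    """Return the fabric for a vDS uplink under the standard wiring rule.

    Assumption: uplinks are numbered from 1, as in Table 14.
    """
    if uplink_number < 1:
        raise ValueError("uplink numbers start at 1")
    # Odd-numbered uplinks go to Fabric A, even-numbered uplinks to Fabric B.
    return "Fabric A" if uplink_number % 2 == 1 else "Fabric B"


# Cabling plan for a four-uplink vDS.
plan = {f"Uplink {n}": fabric_for_uplink(n) for n in range(1, 5)}
for uplink, fabric in plan.items():
    print(uplink, "->", fabric)
```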

Notes:

- 100 Gbps PCIe adapters are supported using custom profiles.

- For the dedicated NSX vDS deployed by VCF, the vmnic-to-uplink mapping is in lexicographic order, so this factor must be considered during the design phase.
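
To see why lexicographic ordering matters, consider a host whose NSX vDS uses vmnics numbered above 9. The sketch below uses plain Python string sorting as a stand-in for lexicographic ordering. The vmnic names and the `uplinkN` labels are illustrative assumptions, and the mapping that VCF actually produces should be confirmed on a deployed system.

```python
# Hypothetical vmnics assigned to the dedicated NSX vDS.
vmnics = ["vmnic2", "vmnic3", "vmnic10", "vmnic11"]

# Lexicographic (string) order compares character by character,
# so "vmnic10" sorts before "vmnic2".
lexicographic = sorted(vmnics)

# Numeric order, which a designer might expect instead.
numeric = sorted(vmnics, key=lambda name: int(name[len("vmnic"):]))

# Assign uplinks in lexicographic order (illustrative uplink labels).
uplink_map = {f"uplink{i}": nic for i, nic in enumerate(lexicographic, start=1)}

print("lexicographic:", lexicographic)
print("numeric:      ", numeric)
print("uplink map:   ", uplink_map)
```

In this sketch the first uplink lands on `vmnic10` rather than `vmnic2`, which is the kind of surprise the note asks designers to plan for.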

Table 14. Physical network connectivity options

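| Option | Dedicated vDS for NSX | Uplinks per vDS | NIC redundancy | VxRail vDS uplink 1 | VxRail vDS uplink 2 | VxRail vDS uplink 3 | VxRail vDS uplink 4 | NSX vDS uplink 1 | NSX vDS uplink 2 | NSX vDS uplink 3 | NSX vDS uplink 4 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| A | No | 2 | No | NDC-1 | NDC-2 | | | | | | |
| B | No | 2 | Yes | NDC-1 | PCI1-2 | | | | | | |
| C | No | 4 | No | NDC-1 | NDC-2 | NDC-3 | NDC-4 | | | | |
| D | No | 4 | Yes | NDC-1 | PCI1-2 | NDC-2 | PCI1-1 | | | | |
| E | Yes | 2 | No | NDC-1 | NDC-2 | | | NDC-3 | NDC-4 | | |
| F | Yes | 2 | No | NDC-1 | NDC-2 | | | PCI1-1 | PCI1-2 | | |
| G | Yes | 2 | Yes | NDC-1 | PCI1-2 | | | NDC-2 | PCI1-1 | | |
| H | Yes | 4/2 | No | NDC-1 | NDC-2 | NDC-3 | NDC-4 | PCI1-1 | PCI1-2 | | |
| I | Yes | 4/2 | Yes | NDC-1 | PCI1-2 | NDC-2 | PCI1-1 | PCI1-3 | PCI1-4 | | |
| J | Yes | 2/4 | No | NDC-1 | NDC-2 | | | PCI1-1 | PCI1-2 | PCI1-3 | PCI1-4 |
| K | Yes | 4 | No | NDC-1 | NDC-2 | NDC-3 | NDC-4 | PCI1-1 | PCI1-2 | PCI1-3 | PCI1-4 |
| L | Yes | 4 | Yes | NDC-1 | PCI1-2 | NDC-2 | PCI1-1 | NDC-3 | PCI1-4 | PCI2-14 | PCI2-2 |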
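
A few rows of Table 14 can be encoded as data to make the meaning of the NIC redundancy column concrete. This is a minimal sketch, assuming that "NIC redundancy" means the VxRail vDS uplinks span more than one physical adapter (for example, both the NDC and a PCIe card). That reading is an interpretation that happens to match the rows shown, not a definition from the guide, and the names `TABLE_14`, `adapters`, and `has_nic_redundancy` are hypothetical.

```python
# Option -> (NIC redundancy per Table 14, VxRail vDS uplinks, NSX vDS uplinks)
TABLE_14 = {
    "A": (False, ["NDC-1", "NDC-2"], []),
    "B": (True, ["NDC-1", "PCI1-2"], []),
    "D": (True, ["NDC-1", "PCI1-2", "NDC-2", "PCI1-1"], []),
    "F": (False, ["NDC-1", "NDC-2"], ["PCI1-1", "PCI1-2"]),
    "G": (True, ["NDC-1", "PCI1-2"], ["NDC-2", "PCI1-1"]),
    "I": (True, ["NDC-1", "PCI1-2", "NDC-2", "PCI1-1"], ["PCI1-3", "PCI1-4"]),
}


def adapters(ports):
    """Return the set of adapters behind a list of ports, e.g. {"NDC", "PCI1"}."""
    return {port.split("-")[0] for port in ports}


def has_nic_redundancy(vxrail_ports):
    """True when the VxRail vDS uplinks span more than one physical adapter."""
    return len(adapters(vxrail_ports)) > 1


# Compare the assumed rule against the redundancy column for each encoded row.
for option, (expected, vxrail_ports, _nsx_ports) in TABLE_14.items():
    result = has_nic_redundancy(vxrail_ports)
    print(option, "redundant" if result else "not redundant", "| table says", expected)
```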

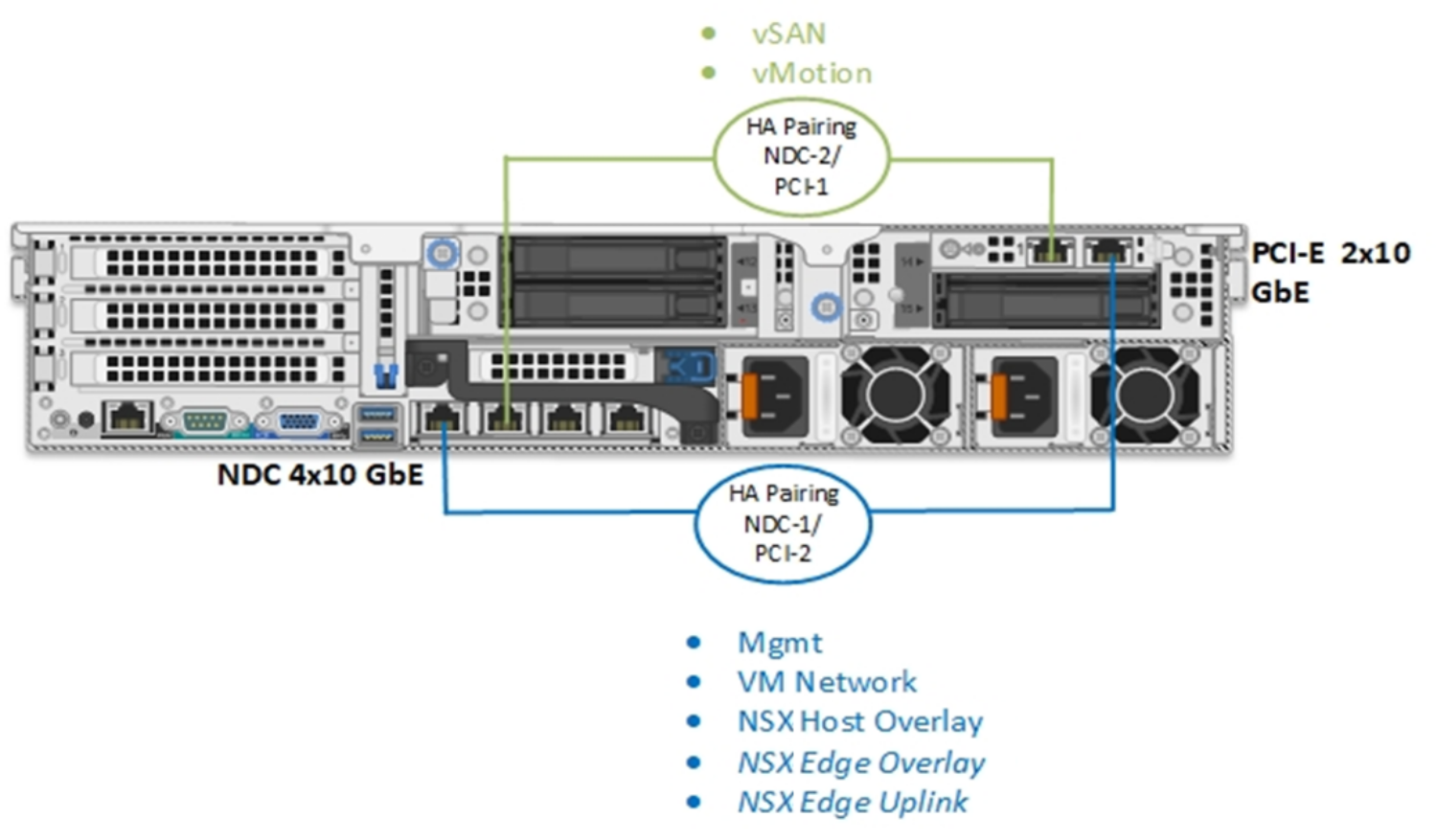

The following figures illustrate some of the host connectivity options from the preceding table for the different VxRail deployment types, for either the Mgmt WLD or a VI WLD. In both cases, the Edge overlay and Edge uplink networks are deployed when NSX Edges are deployed using Edge automation in SDDC Manager. Because there are too many options to cover here, the following sections describe only the most common options from Table 14.

Note: The PCIe card placements in the following figures are for illustration purposes only and might not match the configuration of the physical server. See the VxRail documentation for riser and PCIe placement.

Single VxRail vDS connectivity options

This section illustrates the physical host network connectivity options for the different VxRail profiles when only the single VxRail vDS is used.

10 GbE connectivity options

This figure illustrates option A in Table 14. The VxRail is deployed with the 2x10 predefined network profile on the 4-port NDC. The remaining two ports are unused and can be used for other purposes if required.

Figure 37. Single VxRail vDS - 2x10 predefined network profile

The next figure refers to option C in Table 14. The VxRail is deployed with the 4x10 predefined network profile. This profile places vSAN and vMotion on their own dedicated physical NICs on the NDC, while NSX traffic uses vmnic0 and vmnic1, which it shares with management traffic. Additional PCIe cards can be installed and used for other traffic if required.
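
The traffic separation in option C can be sketched as a mapping from traffic type to vmnics. The text states only that NSX shares vmnic0 and vmnic1 with management traffic. Placing vSAN and vMotion on vmnic2 and vmnic3 (the remaining NDC ports) is an assumption made for this illustration, as are the dictionary and function names.

```python
# Traffic type -> vmnics for the 4x10 predefined profile (option C).
# From the text: NSX shares vmnic0 and vmnic1 with management traffic.
# Assumption: vSAN and vMotion use the remaining NDC ports, vmnic2 and vmnic3.
TRAFFIC_TO_VMNICS = {
    "management": {"vmnic0", "vmnic1"},
    "nsx": {"vmnic0", "vmnic1"},
    "vsan": {"vmnic2", "vmnic3"},
    "vmotion": {"vmnic2", "vmnic3"},
}


def shares_nics_with(traffic):
    """Return the other traffic types that use at least one of the same vmnics."""
    nics = TRAFFIC_TO_VMNICS[traffic]
    return {
        other
        for other, other_nics in TRAFFIC_TO_VMNICS.items()
        if other != traffic and nics & other_nics
    }


print(sorted(shares_nics_with("nsx")))   # ['management']
print(sorted(shares_nics_with("vsan")))  # ['vmotion']
```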

Figure 38. Single VxRail vDS - 4x10 predefined network profile

The final 10 GbE option provides NIC-level redundancy. To achieve this redundancy, use an NDC and a PCIe card with a custom profile to deploy the VxRail vDS. The following figure illustrates this option, which is option D in Table 14:

Figure 39. Single VxRail vDS - 4x10 custom profile and NIC-level redundancy

25 GbE connectivity options

The first option aligns with option A in Table 14. A single VxRail vDS is deployed with the 2x25 network profile on the 25 GbE NDC. The VxRail system traffic and the NSX traffic share the two ports of the NDC.

Figure 40. Single VxRail vDS - 2x25 network profile

As with the previous option, additional PCIe cards can be added to the node for other traffic, for example, backup, replication, and so on.

The second option aligns with option D in Table 14. A single VxRail vDS is deployed with a custom network profile, which provides NIC-level redundancy for the VxRail system traffic and for the NSX TEP and Edge uplink traffic. A standard physical cabling configuration can be achieved with the logical network configuration described in the section VxRail vDS custom profiles.

Figure 41. Single VxRail vDS - 4x25 custom network profile