
Multi-rack design considerations


Introduction

You might want to span workload domain (WLD) VxRail clusters across racks so that a single rack is not a single point of failure. The management VMs running on the Mgmt WLD VxRail cluster, and any management VMs running on a VI WLD, require the VxRail nodes that host them to reside on the same L2 management network. This requirement lets the VMs migrate between racks while keeping the same IP address. In a Layer 3 leaf-spine fabric, this requirement is a problem: VLANs terminate at the leaf switches in each rack, so an L2 segment does not extend from one rack to another on its own.
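The following sketch illustrates why the same-L2 requirement matters for migration. It is a simplified model, not part of the guide: the rack names and subnets are hypothetical, and it assumes that in a plain L3 leaf-spine fabric each rack's management VLAN maps to its own subnet, while a stretched L2 segment carries one subnet in every rack.

```python
import ipaddress

# Hypothetical addressing plan (not from the guide): in a Layer 3 leaf-spine
# fabric, each rack's leaf switches terminate their own management VLAN,
# so each rack ends up with its own management subnet.
PER_RACK_MGMT = {
    "rack1": ipaddress.ip_network("192.168.10.0/24"),
    "rack2": ipaddress.ip_network("192.168.20.0/24"),
}

# Hypothetical management subnet carried on an L2 segment that is
# stretched across both racks (for example, by an overlay).
STRETCHED_MGMT = ipaddress.ip_network("192.168.100.0/24")


def mgmt_subnet(rack: str, stretched: bool) -> ipaddress.IPv4Network:
    """Return the management subnet a VM is attached to when running in `rack`."""
    return STRETCHED_MGMT if stretched else PER_RACK_MGMT[rack]


def keeps_ip_after_migration(vm_ip: str, dst_rack: str, stretched: bool) -> bool:
    """True if the VM's existing address is still valid in the destination rack."""
    return ipaddress.ip_address(vm_ip) in mgmt_subnet(dst_rack, stretched)


# VM addressed from rack 1's own subnet: not valid after moving to rack 2.
print(keeps_ip_after_migration("192.168.10.25", "rack2", stretched=False))  # False
# VM addressed from the stretched subnet: valid in either rack.
print(keeps_ip_after_migration("192.168.100.25", "rack2", stretched=True))  # True
```

In the per-rack case the VM would have to be re-addressed after migration, which is what the same-L2 requirement is meant to avoid.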

VxRail cluster across racks

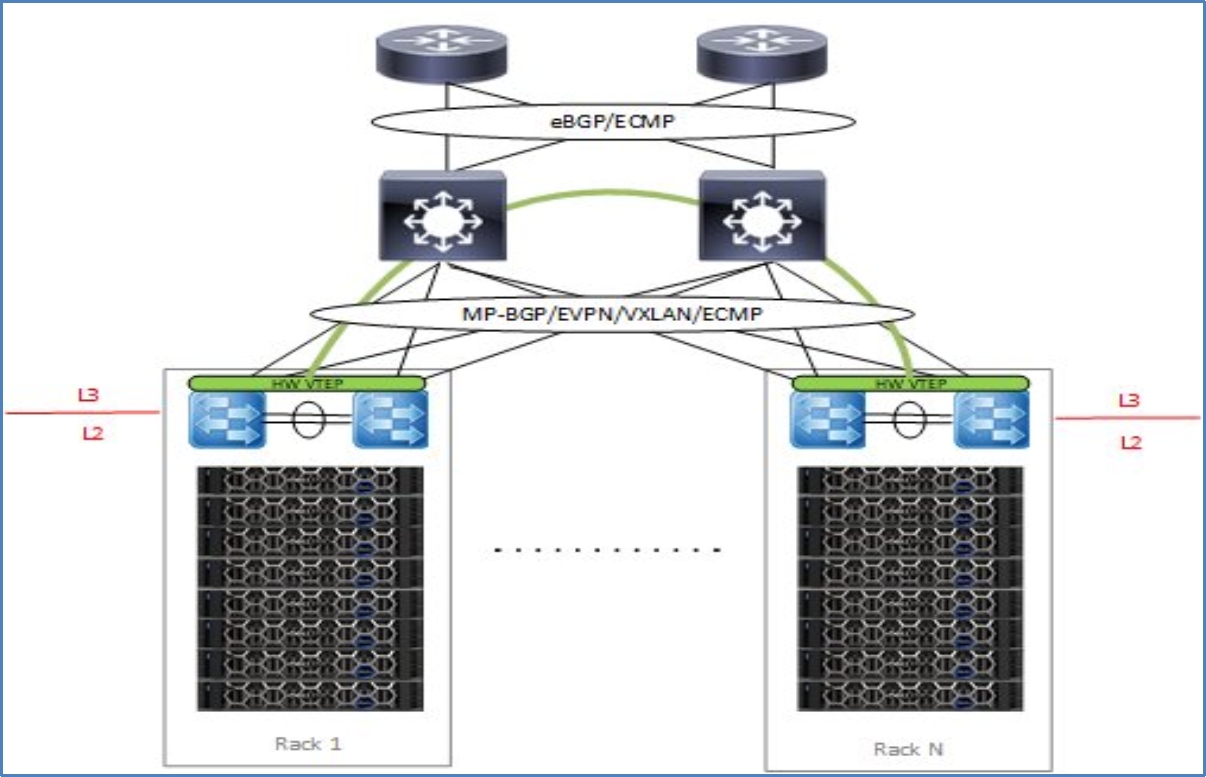

VxRail clusters deployed across racks require a network design that allows one or more VxRail clusters to span racks. This solution uses a Dell PowerSwitch hardware VTEP to provide an L2 overlay network. The overlay extends L2 segments over the L3 underlay network, providing L2 connectivity between racks for VxRail node discovery, vSAN, vMotion, management, and VM/application networks. Figure 36 shows an example of a multi-rack solution that uses a hardware VTEP with VXLAN BGP EVPN. The advantage of VXLAN BGP EVPN over a static VXLAN configuration is that each VTEP automatically learns which remote VTEPs are members of a virtual network from the EVPN routes those VTEPs advertise, rather than requiring the remote VTEPs to be configured manually on each switch.
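The automatic membership learning described above can be sketched as a small model. This is an illustration under stated assumptions, not how Dell PowerSwitch or OS10 implements EVPN: it assumes each VTEP advertises one simplified EVPN Type-3 (Inclusive Multicast Ethernet Tag) route per locally configured VNI, and that a receiving VTEP adds the originator to its per-VNI flood list only for VNIs it also hosts. The `exchange` helper stands in for BGP route distribution, and all VTEP addresses and VNI numbers are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ImetRoute:
    """Simplified EVPN Type-3 route: the advertising VTEP and one VNI it serves."""
    originator_vtep: str
    vni: int


class Vtep:
    def __init__(self, ip: str, local_vnis: list[int]) -> None:
        self.ip = ip
        self.local_vnis = set(local_vnis)
        # VNI -> remote VTEP IPs that receive replicated BUM traffic for that VNI.
        self.flood_list: dict[int, set[str]] = defaultdict(set)

    def advertise(self) -> list[ImetRoute]:
        # One route per locally configured VNI.
        return [ImetRoute(self.ip, vni) for vni in sorted(self.local_vnis)]

    def receive(self, route: ImetRoute) -> None:
        # Learn a remote member only for VNIs configured locally; ignore own routes.
        if route.originator_vtep != self.ip and route.vni in self.local_vnis:
            self.flood_list[route.vni].add(route.originator_vtep)


def exchange(vteps: list[Vtep]) -> None:
    """Deliver every VTEP's routes to every other VTEP (stands in for BGP EVPN)."""
    for sender in vteps:
        for route in sender.advertise():
            for receiver in vteps:
                if receiver is not sender:
                    receiver.receive(route)


# Hypothetical hardware VTEPs in three racks.
rack1 = Vtep("10.0.0.1", [10100, 10200])
rack2 = Vtep("10.0.0.2", [10100, 10200])
rack3 = Vtep("10.0.0.3", [10100])
exchange([rack1, rack2, rack3])

# Sorted for stable output.
print({vni: sorted(peers) for vni, peers in sorted(rack1.flood_list.items())})
# {10100: ['10.0.0.2', '10.0.0.3'], 10200: ['10.0.0.2']}
```

Adding a fourth rack in this model only requires that its VTEP advertise its VNIs; no existing VTEP's peer list is edited by hand, which is the contrast with a static VXLAN configuration.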

For more information about Dell networking solutions for VxRail, see the Dell VxRail Network Planning Guide.

Figure 36. Multi-rack VxRail cluster with hardware VTEP