
Model Training – Dell Validated Design

Fri, 03 May 2024 16:09:06 -0000


Introduction

When it comes to large language models (LLMs), there is a fundamental question that everyone looking to leverage foundation models needs to answer: should I train my own model, or should I customize an existing one?

There can be strong arguments for either. In a previous post, Nomuka Luehr covered some popular customization approaches. In this blog, I look at the other side of the question, training, and answer the following questions: Why would I train an LLM? What factors should I consider? I’ll also cover the recently announced Generative AI in the Enterprise – Model Training Dell Validated Design: A Scalable and Modular Production Infrastructure for AI Large Language Model Training. This is a collaborative effort between Dell Technologies and NVIDIA, aimed at facilitating high-performance, scalable, and modular solutions for training LLMs in enterprise settings (more about that later).

Training pipeline

The data pipelines for training and customization are similar because both processes involve feeding specific datasets through the LLM.

In the case of training, the dataset is typically much larger than for customization, because customization is targeted at a specific domain. Remember, for training a model, the goal is to embed as much knowledge into the model as possible, so the dataset must be large.

This raises the question of the dataset's accuracy and relevance. Curating and preparing the data is vital for ensuring the quality of what is fed into the LLM. It involves meticulously selecting and refining data to minimize biases and misinformation, which, if overlooked, could compromise the accuracy and reliability of the model's output. Data curation is not just about gathering information; it's about ensuring that the model's knowledge base is comprehensive, balanced, and reflects a wide array of perspectives.
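As a rough illustration of what a single curation pass could look like, the sketch below normalizes whitespace, drops very short documents, and removes exact duplicates. The function name `curate`, the `min_words` threshold, and the sample records are all hypothetical; a real pipeline does far more (near-duplicate detection, filtering of sensitive data, quality scoring, and so on).

```python
import hashlib


def curate(documents, min_words=5):
    """Normalize whitespace, drop short texts, and drop exact duplicates."""
    seen = set()
    kept = []
    for text in documents:
        normalized = " ".join(text.split())
        if len(normalized.split()) < min_words:
            continue  # too short to carry useful signal (hypothetical rule)
        # Hash the lowercased text so duplicates differing only in case match.
        digest = hashlib.sha256(normalized.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        kept.append(normalized)
    return kept


sample = [
    "PowerScale is a scale-out network attached storage platform.",
    "PowerScale  is a scale-out network attached   storage platform.",
    "Too short.",
    "Checkpointing saves model weights at intermediate stages of a run.",
]
cleaned = curate(sample)
print(len(cleaned), "documents kept out of", len(sample))
```

Hashing the normalized text keeps memory use proportional to the number of unique documents rather than their total size, which matters once the dataset reaches hundreds of gigabytes.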

When the dataset is curated and prepped, the actual process of training involves a series of steps where the data is fed through the LLM. The model generates outputs based on the input provided, which are then compared against expected results. Discrepancies between the actual and expected outputs lead to adjustments in the model's weights, gradually improving its accuracy through iterative refinement (using supervised learning, unsupervised learning, and so on).
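To make that loop concrete, here is a deliberately tiny sketch: a two-parameter linear model trained with gradient descent in plain Python. It is nothing like an LLM in scale, and the function name, learning rate, and data are my own illustrative choices, but the forward pass, comparison, and weight adjustment follow the same pattern described above.

```python
def train(samples, learning_rate=0.05, epochs=200):
    """Fit y = w * x + b by gradient descent on squared error."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, expected in samples:
            predicted = w * x + b            # feed the input through the model
            error = predicted - expected     # compare against the expected result
            w -= learning_rate * error * x   # adjust the weights in proportion
            b -= learning_rate * error       # to the discrepancy
    return w, b


# Toy dataset generated from y = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train(data)
print(round(w, 2), round(b, 2))
```

Each pass over `data` is one iteration over the dataset; an LLM training run repeats the same idea across billions of weights and tokens.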

While the overarching principle of this process might seem straightforward, it's anything but simple. Each step involves complex decisions, from selecting the right data and preprocessing it effectively to tuning the model's parameters for optimal performance. Moreover, the training landscape is evolving, and approaches such as supervised and unsupervised learning offer different pathways to model development. Supervised learning, with its reliance on labeled datasets, remains a cornerstone of most LLM training regimes because it provides a structured way to embed knowledge. However, unsupervised learning, which explores data patterns without predefined labels, is gaining traction for its ability to unearth novel insights.

These intricacies highlight the importance of leveraging advanced tools and technologies. Companies like NVIDIA are at the forefront, offering sophisticated software stacks that automate many aspects of the process and reduce the barriers to entry for those looking to develop or refine LLMs.

Network and storage performance

In the previous section, I touched on the dataset required to train or customize models. While having the right dataset is a critical piece of this process, being able to deliver that dataset fast enough to the GPUs running the model is another critical and yet often overlooked piece. To achieve that, you must consider two components:

- Storage performance

- Network performance

For anyone looking to train a model, a node-to-node (also known as East-West) network infrastructure based on 100Gbps, or better yet 400Gbps, is critical, because it provides enough bandwidth and throughput to keep the GPUs required for training, such as the NVIDIA H100, saturated.

Because customization datasets are typically smaller than full training datasets, a 100Gbps network can be sufficient, but as with everything in technology, your mileage may vary and proper testing is critical to ensure adequate performance for your needs.

Datasets used to train models are typically very large: in the hundreds of gigabytes. For instance, the dataset used to train GPT-4 is said to be over 550GB. With the advance of RDMA over Converged Ethernet (RoCE), GPUs can pull data directly from storage. And because 100Gbps networks can support that load, the bottleneck has moved to the storage.
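A quick back-of-the-envelope calculation shows why the network stops being the limiting factor. The sketch below assumes decimal units (1 GB = 8 gigabits) and a link running at full line rate with no protocol overhead, which is optimistic; the function name is mine.

```python
def transfer_seconds(dataset_gb, link_gbps):
    """Ideal time to move `dataset_gb` gigabytes over a `link_gbps` link."""
    return dataset_gb * 8 / link_gbps


for gbps in (100, 400):
    seconds = transfer_seconds(550, gbps)
    print(f"{gbps}Gbps link: {seconds:.0f} s for a 550GB dataset")
```

Under those assumptions a single pass over 550GB takes well under a minute on either link, so whether the GPUs stay fed depends mostly on how fast the storage can serve the data.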

Because of the nature of large language models, the datasets used to train them are made of unstructured data, such as SharePoint sites and document repositories, and are therefore most often hosted on network attached storage, such as Dell PowerScale. I am not going to get into further detail on the storage part because I’ll be publishing another blog on how to use PowerScale to support model training. But you must design the storage carefully to ensure that it can keep up with the GPUs and the network.

A note about checkpointing

As we previously mentioned, the process of training is iterative. Based on the input provided, the model generates outputs, which are then compared against expected results. Discrepancies between the actual and expected outputs lead to adjustments in the model's weights, gradually improving its accuracy through iterative refinement. This process is repeated across many iterations over the entire training dataset.

A training run (that is, running an entire dataset through a model and updating its weights) is extremely time consuming and resource intensive. According to this blog post, a single training run of ChatGPT-4 costs about $4.6M. Imagine running a few of them in a row, only to hit an issue and have to start again. Because of the cost associated with training runs, it is often a good idea to save the weights of the model at an intermediate stage during the run. Should something fail later on, you can load the saved weights and restart from that point. Snapshotting the weights of a model in this way is called checkpointing. The challenge with checkpointing is performance.
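Here is a minimal sketch of the save-and-resume pattern, using only the Python standard library. The helper names, the JSON file format, and the fake "weight update" are all hypothetical stand-ins; real frameworks write sharded binary checkpoints that also include optimizer state.

```python
import json
import os
import tempfile


def save_checkpoint(path, step, weights):
    """Write to a temp file, then atomically rename it into place."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as handle:
        json.dump({"step": step, "weights": weights}, handle)
    os.replace(tmp_path, path)  # readers never see a half-written file


def load_checkpoint(path):
    """Return (step, weights), or (0, None) when no checkpoint exists."""
    if not os.path.exists(path):
        return 0, None
    with open(path) as handle:
        state = json.load(handle)
    return state["step"], state["weights"]


def run(path, total_steps, checkpoint_every, fail_at=None):
    """Train from the latest checkpoint; optionally simulate a crash."""
    step, weights = load_checkpoint(path)
    if weights is None:
        weights = [0.0, 0.0]
    while step < total_steps:
        if fail_at is not None and step == fail_at:
            raise RuntimeError("simulated failure")
        weights = [w + 0.1 for w in weights]  # stand-in for a real update
        step += 1
        if step % checkpoint_every == 0:
            save_checkpoint(path, step, weights)
    return step, weights


with tempfile.TemporaryDirectory() as workdir:
    ckpt = os.path.join(workdir, "ckpt.json")
    try:
        run(ckpt, total_steps=10, checkpoint_every=5, fail_at=7)
    except RuntimeError:
        print("crashed; last checkpoint at step", load_checkpoint(ckpt)[0])
    final_step, _ = run(ckpt, total_steps=10, checkpoint_every=5)
    print("resumed and finished at step", final_step)
```

The second call to `run` picks up from the last saved step instead of step 0, which is exactly the work a checkpoint saves you from repeating.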

A checkpoint is typically stored on an external storage system, so again, storage performance and network performance are critical to providing the bandwidth and throughput that checkpointing needs. For instance, a Llama 2 70B checkpoint consumes about 129GB of storage. Because each of its checkpoints is exactly the same, predictable size, they can be saved quickly (to disk), which helps preserve the performance of the training process.
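To get a feel for the numbers, the sketch below estimates how long a checkpoint of that size takes to write and what share of wall-clock time it costs if training pauses during each write. The 10 GB/s throughput and the 30-minute checkpoint interval are hypothetical values I picked for illustration, not figures from the design guide.

```python
def checkpoint_write_seconds(checkpoint_gb, throughput_gb_per_s):
    """Time to write one checkpoint at a sustained storage throughput."""
    return checkpoint_gb / throughput_gb_per_s


def overhead_fraction(write_seconds, interval_seconds):
    """Share of wall-clock time spent writing if training stalls per write."""
    return write_seconds / (interval_seconds + write_seconds)


write_time = checkpoint_write_seconds(129, 10)   # hypothetical 10 GB/s
overhead = overhead_fraction(write_time, 1800)   # hypothetical 30 min interval
print(f"write time: {write_time:.1f} s, overhead: {overhead:.2%}")
```

Plugging in your own measured throughput is a quick way to see whether checkpointing more often is affordable.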

NVIDIA software stack

The choice of which framework to use depends on whether you typically lean more towards doing it yourself or buying specific outcomes. The benefit of doing it yourself is ultimate flexibility, sometimes at the expense of time to market, whereas buying an outcome can offer better time to market at the expense of having to choose within a pre-determined set of components. In my case, I have always tended to favor buying outcomes, which is why I want to cover the NVIDIA AI Enterprise (NVAIE) software stack at a high level.

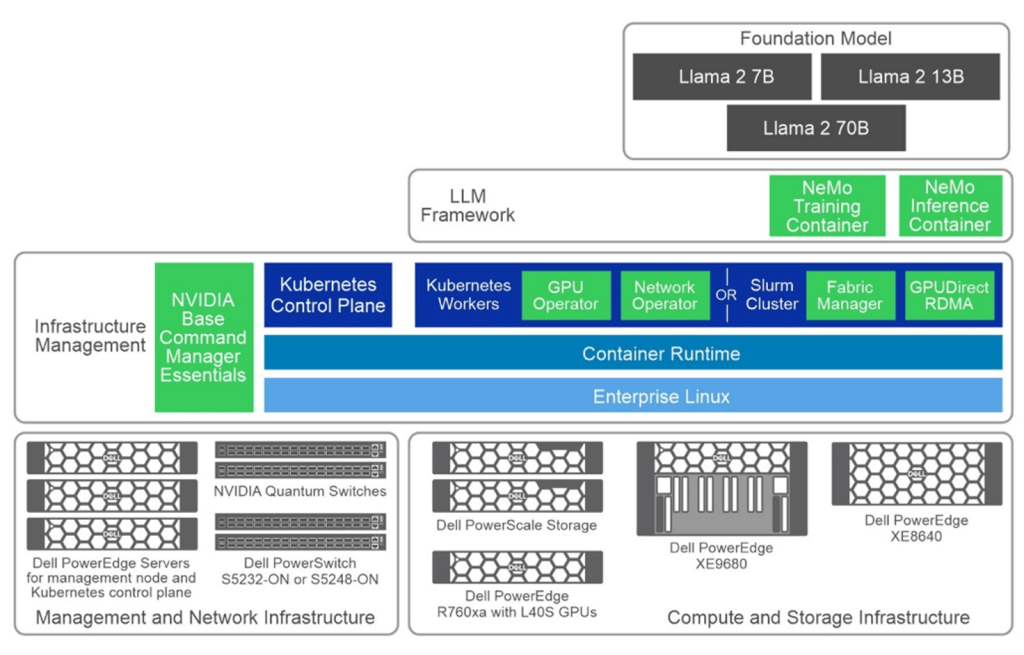

The following figure is a simple layered cake that showcases the various components of the NVAIE, in light green.

The white paper Generative AI in the Enterprise – Model Training Dell Validated Design provides an in-depth exploration of a reference design developed by Dell Technologies in collaboration with NVIDIA. It offers enterprises a robust and scalable framework to train large language models effectively. Whether you're a CTO, AI engineer, or IT executive, this guide addresses the crucial aspects of model training infrastructure, including hardware specifications, software design, and practical validation findings.

Training the Dell Validated Design architecture

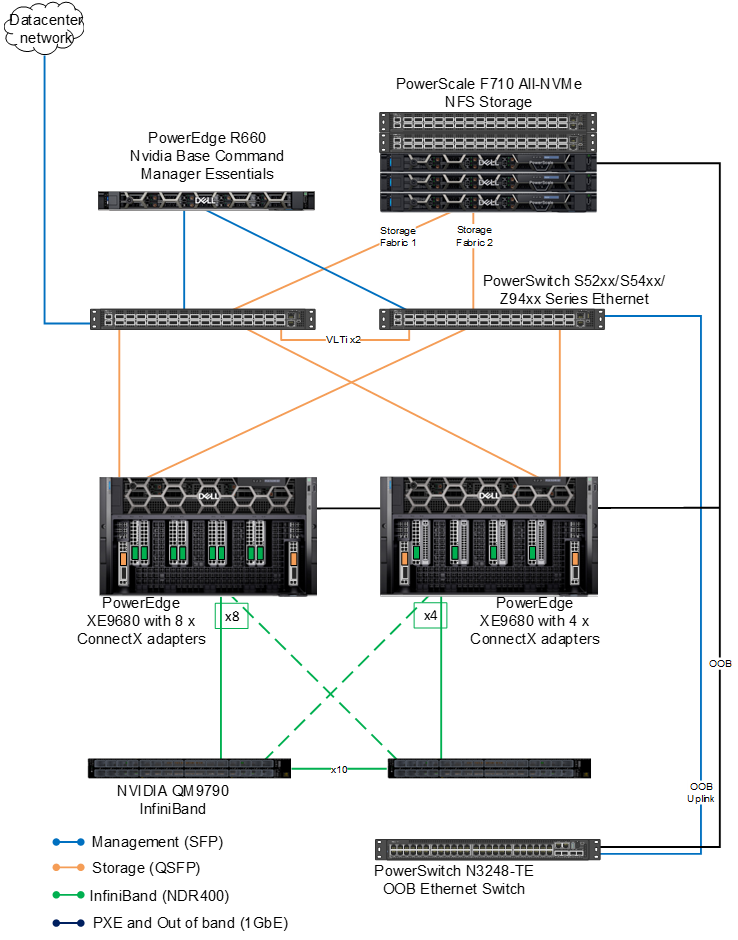

The validated architecture aims to give the reader a broad set of model training results. We used two separate configuration types across the compute, network, and GPU stack.

There are two configurations of 8x PowerEdge XE9680 servers, both with 8x NVIDIA H100 SXM GPUs. The difference between the configurations is the network: the first is equipped with 8x ConnectX-7 adapters, and the second with 4x ConnectX-7 adapters. Both are configured for NDR.
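The practical difference between the two configurations is network bandwidth per GPU. The sketch below works that out under a few assumptions of mine: the adapter counts are per server, each adapter exposes a single NDR port, and NDR runs at its nominal 400Gb/s. The class and field names are hypothetical.

```python
from dataclasses import dataclass

NDR_GBPS_PER_PORT = 400  # nominal NDR rate; one port per adapter is assumed


@dataclass(frozen=True)
class NodeConfig:
    name: str
    gpus: int
    adapters: int

    @property
    def node_bandwidth_gbps(self):
        """Aggregate nominal network bandwidth of one server."""
        return self.adapters * NDR_GBPS_PER_PORT

    @property
    def bandwidth_per_gpu_gbps(self):
        """Nominal network bandwidth available to each GPU."""
        return self.node_bandwidth_gbps / self.gpus


configs = [
    NodeConfig("Configuration 1", gpus=8, adapters=8),
    NodeConfig("Configuration 2", gpus=8, adapters=4),
]
for config in configs:
    print(config.name, config.node_bandwidth_gbps, config.bandwidth_per_gpu_gbps)
```

Under these assumptions, Configuration 1 gives every GPU its own adapter, while Configuration 2 has two GPUs sharing each one.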

On the storage side, the evolution of PowerScale continues to thrive in the AI domain with the launch of its latest line, including the notable PowerScale F710. This addition embraces Dell PowerEdge 16G servers, heralding a new era in performance capabilities for PowerScale's all-flash nodes. On the software side, the F710 benefits from the enhanced performance features found in the PowerScale OneFS 9.7 update.

Key takeaways

The guide provides training times for the Llama 2 7B and Llama 2 70B models over 500 steps, with variations based on the number of nodes and configurations used.

Why only 500 steps? The decision to train models for a set number of steps (500), rather than to train models for convergence, is practical for validation purposes. It allows for a consistent benchmarking metric across different scenarios and models, to produce a clearer comparison of infrastructure efficiency and model performance in the early stages.
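Because every run covers the same number of steps, elapsed time converts directly into a throughput figure that can be compared across configurations. A minimal sketch follows; the batch size, sequence length, and timings are placeholder values, not results from the guide.

```python
def tokens_per_second(steps, global_batch_size, sequence_length, elapsed_seconds):
    """Training throughput for a fixed-step benchmark run."""
    return steps * global_batch_size * sequence_length / elapsed_seconds


def speedup(baseline_seconds, candidate_seconds):
    """How many times faster the candidate finished the same steps."""
    return baseline_seconds / candidate_seconds


# Placeholder numbers: 500 steps, batch of 128 sequences, 4096 tokens each.
throughput = tokens_per_second(500, 128, 4096, elapsed_seconds=3600)
print(f"{throughput:,.0f} tokens/s")
print(f"speedup: {speedup(3600, 3000):.2f}x")
```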

Efficiency of Model Sizing: The choice of 7B and 70B Llama 2 model architectures indicates a strategic approach to balance computational efficiency with potential model performance. Smaller models like the 7B are quicker to train and require fewer resources, making them suitable for preliminary tests and smaller-scale applications. On the other hand, the 70B model, while significantly more resource-intensive, was chosen for its potential to capture more complex patterns and provide more accurate outputs.

Configuration and Resource Optimization: Comparing two hardware configurations provides valuable insights into optimizing resource allocation. While higher-end configurations (Configuration 1 with 8 adapters) offer slightly better performance, you must weigh the marginal gains against the increased costs. This highlights the importance of tailoring the hardware setup to the specific needs and scale of the project, where sometimes, a less maximalist approach (Configuration 2 with 4 adapters) can provide a more balanced cost-to-benefit ratio, especially in smaller setups. Certainly something to think about!

Parallelism Settings: The specific settings for tensor and pipeline parallelism (as covered in the guide), along with batch sizes and sequence lengths, are crucial for training efficiency. These settings impact the training speed and model performance, indicating the need for careful tuning to balance resource use with training effectiveness. The choice of these parameters reflects a strategic approach to managing computational loads and training times.
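These settings are tied together by simple arithmetic. In the usual Megatron-style layout, the GPUs left over after tensor and pipeline parallelism form data-parallel replicas, and the global batch is split across those replicas in micro-batches. The sketch below checks that a set of values is consistent; the function names and the example numbers (64 GPUs, TP=4, PP=4, and so on) are illustrative only, and the guide lists the values that were actually validated.

```python
def data_parallel_size(total_gpus, tensor_parallel, pipeline_parallel):
    """Number of model replicas once each replica spans TP x PP GPUs."""
    model_parallel = tensor_parallel * pipeline_parallel
    if total_gpus % model_parallel != 0:
        raise ValueError("total_gpus must be divisible by TP x PP")
    return total_gpus // model_parallel


def micro_batches_per_step(global_batch_size, micro_batch_size, dp_size):
    """Micro-batches each replica processes per optimizer step."""
    per_replica, remainder = divmod(global_batch_size, micro_batch_size * dp_size)
    if remainder:
        raise ValueError("global batch must be divisible by micro batch x DP")
    return per_replica


dp = data_parallel_size(total_gpus=64, tensor_parallel=4, pipeline_parallel=4)
accumulation = micro_batches_per_step(128, micro_batch_size=1, dp_size=dp)
print("data-parallel replicas:", dp)
print("micro-batches per step:", accumulation)
```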

To close

With the scalable and modular infrastructure designed by Dell Technologies and NVIDIA, you are well-equipped to embark on or enhance your AI initiatives. Leverage this blueprint to drive innovation, refine your operational capabilities, and maintain a competitive edge in harnessing the power of large language models.

Authors: Bertrand Sirodot and Damian Erangey

Dell Validated Design Guides for Inferencing and for Model Customization – March ’24 Updates

Fri, 15 Mar 2024 20:16:59 -0000


Continuous Innovation with Dell Validated Designs for Generative AI with NVIDIA

Since Dell Technologies and NVIDIA introduced what was then known as Project Helix less than a year ago, so much has changed. The rate of growth and adoption of generative AI has been faster than probably any technology in human history.

From the onset, Dell and NVIDIA set out to deliver a modular and scalable architecture that supports all aspects of the generative AI life cycle in a secure, on-premises environment. This architecture is anchored by high-performance Dell server, storage, and networking hardware and by NVIDIA acceleration and networking hardware and AI software.

Since that introduction, the Dell Validated Designs for Generative AI have flourished, and have been continuously updated to add more server, storage, and GPU options, to serve a range of customers from those just getting started to high-end production operations.

A modular, scalable architecture optimized for AI

This journey was launched with the release of the Generative AI in the Enterprise white paper.

This white paper laid the foundation for a series of comprehensive resources aimed at integrating AI into on-premises enterprise settings, focusing on scalable and modular production infrastructure in collaboration with NVIDIA.

Dell, known for its expertise not only in high-performance infrastructure but also in curating full-stack validated designs, collaborated with NVIDIA to engineer holistic generative AI solutions that blend advanced hardware and software technologies. The dynamic nature of AI presents a challenge in keeping pace with rapid advancements, where today's cutting-edge models might become obsolete quickly. Dell distinguishes itself by offering essential insights and recommendations for specific applications, easing the journey through the fast-evolving AI landscape.

The cornerstone of the joint architecture is modularity, offering a flexible design that caters to a multitude of use cases, sectors, and computational requirements. A truly modular AI infrastructure is designed to be adaptable and future-proof, with components that can be mixed and matched based on specific project requirements and which can span from model training, to model customization including various fine-tuning methodologies, to inferencing where we put the models to work.

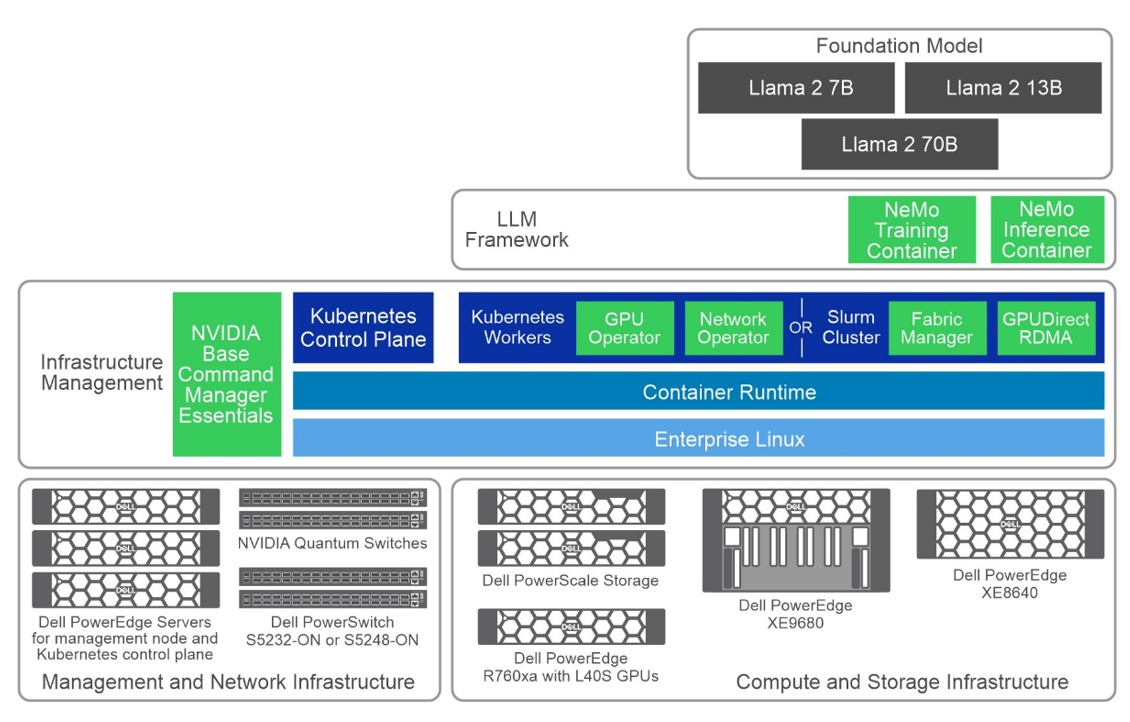

The following figure shows a high-level view of the overall architecture, including the primary hardware components and the software stack:

Figure 1: Common high-level architecture

Generative AI Inferencing

Following the introductory white paper, the first validated design guide released was for Generative AI Inferencing, in July 2023, anchored by the innovative concepts introduced earlier.

The complexity of assembling an AI infrastructure, often involving an intricate mix of open-source and proprietary components, can be formidable. Dell Technologies addresses this complexity by providing fully validated solutions where every element is meticulously tested, ensuring functionality and optimization for deployment. This validation gives users the confidence to proceed, knowing their AI infrastructure rests on a robust and well-founded base.

Key Takeaways

- In October 2023, the guide received its first update, broadening its scope with added validation and configuration details for Dell PowerEdge XE8640 and XE9680 servers. This update also introduced support for NVIDIA Base Command Manager Essentials and NVIDIA AI Enterprise 4.0, marking a significant enhancement to the guide's breadth and depth.

- The guide's evolution continues into March 2024 with its third iteration, which includes support for the PowerEdge R760xa servers equipped with NVIDIA L40S GPUs.

- The design now supports several options for NVIDIA GPU acceleration components across the multiple Dell server options. In this design, we showcase three Dell PowerEdge servers with several GPU options tailored for generative AI purposes:

  - PowerEdge R760xa server, supporting up to four NVIDIA H100 GPUs or four NVIDIA L40S GPUs

  - PowerEdge XE8640 server, supporting up to four NVIDIA H100 GPUs

  - PowerEdge XE9680 server, supporting up to eight NVIDIA H100 GPUs

The choice of server and GPU combination is often a balance of performance, cost, and availability considerations, depending on the size and complexity of the workload.

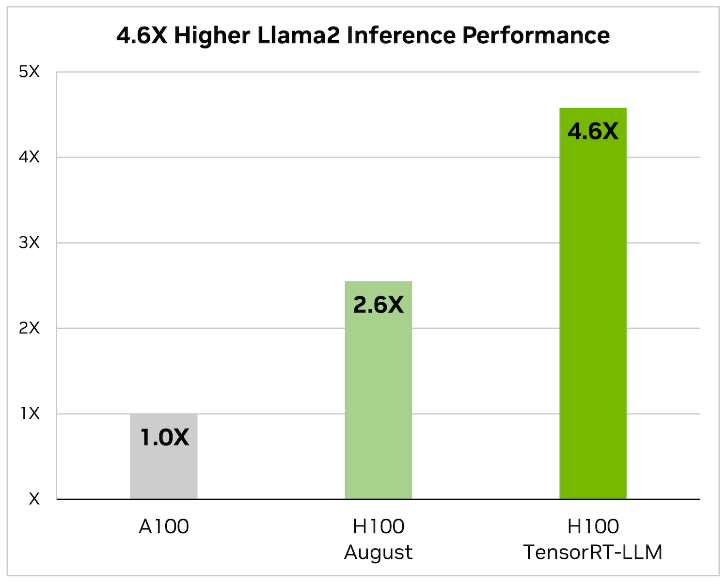

- This latest edition also saw the removal of NVIDIA FasterTransformer, replaced by TensorRT-LLM, reflecting Dell’s commitment to keeping the guide abreast of the latest and most efficient technologies. When it comes to optimizing large language models, TensorRT-LLM is the key. It ensures that models not only deliver high performance but also maintain efficiency in various applications.

The library includes optimized kernels, pre- and postprocessing steps, and multi-GPU/multi-node communication primitives. These features are specifically designed to enhance performance on NVIDIA GPUs.

It uses tensor parallelism for efficient inference across multiple GPUs and servers, without the need for developer intervention or model changes.

- Additionally, this update includes revisions to the models used for validation, ensuring users have access to the most current and relevant information for their AI deployments. The Dell Validated Design guide covers Llama 2, Falcon, and now Mistral as the foundation models for inferencing on this infrastructure design with Triton Inference Server:

  - Llama 2 7B, 13B, and 70B

  - Mistral

  - Falcon 180B

- Finally (and most importantly) performance test results and sizing considerations showcase the effectiveness of this updated architecture in handling large language models (LLMs) for various inference tasks. Key takeaways include:

  - Optimized Latency and Throughput: The design achieved impressive latency metrics, crucial for real-time applications like chatbots, and high tokens per second, indicating efficient processing for offline tasks.

  - Model Parallelism Impact: The performance of LLMs varied with adjustments in tensor and pipeline parallelism, highlighting the importance of optimal parallelism settings for maximizing inference efficiency.

  - Scalability with Different GPU Configurations: Tests across various NVIDIA GPUs, including L40S and H100 models, demonstrated the design’s scalability and its ability to cater to diverse computational needs.

  - Comprehensive Model Support: The guide includes performance data for multiple models (as we already discussed) across different configurations, showcasing the design’s versatility in handling various LLMs.

  - Sizing Guidelines: Based on performance metrics, updated sizing examples are available to help users determine the appropriate infrastructure based on their specific inference requirements (these guidelines are very welcome).

All this highlights Dell’s commitment and capability to deliver high-performance, scalable, and efficient generative AI inferencing solutions tailored to enterprise needs.
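For readers new to the tensor parallelism mentioned in the takeaways above, the idea can be sketched in a few lines of plain Python: a layer's weight matrix is split across shards (one per GPU), each shard computes its slice of the output, and the slices are gathered back together. This is a conceptual illustration only, with hypothetical function names; it is not TensorRT-LLM's API, and here the shards run one after another rather than concurrently.

```python
def matvec(matrix, vector):
    """Multiply a row-major matrix (a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def split_rows(matrix, parts):
    """Split the output rows evenly into `parts` shards, one per GPU."""
    if len(matrix) % parts != 0:
        raise ValueError("row count must be divisible by the number of shards")
    size = len(matrix) // parts
    return [matrix[i * size:(i + 1) * size] for i in range(parts)]


def tensor_parallel_matvec(matrix, vector, parts):
    """Compute matrix @ vector shard by shard, then gather the slices."""
    shards = split_rows(matrix, parts)
    partial_outputs = [matvec(shard, vector) for shard in shards]
    return [value for part in partial_outputs for value in part]


weights = [[1, 2], [3, 4], [5, 6], [7, 8]]  # 4 output features, 2 inputs
inputs = [1, -1]
print(tensor_parallel_matvec(weights, inputs, parts=2))
print(matvec(weights, inputs))  # same result on a single "GPU"
```

Each shard holds only a fraction of the weights, which is what lets a model too large for one GPU's memory be served across several.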

Generative AI Model Customization

The validated design guide for Generative AI Model Customization was first released in October 2023, anchored by the PowerEdge XE9680 server. This guide detailed numerous model customization methods, including the specifics of prompt engineering, supervised fine-tuning, and parameter-efficient fine-tuning.

Between October 2023 and March 2024, the design guide went from its initial release, through the addition of validated scenarios for multi-node SFT and Kubernetes in November 2023, to updated performance test results and new support for PowerEdge R760xa servers, PowerEdge XE8640 servers, and PowerScale F710 all-flash storage as of March 2024.

Key Takeaways

- The validation aimed to test the reliability, performance, scalability, and interoperability of a system using model customization in the NeMo framework, specifically focusing on incorporating domain-specific knowledge into Large Language Models (LLMs).

- The process involved testing foundational models of sizes 7B, 13B, and 70B from the Llama 2 series. Various model customization techniques were employed, including:

  - Prompt engineering

  - Supervised Fine-Tuning (SFT)

  - P-Tuning

  - Low-Rank Adaptation of Large Language Models (LoRA)

- The design now supports several options for NVIDIA GPU acceleration components across the multiple Dell server options. In this design, we showcase three Dell PowerEdge servers with several GPU options tailored for generative AI purposes:

  - PowerEdge R760xa server, supporting up to four NVIDIA H100 GPUs or four NVIDIA L40S GPUs. While the L40S is cost-effective for small to medium workloads, the H100 is typically used for larger-scale tasks, including SFT.

  - PowerEdge XE8640 server, supporting up to four NVIDIA H100 GPUs.

  - PowerEdge XE9680 server, supporting up to eight NVIDIA H100 GPUs.

As always, the choice of server and GPU combination depends on the size and complexity of the workload.

- The validation used both Slurm and Kubernetes clusters for computational resources and involved two datasets: the Dolly dataset from Databricks, covering various behavioral categories, and the Alpaca dataset from Stanford, consisting of 52,000 instruction-following records. Training was conducted for a minimum of 50 steps, with the goal being to validate the system's capabilities rather than achieving model convergence, to provide insights relevant to potential customer needs.

The validation results along with our analysis can be found in the Performance Characterization section of the design guide.
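To show why a parameter-efficient method such as LoRA is so much lighter than full fine-tuning, the sketch below counts trainable parameters for one weight matrix and applies the low-rank update W·x + (alpha/r)·B·(A·x) in plain Python. The matrix size (4096 by 4096), rank, and alpha are illustrative values I chose, and the function names are hypothetical; this is not the NeMo framework's implementation.

```python
def matvec(matrix, vector):
    """Multiply a row-major matrix (a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def lora_trainable_params(d_out, d_in, rank):
    """Parameters in the two low-rank factors: B (d_out x r) and A (r x d_in)."""
    return rank * (d_in + d_out)


def lora_forward(weight, lora_a, lora_b, x, alpha):
    """Frozen base output plus the scaled low-rank correction."""
    rank = len(lora_a)
    base = matvec(weight, x)
    update = matvec(lora_b, matvec(lora_a, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


full = 4096 * 4096
lora = lora_trainable_params(4096, 4096, rank=8)
print(f"trainable share: {lora / full:.4%}")

# Tiny demo: with B initialized to zeros, the adapted layer matches the base.
W = [[1, 2], [3, 4]]
A = [[0.5, -0.5]]   # rank 1: shape (1, 2)
B = [[0.0], [0.0]]  # shape (2, 1), zero-initialized
print(lora_forward(W, A, B, [1, 2], alpha=2))
```

Starting B at zero means fine-tuning begins from the unmodified base model, and only the small A and B factors are updated during customization.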

What’s Next?

Looking ahead, you can expect even more innovation at a rapid pace with expansions to Dell’s leading-edge generative AI product and solutions portfolio.

For more information, see the following resources:

- Dell Generative AI Solutions

- Dell Technical Info Hub for AI

- Generative AI in the Enterprise white paper

- Generative AI in the Enterprise Inferencing design guide

- Generative AI in the Enterprise Model Customization design guide

- Dell Professional Services for Generative AI
