Blogs

Blog posts related to Dell Technologies solutions for Oracle

NVMe/FC vs. SCSI/FC – The Numbers Are In

Thu, 15 Jun 2023 18:54:10 -0000


Dell Technologies and Broadcom collaborated on a study comparing database workload performance over NVMe/FC and SCSI/FC. The two companies commissioned a third party (Tolly) to run the benchmark using a Broadcom Emulex LPe36002 64Gb Host Bus Adapter (HBA), a 16G PowerEdge server, and a PowerStore storage array. Dell Technologies is in a unique position to offer end-to-end NVMe/FC capabilities, which deliver significant performance gains, as this study shows for PowerStore, Dell's mid-range storage product line.

NVMe and SCSI

Non-Volatile Memory Express (NVMe) is a host controller interface designed to scale and address the needs of enterprise and client systems that use PCI Express®-based solid-state storage. It is designed specifically for high-performance, multi-queue communication with non-volatile memory (NVM) devices. NVMe supports up to 64K commands per queue and up to 64K queues for parallel operations. NVMe can also be transported over the Fibre Channel protocol to connect storage devices and servers over extended distances.

Small Computer System Interface (SCSI) is a set of standards and commands for transferring data between devices. SCSI supports a single command queue with a queue depth of up to 256 commands. SCSI commands can also be transported over the Fibre Channel protocol.

Fibre Channel Storage Area Network (SAN) is a specialized, high-speed, low-latency networking method of connecting storage devices to servers. It is an ordered, lossless transport protocol used primarily for SCSI commands. A Fibre Channel SAN connects storage devices and servers using optical fibers and supports data rates of 1, 2, 4, 8, 16, 32, 64, and 128 gigabits per second.

With NVMe/FC and SCSI/FC, NVMe and SCSI data, respectively, are encapsulated and transported within Fibre Channel frames.
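To put the queue figures above in perspective, the following minimal Python sketch multiplies the number of queues by the queue depth to get the theoretical maximum number of outstanding commands for each protocol. It uses only the limits quoted in this post (64K queues of 64K commands for NVMe, one queue of 256 commands for SCSI) and treats 64K as 65,536; real HBAs, drivers, and arrays advertise much smaller limits, so these are upper bounds, not what a given host will see. The `QueueModel` class is a hypothetical helper for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QueueModel:
    name: str
    queues: int           # number of command queues
    depth_per_queue: int  # commands each queue can hold

    @property
    def max_outstanding(self) -> int:
        # Theoretical ceiling on commands in flight at the same time.
        return self.queues * self.depth_per_queue


# Limits as quoted in this post (64K taken as 65,536); real devices advertise less.
NVME = QueueModel("NVMe", queues=65_536, depth_per_queue=65_536)
SCSI = QueueModel("SCSI", queues=1, depth_per_queue=256)

if __name__ == "__main__":
    for proto in (NVME, SCSI):
        print(f"{proto.name}: {proto.max_outstanding:,} commands in flight")
    print(f"NVMe/SCSI ratio: {NVME.max_outstanding // SCSI.max_outstanding:,}x")
```

In practice the point is less the absolute numbers than the design: NVMe lets each CPU core drive its own queue instead of funneling all I/O through a single one.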

Benchmark setup

The performance comparison between NVMe/FC and SCSI/FC focused on database throughput, latency, and CPU efficiency using a TPROC-C-like workload. Oracle Database 19c and Microsoft SQL Server 2019 were used, with HammerDB as the front-end client software.

The test bed for the benchmark includes:

- 1 x Dell PowerEdge R760 rack server with Emulex LPe36002 64Gb Host Bus Adapter

- 1 x Dell PowerStore 9200T

- 1 x Brocade G720 FC switch

Test results

To view the complete test report and the detailed test bed configuration, see the following documents:

Proof of Concept – Running Oracle and Microsoft SQL Databases on Dell APEX – Part 1

Wed, 31 May 2023 16:08:03 -0000


Oracle and Microsoft SQL databases are critical components of most business operations. Most organizations have many Oracle and Microsoft SQL databases spread across on-premises and the cloud. Procuring the underlying infrastructure can pose a challenge to IT. These challenges can be minimized by using Dell APEX Private Cloud, Hybrid Cloud, or APEX Data Storage Services offerings.

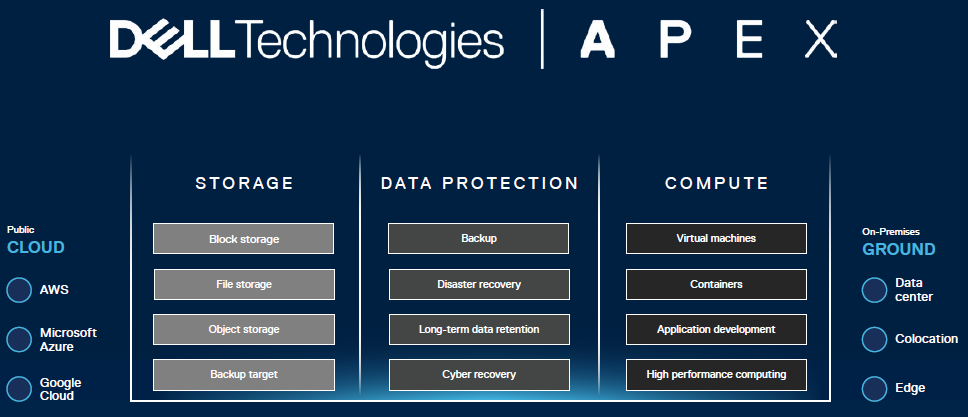

What is Dell APEX?

Dell APEX is a portfolio of Dell Technologies as-a-Service offerings that simplify digital transformation by increasing IT agility and control. It gives customers a seamless IT experience by delivering infrastructure, devices, and applications as-a-Service. Customers have the freedom to scale resources up or down quickly to react to their business requirements. Dell APEX makes it easier than ever to unlock the full potential of multicloud, allowing customers to capitalize on cloud experiences and have access to best-in-class technologies to drive innovation. Dell APEX gives customers control of the security, access, and location of their data.

Why run Oracle and Microsoft SQL Databases on Dell APEX?

Traditionally, building an infrastructure solution for mission-critical databases such as Oracle and Microsoft SQL can be a time-consuming and complex process because running these databases in production demands high performance, high availability, and elasticity. Technologies and lines of business are constantly changing, which makes it difficult for IT to forecast infrastructure requirements. CAPEX and time-to-market (TTM) must also be evaluated.

To solve these challenges, IT architects often must work with business stakeholders on a regular cadence to select the right software and hardware technologies to enable business growth and minimize complexity. Because hardware technology is advancing rapidly, technology refreshes also need to be aligned with these plans. Each refresh requires IT architects to research, procure, and test new technology as quickly as possible in a never-ending cycle.

Keeping up with the latest hardware technology matters because mission-critical applications running on Oracle or Microsoft SQL databases require zero downtime for a business to be successful. Even a brief outage of a mission-critical application is likely to have negative financial consequences.

With Dell APEX, customers can focus on business outcomes rather than infrastructure. Dell APEX delivers as-a-Service solutions that offer a core set of capabilities, from deployment to ongoing infrastructure monitoring, operations, optimization, and support. It provides customers a seamless experience to manage their entire cloud and as-a-Service journey. Customers can browse a selection of as-a-Service and cloud solutions and then place an order for their business. Customers can also deploy workloads, manage their multicloud resources, monitor their costs in real time, and add cloud services, all with a few clicks.

Proof of concept

The Dell Solutions Engineers deployed both Oracle and Microsoft SQL database workloads to demonstrate the Dell APEX Cloud offerings. Because Dell APEX offers infrastructure as-a-Service, the Solutions Engineers focused on business outcomes for the Oracle and Microsoft SQL workloads. The business outcomes for this proof of concept are measured as New Orders Per Minute (NOPM) and Transactions Per Minute (TPM). These requirements simulate a retail business where customers can order new products online. The requirements for both the Oracle and Microsoft SQL workloads are approximately 120,000 NOPM and 300,000 TPM.

Through the APEX console, the Solutions Engineers requested infrastructure as-a-Service from the APEX team that would meet these workload requirements.

Because this exercise was based on the private cloud model, the Dell APEX team delivered a pre-engineered solution that was optimized for database workloads. The Dell APEX Private Cloud with APEX Data Storage Services infrastructure provides a seamless, curated, and optimized production-ready solution, meaning it includes not only the components needed to meet the requirements but also backup and recovery. The Solutions Engineers were given access to start deploying virtual machines, installing operating systems, configuring storage devices, and installing and configuring the Oracle and Microsoft SQL databases.

Another approach to running workloads on Dell APEX is to migrate existing Oracle and Microsoft SQL databases onto the Dell APEX Private Cloud infrastructure. This option not only enables customers to easily migrate existing virtual machine configurations to Dell APEX, reducing setup time and giving the business a quicker time-to-market, but also gives customers confidence, because they know their existing VM configurations were performing adequately before the move to APEX Private Cloud.

To accomplish this proof of concept, the Solutions Engineers copied the Oracle and Microsoft SQL OVA archive files from their existing environment to the new Dell APEX Private Cloud infrastructure and spun up the VMs. After the database VMs were up and running, the engineers used an open-source benchmark tool called HammerDB to simulate an Online Transaction Processing (OLTP) workload against both the Oracle and Microsoft SQL databases. Table 1 shows the VM configurations.

Table 1. Database VM Configurations

| Workloads | # of VMs | # of vCPU | Memory |
|---|---|---|---|
| Oracle | 1 | 6 | 192 GB |
| Microsoft SQL | 1 | 10 | 112 GB |

The HammerDB benchmark tool was used to simulate the retail business example described above. Table 2 shows the HammerDB workload configuration.

Table 2. HammerDB Workload Settings

| Setting name | Value |
|---|---|
| Total transactions per user | 10,000,000 |
| Number of warehouses | 10,000 |
| Minutes of ramp up time | 10 |
| Minutes of test duration | 50 |
| Use all warehouses | Yes |
| No. of virtual users | 80 |
| User delay (ms) | 500 |
| Repeat delay (ms) | 500 |
| Iterations | 1 |

To ensure consistent results, the OLTP workload benchmark was run multiple times, and the final results are the average of three test runs. Table 3 below shows the NOPM and TPM results for the Oracle and Microsoft SQL workloads.

Table 3. Workloads results

| Workloads | NOPM | TPM |
|---|---|---|
| Oracle | 147,874 | 313,130 |
| Microsoft SQL | 130,824 | 300,978 |
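The following minimal Python sketch checks each averaged result in Table 3 against the stated requirements (approximately 120,000 NOPM and 300,000 TPM) and reports the margin. Treating the "approximately" targets as exact thresholds is an assumption made here for illustration, and `headroom_pct` is a hypothetical helper.

```python
# Targets and averaged results as reported in this post.
TARGETS = {"NOPM": 120_000, "TPM": 300_000}  # stated as "around" these values
RESULTS = {
    "Oracle": {"NOPM": 147_874, "TPM": 313_130},
    "Microsoft SQL": {"NOPM": 130_824, "TPM": 300_978},
}


def headroom_pct(measured: int, target: int) -> float:
    """Percentage by which a measured value exceeds (positive) or misses (negative) its target."""
    return (measured - target) / target * 100


if __name__ == "__main__":
    for workload, metrics in RESULTS.items():
        for metric, value in metrics.items():
            target = TARGETS[metric]
            status = "meets" if value >= target else "misses"
            print(f"{workload:14} {metric}: {value:>9,} {status} target ({headroom_pct(value, target):+.1f}%)")
```

Both workloads clear both targets. Oracle has about 23% NOPM headroom, while Microsoft SQL clears the TPM target by only about 0.3%.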

Summary

Based on these results, both the Oracle and Microsoft SQL workloads exceeded their NOPM and TPM requirements, which gives us confidence that subscribing to Dell APEX offerings will achieve the desired outcomes. With Dell APEX, the Solutions Engineers did not spend time researching or validating what hardware to procure for these workloads; instead, they used the Dell APEX console to subscribe to resources already engineered to meet the business requirements.

In the next blog, we will fully populate an APEX Private Cloud and APEX Data Storage Services subscription with Oracle and Microsoft SQL workloads to show how well it handles a demanding workload.

Additional resources

Containerized Microsoft SQL Server on Dell Technologies APEX

Oracle Linux HCL certification of Dell PowerEdge servers

Fri, 07 Apr 2023 19:40:11 -0000


The Oracle Linux and Virtualization Hardware Certification List (HCL) is an Oracle program that provides a list of certified Server Systems, Storage Systems, and Oracle Linux KVM for its customers. Dell Technologies has been a close, longtime partner of the Oracle HCL team and has participated in the HCL certification program for over a decade. HCL certifications give Dell and Oracle customers confidence in running Oracle Linux on the Dell PowerEdge servers of their choice.

New server certifications involve close collaboration between Oracle and Dell to ensure that adequate testing and bug resolution have been performed on the target server type. The program entails running and passing a suite of certification tests that validate the functionality and robustness of the server components (CPU, memory, disks, and network) on Oracle Linux running the Unbreakable Enterprise Kernel (UEK). Oracle reviews and approves the test results, which are then published on the websites listed below. Through this qualification, Oracle and Dell help ensure that both parties are equipped to provide collaborative support to customers running Oracle Linux and Virtualization environments.

Dell PowerEdge servers certified with the Oracle HCL program can be found at:

- Oracle Linux and Virtualization Hardware Certification List (filter ‘Dell Technologies’)

- Dell Technologies Hardware Compatibility List (HCL) for Oracle Linux (OL) and Oracle VM

The latest collaboration adds the following certifications for Dell's 16th Generation (16G) PowerEdge servers running Intel's 4th Generation Xeon Scalable processors and AMD's 4th Generation EPYC processors:

Dell 16G HCL of OL 9.x running UEK R7

| Server | CPU Series | OL 9.x (x86_64) ISO | UEK R7 |
|---|---|---|---|
| Rack Servers | | | |
| R660 | Scalable x4xx series | OL 9.1 (or higher) | 5.15.0-6.80.3.1 (or higher) |
| R760 | Scalable x4xx series | OL 9.1 (or higher) | 5.15.0-6.80.3.1 (or higher) |
| R6615 | AMD EPYC 4th Gen | OL 9.1 (or higher) | 5.15.0-6.80.3.1 (or higher) |
| R7615 | AMD EPYC 4th Gen | OL 9.1 (or higher) | 5.15.0-6.80.3.1 (or higher) |
| R6625 | AMD EPYC 4th Gen | OL 9.1 (or higher) | 5.15.0-6.80.3.1 (or higher) |
| R7625 | AMD EPYC 4th Gen | OL 9.1 (or higher) | 5.15.0-6.80.3.1 (or higher) |

Dell 16G HCL of OL 8.x running UEK R7

| Server | CPU Series | OL 8.x (x86_64) ISO | UEK R7 |
|---|---|---|---|
| Rack Servers | | | |
| R660 | Scalable x4xx series | OL 8.6 (or higher) | 5.15.0-6.80.3.1 (or higher) |
| R760 | Scalable x4xx series | OL 8.6 (or higher) | 5.15.0-6.80.3.1 (or higher) |
| R6615 | AMD EPYC 4th Gen | OL 8.6 (or higher) | 5.15.0-6.80.3.1 (or higher) |
| R7615 | AMD EPYC 4th Gen | OL 8.6 (or higher) | 5.15.0-6.80.3.1 (or higher) |
| R6625 | AMD EPYC 4th Gen | OL 8.6 (or higher) | 5.15.0-6.80.3.1 (or higher) |
| R7625 | AMD EPYC 4th Gen | OL 8.6 (or higher) | 5.15.0-6.80.3.1 (or higher) |

Note: 16G Intel and 16G AMD servers running UEK R7 (OL 8.6 or higher, or OL 9.x) require a minimum kernel version of 5.15.0-3.60.5.1.

Dell 16G HCL of OL 8.x running UEK R6

| Server | CPU Series | OL 8.x (x86_64) ISO | UEK R6 |
|---|---|---|---|
| Rack Servers | | | |
| R660 | Scalable x4xx series | OL 8.6 (or higher) | 5.4.17-2136.315.5 (or higher) |
| R760 | Scalable x4xx series | OL 8.6 (or higher) | 5.4.17-2136.315.5 (or higher) |
| R6615 | AMD EPYC 4th Gen | OL 8.6 (or higher) | 5.4.17-2136.315.5 (or higher) |
| R7615 | AMD EPYC 4th Gen | OL 8.6 (or higher) | 5.4.17-2136.315.5 (or higher) |
| R6625 | AMD EPYC 4th Gen | OL 8.6 (or higher) | 5.4.17-2136.315.5 (or higher) |
| R7625 | AMD EPYC 4th Gen | OL 8.6 (or higher) | 5.4.17-2136.315.5 (or higher) |

Note: 16G Intel and 16G AMD servers running UEK R6 (OL 8.6 or higher) require a minimum kernel version of 5.4.17-2136.312.3.4.
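The kernel minimums above can be checked on a running host by comparing the output of `uname -r` against them. The following Python sketch does that comparison numerically rather than as plain strings. It assumes the common UEK release layout (for example, `5.15.0-6.80.3.1.el9uek.x86_64`), the function names are hypothetical, and it is an illustration only, not an Oracle- or Dell-supplied tool.

```python
from __future__ import annotations

import platform


def parse_kernel(release: str) -> tuple[int, ...]:
    """Turn e.g. '5.15.0-6.80.3.1.el9uek.x86_64' into (5, 15, 0, 6, 80, 3, 1).

    Assumes the layout '<upstream>-<build>.<dist tag>.<arch>'; parsing of the
    build part stops at the first non-numeric token (such as 'el9uek').
    """
    upstream, _, build = release.partition("-")
    parts = [int(p) for p in upstream.split(".")]
    for token in build.split("."):
        if not token.isdigit():
            break
        parts.append(int(token))
    return tuple(parts)


def meets_minimum(running: str, minimum: str) -> bool:
    # Tuples compare element by element, so 2136.312.x sorts below 2136.315.x.
    return parse_kernel(running) >= parse_kernel(minimum)


# Minimum kernels from the notes above.
UEK_R7_MIN = "5.15.0-3.60.5.1"
UEK_R6_MIN = "5.4.17-2136.312.3.4"

if __name__ == "__main__":
    release = platform.release()  # same string as `uname -r` on Linux
    minimum = UEK_R7_MIN if release.startswith("5.15.") else UEK_R6_MIN
    try:
        verdict = "meets" if meets_minimum(release, minimum) else "is below"
        print(f"{release} {verdict} minimum {minimum}")
    except ValueError:
        print(f"{release}: not a recognizable kernel release string")
```

Comparing parsed integer tuples avoids the pitfalls of plain string comparison, where, for example, "6.80" would sort before "6.9".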

Dell PowerFlex Plug-in for Oracle Enterprise Manager: Simplify the Oracle management experience

Fri, 03 Feb 2023 15:17:53 -0000


Oracle databases are critical components of most business operations. Many organizations have hundreds, if not thousands, of Oracle databases running across on-premises and public cloud locations. Managing and monitoring these databases, including the applications and the underlying infrastructure associated with them, can pose a challenge to IT providers. The Oracle Enterprise Manager (Oracle EM) management tool can help reduce these challenges by enabling users to monitor and manage Oracle IT infrastructure from a single console. It provides a single dashboard for managing all Oracle database deployments, whether in the data center or in the cloud.

The Dell Technologies plug-in for Oracle EM uses Dell OpenManage technology to let Oracle DBAs monitor Dell PowerEdge and Dell PowerFlex systems, and set alerts for them, from the Oracle EM console, enabling a proactive approach to data center management. In addition to Dell infrastructure management, the plug-in supports mapping of database workloads to Dell hardware for quicker fault detection.

What is Oracle EM?

Oracle EM is a single-pane-of-glass graphical user interface (GUI) management tool. Oracle EM is Oracle's flagship management product for the entire Oracle stack. It provides a comprehensive monitoring and management solution for Oracle databases and applications, including Oracle Engineered Systems deployed in the cloud or on-premises. Both large and small enterprises running Oracle products rely on Oracle EM for everyday management of mission-critical applications.

The capabilities of Oracle EM include:

- Enterprise monitoring

- Application management

- Database management

- Middleware management

- Hardware and virtualization management

- Heterogeneous (Non-Oracle) management

- Cloud management

- Lifecycle management

- Application performance management

- Application quality management

What is Oracle EM Plug-in?

An Oracle EM plug-in defines a new type of target that can be monitored by Oracle EM. A target can be defined as an entity that can be monitored within an organization. This entity can be an application running on a server, the server itself, the network, or a storage device.

Oracle EM makes managing target instances simple by enabling you to add a new target to the management framework from the Oracle EM console. After adding a new target, you can take advantage of Oracle Enterprise Manager’s monitoring and administrative features for that target and others.

What is Dell PowerFlex Platform?

Dell PowerFlex is a hyperconverged infrastructure (HCI) platform that combines storage, compute, and networking resources with industry-standard hardware. It empowers organizations to harness the power of software and embrace change while achieving consistently predictable outcomes for mission-critical workloads. Dell PowerFlex is a modern platform that delivers extreme flexibility, massive performance, and linear scalability while simplifying complete infrastructure management and boosting IT agility. It is the ideal foundation for organizations that are looking to modernize their mission-critical applications like Oracle, consolidate heterogeneous workloads, and build agile, private, and hybrid clouds. It is also optimized and validated for a broad set of enterprise workloads, including Oracle Database, Microsoft SQL Server, SAP HANA, Cassandra, MongoDB, Splunk, SAS, and Elasticsearch.

There are several options for a PowerFlex deployment, as described in the following table:

| Deployment Type | Description |
|---|---|
| Hyperconverged | |
| Two-layer | |
| Hybrid hyperconverged | |
| PowerFlex compute-only nodes | |
| PowerFlex storage-only nodes | |

What is Dell Plug-in for Oracle EM and what does it do?

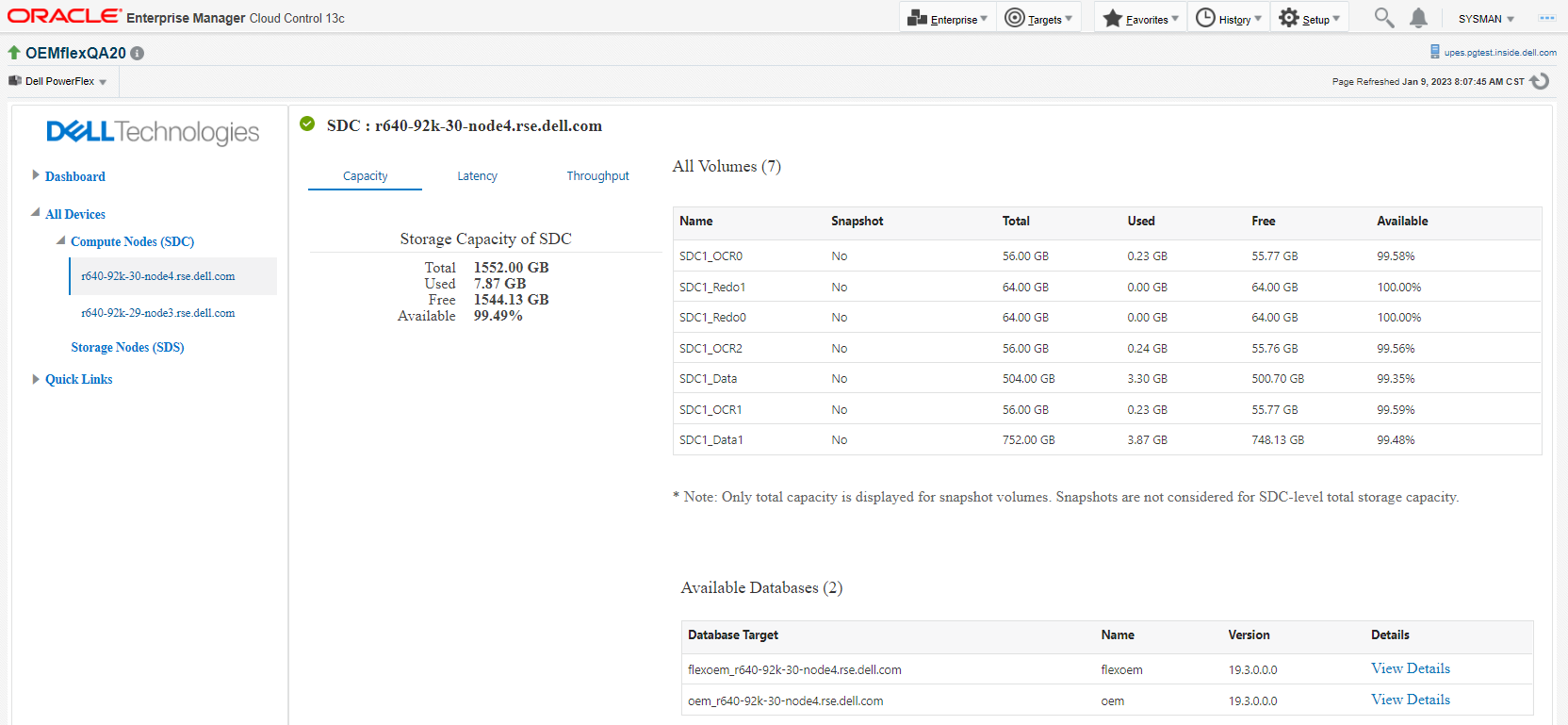

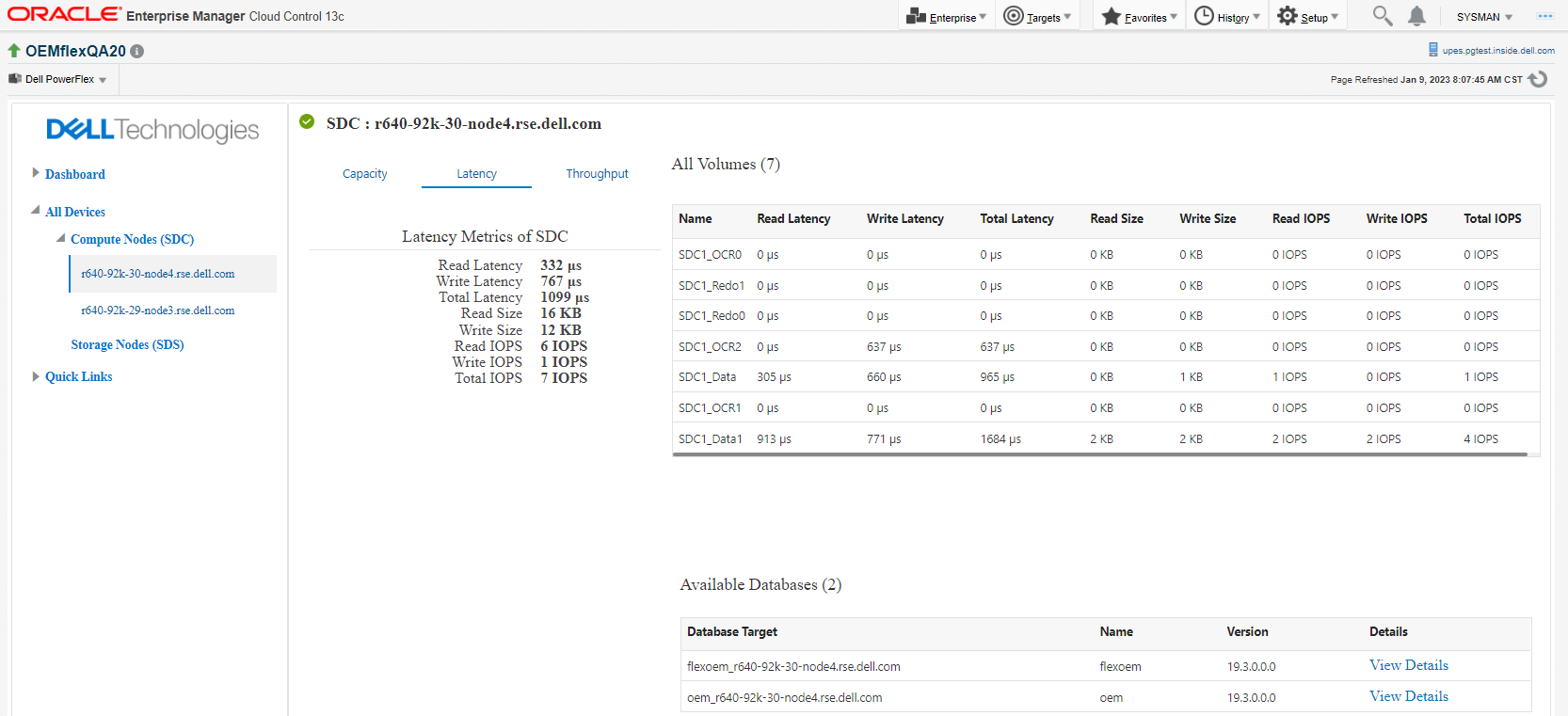

Dell Plug-in for Oracle EM provides a proactive approach to managing the hardware of database servers such as PowerEdge and to monitoring PowerFlex two-layer deployment components, such as the Storage Data Client (SDC) and Storage Data Server (SDS) and their subcomponents, through integration with Dell OpenManage and the PowerFlex gateway. This plug-in allows DBAs to discover, inventory, and monitor the database infrastructure running on the Dell PowerFlex platform. Because Oracle maps databases to server host names, DBAs can proactively monitor for potential database performance issues by drilling down into the SDC or SDS nodes.

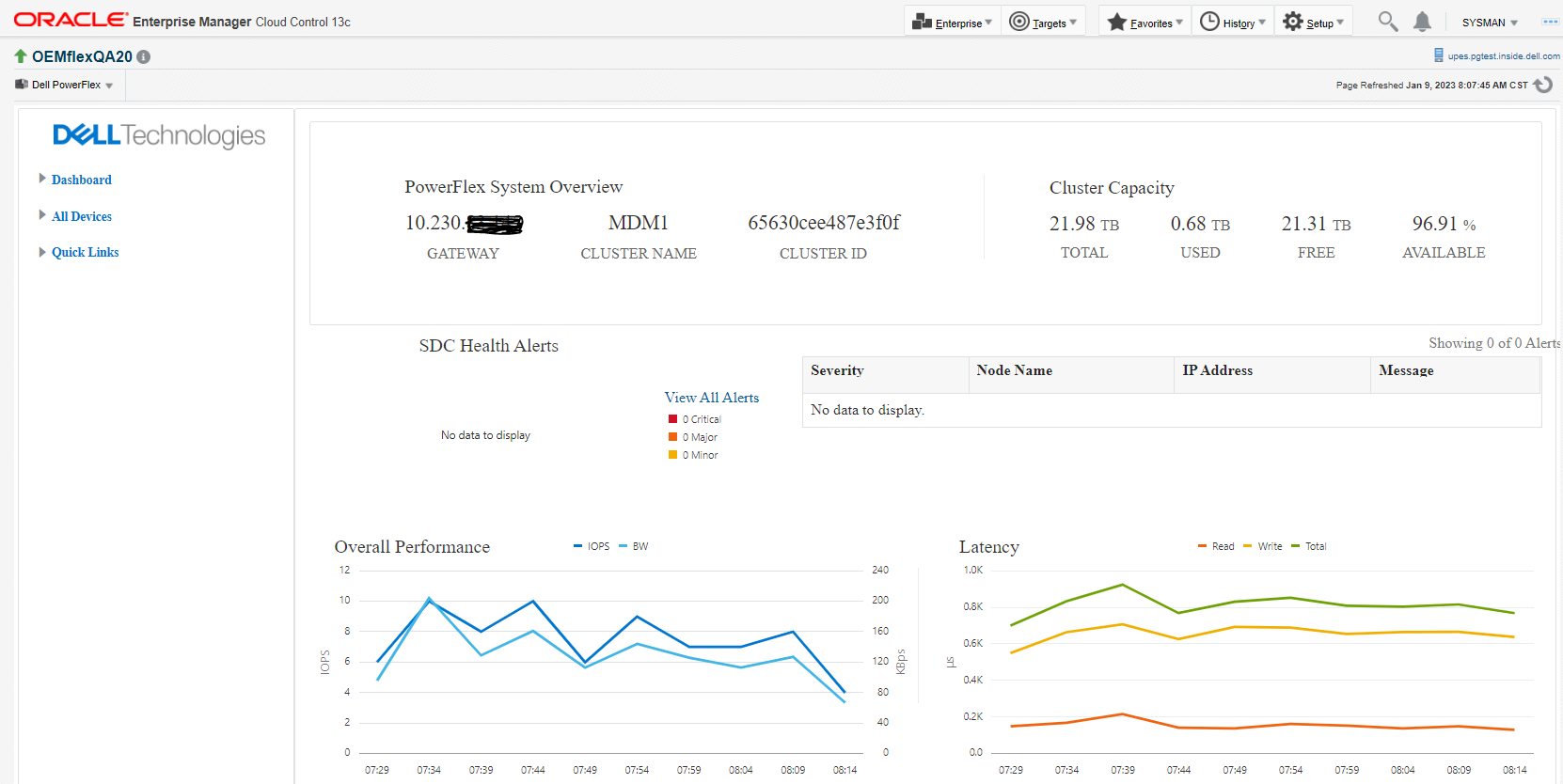

After launching the Oracle EM GUI, DBAs can navigate to the dashboard of the managed target, in this case PowerFlex, to get an overall view of the PowerFlex system, including the PowerFlex gateway IP, cluster storage capacity, SDC node health alerts, and overall storage performance.

This plug-in also provides DBAs the ability to launch the PowerFlex management console from the Oracle EM console to further investigate issues or to perform other PowerFlex tasks such as troubleshooting, configuration, or management of the PowerFlex components.

This release of the plug-in provides Dell server hardware and storage monitoring for Oracle databases running on Dell PowerFlex. Later releases will add more features and make the plug-in available on other Dell platforms as well.

To learn about more features, see the Dell Plug-in for Oracle Enterprise Manager User Guide.

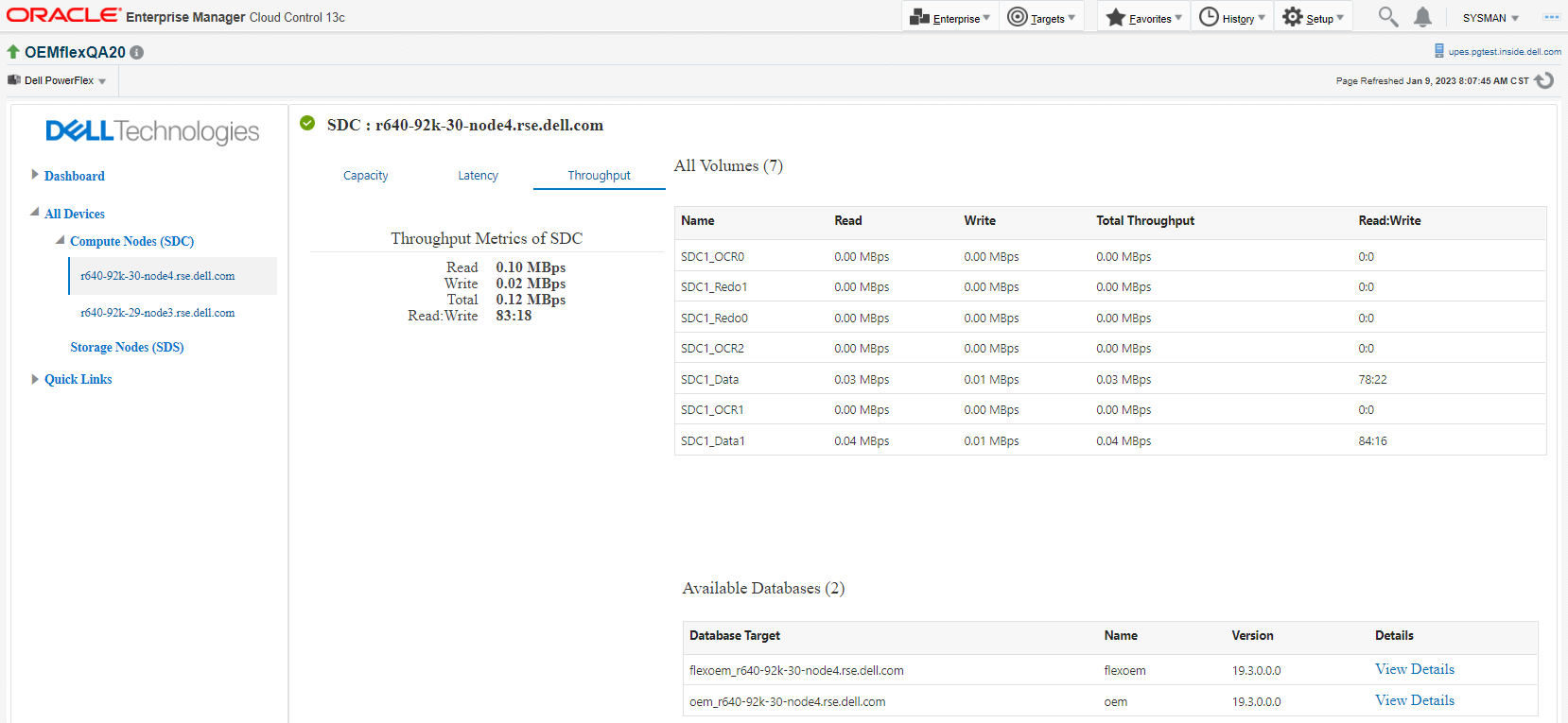

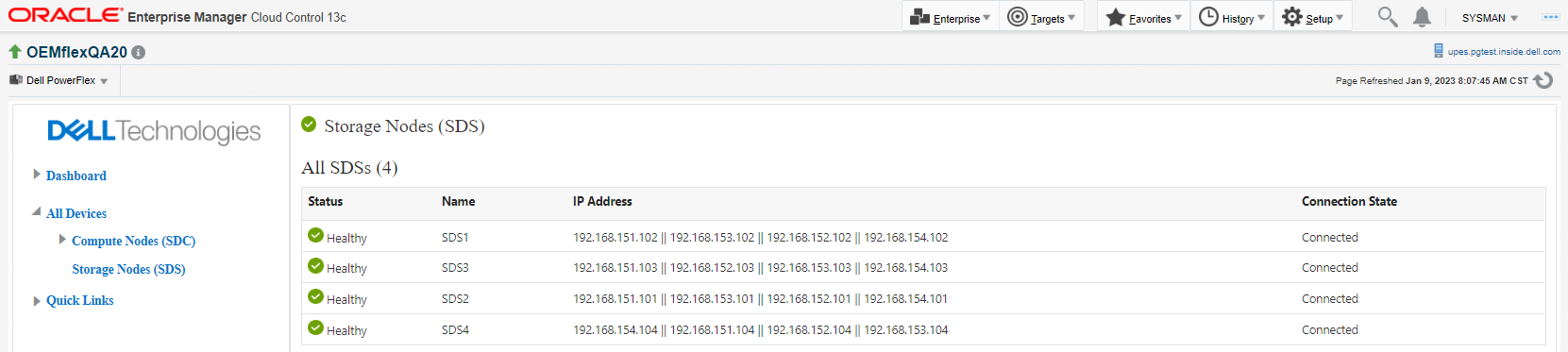

The following screenshots provide examples of Oracle EM in action with PowerFlex infrastructure.

Figure 1. Oracle EM - Dell Plug-in Dashboard

Figure 2. Oracle EM - Dell Plug-in - Compute node Capacity tab

Figure 3. Oracle EM - Dell Plug-in - Compute node Latency tab

Figure 4. Oracle EM - Dell Plug-in - Compute node Throughput tab

Figure 5. Oracle EM - Dell Plug-in - Storage node health

Summary

To improve our customers' business outcomes, Dell Technologies continues to innovate and integrate with third-party software. Future releases of the Dell Plug-in for Oracle EM will add more features to help DBAs manage their Oracle database environments on Dell platforms.

Additional resources

Dell Plug-in for Oracle EM Release Notes

Dell Plug-in for Oracle EM Installation Guide

Have you sized your Oracle database lately? Understanding server core counts and Oracle performance.

Mon, 24 Oct 2022 14:08:36 -0000


Oracle specialists at Dell Technologies are frequently engaged by customers to help size database (DB) servers, whether they are stand-alone or part of a VxRail or PowerFlex system.

Server sizing is important because it helps ensure that the CPU core count matches your workload needs, provides optimal performance, and helps manage Oracle DB software licensing costs.
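To see how core count feeds into licensing cost, the following Python sketch applies Oracle's processor-based licensing metric (as used for Oracle Database Enterprise Edition), where the number of licenses equals total physical cores multiplied by a core factor, rounded up. The 0.5 factor is the value Oracle's Processor Core Factor Table lists for Intel Xeon processors; treat it as an assumption and confirm it against the current table and your own agreement. The function name is hypothetical.

```python
import math


def processor_licenses(sockets: int, cores_per_socket: int, core_factor: float = 0.5) -> int:
    """Processor licenses = total physical cores x core factor, rounded up.

    core_factor=0.5 is an assumption (the Intel Xeon entry in Oracle's
    Processor Core Factor Table); check the current table before relying on it.
    """
    total_cores = sockets * cores_per_socket
    return math.ceil(total_cores * core_factor)


if __name__ == "__main__":
    # Halving the core count halves the license count.
    for sockets, cores in [(2, 32), (2, 16), (2, 8)]:
        print(f"{sockets} x {cores} cores -> {processor_licenses(sockets, cores)} licenses")
```

Because licenses scale with cores, a server with fewer but faster cores can lower licensing cost while improving per-session performance.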

Understanding CPU frequency

When sizing a server for an Oracle DB, CPU frequency matters because it sets the CPU cycle time (cycle time = 1 / frequency). Cycle time can be thought of as the time it takes the CPU to execute a simple instruction, such as an addition.

In this blog post, we will use the base CPU frequency metric, which is the frequency the CPU would run at if turbo-boost and power management options were disabled in the system BIOS.

Focus during the sizing process is therefore on:

- Single-threaded transactions

- The best host-based performance per DB session

Packing as many CPU cores as possible into a server will increase the aggregate workload capability of the server more than it will improve per-DB session performance. Moreover, in most cases, a server that runs Oracle does not require 40+ CPU cores. Using the highest base-frequency CPU will provide the best performance per DB session.

Why use a 3.x GHz frequency CPU?

Cycle time is the inverse of frequency, so a higher-frequency CPU has a shorter cycle time:

- A 2.3 GHz CPU has a cycle time of 0.43 ns

- A 3.4 GHz CPU has a cycle time of 0.29 ns

Moving from a 2.3 GHz CPU to a 3.4 GHz CPU therefore reduces cycle time by approximately 33%.

Although a nanosecond may seem like an extremely short period of time, in a typical DB working environment those nanoseconds add up. For example, when a DB is driving 3,000,000+ logical reads per second while also doing all its other host-based work, the longer cycle time of a lower-frequency CPU can noticeably slow it down.
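The cycle-time arithmetic above can be reproduced with a few lines of Python. This sketch assumes that the same work takes the same number of cycles on both CPUs, so it ignores differences in instructions per cycle, turbo behavior, and memory stalls; the function names are hypothetical.

```python
def cycle_time_ns(freq_ghz: float) -> float:
    # One cycle lasts 1 / f; with f in GHz the result is in nanoseconds.
    return 1.0 / freq_ghz


def cycle_time_reduction(low_ghz: float, high_ghz: float) -> float:
    """Fractional reduction in cycle time when moving from low_ghz to high_ghz."""
    return 1.0 - cycle_time_ns(high_ghz) / cycle_time_ns(low_ghz)


if __name__ == "__main__":
    low, high = 2.3, 3.4
    print(f"{low} GHz: {cycle_time_ns(low):.2f} ns per cycle")
    print(f"{high} GHz: {cycle_time_ns(high):.2f} ns per cycle")
    print(f"Reduction: {cycle_time_reduction(low, high):.1%}")
    # Wall-clock time for a fixed amount of work, here 1 billion cycles.
    cycles = 1_000_000_000
    for f in (low, high):
        print(f"{cycles:,} cycles at {f} GHz: {cycles * cycle_time_ns(f) / 1e9:.3f} s")
```

Computed from unrounded cycle times, the reduction is about 32.4%. The figure of approximately 33% comes from the rounded values 0.43 ns and 0.29 ns.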

An Oracle DB performs a large amount of host-based processing that consumes CPU cycles. For example:

- Traversing the Cache Buffer Chain (CBC)

  - The CBC is a collection of hash buckets and linked lists located in server memory.

  - These structures are used to locate a DB block in the server buffer cache and then to find the row and column the query needs.

- SQL ORDER BY processing

  - Sorting and/or grouping the SQL result set in server memory so that it is returned in the order the user requested.

- Parsing SQL

  - Before a SQL statement can be executed, it must be parsed.

  - CPU is used for both soft and hard parsing.

- PL/SQL

  - PL/SQL is the procedural logic the application uses to run IF-THEN-ELSE and DO-WHILE logic. It is the application logic on the DB server.

- Chaining a DB block just read from storage

  - Once a new DB block is read from storage, a memory buffer is "allocated" for its contents and the block must be placed on the CBC.

- DB session logons and logoffs

  - Session logons and logoffs allocate and deallocate the memory areas the DB session needs to run.

- ACO (Advanced Compression Option)

  - ACO eliminates identical column data in a DB block, which again means more CPU and RAM work to walk through server memory and eliminate redundant data.

- TDE (Transparent Data Encryption)

  - TDE encrypts and decrypts data in server memory when it is accessed by the user. On large inserts and updates, extra CPU is needed to encrypt the data during the transaction.
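A toy model helps show why walking the CBC costs CPU cycles. The following Python sketch hashes a (file number, block number) pair to a bucket and walks that bucket's linked list until it finds the block, counting the headers it visits along the way. It is a simplified illustration only: Oracle's real buffer cache uses its own hashing, latching, and buffer-header structures, and the `BufferHeader` and `BufferCache` names here are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class BufferHeader:
    """Hypothetical stand-in for a buffer header that identifies one cached DB block."""
    file_no: int
    block_no: int
    data: bytes = b""
    next: BufferHeader | None = None  # next header on the same hash chain


@dataclass
class BufferCache:
    """Toy hash table of chained buffer headers (not Oracle's real layout)."""
    n_buckets: int = 8
    buckets: list[BufferHeader | None] = field(init=False)

    def __post_init__(self) -> None:
        self.buckets = [None] * self.n_buckets

    def _bucket(self, file_no: int, block_no: int) -> int:
        # Hash the block address to pick one chain.
        return hash((file_no, block_no)) % self.n_buckets

    def insert(self, file_no: int, block_no: int, data: bytes) -> None:
        # "Chaining" a block just read from storage: link it at the head of its chain.
        idx = self._bucket(file_no, block_no)
        self.buckets[idx] = BufferHeader(file_no, block_no, data, self.buckets[idx])

    def lookup(self, file_no: int, block_no: int) -> tuple[BufferHeader | None, int]:
        """Walk one chain; return the header (or None) and the number of headers visited."""
        node = self.buckets[self._bucket(file_no, block_no)]
        visited = 0
        while node is not None:
            visited += 1
            if node.file_no == file_no and node.block_no == block_no:
                return node, visited
            node = node.next
        return None, visited


if __name__ == "__main__":
    for n_buckets in (64, 4):
        cache = BufferCache(n_buckets=n_buckets)
        for blk in range(1_000):
            cache.insert(1, blk, b"")
        total = sum(cache.lookup(1, blk)[1] for blk in range(1_000))
        print(f"{n_buckets} buckets: {total / 1_000:.1f} headers visited per lookup")
```

With fewer buckets the chains get longer, so each lookup visits more headers. Every header visited is another memory access and more CPU cycles, which is why cycle time matters for this kind of work.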

All of this host-based processing drives CPU instruction execution and memory access (remote and local DIMMs and the L1, L2, and L3 caches). With millions of these operations occurring per second, the fastest CPU is needed to complete instruction execution in the shortest amount of time. Nanoseconds of execution time add up.

Keep in mind that the host OS and the CPU itself are also:

- Running many background memory processes supporting SGA and PGA accesses

- Using CPU cycles to traverse memory addresses to find and move the data the DB session needs

- Working behind the scenes to transfer cache lines from NUMA node to NUMA node and from DIMM to CPU core, and performing snoop/MESI protocol work to keep cache lines in sync

In the end, these processes consume some additional CPU cycles on top of what the DB is consuming, adding to the need for the fastest CPU for our DB workload.

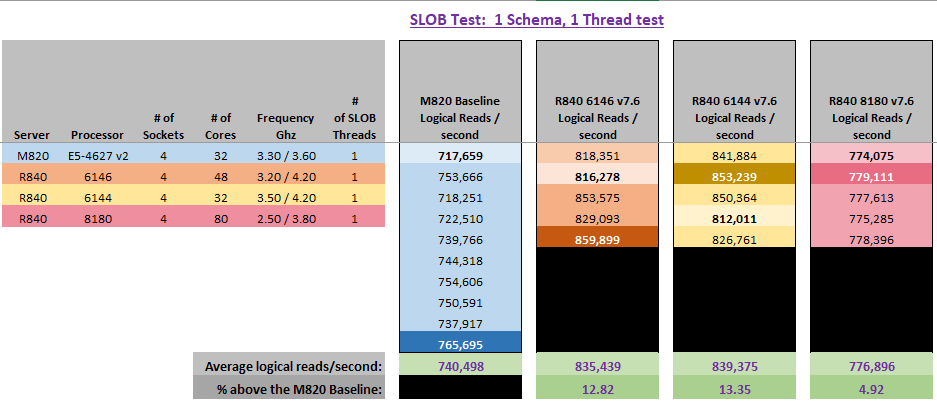

SLOB Logical Read testing:

The 3.x GHz recommendation is based on various Oracle logical read tests that were run with a customer using several Intel CPU models of the same family. The customer's goal was to find the CPU that performs the highest number of logical reads per second to support a critical application that predominantly runs single-threaded queries (that is, no Parallel Query (PQ)).

Each of the tests showed that a higher base-frequency CPU outperformed a lower base-frequency CPU on a per-core basis in SLOB logical read tests (that is, no physical I/O was performed; all processing was host-based, using only the CPU, caches, DIMMs, and remote NUMA accesses).

In the tests performed, the comparison point across the various CPUs and their tests was the number of logical reads per second per CPU core. Moreover, the host was 100% dedicated to our testing and no physical I/O was done for the transactions to avoid introducing physical I/O response times in the results.

The following graphic depicts a few examples from the many tests run using a single SLOB thread (a DB session), and the captured logical read-per-second counts per test.

The CPU baseline was an E5-4627 v2, which has a 3.3 GHz base frequency but only a 7.2 GT/s QPI and 4 x DDR3-1866 DIMM channels, whereas the Skylake CPUs have a 10.2 GT/s UPI and 6 x DDR4-2666 DIMM channels.

Why is there a variance in the number of logical reads per second between test runs of the same CPU model on the same server? SLOB runs a random-read DB block profile, so in each test more or fewer DB blocks were found on the local NUMA node the DB session was running on. Where fewer DB blocks were present locally, the remote NUMA node had to transfer DB blocks over the UPI to the local NUMA node, which takes time to complete.
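One simplified way to express this effect is as a weighted average of local and remote memory access times. The formula below is an illustrative model, not a measurement from these tests; $f_{\text{local}}$ (the fraction of block accesses served from the local NUMA node), $t_{\text{local}}$, and $t_{\text{remote}}$ are symbols introduced here for the sketch.

$$
\bar{t}_{\text{access}} = f_{\text{local}}\,t_{\text{local}} + \bigl(1 - f_{\text{local}}\bigr)\,t_{\text{remote}}, \qquad t_{\text{remote}} > t_{\text{local}}
$$

Because $t_{\text{remote}}$ exceeds $t_{\text{local}}$, any run in which fewer blocks happen to be local (a smaller $f_{\text{local}}$) has a higher average access time and therefore completes fewer logical reads per second.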

If you are still wondering if Dell Technologies can help you find the right CPU and PowerEdge server for your Oracle workloads, consider the following:

- Dell has many PowerEdge server models to choose from, all of which provide the ability to support specific workload needs.

- Dell PowerEdge servers let you choose from several Intel and AMD CPU models and RAM sizes.

- The Oracle specialists at Dell Technologies can perform AWR workload assessments to ensure you get the right PowerEdge server, CPU model, and RAM configurations to achieve optimal Oracle performance.

Summary

If per-DB session, host-based performance is important to you and your business, then CPU matters. Always use the highest base-frequency CPU that still meets your CPU capacity needs, to keep cycle times short and Oracle DB performance high.

If you need more information or want to discuss this topic further, a Dell Oracle specialist is ready to help analyze your application and database environment and to recommend the appropriate CPU and PowerEdge server models to meet your needs. Contact your Dell representative for more information or email us at askaworkloadspecialist@dell.com

Get it right the first time: Dell best practices for busy Oracle DBAs

Tue, 20 Sep 2022 15:10:59 -0000


Oracle databases are critical components of most business operations. As these systems become more intelligent and complex, maintaining optimal Oracle performance and uptime can pose significant challenges to IT—and often has severe implications for the business.

According to a 2022 Quest IOUG survey, industry estimates put the cost of IT downtime at approximately $5,600 per minute, with losses ranging between $140,000 and $540,000 per hour. Maintaining optimal efficiency and performance of database systems is essential. The same survey also found that 43 percent of Oracle DBAs said that database management and deployment tasks inhibit business competitiveness.

What are Oracle best practices for PowerStore?

To address the pressures and challenges that Oracle DBAs and other IT professionals face, Dell offers a Best Practice Program for deploying critical applications on Dell infrastructure. Dell Best Practices for Oracle database solutions are a comprehensive set of recommendations for both the physical infrastructure and the software stack. These recommendations are derived from many hours of testing and from the expertise of the PowerEdge server team, the PowerStore storage team, and Oracle specialists.

Why use these Oracle best practices?

Business-critical applications require an optimized infrastructure to run smoothly and efficiently. Not only does an optimized infrastructure allow applications to run optimally, but it also helps prevent future unexpected outcomes, such as system sluggishness that could potentially affect system resources and application response time. Such unexpected outcomes can often result in revenue loss, customer dissatisfaction and damage to brand reputation.

The purpose of the Oracle Best Practices Program

Dell’s mission is to ensure that its customers have a robust and high-performance database infrastructure solution by providing best practices for Oracle Database 19c running on PowerEdge R750xs servers and PowerStore T model storage including the new PowerStore 3.0. These best practices aim to reduce or eliminate the complex work that our customers would have to perform. To enhance the value of best practices, we identify which configuration changes produce the greatest results and categorize them as follows:

Day 1 through Day 3: Most enterprises implement changes based on the delivery cycle:

- Day 1: Indicates configuration changes that are part of provisioning a database. The business has defined these best practices as an essential part of delivering a database.

- Day 2: Indicates configuration changes that are applied after the database has been delivered to the customer. These best practices address optimization steps to further improve system performance.

- Day 3: Indicates configuration changes that provide small incremental improvements in the database performance.

Highly recommended, moderately recommended, and fine-tuning: Customers want to understand the impact of the best practices, and these terms indicate the value of each best practice.

- Highly recommended: Indicates best practices that provided the greatest performance improvements in our tests.

- Moderately recommended: Indicates best practices that provide modest performance improvements, but which are not as substantial as the highly recommended best practices.

- Fine-tuning: Indicates best practices that provide small incremental improvements in database performance.

Best practices test methodology for Intel-based PowerEdge and PowerStore deployments

Within each layer of the infrastructure, the team sequentially tested each component and documented the results. For example, within the storage layer, the goal was to show how optimizing the number of volumes for the DATA, REDO, and FRA disk groups improves the performance of an Oracle database.

The expectation was that performance would improve with each step. Using this methodology, the final test, which changed the Linux operating system kernel parameters and the database parameters, would achieve an overall optimal Oracle database solution.

The physical architecture consists of the following:

- 2 x PowerEdge R750xs servers

- 1 x PowerStore T model array

Table 1 and Table 2 below show the server configuration and the PowerStore T model configuration.

Table 1. Server configuration

Processors | 2 x Intel® Xeon® Gold 6338 32 core CPU @2.00GHz |

Memory | 16 x 64 GB 3200MT/s memory, total of 1 TB |

Network Adapters | Embedded NIC: 1 x Broadcom BCM5720 1 GbE DP Ethernet; Integrated NIC1: 1 x Broadcom Adv. Dual Port 25 Gb Ethernet; NIC slot 5: 1 x Mellanox ConnectX-5-EN 25 GbE Dual Port |

HBA | 2 x Emulex LPe35002 32 Gb Dual Port Fibre Channel |

Table 2. PowerStore 5000T configuration details

Processors | 2 x Intel® Xeon® Gold 6130 CPU @ 2.10 GHz per node |

Cache size | 4 x 8.5 GB NVMe NVRAM |

Drives | 21 x 1.92 TB NVMe SSD |

Total usable capacity | 28.3 TB |

Front-end I/O modules | 2 x Four-Port 32 Gb FC |

The software layer consists of:

- VMware ESXi 7.3

- Red Hat Enterprise Linux 8.5

- Oracle 19c Database and Grid Infrastructure

Several combinations are possible for the software architecture. For this testing, Oracle Database 19c, Red Hat Enterprise Linux 8.5, and VMware vSphere 7.3 were selected because this design reflects what many database customers use today.

Benchmark tool

HammerDB is a leading benchmarking tool that is used with databases such as Oracle, MySQL, Microsoft SQL Server, and others. Dell’s engineering team used HammerDB to generate an Online Transaction Processing (OLTP) workload to simulate enterprise applications. To compare the benchmark results between the baseline configuration and the best practice configuration, there must be a significant load on the Oracle infrastructure so that the system is sufficiently taxed. This method of testing helps show whether the infrastructure resources are used more effectively after the best practices are applied. Table 3 shows the HammerDB workload configuration.

Table 3. HammerDB workload configuration

Setting name | Value |

Total transactions per user | 1,000,000 |

Number of warehouses | 5,000 |

Minutes of ramp up time | 10 |

Minutes of test duration | 50 |

Use all warehouses | Yes |

User delay (ms) | 500 |

Repeat delay (ms) | 500 |

Iterations | 1 |

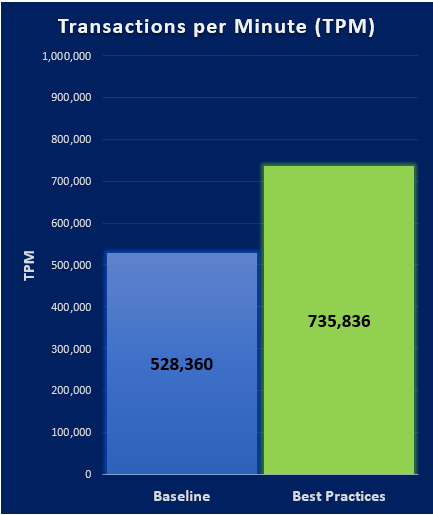

New Orders per Minute (NOPM) and Transactions per Minute (TPM) are the metrics used to interpret the HammerDB results. These metrics come from the TPC-C benchmark and indicate the result of a test. During our best practice validation, we compared these metrics against the baseline configuration to confirm an increase in performance.
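As a minimal sketch of how a best-practice run can be compared against the baseline, the Python snippet below computes the percentage change for each HammerDB metric. The function names and the sample numbers are hypothetical placeholders, not values from this study.

```python
def percent_change(baseline: float, tuned: float) -> float:
    """Percentage change of `tuned` relative to `baseline` (positive = higher)."""
    if baseline <= 0:
        raise ValueError("baseline must be a positive metric value")
    return (tuned - baseline) / baseline * 100.0


def compare_runs(baseline: dict, tuned: dict) -> dict:
    """Compare every metric (for example 'NOPM' or 'TPM') present in both runs."""
    return {
        name: round(percent_change(baseline[name], tuned[name]), 1)
        for name in baseline
        if name in tuned
    }


if __name__ == "__main__":
    # Hypothetical placeholder numbers, not results from this study.
    baseline_run = {"NOPM": 100_000, "TPM": 230_000}
    tuned_run = {"NOPM": 125_000, "TPM": 280_000}
    print(compare_runs(baseline_run, tuned_run))
```

Keeping the comparison per metric makes it easy to see when a change helps NOPM but not TPM, or the reverse.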

Findings

After performing various test cases comparing the baseline configuration and the best practice configuration, we found that performance improved over the baseline configuration when the best practices were applied. Table 4 describes the configuration details for the database virtual machines that are used in the following graphs.

Note: Every database workload and system is different, which means actual results of these best practices may vary from system to system.

Table 4. vCPU and memory allocation

Resource Reservation | Baseline configuration per virtual machine | Number of Oracle database virtual machines | Total |

vCPU | 16 cores | 4 | 64 cores |

Memory | 128 GB | 4 | 512 GB |

Get the most from your Oracle database

Wed, 30 Mar 2022 15:09:53 -0000


This year, the Oracle team at Dell Technologies set out to help our customers get the most from their database investments. To determine optimal price performance, we started by validating three different PowerEdge R750 configurations.

The first configuration was an Intel Xeon Gold 5318Y CPU with 24 processor cores, a 2.10 GHz clock speed, and a 36 MB cache. This PowerEdge configuration had the highest core count of the three configurations, so we expected it to be the performance leader in our workload tests.

The second configuration included two Intel Xeon Gold 6334 CPUs, each with eight processor cores, for a total of 16 cores. Each CPU had a clock speed of 3.6 GHz and an 18 MB cache, for a total of 36 MB. This configuration had a much higher clock speed, even though it had eight fewer processor cores.

The third and final configuration had the fewest cores: one Intel Xeon Gold 6334 CPU with eight processor cores, a 3.6 GHz clock speed, and an 18 MB cache. We did not expect this entry-level configuration to match the performance of the first configuration, because it has 16 fewer processor cores. We included this configuration mainly to provide insight into how a PowerEdge design can start with one processor and scale up when another processor is added.

All three configurations were tested with an OLTP workload using the HammerDB load generation tool. The TPROC-C workload configuration is described in Table 1.

Table 1: Virtual users and related HammerDB workload parameters

| Specifications | Use case 1: 1 x 5318Y CPU | Use case 2: 2 x 6334 CPUs | Use case 3: 1 x 6334 CPU |
|---|---|---|---|
| Virtual Users | 120 | 120 | 60 |
| User Delay (ms) | 500 | 500 | 500 |
| Repeat Delay (ms) | 500 | 500 | 500 |
| Iterations | 1 | 1 | 1 |

We measured our results in New Orders Per Minute (NOPM), as this metric facilitates price performance comparison between different database systems.

| | Use Case 1: 1 x 5318Y CPU | Use Case 2: 2 x 6334 CPUs | Use Case 3: 1 x 6334 CPU |
|---|---|---|---|
| Compared to use case 1 | Reference | 75% of NOPM | 47% of NOPM |

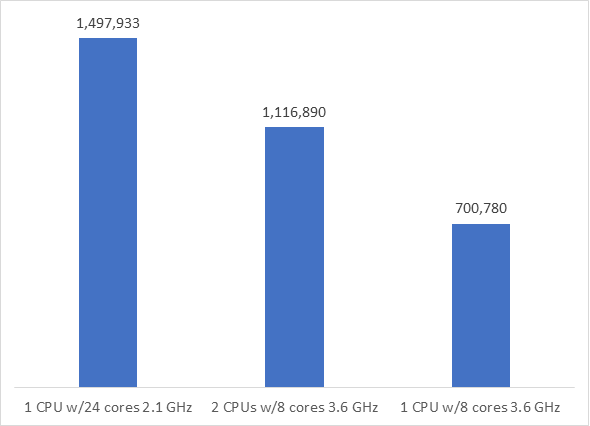

Figure 1: NOPM for each use case

Unsurprisingly, use case 1 was the performance leader. Although we expected this result, we did not expect the high NOPM performance for use cases 2 and 3. Use case 2 supported 75 percent of the NOPM workload of use case 1, with eight fewer processor cores. Use case 3 supported 47 percent of the NOPM of use case 1, with 16 fewer processor cores. The higher base clock speed in both use cases 2 and 3 seemed to increase efficiency.
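The relative percentages above can be cross-checked against the NOPM-per-processor-core figures reported in the price-performance table later in this post. This sketch assumes total NOPM is simply the per-core NOPM multiplied by the core count; because the per-core figures are rounded, the reconstructed totals are approximate.

```python
# NOPM per processor core and core count for each configuration,
# taken from the price-performance table in this post.
NOPM_PER_CORE = {
    "Use case 1: 1 x 5318Y CPU": (62_414, 24),
    "Use case 2: 2 x 6334 CPUs": (69_806, 16),
    "Use case 3: 1 x 6334 CPU": (87_598, 8),
}


def total_nopm(per_core: int, cores: int) -> int:
    """Approximate total NOPM by scaling the per-core figure by the core count."""
    return per_core * cores


def relative_to_first(table: dict) -> dict:
    """Each configuration's total NOPM as a whole-number percentage of the first entry."""
    totals = {name: total_nopm(*values) for name, values in table.items()}
    reference = next(iter(totals.values()))
    return {name: round(100 * total / reference) for name, total in totals.items()}


if __name__ == "__main__":
    for name, pct in relative_to_first(NOPM_PER_CORE).items():
        print(f"{name}: {pct}% of use case 1 NOPM")
```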

Estimated costs are an important factor in the price efficiency of each PowerEdge configuration. Table 2 shows the cost of the PowerEdge configurations combined with Oracle Database Enterprise Edition (EE) licensing using the processor metric. For a more detailed overview of costs, read the white paper.

Table 2: Server and Oracle EE processor core license costs (non-discounted, no support costs)

| | 1 CPU w/ 24 cores 2.1 GHz | 2 CPUs w/ 8 cores 3.6 GHz | 1 CPU w/ 8 cores 3.6 GHz |
|---|---|---|---|
| License totals | $570,000.00 | $380,000.00 | $190,000.00 |
| PowerEdge R750 costs | $70,320.00 | $98,982.00 | $71,790.00 |
| Grand total costs | $640,320.00 | $478,982.00 | $261,790.00 |
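The license totals in Table 2 are consistent with the processor metric under two assumptions that this post does not state: an Oracle Database EE list price of $47,500 per processor license, and an Oracle core factor of 0.5 for Intel Xeon processors. A minimal sketch under those assumptions, using the server costs from Table 2:

```python
import math

# Assumptions (not stated in this post): Oracle Database EE list price per
# processor license, and the Oracle core factor for Intel Xeon processors.
EE_PRICE_PER_PROCESSOR = 47_500.00
INTEL_XEON_CORE_FACTOR = 0.5

# Core count and PowerEdge R750 cost for each configuration, from Table 2.
CONFIGS = {
    "1 CPU w/ 24 cores 2.1 GHz": (24, 70_320.00),
    "2 CPUs w/ 8 cores 3.6 GHz": (16, 98_982.00),
    "1 CPU w/ 8 cores 3.6 GHz": (8, 71_790.00),
}


def ee_license_cost(cores: int,
                    core_factor: float = INTEL_XEON_CORE_FACTOR,
                    price: float = EE_PRICE_PER_PROCESSOR) -> float:
    """Processor-metric cost: cores x core factor, rounded up to whole licenses."""
    licenses = math.ceil(cores * core_factor)
    return licenses * price


def grand_total(cores: int, server_cost: float) -> float:
    """License cost plus server cost, with no discounts or support costs."""
    return ee_license_cost(cores) + server_cost


if __name__ == "__main__":
    for name, (cores, server_cost) in CONFIGS.items():
        print(f"{name}: license ${ee_license_cost(cores):,.2f}, "
              f"total ${grand_total(cores, server_cost):,.2f}")
```

Because licensing scales with core count, halving the cores halves the license cost, which dominates the grand total in every configuration.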

Price per performance-efficiency-per-processor-core is calculated by dividing the total cost of each configuration by its NOPM per processor core.
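The formula can be expressed as a short Python sketch that applies it to the total costs from Table 2 and the NOPM-per-core figures in the table that follows. The helper names are illustrative only.

```python
# Total cost (Table 2) and NOPM per processor core for each configuration.
RESULTS = {
    "1 CPU w/ 24 cores 2.1 GHz": (640_320.00, 62_414),
    "2 CPUs w/ 8 cores 3.6 GHz": (478_982.00, 69_806),
    "1 CPU w/ 8 cores 3.6 GHz": (261_790.00, 87_598),
}


def price_per_efficiency(total_cost: float, nopm_per_core: int) -> float:
    """Total cost divided by NOPM per processor core; lower is better."""
    return total_cost / nopm_per_core


def price_table(results: dict) -> dict:
    """Price per performance-efficiency-per-core for each configuration, in dollars."""
    return {name: round(price_per_efficiency(cost, nopm), 2)
            for name, (cost, nopm) in results.items()}


def percent_lower(reference: float, value: float) -> float:
    """How much lower `value` is than `reference`, as a percentage."""
    return (reference - value) / reference * 100


if __name__ == "__main__":
    table = price_table(RESULTS)
    reference = table["1 CPU w/ 24 cores 2.1 GHz"]
    for name, price in table.items():
        print(f"{name}: ${price:.2f} "
              f"({percent_lower(reference, price):.0f}% lower than use case 1)")
```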

| | 1 CPU w/ 24 cores 2.1 GHz | 2 CPUs w/ 8 cores 3.6 GHz | 1 CPU w/ 8 cores 3.6 GHz |
|---|---|---|---|
| Total cost | $640,320.00 | $478,982.00 | $261,790.00 |
| NOPM per processor core | 62,414 | 69,806 | 87,598 |
| Price per performance-efficiency-per-processor-core | $10.26 | $6.86 | $2.99 |

Use cases 2 and 3 were more efficient than use case 1, because both configurations processed more NOPM per processor core. Although use case 1 was the performance leader, it had the highest price per performance-efficiency-per-processor-core. A higher value means that each NOPM per core that the system processed cost more to generate. More information about the DBAs and wait events for each use case is available in this white paper.

The PowerEdge configuration for use case 2 had twice as much RAM as use case 1, which made the system more expensive. However, the additional memory had minimal impact on performance, because the database SGA size remained the same for each validation test.

Use case 3 had the best price per performance-efficiency-per-core at $2.99, which is 71 percent lower than use case 1 ($10.26). This result shows that customers can start with a small Oracle database configuration with one low-core-count, high-frequency CPU and achieve up to 47 percent of the performance of a very high-core-count, low-frequency CPU. For the configurations used in use cases 1 and 3, the difference in total cost is $378,530, which is a significant saving!

The two-CPU configuration in use case 2 cost $6.86, which is 33 percent lower than use case 1 ($10.26). This result shows that when customers must support a larger workload, they can scale up by adding a second CPU to their server and achieve up to 75 percent of the performance of a very high-core-count, low-frequency CPU, while still paying less than they would have if they had started with the high-core-count, low-frequency configuration. For the configurations used in use cases 1 and 2, the difference in total cost is $161,338, which is again a good saving.

Each database is unique, and the savings results demonstrated in our validation tests may vary depending on your database system. Dell Technologies has an experienced Oracle team that can configure PowerEdge systems based on your performance requirements and can help you optimize price efficiency for your databases. For customers who are interested in more information, see the following next steps:

- Read the white paper: Accelerate Oracle Databases and Maximize Your Investment

- Connect with your Dell Technologies Representative and mention that you want to upgrade your Oracle databases with this price efficiency approach

AMD EPYC Milan Processors and FFC Mode Accelerate Oracle 244%

Thu, 06 May 2021 18:54:59 -0000


Intriguing, right? The Oracle team at Dell Technologies recently configured a Dell EMC PowerEdge server equipped with the new AMD EPYC processors to test the performance of an Oracle 19c database feature called Force Full Cache (FFC) mode. When using FFC mode, the Oracle server attempts to cache the full database footprint in buffer cache memory. This effectively reduces the read latency from what would have been experienced with the storage system to memory access speed. Writes are still sent to storage to ensure durability and recovery of the database. What’s fascinating is that by using Oracle’s FFC mode, the AMD EPYC processors can accelerate database operations while bypassing most storage I/O wait times.

For this performance test our PowerEdge R7525 server was populated with two AMD EPYC 7543 processors with a speed of 2.8 GHz, each with 32 cores. There are several layers of cache in these processors:

- Each Zen3 processor core includes an L1 write-back cache

- Each core has a private 512 KB L2 cache

- Up to eight Zen3 cores share a 32 MB L3 cache

Each processor also supports eight memory channels, and each memory channel supports up to two DIMMs. With all these cache levels and memory channels, our hypothesis was that the AMD EPYC processors would deliver amazing performance. Although we have listed the processor features that we believe will most impact performance, there is much more to these new processors that we haven’t covered. The AMD webpage on modern data workloads is an excellent overview. For a deep dive into RDBMS tuning, this white paper provides more great technical detail.

For the sake of comparison, we also ran an Oracle database without FFC mode on the same server. Both database modes used the exact same technology stacks:

- Oracle Enterprise Edition 19c (19.7.0.0.200414)

- Red Hat Enterprise Linux 8.2

- VMware vSphere ESXi 7.0 Update 1

We virtualized the Oracle database instance since that is the most common deployment model in use today. AMD and VMware are continuously working to optimize the performance of high value workloads like Oracle. In the paper, “Performance Optimizations in VMware vSphere 7.0 U2 CPU Scheduler for AMD EPYC Processors” VMware shows how their CPU scheduler achieves up to 50% better performance than vSphere 7.0 U1. As the performance gap narrows between bare metal and virtualized applications, the gains in agility with virtualization outweigh the minor performance overhead of a hypervisor. The engineering team performing the testing used VMware vSphere virtualization to configure a primary Oracle virtual machine that was cloned to a common starting configuration.

HammerDB is a leading benchmarking tool used with databases like Oracle, Microsoft SQL Server, and others. The engineering team used HammerDB to generate a TPROC-C workload on the database VM. The TPROC-C benchmark is referred to as an Online Transaction Processing (OLTP) workload because it simulates terminal operators executing transactions. When running the TPROC-C workload, the storage system must support thousands of small read and write requests per minute. With a traditional configuration, Oracle’s buffer cache can accelerate only a portion of the reads and writes. The average latency of the system increases when more reads and writes go to the storage system, because wait times are greater for physical storage operations than for memory. This is what the team expected to observe with the Oracle database that was not configured for FFC mode. Storage I/O is continually getting faster, but it is not nearly as fast as I/O served from memory.

Once the test tool warms the cache, most of the reads will be serviced from memory rather than from storage, providing what we hoped would be a significant boost in performance. We cannot separate the individual performance benefits of the AMD EPYC processors from those of Oracle’s FFC mode; however, the efficiencies gained through AMD caches, memory channels, and VMware vSphere optimizations make this performance test fun!

Before reviewing the performance results, it is important to review the virtual machine, storage, and TPROC-C workload configurations. One important difference between the baseline virtual machine (no FFC mode) and the database configuration with FFC mode enabled is the memory allocated to the SGA. A key consideration is that the logical database size must be smaller than the buffer cache. See the Oracle 19c Database Performance Tuning Guide for a complete list of considerations. In this case, the SGA size is 784 GB to accommodate caching the entire database in the Oracle buffer cache. All other configuration parameters, such as vCPU, memory, and disk storage, were identical.
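A rough pre-check for FFC eligibility can be scripted. This is a sketch under stated assumptions: the 10 percent headroom is a hypothetical safety margin, and the buffer cache size should be the buffer cache portion of the SGA rather than the whole SGA. FFC mode itself is enabled with the SQL statement `ALTER DATABASE FORCE FULL DATABASE CACHING` while the database is mounted but not open, and the `FORCE_FULL_DB_CACHING` column of `V$DATABASE` shows whether it is active; the Performance Tuning Guide remains the authoritative reference.

```python
from dataclasses import dataclass


@dataclass
class CacheSizing:
    logical_db_gb: float        # space actually used by the database
    buffer_cache_gb: float      # buffer cache portion of the SGA, not the whole SGA
    headroom_pct: float = 10.0  # hypothetical margin for data growth


def fits_full_cache(sizing: CacheSizing) -> bool:
    """True if the database, plus headroom, is smaller than the buffer cache."""
    required_gb = sizing.logical_db_gb * (1 + sizing.headroom_pct / 100)
    return required_gb < sizing.buffer_cache_gb


if __name__ == "__main__":
    # ~500 GB TPROC-C data set; the 700 GB buffer cache is a hypothetical
    # share of the 784 GB SGA. The 64 GB case mirrors the baseline SGA size.
    print(fits_full_cache(CacheSizing(logical_db_gb=500, buffer_cache_gb=700)))
    print(fits_full_cache(CacheSizing(logical_db_gb=500, buffer_cache_gb=64)))
```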

Memory technologies like Force Full Cache mode should be a key consideration for enterprises, because the AMD EPYC processors enable PowerEdge R7525 servers to support up to 4 TB of LRDIMM memory. Depending on the database and its growth rate, this could support many small to medium-sized systems. The advantage for the business is the ability to accelerate the database by configuring a Dell EMC PowerEdge R7525 server with AMD processors and enough memory to cache the entire database.
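The 4 TB figure follows from the memory topology described earlier: two sockets, eight memory channels per socket, and up to two DIMMs per channel, assuming the 128 GB modules listed in Table 4. A quick sketch of that arithmetic (supported DIMM types and sizes should be confirmed against Dell’s specifications for the server):

```python
def max_memory_tb(sockets: int, channels_per_socket: int,
                  dimms_per_channel: int, dimm_gb: int) -> float:
    """Capacity in TB (1 TB = 1,024 GB) when every DIMM slot is populated."""
    slots = sockets * channels_per_socket * dimms_per_channel
    return slots * dimm_gb / 1024


def installed_tb(dimm_count: int, dimm_gb: int) -> float:
    """Capacity in TB for a given number of installed DIMMs."""
    return dimm_count * dimm_gb / 1024


if __name__ == "__main__":
    # Two EPYC sockets, 8 channels each, 2 DIMMs per channel, 128 GB modules.
    print(f"Fully populated: {max_memory_tb(2, 8, 2, 128):.0f} TB")
    # Table 4 configuration: 16 x 128 GB.
    print(f"As tested: {installed_tb(16, 128):.0f} TB")
```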

Table 1: Virtual Machine configuration and SGA size

Component | Oracle Baseline | Oracle Force Full Cache Mode |

vCPU | 32 | 32 |

Memory | 960 GB | 960 GB |

Disk Storage | 400 GB | 400 GB |

SGA Size | 64 GB | 784 GB |

This database storage configuration uses VMware vSphere’s Virtual Machine File System (VMFS) and Oracle’s Automatic Storage Management (ASM) on Direct Attached Storage (DAS). The storage configuration is detailed in the table below. ASM normal redundancy mirrors each extent, which protects against the failure of one disk.

Table 2: Storage and ASM configuration

Storage Group | Size (GB) | ASM Redundancy |

Data | 800 | Normal |

Redo | 50 | Normal |

FRA | 50 | Normal |

TEMP | 250 | Normal |

OCR | 50 | Normal |
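Because ASM normal redundancy keeps two copies of each extent, the raw capacity needed is roughly twice the usable size. The sketch below assumes the sizes in Table 2 are usable sizes, and it ignores the extra free space ASM needs to restore redundancy after a disk failure (reported as `REQUIRED_MIRROR_FREE_MB` in `V$ASM_DISKGROUP`).

```python
# Disk group sizes from Table 2, in GB (assumed to be usable sizes).
DISK_GROUPS_GB = {"Data": 800, "Redo": 50, "FRA": 50, "TEMP": 250, "OCR": 50}

# Copies of each extent that ASM keeps at each redundancy level.
MIRROR_COPIES = {"EXTERNAL": 1, "NORMAL": 2, "HIGH": 3}


def raw_capacity_gb(usable_gb: float, redundancy: str = "NORMAL") -> float:
    """Raw disk space needed to provide `usable_gb` at the given ASM redundancy.

    Ignores the extra free space needed to re-mirror data after a disk failure.
    """
    return usable_gb * MIRROR_COPIES[redundancy.upper()]


if __name__ == "__main__":
    usable = sum(DISK_GROUPS_GB.values())
    raw = sum(raw_capacity_gb(size) for size in DISK_GROUPS_GB.values())
    print(f"Usable: {usable} GB, raw with NORMAL redundancy: {raw} GB")
```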

We used HammerDB to create a TPROC-C database with 5,000 simulated warehouses, which generated approximately 500 GB of data, small enough to be loaded entirely in the buffer cache. The other HammerDB settings we used are shown in this table:

Table 3: HammerDB: TPROC-C test configuration

Setting | Value |

Timed Driver Script | Yes |

Total Transactions per user | 1,000,000 |

Minutes of Ramp Up Time | 5 |

Minutes of Test Duration | 55 |

Use All Warehouses | Yes |

Number of Virtual Users | 16 |

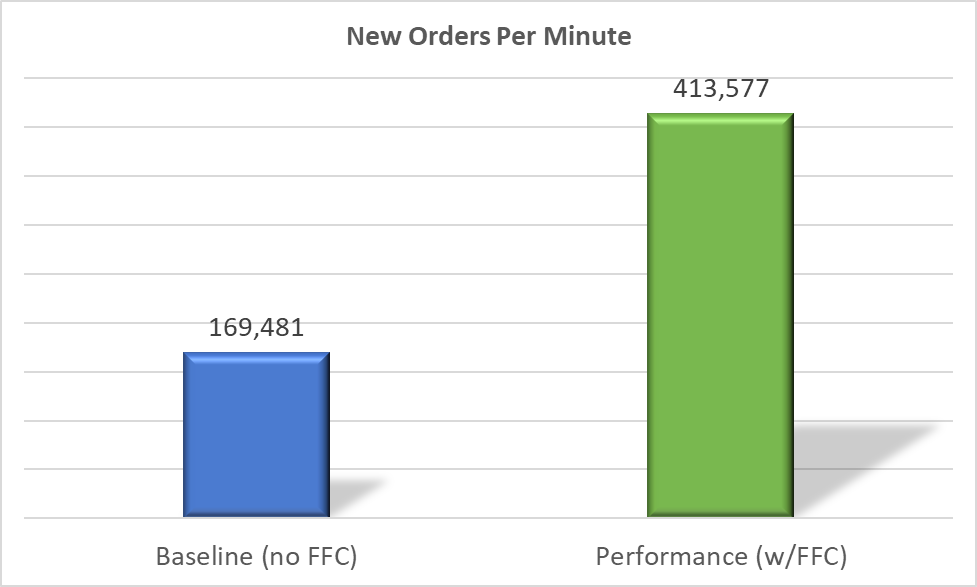

New Orders Per Minute (NOPM) is a metric that indicates the number of orders that were fully processed in one minute. This metric provides insight into the performance of the database system and can be used to compare two different systems running the same TPROC-C workload. The AMD EPYC processors combined with FFC mode delivered an outstanding 244 percent of the baseline system’s NOPM. This is a powerful finding because it shows how tuning the hardware and software stack can accelerate database performance without adding more resources. In this case, the optimal technology stack included AMD EPYC processors which, when combined with Oracle’s FFC mode, accelerated NOPM to 2.4 times the baseline.

Figure 1: New Orders Per Minute Comparison

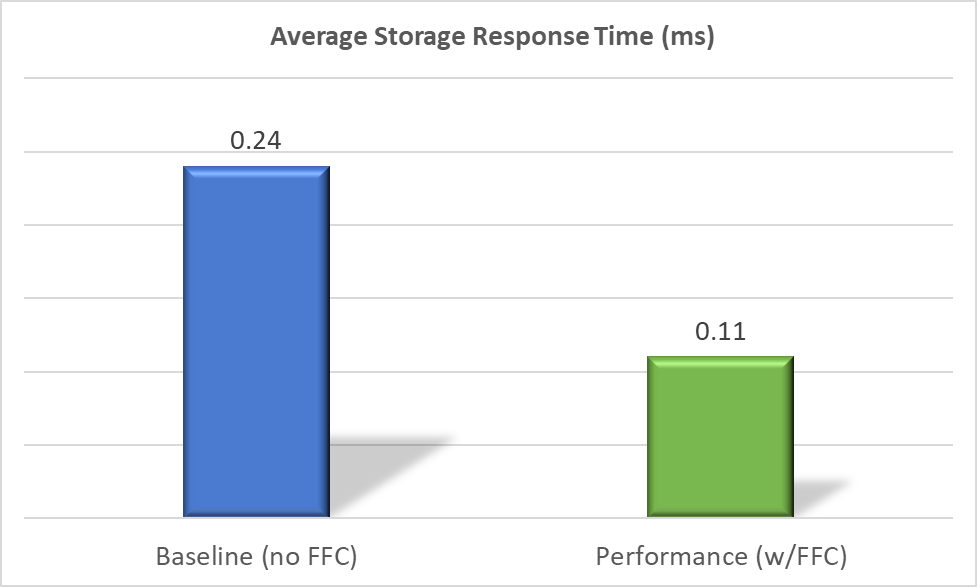

What factors played a role in boosting performance? The Average Storage Response Time chart for the baseline test shows that the system’s average storage response time was 0.24 milliseconds. For OLTP production systems, the goal is for most storage response times to be less than 1 millisecond, as this indicates healthy storage performance. Thus, the baseline system was demonstrating good performance; however, even with these low storage response times, the system achieved only 169,481 NOPM.

With FFC mode enabled, the entire database resided in the database buffer cache. This resulted in fewer physical reads and faster average storage response times. Results show that the average storage response time with FFC mode was less than half the baseline, at just 0.11 milliseconds, or 2.2 times faster than the baseline. With most of the I/O activity happening in memory, the AMD EPYC processor cache and memory channel features provided a big boost in accelerating the database workload!

Figure 2: Average Storage Response Time

The combination of AMD EPYC processors with Oracle’s Force Full Cache mode should provide extremely good performance for databases that are smaller than 4 TB. Our test results show NOPM at 244 percent of the baseline and faster response times, meaning that this solution stack built on the PowerEdge R7525 can accelerate an Oracle database that fits the requirements of FFC mode. Every database system is different, and results will vary, but in our tests this AMD-based solution provided substantial performance gains.

Supporting detail:

Table 4: PowerEdge R7525 Configuration

Component | Detail |

Processors | 2 x AMD EPYC 7543 32-Core processors @ 2800 MHz |

Memory | 16 x 128GB @ 3200 MHz (for a total of 2TB) |

Storage | 8 x Dell Express Flash NVMe P4610 1.6TB SFF (Intel 2.5 inch 8GT/s) |

Embedded NIC | 1 x Broadcom Gigabit Ethernet BCM5720 |

Integrated NIC | 1 x Broadcom Advanced Dual Port 25 Gb Ethernet |

Oracle Database Solutions on Docker Container and Kubernetes

Sat, 27 Apr 2024 13:07:57 -0000


The Opportunity

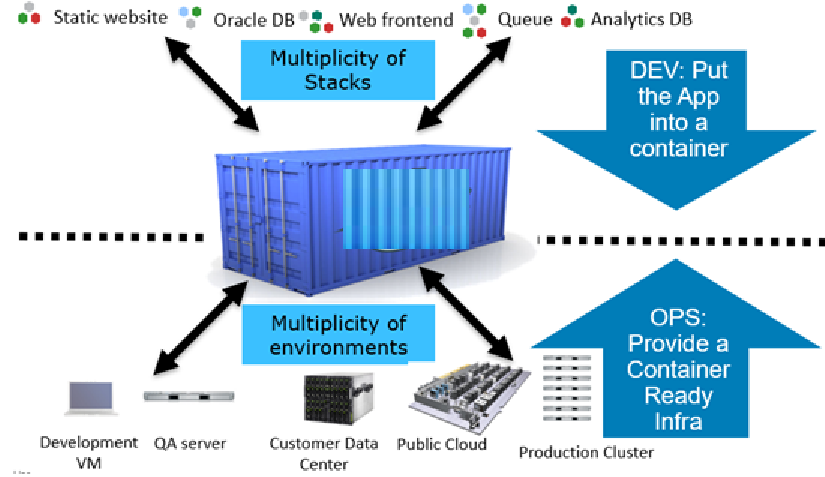

A container is a lightweight, stand-alone, executable package of software that includes everything needed to run an application: code, runtime, system tools, system libraries, and settings. A container isolates software from its environment and ensures that it works uniformly despite differences between development and staging environments. Containers share the host operating system kernel and do not require an operating system for each application, driving higher server efficiency and reducing server and licensing costs.

The traditional build process for database application development is complex, time-intensive, and difficult to schedule. With containers and the right supporting tools, the traditional build process is transformed into a self-service, on-demand experience that enables developers to rapidly deploy applications. In the remaining sections of this article, we describe how to develop the capability to have an Oracle database container running in a matter of minutes.

Oracle has a long commitment to supporting developer communities working in containerized environments. At the DockerCon US event in April 2017, Oracle announced that its Oracle 12c database software would be available alongside other Oracle products on Docker Store, the standard for DevOps developers. DevOps developers have pulled over four billion images from the Docker Store and are increasingly turning to it as the canonical source for high-quality curated content. In today’s database world, customers are increasingly switching to containers with Kubernetes management to build and run a wide variety of applications and services in highly available, on-premises hosted environments.

Containerized environments can reliably offer high-performance compute, storage and network capabilities with the necessary configurations. A containerized environment also reduces overhead costs by providing a repeatable process for application deployment across build, test, and production systems. To enable the deployment and management of containerized applications, organizations use Kubernetes technologies to operate at any scale including production. Kubernetes enables powerful collaboration and workflow management capabilities by deploying containers for cloud-native, distributed applications and microservices. It even allows you to repackage legacy applications for increased portability, more efficient deployment, and improved customer and employee engagement.

Figure 1: Docker containers for reducing development complexity

The Solution

For many companies, to boost productivity and time to value, container usage starts with the departments that are focused on software development. Their journey typically starts with installing, implementing, and using containers for applications that are based on the microservice architecture as shown in Figure 2. Developers want to be able to build microservices-based container applications without changing code or infrastructure.

This approach enables portability between data centers and obviates the need for changes to traditional applications, enabling faster development and deployment cycles. Oracle Docker containers run the microservices, while Kubernetes is used for container orchestration. Also, the microservices running within Docker containers can communicate with the Oracle databases by using messaging services.

Figure 2: Architecture for Oracle Database featuring Docker and Kubernetes

Using orchestration and automation for containerized applications, developers can self-provision an Oracle database, thereby increasing flexibility and productivity while saving substantial time in creating a production copy for development and testing environments. This solution enables development teams to quickly provision isolated applications without the traditional complexities.

Our Dell EMC engineers recently tested and validated a solution for Oracle Database using Docker containers and Kubernetes. The solution uses Oracle Database in containers, Kubernetes, and the Container Storage Interface (CSI) Driver for Dell EMC PowerFlex OS to show how DevOps teams can transform their development processes.

Dell EMC engineers demonstrated two use cases for this solution. Both use cases feature four Dell EMC PowerEdge R640 servers, which are an integral part of Dell EMC VxFlex Ready Nodes, and a CSI Driver for Dell EMC PowerFlex, all hosted in our Dell EMC labs.

Use Case 1

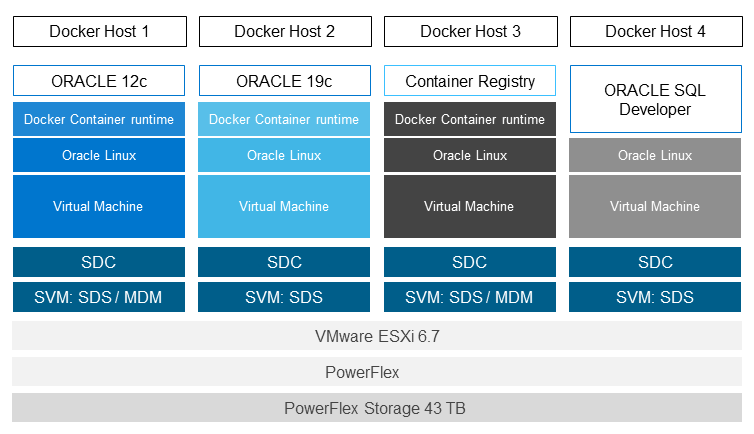

In use case 1, the Dell EMC engineers manually provisioned the container-based development and testing environment shown in Figure 3 as follows:

- Install Docker.

- Activate the Docker Enterprise Edition license.

- Run the Oracle 12c database within the Docker container.

- Build and run the Oracle 19c database in the Docker container.

- Import the sample Oracle schemas pulled from GitHub into the Oracle 12c and 19c databases.

- Install Oracle SQL Developer and query tables from the container to demonstrate that the connection from Oracle SQL Developer to Oracle database functions.
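The container launch step in use case 1 can be scripted. The sketch below only builds a `docker run` command line. It assumes an image built from Oracle’s `oracle/docker-images` GitHub project and tagged `oracle/database:19.3.0-ee`, along with that project’s `ORACLE_SID` and `ORACLE_PDB` environment variables and its `/opt/oracle/oradata` data directory. The tag, container name, and host paths are hypothetical and should be checked against the image you actually build; passwords are deliberately left out.

```python
import shlex
import subprocess
from typing import List, Optional


def oracle_run_args(name: str,
                    image: str = "oracle/database:19.3.0-ee",  # assumed local tag
                    sid: str = "ORCLCDB",
                    pdb: str = "ORCLPDB1",
                    host_port: int = 1521,
                    data_dir: Optional[str] = None) -> List[str]:
    """Build a `docker run` argument list for one Oracle database container."""
    args = ["docker", "run", "-d", "--name", name,
            "-p", f"{host_port}:1521",  # Oracle Net listener port inside the container
            "-e", f"ORACLE_SID={sid}",
            "-e", f"ORACLE_PDB={pdb}"]
    if data_dir:
        # Keep the data files on the host so they survive container removal.
        args += ["-v", f"{data_dir}:/opt/oracle/oradata"]
    args.append(image)
    return args


def launch(args: List[str]) -> None:
    """Run the command; requires Docker and the image to be available locally."""
    subprocess.run(args, check=True)


if __name__ == "__main__":
    cmd = oracle_run_args("ora19c", data_dir="/data/ora19c")  # hypothetical names
    print(shlex.join(cmd))  # review the command before calling launch(cmd)
```

Building the argument list separately from running it makes the command easy to review and reuse across development and test hosts.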

Figure 3: Use Case 1 - Architecture

The key benefit of our first use case was the time that we saved by using Docker containers instead of the traditional manual installation and configuration method of building a typical Oracle database environment. Use Case 1 planning also demonstrated the importance of selecting the Docker registry location and storage provisioning options that are most appropriate for the requirements of a typical development and testing environment.

Use Case 2

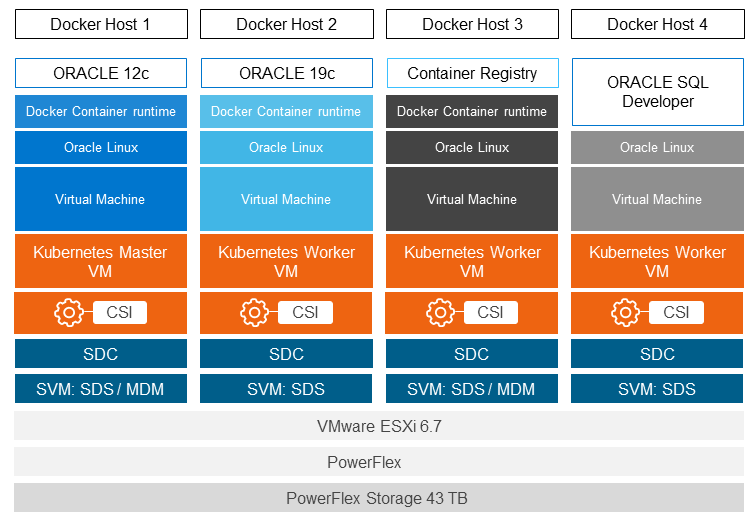

Use Case 2 demonstrates the value of CSI plug-in integration with Kubernetes and Dell EMC PowerFlex storage to automate storage configuration. Kubernetes orchestration with PowerFlex provides a container deployment strategy with persistent storage. It demonstrates the ease, simplicity, and speed of scaling out a development and testing environment from production Oracle databases. In this use case, a developer provisions the Oracle database in containers on the same infrastructure described in Use Case 1, this time using Kubernetes with the CSI Driver for Dell EMC PowerFlex. Figure 4 depicts the detailed architecture of Use Case 2.

Figure 4. Use Case 2 – Architecture

Use Case 2 demonstrates how Docker, Kubernetes, and the CSI Driver for Dell EMC PowerFlex accelerate the development life cycle for Oracle applications. Kubernetes configured with the CSI Driver for Dell EMC VxFlex OS simplified and automated the provisioning and removal of containers with persistent storage. Engineers used YAML configuration files along with the kubectl command to quickly deploy and delete containers and entire pods. Our solution demonstrates that developers can provision Oracle databases in containers without the complexities associated with installing the database and provisioning storage.
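The YAML files themselves are not reproduced in this post. As a hypothetical sketch, the Python below generates a PersistentVolumeClaim and a Pod that mounts it, serialized as JSON, which `kubectl apply -f` also accepts. The StorageClass name `vxflexos`, the image tag, and all object names are assumptions, not the configuration used in our lab.

```python
import json


def pvc_manifest(name: str, size_gi: int,
                 storage_class: str = "vxflexos") -> dict:  # hypothetical StorageClass
    """PersistentVolumeClaim that asks the CSI driver to provision a volume."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": f"{size_gi}Gi"}},
        },
    }


def oracle_pod_manifest(name: str, image: str, claim_name: str) -> dict:
    """Pod running the Oracle image with its data directory on the claimed volume."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [{
                "name": "oracle",
                "image": image,
                "ports": [{"containerPort": 1521}],
                "volumeMounts": [{"name": "oradata",
                                  "mountPath": "/opt/oracle/oradata"}],
            }],
            "volumes": [{"name": "oradata",
                         "persistentVolumeClaim": {"claimName": claim_name}}],
        },
    }


if __name__ == "__main__":
    items = [
        pvc_manifest("oradata-pvc", 100),
        oracle_pod_manifest("ora19c", "oracle/database:19.3.0-ee", "oradata-pvc"),
    ]
    # A v1 List lets kubectl apply or delete both objects from one file.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Saving the output to a file, for example `oracle.json`, allows `kubectl apply -f oracle.json` to deploy the claim and pod, and `kubectl delete -f oracle.json` to remove them.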

Use Case Observations and Benefits

Kubernetes container orchestration is an essential addition for database developers on a containerized development journey. Automation becomes essential as containerized application deployments expand. In this case, it enabled our developers to bypass the complexities associated with plain scripting. Instead, our solution uses open source Kubernetes to accomplish the developer’s objectives. The CSI plug-in integrates with Kubernetes and exposes the capabilities of the Dell EMC PowerFlex storage system, enabling the developer to:

- Take a snapshot of the Oracle database, including the sample schema that was pulled from the GitHub site.

- Protect changes made to the existing Oracle database by taking a snapshot of it in any state. The snapshot is created with the CSI plug-in Driver for Dell EMC PowerFlex OS, which is installed in Kubernetes to provide persistent storage.

- Restore an Oracle 19c database to its pre-deletion state using a snapshot, even after removing the containers and the attached storage.
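The snapshot and restore steps map onto the standard Kubernetes `VolumeSnapshot` API. In this hypothetical sketch, the `VolumeSnapshotClass` name `vxflexos-snapclass`, the StorageClass name, and the object names are assumptions. The `snapshot.storage.k8s.io/v1` API also requires the snapshot CRDs and snapshot controller to be installed; older clusters used `v1beta1`.

```python
import json

SNAPSHOT_GROUP = "snapshot.storage.k8s.io"


def snapshot_manifest(name: str, pvc_name: str,
                      snapshot_class: str = "vxflexos-snapclass") -> dict:  # hypothetical
    """VolumeSnapshot: point-in-time copy of the volume behind `pvc_name`."""
    return {
        "apiVersion": f"{SNAPSHOT_GROUP}/v1",
        "kind": "VolumeSnapshot",
        "metadata": {"name": name},
        "spec": {
            "volumeSnapshotClassName": snapshot_class,
            "source": {"persistentVolumeClaimName": pvc_name},
        },
    }


def restore_pvc_manifest(name: str, snapshot_name: str, size_gi: int,
                         storage_class: str = "vxflexos") -> dict:  # hypothetical
    """New PVC whose initial contents come from an existing VolumeSnapshot."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": f"{size_gi}Gi"}},
            "dataSource": {"name": snapshot_name,
                           "kind": "VolumeSnapshot",
                           "apiGroup": SNAPSHOT_GROUP},
        },
    }


if __name__ == "__main__":
    # Apply the snapshot first; apply the restore claim only when it is needed.
    print(json.dumps(snapshot_manifest("ora19c-snap", "oradata-pvc"), indent=2))
    print(json.dumps(restore_pvc_manifest("oradata-restored", "ora19c-snap", 100), indent=2))
```

Saving each manifest to its own file keeps the two steps separate: the snapshot is taken while the database exists, and the restore claim is applied later, for example after the original containers and storage have been removed, and then mounted by a new pod.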

In our second use case, using Kubernetes combined with the CSI Driver for Dell EMC PowerFlex OS simplified and automated the provisioning and removal of containers and storage. In this use case, we used YAML files along with the kubectl command to deploy and delete the containers and pods. All these components facilitate automation of the container hosting the Oracle database on top of PowerFlex.

Kubernetes, enhanced with the CSI Driver for Dell EMC VxFlex OS, provides the capability to attach Dell EMC VxFlex OS storage system volumes to containerized applications and manage them. Our developers worked with a familiar Kubernetes interface to modify a copy of the Oracle sample schema from the GitHub repository and connect it to the Oracle database container. After modifying the database, the developer protected all progress by using the snapshot feature of the Dell EMC VxFlex OS storage system to create a point-in-time copy of the database.

Comparing Use Case 1 to Use Case 2 demonstrated how we can easily shift away from the complexities of scripting and using the command line to implement a self-service model that accelerates container management. The move to a self-service model, which increases developer productivity by removing bottlenecks, becomes increasingly important as the Docker container environment grows.

Summary

The power of containers and automation shows how tasks that traditionally required multiple roles, with developers and others working alongside storage and database administrators, can be simplified. Kubernetes with the CSI plug-in enables developers and others to do more in less time and with fewer complexities. The time savings mean that coding projects can be completed faster, benefiting both the developers and the business-side employees and customers. Overall, the key benefit shown in comparing our two use cases was the transformation from a manually managed container environment to an orchestrated system with more capabilities.

Innovation drives transformation. In the case of Docker containers and Kubernetes, the key benefit is a shift to rapid application deployment services. Oracle and many others have embraced containers and provide images of applications, such as the Oracle 12c database, that can be deployed in days and instantiated in seconds. Installations and other repetitive tasks are replaced with packaged applications that enable the developer to work quickly in the database. The ease of using Docker and Kubernetes, combined with rapid provisioning of persistent storage, transforms development by removing wait time and enabling the developer to move closer to the speed of thought.

The addition of the Kubernetes orchestration system and the CSI Driver for Dell EMC VxFlex OS brings a rich user interface that simplifies provisioning containers and persistent storage. In our testing, we found that Kubernetes plus the CSI Driver for Dell EMC VxFlex OS enabled developers to provision containerized applications with persistent storage. This solution features point-and-click simplicity and frees valuable time so that the storage administrator can focus on business-critical tasks.

The best of all worlds: Dell EMC Ready Bundle for Oracle (Part I)

Fri, 09 Dec 2022 20:07:50 -0000



The time has finally arrived! The Dell EMC Ready Bundle for Oracle, an integrated, validated, tested, and engineered system, has been launched. This solution helps Oracle DBAs and other IT users meet the following expectations and address the following challenges in a typical Oracle database infrastructure:

- Higher performance which should be consistent and scalable with the exponential data deluge.

- Higher availability and reliability for mission critical applications.

- Optimal time and operational process management related to database infrastructure.

- Optimal control of database CAPEX, licensing and resource cost with enhanced productivity.

- Improved interoperability among multiple vendors for support of database and other related activities.

It is indeed remarkable that the Dell EMC Ready Bundle for Oracle addresses all the above concerns and challenges and provides a better solution than its competitors. Among the vetted claims about its features (verified by sources internal and external to Dell EMC), the following are worth mentioning:

- 20% lower Oracle licensing costs.

- 5X space savings for copies of Oracle databases.

- 99K+ IOPS with sub-millisecond (0.75 ms) latency.

- Six nines (99.9999%) availability for Oracle databases.
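To put the six-nines figure in concrete terms, the short sketch below converts an availability of n nines into a yearly downtime budget. It assumes an average year of 365.25 days; the function name `downtime_budget_seconds` is illustrative only.

```python
# Average (Julian) year length in seconds.
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def downtime_budget_seconds(nines: int, period_s: float = SECONDS_PER_YEAR) -> float:
    """Maximum downtime over `period_s` allowed by an availability of `nines` nines.

    Availability of n nines means unavailability of 10**-n,
    e.g. six nines = 99.9999% available = 0.0001% unavailable.
    """
    return period_s * 10.0 ** (-nines)


if __name__ == "__main__":
    for n in (3, 4, 5, 6):
        print(f"{n} nines: {downtime_budget_seconds(n):,.1f} s per year")
```

Six nines leaves roughly 31.6 seconds of downtime per year, compared with about 8.8 hours for three nines.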

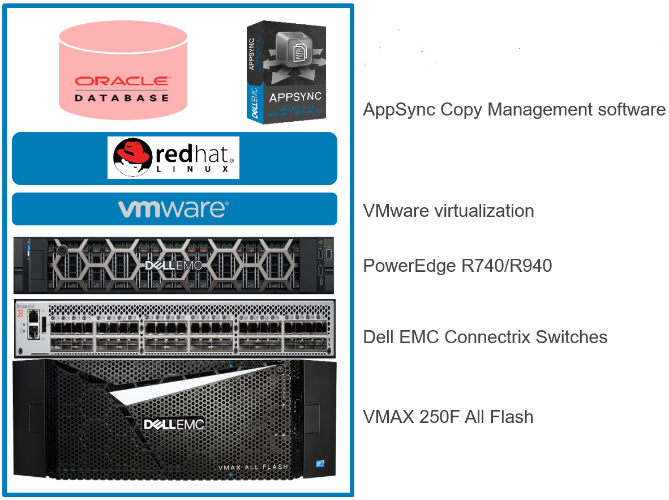

Now let’s look at the individual components that deliver these results. The components of the Dell EMC Ready Bundle for Oracle come from the best of all worlds: Dell EMC VMAX 250F (storage), Dell EMC PowerEdge 14G servers (compute), VMware vSphere (virtualization), Oracle 12c Release 2 (database), Dell EMC AppSync (applications), and others, as depicted in Figure 1.

Figure 1: Components of the Dell EMC Ready Bundle for Oracle

This stack of Dell EMC software and hardware, along with Oracle Database and Red Hat Enterprise Linux, offers many benefits, both individually and as the Dell EMC Ready Bundle for Oracle. The bundle facilitates the management, optimization, and reduction of OPEX and CAPEX to help with the ever-increasing pressure on organizational budgets. It offers high resiliency, availability, productivity, performance, and innovation. For all technical and non-technical issues, there is a single point of support staffed by experienced Dell EMC personnel. Now let’s look at each component of the Dell EMC Ready Bundle for Oracle.

Dell EMC VMAX 250F All Flash Array is the storage component. It is designed to consolidate multiple mixed-workload Oracle databases onto a single platform with very high IOPS and sub-millisecond response times.

Dell EMC Connectrix switches and directors provide six-nines, built-in high availability, maximum scalability, and unmatched reliability with high performance.

The Dell EMC PowerEdge R940 is designed to power mission-critical Oracle applications and real-time decisions, consolidate hardware resources, and save Oracle licensing costs.

Oracle databases increasingly run on the VMware vSphere platform (over 70% of the customer base). For Oracle Database, VMware delivers an industry-leading intelligent virtual infrastructure that maximizes performance, scalability, and availability while enabling fully automated disaster recovery, better-than-physical security, and proactive management of service levels.

Red Hat Enterprise Linux 7.3 helps optimize Oracle Database environments to keep up with ever-increasing workload demands and evolving security risks.

Dell EMC AppSync simplifies, orchestrates, and automates the process of generating and consuming application-consistent copies of production data. It provides intuitive workflows to set up scheduled protection and repurposing jobs, automating every step end to end, from application discovery and storage mapping to mounting the copies on the target hosts.

All the benefits that are described above are available in the Dell EMC Ready Bundle for Oracle.

Variants of the Dell EMC Ready Bundle for Oracle

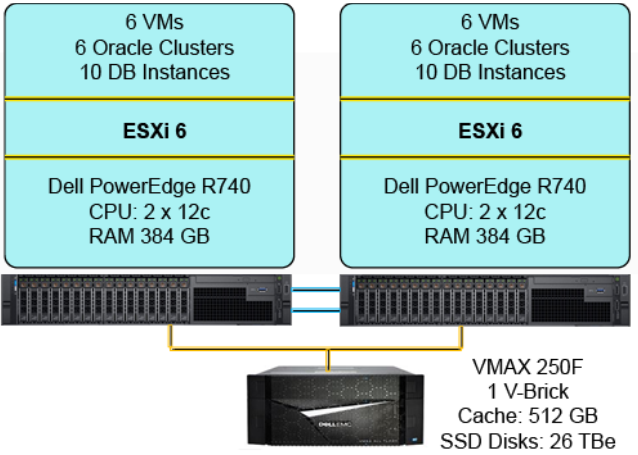

With these components in place, two variants are currently available: the small and medium configurations, depicted in Figures 2 and 3. The large configuration will be discussed in my next blog.

Figure 2: Small Configuration

Figure 3: Medium Configuration

Both the small and medium configurations deliver higher performance, scalability, resilience, and availability. Dell EMC ran in-house tests on these configurations and found some remarkable results, which I discuss below.

Dell EMC ran five tests covering five different use cases:

- Two production Oracle RAC clusters

- Repurposing five production databases to fifteen dev RAC database clusters.

- Cluster of five OLAP production databases

- Mixed OLTP workloads

- Mixed OLAP and OLTP workloads

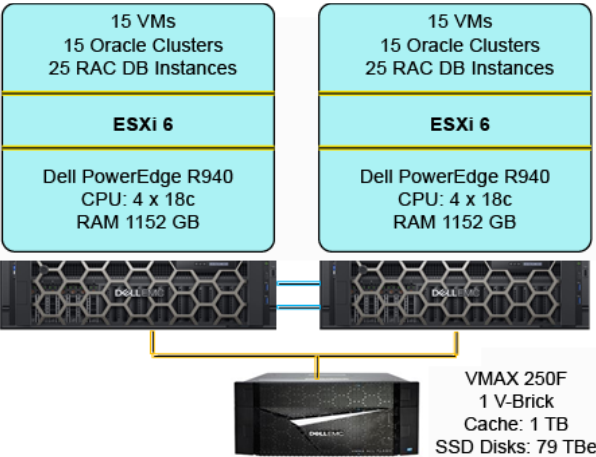

The detailed configurations and outcomes of all the tests are documented here. In this blog I want to touch on the last test (use case five), which was run on the configuration below.

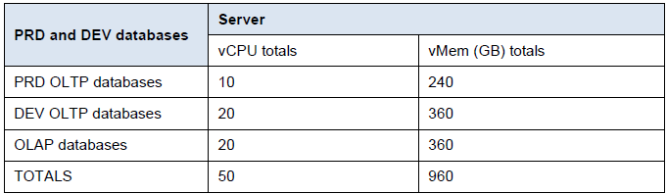

Figure 4: Configuration and Pictorial Diagram of Use Case Five Test

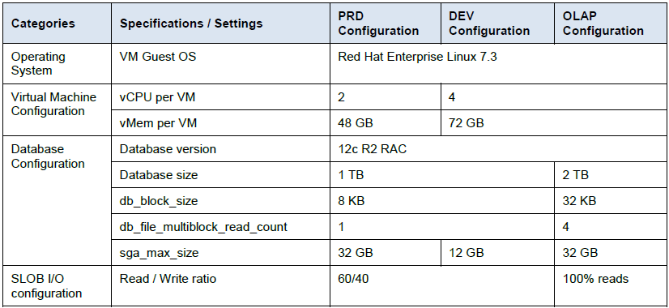

The outcome of this test, depicted in Figure 5, is phenomenal.

Figure 5: Outcome of Use Case Five Test

Summary

The Dell EMC Ready Bundle for Oracle is an excellent solution with strong scalability, performance, consolidation, automation, centralized management, and protection. On the technical side, internal testing delivered higher IOPS, bandwidth, scalability, and consolidation, with consistent sub-millisecond latencies (below 0.75 ms) even after additional OLTP and OLAP workloads were added. These features in turn reduce CAPEX and OPEX. More importantly, the bundle simplifies lifecycle management, and all support and service requests are handled by Dell EMC staff with over 10 years of experience. In addition, the Dell EMC R740 and R940 servers can sustain multiple, larger workloads with lower CPU and memory utilization. In our in-house tests, the Dell EMC VMAX 250F storage generated 99K+ IOPS with higher throughput and latencies below 0.75 ms for mixed workloads. Repurposed copies generated by Dell EMC AppSync achieved 5X flash storage space savings thanks to the inline deduplication and compression of the VMAX 250F All Flash array. AppSync also automates the cloning of production database copies and the protection of the databases. Net-net, the Dell EMC Ready Bundle for Oracle is a winning solution in all respects because it combines the best of all the worlds of a typical data center.

N.B.: The large configuration of the Dell EMC Ready Bundle for Oracle is discussed in my next blog.

In Part III of this blog series, I will discuss a new Oracle solution on the XtremIO X2 array.

The best of all worlds: Dell EMC Ready Bundle for Oracle (Part II)

Fri, 09 Dec 2022 20:07:51 -0000


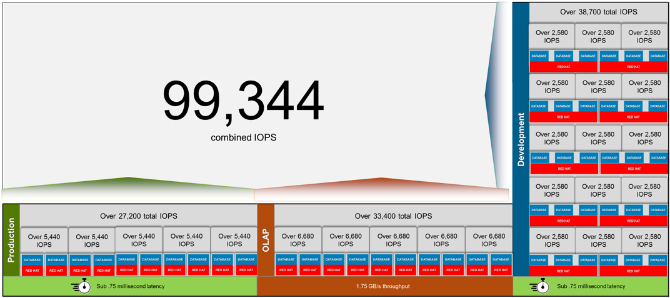

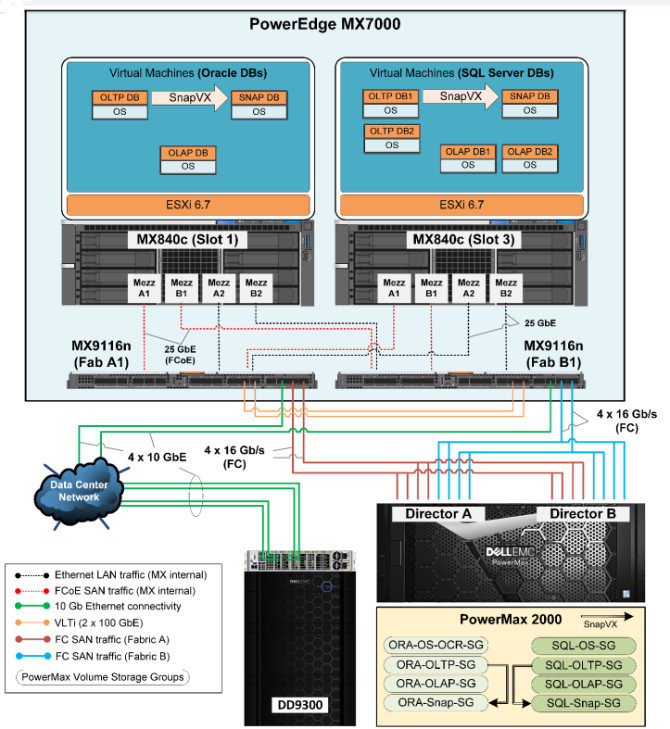

In my last blog I talked about the small and medium configurations. In this blog, I talk about the large configuration of Ready Bundle for Oracle (RBO). Part III of this blog series will cover the different backup options available in this product. To back up the small configuration of RBO (called commercial backups), we use Data Domain 6300, while Data Domain 9300 (DD9300) is used to back up the large configuration (called enterprise backups).

The large configuration is especially exciting because it mainly caters to enterprises that want to run Oracle at a bigger scale, with higher IOPS and bandwidth and lower CPU utilization and latency. It hosts 50 mixed-workload databases on a vSphere cluster with two ESXi 6 hosts on PowerEdge R940 servers. A VMAX 250F array with two V-Brick blocks, 2 x 1 TB of mirrored cache, and 105 TBe of SSDs serves as the storage array for the VM operating systems and the Oracle RAC databases. Figure 1 depicts the large-configuration architecture.

Fig. 1: Architecture of large configuration

The large configuration uses PowerEdge R940 servers, which are designed for larger workloads, to maximize enterprise application performance. The PowerEdge R940 servers are configured with 112 physical cores: 88 more than the PowerEdge R740 servers in the small configuration and 40 more than the PowerEdge R940 servers in the medium configuration. The PowerEdge R940 server is configured with 3,072 GB of memory: 2,688 GB more than the R740 server in the small configuration and 1,920 GB more than the R940 server in the medium configuration. Figure 2 compares the small, medium (discussed in my previous blog), and large configurations of RBO.
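The core and memory figures for the small and medium configurations are given only as differences from the large configuration. The sketch below backs out the implied absolute values from those stated differences; it is plain arithmetic on the numbers above, and Figure 2 remains the authoritative comparison. The names `implied_resources` and `STATED_DIFFERENCES` are illustrative.

```python
# Large-configuration figures stated in the text.
LARGE_CORES = 112
LARGE_MEMORY_GB = 3072

# Stated differences (large minus the other configuration): (cores, memory GB).
STATED_DIFFERENCES = {
    "small (PowerEdge R740)": (88, 2688),
    "medium (PowerEdge R940)": (40, 1920),
}


def implied_resources(core_diff: int, memory_diff_gb: int) -> tuple[int, int]:
    """Back out a configuration's cores and memory from the stated differences."""
    return LARGE_CORES - core_diff, LARGE_MEMORY_GB - memory_diff_gb


if __name__ == "__main__":
    for label, (core_diff, mem_diff) in STATED_DIFFERENCES.items():
        cores, memory_gb = implied_resources(core_diff, mem_diff)
        print(f"{label}: {cores} cores, {memory_gb:,} GB memory")
```

The implied values are 24 cores and 384 GB for the small configuration and 72 cores and 1,152 GB for the medium configuration.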

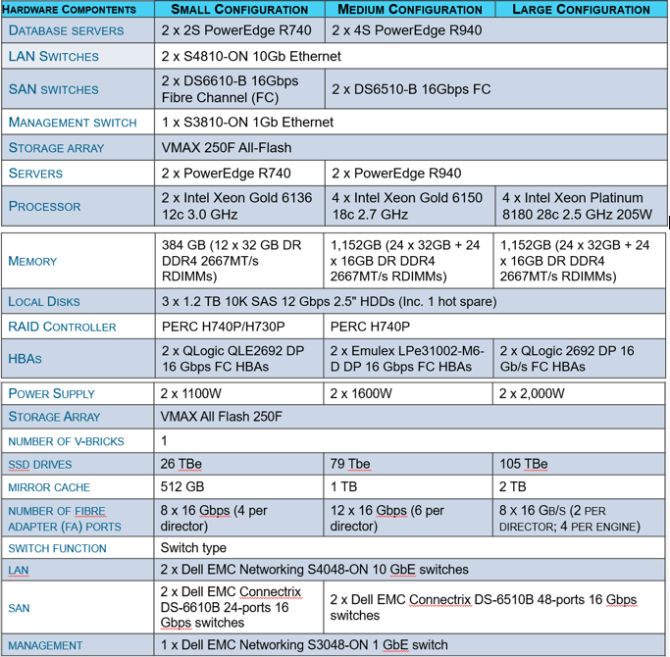

Fig. 2: Comparative Analysis of small, medium and large configurations of Ready Bundle for Oracle

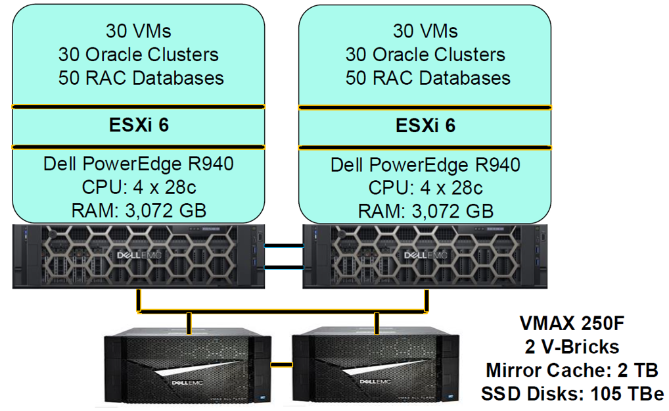

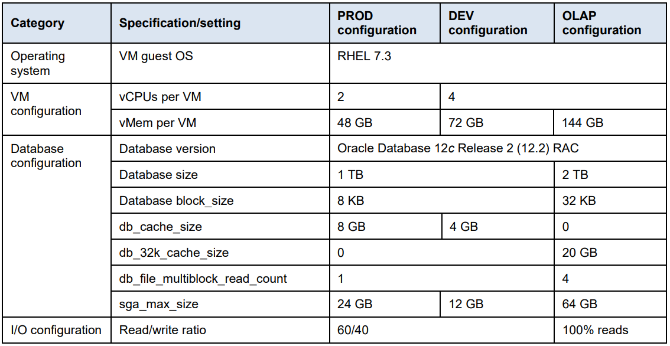

This comparative analysis helps customers select the appropriate configuration for their requirements. A tool called VMAX Sizer (available through your local support representative) also helps customers determine the appropriate configuration for their database and data center infrastructure.

Now I would like to discuss the spectacular performance achieved during stress testing of the large RBO configuration. Dell EMC engineers performed in-house database stress testing in which they ran 10 OLTP production RAC databases, 30 OLTP development RAC databases, and 10 OLAP RAC databases in parallel, for a total mixed workload of 50 RAC databases. SLOB was used to create a 60/40 read/write OLTP workload, and the Swingbench Sales History benchmark generated the OLAP workload. Adding the OLAP databases put large sequential reads onto the VMAX 250F array alongside the OLTP workload. This load is reflected in the 2 TB database size and in the db_32k_cache_size and db_file_multiblock_read_count settings, which enable larger database I/O to improve large sequential read performance. Figures 3 and 4 depict the architecture and database configuration of this use case.
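Why do these two settings enlarge I/O? db_32k_cache_size sizes a buffer cache for 32 KB blocks, which Oracle needs when tablespaces use a 32 KB block size, and db_file_multiblock_read_count caps the number of blocks read in one I/O during a sequential scan. The sketch below shows the nominal read size as blocks times block size. It assumes the OLAP data sits in 32 KB-block tablespaces, and the count of 32 is hypothetical because the blog does not publish the values used; actual I/O size may be capped lower by the OS and storage stack.

```python
KIB = 1024


def max_multiblock_read_bytes(block_size_bytes: int, multiblock_read_count: int) -> int:
    """Nominal size of one full multiblock read during a sequential scan.

    db_file_multiblock_read_count caps the number of blocks per read, so
    the nominal read size is blocks x block size. The OS and storage
    stack may impose a smaller maximum I/O size in practice.
    """
    return block_size_bytes * multiblock_read_count


if __name__ == "__main__":
    mbrc = 32  # HYPOTHETICAL value; the blog does not publish the setting used
    for block_kib in (8, 32):
        size = max_multiblock_read_bytes(block_kib * KIB, mbrc)
        print(f"{block_kib} KB blocks x {mbrc} = {size // KIB} KB per read")
```

With the same read count, moving from 8 KB to 32 KB blocks makes each sequential read four times larger, which is the effect the tuning aims for.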

Fig 3: Architecture of the use case for large configuration

Fig 4: Oracle RAC configuration for the use case in large configuration of RBO

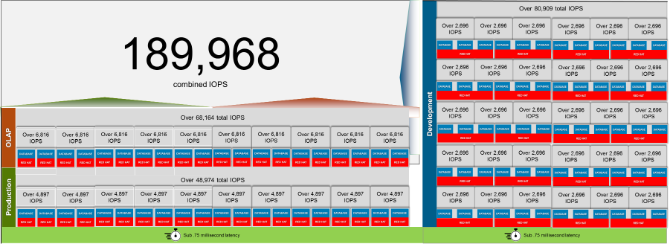

The large configuration included two PowerEdge R940 servers and a VMAX 250F array. Here is a review of the results for this use case, in which the workload of 10 production OLTP databases, 30 development OLTP databases, and 10 OLAP databases ran in parallel:

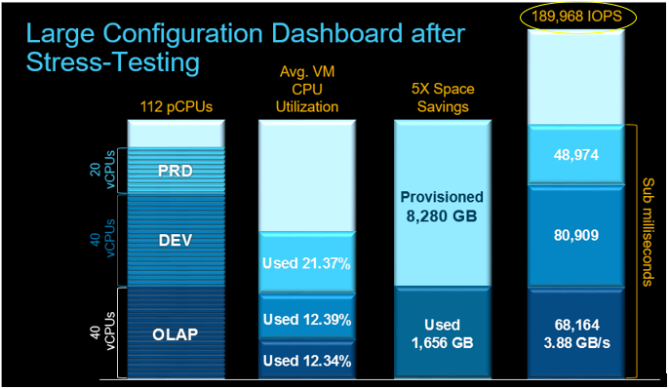

- 100 vCPUs and 2,640 GB of vMem per R940 compute server generated a workload of over 189,968 IOPS on the VMAX array, as depicted in Figure 5.

- Due to the processing power of the PowerEdge servers, the CPU utilization was only 20 percent, leaving room for more databases or for failover of VMs from one ESXi server to another.

- The VMAX array, with inline deduplication and compression, achieved 5X flash space savings, using only 8,280 GB of capacity for the 30 development databases.

- Database I/O response was also excellent: the 189,000 IOPS workload (highlighted in Figure 5) was serviced at sub-millisecond latency.

- The 10 OLAP databases generated a total of 3.88 GB/s of throughput.

Fig 5. Aggregate IOPS numbers for the use case of large configuration

Figure 6 below shows the complete dashboard of configuration and performance numbers (including the results discussed above) generated by the stress testing of this use case in the large RBO configuration.

Fig 6. Stress-testing Dashboard of the use case for large configuration

The test results show that this RBO configuration supports roughly twice as many IOPS as the small and medium configurations. Notably, it supported just over 189,968 IOPS at sub-millisecond latencies for all OLTP databases and a total bandwidth of 3.88 GB/s, using two PowerEdge R940 servers and a VMAX 250F with more flash drives. Under this database workload, a mere 20 percent of the CPU capacity was used, while the VMAX array accelerated 100 percent on most of the writes. As with the small and medium configurations, the 30 development OLTP databases achieved 5X space savings thanks to the inline compression of the VMAX 250F. In addition, the large configuration still had plenty of unused resources to support even greater workloads. In the next blog (Part III), I will discuss a new Oracle solution on the XtremIO X2 array.
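A few derived figures help put the large-configuration results in perspective. The sketch below assumes two readings that the post does not state explicitly: that the "99K+ IOPS" quoted in Part I is the small/medium baseline, and that "5X" space savings means a 5:1 ratio of logical to physical capacity. Every other number comes straight from the results above.

```python
# Figures quoted in this blog series.
LARGE_IOPS = 189_968          # large configuration, all OLTP databases
BASELINE_IOPS = 99_000        # "99K+" from Part I; ASSUMED small/medium baseline
OLAP_THROUGHPUT_GBPS = 3.88   # total across the OLAP databases
OLAP_DATABASES = 10
DEV_PHYSICAL_GB = 8_280       # flash capacity used by the development databases
DEV_DATABASES = 30
SAVINGS_RATIO = 5.0           # "5X", ASSUMED to mean logical:physical = 5:1

# Because "99K+" is a lower bound, this ratio is an upper bound on the gain.
iops_ratio = LARGE_IOPS / BASELINE_IOPS
olap_gbps_per_db = OLAP_THROUGHPUT_GBPS / OLAP_DATABASES
dev_logical_gb = DEV_PHYSICAL_GB * SAVINGS_RATIO
dev_logical_gb_per_db = dev_logical_gb / DEV_DATABASES

if __name__ == "__main__":
    print(f"Large vs. baseline IOPS: {iops_ratio:.2f}x")
    print(f"Average OLAP throughput per database: {olap_gbps_per_db:.3f} GB/s")
    print(f"Implied logical size of dev copies: {dev_logical_gb:,.0f} GB "
          f"(about {dev_logical_gb_per_db:,.0f} GB each)")
```

Under these assumptions the IOPS gain is about 1.92x, in line with the "twice" claim. Each OLAP database averages about 0.39 GB/s, and the 30 development copies represent roughly 41,400 GB of logical data stored in 8,280 GB of flash.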

The best of all worlds: Dell EMC Ready Solution for Oracle (Part III)

Fri, 09 Dec 2022 20:07:51 -0000


In the last two blogs (Part I and Part II), I talked about the Oracle solution running on Dell EMC VMAX storage along with many other components. In this blog, I will talk about another Oracle solution, one that runs on XtremIO X2 storage, though with some variations in the use cases. Before we delve into the details of the solution architecture, note that the name has changed from “Ready Bundle” to “Ready Solution”. Now, let’s look at the architecture diagram in Figure 1.

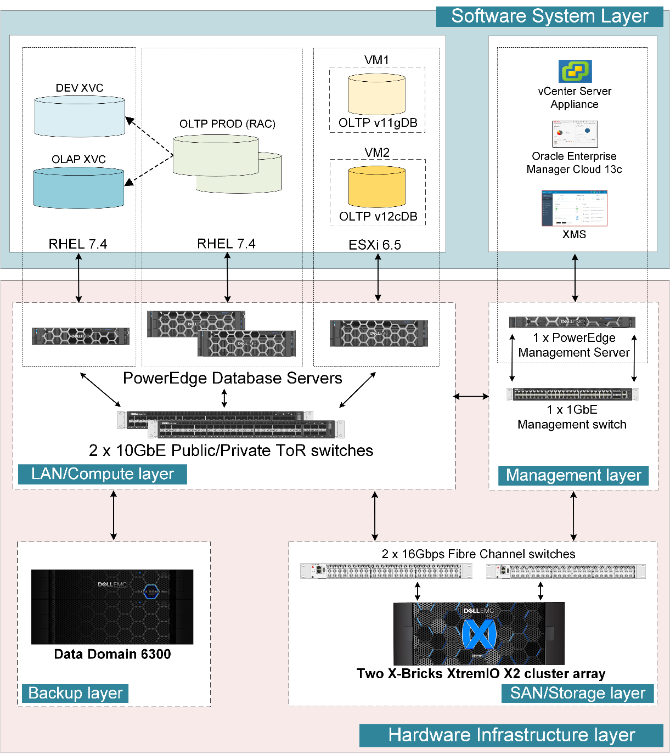

Figure 1: Ready Solutions for Oracle with XtremIO X2 and Data Protection

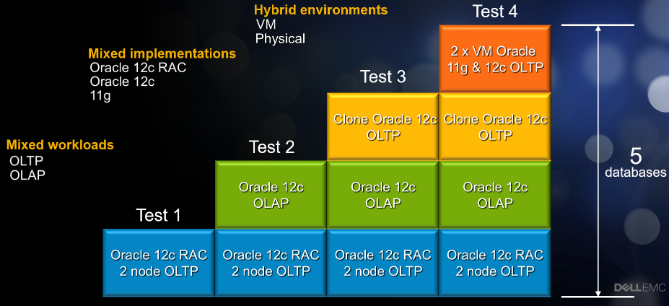

In this solution, the Oracle databases operated in three different modes. The first is a bare-metal 2-node RAC database running on two PowerEdge R940 servers. The second consists of two standalone snapshot databases running on a standalone R740 server, created from the first database via XtremIO Virtual Copies (XVC). The third comprises two standalone virtualized databases, one of version 11g and one of 12c. The whole architecture depicted in Figure 1 is supported by a 2-X-Brick XtremIO X2 cluster and backed up by a Data Domain 6300 appliance.

Dell EMC engineers ran rigorous and diverse tests on this Oracle solution (as shown in Figure 1) and obtained good results. In this blog we discuss those results along with the details of the use cases performed in the four tests depicted in Figure 2. The first test creates a simple, standalone 2-node RAC database on bare-metal infrastructure with 2 X-Bricks. This architecture is then extended to accommodate 2 snapshot databases (created via XVC from Test 1), and finally two standalone virtualized databases are created, one of 11g Release 2 and the other of 12c. The complete details of test cases 1, 2, 3, and 4 are depicted in Figure 2.
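The three operating modes are easier to keep apart when written down as a small inventory. The sketch below transcribes only what the text states; the class `DatabaseGroup`, the helper `total_databases`, and the mode labels are illustrative names, and the host of the virtualized databases is left unspecified because the post does not name it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatabaseGroup:
    """One of the operating modes described for the XtremIO X2 solution."""
    mode: str
    host: str
    database_count: int
    origin: str


# Inventory transcribed from the text; labels are descriptive, not product names.
SOLUTION = (
    DatabaseGroup("bare-metal 2-node RAC", "two PowerEdge R940 servers", 1,
                  "primary RAC database"),
    DatabaseGroup("standalone snapshot", "one PowerEdge R740 server", 2,
                  "XtremIO Virtual Copies (XVC) of the RAC database"),
    DatabaseGroup("standalone virtualized", "virtual machines (host not stated)", 2,
                  "separate 11g Release 2 and 12c databases"),
)
SHARED_STORAGE = "2-X-Brick XtremIO X2 cluster"
BACKUP_TARGET = "Data Domain 6300"


def total_databases(groups) -> int:
    """Count databases across all groups that share the same array."""
    return sum(g.database_count for g in groups)


if __name__ == "__main__":
    for g in SOLUTION:
        print(f"{g.mode}: {g.database_count} on {g.host} ({g.origin})")
    print(f"{total_databases(SOLUTION)} databases on {SHARED_STORAGE}, "
          f"backed up to {BACKUP_TARGET}")
```

All five databases share one XtremIO X2 cluster, which is why the snapshot copies can be created without moving data off the array.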

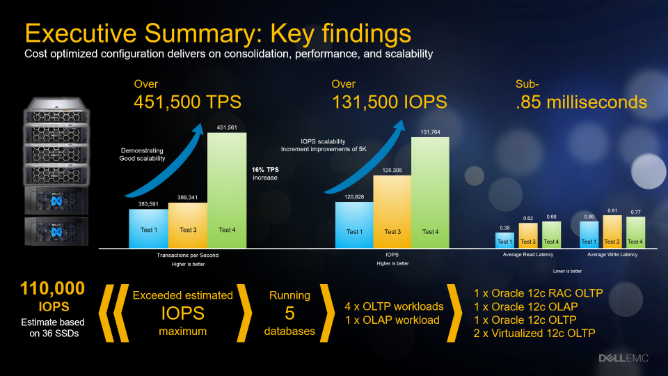

Figure 2: The details of the test case 1, 2, 3 and 4