
Q1 2024 Update for Ansible Integrations with Dell Infrastructure

Tue, 02 Apr 2024 14:45:56 -0000


In this blog post, I am going to cover the new Ansible functionality for the Dell infrastructure portfolio that we released over the past two quarters. Ansible collections are now on a monthly release cadence, and you can bookmark the changelog pages from their respective GitHub pages to get updates as soon as they are available!

PowerScale Ansible collections 2.3 & 2.4

SyncIQ replication workflow support

SyncIQ is the native remote replication engine of PowerScale. Before seeing what is new in the Ansible tasks for SyncIQ, let’s take a look at the existing modules:

- SyncIQPolicy: Used to query, create, and modify replication policies, as well as to start a replication job.

- SyncIQJobs: Used to query, pause, resume, or cancel a replication job. Note that new SyncIQ jobs are started using the SyncIQPolicy module.

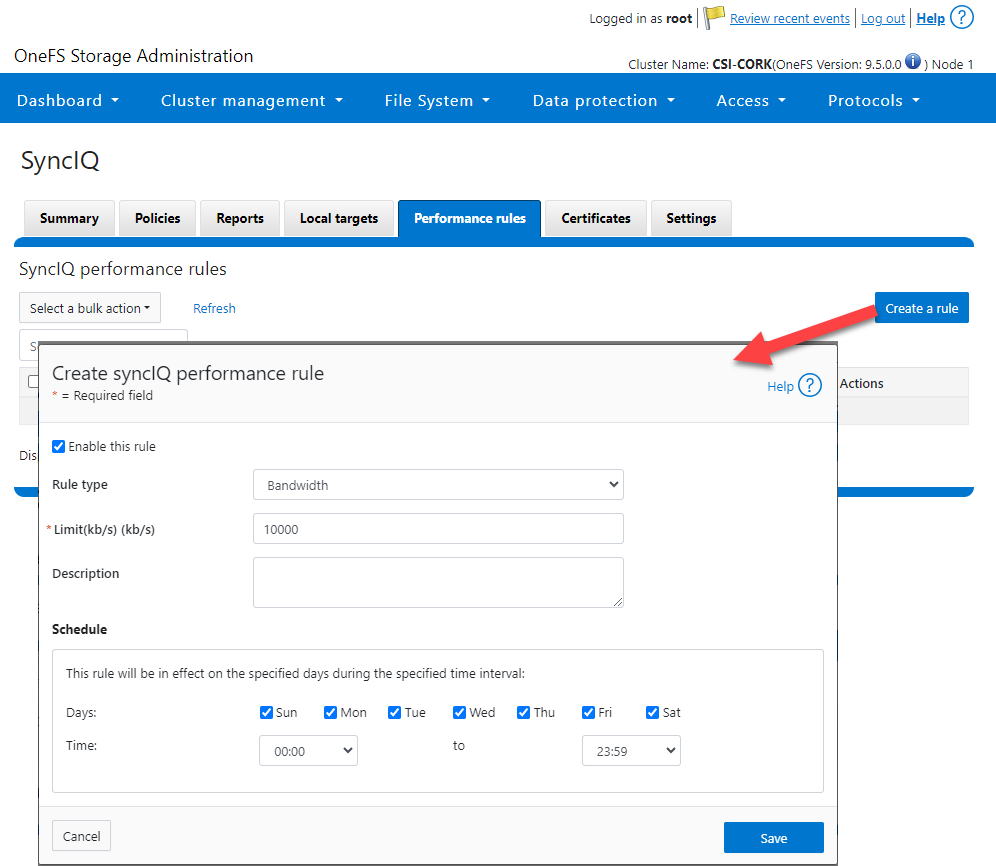

- SyncIQRules: Used to manage the replication performance rules that can be accessed as follows on the OneFS UI:

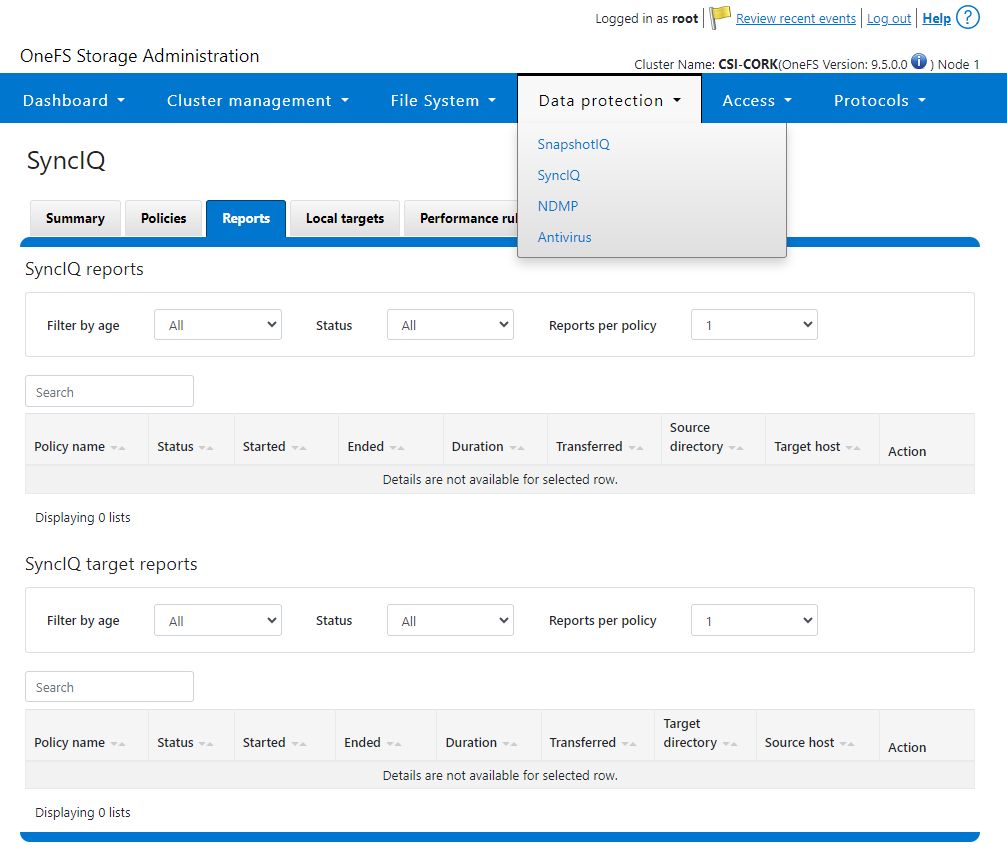

- SyncIQReports and SyncIQTargetReports: Used to manage SyncIQ reports. Following is the corresponding management UI screen where it is done manually:

Following are the new modules introduced to enhance the Ansible automation of SyncIQ workflows:

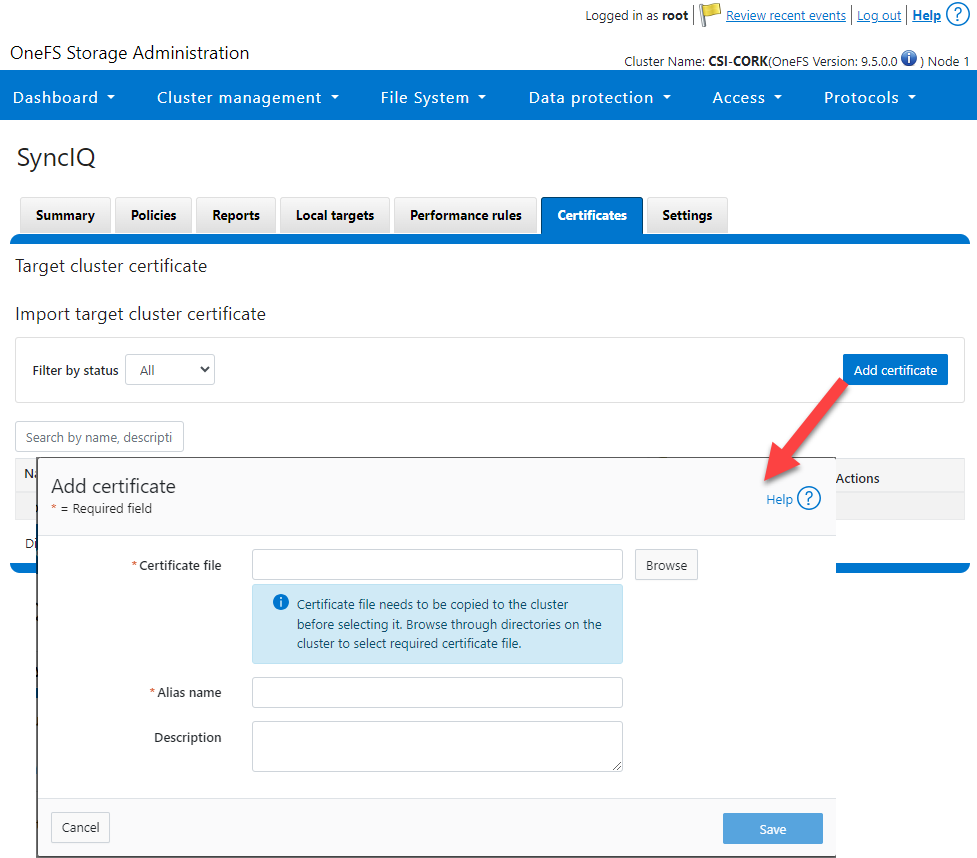

- SyncIQCertificate (v2.3): Used to manage SyncIQ target cluster certificates on PowerScale. Functionality includes getting, importing, modifying, and deleting target cluster certificates. Here is the OneFS UI for these settings:

- SyncIQ_global_settings (v2.3): Used to configure the SyncIQ global settings, which include the following:

Table 1. SyncIQ settings

| SyncIQ setting (datatype) | Description |
| --- | --- |
| bandwidth_reservation_reserve_absolute (int) | The absolute bandwidth reservation for SyncIQ |
| bandwidth_reservation_reserve_percentage (int) | The percentage-based bandwidth reservation for SyncIQ |
| cluster_certificate_id (str) | The ID of the cluster certificate used for SyncIQ |
| encryption_cipher_list (str) | The list of encryption ciphers used for SyncIQ |
| encryption_required (bool) | Whether encryption is required or not for SyncIQ |
| force_interface (bool) | Whether the force interface is enabled or not for SyncIQ |
| max_concurrent_jobs (int) | The maximum number of concurrent jobs for SyncIQ |
| ocsp_address (str) | The address of the OCSP server used for SyncIQ certificate validation |
| ocsp_issuer_certificate_id (str) | The ID of the issuer certificate used for OCSP validation in SyncIQ |
| preferred_rpo_alert (bool) | Whether the preferred RPO alert is enabled or not for SyncIQ |
| renegotiation_period (int) | The renegotiation period in seconds for SyncIQ |
| report_email (str) | The email address to which SyncIQ reports are sent |
| report_max_age (int) | The maximum age in days of reports that are retained by SyncIQ |
| report_max_count (int) | The maximum number of reports that are retained by SyncIQ |
| restrict_target_network (bool) | Whether to restrict the target network in SyncIQ |
| rpo_alerts (bool) | Whether RPO alerts are enabled or not in SyncIQ |
| service (str) | Specifies whether the SyncIQ service is currently on, off, or paused |
| service_history_max_age (int) | The maximum age in days of service history that is retained by SyncIQ |
| service_history_max_count (int) | The maximum number of service history records that are retained by SyncIQ |
| source_network (str) | The source network used by SyncIQ |
| tw_chkpt_interval (int) | The interval between checkpoints in seconds in SyncIQ |
| use_workers_per_node (bool) | Whether to use workers per node in SyncIQ or not |

Additions to Info module

The following information fields have been added to the Info module:

- S3 buckets

- SMB global settings

- Detailed network interfaces

- NTP servers

- Email settings

- Cluster identity (also available in the Settings module)

- Cluster owner (also available in the Settings module)

- SNMP settings

- SyncIQ global settings

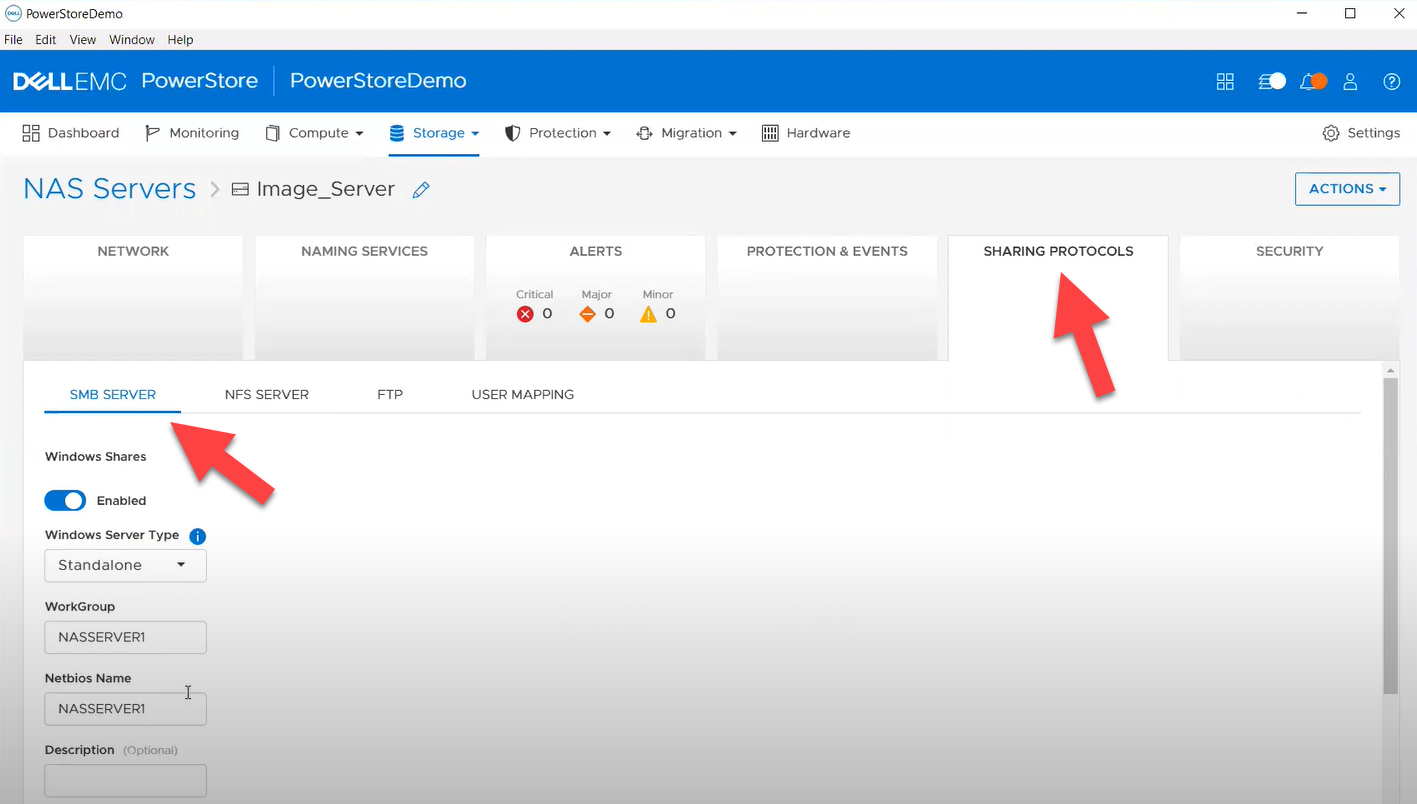

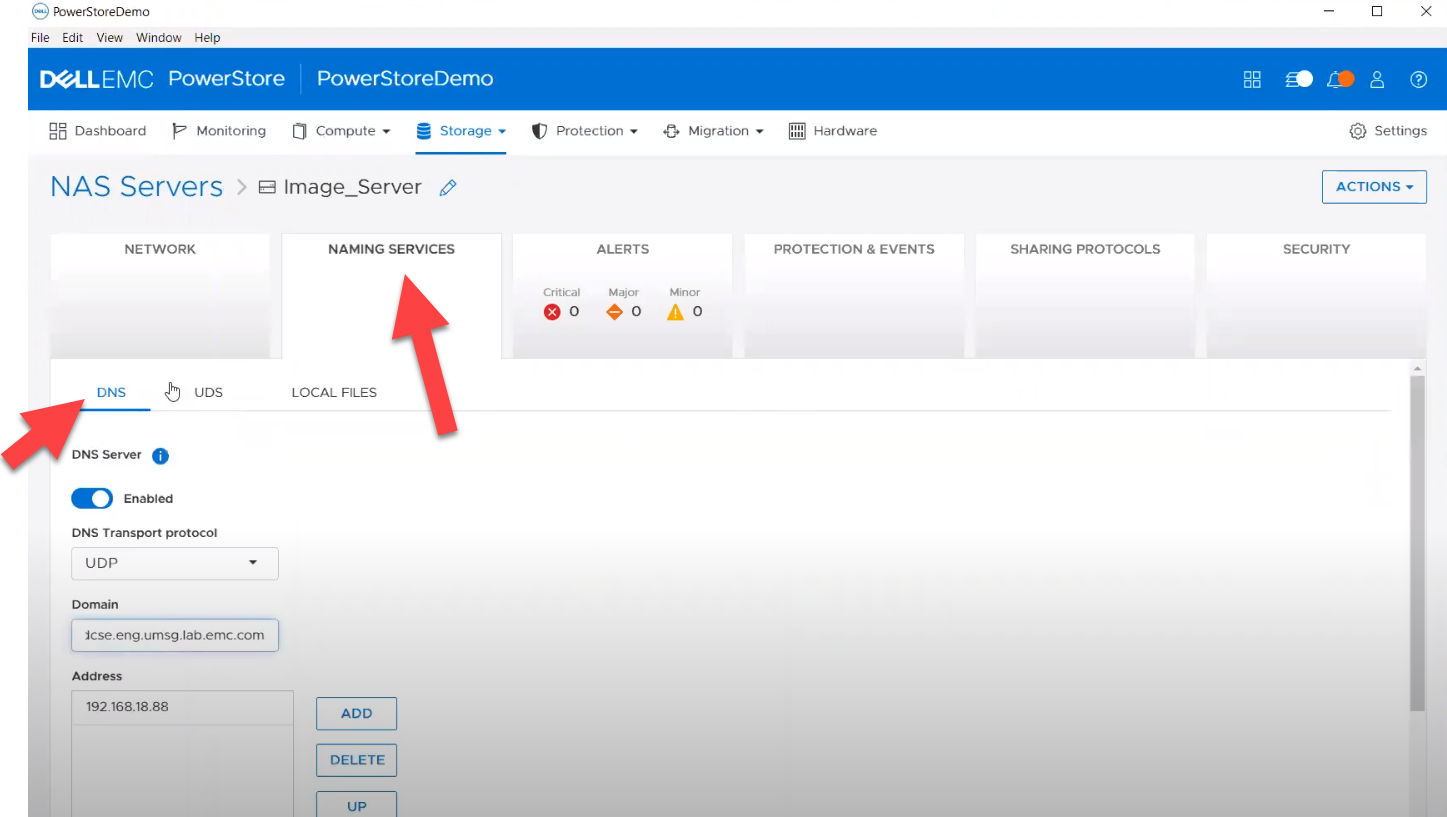

PowerStore Ansible collections 3.1: More NAS configuration

In this release of Ansible collections for PowerStore, new modules have been added to manage the NAS Server protocols like NFS and SMB, as well as to configure a DNS or NIS service running on PowerStore NAS.

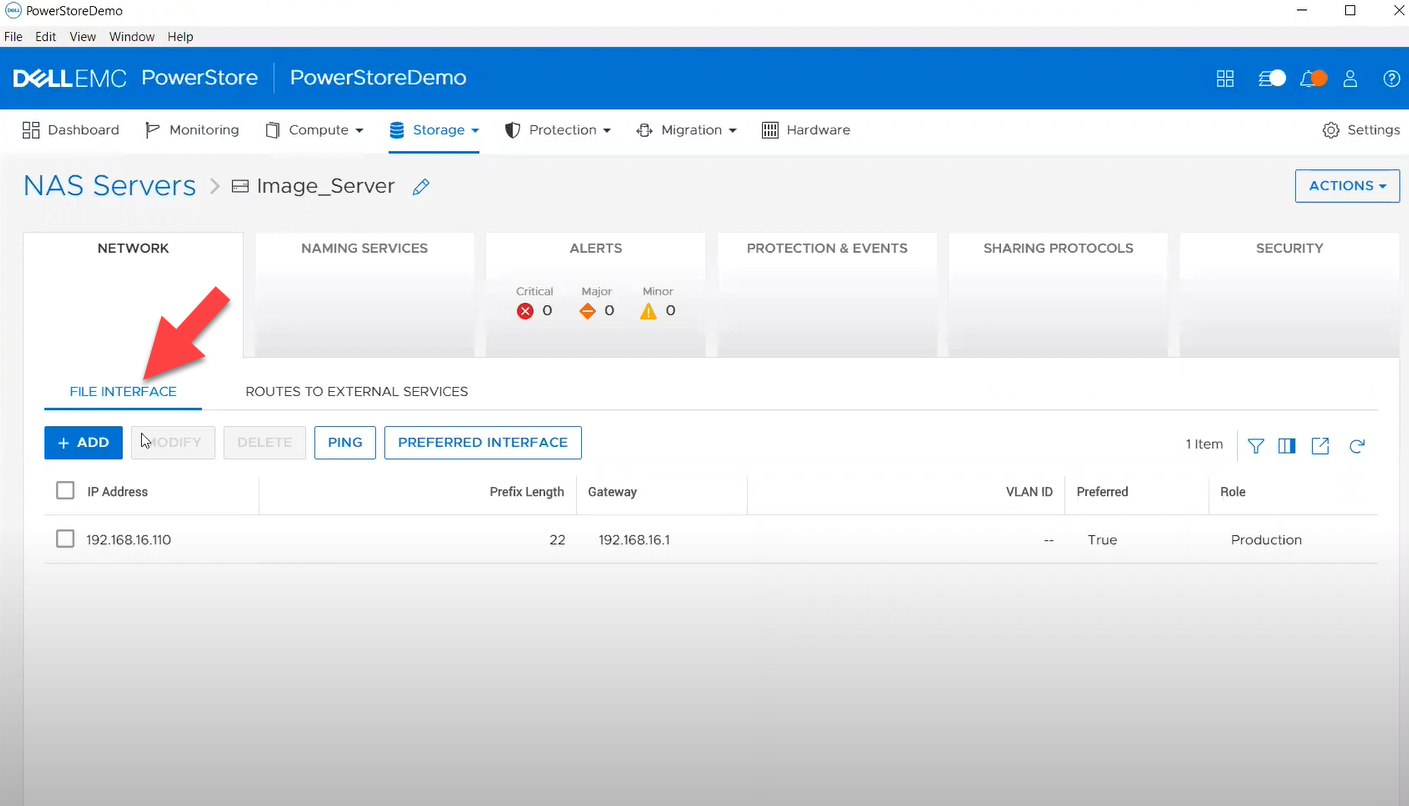

Managing NAS Server interfaces on PowerStore

- file_interface - to enable, query, and modify PowerStore NAS interfaces. Some examples can be found here.

- smb_server - to enable, query, and modify the SMB Server on PowerStore NAS. Some examples can be found here.

- nfs_server - to enable, query, and modify NFS Server on PowerStore NAS. Some examples can be found here.

Naming services on PowerStore NAS

- file_dns – to enable, query, and modify File DNS on PowerStore NAS. Some examples can be found here.

- file_nis - to enable, query, and modify NIS on PowerStore NAS. Some examples can be found here.

- service_config - manage service config for PowerStore

The Info module is enhanced to list file interfaces, DNS Server, NIS Server, SMB Shares, and NFS exports. Also in this release, support has been added for creating multiple NFS exports with the same name on different NAS servers.

PowerFlex Ansible collections 2.0.1 and 2.1: More roles

In releases 1.8 and 1.9 of the PowerFlex collections, new roles have been introduced to install and uninstall various software components of PowerFlex to enable day-1 deployment of a PowerFlex cluster. In the latest 2.0.1 and 2.1 releases, more updates have been made to roles, such as:

- Updated config role to support creation and deletion of protection domains, storage pools, and fault sets

- New role to support installation and uninstallation of ActiveMQ

- Enhanced SDC role to support installation on ESXi, Rocky Linux, and Windows OS

OpenManage Ansible collections: More power to iDRAC

At the risk of repetition, OpenManage Ansible collections have modules and roles for both OpenManage Enterprise and the iDRAC/Redfish node interfaces. In the last five months, a plethora of new functionality (new modules and roles) has become available, especially for the iDRAC modules in the areas of security, user management, and license management. Following is a summary of the new features:

v9.1

- redfish_storage_volume now supports iDRAC8.

- dellemc_idrac_storage_volume is deprecated and replaced with idrac_storage_volume.

v9.0

- Module idrac_diagnostics is added to run and export diagnostics on iDRAC.

- Role idrac_user is added to manage local users of iDRAC.

v8.7

- New module idrac_license to manage iDRAC licenses. With this module you can import, export, and delete licenses on iDRAC.

- idrac_gather_facts role enhanced to add storage controller details in the role output and provide support for secure boot.

v8.6

- Added support for the environment variables, `OME_USERNAME` and `OME_PASSWORD`, as fallback for credentials for all modules of iDRAC, OME, and Redfish.

- Enhanced both the idrac_certificates module and role to support the import and export of `CUSTOMCERTIFICATE`. Added support for the import operation of the `HTTPS` certificate with the SSL key.

v8.5

- redfish_storage_volume module is enhanced to support reboot options and job tracking operation.

v8.4

- New module idrac_network_attributes to configure the port and partition network attributes on the network interface cards.

Conclusion

Ansible is the most extensively used automation platform for IT operations, and Dell Technologies provides an exhaustive set of modules and roles to easily deploy and manage server and storage infrastructure on-premises as well as in the cloud. With the monthly release cadence for both storage and server modules, you can get access to our latest feature additions even faster. Enjoy coding your Dell infrastructure!

Author: Parasar Kodati, Engineering Technologist, Dell ISG

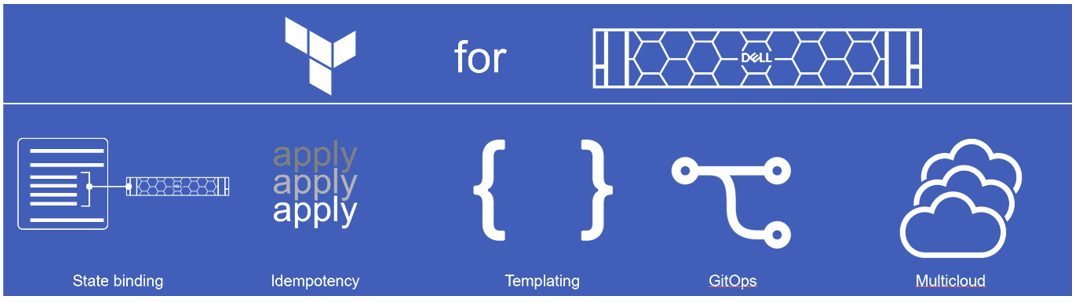

Q1 2024 Update for Terraform Integrations with Dell Infrastructure

Tue, 02 Apr 2024 14:45:56 -0000


This post covers all the new Terraform resources and data sources that have been released in the last two quarters: Q4 ’23 and Q1 ’24. You can check out previous releases of Terraform providers here: Q1-2023, Q2-2023, and Q3-2023. I also covered the first release of PowerScale provider here.

Here is a summary of the Dell Terraform Provider versions released over the last two quarters:

- v1.1 and v1.2 of the provider for PowerScale

- v1.3 and v1.4 of the provider for PowerFlex

- v1.3 and v1.4 of the provider for PowerStore

- v1.2 of the provider for OME

- v1.1 and v1.2 of the provider for Redfish
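To pick up one of these releases, a configuration would typically pin the provider in a required_providers block. The following is a minimal sketch for two of the providers only. The source addresses (dell/powerscale, dell/powerflex) and the version constraints are assumptions based on the usual Terraform Registry naming, so confirm them against the registry page of each provider before use:

```hcl
terraform {
  required_providers {
    # Assumed registry address and constraint for the PowerScale provider (v1.2.x)
    powerscale = {
      source  = "registry.terraform.io/dell/powerscale"
      version = "~> 1.2"
    }

    # Assumed registry address and constraint for the PowerFlex provider (v1.4.x)
    powerflex = {
      source  = "registry.terraform.io/dell/powerflex"
      version = "~> 1.4"
    }
  }
}
```

The pessimistic constraint operator (~>) keeps a configuration on the listed minor release line while still accepting patch releases, which can be useful with a frequent release cadence.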

PowerScale Provider v1.1 and v1.2

PowerScale received the largest number of new Terraform capabilities in the last few months. New resources and corresponding data sources have been added under the following workflow categories:

- Data Management

- User and Access Management

- Cluster Management

Data management

Following is the summary for the different resource-datasource pairs introduced to automate operations related to Data management on PowerScale:

Snapshots: CRUD operations for Snapshots

Here's an example of how to create a snapshot resource within a PowerScale storage environment using Terraform:

resource "powerscale_snapshot" "example_snapshot" {

name = "example-snapshot"

filesystem = powerscale_filesystem.example_fs.id

description = "Example snapshot description"

// Add any additional configurations as needed

}

- name: Specifies the name of the snapshot to be created.

- filesystem: References the PowerScale filesystem for which the snapshot will be created.

- description: Provides a description for the snapshot.

Here's an example of how to retrieve information about existing snapshots within a PowerScale environment using Terraform:

data "powerscale_snapshot" "existing_snapshot" {

name = "existing-snapshot"

}

output "snapshot_id" {

value = data.powerscale_snapshot.existing_snapshot.id

}

- name: Specifies the name of the existing snapshot to query.

Snapshot schedules: CRUD operations for Snapshot schedules

Following is an example of how to define a snapshot schedule resource:

resource "powerscale_snapshot_schedule" "example_schedule" {

name = "example-schedule"

filesystem = powerscale_filesystem.example_fs.id

snapshot_type = "weekly"

retention_policy = "4 weeks"

snapshot_start_time = "23:00"

// Add any additional configurations as needed

}

- name: Specifies the name of the snapshot schedule.

- filesystem: References the PowerScale filesystem for which the snapshot schedule will be applied.

- snapshot_type: Specifies the type of snapshot schedule, such as "daily", "weekly", and so on.

- retention_policy: Defines the retention policy for the snapshots created by the schedule.

- snapshot_start_time: Specifies the time at which the snapshot creation process should begin.

Data Source Example:

The following example shows how to retrieve information about existing snapshot schedules within a PowerScale environment using Terraform. The powerscale_snapshot_schedule data source fetches information about the specified snapshot schedule. An output is defined to display the ID of the retrieved snapshot schedule:

data "powerscale_snapshot_schedule" "existing_schedule" {

name = "existing-schedule"

}

output "schedule_id" {

value = data.powerscale_snapshot_schedule.existing_schedule.id

}

- name: Specifies the name of the existing snapshot schedule to query.

File Pool Policies: CRUD operations for File Pool Policies

File pool policies in PowerScale help establish policy-based workflows such as file placement and tiering for files that match certain criteria. Following is an example of how the new file pool policy resource can be configured:

resource "powerscale_filepool_policy" "example_filepool_policy" {

name = "filePoolPolicySample"

is_default_policy = false

file_matching_pattern = {

or_criteria = [

{

and_criteria = [

{

operator = ">"

type = "size"

units = "B"

value = "1073741824"

},

{

operator = ">"

type = "birth_time"

use_relative_time = true

value = "20"

},

{

operator = ">"

type = "metadata_changed_time"

use_relative_time = false

value = "1704742200"

},

{

operator = "<"

type = "accessed_time"

use_relative_time = true

value = "20"

}

]

},

{

and_criteria = [

{

operator = "<"

type = "changed_time"

use_relative_time = false

value = "1704820500"

},

{

attribute_exists = false

field = "test"

type = "custom_attribute"

value = ""

},

{

operator = "!="

type = "file_type"

value = "directory"

},

{

begins_with = false

case_sensitive = true

operator = "!="

type = "path"

value = "test"

},

{

case_sensitive = true

operator = "!="

type = "name"

value = "test"

}

]

}

]

}

# A list of actions to be taken for matching files. (Update Supported)

actions = [

{

data_access_pattern_action = "concurrency"

action_type = "set_data_access_pattern"

},

{

data_storage_policy_action = {

ssd_strategy = "metadata"

storagepool = "anywhere"

}

action_type = "apply_data_storage_policy"

},

{

snapshot_storage_policy_action = {

ssd_strategy = "metadata"

storagepool = "anywhere"

}

action_type = "apply_snapshot_storage_policy"

},

{

requested_protection_action = "default"

action_type = "set_requested_protection"

},

{

enable_coalescer_action = true

action_type = "enable_coalescer"

},

{

enable_packing_action = true

action_type = "enable_packing"

},

{

action_type = "set_cloudpool_policy"

cloudpool_policy_action = {

archive_snapshot_files = true

cache = {

expiration = 86400

read_ahead = "partial"

type = "cached"

}

compression = true

data_retention = 604800

encryption = true

full_backup_retention = 145152000

incremental_backup_retention = 145152000

pool = "cloudPool_policy"

writeback_frequency = 32400

}

}

]

description = "filePoolPolicySample description"

apply_order = 1

}

You can import existing file pool policies using the file pool policy ID:

terraform import powerscale_filepool_policy.example_filepool_policy <policyID>

or by simply referencing the default policy:

terraform import powerscale_filepool_policy.example_filepool_policy is_default_policy=true
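On Terraform 1.5 or later, the same import can also be declared in configuration with an import block, which is a general Terraform feature rather than something specific to this provider. The sketch below assumes the resource block named example_filepool_policy shown earlier exists in the same configuration, and the ID is a placeholder to be replaced with a real policy ID:

```hcl
# Declarative equivalent of the first "terraform import" command above.
import {
  to = powerscale_filepool_policy.example_filepool_policy
  id = "<policyID>" # placeholder: substitute the real file pool policy ID
}

# For the default policy, the ID string from the second command
# ("is_default_policy=true") would presumably be used instead.
```

With this form, the import is carried out during a regular terraform plan and terraform apply run, so it can be reviewed alongside other changes.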

The data source can be used to get a handle to a particular file pool policy:

data "powerscale_filepool_policy" "example_filepool_policy" {

filter {

# Optional list of names to filter upon

names = ["filePoolPolicySample", "Default policy"]

}

}

Or, to get the complete list of policies, including the default policy:

data "powerscale_filepool_policy" "all" {

}

You can then dereference the returned data structure as needed.
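As a rough illustration of working with the returned data, an output can walk the list with a for expression. This sketch assumes the data source exposes its results in a list attribute called file_pool_policies and that each element has a name field; both names are assumptions and should be checked against the provider documentation:

```hcl
output "filepool_policy_names" {
  # file_pool_policies and name are assumed attribute names (see the note above)
  value = [
    for policy in data.powerscale_filepool_policy.all.file_pool_policies : policy.name
  ]
}
```

If the attribute names differ, outputting data.powerscale_filepool_policy.all as a whole is a simple way to inspect the actual structure first.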

User and Access management

Following is a summary of the different resource-datasource pairs introduced to automate operations related to User and Access management on PowerScale:

LDAP Providers: CRUD operations

To create and manage LDAP providers, you can use the new resource as follows:

resource "powerscale_ldap_provider" "example_ldap_provider" {

# Required params for creating and updating.

name = "ldap_provider_test"

# root of the tree in which to search identities.

base_dn = "dc=tthe,dc=testLdap,dc=com"

# Specifies the server URIs. Begin URIs with ldap:// or ldaps://

server_uris = ["ldap://10.225.108.54"]

}

You can import existing LDAP providers using the provider name:

terraform import powerscale_ldap_provider.example_ldap_provider <ldapProviderName>

You can also get a handle through the corresponding data source, filtering on a variety of criteria:

data "powerscale_ldap_provider" "example_ldap_provider" {

filter {

names = ["ldap_provider_name"]

# If specified as "effective" or not specified, all fields are returned. If specified as "user", only fields with non-default values are shown. If specified as "default", the original values are returned.

scope = "effective"

}

}

ACL Settings: CRUD operations

PowerScale OneFS provides very powerful ACL capabilities, including a single namespace for multi-protocol access and its own internal ACL representation to perform access control. The internal ACL is presented as protocol-specific views of permissions so that NFS exports display POSIX mode bits for NFSv3 and show ACLs for NFSv4 and SMB. Now, we have a new resource to manage the global ACL settings for a given cluster:

resource "powerscale_aclsettings" "example_acl_settings" {

# Optional fields both for creating and updating

# Please check the acceptable inputs for each setting in the documentation

# access = "windows"

# calcmode = "approx"

# calcmode_group = "group_aces"

# calcmode_owner = "owner_aces"

# calcmode_traverse = "ignore"

# chmod = "merge"

# chmod_007 = "default"

# chmod_inheritable = "no"

# chown = "owner_group_and_acl"

# create_over_smb = "allow"

# dos_attr = "deny_smb"

# group_owner_inheritance = "creator"

# rwx = "retain"

# synthetic_denies = "remove"

# utimes = "only_owner"

}

Import is supported, and there is a corresponding data source for the resource as well.

Smart Quotas: CRUD operations

Following is an example that shows how to define a quota resource:

resource "powerscale_quota" "example_quota" {

name = "example-quota"

filesystem = powerscale_filesystem.example_fs.id

size = "10GB"

soft_limit = "8GB"

hard_limit = "12GB"

grace_period = "7d"

// Add any additional configurations as needed

}

- name: Specifies the name of the quota.

- filesystem: References the PowerScale filesystem to associate with the quota.

- size: Sets the size of the quota.

- soft_limit: Defines the soft limit for the quota.

- hard_limit: Defines the hard limit for the quota.

- grace_period: Specifies the grace period for the quota.

Data Source Example:

The following code snippet illustrates how to retrieve information about existing smart quotas within a PowerScale environment using Terraform. The powerscale_quota data source fetches information about the specified quota. An output is defined to display the ID of the retrieved quota:

data "powerscale_quota" "existing_quota" {

name = "existing-quota"

}

output "quota_id" {

value = data.powerscale_quota.existing_quota.id

}

- name: Specifies the name of the existing smart quota to query.

Cluster management

Groupnet: CRUD operations

Following is an example that shows how to define a GroupNet resource:

resource "powerscale_groupnet" "example_groupnet" {

name = "example-groupnet"

subnet = powerscale_subnet.example_subnet.id

gateway = "192.168.1.1"

netmask = "255.255.255.0"

vlan_id = 100

// Add any additional configurations as needed

}

- name: Specifies the name of the GroupNet.

- subnet: References the PowerScale subnet to associate with the GroupNet.

- gateway: Specifies the gateway for the GroupNet.

- netmask: Defines the netmask for the GroupNet.

- vlan_id: Specifies the VLAN ID for the GroupNet.

Data Source Example:

The following code snippet illustrates how to retrieve information about existing GroupNets within a PowerScale environment using Terraform. The powerscale_groupnet data source fetches information about the specified GroupNet. An output is defined to display the ID of the retrieved GroupNet:

data "powerscale_groupnet" "existing_groupnet" {

name = "existing-groupnet"

}

output "groupnet_id" {

value = data.powerscale_groupnet.existing_groupnet.id

}

- name: Specifies the name of the existing GroupNet to query.

Subnet: CRUD operations

Resource Example:

The following code snippet shows how to provision a new subnet:

resource "powerscale_subnet" "example_subnet" {

name = "example-subnet"

ip_range = "192.168.1.0/24"

network_mask = 24

gateway = "192.168.1.1"

dns_servers = ["8.8.8.8", "8.8.4.4"]

// Add any additional configurations as needed

}

- name: Specifies the name of the subnet to be created.

- ip_range: Defines the IP range for the subnet.

- network_mask: Specifies the network mask for the subnet.

- gateway: Specifies the gateway for the subnet.

- dns_servers: Lists the DNS servers associated with the subnet.

Data Source Example:

The powerscale_subnet data source fetches information about the specified subnet. The following code snippet illustrates how to retrieve information about existing subnets within a PowerScale environment. An output block is defined to display the ID of the retrieved subnet:

data "powerscale_subnet" "existing_subnet" {

name = "existing-subnet"

}

output "subnet_id" {

value = data.powerscale_subnet.existing_subnet.id

}

- name: Specifies the name of the existing subnet to query. The result is stored in the data object called existing_subnet.

Network pool

Following is an example demonstrating how to define a network pool resource:

resource "powerscale_networkpool" "example_network_pool" {

name = "example-network-pool"

subnet = powerscale_subnet.example_subnet.id

gateway = "192.168.1.1"

netmask = "255.255.255.0"

start_addr = "192.168.1.100"

end_addr = "192.168.1.200"

// Add any additional configurations as needed

}

- name: Specifies the name of the network pool.

- subnet: References the PowerScale subnet to associate with the network pool.

- gateway: Specifies the gateway for the network pool.

- netmask: Defines the netmask for the network pool.

- start_addr and end_addr: Specify the starting and ending IP addresses for the network pool range.

Data Source Example:

The following code snippet illustrates how to retrieve information about existing network pools. The powerscale_networkpool data source fetches information about the specified network pool. An output is defined to display the ID of the retrieved network pool:

data "powerscale_networkpool" "existing_network_pool" {

name = "existing-network-pool"

}

output "network_pool_id" {

value = data.powerscale_networkpool.existing_network_pool.id

}

- name: Specifies the name of the existing network pool to query.

SmartPool settings

Here's an example that shows how to configure SmartPool settings within a PowerScale storage environment using Terraform:

resource "powerscale_smartpool_settings" "example_smartpool_settings" {

name = "example-smartpool-settings"

default_policy = "balanced"

compression = true

deduplication = true

auto_tiering = true

auto_tiering_policy = "performance"

auto_tiering_frequency = "weekly"

// Add any additional configurations as needed

}

- name: Specifies the name of the SmartPool settings.

- default_policy: Sets the default policy for SmartPool.

- compression: Enables or disables compression.

- deduplication: Enables or disables deduplication.

- auto_tiering: Enables or disables auto-tiering.

- auto_tiering_policy: Sets the policy for auto-tiering.

- auto_tiering_frequency: Sets the frequency for auto-tiering.

Data Source Example:

The following example shows how to retrieve information about existing SmartPool settings within a PowerScale environment using Terraform. The powerscale_smartpool_settings data source fetches information about the specified SmartPool settings. An output is defined to display the ID of the retrieved SmartPool settings:

data "powerscale_smartpool_settings" "existing_smartpool_settings" {

name = "existing-smartpool-settings"

}

output "smartpool_settings_id" {

value = data.powerscale_smartpool_settings.existing_smartpool_settings.id

}

- name: Specifies the name of the existing SmartPool settings to query.

New resources

New resources and datasources are also available for the following entities:

- NTP Server

- NTP Settings

- Cluster Email Settings

In addition to the previously mentioned resource-datasource pairs for PowerScale Networking, an option to enable or disable “Source based networking” has been added to the Network settings resource. The corresponding datasources can retrieve this setting on a PowerScale cluster.

PowerFlex Provider v1.3 and v1.4

The following new resources and corresponding datasources have been added to PowerFlex:

Fault Sets: CRUD and Import operations

The following is an example that shows how to define a Fault Set resource within a PowerFlex storage environment using Terraform:

resource "powerflex_fault_set" "example_fault_set" {

name = "example-fault-set"

protection_domain_id = powerflex_protection_domain.example_pd.id

fault_set_type = "RAID-1"

// Add any additional configurations as needed

}

- name: Specifies the name of the Fault Set.

- protection_domain_id: References the PowerFlex Protection Domain to associate with the Fault Set.

- fault_set_type: Defines the type of Fault Set, such as "RAID-1".

If you would like to bring an existing fault set resource into Terraform state management, you can import it using the fault set id:

terraform import powerflex_fault_set.fs_import_by_id "<id>"

Data Source Example:

The following code snippet illustrates how to retrieve information about existing Fault Sets within a PowerFlex environment using Terraform. The powerflex_fault_set data source fetches information about the specified Fault Set. An output is defined to display the ID of the retrieved Fault Set:

data "powerflex_fault_set" "existing_fault_set" {

name = "existing-fault-set"

}

output "fault_set_id" {

value = data.powerflex_fault_set.existing_fault_set.id

}

- name: Specifies the name of the existing Fault Set to query.

Snapshot policies: CRUD operations

- Snapshot policy resource – create, update, and delete.

- Snapshot policy data source – to get information of an existing policy.

Two new data sources

- powerflex_node: to get complete information related to a PowerFlex node, including firmware, hardware, and node health details.

- powerflex_template: returns a large object whose information is organized into multiple groups.

OME Provider v1.2

Following are the new resources to support Firmware baselining and compliance that have been added to the Dell OME Provider:

- Firmware Catalog

- Firmware Baselines

Firmware Catalog

Here is an example of how the catalog resource can be used to create or update catalogs:

# Resource to manage a new firmware catalog

resource "ome_firmware_catalog" "firmware_catalog_example" {

# Name of the catalog required

name = "example_catalog_1"

# Catalog Update Type required.

# Sets to Manual or Automatic on schedule catalog updates of the catalog.

# Defaults to manual.

catalog_update_type = "Automatic"

# Share type required.

# Sets the different types of shares (DELL_ONLINE, NFS, CIFS, HTTP, HTTPS)

# Defaults to DELL_ONLINE

share_type = "HTTPS"

# Catalog file path, required for share types (NFS, CIFS, HTTP, HTTPS)

# Start directory path without leading '/' and use alphanumeric characters.

catalog_file_path = "catalogs/example_catalog_1.xml"

# Share Address required for share types (NFS, CIFS, HTTP, HTTPS)

# Must be a valid ipv4 (x.x.x.x), ipv6(xxxx:xxxx:xxxx:xxxx:xxxx:xxxx:xxxx:xxxx), or fqdn(example.com)

# And include the protocol prefix ie (https://)

share_address = "https://1.2.2.1"

# Catalog refresh schedule, Required for catalog_update_type Automatic.

# Sets the frequency of the catalog refresh.

# Will be ignored if catalog_update_type is set to manual.

catalog_refresh_schedule = {

# Sets to (Weekly or Daily)

cadence = "Weekly"

# Sets the day of the week (Monday, Tuesday, Wednesday, Thursday, Friday, Saturday, Sunday)

day_of_the_week = "Wednesday"

# Sets the hour of the day (1-12)

time_of_day = "6"

# Sets (AM or PM)

am_pm = "PM"

}

# Domain optional value for the share (CIFS), for other share types this will be ignored

domain = "example"

# Share user required value for the share (CIFS), optional value for the share (HTTPS)

share_user = "example-user"

# Share password required value for the share (CIFS), optional value for the share (HTTPS)

share_password = "example-pass"

}

Existing catalogs can be imported into the Terraform state with the import command:

# terraform import ome_firmware_catalog.cat_1 <id>

terraform import ome_firmware_catalog.cat_1 1

After running the import command, populate the name field in the config file to start managing this resource.

Firmware Baseline

Here is an example that shows how a baseline can be compared to an array of individual devices or device groups:

# Resource to manage a new firmware baseline

resource "ome_firmware_baseline" "firmware_baseline" {

// Required Fields

# Name of the catalog

catalog_name = "tfacc_catalog_dell_online_1"

# Name of the Baseline

name = "baselinetest"

// Only one of the following fields (device_names, group_names , device_service_tags) is required

# List of the Device names to associate with the firmware baseline.

device_names = ["10.2.2.1"]

# List of the Group names to associate with the firmware baseline.

# group_names = ["HCI Appliances","Hyper-V Servers"]

# List of the Device service tags to associate with the firmware baseline.

# device_service_tags = ["HRPB0M3"]

// Optional Fields

// This must always be set to true. The size of the DUP files used is 64 bits.

#is_64_bit = true

// Filters applicable updates where no reboot is required during create baseline for firmware updates. This field is set to false by default.

#filter_no_reboot_required = true

# Description of the firmware baseline

description = "test baseline"

}

Although the resource supports terraform import, in most cases a new baseline can be created using a Firmware catalog entry.
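One way to tie a baseline to a catalog that is managed in the same configuration is to reference the catalog resource's name attribute instead of repeating the string. The sketch below assumes the ome_firmware_catalog.firmware_catalog_example resource from the catalog example is defined in the same configuration. The resource label baseline_from_catalog is hypothetical, and the device name is a placeholder:

```hcl
resource "ome_firmware_baseline" "baseline_from_catalog" {
  # Name of the baseline
  name = "baselinetest"

  # Referencing the catalog resource creates an implicit dependency,
  # so Terraform creates the catalog before the baseline.
  catalog_name = ome_firmware_catalog.firmware_catalog_example.name

  # Placeholder device list; group_names or device_service_tags could be used instead
  device_names = ["10.2.2.1"]
}
```

Using a reference rather than a literal string means a rename of the catalog is picked up by the baseline on the next plan.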

Following is a list of new data sources and supported operations in Terraform Provider for Dell OME:

- Firmware Repository

- Firmware Baseline Compliance Report

- Firmware Catalog

- Device Compliance Report

Redfish Provider v1.1 and v1.2

Several new resources have been added to the Redfish provider to access and set different iDRAC attribute sets. Following are the details:

Certificate Resource

This resource imports an SSL certificate to iDRAC based on the input parameter Type. After the certificate is imported, iDRAC restarts automatically. By default, iDRAC comes with a self-signed certificate for its web server. To replace it with your own server certificate (signed by a trusted CA), two kinds of SSL certificates are supported: (1) Server certificate and (2) Custom certificate. Following are the steps to generate these certificates:

- Server Certificate:

  - Generate the CSR from iDRAC.

  - Create the certificate using the CSR and sign it with a trusted CA.

  - The certificate should be signed with a hashing algorithm equivalent to SHA-256.

- Custom Certificate:

  - An externally created custom certificate can be imported into the iDRAC.

  - Convert the external custom certificate into PKCS#12 format and encode it in base64. The conversion requires a passphrase, which should be provided in the 'passphrase' attribute.
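The base64 step for a custom certificate can be done inside Terraform with the built-in filebase64 function, which reads a file and returns its contents base64-encoded. The following is only a sketch of that preparation step. The file name custom_cert.p12, the variable name, and the local name are hypothetical, and how the values are wired into the certificate resource should be taken from the provider documentation:

```hcl
# Passphrase used when the certificate was converted to PKCS#12.
# Marked sensitive so Terraform redacts it in plan and apply output.
variable "custom_cert_passphrase" {
  type      = string
  sensitive = true
}

locals {
  # Hypothetical file name; filebase64 returns the file contents as a base64 string
  custom_cert_b64 = filebase64("${path.module}/custom_cert.p12")
}
```

The local value and the variable can then be passed to the certificate content and 'passphrase' arguments of the resource, which keeps the passphrase out of the configuration files themselves.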

Boot Order Resource

This Terraform resource is used to configure Boot Order and enable/disable Boot Options of the iDRAC Server. We can read the existing configurations or modify them using this resource.

Boot Source Override Resource

This Terraform resource is used to configure Boot sources of the iDRAC Server. If the state in boot_source_override_enabled is set to once or continuous, the value is reset to disabled after the boot_source_override_target actions have completed successfully. Changes to these options do not alter the BIOS persistent boot order configuration.

Manager Reset

This resource is used to reset the manager.

Lifecycle Controller Attributes Resource

This Terraform resource is used to get and set the attributes of the iDRAC Lifecycle Controller.

System Attributes Resource

This Terraform resource is used to configure System Attributes of the iDRAC Server. We can read the existing configurations or modify them using this resource. Import is also supported for this resource to include existing System Attributes in Terraform state.

iDRAC Firmware Update Resource

This Terraform resource is used to update the firmware of the iDRAC Server based on a catalog entry.

Resources

Here are the link sets for key resources for each of the Dell Terraform providers:

- Provider for PowerScale

- Provider for PowerFlex

- Provider for PowerStore

- Provider for Redfish

Author: Parasar Kodati, Engineering Technologist, Dell ISG

Dell OpenManage Enterprise Operations with Ansible Part 3: Compliance, Reporting, and Remediation

Fri, 08 Dec 2023 15:37:49 -0000


In case you missed it, check out the first post of this series for some background information on the openmanage Ansible collection by Dell and inventory management, as well as the second post to learn more about template-based deployment. In this blog, we’ll take a look at automating compliance and remediation workflows in Dell OpenManage Enterprise (OME) with Ansible.

Compliance baselines

Compliance baselines in OME are reports that show the ‘delta’ or difference between the specified desired configuration and the actual configuration of the various devices in the inventory. The desired configuration is specified as a compliance template, which can be cloned from either a deployment template or a device using the ome_template module covered in the deployment part of this series. Following are task examples for creating compliance templates:

- name: Create a compliance template from deploy template
  dellemc.openmanage.ome_template:
    hostname: "{{ hostname }}"
    username: "{{ username }}"
    password: "{{ password }}"
    validate_certs: no
    command: "clone"
    template_name: "email_deploy_template"
    template_view_type: "Compliance"
    attributes:
      Name: "email_compliance_template"

- name: Create a compliance template from reference device
  dellemc.openmanage.ome_template:
    hostname: "{{ hostname }}"
    username: "{{ username }}"
    password: "{{ password }}"
    validate_certs: no
    command: "create"
    device_service_tag:
      - "SVTG123"
    template_view_type: "Compliance"
    attributes:
      Name: "Configuration Compliance"
      Description: "Configuration Compliance Template"
      Fqdds: "BIOS"

Once we have the template ready, we can create the baseline, which is the main step where OME compares the template configuration to devices. Devices can be specified as a list or a device group. Depending on the number of devices, this step can be time-consuming. The following code uses a device group that has already been created, as shown in part 2 of this OME blog series:

- name: Create a configuration compliance baseline using an existing template
  dellemc.openmanage.ome_configuration_compliance_baseline:
    hostname: "{{ hostname }}"
    username: "{{ username }}"
    password: "{{ password }}"
    validate_certs: no
    command: create
    template_name: "email_compliance_template"
    description: "SNMP Email setting"
    names: "baseline_email"
    device_group_names: demo-group-all

Once the baseline task is run, we can retrieve the results, store them in a variable, and write the contents to a file for further analysis:

- name: Retrieve the compliance report of all of the devices in the specified configuration compliance baseline.
  dellemc.openmanage.ome_configuration_compliance_info:
    hostname: "{{ hostname }}"
    username: "{{ username }}"
    password: "{{ password }}"
    validate_certs: no
    baseline: "baseline_email"
  register: compliance_report
  delegate_to: localhost

- name: store the variable to json
  copy:
    content: "{{ compliance_report | to_nice_json }}"
    dest: "./output-json/compliance_report.json"
  delegate_to: localhost

Once the compliance details are stored in a variable, we can always extract details from it, like the list of non-compliant devices shown here:

- name: Extract IDs of non-compliant devices
  set_fact:
    non_compliant_devices: "{{ non_compliant_devices | default([]) + [device.Id] }}"
  loop: "{{ compliance_report.compliance_info }}"
  loop_control:
    loop_var: device
  when: device.ComplianceStatus > 1
  no_log: true

Remediation

The remediation task brings all devices to a desired template configuration, much like the template deployment job. For remediation, we use the same baseline module with command set to remediate and pass the list of non-compliant devices that we would like to remediate:

- name: Remediate the specified non-compliant devices to a configuration compliance baseline using device IDs # noqa: args[module]
  dellemc.openmanage.ome_configuration_compliance_baseline:
    hostname: "{{ hostname }}"
    username: "{{ username }}"
    password: "{{ password }}"
    validate_certs: no
    command: "remediate"
    names: "baseline_email"
    device_ids: "{{ non_compliant_devices }}"
  when: "non_compliant_devices | length > 0"
  delegate_to: localhost

Watch the following video to see in-depth how the different steps of this workflow are run:
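The filter-then-remediate decision in this workflow can also be sketched in plain Python, which is handy for analyzing the saved compliance_report.json offline. This is a minimal sketch: the sample data is made up, and it assumes, as the playbook condition does, that ComplianceStatus is numeric and that values above 1 mean non-compliant.

```python
from typing import Any

# Hypothetical sample shaped like the registered compliance_report variable.
# Field names follow the tasks in this post; the values are invented.
sample_report = {
    "compliance_info": [
        {"Id": 10101, "ComplianceStatus": 1},
        {"Id": 10102, "ComplianceStatus": 2},
        {"Id": 10103, "ComplianceStatus": 2},
    ]
}


def non_compliant_ids(report: dict[str, Any], threshold: int = 1) -> list[int]:
    """Return the IDs of devices whose ComplianceStatus exceeds the threshold."""
    return [
        device["Id"]
        for device in report.get("compliance_info", [])
        if device["ComplianceStatus"] > threshold
    ]


if __name__ == "__main__":
    ids = non_compliant_ids(sample_report)
    # Mirror the playbook's guard: only remediate when the list is non-empty.
    if ids:
        print(f"Devices to remediate: {ids}")
    else:
        print("Nothing to remediate")
```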

Conclusion

To recap, we’ve covered the creation of compliance templates and running baseline checks against your PowerEdge server inventory. We then saw how to retrieve detailed compliance reports and parse them in Ansible for further analysis. Finally, using the OME baseline Ansible module, we ran a remediation job to correct any configuration drift in non-compliant devices. Don’t forget to check out the detailed documentation for the openmanage Ansible modules, including both OME and iDRAC/Redfish modules and roles, as well as the complete code examples used here in this GitHub repository.

Author: Parasar Kodati, Engineering Technologist, Dell ISG

Dell OpenManage Enterprise Operations with Ansible Part 2: Templates and Deployment

Mon, 04 Dec 2023 16:30:25 -0000


In case you missed it, check out the first part of this blog series for some background on the openmanage Ansible collection by Dell. In this post, we’ll take a look at template-based deployment in OME driven from Ansible.

Template based deployment

Templates in OME are a great way to define the exact configuration that you would like to replicate on a group of servers. You can collect devices into multiple groups based on the workload profile and apply templates on these groups to achieve identical configurations based on security, performance, and other considerations.

To retrieve template information, you can use the dellemc.openmanage.ome_template_info module to query templates based on a variety of system_query_options. You can pass filter parameters as shown here:

- name: Get filtered template info based on name.
  dellemc.openmanage.ome_template_info:
    hostname: "{{ hostname }}"
    username: "{{ username }}"
    password: "{{ password }}"
    validate_certs: no
    system_query_options:
      filter: "Name eq 'empty_template'"
  register: template_info

- name: print template info
  debug:
    msg: "{{template_info}}"

Template creation

One way to create a template is by using an existing device configuration. You can also create a template by cloning an existing template and then modifying the parameters as necessary. Following are the Ansible tasks for each respective method:

- name: Create a template from a reference device.
  dellemc.openmanage.ome_template:
    hostname: "{{ hostname }}"
    username: "{{ username }}"
    password: "{{ password }}"
    validate_certs: no
    device_service_tag: "{{device_service_tag}}"
    attributes:
      Name: "{{device_service_tag}}-template"
      Description: "ideal Template description"

- name: Clone a template
  dellemc.openmanage.ome_template:
    hostname: "{{ hostname }}"
    username: "{{ username }}"
    password: "{{ password }}"
    validate_certs: no
    command: "clone"
    template_name: "empty_template"
    attributes:
      Name: "deploy_clone"
  delegate_to: localhost

Template Attribute list

Very often as part of day-2 operations you may have to change a set of attributes, which can be difficult given that a template is a very detailed object with thousands of parameters. To see what parameters are available to modify in a template, we must get the complete list of parameters using a REST API call. In the following example, we first establish an API connection and then make a call to api/TemplateService/Templates(11)/Views(1)/AttributeViewDetails. We then store this information in a JSON file for further exploration and parsing:

- name: Get OME API session token
  ansible.builtin.uri:
    url: "https://{{ hostname }}/api/SessionService/Sessions"
    method: POST
    body_format: json
    validate_certs: false
    status_code: [200, 201]
    body: |
      {
        "UserName": "{{ username }}",
        "Password": "{{ password }}",
        "SessionType":"API"
      }
  register: api_response
  tags: "api-call"

- name: Store API auth token
  ansible.builtin.set_fact:
    ome_auth_token: "{{ api_response.x_auth_token }}"
  tags: "api-call"

- name: Get attribute details
  uri:
    url: "https://{{ hostname }}/api/TemplateService/Templates(11)/Views(1)/AttributeViewDetails"
    validate_certs: false
    method: GET
    #body_format: json
    #body: |
    #  {"privileges":{{ admin_priv.json.privileges }}}
    headers:
      X-Auth-Token: "{{ ome_auth_token }}"
    status_code: [200, 201, 204, 409]
  register: api_output

- name: Save device_info to a file
  copy:
    content: "{{ api_output | to_nice_json }}"
    dest: "./output-json/api_output.json"

Once we have the JSON file with the complete set of attributes, we can find the exact attribute we want to modify. Given that the attribute JSON file can span thousands of lines, we can use a simple Python script to run a quick search of the attributes file based on keywords. Here are a few lines that retrieve all the attributes containing Email:

import json

# Load the attribute details saved by the playbook.
with open('./output-json/api_output.json') as f:
    data = json.load(f)

# Print every sub-group whose display name mentions "Email", followed by its attributes.
for item in data['json']['AttributeGroups'][0]['SubAttributeGroups']:
    if "Email" in item['DisplayName']:
        print('\n')
        print(item['DisplayName'])
        print('-------------------------')
        for subitem in item['Attributes']:
            print(subitem['DisplayName'])

Once we have the attribute that needs to be modified, we can use the ome_template module with (a) command set to modify and (b) attribute name and value set as follows:

- name: Modify template
  dellemc.openmanage.ome_template:
    hostname: "{{ hostname }}"
    username: "{{ username }}"
    password: "{{ password }}"
    validate_certs: no
    command: "modify"
    template_name: "deploy_clone"
    attributes:
      Attributes:
        - DisplayName: 'iDRAC, RAC Email Alert, EmailAlert 1 Email Alert Address'
          Value: "world123@test.com"

Device grouping and deployment

To apply templates to multiple devices, we can create device groups and then apply the deployment template for the entire group. To add devices to a group, you can create an array of devices. Here, I am passing the entire set of devices that I queried using ome_device_info:

- name: Retrieve basic inventory of all devices.
  dellemc.openmanage.ome_device_info:
    hostname: "{{ hostname }}"
    username: "{{ username }}"
    password: "{{ password }}"
    validate_certs: no
  register: device_info_result

- name: get all service tags
  set_fact:
    service_tags: "{{ service_tags | default([]) + [item.DeviceServiceTag] }}"
  loop: "{{ device_info_result.device_info.value }}"
  no_log: true

- name: Create a device group
  dellemc.openmanage.ome_groups:
    hostname: "{{ hostname }}"
    username: "{{ username }}"
    password: "{{ password }}"
    validate_certs: no
    name: "demo-group-all"

- name: Add devices to a static device group
  dellemc.openmanage.ome_device_group:
    hostname: "{{ hostname }}"
    username: "{{ username }}"
    password: "{{ password }}"
    validate_certs: no
    name: "demo-group-all"
    device_service_tags: "{{service_tags}}"

Now, we are ready to deploy our template to the device group we created using the same ome_template module but with command set to deploy:

- name: Deploy template on groups
  dellemc.openmanage.ome_template:
    hostname: "{{ hostname }}"
    username: "{{ username }}"
    password: "{{ password }}"
    validate_certs: no
    command: "deploy"
    template_name: "deploy_clone"
    device_group_names:
      - "demo-group-all"

You can watch the following video to see in depth how the different steps of the workflow are run:
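If you search the attribute JSON often, the keyword lookup from earlier in this post can be wrapped in a small reusable function. The following is a sketch under assumptions: it walks nested groups recursively, matches case-insensitively, and runs against a tiny made-up sample rather than a real OME response. Only the key names (DisplayName, SubAttributeGroups, Attributes) come from the script shown earlier; the function name and sample values are hypothetical.

```python
from typing import Any, Iterator


def iter_matching_attributes(
    groups: list[dict[str, Any]], keyword: str, path: tuple[str, ...] = ()
) -> Iterator[tuple[tuple[str, ...], str]]:
    """Yield (group path, attribute name) for attributes whose immediate
    group name or own display name contains the keyword."""
    needle = keyword.lower()
    for group in groups:
        group_path = path + (group.get("DisplayName", ""),)
        group_hit = needle in group_path[-1].lower()
        for attr in group.get("Attributes", []):
            name = attr.get("DisplayName", "")
            if group_hit or needle in name.lower():
                yield group_path, name
        # Recurse into nested groups, if any.
        yield from iter_matching_attributes(
            group.get("SubAttributeGroups", []), keyword, group_path
        )


# Invented sample data, far smaller than a real attribute view.
sample_groups = [
    {
        "DisplayName": "iDRAC",
        "SubAttributeGroups": [
            {
                "DisplayName": "RAC Email Alert",
                "Attributes": [
                    {"DisplayName": "EmailAlert 1 Email Alert Address"},
                    {"DisplayName": "EmailAlert 1 Email Alert Enable"},
                ],
            },
            {
                "DisplayName": "SNMP",
                "Attributes": [{"DisplayName": "SNMP Agent Community"}],
            },
        ],
    }
]

if __name__ == "__main__":
    for group_path, attribute in iter_matching_attributes(sample_groups, "email"):
        print(" > ".join(group_path), "|", attribute)
```

Against a real file, you would pass the AttributeGroups list loaded from api_output.json instead of sample_groups.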

Conclusion

To recap, we’ve covered how to create templates, query and find the available attributes we can modify, and then modify them in a template, as well as how to group devices and deploy templates to those groups. You can find the code mentioned in this blog on GitHub as part of this Automation examples repo.

Author: Parasar Kodati, Engineering Technologist, Dell ISG

Generative AI Tools for Infrastructure As Code Practitioners

Fri, 01 Dec 2023 15:32:51 -0000


Today, Infrastructure as Code (IaC) is a mainstream technology used extensively by DevOps and ITOps engineers to manage dynamic IT environments consisting of data, applications, and infrastructure with increasing scale, complexity, and diversity. With a GitOps-driven workflow, engineers can bring much-needed standardization, security, and operational consistency across diverse environments. While there are a multitude of compelling reasons to embrace IaC, one innovation tips the scales toward a resounding yes, and that is generative AI. When coding assistants were released throughout this year, there was some skepticism around the accuracy of generated code; however, this game-changing technology is evolving rapidly and becoming a key enabler for IaC, transforming it from a best practice to an indispensable strategy.

In this blog post, we'll explore some of the specific tools under the GenAI umbrella and how they can help you accelerate your workflows, learning, and even your career! Given how OpenAI has been leading the industry with generative AI tools like ChatGPT, I am going to refer to many of the specific tools from OpenAI. That said, many broad GenAI capabilities are possible with other capable large language models. To learn more, check out this great whitepaper on building general purpose LLM environments by my colleagues at Dell.

Code generation

If I have to choose between a monthly haircut or a generative code assistant subscription, I would choose the latter. Tools like GitHub Copilot are becoming indispensable no matter which programming language or framework you use. These tools cut down the time it takes to create the building blocks of code necessary for automating tasks, and the output is so elegantly generated that you will not find a single line of generated code without a meaningful comment that your high school English teacher would be proud of. Examples of how this can help are shown in this Ansible for OpenManage Enterprise video.

Code interpretation

Large Language Models (LLMs) are capable of interpreting code from a wide variety of programming languages thanks to their extensive training on millions of lines of code. Code interpretation is another huge capability: you can prompt the models with code itself, which is often easier than describing the task in plain English. This can be especially helpful when refactoring code or when engineers are tasked with understanding and improving upon existing automation scripts. Following is a quick example where I generated the Ansible equivalent of a good old shell script using the latest ChatGPT 4 model.

Shell script input to create compressed backup of a folder:

#!/bin/bash

# Set the directory to back up and the backup location
DIRECTORY_TO_BACKUP="/home/user/data"
BACKUP_LOCATION="/backup"
TIMESTAMP=$(date +"%F")
BACKUP_FILE="backup-$TIMESTAMP.tar.gz"

# Create the backup
tar -czf $BACKUP_LOCATION/$BACKUP_FILE $DIRECTORY_TO_BACKUP

# Check if the backup was successful
if [ $? -eq 0 ]; then
  echo "Backup completed successfully."
else
  echo "Backup failed." >&2
fi

Ansible output I got the first time:

---
- name: Create Backup of Directory
  hosts: all
  tasks:
    - name: Create backup file
      ansible.builtin.archive:
        path: /home/user/data
        dest: /backup/backup-{{ ansible_date_time.iso8601_basic_short }}.tar.gz
        format: gz

Output received when I prompted the model for a more structured code block with variables:

---
- name: Create Backup of Directory with Variables
  hosts: all
  vars:
    backup_source: /home/user/data # Define the source directory to back up
    backup_destination: /backup # Define the backup destination directory
    backup_filename: "backup-{{ ansible_date_time.iso8601_basic_short }}.tar.gz" # Backup filename with a timestamp
  tasks:
    - name: Create backup directory if it does not exist
      ansible.builtin.file:
        path: "{{ backup_destination }}"
        state: directory
        mode: '0755'

    - name: Create backup file
      ansible.builtin.archive:
        path: "{{ backup_source }}"
        dest: "{{ backup_destination }}/{{ backup_filename }}"
        format: gz

The latest ChatGPT interface supports attaching files, so you don’t even have to copy-paste code for refactoring. Simply organize the files and attach them to your prompt.
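For comparison, here is how the same backup logic might look in Python. To be clear, this is a hand-written sketch and not model output; the function name is my own, and it assumes the same naming scheme as the shell script (backup-YYYY-MM-DD.tar.gz). The demo runs against a temporary directory so it does not touch /home or /backup.

```python
import tarfile
import tempfile
from datetime import date
from pathlib import Path


def create_backup(source: Path, destination: Path) -> Path:
    """Create destination/backup-YYYY-MM-DD.tar.gz from source and return its path."""
    # Like the second playbook, make sure the destination directory exists.
    destination.mkdir(parents=True, exist_ok=True)
    archive_path = destination / f"backup-{date.today().isoformat()}.tar.gz"
    with tarfile.open(archive_path, "w:gz") as archive:
        # arcname stores entries relative to the source folder's own name.
        archive.add(source, arcname=source.name)
    return archive_path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        source_dir = Path(tmp) / "data"
        source_dir.mkdir()
        (source_dir / "example.txt").write_text("hello")
        print(f"Backup written to {create_backup(source_dir, Path(tmp) / 'backup')}")
```

Pasting a snippet like this into a prompt alongside the shell script is also a quick way to ask a model to compare approaches or to translate in the other direction.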

Error analysis and debugging

If you think copy-pasting error messages into Stack Overflow is the best way to debug, think again. LLM-based code interpreter tools can analyze error messages and logs and, in many cases, identify the root causes for frequently occurring issues. This applies to any code, including Ansible playbooks and Terraform modules, where an LLM can swiftly recommend fixes, link to the relevant documentation, or even automate the resolution process in some cases.

Prompt engineering

If you think the term “prompt engineering” is just to make fun of GenAI tools, it’s time to reframe your perspective. Prompting has become a critical factor in determining the accuracy of responses from LLMs. The more specific and detailed the prompt, the more usable the response. Here are some Infrastructure as Code examples:

"I am working on a Terraform project where I need to provision an AWS EC2 instance with specific requirements: it should be of type 't2.micro', within the 'us-east-1' region, and include tags for 'Name' as 'MyInstance' and 'Environment' as 'Development'. Could you provide me with the Terraform code snippet that defines this resource?"

"I need to create an Ansible playbook that performs a common operation: updating all packages on a group of Ubuntu servers. The playbook should be idempotent and only target servers in the 'webservers' group. It must also restart the 'nginx' service only if the updates require a restart. Can you generate the YAML code for this playbook?"

And, if you are on a mission to change the world with Automation, maybe something like this:

"For my automation scripts using Python in a DevOps context, I require a robust error handling strategy that logs errors to a file and sends an email notification when a critical failure occurs. The script is meant to automate the deployment process. Could you provide a Python code sample that demonstrates this error handling? Here is the code: <your python code>"

So, if needed, skip the coffee a few times or a haircut, but please let a code assistant help you.

Your own GPT

Already have a ChatGPT tab in your browser at all times? Already a prompting machine? There is more you can do with GenAI than just ‘plain’ (very interesting how quickly this technology is becoming table stakes and commoditized) code generation.

Thanks to the recently announced GPTs and Assistants API by OpenAI, you can create a tailor-built model that is significantly faster and more precise in responses. You can train GPT models with anything from a policy document to coding guidelines to a sizing calculator for your IT infrastructure and have chat bots use these backend models to answer queries from customers or internal stakeholders. Please note that this does have a cost associated with it depending on the number of clients and usage. Please visit the OpenAI website to check out various plans and pricing. While we won’t go into detail on the topic in this particular blog, let me lay out the key elements that make up a custom GPT:

- Code Interpreter

- Knowledge Retrieval

- Your own functions

Code interpreter

This is not much different from the coding capabilities of ChatGPT or GitHub Copilot. In the context of creating custom GPTs, you can basically check this as a required tool. You may ask, “why do you even have to select this foundational feature?” Put simply, it’s because of the pay-for-what-you-use pricing model in which users who don’t need this capability can uncheck it.

Knowledge retrieval

AI-powered knowledge retrieval systems can instantly pull up relevant technical documentation and best practices that are pertinent to the task at hand, whether it's crafting an Ansible playbook or defining resources in Terraform. This immediate access to information accelerates the development process and aids in maintaining industry standards across both platforms. Stay tuned for examples in future blog posts.

Your own functions

If you have already built scripts and routines to compute or make decisions, you can incorporate them into your custom GPT as well. I recently saw an example where an ROI calculator for switching to solar power had been incorporated into a chat bot to help customers visiting their website evaluate the switch to solar. Your GPT can be a sizer tool or a performance benchmarking tool for the end user for which you are building it.
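To make this concrete, here is a rough sketch of what exposing one of your own routines might involve. Everything in it is illustrative: the payback formula is deliberately simplistic, the function and registry names are hypothetical, and the JSON-schema-style descriptor reflects how function-calling interfaces commonly describe tools rather than the exact format any particular platform requires. Check your platform's documentation for the real registration steps.

```python
import json
from typing import Any, Callable


def solar_payback_years(system_cost: float, annual_savings: float) -> float:
    """Simple payback period: years needed for savings to cover the cost."""
    if annual_savings <= 0:
        raise ValueError("annual_savings must be positive")
    return round(system_cost / annual_savings, 1)


# Assumed descriptor shape: a name, a description, and JSON-schema parameters.
FUNCTION_SPEC = {
    "name": "solar_payback_years",
    "description": "Estimate how many years a solar installation takes to pay for itself.",
    "parameters": {
        "type": "object",
        "properties": {
            "system_cost": {"type": "number", "description": "Installed cost"},
            "annual_savings": {"type": "number", "description": "Yearly savings"},
        },
        "required": ["system_cost", "annual_savings"],
    },
}

# Local registry mapping function names to the callables that implement them.
REGISTRY: dict[str, Callable[..., Any]] = {"solar_payback_years": solar_payback_years}


def dispatch(name: str, arguments_json: str) -> Any:
    """Run the named function with JSON-encoded arguments, as a chat backend might."""
    return REGISTRY[name](**json.loads(arguments_json))


if __name__ == "__main__":
    print(dispatch("solar_payback_years", '{"system_cost": 12000, "annual_savings": 1500}'))
```

The same pattern would apply to a sizer or benchmarking routine: keep the calculation in ordinary code you can test, and let the model decide when to call it.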

Proprietary and sensitive data

While LLMs are the best thing to happen to programmers in a long time, one should exercise extreme caution when using data that is not publicly available to train AI models. Depending on the use case, extensive guard rails must be put in place when using sensitive or proprietary data in your prompts or knowledge documents for training. If such guard rails do not exist in your organization, consider championing their creation and being a part of the process of helping your organization achieve a greater degree of maturity in AI adoption.

Dell and AI

Dell has been at the forefront of the GenAI revolution as the leading infrastructure provider for Artificial Intelligence solutions for the enterprise. Check out this insightful talk on Dell AI strategy by CTO John Roese that goes over the AI-in, AI-on, AI-for, and AI-with aspects of Dell’s approach. Following are more resources to learn about infrastructure setup for LLM training and deployment in particular:

- Blog: Dell and Meta Collaborate to Drive Generative AI Innovation

- White Paper: Llama 2: Inferencing on a Single GPU

- Design Guide: Generative AI in the Enterprise – Inferencing

Author: Parasar Kodati, Engineering Technologist, Dell ISG

Dell OpenManage Enterprise Operations with Ansible Part 1: Inventory Management

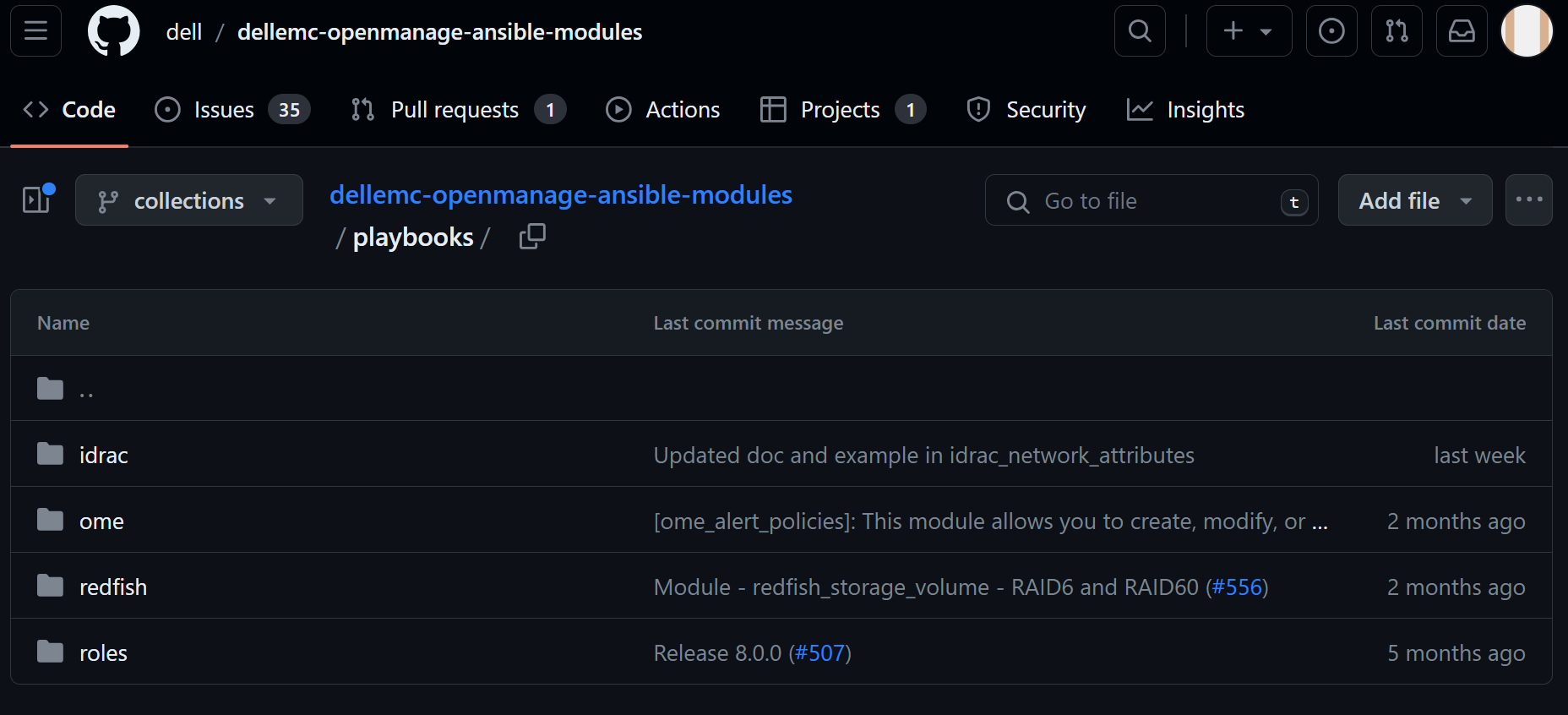

Tue, 14 Nov 2023 14:36:09 -0000


The OpenManage collection

Dell OpenManage Enterprise (OME) is a powerful fleet management tool for managing and monitoring Dell PowerEdge server infrastructure. Very recently, Dell announced OME 4.0, complete with a litany of new functionality that my colleague Mark detailed in another blog. Here, we'll explore how to automate inventory management of devices managed by OME using Ansible.

Prerequisites

Before we get started, ensure you have Ansible and Python installed on your system. Additionally, you will need to install Dell’s openmanage Ansible collection from Ansible Galaxy using the following command:

ansible-galaxy collection install dellemc.openmanage

The source code and examples for the openmanage collection can be found on GitHub as well. Note that this collection includes modules and roles for iDRAC/Redfish interfaces as well as modules for OpenManage Enterprise with complete fleet management workflows. In this blog, we will look at examples from the OME modules within the collection.

Figure 1. Dell openmanage ansible modules on GitHub

Inventory management workflows

Inventory management typically involves gathering details like the different devices under management, their health information, and so on. The dellemc.openmanage.ome_device_info is the optimal module for collecting the most information. Let’s dig into some tasks to get this information.

Retrieve basic inventory

This task retrieves basic inventory information for all devices managed by OME:

- name: Retrieve basic inventory of all devices
  dellemc.openmanage.ome_device_info:
    hostname: "{{ hostname }}"
    username: "{{ username }}"
    password: "{{ password }}"
    validate_certs: no
  register: device_info_result

Once we have the output of this captured in a variable like device_info_result, we can drill down into the object to retrieve data like the number of servers and their service tags and print such information using the debug task:

- name: Device count
  debug:
    msg: "Number of devices: {{ device_info_result.device_info.value | length }}"

- name: get all service tags
  set_fact:
    service_tags: "{{ service_tags | default([]) + [item.DeviceServiceTag] }}"
  loop: "{{ device_info_result.device_info.value }}"
  no_log: true

- name: List service tags of devices
  debug:
    msg: "{{ service_tags }}"

Note that device_info_result is a huge object. To view all the information that is available to extract, write the contents of the variable to a JSON file:

- name: Save device_info to a file
  copy:
    content: "{{ device_info_result | to_nice_json }}"
    dest: "./output-json/device_info_result.json"

Subsystem health

Subsystem health information is another body of information that is extremely granular. This information is not part of the default module task. To get this data, we need to explicitly set the fact_subset option to subsystem_health. Following is the task to retrieve subsystem health information for devices identified by their service tags. We pass the entire array of service tags to get all the information at once:

- name: Retrieve subsystem health of specified devices identified by service tags.
  dellemc.openmanage.ome_device_info:
    hostname: "{{ hostname }}"
    username: "{{ username }}"
    password: "{{ password }}"
    validate_certs: no
    fact_subset: "subsystem_health"
    system_query_options:
      device_service_tag: "{{ service_tags }}"
  register: health_info_result

Using the register directive, we loaded the subsystem health information into the variable health_info_result. Once again, we recommend writing this information to a JSON file using the following code in order to see the level of granularity that you can extract:

- name: Save device health info to a file
  copy:
    content: "{{ health_info_result | to_nice_json }}"
    dest: "./output-json/health_info_result.json"

To identify device health issues, we loop through all the devices with the service_tags variable and check if there are any faults reported for each device. When faults are found, we append the fault information as a dictionary to a list variable, shown as the inventory_issues variable in the following code. Each dictionary has three fields: service tag, fault summary, and the fault list. Note that the fault list itself is an array containing all the faults for the device:

- name: Gather information for devices with issues
  set_fact:
    inventory_issues: >
      {{
        inventory_issues +
        [{
          'service_tag': item,
          'fault_summary': health_info_result['device_info']['device_service_tag'][service_tags[index]]['value'] | json_query('[?FaultSummaryList].FaultSummaryList[]'),
          'fault_list': health_info_result['device_info']['device_service_tag'][service_tags[index]]['value'] | json_query('[?FaultList].FaultList[]')
        }]
      }}
  loop: "{{ service_tags }}"
  when: " (health_info_result['device_info']['device_service_tag'][service_tags[index]]['value'] | json_query('[?FaultList].FaultList[]') | length) > 0"
  loop_control:
    index_var: index
  no_log: true

In the next task, we loop through the devices with issues and gather more detailed fault information for each. The tasks to perform this extraction are included in an external task file named device_issues.yml, which is run for every member of the inventory_issues list. Note that we are passing device_item and device_index as variables for each iteration of device_issues.yml:

- name: Gather fault details
  include_tasks: device_issues.yml
  vars:
    device_item: "{{ item }}"
    device_index: "{{ index }}"
  loop: "{{ inventory_issues }}"
  loop_control:
    index_var: index
  no_log: true

Within device_issues.yml, we first initialize a dictionary variable that can gather information about the faults for the device. The variable captures the subsystem, fault message, and the recommended action:

- name: Initialize specifics structure
  set_fact:
    current_device:
      {
        'service_tag': '',
        'subsystem': [],
        'Faults': [],
        'Recommendations':[]
      }

We loop through all the faults for the device and populate the fields of the dictionary variable:

- name: Assign fault specifics
  set_fact:
    current_device:
      service_tag: "{{ device_item.service_tag }}"
      Faults: "{{ current_device.Faults + [fault.Message] }}"
      Recommendations: "{{ current_device.Recommendations + [fault.RecommendedAction] }}"
  loop: "{{ device_item.fault_list }}"
  loop_control:
    loop_var: fault
  when: device_item.fault_list is defined
  no_log: true

We then append to a global variable that is aggregating the information for all the devices:

- name: Append current device to fault_details
  set_fact:
    fault_details: "{{ fault_details + [current_device] }}"

Back in the main YAML playbook, once we have all the information captured in fault_details, we can print the information we need or store it in a file:

- name: Print fault details
  debug:
    msg: "Fault details: {{ item.Faults }}"
  loop: "{{ fault_details }}"
  loop_control:
    label: "{{ item.service_tag }}"

- name: Print recommendations
  debug:
    msg: "Recommended actions: {{ item.Recommendations }}"
  loop: "{{ fault_details }}"
  loop_control:
    label: "{{ item.service_tag }}"

Check out the following video to see how the different steps of the workflow are run:
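If you prefer to post-process the saved health JSON outside of Ansible, the aggregation performed by the tasks above can be approximated in Python. This sketch makes a simplifying assumption: the input is a dictionary keyed by service tag whose values are lists of subsystem entries, some of which carry a FaultList. The real health_info_result object is nested more deeply, and the sample values below are invented.

```python
from typing import Any

# Hypothetical, heavily simplified stand-in for the subsystem health data.
sample_health = {
    "SVTG123": [
        {
            "FaultList": [
                {
                    "Message": "Power supply redundancy is lost.",
                    "RecommendedAction": "Check the power supply cabling.",
                }
            ]
        },
        {},  # a subsystem entry without faults
    ],
    "SVTG456": [{}],
}


def collect_fault_details(health_by_tag: dict[str, list[dict[str, Any]]]) -> list[dict[str, Any]]:
    """Build one record per device that has at least one fault."""
    details = []
    for service_tag, subsystems in health_by_tag.items():
        # Flatten every FaultList found across the device's subsystem entries.
        faults = [fault for entry in subsystems for fault in entry.get("FaultList", [])]
        if not faults:
            continue  # healthy devices are skipped, as in the playbook's when clause
        details.append(
            {
                "service_tag": service_tag,
                "Faults": [fault["Message"] for fault in faults],
                "Recommendations": [fault["RecommendedAction"] for fault in faults],
            }
        )
    return details


if __name__ == "__main__":
    for record in collect_fault_details(sample_health):
        print(record["service_tag"], record["Faults"], record["Recommendations"])
```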

Conclusion

To recap, we looked at the various information gathering tasks within inventory management of a large PowerEdge server footprint. Note that I used health information objects to demonstrate how to drill down to find the information you need; however, you can do this with any fact subset that can be retrieved using the dellemc.openmanage.ome_device_info module. You can find the code from this blog on GitHub as part of this Automation examples repo.

Author: Parasar Kodati, Engineering Technologist, Dell ISG

Bringing Efficiency to Dell Business with RabbitMQ Messaging Self-Service

Mon, 23 Oct 2023 16:07:34 -0000


RabbitMQ messaging platform is widely used in Dell Digital to connect various distributed heterogeneous applications at scale. It provides a common platform to exchange messages between producing applications and consuming applications.

Providing a ready-to-use, production-grade RabbitMQ messaging platform to application developers requires wiring up various services from multiple departments across Dell Digital. Traditionally, to obtain a RabbitMQ platform, an application developer triggers the process by submitting a ticket to the messaging platform team, and a series of manual processes kicks off for services (such as VM provisioning, RabbitMQ setup, load balancer, security configuration, and monitoring). Each of these touchpoint interfaces could in turn have an internal dependency on other systems to fulfill its service. Each of these service interfaces must maintain the requester/developer information as part of the fulfillment service in its respective datastore. If there are any challenges in understanding the request from the requester, it might end up with lengthy email conversations to record the fulfillment process. Overall, the ticket-based approach to delivering a service to end users is not only inefficient but also time-consuming. The entire flow of activities can take an estimated 3 to 4 weeks to complete. The ability to fulfill the service quickly and seamlessly becomes indispensable for a large organization like Dell with a developer workforce of about 4000.

So, how did we streamline the fulfillment process flow?

The answer is implementing a smart workflow. With a charter to provide Platform as a Service (PaaS) for RabbitMQ, once a request is accepted, the messaging team owns the entire process chain.

Transforming such a manually intensive process flow into a fully automated self-service solution is not only exhilarating but also a modernization journey to provide an exceptional user experience. The RabbitMQ cloud self-service provisioning portal is a unified and integrated solution that enables data center resources, installs required software, and configures various features to provide a ready-to-use RabbitMQ messaging platform. It provides a complete end-to-end solution, delivering a seamless experience to developers. There is no dependency on any team to obtain the service, resulting in less friction and thus improving overall efficiencies, including cost and time savings.

We followed the Saga architecture pattern to manage this complex process flow across heterogeneous distributed systems while maintaining data consistency. We broke down the process into multiple stages, with each stage further divided into smaller, manageable steps. Each stage corresponds to a call to a service-providing system, and the calls are coordinated to complete the whole process. These stages are designed to be idempotent, meaning they can be safely retried if a failure occurs. This is especially important in distributed systems, where the processing time of different services or processes may vary. This approach also allows asynchronous processing, which enables greater concurrency and better performance.
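To make the idea of idempotent, retryable stages concrete, here is a minimal sketch of a saga-style orchestrator in Python. It is an illustration only, not the portal's actual implementation: the stage names, the `run_saga` helper, and the use of compensating actions to undo completed stages are all assumptions based on how the Saga pattern is commonly described.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

State = Dict[str, str]


@dataclass
class Stage:
    """One saga stage: an idempotent action plus a compensating action."""
    name: str
    action: Callable[[State], None]
    compensate: Callable[[State], None]


def run_saga(stages: List[Stage], state: State, max_attempts: int = 3) -> bool:
    """Run stages in order, retrying each one up to max_attempts times.

    If a stage still fails after its last attempt, the stages that already
    completed are compensated in reverse order and False is returned.
    """
    completed: List[Stage] = []
    for stage in stages:
        for attempt in range(1, max_attempts + 1):
            try:
                stage.action(state)
                completed.append(stage)
                break
            except Exception:
                if attempt == max_attempts:
                    for done in reversed(completed):
                        done.compensate(state)
                    return False
    return True


# Hypothetical stages. Each action is idempotent: repeating it after a
# partial failure leaves the state unchanged.
def provision_vm(state: State) -> None:
    state.setdefault("vm", "vm-001")


def release_vm(state: State) -> None:
    state.pop("vm", None)


def make_flaky_install(failures: int) -> Callable[[State], None]:
    """Build an install action that fails `failures` times before succeeding."""
    remaining = {"count": failures}

    def install(state: State) -> None:
        if remaining["count"] > 0:
            remaining["count"] -= 1
            raise RuntimeError("transient install failure")
        state.setdefault("rabbitmq", "installed")

    return install


def uninstall(state: State) -> None:
    state.pop("rabbitmq", None)


if __name__ == "__main__":
    state: State = {}
    stages = [
        Stage("vm", provision_vm, release_vm),
        Stage("rabbitmq", make_flaky_install(1), uninstall),
    ]
    print(run_saga(stages, state), state)
```

In this sketch, a transient failure in the install stage is absorbed by a retry, while a stage that keeps failing triggers the compensations so that no half-provisioned resources are left behind.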

What do we provide to our customers?

We offer developers self-service access to on-demand, enterprise-ready RabbitMQ clusters hosted on a VM or in a private cloud, curated through a predefined service catalog. The service catalog lists the essential service features, including the price associated with each item. We standardized the service offerings by cluster size, hosting platform, platform capacity, and high availability. Users browse the catalog, learn about the service offering from the description, and launch it all on their own. The key Service Level Objective (SLO) for the RabbitMQ self-service is to offer a scalable, resilient RabbitMQ platform through an intuitive portal, backed by support documentation, accessible technical support channels, and adherence to organizational security requirements for data protection and access control.

What is our business value stream?

- Autonomy - Enable developers to provision and deprovision platforms at any time at their own pace by moving the control to the hands of the developers. They can focus on their core business implementation without worrying about resource availability. They can plan their business releases in a more deterministic manner. This autonomy helps increase developer productivity.

- 24/7 availability – RabbitMQ self-service operates around the clock, regardless of business hours. Developers and users have uninterrupted access to this service from any time zone.

- Visibility – Real-time visibility and tracking of the service delivery progress.

- Stability – Improve stability and reduce manual errors with automation.

- Cost and time saving – Self-service significantly reduces operational costs by reducing manual work and provides significant time savings for both the application team and the RabbitMQ service owner. No more lengthy email exchanges, no need to continuously monitor ticket status, no need to wait for multiple platform owners to complete their tasks, no integration bottleneck, and no manual errors to revert. Thanks to these simplifications, the entire fulfillment process can be completed within 1.5 hours for “RabbitMQ platform hosting on VM” and within 10 minutes for “RabbitMQ platform hosting in Kubernetes container”. Previously, these processes would have required 3+ weeks.

How do we measure service quality?

We built observability by collecting data points from the infrastructure, the RabbitMQ platform, and the applications. We created a dashboard with a bird’s-eye view and an option to examine the details separated by category. These details include platform uptime, latency, throughput, usage, platform growth, historical health, infrastructure usage, and I/O metrics, to name a few. We have a proactive alerting mechanism to identify potential issues before they have a major impact on the business.

The Dell self-service cloud portal has an integrated reporting feature that provides insight into key performance indicators across the entire self-service management spectrum, including RabbitMQ service. These KPIs include self-service failure rate, self-service adoption rate, platform provisioning time, and so on.

We continuously monitor and measure these metrics to uncover opportunity areas and to track service effectiveness, service quality, and customer satisfaction rates. The objective is to offer services to developers more effectively, leading to increased efficiency and a better self-sustained Dell Digital ecosystem.

About the Author: Gitanjali Sahoo

Gitanjali Sahoo is the Senior Manager of the Application Messaging Platform under Cloud Platform Service. She led several automation implementations and PaaS capabilities to operate efficiently while sustaining the growth of the platform. In her current role, her core focus is delivering highly available, scalable, robust messaging platforms enabling PaaS capability and providing a seamless experience for Dell application teams.

Q3 2023: New and Updated Terraform Providers for Dell Infrastructure

Mon, 02 Oct 2023 12:49:02 -0000

We just concluded three quarters of Terraform provider development for Dell infrastructure, and we have some exciting updates to existing providers as well as two brand new providers for PowerScale and PowerEdge node (Redfish-interface) workflows! You can check out the first two releases of Terraform providers here: Q1-2023 and Q2-2023.

We are excited to announce the following new features for the Terraform integrations for Dell infrastructure:

- NEW provider! v1.0 of the provider for PowerScale

- v1.2 of the provider for PowerFlex

- v1.0 of the provider for PowerMax

- NEW provider! v1.0 of the provider for Redfish

- v1.1 of the provider for OME

Terraform Provider for PowerScale v1.0

The first version of the PowerScale provider has a lot of net new capabilities in the form of new resources and data sources. Together with a set of examples and utilities for AWS deployment, there is enough great material for its own blog post. Please see the post "Introducing Terraform Provider for Dell PowerScale" for all the details.

Terraform Provider for PowerFlex v1.2: it’s all about day-1 deployment

Day-1 deployment refers to the initial provisioning and configuration of hardware and software resources before any production workloads are deployed. A successful Day-1 deployment sets the foundation for the entire infrastructure's performance, scalability, and reliability. However, Day-1 deployment can be complex and time-consuming, often involving manual tasks, potential errors, and delays. This is where automation and the Dell PowerFlex Terraform Provider come into play.

Dell PowerFlex is the software-defined leader of the storage industry, providing the foundational technology of Dell’s multicloud infrastructure as well as APEX Cloud Platforms variants for OpenShift and Azure. PowerFlex was the first platform in Dell’s ISG portfolio to have a Terraform provider. In the latest v1.2 release, the provider leaps forward in day-1 deployment operations of a PowerFlex cluster, now providing:

- New resource and data source for Cluster

- New resource and data source for MDM Cluster

- New resource and data source for User Management

- New data source for vTree (PowerFlex Volume Tree)

Now we’ll get into the details pertaining to these new features.

New resource and data source for Cluster

The cluster resource and data source are at the heart of day-1 deployment as well as ongoing cluster expansion and management. The cluster resource can be used to deploy or destroy 3- or 5-node clusters. Please refer to the more detailed PowerFlex deployment guide here. The resource deploys all the foundational components of the PowerFlex architecture:

- Storage Data Client (SDC) -- consumes storage from the PowerFlex appliance

- Storage Data Server (SDS) -- contributes node storage to PowerFlex appliance

- Metadata Manager (MDM) -- manages the storage blocks and tracks data location across the system

- Storage Data Replication (SDR) -- enables native asynchronous replication on PowerFlex nodes

Following are the key elements of this resource:

- cluster for Cluster Installation Details

- lia_password for LIA Password

- mdm_password for MDM Password

- allow_non_secure_communication_with_lia to Allow Non-Secure Communication With LIA

- allow_non_secure_communication_with_mdm to Allow Non-Secure Communication With MDM

- disable_non_mgmt_components_auth to Disable Non-Mgmt Components Auth

- storage_pools for Storage Pool Details

- mdm_list for Cluster MDM Details

- protection_domains for Cluster Protection Domain Details

- sdc_list for Cluster SDC Details

- sdr_list for Cluster SDR Details

- sds_list for Cluster SDS Details

You can destroy a cluster but cannot update it. You can also import an existing cluster using the following command:

terraform import "powerflex_cluster.resource_block_name" "MDM_IP,MDM_Password,LIA_Password"

You can find an example of a complete cluster resource definition here.

New resource and data source for MDM Setup

Out of the core architecture components of PowerFlex, we already have resources for SDC and SDS. The MDM resource is for the ongoing management of the MDM cluster and has the following key parameters for the Primary, Secondary, Tie-breaker, and Standby nodes:

- Node ID

- Node name

- Node port

- IPs of the MDM type

- The management IPs for the MDM node type

- The role of the node. While the Standby MDM is optional, it does require the role parameter to be set to one of [‘Manager’, ‘TieBreaker’]

You can find multiple examples of using the MDM cluster resource here.

New resource and data source for User Management

With the User resource, you can perform all Create, Read, Update, and Delete (CRUD) operations as well as import existing users that are part of a PowerFlex cluster.

To import users, you can use any one of the following import formats:

terraform import powerflex_user.resource_block_name "<id>"

or

terraform import powerflex_user.resource_block_name "id:<id>"

or by username

terraform import powerflex_user.resource_block_name "name:<user_name>"

New data source for vTree (PowerFlex Volume Tree)

Wouldn’t it be great to get all the storage details in one shot? The vTree data source is a comprehensive collection of the required storage volumes and their respective snapshot trees. It can be queried using an array of volume IDs, volume names, or the vTree IDs themselves. The data source returns vTree migration information as well.

You can find examples of specifying the query details for vTree data source here.

Terraform Provider for PowerMax v1.0