Dell Container Storage Modules 1.5 Release

Thu, 12 Jan 2023 19:27:23 -0000


Made available on December 20th, 2022, the 1.5 release of our flagship cloud-native storage management products, Dell CSI Drivers and Dell Container Storage Modules (CSM), is here!

See the official changelog in the CHANGELOG directory of the CSM repository.

First, this release extends support for Red Hat OpenShift 4.11 and Kubernetes 1.25 to every CSI Driver and Container Storage Module.

Avid customers may recall that the previous CSM release (1.4) introduced a few new additions to the portfolio in tech preview. Primarily:

- CSM Application Mobility: Enables the movement of Kubernetes resources and data from one cluster to another, regardless of the source and destination (on-prem, co-location, cloud) or the type of backend storage (Dell or non-Dell)

- CSM Secure: Allows for on-the-fly encryption of PV data

- CSM Operator: Manages CSI and CSM as a single stack

Building on these three new modules, Dell Technologies is adding deeper capabilities and major improvements as part of today’s 1.5 release for CSM, including:

- CSM Application Mobility: Users can now schedule backups

- CSM Secure: Users can now “rekey” an encrypted PV

- CSM Operator: Support added for Dell’s PowerFlex CSI Driver, the Authorization Proxy Server, and the CSM Observability module for Dell PowerFlex and Dell PowerScale

For the platform updates included in today’s 1.5 release, the major new features are:

- It is now possible to set the Quality of Service of a Dell PowerFlex persistent volume. Two new parameters can be set in the StorageClass (bandwidthLimitInKbps and iopsLimit) to limit the consumption of a volume. Watch this short video to learn how it works.

- For Dell PowerScale, when a Kubernetes node is decommissioned from the cluster, the NFS export created by the driver will “Ignore the Unresolvable Hosts” and clean them later.

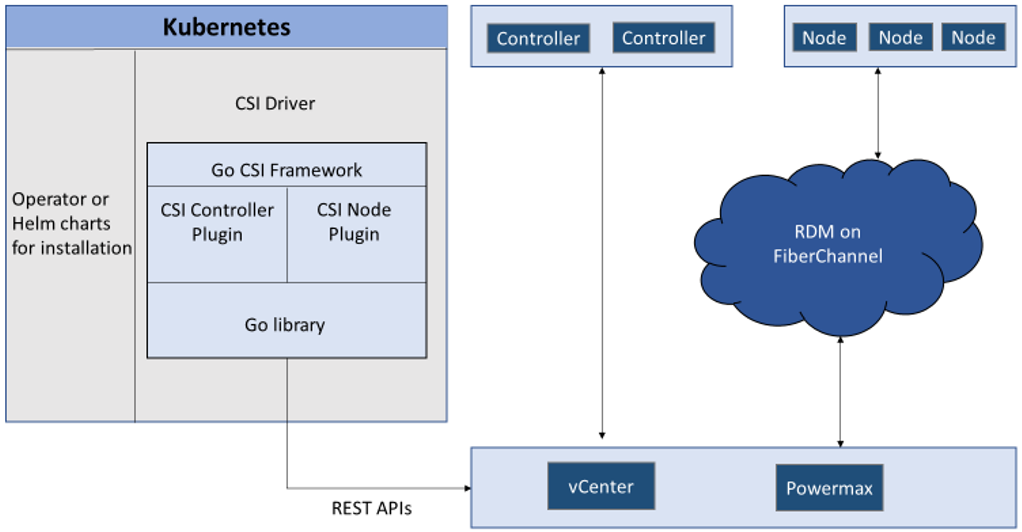

- Last but not least, when you have a Kubernetes cluster that runs on top of virtual machines backed by VMware, the CSI driver can mount Fibre Channel attached LUNs.

This feature is named “Auto RDM over FC” in the CSI/CSM documentation.

The concept is that the CSI driver connects to both the Unisphere and vSphere APIs to create the respective objects.

When deployed with “Auto-RDM” the driver can only function in that mode. It is not possible to combine iSCSI and FC access within the same driver installation.

The usual limitations of RDM also apply. You can learn more about them at RDM Considerations and Limitations on the VMware website.
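To make the PowerFlex Quality of Service parameters mentioned above more concrete, here is a minimal sketch of a StorageClass that sets them. Only bandwidthLimitInKbps and iopsLimit come from this post; the class name, the storage pool, the system ID, and the limit values are illustrative placeholders, and the provisioner name assumes a default driver installation. Check the driver documentation for the accepted ranges before using it.

```yaml
# Illustrative StorageClass for a QoS-limited PowerFlex volume.
# Names and values below are placeholders, not recommendations.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: powerflex-qos                   # hypothetical name
provisioner: csi-vxflexos.dellemc.com   # assumes the default driver name
reclaimPolicy: Delete
allowVolumeExpansion: true
volumeBindingMode: WaitForFirstConsumer
parameters:
  storagepool: "<storage-pool-name>"    # placeholder: your PowerFlex storage pool
  systemID: "<powerflex-system-id>"     # placeholder: your PowerFlex system ID
  # StorageClass parameters are always strings, hence the quotes.
  bandwidthLimitInKbps: "10240"         # example bandwidth cap for each volume
  iopsLimit: "1000"                     # example IOPS cap for each volume
```

Any PersistentVolumeClaim that references this class would then get a volume created with those limits.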

That’s all for CSM 1.5! Feel free to share feedback or send questions to the Dell team on Slack: https://dell-csm.slack.com.

Author: Florian Coulombel

Related Blog Posts

Use Go Debugger’s Delve with Kubernetes and CSI PowerFlex

Wed, 15 Mar 2023 14:41:14 -0000


Some time ago, I faced a bug where it was important to understand the precise workflow.

One of the beauties of open source is that the user can also take the pilot seat!

In this post, we will see how to compile the Dell CSI driver for PowerFlex with a debugger, configure the driver to allow remote debugging, and attach an IDE.

Compilation

Base image

First, it is important to know that Dell and Red Hat are partners, and all CSI/CSM containers are certified by Red Hat.

This comes with a couple of constraints, one being that all containers use the Red Hat UBI Minimal image as a base image and, to be certified, extra packages must come from a Red Hat official repo.

CSI PowerFlex needs the e4fsprogs package to format file systems in ext4, and that package is missing from the default UBI repo. To install it, you have these options:

- If you build the image from a registered and subscribed RHEL host, the repos of the server are automatically accessible from the UBI image. This only works with podman build.

- If you have a Red Hat Satellite subscription, you can update the Dockerfile to point to that repo.

- You can use a third-party repository.

- You can go the old way and compile the package yourself (the source of that package is in the UBI source-code repo).

Here we’ll use an Oracle Linux mirror, which allows us to access binary-compatible packages without the need for registration or payment of a Satellite subscription.

The Oracle Linux 8 repo is:

[oracle-linux-8-baseos]
name=Oracle Linux 8 - BaseOS
baseurl=http://yum.oracle.com/repo/OracleLinux/OL8/baseos/latest/x86_64
gpgcheck = 0
enabled = 1

And we add it to the final image in the Dockerfile with a COPY directive:

# Stage to build the driver image
FROM $BASEIMAGE@${DIGEST} AS final
# install necessary packages
# alphabetical order for easier maintenance
COPY ol-8-base.repo /etc/yum.repos.d/
RUN microdnf update -y && \
...

Delve

There are several debugger options available for Go. You can use the venerable GDB, a native solution like Delve, or an integrated debugger in your favorite IDE.

For our purposes, we prefer to use Delve because it allows us to connect to a remote Kubernetes cluster.

Our Dockerfile employs a multi-stage build approach. The first stage, named builder, builds the driver from the Golang image; we can add Delve to it with the directive:

RUN go install github.com/go-delve/delve/cmd/dlv@latest

And then compile the driver.

On the final image that is our driver, we add the binary as follows:

# copy in the driver
COPY --from=builder /go/src/csi-vxflexos /
COPY --from=builder /go/bin/dlv /

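On Go toolchains older than 1.16, which do not accept a version suffix in go install, the build stage can download Delve with go get instead:

RUN go get github.com/go-delve/delve/cmd/dlv

The COPY directive for the dlv binary in the final image stays the same:

COPY --from=builder /go/bin/dlv /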

To achieve better results with the debugger, it is important to disable optimizations when compiling the code.

This is done in the Makefile with:

CGO_ENABLED=0 GOOS=linux GO111MODULE=on go build -gcflags "all=-N -l"

After rebuilding the image with make docker and pushing it to your registry, you need to expose the Delve port on the driver container. To do so, add the following lines to the driver container of the Controller Deployment in your Helm chart.

ports:
  - containerPort: 40000

Alternatively, you can use the kubectl edit -n powerflex deployment command to modify the Kubernetes deployment directly.
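The post does not show how Delve itself is started inside the container, so the following is only a sketch of one common way to do it, not necessarily what the linked branch does. It assumes the driver binary sits at /csi-vxflexos and Delve at /dlv (as in the COPY directives above); the container name and image reference are hypothetical, and the driver's own arguments are left out.

```yaml
# Illustrative fragment of the controller Deployment pod spec.
# It starts the driver under a headless Delve server on port 40000.
containers:
  - name: driver                                      # hypothetical container name
    image: registry.example.com/csi-vxflexos:delve    # hypothetical image reference
    command: ["/dlv"]
    args:
      - exec
      - /csi-vxflexos          # assumed location of the driver binary
      - --headless             # run Delve as a server, without a terminal UI
      - --listen=:40000        # same port as the containerPort below
      - --api-version=2
      - --accept-multiclient   # allow the IDE to disconnect and reconnect
      - --continue             # start the driver right away instead of waiting for a client
      # The driver's usual arguments would follow a "--" separator here.
    ports:
      - containerPort: 40000
```

Without --continue, Delve waits for a debugger to attach before starting the driver, which can be useful when the code of interest runs during startup.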

Usage

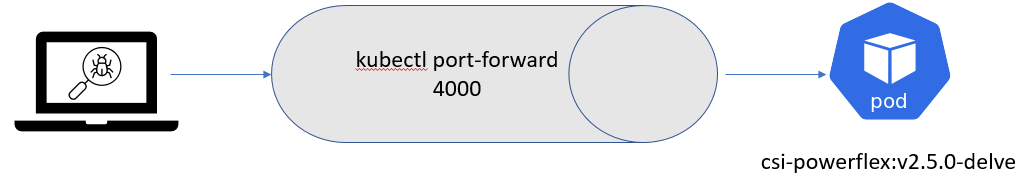

Assuming that the build has been completed successfully and the driver is deployed on the cluster, we can expose the debugger socket locally by running the following command:

kubectl port-forward -n powerflex pod/csi-powerflex-controller-uid 40000:40000

Next, we can open the project in our favorite IDE and ensure that we are on the same branch that was used to build the driver.

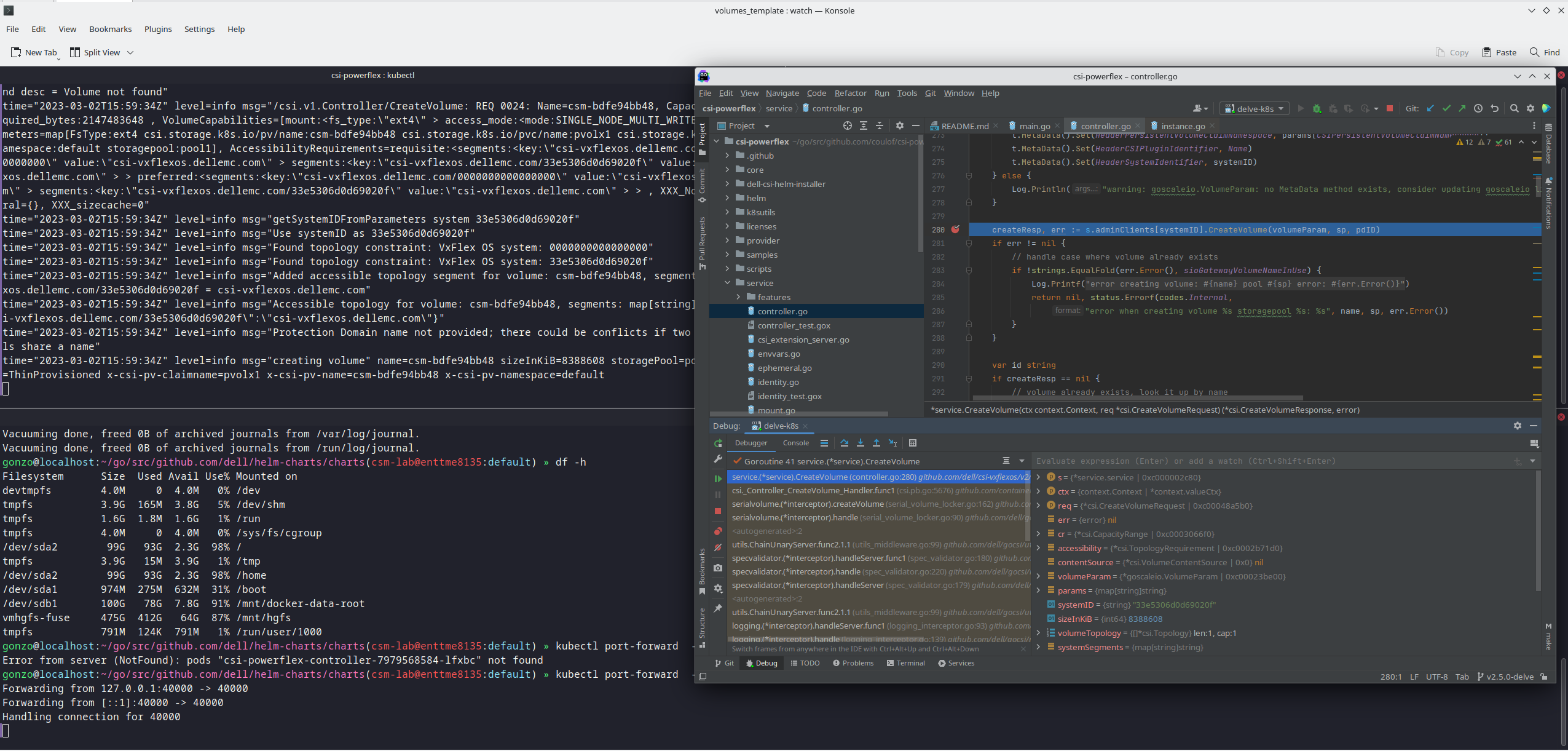

In the following screenshot I used GoLand, but VS Code can do remote debugging too.

We can now connect the IDE to that forwarded socket and run the debugger live:

And here is the result of a breakpoint on the CreateVolume call:

The full code is here: https://github.com/dell/csi-powerflex/compare/main...coulof:csi-powerflex:v2.5.0-delve.

If you liked this information and need more deep-dive details on Dell CSI and CSM, feel free to reach out at https://dell-iac.slack.com.

Author: Florian Coulombel

Exploring the Latest Advancements in Dell ObjectScale 1.2.0

Wed, 28 Jun 2023 13:22:49 -0000


Dell ObjectScale is an object storage solution that represents the evolution of Dell Technologies' commitment to delivering enterprise-class, reliable, and high-performance storage. With its software-defined and containerized architecture, ObjectScale operates seamlessly within Kubernetes, empowering organizations to become more agile and responsive to ever-changing business requirements.

ObjectScale adheres to a set of core design principles that define its exceptional capabilities:

- Global namespace with strong consistency

- Scale-out capabilities

- Secure multitenancy

- Optimum performance for small, medium, and large objects

In this blog post, we delve into the exciting new features introduced in the Dell ObjectScale 1.2.0 release.

Feature enhancements

This new code release introduces a range of feature enhancements, including:

- Simpler deployment options and enhanced user experience: The latest release of ObjectScale brings forth a range of improvements to simplify the deployment process and enhance user experience. Key updates include:

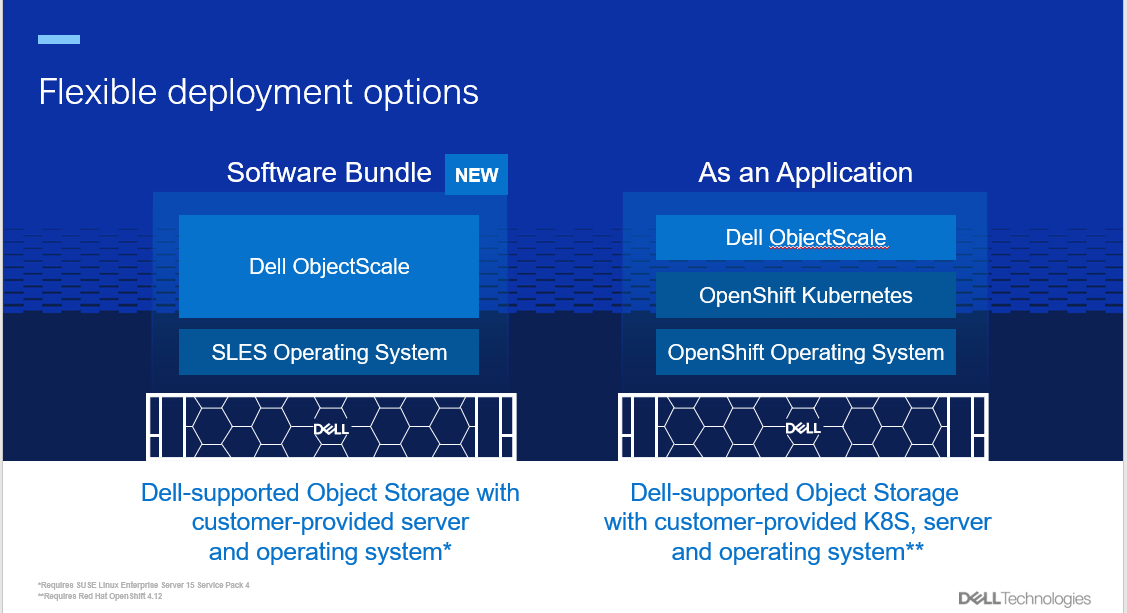

- New deployment option: A faster deployment option, known as the “Software Bundle,” enables the installation of ObjectScale and Kubernetes on a cluster of nodes with SUSE Linux Enterprise Server 15 SP4. This option streamlines the setup process and accelerates the journey toward efficient object storage.

- Support for Red Hat OpenShift Container Platform: ObjectScale continues to offer the "As an Application" deployment option, providing seamless integration with Red Hat OpenShift Container Platform 4.12. This compatibility ensures that users can leverage the power of ObjectScale within their existing OpenShift environments.

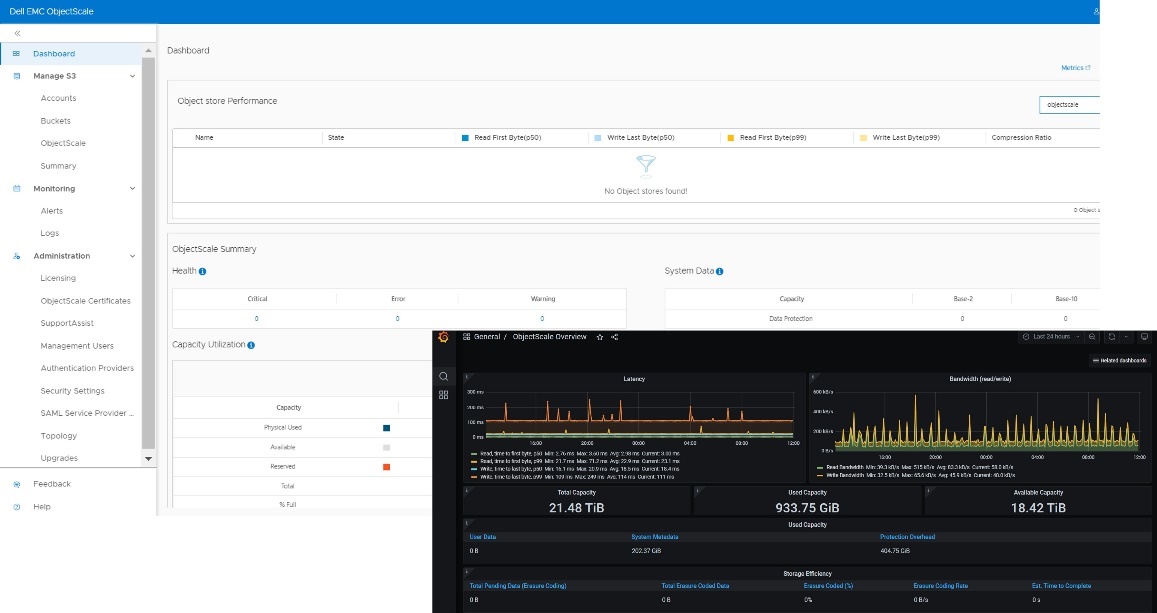

- Streamlined user interface: The ObjectScale Portal boasts a redesigned user interface, offering an intuitive and streamlined workflow for bucket provisioning and management. This enhancement simplifies object storage administration, making it more accessible to users.

- Enhanced performance and efficiency: ObjectScale 1.2.0 introduces several notable performance improvements to optimize efficiency across various use cases:

  - Increased write performance: Users can now enjoy write performance of up to 2 GB/s per node, which enables faster data ingestion and storage.

  - Support for larger objects: ObjectScale now supports objects as large as 30 TB, including replication of these huge objects. This six-fold increase in the maximum object size surpasses the limits set by Amazon S3, making ObjectScale an ideal choice for large-scale projects such as HPC, analytics, AI, and their associated backup requirements.

  - Flexible erasure coding: The latest release includes more flexible erasure coding options, including support for a minimum of four nodes with a 12+4 EC policy. This enables greater data protection and fault tolerance.

  - Logical capacity usage reporting: ObjectScale now provides reporting of logical capacity usage for Dell APEX, facilitating better resource management and planning.

- Expanded Zero Trust features: ObjectScale 1.2.0 strengthens ObjectScale security and access control capabilities. Highlights include:

  - Active Directory and LDAP support: System users can now benefit from Active Directory and LDAP support, which enables seamless integration with existing user management systems. This simplifies user administration and enhances security.

  - Comprehensive IAM model: ObjectScale introduces an improved Identity and Access Management (IAM) model, accommodating local or management users, external authentication providers, configurable login workflows, user persona roles, password policies, and account lockout configurations. These advancements offer enhanced flexibility and control over access management.

- Improved reliability and supportability: The latest ObjectScale release focuses on enhancing reliability and supportability:

  - Enhanced maintenance mode handling: ObjectScale now allows up to two nodes to be simultaneously placed in maintenance mode, simplifying maintenance operations and minimizing downtime.

  - Remote Connect Assist: The integration of Pluggable Authentication Module (PAM) enables Remote Connect Assist, empowering remote support technicians to securely access the system using remote secure credentials tokens. This facilitates efficient troubleshooting and support.

Conclusion

Dell ObjectScale 1.2.0 introduces improved deployment options and feature enhancements, solidifying its position as a leading software-defined, containerized object storage platform. For more in-depth information, we encourage you to explore the Dell ObjectScale: Overview and Architecture white paper and the ObjectScale and ECS Info Hub. Embrace these advancements and unlock the full potential of Dell ObjectScale for your organization's evolving storage needs.

Author: Cris Banson