Assets

APEX Navigator – General Availability

Fri, 01 Dec 2023 15:17:18 -0000

|Read Time: 0 minutes

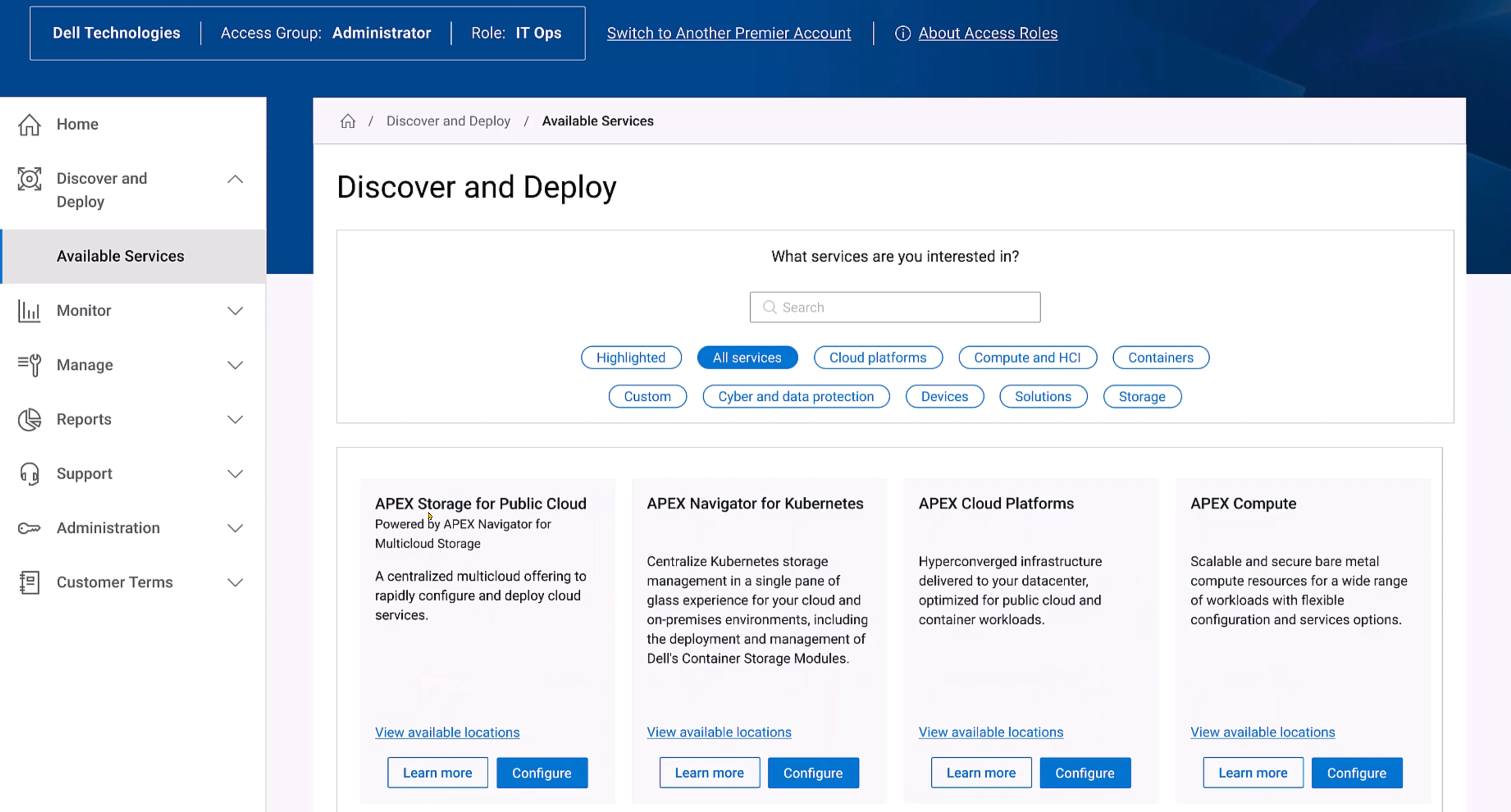

We are finally at a point in the APEX Navigator journey that we are ready for prime-time general availability! Our highly anticipated release of APEX Navigator is now open and ready for all.

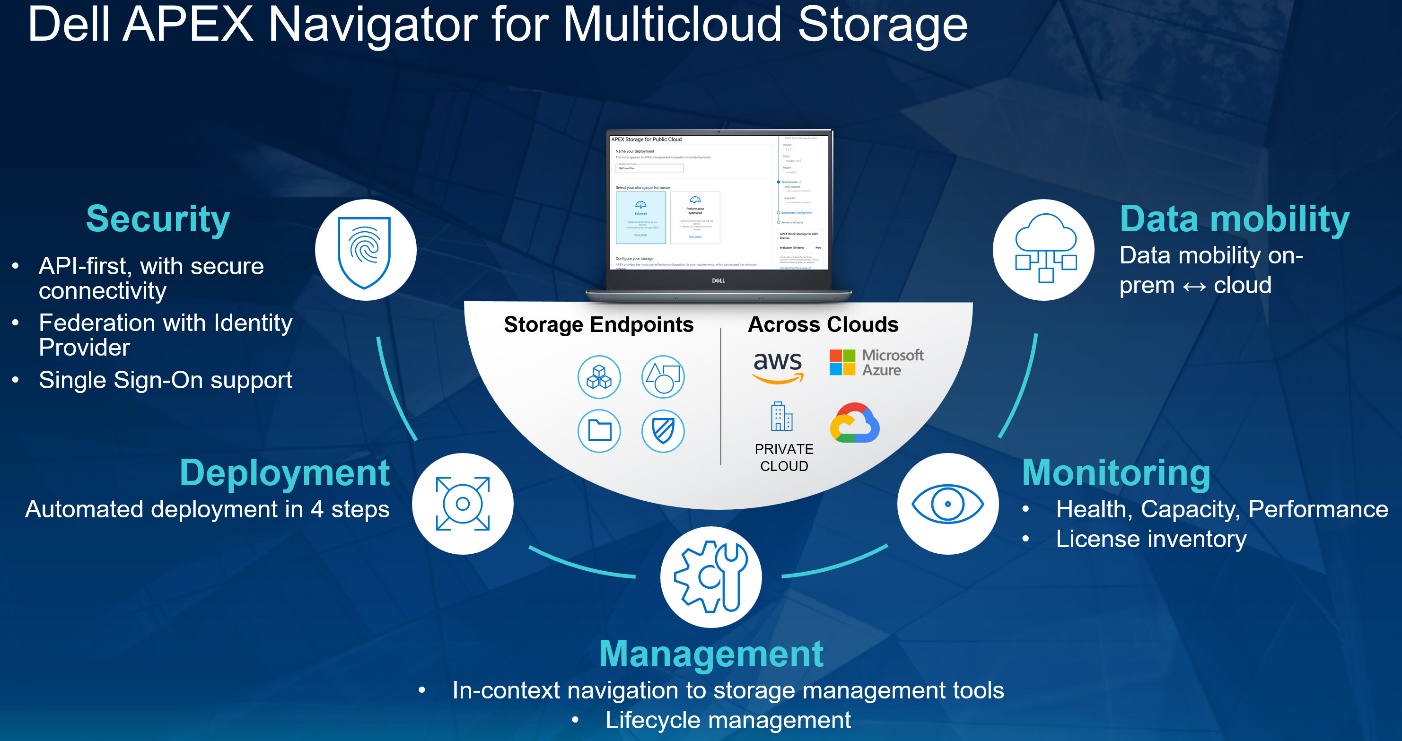

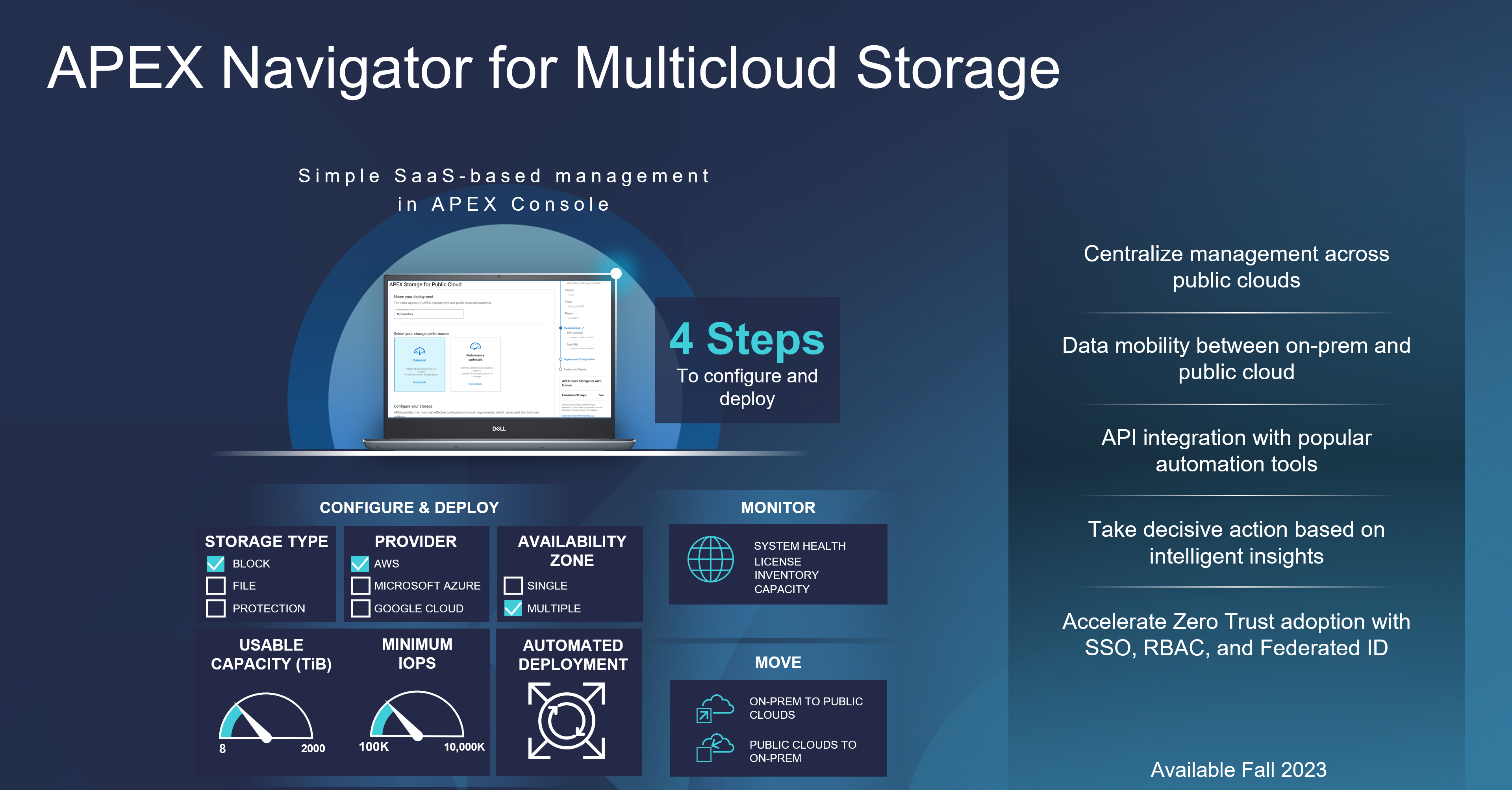

Ready to fully embrace multicloud storage ecosystems? Then this is the release for you and your company. Enabling a free 90-day evaluation license that applies to Dell licensing only, as well as a common storage platform for block and file (in the near future) across on-premises and major hyperscalers, Dell APEX Navigator is here to bring you multicloud storage ecosystems at their most elegant. The centralized SaaS experience will synthesize your digital transformation across all clouds and all infrastructure services.

Vision, tooling, and commonality are at the core of Dell APEX Navigator, and our engineering teams have been exceptionally busy thinking through the permutations and experiences that a multicloud offering should deliver. With great success, I might add!

The Dell APEX block storage that will be deployed as part of the release has already proven to be the best of its kind in the industry, and our APEX Navigator portal provides the deployment orchestration, day two monitoring, and decommissioning that glues the entire ecosystem together.

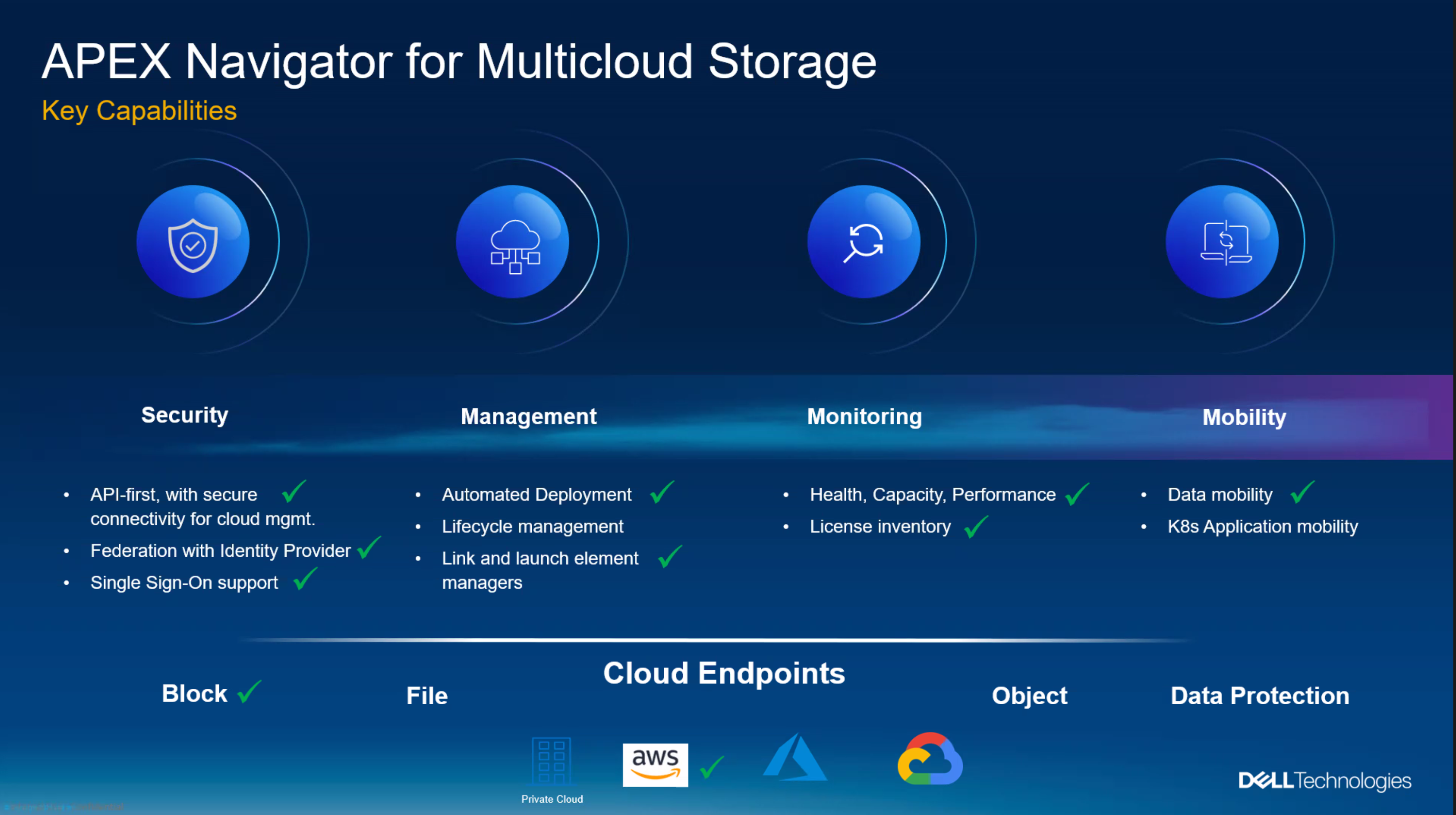

With APEX Navigator for Multicloud Storage, you can:

- Centralize Dell Storage software deployment and lifecycle management across multiple public clouds, including:

- AWS available at release

- Azure to follow soon

- Drive data mobility between on-prem and multiple public clouds to meet shifting cloud strategies

- Integrate with popular automation tools such as Ansible and Terraform as part of our API-first architecture, so you can:

- Accelerate productivity

- Embrace Dell storage software in the public cloud as part of your broader IT strategy

- Take decisive action using intelligent insights and comprehensive monitoring across your entire Dell storage estate with CloudIQ integration to track:

- License inventory

- System health

- Performance

- Capacity

- Accelerate Zero Trust adoption with role-based access control, single sign-on, and federated identity, all incorporating Zero Trust principles for complete control of data

If multicloud use cases are currently on your strategy to-do list (and they should be), the time is now to embrace the innovation Dell is bringing to the space. And we’re just getting warmed up, with our evolution only accelerating.

Don’t miss the APEX Navigator video series to dive deeper into the exciting trajectory we’re on. More video demos are being added frequently, so stay tuned for more!

Resources

Check out the Dell APEX Navigator page for more information.

The following APEX demos and documents provide additional information:

- Full APEX demo playlist

- APEX Block Storage for AWS

- APEX Block Storage for Azure

- Performance Results for Dell APEX Block Storage for AWS

- Performance Results for Dell APEX Block Storage for Azure

Author: Robert F. Sonders, Technical Staff – Engineering Technologist, Multicloud Storage Software

Twitter: @RobertSonders

Blog: https://www.dell.com/en-us/blog/authors/robert-f-sonders/

Location: Scottsdale AZ, USA (GMT-7)

Multicloud Acceleration: Why Dell Now?

Tue, 25 Jul 2023 22:23:28 -0000

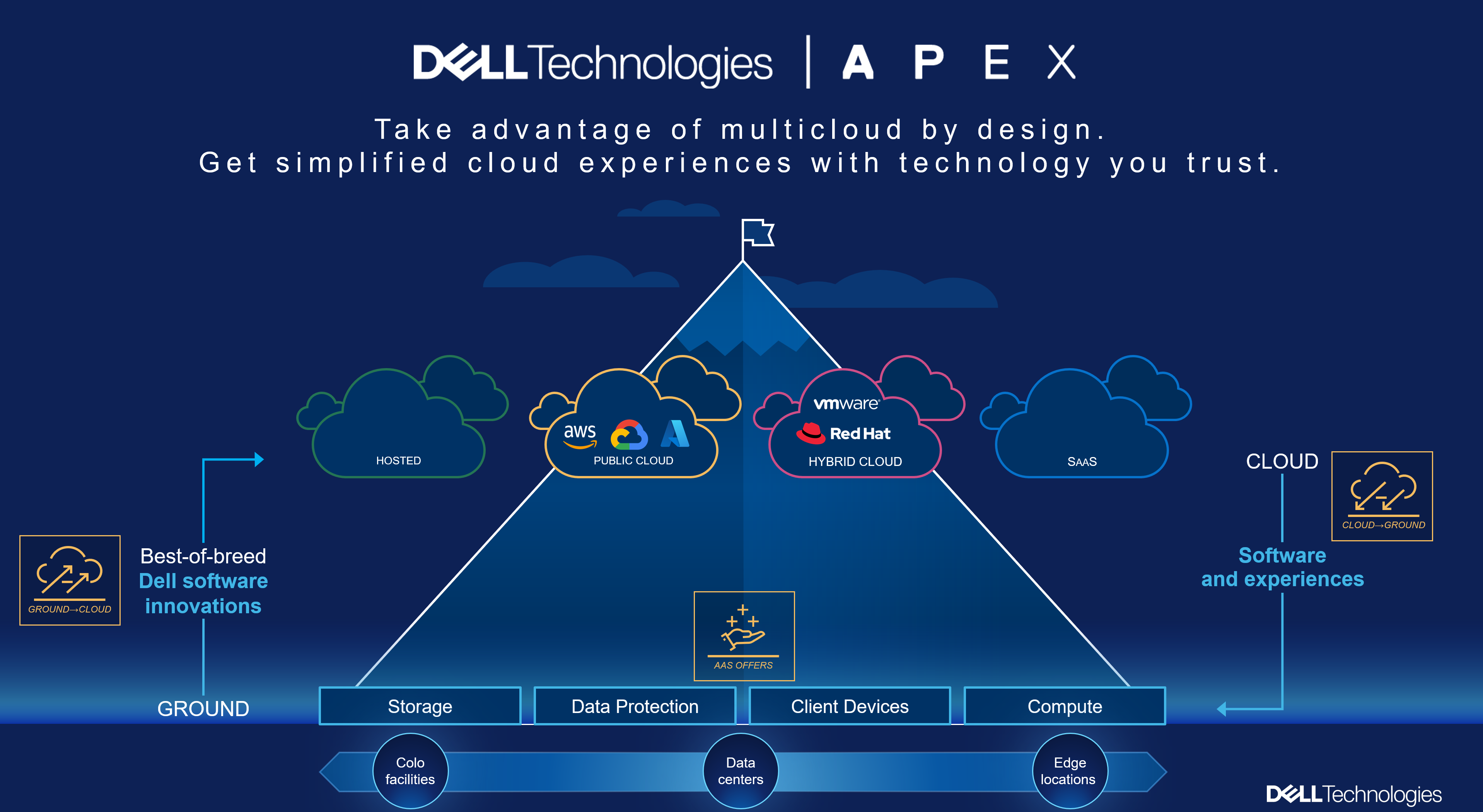

It seems like we have a nice taste of Dell APEX sprinkled on everything these days. We are continuing to unpack all the problems that Dell APEX can solve. Dell is right at the front, helping our clients with the multicloud adoption initiatives and efficiencies they are driving towards.

I am currently authoring a new paper on Dell APEX Block Storage that focuses on both the business case and the technical case. As I was writing, I included a few interesting nuggets on how Dell APEX Block Storage can accelerate multicloud SLA considerations. Multicloud acceleration focuses on improving performance, reducing latency, and optimizing resource utilization in a multicloud environment, by enhancing what the hyperscalers have already built. So, why Dell now?

I was recently having a conversation with one of Dell’s larger clients and they asked, “Why is Dell getting into the software realm and competing with the hyperscalers? Isn’t Dell too late? Why Dell, now?”

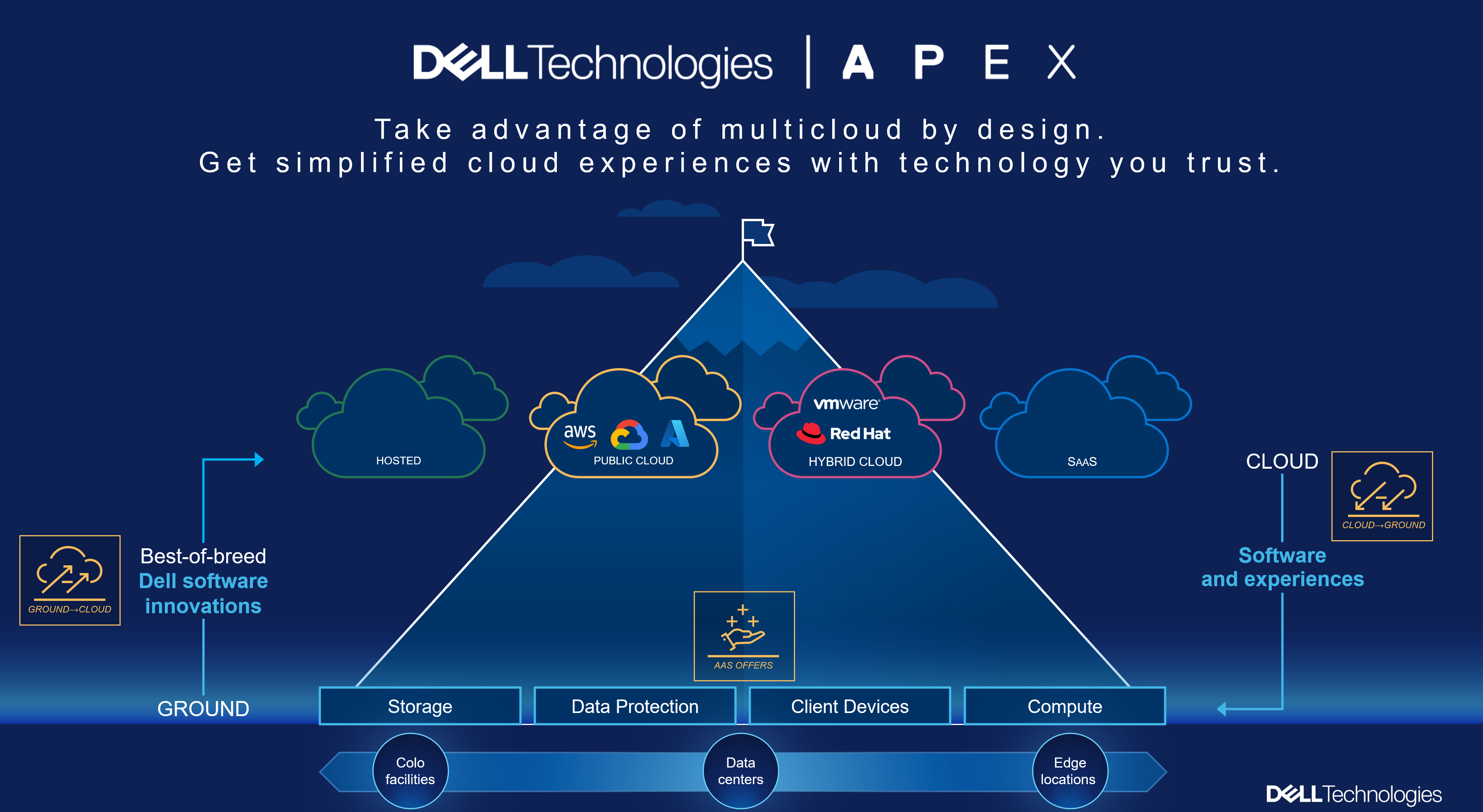

My response was this: With Dell APEX, we are arriving at the perfect time in history. We needed the hyperscalers to build out their extensive ecosystem topologies, PaaS and SaaS services and all the other good guy check boxes that create a gravitational pull to “the cloud”. Now, Dell Technologies matches the customer application needs with the correct solutions, in the correct location.

Dell is now uniquely positioned to offer our storage services (block, file, and backup) with the same platform admin skill sets, from ground to cloud, and cloud to cloud. We can even solve for cloud to ground with our APEX Cloud Platforms for Azure, OpenShift, and VMware. With Dell and any major cloud vendor, we truly have and deliver the “AND” story.

So far, I have not seen the SLA angle addressed out in the wild, so let’s tackle it here. This is a super interesting opportunity for Dell APEX Block storage, and file, deployed in any public cloud to address SLAs (Service Level Agreements) that are lacking in public environments. As we all know, the cloud vendors are pretty much around three to four nines. Mostly three. Yes, you can potentially achieve additional “nines”, but this comes at a substantial cost.

Another awesome opportunity would be the ability to build in the SLA resiliency in “said cloud” with Dell APEX Block storage, without completely refactoring to cloud native. Many apps simply cannot get there because they’re constrained by legacy code and bloated data stores. The technical SMEs are required to find a way to lift and shift these legacy workloads to the cloud. These mission critical apps still need mission critical SLAs. With Dell APEX Block storage, these same mission critical legacy apps can now consume Dell APEX Block storage in AWS or Azure and potentially have the five or even six nines of availability. Our offer pools together the lower cost and lower durability volumes and can present up to six nines of SLA. Wow! That is awesome. The best of both worlds! Now, our legacy apps are resilient in the cloud and potentially can consume the additional PaaS and SaaS hyperscaler offerings.
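To put those "nines" into perspective, here is a minimal sketch of the arithmetic behind them. It only converts an availability target into a yearly downtime budget. The function names are my own for illustration, and a 365-day year is assumed.

```python
# Convert a number of "nines" of availability into a yearly downtime budget.
# Assumption: a 365-day year (525,600 minutes).
MINUTES_PER_YEAR = 365 * 24 * 60


def availability_from_nines(nines: int) -> float:
    """Return availability as a fraction, for example 3 nines -> 0.999."""
    return 1.0 - 10.0 ** (-nines)


def downtime_minutes_per_year(nines: int) -> float:
    """Return the allowed downtime in minutes per year for a given number of nines."""
    return MINUTES_PER_YEAR * 10.0 ** (-nines)


if __name__ == "__main__":
    for n in (3, 4, 5, 6):
        print(
            f"{n} nines: {availability_from_nines(n):.4%} available, "
            f"{downtime_minutes_per_year(n):.2f} minutes of downtime per year"
        )
```

Three nines works out to roughly 8.76 hours of downtime a year, while six nines is about half a minute. That gap is why the SLA conversation matters for mission critical legacy apps.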

Dell APEX Block Storage also saves VM storage deployment dollars by thin provisioning the storage that is presented to the VM. We also offer highly effective snapshotting without the snapshot count limitations, when compared with the hyperscalers.

We even have a single cluster combined with multi-availability zone configurations to allow our customers to achieve high operational efficiency at the storage layer, within a cloud environment. Again, a win-win!

All of these factors line up to deliver the most stringent SLAs, in tangible and measurable ways. Combining the best of Dell software defined storage with cost-effective cloud agility is a win for even the most mission critical SLA requirements.

And if there is a need to move back on-premises, we also have that covered. The same software defined storage deployed as APEX Block Storage can also replicate back to on-premises as needed.

Stay tuned for my next white paper to expand on all the use case detail. Time to get back at it!

Resources

For more information, see the following:

- Video 1 APEX Navigator for Multicloud Storage demo

- Video 2 APEX Navigator Dell APEX Block Storage deployment into AWS

- Video 3 APEX Navigator and CloudIQ – Day 2 management and monitoring

- Video 4 APEX Navigator and Data Mobility

- Video APEX Navigator for Kubernetes

- The full APEX demo playlist

- Coming soon: White paper – Dell APEX Block Storage for Multicloud – AWS focus

Author: Robert F. Sonders, Technical Staff - Engineering Technologist, Multicloud Storage Software

Twitter: @RobertSonders

LinkedIn: linkedin.com/in/Robert-f-sonders

Multicloud—All the things!

Thu, 01 Jun 2023 17:12:40 -0000

It has been a few months now since I moved over from pre-sales to a Dell Technical Staff role, supporting all our multicloud storage software offerings here at Dell Technologies. Once again, just like my previous role, this role is very broad and also very deep. Dell APEX has many facets, from both a horizontal view and a deep dive into each offering. I’m now part of the team driving the world’s most comprehensive multicloud portfolio, spanning the data center to the public cloud!

Over the past months, I have realized even more than before how awesome this opportunity is. It’s just like working for a startup, except at a big corporation. The teams are building exactly what our clients have been asking for. I am in the unique position to test, evaluate, document, and evangelize, all while getting the word out through training sessions, white papers, and so on.

Our latest announcement from Dell Tech World 2023 is APEX Navigator, which provides our client teams in the SysOps and DevOps spaces the ability to actually “Multicloud—all the things!” Previously, the reference to “multicloud” sounded great, on paper. Now, Dell has made multicloud a reality. And, as a bonus, Dell has also made it easy, by removing the need for any manual deployment management that could cause “day zero” failures. The same flexibility you have in your own data center can now be achieved in the public cloud with APEX Navigator at the helm.

We now have the SaaS offerings as APEX Navigator for multicloud storage management, which aligns our best of breed, enterprise-class technology with:

- APEX Block Storage for Public Cloud

- PowerFlex software-defined block storage

- APEX File Storage for Public Cloud

- PowerScale (OneFS file system – multi-protocol, NFS, SMB, and my favorite—S3 Object!)

The login experience has also been refactored to be clean and simple. The Dell Premier portal experience will guide our users directly to the full APEX experience, where all things multicloud reside.

We also have, as part of Navigator:

- APEX Navigator for Kubernetes

- Data persistence management along with deployment and monitoring of Dell Container Storage Modules at scale

APEX Storage for Public Cloud is available in AWS today and will be available in Azure in the second half of 2023.

In this video, you can see how simple it is to configure and deploy APEX Navigator, with only four steps and just a few more clicks. This process replaces error-prone time at the keyboard with a fully automated deployment in the public cloud of your choice. Here is what the process looks like in AWS, so none of this work in AWS is needed. It provides a clean interface, with day two CloudIQ monitoring included. CloudIQ, described here, also brings real-time intelligence to your storage endpoints. It offers both predictive and AI anomaly detection to provide XOps engineers and developers quick insight into their multicloud world and allows them to take action where it might be required.

The reality is this: Today’s data center has also been modernized. The initial attraction to public-only was the rich service offerings that existed in cloud-only. Now, with Dell Technologies leading the way, our clients can manage all their distributed data requirements, and all with predictable costs. Ground to cloud and cloud to ground are all part of the “All Things” story.

Our clients who are investing in multicloud are also growing and retaining the top industry engineers. The APEX Navigator portal delivers the rich automation to partner with our client engineers for consistent operations, wherever the data resides. This is a BIG deal!

The right data, placed in the right location, at the right time, for the right consumption service.

We have also announced APEX Navigator for Kubernetes. Simply put, Kubernetes is vast. APEX Navigator for Kubernetes can provide observability across the multicloud, multisite, microservice landscape. Make your DevOps teams happy while protecting and governing these assets.

Why Navigator?

I am asked this question almost daily: Why use Navigator and all the software-defined storage deployment, management, and monitoring? Here are the answers:

- Integrated zero trust security (SSO, RBAC, and Identity Federation)

- Seamless SSO!

- Unique performance and deployment

- Extremely high I/O performance is cheaper with PowerFlex deployed into any public cloud.

- Simple, day zero deployment

- A deployment process that only requires four simple steps.

- 90-day evaluation license! (applies to Dell licensing only)

- Federated storage protection with multi-availability zone resiliency and flexibility

- Protection and scalability reach across availability zones.

- Rebuild times decrease with additional availability zones.

- Data mobility, ground to cloud, cloud to ground

- Meet any shifting cloud strategy

- Crash consistent copies

- Efficient – Changed blocks only snapshot shipping

- API-first integration with many of the most popular automation tools

- Ansible and Terraform – Yes and yes!

- Currently, our customers can create their own artifacts for API integration

- Cost

- Predictable

- Data services that the cloud alone cannot provide

- Better services at a reduced cost

- Thin provisioning

- Multiple and efficient snapshots

- Public cloud PaaS services, with only data attributes you want, consumed in the cloud—a true hybrid model—as shown here

- Rich PaaS feature set reporting capability

- AI/ML model training

- Consuming just what you need without incurring any egress fees; then, rinse and repeat

- If you need to export—we have the proper compression in place to minimize any egress fees

- Mountable snapshots

- Kubernetes namespace data mobility, inclusive of all PV (Persistent Volumes) and PVC (Persistent Volume Claims), with an option to even rename a namespace in flight (think Dev, Test, Stage for micro-services)

- Evolving S3 Object solution sets (to be discussed in a future blog)

- Monitoring all endpoints from a single pane of glass

- Robust monitoring—View of all systems, public or private, to observe and act on the performance, health, inventory, and capacity

- Licensing inventory

- Active AI for anomaly detection

My next white paper will go into the details of each of these answers.

Release timelines

APEX Navigator will be generally available in the United States around the second half of 2023. At the same time, APEX Navigator for Kubernetes will be generally available in North America, France, Germany, and the UK. Additionally, APEX Block Storage for Microsoft Azure will be available through RPQ.

Finally, it is important to mention that not all feature sets previously referenced will be available on the release-to-service dates. The teams are working hard to solve all the complex problems—with great success.

If your organization is interested in participating in our APEX Navigator program, visit here.

Resources

For more information on our APEX Storage for Public Cloud, visit this page.

Video 1 APEX Navigator for Multicloud Storage demo

Video 2 APEX Navigator PowerFlex deployment into AWS

Video 3 APEX Navigator and CloudIQ – Day 2 management and monitoring

Video 4 APEX Navigator and Data Mobility

Video APEX Navigator for Kubernetes

Here is a link to the full APEX demo playlist

Author: Robert F. Sonders

Technical Staff – Engineering Technologist

Multicloud Storage Software

Twitter: @RobertSonders

Location: Scottsdale AZ, USA (GMT-7)

Why Canonicalization Should Be a Core Component of Your SQL Server Modernization (Part 2)

Wed, 12 Apr 2023 16:01:55 -0000

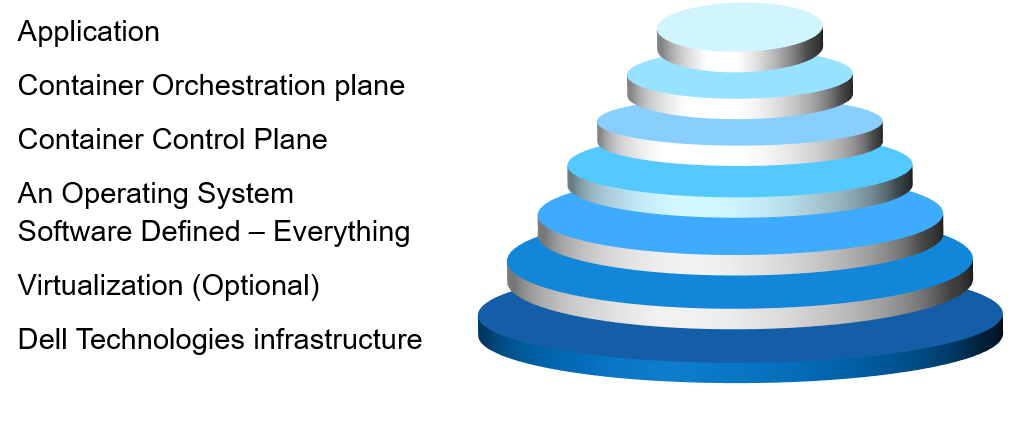

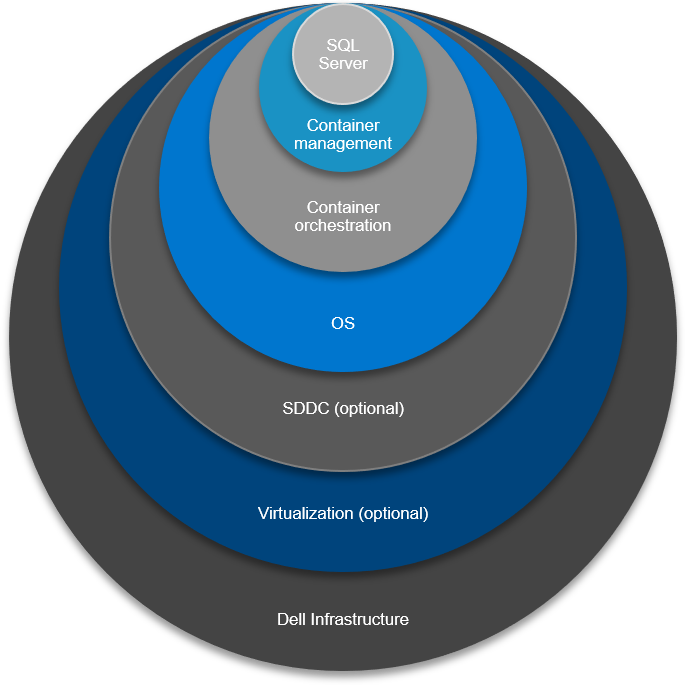

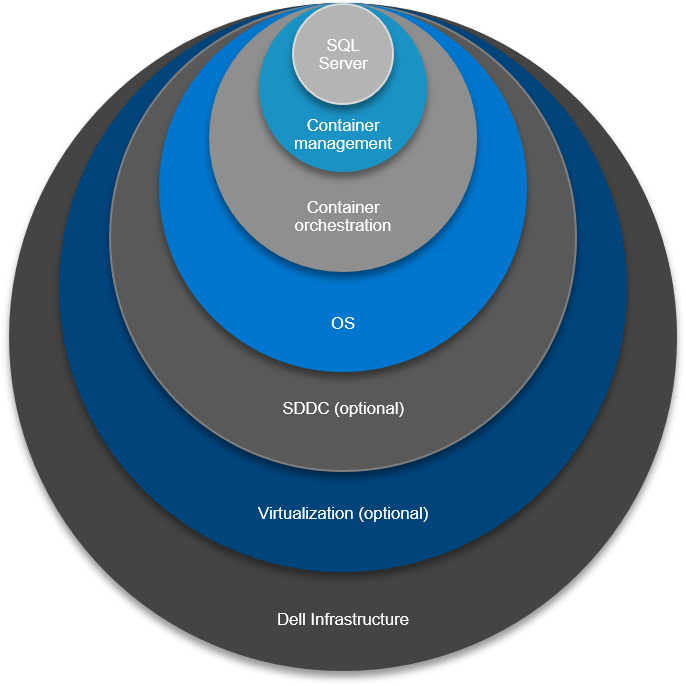

In Part 1 of this blog series, I introduced the Canonical Model, a fairly recent addition to the Services catalog. Canonicalization will become the north star where all newly created work is deployed to and managed, and its simplified approach also allows for vertical integration and solutioning an ecosystem when it comes to the design work of a SQL Server modernization effort. The stack is where the “services” run, starting with bare-metal, all the way to the application, with seven layers up the stack.

In this blog, I’ll dive further into the detail and operational considerations for the 7 layers of the fully supported stack and use by way of example the product that makes my socks roll up and down: a SQL Server Big Data Cluster. The SQL BDC is absolutely not the only “application” your IT team would address. This conversation applies to any “top of stack application” solution. One example is persistent storage for databases running in a container. We need to solution for the very top (SQL Server) and the very bottom (Dell Technologies Infrastructure), and for the many optional permutation layers in between.

First, a Word About Kubernetes

One of my good friends at Microsoft, Buck Woody, never fails to mention a particular truth in his deep-dive training sessions for Kubernetes. He says, “If your storage is not built on a strong foundation, Kubernetes will fall apart.” He’s absolutely correct.

Kubernetes or “K8s” is an open-source container-orchestration system for automating deployment, scaling, and management of containerized applications and is the catalyst in the creation of many new business ventures, startups, and open-source projects. A Kubernetes cluster consists of the components that represent the control plane and includes a set of machines called nodes.

To get a good handle on Kubernetes, give Global Discipline Lead Daniel Murray’s blog a read, “Preparing to Conduct Your Kubernetes Orchestra in Tune with Your Goals.”

The 7 Layers of Integration Up the Stack

Let’s look at the vertical integration one layer at a time. This process and solution conversation is very fluid at the start. Facts, IT desires, best practice considerations, and IT maturity are all currently on the table. For me, at this stage, there is zero product conversation. For my data professionals, this is where we get on a white board (or virtual white board) and answer these questions:

- Any data?

- Anywhere?

- Any way?

Answers here will help drive our layer conversations.

From tin to application, we have:

Layer 1

The foundation of any solid design of the stack starts with Dell Technologies Solutions for SQL Server. Dell Technologies infrastructure is best positioned to drive consistency up and down the stack and it’s supplemented by the company’s subject matter experts who work with you to make optimal decisions concerning compute, storage, and backup.

The requisites and hardware components of Layer 1 are:

- Memory, storage class memory (PMEM), and a consideration for later—maybe a bunch of all-flash storage. Suggested equipment: PowerEdge.

- Storage and CI component. Considerations here include use cases that will drive decisions to be made later within the layers. Encryption and compression in the mix? Repurposing? HA/DR conversations are also potentially spawned here. Suggested hardware: PowerOne, PowerStore, PowerFlex. Other considerations – structured or unstructured? Block? File? Object? Yes to all! Suggested hardware: PowerScale, ECS.

- Hard to argue the huge importance of a solid backup and recovery plan. Suggested hardware: PowerProtect Data Management portfolio.

- Dell Networking. How are we going to “wire up”: Converged or Hyper-converged, or up the stack of virtualization, containerization and orchestration? How are all those aaS’es going to communicate? These questions concern the stack relationship integration and are a key component to get right.

Note: All of Layer 1 should consist of Dell Technologies products with deployment and support services. Full stop.

Layer 2

Now that we’ve laid our foundation from Dell Technologies, we can pivot to other Dell ecosystem solution sets as our journey continues, up the stack. Let’s keep going.

Considerations for Layer 2 are:

- Are we sticking with physical tin (bare-metal)?

- Should we apply a virtualization consolidation factor here? ESXi, Hyper-V, KVM? Virtualization is “optional” at this point. Again, the answers are fluid right now and it’s okay to say, “it depends.” We’ll get there!

- Do we want to move to open-source in terms of a fully supported stack? Do we want the comfort of a supported model? IMO, I like a fully supported model although it comes at a cost. Implementing consolidation economics, however, like I mentioned above with virtualization and containerization, equals doing more with less.

Note: Layer 2 is optional (dependent upon future layers) and would be fully supported by either Dell Technologies, VMware or Microsoft and services provided by Dell Technologies Services or VMware Professional Services.

Layer 3

Choices in Layer 3 help drive decisions, and the comfort level on the maturity curve, all the way back to Layer 1. Additionally, at this juncture, we’ll also start talking about subsequent layers and thinking about the orchestration of Containers with Kubernetes.

Considerations and some of the purpose-built solutions for Layer 3 include:

- Software-defined everything such as Dell Technologies PowerFlex (formerly VxFlex).

- Network and storage such as The Dell Technologies VMware Family – vSAN and the Microsoft Azure Family on-premises servers – Edge, Azure Stack Hub, Azure Stack HCI.

As we walk through the journey to a containerized database world, this level is also where we need to start thinking about the CSI (Container Storage Interface) driver and where it will be supported.

Note: Layer 3 is optional (dependent upon future layers) and would be fully supported by either Dell Technologies, VMware or Microsoft and services provided by Dell Technologies Services or VMware Professional Services.

Layer 4

Ah, we’ve climbed up four rungs on the ladder and arrived at the Operating System where things get really interesting! (Remember the days when OS was tin and an OS?)

Considerations for Layer 4 are:

- Windows Server. Available in a few different forms—Desktop experience, Core, Nano.

- Linux OS. Many choices including RedHat, Ubuntu, SUSE, just to name a few.

Note: Do you want to continue the supported stack path? If so, Microsoft and RedHat are the answers here in terms of where you’ll reach for “phone-a-friend” support.

Option: We could absolutely stop at this point and deploy our application stack. Perfectly fine to do this. It is a proven methodology.

Layer 5

Container technology – the ability to isolate one process from another – dates back to 1979. How is it that I didn’t pick up this technology when I was 9 years old? Now, the age of containers is finally upon us. It cannot be ignored. It should not be ignored. If you have read my previous blogs, especially “The New DBA Role – Time to Get your aaS in Order,” you are already embracing SQL Server on containers. Yes!

Considerations and options for Layer 5, the “Container Control plane” are:

- VMware VCF 4.

- RedHat OpenShift (with our target of a SQL 2019 BDC, we need 4.3+).

- AKS (Azure Kubernetes Service) – on-premises with Azure Stack Hub.

- Vanilla Kubernetes (The original Trunk/Master).

Note: Containers are absolutely optional here. However, certain options in these layers will provide the runway for containers in the future. Virtualization of data and containerization of data can live on the same platform! Even if you are not ready currently, it would be good to set up for success now, so that you are ready to start with containers, within hours, if needed.

Layer 6

The Container Orchestration plane. We all know about Virtualization sprawl. Now, we have container sprawl! Where are all these containers running? In which cloud are they running? On which hypervisor? It’s best to now manage through a single pane of glass, understanding and managing “all the things.”

Considerations for Layer 6 are:

Note: As of this blog’s publish date, Azure Arc is not yet GA; it’s still in preview. No time like the present to start learning Arc’s ins and outs! Sign up for the public preview.

Layer 7

Finally, we have reached the application layer in our SQL Server Modernization. We can now install SQL Server, or any ancillary service offering in the SQL Server ecosystem. But hold on! There are a couple options to consider: Would you like your SQL services to be managed and “Always Current?” For me, the answer would be yes. And remember, we are talking about on-premises data here.

Considerations for Layer 7:

- The application for this conversation is SQL Server 2019.

- The appropriate decisions in building your stack will lead you to Azure Arc Data Services (currently in Preview). SQL Server and Kubernetes are requirements here.

Note: With Dell Technologies solutions, you can deploy at your rate, as long as your infrastructure is solid. Dell Technologies Services has services to move/consolidate and/or upgrade old versions of SQL Server to SQL Server 2019.

The Fully Supported Stack

In terms of considering all the choices and dependencies made at each layer of building and integrating the 7 layers up the stack, there is a fully supported stack available that includes services and products from:

- Dell Technologies

- VMware

- RedHat

- Microsoft

Also, there are absolutely many open-source choices that your teams can make along the way. Perfectly acceptable to do. In the end, it comes down to who wants to support what, and when.

Dell Technologies Is Here to Help You Succeed

There are deep integration points for the fully supported stack. I can speak for all permutations representing the four companies listed above. In my role at Dell Technologies, I engage with senior leadership, product owners, engineers, evangelists, professional services teams, data scientists—you name it. We all collaborate and discuss what is best for you, the client. When you engage with Dell Technologies for the complete solution experience, we have a fierce drive to make sure you are satisfied, both in the near and long term. Find out more about our products and services for Microsoft SQL Server.

I invite you to take a moment to connect with a Dell Technologies Service Expert today and begin moving forward to your fully supported stack / SQL Server Modernization.

Mowing the DbaaS Weeds Without --Force

Tue, 04 Apr 2023 16:51:39 -0000

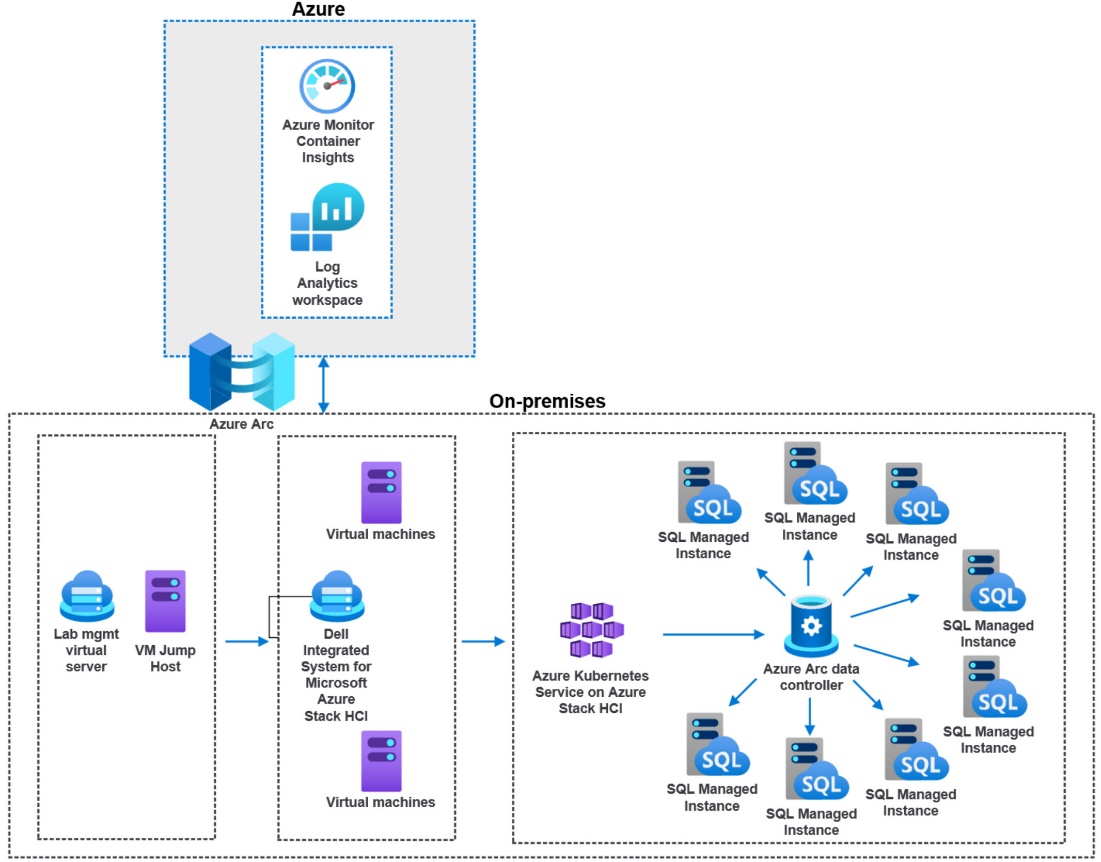

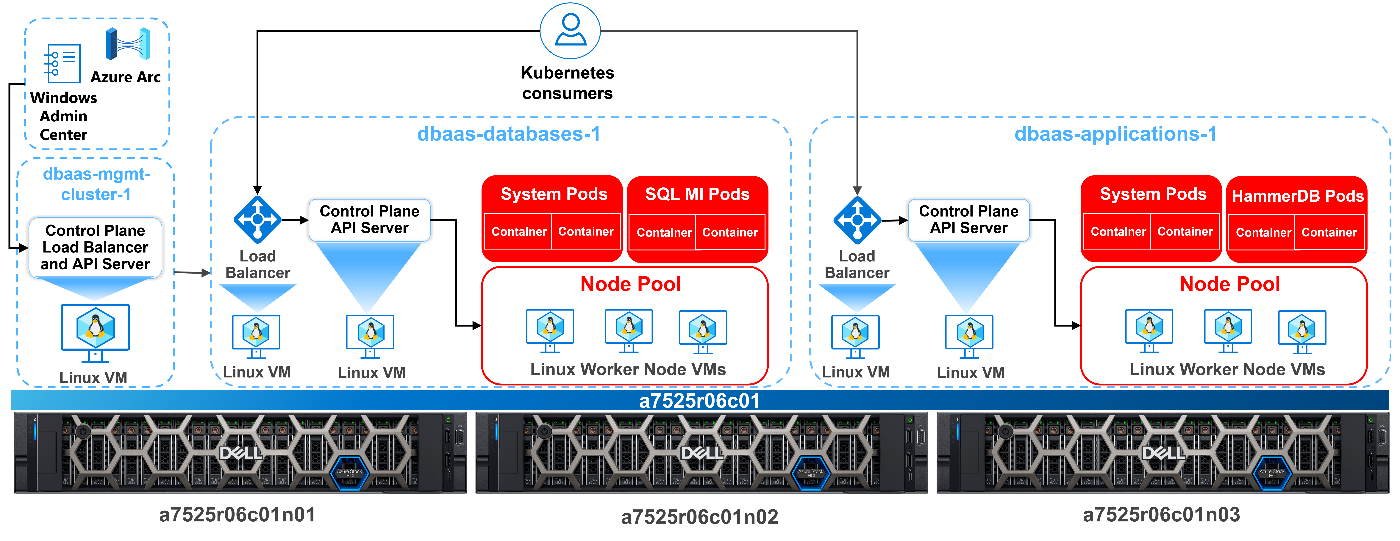

With the release of a recent paper that I had the pleasure to co-author, Building a Hybrid Database-as-a-Service Platform with Azure Stack HCI, I wanted to continue to author some additional interesting perspectives, and dive deep into the DbaaS technical weeds.

That recent paper was a refresh of a previous paper that we wrote roughly 16 months ago. That time frame is an eternity for technology changes. It was time to refresh with tech updates and some lessons learned.

The detail in the paper describes an end-to-end Database as a Service solution with Dell and Microsoft product offerings. The entire SysOps, DevOps, and DataOps teams will appreciate the detail in the paper. DbaaS actually realized.

One topic that was very interesting to me was our analysis and resource tuning of Kubernetes workloads. With K8s (what the cool kids say), we have the option to configure our pods with a very tightly defined resource allocation, both requested and limits, for both CPU and memory.

A little test harness history

First, a little history from previous papers with my V1 test harness. I first started working through an automated test harness constructed by using the HammerDB CLI, T-SQL, PowerShell, and even some batch files. Yeah, batch files… I am that old. The HammerDB side of the harness required a sizable virtual machine with regard to CPU and memory—along with the overhead and maintenance of a full-blown Windows OS, which itself requires a decent amount of resource to properly function. Let’s just say this was not the optimal way to go about end-to-end testing, especially with micro-services as an integral part of the harness.

Our previous test harness architecture is represented by this diagram:

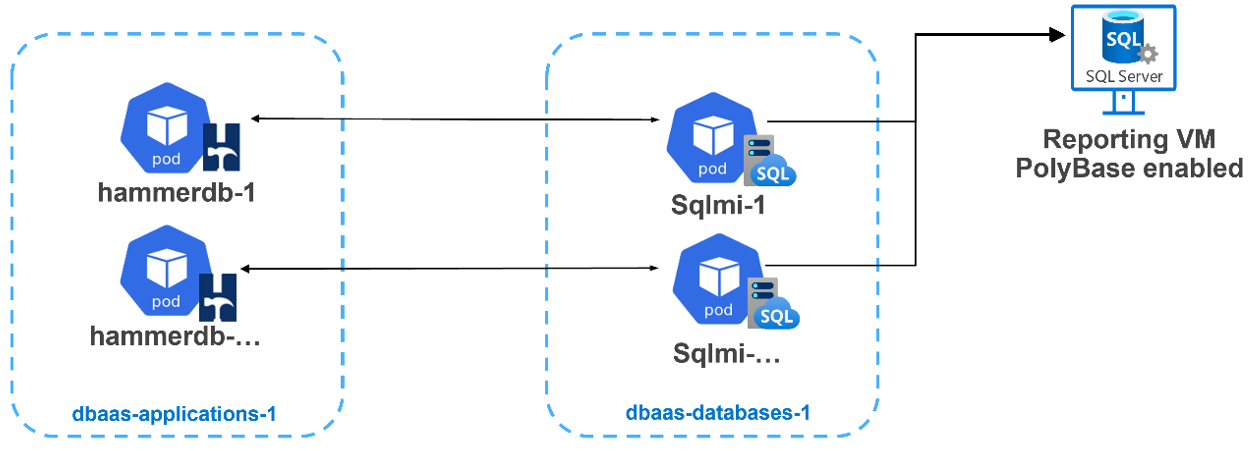

The better answer would be to use micro-services for everything. We were ready and up for the task. This is where I burned some quality cycles with another awesome Dell teammate to move the test harness into a V2 configuration. We decided to use HammerDB within a containerized deployment.

Each HammerDB in a container would map to a separate SQL MI (referenced in the image below). I quickly saw some very timely configuration opportunities where I could dive into resource consolidation. Both the application and the database layers were deployed into their own Kubernetes namespaces. This allowed us a much better way to provide fine-grained resource reporting analysis.

There is a section within our paper regarding the testing we worked through, comparing Kubernetes requests and limits for CPU and memory. For an Azure Arc-enabled SQL managed instance, defining these attributes is required and there are minimums as defined by Microsoft here. But where do you start? How do we size a pod? There are a few pieces of the puzzle to consider:

- What is the resource allocation of each Kubernetes worker node (virtual or physical)— CPU and memory totals?

- How many of these worker nodes exist? Can we scale? Should we use anti-affinity rules? (Note that it is better to let the scheduler sort it out.)

- Kubernetes does have its own overhead. A conservative resource allocation would allow for at least 20 percent overhead for each worker node.
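As a rough way to work through those puzzle pieces, here is a minimal sizing sketch. It holds back the 20 percent overhead mentioned above and then counts how many pods fit on a worker node by their requests. The node and pod sizes in the example are hypothetical numbers of my own, not values from the paper, and the function names are purely illustrative.

```python
import math


def allocatable(total: float, overhead_fraction: float = 0.20) -> float:
    """Capacity left for workloads after reserving Kubernetes overhead."""
    return total * (1.0 - overhead_fraction)


def pods_per_node(
    node_cpu_cores: float,
    node_memory_gib: float,
    pod_cpu_request: float,
    pod_memory_request_gib: float,
    overhead_fraction: float = 0.20,
) -> int:
    """Number of pods that fit on one worker node, based on requests only."""
    cpu_fit = math.floor(allocatable(node_cpu_cores, overhead_fraction) / pod_cpu_request)
    mem_fit = math.floor(allocatable(node_memory_gib, overhead_fraction) / pod_memory_request_gib)
    # The scarcer resource decides how many pods the node can hold.
    return min(cpu_fit, mem_fit)


if __name__ == "__main__":
    # Hypothetical worker node: 16 cores, 64 GiB. Hypothetical pod request: 2 cores, 4 GiB.
    per_node = pods_per_node(16, 64, 2, 4)
    worker_nodes = 3  # hypothetical cluster size
    print(f"{per_node} pods per node, {per_node * worker_nodes} across {worker_nodes} nodes")
```

In this example CPU is the constraint (six pods by CPU, twelve by memory), so a denser deployment would come from lowering the CPU request rather than adding memory.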

For DbaaS CPU, how do we define our requests and limits?

We know a SQL managed instance is billed on CPU resource limits and not CPU resource requests. This consumption billing leaves us with an interesting paradigm. With any modern architecture, we want to maximize our investment with a very dense, but still performant, SQL Server workload environment. We need to strike a balance.

With microservices, we can finally achieve real consolidation for workloads.

Real… Consolidation...

What do we know about the Kubernetes scheduler around CPU?

- A request for a pod is a guaranteed baseline for the pod. When a pod is initially scheduled and deployed, the request attribute is used.

- Setting a CPU request is a solid best practice when using Kubernetes. This setting does help the scheduler allocate pods efficiently.

- The limit is the hard “limit” that the pod can consume. The CPU limit only affects how the spare CPU is distributed. This is good for a dense and highly consolidated SQL MI deployment.

- With Kubernetes, CPU represents compute processing time that is measured in cores. The minimum is 1m. My HammerDB pod YAML references 500m, or half a core.

- With a CPU limit, you are defining a period and a quota.

- CPU is a compressible resource, and it can be stretched. It can also be throttled if processes request too much.
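
The period and quota relationship can be sketched in a few lines of Python. This assumes the common default CFS period of 100 ms (100,000 microseconds); the function names are hypothetical, and your cluster may be configured differently.

```python
def parse_cpu_millicores(quantity: str) -> int:
    """Convert a Kubernetes CPU quantity such as "500m", "2", or "0.5" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(round(float(quantity) * 1000))


def cfs_quota_us(cpu_limit: str, period_us: int = 100_000) -> int:
    """Return the CPU time (microseconds) a container may use per period.

    Assumes the default 100 ms period; once the quota is spent,
    the container is throttled until the next period begins.
    """
    return parse_cpu_millicores(cpu_limit) * period_us // 1000


# The HammerDB pod limit of 500m, or half a core:
half_core_quota = cfs_quota_us("500m")
```

Under that assumption, a 500m limit means the container can run for 50 ms out of every 100 ms period before it is throttled.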

Let go of the over-provisioning demons

It is time to let go of our physical and virtual machine sizing constructs, where most SQL Server deployments are vastly over-provisioned. I have analyzed and recommended better paths forward for over-provisioned machines for years.

- For SQL Server, do we always consume the limit, or max CPU, 100 percent of the time? I doubt it. Our workloads almost always go up and down—consuming CPU cycles, then pulling back and waiting for more work.

- For workload placement, the scheduler—by the transitive property—therein defines our efficiency and consolidation automatically. However, as mentioned, we do need to reference a CPU limit because it is required.

- There is a great deal of Kubernetes CPU sizing guidance that says not to use limits; however, for a database workload, setting a limit is a good thing, not to mention a requirement and a good fundamental database best practice.

- Monitor your workloads with real production-like work to derive the average CPU utilization. If CPU consumption percentages remain low, throttle back the CPU requests and limits.

- Make sure that your requests are accurate for SQL Server. We should not over-provision resources “just because” we may need them.

- Start with half the CPU you had allocated for the same SQL Server running in a virtual machine, then monitor. If still over-provisioned, decrease by half again.
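
The "start with half, then monitor" guidance above can be written as a small Python sketch. The 40 percent utilization threshold and the 1000m floor are assumptions for illustration only; check the minimums Microsoft defines for your deployment.

```python
def initial_cpu_from_vm(vm_vcpus: int) -> int:
    """Start with half the CPU the same SQL Server had as a virtual machine (in millicores)."""
    return vm_vcpus * 1000 // 2


def next_cpu_allocation(current_m: int,
                        avg_utilization_pct: float,
                        low_water_pct: float = 40.0,  # assumed threshold
                        floor_m: int = 1000) -> int:  # assumed minimum
    """Halve the allocation again if monitoring shows it is still over-provisioned."""
    if avg_utilization_pct < low_water_pct:
        return max(current_m // 2, floor_m)
    return current_m


# Hypothetical 8-vCPU virtual machine being moved into a pod.
start = initial_cpu_from_vm(8)
after_first_review = next_cpu_allocation(start, avg_utilization_pct=20.0)
after_second_review = next_cpu_allocation(after_first_review, avg_utilization_pct=65.0)
```

The average utilization fed into each review should come from real production-like work, as noted above.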

Kubernetes also exists in part to terminate pods that are no longer needed or no longer consuming resources. In fact, I had to fake out the HammerDB container with a “keep-alive” within my YAML file to make sure that the pod remained active long enough to be called upon to run a workload. Notice the command:sleep attribute in this YAML file:

    apiVersion: v1
    kind: Pod
    metadata:
      name: <hammerpod>
      namespace: <hammernamespace>
    spec:
      containers:
      - name: <hammerpod>
        image: dbaasregistry/hammerdb:latest
        command:
        - "sleep"
        - "7200"
        resources:
          requests:
            memory: "500M"
            cpu: "500m"
          limits:
            memory: "500M"
            cpu: "500m"
        imagePullPolicy: IfNotPresent

Proving out the new architecture

Our new fully deployed architecture is depicted below, with a separation of applications, in this case HammerDB from SQL Server, deployed into separate namespaces. This allows for tighter resource utilization, reporting, and tuning.

It's also important to note that setting appropriate requests and limits is just one aspect of optimizing your Azure Arc-enabled data services deployment. You should also consider other factors, such as storage configuration, network configuration, and workload characteristics, to ensure that your microservice architecture runs smoothly and efficiently.

Scheduled CPU lessons learned

The tests we conducted and described in the paper gave me some enlightenment regarding proper database microservice sizing. Considering our dense SQL MI workload, we again wanted to maximize the number of SQL instances we could deploy and keep performance at an acceptable level. I was also very mindful of our consumption-based billing based on the CPU limit. For all my tests, I did keep memory as a constant, as it is a finite resource for Kubernetes.

What I found is that performance was identical, and it was even better in some cases when:

- I set the CPU request to half the limit, letting the Kubernetes scheduler do what it is best at: managing resources.

- I monitored the tests and watched resource consumption, tightening up allocation where I could.
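
As a rough Python illustration of the "request at half the limit" setting, the sketch below builds the CPU part of a resources stanza and compares density on one node. The 4-core limit and the 12,800m of usable node CPU are hypothetical numbers, not results from the paper.

```python
def cpu_resources(limit_millicores: int) -> dict:
    """Build a CPU resources stanza with the request set to half the limit."""
    return {
        "requests": {"cpu": f"{limit_millicores // 2}m"},
        "limits": {"cpu": f"{limit_millicores}m"},
    }


def instances_by_cpu_request(usable_node_millicores: int, request_millicores: int) -> int:
    """The scheduler places pods by request, so the request sets the density."""
    return usable_node_millicores // request_millicores


usable = 12_800  # hypothetical usable CPU on one worker node
limit = 4_000    # hypothetical CPU limit per SQL MI (the billed figure)

stanza = cpu_resources(limit)
density_request_equals_limit = instances_by_cpu_request(usable, limit)
density_request_half_limit = instances_by_cpu_request(usable, limit // 2)
```

The limit, and therefore the bill per instance, is the same in both cases; only the number of instances the scheduler can place on the node changes.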

My conclusion is this: It is time to let go and not burn my own thinking analysis on trying to outsmart the scheduler. I have better squirrels to go chase and rabbit holes to dive into. 😊

Embrace the IT polyglot mindset

To properly engage and place the best practice stake in the ground, I needed to continue to embrace my polyglot persona. Use all the tools while containerizing all the things! I wrote about this previously here.

I was presenting on the topic of Azure Arc-enabled data services at a recent conference. I have a conversation slide that has a substantial list of tools that I use in my test engineering life. The question was asked, “Do you think all GUI will go away and scripting will again become the norm?” I explained that I think that all tools have their place, depending on the problem or deployment you are working through. For me, scripting is vital for repeatable testing success. You can’t check in a point-and-click deployment.

There are many GUI tools for Linux and Kubernetes and others. They all have their place, especially when managing very large environments. I do also believe that honing your script skills first is best. Then you understand and appreciate the GUI.

Being an IT polyglot means that you have a broad understanding of various technologies and how they can be used to solve different problems. It also means that you can communicate effectively with developers and other stakeholders, from tin to “C-level” who may have expertise in different areas.

For most everything I do with Azure Arc, I first turn to command line tools, CLI or kubectl to name a few. I love the fact that I can script, check in my work, or feed into a GitOps pipe, and forget about it. It always works on my machine. 😉

To continue developing your skills as an IT polyglot, it's important to stay up to date with the latest industry trends and technologies. This can be done by attending conferences, reading industry blogs and publications, participating in online communities, and experimenting with new tools and platforms. As I have stated in other blogs… #NeverStopLearning

Author: Robert F. Sonders

Technical Staff – Engineering Technologist

Multicloud Storage Software

@RobertSonders

robert.sonders@dell.com

Blog: https://www.dell.com/en-us/blog/authors/robert-f-sonders/

Location: Scottsdale AZ, USA (GMT-7)

Accelerate SQL Server modernization: Getting started

Wed, 03 Aug 2022 21:45:58 -0000

In Part I of this blog series, I discussed issues concerning EOL SQL Server and Windows Server running on aging hardware, and three pathways to SQL Server modernization. In this blog, I’ll discuss how a combination of Dell Technologies Consulting Services and free tools from Microsoft can help you get started on your SQL migration journey and how to meet your SQL Server data modernization objectives.

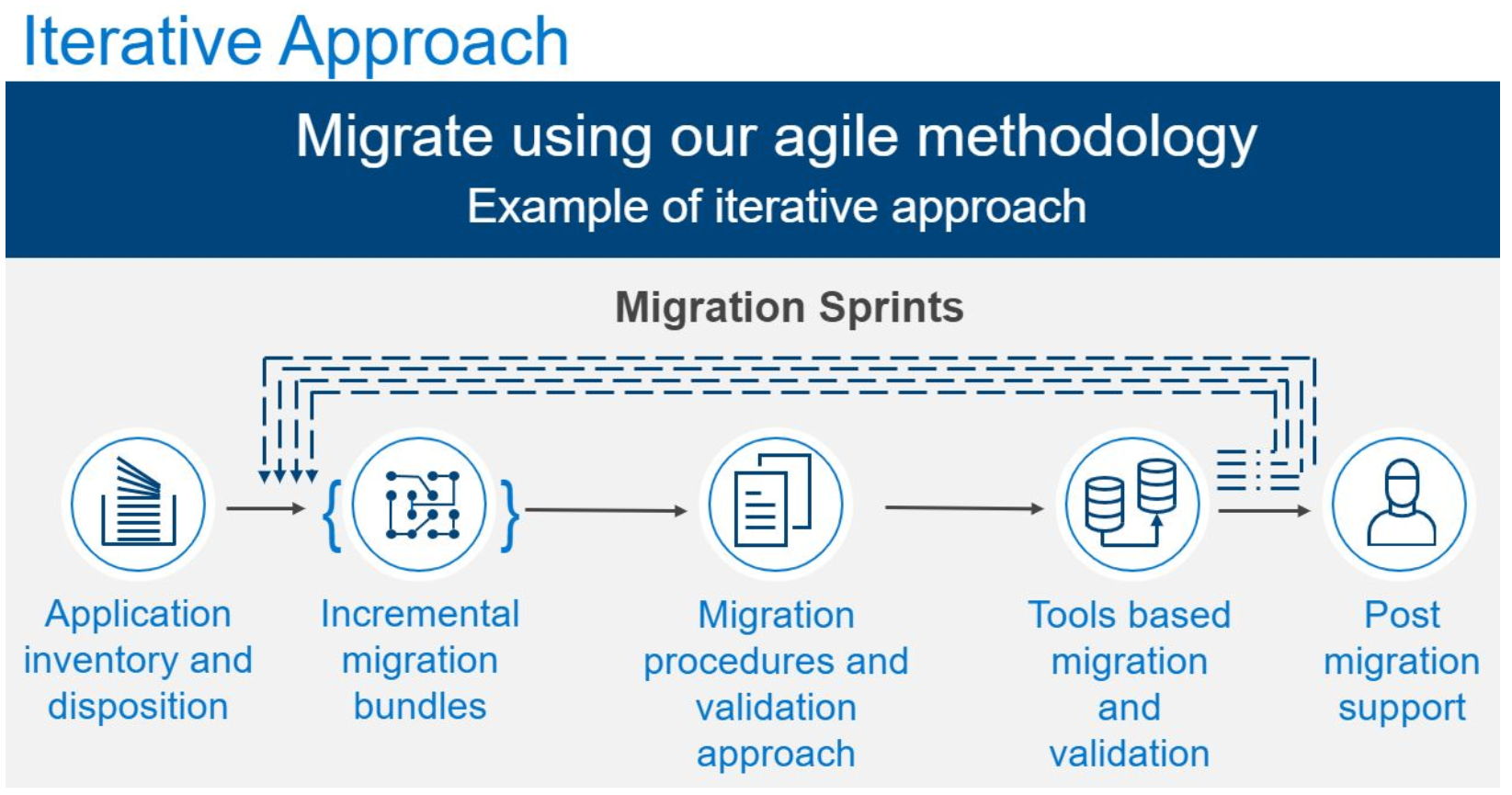

Beginning Your SQL Migration Journey

During my client conversations, I hear three repeating pain points: (1) they would love to modernize but don’t have the cycles or staff to drive a solid, iterative SQL migration approach through to completion, (2) they still need to “keep the lights on,” and (3) despite having the skills, they lack the team cycles to execute on the plan.

This is where Dell Technologies Consulting Services can help you plan a solid foundational set of goals and develop a roadmap for the modernization. Here are the key points our SQL modernization teams address:

- Discover the as-is SQL environment including the current state of all in scope SQL servers, associated workloads and configurations.

- Inventory and classify applications (which align to SQL databases) and all dependencies. Critical here to think through all the connections, reporting, ETL/ELT processes, etc.

- Group and prioritize the SQL databases (or entire instance) by application group and develop a near-term modernization plan and roadmap for modernization. Also an excellent time to consider database consolidation.

- Identify the rough order of magnitude for future state compute, storage and software requirements to support a modernization plan. Here is where our core product teams would collaborate with the SQL modernization teams. This collaboration is a major value add for Dell Technologies.

Additionally, there are excellent, free tools from Microsoft to help your teams assess, test, and begin the migration journey. I will talk about these tools below.

Free Microsoft Tools to Help You Get Started

Microsoft has stated they will potentially assist if there are performance issues with SQL procedures and queries. It is best to utilize the Query Tuning Assistant while preparing your database environment for a migration.

Microsoft provides query plan shape protection when:

- The new SQL Server version (target) runs on hardware that is comparable to the hardware where the previous SQL Server version (source) was running.

- The same supported database compatibility level is used both at the target SQL Server and source SQL Server.

- Any query plan shape regression (as compared to the source SQL Server) that occurs in the above conditions will be addressed (contact Microsoft Customer Support if this is the case).

Here are a few other free Microsoft tools I recommend running ASAP that will enable you to understand your environment fully and provide measurable and actionable data points to feed into your SQL modernization journey. Moreover, proven and reliable upgrades should always start with these tools (also explained in detail within the Database Migration Guide):

- Discovery – Microsoft Assessment and Planning Toolkit (MAP)

- Assessment – Data Migration Assistant (DMA)

- Testing – Database Experimentation Assistant (DEA)

Database engine upgrade methods are listed here. Personally, I am not a fan of any upgrade in-place option. I like greenfield and a minimal cutover with an application connection string change only. There are excellent, proven migration paths to minimize the application downtime due to a database migration. This is another place where our Professional Services SQL Server team can provide the value and reliability needed to execute a successful transition.

Here are a few cutover migration options for you to consider:

| Feature | Notes |
| --- | --- |
| Log Shipping | Cutover measured in (typically) minutes |
| Replication | Cutover measured in (potentially) seconds |
| Backup and Restore | This is going to take a while for larger databases. However, a Full, Differential and T-log backup / restore process can be automated |
| Filesystem/SAN Copy | Can also take time |
| Always On Groups (>= SQL 2012) | Cutover measured in (typically) seconds – Rolling Upgrade |
| *Future – SQL on Containers | No down time – Always On Groups Rolling Upgrade |

A consideration for you — all these tools and references are great — but does your team have the cycles and skills to execute the migration?

3 Pillars to Help You with Your SQL Server Data Modernization Objectives

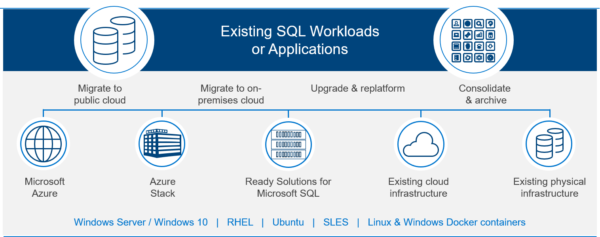

As I mentioned at the start of this blog, it makes perfect sense to use the experts from Dell Technologies Consulting Services for your SQL Server migration. Our Consulting Services teams are seasoned in SQL modernization processes, including pathways to Azure, Azure Stack, the Dell EMC Ready Solutions for SQL Server, existing hardware, or cloud, and they align perfectly with a server and storage refresh if you have aging hardware (to be covered in a future blog).

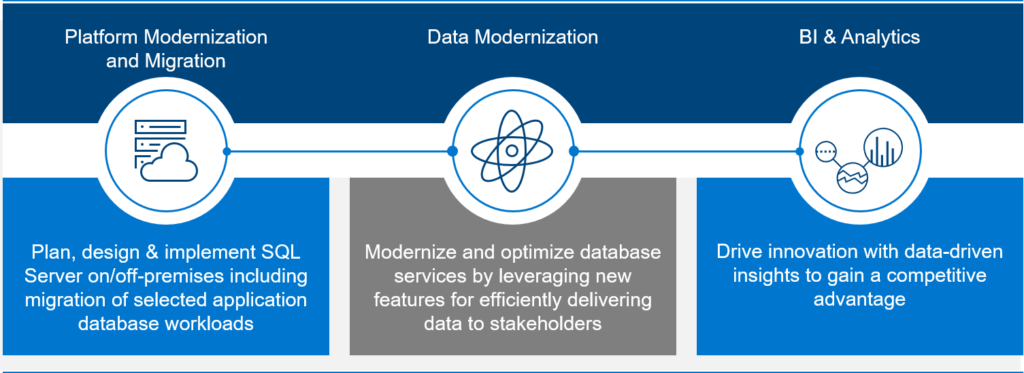

Within Dell Technologies Consulting Services, we support 3 pillars to help you with your SQL Server data modernization objectives.

Platform Consulting Services

Dell Technologies Consulting Services provides planning, design and implementation of resilient data architectures with Microsoft technology both on-premises and in the cloud. Whether you are installing or upgrading a data platform, migrating and consolidating databases to the Cloud (Public, Private, Hybrid) or experiencing performance problems with an existing database, our proven methodologies, best practices and expertise, will help you make a successful transition.

Data Modernization Services

Modernizing your data landscape improves the quality of data delivered to stakeholders by distributing workloads in a cost-efficient manner. Platform upgrades and consolidations can lower the total cost of ownership while efficiently delivering data into the hands of stakeholders. Exploring data workloads in the cloud, such as test/development, disaster recovery, or active archives, provides elastic scale and reduced maintenance.

Business Intelligence and Analytics Services

By putting data-driven decisions at the heart of your business, your organization can harness a wealth of information, gain immediate insights, drive innovation, and create competitive advantage. Dell Technologies Consulting Services provides a complete ecosystem of services that enable your organization to implement business intelligence and create insightful analytics for on-premises, public cloud, or hybrid solutions.

We have proven methodologies in place to drive a repeatable and successful SQL Server migration.

3 Approaches to Drive a Repeatable and Successful SQL Server Migration

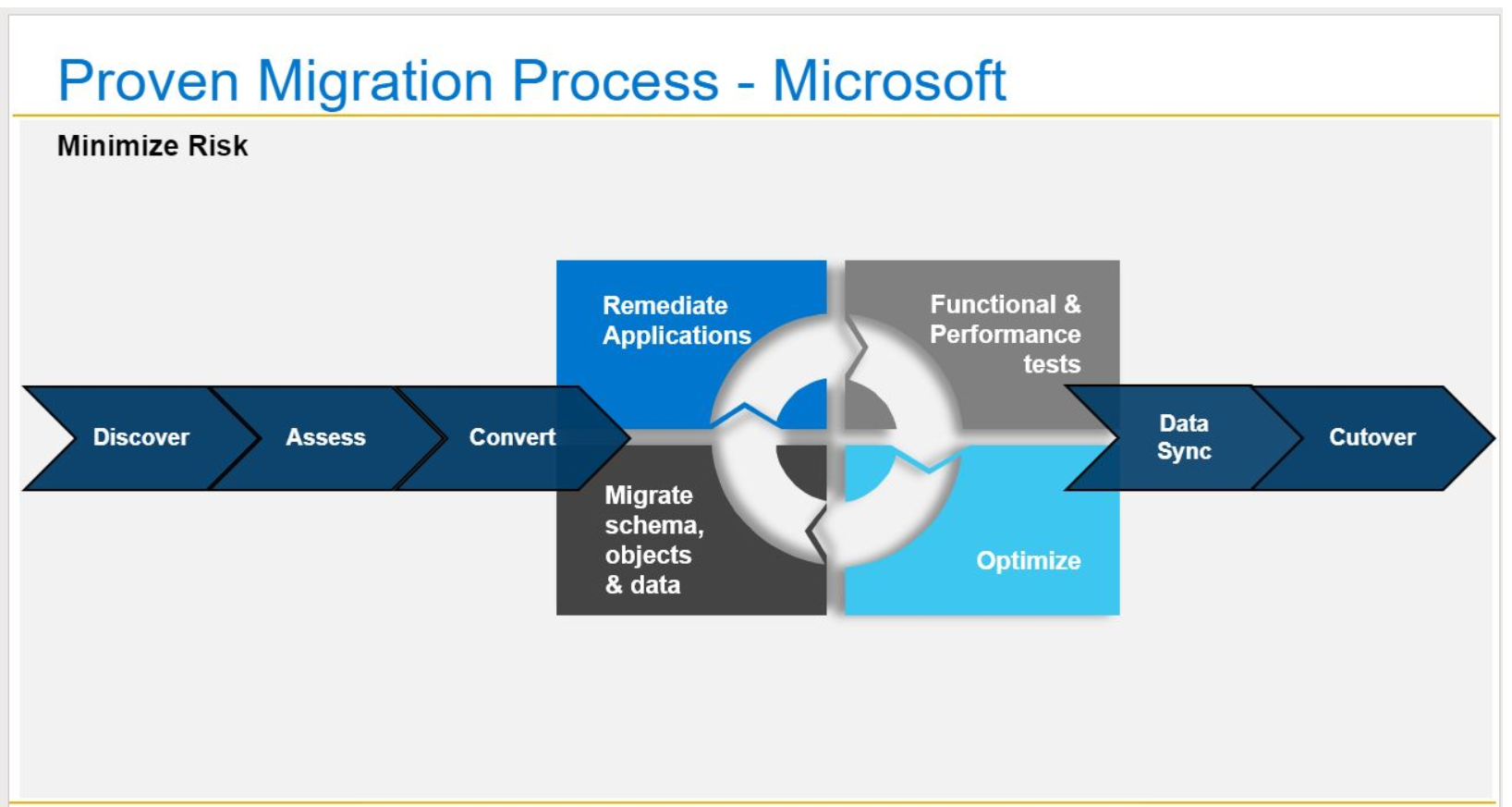

Migration Procedures and Validation Approach

A critical success factor for migrations is ensuring the migration team has a well-documented set of migration processes, procedures, and validation plans. Dell Technologies Consulting Services ensures that every team member involved in the migration process has a clear set of tasks and success metrics to measure and validate the process throughout the migration lifecycle.

Tools-based Migration and Validation

Our migration approach includes tools-based automation solutions and scripts to ensure the target state environment is right-sized and configured to exacting standards. We leverage industry standard tools to synchronize data from the source to target environments. Lastly, we leverage a scripted approach for validating data consistency and performance in the target environment.

Post Migration Support

Once the migration event is complete, our consultants remain at the ready for up to 5 business days, providing Tier-3 support to assist with troubleshooting and mitigating any issues that may manifest in the SQL environment as a result of the migration.

Considerations for the Data Platform of the Future

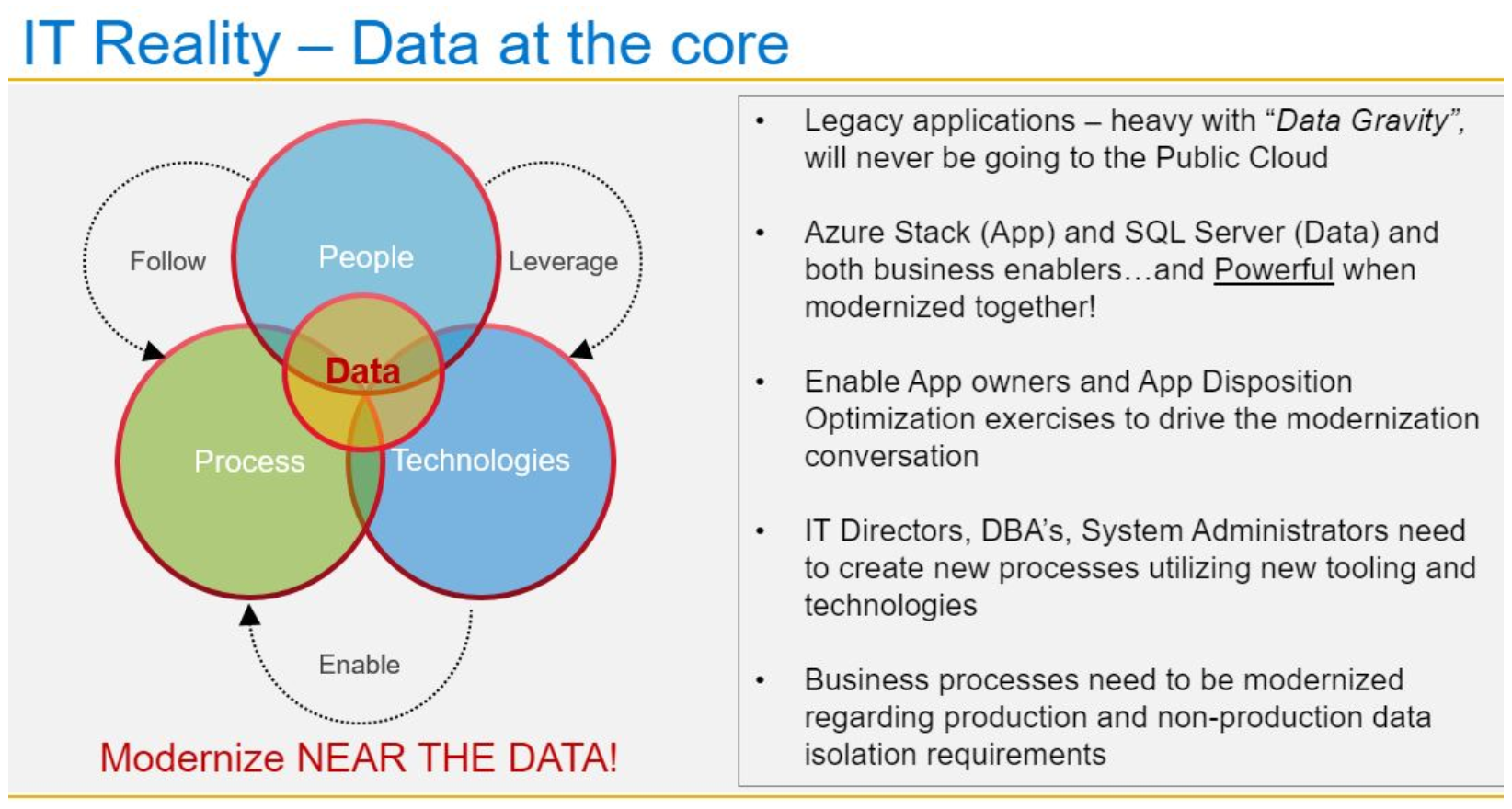

There are a number of pathways for modernizing SQL Server workloads. Customers need flexibility when it comes to the choice of platform, programming languages, and data infrastructure to get the most from their data. Why? In most IT environments, platforms, technologies, and skills are as diverse as they have ever been. The data platform of the future needs to enable you to build intelligent applications on any data, any platform, and any language, on premises and in the cloud.

SQL Server manages your data, across platforms, on-premises and cloud. The goal of Dell Technologies Consulting Services is to meet you where you are, on any platform, anywhere with the tools and languages of your choice.

SQL Server now has support for Windows, Linux & Docker Containers. Kubernetes orchestration with SQL 2019 coming soon!

Additionally, SQL allows you to leverage the language of your choice for advanced analytics – R and Python.

Where Can You Move and Modernize these SQL Server Workloads?

Microsoft Azure and Dell EMC Cloud for Microsoft Azure Stack

Migrate your SQL Server databases without changing your apps. Azure SQL Database is the intelligent, fully managed relational cloud database service that provides the broadest SQL Server engine compatibility. Accelerate app development and simplify maintenance using the SQL tools you love to use. Reduce the burden of data-tier management and save time and costs by migrating workloads to the cloud. Azure Hybrid Benefit for SQL Server provides a cost-effective path for migrating hundreds or thousands of SQL Server databases with minimal effort. Use your SQL Server licenses with Software Assurance to pay a reduced rate when migrating to the cloud. SQL 2008 support will be extended for three years if those IaaS workloads are migrated to Azure or Azure Stack.

Another huge value add, with Azure Stack and SQL Server, is the SQL Server Resource Provider. Think SQL PaaS (Platform as a Service). Here is a quick video I put together on the topic.

The SQL RP does not execute the SQL Server engine. However, it does manage the various SQL Server instances, that can be provided to tenants as SKUs, for SQL database consumption. These managed SQL Server SKUs can exist on, or off, Azure Stack.

Now that is cool if you need big SQL Server horsepower!

Dell EMC Proven SQL Server Solutions

Dell EMC Proven solutions for Microsoft SQL are purpose-designed and validated to optimize performance with SQL Server 2017. Products such as Dell EMC Ready Solutions for Microsoft SQL help you save the hours required to develop a new database and can also help you avoid the costly pitfalls of a new implementation, while ensuring that your company’s unique needs are met. Our solution also helps you reduce costs with hardware resource consolidation and SQL Server licensing savings by consolidating and simplifying SQL systems onto modern infrastructure that supports mixed DBMS workloads, making them future ready for data analytics and next generation real-time applications.

Leverage Existing Cloud or Physical Infrastructure

Enable your IT teams with more efficient and effective operational support processes, while reducing licensing costs, solution complexity and time to delivery for new SQL Server services. Dell Technologies Consulting Services has experience with all the major cloud platforms. We can assist with planning and implementation services to migrate, upgrade and consolidate SQL Server database workloads on your existing cloud assets.

Consolidate SQL Workloads

Reduce operating and licensing costs by consolidating SQL Server workloads. With the Microsoft SQL Server per-core licensing model in SQL Server 2012 and above, moving workloads to a virtual/cloud environment can often present significant licensing savings. In addition, Dell Technologies Consulting Services typically discovers many underutilized SQL Server instances within the enterprise SQL Server landscape, which presents an opportunity to reduce the CPU core counts or move SQL workloads to shared infrastructure to maximize hardware resource utilization and reduce licensing costs.

Summary

Some migrations will be simple, others much more complex, especially with mission critical databases. If you have yet to kick off this modernization effort, I recommend starting today. The EOL clock is ticking. Get your key stakeholders involved. Show them the data points from your environment. Use the free tools from Microsoft. Send them the link to this blog!

If you continue to struggle or don’t want to go it alone, Dell Technologies Consulting Services, the only holistic SQL Server workload provider, can help your team every step of the way. Take a moment to connect with your Dell Technologies Service Expert today and begin moving forward to a modern platform.

Blogs in the Series

Best Practices to Accelerate SQL Server Modernization (Part I)

Introduction to SQL Server Data Estate Modernization

Why Canonicalization Should Be a Core Component of Your SQL Server Modernization (Part 1)

Tue, 23 Mar 2021 13:00:57 -0000

The Canonical Model, Defined

A canonical model is a design pattern used to communicate between different data formats; a data model which is a superset of all the others (“canonical”) and creates a translator module or layer to/from which all existing modules exchange data with other modules [1]. It’s a form of enterprise application integration that reduces the number of data translations, streamlines maintenance and cost, standardizes on agreed data definitions associated with integrating business systems, and drives consistency in providing common data naming, definition and values with a generalized data framework.
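
To see why a canonical model reduces the number of data translations, consider this minimal Python sketch. The "crm" and "billing" systems and their field names are hypothetical examples, not formats from any real product.

```python
def point_to_point_translators(n_formats: int) -> int:
    """Every format needs a translator to every other format."""
    return n_formats * (n_formats - 1)


def canonical_translators(n_formats: int) -> int:
    """Every format needs only one translator to, and one from, the canonical model."""
    return 2 * n_formats


# Hypothetical translators into and out of a canonical customer record.
TO_CANONICAL = {
    "crm": lambda r: {"customer_id": r["CustID"], "full_name": r["Name"]},
    "billing": lambda r: {"customer_id": r["acct_no"], "full_name": r["acct_name"]},
}
FROM_CANONICAL = {
    "crm": lambda c: {"CustID": c["customer_id"], "Name": c["full_name"]},
    "billing": lambda c: {"acct_no": c["customer_id"], "acct_name": c["full_name"]},
}


def translate(record: dict, source: str, target: str) -> dict:
    """Translate between any two systems by passing through the canonical model."""
    return FROM_CANONICAL[target](TO_CANONICAL[source](record))


billing_record = translate({"CustID": 42, "Name": "Ada"}, "crm", "billing")
```

With five systems, the point-to-point approach needs 20 translators, while the canonical approach needs 10, and adding a sixth system means writing two new translators instead of ten.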

SQL Server Modernization

I’ve always been a data fanatic and forever hold a special fondness for SQL Server. As of late, many of my clients have asked me: “How do we embark on a new era of data management for the SQL Server stack?”

Canonicalization, in fact, is very much applicable to the design work of a SQL Server modernization effort. Its simplified approach allows for vertical integration and solutioning an entire SQL Server ecosystem. The stack is where the “Services” run, starting with bare-metal, all the way to the application, with seven integrated layers up the stack.

The 7 Layers of Integration Up the Stack

The foundation of any solid design of the stack starts with . Dell Technologies is best positioned to drive consistency up and down the stack, and it’s supplemented by the company’s subject matter infrastructure and services experts, who work with you to make the best decisions concerning compute, storage, and backup.

Let’s take a look at the vertical integration one layer at a time. From tin to application, we have:

- Infrastructure from Dell Technologies

- Virtualization (optional)

- Software defined – everything

- An operating system

- Container control plane

- Container orchestration plane

- Application

There are so many dimensions to choose from as we work up this layer cake of both hardware and software-defined and, of course, applications. Think: Dell, VMware, Red Hat, Microsoft. With the progress of software, evolving at an ever-increasing rate and eating up the world, there is additional complexity. It’s critical you understand how all the pieces of the puzzle work and which pieces work well together, giving consideration to the integration points you may already have in your ecosystem.

Determining the Most Reliable and Fully Supported Solution

With all this complexity, which architecture do you choose to become properly solutioned? How many XaaS would you like to automate? I hope your answer is – All of them! At what point would you like the control plane, or control planes? Think of a control plane as the place your teams manage from, deploy to, and hook your DevOps tooling to. To put it a different way, would you like your teams innovating or maintaining?

As your control plane insertion point moves up towards the application, the automation below increases, as does the complexity. One example here is the Azure Resource Manager, or ARM. There are ways to connect any infrastructure in your on-premises data centers, driving consistent management. We also want all the role-based access control (RBAC) in place – especially for our data stores we are managing. One example, which we will talk about in Part 2, is Azure Arc.

This is the main reason for this blog: understanding the choices and trade-offs of cost versus complexity, or automated complexity. Many products deliver this automation out of the box. “Pay no attention to the man behind the curtain!”

One of my good friends at Dell Technologies, Stephen McMaster, an Engineering Technologist at Dell, describes these considerations as the Plinko Ball, a choose-your-own-adventure type of scenario. This analogy is spot on!

With all the choices of dimensions, we must distill down to the most efficient approach. I like to understand both the current IT tool set and the maturity journey of the organization, before I tackle making the proper recommendation for a solid solution set and fully supported stack.

Dell Technologies Is Here to Help You Succeed

Is “keeping the lights on” preventing your team from innovating?

Dell Technologies Services can complement your team! As your company’s trusted advisor, my team members share deep expertise for Microsoft products and services and we’re best positioned to help you build your stack from tin to application. Why wait? Contact a Dell Technologies Services Expert today to get started.

Stay tuned for Part 2 of this blog series where we’ll dive further into the detail and operational considerations of the 7 layers of the fully supported stack.

Recommendations for modernizing the Microsoft SQL Server platform

Mon, 03 Aug 2020 16:08:49 -0000

This blog follows Introduction to SQL Server Data Estate Modernization, the second in a series discussing what’s entailed in modernizing the Microsoft SQL server platform and recommendations for executing an effective migration.

SQL Server—Love at First Sight Despite Modernization Challenges

SQL Server is my favorite Microsoft product and one of the most prolific databases of our time. I fell in love with SQL Server version 6.5, back in the day when scripting was king! Scripting is making a huge comeback, which is awesome! SQL Server, in fact, now exists in pretty much every environment—sometimes in locations IT organizations don’t even know about.

It can be a daunting task to even kick off a SQL modernization undertaking, especially if you have End of Life SQL Server and/or Windows Server running on aging hardware. My clients voice these concerns:

- The risk is too high to migrate. (I say, isn’t there a greater risk in doing nothing?)

- Our SQL Server environment is running on aging hardware—Dev/Test/Stage/Prod do not have the same performance characteristics, making it hard to regression test and performance test.

- How can I make the case for modernization? My team doesn’t have the cycles to address a full modernization workstream.

I will address these concerns, first, by providing a bit of history in terms of virtualization and SQL Server, and second, how to overcome the challenges to modernization, an undertaking in my opinion that should be executed sooner rather than later.

When virtualization first started to appear in data centers, one of its biggest value propositions was to increase server utilization, especially for SQL Server. Hypervisors increased server utilization by allowing multiple enterprise applications to share the same physical server hardware. While the improvement of utilization brought by virtualization is impressive, the amount of unutilized or underutilized resources trapped on each server starts to add up quickly. In a virtual server farm, the data center could have the equivalent of one idle server for every one to three servers deployed.

Fully Leveraging the Benefits of Integrated Copy Data Management (iCDM)

Many of these idle servers, in several instances, were related to Dev/Test/Stage. The QoS (Quality of Service) can also be a concern with these instances.

SQL Server leverages Always On Groups as a native way to create replicas that can then be used for multiple use cases. The most typical deployments of AAG replicas are for high availability failover databases and for offloading heavy read operations, such as reporting, analytics and backup operations.

iCDM allows for additional use cases like the ones listed below with the benefits of inline data services:

- Test/Dev for continued application feature development, testing, CI/CD pipelines.

- Maintenance to present an environment to perform resource intensive database maintenance tasks, such as DBCC CHECKDB operations.

- Operational Management to test and execute upgrades, performance tuning, and pre-production simulation.

- Reporting to serve as the data source for any business intelligence system or reporting.

One of the key benefits of iCDM technology is the ability to provide a cost-efficient lifecycle management environment. iCDM provides efficient copy data management at the storage layer to consolidate both primary data and its associated copies on the same scale-out, all-flash array for unprecedented agility and efficiency. When combined with specific Dell EMC products that offer bullet-proof, consistent IOPS and latency, linear scale-out all-flash performance, and the ability to add more performance and capacity as needed with no application downtime, iCDM delivers incredible potential to consolidate both production and non-production applications without impacting production SLAs.

While emerging technologies, such as artificial intelligence, IoT and software-defined storage and networking, offer competitive benefits, their workloads can be difficult to predict and pose new challenges for IT departments.

Traditional architectures (hardware and software) are not designed for modern business and service delivery goals, which is yet another solid use case for a SQL modernization effort.

As I mentioned in my previous post, not all data stores will be migrating to the cloud. Ever. The true answer will always be a Hybrid Data Estate. Full stop. However, we need to modernize for a variety of compelling reasons.

5 Paths to SQL Modernization

Here’s how you can simplify making the case for modernization and what’s included in each option.

Do nothing (not really a path at all!):

- Risky roll of the dice, especially when coupled with aging infrastructure.

- Run the risk of a security exploit, regulatory requirement (think GDPR) or a non-compliance mandate.

Purchase Extended Support from Microsoft:

- Supports SQL 2008 and R2 or Windows Server 2008 and R2 only.

- Substantial cost using core-model pricing.

- Costs tens of thousands of dollars per year…and that is for just ONE SQL instance! Ouch. How many are in your environment?

- Available for 3 additional years.

- True up yearly—meaning you cannot run unsupported for 6 months then purchase an additional 6 months. Yearly purchase only. More – ouch.

- Paid annually, only for the servers you need.

- Tech support is only available if Extended Support is purchased (oh…and that tech support is also a separate cost).

Transform with Azure/Azure Stack:

- Migrate IaaS application and databases to Azure/ Dell EMC Azure Stack virtual machines (Azure, on-premises…Way Cool!!!).

- Receive an additional 3 years of extended security updates for SQL Server and Windows Server (versions 2008 and R2) at no additional charge.

- In both cases, there is a new consumption cost, however, security updates are covered.

- When Azure Stack (on-premises Azure) is the SQL IaaS target, there are many cases where the appliance cost plus the consumption cost, is still substantially cheaper than #2, Extended Support, listed above.

- Begin the journey to operate in Cloud Operating Model fashion. Start with the Azure Stack SQL Resource Provider and easily, within a day, be providing your internal subscribers a full SQL PaaS (Platform as a Service).

- If you are currently configured with a Highly Available – Failover Cluster Instance with SQL 2008, this will be condensed into a single node. The Operating System protection you had with Always On FCI is not available with Azure Stack. However, your environment will now be running within a Hyper-Converged Infrastructure, which does offer node failure (fault domain) protection but not operating system protection or downtime protection from an Operating System patch. There are trade-offs. It is best to weigh the options for your business use case and recovery procedures.

Move and Modernize (the Best Option!):

- Migrate IaaS instances to modern all Flash Dell EMC infrastructure.

- Migrate application workloads on a new Server Operating System – Windows Server 2016 or 2019.

- Migrate SQL Server databases to SQL Server 2017 and quickly upgrade to SQL 2019 when Generally Available. Currently SQL 2019 is not yet GA. Best guess, before end of year 2019.

- Enable your IT teams with more efficient and effective operational support processes, while reducing licensing costs, solution complexity and time to delivery for new SQL Server services.

- Reduce operating and licensing costs by consolidating SQL Server workloads. With the Microsoft SQL Server per-core licensing model in SQL Server 2012 and above, moving workloads to a virtual/cloud environment can often present significant licensing savings. In addition, through Dell Technologies advisory services, we typically discover many underutilized SQL Server instances within the enterprise SQL Server landscape. These present an opportunity to reduce CPU core counts or move SQL workloads to shared infrastructure to maximize hardware resource utilization and reduce licensing costs.
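To make the consolidation point concrete, here is a minimal Python sketch of the arithmetic. The inventory, the 60% target utilization, and the helper names are all hypothetical, and the four-core minimum per VM is an assumption you should verify against your own licensing agreement. It is an illustration of the idea, not a licensing calculator.

```python
# Assumption for illustration: per-core licenses are counted per virtual core,
# with a minimum of four core licenses per VM. Verify against your agreement.
MIN_CORES_PER_VM = 4


def licensed_cores(vcpus_per_vm):
    """Return the total core licenses needed for a list of VM vCPU counts."""
    return sum(max(MIN_CORES_PER_VM, v) for v in vcpus_per_vm)


def right_size(vcpus, peak_pct, target_pct=60):
    """Suggest a vCPU count so the observed peak lands near target_pct.

    peak_pct and target_pct are whole-number CPU utilization percentages.
    The suggestion never exceeds the current vCPU count.
    """
    needed = -(-(vcpus * peak_pct) // target_pct)  # integer ceiling division
    return max(1, min(vcpus, needed))


# Hypothetical inventory: (vCPUs, observed peak CPU utilization in percent)
inventory = [(16, 15), (8, 30), (8, 75), (4, 10)]

before = licensed_cores([v for v, _ in inventory])
after = licensed_cores([right_size(v, p) for v, p in inventory])
print(f"core licenses before: {before}, after right-sizing: {after}")
```

With this made-up inventory, the two lightly used instances shrink while the busy one stays as it is, which is where the licensing savings come from.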

Rehost 2008 VMware Workloads:

- Run your VMware workloads natively on Azure

- Migrate VMware IaaS applications and databases to Azure VMware Solution by CloudSimple or Azure VMware Solution by Virtustream

- Get 3 years of extended security updates for SQL Server and Windows Server 2008 / R2 at no additional charge

- vSphere network full compatibility

Remember, your Windows Server 2008 and 2008 R2 servers will also be EOL January 14, 2020.

Avoid Risks and Disasters Related to EOL Systems (in Other Words, CYA)

Can your company afford the risk of both an EOL Operating System and an EOL Database Engine? Pretty scary stuff. It really makes sense to look at both. Do your business leaders know this risk? If not, you need to be vocal and explain the risk. Fully. They need to know, understand, and sign off on the risk as something they personally want to absorb when an exploit hits. Somewhat of a CYA for IT, Data professionals and line of business owners. If you need help here, my team can help engage your leadership teams with you!

In my opinion, the larger problem, between SQL EOL and Windows Server EOL, is the latter. Running an unsupported operating system that supports an unsupported SQL Server workload is a serious recipe for disaster. Failover Cluster Instances (Always On FCI) were the normal way to provide Operating System High Availability with SQL Server 2008 and lower, which compounds the issue of multiple unsupported environment levels. Highly Available FCI environments are now left unprotected.

Summary

Some migrations will be simple, others much more complex, especially with mission critical databases. If you have yet to kick off this modernization effort, I recommend starting today. The EOL clock is ticking. Get your key stakeholders involved. Show them the data points from your environment. Send them the link to this blog!

If you continue to struggle or don’t want to go it alone, Dell Technologies Consulting Services, the only holistic SQL Server workload provider, can help your team every step of the way. Take a moment to connect with your Dell Technologies Service Expert today and begin moving forward to a modern platform.

Other Blogs in This Series:

Best Practices to Accelerate SQL Server Modernization (Part II)

Introduction to SQL Server data estate modernization

Mon, 03 Aug 2020 16:08:26 -0000

This blog is the first in a series discussing what’s entailed in modernizing the Microsoft SQL Server platform.

I hear the adage “if it ain’t broke don’t fix it” a lot during my conversations with clients about SQL Server, and many times it’s from the Database Administrators. Many DBAs are reluctant to change, perhaps because they think their jobs will go away. It’s my opinion that their roles are not going anywhere, but they do need to expand their knowledge to support coming changes. Their role will involve innovation at a different level, with application technology (think CI/CD pipelines) integrated into the mix. The DBA will also need to trust that their hardware partner has fully tested and vetted a solution for best possible SQL performance and can provide a Future Proof architecture to grow and change as the needs of the business grow and change.

The New Normal Is Hybrid Cloud

When “public clouds” first became mainstream, the knee-jerk reaction was that everything must go to the cloud! If this had happened, then the DBA’s job would have gone away by default. This could not be farther from the truth and, of course, it’s not what happened. With regard to SQL Server, the new normal is hybrid.

Now some years later, will some data stores have a place in public cloud?

Absolutely, they will.

However, it’s become apparent that many of these data stores are better suited to remaining on-premises. Also, there is a current trend of data being repatriated from the public cloud back to on-premises environments due to cost, management and data gravity (keeping the data close to the business need and/or specific regulatory compliance needs).

The SQL Server data estate can be vast and, in some cases, a hodgepodge of “Rube Goldberg” design processes. In the past, I was the lead architect of many of these designs, I am sad to admit. (I continue to ask forgiveness from the IT gods.) Today, IT departments manage one base set of database architecture technology for operational databases, another potential hardware partner caching layer, and yet another architecture for data analytics and emerging AI.

Oh wait…one more point…all this data needs to be at the fingertips of a mobile device and edge computing. Managing and executing on all these requirements, in a real-time fashion, can result in a highly complex and specialized platform.

Data Estate Modernization

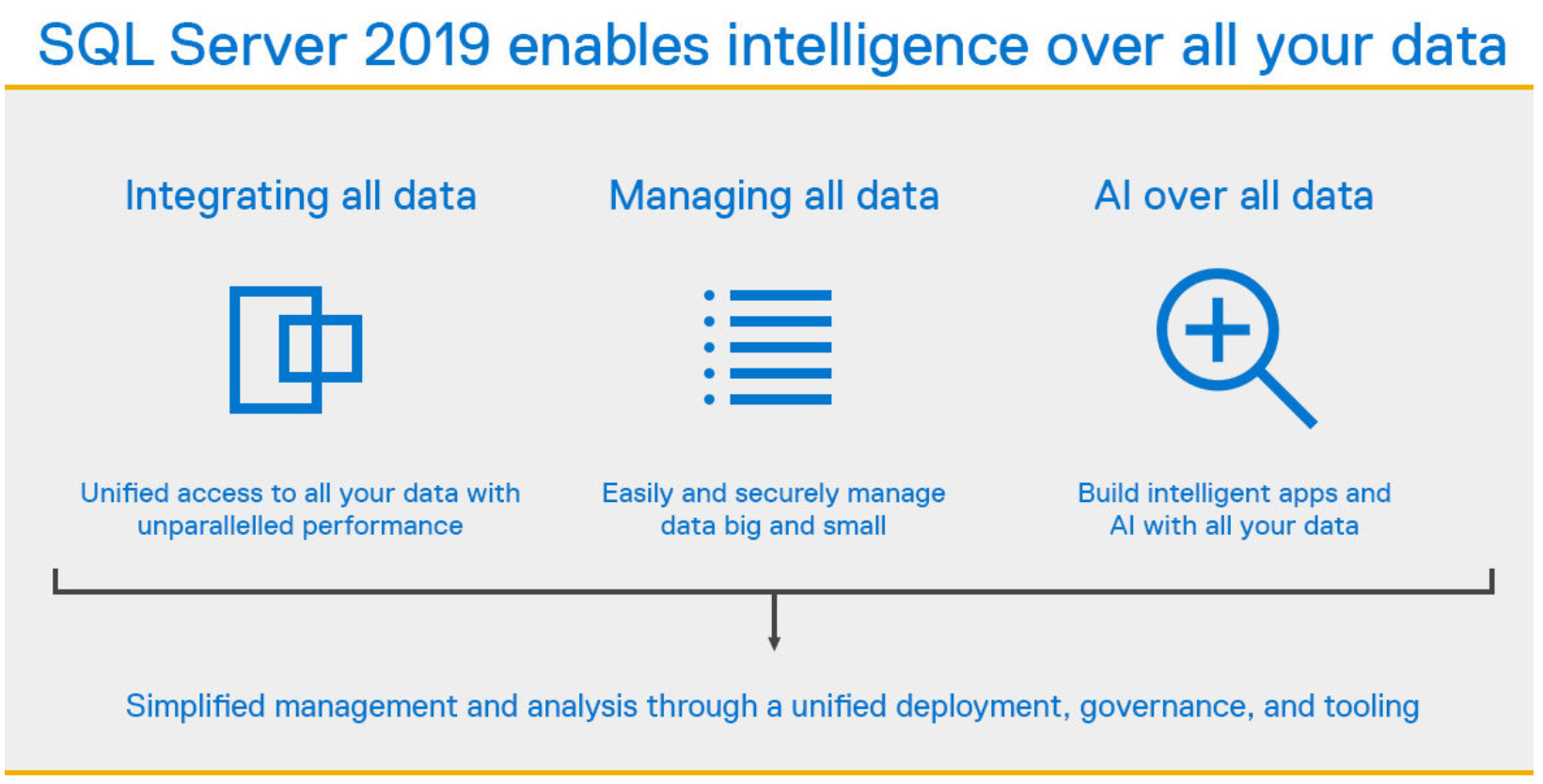

The new normal everyone wants is to keep it as simple as possible. Remember those “Rube Goldberg” designs referenced above? They’re no longer applicable. Simple, portable and seamless execution is key. As data volumes increase, DBAs partnering with hardware vendors need to simplify, while security, compliance and data integrity remain a fixed concern. However, there is a new normal for data estate management: one where large volumes of data can be referenced in place with push-down compute, or seamlessly snapped and copied in a highly efficient manner to other managed environments. The evolution of SQL Server 2019 will also be a part of the data estate orchestration solution.

Are you an early adopter of SQL 2019?

Get Modern: A Unified Approach of Data Estate Management

A SQL Server Get Modern architecture from Dell EMC can consolidate your data estate. It is built on our high-value core pillars, which align perfectly with SQL Server.

The pillars will work with any size environment, from the small and agile to the very large and complex SQL database landscape. There IS a valid solution for all environments. All the pillars work in concert to complement each other across all feature sets and integration points.

- Accelerate – To not only accelerate and Future-Proof the environment but completely modernize your SQL infrastructure. A revamped perspective on storage, leveraging RAM and other memory technologies, to maximum effect.

- Protect – Protect your database with industry-leading backups, replication, resiliency and self-service deduplicated copies.

- Reuse – Reuse snapshots. Operational recovery. Dev/Test repurposing. CI/CD pipelines.

Aligning along these pillars will bring efficiency and consistency to a unified approach of data estate management. The combination of a strong, consistent, high-performance architecture supporting the database platform will make your IT team the modernization execution masters.

What Are Some of the Compelling Reasons to Modernize Your SQL Server Data Estate?

Here are some of the pain points I hear frequently in my travels chatting with clients. I will talk through these topics in future blog posts.

1. Our SQL Server environment is running on aging hardware:

- Dev/Test/Stage/Prod do not have the same performance characteristics making it hard to regression test and performance test.

2. We have modernization challenges:

- How can I make the case for modernization?

- My team does not have the cycles to address a full modernization workstream.

3. The Hybrid data estate is the answer… how do we get there?

4. We are at EOL (End of Life) for SQL Server 2008 / 2008R2 and Windows Server but are stuck due to:

- ISV (Independent Software Vendor) “lock in” requirement to a specific SQL Server engine version.

- Migration plan to modernize SQL cannot be staffed and executed through to completion.

5. We need to consolidate SQL Server sprawl and standardize on a SQL Server version:

- Build for the future, where disruptive SQL version upgrades become a thing of the distant past. Think…containerized SQL Server. OH yeah!

- CI/CD success – the database is a key piece of the puzzle for success.

- Copies of databases for App Dev / Test / Reporting copies are consuming valuable space on disk.

- Backups take too long and consume too much space.

6. I want to embrace SQL Server on Linux.

7. Let’s talk modern SQL Server application tuning and performance.

8. Where do you see the DBA role in the next few years?

Summary

I hope you will come along this SQL Server journey with me as I discuss each of these customer challenges in this blog series:

Best Practices to Accelerate SQL Server Modernization (Part I)

Best Practices to Accelerate SQL Server Modernization (Part II)

And, if you’re ready to consider a certified, award-winning Microsoft partner who understands your Microsoft SQL Server endeavors for modernization, Dell EMC’s holistic approach can help you minimize risk and business disruption. To find out more, contact your Dell EMC representative.

The new DBA role—Time to get your aaS in order

Mon, 03 Aug 2020 16:07:49 -0000

Yes, a “catchy” little title for a blog post but aaS is a seriously cool and fun topic and the new DBA role calls for a skill set that’s incredibly career-enhancing. DBA teams, in many cases, will be leading the data-centric revolution! The way data is stored, orchestrated, virtualized, visualized, secured and ultimately, democratized.

Let’s dive right in to see how aaS and the new Hybrid DBA role are shaking up the industry and what they’re all about!

The Future-ready Hybrid DBA

I chatted about the evolution of the DBA in a previous blog within this series called Introduction to SQL Server Data Estate Modernization. I can remember, only a few short years ago, many of my peers were vocalizing the slow demise of the DBA. I never once agreed with their opinion. Judging from the recent data-centric revolution, the DBA is not going anywhere.

But the million-dollar question is how will the DBA seamlessly manage all the different attributes of data mentioned above? By aligning the role with the skills that will be required to excel.

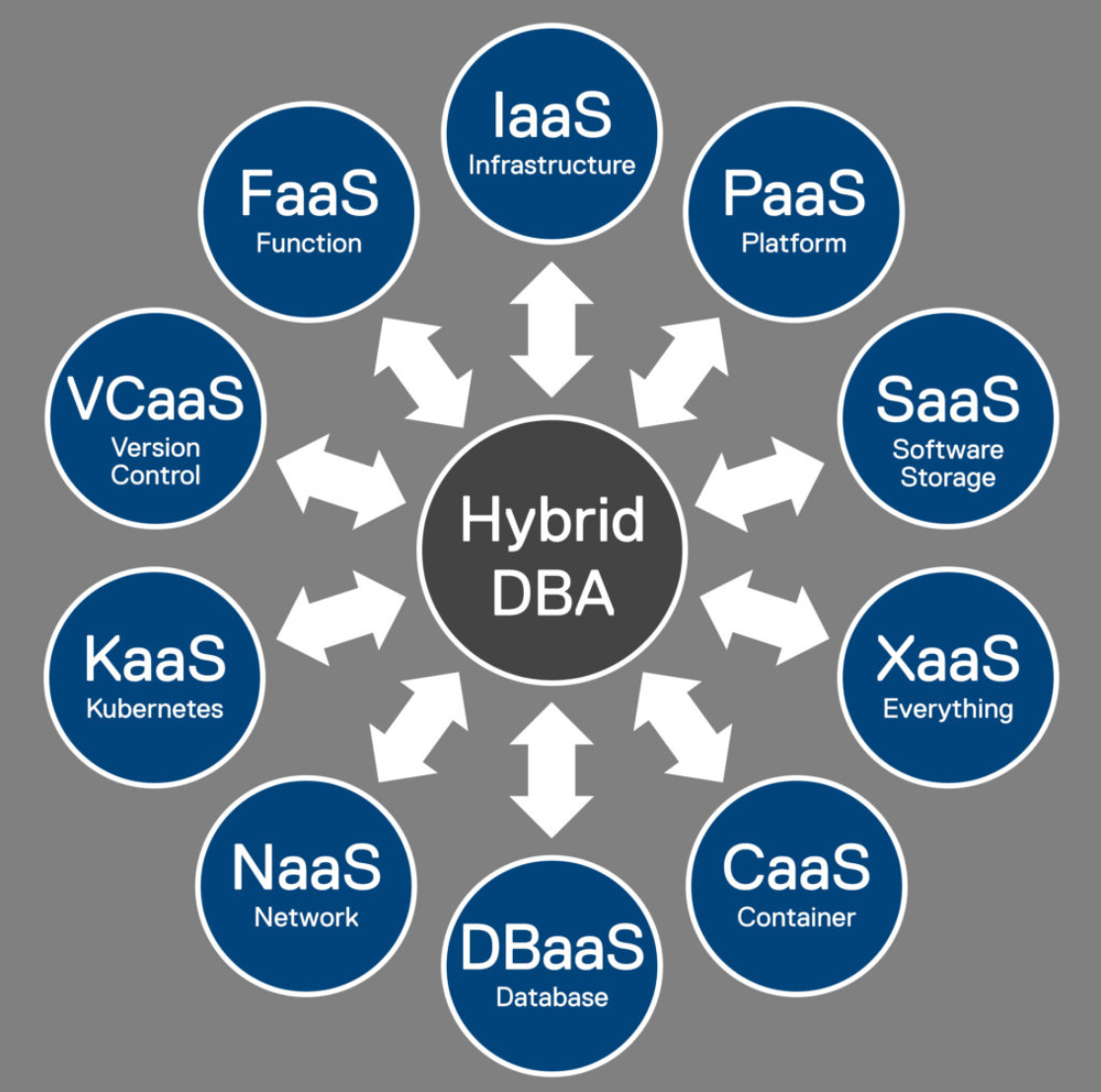

Getting Acquainted with the aaS Shortlist

To begin getting acquainted with what aaS has in store, the DBA will need to get his/her aaS in order and become familiar with the various services. I am not a huge acronym guy (in meetings I often find that folks don’t even know the words behind their own acronyms), so let’s spell out a shortlist of aaS acronyms, so there’s no guesswork at their meaning.

And this is by no means a be-all-end-all list. Technology is advancing at hyper-speed; as Heraclitus of Ephesus philosophized, “Change is the only constant in life.”

Why all this aaS, you ask?

Because teams must align with some, if not all, of these services to enable massive scalability, multitenancy, independence and rapid time to value. These services make up the layers in the cake which comprise the true solution.

Let’s take SQL Server 2019, for instance, a new and incomparable version that’s officially generally available and whose awesome goodness will be the topic of many of my blogs in 2020!

SQL 2019 Editions and Feature Sets

I started with SQL Server 6.5, before a slick GUI existed. Now, with SQL 2019, we have the ability to manage a stateful app, SQL Server, in a container, within a cluster, and as a platform.

That is “awesome sauce” spread all around!

And running SQL Server 2019 on Linux? We’re back to scripting again. I love it! Scripting is testable, repeatable, sharable, and can be checked into source control. Which, quite simply, enables collaboration, which in turn makes a better product. You’ll need to be VCaaS-enabled to store all these awesome scripts.

Is your entire production database schema in source control? If it isn’t, script it out and put it there. A database backup is not the same as version-controlled scripts. DBAs often tell me their production code resides in the database, which is backed up. It’s best practice, however, to begin your proven, repeatable pattern by scripting out the DDL, checking it into a VCaaS, and deploying from there. Aligning your database DDL and test data sets with a CI/CD application development pipeline should be addressed ASAP. By the way, try this with ADS (Azure Data Studio) and the dacpac extension. Many of the aaSes can be managed with ADS. And, with the #SQLFamily third-party community, the ADS extension tooling will only continue to grow.
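As a rough illustration of the "script it out and put it there" step, the Python sketch below lays scripted DDL out as one file per object, grouped by schema, ready to be committed. The object names, the DDL text, the `write_schema_tree` helper, and the temporary directory standing in for a repository are all hypothetical. How you extract the DDL in the first place (a dacpac, a scripting tool, and so on) is outside this sketch.

```python
from pathlib import Path
import tempfile

# Hypothetical input: object name -> DDL text, however you scripted it out.
ddl_by_object = {
    "dbo.Customer": "CREATE TABLE dbo.Customer (Id INT PRIMARY KEY, Name NVARCHAR(100));",
    "dbo.GetCustomer": "CREATE PROCEDURE dbo.GetCustomer @Id INT AS SELECT Id, Name FROM dbo.Customer WHERE Id = @Id;",
}


def write_schema_tree(ddl, root):
    """Write one .sql file per object, grouped by schema, and return the paths."""
    written = []
    for full_name in sorted(ddl):  # sorted for a stable, repeatable layout
        schema, _, name = full_name.partition(".")
        path = Path(root) / schema / f"{name}.sql"
        path.parent.mkdir(parents=True, exist_ok=True)
        # Normalize surrounding whitespace so diffs show only real changes.
        path.write_text(ddl[full_name].strip() + "\n", encoding="utf-8")
        written.append(path)
    return written


repo_dir = tempfile.mkdtemp()  # stand-in for a cloned repository working tree
paths = write_schema_tree(ddl_by_object, repo_dir)
for p in paths:
    print(p.relative_to(repo_dir))
```

One file per object keeps each change reviewable on its own, which is the property a CI/CD pipeline tends to need from database code.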