Direct from Development: Tech Notes

Spark Machine Learning on Dell HS5610 Platform with Cloudera

Mon, 29 Jan 2024 22:49:51 -0000

Executive Summary

To build thorough solution collateral for the Dell PowerEdge HS5610 platform integrated with Cloudera software, we are starting a series of benchmarks this year. These benchmarks form the baseline for future testing, so no comparisons are made with previous generations.

Dell requested this reference solution. Intel ran the benchmark tests and shared its Best-Known Methods (BKMs) to guide the work.

What are the key takeaways?

Cloudera Data Platform built on Dell’s 16G PowerEdge servers with the Intel® 4th Generation Xeon processor architecture can accommodate growing enterprise data workloads and efficiently manage increasing demands for analytics and machine learning in a smaller footprint. Cloudera Data Platform delivers easier data management for data anywhere, with optimal performance, scalability, and security.

As organizations create more diverse and more user-focused data products and services, there is a growing need for machine learning, which can be used to develop personalization, recommendations, and predictive insights. But as organizations amass greater volumes and greater varieties of data, data scientists are spending most of their time supporting their infrastructure instead of building the models to solve their data problems. To help solve this problem, as an integrated part of Cloudera’s platform, Spark provides a general machine learning library that is designed for simplicity, scalability, and easy integration with other tools. With the scalability, language compatibility, simple administration, and compliance-ready security and governance provided through Cloudera, data scientists can solve and iterate through their data problems faster.

Spark MLlib

Spark MLlib is a distributed machine learning framework built on top of Spark Core. The key benefit of MLlib is that it allows data scientists to focus on their data problems and models instead of solving the complexities surrounding distributed data. MLlib leverages the advantages of in-memory computation and is optimized for matrix and vector operations, aligning its capabilities with specific algorithmic requirements for the given use case.

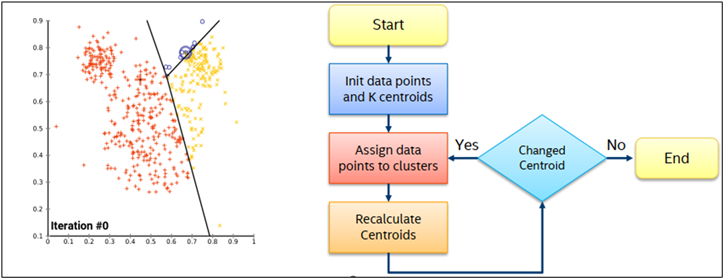

K-means Overview

Clustering is a fundamental exploratory data analysis technique that provides insight into the inherent structure of data. One prominent algorithm for this purpose is K-means, widely used for partitioning data points into a predefined number of clusters. It is applied in diverse domains including market segmentation, document clustering, image segmentation, search engines, real estate, anomaly detection, and image compression, highlighting its versatility and importance in data analysis.
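As a rough illustration of how K-means partitions points into a predefined number of clusters, here is a minimal pure-Python sketch of Lloyd's algorithm. It is not the Spark MLlib implementation used in the benchmark; the function name `kmeans`, the naive seeding, and the toy data are assumptions made for illustration.

```python
import random


def kmeans(points, k, iterations=10, seed=0):
    """Cluster `points` (tuples of floats) into k groups with Lloyd's algorithm."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # naive seeding: k distinct input points
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid.
        for i, p in enumerate(points):
            assignments[i] = min(
                range(k),
                key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centroids[c])),
            )
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return centroids, assignments


# Toy data: two well-separated blobs in two dimensions.
data = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (5.0, 5.0), (5.1, 4.9), (4.9, 5.2)]
centroids, labels = kmeans(data, k=2)
```

Each pass costs roughly points x clusters x dimensions distance terms, which is why a distributed, in-memory engine helps at larger scales.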

K-means clustering performance

We clustered 10 million samples with 1,000 dimensions in just 283 seconds using the K-Means algorithm from Spark's ML library, provided by Cloudera Data Platform 7.1.8 and deployed on the Dell PowerEdge HS5610 platform.

We conducted a performance evaluation of Spark's MLlib K-Means algorithm using the HiBench Benchmark.

For detailed information on the benchmark, refer to the Intel HiBench GitHub repository: https://github.com/Intel-bigdata/HiBench

Note: This result is not compared against any other hardware or software platform. We will use it as the baseline for future products.
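To put the headline number in perspective, the short calculation below derives throughput from the figures quoted above (10 million samples, 1,000 dimensions, 283 seconds). The dense-size estimate assumes every value is stored as an 8-byte float, which is an assumption for illustration and not a statement about the on-disk format HiBench uses.

```python
samples = 10_000_000   # from the result above
dimensions = 1_000     # from the result above
elapsed_s = 283        # from the result above

samples_per_s = samples / elapsed_s
values_per_s = samples * dimensions / elapsed_s

# Assumption: dense storage with 8 bytes per value.
dense_size_gb = samples * dimensions * 8 / 1e9

print(f"{samples_per_s:,.0f} samples/s, {values_per_s:,.0f} values/s")
print(f"dense in-memory size under the 8-byte assumption: {dense_size_gb:.0f} GB")
```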

Configuration Details

| Workload Configuration | |
| --- | --- |
| Platform | Dell PowerEdge HS5610 |
| CPU | 6448Y |
| Memory | 512 GB (16 x 32 GB DDR5-4800) |
| Boot Device | Dell EMC™ Boot Optimized Server Storage (BOSS-N1) with 2 x 480 GB M.2 NVMe SSDs (RAID 1) |
| HDFS Data Disk | 2 x Dell Ent NVMe P5500 RI U.2 3.84 TB |
| HDFS NameNode Disk | 1 x Dell Ent NVMe P5500 RI U.2 3.84 TB |
| YARN Cache Disk | 1 x Dell Ent NVMe P5500 RI U.2 3.84 TB |
| Network Interface Controller | NetXtreme BCM5720 Gigabit Ethernet PCIe |
| Cluster size | 1 |
| Cloudera Distribution | Cloudera Data Platform 7.1.8 |
| Compute Engine | Spark 3.2.0 |
| Workload | HiBench 7.1.1, K-means algorithm |
| Iterations and result choice | 3 iterations, average |

| Spark Configuration | |
| --- | --- |
| spark.deploy.mode | yarn |
| Executor Numbers | 16 |
| Executor cores | 8 |
| spark.executor.memory | 24g |
| spark.executor.memoryOverhead | 4g |
| spark.driver.memory | 20g |
| spark default parallelism | 128 |
| spark.driver.maxResultSize | 20g |
| spark.serializer | org.apache.spark.serializer.KryoSerializer |
| spark.kryoserializer.buffer.max | 1g |
| spark.network.timeout | 1200s |

| K-means Configuration | |
| --- | --- |
| Number of clusters | 5 |
| Dimensions | 1,000 |
| Number of Samples | 10,000,000 |
| Samples per inputfile | 10,000 |
| Number of Iterations | 40 |
| k | 300 |
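The Spark settings listed above can be sanity-checked with a little arithmetic. The sketch below also shows how they might map onto a plain `spark-submit` invocation. HiBench normally launches the workload through its own scripts and configuration files, so the command line is an assumption for illustration. The mapping of "spark.deploy.mode" to `--master yarn`, the mapping of "spark default parallelism" to `spark.default.parallelism`, and the application file name `kmeans_app.py` are likewise assumptions.

```python
# Values copied from the Spark configuration table.
num_executors, executor_cores = 16, 8
executor_memory_gb, overhead_gb, driver_memory_gb = 24, 4, 20

spark_conf = {
    "spark.executor.memoryOverhead": "4g",
    "spark.default.parallelism": "128",  # assumed property name
    "spark.driver.maxResultSize": "20g",
    "spark.serializer": "org.apache.spark.serializer.KryoSerializer",
    "spark.kryoserializer.buffer.max": "1g",
    "spark.network.timeout": "1200s",
}


def build_spark_submit(app):
    """Return a spark-submit argument list for the settings above."""
    args = [
        "spark-submit",
        "--master", "yarn",
        "--num-executors", str(num_executors),
        "--executor-cores", str(executor_cores),
        "--executor-memory", f"{executor_memory_gb}g",
        "--driver-memory", f"{driver_memory_gb}g",
    ]
    for key, value in spark_conf.items():
        args += ["--conf", f"{key}={value}"]
    return args + [app]


# Total task slots: 16 executors x 8 cores, equal to the parallelism of 128.
total_cores = num_executors * executor_cores
# Executor heap plus overhead across all executors, against 512 GB of RAM.
executor_footprint_gb = num_executors * (executor_memory_gb + overhead_gb)

command = build_spark_submit("kmeans_app.py")  # hypothetical application file
print(total_cores, executor_footprint_gb)
print(" ".join(command))
```

Under these settings the executors request 448 GB in total, which leaves headroom within the 512 GB of installed memory for the driver, the operating system, and the Hadoop services on the single node.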

Extract Insights on a Scalable and Security-Enabled Data Platform from Cloudera

Mon, 29 Jan 2024 22:48:44 -0000

Summary

This joint paper outlines the key hardware considerations when configuring a data platform based on Dell’s most recent 16th Generation PowerEdge server portfolio.

Market positioning

Cloudera® Data Platform (CDP) Private Cloud is a scalable data platform that allows data to be managed across its life cycle, from ingestion to analysis, without leaving the data center. It consists of two products: Cloudera Private Cloud Base (the on-premises portion built on Dell PowerEdge™ servers) and Cloudera Private Cloud Data Services. The Data Services provide containerized compute analytic applications that scale dynamically and can be upgraded independently. This platform simplifies managing the growing volume and variety of data in your enterprise, unleashing the business value of that data. CDP Private Cloud helps enhance business agility and flexibility by disaggregating compute and storage and supporting a container-based environment. The platform also includes secure user access and data governance features.

Key Considerations

- Scalability and Performance: The CDP Platform is built on Dell’s 16th Generation PowerEdge servers with Intel® 4th Generation Xeon processor architecture. It can accommodate growing enterprise data workloads and efficiently handle increasing demands for analytics and machine learning in a smaller footprint.

- Compatibility and Integration: Compatibility and seamless integration between CDP Private Cloud and the hardware components are essential for a successful deployment in a cloud environment. Intel architecture-based Dell PowerEdge servers are well suited to running the CDP Platform on a private cloud, which helps deliver faster time-to-market and minimize total cost of ownership.

- Availability and Resilience: The reliability and resilience features of the 16th Generation PowerEdge servers (such as redundant power supplies, hardware monitoring, and failover capabilities) are critical for maintaining the reliability and availability of the CDP Platform.

Available Configurations

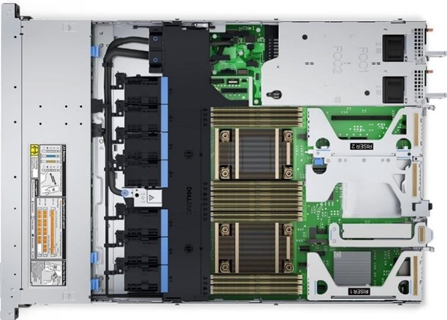

The new Dell PowerEdge HS5610 is a 1U, two-socket rack server purpose-built for Cloud Service Providers’ most popular IT applications, and it also lends itself well to Hybrid Cloud Edge deployments. This scalable server optimizes technology without the financial and operational burden of supporting extreme configurations. With tailored performance, I/O flexibility, and open ecosystem system management, you gain simplicity for large-scale, heterogeneous SaaS, PaaS, and IaaS data centers.

Some of the benefits include:

- Faster performance by using 4th generation Intel® Xeon® Scalable processors with up to 32 cores per socket

- Accelerated in-memory applications with up to 16 DDR5 RDIMMs with speeds up to 4800 MT/s

- Designed to take up less space than traditional servers, which makes them a good option for data centers with limited space and for cloud service providers

- Designed to be cooled efficiently, which can help to prevent overheating and ensure the longevity of the servers in cloud and on-premises deployments

- Power efficient, which can help to reduce the overall operating costs of a data center

- Configurations that can easily scale to meet changing demand, which can help to optimize the cost of a data center

- Long-lived instances for space and cost reductions

- Validated workloads that reduce data center costs and overhead

- Resilient Architecture for Zero Trust IT environment and operations

Cloudera® Data Platform (CDP) Private Cloud Base Cluster

| | Edge Node (1 Node) + Master Nodes (Minimum of Three Nodes Required) | Worker Nodes for Use with External Storage System (Minimum of Three Nodes Required) | Worker Nodes with Local All-Flash Storage (Minimum of Three Nodes Required) | Worker Nodes with Local HDDs (Minimum of Three Nodes Required) |
| --- | --- | --- | --- | --- |
| Functions | Edge node: Apache Hadoop® clients, NameNode, Resource Manager, Apache ZooKeeper | DataNode, NodeManager, CDP DC (YARN) workloads | DataNode, NodeManager, CDP DC (YARN) workloads | DataNode, NodeManager, CDP DC (YARN) workloads |
| Platform | Dell PowerEdge HS5610 (1RU) Chassis with up to 10x 2.5" SAS/SATA/NVMe Direct Drives | Dell PowerEdge HS5610 (1RU) Chassis with up to 10x 2.5" SAS/SATA/NVMe Direct Drives | Dell PowerEdge HS5620 (2RU) Chassis with up to 16x 2.5" SAS/SATA and 8x 2.5" NVMe | Dell PowerEdge HS5620 (2RU) Chassis with up to 12x 3.5" Drives and 2 x 2.5" rear storage (NVMe) |
| CPU | 2 x 4th Gen Intel® Xeon® Gold 6426Y processor | 2 x 4th Gen Intel® Xeon® Gold 6448Y processor | 2 x 4th Gen Intel® Xeon® Gold 6448Y processor | 2 x 4th Gen Intel® Xeon® Gold 6448Y processor |
| DRAM | 256 GB (16 x 16 GB DDR5-4800) | 512 GB (16 x 32 GB DDR5-4800) | 512 GB (16 x 32 GB DDR5-4800) | 512 GB (16 x 32 GB DDR5-4800) |
| Boot Device | Dell EMC™ Boot Optimized Server Storage (BOSS-N1) with 2 x 480 GB M.2 NVMe SSDs (RAID 1) | Dell EMC™ Boot Optimized Server Storage (BOSS-N1) with 2 x 480 GB M.2 NVMe SSDs (RAID 1) | Dell EMC™ Boot Optimized Server Storage (BOSS-N1) with 2 x 480 GB M.2 NVMe SSDs (RAID 1) | Dell EMC™ Boot Optimized Server Storage (BOSS-N1) with 2 x 480 GB M.2 NVMe SSDs (RAID 1) |
| Storage Adapter | Dell PERC H755N NVMe RAID adapter | None | Dell HBA355i | Dell HBA355i |
| Storage HDFS/Ozone | 2x (up to 4x) 3.84 TB SATA Read Intensive SSD 2.5in AG Drive, 1DWPD | Not Required. Use an external storage system instead | 8x (up to 16x) 3.84 TB SATA Read Intensive SSD 2.5in AG Drive, 1DWPD | 12x 4 TB (or larger) 7.2 K RPM NLSAS 12 Gbps 512n 3.5" hot plug HDD |
| Storage Fast Cache | 1 x 1.6 TB or 3.2 TB Enterprise NVMe Mixed Use AG Drive U.2 Gen4 | 1 x 3.2 TB Enterprise NVMe Mixed Use AG Drive U.2 Gen4 | 1 x 3.2 TB Enterprise NVMe Mixed Use AG Drive U.2 Gen4 | 1 x 3.2 TB Enterprise NVMe Mixed Use AG Drive U.2 Gen4 |
| Network Interface Controller | Intel Ethernet Network Adapter E810-XXVDA2 for OCP3 (dual-port 10/25 GbE) | Intel Ethernet Network Adapter E810-XXVDA2 for OCP3 (dual-port 10/25 GbE) | Intel Ethernet Network Adapter E810-XXVDA2 for OCP3 (dual-port 10/25 GbE) | Intel Ethernet Network Adapter E810-XXVDA2 for OCP3 (dual-port 10/25 GbE) |
| Additional NIC | None | Intel Ethernet Network Adapter E810-XXV (dual-port 10/25 GbE), or | None | None |

Note: For storage-only configurations (Hadoop Distributed File System/Ozone), customers can still choose traditional high-density storage nodes with high-capacity rotational HDDs based on the HS5610 platform; however, external storage systems like Dell PowerScale or ECS are recommended. Customers should be aware that using large-capacity HDDs increases the time of background scans (bit-rot detection) and block report generation for HDFS and significantly increases recovery time after a full node failure. Also, Cloudera does not recommend using nodes with more than 100 TB of storage. Source: https://blog.cloudera.com/disk-and-datanode-size-in-hdfs/. For more information and specifications, contact a Dell representative.
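A quick way to apply the 100 TB guidance is to total the raw drive capacity per node. The sketch below does this for the drive counts listed in the Storage HDFS/Ozone row. The 10 TB HDD entry is a hypothetical example of a "larger" drive and is not taken from the table. The figures are raw capacity, before any HDFS replication or filesystem overhead.

```python
MAX_RECOMMENDED_TB = 100  # per-node limit cited in the note above

# (drive count, drive size in TB)
configs = {
    "all-flash, 8 drives": (8, 3.84),
    "all-flash, 16 drives": (16, 3.84),
    "HDD, 12 x 4 TB": (12, 4.0),
    "HDD, 12 x 10 TB": (12, 10.0),  # hypothetical larger drive
}


def raw_capacity_tb(count, size_tb):
    """Raw (unreplicated) capacity of one node in TB."""
    return count * size_tb


report = {}
for name, (count, size_tb) in configs.items():
    capacity = raw_capacity_tb(count, size_tb)
    report[name] = (capacity, capacity <= MAX_RECOMMENDED_TB)

for name, (capacity, within_limit) in report.items():
    status = "within guidance" if within_limit else "exceeds guidance"
    print(f"{name}: {capacity:.2f} TB raw, {status}")
```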

CDP Private Cloud Data Services (Red Hat® OpenShift® Kubernetes®)/Embedded Container Service (ECS) Cluster

| Container Services Administration Host | Master Nodes (Three Nodes Required) | Worker Nodes (10 Nodes or More) |

Functions | OpenShift administration services | OpenShift services, Kubernetes services | Kubernetes operators, Cloudera® Data Platform (CDP) Private Cloud workload pods |

Platform | Dell PowerEdge HS5610 (1RU) Chassis with up to 10x 2.5" SAS/SATA/NVMe Direct Drives |

CPU | 2 x 4th Gen Intel® Xeon® Gold 6426Y processor | 2 x 4th Gen Intel® Xeon® Gold 6448Y processor |

DRAM | 128 GB (16 x 8 GB DDR5-4800) | Standard configuration: 512 GB (16 x 32 GB DDR5-4800) Large memory configuration: 1024 GB (16 x 64 GB DDR5-4800) |

Boot device | Dell EMC™ Boot Optimized Server Storage (BOSS-N1) with 2 x 480 GB M.2 NVMe SSDs (RAID 1) |

Storage adapter | Not required for all-NVMe configuration. |

Storage (NVMe) | 1 x 1.6 TB Enterprise NVMe Mixed Use AG Drive U.2 Gen4 | 1 x 3.2 TB Enterprise NVMe Mixed Use AG Drive U.2 Gen4 | 1 x 6.4 TB Enterprise NVMe Mixed Use AG Drive U.2 Gen4 |

Network Interface Controller | Intel Ethernet Network Adapter E810-XXVDA2 for OCP3 (dual-port 10/25 GbE) |

Additional NIC | Intel Ethernet Network Adapter E810-XXV (dual-port 10/25 GbE) |

Learn More

Contact your Dell account team for a customized quote at 1-877-289-3355 or go to the Intel and Cloudera solutions page.

- For workloads requiring high network bandwidth, customers might use an Intel Ethernet Network Adapter E810-CQDA2 with PCIe (dual-port 100 GbE) and 100 GbE top-of-rack (ToR) switches.

- Additional NIC is recommended for connectivity to an external storage system using a dedicated storage network.

Dell PowerEdge HS5610 Performance

Thu, 29 Jun 2023 21:55:49 -0000

Summary

Dell delivers technology optimization without the financial and operational burden of supporting extreme configurations. Dell PowerEdge cloud-scale servers are designed and optimized to give you the ability to scale with server configurations built for CSPs. The servers scale up to two sockets, 32 cores each, 1 TB of memory, and various SAS, SATA, and NVMe storage options. Cloud-scale servers also offer Dell Open Server Manager built on open-source OpenBMC systems management software.

Test configuration

| Server | PowerEdge R650xs | PowerEdge HS5610 |
| --- | --- | --- |
| CPU | 2 x Intel® Xeon® Gold 5318Y | 2 x Intel® Xeon® Gold 5418Y |
| Memory | 16 x 32 GB at 2933 MT/s | 16 x 32 GB at 4400 MT/s |
| Storage | 4 x 960 GB SATA drives | 4 x 960 GB SATA drives |
| RAID controller | H755 Front RAID 5 | H755 Front RAID 5 |
| Operating system | Ubuntu 22.04 LTS | Ubuntu 22.04 LTS |

Database benchmark—Redis

Redis is an open-source (BSD licensed), in-memory data structure store used as a database, cache, message broker, and streaming engine. Redis provides data structures such as strings, hashes, lists, sets, sorted sets with range queries, bitmaps, HyperLogLogs, geospatial indexes, and streams. Redis has integrated replication, Lua scripting, LRU eviction, transactions, and different levels of on-disk persistence, and provides high availability through Redis Sentinel and automatic partitioning with Redis Cluster.

To achieve top performance, Redis works with an in-memory dataset. Depending on your use case, Redis can persist your data either by periodically dumping the dataset to disk or by appending each command to a disk-based log. You can also disable persistence if you just need a feature-rich, networked, in-memory cache.

Redis supports asynchronous replication, with fast nonblocking synchronization and auto-reconnection with partial resynchronization on net split.

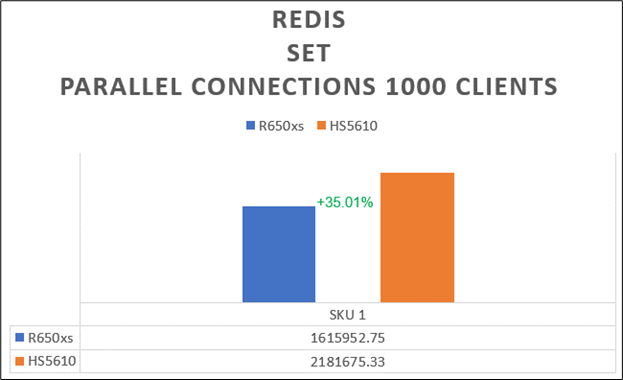

Results:

The data provided highlights the performance of each system running a typical SET command to modify data in the schema in memory. This test leverages AVX512 extensions on both 15G and 16G systems. Relative performance uplift on our 16G configuration was strongly influenced by the increased memory bandwidth provided by DDR5.

- Dell PowerEdge HS5610 database performance improved by 35 percent compared to the previous generation. Veeva claim ID: CLM-007679

- The Dell PowerEdge HS5610 offers a 33 percent increase in price performance per CPU dollar when compared to the previous generation. Veeva claim ID: CLM-007681

- Dell PowerEdge HS5610 performance has increased by 28 percent per watt compared to the previous generation with Redis database benchmark. Veeva claim ID: CLM-007680
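The three claims above are relative gains in raw throughput, in throughput per CPU dollar, and in throughput per watt. The sketch below shows one common way such ratios are computed. This tech note does not publish the underlying throughput, power, or price measurements, so every number in the code is invented purely to demonstrate the arithmetic and does not reproduce the published results.

```python
def relative_gain(new, old):
    """Fractional improvement of `new` over `old` (0.25 means 25 percent)."""
    return new / old - 1.0


# Hypothetical measurements, invented for illustration only.
previous = {"ops_per_s": 1000.0, "watts": 200.0, "cpu_price_usd": 1000.0}
current = {"ops_per_s": 1200.0, "watts": 220.0, "cpu_price_usd": 1100.0}

performance_gain = relative_gain(current["ops_per_s"], previous["ops_per_s"])
per_watt_gain = relative_gain(
    current["ops_per_s"] / current["watts"],
    previous["ops_per_s"] / previous["watts"],
)
per_dollar_gain = relative_gain(
    current["ops_per_s"] / current["cpu_price_usd"],
    previous["ops_per_s"] / previous["cpu_price_usd"],
)

print(f"performance: {performance_gain:+.1%}")
print(f"per watt:    {per_watt_gain:+.1%}")
print(f"per dollar:  {per_dollar_gain:+.1%}")
```

Because power and price usually rise alongside performance, the per-watt and per-dollar gains are typically smaller than the raw performance gain, as they are in the published figures.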

CPU benchmark—V-Ray 5

V-Ray Benchmark is a free, stand-alone application that can be used to test how fast your system renders. It’s simple and fast, and includes three render engine tests:

- V-Ray—CPU compatible

- V-Ray GPU CUDA—GPU and CPU compatible

- V-Ray GPU RTX—RTX GPU compatible

Three custom-built test scenarios are also included to put each V-Ray 5 render engine through its paces.

Discover how your computer ranks alongside others and learn how different hardware combinations can boost your rendering speeds.

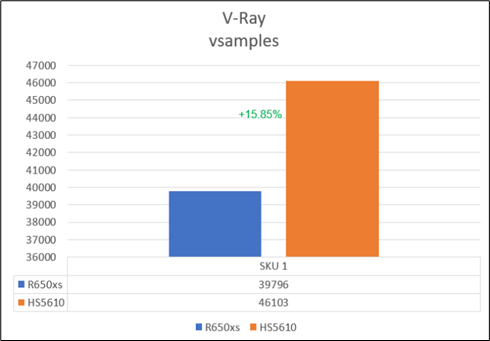

Results:

- The data provided highlights the performance of each system running a CPU benchmark with V-Ray using the number of vsamples. Higher is better.

- PowerEdge HS5610 delivers 15 percent higher CPU rendering performance than the previous generation. Veeva claim ID: CLM-007677

Memory benchmark—STREAM

The STREAM benchmark is a simple synthetic benchmark program that measures sustainable memory bandwidth (in MB/s) and the corresponding computation rate for simple vector kernels.

Computer CPUs are getting faster much more quickly than computer memory systems. As these gains progress, an increasing number of programs will be limited in performance by the memory bandwidth of the system, rather than by the computational performance of the CPU.

As an extreme example, several current high-end machines run simple arithmetic kernels for out-of-cache operands at 4 to 5 percent of their rated peak speeds. That means that they are spending 95 to 96 percent of their time idle and waiting for cache misses to be satisfied.

The STREAM benchmark is specifically designed to work with datasets much larger than the available cache on any given system, so that the results are (presumably) more indicative of the performance of very large, vector-style applications.
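To make the idea concrete, here is a toy Python sketch of a STREAM-style Copy kernel, a(i) = b(i), that times a bulk array copy and converts it to MB/s. It is not the official STREAM code, which is written in C and Fortran. Interpreter overhead and the small array size (an assumption chosen to keep the run short) mean the number it prints should not be compared with real STREAM results.

```python
import time
from array import array

N = 2_000_000  # elements; real STREAM runs size arrays well beyond the caches

b = array("d", [1.0]) * N  # source array of 8-byte floats
a = array("d", [0.0]) * N  # destination array

start = time.perf_counter()
a[:] = b  # Copy kernel: a(i) = b(i), no arithmetic
elapsed = time.perf_counter() - start

# STREAM counts one read of b plus one write of a per element.
bytes_moved = 2 * N * a.itemsize
copy_mb_per_s = bytes_moved / elapsed / 1e6

print(f"Copy: {copy_mb_per_s:,.0f} MB/s over {bytes_moved / 1e6:.0f} MB moved")
```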

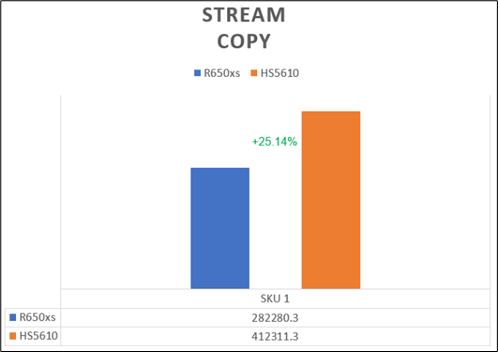

Results:

- The Copy benchmark measures the transfer rate in the absence of arithmetic. This should be one of the fastest memory operations, but it also represents a common one: reading a value b(i) from memory and writing it to a(i).

- PowerEdge HS5610 has 25 percent more memory bandwidth than the similarly configured previous-generation system. Veeva claim ID: CLM-007678
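For context, the theoretical peak bandwidth implied by the memory speeds in the test configuration can be estimated as transfers per second x 8 bytes x number of channels. The sketch below assumes a 64-bit data path per channel and one DIMM per channel across both sockets (16 channels for 16 DIMMs). Both are assumptions about the platforms rather than figures from this note.

```python
BYTES_PER_TRANSFER = 8  # assumption: 64-bit data path per memory channel


def peak_bandwidth_gbs(mt_per_s, channels):
    """Theoretical peak bandwidth in GB/s for a given transfer rate."""
    return mt_per_s * 1e6 * BYTES_PER_TRANSFER * channels / 1e9


channels = 16  # assumption: 16 DIMMs, one per channel, across two sockets

r650xs_gbs = peak_bandwidth_gbs(2933, channels)  # memory speed from the test table
hs5610_gbs = peak_bandwidth_gbs(4400, channels)  # memory speed from the test table
theoretical_uplift = hs5610_gbs / r650xs_gbs - 1

print(f"R650xs peak: {r650xs_gbs:.1f} GB/s")
print(f"HS5610 peak: {hs5610_gbs:.1f} GB/s")
print(f"theoretical uplift: {theoretical_uplift:.0%}")
```

Under these assumptions the theoretical uplift is about 50 percent. The measured 25 percent is lower, which is not unusual because sustained bandwidth also depends on the processor, memory controller, and access pattern.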

Conclusion

Our engineers select the appropriate benchmarks in coordination with your team. Then, using the benchmarks, we perform iterative testing in a Dell Technologies performance lab to analyze the effects of specific server settings and hardware configurations on a benchmark. This data-driven approach with engineers specializing in PowerEdge system performance allows Dell to identify the optimal system configuration for a given workload and provide guidance that delivers rapid time to value for our cloud customers.

Legal disclosures

- Testing conducted by Dell Server TME Lab March of 2023. Server performance benchmarks were performed on similarly configured Dell PowerEdge HS5610 vs Dell PowerEdge R650xs. See documentation for test and configuration specifics. Actual results will vary by use.

- Testing conducted by Dell Server TME Lab March of 2023. Server performance benchmarks were performed on similarly configured Dell PowerEdge HS5610 vs Dell PowerEdge R650xs. See documentation for test and configuration specifics. The CPU price was based on Intel.com site per March 29, 2023, for Gold 5318Y and 5418Y. Actual results will vary by use.

Dell PowerEdge HS5610—Cold Aisle Service: Blind Mate Rail Kit

Thu, 20 Apr 2023 15:39:35 -0000

Summary

Large-scale data centers are often designed with contained hot aisles to manage ambient temperature. A hot aisle/cold aisle layout involves lining up server racks in alternating rows with cold air intakes, the fronts of servers, facing each other (the “cold aisle”) and hot air exhausts, the backs of servers, facing each other (the “hot aisle”). While this approach to data center design increases control over temperature and power consumption, it can also lead to safety (OSHA) and management efficiency concerns for the technicians who administer data center infrastructure. To address these issues, customers are pushing for cold-aisle-serviceable configurations to reduce the need to enter the hot aisle.

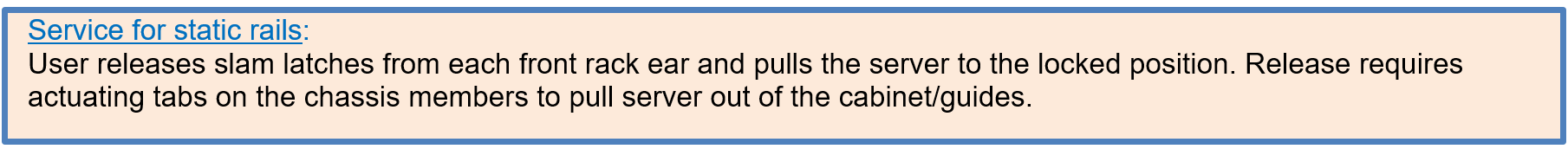

What are blind mate power rails?

Blind mate power rails are static rails that include extra power pass-through bracket assemblies to allow for the ability to connect power and then remove or service the HS5610 without needing hot-aisle access. These rails do not allow for hot-swapping of internal components or in-rack serviceability and are not compatible with strain relief bars (SRBs) or cable management arms (CMAs).

Blind mate power rails:

- Support stab-in installation of the chassis to the rails

- Support tool-less installation in 19-inch EIA-310-E compliant square or unthreaded round hole 4-post racks including all generations of Dell racks

- Support tooled installation in 19-inch EIA-310-E compliant threaded hole 4-post racks

- Support tooled installation in Dell Titan or Titan-D racks

These rails are compatible with the HS5610 cold-aisle-service configuration only.

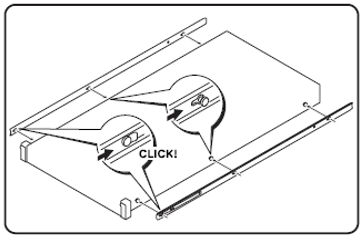

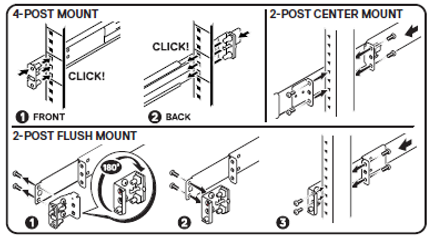

Customer installation for static rails

Step 1: Install chassis members onto server.

- Install chassis rail members with extra length behind server for inner bracket.

- Attach inner bracket to rail chassis members, using supplied screws and a Phillips screwdriver.

- Plug the power cord pigtails into the PSUs.

Step 2: Install cabinet members into rack.

- Attach the outer bracket to installed rail cabinet members by aligning the t-nut and pogo-pin features (should “snap in” both sides).

- Plug external power to the outer bracket receptacles, just as you would an installed server.

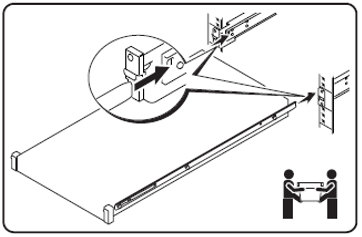

Step 3: Stab-in the chassis to the cabinet guides, and slide it back until rack ears latch to rail mounting brackets. Go around to the hot aisle and plug in the PSUs to power on the server.

Limits

Any cables that do not come out the front need to have blind-mate functionality. Having cables without blind-mate functionality limits our ability to have in-rack service (and an on-rails “service position”), which limits our rails to “static” designs.

Conclusion

Once the cabinet members are installed and powered, service no longer requires hot-aisle access.

References

For more information about PowerEdge HS5610, see the PowerEdge HS5610 Specification Sheet.

For more information about the PowerEdge HS5610 blind mate rail kit, see A22 Rail Installation Guide.

Hyperscale Next: Rack Integration

Wed, 04 Jan 2023 19:03:53 -0000

Summary

The PowerEdge Product Group and Services, in partnership with Intel®, have purpose-built a program to directly support the growth and specific needs of Cloud Service Providers. This includes hosters, B2B Service Providers, and B2C Service Providers like you. We are offering the Hyperscale Next program to select pre-approved accounts. This Direct from Development (DfD) tech note summarizes how our rack integration service enables rapid time-to-value by delivering ready-to-deploy rack solutions.

Hyperscale Next

As the public cloud market accelerates business operations and functionality for customers, the largest hyperscalers dominate the cloud infrastructure market and often get favorable treatment from supplying vendors, including advanced access to new technologies, helping them get to market faster with differentiated services. Unfortunately, this makes it harder for other CSPs, hosters, and online service providers to provide differentiation and innovation. Most must wait until general availability to test new technologies. It can take them several months to take new technology from testing to full production, making it difficult to respond rapidly to evolving technologies and customer demands. The Dell Hyperscale Next program is designed to help our cloud customers react to evolving technologies and customer demands, expand their cloud services and applications, and provide differentiated performance for target workloads. The program includes early access to new technology, rack integration, factory server configuration, ProSupport One, self-service support technology, and server performance tuning.

Rack integration

A single rack can comprise hundreds of individual components that need to be identified, ordered, assembled, and eventually tested. These components are acquired through various suppliers, with lead times ranging from days to months. In addition, components must be inspected, tracked, and stored until the rack is assembled. Given the complexity of acquiring the necessary components, a single part delay can wreak havoc on even the best planned schedules! Designing, integrating, and testing all components in a rack that meets your unique environment is a complex process. The process requires expertise, including but not limited to server and rack engineering, networking, software integration and automation, hardware validation, purchasing, transportation, and logistics. The great news is that the Hyperscale Next Rack Integration Service lets Dell experts manage the complexities of rack integration and deliver fully configured and tested rack solutions that can be deployed within hours of reaching your data center.

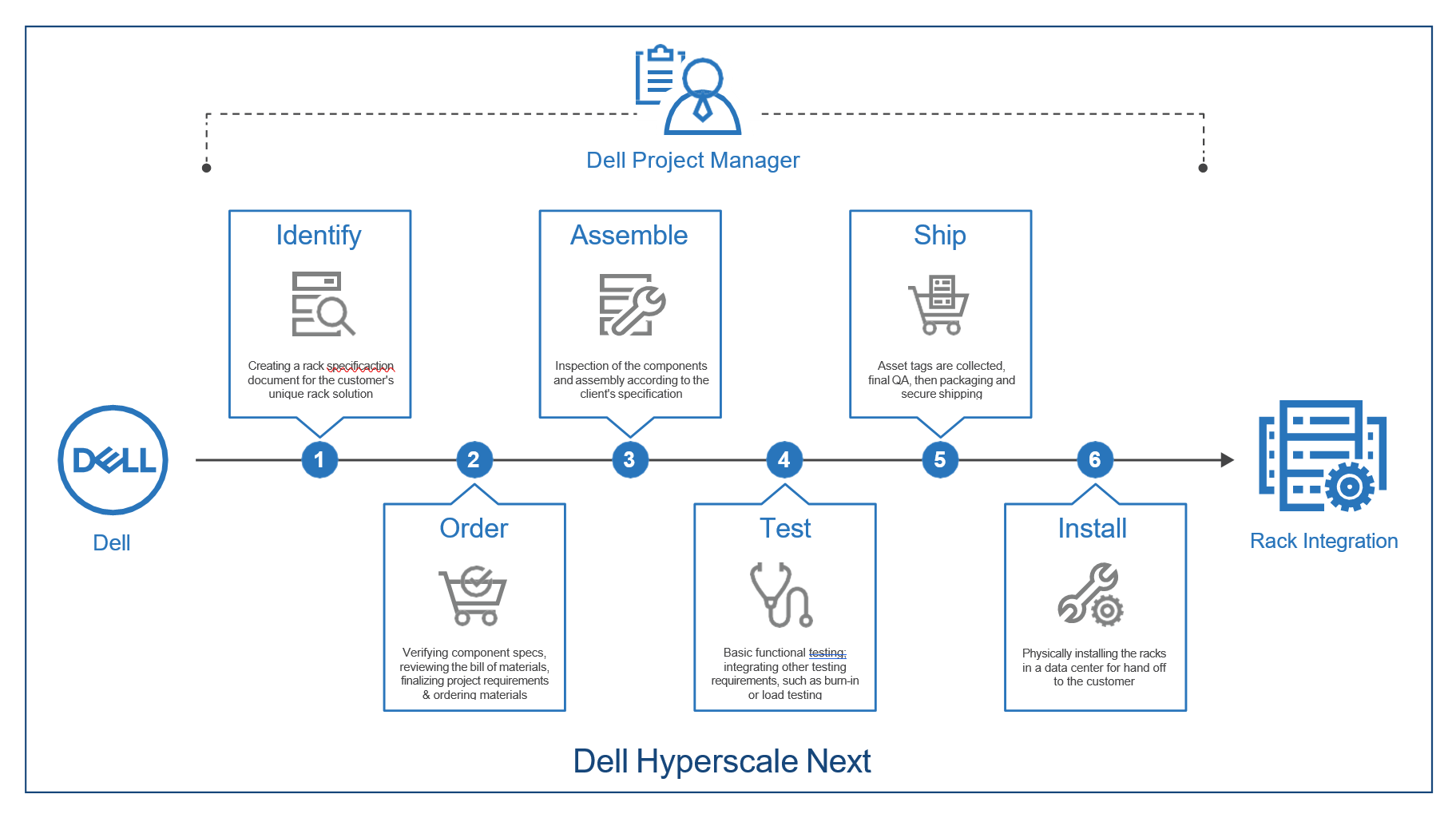

How does it work?

It starts with a Dell Project Manager. This person works to understand your unique requirements and is your single point of contact for the project. They scope the project, keep you updated, and work with the various Dell teams to develop a plan that works for you and your schedule. Let us take a closer look at the major steps.

Planning and design

Planning is critical to a successful and on-time rack integration project, and we analyze every detail. During this phase, we verify component specs, review the bill of materials, finalize the project requirements, and order materials. In addition, Dell has developed relationships with suppliers worldwide to ensure that we can get our customers what they need, when they need it.

Configuration collection

We create a rack specification document for your unique rack solution. This document includes the layout, servers, power distribution, networking, labels, and cabling. If your solution requires custom cables, we can work with our partners to make it happen. In addition, the document includes details on required firmware settings, firmware versions, and your software installation or configuration. Finally, this document is machine-readable, so our automated rack test suite can use it to validate that each component is installed and configured precisely to your specifications. Before proceeding, our project manager reviews the document with you for approval or updates.
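To illustrate what "machine-readable" makes possible, here is a minimal sketch of validating observed hardware against a rack specification. The JSON layout, field names, and setting names are all hypothetical. The actual Dell rack specification format and test suite are not described in this note.

```python
import json

# Hypothetical rack specification; every field name is illustrative only.
RACK_SPEC = json.loads("""
{
  "rack": "rack-01",
  "servers": [
    {"slot": 1, "model": "HS5610", "settings": {"boot_mode": "uefi"}},
    {"slot": 2, "model": "HS5610", "settings": {"boot_mode": "uefi"}}
  ]
}
""")


def validate(spec, observed):
    """Return a list of mismatches between the spec and observed inventory."""
    problems = []
    for server in spec["servers"]:
        slot = server["slot"]
        found = observed.get(slot)
        if found is None:
            problems.append(f"slot {slot}: no server detected")
            continue
        if found["model"] != server["model"]:
            problems.append(f"slot {slot}: model {found['model']!r}")
        for key, want in server["settings"].items():
            got = found["settings"].get(key)
            if got != want:
                problems.append(f"slot {slot}: {key}={got!r}, expected {want!r}")
    return problems


# Hypothetical inventory as discovered in the rack, keyed by slot number.
observed = {
    1: {"model": "HS5610", "settings": {"boot_mode": "uefi"}},
    2: {"model": "HS5610", "settings": {"boot_mode": "bios"}},
}
issues = validate(RACK_SPEC, observed)
print(issues)
```

Keeping the specification as data means the same document can drive both the customer review and the automated checks.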

Factory build

Dell has rack integration sites worldwide where we have perfected the science of rack integration. First, the materials are collected and securely stored until assembly. Then, after everything is in place, our integration team inspects the components and assembles them to your specification. Components that go into a rack are shipped in packaging to ensure that they reach their final destination intact. The packaging protects equipment but generates waste. The Dell rack integration service allows Dell to recycle and reuse packing material that may otherwise find its way into a landfill. As outlined in our FY21 ESG Progress Made Real Report, Dell has committed that by 2030, 100% of our packaging will be made from recycled or renewable material. Read more about what Dell is doing to advance sustainability here.

Server testing, imaging, and provisioning

Using the BIOS, iDRAC, and other component settings defined in the rack specification document as input, our automated rack integration framework configures settings on each server. This step saves our customers hours because they do not have to perform onsite manual provisioning or develop automation to do it themselves. After configuring the servers, our automation framework performs basic functional tests and checks server event logs for errors. In rare instances, a hardware failure occurs, and we have direct access to replacement parts to allow us to move forward quickly.

We also use the rack integration framework to install custom software or operating systems during server provisioning in a consistent and repeatable manner. Our software development team is also available to work with you to integrate other testing requirements, such as burn-in or load testing, to minimize or eliminate your onsite provisioning to further your rapid time to value.

Network, power, and serial testing

The automated rack integration framework collects the MAC data from all servers and switches and confirms correct network connectivity across the rack by comparing it to the rack specification document. We repeat the process for any serial devices. Also, the integration framework methodically cycles power on smart PDU sockets and confirms that connected devices power off when they should.
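The connectivity check described above amounts to comparing two mappings: the cabling the specification expects and the MAC addresses actually seen on each switch port. A minimal sketch follows. The port names and addresses are hypothetical, and the way a real framework gathers the learned addresses from switches is outside its scope.

```python
def check_cabling(expected, learned):
    """Return {port: (expected_mac, seen_mac)} for every port that differs."""
    errors = {}
    for port, mac in expected.items():
        seen = learned.get(port)
        # Compare case-insensitively, since tools format MACs differently.
        if seen is None or seen.lower() != mac.lower():
            errors[port] = (mac, seen)
    return errors


# Hypothetical data: expected cabling from the rack spec, and MACs the
# switch reports per port. Two cables are swapped and one port is empty.
expected = {
    "sw1/1": "00:00:5e:00:53:01",
    "sw1/2": "00:00:5e:00:53:02",
    "sw1/3": "00:00:5e:00:53:03",
    "sw1/4": "00:00:5e:00:53:04",
}
learned = {
    "sw1/1": "00:00:5E:00:53:01",
    "sw1/2": "00:00:5e:00:53:03",
    "sw1/3": "00:00:5e:00:53:02",
}
miswired = check_cabling(expected, learned)
print(miswired)
```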

Final test and asset report

After completing the build, testing, and provisioning process, we consolidate the test results into a final test report, or “green sheet.” This green sheet tells the team that a rack is ready to ship! Collecting asset information can be tedious. Therefore, our integration framework collects all asset info, such as network card MAC and Dell service tag data, during the integration process. We then combine the data into a carefully formatted report that our customers can programmatically read to update their asset information before rack delivery, saving even more time.
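As an example of reading such a report programmatically, the sketch below loads a small CSV into a dictionary keyed by service tag. The CSV layout, column names, and values are hypothetical, since the actual report format is not specified in this note.

```python
import csv
import io

# Hypothetical asset report; the column names are assumptions.
REPORT = """service_tag,slot,nic_mac
ABC1234,1,00:00:5E:00:53:01
ABC1235,2,00:00:5E:00:53:02
"""


def load_assets(text):
    """Parse the report into {service_tag: {"slot": int, "nic_mac": str}}."""
    reader = csv.DictReader(io.StringIO(text))
    return {
        row["service_tag"]: {
            "slot": int(row["slot"]),
            "nic_mac": row["nic_mac"].lower(),  # normalize for later lookups
        }
        for row in reader
    }


assets = load_assets(REPORT)
print(assets["ABC1234"])
```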

First article inspection

We conduct final rack inspections and a first article inspection where we select a random rack and manually confirm every requested configuration change. We invite our customers to participate in person for the first article inspection, or we record videos and photos of the inspection and share them before shipping.

Shipping and installation

The racks are loaded into trucks, securely strapped using extensively tested standards, and sent to their final destination. Upon arrival, Dell can physically install the racks in a data center or hand off to your team. We also offer onsite validation, where we can rerun server, network, serial, and power testing.

Let’s get started!

Using the Dell Rack Integration service gives our customers rapid time to value by taking the complexity out of designing, building, and deploying enterprise rack solutions. Our experts use years of partner relationships, experience, and best practices to make the process painless and efficient. Work with your Dell account team for more detailed information or email hyperscale.next@dell.com.

Hyperscale Next: Performance Tuning

Wed, 04 Jan 2023 18:41:31 -0000

Summary

The PowerEdge Product Group and Services, in partnership with Intel, have purpose-built a program to directly support the growth and specific needs of Cloud Service Providers. This includes hosters, B2B Service Providers, and B2C Service Providers like you. We are offering the Hyperscale Next program to select pre-approved accounts. This Direct from Development (DfD) tech note summarizes how our performance engineering team can enable rapid time-to-value through data-driven performance optimization testing at no cost.

Hyperscale Next

As the public cloud market accelerates business operations and functionality for customers, the largest hyperscalers dominate the cloud infrastructure market and often get favorable treatment from supplying vendors, including advance access to new technologies, which helps them get to market faster with differentiated services. Unfortunately, this makes it harder for other CSPs, Hosters, and online service providers to differentiate and innovate. Most must wait until general availability to test new technologies. It can take them several months to take new technology from testing to full production, making it difficult to respond rapidly to evolving technologies and customer demands.

The Dell Hyperscale Next program is designed to help our cloud customers react to evolving technologies and customer demands, expand their cloud services and applications, and provide differentiated performance for target workloads, with speeds unmatched by other Tier 1 providers. The program includes early access to new technology, rack integration, factory server configuration, ProSupport One, self-service support technology, and server performance tuning.

A Workload Optimized Server

Dell Technologies understands that time-to-value is critical when making IT purchasing decisions. Our no-cost Hyperscale Next Performance Tuning Program aims to give select customers a head start when evaluating and adopting new enterprise technologies. Optimizing a server for a particular workload can be challenging, time-consuming, and costly. Our performance tuning program allows you to work with our world-class performance engineers to configure and optimize a PowerEdge server for your unique workload. This direct engagement lets our engineers understand your requirements and perform iterative testing in our lab to make data-driven recommendations for optimal workload performance.

Figure 1: Dell PowerEdge R650xs

The Process

IT decision-makers must answer a few questions when mapping a workload or use case to a server.

- Server: Which server meets my workload and use-case requirements?

- CPU: Which CPU SKU delivers the best price/performance? More cores or higher frequency?

- Storage/Memory: What are my capacity and performance requirements?

- BIOS and Firmware: Which settings deliver the best performance for my workload?

- Networking: What is my networking bandwidth requirement per CPU?

- Power: What are my power constraints?

- Cost: What is my budget?

Our team works with you to identify the appropriate server, components, and server settings for the workload.

For example, when running a transactional database, selecting a low-core-count, high-frequency CPU SKU can deliver high performance and be more cost-effective, since database software is often licensed per core. Likewise, a memory configuration with the optimal DIMM quantity, capacity, and speed increases performance and minimizes TCO. Here’s how it works.

- Dell performance engineers meet with you to understand your workload requirements

- Our engineers map your workload to synthetic benchmarks that act as proxies for the workload

- Our engineers perform iterative benchmarking in our lab to study the effects of various BIOS, firmware, OS, and system settings on workload performance

- Finally, our engineers analyze the data to identify the hardware configuration and server settings that deliver optimal performance for your workload
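The iterative part of this process can be pictured as a sweep over candidate settings. The sketch below is only an illustration: the setting names and values are hypothetical, and `run_benchmark` is a placeholder that returns made-up scores so the example runs. It is not Dell's tooling and the numbers are not measurements.

```python
import itertools

# Hypothetical tuning knobs; names and values are illustrative only.
SETTINGS = {
    "system_profile": ["performance", "balanced"],
    "logical_processor": [True, False],
    "numa_per_socket": [1, 2],
}


def run_benchmark(config):
    """Placeholder for launching a real benchmark run.

    Returns a made-up score so the sketch is runnable; in practice this
    would apply the settings, run the benchmark, and parse its output.
    """
    score = 100.0
    if config["system_profile"] == "performance":
        score += 10.0
    if config["logical_processor"]:
        score += 5.0
    score += 2.0 * config["numa_per_socket"]
    return score


def sweep(settings, benchmark):
    """Benchmark every combination of settings; return results best first."""
    names = list(settings)
    results = []
    for values in itertools.product(*(settings[name] for name in names)):
        config = dict(zip(names, values))
        results.append((benchmark(config), config))
    results.sort(key=lambda result: result[0], reverse=True)
    return results


results = sweep(SETTINGS, run_benchmark)
best_score, best_config = results[0]
print(f"{len(results)} configurations tested")
print("best:", best_config, best_score)
```

An exhaustive sweep like this grows quickly with the number of settings, so in practice the search space would likely be narrowed to the settings most relevant to the workload.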

Performance Tuning

Our performance engineers use popular synthetic benchmarks as proxies for your workload to evaluate performance and identify the server settings that optimize it. Some of the benchmarks we use are shown in Figure 2.

| Benchmark | What It Tests |
| --- | --- |
| MLC and Stream | Memory Bandwidth and Latency |
| FIO | Storage IOPS and Throughput |
| SPEC CPU 2017 (SPECint) | CPU Integer Performance |
| HPL (Linpack) | CPU Floating Point Performance |
| HammerDB | SQL, MySQL Database Performance |
| SPECjbb 2015 | Java Server Performance |
| iPerf | Network Bandwidth |

Figure 2: Synthetic Benchmarks List

Our engineers select the appropriate benchmarks in coordination with your team. Then, using the benchmarks, we perform iterative testing in a Dell performance lab to analyze the effects of specific server settings and hardware configurations on each benchmark. This data-driven approach, carried out by engineers who specialize in PowerEdge system performance, allows Dell to identify the optimal system configuration for a given workload and provide guidance that delivers rapid time-to-value for our cloud customers.
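As a small illustration of mapping a workload characteristic to candidate benchmarks, the Figure 2 list can be held in a lookup table and filtered by keyword. The table contents come from Figure 2; the `benchmarks_for` helper and its keyword matching are assumptions made for this sketch, not a description of how the engineers actually choose benchmarks.

```python
# Benchmarks and what they test, copied from Figure 2.
BENCHMARKS = {
    "MLC and Stream": "Memory Bandwidth and Latency",
    "FIO": "Storage IOPS and Throughput",
    "SPEC CPU 2017 (SPECint)": "CPU Integer Performance",
    "HPL (Linpack)": "CPU Floating Point Performance",
    "HammerDB": "SQL, MySQL database performance",
    "SPECjbb 2015": "Java Server Performance",
    "iPerf": "Network Bandwidth",
}


def benchmarks_for(keyword):
    """Return benchmarks whose description mentions the keyword (case-insensitive)."""
    key = keyword.lower()
    return [name for name, tests in BENCHMARKS.items() if key in tests.lower()]


print(benchmarks_for("cpu"))
print(benchmarks_for("bandwidth"))
```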

Performance Troubleshooting

The Hyperscale Next Program includes direct access to our system performance engineers. When evaluating a new server or optimizing your current fleet, our team helps you quickly understand and resolve issues limiting expected performance in your workload or benchmark. This service is another example of how we are helping our customers achieve rapid time-to-value when they choose Dell PowerEdge servers.

Early Access Program

Through the Hyperscale Next Program, select customers receive server engineering samples so they can start their evaluation before a platform is ready to ship (RTS). This early access program enables rapid time-to-value by allowing you to evaluate and optimize for your environment before a product is publicly available. With the help of our Hyperscale Next team, you get a head start on adopting the latest technologies. In addition, the performance tuning service is available both for platforms in the early access program and for currently shipping products.

Let’s get started!

The Hyperscale Next Program performance tuning service is available to select customers at no additional cost. This program helps our customers enable and optimize the latest technologies at the pace their business demands. Work with your Dell account team for more detailed information or email hyperscale.next@dell.com.