Basic validation

After the Greenplum cluster is installed, a basic validation is carried out to ensure that the PowerFlex compute, storage, and networking components perform as expected. Two tools are used for this validation of PowerFlex and Greenplum: FIO and gpcheckperf.

FIO tool: FIO is used for storage I/O characterization, measuring the IOPS and bandwidth of the cluster. FIO was originally written as a tool to test the Linux I/O subsystem. It can be configured with different I/O sizes and with sequential or random reads and writes, and it spawns worker threads or processes to perform the specified I/O operations.
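For a job with a fixed block size, the two FIO metrics are tied together: bandwidth = IOPS × block size. The following is a minimal Python sketch of that relationship. It assumes every I/O uses the same block size (as with `--bs=256k`), and the helper names are hypothetical, not part of FIO.

```python
# Minimal sketch: relate IOPS, block size, and bandwidth for a fixed-block-size FIO job.
# Assumption: every I/O uses the same block size; helper names are hypothetical.

KIB = 1024


def bandwidth_from_iops(iops: float, block_size_bytes: int) -> float:
    """Return throughput in bytes per second for a given IOPS rate."""
    return iops * block_size_bytes


def iops_from_bandwidth(bandwidth_bytes_per_s: float, block_size_bytes: int) -> float:
    """Return the IOPS rate implied by a throughput in bytes per second."""
    return bandwidth_bytes_per_s / block_size_bytes


if __name__ == "__main__":
    bs = 256 * KIB  # fio treats size suffixes as powers of 1024 by default (kb_base=1024)
    print(bandwidth_from_iops(4000, bs))  # bytes per second at a hypothetical 4,000 IOPS
```

Reading the relationship the other way helps interpret results: at 256 KiB per I/O, a given bandwidth corresponds to far fewer IOPS than the same bandwidth at a small block size.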

gpcheckperf tool: Greenplum provides a utility called gpcheckperf that identifies hardware and system-level performance issues on the hosts in a Greenplum cluster. gpcheckperf starts a session on one of the hosts in the cluster and runs the following performance tests:

- Network Performance (gpnetbench)

- Disk I/O Performance (dd test)

- Memory Bandwidth (stream test)

FIO storage IO characterization

Read and write tests are carried out using FIO. The following FIO commands are issued against the file system mount points, which are mapped to every disk on each SDS.

Read Test Results:

This test used a 256 KB block size with a random read workload.

fio --name=largeread --bs=256k --filesize=10G --rw=randread --ioengine=libaio --direct=1 --time_based --runtime=900 --numjobs=4 --randrepeat=0 --iodepth=16 --refill_buffers --filename=/storage/data01/fiotestfile:/storage/data03/fiotestfile:/storage/data02/fiotestfile:/storage/data04/fiotestfile:/storage/data05/fiotestfile:/storage/data10/fiotestfile:/storage/data08/fiotestfile:/storage/data06/fiotestfile:/storage/data07/fiotestfile:/storage/data09/fiotestfile
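The read and write commands differ only in `--name` and `--rw`. Both pass all ten test files in a single `--filename` option, separated by `:`, which fio treats as a file separator. The following Python sketch rebuilds these invocations from a list of mount points. It is a hypothetical helper, not part of FIO, and it lists the mount points in numeric order rather than the order used above. The order only affects how the files are listed, not which files are tested.

```python
import shlex
from typing import Iterable, List

# Hypothetical helper that rebuilds the FIO invocations shown above.
# fio splits --filename on ':', so individual paths must not contain ':'.


def fio_filename_arg(mount_points: Iterable[str], file_name: str = "fiotestfile") -> str:
    """Join one test file per mount point into a single --filename option."""
    paths = [f"{mp.rstrip('/')}/{file_name}" for mp in mount_points]
    if any(":" in p for p in paths):
        raise ValueError("a path contains ':', which fio would treat as a file separator")
    return "--filename=" + ":".join(paths)


def fio_command(name: str, rw: str, mount_points: Iterable[str]) -> List[str]:
    """Return the argv for a 256k, 900-second, direct-I/O job like the ones in this paper."""
    return [
        "fio", f"--name={name}", "--bs=256k", "--filesize=10G", f"--rw={rw}",
        "--ioengine=libaio", "--direct=1", "--time_based", "--runtime=900",
        "--numjobs=4", "--randrepeat=0", "--iodepth=16", "--refill_buffers",
        fio_filename_arg(mount_points),
    ]


if __name__ == "__main__":
    mounts = [f"/storage/data{i:02d}" for i in range(1, 11)]  # /storage/data01 to /storage/data10
    print(shlex.join(fio_command("largeread", "randread", mounts)))
    print(shlex.join(fio_command("largewrite", "randwrite", mounts)))
```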

The FIO test was run on a PowerFlex cluster with five Storage Only nodes in a single protection domain. Each Storage Only node was populated with ten SSDs, for a total of 50 disks.

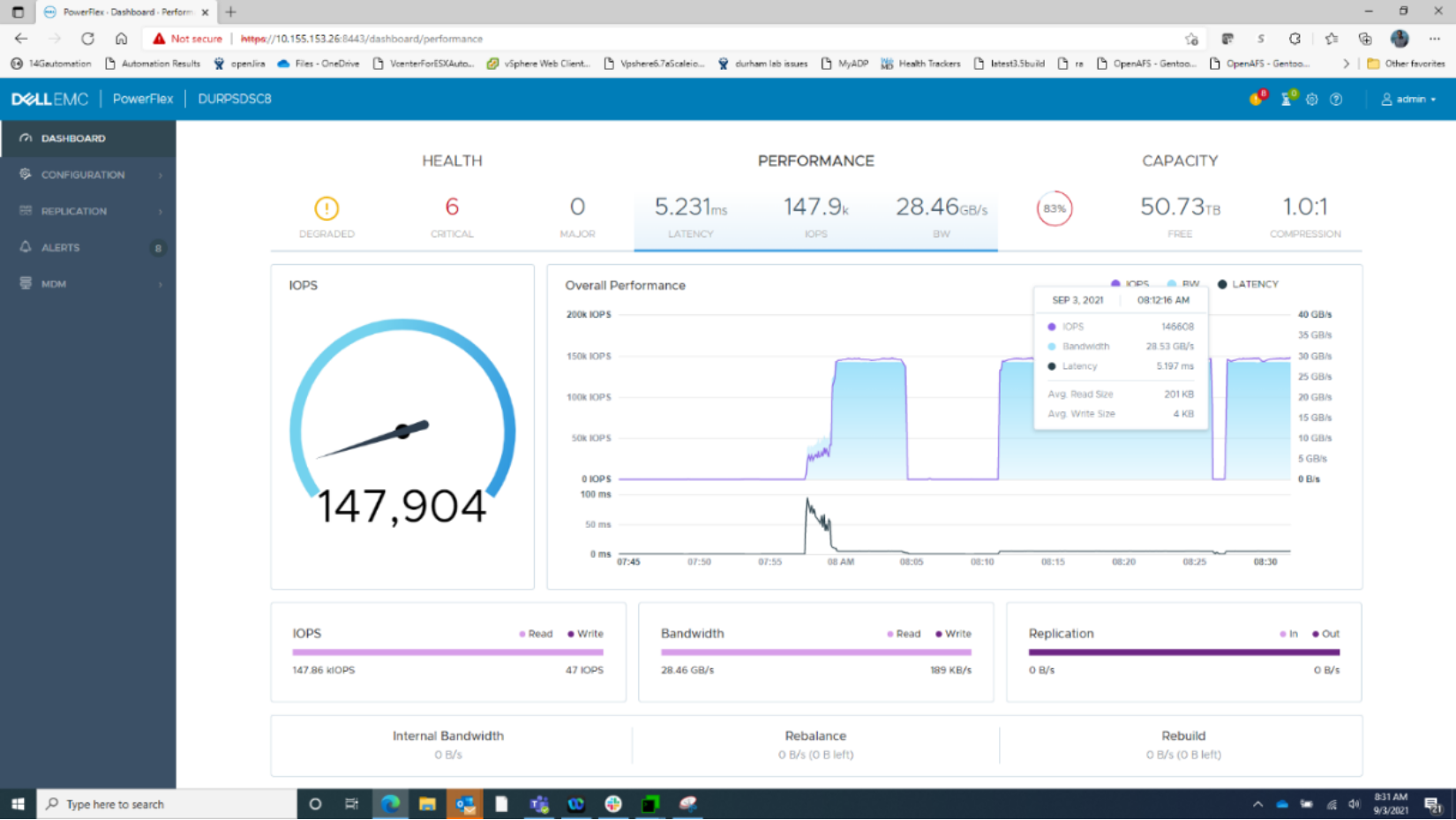

Note: The FIO tests showed a read bandwidth of 28 GB/s. The HBA limit on the storage nodes was reached in this test, and no bottleneck was observed on the PowerFlex storage side. Additional network bandwidth would allow higher throughput.

Figure 5. FIO random read test on PowerFlex
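To put the 28 GB/s read result in per-component terms, the aggregate can be divided across the five storage nodes and 50 disks. The following Python sketch does that arithmetic. It assumes the load is spread evenly, which a real cluster only approximates, and the function name is hypothetical. Under that assumption, the result is about 5.6 GB/s per node and about 560 MB/s per disk.

```python
from typing import Tuple

# Minimal sketch: spread an aggregate bandwidth evenly across storage nodes and disks.
# Assumption: perfectly balanced load; the helper name is hypothetical.


def per_node_and_per_disk(total_gb_s: float, nodes: int, disks_per_node: int) -> Tuple[float, float]:
    """Return (GB/s per node, GB/s per disk) for an evenly distributed aggregate."""
    if nodes <= 0 or disks_per_node <= 0:
        raise ValueError("nodes and disks_per_node must be positive")
    per_node = total_gb_s / nodes
    per_disk = total_gb_s / (nodes * disks_per_node)
    return per_node, per_disk


if __name__ == "__main__":
    node_gb_s, disk_gb_s = per_node_and_per_disk(28.0, nodes=5, disks_per_node=10)
    print(f"{node_gb_s:.2f} GB/s per node, {disk_gb_s * 1000:.0f} MB/s per disk")
```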

Write Test Results:

This test used a 256 KB block size with a random write workload.

fio --name=largewrite --bs=256k --filesize=10G --rw=randwrite --ioengine=libaio --direct=1 --time_based --runtime=900 --numjobs=4 --randrepeat=0 --iodepth=16 --refill_buffers --filename=/storage/data01/fiotestfile:/storage/data03/fiotestfile:/storage/data02/fiotestfile:/storage/data04/fiotestfile:/storage/data05/fiotestfile:/storage/data10/fiotestfile:/storage/data08/fiotestfile:/storage/data06/fiotestfile:/storage/data07/fiotestfile:/storage/data09/fiotestfile

The FIO test was run on a PowerFlex cluster with five Storage Only nodes in a single protection domain. Each Storage Only node was populated with ten SSDs, for a total of 50 disks.

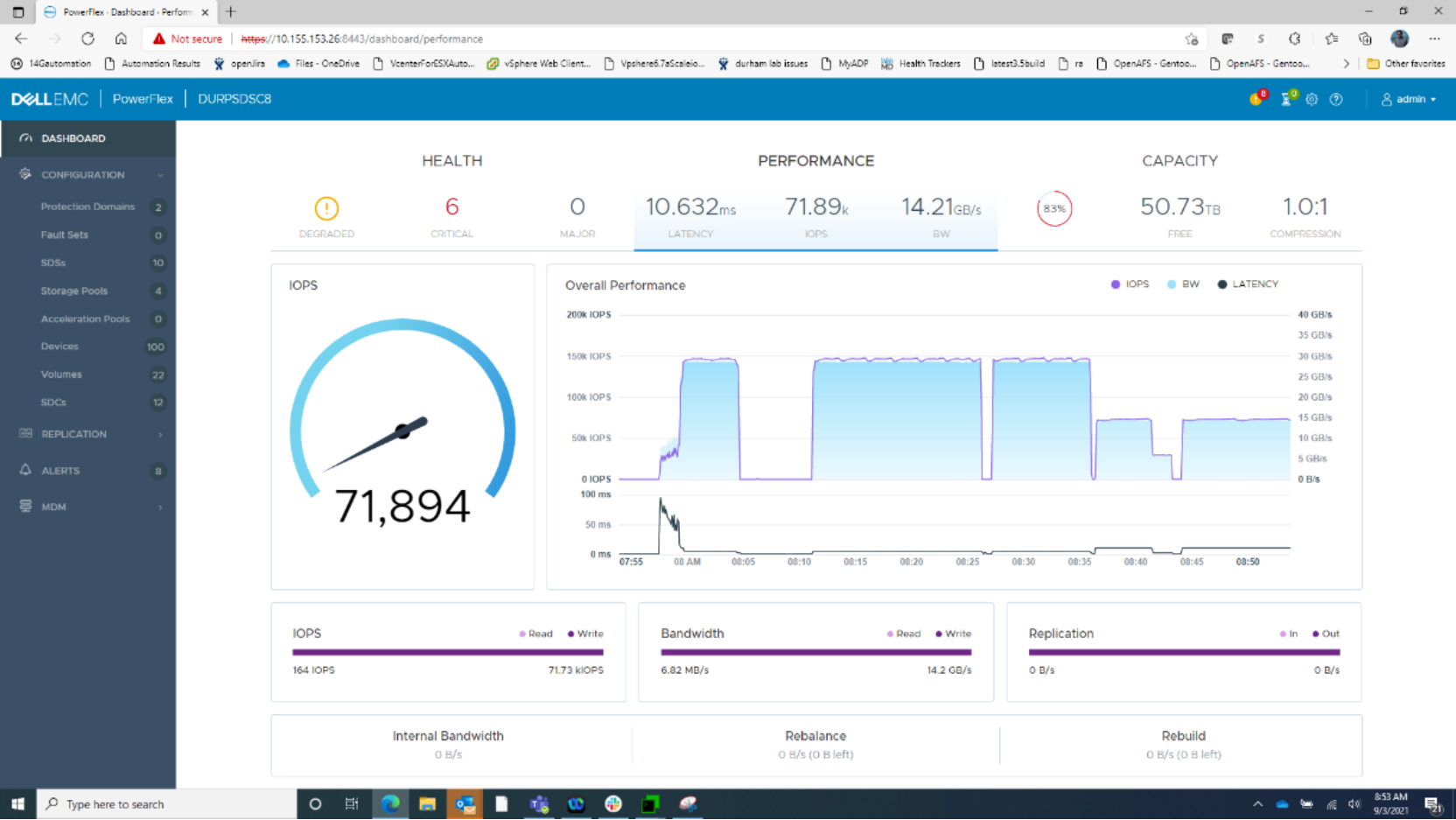

Note: The FIO tests showed a write bandwidth of 14 GB/s. The HBA limit on the storage nodes was reached in this test, and no bottleneck was observed on the PowerFlex storage side. Using NVMe nodes would eliminate this bottleneck, and moving to 100 GbE networking would increase performance up to a theoretical 20 GB/s per node.

The write bandwidth is half of the read bandwidth because PowerFlex mirrors writes to its volumes at the storage layer for data resiliency: every host write results in two writes in the storage layer.

Figure 6. FIO random write test on PowerFlex
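The halving of write bandwidth follows from simple mirroring arithmetic. The following Python sketch applies it to the two results. It assumes each host write becomes exactly two storage-layer writes, that reads are served from a single copy, and that the same storage-node ceiling applies in both directions; the helper names are hypothetical. Under those assumptions, 14 GB/s of host writes generates 28 GB/s of storage-layer writes, which matches the read result.

```python
# Minimal sketch of two-copy mirroring arithmetic.
# Assumptions: every host write is written twice in the storage layer, reads come from
# one copy, and one shared ceiling limits both directions. Helper names are hypothetical.

MIRROR_COPIES = 2


def backend_write_traffic(host_write_gb_s: float, copies: int = MIRROR_COPIES) -> float:
    """Storage-layer write traffic generated by a given host write rate."""
    return host_write_gb_s * copies


def host_write_ceiling(backend_limit_gb_s: float, copies: int = MIRROR_COPIES) -> float:
    """Highest host write rate a storage-layer limit allows once every write is mirrored."""
    return backend_limit_gb_s / copies


if __name__ == "__main__":
    print(backend_write_traffic(14.0))  # storage-layer traffic behind the 14 GB/s write result
    print(host_write_ceiling(28.0))     # write ceiling if 28 GB/s is the shared limit
```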

Greenplum IO characterization

gpcheckperf, a utility provided by VMware, is run from the Greenplum VMs to test disk I/O, memory, and network performance across the Greenplum cluster.

gpcheckperf -f /home/gpadmin/GBB.seghosts1-5 -S 80GB -r dsN -D -d /storage/data01 -d /storage/data02 -d /storage/data03 -d /storage/data04 -d /storage/data05 -d /storage/data06 -d /storage/data07 -d /storage/data08 -d /storage/data09 -d /storage/data10
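The command combines a host file (`-f`), a disk test size (`-S`), the tests to run (`-r`), per-host reporting (`-D`), and one `-d` per data directory. As we read the gpcheckperf reference, the `-r` letters select the disk I/O (`d`), stream (`s`), and parallel network (`N`) tests, and `-S` is the total size used across all `-d` directories. The following Python sketch rebuilds the command from a list of directories. The helper function is hypothetical and is not part of Greenplum; the letter meanings should be confirmed against the reference for the installed version.

```python
import shlex
from typing import Iterable, List

# -r letters as summarised from the gpcheckperf reference documentation (assumption:
# confirm against the documentation for the Greenplum version in use).
RUN_CODES = {
    "d": "disk I/O (dd)",
    "s": "memory bandwidth (stream)",
    "n": "network, serial",
    "N": "network, parallel",
    "M": "network, full matrix",
}


def gpcheckperf_argv(hostfile: str, data_dirs: Iterable[str], file_size: str = "80GB",
                     run_codes: str = "dsN", per_host: bool = True) -> List[str]:
    """Build a gpcheckperf command line (hypothetical helper, not part of Greenplum)."""
    unknown = set(run_codes) - set(RUN_CODES)
    if unknown:
        raise ValueError(f"unknown -r codes: {sorted(unknown)}")
    argv = ["gpcheckperf", "-f", hostfile, "-S", file_size, "-r", run_codes]
    if per_host:
        argv.append("-D")  # report per-host results for the disk I/O test
    for directory in data_dirs:
        argv += ["-d", directory]
    return argv


if __name__ == "__main__":
    dirs = [f"/storage/data{i:02d}" for i in range(1, 11)]
    argv = gpcheckperf_argv("/home/gpadmin/GBB.seghosts1-5", dirs)
    print(shlex.join(argv))
    for code in "dsN":
        print(code, "->", RUN_CODES[code])
```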

The results are as follows:

- Sum: 14,283.62 MB/sec

- Min: 1,098.35 MB/sec

- Max: 1,790.22 MB/sec

- Avg: 1,428.36 MB/sec

- Median: 1,432.24 MB/sec
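The summary figures (sum, min, max, avg, median) are standard descriptive statistics over individual throughput readings. The following Python sketch computes them. It assumes gpcheckperf derives them in the usual way. The readings in the usage example are hypothetical, not measured data, and the function name is also hypothetical. As a consistency check on the reported figures, 14,283.62 / 1,428.36 is almost exactly 10, which fits ten readings contributing to the summary.

```python
import statistics
from typing import Dict, Sequence


def summarize(values_mb_s: Sequence[float]) -> Dict[str, float]:
    """Return sum/min/max/avg/median for a list of throughput readings in MB/sec."""
    if not values_mb_s:
        raise ValueError("need at least one reading")
    return {
        "sum": sum(values_mb_s),
        "min": min(values_mb_s),
        "max": max(values_mb_s),
        "avg": statistics.mean(values_mb_s),
        "median": statistics.median(values_mb_s),
    }


if __name__ == "__main__":
    hypothetical = [1100.0, 1400.0, 1450.0, 1800.0]  # illustrative readings, not measured data
    for key, value in summarize(hypothetical).items():
        print(f"{key} = {value:,.2f} MB/sec")
    # Consistency check on the reported figures: sum / avg gives the number of readings.
    print(round(14283.62 / 1428.36))
```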

For detailed gpcheckperf results, see the Appendix.