NVIDIA vGPU brings the full benefit of NVIDIA hardware-accelerated graphics to virtualized solutions. This technology provides exceptional graphics performance for virtual desktops equivalent to local PCs when sharing a GPU among multiple users. It also enables aggregation of multiple GPUs assigned to a single VM to power the most demanding workloads.

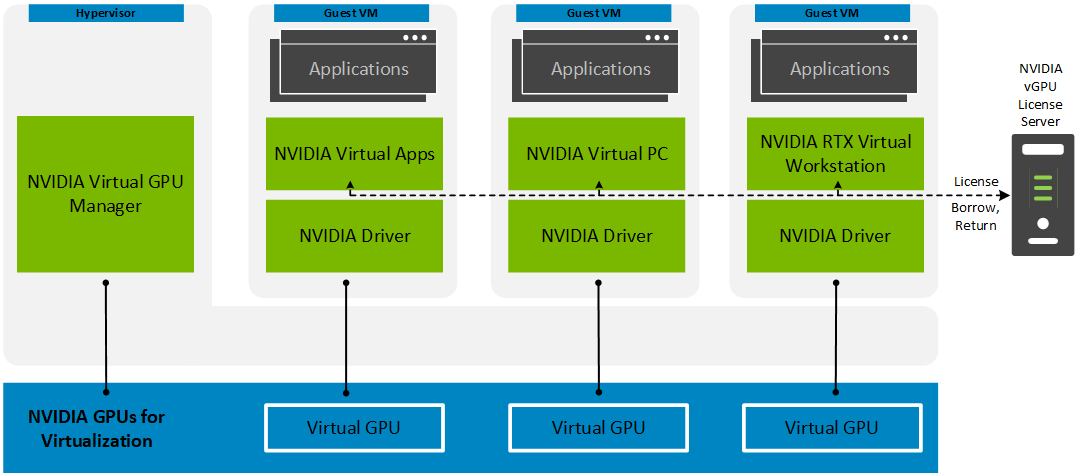

NVIDIA vGPU is the industry's most advanced technology for virtualizing true GPU hardware acceleration. It can share GPUs between multiple virtual desktops, or aggregate them and assign them to a single virtual desktop, without compromising the graphics experience. NVIDIA vGPU offers four software variants to enable graphics for different virtualization techniques:

- NVIDIA Virtual Applications (vApps): Designed to deliver graphics accelerated applications using Remote Desktop Session Host (RDSH)

- NVIDIA Virtual PC (vPC): Designed to provide full virtual desktops with up to dual 4K monitor support or single 5K monitor support

- NVIDIA RTX Virtual Workstation (vWS): Designed to provide workstation-grade performance in a virtual environment with support for up to four quad 4K or 5K monitors or up to two 8K monitors

- NVIDIA Virtual Compute Server (vCS): Designed to accelerate server virtualization so that the most compute-intensive workloads, such as artificial intelligence, deep learning, and data science, can be run in a VM

Dell EMC Ready Solutions for VDI can be configured with the following NVIDIA GPUs:

- NVIDIA M10 (Maxwell): Recommended for vApps or vPC environments. Each card is equipped with 32 GB of frame buffer, and the maximum available frame buffer per user is 8 GB. Dell Technologies recommends hosting a maximum of 32 Windows 10 users per card. While some vSAN Ready Node configurations support three cards, consider sizing with a maximum of two cards per node. Configure systems with less than 1 TB of memory when using the M10 card.

- NVIDIA T4 Tensor Core: NVIDIA's Turing architecture is available in the T4 GPU, which is considered the universal GPU for data center workflows. The T4 GPU is flexible enough to run knowledge worker VDI or professional graphics workloads. Add up to six GPU cards to your R740xd appliance to enable up to 96 GB of graphics frame buffer. For modernized data centers, use this card in off-peak hours to perform your inferencing workloads with NVIDIA vCS software.

- NVIDIA A40 (Ampere): NVIDIA A40 GPUs provide a leap in performance and multi-workload capabilities for the data center, combining superior professional graphics with powerful compute and AI acceleration to meet today’s design, creative, and scientific challenges. Driving the next generation of virtual workstations and server-based workloads, NVIDIA A40 brings features for ray-traced rendering, simulation, virtual production, and more to professionals any time, anywhere.

Note: The A40 is supported with vGPU only on vSphere 7.0 Update 2 and later.
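
The frame buffer figures above translate directly into a rough user-density estimate. The following Python sketch is illustrative only: the `GpuCard` type and the helper functions are hypothetical names, the T4 per-card value of 16 GB is derived from the 96 GB across six cards stated above, and the model assumes that every user on a card receives the same fixed-size vGPU profile. It ignores CPU, memory, and licensing limits, so treat the result as an upper bound rather than a sizing recommendation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuCard:
    """Hypothetical description of a GPU card for density estimates."""
    name: str
    frame_buffer_gb: int   # total frame buffer on the card
    max_profile_gb: int    # largest frame buffer a single user can receive


# Values taken from the text: M10 has 32 GB per card and 8 GB maximum per user.
M10 = GpuCard("M10", frame_buffer_gb=32, max_profile_gb=8)
# Assumption: 16 GB per T4 card, derived from 96 GB across six cards.
T4 = GpuCard("T4", frame_buffer_gb=16, max_profile_gb=16)


def users_per_card(card: GpuCard, profile_gb: int) -> int:
    """Upper bound on users per card when all users share one profile size."""
    if profile_gb <= 0 or profile_gb > card.max_profile_gb:
        raise ValueError(f"{profile_gb} GB is not a valid profile for {card.name}")
    return card.frame_buffer_gb // profile_gb


def users_per_node(card: GpuCard, profile_gb: int, cards_per_node: int) -> int:
    """Upper bound on vGPU users for a node with identical cards."""
    return users_per_card(card, profile_gb) * cards_per_node


if __name__ == "__main__":
    # Two M10 cards with a 1 GB profile: 32 users per card, 64 per node.
    print(users_per_node(M10, profile_gb=1, cards_per_node=2))  # 64
    # Six T4 cards give 6 * 16 = 96 GB of frame buffer in total.
    print(T4.frame_buffer_gb * 6)  # 96
```

With a 1 GB profile, the M10 estimate of 32 users per card matches the per-card maximum that Dell Technologies recommends above.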

Mixed GPU deployments

As a best practice, members of a VMware vSAN-based cluster must be identical or as homogeneous as possible in terms of hardware, software, and firmware. This configuration is primarily designed to reduce operational complexity and maintenance requirements. When deploying NVIDIA vGPU and the associated NVIDIA GPUs in a VxRail or vSAN Ready Node environment, you might want or require a mixed GPU environment because:

- Usage patterns and workloads that are better matched to different physical GPU types need to be addressed.

- A newer generation of NVIDIA GPUs that adds greater value to the overall solution has been released.

- A previously deployed cluster has GPUs that have reached their end-of-life and the cluster must be expanded to accommodate a growth in the user base.

If a mixed GPU configuration is unavoidable, consider the following information when you are planning and designing within a Citrix Virtual Apps and Desktops environment:

- Mixed physical GPUs are not supported within a single node. A single compute node can only contain a single physical GPU type.

- Each NVIDIA GPU model has its own set of NVIDIA vGPU profiles that are unique to that card model.

- Each chosen vGPU profile needs an associated Citrix Virtual Apps and Desktops gold image. This requirement adds administrative overhead. These gold images must be either maintained separately or copied from a single parent gold image, with the appropriate vGPU configuration then applied to each resulting vGPU-enabled gold image.

- Separate Citrix Virtual Apps and Desktops pools must be created and maintained for each related vGPU profile.

- Citrix Virtual Apps and Desktops automatically selects the hosts in a vSphere cluster whose NVIDIA graphics cards match the vGPU profile of the pool being deployed.

- Consider implementing multiple machine catalogs within a single delivery group so that users do not see the separate desktop gold images that are required to support the mixed GPU configuration.

- Consider redundancy and failover when expanding an existing VxRail or vSAN Ready Node cluster with a new GPU type:

  - To enable maintenance with minimal downtime, add two or more identically configured nodes.

  - To expand by a single node, which does not provide redundancy for that vGPU type, use only random non-persistent desktops (also known as pooled VDI desktops) to reduce the impact of an outage.

  - If four or more nodes are required, deploy them as a new cluster.
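
The planning rules above can be expressed as a small pre-deployment check. The following Python sketch is a hypothetical helper, not part of any Dell, Citrix, VMware, or NVIDIA tool: the function names, the inventory format (node name mapped to a list of installed GPU models), and the advice strings are all assumptions made for illustration. It also assumes that an expansion of two or three nodes joins the existing cluster, which is one reading of the guidance above.

```python
from typing import Dict, List


def mixed_gpu_nodes(inventory: Dict[str, List[str]]) -> List[str]:
    """Return nodes that contain more than one physical GPU type.

    Mixed physical GPUs within a single node are not supported, so any
    node returned here needs to be reconfigured.
    """
    return sorted(node for node, gpus in inventory.items() if len(set(gpus)) > 1)


def expansion_advice(new_nodes: int) -> str:
    """Map the number of nodes added with a new GPU type to the guidance."""
    if new_nodes < 1:
        raise ValueError("new_nodes must be at least 1")
    if new_nodes == 1:
        # A single node gives no redundancy for that vGPU type.
        return "single node: use only random non-persistent desktops"
    if new_nodes < 4:
        # Assumption: two or three identical nodes join the existing cluster.
        return "add identically configured nodes to the existing cluster"
    return "deploy the nodes as a new cluster"


if __name__ == "__main__":
    # Hypothetical inventory: node-2 mixes two GPU types and is flagged.
    inventory = {
        "node-1": ["T4", "T4"],
        "node-2": ["T4", "M10"],
        "node-3": ["A40"],
    }
    print(mixed_gpu_nodes(inventory))
    for count in (1, 2, 4):
        print(count, "->", expansion_advice(count))
```

A check like this would typically run against an inventory export before new hardware is ordered, so that unsupported node configurations are caught during design rather than at deployment.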

Consult your Dell Technologies account representatives to discuss a long-term hardware life-cycle plan that gets the best value out of your investment and solution.