
Step 7: Training and Evaluation

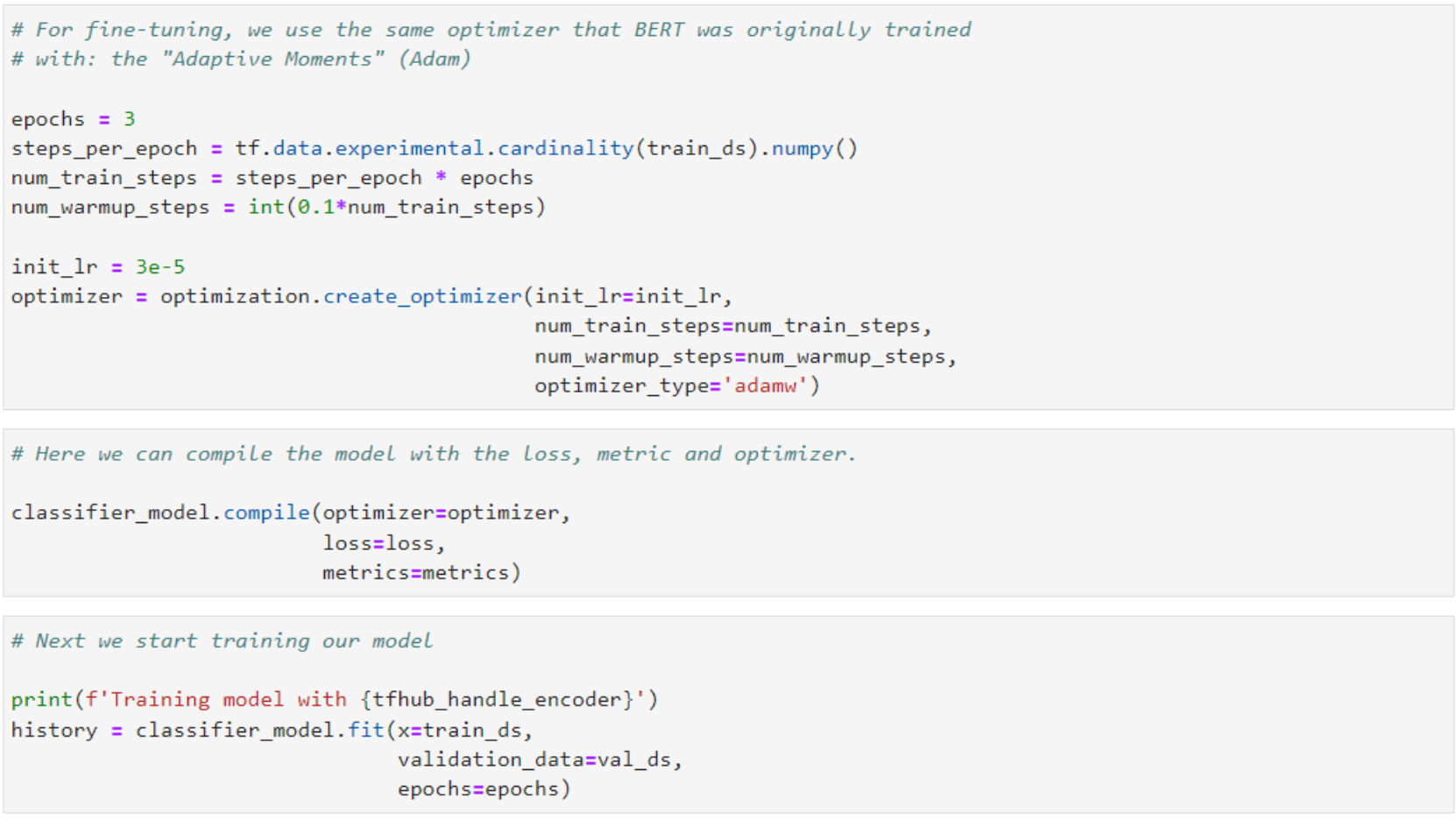

Next, we define the hyperparameters and train the model for three epochs.
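As a rough illustration of what these hyperparameters might look like, the sketch below derives the total number of training steps from the epoch count and the 625 steps per epoch reported in the training log of this step. The peak learning rate (`INIT_LR`), the warm-up fraction, and the linear warm-up and decay schedule are assumptions chosen for illustration; they are not stated in this paper.

```python
# Only EPOCHS and STEPS_PER_EPOCH come from the training log in this step.
EPOCHS = 3
STEPS_PER_EPOCH = 625

# Assumed values, for illustration only.
INIT_LR = 3e-5          # hypothetical peak learning rate
WARMUP_FRACTION = 0.1   # hypothetical share of steps spent warming up


def training_steps(epochs, steps_per_epoch, warmup_fraction):
    """Return (total optimizer steps, warm-up steps)."""
    total = epochs * steps_per_epoch
    warmup = int(warmup_fraction * total)
    return total, warmup


def learning_rate(step, total, warmup, init_lr):
    """Linear warm-up to init_lr, then linear decay to zero (assumed schedule)."""
    if step < warmup:
        return init_lr * step / warmup
    remaining = max(total - step, 0)
    return init_lr * remaining / (total - warmup)


NUM_TRAIN_STEPS, NUM_WARMUP_STEPS = training_steps(
    EPOCHS, STEPS_PER_EPOCH, WARMUP_FRACTION
)

if __name__ == "__main__":
    for step in (0, NUM_WARMUP_STEPS, NUM_TRAIN_STEPS // 2, NUM_TRAIN_STEPS):
        rate = learning_rate(step, NUM_TRAIN_STEPS, NUM_WARMUP_STEPS, INIT_LR)
        print(f"step {step:4d}: learning rate {rate:.2e}")
```

With three epochs of 625 steps each, the optimizer performs 1,875 updates in total. A warm-up phase of this kind is a common choice when fine-tuning pretrained models because it avoids large updates while the new classification layer is still randomly initialized.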

For fine-tuning, we use the same optimizer that BERT was originally trained with: Adaptive Moment Estimation (Adam). This optimizer minimizes the prediction loss and applies regularization through weight decay that is decoupled from the moment estimates, a variant known as AdamW.
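To make the "weight decay, not using moments" point concrete, here is a minimal sketch of one AdamW update for a single scalar parameter. It follows the textbook form of the algorithm; the optimizer used in the notebook may differ in details such as bias correction, and the default values for `lr` and `weight_decay` are assumptions for illustration.

```python
import math


def adamw_step(param, grad, m, v, t, lr=3e-5, beta1=0.9, beta2=0.999,
               eps=1e-8, weight_decay=0.01):
    """Apply one AdamW update to a scalar parameter.

    t is the 1-based step count. Returns the updated (param, m, v).
    """
    # The moment estimates are built from the raw gradient only;
    # the weight-decay term never enters them.
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad * grad

    # Bias correction for the zero-initialized moments.
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    adam_update = m_hat / (math.sqrt(v_hat) + eps)

    # Decoupled weight decay: shrink the parameter directly,
    # alongside the Adam step rather than through the gradient.
    param = param - lr * (adam_update + weight_decay * param)
    return param, m, v


if __name__ == "__main__":
    w, m, v = 1.0, 0.0, 0.0
    for t, g in enumerate([0.5, -0.2, 0.1], start=1):
        w, m, v = adamw_step(w, g, m, v, t)
        print(f"step {t}: w = {w:.8f}")
```

In plain Adam with L2 regularization, the penalty is added to the gradient and is therefore rescaled by the moment estimates. Here the decay acts on the weight itself, so every weight shrinks at the same relative rate regardless of its gradient history.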

Training model with https://tfhub.dev/tensorflow/small_bert/bert_en_uncased_L-2_H-128_A-2/1

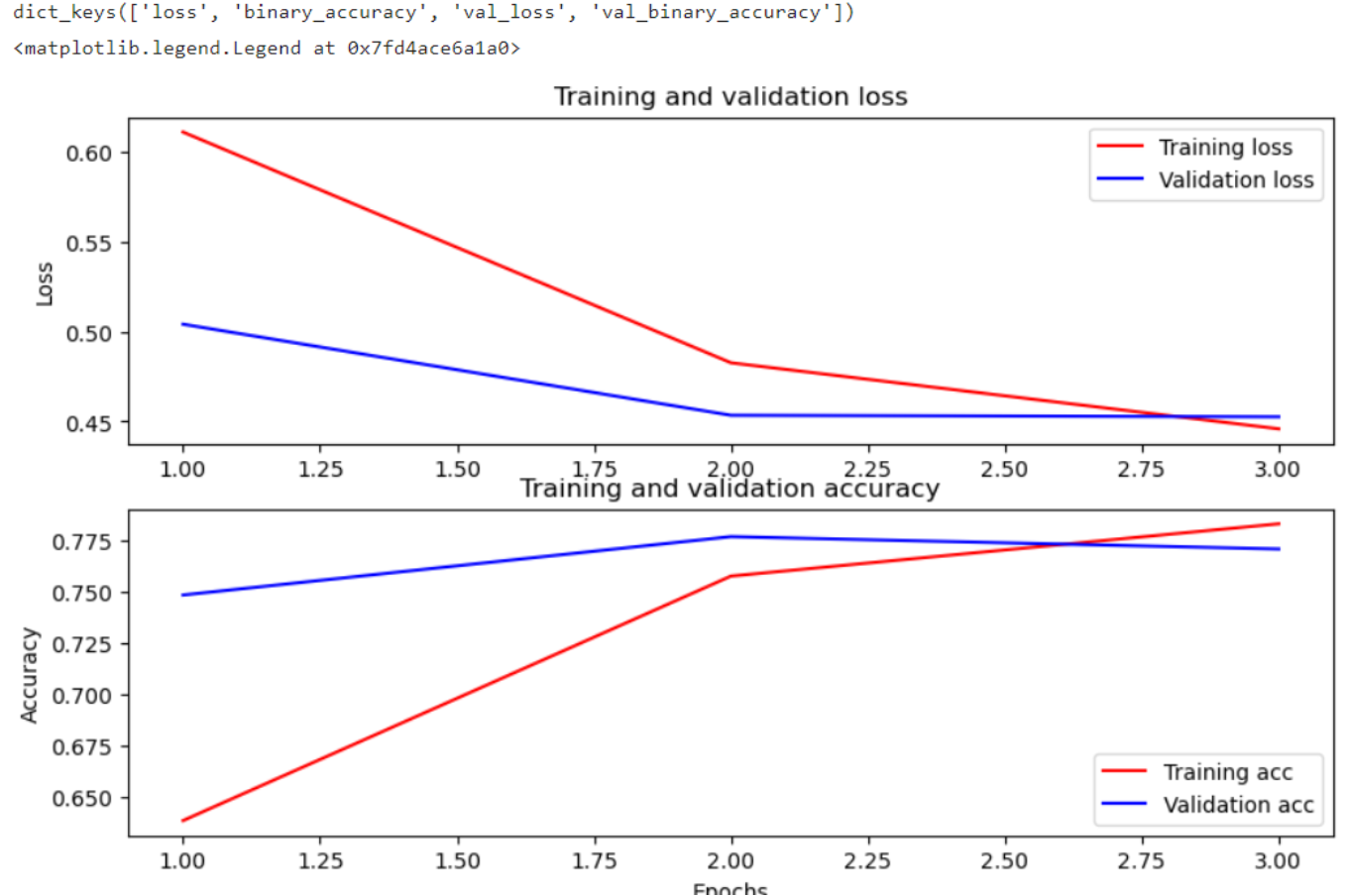

Epoch 1/3

625/625 [==============================] - 355s 558ms/step - loss: 0.6109 - binary_accuracy: 0.6386 - val_loss: 0.5041 - val_binary_accuracy: 0.7484

Epoch 2/3

625/625 [==============================] - 345s 552ms/step - loss: 0.4827 - binary_accuracy: 0.7576 - val_loss: 0.4535 - val_binary_accuracy: 0.7768

Epoch 3/3

625/625 [==============================] - 341s 546ms/step - loss: 0.4459 - binary_accuracy: 0.7831 - val_loss: 0.4527 - val_binary_accuracy: 0.7708

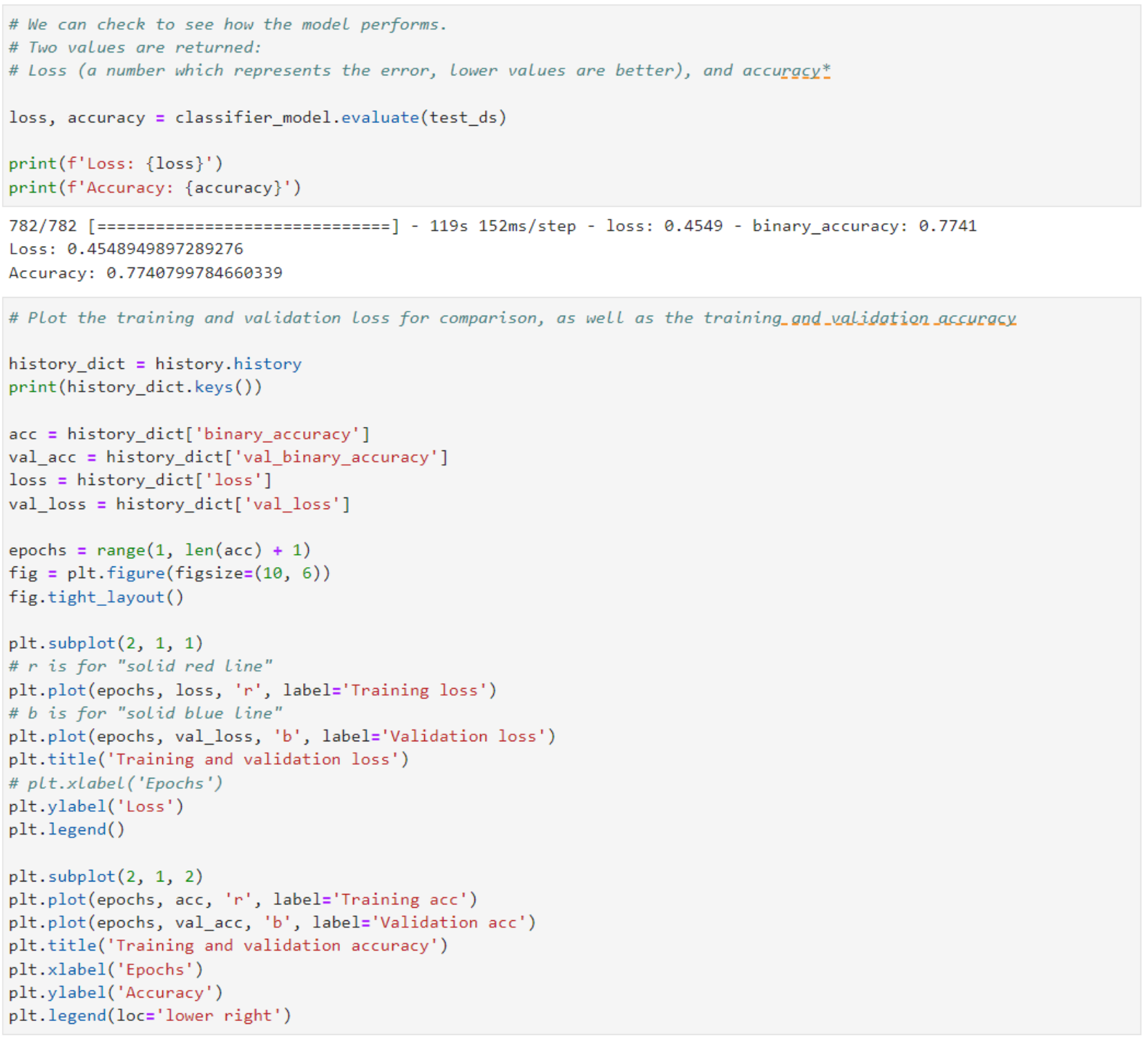

The evaluation output shows how the model performs. Two values are returned: loss (a number that represents the error, where lower values are better) and accuracy.
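The two values can be illustrated with a small, dependency-free sketch. It assumes that the loss is binary cross-entropy and that accuracy uses a 0.5 threshold on predicted probabilities; the log names the metric `binary_accuracy`, but the exact loss function is not stated in this section. The labels and probabilities below are made up for the example.

```python
import math


def binary_cross_entropy(labels, probs, eps=1e-7):
    """Mean binary cross-entropy between 0/1 labels and predicted probabilities."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(labels)


def binary_accuracy(labels, probs, threshold=0.5):
    """Fraction of examples whose thresholded prediction matches the label."""
    correct = sum(
        1 for y, p in zip(labels, probs) if (p > threshold) == (y == 1)
    )
    return correct / len(labels)


if __name__ == "__main__":
    # Hypothetical labels (1 = positive review) and model probabilities.
    labels = [1, 0, 1, 0]
    probs = [0.9, 0.2, 0.4, 0.6]
    print("loss:", round(binary_cross_entropy(labels, probs), 4))
    print("accuracy:", binary_accuracy(labels, probs))
```

A useful reference point is that a model predicting 0.5 for every example has a loss of ln 2, about 0.693, so a loss below that value indicates the model has learned something beyond guessing.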

In the above plot, the red lines represent the training loss and accuracy, and the blue lines represent the validation loss and accuracy. The training and validation curves converge as expected: the gap between them narrows with each epoch.
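A plot of this kind could be reproduced along the following lines. The per-epoch values are copied from the training log in this step; the `history` dictionary layout, the helper names, and the output file name are assumptions for illustration, and `matplotlib` is only imported when the plotting function is called.

```python
# Per-epoch values copied from the training log above.
history = {
    "loss": [0.6109, 0.4827, 0.4459],
    "val_loss": [0.5041, 0.4535, 0.4527],
    "binary_accuracy": [0.6386, 0.7576, 0.7831],
    "val_binary_accuracy": [0.7484, 0.7768, 0.7708],
}


def validation_gap(history, metric):
    """Validation value minus training value for each epoch."""
    return [
        round(val - train, 4)
        for train, val in zip(history[metric], history["val_" + metric])
    ]


def plot_history(history, path="training_curves.png"):
    """Save loss and accuracy curves (red = training, blue = validation)."""
    import matplotlib
    matplotlib.use("Agg")  # render to a file; no display required
    import matplotlib.pyplot as plt

    epochs = range(1, len(history["loss"]) + 1)
    fig, (ax_loss, ax_acc) = plt.subplots(2, 1, figsize=(8, 6), sharex=True)

    ax_loss.plot(epochs, history["loss"], "r", label="Training loss")
    ax_loss.plot(epochs, history["val_loss"], "b", label="Validation loss")
    ax_loss.set_ylabel("Loss")
    ax_loss.legend()

    ax_acc.plot(epochs, history["binary_accuracy"], "r", label="Training accuracy")
    ax_acc.plot(epochs, history["val_binary_accuracy"], "b", label="Validation accuracy")
    ax_acc.set_xlabel("Epochs")
    ax_acc.set_ylabel("Accuracy")
    ax_acc.legend()

    fig.tight_layout()
    fig.savefig(path)
    plt.close(fig)


if __name__ == "__main__":
    print("loss gap per epoch:", validation_gap(history, "loss"))
    print("accuracy gap per epoch:", validation_gap(history, "binary_accuracy"))
    plot_history(history)
```

The `validation_gap` helper gives a numeric view of the same convergence: the absolute difference between validation and training values shrinks in every epoch for both loss and accuracy.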