
Architecture overview


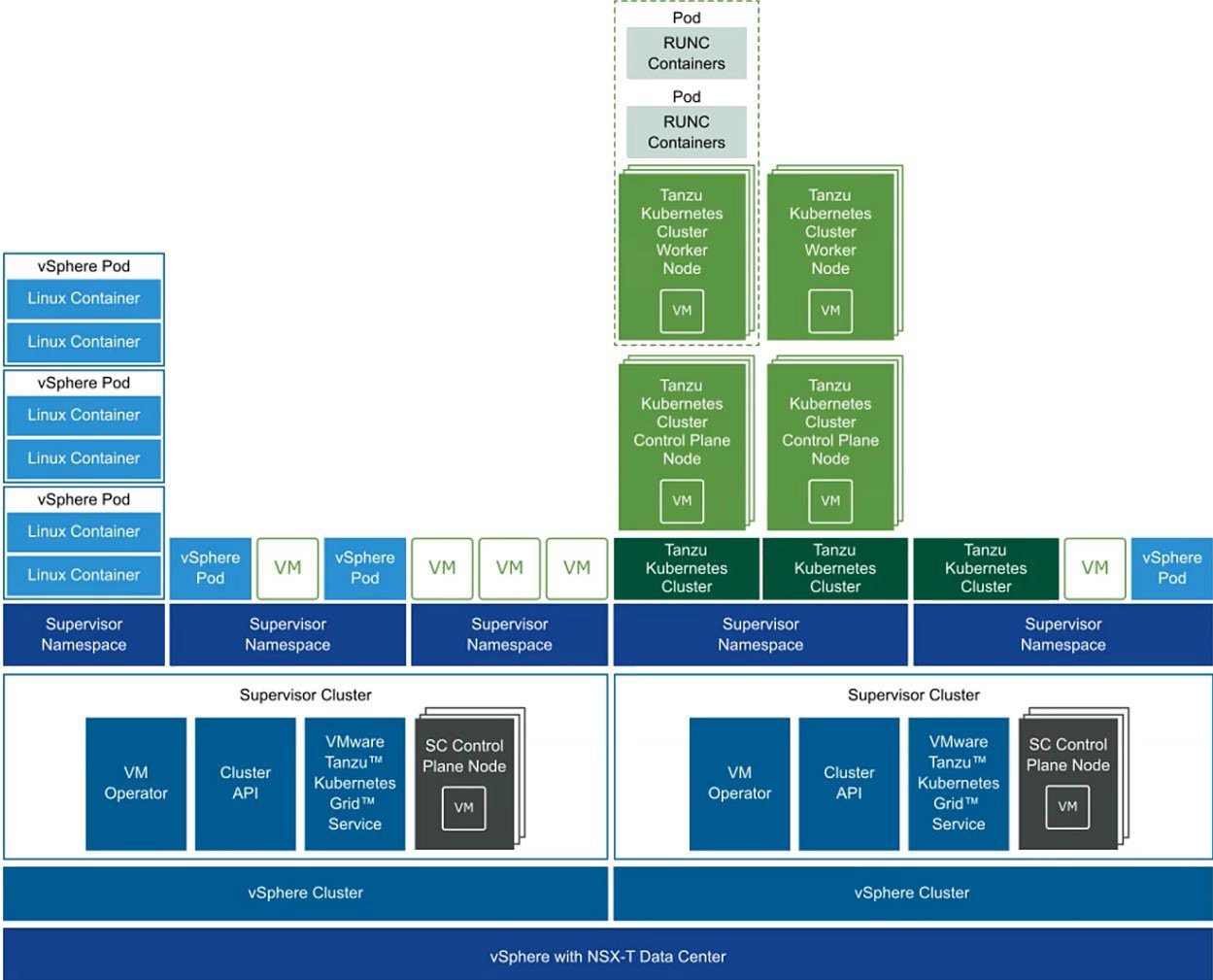

PowerProtect Data Manager 19.7 introduces the ability to protect Tanzu Kubernetes cluster workloads. VMware vSphere 7U1 re-architects vSphere with native Kubernetes as its control plane. A Tanzu Kubernetes Grid (TKG) cluster is a Kubernetes cluster that runs inside virtual machines on the supervisor layer, which allows Kubernetes to run consistently. It is enabled through the TKG service for VMware vSphere and is upstream-compliant with open-source Kubernetes. A TKG cluster is also called a guest cluster: a Kubernetes cluster that runs on VMs and consists of its control plane VM, management plane VM, worker nodes, pods, and containers.

PowerProtect Data Manager protects Kubernetes workloads and ensures that the data is consistent and highly available. PowerProtect Data Manager is a virtual appliance that is deployed on an ESXi host from an OVA. It is integrated with PowerProtect Data Domain Virtual Edition (DDVE), which serves as the protection target where backups are stored.

Once the cluster is discovered, it is added as a PowerProtect Data Manager asset source, and its namespaces become available as assets to protect. Backup data is compressed and deduplicated at the source and then sent to the target storage. During the discovery process, PowerProtect Data Manager creates the following two namespaces in the cluster:

- Velero-ppdm: Contains a Velero pod that backs up metadata and stages it to the target storage in a bare-metal environment. In a VMware Cloud Native Storage (CNS) environment, it backs up both Persistent Volume Claims (PVCs) and metadata.

- PowerProtect: Contains a PowerProtect controller pod that drives Persistent Volume Claim (PVC) snapshots and backups and pushes the backups to the target storage using cProxy pods that are spawned as needed.
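After discovery, a quick sanity check is to confirm that both namespaces exist in the cluster. The following is a minimal sketch, not part of PowerProtect Data Manager. It assumes that the namespaces are named `velero-ppdm` and `powerprotect` (the lowercase forms of the names above, since Kubernetes namespace names are lowercase) and that the list of namespace names was collected separately, for example from the output of `kubectl get namespaces -o name`. The function name `missing_ppdm_namespaces` is hypothetical.

```python
from typing import Iterable, List

# Assumption: lowercase forms of the two namespaces described above.
EXPECTED_NAMESPACES = ("velero-ppdm", "powerprotect")


def missing_ppdm_namespaces(found: Iterable[str]) -> List[str]:
    """Return the expected namespaces that are absent from `found`.

    Accepts bare names ("powerprotect") or the "namespace/<name>"
    form printed by `kubectl get namespaces -o name`.
    """
    # Strip whitespace and any "namespace/" resource prefix.
    normalized = {name.strip().split("/", 1)[-1] for name in found}
    return [ns for ns in EXPECTED_NAMESPACES if ns not in normalized]
```

An empty result indicates that both namespaces are present; any returned names suggest that discovery has not completed or that the actual namespace names differ from the assumed ones.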

Note: The pods running in the guest clusters do not have direct access to the supervisor cluster. Each persistent volume provisioned by vSphere CSI on the guest cluster creates a First Class Disk (FCD) in the supervisor space, which is mapped to the guest cluster through the paravirtual CSI driver. PowerProtect Data Manager uses internal APIs to protect paravirtual volumes.

In the Tanzu Kubernetes cluster architecture, a vSphere cluster (with ESXi hosts as worker nodes) hosts supervisor clusters and guest clusters (TKG clusters). The guest clusters have their own control plane VMs, management plane, worker nodes, networking, pods, and namespaces, and are isolated from each other. Supervisor clusters and guest clusters communicate through their API servers.

The cProxy of PowerProtect Data Manager does not have access to the pods running on the guest clusters because it is external to the clusters; therefore, PowerProtect Data Manager does not use cProxy for the backup and restore process. Instead, PowerProtect Data Manager uses a vProxy-based protection solution. The vProxy agent creates a snapshot of VM data directly from the datastore, and the snapshot is moved directly to the target storage where the backups are stored.

When the backup job is triggered, CNDM communicates with VM Direct to find and reserve a vProxy. The vProxy is created at the vCenter specifically for TKG clusters. Once the vProxy is reserved, CNDM initiates communication with the API server of the guest cluster using the Velero operator. The API server then communicates with the PowerProtect controller (in the PowerProtect namespace), where the backup job and Velero backup custom resources are created. The controller in turn communicates with the Velero PodVM.

The Velero PodVM is responsible for communicating with the API server of the supervisor cluster, which in turn talks to the MasterVM of the supervisor cluster. The MasterVM takes an FCD snapshot of the pods using the backup driver component. Once this task is completed, the PowerProtect controller requests that the vProxy VM move the data from the FCD to the backup target. The Velero PodVM has two main components: the vSphere plug-in, which communicates with the supervisor cluster, and the Data Domain object store plug-in, which communicates with the backup target.
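The backup sequence described above can be summarized as an ordered list of hand-offs between components. The following is an illustrative sketch only: it models the flow as plain data and does not call any PowerProtect Data Manager, Velero, or vSphere API. The `Step` class, the `BACKUP_FLOW` list, and the `describe` function are hypothetical names introduced for this example, and the assignment of the PowerProtect controller as the component that contacts the Velero PodVM is an interpretation of the text.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Step:
    source: str  # component that initiates the hand-off
    target: str  # component that receives it
    action: str  # what is requested or performed


# Ordered hand-offs, transcribed from the description above.
BACKUP_FLOW: List[Step] = [
    Step("CNDM", "VM Direct", "find and reserve a vProxy"),
    Step("CNDM", "guest cluster API server",
         "initiate communication using the Velero operator"),
    Step("guest cluster API server", "PowerProtect controller",
         "create backup job and Velero backup custom resources"),
    Step("PowerProtect controller", "Velero PodVM",
         "hand off the backup request"),
    Step("Velero PodVM", "supervisor cluster API server",
         "forward the request through the vSphere plug-in"),
    Step("supervisor cluster API server", "MasterVM",
         "pass the request to the supervisor control plane"),
    Step("MasterVM", "FCD",
         "take an FCD snapshot using the backup driver"),
    Step("PowerProtect controller", "vProxy VM",
         "move data from the FCD to the backup target"),
]


def describe(flow: List[Step]) -> List[str]:
    """Render each hand-off as a numbered, human-readable line."""
    return [
        f"{i}. {s.source} -> {s.target}: {s.action}"
        for i, s in enumerate(flow, start=1)
    ]
```

Calling `print("\n".join(describe(BACKUP_FLOW)))` prints the sequence one hand-off per line, which can help when tracing where a backup job stalls.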