BOSS AI Platform On-Premises Deployment Requirements

The BOSS AI platform can be deployed onto any size of TKG cluster. The most commonly deployed cluster configurations are listed below:

| Cluster category | Number of workers (physical servers) | CPU | RAM (GB) | Local storage (GB) |
| --- | --- | --- | --- | --- |
| Small | 3 | 8 (16 Cores Min) | 32 | 300 |
| Medium | 6 | 16 (16 Cores Min) | 64 | 300 |
| Large | 9 | 24 (16 Cores Min) | 96 | 300 |

Predeployment requirements

- Load Balancer

- Persistent Storage

  - Backed by a resilient, fault-tolerant datastore.

Load Balancer

Every pod on a cluster requires a unique cluster-wide IP address, and pods need a suitable way to communicate with the outside world.

BOSS AI requires that the following TCP ports are free and open for cluster ingress:

- 80, 443

- 5671 (if used in a federated environment)
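The port list above can be turned into a quick reachability check. The following is a minimal Python sketch, not a BOSS AI tool: it attempts a TCP connection to each listed port on a load balancer address. The helper names (`ports_to_check`, `port_is_open`, `check_ingress`) and the hostname in the usage comment are hypothetical. A successful connection only shows that something is listening and that the network path is open; it does not validate BOSS AI itself.

```python
import socket

# Ports taken from the list above.
REQUIRED_PORTS = [80, 443]
FEDERATED_PORTS = [5671]  # only needed in a federated environment


def ports_to_check(federated: bool = False) -> list[int]:
    """Return the ingress ports to probe for this deployment type."""
    return REQUIRED_PORTS + (FEDERATED_PORTS if federated else [])


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


def check_ingress(host: str, federated: bool = False,
                  timeout: float = 3.0) -> dict[int, bool]:
    """Probe every required ingress port and report which ones accept connections."""
    return {port: port_is_open(host, port, timeout)
            for port in ports_to_check(federated)}


# Example usage (hypothetical hostname, replace with your load balancer address):
#   for port, ok in check_ingress("boss-ingress.example.internal", federated=True).items():
#       print(f"TCP {port}: {'open' if ok else 'unreachable'}")
```

Run a check like this from a machine outside the cluster so that it exercises the same path as real ingress traffic.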

Persistent Storage

BOSS AI recommends that at least 1 TB of persistent storage be available before deployment. The Dell VxRail cluster was configured with vSAN datastores available to TKG as persistent storage.

- Storage requirements are use-case dependent.

Dell VxRail includes Container Storage Interface (CSI) support to enable persistent storage consumption with VMware TKG. Other storage options, such as PowerScale, are also supported.
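On Kubernetes, workloads typically consume CSI-backed storage through a PersistentVolumeClaim. The sketch below builds such a claim as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The claim name, storage class name, and size are hypothetical placeholders, not values defined by BOSS AI or VxRail; use the storage class that your TKG cluster actually exposes.

```python
import json


def build_pvc(name: str, storage_class: str, size: str) -> dict:
    """Build a Kubernetes PersistentVolumeClaim manifest as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # ReadWriteOnce: the volume is mounted read-write by a single node.
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


# All three arguments are illustrative assumptions.
manifest = build_pvc(
    name="example-data",
    storage_class="example-vsan-storage-class",
    size="100Gi",
)
print(json.dumps(manifest, indent=2))
```

Because storage requirements are use-case dependent, the requested size is left as a parameter rather than a fixed value.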

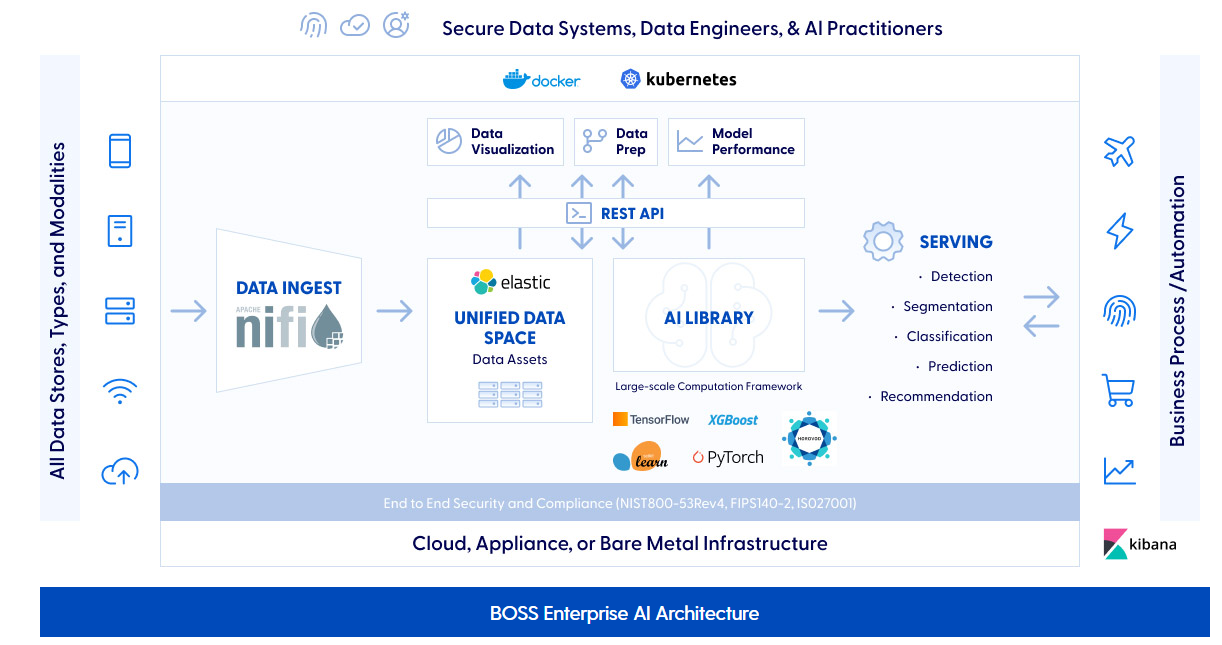

BOSS AI Architecture

The following figure shows a high-level overview of the BOSS AI system architecture:

Summary

BOSS AI Enterprise is deployed as a container-based architecture.

Core services such as security, load balancing, data ingestion, and monitoring are deployed as container pods, enabling cloud and on-premises deployments.

Common AI functions such as Extract, Transform, and Load capabilities are exposed to the AI/ML practitioner, with low or "No Code" options available.

- Several data ingestion options are available, such as local file uploads or automated workflows provided by Apache NiFi.

- Traditional data querying can be done using the Elasticsearch domain-specific language (DSL) syntax.

- Simplified visual querying can be done with drag-and-drop interfaces for less technical users.

- Data transformation is supported through an intuitive drag-and-drop "transformation tree" builder in the BOSS AI GUI.

- Feature selection is simplified by BOSS AI's no-code solution, which automatically visualizes feature selection and applies throughout the entire ML development workflow.
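To illustrate the Elasticsearch DSL option mentioned in the list above, the following Python sketch builds a standard Elasticsearch `bool` query combining a full-text `match` clause with a `range` filter, and prints it as JSON. The field names (`message`, `@timestamp`) and the search term are hypothetical, and how a query body is submitted within BOSS AI is not covered here; this only shows the general shape of the DSL.

```python
import json


def build_query(text_field: str, text: str, time_field: str,
                since: str, size: int = 10) -> dict:
    """Build an Elasticsearch query DSL body: full-text match plus a time filter."""
    return {
        "size": size,  # maximum number of hits to return
        "query": {
            "bool": {
                # "must" clauses contribute to relevance scoring.
                "must": [{"match": {text_field: text}}],
                # "filter" clauses restrict results without affecting the score.
                "filter": [{"range": {time_field: {"gte": since}}}],
            }
        },
    }


# Hypothetical fields and values, for illustration only.
body = build_query("message", "connection timeout", "@timestamp", "now-1d/d")
print(json.dumps(body, indent=2))
```

Placing the time restriction under `filter` rather than `must` keeps it out of relevance scoring, which is the usual choice for exact or range conditions.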

For more details on BOSS AI features, services, and integrations, see BOSS AI Documentation.

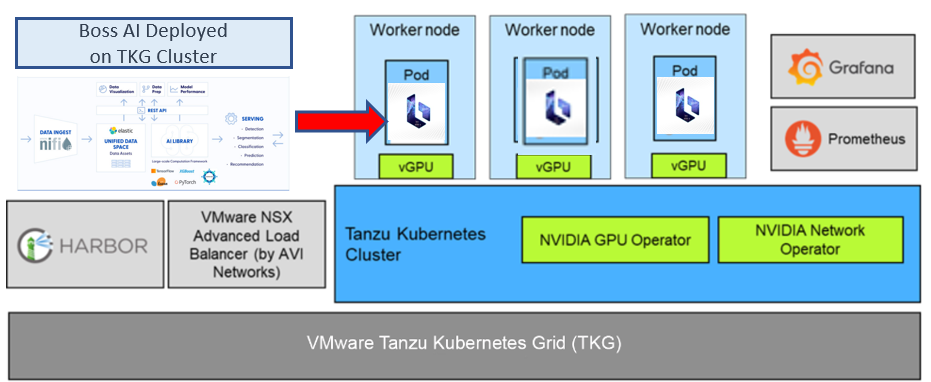

BOSS AI deployment on TKG

BOSS AI Enterprise is deployed as containerized pods to worker nodes within the TKG cluster on the CV platform.

The addition of container support makes it possible to manage the high-availability and scale-out requirements of CV and VMS applications for both VM and container-based workloads on VxRail solutions.