Solution concepts

Overview

This section discusses the concepts involved in building the solution. The following list includes the hardware and software layers in the solution stack.

- Dell APEX Cloud Platform for Red Hat OpenShift

- Red Hat OpenShift Container Platform

- Red Hat OpenShift AI

- Digital assistant related components (Llama 2, LangChain, Redis, Gradio, Caikit, and Text Generation Inference [TGI])

Dell APEX Cloud Platform

Dell APEX is a portfolio of cloud services based on a cloud consumption model that delivers IT as a service, with no upfront costs and pay-as-you-go subscriptions. Dell APEX Cloud Platforms deliver innovation, automation, and integration across your choice of cloud ecosystems in multicloud environments.

Dell APEX Cloud Platforms are a portfolio of fully integrated, turnkey systems that combine Dell infrastructure, software, and cloud operating stacks. They deliver consistent multicloud operations by extending cloud operating models to on-premises and edge environments.

Dell APEX Cloud Platform provides an on-premises private cloud environment with consistent full-stack integration for the most widely deployed cloud ecosystem software including Microsoft Azure, Red Hat OpenShift, and VMware vSphere.

For more information, see the Dell APEX Cloud Platforms webpage.

Dell APEX Cloud Platform for Red Hat OpenShift

Dell APEX Cloud Platform for Red Hat OpenShift is designed collaboratively with Red Hat to optimize and extend OpenShift deployments on-premises with a seamless operational experience.

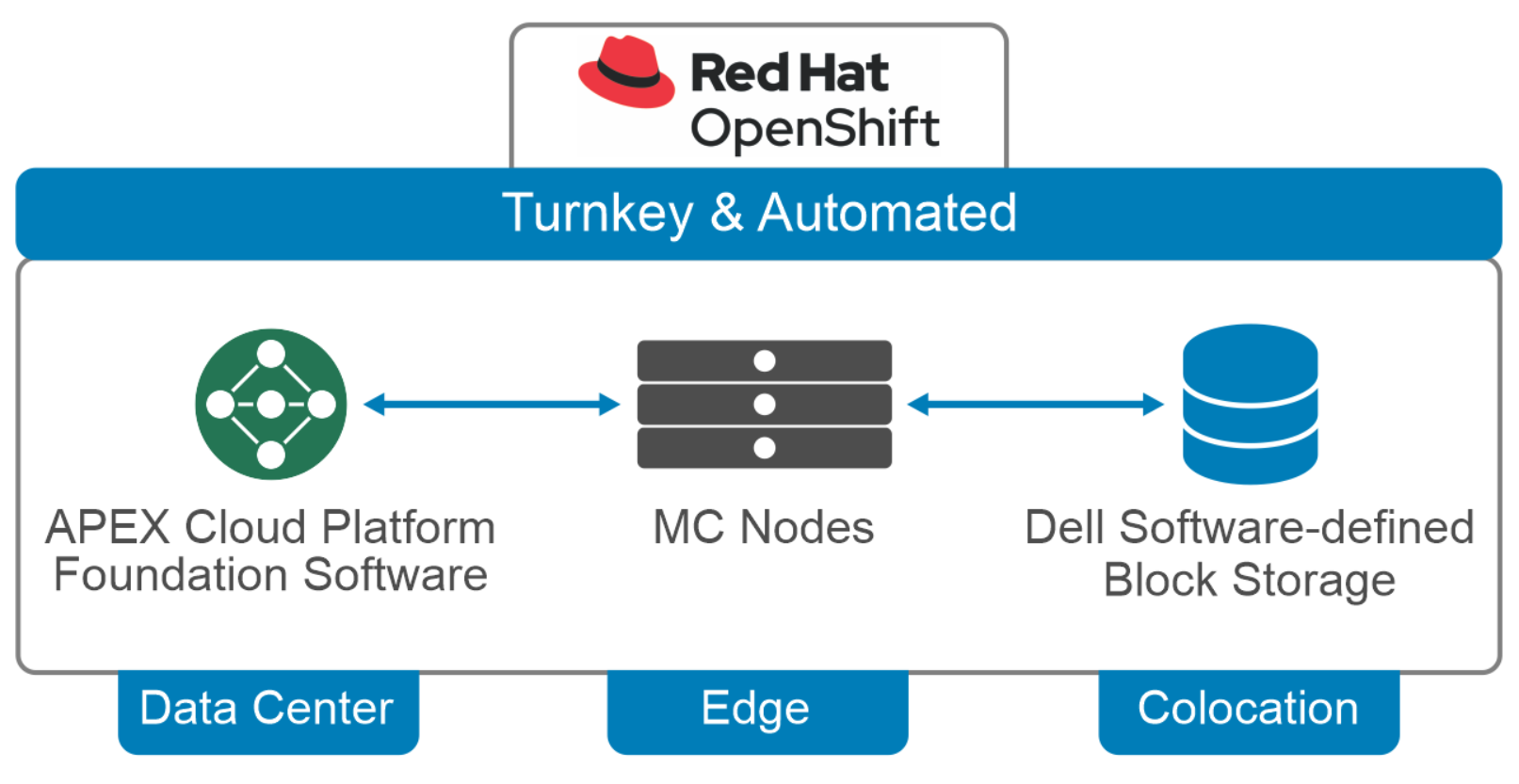

Figure 1. Dell APEX Cloud Platform for Red Hat OpenShift high-level physical architecture

This turnkey platform provides:

- Deep integrations and intelligent automation between layers of Dell and OpenShift technology stacks, accelerating time-to-value and eliminating the complexity of management using different tools in disparate portals.

- Simplified, integrated management using the OpenShift Web Console.

- A bare metal architecture that delivers the performance, predictability, and linear scalability needed to meet even the most stringent SLAs.

Additional benefits of Dell APEX Cloud Platform for Red Hat OpenShift include:

- Accelerating time to value with a purpose-built platform for OpenShift.

- Seamlessly extending applications and data across the IT landscape with common software-defined storage (SDS) and advanced data services everywhere.

- Enhanced control with multilayer security and compliance capabilities.

The Dell APEX Cloud Platform for Red Hat OpenShift uses separate storage nodes to provide persistent block storage for the compute cluster. This separation enables the compute and storage nodes to scale independently. The storage cluster is based on Dell PowerFlex software-defined storage architecture. This disaggregation allows customers with existing PowerFlex storage to deploy only the compute cluster (in a brownfield environment). Customers can opt for full integration in which the OpenShift Web Console is used to control both the compute and storage clusters.

The Dell APEX Cloud Platform for Red Hat OpenShift introduces a new level of integration for running OpenShift on bare metal servers. Until now, all infrastructure management has been through separate OEM tooling and is managed separately from OpenShift. This dissociation requires IT staff with unique expertise to maintain the system, increasing operational costs.

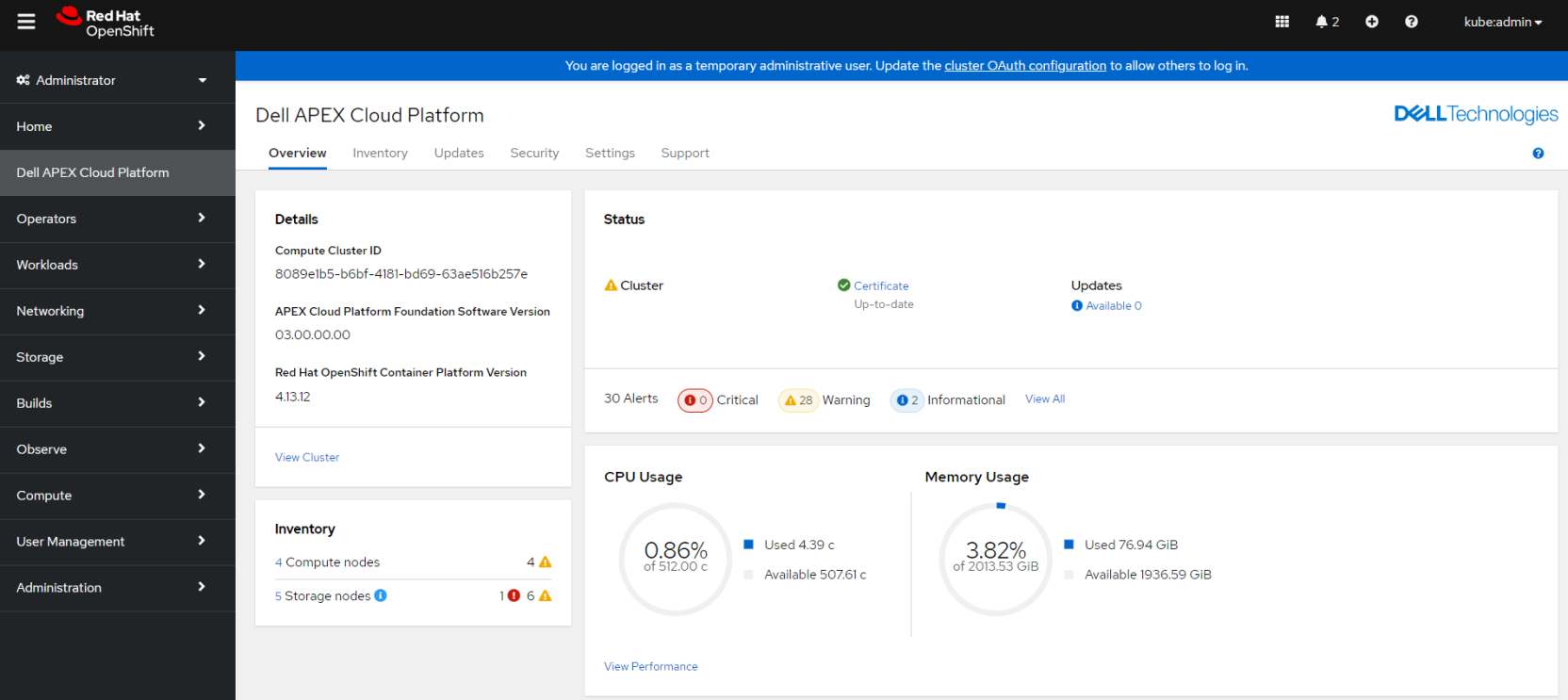

The Dell APEX Cloud Platform Foundation Software mitigates this complexity by integrating the infrastructure management into the OpenShift Web Console. This integration enables administrators to update the hardware using the same workflow that updates the OpenShift software. It also enables OpenShift administrators to manage the infrastructure using the same management tools they use to control the cluster and the applications that run on it.

Figure 3. Dell APEX Cloud Platform Foundation Software integration in OpenShift Web Console

Ensuring that sensitive data, such as Personally Identifiable Information (PII), is securely stored at every stage of its life cycle is an important task for any organization. To simplify this process, the National Institute of Standards and Technology (NIST) developed standards, regulations, and best practices to protect data. These standards protect government data and ensure that those working with the government comply with certain safety standards before they have access to data.

The Federal Information Processing Standards (FIPS) are one such set of standards that helps organizations simplify the process of protecting data.

Dell APEX Cloud Platform for Red Hat OpenShift is designed with FIPS-validated cryptography based on the RSA BSAFE Crypto Module, which provides support for various FIPS standards. For more information, see General FAQ for OpenShift and FIPS compliance.

Note: FIPS is enabled by default to provide a secure environment. This default setting is important to consider while developing your digital assistant applications, as some of the LangChain modules may not be FIPS-compliant.
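As a rough sketch of how application code might account for this default, the following checks the standard Linux kernel flag for FIPS mode and derives a hash with SHA-256, a FIPS-approved algorithm, rather than MD5, which is typically rejected when FIPS mode is enforced. The helper names `fips_enabled` and `cache_key` are hypothetical and are not part of LangChain or of this solution.

```python
import hashlib
from pathlib import Path

# Standard Linux location of the kernel FIPS flag ("1" means enabled).
FIPS_FLAG = Path("/proc/sys/crypto/fips_enabled")


def fips_enabled(flag_path: Path = FIPS_FLAG) -> bool:
    """Return True when the flag file reports FIPS mode, False if it is absent or unreadable."""
    try:
        return flag_path.read_text().strip() == "1"
    except OSError:
        return False


def cache_key(text: str) -> str:
    """Hypothetical helper: build a cache key with SHA-256 instead of MD5."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()
```

A check like `fips_enabled()` can be logged at startup so that a failure inside a third-party module is easier to attribute to the FIPS setting.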

Red Hat OpenShift

Red Hat OpenShift Container Platform is a consistent hybrid cloud foundation for containerized applications, powered by Kubernetes. Developers and DevOps engineers using Red Hat OpenShift Container Platform can quickly build, modernize, deploy, run, and manage applications anywhere, securely, and at scale.

Red Hat OpenShift Container Platform is powered by open-source technologies and offers flexible deployment options across physical, virtual, private cloud, public cloud, and edge environments. A Red Hat OpenShift Container Platform cluster consists of one or more control-plane nodes and a set of worker nodes.

For more information about Red Hat OpenShift Container Platform, see the Red Hat OpenShift web page.

Red Hat OpenShift AI

AI/ML has quickly become crucial for businesses and organizations to remain competitive. However, deploying AI applications can be complicated due to a lack of integration among rapidly evolving tools. Popular cloud platforms offer attractive tools and scalability but often lock users in, limiting architectural and deployment options.

Red Hat OpenShift AI, formerly known as Red Hat OpenShift Data Science (RHODS), is a platform that unlocks the power of AI for developers, data engineers, and data scientists in Red Hat OpenShift. It is installed through a Kubernetes operator and provides a dedicated development environment, called a workbench, that automatically manages storage and integrates different tools. Users can rapidly develop, train, test, and deploy machine learning models on-premises or in the public cloud.

Red Hat OpenShift AI allows data scientists and developers to focus on their data modeling and application development without waiting for infrastructure provisioning. The ML models developed using Red Hat OpenShift AI are portable and can be deployed in production on containers, on-premises, at the edge, or in the public cloud.

For more information about Red Hat OpenShift AI, see the Red Hat OpenShift AI web page.

Llama 2

Llama 2 is an open-source pre-trained LLM that is freely available for research and commercial use. Llama 2 was trained on 40 percent more data than its predecessor, Llama 1, and has twice the context length (4096 compared to 2048 tokens). This means that Llama 2 can better understand context and generate more relevant and accurate information.
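The context length bounds the prompt and the generated output together, so an application has to budget how much retrieved text it places in the prompt. The following is a minimal sketch of that budgeting. The function names are hypothetical, and the token count is approximated by splitting on whitespace, which is an assumption; a real deployment would count tokens with the model's own tokenizer.

```python
CONTEXT_LENGTH = 4096  # Llama 2 context window in tokens, as stated above


def approx_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())


def fit_passages(question, passages, max_new_tokens=512, context_length=CONTEXT_LENGTH):
    """Keep passages, in order, while the prompt plus reserved output fits the window."""
    budget = context_length - max_new_tokens - approx_tokens(question)
    kept = []
    for passage in passages:
        cost = approx_tokens(passage)
        if cost > budget:
            break  # the next passage would overflow the context window
        kept.append(passage)
        budget -= cost
    return kept
```

With the same inputs, passing `context_length=2048` keeps fewer passages, which illustrates the practical effect of the shorter window.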

Llama 2 can be used to build digital assistants for consumer and enterprise use, and for language translation, research, code generation, and various AI-powered tools.

For more information about Llama 2, see the Meta web page.

LangChain

LangChain is an open-source framework for developing LLM-powered applications. It simplifies the process of building these applications by providing an abstracted standard interface that makes it easier to interact with different language models, including Llama 2.

LangChain's plug-and-play features allow users to combine different data sources, LLMs, and UI tools without having to rewrite code, and to build powerful NLP applications with minimal effort.

LangChain provides a variety of tools and APIs to connect language models to other data sources, interact with their environment, and build complex applications. LangChain does not include a language model of its own; developers pair it with a model such as Llama 2. LangChain can be used to build digital assistants that answer questions over domain-specific information.
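The idea of a standard interface over interchangeable models can be sketched in plain Python. This sketch deliberately does not use LangChain's own classes; `TextModel`, `EchoModel`, and `answer` are hypothetical names that only illustrate the pattern of retrieving context, filling a prompt template, and calling whichever model is supplied.

```python
from typing import Callable, List, Protocol


class TextModel(Protocol):
    """Minimal common interface: any backend that maps a prompt to text."""

    def generate(self, prompt: str) -> str: ...


class EchoModel:
    """Hypothetical stand-in for a served LLM such as Llama 2."""

    def generate(self, prompt: str) -> str:
        return f"[model output for {len(prompt)} prompt characters]"


def answer(question: str, retrieve: Callable[[str], List[str]], model: TextModel) -> str:
    """Retrieve context, fill a prompt template, and call the supplied model."""
    context = "\n".join(retrieve(question))
    prompt = (
        "Use the context to answer.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\nAnswer:"
    )
    return model.generate(prompt)
```

Because `answer` depends only on the `generate` method, swapping the model or the retriever does not require changes to the calling code.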

For more information about LangChain, see the LangChain web page.

Redis

Redis can serve as a vector store, offering an efficient way to query and retrieve relevant information from large amounts of data. Vector stores are databases designed to store and retrieve vector embeddings efficiently and to perform semantic searches. They index vectors and search for similar ones using similarity algorithms.

Redis is a popular in-memory data structure store. One feature of the Redis database is the ability to store embeddings with metadata to be used later by LLMs. This makes the Redis vector database a good fit for applications that have to store and search vector data quickly and efficiently.
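To make the similarity search concrete, here is a minimal in-memory sketch that stores embeddings with metadata and returns the closest matches by cosine similarity. It is an illustration only and does not use the Redis client or Redis index structures; `TinyVectorStore` is a hypothetical name, and the brute-force scan stands in for the indexed search that a vector database performs.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class TinyVectorStore:
    """Illustrative store: keeps (embedding, metadata) pairs in a list."""

    def __init__(self) -> None:
        self._items: List[Tuple[Vector, Dict[str, str]]] = []

    def add(self, embedding: Vector, metadata: Dict[str, str]) -> None:
        self._items.append((embedding, metadata))

    def search(self, query: Vector, k: int = 3) -> List[Tuple[float, Dict[str, str]]]:
        """Return the k most similar items as (score, metadata), best first."""
        scored = [(cosine_similarity(query, emb), meta) for emb, meta in self._items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]
```

In a digital assistant, the metadata would typically carry the source text of each chunk so that the top matches can be placed into the LLM prompt.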

For more information about the Redis vector database, see the Redis webpage.

Gradio

Gradio is an open-source Python library that enables fast development and prototyping of ML web applications with user interfaces. It provides a simple and intuitive API that is compatible with Python programs and libraries. Gradio provides a variety of options to customize elements of the user interface (UI).

Gradio is one of the fastest ways to prototype an ML model with a friendly web interface.

Gradio can also visualize the intermediate steps or thought processes in a language model's decision-making. This makes Gradio a useful tool for analyzing and debugging how language models reach their outputs.
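A minimal sketch of wrapping a Python function in a Gradio web UI follows. The `respond` function is a hypothetical placeholder for the assistant logic, and the title string is illustrative; Gradio is imported inside `build_demo` so that `respond` can be exercised without Gradio installed.

```python
def respond(question: str) -> str:
    """Placeholder for the assistant logic; a real application would call the LLM here."""
    question = question.strip()
    if not question:
        return "Please enter a question."
    return f"You asked: {question}"


def build_demo():
    """Wrap respond() in a simple text-in, text-out web interface."""
    import gradio as gr  # lazy import: only needed when the UI is built

    return gr.Interface(fn=respond, inputs="text", outputs="text", title="Digital assistant")


# To serve the UI locally: build_demo().launch()
```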

For more information about Gradio, see the Gradio webpage.

Caikit

Caikit is an AI toolkit that streamlines working with AI models through developer-friendly APIs. It provides an abstraction layer that lets developers use AI models through APIs without needing to know the data format of the underlying model.

By implementing a model based on Caikit, an organization can:

- Perform training jobs to create models from datasets.

- Merge models from diverse AI communities into a common API.

- Update applications to newer models for a specific task without client-side changes.

For more information about Caikit, see the Caikit webpage.

Caikit-NLP

Caikit-NLP is a Python library that provides various Natural Language Processing (NLP) capabilities built on top of the Caikit framework. It uses prompt tuning and fine-tuning to add NLP domain capabilities to Caikit. The library also provides a ready-to-use Python script to convert models from different formats to the Caikit format.

For more information, see the Caikit-NLP web page.

Text Generation Inference

TGI is a toolkit designed for deploying and serving LLMs. TGI provides high-performance text generation for the most popular open-source LLMs, such as Llama. It is powered by Python, Rust, and gRPC.

TGI implements many features to enhance the use of LLMs including:

- Serves the most popular LLMs with a simple launcher.

- Distributes the inference workload across multiple GPUs, which can significantly speed up generation for large models.

- Batches incoming requests together to improve throughput.

- Uses optimized versions of the transformers library to improve inference performance.
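The batching idea in the list above can be illustrated with a simplified sketch that groups pending prompts into fixed-size batches so that one forward pass can serve several requests. This is not TGI's implementation, whose scheduler is more sophisticated; `batch_requests` and `max_batch_size` are hypothetical names chosen for the example.

```python
from typing import Iterable, Iterator, List


def batch_requests(prompts: Iterable[str], max_batch_size: int = 4) -> Iterator[List[str]]:
    """Yield lists of at most max_batch_size prompts, preserving arrival order."""
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be at least 1")
    batch: List[str] = []
    for prompt in prompts:
        batch.append(prompt)
        if len(batch) == max_batch_size:
            yield batch
            batch = []
    if batch:
        yield batch  # final, possibly smaller batch
```

Larger batches generally improve throughput at the cost of added latency for the requests that wait for a batch to fill, which is the trade-off a serving layer has to manage.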

For more information about TGI, see the TGI GitHub webpage.

This validated solution uses many open-source technologies, frameworks, libraries, and methods.

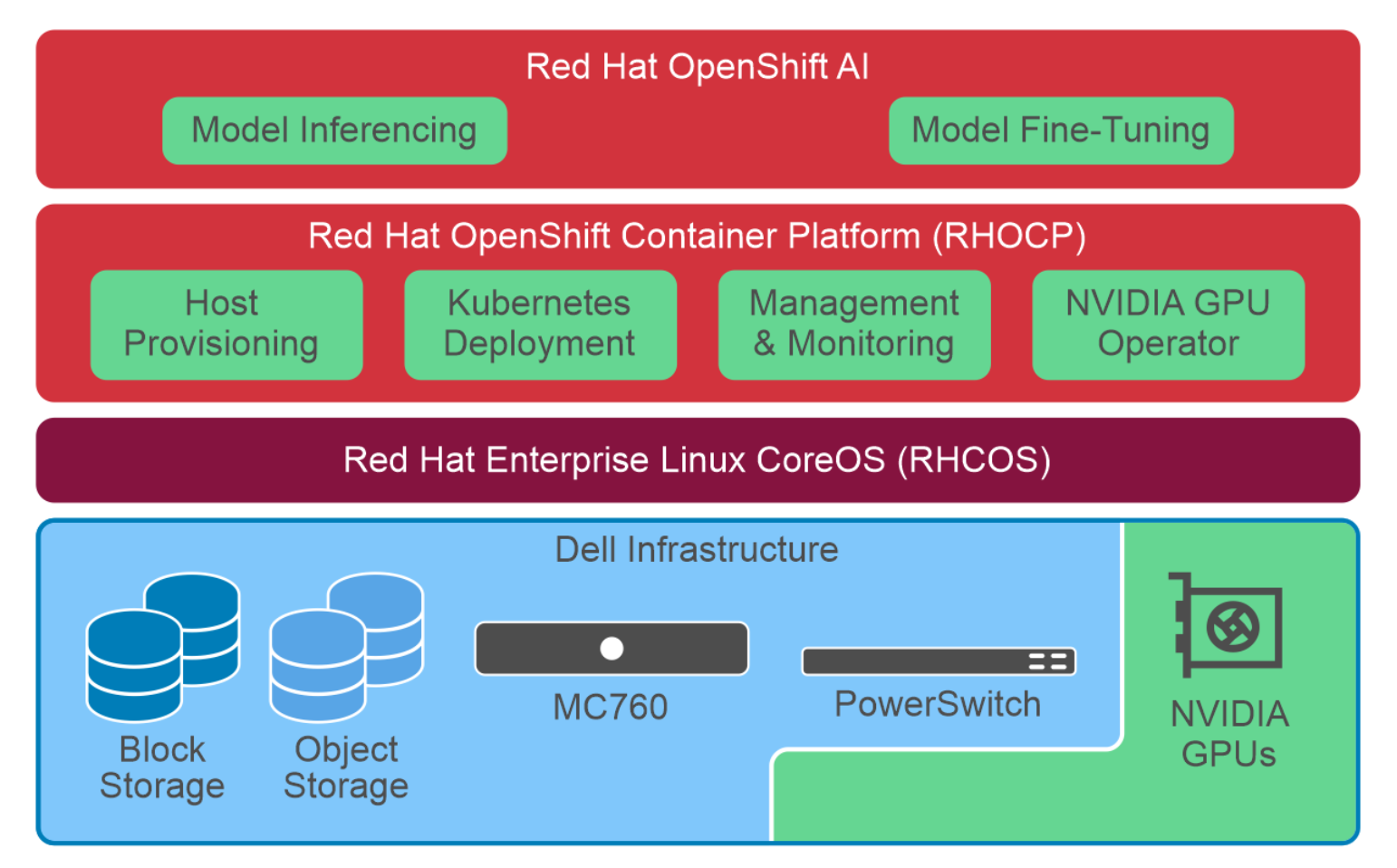

Figure 2.
