Kubernetes-based deployment using Enterprise Steam

Enterprise Steam from H2O.ai is a service for securely managing and deploying infrastructure for H2O Driverless AI on Kubernetes. Enterprise Steam offers security, access control, resource control, and resource monitoring out of the box, so that organizations can focus on the core of their data science practice. It enables secure, streamlined adoption of H2O Driverless AI and other H2O.ai products in a way that complies with company policies.

For data scientists, Enterprise Steam provides Python, R, and web clients for managing clusters and instances, and lets each data scientist work in their own H2O Driverless AI instance. For administrators, Enterprise Steam controls which product versions and compute resources are available.

Enterprise Steam is a single pod that is deployed using Helm. After Enterprise Steam is deployed, you can use it to launch new H2O Driverless AI instances and manage existing ones.

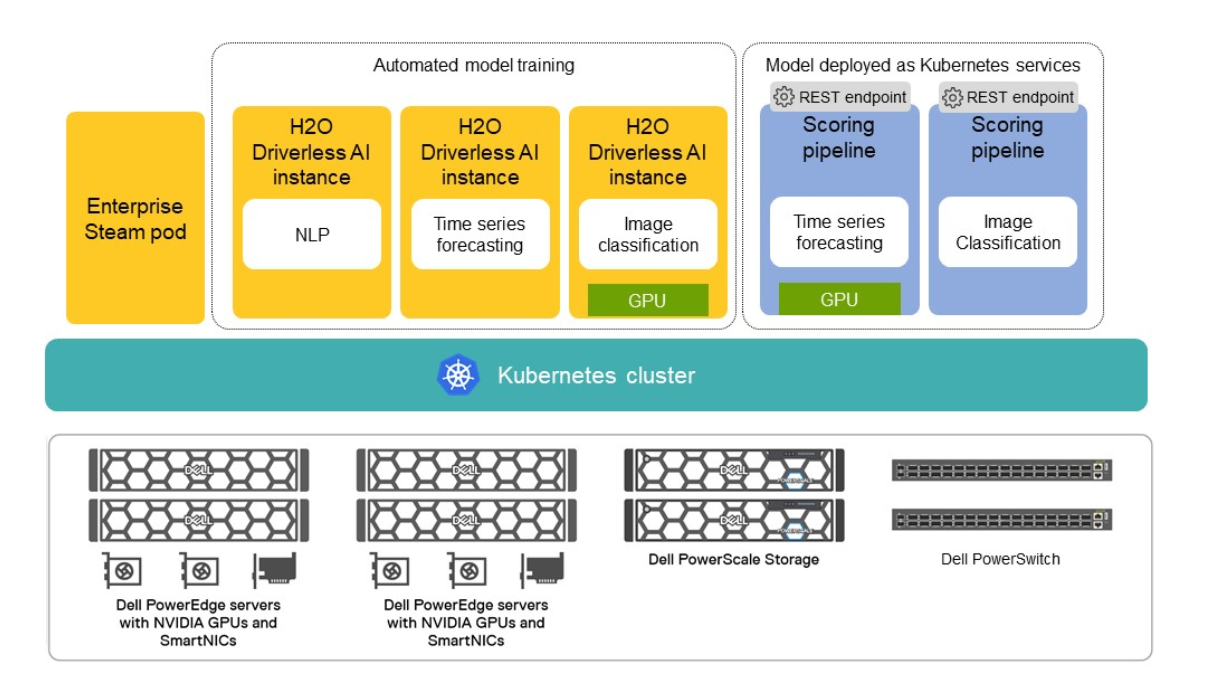

Each instance can be dedicated to model building for a specific project. Figure 3 shows three H2O Driverless AI instances deployed for automated model building in three different use cases: NLP, time series forecasting, and image classification.

Figure 3. Solution architecture for Kubernetes-based Driverless AI deployment

Datasets are made available to an instance either by downloading them into the container or through one of several data connectors, as explained in the following sections. Data visualization, feature engineering, and model development are all performed in this instance. H2O Driverless AI supports NVIDIA GPU acceleration, and some use cases, such as image classification, can benefit from GPU resources. For these use cases, GPUs are configured and made available to the container.
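Enterprise Steam creates the Driverless AI pods itself, so administrators do not normally write these specs by hand. As an illustration only, the following minimal sketch shows how a GPU request typically appears in a Kubernetes container spec, using the NVIDIA device plugin's extended resource name `nvidia.com/gpu`. The function name, image reference, CPU and memory sizes, and the rule that only image classification gets a GPU are all assumptions made for this example.

```python
import json

# Assumption for illustration: only image classification requests a GPU;
# the other use cases from Figure 3 run CPU-only in this sketch.
GPU_USE_CASES = {"image-classification"}


def driverless_container(use_case: str, image: str, cpu: str = "8",
                         memory: str = "32Gi", gpus: int = 1) -> dict:
    """Build a hypothetical Kubernetes container spec for one instance.

    GPUs are an extended resource exposed by the NVIDIA device plugin
    and are requested under "nvidia.com/gpu" in the container limits.
    """
    resources = {
        "requests": {"cpu": cpu, "memory": memory},
        "limits": {"cpu": cpu, "memory": memory},
    }
    if use_case in GPU_USE_CASES:
        resources["limits"]["nvidia.com/gpu"] = gpus
    return {"name": f"dai-{use_case}", "image": image, "resources": resources}


if __name__ == "__main__":
    # Placeholder image reference; substitute the one your registry uses.
    for uc in ("nlp", "time-series", "image-classification"):
        print(json.dumps(driverless_container(uc, "registry.example/dai:TAG"), indent=2))
```

Keeping the GPU decision per instance means GPU nodes are consumed only by the projects that benefit from them.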

After the model is trained, you can download the Python or MOJO Scoring Pipeline and build a Docker container from it. You can deploy this container outside the Kubernetes environment, or run it as a pod exposed through a Kubernetes service.
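For the in-cluster option, a common pattern is a Deployment that runs the scoring container plus a Service that exposes it. The minimal sketch below builds both manifests as a Kubernetes `v1` `List` object. The name, image reference, and container port 8080 are hypothetical; the actual port depends on how the scoring container was built.

```python
from __future__ import annotations

import json


def scoring_manifests(name: str, image: str, port: int = 8080,
                      replicas: int = 1) -> dict:
    """Return a v1 List holding a Deployment and a Service for a scorer."""
    labels = {"app": name}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                    }]
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "ClusterIP",
            "selector": dict(labels),
            # Service port 80 forwards to the container's scoring port.
            "ports": [{"port": 80, "targetPort": port}],
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}


if __name__ == "__main__":
    print(json.dumps(scoring_manifests("dai-scorer", "registry.example/scorer:TAG"), indent=2))
```

Because `kubectl apply -f` accepts JSON manifests, the printed output can be saved to a file and applied directly; changing the Service `type` (for example, to `LoadBalancer`) would expose the scorer outside the cluster.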

H2O Driverless AI can also be deployed as a stand-alone container on either bare metal or virtual machines. This deployment option is outside the scope of this validated design. See the H2O Driverless AI documentation for more information.