
VxRail Physical network interfaces


VxRail can be deployed with a 2x10 GbE, 2x25 GbE, or 4x10 GbE network profile, and it needs network hardware that supports the chosen profile for the initial deployment. Keep two considerations in mind when planning or designing the physical network connectivity:

- NSX-V based WLDs (Mgmt or a VI WLD) deploy the VXLAN VTEP Port Group to the VxRail vDS.

- NSX-T based VI WLDs require additional uplinks. The uplinks that were used to deploy the VxRail vDS cannot be used for the NSX-T N-VDS.

Note: For VCF versions earlier than 3.10, adding nodes to an additional vDS is not supported as this causes an error when adding nodes to a cluster in SDDC manager.

The following physical host connectivity diagrams illustrate the different host connectivity options for NSX-V and NSX-T based WLDs.

NSX-V based WLD physical host connectivity options

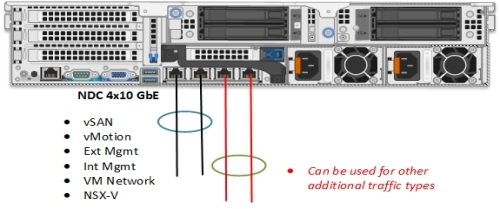

Figure 32 shows a VxRail deployed with a 2x10 profile on the NDC network card with NSX-V (Mgmt or VI WLD). The remaining two ports on the NDC can be used for other traffic types such as iSCSI, NFS, and Replication.

Figure 32. VxRail 2x10 network profile with NSX-V (Mgmt or VI WLD)

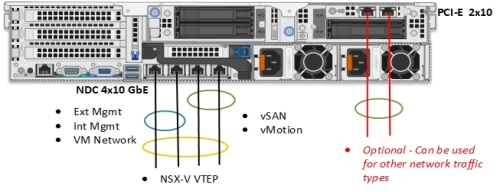

Figure 33 shows a VxRail deployed with a 4x10 profile with a 4-port NDC card. The NSX-V VXLAN traffic uses all four interfaces. An additional PCI-E card can be installed for additional network traffic if required.

Figure 33. VxRail 4x10 network profile with NSX-V (Mgmt or VI WLD)

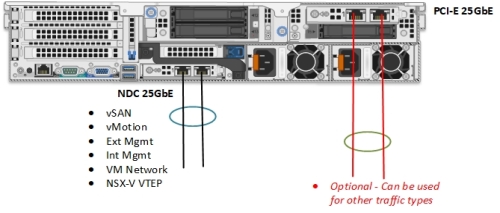

The VxRail can be deployed with a 2x25 profile on NDC with NSX-V (Mgmt or VI WLD) as shown in Figure 34. An additional PCI-E card can be installed to provide more connectivity for other traffic types.

Figure 34. VxRail 2x25 network profile on NDC with NSX-V (Mgmt or VI WLD)

Note: VCF on VxRail does not support installing an additional vDS. To carry additional traffic types, more physical interfaces must be added to the VxRail vDS.

NSX-T based VI WLD physical host connectivity options

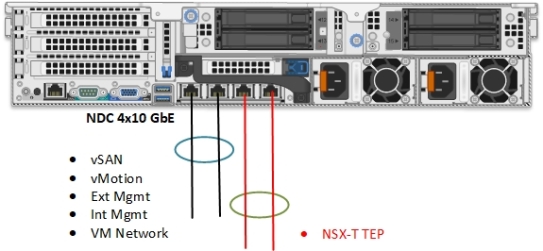

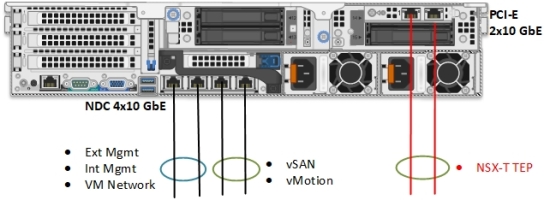

This section illustrates the physical host network connectivity options for NSX-T based VI WLDs using different VxRail profiles and connectivity options. Figure 35 illustrates a VxRail deployed with a 2x10 profile on the 4-port NDC. The two ports that remain available after the deployment are used for NSX-T.

Note: For each cluster that is added to an NSX-T VI WLD, the user can select which two pNICs to use if more than two pNICs are available. Selecting the pNICs from two different NICs provides NIC redundancy. Any nodes subsequently added to the cluster use the same pNICs.

Figure 35. VxRail 2x10 network profile with NSX-T VI WLD
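The pNIC selection rules described above can be sketched as a small check. This is an illustrative sketch only, not part of SDDC Manager or any VxRail tool: the `Pnic` type, the `check_nsxt_pnic_choice` function, and the `vmnic` and card labels are all hypothetical names chosen for this example. It treats reuse of a VxRail vDS uplink as an error and a same-card selection as a warning, since the 2x10 layout in Figure 35 legitimately places both NSX-T ports on the NDC.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pnic:
    name: str            # hypothetical label, e.g. "vmnic2"
    card: str            # physical card holding the port, e.g. "NDC" or "PCIe-1"
    on_vxrail_vds: bool  # True if the port is already a VxRail vDS uplink


def check_nsxt_pnic_choice(chosen):
    """Return (errors, warnings) for a proposed pair of NSX-T pNICs."""
    errors, warnings = [], []
    if len(chosen) != 2:
        errors.append("exactly two pNICs must be selected for NSX-T")
    for pnic in chosen:
        # Uplinks used to deploy the VxRail vDS cannot be used for the N-VDS.
        if pnic.on_vxrail_vds:
            errors.append(f"{pnic.name} is already a VxRail vDS uplink")
    # Redundancy at the NIC level needs ports from two different cards.
    if len({pnic.card for pnic in chosen}) < 2:
        warnings.append("selected pNICs share one card: no NIC redundancy")
    return errors, warnings


# Assumed example inventory: a 4-port NDC plus a 2-port PCI-E card.
ndc0 = Pnic("vmnic0", "NDC", on_vxrail_vds=True)
ndc2 = Pnic("vmnic2", "NDC", on_vxrail_vds=False)
ndc3 = Pnic("vmnic3", "NDC", on_vxrail_vds=False)
pcie0 = Pnic("vmnic4", "PCIe-1", on_vxrail_vds=False)

print(check_nsxt_pnic_choice([ndc2, ndc3]))   # valid, but warns about redundancy
print(check_nsxt_pnic_choice([ndc3, pcie0]))  # valid, spans two cards
print(check_nsxt_pnic_choice([ndc0, pcie0]))  # error: reuses a VxRail vDS uplink
```

Because subsequently added nodes use the same pNICs, a check like this would only need to run once per cluster, at the time the cluster is added.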

The next option is VxRail deployed with a 4x10 profile and NSX-T deployed onto the cluster. In this scenario, the NSX-T traffic uses the additional PCI-E card as shown in Figure 36, making this a 6xNIC configuration.

Figure 36. VxRail 4x10 network profile with NSX-T VI WLD

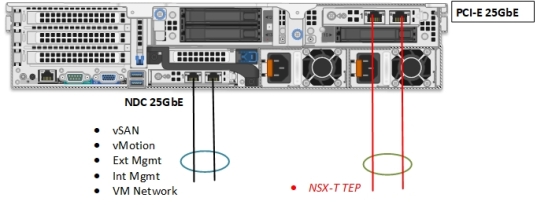

The final option that is covered here is a 2x25 profile for the VxRail using the 25 GbE NDC and an additional 25 GbE PCI-E card. The VxRail system traffic uses the two ports of the NDC, while the NSX-T traffic is placed on the two-port PCI-E card.

Figure 37. VxRail 2x25 network profile with NSX-T VI WLD
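As a quick reference, the six connectivity options in Figures 32 through 37 can be summarized in a small lookup table. The sketch below only restates the port counts given in this section. The `OPTIONS` structure and the `total_pnics` helper are hypothetical names used for illustration, and ports reserved for other traffic types (iSCSI, NFS, replication) are not counted.

```python
# (VxRail profile, NSX flavour) -> port usage as described in this section.
# "vxrail_vds" = NDC ports used as VxRail vDS uplinks.
# "nsxt" = ports dedicated to the NSX-T N-VDS, and where they are located.
OPTIONS = {
    ("2x10", "NSX-V"): {"vxrail_vds": 2, "nsxt": 0, "nsxt_location": None},
    ("4x10", "NSX-V"): {"vxrail_vds": 4, "nsxt": 0, "nsxt_location": None},
    ("2x25", "NSX-V"): {"vxrail_vds": 2, "nsxt": 0, "nsxt_location": None},
    ("2x10", "NSX-T"): {"vxrail_vds": 2, "nsxt": 2, "nsxt_location": "remaining NDC ports"},
    ("4x10", "NSX-T"): {"vxrail_vds": 4, "nsxt": 2, "nsxt_location": "PCI-E card"},
    ("2x25", "NSX-T"): {"vxrail_vds": 2, "nsxt": 2, "nsxt_location": "PCI-E card"},
}


def total_pnics(profile, nsx):
    """Ports needed for VxRail system traffic plus NSX traffic."""
    option = OPTIONS[(profile, nsx)]
    # With NSX-V the VXLAN VTEP port group lives on the VxRail vDS,
    # so no extra uplinks are counted; NSX-T needs its own two ports.
    return option["vxrail_vds"] + option["nsxt"]


print(total_pnics("4x10", "NSX-T"))  # 6, the 6xNIC configuration of Figure 36
print(total_pnics("2x10", "NSX-T"))  # 4, all four ports of the NDC
```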