
Multi-AZ (stretched cluster)


All WLDs can be stretched across two availability zones (AZs). The availability zones can be located in the same data center but in different racks or server rooms, or in two data centers in different geographic locations. The following general requirements apply to VCF on VxRail stretched-cluster deployments:

- The Witness must be deployed at a third site and use the same vSphere version as the VCF on VxRail release.

- All stretched-cluster (SC) configurations must be balanced, with the same number of hosts in AZ1 and AZ2.

Note: The VI WLD clusters can only be stretched if the Mgmt WLD cluster is first stretched.

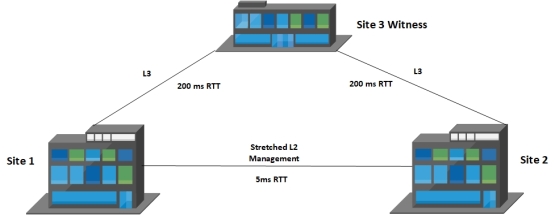

The following network requirements apply for the Mgmt WLD and the VI WLD clusters that need to be stretched across the AZs:

- Stretched Layer 2 for the external management traffic.

- Maximum 5 millisecond RTT between the data node sites

- Layer 3 between each data node site and the Witness site

- Maximum 200 millisecond RTT between the data node sites and the Witness site

Figure 38. Stretched Cluster Network Requirements
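The general and network requirements above can be expressed as a simple pre-deployment check. The following is a minimal sketch, not part of any VCF or VxRail tooling: the `StretchedClusterPlan` class, its field names, and `check_plan` are hypothetical, and the sketch assumes the RTT figures are upper bounds.

```python
from dataclasses import dataclass

# Limits taken from the requirements listed above (assumed to be upper bounds).
MAX_DATA_SITE_RTT_MS = 5
MAX_WITNESS_RTT_MS = 200


@dataclass
class StretchedClusterPlan:
    """Hypothetical description of a planned stretched cluster."""
    hosts_az1: int
    hosts_az2: int
    rtt_az1_az2_ms: float
    rtt_az1_witness_ms: float
    rtt_az2_witness_ms: float
    mgmt_stretched_l2: bool
    witness_routed_l3: bool


def check_plan(plan: StretchedClusterPlan) -> list:
    """Return a list of requirement violations; an empty list means none were found."""
    problems = []
    if plan.hosts_az1 != plan.hosts_az2:
        problems.append("unbalanced host count between AZ1 and AZ2")
    if plan.rtt_az1_az2_ms > MAX_DATA_SITE_RTT_MS:
        problems.append("RTT between data node sites exceeds 5 ms")
    worst_witness_rtt = max(plan.rtt_az1_witness_ms, plan.rtt_az2_witness_ms)
    if worst_witness_rtt > MAX_WITNESS_RTT_MS:
        problems.append("RTT to the Witness site exceeds 200 ms")
    if not plan.mgmt_stretched_l2:
        problems.append("external management network is not stretched Layer 2")
    if not plan.witness_routed_l3:
        problems.append("Witness site is not reached over Layer 3")
    return problems


# Example values are illustrative only.
plan = StretchedClusterPlan(4, 4, 3.2, 80.0, 95.0, True, True)
print(check_plan(plan))  # prints []
```

Returning a list of violations rather than a single boolean makes it easier to report every unmet requirement in one pass.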

The following sections contain more detail about the requirements for each type of WLD.

NSX-V WLD

The following table shows the supported connectivity between the data node sites for each traffic type in an NSX-V based WLD. This includes the Mgmt WLD and any NSX-V based VI WLD.

Table 2. NSX-V WLD Multi-AZ Connectivity Requirements

| Traffic Type | Connectivity Options | Minimum MTU | Maximum MTU |
| --- | --- | --- | --- |
| vSAN | L2 Stretched to AZ2 or L3 Routed to AZ2 | 1500 | 9000 |
| vMotion | L2 Stretched to AZ2 or L3 Routed to AZ2 | 1500 | 9000 |
| External Management | L2 Stretched to AZ2 | 1500 | 9000 |
| VXLAN | L2 Stretched to AZ2 or L3 Routed to AZ2 | 1500 | 9000 |
| Witness vSAN | L3 Routed to Witness Site | 1500 | 9000 |

The vSAN and vMotion traffic can be stretched at Layer 2 or extended using Layer 3 routed networks. The external management traffic must be stretched at Layer 2 only. This ensures that the management VMs (VC/PSC/VxRM) do not need their IP addresses changed and that no manual network reconfiguration is required when the VMs are restarted in AZ2 after a site failure at AZ1. The VXLAN network uses one port group on the VxRail vDS that is connected to the nodes at AZ1 and AZ2, but DHCP is used to assign the VTEP IPs for each host. With DHCP, you can stretch the VXLAN VLAN between sites and use the same subnet at each site for the VTEPs. Alternatively, you can keep the same VLAN local to each site (non-stretched), use a different subnet for the VTEPs at each site, and route the VXLAN traffic between the two sites.

Note: The VXLAN VLAN ID is the same at each site whether using stretched Layer 2 or Layer 3 routed.
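The two VTEP addressing options described above can be told apart from the per-site DHCP scopes. The following is a minimal sketch using Python's standard `ipaddress` module; the function name `vtep_design` and the example VLAN ID and subnets are hypothetical.

```python
import ipaddress


def vtep_design(vlan_az1, subnet_az1, vlan_az2, subnet_az2):
    """Classify the VXLAN transport design from the VTEP VLAN and subnet at each site."""
    # The VXLAN VLAN ID is the same at each site in both designs.
    if vlan_az1 != vlan_az2:
        raise ValueError("VXLAN VLAN ID must be the same at AZ1 and AZ2")
    net1 = ipaddress.ip_network(subnet_az1)
    net2 = ipaddress.ip_network(subnet_az2)
    if net1 == net2:
        # One subnet served at both sites: the VLAN is stretched at Layer 2.
        return "L2 stretched"
    if net1.overlaps(net2):
        raise ValueError("VTEP subnets overlap but are not identical")
    # Distinct subnets: the VLAN is local to each site and VXLAN traffic is routed.
    return "L3 routed"


print(vtep_design(1614, "172.16.14.0/24", 1614, "172.16.14.0/24"))  # L2 stretched
print(vtep_design(1614, "172.16.14.0/24", 1614, "172.16.24.0/24"))  # L3 routed
```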

NSX-T WLD

The following table shows the supported connectivity between the data node sites for each traffic type in an NSX-T WLD.

| Traffic Type | Connectivity Options | Minimum MTU | Maximum MTU |
| --- | --- | --- | --- |
| vSAN | L3 Routed | 1500 | 9000 |
| vMotion | L2 Stretched or L3 Routed | 1500 | 9000 |
| External Management | L2 Stretched | 1500 | 9000 |
| Geneve Overlay | L2 Stretched or L3 Routed | 1500 | 9000 |
| Witness vSAN | L3 Routed to Witness Site | 1500 | 9000 |

NSX-T WLD Multi-AZ Connectivity Requirements

The vSAN traffic can only be extended using Layer 3 routed networks between sites. The vMotion traffic can be stretched at Layer 2 or extended using Layer 3 routed networks. The external management traffic must be stretched at Layer 2 only. This ensures that the VxRail Manager and the Edge Nodes keep their connectivity to the management network if they are restarted at AZ2 after a site failure at AZ1. The Geneve overlay network can use either the same VLAN or different VLANs for each AZ. A single Geneve VLAN can be stretched at Layer 2, the same VLAN can be used at each site non-stretched, or a different VLAN can be used at each site, with the traffic routed between sites.

Note: The vSAN traffic can only be extended using Layer 3 networks between sites. If only Layer 2 stretched networks are available between sites with no capability to extend with Layer 3 routed networks, an RPQ should be submitted.
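The NSX-T connectivity rules above lend themselves to a table-driven check of a planned network design. The following is a minimal sketch; the dictionary is transcribed from the table in this section, while the names `NSXT_CONNECTIVITY` and `validate_network` are hypothetical.

```python
# Allowed connectivity per traffic type, transcribed from the NSX-T table above.
NSXT_CONNECTIVITY = {
    "vSAN": {"L3 Routed"},
    "vMotion": {"L2 Stretched", "L3 Routed"},
    "External Management": {"L2 Stretched"},
    "Geneve Overlay": {"L2 Stretched", "L3 Routed"},
    "Witness vSAN": {"L3 Routed to Witness Site"},
}
MIN_MTU = 1500
MAX_MTU = 9000


def validate_network(traffic_type, connectivity, mtu):
    """Return a list of problems for one planned network; empty means it matches the table."""
    allowed = NSXT_CONNECTIVITY.get(traffic_type)
    if allowed is None:
        return [f"unknown traffic type: {traffic_type}"]
    problems = []
    if connectivity not in allowed:
        problems.append(f"{traffic_type} does not support {connectivity}")
    if not MIN_MTU <= mtu <= MAX_MTU:
        problems.append(f"MTU {mtu} is outside {MIN_MTU}-{MAX_MTU}")
    return problems


print(validate_network("vMotion", "L3 Routed", 9000))  # []
print(validate_network("vSAN", "L2 Stretched", 9000))  # ['vSAN does not support L2 Stretched']
```

A Layer 2 only vSAN design is flagged here because it falls outside the table. As the note above says, that case would go through the RPQ process.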

Increasing the vSAN traffic MTU to improve performance requires the witness traffic to the witness site to also use an MTU of 9000. This might cause an issue if the routed traffic needs to pass through firewalls or use VPNs for site-to-site connectivity. Witness traffic separation is one option to work around this issue, but it is not yet officially supported for VCF on VxRail.
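The MTU constraint above amounts to requiring every hop on the routed path to the witness site to carry the vSAN MTU. The following is a minimal sketch of that check; the function name `witness_path_bottlenecks` and the hop names and values are hypothetical.

```python
def witness_path_bottlenecks(vsan_mtu, hop_mtus):
    """Return the names of hops whose MTU is smaller than the vSAN MTU."""
    return [name for name, mtu in hop_mtus.items() if mtu < vsan_mtu]


# Illustrative path from a data node site to the witness site.
path = {
    "AZ1 TOR": 9000,
    "site-to-site VPN": 1500,
    "Witness site router": 9000,
}

print(witness_path_bottlenecks(9000, path))  # ['site-to-site VPN']
print(witness_path_bottlenecks(1500, path))  # []
```

With the default MTU of 1500 the same path has no bottleneck, which is why the issue only appears once the vSAN MTU is raised.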

Note: Witness Traffic Separation (WTS) is not officially supported but if there is a requirement to use WTS, the configuration can be supported through the RPQ process. The VCF automation cannot be used for the stretched cluster configuration. It must be done manually using a standard VxRail SolVe procedure with some additional guidance.

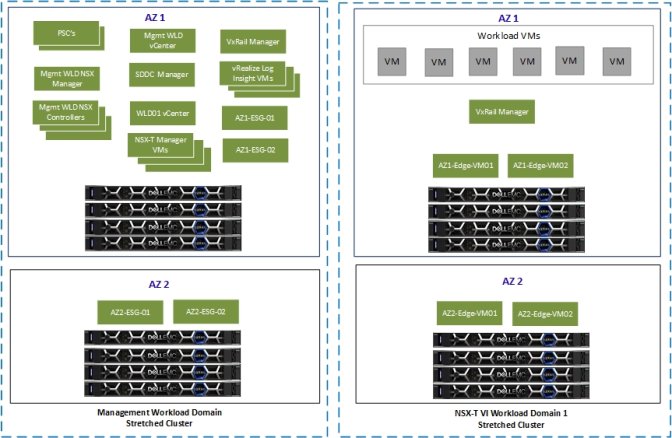

Multi-AZ Component placement

During the stretched-cluster configuration, the management VMs are configured to run on the first AZ by default. This is achieved using Host/VM groups and affinity rules that keep these VMs running on the hosts in AZ1 during normal operation. The following diagram shows where the management and NSX VMs are placed after the stretched configuration is complete for the Mgmt WLD and the first cluster of an NSX-T VI WLD.

Figure 39. Multi-AZ Component Layout
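The placement behavior described above can be modeled as a preference for AZ1 that gives way when AZ1 is unavailable. The following is a minimal sketch in plain Python. It does not call any vSphere API, the function `place_mgmt_vms` and the VM and host names are hypothetical, and it assumes the affinity rule acts as a preference rather than a hard pin, so that VMs can restart in AZ2 after a site failure.

```python
def place_mgmt_vms(vms, hosts_by_az, failed_azs=(), preferred_az="AZ1"):
    """Return {vm: az}, preferring preferred_az while it still has usable hosts."""
    surviving = [az for az, hosts in hosts_by_az.items()
                 if hosts and az not in failed_azs]
    if not surviving:
        raise RuntimeError("no availability zone has usable hosts")
    # Assumption: the rule is a preference, so fall back to another AZ on failure.
    target = preferred_az if preferred_az in surviving else surviving[0]
    return {vm: target for vm in vms}


hosts = {"AZ1": ["az1-host1", "az1-host2"], "AZ2": ["az2-host1", "az2-host2"]}
vms = ["vcenter", "vxrail-manager"]

print(place_mgmt_vms(vms, hosts))                      # both VMs in AZ1
print(place_mgmt_vms(vms, hosts, failed_azs={"AZ1"}))  # both VMs in AZ2
```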

Management WLD Multi-AZ – stretched cluster routing design

The management WLD multi-AZ design aligns with the VVD reference architecture. This requires a manual Day 2 deployment of the NSX ESGs at AZ2, using the VVD documentation as a guide. The AVN network requires North-South routing at AZ2. If there is a failure at AZ1, the ESGs must be manually deployed and configured at AZ2. The physical network design varies depending on the existing network infrastructure.

Key points to consider for Multi-AZ management WLD routing design:

- Single management WLD vCenter/NSX Manager

- BGP used as the routing protocol for uDLR/ESG/TOR

- Site 1 ESGs deployed by Cloud Builder when management WLD is deployed

- Site 2 ESGs must be manually deployed.

- Two uplink VLANs are required at Site 2 for the ESGs.

- Site 2 ESGs connect to the same Universal Transit Network as site 1 ESGs.

- Site 2 ESGs have the same ASN as Site 1 ESGs.

- Traffic flow from the uDLR prefers the Site 1 (primary) ESGs during normal operation; a lower BGP weight is used between the uDLR and the ESGs at Site 2 for this purpose.

- BGP path prepend is needed on the Physical TORs to control Ingress traffic.

- Physical network design varies depending on existing network infrastructure.

Figure 40. Multi-AZ – Mgmt WLD routing design example
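The two traffic-steering points above (BGP weight for egress, AS-path prepending for ingress) can be illustrated with a simplified path selection. The following is a sketch only: it models just the weight and AS-path-length steps of BGP best-path selection and omits the others, and the `Path` class, all ASNs, weight values, and next-hop names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Path:
    next_hop: str
    weight: int      # locally significant; higher is preferred
    as_path: tuple   # ASNs as seen by the router making the choice


def best_path(paths):
    """Pick the path with the highest weight, then the shortest AS path."""
    return max(paths, key=lambda p: (p.weight, -len(p.as_path)))


# Egress: the uDLR sees both ESG sets in the same ASN (hypothetical 65003),
# so the lower weight toward Site 2 decides. Weight values are illustrative.
udlr_view = [
    Path("site1-esg", weight=60, as_path=(65003,)),
    Path("site2-esg", weight=30, as_path=(65003,)),
]

# Ingress: an upstream router compares AS-path length. The Site 2 TOR
# (hypothetical ASN 65002) prepends its own ASN twice.
upstream_view = [
    Path("site1-tor", weight=0, as_path=(65001, 65003)),
    Path("site2-tor", weight=0, as_path=(65002, 65002, 65002, 65003)),
]

print(best_path(udlr_view).next_hop)      # site1-esg
print(best_path(upstream_view).next_hop)  # site1-tor
```

If the Site 1 paths are withdrawn after a failure, the same selection falls through to the Site 2 next hops, which is the intended failover behavior once the Site 2 ESGs are in place.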