
Multitenancy in MEC: When is it needed and what does it look like?

Wed, 27 Sep 2023 20:32:45 -0000


Sometimes the best solutions stem from the simplest questions. Simple questions often prompt us to think about why we do things the way that we do. For example, a customer recently asked me, “What about multi-tenancy for my MEC?”

Multitenancy and MEC are both loaded terms, so an easier way to tackle this question is to start by asking, “Should I plan to support multiple customers on my network-edge cloud infrastructure; and if so, how do I do it?”

Multitenancy is one of the critical benefits of a cloud environment, and sharing resources seems to make sense in a highly constrained environment like the edge. Despite its benefits, multitenancy also introduces significant management complexities, which come at a cost. These complexities can drive customers to consider whether the efficiencies of multitenancy justify the costs. This answer depends on both the cloud model that is used to deliver a service (SaaS/PaaS/IaaS and co-location) and the customer. Moreover, in most cases, it is either innate to the solution hosted on public MEC, or not worth the cost.

Starting with SaaS, multitenancy is enabled through proper handling of organization accounts and associated user accounts, something that any successful cloud-based SaaS must enable. It is therefore innate in this model. The second model where multitenancy is innate is co-location because, presumably, a successful co-location business model needs more than one customer.

For PaaS (which includes container platforms such as Kubernetes), the answer to considering multitenancy is a straightforward no. Delivering multitenancy across customer boundaries (as opposed to simply hosting multiple projects of a single enterprise) typically involves creating a SaaS offering from a PaaS-based platform. For more information, see the discussion of this issue on the Kubernetes project site.

This leaves us with IaaS and reduces the question to whether a Mobile Network Operator (MNO) deploying MEC should invest in IaaS multi-tenancy. Alternatively, it might be sufficient for such an MNO to provide each customer with physical infrastructure and a cloud stack, which the customer then integrates into an overall IaaS multicloud operational process. To address this, we need to dig deeper into the requirements that different types of customers are likely to have for IaaS Edge infrastructure.

MEC IaaS and verticals

It is important to remember that the information in this section is opinion-based and should be interpreted as such. Also, it is equally important to keep in mind that each customer is different and broad-stroke statements such as the ones below may not be valid in every situation. In short, pay attention to your customer!

Let’s start with large enterprises, which are likely to have some of the most stringent security and management policies. IT downtime and security breaches carry significant costs, and there is substantial in-house IT expertise to deploy and enforce industry best practices. For enterprises, anything that could have been moved into the cloud while meeting IT policies and application requirements presumably is already there. This means there are unlikely to be low-hanging-fruit candidates for a network-edge migration.

A multi-tenant IaaS environment introduces additional hurdles towards meeting IT policies which can complicate an already difficult sales motion and business case. In short, the opportunity cost of trying to do multi-tenant IaaS in this segment is usually not worth it. Capturing business for network edge here is hard enough. This remains true even when such enterprises have remote locations with limited IT expertise at such sites. While the ROI of moving compute off-prem improves in such cases, the hurdle of meeting IT policies remains, and the complications associated with trying to meet them are usually not justified by the cost savings of multitenancy.

Smaller enterprises (SMBs) often have a stronger case for moving compute off-prem while the burden of IT policies is lower. These SMBs are likely looking for an easy way to achieve an outcome. A SaaS-based solution is much more appropriate than an IaaS one, which means multi-tenancy is out of the question.

To find a business justification for multi-tenancy in IaaS MEC, we need to look outside of enterprises and to direct-to-customer application providers that take advantage of the network edge. Examples of these include the independent software vendors (ISVs) of SaaS solutions that offer outcomes to SMBs (where the tenant is now the ISV itself, not its customers), and ISVs offering consumer applications, like emerging interactive gaming. When such applications require Edge presence, the choice is often limited to public MEC, as there is no on-premises option and traditional edge co-location providers may not be able to deliver sufficient proximity to the customer to meet the required KPIs. Moreover, the customers (ISVs) are typically well-adapted to the cloud and are comfortable with issues such as multitenancy, provided that cloud-like shared responsibility structures are in place and adequately formalized (a legal and contractual issue as much as it is a technical one).

Delivering a multitenant IaaS solution

So how does an MNO go about creating that multitenant edge? First, we need something generic because attempting to guess what kind of applications might run on MEC simply limits the addressable market. Second, it is important to remember that operations and management (O&M) will be your biggest headache, so anything that simplifies O&M is likely to pay for itself in spades. And third, ISVs are the most likely customers of a public MEC.

Typically, ISVs developing cloud-native applications use some flavor of Kubernetes (K8S) as the platform. K8S flavors can span the gamut from public clouds (Google, Amazon’s EKS) to enterprise clouds (Red Hat OpenShift, VMware Tanzu). An ideal platform would address the following:

- Provide a way to efficiently address the need for compute-intensive applications, including high-performance computing and storage-intensive ones

- Make O&M easier in a meaningful and monetarily measurable way

- Support cloud-native applications developed for any (or almost any) of the most commonly used Kubernetes frameworks.

Although that seems like a lot to ask of a platform, solutions that address all of these points do exist. One excellent example is Dell’s VxRail VMware HCI platform. The Dell VxRail home page describes VxRail as follows:

VxRail goes further to deliver more highly differentiated features and benefits based on proprietary VxRail HCI System Software. This unique combination automates deployment, provides full stack lifecycle management and facilitates critical upstream and downstream integration points that create a truly better together experience

VxRail provides an MNO with a flexible combination of compute (GPU and DPU options for high-performance computing) and storage, which can be easily and quickly scaled in response to actual demand. Notably, with vCloud Suite, a VxRail deployment can be turned into a multitenant public cloud. For more information, see VMware Public Cloud Service Definition.

Last but not least, in addition to VMware’s Tanzu, VxRail supports Google Anthos (Running Google Anthos on VMware Cloud Foundation), Red Hat OpenShift (VxRail and OpenShift Solution Brief), and AWS EKS (Amazon EKS Anywhere on VxRail Solution Brief), delivering an all-in-one platform for a flexible public MEC deployment at the Network Edge.

Summary

The question of multitenancy for MEC is only relevant when considering the IaaS service model. In that case, multitenancy is not likely to be of interest when addressing most traditional enterprise customers but may be important when addressing the needs of ISVs providing SaaS solutions that need Edge presence. To succeed in delivering a MEC platform to such ISVs, an MNO needs an underlying platform like Dell’s VxRail, designed to address their diverse needs in a scalable and easily manageable fashion.

What is Happening in the Network Edge

Mon, 26 Jun 2023 10:59:44 -0000


Where is the Network Edge in Mobile Networks

The notion of ‘Edge’ can take on different meanings depending on the context, so it’s important to first define what we mean by Network Edge. Edge deployments can be broadly classified into two categories: Enterprise Edge and Network Edge. The former refers to infrastructure hosted by the company using the service, while the latter refers to infrastructure hosted by the Mobile Network Operator (MNO) providing the service.

This article focuses on the Network Edge, which can be located anywhere from the Radio Access Network (RAN) to next to the Core Network (CN). Network Edge sites collocated with the RAN are often referred to as Far Edge.

What is in the Network Edge

In a 5G Standalone (5G SA) Network, a Network Edge site typically contains a cloud platform that hosts a User Plane Function (UPF) to enable local breakout (LBO). It may include a suite of consumer and enterprise applications, for example, those that require lower latency or more privacy. It can also benefit the transport network when large content such as Video-on-Demand is brought closer to the end users.

Modern cloud platforms are envisioned to be open and disaggregated to enable MNOs to rapidly onboard new applications from different Independent Software Vendors (ISVs), thus accelerating technology adoption. These modern cloud platforms are typically composed of Commercial-off-the-Shelf (COTS) hardware, multi-tenant Container-as-a-Service (CaaS) platforms, and multi-cloud Management and Orchestration solutions.

Similarly, modern applications are designed to be cloud-native to maximize service agility. By having microservices architectures and supporting containerized deployments, MNOs can rapidly adapt their services to meet changing market demands.

What contributes to Network Latency

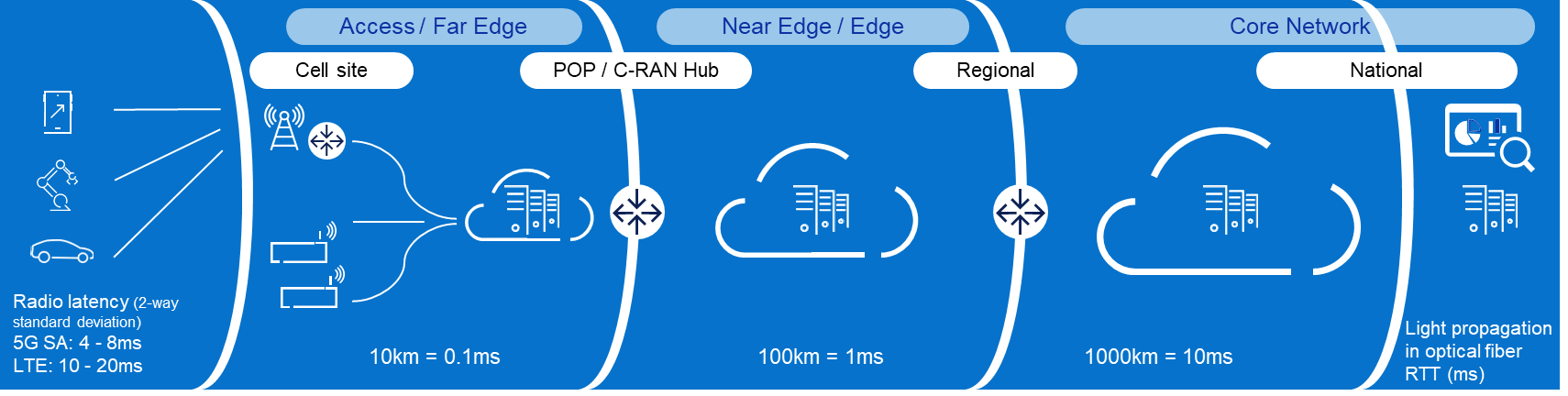

The appeal of Network Edge or Multi-access Edge Computing (MEC) is commonly associated with lower latency or more privacy. While moving applications from beyond the CN to near the RAN does eliminate up to tens of milliseconds of delay, it is also important to understand that there are many other contributors to network latency which can be optimized. In fact, latency is added at every stage from the User Equipment (UE) to the application and back.

RAN is typically the biggest contributor to network latency and jitter, the latter being a measure of fluctuations in delay. Accordingly, 3GPP has introduced many enhancements in 5G New Radio (5G NR) to reduce latency and jitter in the air interface. There are three primary categories where latency can be reduced:

- Transmission time: reduce symbol duration with higher subcarrier spacing or with mini slots

- Waiting time: improve scheduling (optimize handshaking), simultaneous transmit/receive, and uplink/downlink switching with TDD

- Processing time: reduce UE and gNB processing and queuing with enhanced coding and modulation
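To make the transmission-time item concrete, here is a minimal Python sketch of how 5G NR numerology scales symbol and slot durations. It relies on the standard NR relationship in which the subcarrier spacing is 15 kHz × 2^μ and a slot holds 14 OFDM symbols with normal cyclic prefix. The class and method names are illustrative, and the mini-slot duration is an approximation that averages the cyclic prefix across the slot.

```python
from dataclasses import dataclass

SYMBOLS_PER_SLOT = 14  # normal cyclic prefix


@dataclass(frozen=True)
class Numerology:
    """5G NR numerology index mu (0..4 covers 15 kHz to 240 kHz)."""

    mu: int

    @property
    def subcarrier_spacing_khz(self) -> int:
        # Subcarrier spacing doubles with every numerology step.
        return 15 * 2 ** self.mu

    @property
    def slot_duration_ms(self) -> float:
        # A 1 ms subframe holds 2**mu slots.
        return 1.0 / 2 ** self.mu

    @property
    def useful_symbol_duration_us(self) -> float:
        # Useful OFDM symbol time (excluding cyclic prefix) is 1 / spacing.
        return 1000.0 / self.subcarrier_spacing_khz

    def mini_slot_duration_ms(self, symbols: int) -> float:
        # Approximation: average symbol time including cyclic prefix,
        # taken as slot duration / 14. Mini-slots use fewer than 14
        # symbols, for example 2, 4, or 7.
        if not 1 <= symbols <= SYMBOLS_PER_SLOT:
            raise ValueError("symbols must be between 1 and 14")
        return self.slot_duration_ms * symbols / SYMBOLS_PER_SLOT


if __name__ == "__main__":
    for mu in range(4):
        n = Numerology(mu)
        print(
            f"{n.subcarrier_spacing_khz:>4} kHz: "
            f"slot {n.slot_duration_ms:.3f} ms, "
            f"2-symbol mini-slot {n.mini_slot_duration_ms(2):.4f} ms"
        )
```

Going from 15 kHz to 120 kHz spacing shortens the slot from 1 ms to 0.125 ms, and a mini-slot shortens the transmission unit further without changing the spacing.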

Transport latency is relatively simple to understand as it is mainly due to light propagation in optical fiber. The industry rule of thumb is 1 millisecond round trip latency for every 100 kilometers. The number of hops along the path also impacts latency as every transport equipment adds a bit of delay.
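The rule of thumb above can be turned into a quick estimator. The following is a rough Python sketch, not a planning tool: the 1 ms per 100 km figure comes from the text (and matches light travelling at roughly 200,000 km/s in fiber), while the per-hop delay is a hypothetical parameter that you would replace with measured values for your own transport equipment.

```python
RTT_MS_PER_100_KM = 1.0  # industry rule of thumb quoted in the text


def transport_rtt_ms(fiber_km: float, hops: int = 0, per_hop_rtt_ms: float = 0.0) -> float:
    """Estimate round-trip transport latency in milliseconds.

    fiber_km: one-way fiber route length between the two sites.
    hops: number of transport nodes on the path.
    per_hop_rtt_ms: assumed round-trip delay added by each node
        (hypothetical value; depends on the actual equipment).
    """
    if fiber_km < 0 or hops < 0 or per_hop_rtt_ms < 0:
        raise ValueError("inputs must be non-negative")
    propagation = fiber_km / 100.0 * RTT_MS_PER_100_KM
    return propagation + hops * per_hop_rtt_ms


if __name__ == "__main__":
    # A site 300 km away, ignoring equipment delay.
    print(transport_rtt_ms(300))
    # A site 50 km away across 4 nodes, assuming 0.05 ms per node.
    print(transport_rtt_ms(50, hops=4, per_hop_rtt_ms=0.05))
```

Note that the fiber route length is usually longer than the straight-line distance between sites, so using geographic distance will underestimate the result.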

Typically, CN adds less than 1 millisecond to the latency. The challenge for the CN is more about keeping the latency low for mobile UEs, by seamlessly changing anchors to the nearest Edge UPF through a new procedure called ‘make before break’. Also, the UPF architecture and Gi/SGi services (e.g., Deep Packet Inspection, Network Address Translation, and Content Optimization) may add a few additional milliseconds to the overall latency, depending on whether these functions are integrated or independent.

Architectural and Business approaches for the Network Edge

The physical locations that host RAN and Network Edge functionalities are widely recognized to be some of the MNOs’ most valuable assets. Few other entities today have the real estate and associated infrastructure (e.g., power, fiber) to bring cloud capabilities this close to the end clients. Consequently, monetization of the Network Edge is an important component of most MNOs’ strategy for maximizing their investment in the mobile network and, specifically, in 5G. In almost all cases, the Network Edge monetization strategy includes making Network Edge available for Enterprise customers to use as an “Edge Cloud.” However, doing so involves making architectural and business model choices across several dimensions:

- Connectivity or Cloud: should the MNO offer a cloud service or just the connectivity to a cloud service provided by a third party (and potentially hosted at a third party’s site).

- aaS model: in principle, the full range of as-a-Service models is available to the MNO to offer at the network edge. This includes co-location services, Bare-Metal-as-a-Service, Infrastructure-as-a-Service (IaaS), Containers-as-a-Service (CaaS), and Platform and Software-as-a-Service (PaaS and SaaS). Going up this value chain (up being from co-lo to SaaS) allows the MNO to capture more of the value provided to the Enterprise. However, it also requires the MNO to take on significantly more responsibility and puts it in direct competition with well-established players in this space, such as the cloud hyperscale companies. The right mix of offerings (and it is invariably a mix) thus involves a complex set of technical and business case tradeoffs. The end result will be different for every MNO and how each arrives there will also be unique.

- Management framework: our industry’s initial approach to exposing the Network Edge to enterprises involved a management framework that is tightly coupled to how the MNO manages its network functions (for example, the ETSI MEC family of standards). However, this approach comes with several drawbacks from an Enterprise point of view. As a result, a loosely coupled approach, where the Enterprise manages its Edge Cloud applications using typical cloud management solutions, appears to be gaining significant traction, with solutions such as Amazon’s Wavelength as an example. This approach, of course, has its own drawbacks, and managing the interplay between the two is an important consideration in Network Edge (and one that is intertwined with the selection of aaS model).

- Network-as-a-Service: a unique aspect of the Network Edge is the MNO’s ability to expose network information to applications as well as the ability to provide those applications with (highly curated) means of controlling the network. How and if this makes sense is again both an issue of the business case (for the MNO and the Enterprise) and a technical/architectural issue.

Certainly, the likely end state is a complex mixture of services and go-to-market models focused on the Enterprise (B2B) segment. The exposition of operational automation and the features of 5G designed to address this make it likely that this is a huge opportunity for MNOs. Navigating the complexities of this space requires a deep understanding of both what services the Enterprises are looking for and how they are looking to consume these. It also requires an architectural approach that can handle the variable mix of what is needed in a way that is highly scalable.

As the long-time leader in Enterprise IT services, Dell is uniquely positioned to address this space – stay tuned for more details in an upcoming blog!

Building the Network Edge

There are several factors to consider when moving workloads from central sites to edge locations. Limited space and power are at the top of the list. Edge locations are also often far from the main cities and generally more exposed to the elements, which calls for a new class of denser, easier-to-service, and even ruggedized form factors. Thanks to the popularity of Open RAN and Enterprise Edge, there are already solutions in the market today that can also be used for Network Edge. Read more in the Edge blog series: Computing on the Edge | Dell Technologies Info Hub.

Higher deployment and operating costs are another major factor. The sheer number of edge locations combined with their degraded accessibility makes them more expensive to build and maintain. The economics of the Network Edge thus necessitate automation and pre-integration. Dell’s answer is a newly engineered cloud-native solution with automated deployment and life-cycle management at its core. More on this novel approach here: Dell Telecom MultiCloud Foundation | Dell USA.

Last is the lower cost of running applications centrally. Central sites have the advantage of pooling compute and sharing facilities such as power, connectivity, and cooling. It is therefore important to reduce overhead at the edge wherever possible, such as by opting for containerized over VM-based cloud platforms. Moreover, having an open and disaggregated horizontal cloud platform not only allows for multitenancy at edge locations, which significantly reduces overhead, but also enables application portability across the network to maximize efficiency.

The ideal situation is where Open/Cloud RAN and Network Edge share sites, thus splitting several of the deployment and operations costs. Due to latency requirements, the Distributed Unit (DU) must be placed within 20 kilometers of the Radio Unit (RU). Latency requirements for the mid-haul interface between the DU and the Central Unit (CU) are less stringent, and the CU can be placed roughly 80-100 kilometers from the DU. In addition, the Near-Real-Time RAN Intelligent Controller (Near-RT RIC) and the related xApps must be placed within 10 ms RTT. This makes it possible to collocate Network Edge sites with the CU sites and Near-RT RIC.
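The placement limits in the paragraph above can be captured as a simple check when shortlisting candidate sites. The Python sketch below is only illustrative: the thresholds are the figures quoted in the text, the upper end of the 80-100 kilometer range is used for the DU-to-CU limit, and the `SitePlan` type and `placement_violations` function are hypothetical names. It also assumes that the 10 ms RTT applies between the Near-RT RIC and the RAN nodes it controls.

```python
from dataclasses import dataclass

# Limits quoted in the text above.
MAX_RU_DU_KM = 20.0
MAX_DU_CU_KM = 100.0  # upper end of the 80-100 km range
MAX_RIC_RTT_MS = 10.0


@dataclass(frozen=True)
class SitePlan:
    """Hypothetical description of a candidate site layout."""

    ru_to_du_km: float
    du_to_cu_km: float
    ric_rtt_ms: float  # assumed: RTT between Near-RT RIC and its RAN nodes


def placement_violations(plan: SitePlan) -> list[str]:
    """Return a human-readable list of limits the plan exceeds."""
    violations = []
    if plan.ru_to_du_km > MAX_RU_DU_KM:
        violations.append(
            f"RU-DU distance {plan.ru_to_du_km} km exceeds {MAX_RU_DU_KM} km"
        )
    if plan.du_to_cu_km > MAX_DU_CU_KM:
        violations.append(
            f"DU-CU distance {plan.du_to_cu_km} km exceeds {MAX_DU_CU_KM} km"
        )
    if plan.ric_rtt_ms > MAX_RIC_RTT_MS:
        violations.append(
            f"Near-RT RIC RTT {plan.ric_rtt_ms} ms exceeds {MAX_RIC_RTT_MS} ms"
        )
    return violations


if __name__ == "__main__":
    candidate = SitePlan(ru_to_du_km=15, du_to_cu_km=90, ric_rtt_ms=8)
    problems = placement_violations(candidate)
    print("OK" if not problems else "\n".join(problems))
```

A plan that passes all three checks is a candidate for hosting Network Edge workloads at the CU site. Real designs would also account for fiber route length and equipment delay rather than distance alone.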

Future

Over the past few years, several MNOs have moved away from having 2-3 national DCs for their entire CN to deploying 5-10 regional DCs, where some network functions such as the UPF are distributed. One example of this is AT&T’s dozen “5G Edge Zones”, which were introduced in the major metropolitan areas: AT&T Launching a Dozen 5G “Edge Zones” Across the U.S. (att.com).

This approach already suffices for the majority of “low latency” use cases, and for smaller countries even the traditional 2-3 national DCs can offer sufficiently low transport latency. However, critical use cases with more stringent latency requirements need consistently very low latency. For these, moving the applications to the Far Edge sites becomes a necessity, in tandem with 5G SA enhancements such as network slicing and an optimized air interface.

The challenge with consumer use cases such as cloud gaming is supporting the required Service Level (i.e., low latency) countrywide. And since enabling the network to support this requires a substantial initial investment, we are seeing the classic chicken-and-egg problem where independent software vendors opt not to develop these more demanding applications while MNOs keep waiting for these “killer use cases” to justify the initial investment for the Network Edge. As a result, we expect geographically limited enterprise use cases to gain market traction first and serve as catalysts for initially limited Network Edge deployments.

For use cases where assured speeds and low latency are critical, end-to-end Network Slicing is essential. In order to adopt a new more service-oriented approach, MNOs will need Network Edge and low latency enhancements together with Network Slicing in their toolbox. For more on this approach and Network Slicing, please check out our previous blog To slice or not to slice | Dell Technologies Info Hub.

About the author: Tomi Varonen

Tomi Varonen is a Telecom Network Architect in Dell’s Telecom Systems Business Unit. He is based in Finland and works with Cloud, Core Network, and OSS&BSS customer cases in the EMEA region. Tomi has over 23 years of experience in the Telecom sector in various technical and sales positions. He has wide expertise in end-to-end mobile networks and enjoys creating solutions for new technology areas. He has a passion for various outdoor activities with family and friends, including skiing, golf, and bicycling.

About the author: Arthur Gerona

Arthur is a Principal Global Enterprise Architect at Dell Technologies. He is working on the Telecom Cloud and Core area for the Asia Pacific and Japan region. He has 19 years of experience in Telecommunications, holding various roles in delivery, technical sales, product management, and field CTO. When not working, Arthur likes to keep active and travel with his family.

About the author: Alex Reznik

Alex Reznik is a Global Principal Architect in Dell Technologies’ Telco Solutions Business organization. In this role, he is focused on helping Dell’s Telco and Enterprise partners navigate the complexities of Edge Cloud strategy and turning the potential of 5G Edge transformation into the reality of business outcomes. Alex is a recognized industry expert in the area of edge computing and a frequent speaker on the subject. He is a co-author of the book "Multi-Access Edge Computing in Action." From March 2017 through February 2021, Alex served as Chair of ETSI’s Multi-Access Edge Computing (MEC) ISG, the leading international standards group focused on enabling edge computing in access networks.

Prior to joining Dell, Alex was a Distinguished Technologist in HPE’s North American Telco organization. In this role, he was involved in various aspects of helping Tier 1 CSPs deploy state-of-the-art flexible infrastructure capable of delivering on the full promises of 5G. Prior to HPE Alex was a Senior Principal Engineer/Senior Director at InterDigital, leading the company’s research and development activities in the area of wireless internet evolution. Since joining InterDigital in 1999, he has been involved in a wide range of projects, including leadership of 3G modem ASIC architecture, design of advanced wireless security systems, coordination of standards strategy in the cognitive networks space, development of advanced IP mobility and heterogeneous access technologies and development of new content management techniques for the mobile edge.

Alex earned his B.S.E.E. Summa Cum Laude from The Cooper Union, S.M. in Electrical Engineering and Computer Science from the Massachusetts Institute of Technology, and Ph.D. in Electrical Engineering from Princeton University. He held a visiting faculty appointment at WINLAB, Rutgers University, where he collaborated on research in cognitive radio, wireless security, and future mobile Internet. He served as the Vice-Chair of the Services Working Group at the Small Cells Forum. Alex is an inventor of over 160 granted U.S. patents and has been awarded numerous awards for Innovation at InterDigital.