Direct from Development: Tech Notes

Reference Architecture: GPU Acceleration for Dell PowerEdge MX7000

Tue, 26 Sep 2023 16:34:19 -0000

Summary

Many of today’s most demanding applications can make use of GPU acceleration. Liqid partnered with Dell Technologies to enable rapid, dynamic provisioning of PCIe GPUs, as well as FPGAs and NVMe storage, to Dell PowerEdge MX7000 compute sleds. The goal is to meet the performance needs of the most accelerator-hungry workloads.

Background

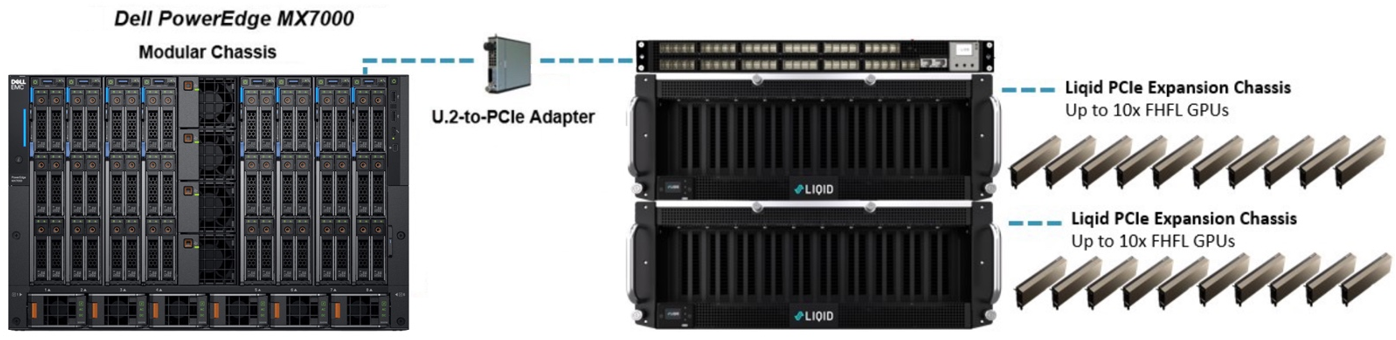

The Dell PowerEdge MX7000 Modular Chassis simplifies the deployment and management of today’s most challenging workloads by allowing IT administrators to dynamically assign, move, and scale shared pools of compute, storage, and networking resources. It lets IT administrators deliver results quickly, without time-consuming infrastructure management and reconfiguration, to meet the ever-changing needs of their end users. For compute-intensive, AI-driven environments and high-value applications, Liqid Matrix software adds physical GPUs to the PowerEdge MX7000 on demand.

GPU acceleration for PowerEdge MX7000

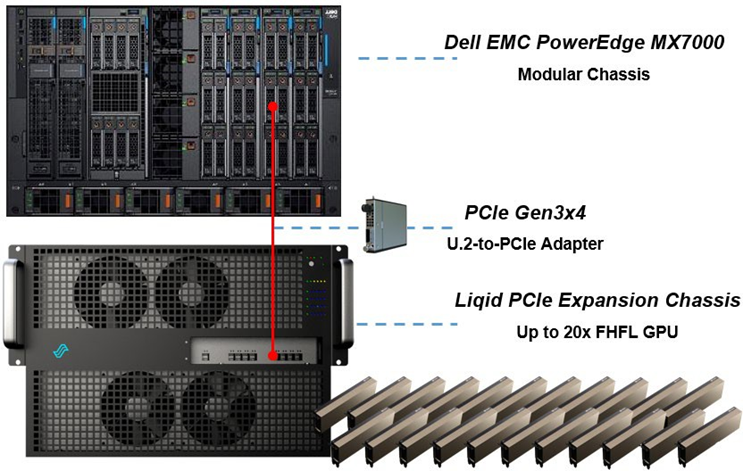

The following figure shows the essential MX7000 GPU expansion components:

Figure 1. Deploying GPUs into a PowerEdge MX7000

Liqid SmartStack Composable Systems for PowerEdge MX7000

Liqid SmartStacks are fully validated Liqid composable solutions designed to meet your most challenging GPU requirements. Available in four sizes, with a maximum capacity of 30 GPUs and 16 servers per system, each SmartStack includes everything you need to deploy GPUs to MX7000 systems.

Liqid SmartStack 4410 Series Technical Specifications

Table 1. Liqid SmartStack Solutions

| | SmartStack 10 | SmartStack 20 | SmartStack 30 | SmartStack 30+ |
|---|---|---|---|---|
| Description | 10 GPU / 4 Host Capacity | 20 GPU / 8 Host Capacity | 30 GPU / 6 Host Capacity | 30 GPU / 16 Host Capacity |
| Supported Device Types | GPU, NVMe, FPGA, DPU | GPU, NVMe, FPGA, DPU | GPU, NVMe, FPGA, DPU | GPU, NVMe, FPGA, DPU |
| Max Devices | 10x full-height, full-length (FHFL) 10.5”, dual-slot | 20x full-height, full-length (FHFL) 10.5”, dual-slot | 30x full-height, full-length (FHFL) 10.5”, dual-slot | 30x full-height, full-length (FHFL) 10.5”, dual-slot |
| Max Hosts Supported | 4x Host Servers | 8x Host Servers | 6x Host Servers | 16x Host Servers |
| Max Composed Devices Per Host | 4x Devices | 4x Devices | 4x Devices | 4x Devices |
| PCIe Expansion Chassis | 1x Liqid EX-4410 PCIe Gen4 | 2x Liqid EX-4410 PCIe Gen4 | 3x Liqid EX-4410 PCIe Gen4 | 3x Liqid EX-4410 PCIe Gen4 |
| PCIe Fabric Switch | None | 1x 48 Port | 1x 48 Port | 2x 48 Port |
| PCIe Host Bus Adapter | PCIe Gen3 x4 per compute sled (1 or more) | PCIe Gen3 x4 per compute sled (1 or more) | PCIe Gen3 x4 per compute sled (1 or more) | PCIe Gen3 x4 per compute sled (1 or more) |
| Rack Units | 5U | 10U | 14U | 15U |

Composable Devices: For a current hardware compatibility list of composable PCIe devices, go to liqid.com/resources/library.

Implementing GPU expansion for MX

GPUs are installed in the PCIe expansion chassis. Next, U.2-to-PCIe Gen3 adapters are added to each compute sled that requires GPU acceleration, and the adapters are cabled to the expansion chassis (Figure 1). Liqid Command Center software then discovers all GPUs, making them ready to be added to a server over native PCIe.

FPGAs and NVMe storage can also be added to compute sleds alongside GPUs. The PCIe expansion chassis and software are available from Dell.

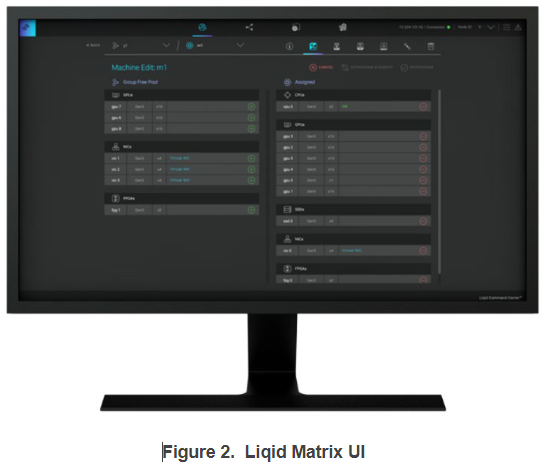

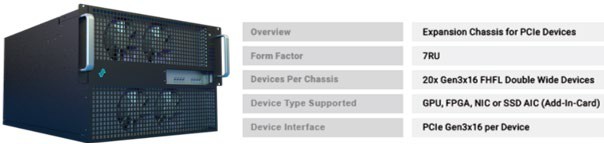

Software-defined GPU deployment

Liqid Matrix software dynamically allocates GPUs to MX compute sleds at the bare-metal level through software composability, and GPU hot plug is supported. Up to four GPUs can be composed to a single compute sled, using the Liqid UI or RESTful API, to meet end-user workload requirements. To the operating system, the GPUs appear as local resources connected directly to the MX compute sled over PCIe (Figure 2). Supported operating systems include Linux, Microsoft Windows, and VMware ESXi. As workload needs change, administrators can use the management software to add or remove resources such as GPUs, NVMe SSDs, and FPGAs on the fly.
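The composition rules above can be pictured with a small in-memory model. This is a hypothetical sketch, not the Liqid Matrix UI or RESTful API. The `DevicePool` class and its methods are invented for illustration. The only rule taken from this note is the limit of four composed devices per host (Table 1).

```python
from dataclasses import dataclass, field

# Per-host limit taken from Table 1 ("Max Composed Devices Per Host").
MAX_DEVICES_PER_HOST = 4


@dataclass
class DevicePool:
    """Toy model of composable PCIe devices; NOT the Liqid Matrix API."""
    device_types: dict[str, str]                            # device ID -> type, e.g. "gpu0" -> "GPU"
    assigned: dict[str, str] = field(default_factory=dict)  # device ID -> host

    def free(self, dev_type: str) -> list[str]:
        """Unassigned devices of one type, in sorted order."""
        return sorted(d for d, t in self.device_types.items()
                      if t == dev_type and d not in self.assigned)

    def host_devices(self, host: str) -> list[str]:
        return sorted(d for d, h in self.assigned.items() if h == host)

    def compose(self, host: str, dev_type: str, count: int) -> list[str]:
        """Attach `count` free devices of `dev_type` to `host`, or raise ValueError."""
        if len(self.host_devices(host)) + count > MAX_DEVICES_PER_HOST:
            raise ValueError(f"{host} would exceed {MAX_DEVICES_PER_HOST} devices")
        available = self.free(dev_type)
        if len(available) < count:
            raise ValueError(f"only {len(available)} free {dev_type} device(s)")
        chosen = available[:count]
        for dev in chosen:
            self.assigned[dev] = host
        return chosen

    def release(self, device: str) -> None:
        """Return a device to the free pool (a hot-unplug in the real system)."""
        self.assigned.pop(device, None)
```

For example, composing four GPUs to one sled from a ten-GPU pool succeeds. A fifth request for the same sled raises `ValueError` until a device is released.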

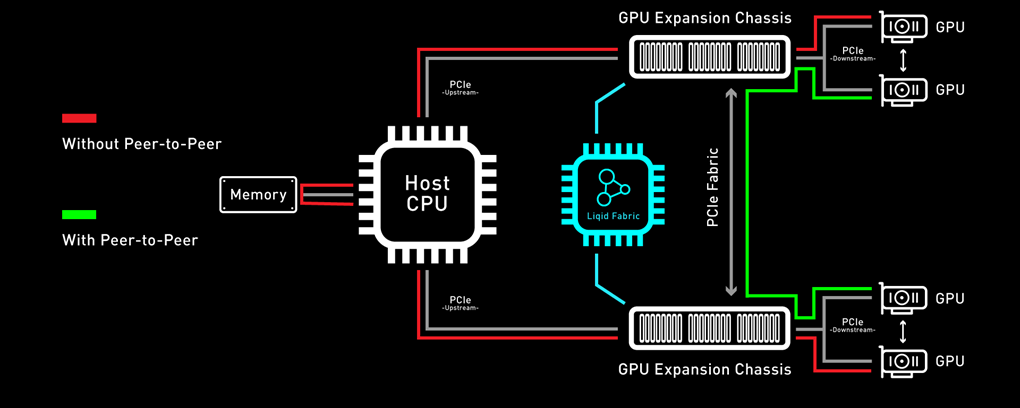

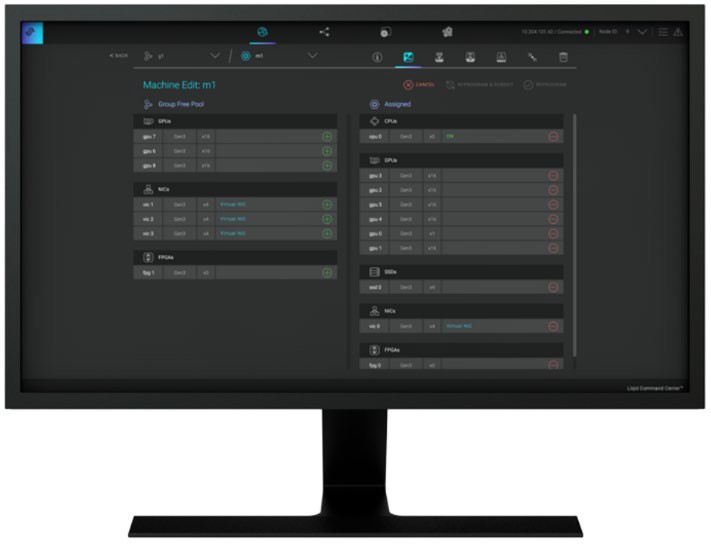

Enabling GPU Peer-2-Peer capability

A fundamental capability of this solution is RDMA peer-to-peer (P2P) transfer between GPU devices. Direct RDMA transfers significantly improve both throughput and latency for the highest-performing GPU-centric applications. Up to a 10x performance improvement has been achieved with RDMA peer-to-peer enabled. Figure 3 provides an overview of how PCIe peer-to-peer works.

Figure 3. Peer-2-Peer performance

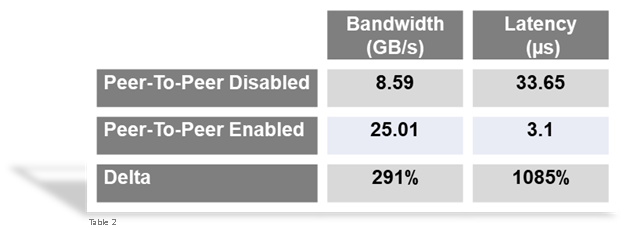

Bypassing the x86 processor and enabling direct RDMA communication between GPUs greatly increases bandwidth and reduces latency. Table 2 outlines the performance expected for GPUs that are composed to a single node with GPU RDMA peer-to-peer enabled.

Table 2. Peer-2-Peer Performance Comparison

| Metric | Peer-to-Peer Disabled | Peer-to-Peer Enabled | Improvement |
|---|---|---|---|
| Bandwidth | 8.6 GB/s | 25.0 GB/s | 3X more bandwidth |
| Latency | 33.7 µs | 3.1 µs | 11X lower latency |
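The "3X" and "11X" figures in Table 2 are rounded ratios of the measured values. The minimal sketch below recomputes them. It then applies a simple latency-plus-bandwidth transfer model, which is an assumption and not part of the measurements, to show why small transfers gain roughly the latency ratio while large transfers approach the bandwidth ratio. GB/s is taken as 10^9 bytes per second.

```python
# Measurements from Table 2 (GPUs composed to a single node)
BW_GBPS = {"p2p_off": 8.6, "p2p_on": 25.0}   # GB/s, higher is better
LAT_US = {"p2p_off": 33.7, "p2p_on": 3.1}    # microseconds, lower is better

bandwidth_gain = BW_GBPS["p2p_on"] / BW_GBPS["p2p_off"]
latency_gain = LAT_US["p2p_off"] / LAT_US["p2p_on"]
print(f"bandwidth {bandwidth_gain:.1f}x higher, latency {latency_gain:.1f}x lower")


def transfer_time_us(size_bytes, mode):
    """Assumed model: fixed latency plus size / bandwidth (not a measurement)."""
    return LAT_US[mode] + size_bytes / (BW_GBPS[mode] * 1e9) * 1e6


for size in (4 * 1024, 1024 * 1024, 64 * 1024 * 1024):
    off, on = transfer_time_us(size, "p2p_off"), transfer_time_us(size, "p2p_on")
    print(f"{size:>10} B: {off:10.1f} us -> {on:10.1f} us ({off / on:.1f}x faster)")
```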

Application Performance

Scalable GPU performance is critical for successful outcomes. Tables 3 and 4 compare the performance of the Dell MX705c compute sled configured with one to four NVIDIA A100 GPUs at two precisions, FP16 and FP32. Throughput increases with each added GPU and approaches linear scaling for some models, such as Transformer-XL.

Table 3. FP16 GPU performance – MX7000 with NVIDIA A100 GPUs, P2P enabled

| FP16 | BERT-Base | BERT-Large | GNMT | NCF | ResNet-50 | Tacotron 2 | Transformer-XL Base | Transformer-XL Large | WaveGlow |
|---|---|---|---|---|---|---|---|---|---|
| 1x A100 | 374 | 119 | 187,689 | 37,422,425 | 1,424 | 37,047 | 37,044 | 16,407 | 198,005 |
| 2x A100 | 638 | 157 | 240,368 | 68,023,242 | 2,627 | 72,631 | 73,661 | 32,694 | 284,709 |
| 3x A100 | 879 | 208 | 313,561 | 85,030,276 | 3,742 | 87,409 | 102,121 | 45,220 | 376,094 |
| 4x A100 | 1,088 | 256 | 379,515 | 98,740,107 | 4,657 | 112,282 | 129,336 | 58,503 | 460,793 |

Table 4. FP32 GPU performance – MX7000 with NVIDIA A100 GPUs, P2P enabled

| FP32 | BERT-Base | BERT-Large | GNMT | NCF | ResNet-50 | Tacotron 2 | Transformer-XL Base | Transformer-XL Large | WaveGlow |
|---|---|---|---|---|---|---|---|---|---|
| 1x A100 | 184 | 55 | 100,612 | 24,117,691 | 891 | 36,953 | 24,394 | 10,520 | 198,237 |
| 2x A100 | 283 | 66 | 115,903 | 38,107,456 | 1,610 | 72,218 | 50,108 | 20,941 | 284,047 |
| 3x A100 | 380 | 88 | 149,359 | 47,133,830 | 2,257 | 84,735 | 66,869 | 28,748 | 370,425 |
| 4x A100 | 464 | 108 | 180,022 | 57,539,993 | 2,840 | 104,398 | 93,394 | 35,927 | 460,492 |
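Scaling efficiency is the speedup over one GPU divided by the number of GPUs. It shows how close these results are to linear scaling (1.0). The tables do not state throughput units, so the minimal sketch below works only with ratios. It uses a few FP16 columns from Table 3.

```python
# FP16 throughput from Table 3 for 1x, 2x, 3x, and 4x A100 (units as reported)
fp16 = {
    "BERT-Base": [374, 638, 879, 1088],
    "ResNet-50": [1424, 2627, 3742, 4657],
    "Transformer-XL Large": [16407, 32694, 45220, 58503],
    "WaveGlow": [198005, 284709, 376094, 460793],
}


def scaling(throughputs):
    """Return (speedup, efficiency) for each GPU count, relative to one GPU."""
    base = throughputs[0]
    return [(t / base, t / base / n) for n, t in enumerate(throughputs, start=1)]


for model, values in fp16.items():
    speedup, efficiency = scaling(values)[-1]
    print(f"{model:22s} 4-GPU speedup {speedup:.2f}x, efficiency {efficiency:.0%}")
```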

Conclusion

Liqid composable GPUs for the Dell PowerEdge MX7000 and other PowerEdge rack servers make it possible to run the most demanding, accelerator-dependent workloads in both new and existing deployments. Liqid collaborated with Dell Technologies Design Solutions to accelerate applications by adding GPUs to Dell MX compute sleds over PCIe.

This reference architecture is available as part of Dell Technologies Design Solutions. To learn more, contact a Design Expert at https://www.delltechnologies.com/en-us/oem/index2.htm#open-contact-form.

Dell PowerEdge C6615 Performance

Thu, 04 Apr 2024 16:51:46 -0000

Authors:

David Dam – Principal Engineering Technologist

Kavya Ar – Sr. Systems Development Engineer

Summary

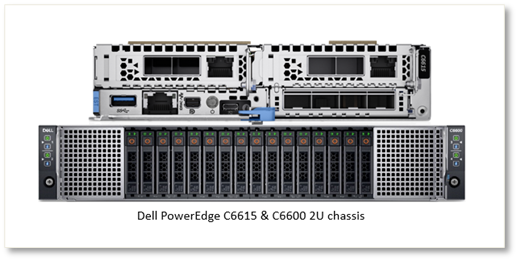

As power and cooling continue to consume a substantial portion of IT budgets, IT departments are placing strong emphasis on power efficiency to minimize Total Cost of Ownership (TCO) when selecting server hardware. This paper assesses the power efficiency and cost-efficiency of the new Dell PowerEdge C6615, a multi-node server in a 2U form factor. Because this form factor balances density and expandability, it is widely used across edge and telecom use cases.

Test configuration

| Server | Processor | CPU Cores | Default TDP | Memory | OS |
|---|---|---|---|---|---|
| PowerEdge C6615 | AMD EPYC 8324P | 32 | 180W | 6 x 64 GB 4800 MT/s | Ubuntu 22.04.2 LTS |
| PowerEdge C6615 | AMD EPYC 8534P | 64 | 200W | 6 x 64 GB 4800 MT/s | Ubuntu 22.04.2 LTS |
| PowerEdge 1S Server | AMD EPYC 9334 | 32 | 240W | 12 x 64 GB 4800 MT/s | Ubuntu 22.04.1 LTS |
| PowerEdge 1S Server | AMD EPYC 9534 | 64 | 280W | 12 x 64 GB 4800 MT/s | Ubuntu 22.04.1 LTS |

AMD STREAM

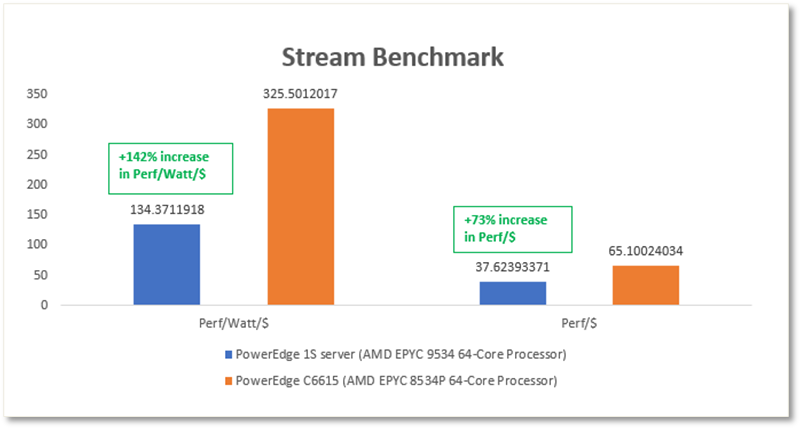

The STREAM benchmark measures a system's memory bandwidth. The Dell PowerEdge C6615 with the AMD EPYC 8534P 64-core processor showed up to a 142% improvement in performance per watt per dollar. It also showed up to a 73% increase in performance per CPU dollar compared to the Dell PowerEdge 1S server with the AMD EPYC 9534 64-core processor[1] (Figure 1).

Figure 1. STREAM results for selected AMD EPYC 64-core processors

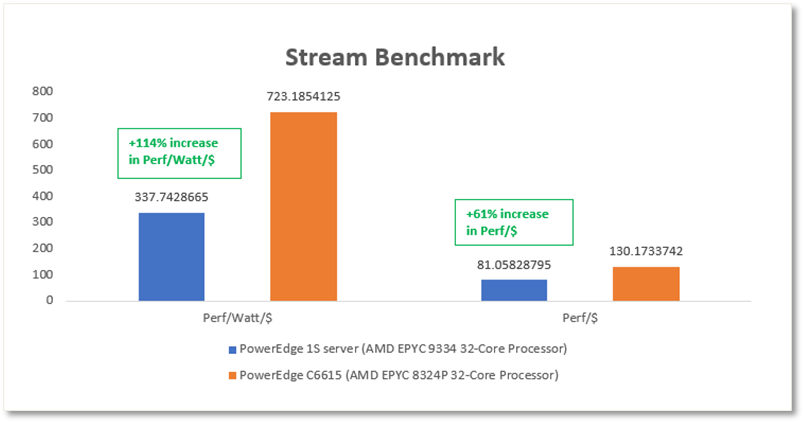

Compared to the Dell PowerEdge 1S server with the AMD EPYC 9334 32-core processor, the C6615 with the AMD EPYC 8324P 32-core processor showed up to a 114% improvement in performance per watt per dollar and up to a 61% improvement in performance per CPU dollar[2] (Figure 2).
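The note does not define how the two cost metrics are calculated. The minimal sketch below assumes that performance per CPU dollar is score divided by CPU price. It further assumes that performance per watt per dollar also divides by the CPU TDP from the test configuration table. Under those assumptions the two metrics differ only by the TDP ratio. The published STREAM pairs then agree within rounding: 73% and 61% per CPU dollar map to about 142% and 115% per watt per dollar, against the 142% and 114% reported.

```python
def perf_per_watt_dollar_gain(perf_per_dollar_gain_pct, tdp_c6615_w, tdp_baseline_w):
    """Convert a perf-per-CPU-dollar gain (%) into a perf-per-watt-per-dollar gain (%).

    Assumption: the "watt" term is CPU TDP, so both metrics share the same
    score and price terms and differ only by the baseline/C6615 TDP ratio.
    """
    ratio = (1 + perf_per_dollar_gain_pct / 100) * (tdp_baseline_w / tdp_c6615_w)
    return (ratio - 1) * 100


# STREAM, 64-core: C6615 at 200 W vs 1S baseline at 280 W, 73% per CPU dollar
print(f"{perf_per_watt_dollar_gain(73, 200, 280):.1f}%")
# STREAM, 32-core: C6615 at 180 W vs 1S baseline at 240 W, 61% per CPU dollar
print(f"{perf_per_watt_dollar_gain(61, 180, 240):.1f}%")
```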

Figure 2. STREAM results for selected AMD EPYC 32-core processors

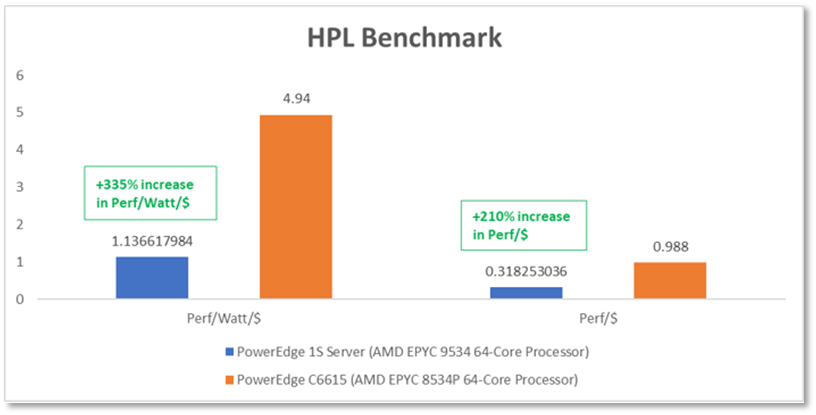

High-Performance Linpack (HPL)

High-Performance Linpack (HPL) is a benchmark used to measure the floating-point computing performance of a computer system. It is specifically designed to assess a system’s ability to solve a dense system of linear equations. HPL is widely used in the high-performance computing (HPC) industry and is considered a standard benchmark for evaluating supercomputers and clusters.
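HPL itself is a distributed-memory MPI code. The minimal, single-process sketch below illustrates only the two ideas needed to read its results. The first is the problem: a dense system Ax = b solved by LU factorization with partial pivoting. The second is the operation count HPL credits when converting run time into FLOP/s, which is (2/3)n³ + (3/2)n². The random matrix, the problem size, and the simple infinity-norm residual check are illustrative choices. They are not HPL's tuned algorithm or its exact scaled-residual test.

```python
import random
import time


def hpl_flop_count(n):
    """Operation count HPL credits for solving an n x n dense system."""
    return (2.0 / 3.0) * n**3 + 1.5 * n**2


def lu_solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting (modifies a, b)."""
    n = len(a)
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(a[i][k]))  # pivot row
        a[k], a[p] = a[p], a[k]
        b[k], b[p] = b[p], b[k]
        for i in range(k + 1, n):
            f = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= f * a[k][j]
            b[i] -= f * b[k]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution on the upper triangle
        s = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - s) / a[i][i]
    return x


n = 200
rng = random.Random(0)
A = [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(n)]
b = [rng.uniform(-0.5, 0.5) for _ in range(n)]
A0, b0 = [row[:] for row in A], b[:]  # keep copies for the residual check

start = time.perf_counter()
x = lu_solve(A, b)
elapsed = time.perf_counter() - start

residual = max(abs(sum(A0[i][j] * x[j] for j in range(n)) - b0[i]) for i in range(n))
print(f"{hpl_flop_count(n) / elapsed / 1e9:.4f} GFLOP/s, max residual {residual:.2e}")
```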

The C6615 with the AMD EPYC 8534P 64-core processor showed strong cost-efficiency. It delivered up to a 335% improvement in performance per watt per dollar and a 210% increase in performance per CPU dollar compared to the PowerEdge 1S server with the AMD EPYC 9534 64-core processor[3] (Figure 3).

Figure 3. HPL results for selected AMD EPYC 64-core processors

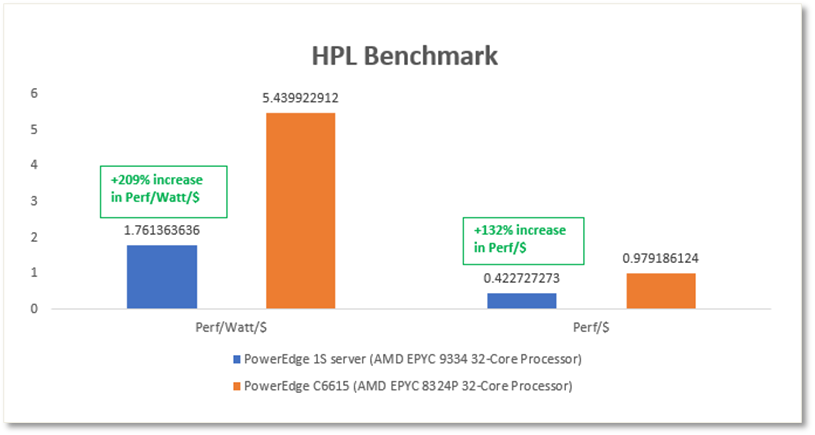

Compared to the PowerEdge 1S server with the AMD EPYC 9334 32-core processor, the C6615 with the AMD EPYC 8324P 32-core processor showed up to a 209% improvement in performance per watt per dollar and a 132% increase in performance per CPU dollar[4] (Figure 4).

Figure 4. HPL results for selected AMD EPYC 32-core processors

SPEC CPU 2017

The SPEC CPU® 2017 benchmark from the Standard Performance Evaluation Corporation (SPEC®) provides a comparative measure of compute-intensive performance using workloads developed from real user applications. It is the industry-standard benchmark suite for measuring and comparing compute-intensive performance, stressing a system's processor, memory subsystem, and compiler. Hardware vendors, the IT industry, computer manufacturers, and government all use it.

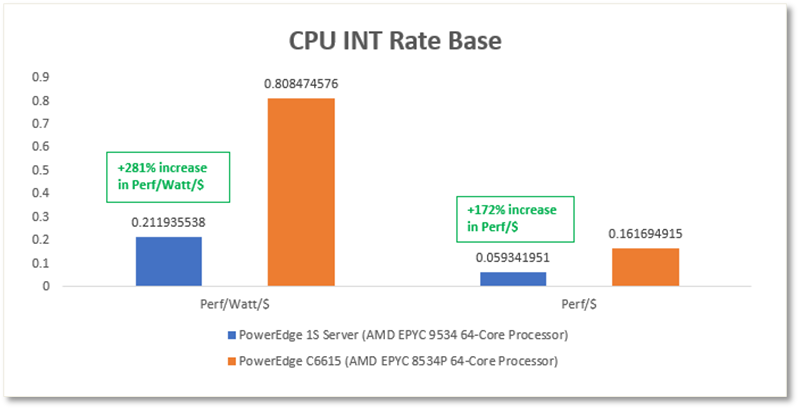

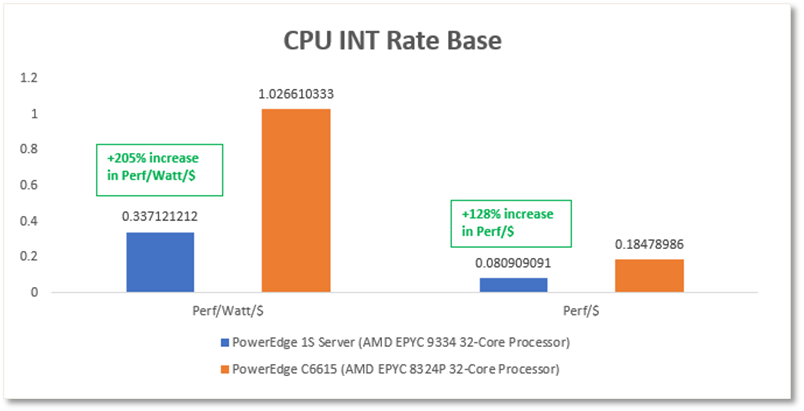

The C6615 with the AMD EPYC 8534P 64-core processor showed up to a 281% improvement in performance per watt per dollar. It also showed a 172% increase in performance per CPU dollar compared to a PowerEdge 1S server with the AMD EPYC 9534 64-core processor[5] (Figure 5).
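References [5] and [6] quote the raw SPEC CPU 2017 scores, so part of the comparison can be checked directly. The minimal sketch below makes the same assumption as above: the "watt" term is CPU TDP. It computes the raw score ratio and the score-per-TDP-watt ratio of the C6615 against the 1S baseline. In both cases the C6615's raw score is lower and its score per TDP watt is only modestly higher. This suggests that the per-dollar advantages reported here depend largely on the CPU price difference, which the note does not state.

```python
# SPEC CPU 2017 scores and CPU TDPs (W) quoted in references [5] and [6]
RESULTS = {
    "64-core": {"c6615": (477, 200), "baseline_1s": (606, 280)},
    "32-core": {"c6615": (277, 180), "baseline_1s": (356, 240)},
}


def compare(c6615, baseline):
    """Return (raw score ratio, score-per-TDP-watt ratio), C6615 vs baseline."""
    (s1, w1), (s2, w2) = c6615, baseline
    return s1 / s2, (s1 / w1) / (s2 / w2)


for label, cfg in RESULTS.items():
    raw, per_watt = compare(cfg["c6615"], cfg["baseline_1s"])
    print(f"{label}: raw score {raw:.2f}x, score per TDP watt {per_watt:.2f}x")
```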

Figure 5. SPECrate 2017 Integer results for selected AMD EPYC 64-core processors

Compared to the Dell PowerEdge 1S server with the AMD EPYC 9334 32-core processor, the C6615 with the AMD EPYC 8324P 32-core processor showed up to a 205% increase in performance per watt per dollar and up to a 128% increase in performance per CPU dollar[6] (Figure 6).

Figure 6. SPECrate 2017 Integer results for selected AMD EPYC 32-core processors

Conclusion

Across these benchmarks, the dense, compute-optimized design of the Dell PowerEdge C6615, with lower-cost, lower-power AMD EPYC 8004 Series processors, delivers higher performance per watt per dollar and higher performance per CPU dollar than a similar 1S server with AMD EPYC 9004 Series processors.

References

[1] Based on Dell internal calculations using the AMD STREAM benchmark on a Dell PowerEdge C6615 with AMD EPYC 8534P 64-core processors (TDP 200W) compared to a Dell PowerEdge 1S server with AMD EPYC 9534 64-core processors (TDP 280W). Actual performance will vary.

[2] Based on Dell internal calculations using the AMD STREAM benchmark on a Dell PowerEdge C6615 with AMD EPYC 8324P 32-core processors (TDP 180W) compared to a Dell PowerEdge 1S server with AMD EPYC 9334 32-core processors (TDP 240W). Actual performance will vary.

[3] Based on Dell internal calculations using the HPL benchmark on a Dell PowerEdge C6615 with AMD EPYC 8534P 64-core processors (TDP 200W) compared to a Dell PowerEdge 1S server with AMD EPYC 9534 64-core processors (TDP 280W). Actual performance will vary.

[4] Based on Dell internal calculations using the HPL benchmark on a Dell PowerEdge C6615 with AMD EPYC 8324P 32-core processors (TDP 180W) compared to a Dell PowerEdge 1S server with AMD EPYC 9334 32-core processors (TDP 240W). Actual performance will vary.

[5] Based on Dell analysis of a submitted SPEC CPU 2017 score of 477 achieved on a Dell PowerEdge C6615 with AMD EPYC 8534P 64-core processors (TDP 200W) compared to a score of 606 on a Dell PowerEdge 1S server with AMD EPYC 9534 64-core processors (TDP 280W). Actual performance will vary.

[6] Based on Dell analysis of a submitted SPEC CPU 2017 score of 277 achieved on a Dell PowerEdge C6615 with AMD EPYC 8324P 32-core processors (TDP 180W) compared to a score of 356 on a Dell PowerEdge 1S server with AMD EPYC 9334 32-core processors (TDP 240W). Actual performance will vary.

PowerEdge MX and NVMe/TCP Storage

Fri, 28 Jul 2023 17:46:12 -0000

Introduction

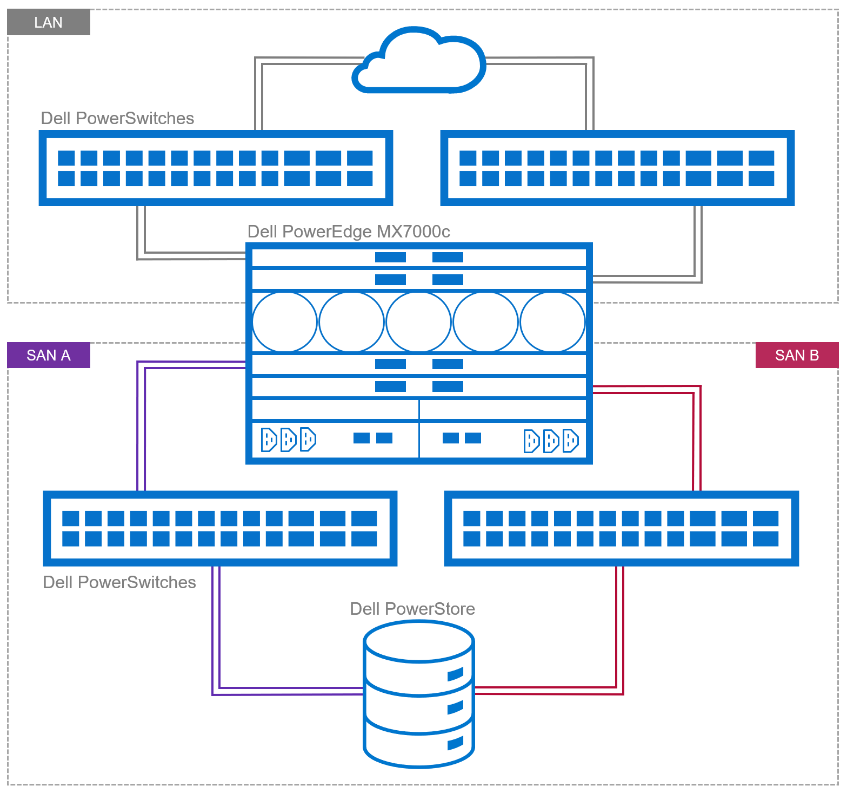

Dell PowerEdge MX was introduced in 2018, and Dell Technologies has continued to add features and functionality to the platform since then. One such addition is support for NVMe over TCP (NVMe/TCP). New applications such as artificial intelligence and machine learning (AI/ML), along with the continuing consolidation of virtual workloads, demand greater storage performance. NVMe/TCP delivers better performance than protocols such as iSCSI, at a lower price point than comparable Fibre Channel (FC) infrastructure (see Transport Performance Comparison). Incorporating this protocol into storage architectures creates opportunities to achieve higher performance over Ethernet and to retire FC infrastructure.

This tech note describes the architecture required to build PowerEdge MX solutions that use NVMe/TCP, simplifying connectivity to external storage arrays by reducing the physical network and streamlining protocols. It describes the value proposition and technology building blocks and provides high-level configuration examples using VMware.

Technology architecture

A Dell NVMe/TCP solution has four components:

- a compute layer with host network interfaces enabled for NVMe/TCP
- a high-performance 25 GbE or 100 GbE switched network
- a storage array that supports NVMe/TCP
- a management application to configure and control access

Dell offers several end-to-end PowerEdge MX-based storage solutions that support NVMe/TCP over either 25 GbE or 100 GbE networking. The solutions include PowerEdge servers, PowerSwitch networking, and several Dell storage arrays, with Dell SmartFabric Storage Software for zoning management.

Figure 1. Example of NVMe/TCP SAN and LAN architecture

Dell continues to validate and expand the matrix of supported hardware and software. The document, NVMe/TCP Host/Storage Interoperability Simple Support Matrix, is available on E-Lab Navigator and updated on a regular basis. It includes details about tested configurations and supported storage arrays, such as PowerStore and PowerMax.

Table 1. Example of supported configurations extracted from NVMe/TCP Host/Storage Interoperability Simple Support Matrix

| Server | NIC | MX Firmware | Storage Array | Boot from SAN | OS |
|---|---|---|---|---|---|
| MX750c, MX760c | Broadcom 57508 dual 100 GbE Mezz card | MX baseline 2.10.00 | PowerMax 2500/8500 OS 10.0.0 / 10.0.1 | No | VMware ESXi 8.0 |
| MX760c | Broadcom 57504 dual 25 GbE Mezz card | MX baseline 2.00.00 | PowerMax 2500/8500 OS 10.0.0 / 10.0.1 | No | VMware ESXi 8.0 |
| MX750c, MX760c | Broadcom 57508 dual 100 GbE Mezz card | MX baseline 2.10.00 | PowerStore 500T/1000T/3000T/7000T/9000T | No | VMware ESXi 8.0 |
| MX760c | Broadcom 57504 dual 25 GbE Mezz card | MX baseline 2.00.00 | PowerStore 500T/1000T/3000T/7000T/9000T | No | VMware ESXi 8.0 |

These are the minimum supported versions. See the Dell support site for the latest approved version.

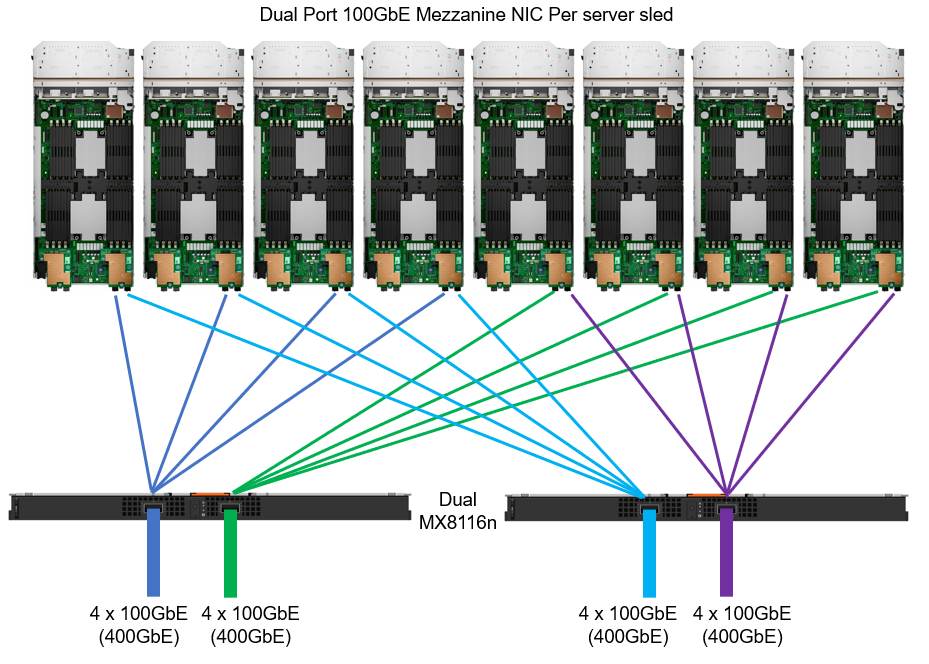

PowerEdge MX

The 100 GbE mezzanine card was added to the PowerEdge MX compute sled connectivity portfolio in April 2023. The PowerEdge MX offers a choice of both 25 GbE and 100 GbE at the compute sled, with a selection of various networking I/O modules.

Figure 2. MX chassis 100 GbE architecture

IP switch fabric

NVMe/TCP traffic uses traditional TCP/IP protocols, meaning the network design can be quite flexible. Often, existing networks can be used. The best-practice topology dedicates switches and device ports for storage area network (SAN) traffic only. In Figure 1, local area network (LAN) traffic connects to a pair of switches northbound from Fabric A in the MX chassis. Fabric B connects to dedicated, air-gapped switches to reach the storage array.

For more details about NVMe/TCP networking, see the SmartFabric Storage Software Deployment Guide.

For 25 GbE connectivity, there are several options. They start with dual- or quad-port mezzanine cards, combined with pass-through modules, fabric expansion modules, or full switches integrated into the PowerEdge MX chassis. For scalability, a pair of external top-of-rack (ToR) switches interfaces with the storage array.

For 100 GbE end-to-end connectivity, the MX8116n Fabric Expander Module is a required chassis component for the PowerEdge MX platform, and a Z9432F-ON ToR switch is required for MX8116n connectivity. The Z9432F-ON supports 32 x 400 GbE ports. With breakouts, it supports 64 x 200 GbE ports, or up to 128 ports at interface speeds from 10 GbE to 100 GbE. So how does the Z9432F-ON work in the MX 100 GbE solution? The 400 GbE ports on the MX8116n connect to ports on the PowerSwitch. The solution scales the network fabric to 14 chassis with 112 PowerEdge MX compute sleds. Each MX7000 chassis uses only four 400 GbE cables, dramatically reducing and simplifying cabling (see Figure 2).
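The cabling figures can be checked with a little arithmetic. In the minimal sketch below, eight sleds per MX7000 and four 400 GbE cables per chassis come from the text. Connecting the chassis to a redundant pair of 32-port Z9432F-ON switches is an assumption, since the note does not state the switch count. The 128-port breakout figure assumes 4 x 100 GbE breakouts.

```python
SLEDS_PER_CHASSIS = 8    # MX7000 compute sled slots (112 sleds / 14 chassis)
CABLES_PER_CHASSIS = 4   # 400 GbE cables from the MX8116n modules
PORTS_PER_SWITCH = 32    # Z9432F-ON 400 GbE ports


def fabric_size(chassis, switches=2):
    """Return (compute sleds, 400 GbE cables, unused switch ports).

    switches=2 assumes a redundant ToR pair; the note does not state the count.
    """
    cables = chassis * CABLES_PER_CHASSIS
    unused = switches * PORTS_PER_SWITCH - cables
    if unused < 0:
        raise ValueError("not enough switch ports for this many chassis")
    return chassis * SLEDS_PER_CHASSIS, cables, unused


# Each port option exposes the same 12.8 Tb/s (128 ports assumes 4 x 100 GbE breakouts)
assert 32 * 400 == 64 * 200 == 128 * 100 == 12_800

print(fabric_size(14))  # (112, 56, 8)
```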

Storage

Taking Dell PowerFlex as an example, NVMe/TCP is supported as follows. PowerFlex storage nodes are joined in storage pools, and similar disk types are typically used within a pool (for example, a pool of NVMe drives or a pool of SAS drives). Volumes are then carved out of that pool, meaning the blocks/chunks/pages of each volume are distributed across every disk in the pool. Regardless of the underlying drive technology, these volumes can be assigned an NVMe/TCP storage protocol interface so that hosts can access them across the network.

Dell PowerStore, an all-NVMe flash storage array, offers another example. A volume can be created and then presented over the network using NVMe/TCP. This allows the performance of the NVMe devices to be shared across the network, offering a true end-to-end NVMe experience.

NVMe/TCP zoning

Ethernet-based NVMe/TCP scales out from tens to hundreds to thousands of fabric endpoints, which is both an advantage and a challenge. At that scale, configuring each endpoint manually quickly becomes arduous, error prone, and costly. FC excels at automatic endpoint discovery and registration. For NVMe/TCP to be a viable alternative to FC in the data center, it must provide FC-like endpoint discovery, registration, and zoning. Dell SmartFabric Storage Software (SFSS) is designed to automate the discovery and registration of hosts and storage arrays that use NVMe/TCP.

Figure 3. Dell SmartFabric Storage Software (SFSS)

Dell SFSS is a centralized discovery controller (CDC). It discovers, registers, and zones the devices on the NVMe/TCP IP SAN. Customers can control connectivity from a single, centralized location instead of having to configure each host and storage array manually.
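The zoning idea can be illustrated with a toy model. This is a hypothetical sketch, not the SFSS data model or API. Endpoints are identified by NVMe Qualified Names (NQNs), and a zone is a set of NQNs. A discovery controller reports to a host only the subsystems that share a zone with it. The NQN strings used with it are placeholders.

```python
from dataclasses import dataclass, field


@dataclass
class ZoneGroup:
    """Toy FC-style zoning keyed by NVMe Qualified Names (NQNs).

    Illustrative only: this is not the SFSS data model or API.
    """
    zones: dict[str, set[str]] = field(default_factory=dict)

    def add_zone(self, name: str, *member_nqns: str) -> None:
        self.zones.setdefault(name, set()).update(member_nqns)

    def allowed(self, host_nqn: str, subsystem_nqn: str) -> bool:
        """A host may reach a subsystem only if at least one zone contains both."""
        pair = {host_nqn, subsystem_nqn}
        return any(pair <= members for members in self.zones.values())

    def discovery_view(self, host_nqn: str, registered: list[str]) -> list[str]:
        """Registered subsystems a discovery controller would report to this host."""
        return [s for s in registered if self.allowed(host_nqn, s)]
```

Centralizing this check in one controller is the design point. A host that queries discovery sees only its zoned subsystems, so no per-host access lists have to be maintained.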

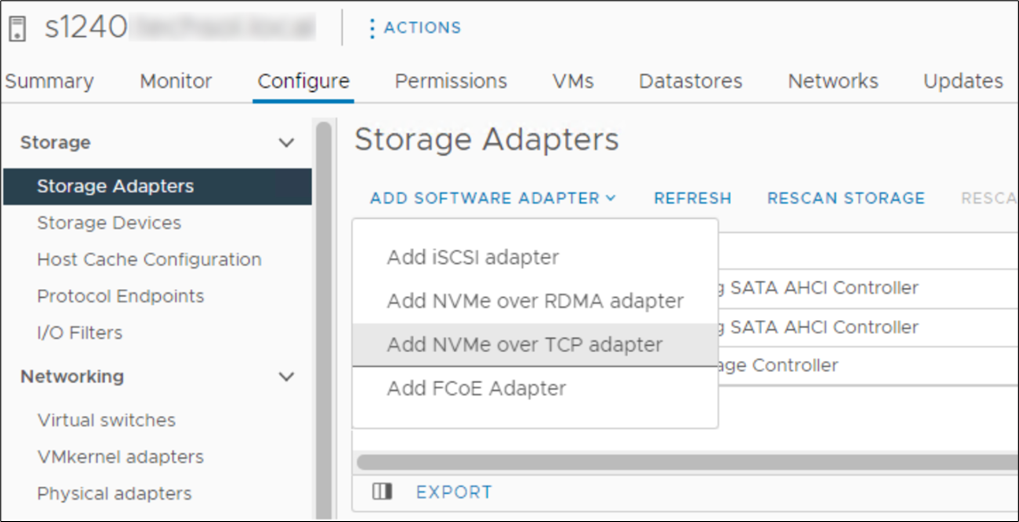

VMware support

In October 2021, VMware announced support for the NVMe/TCP storage protocol with the release of VMware vSphere 7 Update 3, and vSphere 8 also includes this support. Configuring an ESXi host for NVMe/TCP is simple: select the adapter from the standard list of storage adapters for each required host. Once the adapter is selected in vSphere, the new volume appears automatically as a namespace, provided access has been granted through SFSS. Any storage volume accessed through NVMe/TCP can be used to create a standard VMFS datastore.

Figure 4. Adding NVMe/TCP adapter in vSphere

Conclusion

NVMe/TCP is now a practical alternative to iSCSI and a replacement for older FC infrastructure. NVMe/TCP provides higher IOPS at lower latency while consuming less CPU than iSCSI, and it offers performance similar to FC, so it can deliver an immediate benefit. For customers with cost constraints or skill shortages, moving from FC to NVMe/TCP is also a viable choice. Dell SmartFabric Storage Software is the key component that makes scale-out NVMe/TCP infrastructures manageable. SFSS enables an FC-like user experience for NVMe/TCP: hosts and storage subsystems can automatically discover and register with SFSS, so that a user can create zones and zone groups in a familiar FC-like manner. Using Dell PowerEdge MX as the compute element dramatically simplifies physical networking, so customers can realize NVMe/TCP storage benefits more quickly.

References

- SmartFabric Storage Software Deployment Guide

- PowerEdge MX I/O Guide

- SmartFabric Storage Software: Create a Centralized Discovery Controller for NVMe/TCP (video)

- NVMe/TCP Host/Storage Interoperability (E-Lab Support Matrix)

- Dell Technologies Simple Support Matrices (storage E-Lab Support Matrices portal)

Dell PowerEdge MX7000 and MX760c Liquid Cooling for Maximum Efficiency

Tue, 18 Apr 2023 15:21:16 -0000

Introduction

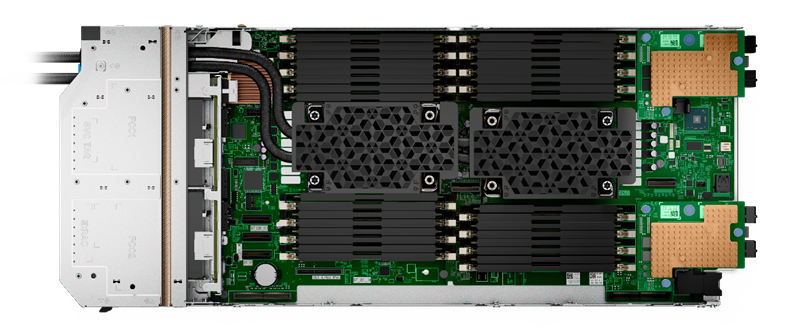

The market trend for high-performance servers to support the most demanding workloads has resulted in newer components, especially CPUs, putting more thermal demands on server design than ever before. Dell’s product engineers have brought new thermal innovation and added the choice of direct liquid cooling (DLC) to the PowerEdge MX7000 modular solution.

To maximize performance and cooling efficiency, customers selecting the MX760c with the latest 4th Generation Intel® Xeon® Scalable processors can now choose liquid cooling or air cooling for CPUs with low to mid thermal design power (TDP). Implementing direct liquid cooling (DLC) brings numerous benefits, including dramatically reducing the demand for cold air. This saves chilling costs and reduces the power used to distribute cold air in the data center.

Improved efficiency

Thermal conductivity is a material's ability to conduct heat, and air's thermal conductivity is much lower than that of liquid. (The thermal conductivity of air is 0.031 W/(m·K); for water, it is a much higher 0.66 W/(m·K). These are average values in SI units.) As a result, DLC-cooled servers can run top-bin, high-TDP CPUs that would otherwise throttle under air cooling alone. It also takes much less energy to pump liquid coolant through a DLC cold-plate loop than to move a high volume of air that might be cooled by a mechanical chiller. That provides overall energy savings at the rack and data center level, which translate to lower operating costs.
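Conductivity governs how well heat moves from the cold plate into the coolant. A related property, volumetric heat capacity, explains the pumping-energy point. It sets how much fluid must be moved to carry a given heat load, from Q = ṁ·c_p·ΔT. The minimal sketch below compares the flow of water and air needed to carry the heat of two 350 W CPUs. It uses textbook property values near room temperature and an assumed 10 K coolant temperature rise; neither comes from this note.

```python
# Textbook property values near room temperature (assumptions, not from the note)
CP_WATER = 4186.0     # specific heat, J/(kg*K)
RHO_WATER = 998.0     # density, kg/m^3
CP_AIR = 1005.0       # specific heat, J/(kg*K)
RHO_AIR = 1.2         # density, kg/m^3
M3S_TO_CFM = 2118.88  # cubic feet per minute in 1 m^3/s


def volumetric_flow(heat_w, cp, rho, delta_t_k):
    """Coolant volume flow (m^3/s) that carries heat_w with a delta_t_k rise.

    From Q = m_dot * cp * dT, with m_dot = rho * V_dot.
    """
    return heat_w / (cp * delta_t_k * rho)


heat = 2 * 350.0  # two 350 W CPUs in one sled
dt = 10.0         # assumed coolant temperature rise, K
water = volumetric_flow(heat, CP_WATER, RHO_WATER, dt)
air = volumetric_flow(heat, CP_AIR, RHO_AIR, dt)
print(f"water {water * 60_000:.2f} L/min vs air {air * M3S_TO_CFM:.0f} CFM "
      f"({air / water:.0f}x more volume for air)")
```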

While Dell has offered DLC-cooled servers in previous generations, the MX DLC solution is completely new. It uses the latest cold-plate loop design with Leak Sense, a proprietary method of detecting and reporting any coolant leaks in the server node through an iDRAC alert.

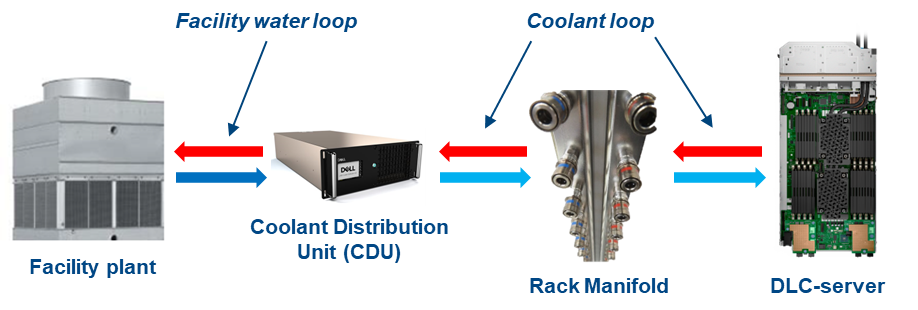

Figure 1. Liquid-cooled PowerEdge MX760c with DLC heat sinks and pipework

The first liquid-cooled Dell server was completed more than ten years ago for a large-scale web company running a dense computer farm. Since then, we have made DLC available on a broad range of PowerEdge platforms, available globally. DLC solutions consist of the server, rack, and rack manifolds to direct coolant to each of the units in a rack, and a Coolant Distribution Unit (CDU). The DLC CDU is connected to the data center water loop and exchanges heat from the rack to the facility water supply. With customers demanding higher levels of performance while also aiming to reduce carbon emissions and energy costs, liquid cooling adoption continues to accelerate. Liquid cooling’s lower energy usage with lower OPEX cost decreases TCO and could produce an ROI within 12 to 24 months depending on the environment.

Table 1. Sample configurations highlighting low fan requirement and power saved by DLC configurations

| CPU SKU | 205 W (air cooling) | 225 W (air cooling) | 270 W (air cooling) | 270 W (DLC module) | 300 W (DLC module) | 350 W (DLC module) |
|---|---|---|---|---|---|---|
| Rear fan power / idle CPU load | 82 W, 33% duty | 82 W, 33% duty | 82 W, 33% duty | 82 W, 33% duty | 82 W, 33% duty | 82 W, 33% duty |
| Rear fan power / 50% CPU load | 185.7 W, 50% duty | 185.7 W, 50% duty | 485.3 W, 50% duty | 82 W, 50% duty | 82 W, 33% duty | 82 W, 33% duty |
| Rear fan power / 100% CPU/memory/drive load | 1076.8 W, 100% duty | 1076.8 W, 100% duty | 1076.8 W, 100% duty | 111.7 W, 39% duty | 111.7 W, 39% duty | 111.7 W, 39% duty |

Results are based on a four-drive backplane configuration: 4 x 1.92 TB NVMe drives + 24 x 64 GB DDR5 + 2 x 25 Gb mezzanine cards.
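The fan-power gap in Table 1 is easiest to read at full load. The minimal sketch below compares the two 270 W CPU columns, the only TDP offered with both cooling types. The annual-energy line is hypothetical: it assumes the full-load condition is sustained around the clock, which real workloads rarely do.

```python
# Rear fan power at 100% CPU/memory/drive load for the 270 W CPU, from Table 1
AIR_FAN_W = 1076.8   # air cooling, 100% fan duty
DLC_FAN_W = 111.7    # DLC module, 39% fan duty

saved_w = AIR_FAN_W - DLC_FAN_W
print(f"fan power saved: {saved_w:.1f} W ({saved_w / AIR_FAN_W:.1%} lower)")

# Hypothetical: energy over a year if full load were sustained 24 x 7
HOURS_PER_YEAR = 24 * 365
print(f"energy saved: {saved_w * HOURS_PER_YEAR / 1000:,.0f} kWh/year")
```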

Table 2. PowerEdge MX CPU details (offered liquid cooled only)

| CPU | TDP | Base frequency | Max Turbo frequency | Cache | Cores |
|---|---|---|---|---|---|
| 6458Q | 350 W | 3.10 GHz | 4.00 GHz | 60 MB | 32 |
| 8458P | 350 W | 2.70 GHz | 3.80 GHz | 82.5 MB | 44 |
| 8468 | 350 W | 2.10 GHz | 3.80 GHz | 105 MB | 48 |
| 8468V | 330 W | 2.40 GHz | 3.80 GHz | 97.5 MB | 48 |
| 8470 | 350 W | 2.00 GHz | 3.80 GHz | 105 MB | 52 |
| 8470Q | 350 W | 2.10 GHz | 3.80 GHz | 105 MB | 52 |
| 8480+ | 350 W | 2.00 GHz | 3.80 GHz | 105 MB | 56 |

A liquid cooling solution is limited to a four-drive backplane, E3.S backplane, or diskless configuration. A liquid cooling solution can be provided for all CPU SKUs to support various performance requirements.

Customers can monitor and manage server and chassis power plus thermal data. This information, supplied by the MX chassis and iDRACs, is collected by OpenManage Power Manager and can be reported per individual server, rack, row, and data center. This data can be used to review server power efficiency and locate thermal anomalies such as hotspots. Power Manager also offers additional features, including power capping, carbon emission calculation, and leak detection alert with action automation.

Total solution with direct liquid cooling

The MX760c uses a passive cold-plate loop with supporting liquid cooling infrastructure to capture and remove the heat from the two CPUs. The following image highlights the elements in a complete DLC solution. While customers must provide a facility water connection, a service partner or infrastructure specialist typically provides the remaining solution pieces.

Figure 2. DLC solution elements

Dell customers can now benefit from a pre-integrated DLC rack solution for MX that eliminates the complexity and risk of correctly selecting and installing these pieces. The DLC3000 rack solution for MX includes a rack, a custom MX rack manifold, an in-rack CDU, and each MX chassis and DLC-enabled compute node, pre-installed and tested. The rack solution is then delivered to the customer’s data center floor, where the Dell services team connects the rack to facility water and ensures full operation. Finally, Dell ProSupport maintenance and warranty coverage backs everything in the rack to make the whole experience as simple as possible.

Figure 3. DLC3000 MX rack solution (front and rear views)

With the DLC solution, the pre-integrated rack can support up to four MX chassis and 32 compute sleds. With top-bin 350 W 4th Generation Xeon CPUs, that translates to over 22 kW of CPU power captured by the DLC cooling solution. It is a major leap in capability and performance, now available for Dell customers.
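The 22 kW figure follows from the rack's sled count and the CPU TDP. The minimal sketch below shows the arithmetic. It counts only CPU TDP, so memory, drives, and other components add further heat that is not included here.

```python
CHASSIS_PER_RACK = 4
SLEDS_PER_CHASSIS = 8
CPUS_PER_SLED = 2    # the MX760c cold-plate loop cools two CPUs
CPU_TDP_W = 350      # top-bin 4th Gen Xeon SKUs in Table 2

sleds = CHASSIS_PER_RACK * SLEDS_PER_CHASSIS
cpu_heat_kw = sleds * CPUS_PER_SLED * CPU_TDP_W / 1000
print(sleds, cpu_heat_kw)  # 32 22.4
```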

Conclusion

As Dell offers the 4th generation Intel CPU in air-cooled and liquid-cooled configurations for use with the PowerEdge MX, customers need to review the choice between traditional air cooling and DLC, and understand the benefits of both to make an informed decision. Organizations need to consider server workload demands, capital expenditures (CAPEX) plus operating expense (OPEX), cost of power, and cost of cooling to understand the full life-cycle costs and determine whether air cooling or DLC provides a better TCO.

References

- Tech Talk Video: MX760c DLC walkthrough

- Unlock New MX CPU and Storage Configurations with a Thermally Optimized Air-Cooled Chassis

- The Future of Server Cooling—Part 1. The History of Server and Data Center Cooling Technologies

- Dell PowerEdge MX760c Technical Guide

PowerEdge MX7000 and OpenManage Enterprise–Modular Edition Advanced License

Wed, 22 Feb 2023 18:09:49 -0000

Summary

Dell OpenManage Enterprise–Modular runs on the Dell PowerEdge MX7000 management module. It facilitates configuration and management of PowerEdge MX chassis through a single web-based GUI, a CLI, and an API. Script examples in Python and PowerShell that use the RACADM CLI and REST API are available on the Dell Technologies GitHub. OpenManage Enterprise–Modular is used to monitor and manage the chassis and chassis components such as compute sleds, network devices, I/O modules, and local storage devices.
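As a starting point for API scripting, the minimal sketch below uses only the Python standard library. The endpoint paths (`/api/SessionService/Sessions`, `/api/DeviceService/Devices`), the session body fields, and the `X-Auth-Token` header reflect the OpenManage Enterprise–Modular RESTful API Guide as understood here. Treat them as assumptions and confirm them against the guide for your firmware. The host name and credentials are hypothetical. TLS verification is left on, so a chassis with a self-signed certificate needs its CA added to the local trust store.

```python
import json
import urllib.request


def session_request(host, username, password):
    """Build the POST that opens an API session (endpoint per the API guide)."""
    body = json.dumps({"UserName": username, "Password": password,
                       "SessionType": "API"}).encode()
    return urllib.request.Request(
        f"https://{host}/api/SessionService/Sessions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def get_json(host, path, token):
    """GET an API path using the X-Auth-Token returned by the session call."""
    req = urllib.request.Request(f"https://{host}{path}",
                                 headers={"X-Auth-Token": token})
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


# Usage against a real chassis (hypothetical host name and credentials):
# with urllib.request.urlopen(session_request("mx-chassis.example", "root", "...")) as r:
#     token = r.headers["X-Auth-Token"]
# devices = get_json("mx-chassis.example", "/api/DeviceService/Devices", token)
```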

The following additional OpenManage Enterprise–Modular features are available when the Advanced license is installed:

- OpenID Connect

- Telemetry

- RSA multifactor authentication

- Automatic certificate renewal

OpenID Connect

OpenID Connect (OIDC) is a solution that supports single sign-on (SSO). The following table lists the predefined roles that must be configured in the OIDC provider for OIDC users to log in to OpenManage Enterprise–Modular:

Table 1. OIDC predefined roles

| Role in OpenManage Enterprise–Modular | Role in OIDC provider | Description |
|---|---|---|
| CHASSIS_ADMINISTRATOR | CA | Can perform all tasks on the chassis |
| COMPUTE_MANAGER | CM | Can deploy services from a template for compute sleds and perform tasks on the service |
| STORAGE_MANAGER | SM | Can perform tasks on storage sleds in the chassis |
| FABRIC_MANAGER | FM | Can perform tasks that are related to fabrics |
| VIEWER | VE | Has read-only access |

Telemetry

Chassis telemetry, including power consumption, thermal data, and fan speeds, can be passed to third-party analytics solutions such as Splunk. More than 30 metrics are provided as granular time-series data that can be streamed rather than polled.

RSA multifactor authentication

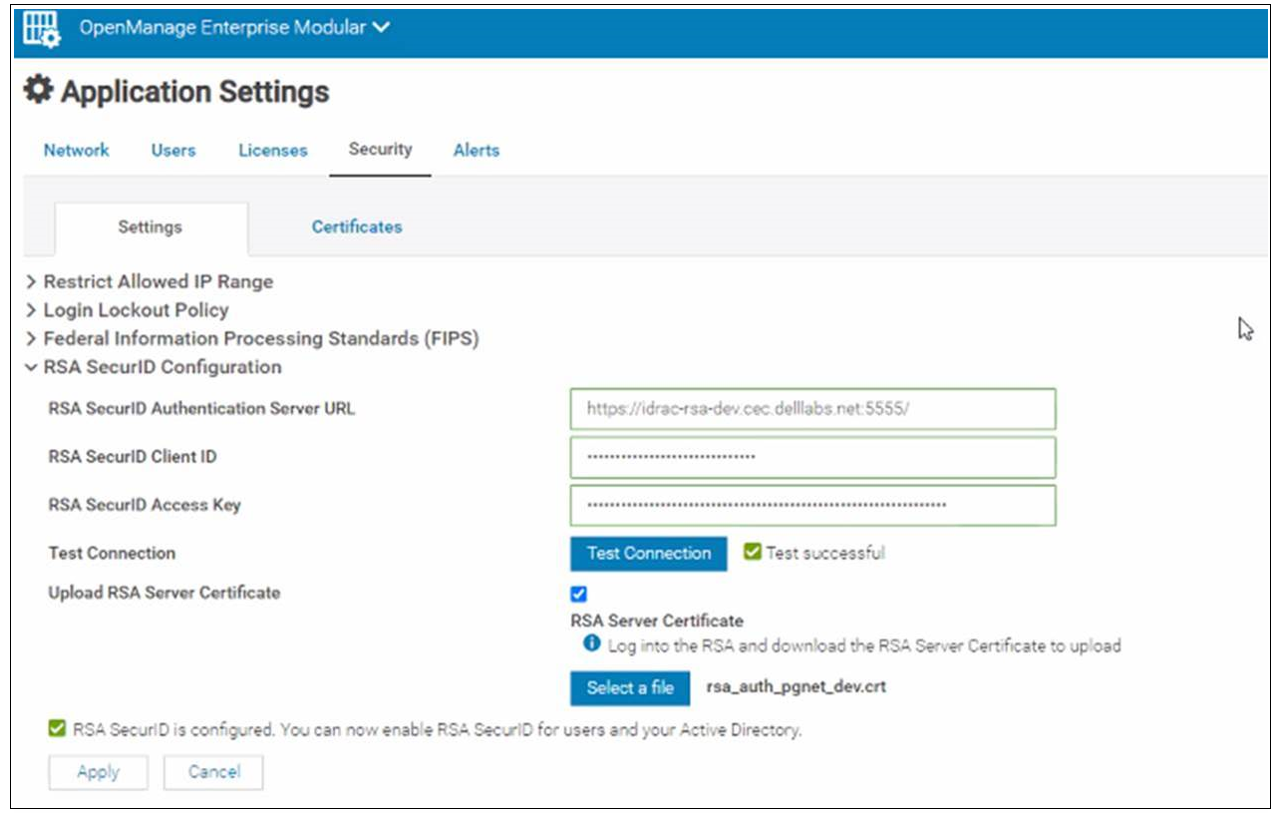

RSA SecurID can be used as an additional means of authenticating a user on a system. OpenManage Enterprise–Modular Edition with the Advanced license supports RSA SecurID as a two-factor authentication (2FA) method.

Figure 1. OpenManage Enterprise–Modular RSA configuration

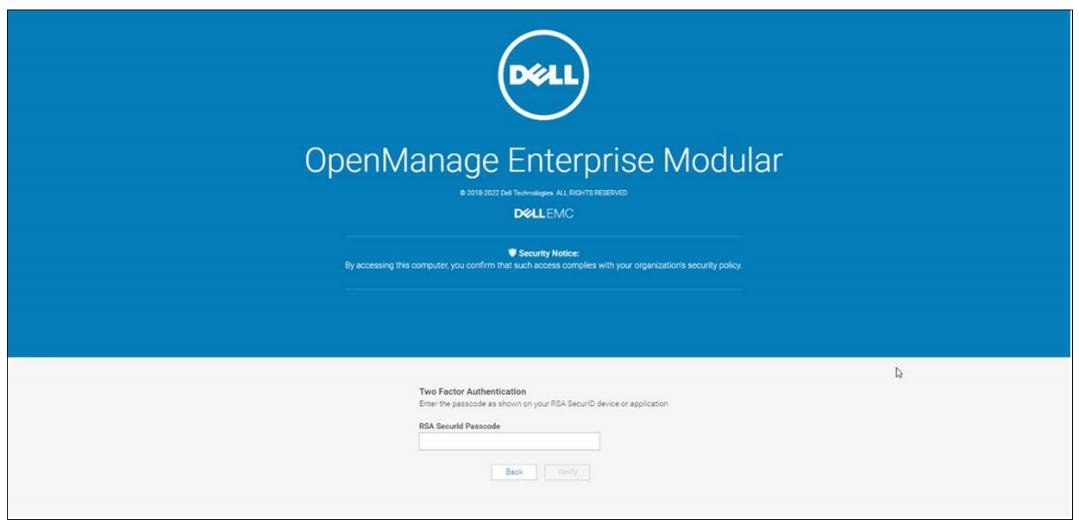

Figure 2. OpenManage Enterprise–Modular login using RSA two-factor authentication

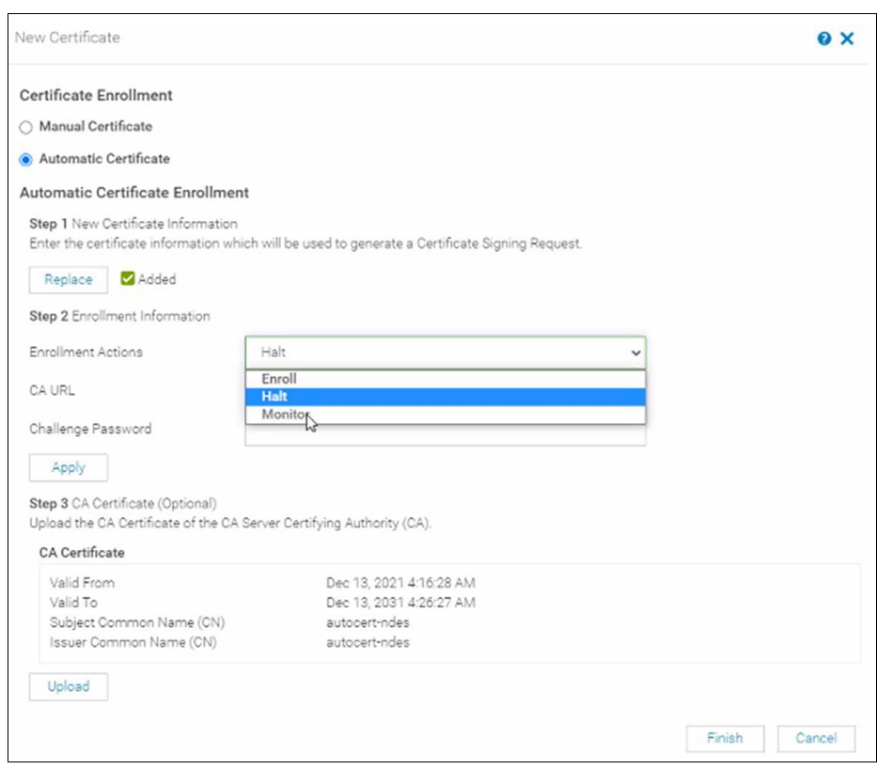

Automatic certificate renewal

The automatic certificate enrollment feature of OpenManage Enterprise–Modular enables fully automated installation and renewal of the certificates used by the web server. When this feature is enabled, the existing web server certificate is replaced by a new certificate obtained through a Simple Certificate Enrollment Protocol (SCEP) client. SCEP is a standard protocol for managing certificates for large numbers of network devices through an automatic enrollment process. OpenManage Enterprise–Modular interacts with SCEP-compatible servers, such as the Microsoft Network Device Enrollment Service (NDES), to automatically maintain SSL and TLS certificates.

Figure 3. Automatic certificate configuration

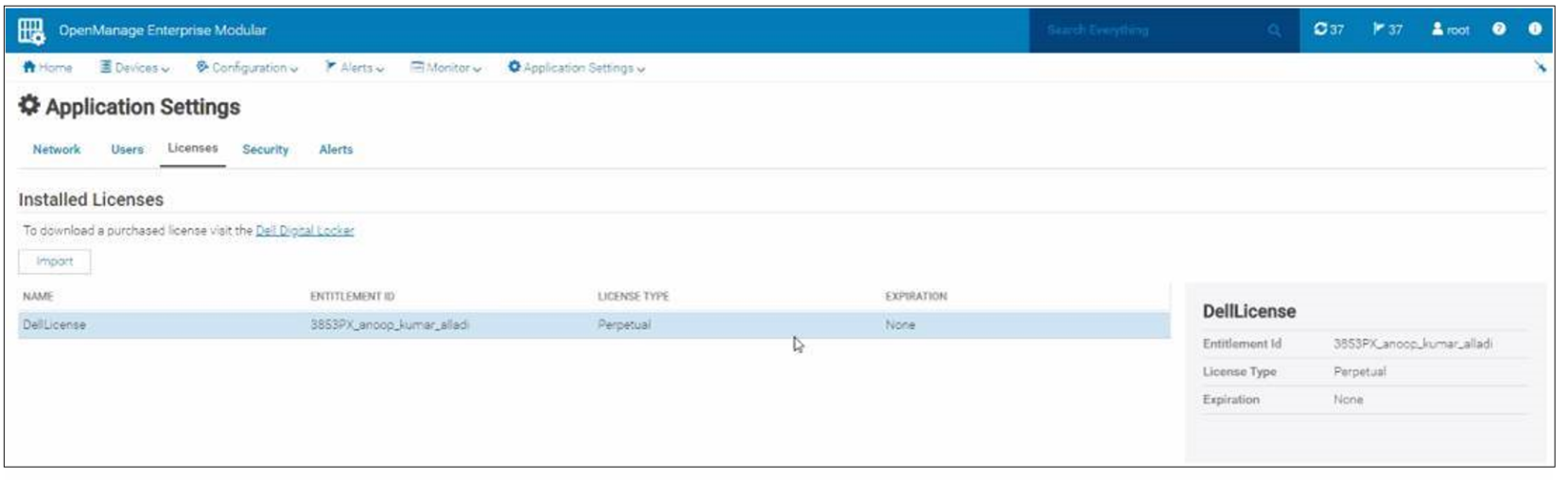
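Automatic renewal means the SCEP client re-enrolls before the current certificate expires. The following Python sketch shows only that renewal-window decision, using the standard library's `ssl.cert_time_to_seconds` to parse an X.509 `notAfter` string. The 30-day window and the `renewal_due` helper are hypothetical illustrations, not OME-M settings, and the enrollment exchange itself (specified in RFC 8894) is not shown.

```python
import ssl
import time
from typing import Optional

RENEWAL_WINDOW_DAYS = 30  # hypothetical threshold, not an OME-M default


def renewal_due(not_after: str, now: Optional[float] = None,
                window_days: int = RENEWAL_WINDOW_DAYS) -> bool:
    """Return True when the certificate expires within `window_days` of `now`.

    `not_after` uses the format returned by ssl.SSLSocket.getpeercert(),
    for example "Mar 31 00:00:00 2024 GMT".
    """
    expires = ssl.cert_time_to_seconds(not_after)  # seconds since the epoch (UTC)
    if now is None:
        now = time.time()
    return expires - now <= window_days * 86400


expiry = ssl.cert_time_to_seconds("Mar 31 00:00:00 2024 GMT")
print(renewal_due("Mar 31 00:00:00 2024 GMT", now=expiry - 10 * 86400))  # True
```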

Reviewing entitlement

The license is stored on the management controller and can be imported or viewed as shown in the following figure. The license can be factory-installed or supplied through the Dell Digital Locker.

Figure 4. Advanced license installed

References

- OpenManage Enterprise–Modular documentation: Dell OpenManage Enterprise Modular User's Guide

- Interactive API explorer: Dell API Catalogue Guide

- API documentation: OpenManage Enterprise Modular RESTful API Guide

- Script examples using OpenManage Enterprise–Modular REST APIs: GitHub - Dell

- White paper detailing using PingFederate provider for OpenManage Enterprise login: Configuring OpenID Connect

Unlock New MX CPU and Storage Configurations with a Thermally Optimized Air-Cooled Chassis

Fri, 03 Mar 2023 20:08:02 -0000


As CPU power continues to increase across the server industry, Dell Technologies continues to offer customers feature-rich air-cooled configurations. Dell Engineering has applied thermal innovation and machine learning to the Dell PowerEdge MX chassis to support the MX760c server sled with a broad range of 4th Gen Intel® Xeon® Scalable processors and local storage configurations.

This Direct from Development tech note describes the new capabilities using air cooling that Dell has added to the PowerEdge MX configurations.

Introduction

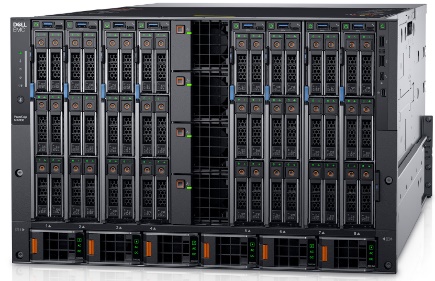

The PowerEdge MX7000 is a modular chassis that allows customers to combine compute, storage, networking, and management resources to meet their specific workload needs. New technologies, including CPUs that draw more power per server sled, continually push the limits of which feature-rich configurations can be air-cooled. Dell Engineering used machine learning combined with next-generation fans to offer high-performance 4th Gen Intel® Xeon® Scalable processors in an air-cooled chassis with more local storage configurations than previously available.

Dell Engineering expertise

There are 8! = 40,320 possible sled permutations in the 8-slot MX chassis. Dell Engineering conducted a Design of Experiments (DOE) to train a machine learning model that dynamically calculates the airflow cooling capacity for each of the eight slots. This technology enables Dell to maximize the shared cooling infrastructure of the MX7000, unlock configurations that were previously not possible, and provide clear guidance to customers about how to thermally optimize their chassis. When a chassis configuration is optimized for cooling, the fans run more efficiently at lower speeds across the server workload, which lowers fan power, reduces cooling costs, and decreases chassis acoustics.
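The 8! figure counts every ordering of eight distinguishable sleds. When several sleds share an identical configuration, swapping them does not change the airflow picture, so the number of distinct layouts is the multinomial coefficient n! / (k1! k2! ...). A short Python sketch of both counts, under the simplifying assumption that each sled is characterized only by a configuration label (the labels used here are hypothetical):

```python
from collections import Counter
from math import factorial


def slot_arrangements(sleds: list) -> int:
    """Count distinct ways to place the given sleds into len(sleds) slots.

    Sleds with the same label are treated as interchangeable, giving
    n! / (k1! * k2! * ...); with all-distinct labels this is just n!.
    """
    total = factorial(len(sleds))
    for count in Counter(sleds).values():
        total //= factorial(count)  # exact: each partial quotient is an integer
    return total


print(slot_arrangements(list("ABCDEFGH")))                  # 40320
print(slot_arrangements(["6-drive"] * 4 + ["4-drive"] * 4))  # 70
```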

Thermally optimized chassis

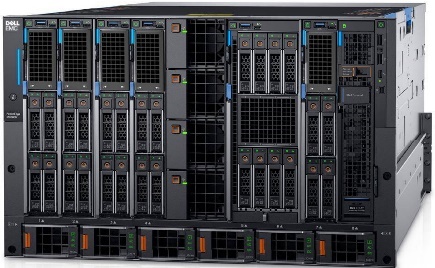

The ability of the MX7000 chassis to air-cool the eight slots is directly affected by the storage configuration of each sled and by the placement of sleds in the chassis. For example, pulling air through a sled with six hard drives is harder than pulling it through a sled with four. Machine learning is built into the sled and chassis firmware to dynamically analyze the ability of the chassis to deliver air cooling to each sled.

A consistent storage configuration maximizes cooling across all sleds and enables the MX760c to support up to six 2.5-in. storage devices with the latest 4th Gen Intel® Xeon® CPUs.

A varied storage configuration with MX760c sleds enables support for up to four 2.5-in. storage devices to maximize cooling through each sled.
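The two storage rules above can be expressed as a simple check. This Python sketch encodes only the limits stated in this note (up to six 2.5-in. devices per MX760c sled when every sled has the same storage configuration, up to four otherwise). The function names are hypothetical, "same configuration" is simplified to "same drive count", and the real limits also depend on CPU choice, slot placement, and ambient temperature, which EIPT and the chassis firmware evaluate.

```python
def max_drives_per_sled(drive_counts: list) -> int:
    """Per-sled 2.5-in. device limit for MX760c sleds under the two stated rules.

    Simplifying assumption: a configuration is "consistent" when every
    populated sled has the same drive count.
    """
    consistent = len(set(drive_counts)) <= 1
    return 6 if consistent else 4


def config_supported(drive_counts: list) -> bool:
    """True if no sled exceeds the limit that applies to this chassis mix."""
    return max(drive_counts, default=0) <= max_drives_per_sled(drive_counts)


print(config_supported([6] * 8))                   # True: consistent, six drives each
print(config_supported([6, 4, 4, 4, 4, 4, 4, 4]))  # False: varied, so the limit is four
```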

MX7000 air-cooling enhancements

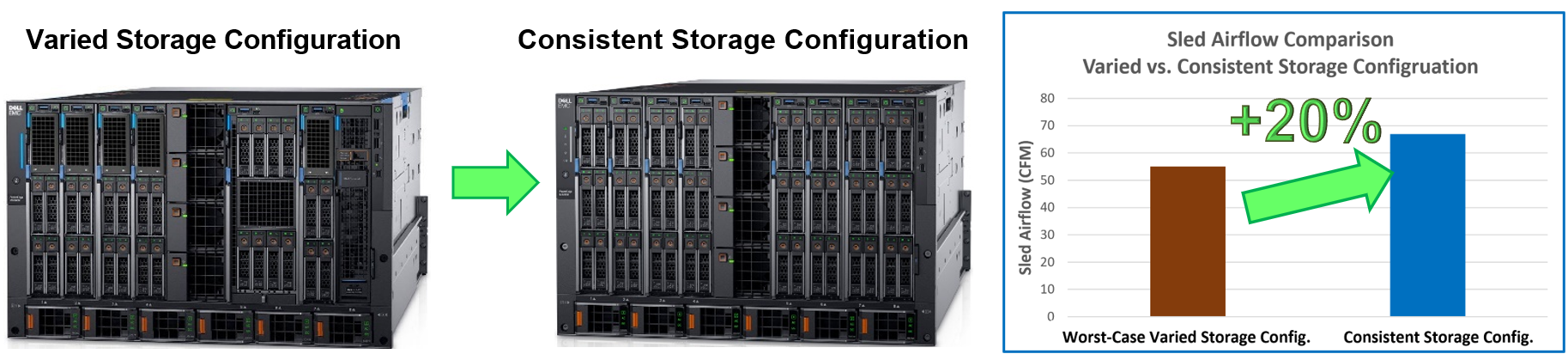

MX7000 chassis and MX sleds introduced the capability to dynamically calculate the cooling based on the chassis configuration. This capability enabled Dell to offer a thermally optimized chassis with a consistent storage configuration that increases cooling for sleds by 20 percent. Dell used this additional cooling capability to offer high-power CPUs with storage configurations that were not supported by previous generations.

The industry trend of increasing power per node every generation has significantly challenged the ability to deliver air-cooled solutions. The MX7000 chassis introduced the next-generation Gold Grade chassis fans with the MX760c sleds to provide an air-cooled solution with the latest high-powered CPUs. Gold Grade fans deliver 25 percent more cooling per sled than the previous-generation Silver Grade fans.

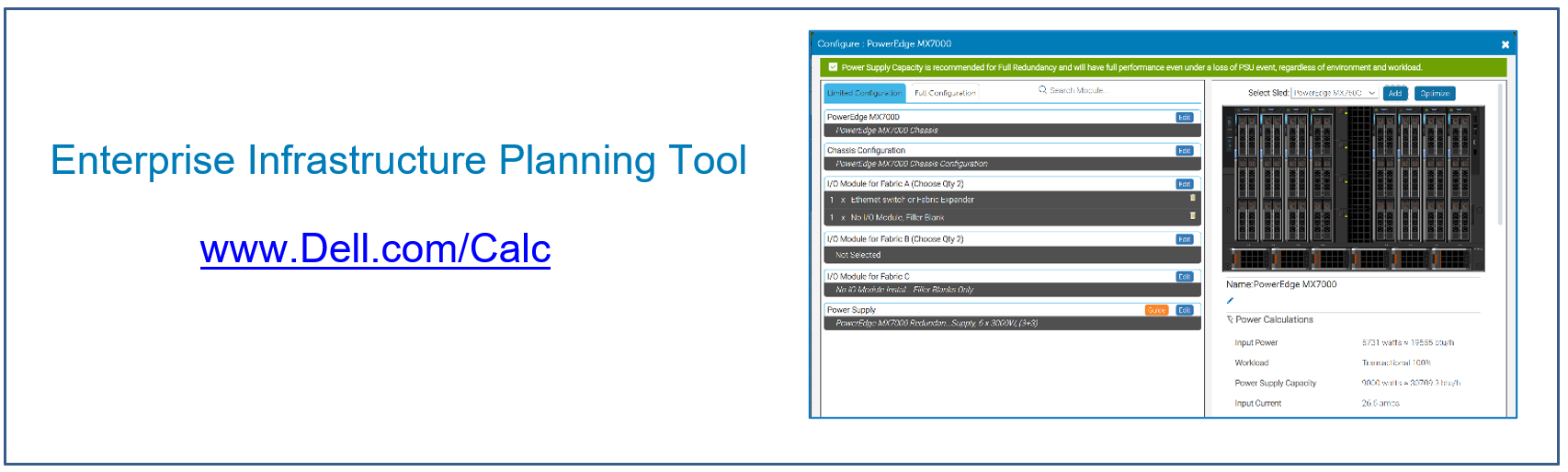

Enterprise Infrastructure Planning Tool

The Dell Enterprise Infrastructure Planning Tool (EIPT) helps IT professionals plan and tune their systems and infrastructure for maximum efficiency. Customers can model their customized MX7000 chassis and sled configurations in EIPT. The trained machine learning model enables the tool to identify the maximum data center ambient temperature supported by the sleds. It also identifies the most thermally optimized configuration when sleds have a varied storage configuration. This means that new and existing customers can identify the most efficient sled-to-slot configuration to optimize their chassis for maximum cooling capability while lowering power, costs, and fan noise.

Conclusion

Dell continues to deliver innovative solutions that expand the air-cooled feature-rich configuration choices for the PowerEdge MX7000 chassis and server sleds. Dell Engineering combined machine learning technology with next-generation fans to provide customers the latest high-performance CPUs with more local storage configurations than previous generations in an air-cooled chassis. In addition to the expanded air-cooling configurations, Dell also offers Direct Liquid Cooling (DLC) for the PowerEdge MX7000 chassis and server sleds. The features and potential benefits of DLC are discussed in a separate Direct from Development tech note.

References

- Tech Talk Video: The MX7000 Introduces a New Thermal Innovation

- Direct from Development Tech Note: The History of Server and Data Center Cooling Technologies

Reference Architecture: Acceleration over PCIe for Dell EMC PowerEdge MX7000

Mon, 16 Jan 2023 22:12:40 -0000


Summary

Many of today’s most demanding applications can make use of PCIe acceleration. Liqid technology, designed on Dell Technologies platforms, enables the rapid and dynamic provisioning of PCIe resources such as GPUs, FPGAs, or NVMe storage to Dell EMC PowerEdge MX7000 compute sleds, ensuring that workload performance needs are met for the most accelerator-hungry applications. Request a demo or quote from a Dell Technologies Design Solutions Expert through the Design Solution Portal.

Background

The Dell EMC PowerEdge MX7000 Modular Chassis simplifies the deployment and management of today’s most challenging workloads by allowing IT administrators to dynamically assign, move, and scale shared pools of compute, storage, and networking resources. It gives IT administrators the ability to deliver fast results without the burden of managing and reconfiguring infrastructure to meet the ever-changing needs of their end users. Adding PCIe infrastructure to this managed pool of resources with Liqid technology designed on the Dell EMC MX7000 extends the promise of software-defined composability to today’s AI-driven compute environments and high-value applications.

GPU Acceleration for PowerEdge MX7000

For workloads like AI that require parallel accelerated computing, the addition of GPU acceleration within the PowerEdge MX7000 is paramount. With Liqid technology and management software, GPUs of any form factor can be quickly added to any new or existing MX compute sled through the management interface, delivering the resources needed for each step of the machine learning workflow, including data ingest, cleansing, training, and inferencing. Spin up new bare-metal servers with the exact number of accelerators required, and then dynamically add or remove accelerators as workload needs change.

Essential PowerEdge Expansion Components

GPU Expansion Over PCIe | |

Compute Sleds | Up to 8 x Compute Sleds per Chassis |

GPU Chassis | PCIe Expansion Chassis (contains GPUs or other devices directly connected to the compute sled) |

Interconnect | PCIe Gen3 x4 Per Compute Sled (Multiple Gen 3 x4 Links Possible) |

GPU Expansion | 20x GPU (FHFL) |

GPU Supported | V100, A100, RTX, T4, Others |

OS Supported | Linux, Windows, VMWare and Others |

Devices Supported | GPU, FPGA, and NVMe Storage |

Form Factor | 14U Total = MX7000 (7U) + PCIe Expansion Chassis (7U) |
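As a rough sizing aid for the Interconnect row: PCIe 3.0 signals at 8 GT/s per lane with 128b/130b encoding, so one Gen3 x4 link carries about 3.94 GB/s in each direction before protocol overhead. The following Python sketch computes that figure and how it scales when a sled uses more than one x4 link; the link counts are illustrative, and real throughput is lower once packet overhead is included.

```python
GEN3_GT_PER_S = 8.0              # PCIe 3.0 raw signalling rate per lane (1 bit per transfer)
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding


def gen3_bandwidth_gbytes(lanes: int = 4, links: int = 1) -> float:
    """Per-direction bandwidth in GB/s of `links` PCIe 3.0 links, `lanes` lanes each.

    Ignores TLP/DLLP protocol overhead, so measured throughput will be lower.
    """
    per_lane = GEN3_GT_PER_S * ENCODING_EFFICIENCY / 8  # 8 bits per byte
    return per_lane * lanes * links


for links in (1, 2):
    print(f"{links} x Gen3 x4: {gen3_bandwidth_gbytes(links=links):.2f} GB/s")
```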

Implementing GPU Expansion for MX

GPUs are installed into the PCIe expansion chassis. Next, U.2 to PCIe Gen3 adapters are added to each compute sled that requires GPU acceleration, and the sleds are connected to the expansion chassis (Figure 1). Liqid Command Center software discovers all GPUs and makes them ready to be added to the server over native PCIe. FPGAs and NVMe storage can also be added to compute nodes in tandem. The PCIe expansion chassis and software are available from the Dell Design Solutions team.

Figure 2

Figure 3 Liqid Command Center

Software Defined Composability

Once PCIe devices are connected to the MX7000, Liqid Command Center software enables the dynamic allocation of GPUs to MX compute sleds at the bare-metal level (GPU hot-plug is supported). Resources can be added to the compute sleds in any amount and ratio, through the Liqid Command Center GUI or RESTful API, to meet the end user's workload requirements. To the operating system, the GPUs are presented as local resources directly connected to the MX compute sled over PCIe (Figure 3). Linux, Windows, VMware, and other operating systems are supported. As workload needs change, resources, including NVMe SSDs and FPGAs, can be added or removed on the fly through software (Table 1).
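Because composed GPUs appear to the operating system as local PCIe devices, a Linux host can confirm a new composition with ordinary PCI enumeration, independent of Liqid Command Center. A minimal Python sketch, assuming a Linux host and NVIDIA GPUs (PCI vendor ID 0x10de, base class 0x03 for display controllers); it reads the standard sysfs `vendor` and `class` attributes, which is the same information `lspci` reports. The function name is hypothetical.

```python
import os

PCI_ROOT = "/sys/bus/pci/devices"
NVIDIA_VENDOR_ID = "0x10de"
DISPLAY_CLASS_PREFIX = "0x03"  # PCI base class 0x03: VGA (0x0300), 3D controller (0x0302), ...


def _read(path: str) -> str:
    with open(path) as fh:
        return fh.read().strip()


def list_nvidia_gpus(root: str = PCI_ROOT) -> list:
    """Return PCI addresses of NVIDIA display-class devices visible to this host."""
    if not os.path.isdir(root):
        return []
    gpus = []
    for addr in sorted(os.listdir(root)):
        dev = os.path.join(root, addr)
        try:
            vendor = _read(os.path.join(dev, "vendor"))
            pci_class = _read(os.path.join(dev, "class"))
        except OSError:
            continue  # skip entries without readable attributes
        # The class check excludes companion functions such as a GPU's HDMI audio (0x0403).
        if vendor == NVIDIA_VENDOR_ID and pci_class.startswith(DISPLAY_CLASS_PREFIX):
            gpus.append(addr)
    return gpus


print(list_nvidia_gpus())
```

Running the check before and after a composition change shows whether the new GPUs reached the host.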

Enabling GPU Peer-2-Peer Capability

A key feature of the PCIe expansion solution for PowerEdge MX7000 is support for RDMA Peer-2-Peer transfers between GPU devices. Direct RDMA transfers have a large impact on both throughput and latency for the highest-performing GPU-centric applications; up to a 10x performance improvement has been achieved with RDMA Peer-2-Peer enabled. Figure 4 gives an overview of how PCIe Peer-2-Peer works.

Figure 4 PCIe Peer-2-Peer

Bypassing the x86 processor and enabling direct RDMA communication between GPUs dramatically improves bandwidth and also reduces latency. Table 2 outlines the performance expected for GPUs that are composed to a single node with GPU RDMA Peer-2-Peer enabled.
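Whether two GPUs can actually use peer access depends on the PCIe topology and driver, so it is worth checking per pair after composition. The following Python sketch builds a peer-access table from any callback that answers "can GPU i access GPU j directly?"; the callback is injected so the logic can be exercised without GPUs, and the helper names are hypothetical.

```python
from typing import Callable, List


def p2p_matrix(device_count: int,
               can_access: Callable[[int, int], bool]) -> List[List[bool]]:
    """N x N table: entry [i][j] is True if GPU i can access GPU j's memory directly."""
    return [[i != j and can_access(i, j) for j in range(device_count)]
            for i in range(device_count)]


def missing_pairs(matrix: List[List[bool]]) -> list:
    """(i, j) GPU pairs without peer access; their copies typically stage through host memory."""
    n = len(matrix)
    return [(i, j) for i in range(n) for j in range(n) if i != j and not matrix[i][j]]


# Stand-in callback for illustration: GPUs 0-1 and 2-3 are peers, nothing else.
demo = p2p_matrix(4, lambda i, j: i // 2 == j // 2)
print(missing_pairs(demo))
```

On a host with PyTorch installed, `p2p_matrix(torch.cuda.device_count(), torch.cuda.can_device_access_peer)` fills the table from the CUDA driver's answer.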

Application Level Performance

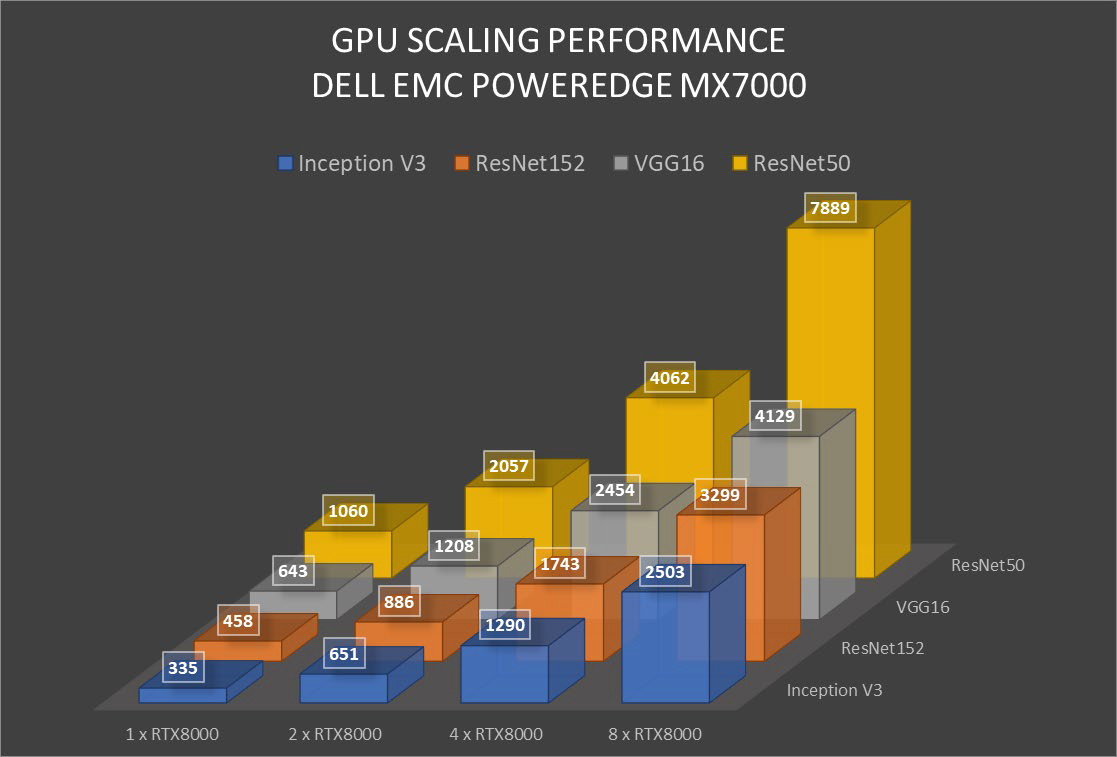

RDMA Peer-2-Peer is a key feature in GPU scaling for artificial intelligence, specifically machine learning applications. Figure 5 outlines performance data measured on mainstream AI/ML applications on the MX7000 with GPU expansion over PCIe, showing how performance scales from 1 GPU to 8 GPUs on a single MX740c compute sled. High scaling efficiency is observed for ResNet152, VGG16, Inception V3, and ResNet50 on the MX7000 with composable PCIe GPUs and Peer-2-Peer enabled. These results indicate a near-linear growth pattern, and with the current capabilities of the Liqid 7U PCIe expansion chassis, up to 20 GPUs can be allocated to an application running on a single node.

Figure 5 GPU Performance Scaling Comparison

- MX7000 with RTX 8000 GPUs in the PCIe expansion chassis, measured with P2P enabled
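"Near-linear" scaling is usually quantified as scaling efficiency: the N-GPU throughput divided by N times the single-GPU throughput. A small Python sketch of that calculation; the numbers in it are hypothetical and are not the Figure 5 measurements.

```python
def scaling_efficiency(throughput: dict) -> dict:
    """Efficiency of N GPUs relative to perfect linear scaling from one GPU.

    `throughput` maps GPU count -> measured rate (for example images/sec).
    efficiency(N) = throughput[N] / (N * throughput[1]); 1.0 means perfectly linear.
    """
    base = throughput[1]
    return {n: rate / (n * base) for n, rate in sorted(throughput.items())}


# Hypothetical numbers for illustration only, not the Figure 5 measurements.
example = {1: 100.0, 2: 196.0, 4: 380.0, 8: 720.0}
for gpus, eff in scaling_efficiency(example).items():
    print(f"{gpus} GPU(s): {eff:.0%} of linear")
```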

Conclusion

Liqid PCIe expansion for the Dell EMC PowerEdge MX7000 unlocks the ability to run the most demanding accelerator-dependent workloads in both new and existing deployments. Liqid collaborated with Dell Technologies Design Solutions to accelerate applications through the addition of GPUs to Dell EMC MX compute sleds over PCIe.


This reference architecture is available as part of the Dell Technologies Design Solutions.

Contact a Design Expert today https://www.delltechnologies.com/en-us/oem/index2.htm#open-contact-form

Dell EMC® PowerEdge™ MX750 Servers and MySQL Application Performance Gains with PCIe® 4.0 Technology

Mon, 16 Jan 2023 21:43:10 -0000


Summary

This document summarizes a MySQL application performance comparison between the Dell EMC PowerEdge MX750 (with PCIe 4.0 technology) and the MX740 (with PCIe 3.0 technology). All performance and characteristics discussed are based on performance testing conducted in KIOXIA America, Inc. application labs. Results are accurate as of 5/1/21. Ad Ref #000072

Introduction

Dell EMC PowerEdge MX750 servers are based on the PCIe 4.0 interface and the latest 3rd Gen Intel® Xeon® Scalable processors (Ice Lake[1]) with PCIe 4.0 support. Generationally, PCIe 4.0 doubles the bandwidth of the previous PCIe 3.0 technology, so peripheral devices such as SSDs, GPUs, and NICs can access data faster than before. The speed upgrade is well suited for data-intensive and computational applications such as cloud computing, databases, data analytics, artificial intelligence, machine learning, container orchestration, and media streaming. A faster PCIe interface enables today’s powerful CPUs, such as Ice Lake CPUs, to be continually fed with data.
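The doubling follows from the signalling rates: PCIe 3.0 runs at R = 8 GT/s per lane and PCIe 4.0 at R = 16 GT/s, and both use 128b/130b encoding. Per lane and per direction, before protocol overhead:

```latex
\begin{aligned}
B_{\text{lane}} &= \frac{R \cdot \tfrac{128}{130}}{8\ \text{bits/byte}} \\
B_{\text{Gen3}} &= \frac{8 \cdot \tfrac{128}{130}}{8} \approx 0.985\ \text{GB/s}, \qquad
B_{\text{Gen4}} = \frac{16 \cdot \tfrac{128}{130}}{8} \approx 1.969\ \text{GB/s} \\
\frac{B_{\text{Gen4}}}{B_{\text{Gen3}}} &= \frac{16}{8} = 2
\end{aligned}
```

For an x4 NVMe SSD link that is roughly 3.94 GB/s on PCIe 3.0 versus 7.88 GB/s on PCIe 4.0; whether a given drive saturates its link is a separate question.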

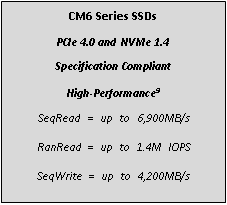

To validate application performance and productivity gains that can be achieved with the PCIe 4.0 interface, KIOXIA Corporation, a leader in PCIe 4.0 SSD storage, compared the performance of a MySQL database application running on an MX750 server with PCIe 4.0 SSD technology versus an MX740 server with PCIe 3.0 SSD technology. The test process and results are presented.

System and Application Test Scenario

The tests used an operational, high-performance MySQL database workload based on comparable TPC-C™ benchmarks created by HammerDB software[2]. The MySQL database is commonly used in hyperscale and enterprise environments and is widely deployed on PowerEdge servers. It supports key applications such as web servers, online transaction processing (OLTP), e-commerce, and data warehousing, and is the most widely deployed open source database globally (ranked number two overall[3]).

On each server platform, the tests measured transactions per minute (TPM), average read/write latency, and CPU utilization. For the MX750 server, four (4) KIOXIA CM6 Series PCIe 4.0 enterprise NVMe® SSDs were deployed. For the MX740 server, four (4) PCIe 3.0 specification-compliant enterprise NVMe SSDs were deployed. The test results provide a real-world scenario of TPM performance, average read/write latency, and CPU utilization when running a MySQL database application on comparable equipment and performing queries against it. Supporting details include a description of the test criteria, the set-up and associated test procedures, a visual representation of the test results, and a test analysis.

Test Criteria:

The hardware and software equipment used for these tests included:

Server Configurations:

Server Setup 1: One (1) Dell EMC PowerEdge MX750 dual socket server with two (2) Intel Xeon Ice Lake PCIe 4.0 CPUs, featuring 28 processing cores, 2.0 GHz frequency, and 512 gigabytes[4] (GB) of DDR4 RAM

Server Setup 2: One (1) Dell EMC PowerEdge MX740 dual socket server with two (2) Intel Xeon Cascade Lake[5] PCIe 3.0 CPUs, featuring 24 processing cores, 2.2 GHz frequency, and 384 GB of DDR4 RAM

- Operating System: CentOS™ v8.3

- Application: MySQL v8.0 (database size of 440 GB)

- Test Software: Comparable TPC-C benchmark tests generated through HammerDB v3.3 test software

- Storage Devices (Table 1):

SSD Setup 1: Four (4) KIOXIA CM6-V Series (3 DWPD[6]) PCIe 4.0 enterprise NVMe SSDs with 3.2 terabyte[4] (TB) capacities

SSD Setup 2: Four (4) PCIe 3.0 specification-compliant (3 DWPD) enterprise NVMe SSDs with 3.2 TB capacities

Specifications | CM6-V Series | PCIe 3.0-compliant |

Interface | PCIe 4.0 NVMe (U.3) | PCIe 3.0 NVMe (U.2) |

Capacity | 3.2 TB | 3.2 TB |

Form Factor | 2.5-inch[7] (15mm) | 2.5-inch (15mm) |

NAND Flash Type | BiCS4 3D NAND (96-layer) | V-NAND |

Drive Writes per Day (DWPD) | 3 (5 years) | 3 (5 years) |

Power | 18W | 20W |

DRAM Allocation | 96 GB | 96 GB |

Table 1: SSD specifications and set-up parameters

PLEASE NOTE: The MySQL database was limited to 96 GB of RAM. Although the total memory available to each test system differs (512 GB for server setup 1 versus 384 GB for server setup 2), the amount of memory actually used by each server setup was expected to be the same and not to contribute to any performance advantage[8].

Set-up & Test Procedures

Set-up: The MX750 and MX740 servers were set up with the CentOS™ v8.3 operating system and MySQL v8.0 software. The MySQL database was limited to a maximum of 96 GB of DRAM and was placed on a RAID10 set. RAID10 was selected because it is commonly used in data center environments. Once each SSD array was initialized (PCIe 4.0 SSDs and PCIe 3.0 SSDs), the RAID10 set was formatted with the XFS file system. A 440 GB database was then loaded into each server setup (MX750/MX740) using HammerDB test software and backed up. Before each test run, the 440 GB test database was restored to exactly the same state to control the test inputs and database size.
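RAID10 mirrors drives in pairs and stripes data across the pairs, so half of the raw capacity is usable. A small Python sizing check for the four 3.2 TB drives used here (the helper name is hypothetical):

```python
def raid10_usable_tb(drive_count: int, drive_tb: float) -> float:
    """Usable capacity of a RAID10 set: half of raw, because every block is mirrored."""
    if drive_count < 4 or drive_count % 2:
        raise ValueError("RAID10 needs an even number of drives, at least four")
    return drive_count * drive_tb / 2


usable = raid10_usable_tb(4, 3.2)
print(f"usable: {usable:.1f} TB; the 440 GB database occupies {0.44 / usable:.1%}")
```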

Test Procedures: The first set of tests (TPM, latency, and CPU utilization) was run on the MX750 server, using HammerDB software to run the comparable TPC-C workload. The four (4) KIOXIA CM6-V Series SSDs were placed into a RAID10 set and the tests were conducted. Multiple iterations of each test were run to determine the optimal number of virtual users; a configuration of 480 virtual users delivered the highest TPM performance. See the Test Results section.

The second set of tests was run on the MX740 server, again using HammerDB software to run the comparable TPC-C workload. The four (4) PCIe 3.0 specification-compliant SSDs were placed into a RAID10 set and the tests were conducted. Multiple iterations of each test were run, and a configuration of 480 virtual users delivered the highest TPM performance. See the Test Results section.

Test Results

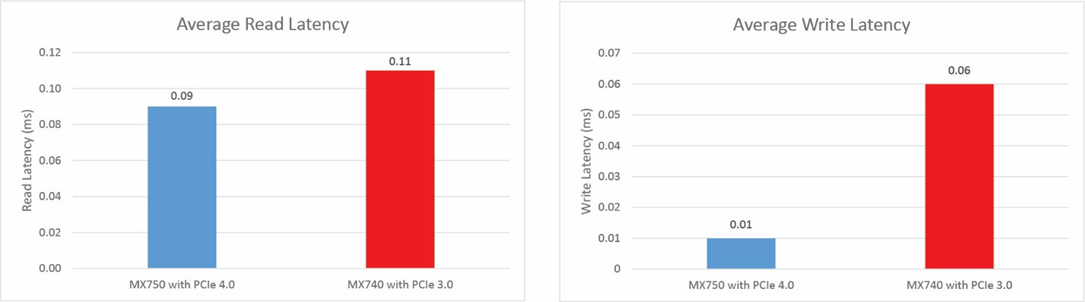

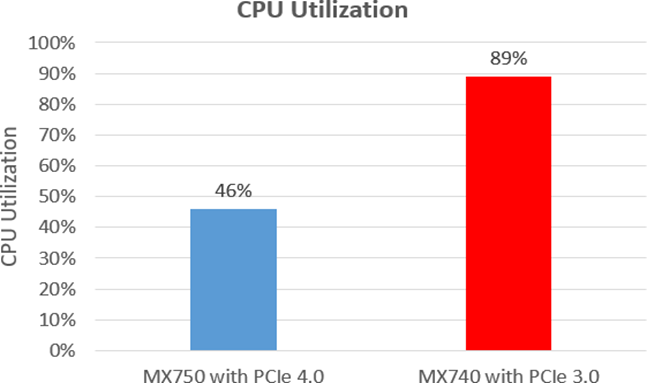

The tests were conducted with the MX750 server (Ice Lake CPUs with PCIe 4.0 technology) versus an MX740 server (Cascade Lake with PCIe 3.0 technology), with the results recorded. For the TPM result, the higher the test value, the better the result. For the latency and CPU utilization results, the lower the test value, the better the result.

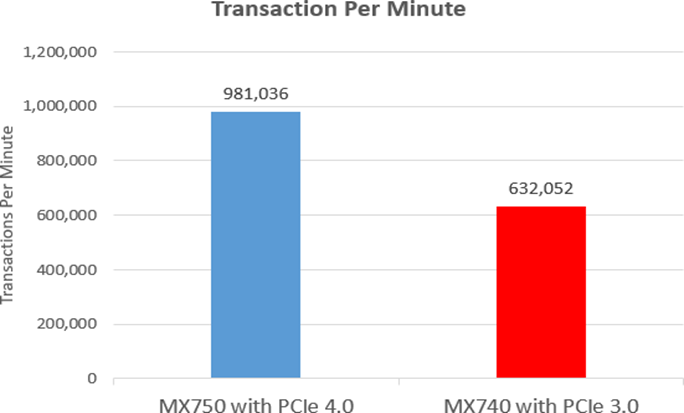

Transactions Per Minute

In an Online Transaction Processing (OLTP) database environment, TPM measures how many transactions in the TPC-C transaction profile are executed per minute. HammerDB software, executing the TPC-C transaction profile, randomly performs new order transactions and randomly executes additional transaction types such as payment, order status, delivery, and stock level. This benchmark simulates an OLTP environment in which a large number of users conduct simple, short transactions that require sub-second response times and return relatively few records. The TPM test results:

TPM: MySQL Comparable TPC-C Workload | MX750 (PCIe 4.0) | MX740 (PCIe 3.0) |

TPM (higher is better) | 981,036 | 632,052 |

Advantage | +55% | - |

Average Read and Write Latency

Latency is the delay before a storage device completes a data transaction following a request from the host, and it can greatly affect application performance and the user experience. Application response time often includes built-in latency between the user and the server, so maintaining low latency within the server usually translates into a better overall user experience. The read and write latency test results:

Latency: MySQL Comparable TPC-C Workload | MX750 (PCIe 4.0) | MX740 (PCIe 3.0) |

Average Read Latency (lower is better) | 0.09 ms | 0.11 ms |

Advantage | -18% | - |

Average Write Latency (lower is better) | 0.01 ms | 0.06 ms |

Advantage | -83% | - |
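The Advantage rows in the TPM and latency tables are percent changes of the MX750 result relative to the MX740 baseline. Recomputing them in Python from the table values confirms the rounding:

```python
def relative_change_pct(new: float, baseline: float) -> float:
    """Percent change of `new` relative to `baseline` (the MX740 column)."""
    return (new - baseline) / baseline * 100


results = {  # metric: (MX750, MX740), values from the tables
    "TPM": (981_036, 632_052),
    "Average read latency (ms)": (0.09, 0.11),
    "Average write latency (ms)": (0.01, 0.06),
}
for metric, (mx750, mx740) in results.items():
    print(f"{metric}: {relative_change_pct(mx750, mx740):+.0f}%")
# TPM: +55%
# Average read latency (ms): -18%
# Average write latency (ms): -83%
```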

CPU Utilization

In general, CPU utilization represents the percentage of the CPU's computing capacity that is in use, and is another estimate of system performance. For these tests, CPU utilization was measured to determine how much CPU capacity remained available for additional tasks, so a lower result is better. The Ice Lake CPU in the Dell MX750 server has a 20% instructions per cycle (IPC) uplift over the previous CPU generation, which enables it to complete more instructions per clock cycle. The combination of higher transistor density (with the 10 nanometer fabrication process) and PCIe 4.0 technology results in faster storage transactions.

In this test scenario, the Ice Lake CPU in the MX750 server was less taxed when running the test workload, at about 46% utilization versus 89% for the Cascade Lake CPU in the MX740 server.

Test Analysis

The test results validated that a PowerEdge MX750 server with PCIe 4.0 technology, such as KIOXIA CM6-V Series SSDs, delivers about 55% better TPM performance than the previous-generation server with PCIe 3.0 technology. Almost one million transactions per minute were delivered on this PCIe 4.0 server and storage platform, which in turn enables systems and applications based on the PCIe 4.0 interface to run at higher performance. In addition, the latency results show that PCIe 4.0 technology delivers faster access to data, enabling data transactions to complete faster.

Though the total memory available to each test system differed (512 GB for server setup 1 versus 384 GB for server setup 2), the amount of memory used by each server setup for the MySQL database was the same and did not contribute to any performance advantage.

Note also that the MX740 server with the Cascade Lake CPU had a slightly higher frequency than the MX750 server with the Ice Lake CPU (2.2 GHz versus 2.0 GHz), but fewer processing cores (24 versus 28). For the purposes of this testing, system performance was roughly comparable between the two test systems. Therefore, the combination of higher PCIe 4.0 performance in both the Intel Ice Lake CPU and the KIOXIA CM6-V Series SSDs enabled significantly more transactions per minute and lower read/write latency.

At 46% CPU utilization, the MX750 server with the Ice Lake CPU and PCIe 4.0 technology was less taxed when running the test workload than the MX740 server. At 89% CPU utilization, the MX740 server with the Cascade Lake CPU and PCIe 3.0 technology was nearing its maximum workload, leaving very little room for the CPU to address additional tasks.

CM6 Series SSD Overview

The CM6 Series is KIOXIA’s 3rd generation enterprise-class NVMe SSD product line that features significantly improved performance from PCIe Gen3 to PCIe Gen4, 30.72TB maximum capacity, dual-port for high availability, 1 DWPD for read-intensive applications (CM6-R Series) and 3 DWPD for mixed use applications (CM6-V Series), up to a 25-watt power envelope and a host of security options – all of which are geared to support a wide variety of workload requirements.

Summary

The test results presented validate that a Dell EMC PowerEdge MX750 PCIe 4.0 enabled server with KIOXIA CM6-V Series SSDs delivered significantly higher TPM performance for MySQL database workloads than a comparable PCIe 3.0 server configuration.

Notes

1 Ice Lake is the codename for Intel Corporation’s 3rd generation Xeon scalable server processors.

2 HammerDB is benchmarking and load testing software that is used to test popular databases. It simulates the stored workloads of multiple virtual users against specific databases to identify transactional scenarios and derive meaningful information about the data environment, such as performance comparisons. TPC Benchmark C is a supported OLTP benchmark that includes a mix of five concurrent transactions of different types, and nine types of tables with a wide range of record and population sizes and where results are measured in transactions per minute.

3 Source: https://db-engines.com/en/ranking, January 2021.

4 Definition of capacity - KIOXIA Corporation defines a megabyte (MB) as 1,000,000 bytes, a gigabyte (GB) as 1,000,000,000 bytes and a terabyte (TB) as 1,000,000,000,000 bytes. A computer operating system, however, reports storage capacity using powers of 2 for the definition of 1Gbit = 2^30 bits = 1,073,741,824 bits, 1GB = 2^30 bytes = 1,073,741,824 bytes and 1TB = 2^40 bytes = 1,099,511,627,776 bytes and therefore shows less storage capacity. Available storage capacity (including examples of various media files) will vary based on file size, formatting, settings, software and operating system, and/or pre-installed software applications, or media content. Actual formatted capacity may vary.

5 Cascade Lake is the codename for Intel Corporation’s 2nd generation Xeon scalable server processors.

6 Drive Write(s) per Day (DWPD): One full drive write per day means the drive can be written and re-written to full capacity once a day, every day, for the specified lifetime. Actual results may vary due to system configuration, usage, and other factors.

7 2.5-inch indicates the form factor of the SSD and not the drive’s physical size.

8 Modifying DRAM usage can impact performance. The purpose of this testing was to stress SSD performance. 96GB of DRAM was allocated to prevent the database from being cached into DRAM as that would reduce stress on the storage devices.
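Note 4 explains why an operating system reports less capacity than the drive label. The conversion is a single division; in Python, for the 3.2 TB drives used in these tests:

```python
def decimal_tb_to_binary_tb(tb: float) -> float:
    """Convert a vendor capacity in decimal TB (10**12 bytes) to binary TB (2**40 bytes)."""
    return tb * 10**12 / 2**40


print(f"{decimal_tb_to_binary_tb(3.2):.2f}")  # 2.91
```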

Trademarks

CentOS is a trademark of Red Hat, Inc. in the United States and other countries. Dell, Dell EMC and PowerEdge are either registered trademarks or trademarks of Dell Inc. Intel and Xeon are registered trademarks of Intel Corporation or its subsidiaries in the U.S. and/or other countries. MySQL is a registered trademark of Oracle and/or its affiliates. NVMe is a registered trademark of NVM Express, Inc. PCIe is a registered trademark of PCI-SIG. TPC-C is a trademark of the Transaction Processing Performance Council. All company names, product names and service names may be the trademarks of their respective companies.

Disclaimers

© 2021 Dell, Inc. All rights reserved. Information in this application brief, including product specifications, tested content, and assessments are current and believed to be accurate as of the date that the document was published, but is subject to change without prior notice. Technical and application information contained here is subject to the most recent applicable product specifications.

PowerEdge MX Validate Baseline to Improve Operational Efficiency

Mon, 16 Jan 2023 21:29:16 -0000


Summary

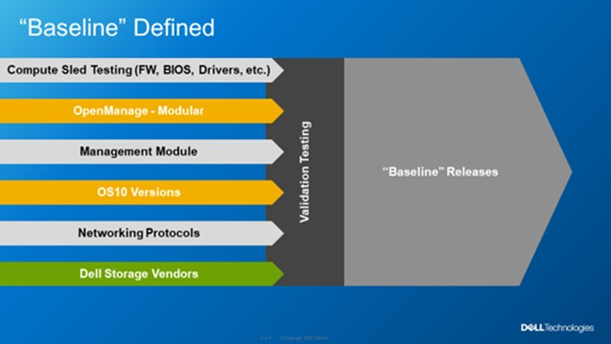

Modern compute platforms consist of many components requiring multiple firmware elements, which adds complexity and risk when updating them. To address this problem for MX customers, Dell produces a biennial firmware baseline and validates the complete end-to-end stack with testing built on real customer use cases. Dell OpenManage system management orchestration then offers a simple way to update live environments to this desired state at scale.

This Direct from Development (DfD) tech note describes, at a high level, the Dell methodology for applying updates with no disruption in service, which lowers risk, streamlines the update process, and saves time for organizations.

Market positioning

The PowerEdge MX is a scalable modular platform comprising compute, networking, and storage elements, designed for data center consolidation with easy deployment and rich integrated management. PowerEdge MX features an industry-leading no-midplane design and a scalable network fabric, within a chassis architecture designed to support today’s emerging processor technologies, new storage technologies, and new connectivity innovations well into the future.

PowerEdge MX firmware baseline

Reduce complexity and simplify operations by leveraging Dell’s MX validated solution infrastructure firmware baseline. This is a set of system and component firmware for the MX platform that is rigorously tested as “one release” in a number of configurations, using the most popular operating system environments based on real-world customer use cases. When the updates have passed this testing as a group, a validated solution stack firmware catalog that details the release versions is published. Several solutions in the OpenManage portfolio can then consume the catalog as an update blueprint.

Figure 1. MX Baseline Components


Dell MX firmware baselines offer customers an elegant and automated method for platform-wide updates. Advantages for customers include:

- Aggregates multiple releases into one consolidated update

- Dell end-to-end validation helps eliminate the risk of element incompatibility

- Reduces the number of maintenance windows and the amount of downtime required for updating

Anatomy of the PowerEdge MX baseline

The PowerEdge MX validated solution baseline consists of many elements, including system BIOS, iDRAC, NICs, CNAs, Fibre Channel adapters, HBAs, and other critical updates. In addition, the stack extends into the chassis to include network switch code and the management controller software, OME-M. MX platform baseline testing covers the chassis I/O modules, such as the MX9116n, MX7116n, MX5108, and MXG610, exercising their capabilities in all forms with scaled VLANs. It also includes testing with different configurations, protocols, and workloads. For Fibre Channel and FCoE, baseline testing also covers NPIV Proxy Gateway, FIP Snooping Bridge, and Direct Attached modes. An example end-to-end stack test is VMware ESXi running on compute sleds connected to a PowerStore storage array over FCoE, updating from an old baseline to the new baseline. When the Dell updates pass evaluation, a validated solution stack catalog file containing details of the tested firmware versions is published online, ready to be consumed by Dell update mechanisms such as the update manager integrated into OME. Think of the validated baseline as a recipe for success.

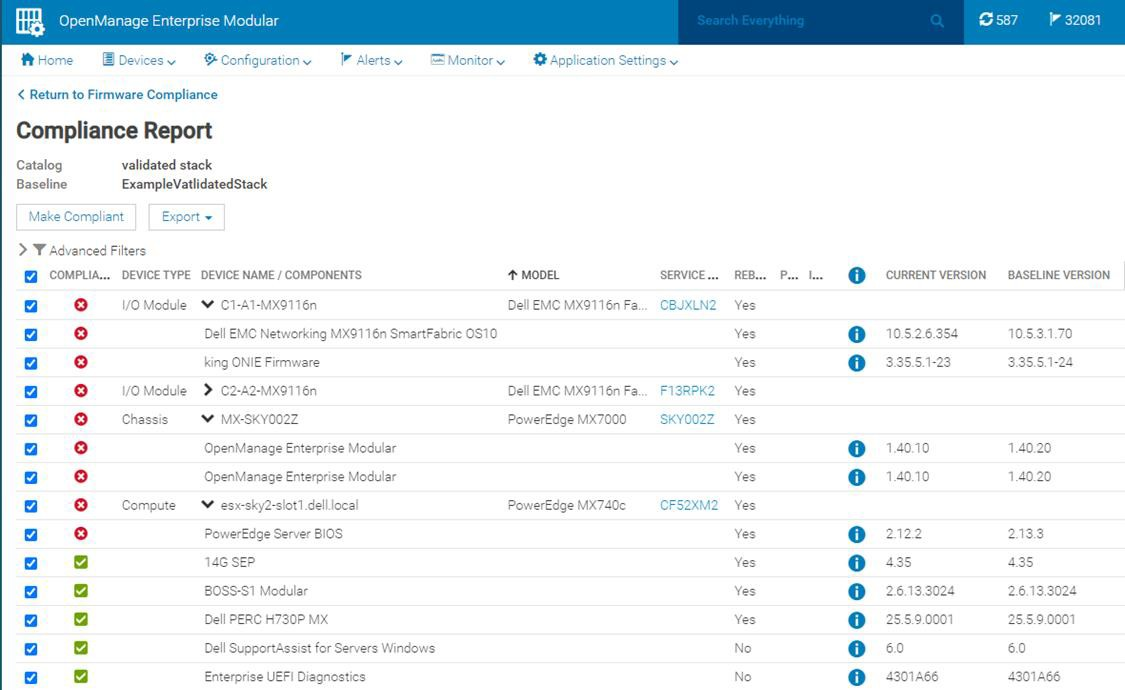

When it comes to applying updates, Dell’s OpenManage system management automation provides a time-saving, centralized process with intelligent safeguards to eliminate downtime. The benefits of using OME-M to perform updates from the catalog include automatically identifying components that require updates, downloading the updates from the Dell support site, creating and scheduling update jobs, correctly ordering tasks, and reporting. The following example shows a sample catalog with the non-compliant elements highlighted. An administrator needs only to click “Make Compliant” to start the task that updates multiple elements in the MX environment.

Figure 2. Detailed view of a firmware update
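OME-M handles task ordering itself. Purely to illustrate what correct task ordering means, the sketch below orders update tasks so that each one runs after its prerequisites, using a topological sort from Python's standard `graphlib` module (Python 3.9+). The task names and dependency edges are hypothetical and do not describe Dell's actual update sequence.

```python
# Minimal sketch of dependency-aware update ordering with a topological sort.
# The dependency edges are hypothetical illustrations; the real ordering is
# determined by OME-M and the catalog, not by this code.
from graphlib import TopologicalSorter


def order_updates(dependencies: dict[str, set[str]]) -> list[str]:
    """Return update tasks so that every task comes after the tasks it depends on.

    `dependencies` maps each task to the set of tasks that must finish first.
    Raises graphlib.CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(dependencies).static_order())
```

Usage, with hypothetical tasks: `order_updates({"OME-M": set(), "IOM A1": {"OME-M"}, "sled-1 BIOS": {"OME-M"}})` places "OME-M" before both of the other tasks. A topological sort also detects circular dependencies, which a hand-maintained list would not.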

VMware enhancement

For customers running their VMware environment on the PowerEdge MX platform, this firmware update process can be enhanced with OMEVV (OpenManage Enterprise plugin for VMware vCenter), which makes updates “VMware cluster” aware to safeguard services from outages. Cluster-aware updates apply intelligent rules that allow only one member of a VMware cluster to be patched at a time. Using ESXi maintenance mode, DRS, and vMotion, virtual machines are systematically migrated “hot” to other ESXi hosts before a physical host is patched, ensuring that workloads and services running on the cluster stay online at all times. After the updates are applied, the host restarts and rejoins the cluster. DRS can then live-migrate virtual machines back to the newly updated host. This sequence is repeated for each host in the cluster, providing a controlled rolling upgrade of the entire cluster.

Figure 3. OMEVV/VMware host rolling updates
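The rolling sequence can be sketched as a small simulation. This is an illustration under stated assumptions, not how OMEVV is implemented: host and VM names are hypothetical, the "least-loaded host" placement rule is a simple stand-in for DRS, and the return migration that DRS may perform afterwards is not modeled.

```python
# Minimal simulation of a cluster-aware rolling update. Hosts, VMs and the
# least-loaded placement rule are hypothetical; in practice ESXi maintenance
# mode, DRS and vMotion perform these steps, orchestrated by OMEVV.


def rolling_update(placement: dict[str, list[str]]) -> list[str]:
    """Update hosts one at a time, evacuating each host's VMs before patching it.

    `placement` maps host name -> list of VM names and is modified in place.
    Returns a log of the steps taken. Requires at least two hosts.
    """
    if len(placement) < 2:
        raise ValueError("a rolling update needs at least two hosts")
    all_vms = sorted(vm for vms in placement.values() for vm in vms)
    log = []
    for host in list(placement):
        # Enter maintenance mode: move each VM to the least-loaded other host.
        for vm in list(placement[host]):
            target = min((h for h in placement if h != host), key=lambda h: len(placement[h]))
            placement[host].remove(vm)
            placement[target].append(vm)
            log.append(f"vMotion {vm}: {host} -> {target}")
        # Invariant: every VM is still placed on some host while this host is offline.
        assert sorted(vm for vms in placement.values() for vm in vms) == all_vms
        log.append(f"update and reboot {host}")
        log.append(f"{host} exits maintenance mode and rejoins the cluster")
    return log
```

Patching only one host at a time is what keeps the invariant true: at every step the remaining hosts have room to run the evacuated workloads.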

OMEVV also includes a scheduling engine to run timed updates during quiet periods or within set maintenance windows. Larger customers can run updates on up to 15 clusters in parallel from a single console.
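As a rough illustration of capped parallelism, the following sketch runs many cluster updates through a thread pool limited to 15 workers, matching the figure quoted above. The `update_cluster` function and the `ConcurrencyTracker` helper are hypothetical placeholders that only simulate work; they are not part of OMEVV.

```python
# Minimal sketch of capping concurrent cluster updates at 15.
# `update_cluster` is a hypothetical placeholder that only simulates work.
from concurrent.futures import ThreadPoolExecutor
import threading
import time

MAX_PARALLEL_CLUSTERS = 15  # parallel-cluster limit quoted in the text


class ConcurrencyTracker:
    """Records how many simulated updates are running at once (demonstration only)."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self.active = 0
        self.peak = 0

    def __enter__(self) -> "ConcurrencyTracker":
        with self._lock:
            self.active += 1
            self.peak = max(self.peak, self.active)
        return self

    def __exit__(self, *exc_info) -> None:
        with self._lock:
            self.active -= 1


def update_cluster(name: str, tracker: ConcurrencyTracker) -> str:
    """Hypothetical stand-in for a rolling update of one cluster."""
    with tracker:
        time.sleep(0.01)  # simulate the time an update takes
    return f"{name}: updated"


def update_all(clusters: list[str]) -> tuple[list[str], int]:
    """Update every cluster with at most MAX_PARALLEL_CLUSTERS running at a time.

    Returns the per-cluster results (in input order) and the peak concurrency observed.
    """
    tracker = ConcurrencyTracker()
    with ThreadPoolExecutor(max_workers=MAX_PARALLEL_CLUSTERS) as pool:
        results = list(pool.map(lambda c: update_cluster(c, tracker), clusters))
    return results, tracker.peak
```

With 40 hypothetical clusters, the pool works through them in batches and the observed peak never exceeds 15, while `pool.map` still returns results in the original cluster order.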

IOMs

If a customer is using an MX environment with MX9116n/MX7116n I/O modules in SmartFabric mode, they simply select “Make Compliant” in the OME-M GUI. There is no searching for the correct switch code and no manual uploading of code to the switch; it is all handled as part of the catalog. OME-M interfaces with the switches to upload the new code. If the switches are configured as a pair, the update runs automatically on one switch at a time to maintain uninterrupted connectivity during the update.
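The one-switch-at-a-time rule for a redundant pair can be sketched as follows. The switch names, version strings, and the `update_pair` helper are hypothetical; OME-M performs the real sequencing. The key idea is the guard: a switch is taken down only while its peer is online.

```python
# Minimal simulation of updating a redundant switch pair one member at a time.
# Switch names and versions are hypothetical; OME-M performs the real sequencing.


def update_pair(pair: dict[str, str], new_version: str) -> list[str]:
    """Update each switch in turn, never taking a switch down unless its peer is up.

    `pair` maps switch name -> current firmware version and is modified in place.
    """
    if len(pair) != 2:
        raise ValueError("expected exactly two switches")
    online = set(pair)
    log = []
    for switch in sorted(pair):
        peer = next(s for s in pair if s != switch)
        if peer not in online:
            raise RuntimeError(f"{peer} is offline; refusing to update {switch}")
        online.discard(switch)      # traffic fails over to the peer
        pair[switch] = new_version  # simulate uploading the new code and rebooting
        online.add(switch)          # switch rejoins before the peer is touched
        log.append(f"{switch} updated to {new_version} while {peer} carried traffic")
    return log
```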

RESTful API

The OpenManage Enterprise APIs enable customers to integrate with other management products, such as Ansible playbooks, or to build tools in common programming and scripting languages, including Python and PowerShell. These APIs are fully documented, and Dell posts many examples in a GitHub code repository that administrators and developers can download and use for free.

In Conclusion

Customers who rely on Dell PowerEdge MX for their compute needs can streamline the update process, saving time and ensuring firmware compliance, by using MX validated solution stack firmware baselines. In addition, in VMware environments, intelligent rolling firmware updates allow hosts to be updated with zero service outages and no end-user downtime.

References

To learn more, see: