
New SAP HANA World Record with 5th Generation Intel® Xeon® Processors

Fri, 15 Dec 2023 19:13:37 -0000


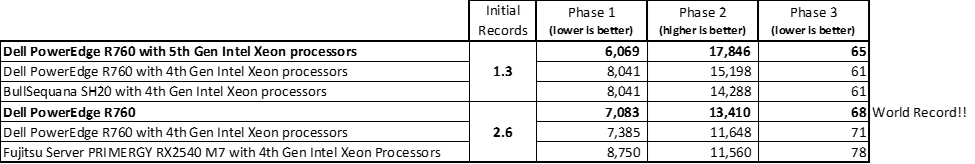

Dell has announced performance upgrades for PowerEdge servers, including the R660 and R760. According to the SAP Performance Benchmark, the PowerEdge R760 with the new 5th Generation Intel® Xeon® Scalable processor delivered outstanding SAP HANA performance in two categories, including a result with 2.6B initial records that sets a new World Record!

Check out the PowerEdge R760 Rack Server page for more information.

Author: Seamus Jones, Director, Server Technical Marketing Engineering

Six Years of Tower Servers: Accelerate Business Insights with AI Inferencing and the PowerEdge T560

Mon, 13 Nov 2023 19:44:02 -0000


Tasked with describing PowerEdge tower servers in three words, ChatGPT landed on “Reliable, Versatile, Scalable,” perfectly capturing the key qualities of PowerEdge towers. In this blog, we cover scalability in terms of, you guessed it, AI inferencing workloads.

Our deep learning and AI inferencing benchmarks showed the PowerEdge T560 performing up to 15.8x better than the T440 and nearly 3.9x better than the T550. Even with more than triple the performance, the T560 had nearly 74% lower latency than the T550 for the same workload. The rest of this blog explains why the 2-socket T560 is well-suited for AI inferencing on CPU and provides more detail on the two benchmarks we tested in our lab, TensorFlow and OpenVINO.

In case you missed it in our last post, we covered exceptional database workload performance gains across the PowerEdge T440, T550, and T560. Make sure to give that a read to learn how these towers represent six years of innovation since the launch of 14th Generation PowerEdge servers.

PowerEdge towers and AI – a perfect pair

Databases, business applications, and virtualization are use cases commonly associated with tower servers. While the PowerEdge tower portfolio is designed to accelerate these more traditional workloads, it also meets the exploding business demand for AI solutions. In fact, IDC projects $154 billion in worldwide spending on AI-centric systems in 2023, with retail and banking the industries making the greatest AI investment.

It is important to note that not all AI workloads look the same; they vary widely in scope and necessary compute power. Use cases range from predicting cancerous regions on CT scans to identifying the most trafficked aisles in a retail store. Irrespective of the specific application, McKinsey reports that organizations that adopted AI for specific functions in 2022 are already seeing a return on investment in 2023. Specifically, across all functions, an average of 59% of organizations report revenue increases from AI adoption and 42% report cost decreases.

Whether a business has a clearly defined need for AI compute power or anticipates having one in the future, the PowerEdge T560 scales with evolving industry demands. The key product features that drive the PowerEdge T560’s “AI-readiness” include:

- 2x Intel® Xeon® Scalable Processors

- Up to six single-width or two double-width GPUs

- PCIe Gen 5 and DDR5 memory

Figure 1. PowerEdge T560 AI accelerators

Testing details and benchmark information

For our testing, we evaluated two AI inferencing performance benchmarks, TensorFlow and Intel’s OpenVINO, on the PowerEdge T440, T550, and T560 using the Phoronix Test Suite. Inferencing, a subset of AI workloads, refers to using input data and an associated trained model to make real-time predictions. Common applications include detecting faces and monitoring traffic for incoming vehicles and pedestrians.

Both benchmarks use image-based workloads, and we ran both on CPU. All systems tested were equipped with Intel® Xeon® processors, which is especially relevant to inferencing given that Intel reports “up to 70% of CPUs installed for inferencing are Intel Xeon processors.” While the T560’s GPU capacity allows businesses to scale up their AI workloads, our results show that inferencing on CPU alone still delivers impressive performance.

The full testing configurations are listed in the following table. Each system has a Gold-class Intel® Xeon® processor and equal memory capacity, while the storage differs to reflect industry transitions. All testing was conducted in a Dell Technologies lab.

Note: We set the System Profile BIOS setting to “Performance” on all systems, which has been shown to boost out-of-the-box performance by up to 10%. Check out this paper for more details and other ways to quickly optimize your AI workload performance.

Table 1. Testing configurations

| | PowerEdge T440 | PowerEdge T550 | PowerEdge T560 |
|---|---|---|---|
| CPU | Intel® Xeon® Gold 5222 4c/8T, TDP 105W | Intel® Xeon® Gold 6338N 32c/64T, TDP 185W | Intel® Xeon® Gold 6448Y 32c/64T, TDP 225W |
| Storage | 4x 800 GB SAS SSD (RAID 5) | 4x 960 GB SAS SSD | 4x 1.6TB NVMe |
| Memory | 512 GB DDR4 | 512 GB DDR4 | 512 GB DDR5 |

PowerEdge T560 inferencing performance “clean sweep”

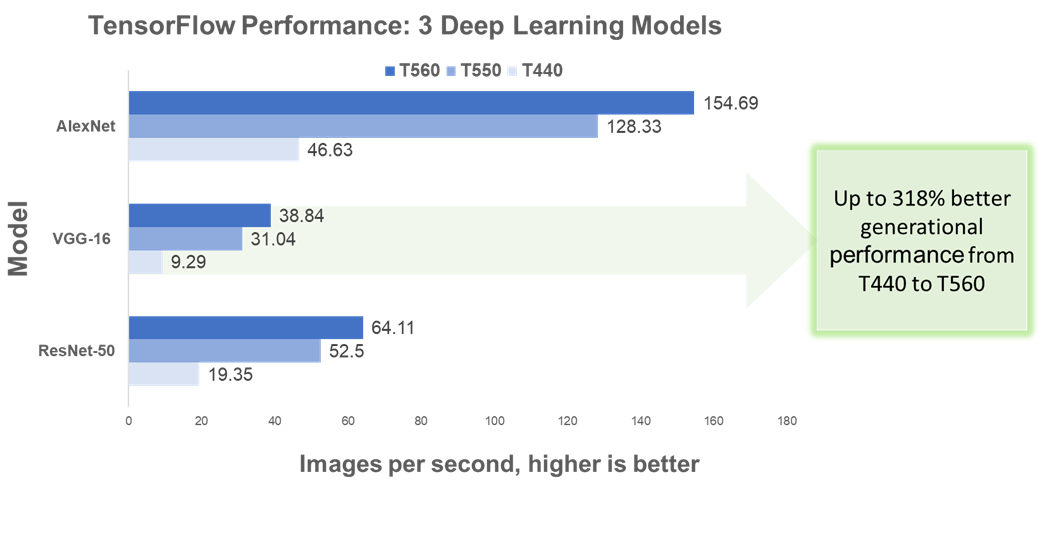

We report TensorFlow inferencing performance results for three common deep learning architectures: AlexNet, VGG-16, and ResNet-50. Performance, in this case throughput, is measured by the number of images processed per second. The higher the images per second value, the better the inferencing performance.
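To make the images-per-second metric concrete, the sketch below shows one common way such a figure is derived: time repeated batched inference calls and divide the number of images processed by the elapsed wall-clock time. It is a minimal illustration only, not the TensorFlow or Phoronix harness; `run_batch` is a hypothetical stand-in for a framework's model call, and the warm-up count is an assumption.

```python
import time
from typing import Callable, Sequence


def images_per_second(
    run_batch: Callable[[Sequence[float]], object],
    batch: Sequence[float],
    num_batches: int,
    warmup_batches: int = 2,
) -> float:
    """Return throughput in images per second for repeated batched inference.

    run_batch is a hypothetical model call that processes one batch;
    each element of `batch` stands for one image.
    """
    # Warm-up iterations are excluded from timing (lazy init, caches, etc.).
    for _ in range(warmup_batches):
        run_batch(batch)

    start = time.perf_counter()
    for _ in range(num_batches):
        run_batch(batch)
    elapsed = time.perf_counter() - start

    # Throughput = total images processed / elapsed wall-clock seconds.
    return len(batch) * num_batches / elapsed


if __name__ == "__main__":
    # Toy "model" that does a little arithmetic per image; a real model runs a network.
    def toy_model(images):
        return [sum(range(1000)) + img for img in images]

    batch_size = 16  # e.g. the AlexNet batch size listed in Table 2
    print(f"{images_per_second(toy_model, [0.0] * batch_size, 50):.1f} images/s")
```

Larger batches usually raise images per second at the cost of per-image latency, which is why the batch size is reported alongside each result.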

As shown in Figure 2, the PowerEdge T560 processed significantly more images per second than both prior-generation towers across all three architectures. Most notably, the T560 delivered up to 318% higher throughput than the T440.

Figure 2. TensorFlow benchmark performance

Table 2 provides more details about the performance improvements across all systems and architectures tested.

Table 2. TensorFlow benchmark results (percent uplift in throughput)

| CPU - Batch Size[1] - Architecture | T440 to T550 | T550 to T560 | T440 to T560 |
|---|---|---|---|
| CPU - 512 - ResNet-50 | 171.32% | 22.11% | 231.32% |
| CPU - 512 - VGG-16 | 234.12% | 25.13% | 318.08% |
| CPU - 16 - AlexNet | 175.21% | 20.54% | 231.74% |
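The three columns of Table 2 are linked: generational uplifts compound multiplicatively, so the T440-to-T560 figure is (1 + a)(1 + b) - 1 rather than a + b. The short check below uses only the values from Table 2; the combined results agree with the reported T440-to-T560 column to within about 0.01 percentage points, consistent with rounding.

```python
def compound_uplift(*uplifts_pct: float) -> float:
    """Combine successive percent uplifts into one overall percent uplift.

    Each step multiplies throughput by (1 + pct/100), so the steps compound
    multiplicatively rather than adding.
    """
    factor = 1.0
    for pct in uplifts_pct:
        factor *= 1.0 + pct / 100.0
    return (factor - 1.0) * 100.0


# (T440 -> T550, T550 -> T560, reported T440 -> T560) from Table 2
table2 = {
    "ResNet-50": (171.32, 22.11, 231.32),
    "VGG-16": (234.12, 25.13, 318.08),
    "AlexNet": (175.21, 20.54, 231.74),
}

for model, (first, second, reported) in table2.items():
    combined = compound_uplift(first, second)
    print(f"{model}: {combined:.2f}% combined vs {reported:.2f}% reported")
```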

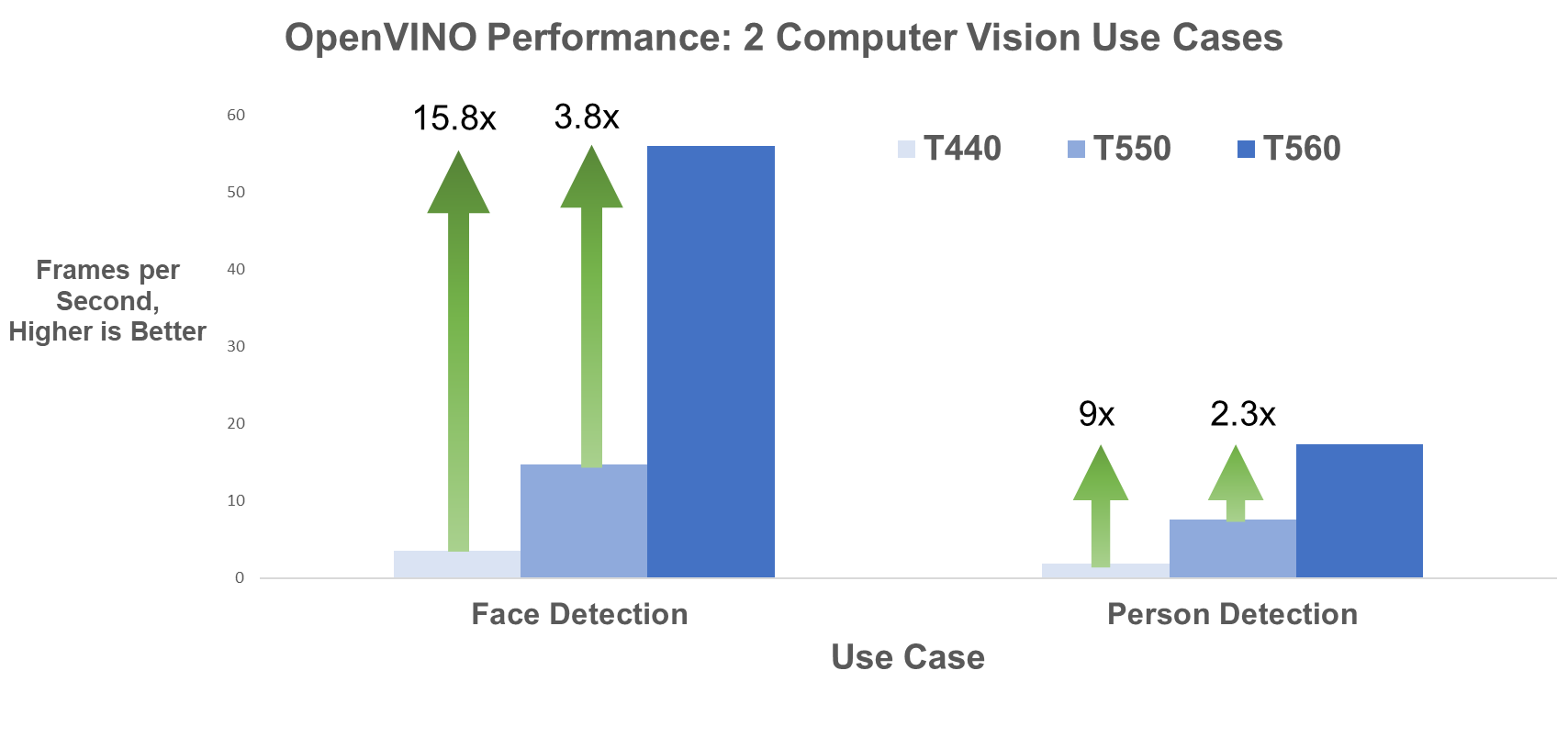

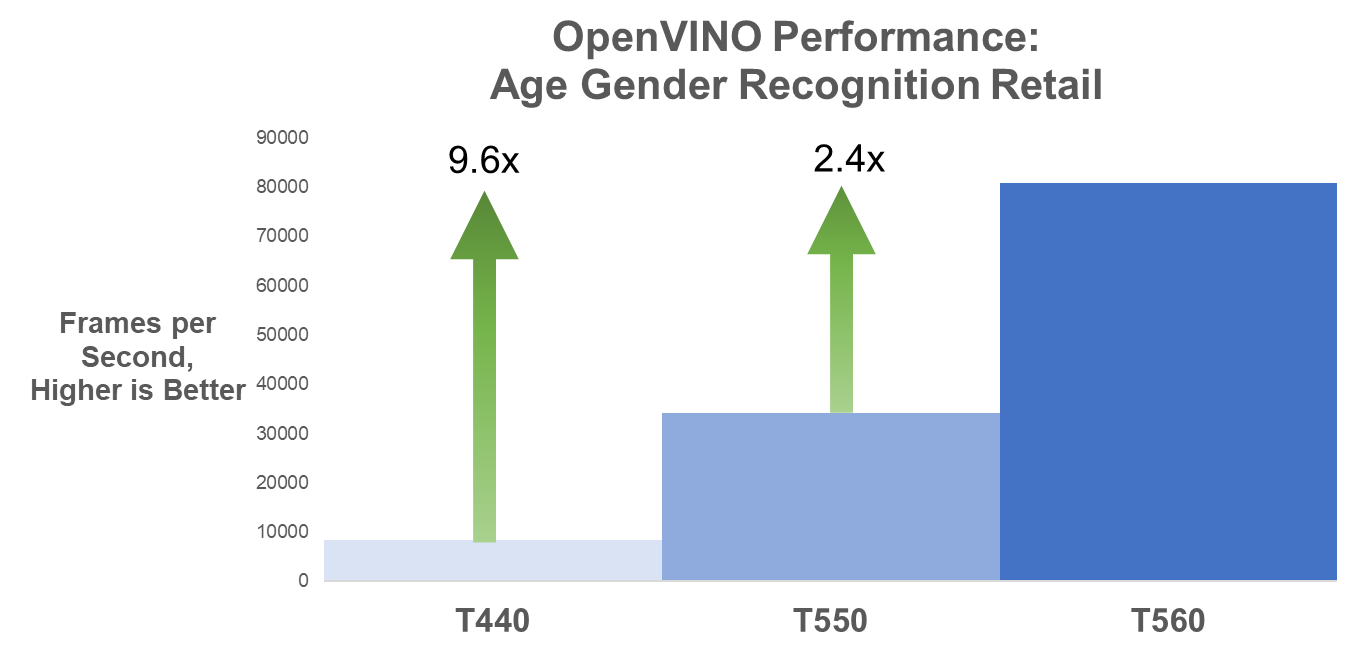

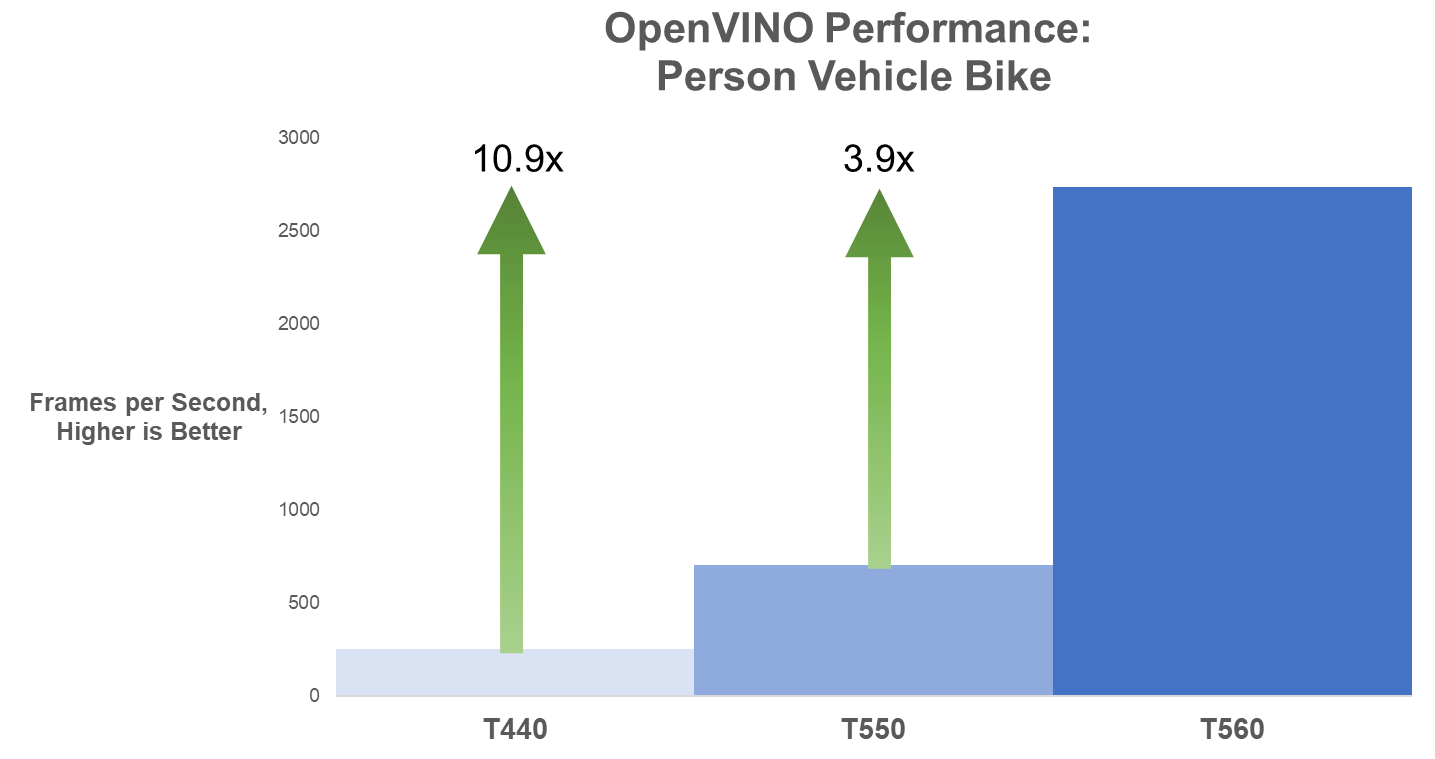

In a similar vein, we report OpenVINO performance results for four computer vision use cases:

- Person Detection

- Face Detection

- Age & Gender Recognition in Retail

- Person, Vehicle & Bike Detection

Performance is measured by both throughput in number of frames processed per second (FPS) and latency in milliseconds (ms). The higher the FPS value, the better the inferencing performance. Conversely, a lower latency indicates a quicker system response and therefore better performance.

The figures below illustrate changes in FPS for the four use cases across all three generations of tower servers. For Face Detection specifically, the T560 has 15.8x the FPS compared to the T440 and almost 4x the FPS compared to the T550.

Figure 3. Face Detection and Person Detection OpenVINO FPS

Figure 4. Age Gender Recognition Retail OpenVINO FPS

Figure 5. Person Vehicle Bike Detection OpenVINO FPS

The following table provides the FPS values for the use cases and all three systems tested.

Table 3. OpenVINO frames per second results (throughput in frames per second, more is better)

| Model | PowerEdge T440 | PowerEdge T550 | PowerEdge T560 |
|---|---|---|---|
| Face Detection FP16 | 3.54 | 14.77 | 55.94 |
| Person Detection FP16 | 1.94 | 7.6 | 17.37 |
| Person Vehicle Bike Detection FP16 | 249.62 | 701.76 | 2732.94 |
| Age Gender Recognition Retail FP16 | 8396.74 | 34131.92 | 80733.72 |
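The generation-over-generation multiples quoted in this blog can be recomputed directly from Table 3. The sketch below divides the T560 throughput by the T440 and T550 values for each model. It reproduces the 15.8x Face Detection figure versus the T440 and shows that Person Vehicle Bike Detection gives the largest gain over the T550 (about 3.9x).

```python
# Frames per second from Table 3: (T440, T550, T560)
fps = {
    "Face Detection FP16": (3.54, 14.77, 55.94),
    "Person Detection FP16": (1.94, 7.6, 17.37),
    "Person Vehicle Bike Detection FP16": (249.62, 701.76, 2732.94),
    "Age Gender Recognition Retail FP16": (8396.74, 34131.92, 80733.72),
}


def speedups(t440: float, t550: float, t560: float) -> tuple[float, float]:
    """Return the T560's throughput multiple over the T440 and over the T550."""
    return t560 / t440, t560 / t550


for model, values in fps.items():
    vs_t440, vs_t550 = speedups(*values)
    print(f"{model}: {vs_t440:.1f}x vs T440, {vs_t550:.1f}x vs T550")
```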

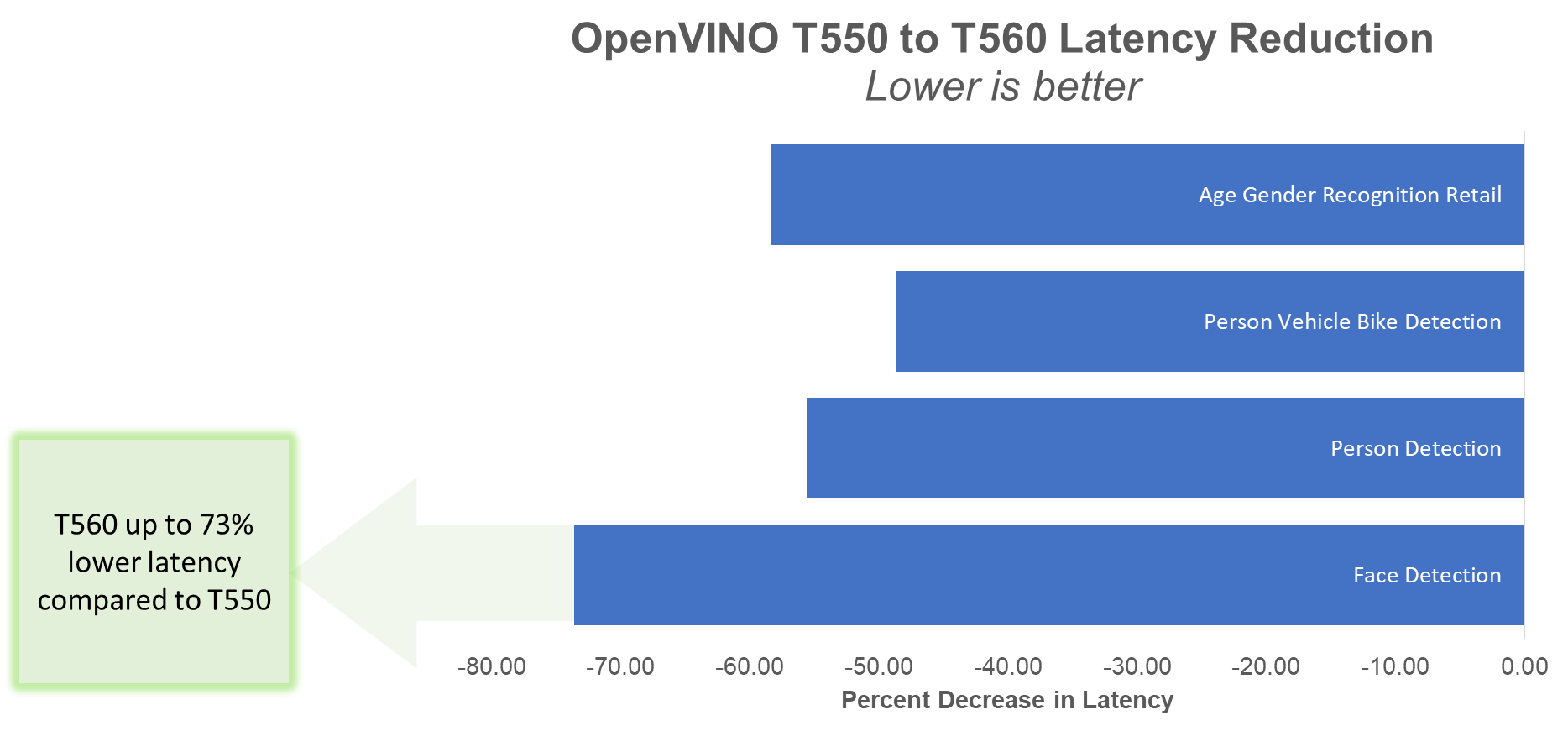

Lastly, the T560 reduces inferencing latency by up to 73% compared to the T550 on these same models, as illustrated in Figure 6.

Figure 6. Percent decrease in latency

The following table presents the latency values in ms for the T550 and T560.

Table 4. OpenVINO latency results (latency in ms, less is better)

| Model | PowerEdge T550 (ms) | PowerEdge T560 (ms) | Latency Reduction from T550 to T560 |
|---|---|---|---|
| Face Detection | 2164.53 | 570.48 | -73.64% |
| Person Detection | 4130.79 | 1833.29 | -55.62% |
| Person Vehicle Bike Detection | 45.56 | 23.4 | -48.64% |
| Age Gender Recognition Retail | 1.73 | 0.72 | -58.38% |
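The sketch below recomputes the reduction column of Table 4 and, as a rough cross-check against Table 3, applies Little's law (average requests in flight = throughput × latency). Using Little's law here is our assumption: it only holds if the benchmark keeps a roughly steady number of inference requests in flight, and the source does not state the OpenVINO stream or request settings. For three of the four models the product lands close to 32 or 64, which hints at, but does not confirm, a fixed level of parallelism.

```python
# Latency in ms from Table 4 and throughput in FPS from Table 3: (T550, T560)
latency_ms = {
    "Face Detection": (2164.53, 570.48),
    "Person Detection": (4130.79, 1833.29),
    "Person Vehicle Bike Detection": (45.56, 23.4),
    "Age Gender Recognition Retail": (1.73, 0.72),
}
fps = {
    "Face Detection": (14.77, 55.94),
    "Person Detection": (7.6, 17.37),
    "Person Vehicle Bike Detection": (701.76, 2732.94),
    "Age Gender Recognition Retail": (34131.92, 80733.72),
}


def pct_change(old: float, new: float) -> float:
    """Percent change from old to new; negative means a reduction."""
    return (new - old) / old * 100.0


def in_flight(fps_value: float, latency: float) -> float:
    """Little's law, L = lambda * W: average requests in flight,
    from throughput (frames/s) and latency (ms)."""
    return fps_value * latency / 1000.0


for model, (lat_550, lat_560) in latency_ms.items():
    fps_550, fps_560 = fps[model]
    print(
        f"{model}: {pct_change(lat_550, lat_560):+.2f}% latency; "
        f"~{in_flight(fps_550, lat_550):.0f} (T550) and "
        f"~{in_flight(fps_560, lat_560):.0f} (T560) requests in flight"
    )
```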

Concluding Thoughts

Emerging AI workloads have taken numerous industries by storm, and the latest-gen PowerEdge T560 is built for businesses looking to scale up and reap the benefits of AI-generated insights. Between support for 4th Gen Intel® Xeon® Scalable Processors, up to 6 graphics cards, and DDR5 memory, this tower can handle both CPU- and GPU-heavy use cases.

Our recent AI inferencing testing on CPU revealed the PowerEdge T560 has:

- Up to 318% better inferencing performance than the T440 for the TensorFlow benchmark

- Up to 15.8x the inferencing performance of the T440 and almost 4x the performance of the T550 for the OpenVINO benchmark

- Up to 73% lower latency than the T550 for the OpenVINO benchmark

While this concludes our blog series on “Six Years of Tower Servers,” we hope we have left you wanting to learn more about the PowerEdge T560. Don’t forget to check out our previous blog detailing exceptional database workload performance gains across tower servers. We’ll part ways with a short unboxing video that offers a look under the lid of the server.

Resources

- Six Years of Tower Servers: Exceptional Database Performance with PowerEdge T560 | Dell Technologies Info Hub

- Worldwide Spending on AI-Centric Systems Forecast to Reach $154 Billion in 2023, According to IDC

- The state of AI in 2023: Generative AI’s breakout year | McKinsey

- Tensorflow Benchmark - OpenBenchmarking.org

- OpenVINO Benchmark - OpenBenchmarking.org

- Optimize Inference with Intel® CPU Technology

[1] Batch size is a manually set parameter, ranging from 16 to 512. Read about the parameter meaning here.

Legal Disclosures

Based on September 2023 Dell labs testing subjecting the PowerEdge T440, T550, and T560 tower servers to AI inference benchmarks – OpenVINO and TensorFlow via the Phoronix Test Suite. Actual results will vary.

Authors: Olivia Mauger, Jeremy Johnson, Delmar Hernandez | Compute Tech Marketing

HPC Application Performance on Dell PowerEdge C6620 with INTEL 8480+ SPR

Thu, 09 Nov 2023 15:46:41 -0000


Overview

With a robust HPC and AI Innovation Lab at the helm, Dell continues to keep PowerEdge servers at the cutting edge of the ever-evolving world of HPC. The latest stride in this journey is the Intel Sapphire Rapids processor, a computational powerhouse. Combined with Dell PowerEdge 16th generation servers, it brings a new level of performance and efficiency to the HPC landscape. This blog post provides comprehensive benchmark assessments spanning various verticals within high-performance computing.

Dell Technologies aims to help customers accelerate time to value and to use benchmark performance and scaling studies to plan their environments. With Dell’s solutions, customers spend less time testing different combinations of CPU, memory, and interconnect, or searching for the CPU with the performance sweet spot. Customers also do not have to spend time deciding which BIOS features to tweak for the best performance and scaling. Dell wants to accelerate the setup, deployment, and tuning of HPC clusters so that customers realize real value while running their applications and solving complex problems (such as weather modeling).

Testbed Configuration

This study conducted benchmarking on high-performance computing applications using Dell PowerEdge 16th generation servers featuring Intel Sapphire Rapids processors.

Benchmark Hardware and Software Configuration

Table 1. Test bed system configuration used for this benchmark study

| Component | Configuration |
|---|---|
| Platform | Dell PowerEdge C6620 |
| Processor | Intel Sapphire Rapids 8480+ |
| Cores/Socket | 56 (112 total) |
| Base Frequency | 2.0 GHz |
| Max Turbo Frequency | 3.80 GHz |
| TDP | 350 W |
| L3 Cache | 105 MB |
| Memory | 512 GB, DDR5 4800 MT/s |
| Interconnect | NVIDIA Mellanox ConnectX-7 NDR 200 |
| Operating System | Red Hat Enterprise Linux 8.6 |
| Linux Kernel | 4.18.0-372.32.1 |
| BIOS | 1.0.1 |
| OFED | 5.6.2.0.9 |
| System Profile | Performance Optimized |
| Compiler | Intel oneAPI 2023.0.0 (Compiler 2023.0.0) |
| MPI | Intel MPI 2021.8.0 |
| Turbo Boost | ON |

| Application | Vertical Domain | Benchmark Datasets |
|---|---|---|
| OpenFOAM | Manufacturing - Computational Fluid Dynamics (CFD) | Motorbike 50 M, 34 M, and 20 M cell meshes |
| Weather Research and Forecasting (WRF) | Weather and Environment | CONUS v4.4 |
| Large-scale Atomic/Molecular Massively Parallel Simulator (LAMMPS) | Molecular Dynamics | Rhodo, EAM, Stillinger-Weber, Tersoff, HECBioSim, and AIREBO |
| GROMACS | Life Sciences - Molecular Dynamics | HECBioSim Benchmarks (3M Atoms), Water, and PRACE LignoCellulose |
| CP2K | Life Sciences | H2O-DFT-LS-NREP-4, 6; H2O-64-RI-MP2 |

Performance Scalability for HPC Application Domain

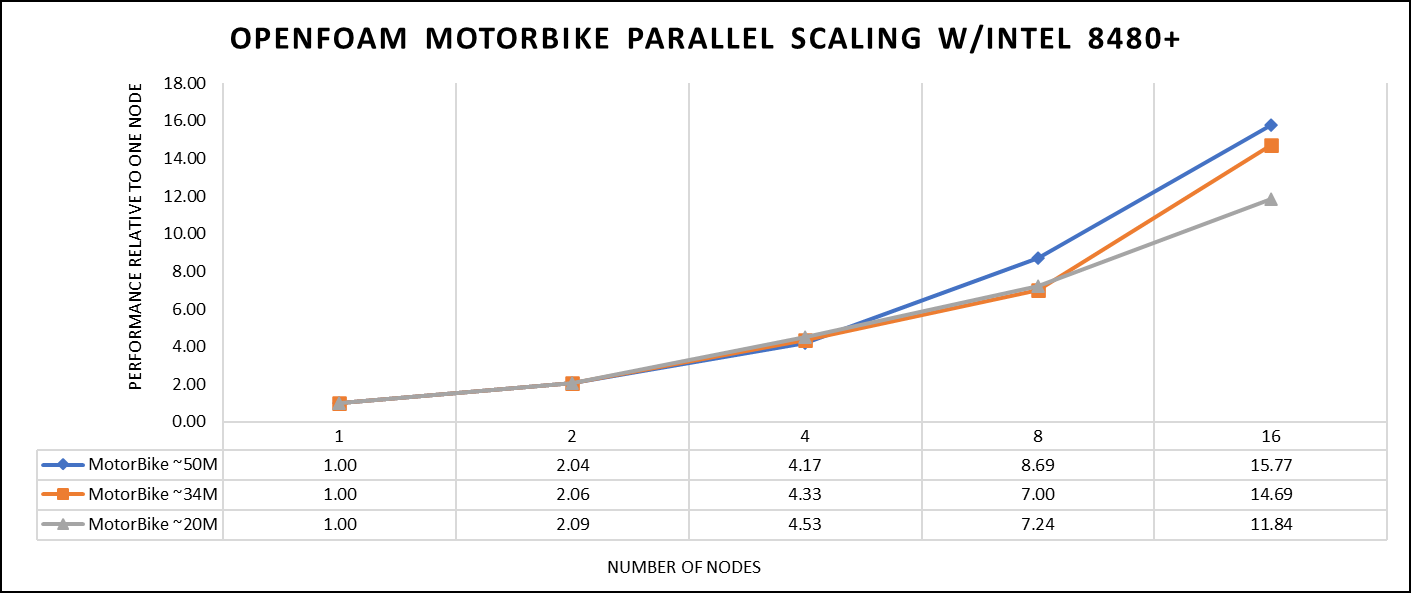

Vertical – Manufacturing | Application - OpenFOAM

OpenFOAM is an open-source computational fluid dynamics (CFD) software package renowned for its versatility in simulating fluid flows, turbulence, and heat transfer. It offers a robust framework for engineers and scientists to model complex fluid dynamics problems and conduct simulations with customizable features. This study used OpenFOAM version 9, compiled with the Intel oneAPI 2023.0.0 compilers and Intel MPI 2021.8.0. For successful compilation and optimization with the Intel compilers, additional flags such as '-O3 -xSAPPHIRERAPIDS -m64 -fPIC' were added.

The motorbike tutorial case from the simpleFoam solver category was used to evaluate the performance of the OpenFOAM package on Intel 8480+ processors. Three grids, of 20 M, 34 M, and 50 M cells, were generated using the blockMesh and snappyHexMesh utilities of OpenFOAM. Each run used all cores (112 cores per node), and scalability tests from a single node to sixteen nodes were run for all three grids. The execution time of the steady-state simpleFoam solver was recorded as the performance metric.

The figure below shows the application performance for all the datasets:

Figure 1. The scaling performance of the OpenFOAM Motorbike dataset using the Intel 8480+ processor, with a focus on performance compared to a single node.

The results are normalized to the single-node result, and the scalability is depicted in Figure 1. The Intel-compiled OpenFOAM binaries show linear scaling from a single node to sixteen nodes on 8480+ processors for the largest dataset (50 M cells). For the 20 M and 34 M cell datasets, scaling is linear up to eight nodes, and scalability drops off from eight to sixteen nodes.

Smaller datasets can achieve satisfactory results with fewer processors and nodes. Increasing the node count, and therefore the processor count, increases inter-processor communication relative to the solver's computation time, which eventually extends the overall runtime. Consequently, higher node counts are more beneficial for larger datasets in OpenFOAM simulations.
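Because Figure 1 reports performance relative to a single node, the sketch below shows one common way to compute that normalization: speedup S(N) = T(1) / T(N), plus parallel efficiency E(N) = S(N) / N. An efficiency near 100% corresponds to the linear scaling described above, and a falling efficiency reflects the growing share of communication. The runtimes are hypothetical values chosen for illustration, not the measured OpenFOAM results.

```python
def scaling_table(runtimes_s: dict[int, float]) -> list[tuple[int, float, float]]:
    """Normalize runtimes to the single-node run.

    Returns (nodes, speedup, parallel efficiency) where
    speedup = T(1) / T(N) and efficiency = speedup / N.
    """
    baseline = runtimes_s[1]
    rows = []
    for nodes in sorted(runtimes_s):
        speedup = baseline / runtimes_s[nodes]
        rows.append((nodes, speedup, speedup / nodes))
    return rows


# Hypothetical solver wall times (seconds) for illustration only;
# these are NOT the measured OpenFOAM results from Figure 1.
example = {1: 1600.0, 2: 800.0, 4: 400.0, 8: 205.0, 16: 130.0}

for nodes, speedup, eff in scaling_table(example):
    print(f"{nodes:>2} nodes: speedup {speedup:5.2f}x, efficiency {eff:6.1%}")
```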

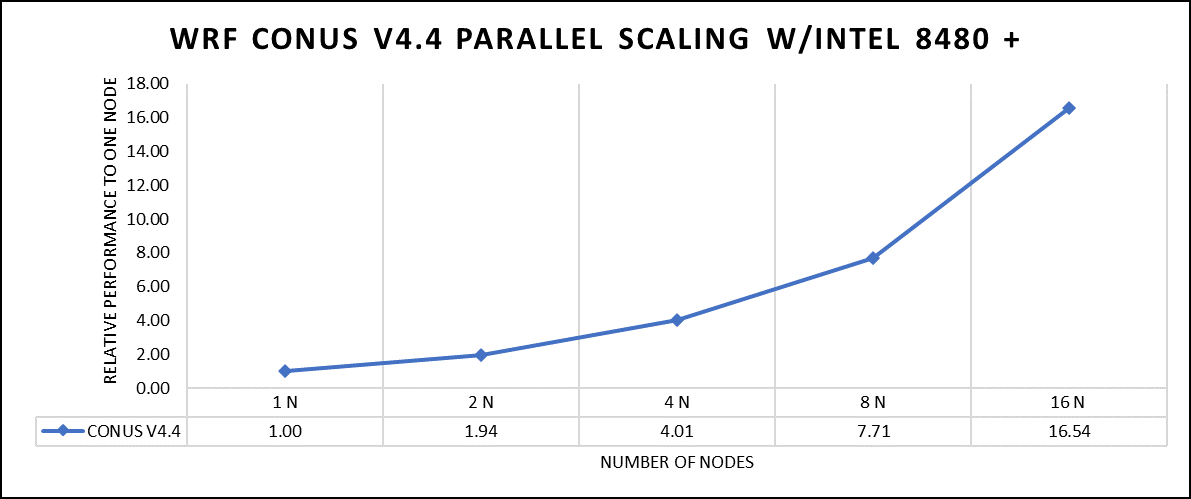

Vertical – Weather and Environment | Application - WRF

The Weather Research and Forecasting model (WRF) is at the forefront of meteorological and atmospheric research, and its latest version reflects advancements in high-performance computing (HPC). WRF enables scientists and meteorologists to simulate and forecast complex weather patterns with unparalleled precision. This study used WRF version 4.5, compiled with the Intel oneAPI 2023.0.0 compilers and Intel MPI 2021.8.0. For successful compilation and optimization with the Intel compilers, additional flags such as '-O3 -qopt-zmm-usage=high -xSAPPHIRERAPIDS -fpic' were used.

The dataset used in this study is CONUS v4.4, meaning the model's grid, parameters, and input data are set up to focus on weather conditions within the continental United States. This configuration is particularly useful for researchers, meteorologists, and government agencies who need high-resolution weather forecasts and simulations tailored to this geographic area. Configuration details such as grid resolution, atmospheric physics schemes, and input data sources can vary depending on the version of WRF and the goals of the modeling project. This study predominantly adhered to the default input configuration, making minimal alterations to the source code or input file. Each run used all cores (112 cores per node). Scalability tests were run from a single node to sixteen nodes, and the runtime in seconds was recorded as the performance metric.

Figure 2. The scaling performance of the WRF CONUS dataset using the Intel 8480+ processor, with a focus on performance compared to a single node.

The Intel-compiled binaries of WRF show linear scaling from a single node to sixteen nodes on 8480+ processors for the new CONUS v4.4. For the best performance with WRF, the tile size, the number of processes, and the number of threads per process should be carefully considered. Because WRF is constrained by memory and DRAM bandwidth, the team opted for the latest DDR5 4800 MT/s DRAM for test evaluations. BIOS settings, particularly Sub-NUMA Clustering configurations, are also crucial: they can significantly influence the performance of memory-bound applications, potentially improving performance by one to five percent.

For more detailed BIOS tuning recommendations, see the previous blog post on optimizing BIOS settings for performance.

Vertical – Molecular Dynamics | Application – LAMMPS

LAMMPS, which stands for Large-scale Atomic/Molecular Massively Parallel Simulator, is a powerful tool for HPC. It is specifically designed to harness the immense computational capabilities of HPC clusters and supercomputers. LAMMPS allows researchers and scientists to conduct large-scale molecular dynamics simulations with remarkable efficiency and scalability. This study used the 15 June 2023 version of LAMMPS, compiled with the Intel oneAPI 2023.0.0 compilers and Intel MPI 2021.8.0. For successful compilation and optimization with the Intel compilers, additional flags such as '-O3 -qopt-zmm-usage=high -xSAPPHIRERAPIDS -fpic' were used.

The team opted for the INTEL package in its default configuration, which offers pair styles optimized for vector instructions on Intel processors. The team also ran some benchmarks that the INTEL package does not support, to check their performance and scaling. The performance metric for this benchmark is nanoseconds per day (ns/day), where higher is better.
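The ns/day metric converts simulation speed into simulated time per wall-clock day. Assuming a fixed timestep, it follows directly from the timestep rate: ns/day = steps per second × timestep (fs) × 86,400 / 10^6. LAMMPS typically prints this value itself at the end of a run; the sketch below only shows the arithmetic, and the run length, wall time, and 2 fs timestep are hypothetical.

```python
FS_PER_NS = 1_000_000  # 1 ns = 10^6 fs
SECONDS_PER_DAY = 86_400


def ns_per_day(steps_per_second: float, timestep_fs: float) -> float:
    """Convert a timestep rate to simulated nanoseconds per wall-clock day."""
    return steps_per_second * timestep_fs * SECONDS_PER_DAY / FS_PER_NS


def ns_per_day_from_run(steps: int, wall_seconds: float, timestep_fs: float) -> float:
    """Same metric from a finished run: total steps and elapsed wall time."""
    return ns_per_day(steps / wall_seconds, timestep_fs)


# Hypothetical run: 2000 steps of 2 fs in 40 s of wall time.
print(f"{ns_per_day_from_run(2000, 40.0, 2.0):.3f} ns/day")
```

Because the timestep enters linearly, ns/day values are only comparable across datasets that use the same timestep; relative scaling within one dataset is unaffected.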

Two factors were considered when compiling data for comparison: the number of nodes and the core count. The performance improvements observed on 8480+ nodes with 112 cores each are shown below:

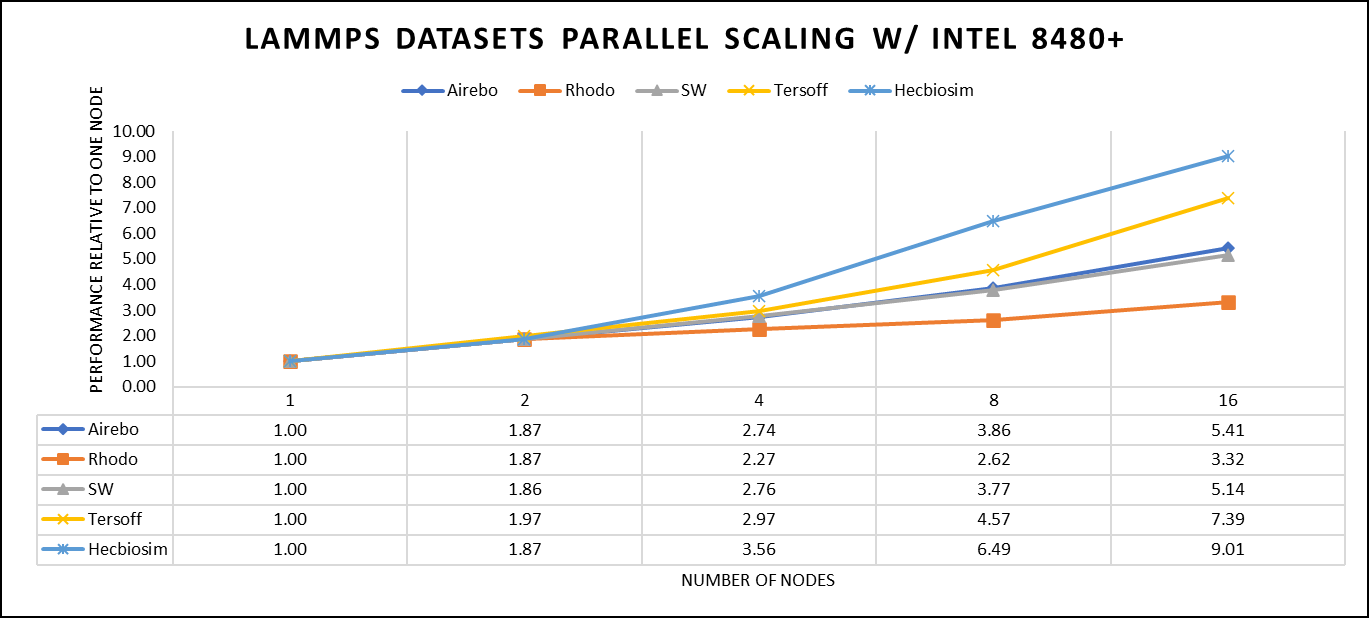

Figure 3. The scaling performance of the LAMMPS datasets using the Intel 8480+ processor, with a focus on performance compared to a single node.

Figure 3 shows the scaling of different LAMMPS datasets. Scalability improves noticeably as the number of atoms and steps increases. Two of the datasets, EAM and HECBioSim, each contain over 3 million atoms, and both showed better scalability than the other datasets analyzed.

Vertical – Molecular Dynamics | Application - GROMACS

GROMACS, a high-performance molecular dynamics package, is a vital tool for HPC environments. Tailored for HPC clusters and supercomputers, GROMACS specializes in simulating the intricate movements and interactions of atoms and molecules. Researchers in diverse fields, including biochemistry and biophysics, rely on its efficiency and scalability to explore complex molecular processes. GROMACS harnesses the immense computational power of HPC, allowing scientists to conduct intricate simulations that reveal critical insights into atomic-level behaviors, from biomolecules to chemical reactions and materials. This study used GROMACS version 2023.1, compiled with the Intel oneAPI 2023.0.0 compilers and Intel MPI 2021.8.0. For successful compilation and optimization with the Intel compilers, additional flags such as '-O3 -qopt-zmm-usage=high -xSAPPHIRERAPIDS -fpic' were used.

The team curated a range of datasets for the benchmarking assessments. First, the team included "water GMX_50 1536" and "water GMX_50 3072," which represent simulations involving water molecules. These simulations are pivotal for gaining insights into solvation, diffusion, and water's behavior in diverse conditions. Next, the team incorporated the "HECBIOSIM 14 K" and "HECBIOSIM 30 K" datasets, which were specifically chosen for their ability to investigate intricate systems and larger biomolecular structures. Lastly, the team included the "PRACE Lignocellulose" dataset, which aligns with the benchmarking objectives, particularly in the context of lignocellulose research. Together, these datasets offer a diverse array of scenarios for the benchmarking assessments.

The performance assessment was based on nanoseconds per day (ns/day) for each dataset, providing insight into computational efficiency. Additionally, the team carefully tuned the mdrun parameters (for example, -ntomp, -dlb, -tunepme, and -nsteps) in every test run to ensure accurate and reliable results. The team examined scalability with tests spanning from a single node to sixteen nodes.
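As an illustration of where those tuning parameters go, the sketch below assembles an `mpirun` plus `gmx mdrun` command line using only documented mdrun options (-s, -ntomp, -dlb, -tunepme/-notunepme, -nsteps, -resethway, -noconfout). It assumes an MPI-enabled build installed under the usual name `gmx_mpi` and a hypothetical input file `benchmark.tpr`; the rank/thread split and step count are illustrative, not the team's tuned settings.

```python
import shlex


def mdrun_command(
    tpr: str,
    ranks: int,
    omp_threads: int,
    nsteps: int,
    dlb: str = "auto",
    tune_pme: bool = True,
) -> list[str]:
    """Build an mpirun + gmx_mpi mdrun argument list for one benchmark run.

    -resethway resets the performance counters halfway through the run,
    and -noconfout skips writing the final configuration.
    """
    if dlb not in ("auto", "yes", "no"):
        raise ValueError("dlb must be 'auto', 'yes', or 'no'")
    return [
        "mpirun", "-np", str(ranks),
        "gmx_mpi", "mdrun",
        "-s", tpr,
        "-ntomp", str(omp_threads),
        "-dlb", dlb,
        "-tunepme" if tune_pme else "-notunepme",
        "-nsteps", str(nsteps),
        "-resethway",
        "-noconfout",
    ]


# Hypothetical example: 2 nodes x 112 cores run as 56 ranks x 4 threads.
cmd = mdrun_command("benchmark.tpr", ranks=56, omp_threads=4, nsteps=10000)
print(shlex.join(cmd))
```

Resetting counters halfway keeps start-up and load-balancing warm-up out of the reported ns/day, which is one common reason to use -resethway in benchmarks.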

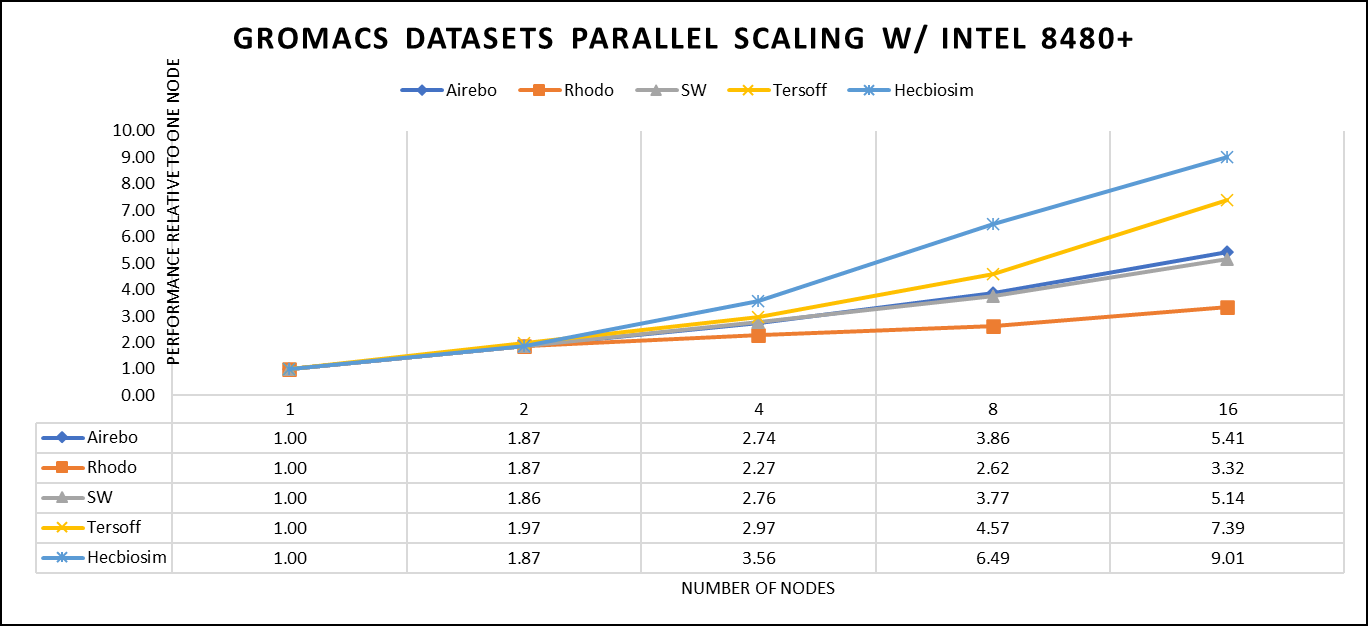

Figure 4. The scaling performance of the GROMACS datasets using the Intel 8480+ processor, with a focus on performance compared to a single node.

For ease of comparison across the various datasets, the relative performance has been combined into a single graph. However, each dataset behaves differently in terms of performance, as each uses different molecular topology input files (.tpr) and configuration files.

The team achieved the expected linear performance scaling for GROMACS up to eight nodes. All cores in each server were used while running these benchmarks. The performance increases are close to linear across all dataset types; however, performance drops off at larger node counts because of the smaller dataset sizes and the number of simulation iterations.

Vertical – Molecular Dynamics | Application – CP2K

CP2K is a versatile computational software package that covers a wide range of quantum chemistry and solid-state physics simulations, including molecular dynamics. It is not strictly limited to molecular dynamics but is instead a comprehensive tool for various computational chemistry and materials science tasks. While CP2K is widely used for molecular dynamics simulations, it can also perform tasks like electronic structure calculations, ab initio molecular dynamics, hybrid quantum mechanics/molecular mechanics (QM/MM) simulations, and more.

This study used CP2K version 2023.1, compiled with the Intel oneAPI 2023.0.0 compilers and Intel MPI 2021.8.0. For successful compilation and optimization with the Intel compilers, additional flags such as '-O3 -qopt-zmm-usage=high -xSAPPHIRERAPIDS -fpic' were used.

Focusing on high-performance computing (HPC), the team used specific datasets optimized for computational efficiency. The first dataset, "H2O-DFT-LS-NREP-4,6," was configured for HPC simulations and calculations, emphasizing the modeling of water (H2O) using linear-scaling Density Functional Theory (DFT). The appended "NREP-4,6" refers to the two values of the NREP parameter that were tested (4 and 6), which set the size of the simulated system. The second dataset, "H2O-64-RI-MP2," was crafted for HPC applications and examines a system of 64 water molecules (H2O). By employing the Resolution of Identity (RI) method with second-order Møller–Plesset perturbation theory (MP2), this dataset demonstrates the computational capabilities of HPC for advanced electronic structure calculations. The team examined scalability with tests spanning from a single node to sixteen nodes.

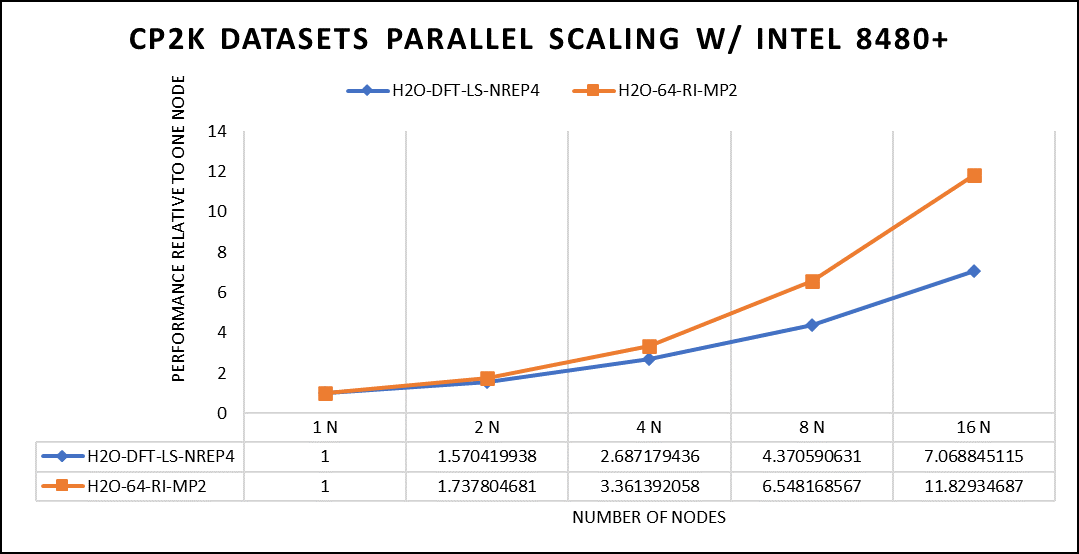

Figure 5. The scaling performance of the CP2K datasets using the Intel 8480+ processor, with a focus on performance compared to a single node.

The H2O-DFT-LS dataset represents a single-point energy calculation employing linear-scaling Density Functional Theory (DFT). The system consists of 6144 atoms (2048 water molecules) in a cubic simulation box roughly 39 Å on each side. To adjust the computational workload, you can modify the NREP parameter in the input file.
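To show why NREP changes the workload so sharply, the sketch below assumes that the input replicates a base cell of 32 water molecules (96 atoms) NREP times along each of the three axes. That base-cell size is our assumption, chosen because it would make the 6,144-atom system quoted above the NREP 4 case. Under it, NREP 6 grows to 20,736 atoms, about 3.4x larger (6³/4³ = 3.375), which is consistent with its much larger memory footprint.

```python
ATOMS_PER_WATER = 3
WATERS_PER_CELL = 32  # assumed base cell that the input replicates


def system_size(nrep: int) -> tuple[int, int]:
    """Return (water molecules, atoms) when the base cell is replicated
    nrep times along each of the three box axes."""
    waters = WATERS_PER_CELL * nrep ** 3
    return waters, waters * ATOMS_PER_WATER


for nrep in (4, 6):
    waters, atoms = system_size(nrep)
    print(f"NREP {nrep}: {waters} water molecules, {atoms} atoms")
```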

Running with NREP6 requires more than 512 GB of memory on a single node; failing to meet this requirement results in a segmentation fault. These benchmarking efforts encompass configurations of up to 16 compute nodes. Optimal performance is achieved when running NREP4 and NREP6 in hybrid mode, which combines Message Passing Interface (MPI) and Open Multi-Processing (OpenMP). This configuration exhibits the best scaling performance, particularly on four to eight nodes. However, scaling beyond eight nodes is not strictly linear. Figure 5 depicts the outcomes when using pure MPI, with 112 cores and a single thread per core.
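Choosing a hybrid MPI/OpenMP layout means picking how many MPI ranks run per node and how many OpenMP threads each rank uses. The sketch below enumerates layouts that use all 112 cores of a two-socket 8480+ node while keeping each rank's threads within one socket; the pure MPI case in Figure 5 is the (112 ranks, 1 thread) entry. The launch line assumes Intel MPI's `mpirun` with `-n` (total ranks) and `-ppn` (ranks per node), and assumes the launcher forwards `OMP_NUM_THREADS` to every rank; the `cp2k.psmp` binary and `H2O-dft-ls.inp` input names are illustrative.

```python
def hybrid_layouts(cores_per_node: int, sockets: int) -> list[tuple[int, int]]:
    """List (MPI ranks per node, OpenMP threads per rank) pairs that use every
    core exactly once and keep each rank's threads within a single socket."""
    cores_per_socket = cores_per_node // sockets
    layouts = []
    for threads in range(1, cores_per_socket + 1):
        if cores_per_socket % threads == 0:  # threads tile a socket evenly
            layouts.append((cores_per_node // threads, threads))
    return layouts


def launch_line(nodes: int, ranks_per_node: int, threads: int, binary: str, inp: str) -> str:
    """Intel MPI style launch line (-n total ranks, -ppn ranks per node)."""
    total = nodes * ranks_per_node
    return (
        f"OMP_NUM_THREADS={threads} mpirun -n {total} -ppn {ranks_per_node} "
        f"{binary} -i {inp}"
    )


# C6620 node in this study: 2 sockets x 56 cores = 112 cores.
for ranks, threads in hybrid_layouts(112, 2):
    print(f"{ranks:>3} ranks/node x {threads:>2} threads")

# Hypothetical hybrid run on 8 nodes; binary and input names are illustrative.
print(launch_line(8, 16, 7, "cp2k.psmp", "H2O-dft-ls.inp"))
```

Keeping a rank's threads inside one socket avoids cross-socket memory traffic for that rank, which matters for memory-bound codes; the best layout still has to be found by testing.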

Conclusion

With equivalent core counts, the prior generation of Intel Xeon processors can match the performance of their Sapphire Rapids counterparts, but only by doubling the number of nodes. In other words, a single node with two 350 W 8480+ processors can deliver performance comparable to two nodes with 8358 processors at 500 W per node. In addition to optimizing the BIOS settings as outlined in our Intel-focused blog, the team advises disabling Hyper-Threading specifically for the benchmarks discussed in this article. For other types of workloads, the team recommends thorough testing and enabling Hyper-Threading if it proves beneficial. Finally, for this performance study, the team highly recommends using the Mellanox NDR 200 interconnect.

Six Years of Tower Servers: Exceptional Database Performance with PowerEdge T560

Thu, 12 Oct 2023 21:43:58 -0000


Transformation is an intrinsic part of the technological world, just as seasons transition and leaves change hue during fall. In the six years since the launch of 14th Generation PowerEdge servers, the tower servers have evolved greatly with no shortage of performance gains, feature improvements, and expanding workload capabilities. To demonstrate the magnitude and scope of these improvements, we tested two different workloads across the PowerEdge T440, T550, and T560 towers.

While we primarily discuss database workload testing in this blog, stay tuned for another post covering Artificial Intelligence (AI) inferencing in the coming weeks.

PowerEdge tower upgrades – T440 to T550 to T560

Before we get ahead of ourselves, there are a variety of feature improvements in the latest tower server, the PowerEdge T560, that make it well worth the upgrade:

Table 1. PowerEdge T440 vs T550 vs T560 key features

| | PowerEdge T440 | PowerEdge T550 | PowerEdge T560 |
|---|---|---|---|
| CPU | 2nd Generation Intel® Xeon® Scalable Processors | 3rd Generation Intel® Xeon® Scalable Processors | 4th Generation Intel® Xeon® Scalable Processors |
| GPU | Up to 1 DW GPU | Up to 2 DW or 5 SW GPUs | Up to 2 DW or 6 SW GPUs |
| Drives (up to) | 16 x 2.5" or 8 x 3.5" | 8 x 2.5" or 16 x 2.5" or 24 x 2.5" or 8 x 3.5" or 8 x 3.5" + 8 x 2.5" | 8 x 2.5" or 16 x 2.5" or 24 x 2.5" or 12 x 3.5" or 8 x 3.5" or 8 x 3.5" + 8 x 2.5" |
| Memory | DDR4, up to 2666 MT/s DIMM speed | DDR4, up to 3200 MT/s DIMM speed | DDR5, up to 4800 MT/s DIMM speed |
| PCIe Slots | PCIe Gen3 slots | PCIe Gen4 slots | PCIe Gen5 slots |

As Table 1 illustrates, the T560 is truly a powerhouse, designed to reflect the evolving workload requirements of small to medium businesses from office to edge. Compared to the T550, the T560 has 20% greater GPU capacity. Considering Forrester projects a 36% average annual growth in generative AI spending, this increased GPU capacity is fantastic for businesses looking to pursue emerging AI workloads.

There are also huge benefits associated with the jump from 2nd Gen Intel® Xeon® Processors in the T440 to 4th Gen Intel® Xeon® Processors in the T560, including up to 1.8x greater memory bandwidth. Learn more about memory bandwidth for Next-Gen PowerEdge Servers here. Additionally, PCIe Gen 5 doubles the data transfer rate compared to PCIe Gen 4 and quadruples the transfer rate compared to PCIe Gen 3, described in greater detail here. The T560 also supports the latest PowerEdge RAID Controller 12 (PERC 12), while the T550 only supports PERC 11 and the T440 only supports PERC 9. Read about the performance improvements of PERC 12 here.
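The doubling and quadrupling follow directly from the per-lane signaling rates: 8, 16, and 32 GT/s for PCIe Gen 3, 4, and 5, all of which use 128b/130b line encoding. The sketch below computes the raw one-direction bandwidth of an x16 link for each generation; delivered throughput is somewhat lower once packet and protocol overhead are included.

```python
# Per-lane signaling rate in gigatransfers per second for each PCIe generation.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}
ENCODING = 128 / 130  # Gen3 through Gen5 use 128b/130b line encoding


def pcie_gb_per_s(gen: int, lanes: int = 16) -> float:
    """Approximate one-direction link bandwidth in GB/s, before protocol overhead."""
    bits_per_s = GT_PER_S[gen] * 1e9 * lanes * ENCODING
    return bits_per_s / 8 / 1e9


for gen in (3, 4, 5):
    print(f"PCIe Gen{gen} x16: ~{pcie_gb_per_s(gen):.2f} GB/s per direction")

print(f"Gen5/Gen4: {pcie_gb_per_s(5) / pcie_gb_per_s(4):.1f}x, "
      f"Gen5/Gen3: {pcie_gb_per_s(5) / pcie_gb_per_s(3):.1f}x")
```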

And these are just the highlights. Find more information on the technologies powering Next Generation PowerEdge servers here.

Figure 1. From left to right: T440, T550, T560

PowerEdge T560 accelerates database workloads

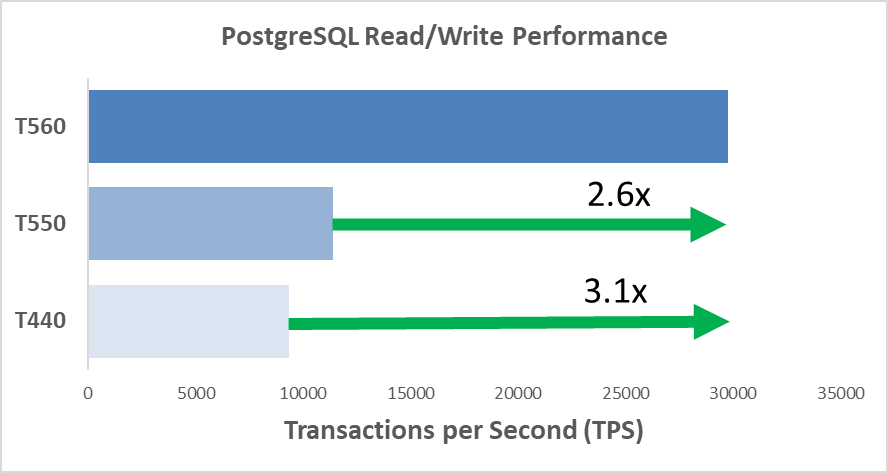

Throughout the world of small and medium businesses, one use case and its attendant application cut across nearly every enterprise: the database. PowerEdge tower servers enable businesses to maintain control of vital customer data in a high-speed array of drives, all in your chosen database type. As demonstrated by lab testing, selecting a latest-gen T560 can provide up to 3x the transactions per second (read/write performance) compared to the T440 and 2.6x the transactions per second compared to the T550.[1]

When using a relational database with complex queries and large volumes of data, performance is pivotal. As we all know and have experienced, data often grows exponentially over the course of work and business. The exceptional read/write performance, scalable storage capacity, and easy deployment of the T560 keep businesses ahead of this deluge of data and its associated headaches.

Testing Details and Results

On all three towers, we used the Phoronix Test Suite to evaluate database performance with PostgreSQL, an open-source SQL relational database that is popular with small businesses. The testing configurations are listed in the following table. Each system has a Gold-class Intel® Xeon® processor and equal memory capacity, while the storage differs to reflect industry transitions. All testing was conducted in a Dell Technologies lab.

Table 2. Testing Configurations

| | PowerEdge T440 | PowerEdge T550 | PowerEdge T560 |
|---|---|---|---|
| CPU | Intel® Xeon® Gold 5222 4c/8T, TDP 105W | Intel® Xeon® Gold 6338N 32c/64T, TDP 185W | Intel® Xeon® Gold 6448Y 32c/64T, TDP 225W |
| Storage | 4x 800 GB SAS SSD (RAID 5) | 4x 960 GB SAS SSD | 4x 1.6TB NVMe |
| Memory | 512 GB DDR4 | 512 GB DDR4 | 512 GB DDR5 |

The read/write performance (measured in transactions per second) of the PostgreSQL database workload is shown in Figure 2. These results correspond to the test with 800 clients and a scaling factor of 10,000. Clients essentially represent the number of users, and the scaling factor is a multiplier for the number of rows in each table.
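To put the scaling factor in perspective, the sketch below assumes that the Phoronix PostgreSQL test drives PostgreSQL's bundled pgbench tool with its default TPC-B-like schema. The clients and scaling-factor terminology matches pgbench, but the source does not name the tool. In that schema, each unit of scale adds 1 branch, 10 tellers, and 100,000 accounts, so a scaling factor of 10,000 means one billion rows in the accounts table.

```python
# Rows per unit of scale in the standard pgbench schema.
ROWS_PER_SCALE = {
    "pgbench_branches": 1,
    "pgbench_tellers": 10,
    "pgbench_accounts": 100_000,
}


def pgbench_rows(scale: int) -> dict[str, int]:
    """Initial row counts created by `pgbench -i -s <scale>`.

    pgbench_history starts empty and grows as transactions run.
    """
    rows = {table: n * scale for table, n in ROWS_PER_SCALE.items()}
    rows["pgbench_history"] = 0
    return rows


# Scaling factor used in the test above.
for table, count in pgbench_rows(10_000).items():
    print(f"{table:>17}: {count:,} rows")
```

The pgbench documentation advises keeping the scale factor at least as large as the client count for the TPC-B-like script, because every transaction updates one branch row. With 10,000 branches and 800 clients, the test stays well clear of measuring mostly row-lock contention.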

Figure 2. PostgreSQL read/write performance gains compared to PowerEdge T560

As previously discussed, this benchmark shows the PowerEdge T560 to be 2.6x faster than the PowerEdge T550 and 3.1x faster than the PowerEdge T440 in PostgreSQL workloads.

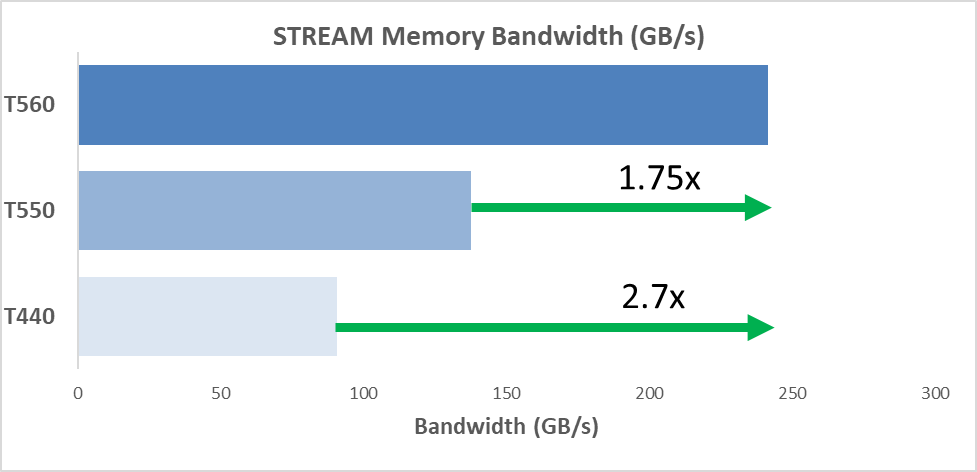

We also evaluated memory bandwidth across all three systems using STREAM. This benchmark is used throughout the tech industry to characterize memory bandwidth on many different devices. Synthetic benchmarks can be useful to show the relative performance of new technologies objectively. In the following figure, we report STREAM’s Triad (in GB/s), which is the most complex scenario in the benchmark.
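STREAM's Triad kernel computes `a[i] = b[i] + q * c[i]` over arrays much larger than the CPU caches and reports bandwidth by counting three 8-byte values moved per element (two reads, one write). The NumPy sketch below mimics that byte accounting for illustration only: it is single-threaded, NumPy makes extra passes over memory compared with STREAM's fused C loop, and its numbers are not comparable to the results reported here. The function name and default sizes are our own choices.

```python
import time

import numpy as np


def triad_bandwidth_gbs(n: int = 10_000_000, scalar: float = 3.0, trials: int = 5):
    """Rough NumPy analogue of STREAM's Triad kernel: a[i] = b[i] + scalar * c[i].

    Returns the result array and the best observed bandwidth in GB/s,
    using STREAM's byte-counting convention.
    """
    b = np.full(n, 2.0)  # float64, 8 bytes per element
    c = np.full(n, 1.0)
    a = np.empty(n)
    best = float("inf")
    for _ in range(trials):
        start = time.perf_counter()
        # Two in-place ufunc calls avoid allocating a temporary array,
        # but still touch memory more often than a single fused loop.
        np.multiply(c, scalar, out=a)
        np.add(a, b, out=a)
        best = min(best, time.perf_counter() - start)
    # STREAM counts Triad as 3 arrays x 8 bytes per element: read b, read c, write a.
    bytes_counted = 3 * 8 * n
    return a, bytes_counted / max(best, 1e-9) / 1e9


if __name__ == "__main__":
    _, gbs = triad_bandwidth_gbs()
    print(f"Triad (NumPy sketch): {gbs:.1f} GB/s")
```

Taking the best of several trials follows STREAM's own practice of reporting the fastest repetition.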

Figure 3. Memory bandwidth gains compared to PowerEdge T560

The PowerEdge T560 delivers 1.75x the memory bandwidth (GB/s) of the T550 and 2.7x that of the T440, both of which use DDR4 memory. These results reflect the substantial bandwidth increase that DDR5 brings.[2]
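Theoretical peak DRAM bandwidth is roughly channels x transfer rate x 8 bytes, since each DDR4 or DDR5 channel carries 64 data bits. The sketch below applies that formula to two hypothetical 8-channel sockets, one with DDR4-3200 and one with DDR5-4800; these are illustrative values, not the DIMM speeds or channel population of the tested towers. Measured STREAM gains also depend on core count, channel count, and DIMM population, so they need not match this ratio.

```python
def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int, bus_bytes: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (decimal units).

    Assumes a 64-bit (8-byte) data path per channel; ECC bits are excluded.
    """
    return channels * mega_transfers_per_s * 1e6 * bus_bytes / 1e9


if __name__ == "__main__":
    # Hypothetical 8-channel sockets, not the tested configurations.
    ddr4 = peak_bandwidth_gbs(8, 3200)
    ddr5 = peak_bandwidth_gbs(8, 4800)
    print(f"DDR4-3200: {ddr4:.1f} GB/s, DDR5-4800: {ddr5:.1f} GB/s, ratio {ddr5 / ddr4:.2f}x")
```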

Concluding Thoughts

Choosing a server with ample headroom for more transactions per second helps future-proof businesses against the performance roadblocks that inevitably arise as data needs grow. With 2.7x the memory bandwidth and up to 3x the transactions per second of the T440, the PowerEdge T560 delivers both exceptional performance and scalability for data-driven businesses of any size.

While all three towers are prepared for a variety of workloads, the next-gen technologies in the T560 make it a great investment for businesses looking to stay ahead of the curve, especially in terms of database performance. Look out for our next blog, which reveals results from two AI inferencing benchmarks, Intel’s OpenVINO and TensorFlow, tested on these same systems.

For now, we’ll leave you with this:

Why did the tower server go apple picking?

To find the core of its processing power!

Don’t worry, we groaned too.

Resources

- Forrester Generative AI Report

- Sapphire Rapids and Memory Bandwidth

- PCIe Gen 5 Performance

- Dell PowerEdge RAID Controller 12 (PERC 12) 16th Generation (16G) Server Performance vs PERC 11 & PERC 10 (delltechnologies.com)

- Dell PowerEdge 16G Intel Servers Announced - StorageReview.com

- PostgreSQL pgbench Benchmark - OpenBenchmarking.org

- PostgreSQL: Documentation: 16: pgbench

- STREAM Benchmark

Authors: Olivia Mauger, Jeremy Johnson, Delmar Hernandez | Compute Tech Marketing

[1] Based on September 2023 Dell Technologies lab testing on PowerEdge T440, T550, and T560. We used a public PostgreSQL benchmark via Phoronix Test Suite.

[2] Based on September 2023 Dell Technologies lab testing on PowerEdge T440, T550, and T560. We used the public STREAM benchmark via Phoronix Test Suite.

Save money, do more work, and use less energy with 16th Generation Dell PowerEdge R760 servers

Tue, 10 Oct 2023 16:51:44 -0000

Principled Technologies testing showed that a 16th Generation Dell PowerEdge R760 server featuring a Broadcom BCM57508-P2100G NIC delivered all three benefits compared with previous-generation PowerEdge servers.

With economic uncertainty, volatile energy prices, and sustainability goals, many organizations are looking for ways to reduce their energy costs and consumption. At the same time, data proliferation and new technologies such as machine learning and artificial intelligence require a large amount of processing power, leading to data centers running at higher power densities—creating more demand on cooling systems.

Principled Technologies (PT) compared the cost, performance, and power efficiency of three generations of Dell PowerEdge servers to show how organizations can potentially save money and accomplish more work by upgrading their older servers.

Using the memtier_benchmark to run 100 percent read workloads against a Redis database, PT measured the operations per second (Ops/s) and throughput (MB/s) of a 14th Generation Dell PowerEdge R740, a 15th Generation Dell PowerEdge R750, and a 16th Generation Dell PowerEdge R760 server. PT calculated the power efficiency (Ops/s/watt) of the devices using the results of their testing, as well as the performance per dollar of each solution (Ops/s/USD).

Compared with the 14th Generation PowerEdge R740, the PowerEdge R760 server processed 129.5 percent more Ops/s, more than twice as much work, with 24.2 percent better power efficiency. It also did significantly more work per dollar than the older servers, handling as much as 166.1 percent more Ops/s per dollar. Based on the results of these tests, organizations can improve power efficiency and performance while cutting costs by upgrading to the latest-generation Dell PowerEdge R760 server with Broadcom NICs.

| Server | Performance (Ops/s) | Throughput (MB/s) | Power (W) | Perf/W (Ops/s per W) | Perf/USD (Ops/s per USD) |
|---|---|---|---|---|---|
| PowerEdge R760 | 64,282,525.28 | 2,533.67 | 832.0 | 77,265.46 | 2,019.9 |
| PowerEdge R750 | 49,215,573 | 1,936.3 | 696.1 | 70,698.44 | 1,610.3 |
| PowerEdge R740 | 28,000,329.07 | 1,116.47 | 450.3 | 62,179.70 | 758.9 |
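The percentages quoted for the R760 can be checked against the results table. The Python sketch below copies the table values and computes percentage gains; under this reading, the 129.5, 24.2, and 166.1 percent figures correspond to the R760 versus the R740. Recomputing Ops/s divided by watts from the Power column lands within about 0.01 percent of the reported Perf/W, which suggests (our assumption) that the power figures are rounded averages.

```python
# Values copied from the Principled Technologies results table.
RESULTS = {
    "R760": {"ops": 64_282_525.28, "watts": 832.0, "perf_w": 77_265.46, "perf_usd": 2_019.9},
    "R750": {"ops": 49_215_573.0, "watts": 696.1, "perf_w": 70_698.44, "perf_usd": 1_610.3},
    "R740": {"ops": 28_000_329.07, "watts": 450.3, "perf_w": 62_179.70, "perf_usd": 758.9},
}


def pct_gain(new: float, old: float) -> float:
    """Percentage improvement of `new` over `old`."""
    return (new / old - 1.0) * 100.0


if __name__ == "__main__":
    for old in ("R750", "R740"):
        for metric in ("ops", "perf_w", "perf_usd"):
            gain = pct_gain(RESULTS["R760"][metric], RESULTS[old][metric])
            print(f"R760 vs {old}, {metric}: {gain:+.1f}%")
```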

Improving Oracle Performance with New Dell 4 Socket Servers

Mon, 02 Oct 2023 21:23:14 -0000

Deploying Oracle on a PowerEdge server presents various challenges typical of intricate software and hardware integrations. As servers age, they become increasingly expensive to maintain and can hurt business productivity, primarily because they demand more of IT personnel's time and carry a higher risk of unscheduled downtime.

Older servers hosting virtualized Oracle® Database applications might struggle to keep up with growing usage demands. This can result in slower operations that, for example, dissuade customers from browsing a website for products and completing online transactions. Aging hardware is also more susceptible to data loss or corruption, potential security vulnerabilities, and elevated maintenance and repair expenses.

One effective solution to address these issues is migrating Oracle Database workloads from older servers to newer ones, such as the 16th Generation Dell™ PowerEdge™ R960 featuring 4th Gen Intel® Xeon® Scalable processors. This upgrade not only mitigates the aforementioned concerns but also opens doors to further IT enhancements and facilitates the achievement of business objectives. It can lead to improved customer responsiveness and quicker time-to-market.

Additionally, transitioning workloads from virtualized environments to bare metal solutions has the potential to significantly enhance transactional database performance, particularly for databases that come with high-performance service-level agreements (SLAs).

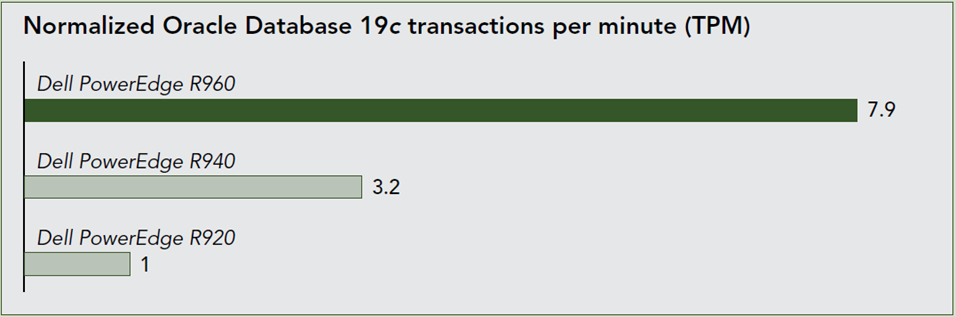

We recently submitted one of the new Dell PowerEdge R960 servers to Principled Technologies for testing with Oracle Database 19c and compared the results to previous generations. The performance exceeded even our own lofty expectations. As the graph shows, the R960 processed almost 8 times more transactions than the PowerEdge R920 and over double the transactions of the previous-generation PowerEdge R940.

For more details read the full report here: Improving Oracle Database performance: Moving to Dell PowerEdge R960 servers with Intel processors | Dell Technologies Info Hub

When refreshing platforms, it is critical to consider these performance characteristics along with the following ten common concerns:

- Compatibility Issues: Ensuring that the version of Oracle Database you want to deploy is compatible with the specific PowerEdge server hardware, operating system, and other software components can be a significant challenge. Compatibility matrices provided by Oracle and Dell (the manufacturer of PowerEdge servers) need to be thoroughly reviewed.

- Hardware Selection: Selecting the right PowerEdge server model with the appropriate CPU, memory, storage, and networking capabilities to meet the performance and scalability requirements of Oracle can be tricky. Overestimating or underestimating these requirements can lead to performance bottlenecks or wasted resources.

- Operating System Configuration: Configuring the operating system (typically, a Linux distribution like Oracle Linux or Red Hat Enterprise Linux) to meet Oracle's specific requirements can be complex. This includes setting kernel parameters, file system configurations, and installing necessary packages.

- Storage Configuration: Setting up storage correctly is critical for Oracle databases. Customers need to configure RAID levels, partitioning, and file systems optimally. Ensuring high I/O throughput and low latency is essential for database performance.

- Network Configuration: Proper network configuration, including setting up the network stack and configuring firewalls, is important for database security and accessibility.

- Oracle Database Configuration: Configuring Oracle Database itself, including memory allocation, database parameters, and storage management, requires a deep understanding of Oracle's architecture and best practices. Misconfigurations can lead to poor performance and stability issues.

- Backup and Recovery Strategy: Developing a robust backup and recovery strategy is crucial to protect the database against data loss. This includes configuring Oracle Recovery Manager (RMAN) and ensuring that backups are performed regularly and can be restored successfully.

- High Availability and Disaster Recovery: Implementing high availability and disaster recovery solutions, such as Oracle Real Application Clusters (RAC) or Data Guard, can be complex and requires careful planning and testing.

- Licensing and Compliance: Managing Oracle licenses and ensuring compliance with Oracle's licensing policies can be challenging, especially in virtualized or clustered environments.

- Performance Tuning: Continuously monitoring and tuning the Oracle database and the underlying PowerEdge server to optimize performance can be an ongoing challenge. This includes identifying and addressing performance bottlenecks and ensuring that the hardware is used efficiently.
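Several of these concerns, operating system configuration in particular, come down to verifying settings before installation. As one hedged illustration, the Python sketch below parses Linux `sysctl -a` output and flags kernel parameters that are missing or set below a minimum. The thresholds in `EXAMPLE_MINIMUMS` are assumptions drawn from Oracle's published Linux installation guidance for Oracle Database 19c, and the helper names are hypothetical; confirm the values against Oracle's documentation for your release and platform before relying on them.

```python
import shutil
import subprocess

# ASSUMPTION: example minimums based on Oracle's Linux installation guidance
# for Oracle Database 19c. Verify them for your release before use.
EXAMPLE_MINIMUMS = {
    "fs.file-max": 6815744,
    "fs.aio-max-nr": 1048576,
    "kernel.shmmni": 4096,
    "net.core.rmem_max": 4194304,
    "net.core.wmem_max": 1048576,
}


def parse_sysctl(text: str) -> dict[str, str]:
    """Parse Linux `sysctl -a` output, one "key = value" pair per line."""
    values = {}
    for line in text.splitlines():
        key, sep, value = line.partition("=")
        if sep:
            values[key.strip()] = value.strip()
    return values


def below_minimum(current: dict[str, str], minimums: dict[str, int]) -> list[str]:
    """Return parameters that are missing or whose first value is below the minimum."""
    problems = []
    for key, minimum in minimums.items():
        fields = current.get(key, "").split()
        if not fields or int(fields[0]) < minimum:
            problems.append(key)
    return problems


if __name__ == "__main__":
    if shutil.which("sysctl"):
        out = subprocess.run(["sysctl", "-a"], capture_output=True, text=True).stdout
        print(below_minimum(parse_sysctl(out), EXAMPLE_MINIMUMS) or "all example checks passed")
```

Checking only the first field keeps the sketch simple; multi-valued parameters such as `kernel.sem` would need per-field comparisons.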

To address these challenges, it is often advisable for customers to work with experienced system administrators, database administrators, and consultants who have expertise in both Oracle and PowerEdge server deployments. Staying informed about the latest updates, patches, and best practices from Oracle and Dell can also help mitigate potential issues. Partnering with Dell Technologies gives you access to all of these resources and helps you take advantage of the performance enhancements in the PowerEdge R960.

Author: Seamus Jones

Director, Server Technical Marketing Engineering

Discover Your Servers’ Untapped AI Potential: PowerEdge Offering Accelerated AI Adoption

Thu, 10 Aug 2023 18:57:45 -0000

Imagine a world where machines comprehend our needs before we even express them, where AI drives innovation, and where data centers form the beating heart of cutting-edge technology. That world comes into sharper focus every day. As technology advances at lightning speed, artificial intelligence (AI) has emerged as a game-changer. By delivering solutions that range from self-driving cars to personalized recommendations, AI is transforming industries and changing lives. But with great power comes great demand for computing resources. As the thirst for AI-driven solutions grows, so does the need for powerful servers that can handle the intense computational demands. The journey to this AI-driven utopia starts with a simple question: How can we turbocharge existing servers to tackle the AI revolution?

And what if we told you that the solution lies within your very own data center? That's right. The key to unlocking the true power of AI workloads might be hiding in plain sight. Welcome to the world of Dell PowerEdge servers.

At Dell Labs, we are obsessed with innovation. Our team of passionate engineers embarked on a mission to push the boundaries of what our PowerEdge servers could do. And boy, did we make a discovery that left us in awe!

By exploring server BIOS settings in depth and fine-tuning them for AI workloads, we witnessed an astounding transformation: the performance boost was nothing short of extraordinary. Our engineers uncovered hidden gems among these settings. BIOS settings often used for multitasking proved to be the secret sauce for AI inferencing tasks, and when we thoroughly tested industry-standard AI workloads with them, server performance skyrocketed.

So, what does this mean for you? It means you may already have a secret weapon in your data center: Dell PowerEdge servers with Intel CPUs! Whether you're already harnessing the power of the latest PowerEdge servers or planning your next investment, our proven optimizations are set to maximize your returns. We've done rigorous testing and distilled the results into simple, accessible settings that you can apply yourself. Alternatively, let us tailor your server's configuration at the point of purchase to unlock its full potential right out of the box. Imagine the possibilities: real-time facial recognition, ultra-fast data analysis, and predictions that can change the game for your business. The hidden power of your servers is waiting to be unleashed, and it's easier than you might think.

AI is the future, and you have a front-row seat to the revolution. With Dell PowerEdge servers optimized for AI workloads, you can embrace the full potential of AI without breaking a sweat. Your existing infrastructure holds the key to supercharging your journey in the fast-paced world of AI, where every advantage counts. PowerEdge servers have the untapped potential to fuel your AI ambitions like never before. The key to unlocking this hidden power lies in BIOS tuning—a simple yet powerful technique. Embrace this hidden power within your servers and join the ranks of AI pioneers to tap into the immense possibilities that await. The future is now, and your Dell PowerEdge servers are ready to lead the way.

Ready to dive into the secrets of BIOS tuning and its impact on AI performance? Our team has shared the magic of BIOS tuning with you in our comprehensive white paper. Let’s explore the fascinating world of AI together and see what's possible when you unleash the full power of your Dell PowerEdge servers.

To learn more about this groundbreaking solution and how it can revolutionize AI, see this Direct from Development Tech Note: Unlock the Power of PowerEdge Servers for AI Workloads: Experience Up to 177% Performance Boost!

Author:

Shreena Bhati, Product Management Intern

https://www.linkedin.com/in/shreena-santosh-bhati/

AI-Powered Smart Cities: PowerEdge and Intel Team Up to Deliver the Future

Mon, 07 Aug 2023 19:49:44 -0000

Have you ever found yourself stuck at a red light with no other cars in sight, wondering why it takes so long to change? Or witnessed yet another never-ending traffic study in your city? What if we could harness artificial intelligence to help cities make smart decisions fast?

The power of AI in smart cities

Artificial intelligence has emerged as a critical technology that is driving advancements in smart cities. It can analyze vast amounts of data to identify patterns and help make informed decisions, allowing city leaders to respond swiftly. These real-time insights will revolutionize how cities manage their infrastructures and services.

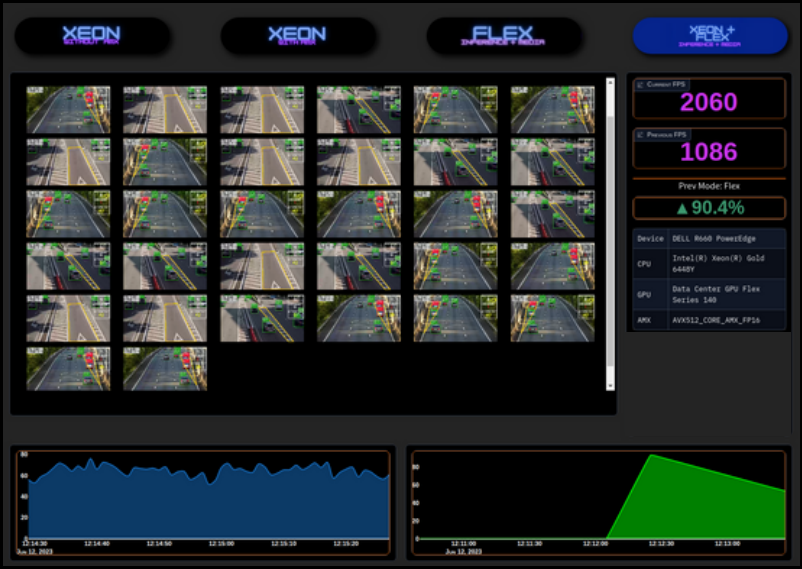

Improving traffic flow with AI

Imagine a world where AI optimizes traffic flow, minimizes wasted commute time, and reduces traffic congestion and thus pollution. Dell Technologies, Intel, and Scalers AI developed a concept solution combining the power of the latest PowerEdge servers offering 4th Gen Intel® Xeon® CPUs and Intel Data Center Flex Series GPUs. This innovative solution harnesses every ounce of computing power offered in the latest generation of PowerEdge servers to deliver maximum server performance.

Leading the way with smart city solutions

We developed this cutting-edge concept solution to offer a glimpse of what is possible. Our approach involves monitoring automotive behavior and traffic using real-time video footage from many strategically positioned cameras. By analyzing this data, the application identifies safety hazards like reckless driving and vehicle collisions, empowering cities to respond swiftly.

The impact of the Intel–Dell partnership

The partnership between Intel and Dell, supported by the expertise of Scalers AI, is turning smart cities into reality. The combined power of CPUs and GPUs for AI workloads enhances urban safety, sustainability, and efficiency. This collaboration allows cities to explore the potential of AI for real-world applications.

To learn more about this groundbreaking solution and how the latest technology from Dell Technologies and Intel will revolutionize urban living, visit https://infohub.delltechnologies.com/section-assets/07-09-intel-data-center-flex-series-gpu-with-poweredge-r660-driving-innovation.

Author: Delmar Hernandez

On the record for Sapphire: World Record SAP HANA Performance with Dell PowerEdge R760 Servers

Wed, 17 May 2023 15:19:25 -0000

SAP HANA is an in-memory database platform used to manage large amounts of data in real time for purposes such as point-of-sale data, real-time analytics for inventory management, supply-chain optimization, and customer behavior analysis. As the amount of data that must be processed grows, servers can be challenged to deliver information and analysis quickly enough to meet the increasingly demanding business requirements for fast data access. That is why SAP HANA users are always on the lookout for better-performing servers.

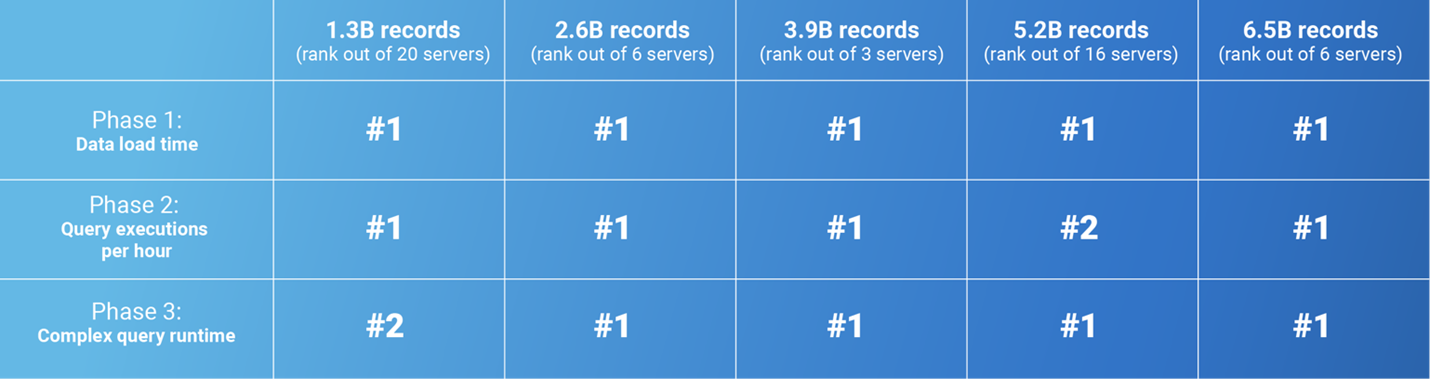

SAP publishes the results of standardized benchmark tests to assist customers in comparing the performance of different servers when running SAP HANA. Performance results for the Dell PowerEdge R760 server were recently published, and we are excited to share some highlights about how well this server stacks up against other servers.

Fifteen different data points are available for comparing the PowerEdge R760 server to other servers. The standard benchmarks measure three key performance data points for each of the five different database sizes on which the PowerEdge R760 server was tested.

The following table shows the rank of the PowerEdge R760 server for each of the 15 points of comparison among all the different servers tested using SAP’s benchmark version 3. The source of the data is the publicly available SAP Standard Application Benchmarks directory, accessed on 04-19-2023.

The PowerEdge R760 server outperformed all the other servers in 13 of the 15 benchmark points of comparison, and it ranked second in the remaining two.

The PowerEdge R760 server is a two-socket server built with the latest 4th Gen Intel Xeon Scalable processors. It outperforms all other servers in SAP HANA benchmarking, even 4- and 8-socket servers, in every database size of up to 6.5 billion initial records. It provides the performance and versatility to address your most demanding applications, including SAP HANA, with massive databases and mission-critical requirements for real-time performance.

Look for an in-depth study from Prowess: Remarkable SAP Benchmark Performance Results for Dell PowerEdge R760 Servers (delltechnologies.com) about how the PowerEdge R760 server performed against top competitors on the SAP Standard Application Benchmarks.

Learn more about PowerEdge servers and Dell Technologies solutions for SAP.

About the Author:

Seamus Jones

Director, Server Technical Marketing

Seamus serves Dell as Director of Server Technical Marketing, seasoned with over 20 years of real-world experience in both North America and EMEA. His unique perspective comes from experience consulting customers on data center initiatives and server virtualization strategies.

PERC 12 Generational Performance Boosts

Wed, 08 Feb 2023 21:17:34 -0000

Some additional insights into the recent Tolly report on PERC 12 vs. PERC 11 and PERC 10

No matter your organization’s focus, faster, more reliable RAID is always a good thing. Recently, Tolly published a full-length report comparing the performance of Dell’s PERC 12 with prior generation PERC 11 and PERC 10. You can read that report here: https://infohub.delltechnologies.com/section-assets/tolly223103delltechnologiespoweredgeraidcontroller12performance

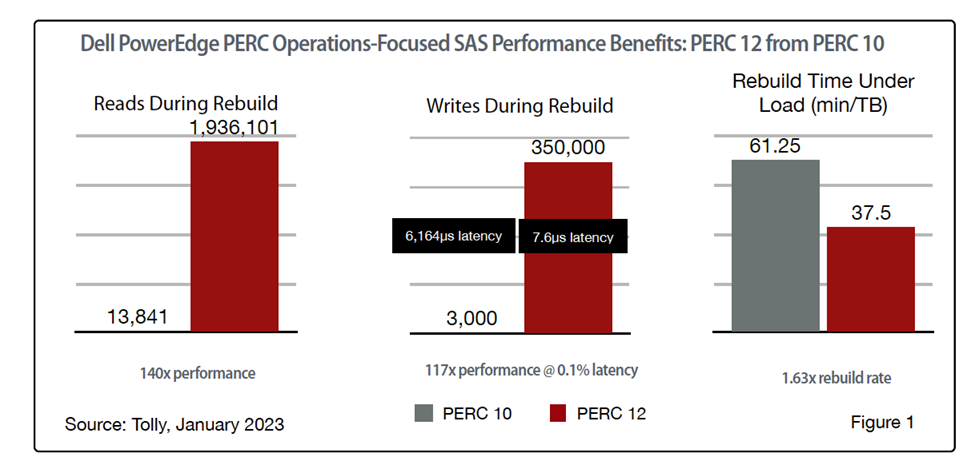

Even a quick glance at the full report will turn your head, as there are some very impressive numbers. For example, PERC 12 delivers SAS read performance during rebuild that is 140x that of PERC 10; write performance during rebuild that is 117x that of PERC 10, at 0.1% of the latency; and a volume rebuild rate that is 1.63x that of PERC 10.

In total, we covered over 60 different tests that spanned PERC 10, 11, and 12 and both SAS and NVMe storage environments. So much data, so little space! So, in this blog, we will focus on PERC 12 versus PERC 11 and take a closer look at some of the generational benefits of upgrading NVMe environments from existing PERC 11 to the new PERC 12 technology. (PERC 10 did not offer support for NVMe.)

PERC 12 Speeds & Feeds: IOPS & Bandwidth

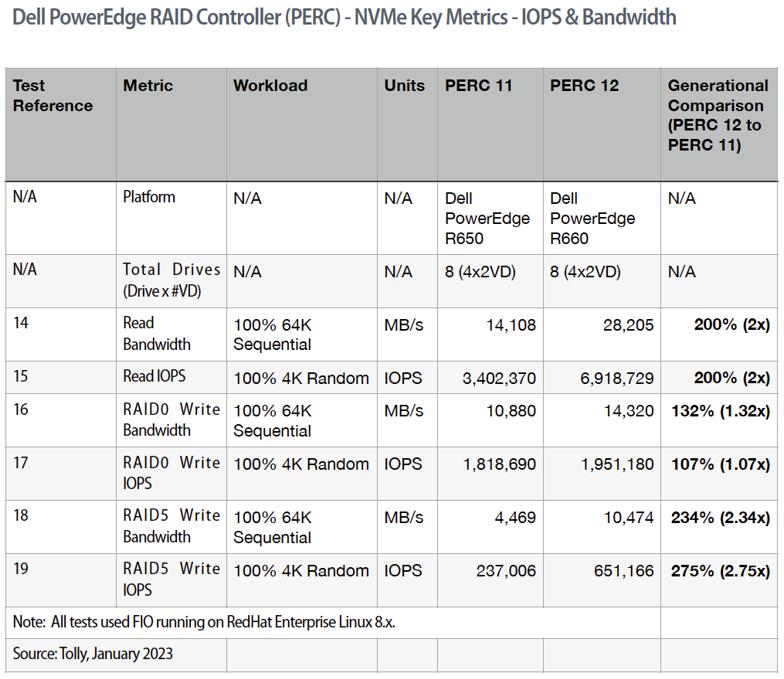

While most of us (hopefully) don’t spend our time going “0-60” in our cars, it has become a standard metric for car manufacturers. Similarly, “IOPS and bandwidth” have become standard metrics for storage solutions. As with cars, this is not because the use case is a common one, but because the extreme cases go a long way in highlighting technological achievements, which end up helping our more mundane use cases. With that introduction, let’s look at the test results.

All these data points can be found in our Tolly report referenced above. For both reads and writes, we are interested in the maximum values for read/write operations and overall throughput.

To show maximum throughput, 64K sequential reads are used. To show maximum operations, 4K random reads are used. To simplify comparisons, each test uses a single access pattern for 100% of its I/O.
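Bandwidth and IOPS are linked by the I/O size: throughput = IOPS x block size. That is why large sequential blocks are used to find peak bandwidth and small random blocks to find peak operation counts. A minimal Python sketch with hypothetical numbers (not results from the Tolly report):

```python
KIB = 1024  # bytes in 1 KiB, the "K" in 4K and 64K block sizes


def bandwidth_mb_s(iops: float, block_bytes: int) -> float:
    """Throughput in MB/s (decimal) for a given IOPS rate and I/O size."""
    return iops * block_bytes / 1e6


def iops_for_bandwidth(mb_s: float, block_bytes: int) -> float:
    """IOPS needed to sustain a given throughput at a fixed I/O size."""
    return mb_s * 1e6 / block_bytes


if __name__ == "__main__":
    # Hypothetical: the same 4,096 MB/s needs 16x fewer operations at 64K than at 4K.
    print(iops_for_bandwidth(4096, 4 * KIB), iops_for_bandwidth(4096, 64 * KIB))
```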

While our table contains all the raw results, it is the comparison column on the far right that draws our interest. In every case, PERC 12 outperforms PERC 11 with NVMe.

RAID0 results are included, but because RAID0 provides no protection against disk failure, they are not relevant to most organizations.

Looking at RAID5, then, a quick glance shows that PERC 12 delivers twice (or more) the IOPS and bandwidth for both read and write operations when compared to PERC 11.

All these tests were run in “optimal” environments, that is, disk arrays with all of their disks operational. But that isn’t always the case in the real world.

PERC 12 Rebuild Performance & “Tail” Latency

Across the board, what I find most impressive about PERC 12 is how it improves performance during rebuild. In the real world, disk failures happen. Always have, probably always will. What is important is what happens to your system in such situations.

Fortunately, RAID5 is all about data protection. When a single disk in a RAID5 array fails, no data is lost. When a new, replacement disk is inserted, however, a “rebuild” has to take place: the RAID controller reconstructs the missing data and writes it to the “fresh” disk. Traditionally, this has translated into degraded performance for the array during the rebuild. PERC 12 changes all that.
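RAID5 protection rests on XOR parity: for each stripe, the parity block is the XOR of the data blocks, so any single missing block equals the XOR of all the surviving blocks, parity included. The simplified Python sketch below (one stripe, no parity rotation, hypothetical helper names) is not how a PERC controller is implemented, but it shows why a rebuild has to read every surviving disk for every stripe, which is where the traditional performance penalty comes from.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))


def rebuild_missing(surviving: list[bytes]) -> bytes:
    """Recover the single missing block of a RAID5 stripe.

    `surviving` holds every other block of the stripe, including parity.
    Since parity = D0 ^ D1 ^ ... ^ Dn-1, XOR-ing all survivors yields the lost block.
    """
    return xor_blocks(surviving)


if __name__ == "__main__":
    data = [b"\x01\x02\x03\x04", b"\x10\x20\x30\x40", b"\xaa\xbb\xcc\xdd"]
    parity = xor_blocks(data)
    # Simulate losing the disk that held data[1], then rebuild it.
    recovered = rebuild_missing([data[0], data[2], parity])
    print(recovered == data[1])
```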

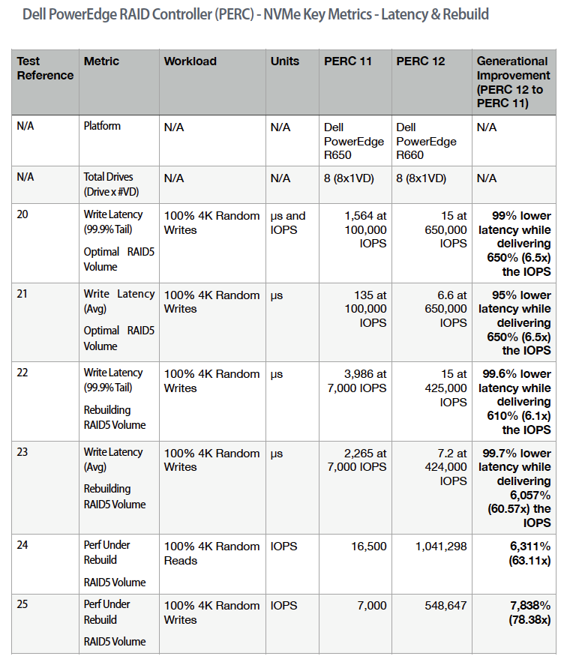

Again, looking at data from our report, there are several things to note.

First, you will note that in addition to average latency, we are also reporting “tail” latency. This is the 99.9th-percentile latency: 99.9% of the test results completed at or below this value. Looked at another way, it is the latency for all but the “worst” 0.1% of the results.
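As an illustration of how a 99.9th-percentile figure can be computed, the sketch below uses the nearest-rank method, one common definition; benchmarking tools may interpolate or bucket latencies differently, so treat this as a sketch rather than the method used in the report. It also shows why tail latency is the stricter measure: a handful of slow I/Os barely move the average but can set the p99.9 value.

```python
import math
from fractions import Fraction


def percentile_nearest_rank(samples: list[float], pct: float) -> float:
    """Smallest sample such that at least pct% of all samples are <= it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    # Exact rational arithmetic avoids float rounding when computing the rank.
    rank = math.ceil(Fraction(str(pct)) * len(ordered) / 100)  # 1-based
    return ordered[rank - 1]


if __name__ == "__main__":
    # Hypothetical latencies in ms: 9,989 fast I/Os and 11 slow ones.
    latencies = [0.1] * 9_989 + [5.0] * 11
    print(sum(latencies) / len(latencies), percentile_nearest_rank(latencies, 99.9))
```

In this hypothetical run, the average is about 0.105 ms while the p99.9 value is 5.0 ms.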

Tail latency is a much more rigorous measurement because it reflects nearly worst-case behavior rather than the average. The test data shown in this table cover both “optimal” environments and tests run with rebuilds in progress. In all cases, the latency is dramatically lower: PERC 12 reduces average and tail latency by 95% to 99.7% compared with PERC 11.

At the same time, PERC 12 delivers 600% of the IOPS delivered with PERC 11.

And, as mentioned earlier, performance under rebuild is dramatically improved: PERC 12 random read IOPS are more than 60x those of PERC 11, and PERC 12 random write IOPS are more than 78x those of PERC 11.

Outro

Whether you are on a five-year refresh cycle and are jumping from PERC 10 to PERC 12, or on a latest-to-latest cycle and moving from PERC 11 directly to PERC 12, the numbers are impressive no matter what test scenario you look at.

For a boost in real-world performance – especially during RAID volume rebuild – PERC 12 has your back.

Can CPUs Effectively Run AI Applications?

Fri, 03 Mar 2023 20:06:28 -0000

Due to the inherent advantages of GPUs in high-speed, large-scale matrix operations, developers have gravitated to GPUs for AI training (developing the model) and inference (running the model).

With GPUs in short supply due to the massive growth of AI applications, including recent advances in Stable Diffusion and in large language models such as OpenAI's ChatGPT that have taken the world by storm, the question for many developers is:

Are CPUs up to the task of AI?

To answer the question, Dell Technologies and Scalers AI set up a Dell PowerEdge R760 server with 4th Gen Intel® Xeon® processors and integrated Intel® Deep Learning acceleration. Notably, we did not install a GPU on this server.

In this blog, Part One of a two-part series, we’ll put this latest and greatest Intel® Xeon® CPU, just released this month by Intel®, to the test on AI inference. We’ll also run AI on video streams, one of the most common media for AI, and pair it with industry-specific application logic to showcase a real-world AI workload.

In Part Two, we’ll train a model using a technique called transfer learning. Most training is done on GPUs today, and transfer learning presents a great opportunity to leverage existing models while customizing for targeted use cases.

The industry-specific use case

Scalers AI developed a smart city solution that uses artificial intelligence and computer vision to monitor traffic safety in real time. The solution identifies potential safety hazards, such as illegal lane changes on freeway on-ramps, reckless driving, and vehicle collisions, by analyzing video footage from cameras positioned at key locations.

For comparison, we also set up the previous generation Dell PowerEdge R750 server and ran the AI inferencing object detection workload on both servers. What did we learn?

Dell PowerEdge R760 with 4th Gen Intel® Xeon® Processors and Intel® Deep Learning Boost delivered!

Let’s find out about the generational server comparison.

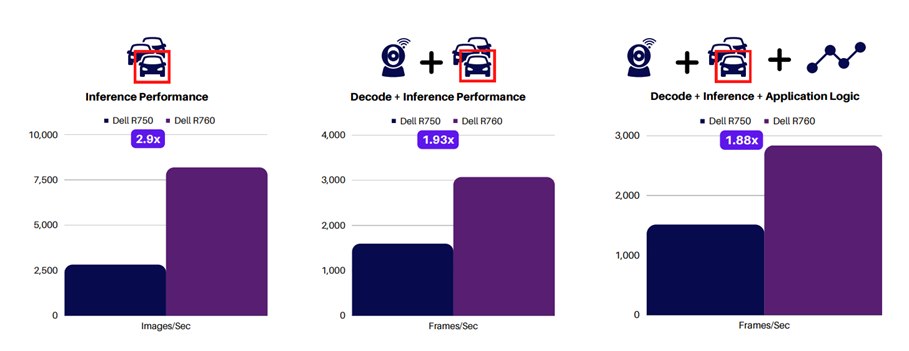

The charts show the performance gain from the previous-generation server to the current-generation server. The graph on the left shows inference-only performance, while the middle graph adds video decode. Finally, the graph on the right shows full application performance with the smart city solution's application logic.

The performance claims are great. But what does this mean for my business?

Dell PowerEdge R760 and Scalers AI smart city solution results show that, for a similar application, users can expect a deployed Dell PowerEdge R760 server to perform real-time inferencing on up to 90 1080p video streams. The Dell PowerEdge R750 can handle up to 50 1080p video streams, and this is all without a GPU. Although GPUs add AI computing capability, this study shows that they may not always be necessary, depending on your unique requirements, such as how many streams must be displayed concurrently.
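One simple way to reason about stream counts like these is to divide the aggregate frames per second a server sustains (inference, decode, and application logic together) by the frame rate each stream needs. The Python sketch below assumes throughput scales linearly across streams; the helper name, the headroom parameter, and the example numbers are hypothetical and are not figures from this study.

```python
import math


def max_realtime_streams(aggregate_fps: float, fps_per_stream: float, headroom: float = 0.0) -> int:
    """Streams that fit in real time, reserving a fraction of capacity as headroom.

    Hypothetical capacity model: assumes throughput is shared linearly across streams.
    """
    if fps_per_stream <= 0:
        raise ValueError("fps_per_stream must be positive")
    if not 0.0 <= headroom < 1.0:
        raise ValueError("headroom must be in [0, 1)")
    usable_fps = aggregate_fps * (1.0 - headroom)
    return math.floor(usable_fps / fps_per_stream)


if __name__ == "__main__":
    # Hypothetical: 1,000 fps aggregate, 25 fps per camera, 10% headroom.
    print(max_realtime_streams(1000, 25, headroom=0.1))
```

Reserving headroom is a design choice for absorbing bursts, such as scenes with many detected objects; real capacity should always be measured on the target workload.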

Given these results, Scalers AI confidently recommends using Dell PowerEdge R760 with 4th Gen Intel® Xeon® Processors and Intel® Deep Learning Boost for AI computer vision workloads, such as the Scalers AI Traffic Safety Solution using object detection, because they fulfill all application requirements.

Now that we have shown highly effective object detection on a CPU, what about a more complex, compute-intensive model such as segmentation?

Here, we run the more complex segmentation model on 10 streams while displaying four of them.

As you can see, CPUs are up to the task of running AI inference on models such as object detection and segmentation. Perhaps more important for developers, they offer the flexibility to run the full workload on the same processor, thereby lowering the TCO.

With the rapid growth of AI, the ability to deploy on CPUs is a key differentiator for real-world use cases such as traffic safety. This frees up GPU resources for training and graphics use cases.

Check in for Part Two of this blog series, where we use transfer learning to train a model and put a CPU to the test there.

Resources

Interested in trying for yourself? Get access to the solution code!

To save developers hundreds of potential hours of development, Dell Technologies and Scalers AI are offering access to the solution code to fast-track development of AI workloads on next-generation Dell PowerEdge servers with 4th Gen Intel® Xeon® scalable processors.

For access to the code, reach out to your Dell representative or contact Scalers AI!

To learn more about the study discussed here, visit the following webpages:

• Myth-Busting: Can Intel® Xeon® Processors Effectively Run AI Applications?

• Accelerate Industry Transformation: Build Custom Models with Transfer Learning on Intel® Xeon®

• Scalers AI Performance Insights: Dell PowerEdge R760 with 4th Gen Intel® Xeon® Scalable Processors in AI

Authors:

Steen Graham, CEO at Scalers AI

Delmar Hernandez, Server Technologist at Dell Technologies

Build a Continuous Innovation Machine

Sat, 10 Sep 2022 01:07:11 -0000

Adopt a proven IT foundation that’s ready for anything and stops at nothing.

Perpetual motion, cold fusion, time travel, jetpacks, and many other hypothetical ideas continue to capture the imagination, even though they don’t actually exist. Each one promises to solve a slew of seemingly intractable problems with a single, elegant solution.

Those of us who work in IT know that there is no one solution that solves every problem. The technology landscape is incredibly dynamic, and the solution that’s just right for one workload today might not be the best match tomorrow—and will never be the right solution for another workload with different characteristics and requirements. The only constant in today’s enterprise data estates—encompassing multiple devices, data centers, clouds, and edges—is the relentless flow of change.

So how can you plan your IT strategy when every day launches you into uncharted digital territory?

At Dell Technologies, we believe that the best way to get from where you are to where you need to be is to understand that the route you take will not be a straight line from A to B. It will be a unique path with twists and turns determined by the demands and requirements of your customers, your industry, and your business. And all of that can change in an instant. A data-driven approach to modernization focuses on building an IT foundation that’s ready for anything and stops at nothing.

This continuous innovation machine is not a single solution but an approach to IT that recognizes that when you don’t know where the future will take you, you need a well-oiled machine that can help you chart a course, navigating an evolving landscape at a rapid pace. The continuous innovation machine is a technology foundation whose parts work together seamlessly to power your business today and can scale, evolve, and adapt quickly so you can take advantage of new opportunities as they come along.

Dell Technologies can help you on your way with benchmarked and proven solutions that help you innovate, adapt, and grow.

Adaptive compute

Be ready for what’s next and address evolving compute demands with a platform engineered to optimize the latest technology advancements while easily scaling to address your data at the point of need. For example, Dell PowerEdge servers have been tested and proven to deliver:

- 28% faster performance[1]

- 71% cost reduction[2]

- 37% higher virtual machine density[3]

Autonomous infrastructure

Respond rapidly to business opportunities with intelligent systems that work together and independently, delivering to the parameters that you set. Dell Technologies innovations can ease management tasks with:

- 46 seconds versus 42+ minutes to update multiple servers[4]

- 99.1% less hands-on deployment time[5]

- 17,280X more efficient reporting[6]

Proactive resilience

Build resilience into your digital transformation with an infrastructure designed for secure interactions and the capability to predict potential threats. Dell Technologies delivers:

- Built-in cybersecurity and a protected supply chain[7]

- Layered and pervasive security to combat sophisticated threats[7]

- Zero Trust to meet the challenge of ever-changing threats[8]

Be ready for anything

Be ready to drive innovation into new frontiers with an IT foundation that delivers critical capabilities across your environment. Dell Technologies delivers lab-tested, benchmarked, and third-party-proven benefits to help you adopt solutions that are just right for today and are ready to help you innovate, adapt, and grow into the future—wherever it might take you.

Learn more:

[1] Dell Technologies Direct from Development, Intel Xeon E-2300 Processor Series, and How They Improve Performance, Features, and Security For Next-Generation PowerEdge Rack and Tower Servers, 2021.

[2] Dell Technologies Direct from Development, Persistent Memory for PowerEdge Servers, 2021.

[3] A Principled Technologies report, Get more from Dell PowerEdge R750xs servers with 3rd Generation Intel Xeon Scalable processors, September 2021.

[4] A Principled Technologies report, Automate high-touch server lifecycle management tasks with OpenManage Enterprise integrations and plugins, March 2021.

[5] A Principled Technologies report, Reduce hands-on deployment times to near zero with iDRAC9 automation, February 2020.

[6] Tolly test report commissioned by Dell Technologies, iDRAC9 Telemetry Streaming, February 2020.

[7] Dell Technologies infographic, Dell PowerEdge Cyber Resilient Architecture 2.0, May 2021.

[8] Dell Technologies infographic, Zero Trust. Verified Trust, January 2021.