Blogs

The latest news about Dell PowerMax and VMAX releases and updates

Unisphere for PowerMax Workload Planner

Fri, 15 Mar 2024 21:21:58 -0000


Workload Planner (WLP) is a FAST component that displays performance metrics and calculates VMAX component utilizations and storage group Service Level Objective (SLO) compliance. It allows for more informed workload monitoring through upstream components (the Unisphere GUI and REST API) with respect to current VMAX performance capacity.

WLP is supported on arrays running code level 5977 and later. Each service level and workload type has a response time band associated with it. When a storage group (workload) is said to be compliant, it is operating within the associated response time band.

When assessing the compliance of a storage group, Workload Planner calculates its weighted response time for the past 4 hours and the past 2 weeks, and then compares the two values to the maximum response time associated with its given service level. If both calculated values fall within (under) the service level-defined response time band, the compliance state is STABLE. If one of them complies and the other is out of compliance, then the compliance state is MARGINAL. If both are out of compliance, then the compliance state is CRITICAL.
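
The compliance rule described above can be sketched in a few lines of Python. This is only an illustration of the logic as stated, not WLP's implementation: the function name, the millisecond units, and the treatment of a value exactly at the limit as compliant are assumptions.

```python
from enum import Enum


class ComplianceState(Enum):
    STABLE = "STABLE"
    MARGINAL = "MARGINAL"
    CRITICAL = "CRITICAL"


def compliance_state(rt_4h_ms: float, rt_2w_ms: float, max_rt_ms: float) -> ComplianceState:
    """Classify a storage group from its two weighted response times.

    rt_4h_ms and rt_2w_ms are the weighted response times for the past
    4 hours and the past 2 weeks. max_rt_ms is the maximum response time
    for the service level (the caller supplies it; values are hypothetical).
    """
    # Assumption: a value equal to the maximum still counts as compliant.
    in_band = [rt <= max_rt_ms for rt in (rt_4h_ms, rt_2w_ms)]
    if all(in_band):
        return ComplianceState.STABLE      # both windows comply
    if any(in_band):
        return ComplianceState.MARGINAL    # exactly one window complies
    return ComplianceState.CRITICAL        # neither window complies


if __name__ == "__main__":
    # Hypothetical numbers: recent spike, but the two-week value is fine.
    print(compliance_state(rt_4h_ms=1.2, rt_2w_ms=0.6, max_rt_ms=0.8).value)
```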

SG SLO compliance

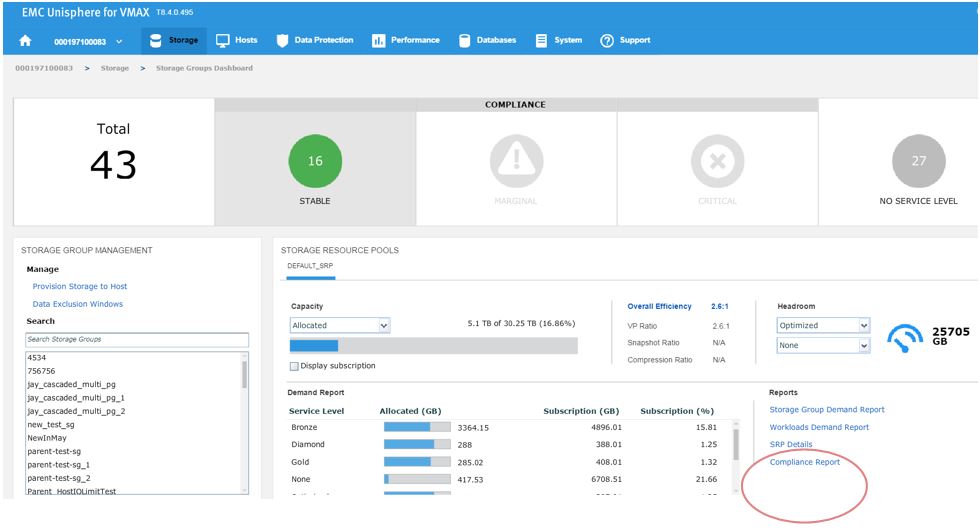

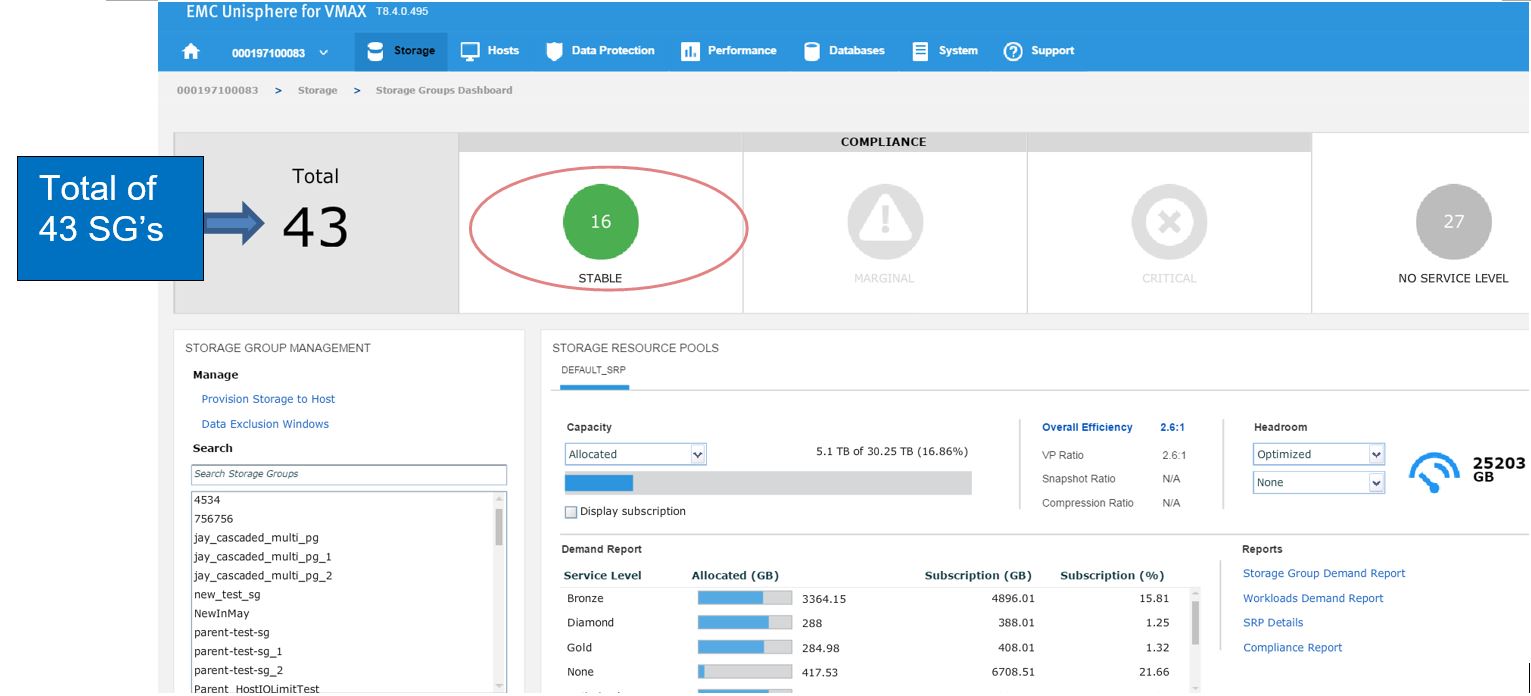

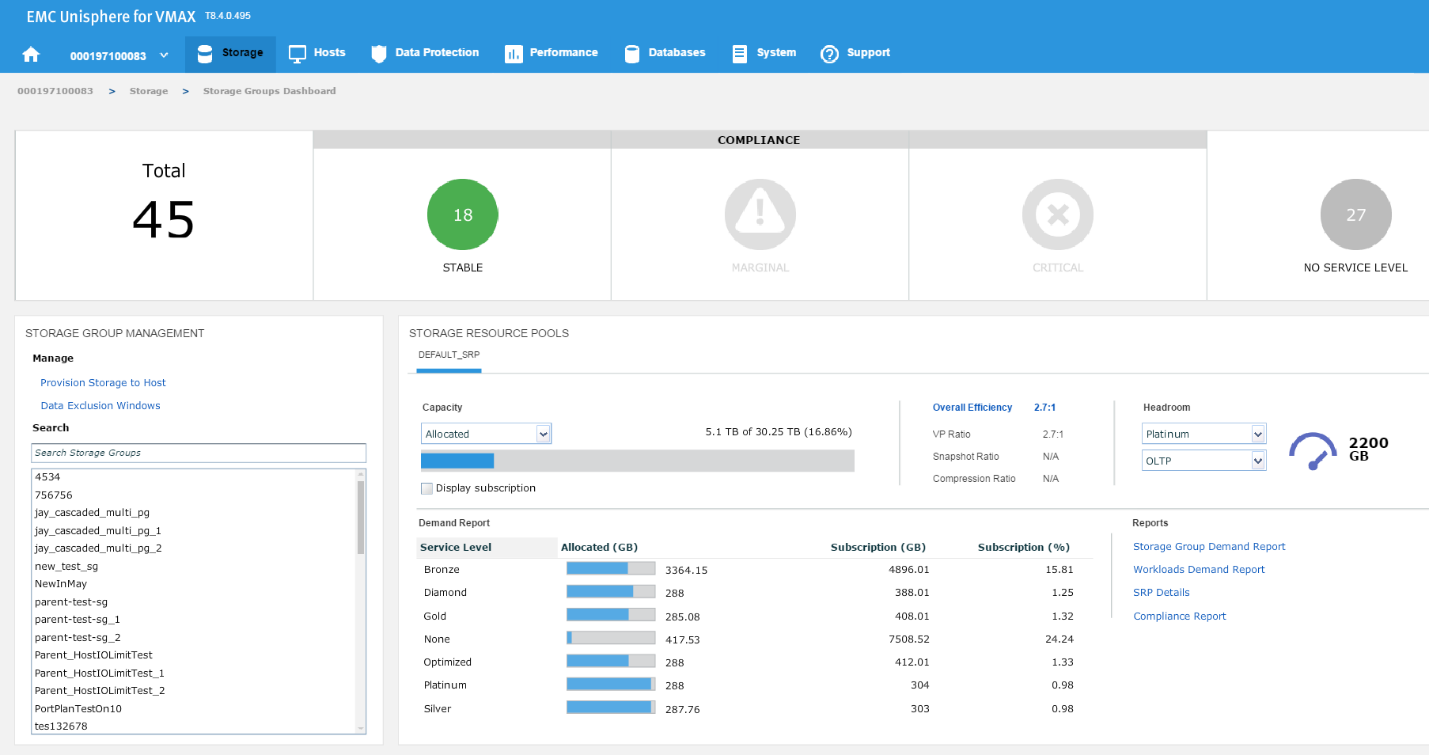

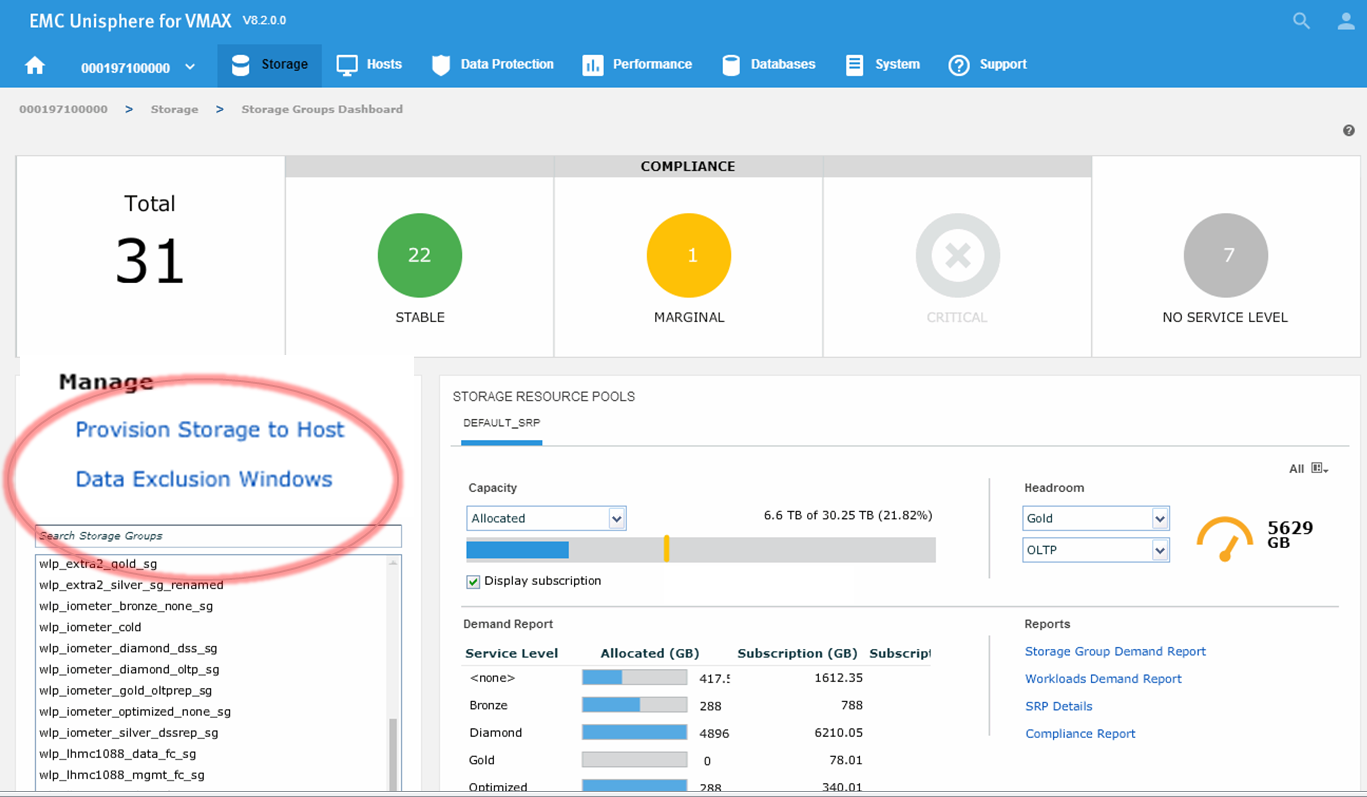

To begin, let’s review our SG SLO compliance to see how our SGs have been performing.

In this example, we have a total of 43 SGs, none of which are in a marginal or critical state. Let’s click on the 16 stable SGs and review further.

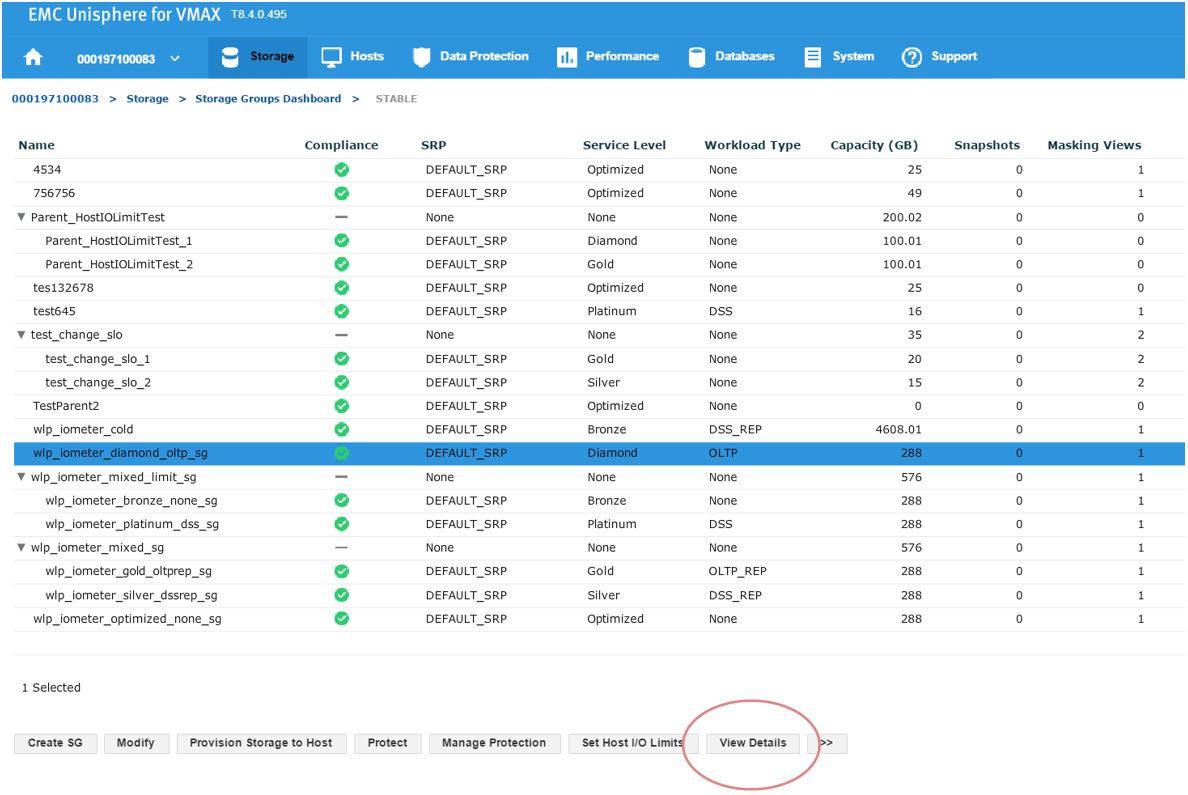

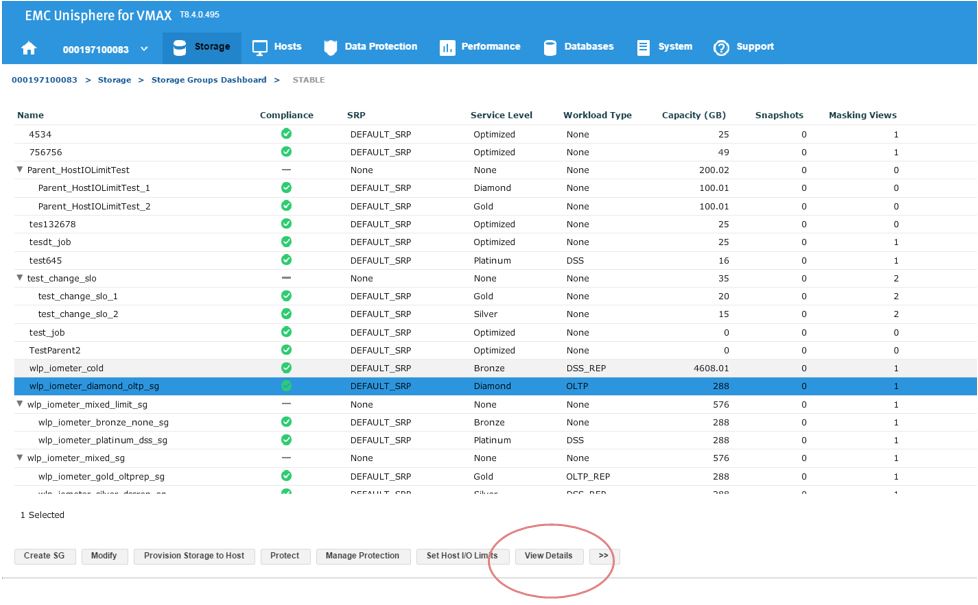

Figure 2. Storage groups drill down view

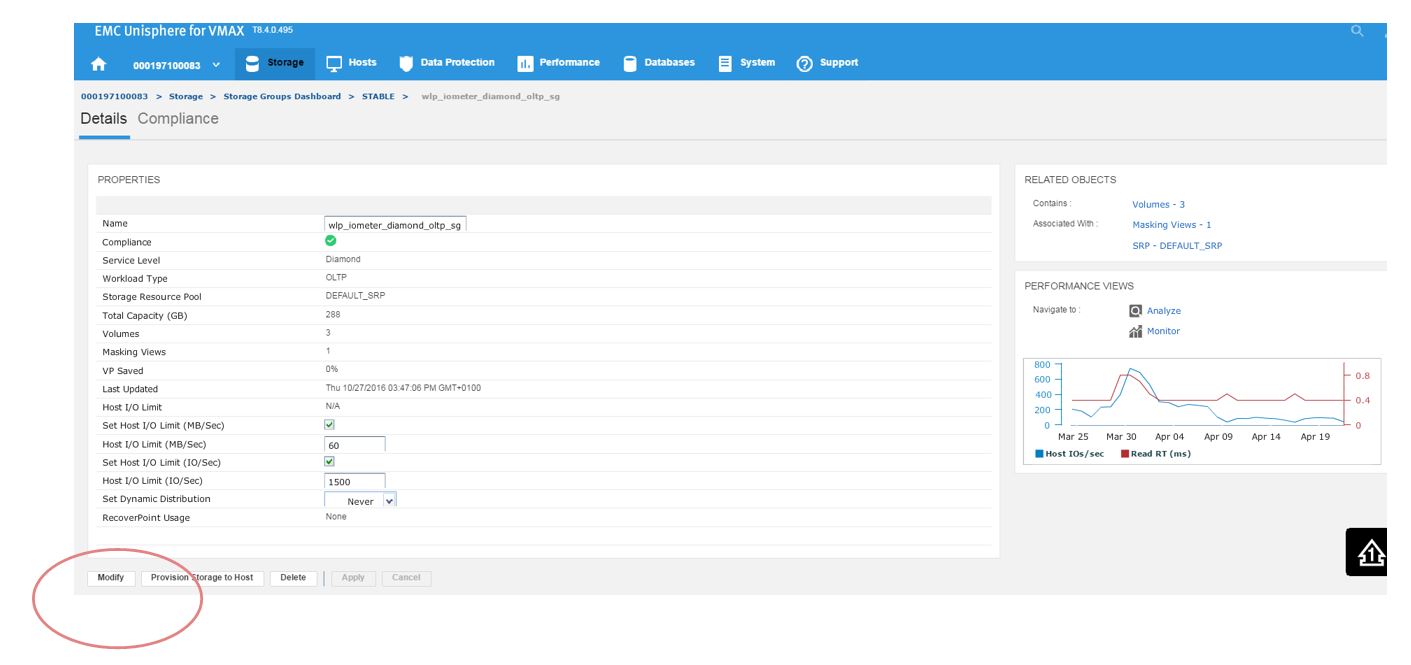

Here, we have an SG list for the array. In the second column, we see that the SG wlp_iometer_diamond is in a compliant SLO state. Let’s review this SG further by selecting it and then clicking View Details. This takes us to the Details view, where we can review the properties of the SG and check its performance on the right-hand side. Here, we want to select Compliance and then select the Storage tab.

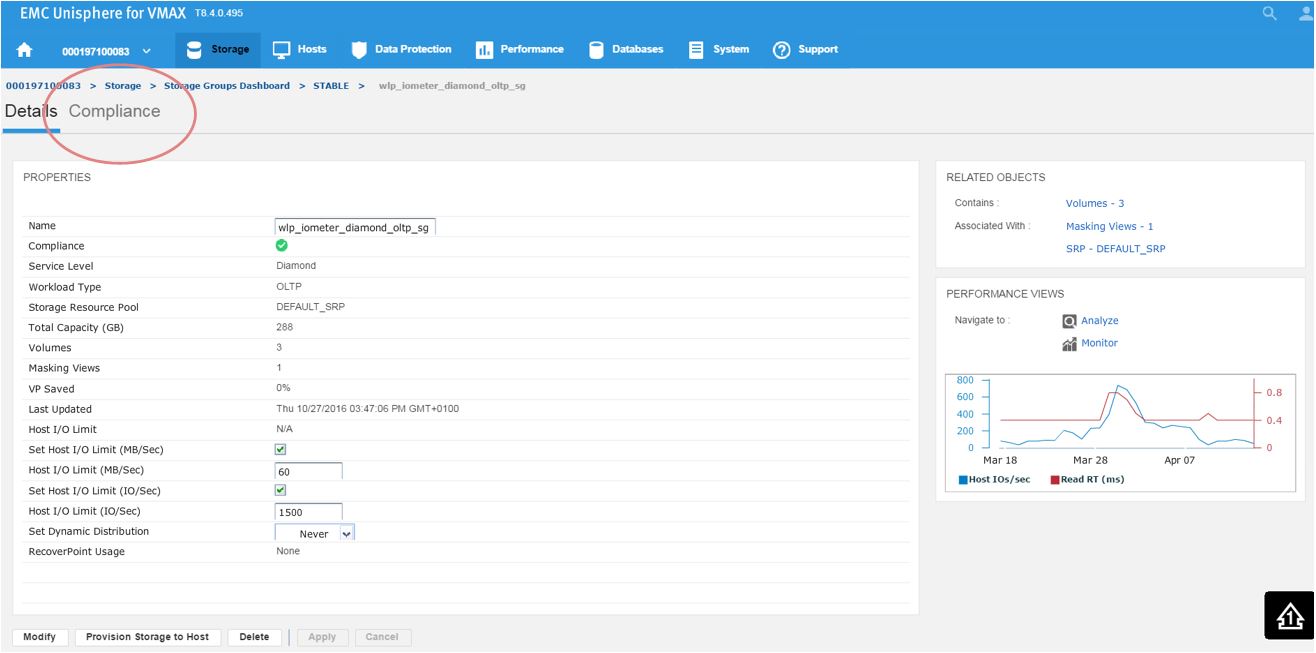

Figure 3. Storage group Details view

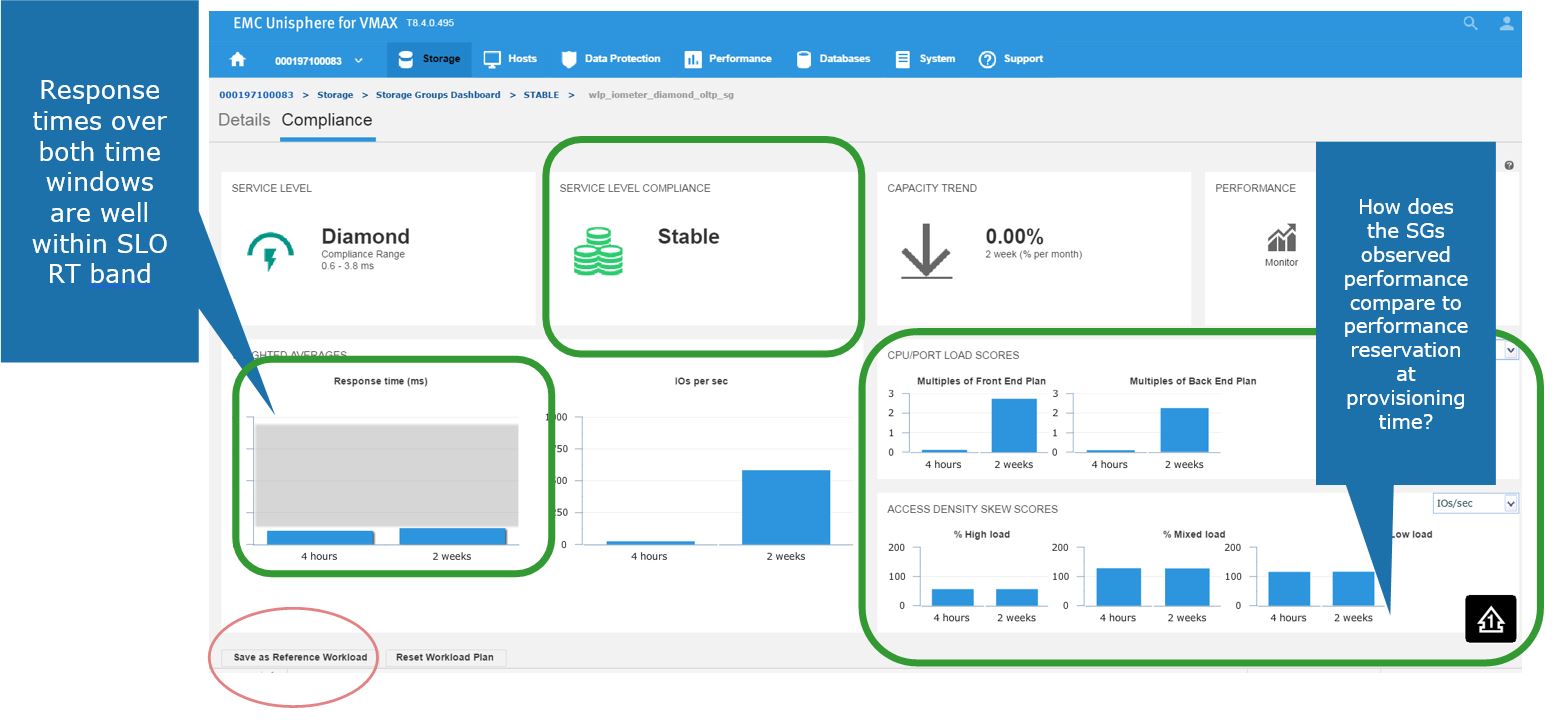

Here, we get greater detail on the current compliance state of the SG. We can see that it has a Diamond SLO, which is stable. Currently, its capacity trend is flat, and we can also see its response times over the different time windows. Additionally, we can review how the SG is performing compared to when it was first created.

Figure 4. Storage group Compliance view

Saving a favorite reference workload

Here, we also have the ability to save this as a reference workload. For example, this SG is being used for an Oracle database, and we are happy with its performance and configuration since we rolled the application out into production.

Let’s leverage Unisphere to keep this workload for use in the future.

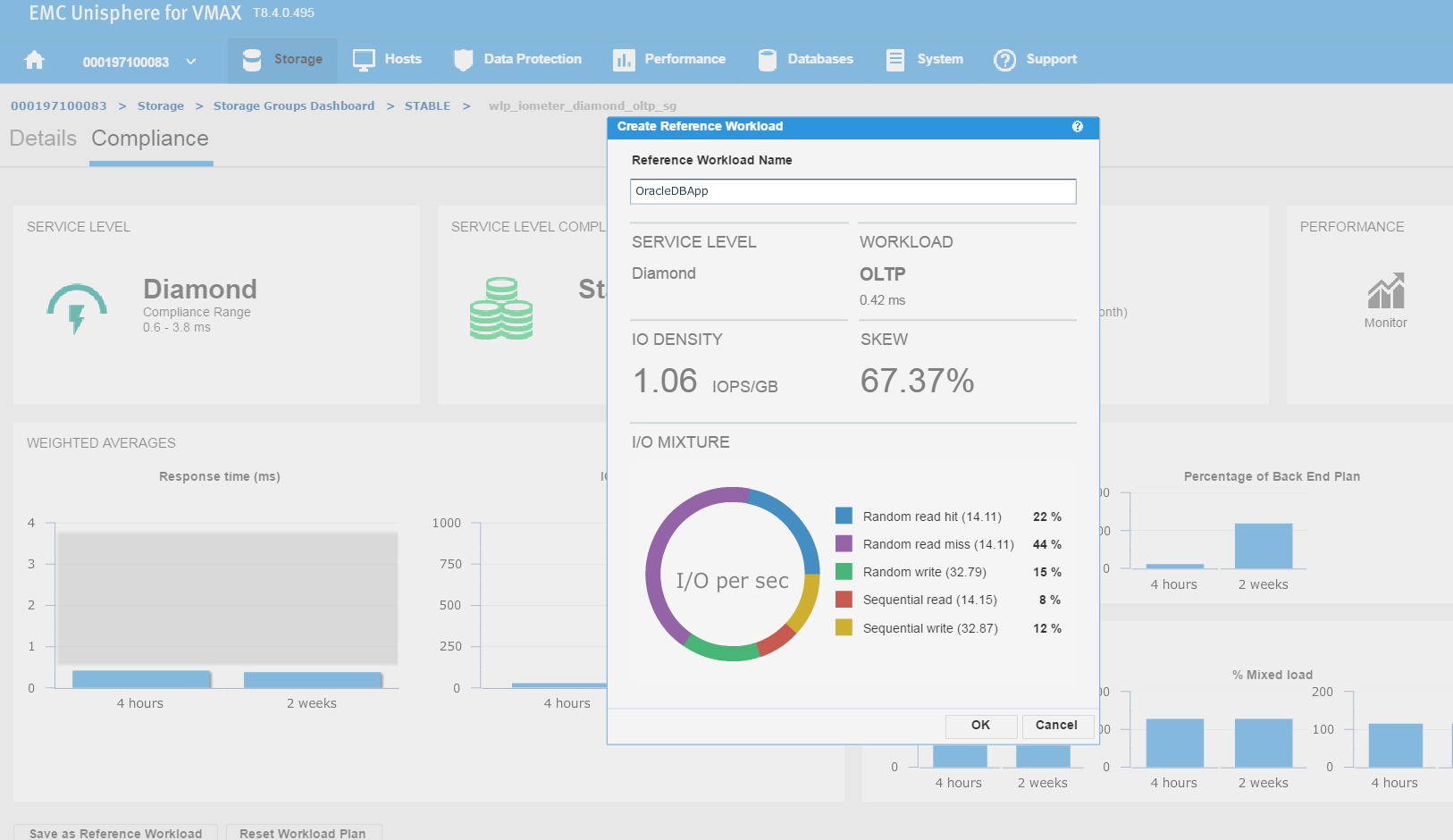

Figure 5. Create reference workload

We have named the workload OracleDBApp so that we can use it, along with its breakdown of reads and writes in terms of I/O, as a reference point when we roll out a similar Oracle application.

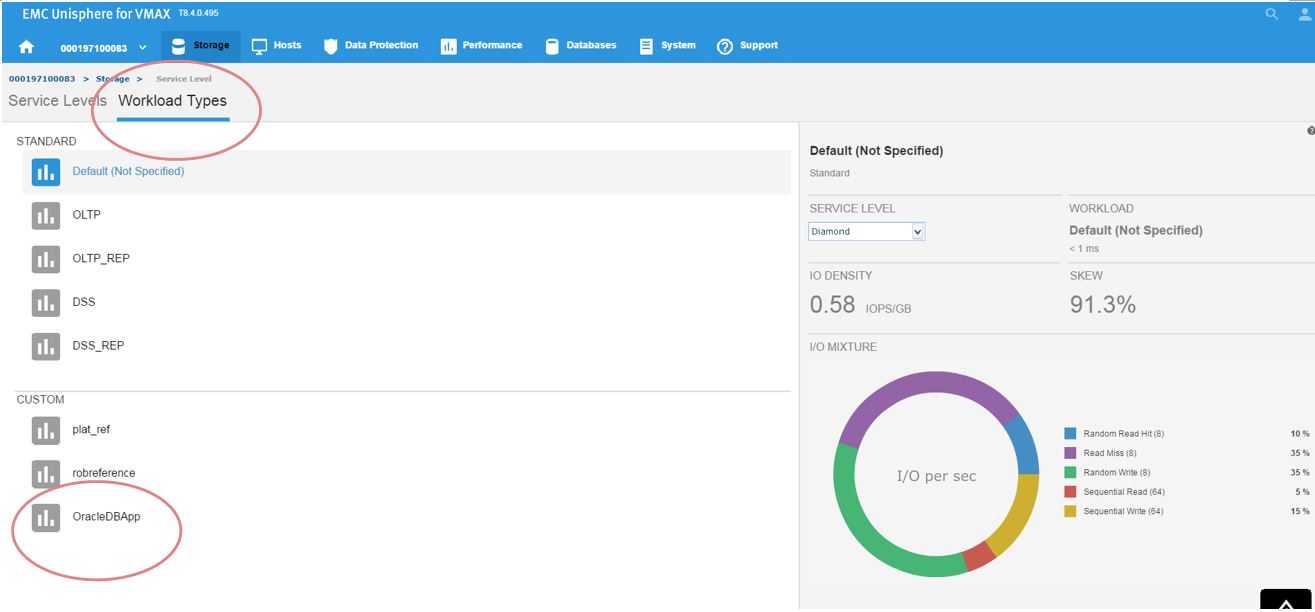

Continuing along, let’s first verify that our workload type was saved correctly. To do this, go to Storage > Service Level, and select the Workload Types tab.

Figure 6. Save workload type

Now, we can see the reference workload we have customized in OracleDBApp. Since we are satisfied with the configuration of this application’s performance, we can leverage this workload type for use in the future when rolling out similar type applications.

Let’s see how we can use this in the provisioning wizard.

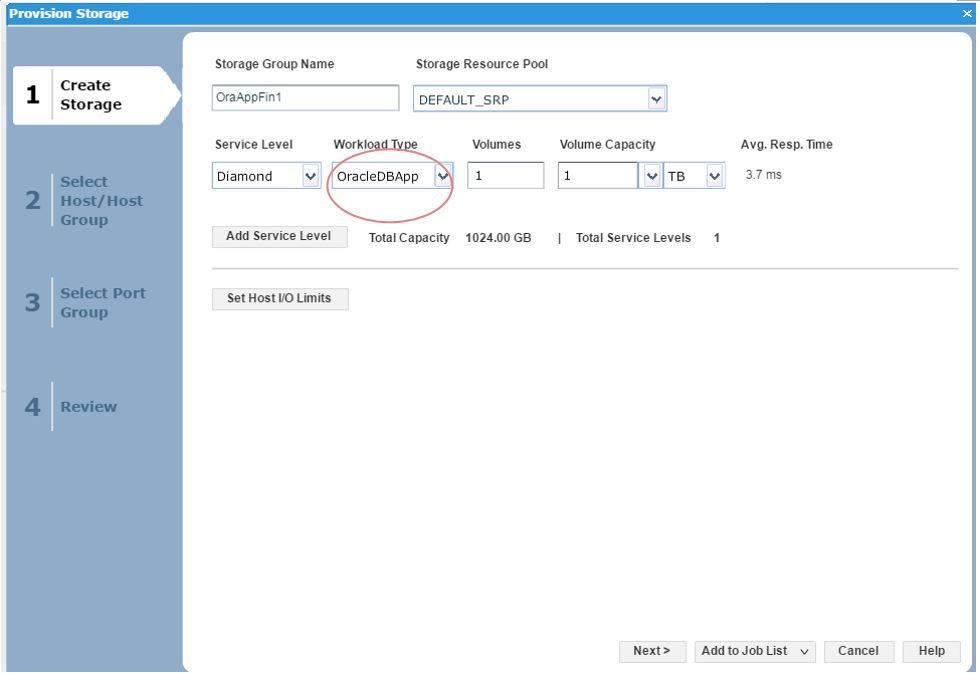

Figure 7. Create storage group view

Here, we have received another request from our finance team for a new SG, so we choose the workload type we previously customized and use it as a reference point for the new SG.

Investigating an SG compliance issue with WLP

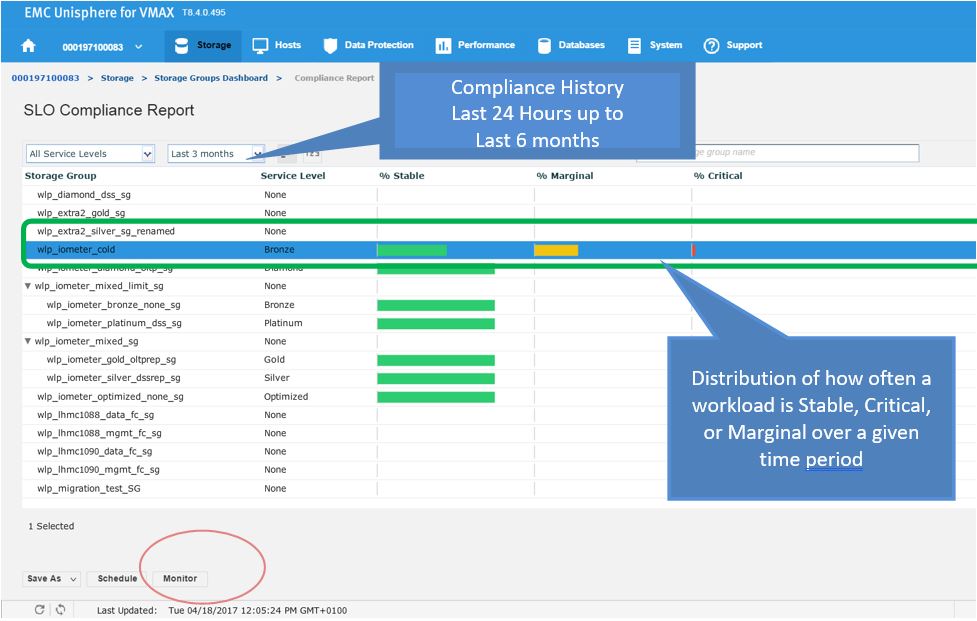

Let’s begin with a realistic scenario. One of our application teams contacted us over an issue with an SG, and they wanted us to investigate further to see if it has had any compliance issues in the recent past. Let’s begin with looking at the SG compliance report.

Figure 8. Storage group compliance view

Figure 9. Storage group compliance issue

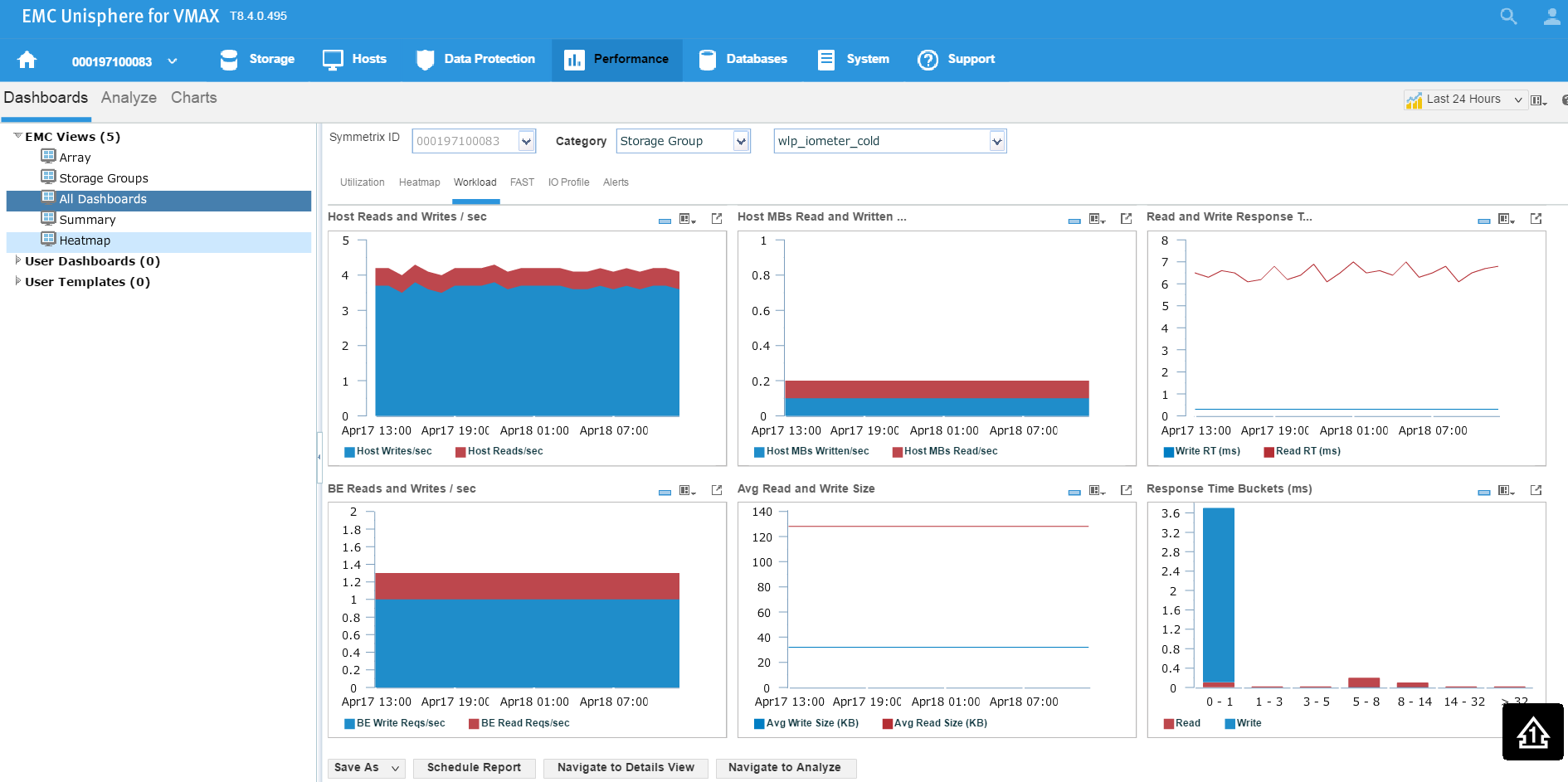

Here, we see that the SG wlp_iometer_cold has had compliance issues in the last 3 months, at times in a marginal state and at times in a critical state. If we select the SG, we have the option of saving the report for reference or to show to the application owner. We can also select Monitor, which launches us directly into the Performance section of Unisphere, shown in the following figure, where we can check specific metrics.

Figure 10. Storage group performance view

Examining headroom for Service Level and Workload Type combinations

In this section, I will be covering how to examine the available headroom for various Service Level and Workload Type combinations. Also, I will show you how to run a suitability check while provisioning more storage to an existing storage group.

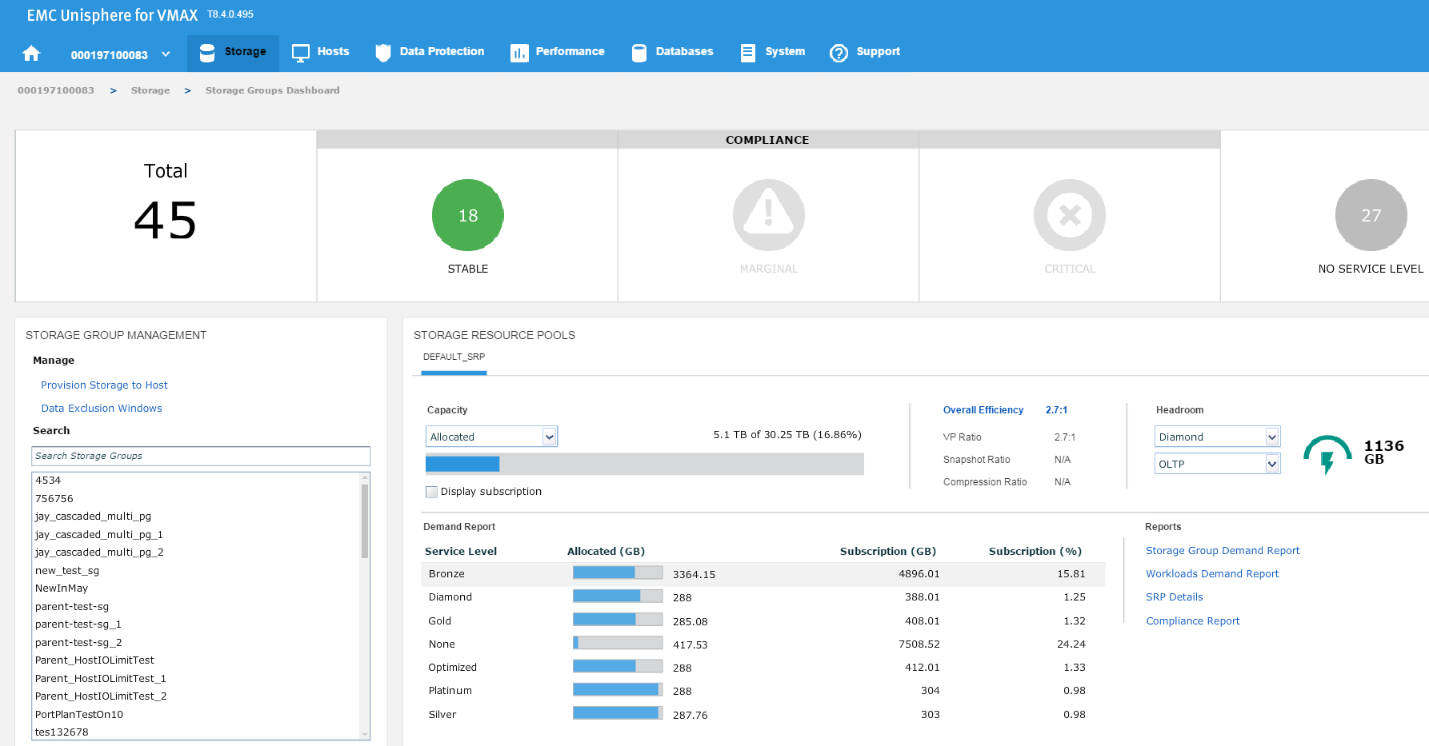

The available headroom shows the space available for a given combination of service level and workload type if all the remaining capacity were used for that combination. Here, I want to show you two examples with different service levels to illustrate how the calculation changes.

Figure 11. Storage group compliance view

In this example, we have chosen a Diamond SLO and an OLTP workload, which gives us 1136 GB of free space for that combination on that array. Let’s change the parameters and see what happens.

Figure 12. Storage group resource pools view

Now, we have 2200 GB of space available at the Platinum level, which is almost double the capacity we had at the Diamond level. These figures are useful indicators when an array is nearing capacity, because we can gauge which of our most important applications can go on which SLO and so maximize the efficiency of our storage.

Expanding the capacity of an existing SG

Now, let’s examine how we can expand the capacity of an existing SG while checking its suitability through the provisioning wizard. Let’s start at the SG list view in the storage group dashboard. We select our SG and then click on View Details.

Figure 13. Storage group dashboard view

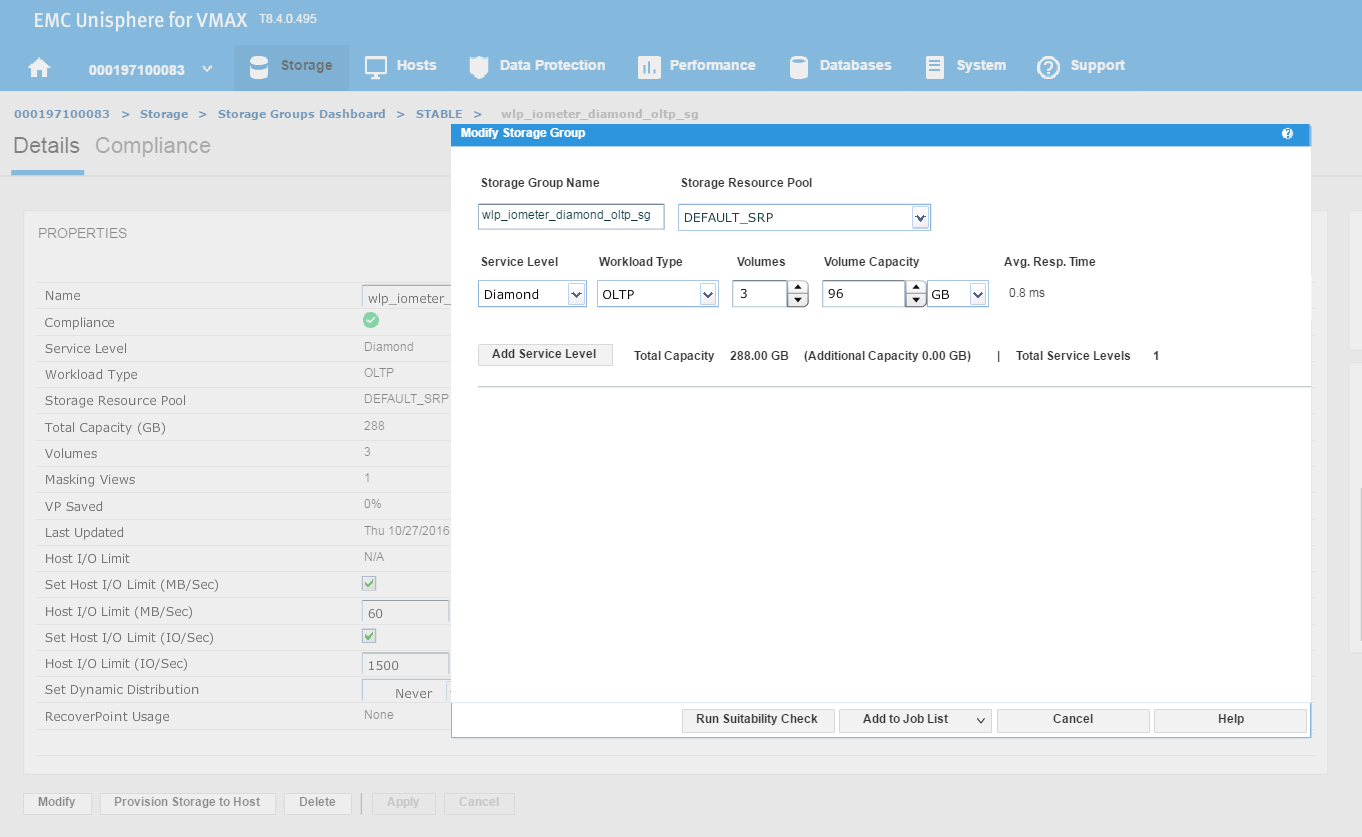

This will take us to the Details view of the wlp_iometer_diamond SG, which is the SG we want to expand. We then select Modify to start the process.

Figure 14. Storage group properties view

This launches the Modify Storage Group wizard shown in the following figure. We have received a request from the application owner for additional storage; however, before allocating it, we want to be sure that they will not breach their SLO compliance or experience any performance problems.

Figure 15. Modify storage group view

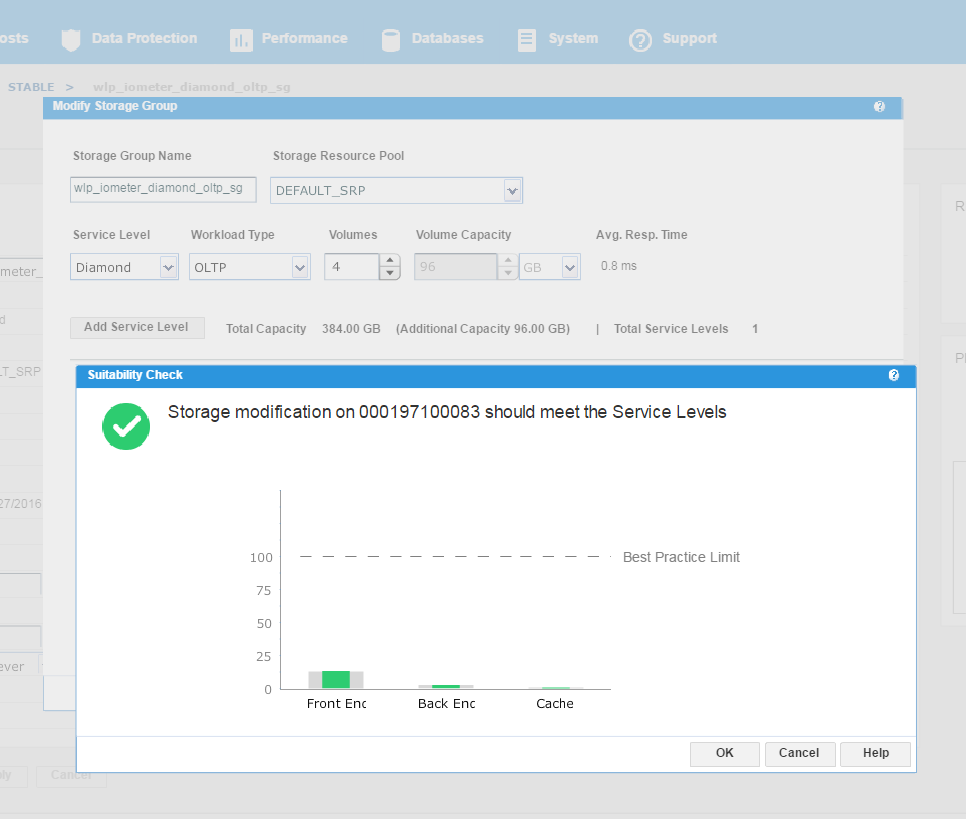

We have agreed with the application owners to grant them an additional 96 GB of storage, which will expand their capacity by roughly 25%. To do this, we increase the number of volumes by 1 and then select Run Suitability Check to verify that this change won’t adversely affect the performance of the SG.

Figure 16. Storage group service levels view

We can see that the check has completed successfully and that increasing the capacity of the SG will not have any adverse effects on its performance. This promotes good housekeeping on the storage array, as we prevent poor configurations from being rolled out. At this stage, we can select Run Now or add the task to the job list to run later.

Data Exclusion Windows

In this section, I will cover how to manage Data Exclusion Windows, which affect how headroom and suitability are calculated.

Peaks in storage system statistics can occur due to:

- Anomalies or unusual events

- Recurring maintenance during off-hours that fully loads the storage system

Due to the way this data is condensed and used, unexpected headroom and suitability results can occur. There are two ways to improve the handling of these cases:

- One-time exclusion period – When the one-time exclusion period value is set, all statistics before this time are ignored. This helps resolve anomalies or unusual events where a significant one-time peak distorts the results due to reliance on two weeks of data points. This is set system wide for all components.

- Recurring exclusion period – You can select n of 42 buckets to use in admissibility checks. This is set system wide for all components. Recurring exclusion periods are repeating periods of selected weekday or time slot combinations where collected data is ignored for the purpose of compliance and admissibility considerations. The data is still collected and reported, but it is not used in those calculations.
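
To make the two exclusion types concrete, here is a minimal Python sketch of how samples might be filtered before a headroom or suitability calculation. It is an illustration only, not Unisphere's implementation. The source states only that there are 42 weekly buckets made of weekday and time slot combinations; the split into 7 days of 6 four-hour slots, the bucket numbering, and all function names are assumptions.

```python
from datetime import datetime

SLOTS_PER_DAY = 6                      # assumption: 7 days x 6 slots = 42 buckets
HOURS_PER_SLOT = 24 // SLOTS_PER_DAY   # four-hour slots under that assumption


def bucket_index(ts: datetime) -> int:
    """Map a timestamp to a weekly bucket 0..41 (Monday 00:00 is bucket 0)."""
    return ts.weekday() * SLOTS_PER_DAY + ts.hour // HOURS_PER_SLOT


def usable_samples(samples, one_time_cutoff=None, excluded_buckets=frozenset()):
    """Drop samples covered by either kind of exclusion.

    samples is an iterable of (timestamp, utilization) pairs.
    """
    kept = []
    for ts, value in samples:
        if one_time_cutoff is not None and ts < one_time_cutoff:
            continue  # one-time exclusion: ignore everything before the cutoff
        if bucket_index(ts) in excluded_buckets:
            continue  # recurring exclusion: ignore this weekday/time slot
        kept.append((ts, value))
    return kept


if __name__ == "__main__":
    # Hypothetical data: a backup job loads the array early every Monday.
    samples = [
        (datetime(2024, 3, 4, 1, 0), 95.0),   # Monday 01:00
        (datetime(2024, 3, 5, 13, 0), 40.0),  # Tuesday 13:00
        (datetime(2024, 3, 11, 1, 0), 97.0),  # Monday 01:00, a week later
    ]
    print(usable_samples(samples, excluded_buckets={0}))
```

Note that the samples are only skipped for the calculation; as described above, the data itself is still collected and reported.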

Let’s begin at our usual starting point, the storage group dashboard, by selecting the Data Exclusion Window.

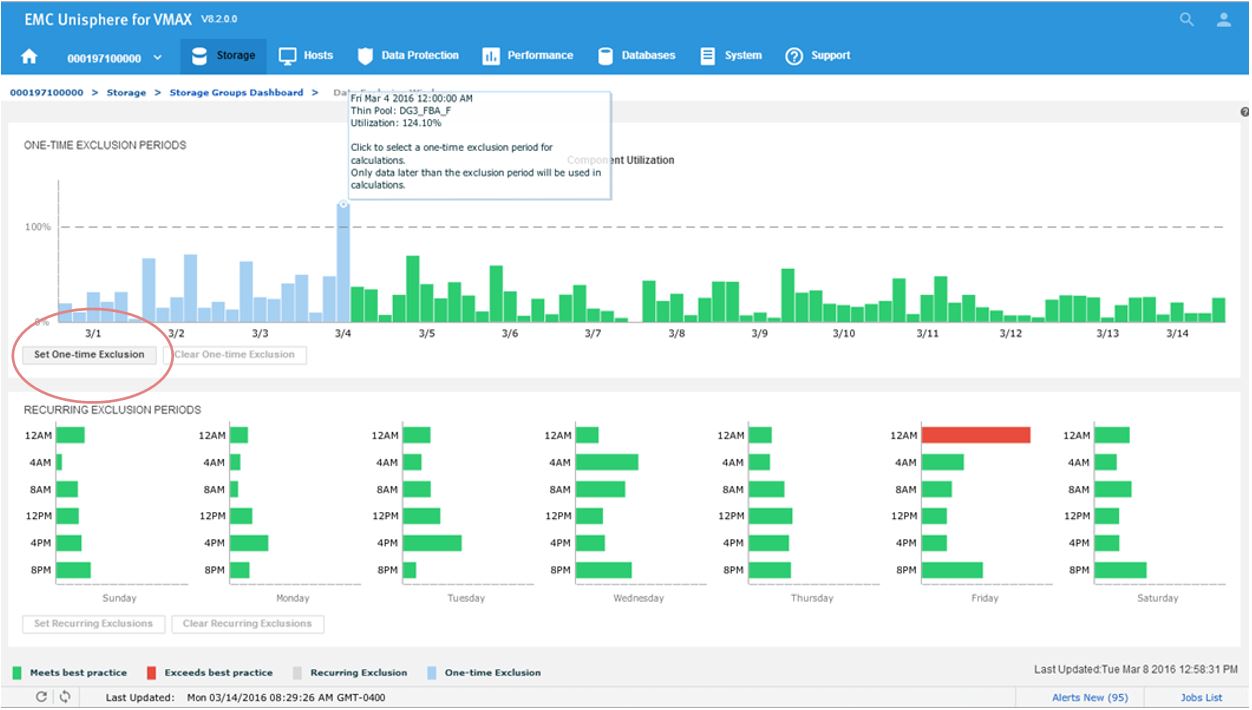

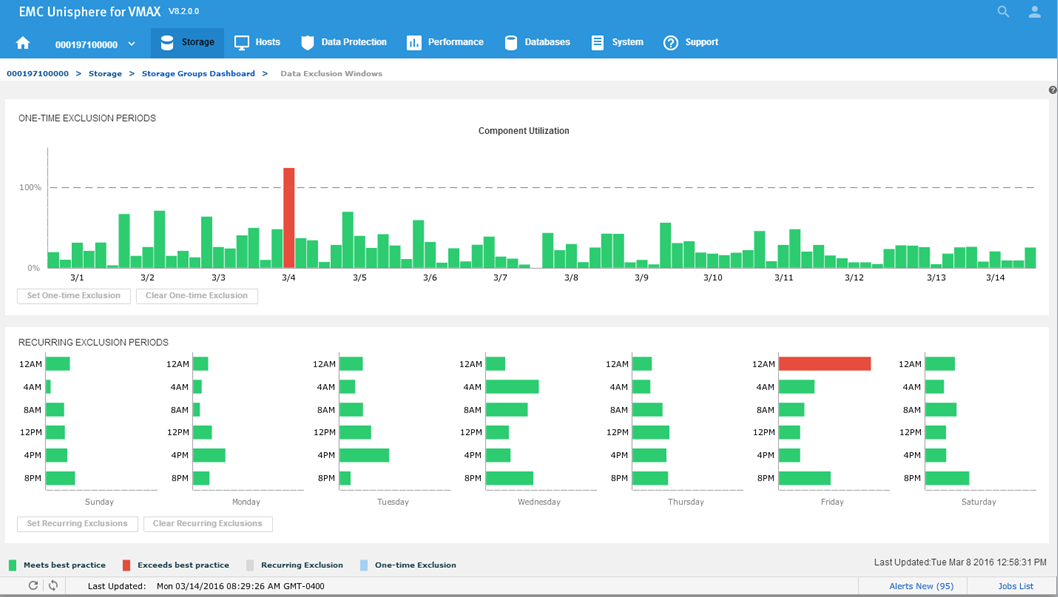

Figure 17. Data Exclusion Windows

Figure 18. Data Exclusion Windows

All the component utilizations for the prior two-week period are compared, and the greatest (with respect to its individual best-practice limit) is displayed across the top of this display. Each weekly time period, represented by two bars in the top display, is also displayed across the bottom.
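
The idea of showing the "greatest" component relative to its own best-practice limit can be sketched as follows. This is a hedged illustration, not how Unisphere computes the display; the component names, utilization figures, and limits are hypothetical.

```python
def worst_component(utilizations: dict, limits: dict):
    """Return (name, ratio) for the component with the highest
    utilization relative to its own best-practice limit.

    A ratio above 1.0 means the component exceeds its limit.
    """
    ratios = {name: utilizations[name] / limits[name] for name in utilizations}
    name = max(ratios, key=ratios.get)
    return name, ratios[name]


if __name__ == "__main__":
    # Hypothetical utilization percentages for one time bucket.
    utilizations = {"front_end": 35.0, "back_end": 48.0, "cache": 30.0}
    # Hypothetical best-practice limits, also in percent.
    limits = {"front_end": 70.0, "back_end": 60.0, "cache": 80.0}
    print(worst_component(utilizations, limits))
```

Comparing ratios rather than raw utilization matters because a component at 48% of a 60% limit is closer to trouble than one at 50% of an 80% limit.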

Now we will set a one-time exclusion window. When you click on the tallest bar in the top display, all history up to that time is shaded. You can then select Set One-time Exclusion so the bottom display panel is recalculated excluding that time frame.

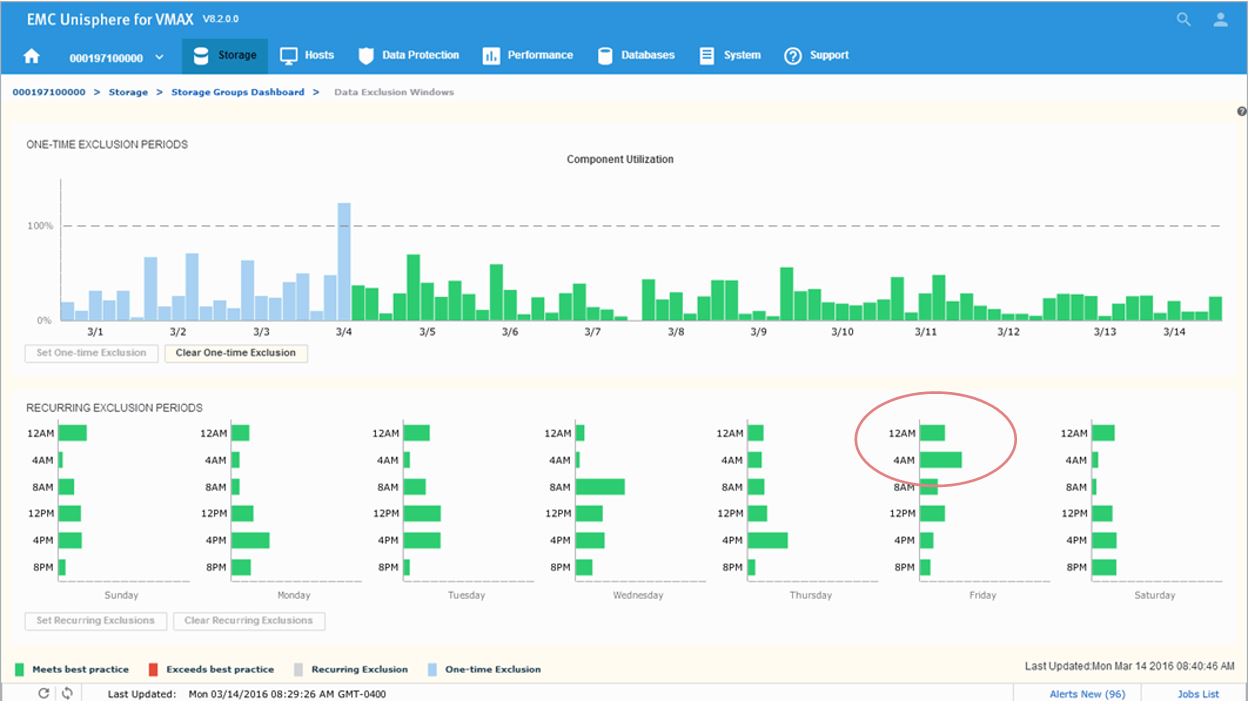

Figure 19. Data Exclusion time window view

After setting the exclusion, you can see that at 12 a.m. we are green in the bottom panel, because we have chosen to ignore that specific time frame in our calculations.

Figure 20. Data Exclusion Windows time view

Next, we will look to set a recurring Exclusion window:

Figure 21. Set recurring Data Exclusion Window

If you click the bars in the bottom display, their state toggles: each selected bar and its two corresponding bars in the top display are shaded, and that weekly period is excluded from all suitability and headroom calculations from then on.

FAST Array Advisor

In this section, I will be covering how to leverage FAST Array Advisor to see if you could move workloads between arrays. The FAST Array Advisor wizard determines the performance impact of migrating the workload from one storage system (source) to another storage system (target). If the wizard determines that the target storage system can absorb the added workload, it automatically creates all the necessary auto provisioning groups to duplicate the source workload on the target system.

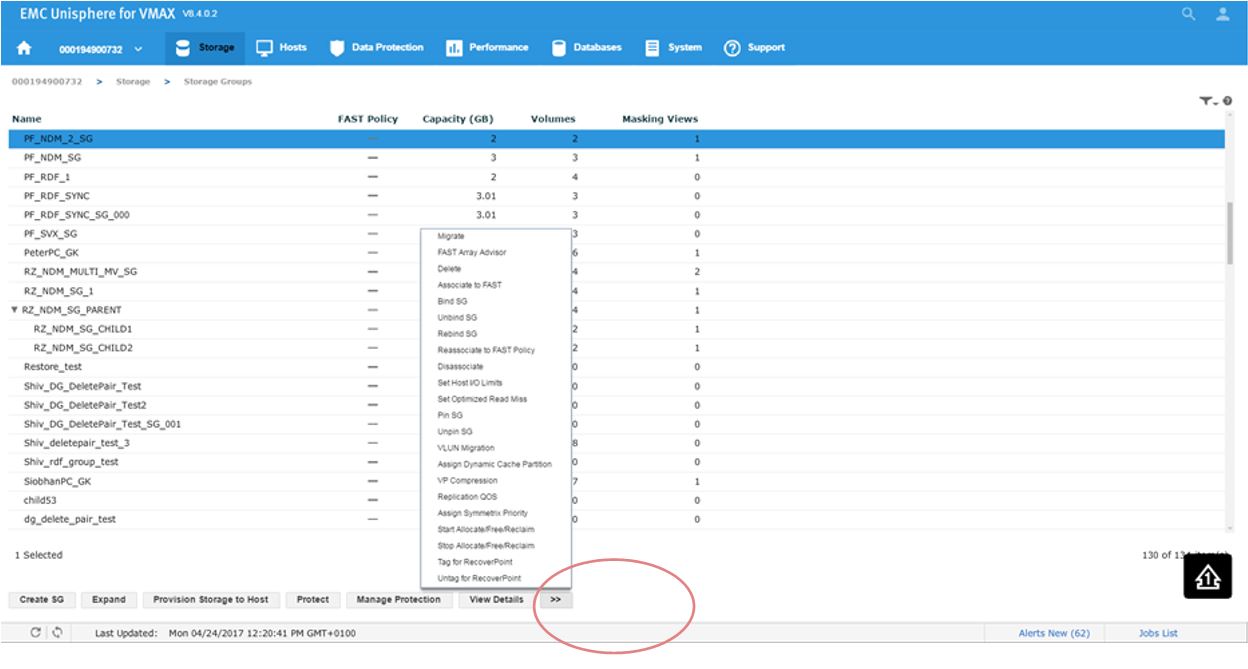

Let’s pick a suitable SG, PF_NDM_2_SG, and select the More Options button.

Figure 22. Storage group selection view

This will present us with a series of options. Second from the top, we see FAST Array Advisor, so we select that.

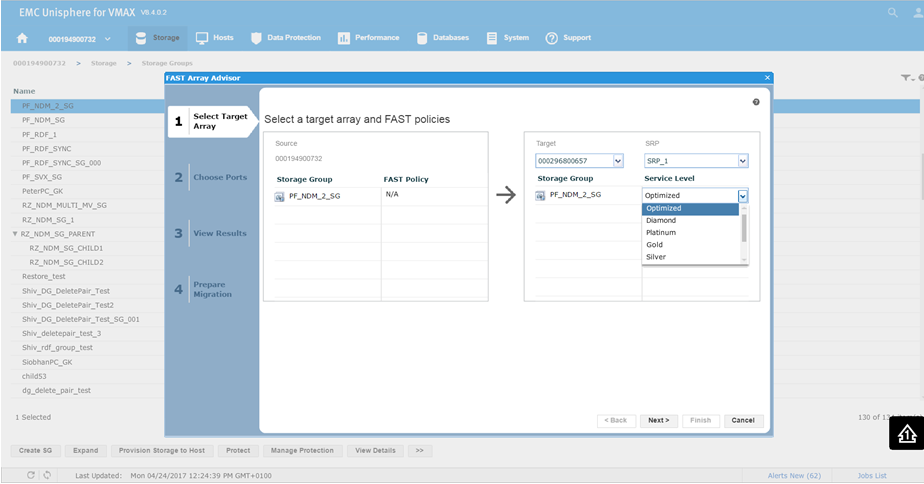

Figure 23. Select target array view

This brings us to the wizard, where we select the source and target arrays. In this example, 0732 is a V1 running 76 code, and we want to look at the possibility of moving the SG to 0657, which is a V3 running 77 code. This is viewed as a migration of the SG off the older source array and onto the newer target array. You can see that on a V1 we did not have service levels; however, we can set them on our V3 array. For this example, we choose a Silver SLO and click Next.

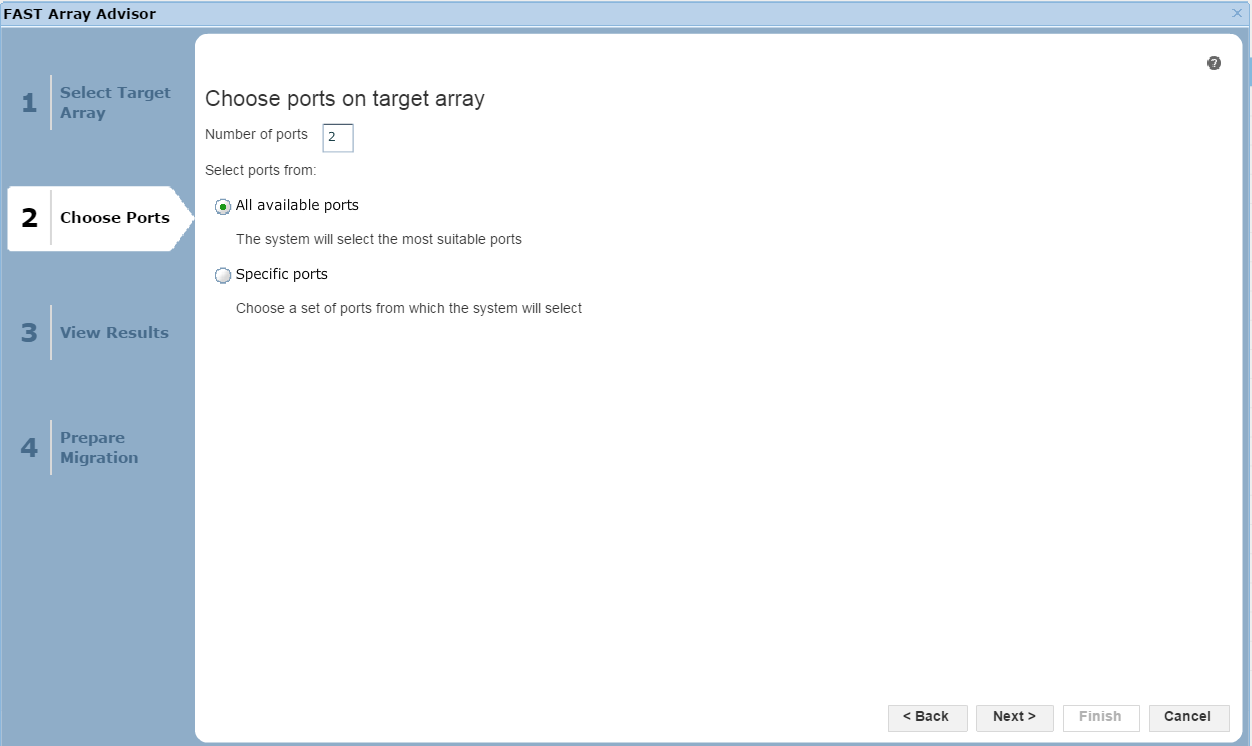

Figure 24. Port selection view

In this section of the wizard, we have the option of allowing Unisphere to choose the most suitable ports based on how busy they are, or we can select specific ports if we like. Click Next to view the results.

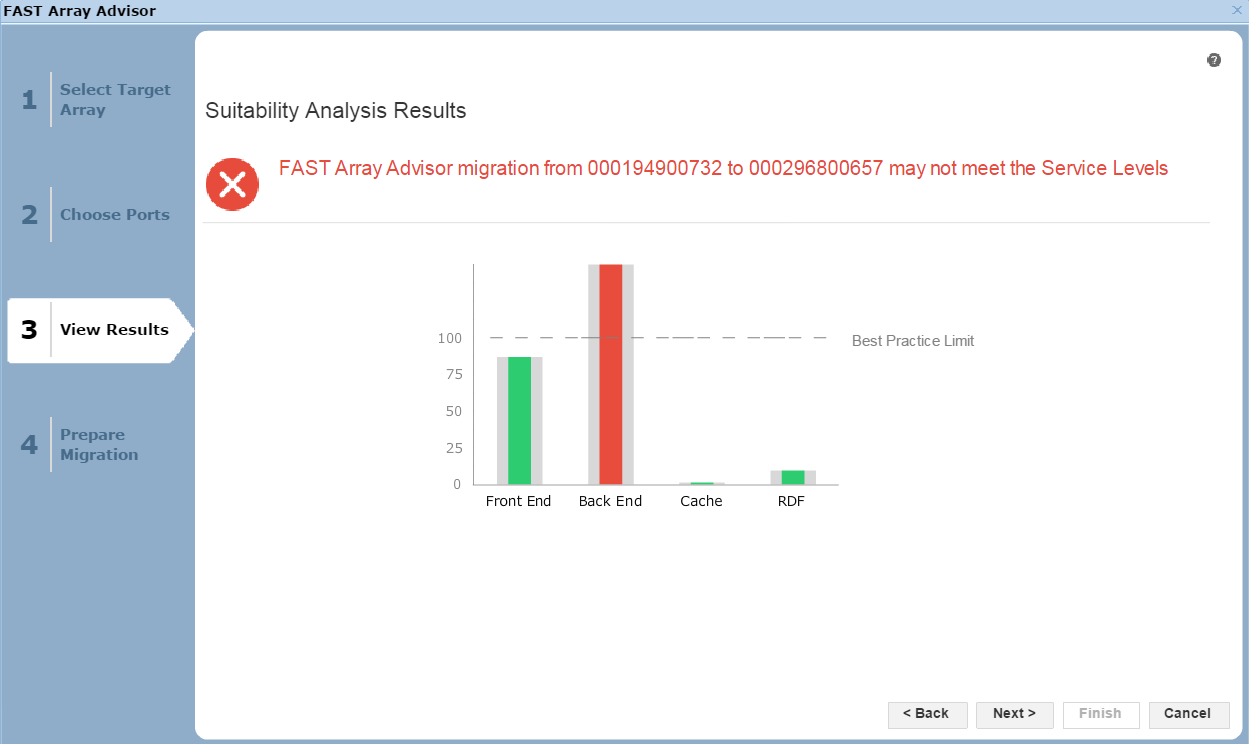

Figure 25. Suitability analysis view

Here, we see the results of the potential migration. The front end, the cache utilization, and the RDF impact all look fine. That said, in the back end, we would exceed our Best Practice Limit, so we may suffer from contention and performance issues as a result. It would be up to the individual customer whether to proceed in these circumstances; however, we would recommend adhering to best practices. In this case, let’s select Next to view the final screen of the wizard.

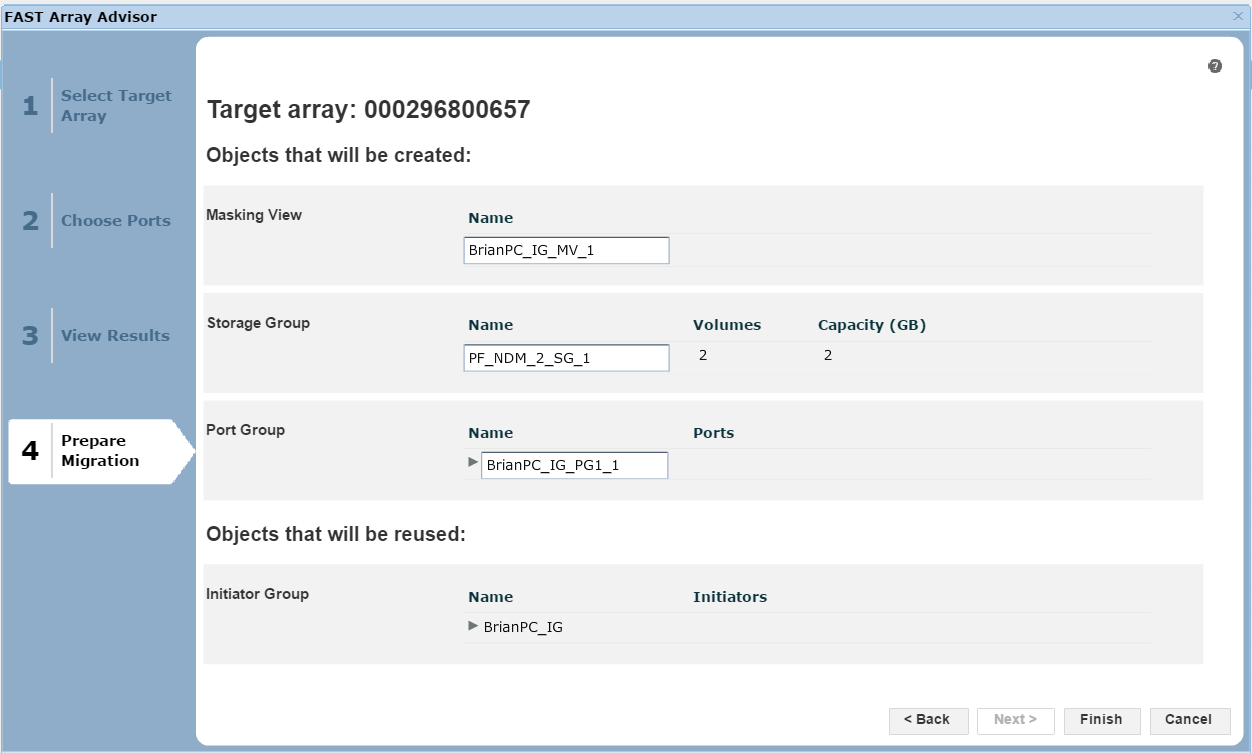

Figure 26. Prepare migration view

Here, we are presented with a summary of the migration if we were to go ahead, listing what objects, such as the masking views, storage groups and port groups, would be created on the target array 0657. At this point, we can click Finish to begin the migration.

Author: Finbarr O’Riordan, ISG Technical Program Manager for Transformation

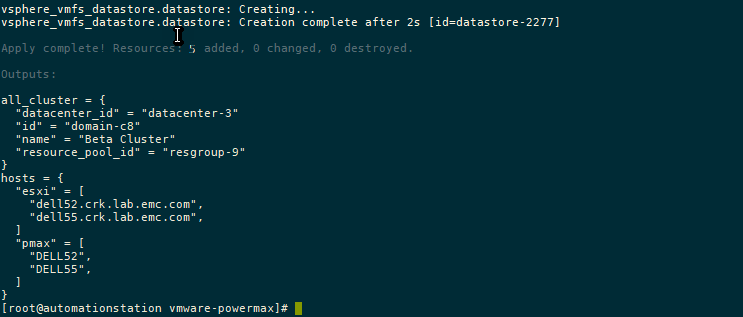

Elevate your operations: Mastering PowerMax Enhanced API Calls for Peak Efficiency

Wed, 22 Nov 2023 21:06:50 -0000


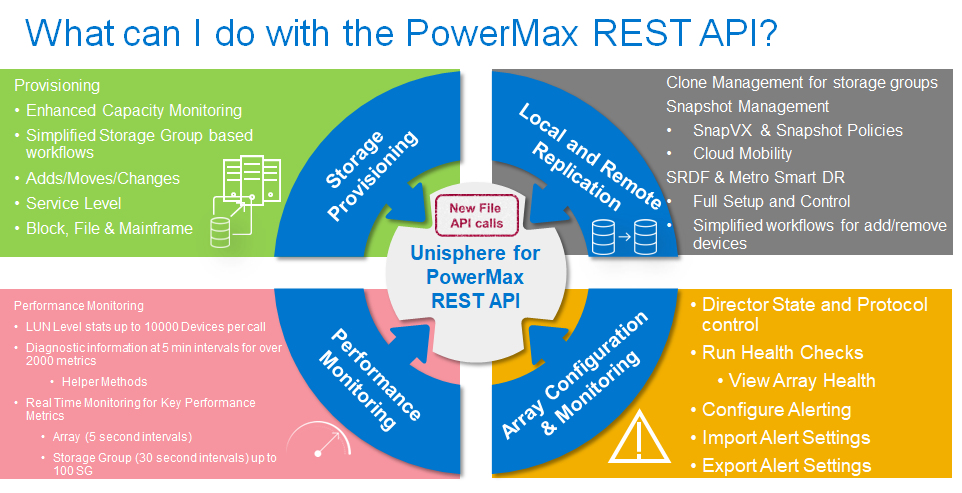

In today's fast-paced digital landscape, businesses rely heavily on APIs (Application Programming Interfaces) to manage and provision their IT environments, including storage. While APIs are powerful tools, inefficient use can lead to increased server load on management appliances and unsatisfactory user experiences.

With the new enhanced PowerMax API calls, developers can optimize workflows, reduce server load, and boost efficiency by reducing the amount of code needed to maintain and gather information about storage systems.

This article explores the new enhanced API calls available with Unisphere for PowerMax 10.1 and best practices for working with these calls to create seamless, responsive, and efficient applications using the PowerMax REST APIs.

In my previous post, What’s new in the PowerMax API version 10.1, I summarised these enhancements at a high level. In this post, I will dive deeper into how to use these calls efficiently and show where they can replace traditional API calls, reducing the amount of code that needs to be maintained and helping you get the most out of your API calls.

Common challenges driving the need for enhanced API calls

One challenge is gathering detailed data on a large set of array objects with the existing API. It often requires multiple API calls. PowerMax Arrays support tens of thousands of devices and thousands of other objects like storage groups and snapshots. Another challenge is that large data mining often drives a high number of API calls and with multiple Arrays managed by a single server, the number of calls increases further. Taming this scale issue has always been a challenge and this created a need for batching and finding a different way of getting the data collections from the API.

With the newly enhanced API calls, developers can access a deeper level of data in bulk and select subsets or supersets of information filtered to their exact requirements.

With this release, we have introduced enhanced API calls for Performance and Data Collection for Storage Groups and Volumes. These improvements are designed to increase the overall performance of the API when used with the new calls and simplify the developer experience.

Versioning on Enhanced API calls

The enhanced endpoints use a new URL. The base URL for traditional PowerMax API endpoints is:

https://unipowermax_ip:port/univmax/restapi/{version}

The new enhanced endpoints use:

https://unipowermax_ip:port/univmax/rest/v1

The key difference here is subtle but powerful from a developer perspective. The calls are versioned by the attributes that are accessible. When new APIs or new attributes on existing API calls become available, the developer can simply select them via the query path on the URL, provided the management server is at a version that supports them. More on this later.

Authentication is still the same: API calls use basic authentication, so a username and password are required.

With that, there are 4 new API calls in the new format.

GET /systems/{id}/performance-categories

GET /systems/{id}/performance-categories/{id}

GET /systems/{id}/volumes

GET /systems/{id}/storage-groups
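
As a minimal sketch of how a client might address these endpoints, the Python below builds an enhanced endpoint URL and a basic authentication header using only the standard library. The helper names, host, port, and credentials are assumptions for illustration; only the URL layout and the use of basic authentication come from the text above.

```python
import base64

# Base path of the enhanced endpoints, as given in the article.
ENHANCED_BASE = "https://{host}:{port}/univmax/rest/v1"


def enhanced_url(host: str, port: int, system_id: str, resource: str) -> str:
    """Build the URL for one of the enhanced GET calls.

    resource is the path after /systems/{id}/, for example
    "storage-groups", "volumes", or "performance-categories".
    """
    base = ENHANCED_BASE.format(host=host, port=port)
    return f"{base}/systems/{system_id}/{resource}"


def basic_auth_header(username: str, password: str) -> dict:
    """Return an HTTP Basic Authorization header for the given credentials."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8"))
    return {"Authorization": "Basic " + token.decode("ascii")}


if __name__ == "__main__":
    # Hypothetical host and credentials; the array ID is the one used later
    # in the article's example URL.
    print(enhanced_url("10.0.0.1", 8443, "000220200226", "storage-groups"))
    print(basic_auth_header("user", "pass"))
```

The header dictionary can be passed to whichever HTTP client you prefer; no request is sent in this sketch.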

Enhanced Performance API calls and Usage

The performance related calls are GET calls as opposed to POST calls on the legacy performance calls and require little input from the developer.

Note: These calls work for all arrays supported by Unisphere, not just the latest and greatest, but you do need Unisphere 10.1 at a minimum to take advantage of them.

https://ipaddress:8443/univmax/rest/v1/systems/{id}/performance-categories (GET)

Returns supported Performance Categories and Valid metrics based on an array model of supplied id/serial number.

https://ipaddress:8443/univmax/rest/v1/systems/{id}/performance-categories/{category} (GET)

Returns latest available diagnostic data for the specified performance category for all Key Performance Indicator Metrics (KPI)

With these enhanced performance calls there are no additional lookup calls needed to find out what components need to be queried, no keys to enter and no timestamps to generate. These reduce the number of calls being made to the system reducing overall load on the management servers and providing information with minimal effort for developers.

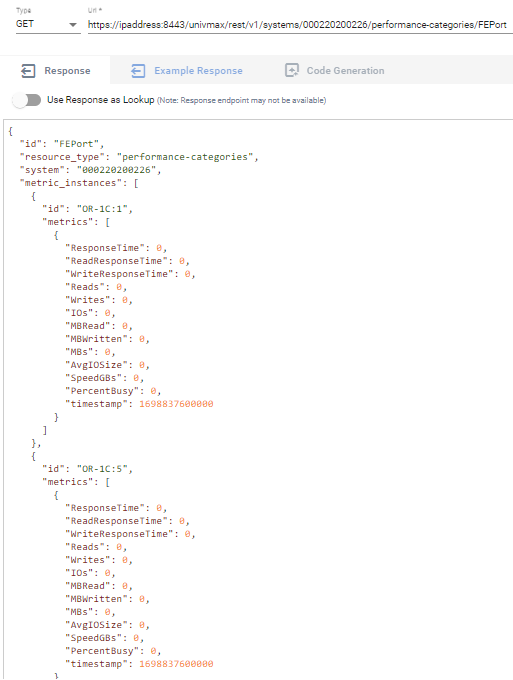

Below is an example requesting metrics for Front End Ports. Note that the call returns metrics for each port ID on the system without having to build any payload.

The new enhanced performance calls are ideal for customers building dashboards or exporting to time series databases (for use with tools like Grafana or Splunk as well as general use).

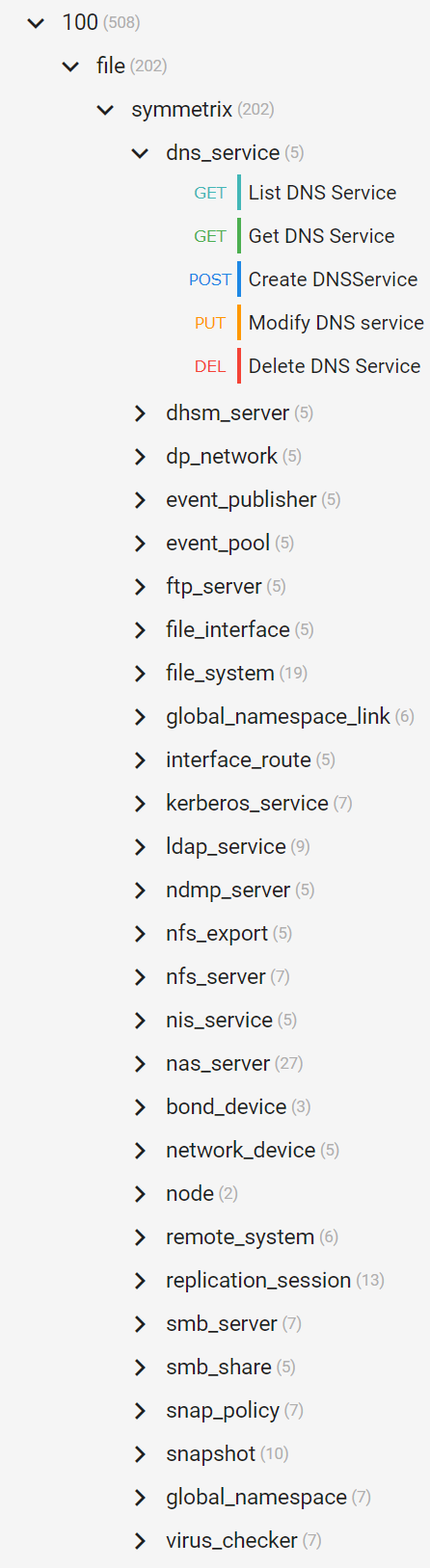

Storage Groups and Volumes Enhanced calls

For customers wishing to gather configuration information, there are 2 new enhanced calls to help with this. By default, only the ID of each queried object is returned for all objects on the system; however, using select and filter options, the responses can be customized to return exactly the information you need in a single API call. With a single call, you can get information on all storage groups or volumes on the array, along with the attributes you selected, optionally filtered by criteria of your choice.

https://ipaddress:8443/univmax/rest/v1/systems/{id}/storage-groups (GET)

https://ipaddress:8443/univmax/rest/v1/systems/{id}/volumes (GET)

In the following section I’ll dive into options for selecting and filtering to achieve great things with minimal effort.

Optimizing Data Filtering and Selection for Storage Groups and Volumes

Retrieving large amounts of data on all managed objects on an array with the traditional API requires multiple API calls for each object to get a detailed level of information. When running against multiple arrays, the number of calls increases with the number of managed objects being queried. With the new API calls, the select and filter operations enable developers to get the same level of information, or greater, with just a couple of API calls.

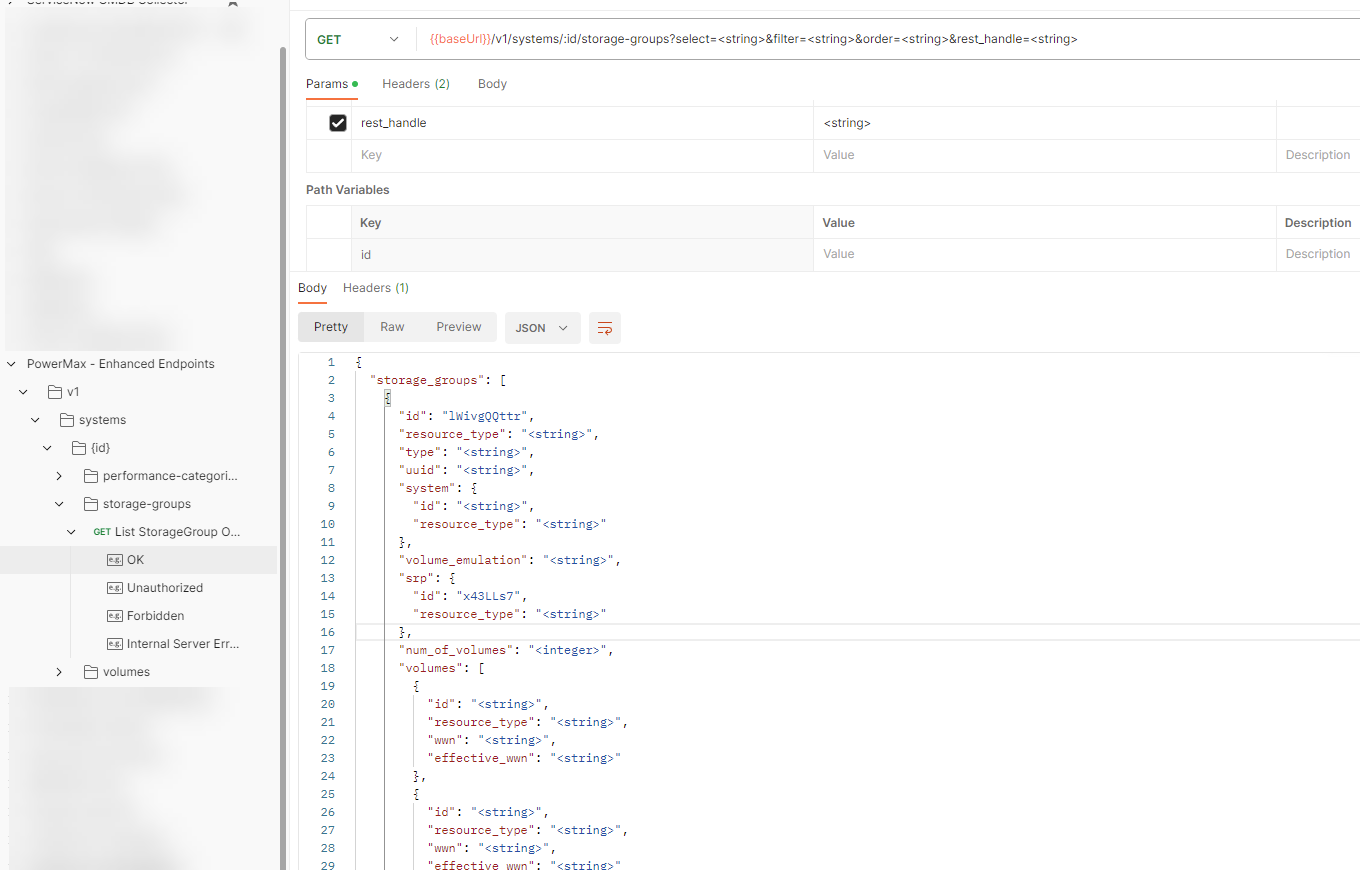

By default, running the GET call for storage-groups returns only the ID of every storage group on the array; this minimizes the overhead the call generates. The ID is the identifying object name for every attribute in these new API calls. The object itself has many more optional attributes that are selectable and filterable to customize the return. The optional attributes for filtering and selection are documented in the developer docs here. The documentation is also available as OpenApi.json for easier, programmatic review.

The image below shows a snippet of the list of potential return parameters for the storage-groups GET call, as seen from the imported JSON in Postman. The full list of attributes is too large to fit in a screenshot.

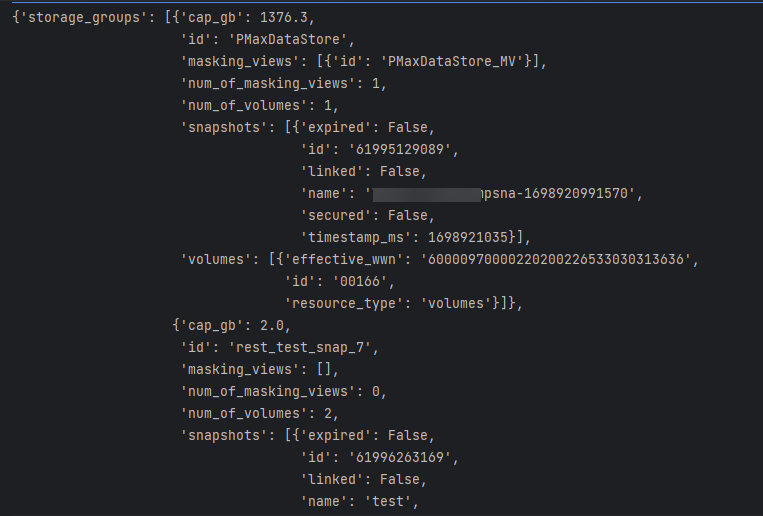

Let’s take an example call that builds a return payload that:

- Gathers data on all storage groups on a PowerMax array

- Selects details on storage group capacity and the volume IDs contained in the storage group, with their effective_wwn

- Restricts the call to return data only on storage groups with at least one snapshot

- Provides details on each snapshot, including expiry data, link status, and whether it’s a secure snapshot

To achieve this, simply append ?select= to the end of the URL for the API call, followed by a comma-separated list of attributes.

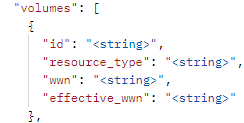

From the example return in Postman, we can identify that the following parameters will give the information we need:

num_of_volumes, cap_gb, num_of_masking_views, masking_views.id, volumes.id, volumes.effective_wwn, volumes.resource_type, snapshots.id, snapshots.name, snapshots.timestamp_ms, snapshots.linked, snapshots.secured, snapshots.expired

Note: Some of the attributes use dot notation. This is necessary to select nested attributes, so you will need to pay attention to it.

I generally recommend reviewing the JSON output in the examples. I find it easier to decipher than the tabular online documentation, as the indentation makes it easier to identify sub-attributes for the dot notation.

To restrict the query to storage groups that have at least one snapshot, and so avoid unnecessary returned data, I can append a filter to the URL for the GET call: &filter=num_of_snapshots gt 0.

You can also combine multiple filters, adding as many as you need on the URL for any attribute or sub-attribute, separated by commas. When multiple filters are specified, they are applied in an AND fashion.

Full details on all the operators for filters are documented here.

After applying the attribute selection criteria and the filters to the API call, the URL looks like the following:

https://ipaddress:8443/univmax/rest/v1/systems/000220200226/storage-groups?select=num_of_volumes,cap_gb,num_of_masking_views,masking_views.id,volumes.id,volumes.effective_wwn,volumes.resource_type,snapshots.id,snapshots.name,snapshots.timestamp_ms,snapshots.linked,snapshots.secured,snapshots.expired&filter=num_of_snapshots gt 0
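
When building such URLs in code rather than by hand, it helps to assemble the select and filter parts programmatically. The sketch below uses only the Python standard library. The function name is hypothetical, and percent-encoding the spaces in the filter expression is an assumption about what a strict HTTP client needs; many clients and tools do this encoding for you.

```python
from urllib.parse import quote


def build_query_url(base_url, select=(), filters=()):
    """Append ?select=...&filter=... to an enhanced endpoint URL.

    select is a sequence of attribute names (dot notation for nested ones).
    filters is a sequence of expressions such as "num_of_snapshots gt 0";
    multiple filters are comma separated and applied as AND by the API.
    """
    parts = []
    if select:
        parts.append("select=" + ",".join(select))
    if filters:
        # Percent-encode each expression so spaces become %20.
        parts.append("filter=" + ",".join(quote(f, safe="") for f in filters))
    return base_url + ("?" + "&".join(parts) if parts else "")


if __name__ == "__main__":
    base = ("https://ipaddress:8443/univmax/rest/v1/"
            "systems/000220200226/storage-groups")
    url = build_query_url(
        base,
        select=["num_of_volumes", "cap_gb", "volumes.id",
                "volumes.effective_wwn", "snapshots.id", "snapshots.name"],
        filters=["num_of_snapshots gt 0"],
    )
    print(url)
```

Keeping the attribute list in a Python sequence also makes it easy to reuse the same selection across several arrays.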

When the call is executed, it returns a single response with the requested data for all storage groups matching the filter criteria. It displays all the selected attributes and sub-attributes. The resultant JSON return is shown below.

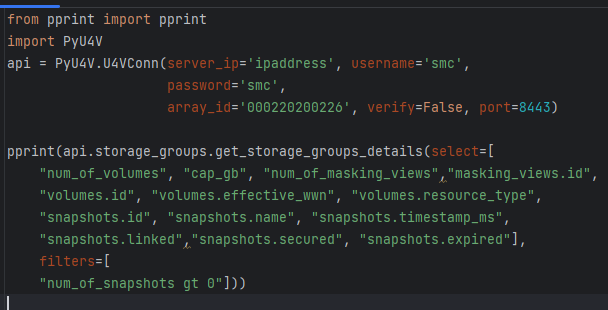

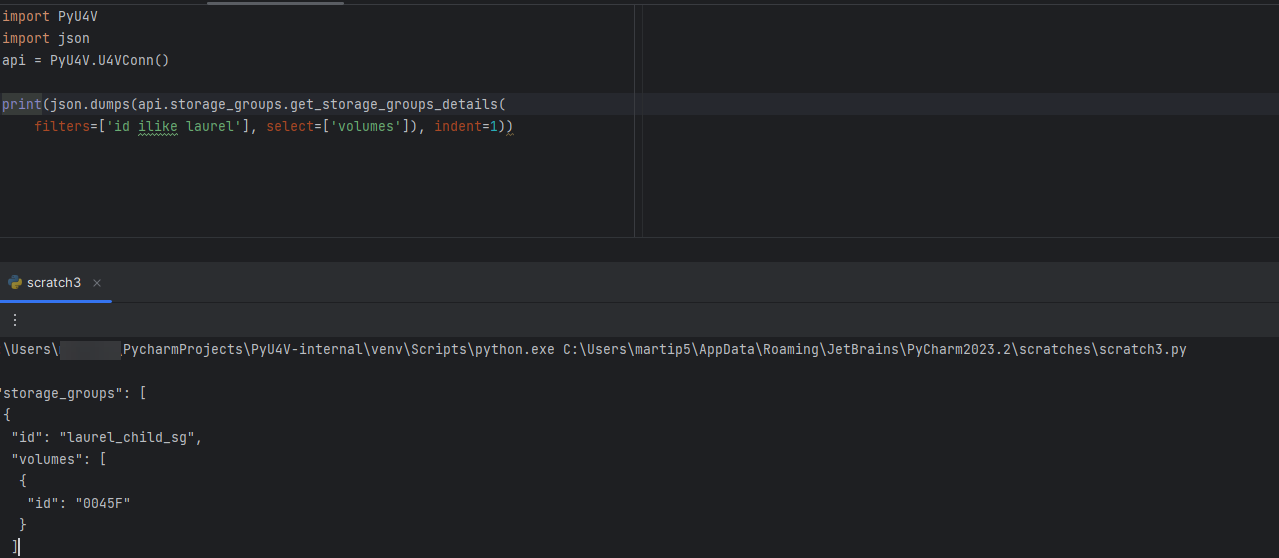

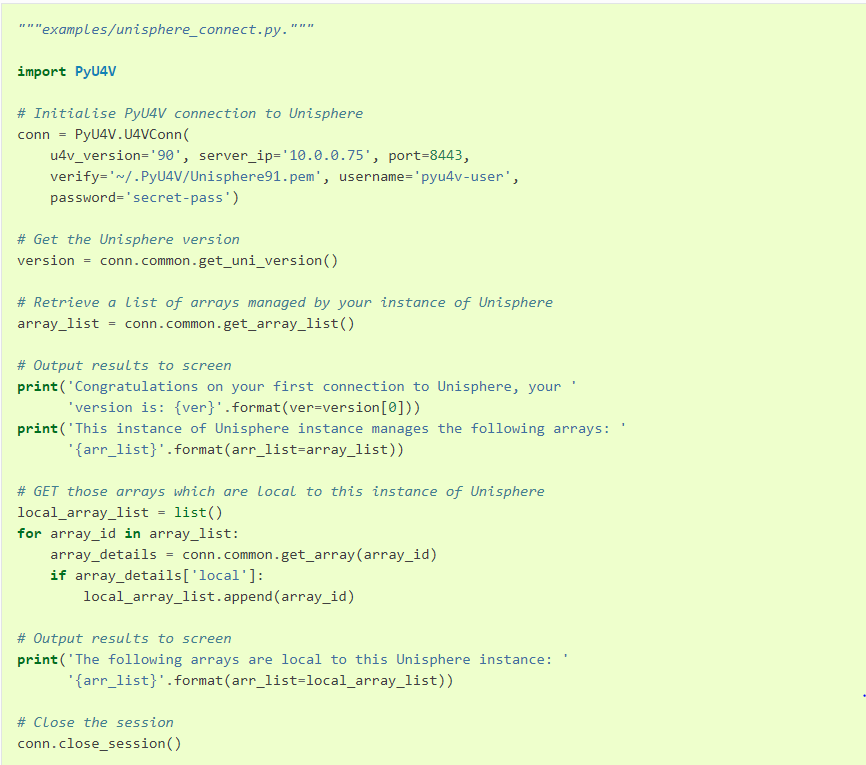

PyU4V, our Python SDK for the PowerMax API, supports the new enhanced API calls as of version 10.1, which is available from PyPI and fully documented on ReadTheDocs. New function libraries volumes, storage_groups, and performance_enhanced have been added. Functionality has been added to the volumes and storage_groups modules to return the attributes available for selection in JSON format so that the metadata is easier to access. The same query executed by a Python script is shown below.

The short Python script is easily readable, executing a single API call to get all this information for all volumes matching the criteria. There are hundreds of attributes available to you for selection and filtering. It’s worth spending a little time up front to define the reports you need and create functions to capture the information in a repeatable way.

Remember, if you don’t need information, don’t request it; requesting less creates less load on the management server and the client application. The API is explicit in this way. By default, the PyU4V functions for volumes and storage groups select the top-level attributes for both storage groups and volumes, excluding rdf_infos and snapshot.

In summary, the new enhanced API calls give access to an SQL-like interface for selecting, filtering, and ordering data on your storage objects via the API, meaning you can effectively design API calls for yourself to give you the data you want and need.

Hopefully this post was informative and gave you some inspiration into how you can reduce the number of API calls needed to get large datasets on your storage groups and volumes for your PowerMax and VMAX arrays.

Check out this space for more about the PowerMax API. If there are topics that are of interest to you and you would like to learn more, send a request to @rawstorage on Twitter, and I’ll try to accommodate your request!

Be sure to provide feedback through your account team for any enhancement requests or start a discussion on https://www.dell.com/community/en/topics/automation. Our product managers will take your requests and bring them to the development team.

Author: Paul Martin

Cyber Intrusion Detection for z Systems (zCID)

Tue, 12 Dec 2023 18:42:09 -0000


Any cyber security event can have a devastating impact on a company’s financials. Stolen credit cards, identity theft, hacked emails, and so on hurt both the customer and the company’s brand, even going so far as to potentially ruin that company. Data Recovery takes time, but rebuilding customer trust may take even longer.

Dell Technologies has made major investments in a series of continuous security product enhancements to help protect companies and their end users from data loss and/or compromise in the event of an attack. Whether it’s an attack on open systems data or mainframe data, the result of any attack is the same: loss of productivity and concern over theft and exposure of sensitive information.

Ideally, technologies like storage should be able to detect a cyber threat, protect data from the threat, and, in the event of a loss or corruption of data, recover to a known good point. Eight years ago, Dell Technologies developed the first snapshot-based recovery capability for mainframe and open systems data and, as of the latest release of PowerMax in October 2023, has moved into the “intrusion detection” realm of cyber resiliency.

This blog is about a new enhancement to our Mainframe Enabler Software for PowerMax that is designed to provide advanced threat detection for PowerMax mainframe environments.

Mainframe Enabler for intrusion detection

Mainframe Enabler Software (MFE) runs on a z/OS LPAR and is designed to manage PowerMax 2500/8500 and 8000. During discussions about the most recent customer requirements for this release of MFE, it became apparent that customers urgently needed a way to determine whether a cyber event was imminent or occurring. The ask was to send the equivalent of a ‘flare in the sky’ to single-out any atypical behavior in mainframe data access. Upon learning of zCID’s capability within the larger Dell cyber solution, a large mainframe service provider commented “Dell’s innovation around detection of cyber events within PowerMax and CloudIQ is ahead of any other storage provider we talked to”.

Dell Mainframe Solutions development, Product Management, and other organizations within Dell designed a way to enhance MFE to provide awareness of atypical data access behavior. The result of that work was delivered as an enhancement in MFE 10.1.0, released 17 October 2023. This enhancement is known as ‘Cyber Intrusion Detection for z Systems’ or zCID for short.

We will jump into the technical details of zCID; but first, let’s cover the What, Why, and How of this valuable new feature.

What: zCID is a utility that detects atypical data access patterns in mainframe workloads.

Why: To warn PowerMax mainframe customers that atypical access is occurring and should be investigated if a cyber intrusion is suspected.

How: zCID monitors the number of unique tracks accessed for mainframe CKD devices and SMS groups within a customer-specified time interval. First, a baseline of “normal/typical” access is confirmed by the storage administrator. The next step is to create a set of rules for warning statements that will be generated if an anomaly is detected when data is accessed. Next, zCID is started and runs continually in the background. Finally, if an intrusion is suspected, zCID raw data can be converted to a CSV format for detailed analysis.
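
The general idea of counting unique tracks per interval and warning when a count is atypical can be illustrated with a short Python sketch. This is not zCID's implementation or its control statement syntax; the input format, function names, and thresholds are hypothetical and only model the concept described above.

```python
from collections import defaultdict


def unique_tracks_per_interval(accesses, interval_seconds):
    """Count distinct tracks touched per device in each time interval.

    accesses is an iterable of (epoch_seconds, device, track) tuples.
    Returns {(device, interval_start): unique_track_count}.
    """
    seen = defaultdict(set)
    for ts, device, track in accesses:
        start = ts - ts % interval_seconds   # align to the interval boundary
        seen[(device, start)].add(track)     # a set ignores repeat accesses
    return {key: len(tracks) for key, tracks in seen.items()}


def find_atypical(counts, thresholds):
    """Return sorted (device, interval_start, count) entries whose count
    exceeds the per-device threshold (devices without a threshold are skipped)."""
    return sorted(
        (device, start, count)
        for (device, start), count in counts.items()
        if device in thresholds and count > thresholds[device]
    )


if __name__ == "__main__":
    # Hypothetical access log: (time in seconds, volume, track number).
    accesses = [
        (0, "VOL001", 10), (5, "VOL001", 10), (30, "VOL001", 11),
        (70, "VOL001", 12), (75, "VOL001", 13), (80, "VOL001", 14),
        (10, "VOL002", 1),
    ]
    counts = unique_tracks_per_interval(accesses, interval_seconds=60)
    print(find_atypical(counts, thresholds={"VOL001": 2}))
```

Counting unique tracks rather than total I/Os means a workload that repeatedly reads the same data looks very different from one that sweeps across a whole volume.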

Technical and install requirements for zCID

The minimum technical requirements for zCID are:

- MFE 10.1.0 with available SCF address space

- PowerMax 8000, 2500, or 8500

- A list of CKD volumes or SMS groups to monitor

Customers must APF-authorize the MFE 10.1.0 LINKLIB dataset and add a STEPLIB DD statement in their zCID batch jobs. (zCID can also run as a started task.)

zCID is delivered in two programs:

- ECTRAARD is the zCID utility program

- ECTREXTR is a zCID program that converts the raw zCID data to a CSV file. This CSV file is intended to be imported into Microsoft Excel for additional analysis and reporting as determined by a storage analyst.

zCID modes of operation and high-level implementation strategy

ECTRAARD can run in “Live Run mode” or “Batch Run mode”. It is important to understand these two modes before deploying zCID:

- Live Run mode: processes data in real time and collects data from the resources you tell zCID to monitor.

- Batch Run mode: takes the output produced in Live Run mode and creates reports about the historical information.

To maximize the benefits of zCID, follow these five steps. The length of time to run in Live Run mode will vary from customer to customer. Typically, you would run zCID in Live Run mode to capture access rates for the z/OS resources you are monitoring. I would start Live Run mode for one week (seven days), then capture a month-end batch processing cycle and, ideally, a quarterly and year-end closing cycle. With that information, you can calibrate your WARN statements for the z/OS workloads with the highest access rates that zCID is monitoring.

- Run zCID in Live Run mode over a “long” period. View this period of time as an opportunity to collect access rate information for the z/OS resources that zCID is monitoring. In the future, you can use this information to test your warning statements for atypical access rates on monitored z/OS resources.

- Stop Live Run mode at the end of the “long” period so that the datasets zCID was building can be closed.

- Run zCID in Batch Run mode to create reports, then analyze the results.

- Create warning statements for the atypical access rates for which you want to be notified. To calibrate the warning statements, take the datasets created during Live Run mode and run zCID in Batch Run mode. Are zCID warning messages being issued from the warning statements you created? Calibrating the WARN statements ensures that z/OS SYSLOG, the z/OS master console, and z/OS zCID started tasks are not spammed with zCID warning messages.

- Restart zCID in Live Run mode with the calibrated warning control statements.

zCID will now actively monitor the z/OS resources you provided and generate an alert every time an atypical access rate occurs!
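
The calibration step can be thought of as replaying historical access counts against a candidate threshold and checking how many warnings would have fired. The Python sketch below illustrates that reasoning only; it does not use zCID's dataset formats or WARN statement syntax, and the 20% margin above the historical peak is an arbitrary assumption.

```python
def candidate_threshold(historical_counts, margin_percent=20):
    """Suggest a threshold a fixed percentage above the historical peak.

    margin_percent is an assumed safety margin, not a zCID recommendation.
    Integer arithmetic keeps the result exact.
    """
    peak = max(historical_counts)
    return peak + peak * margin_percent // 100


def replay_warning_count(historical_counts, threshold):
    """Count how many historical intervals would have raised a warning."""
    return sum(1 for count in historical_counts if count > threshold)


if __name__ == "__main__":
    # Hypothetical unique-track counts per interval, including two
    # month-end batch peaks that are normal for this workload.
    history = [1200, 1500, 9800, 1400, 10000]
    too_low = 5000
    print(replay_warning_count(history, too_low))   # warnings on normal peaks
    calibrated = candidate_threshold(history)
    print(calibrated, replay_warning_count(history, calibrated))
```

A threshold that fires on known-good history would flood the console once Live Run mode is restarted, which is exactly what calibration is meant to avoid.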

Summary

Cyber Intrusion Detection for z Systems (zCID) makes Dell PowerMax the industry’s first intrusion detection mechanism for on-array mainframe storage [1]. zCID is a layer of intelligence that detects atypical data access patterns for specified workloads, giving PowerMax customers, for the first time, insight into their z/OS workloads’ access rates. Customers can then automate the monitoring of those workloads with the goal of detecting cyber events within their mainframe storage infrastructure.

Check out https://infohub.delltechnologies.com/ for more information about zCID and Dell’s PowerMax mainframe solutions.

Author: Justin Bastin, Senior Principal Engineer

[1] Based on Dell's internal analysis comparing PowerMax 2500/8500 cyber detection for mainframe storage to mainstream mainframe competitors. August 2023.

What’s New with Data Mobility and Non-Disruptive Migration with Unisphere 10.1

Tue, 17 Oct 2023 13:52:34 -0000


Unisphere 10.1 and PowerMax OS 10.1 provide new features for users of the Data Mobility suite for array migrations on PowerMax. The Data Mobility suite for PowerMax provides customers with a comprehensive set of tools for migrating workloads between Dell VMAX and PowerMax arrays, either non-disruptively with Non-Disruptive Migration (NDM) or with minimal disruption using Minimally Disruptive Migration (MDM) workflows. Both NDM and MDM use SRDF technology to provide this functionality. For an in-depth look and walkthrough procedures, check out the white paper on the Info Hub: Dell PowerMax and VMAX: Non-Disruptive and Minimally Disruptive Migration Best Practices and Operational Guide.

With the 10.1 release of Unisphere for PowerMax and PowerMax OS 10.1, two new features provide new options for customers:

- Automatic cleanup of migrated resources on the source array

- Ability to migrate devices that are protected with SRDF Metro, replacing one leg of the SRDF Metro configuration

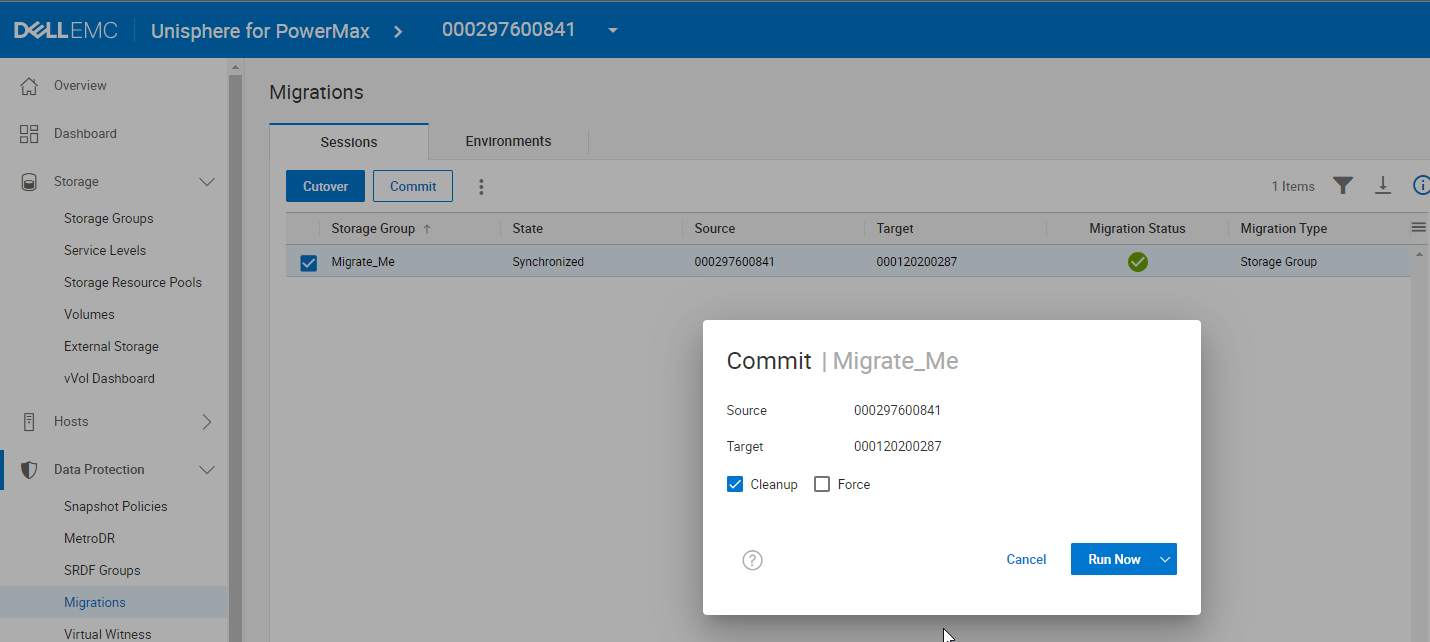

Automatic clean up of the source array

Unisphere 10.1 and Solutions Enabler 10.1 provide a new cleanup operation that can be tagged to the commit of a Non-Disruptive Migration. This functionality helps to eliminate manual cleanup tasks for already migrated components on the source array. This feature is strictly orchestration in the management software stack, so all arrays that support Non-Disruptive Migration can use it.

You can specify the cleanup as part of the commit operation either with Unisphere or with the symdm command in Solutions Enabler. The following figure shows the operation in the Unisphere Data Migration Wizard. (Note: There is no microcode requirement for this operation. It is a feature of the management software version 10.1 rather than of the array operating environment.)

If you select Cleanup and there are no gating factors, the orchestration deletes the following when the migration commit completes:

- All snapshots including clone relationships on the devices. It will also unlink any linked snapshots.

- All Storage Groups that were migrated.

- All devices that were migrated.

- All Hosts/Initiator Groups and Port Groups that were migrated if they are not used by other masking views.

- Hosts/Initiator Groups will be removed from any parents before deletion if the parent is not deleted.

Conditions that can block the commit operation with cleanup on the source array are:

- Presence of secure snapshots

- Presence of Snapshot Policies or Cloud Policy snapshots.

- SRDF relationship on the source devices to a Disaster Recovery Array

- Devices that are in multiple Storage Groups

- A migrated Storage Group that contains Gatekeeper devices

You can also specify the force option to bypass this behavior to allow as many migrated objects as possible to be deleted.
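The blocking conditions listed above can be expressed as a simple pre-check. The following is only a sketch of that reasoning: the `SourceGroupState` class and its field names are hypothetical and are not part of Unisphere, Solutions Enabler, or PyU4V. You would need to populate the flags from your own inventory data before relying on the result.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SourceGroupState:
    """Hypothetical summary of one migrated storage group on the source array."""
    has_secure_snapshots: bool = False
    has_policy_snapshots: bool = False        # Snapshot Policies or Cloud Policy snapshots
    has_dr_srdf: bool = False                 # SRDF relationship to a disaster recovery array
    devices_in_multiple_groups: bool = False
    contains_gatekeepers: bool = False


def cleanup_blockers(state: SourceGroupState) -> List[str]:
    """Return the reasons a commit with cleanup would be blocked (empty if none)."""
    checks = [
        (state.has_secure_snapshots, "secure snapshots present"),
        (state.has_policy_snapshots, "snapshot policy or cloud policy snapshots present"),
        (state.has_dr_srdf, "SRDF relationship to a disaster recovery array"),
        (state.devices_in_multiple_groups, "devices are in multiple storage groups"),
        (state.contains_gatekeepers, "gatekeeper devices in the storage group"),
    ]
    return [reason for flagged, reason in checks if flagged]


if __name__ == "__main__":
    state = SourceGroupState(has_secure_snapshots=True, contains_gatekeepers=True)
    for reason in cleanup_blockers(state):
        print("Blocked:", reason)
```

An empty list suggests that none of the documented gating factors apply; it does not replace the validation that the commit operation itself performs.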

The cleanup operation is intended to reduce any remaining manual steps that have been present following successful migrations of applications between arrays, freeing up resources for reuse and enabling maximum flexibility for workloads running on PowerMax arrays.

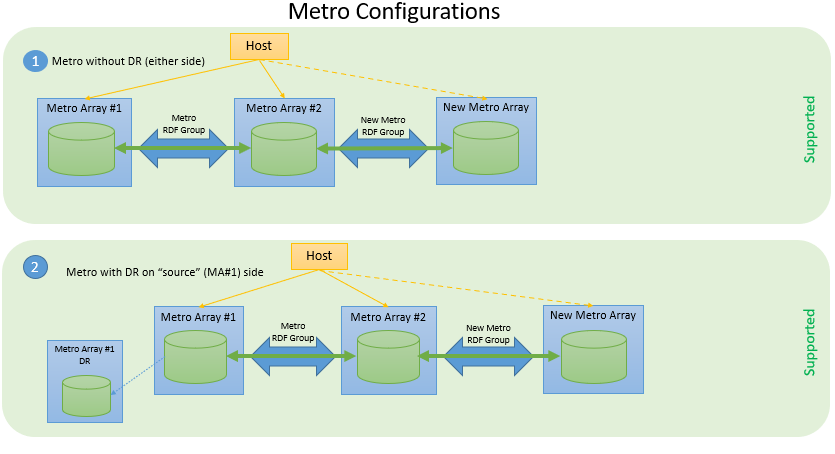

Migrating SRDF Metro Protected Devices with NDM

The second new feature removes a restriction on Migrating SRDF Devices. With PowerMax OS 10.1 and Management Software version 10.1 it is now possible for PowerMax storage administrators to migrate devices that are already protected with SRDF Metro.

The workflow for this operation is essentially the same as for a standard migration with Metro-based NDM, as described in the previous section. However, because key changes in the microcode on the target array make this feature possible, it is critical to ensure before migration that the source and target arrays are running microcode that supports the feature. Any PowerMax 8500/2500 or higher model array involved in the migration must be running PowerMax OS 10.1 (6079.225) or higher. Both source arrays (the existing Metro arrays) must be on 5978.711.711 at a minimum, plus ePack. See the SRDF and NDM Interfamily Connectivity Information Guide for details on the ePack and the latest compatibility information.
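If you track array code levels in a script, a dotted-level comparison like the following could flag arrays that fall below the minimums quoted above. This is a minimal sketch: the function names are hypothetical, the code levels are supplied by you as plain strings, and the check cannot tell whether the required ePack is installed.

```python
from typing import Tuple

# Minimum levels quoted in the text above.
MIN_TARGET_LEVEL = "6079.225"       # PowerMax 8500/2500 or higher
MIN_SOURCE_LEVEL = "5978.711.711"   # existing Metro arrays (ePack also required)


def parse_code_level(level: str) -> Tuple[int, ...]:
    """Split a dotted code level such as '5978.711.711' into integers."""
    return tuple(int(part) for part in level.strip().split("."))


def meets_minimum(level: str, minimum: str) -> bool:
    """Compare two dotted code levels, padding the shorter one with zeros."""
    have, need = parse_code_level(level), parse_code_level(minimum)
    width = max(len(have), len(need))
    have += (0,) * (width - len(have))
    need += (0,) * (width - len(need))
    return have >= need


if __name__ == "__main__":
    print(meets_minimum("5978.711.711", MIN_SOURCE_LEVEL))
    print(meets_minimum("6079.175.0042", MIN_TARGET_LEVEL))
```

Treat the result as a first filter only, and confirm supportability against the connectivity guide.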

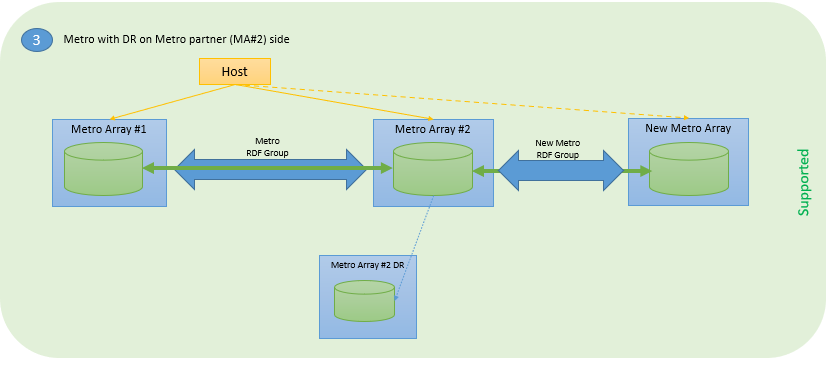

The following figure shows three supported configurations in which source devices are protected with SRDF/Metro.

Figure 1. Supported SRDF Metro configurations for application mobility with NDM

The new mobility feature is built on existing NDM and SRDF technologies, using a workflow similar to that for NDM, the same support for easily recovering failed operations, and the same support for easily canceling an ongoing migration.

- Familiar NDM commands: Create, Commit, Cancel, List with SG based unit of migration

- Existing DR will be maintained through migration without interruption

- Supports Metro Sessions in either Witness or Bias mode

- Metro sessions can contain any combination of FBA and IBM i D910 devices

- SRDF/Metro License is required on the target array because SRDF Metro will continue to run following the migration

- iSCSI connectivity is not supported

If you are migrating a Metro Session using a witness, you must configure the witnesses on the target array before performing migrations. Both physical and virtual witnesses are supported on the existing and the new Metro Sessions and the type of witness between the two can be different.

Both of these new features add to the already rich set of migration options and are a direct result of customer enhancement requests. This new functionality will help our PowerMax customers with migration operations and increase productivity and flexibility by reducing cleanup operations and by providing the ability to migrate devices with metro protection.

Author: Paul Martin

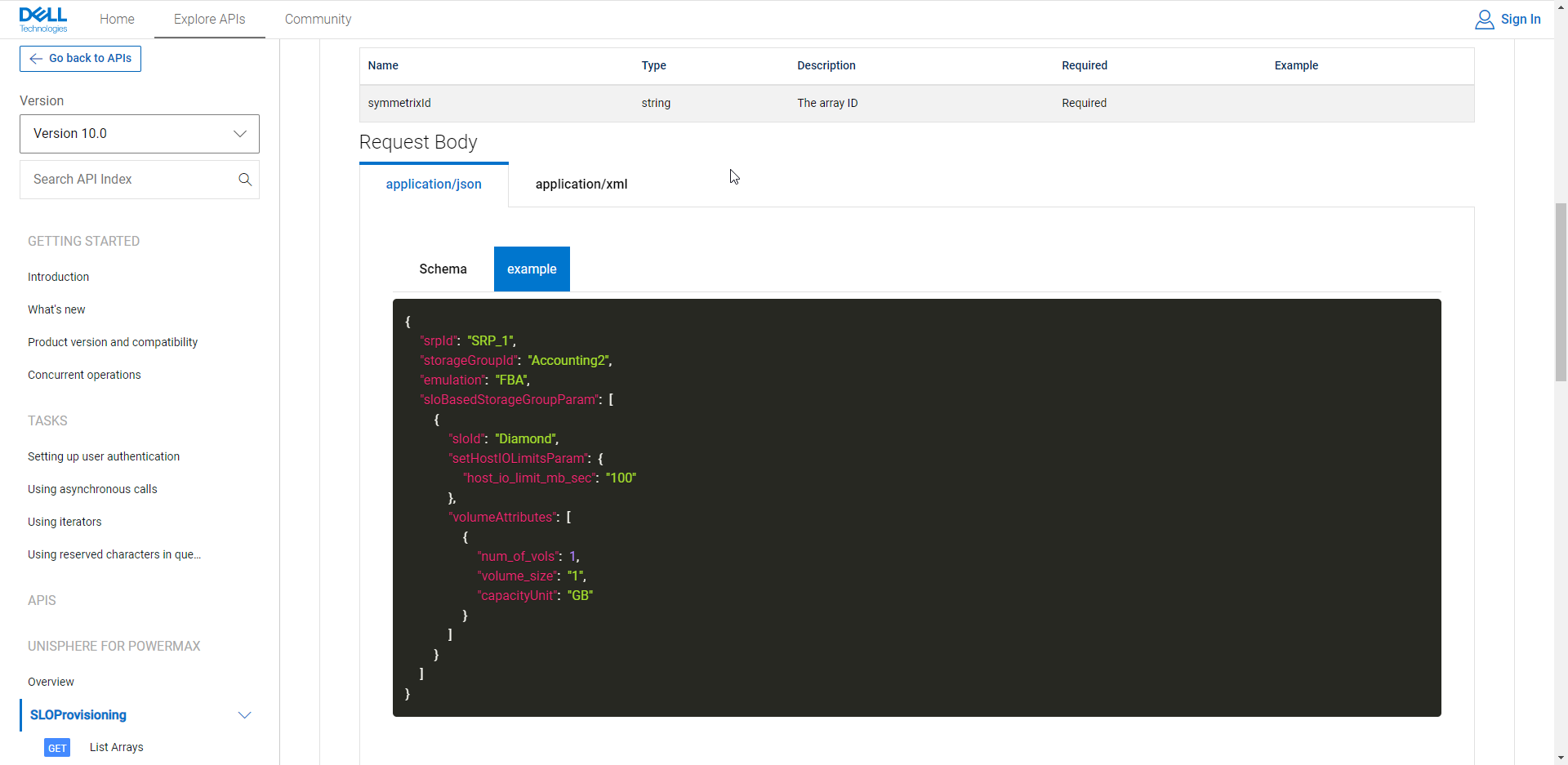

RESTing on our Laurels - What’s New with the PowerMax API and Unisphere 10.1

Tue, 17 Oct 2023 13:12:28 -0000


Unisphere 10.1 has just been released! Although the internal code name was Laurel, we are doing a lot in the API, so we definitely haven’t been RESTing on our laurels!

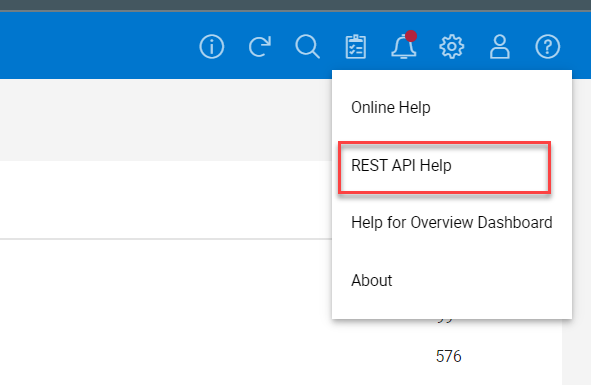

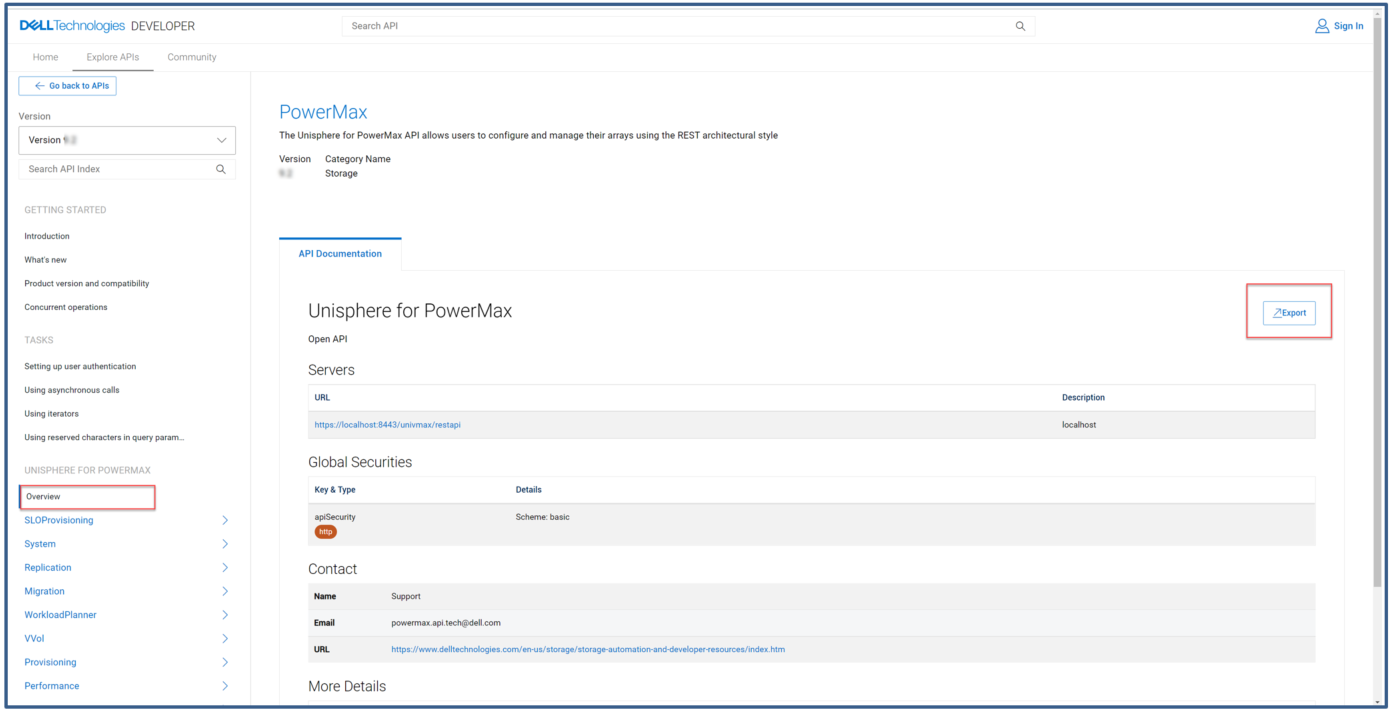

With this release the Unisphere for PowerMax team focused on what could be done better in the PowerMax API, to make things easier for developers and to reduce the amount of code people must maintain to work with the PowerMax API. Personally, I think they have knocked it out of the park with some new features. These features lay the groundwork for more of the same in the future. As always, there is a full change log for the API published along with updated OpenAPI documents available on https://developer.dell.com. In this blog I provide my own take and highlight some areas that I think will help you as a customer.

Let’s start with the traditional Unisphere for PowerMax API. With this new version of Unisphere there is a new version of the API and simpler version referencing throughout the API. For example, the following GET version API call returns the api_version (in this case, 101), and the currently supported API versions with this release (101, 100, and 92). As always, the previous two versions are supported. Here, the supported_api_versions key takes any guesswork out of the equation. If you are using PyU4V, a new version (10.1) is available, which supports all of the new functionality mentioned here.

https://Unisphere:8443/univmax/restapi/version (GET)

{ "version": "T10.1.0.468", "api_version": "101", "supported_api_versions": [ "101", "100", "92" ] }
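A script can use the supported_api_versions key to decide which version prefix to put in its URIs. The following is a minimal sketch that works on the response body shown above. The `choose_api_version` helper is hypothetical, and fetching the response from Unisphere is left to whatever HTTP client you already use.

```python
import json
from typing import Iterable


def choose_api_version(version_response: dict, client_versions: Iterable[str]) -> str:
    """Pick the highest API version that both the server and this client support."""
    supported = set(version_response["supported_api_versions"])
    usable = [version for version in client_versions if version in supported]
    if not usable:
        raise ValueError("No API version in common with the Unisphere server")
    return max(usable, key=int)


if __name__ == "__main__":
    # Response body copied from the GET version call above.
    sample = json.loads(
        '{"version": "T10.1.0.468", "api_version": "101", '
        '"supported_api_versions": ["101", "100", "92"]}'
    )
    # A client written against the 100 and 92 endpoints.
    print(choose_api_version(sample, ["100", "92", "91"]))  # prints 100
```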

I’ll break the other changes down by functional resource so you can skip any that you’re not using:

- Serviceability API calls

- Replication Call Enhancements

- Sloprovisioning Call Enhancements

- System Call Enhancements

- Performance API Call Enhancements

- The All New Enhanced PowerMax API – Bulk API calls

Serviceability API calls

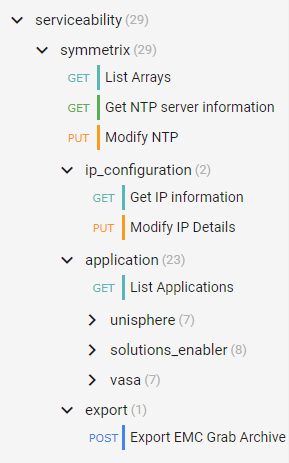

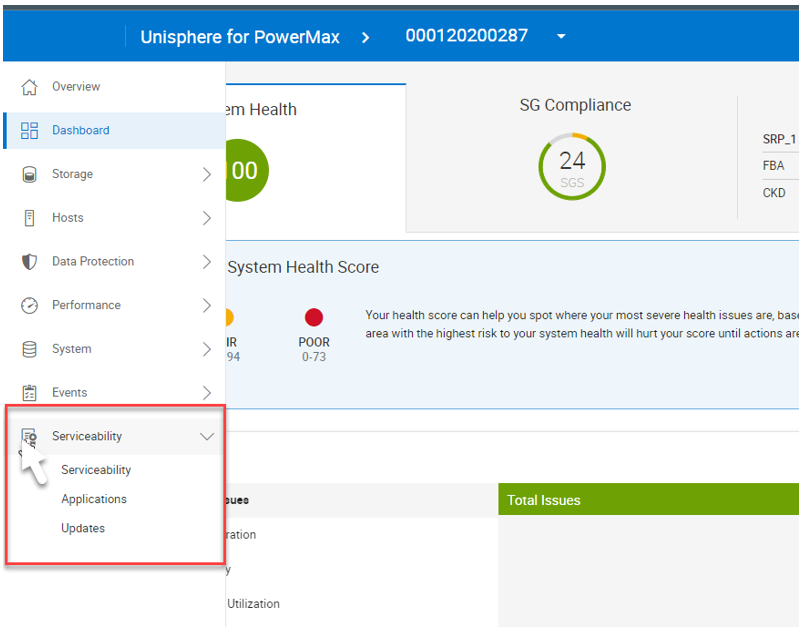

For embedded Unisphere for PowerMax users, when the system is updated to the latest version of Unisphere, a new Serviceability API Resource becomes available.

The new serviceability API calls (as shown in the following figure) give control over the embedded Unisphere, providing access to Solutions Enabler settings and Unisphere settings that up until now have only been accessible in the UI, such as:

- Setting up the nethosts file for client/server access from CLI hosts running Solutions Enabler

- Host based access control

- Certificate Management of the Solutions Enabler Guest OS

- And more

Here’s the tree of the Serviceability API resource:

Note: When executing API calls to update the Unisphere application, the server will restart as a result of these changes. You must wait for these to complete before you can issue more API calls. Also, as a reminder, if you are changing the IP address of the Unisphere server you must update your API connection for future calls.

Replication call enhancements

Good news for customers using snapshots and snapshot policies. New replication calls and keys will make the management workflows easier for anyone automating snapshot control and using policies.

An updated API call adds keys for directly associated policies vs inherited policies:

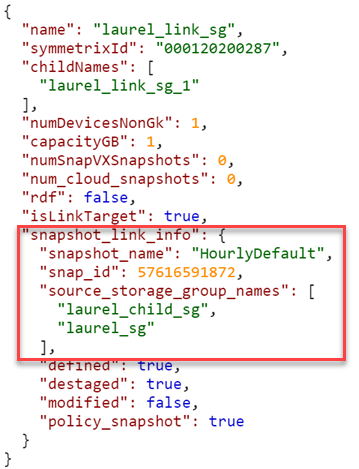

101/replication/symmetrix/{symmetrixId}/storagegroup/{storageGroupId} (GET)

In the API, identifying and tracking back linked snapshots has been time consuming in the past. Based on valued customer feedback, our product management and developers have implemented key changes that will help.

The ability to list storage groups that are linked targets has been there for a while, with query parameters in the following GET call:

101/replication/symmetrix/{symmetrixId}/storagegroup?is_link_target=true

However, finding out which snapshot was linked, and which source storage group owned that snapshot, was a challenge. To make this easier, new keys now appear:

101/replication/symmetrix/{symmetrixId}/storagegroup/{storageGroupId} (GET)

As you can see, the 10.1 API call returns a new key (snapshot_link_info) that includes all the information you need to be able to manipulate the linked snapshots, without having to engineer any trickery yourself.

Sloprovisioning call enhancements

Probably the single biggest change to the provisioning API calls is the ability to validate a request and return a Bad Request when the performance_impact_validation_option is set for the sloprovisioning /maskingview POST call. This gives the developer a way to check, at the time of provisioning, whether a workload is suited to run on the supplied PowerMax serial number. This call uses performance data from the Workload Planner database and can help create enhanced provisioning workloads with performance and zoning in mind.

The payload can now accept a new key (performance_impact_validation_option), which can be set to:

- IfRecommended – Run performance impact tests. If no performance capacity threshold is breached, run the requested configuration change.

- Preview – Run performance impact tests and return performance impact scores for the requested operation. Return the input object (with generated fields, if applicable) for relevant follow up API calls.

In addition, the payload has a “portGroupSelection” key that can either automatically create a new port group or use an existing port group. If automatic selection is used, selection can be either UtilizationBased or ZoningBased. (Note: Automatic port selection is only available for Fibre Channel (SCSI FC).)

If you choose to use an existing port group, the Workload Planner algorithms evaluate the port groups that are already configured on the system. The algorithm selects the least loaded port group for the provisioning and ignores zoning. You can restrict selection to a supplied list of port groups using the API keys. See the documentation for details of the various keys.

Note: When using the performance impact selection, you can’t specify an existing storage group because it’s assumed that they are already provisioned. Full documentation for the API call mentioned is here with the supported parameters.

The provisioning API calls include other additions:

- Update POST for Create Masking View, to allow the specifying of a Starting LUN Address. This was a customer enhancement to make it easier to configure boot from SAN.

- Update PUT for Modify SG, to allow the specifying of a Starting LUN Address when adding volumes.

- Update PUT for Modify SG, to allow terminating snapshots associated with volumes being removed from a SG in a single call. This is very useful because it prevents stranded snapshots from consuming space on the array.

System call enhancements

We have added system calls to enable the refresh of Unisphere. This is useful to customers who are working in a distributed environment and who want to ensure that Unisphere data is up to the second with the latest information. This should be the case, but if changes were made on a remote system, it could take a minute or so before they are reflected in the object model. The new refresh call has some guardrails, in that you can only run it once every five minutes. If you try to execute it too soon, the call returns status code 429 with a message that tells you how long to wait before retrying:

“Bad or unexpected response from the storage volume backend API: Error POST None resource. The status code received is 429 and the message is {'retry_after_ms': 285417}.”

The documentation for this API call (/101/system/symmetrix/{array_serial}/refresh) is here.
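Automation that calls the refresh endpoint may want to honor the retry_after_ms value instead of failing. The following is a minimal sketch of that pattern. Both helpers are hypothetical, the `post_refresh` callable stands in for your own HTTP call and is assumed to return a status code and a parsed message body, and the five-minute fallback is taken from the guardrail described above.

```python
import time
from typing import Callable, Tuple


def wait_seconds(body: dict, default: float = 300.0) -> float:
    """Convert retry_after_ms from a 429 message into seconds to wait."""
    retry_ms = body.get("retry_after_ms")
    if retry_ms is None:
        return default  # fall back to the five-minute guardrail
    return max(float(retry_ms) / 1000.0, 0.0)


def refresh_with_retry(post_refresh: Callable[[], Tuple[int, dict]],
                       sleep: Callable[[float], None] = time.sleep,
                       max_attempts: int = 3) -> int:
    """Call post_refresh until it stops returning 429 or attempts run out."""
    status = 429
    for attempt in range(max_attempts):
        status, body = post_refresh()
        if status != 429:
            break
        if attempt < max_attempts - 1:
            sleep(wait_seconds(body))
    return status


if __name__ == "__main__":
    # Message body taken from the 429 example above.
    print(wait_seconds({"retry_after_ms": 285417}))
```

Passing the sleep function as a parameter keeps the retry logic easy to exercise in a unit test without actually waiting.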

Information about REST API resources and server utilization was previously only available in the user interface. It made sense to make this information available through the REST API because the information pertains to REST. A new GET call (/101/system/management_server_resources) now provides this information; documentation is here.

Along the same lines, we have also added the following calls:

- Change Log Level - /101/system/logging (PUT/GET)

- Configure/Check SNMP Destination - /101/system/snmp (GET/POST/PUT/DELETE)

- Server Cert management - /101/system/snmp/server_cert (POST)

- Configure SNMPv3 with TLS - /101/system/snmp/v3_tls (POST)

- Manage PowerMax Licensing via API

Performance API call enhancements

There is only one minor change in the traditional API for performance with this release. We have added the ability to register a list of storage groups for real-time performance and to bring file metrics for SDNAS into Unisphere for PowerMax for monitoring. The POST call /performance/Array/register has been updated to take two new keys, selectedSGs and file.

The new payload would look something like this:

{

"symmetrixId": "01234568779",

"selectedSGs": "sg1,sg2,sg3",

"diagnostic": "true",

"realtime": "true",

"file": "true"

}

There are some additional changes for mainframe and also Workload Planner which are covered in the changelog documentation. I just want to highlight here what I think most customers will be interested in and give some background.
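The registration payload shown above could be assembled with a small helper so that the storage group list is always joined into the comma-separated string the example uses. This is a sketch only: the function name is hypothetical, and sending the string "false" to switch an option off is an assumption, because the example payload only shows "true".

```python
from typing import Iterable


def build_register_payload(array_id: str,
                           storage_groups: Iterable[str],
                           diagnostic: bool = True,
                           realtime: bool = True,
                           file_metrics: bool = True) -> dict:
    """Build a body for the /performance/Array/register POST call."""

    def flag(value: bool) -> str:
        # The example payload uses string values rather than JSON booleans.
        # "false" is assumed here; only "true" appears in the example.
        return "true" if value else "false"

    return {
        "symmetrixId": array_id,
        "selectedSGs": ",".join(storage_groups),
        "diagnostic": flag(diagnostic),
        "realtime": flag(realtime),
        "file": flag(file_metrics),
    }


if __name__ == "__main__":
    print(build_register_payload("01234568779", ["sg1", "sg2", "sg3"]))
```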

The all new enhanced PowerMax API – Bulk API calls

I’ve been looking forward to being able to announce some very nice enhancements to the PowerMax API. The API provides new calls with resources accessible under a different base URI. Rather than https://{server_ip}:{port}/univmax/restapi/, the new API calls are under https://{server_ip}:{port}/univmax/rest/v1.

The difference between the two entry points will become apparent as you get used to these calls and the versioning will be arguably simpler going forward. Documentation is here.

- GET - /systems/{id}/volumes

- GET - /systems/{id}/storage-groups

- GET - /systems/{id}/performance-categories

- GET - /systems/{id}/performance-categories/{id}

For complete details about these endpoints, see:

- The API documentation marked "POWERMAX - ENHANCED ENDPOINTS"

- The Unisphere for PowerMax 10.1 REST API Changelog

“Ok” I hear you say, “so what’s the big deal?”. Well, these endpoints behave differently from our existing API calls. They provide more information faster so that developers don’t have to maintain a lot of code to get the information they need.

The volumes GET call returns details about every volume on a system in a single call. There is no pagination required and you don’t need to worry about iterators or have to deal with anything fancy. The API just gives you back one big JSON response with all the information you need. This eliminates the need to loop on calls and will dramatically cut down the number of API calls you need to issue to the server.

The same is true for the storage groups calls. With a single call, you can get information on all storage groups on the system, their attributes, and which volumes are in those groups.

But wait, there’s more…

We have implemented a modified form of filtering of the response. You can now filter on all attributes and nested attributes that are returned in the response:

../storage-groups?filter=cap_gb eq 100

../storage-groups?filter=volumes.wwn like 12345678

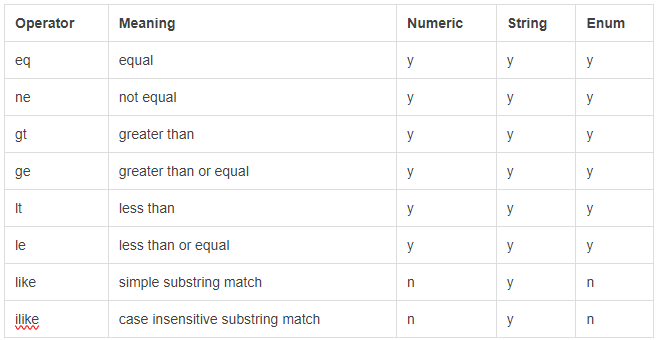

The available filter options are:

The endpoints require you to “select” the desired attributes instead of returning everything up front. By doing so, you can control how server-intensive each request is by omitting or including only the relevant info:


…/storage-groups?select=cap_gb,uuid,type,num_of_volumes

This returns only the cap_gb, uuid, type, and num_of_volumes for each storage group.

This also applies to nested attributes (a full stop is used to define child attributes):

…/storage-groups?select=volumes.wwn,volumes.effective_wwn,snapshots.timestamp_ms

If no attributes are defined in the “select”, only the default values, such as “id”, are returned. The list of attributes that can be selected is available in the documentation here.
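When calling these endpoints directly, the filter and select values need to be URL-encoded because filter expressions contain spaces. The following sketch builds such a URL with the Python standard library, using the base URI and query parameters described above. The `enhanced_url` helper is hypothetical, and it handles a single filter expression only, because the exact syntax for combining several filters is not covered here.

```python
from typing import Optional, Sequence
from urllib.parse import quote, urlencode


def enhanced_url(server: str, port: int, system_id: str, resource: str,
                 select: Optional[Sequence[str]] = None,
                 filter_expr: Optional[str] = None) -> str:
    """Build a URL for an enhanced endpoint such as storage-groups or volumes."""
    url = f"https://{server}:{port}/univmax/rest/v1/systems/{system_id}/{resource}"
    params = {}
    if filter_expr:
        params["filter"] = filter_expr
    if select:
        params["select"] = ",".join(select)
    if params:
        # Encode spaces as %20 and keep the commas in the select list readable.
        url += "?" + urlencode(params, quote_via=quote, safe=",")
    return url


if __name__ == "__main__":
    print(enhanced_url("unisphere", 8443, "000120200287", "storage-groups",
                       select=["cap_gb", "uuid", "type", "num_of_volumes"],
                       filter_expr="cap_gb eq 100"))
```

The server name and array serial number in the usage example are placeholders.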

Functions are also available for this in PyU4V. Currently multiple filter options are combined in an AND pattern and select can be applied to the data to reduce the output to only what you are interested in. The following is an example of this functionality as executed through PyU4V 10.1:

With this new functionality you can get all details for your volumes and storage groups in two calls per array, reduced from tens of thousands of calls on larger systems.

Enhanced performance metrics with the new Bulk API

In addition to the new GET calls for Volumes and Storage groups, there are new calls for the performance metrics.

The /systems/{id}/performance-categories (GET) call returns a list of performance categories valid for the arrays you are querying.

When you query each category, the API returns the last interval of diagnostic performance data using the new /systems/{id}/performance-categories/{id} GET call. This returns all key performance indicator metrics at the diagnostic level for the category and all instances in that category for the last five minutes.

These new enhanced API calls reduce the amount of code that developers need to write and maintain for performance data collection in dashboard-type tools and data collectors. The API call is intentionally designed to provide only the latest information.

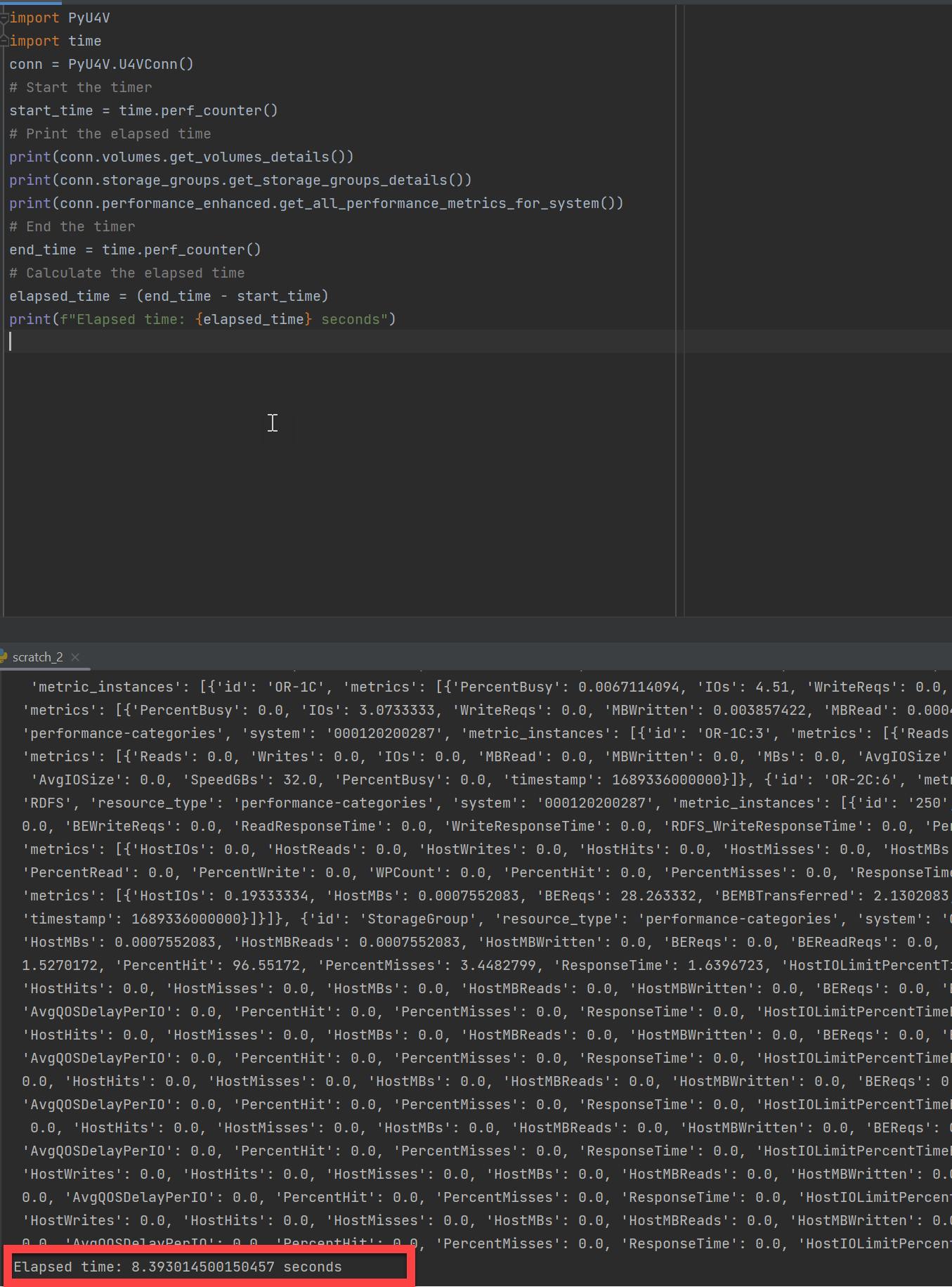

The following example shows a PyU4V script executing a few lines of code to gather all performance stats for every category on every component of a PowerMax array. It’s also gathering details on all volumes and all storage groups using the new Enhanced API calls. On my system, the code takes about eight seconds on average to gather all that information.

To wrap up

Hopefully this blog has provided some insight into the latest changes in the PowerMax REST API. As you can see, our developers have not been RESTing on their laurels!

Watch this space for more about the PowerMax API, and if there are topics that are of interest to you and you would like to learn more, send a request to @rawstorage on Twitter and I’ll try to accommodate your request!

Be sure to provide feedback through your account team for any enhancement requests or start a discussion on https://www.dell.com/community/en/topics/automation. Our product managers will take your requests and bring them to the development team.

Author: Paul Martin

Unreducible Capacity Alerting for PowerMax for Possibly Indicating a Ransomware Attack

Thu, 31 Aug 2023 21:00:21 -0000


With the release of PowerMaxOS 6079.175.0042 and Unisphere 10.0.1 (March 2023), you can now get anomaly detection for your PowerMax storage environment. This blog explores the various ways you can set up capacity anomaly detection for your PowerMax storage environments that could possibly indicate a ransomware attack occurring on your PowerMax.

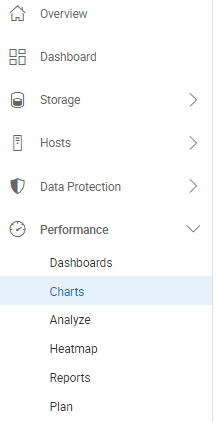

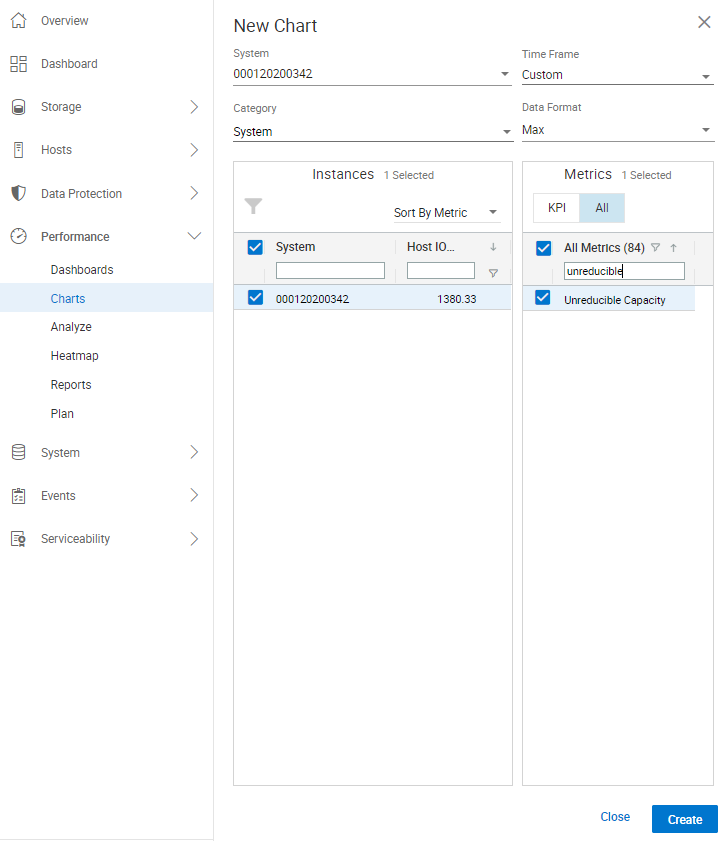

Unisphere 10.0.1 provides a new performance metric called Unreducible Capacity, which reports on the unreducible capacity in the storage array. With data reduction capabilities enabled by default, this metric monitors your unreducible capacity for a system and Storage Group. To chart the unreducible capacity within a PowerMax environment:

- Log in to Unisphere 10.0.1.

- Select the PowerMax for which you would like to chart unreducible capacity (this assumes that the PowerMax is leveraging DRR capabilities).

- On the left-hand side of Unisphere, expand the Performance section and select Charts (example shown here).

- Locate the Time Frame section and select a time frame. Note that the ‘Unreducible Capacity’ metric is selectable with all ‘Diagnostic’ and ‘Historical’ time frames, but it is not selectable with the Realtime time frame.

- Under Category, Unreducible Capacity is eligible for System and Storage Group (which is under the System & Hosts category). Charting unreducible capacity has different benefits at each level:

- For System – Gives customers an idea if a massive ransomware attack is occurring across the whole system.

- For Storage Groups – Gives customers a more surgical approach because Storage Groups are typically created on an application-by-application basis. Therefore, customers can chart the unreducible capacity per Storage Group and give an indication if a ransomware attack may be occurring on the application.

- Change Data Format from the default setting of Avg (for average) to Max.

- If charting the System, check under Instances for the PowerMax array serial number you want to chart.

- Under Metric, select All for all the available metrics.

- In the All Metrics field, enter unreducible and Unreducible Capacity appears. Select Unreducible Capacity, and click Create at the bottom of the screen.

Here is how the screen now appears:

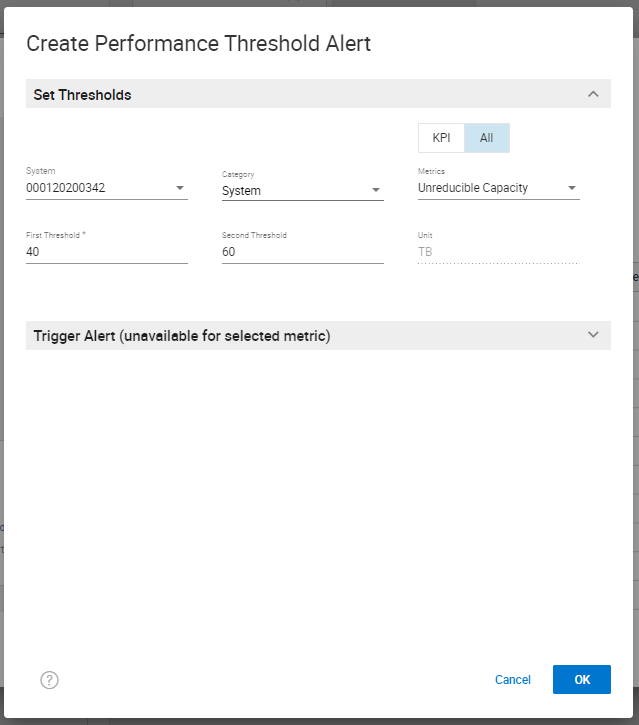

This figure shows how customers can chart the Unreducible Capacity metric in Unisphere. Customers can also set a performance threshold and alert in the Settings section of Unisphere. After monitoring the System and Storage Group to determine appropriate thresholds, customers can set an alert so that, if the thresholds for Unreducible Capacity are exceeded, Unisphere generates an alert and can also notify stakeholders by email, SNMP, or both. To set an alert for Unreducible Capacity:

- Log in to Unisphere.

- Navigate to and select the gear icon in the top right-hand corner of Unisphere. The gear icon is the Settings section for Unisphere for this PowerMax instance.

- On the left-hand side, navigate to the Alerts section and expand it.

- Select the Performance Thresholds and Alerts section. This opens a panel to configure performance thresholds and alerts within Unisphere.

- By default, the category System is selected. To create a threshold for Unreducible Capacity for System:

- Select the Create button.

- On the top right-hand side, select the All setting to view all the Performance Metrics available within Unisphere for the category System.

- The metrics are listed in alphabetical order, so scroll down to the Unreducible Capacity metric and select it.

- Enter a number for First Threshold and a higher number for Second Threshold.

- On the bottom right-hand side, click OK to create a Performance Threshold alert for unreducible capacity being measured against the PowerMax system.

Here is how the information appears with these steps completed:

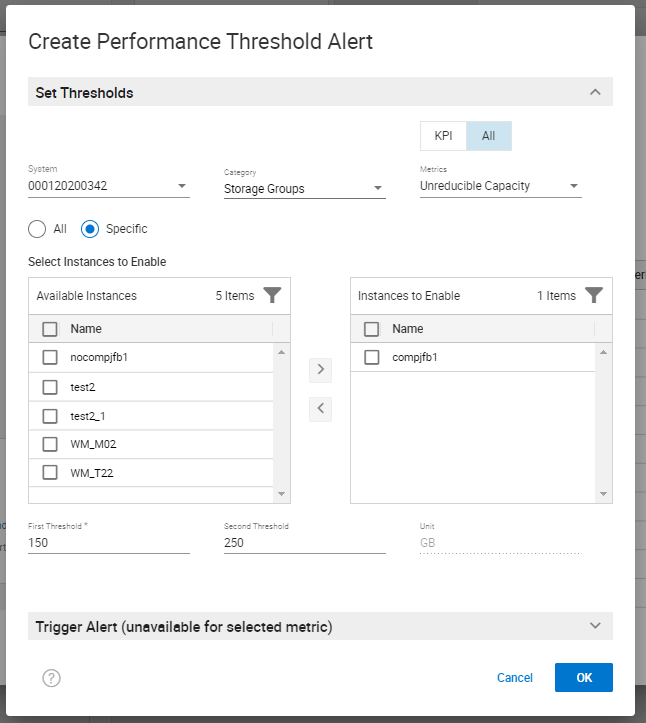

You can change the Category shown in this screenshot from System to Storage Groups to set a performance threshold alert for an individual storage group. Here is a screenshot of creating a performance threshold and alert for Storage Groups (Note: I selected the radio button Specific to pick specific Storage Groups that I want to monitor and alert on).

If you have SRS enabled on your PowerMax array, you can onboard CloudIQ through Unisphere. CloudIQ is a monitoring and reporting tool for all supported Dell assets that feed data into CloudIQ. With PowerMaxOS 6079.175.0042, you can use CloudIQ for Capacity Anomaly Detection on a PowerMax storage array.

For more information about the technologies mentioned in this blog, see the following resources:

- For Unisphere information, review the Dell Technologies Support page

- PowerMaxOS Release Notes

Authors: Justin Bastin (Senior Principal Engineer) and Richard Pace (Principal Engineering Technologist)

Local Replication with the PowerMax REST API, Working with SnapVX Snapshots

Tue, 13 Jun 2023 16:02:04 -0000

PowerMax arrays provide feature-rich local replication options in SnapVX and clone technologies. The PowerMax REST API supports all of these features, and Dell Technologies provides pre-written Python functions as part of PyU4V, a Python package for managing the PowerMax REST API, as well as Ansible modules that support SnapVX. In this blog I provide examples in native REST calls and in Python.

If you are not familiar with REST, you’ll enjoy reading one or both of these blogs:

- https://infohub.delltechnologies.com/p/getting-started-with-rest-api-1

- https://infohub.delltechnologies.com/p/getting-started-with-the-powermax-rest-api-time-for-a-rest

Full API documentation is available on the developer hub here. All REST endpoints for the SnapVX and clone operations are under the replication resource of the API. This means that URI addresses are prefixed with the base URL https://{{base_url}}/{{api_version}}/replication. In addition to SnapVX and clone functionality, it’s also possible to assign snapshot policies to storage groups at the time of creation or when modifying using sloprovisioning resources. Examples appear further ahead in this blog.

Managing SnapVX snapshots with the REST API

SnapVX provides PowerMax administrators with the ability to take point in time snapshots across a collection of devices to create a consistent point in time image of the data in a very space efficient manner. The user can manipulate the snapshots for restore purposes or link them to volumes and present them to hosts using masking views to provide a copy of the snapshot image.

For details about SnapVX functionality, see the white paper Dell PowerMax and VMAX All Flash: TimeFinder SnapVX Local Replication. The PowerMax REST API provides all the snapshot management functionality of Unisphere for PowerMax and, just like in the UI, management is orchestrated with the storage group. If you need to manipulate a snapshot for a single volume, you can use the CLI tools or add the volume to its own storage group.

Some points to remember with SnapVX snapshots:

- A SnapVX snapshot is never altered by host access and therefore is always available to the administrator for restore purposes until it is terminated by user action or it expires based on the time to live value (if set).

- Snapshots will only automatically expire when they are unlinked from all target storage groups.

- Snapshots can be set secure at or after creation time. Once set secure, the user cannot terminate the snapshot until the time to live has passed or modify the security of that snapshot except to extend secure time to live.

- When linking snapshots with the API or UI, if the target storage group doesn’t already exist the API will automatically create the storage group with the correct number of devices and size, matching the source device configurations.

- If you add devices to a source storage group, you must add devices to target storage groups. This is not automatic and needs to be planned for. Naming conventions can help simplify this process.

- Although SnapVX snapshots present with both a generation number and snapId, if automating, snapid is preferred because this number is a static reference id.

Creating and identifying SnapVX snapshots with the REST API

Creating a snapshot is a simple process. A REST API POST call is sent to the management software, directed at the storage group to be snapped, specifying a name for the snapshot along with any optional parameters to be set (for example, you can set the snapshot to be secure or set time to live).

The following is an example POST call and payload for creating a snapshot of a storage group, with a snapshot named REST_SG_snapshot, setting a time to live of 10 days. (Values to be supplied are enclosed in {} signaling substitution required for actual values or variables.)

https://unisphereIP:8443/univmax/restapi/{{api_version}}/replication/symmetrix/{symmetrixId}/storagegroup/{storageGroupId}/snapshot (POST)

Payload

{

"snapshotName": "REST_SG_snapshot",

"timeToLive": 10

}

PyU4V has functions for complete control of SnapVX, making the API easier to consume. The following is a simple script to create a snapshot and print the return from the API.

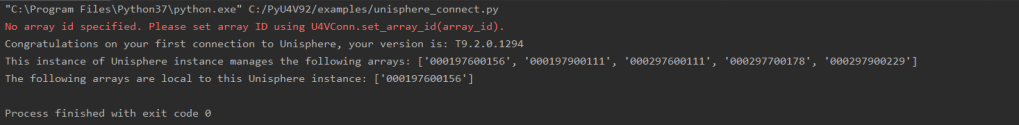

import PyU4V

conn = PyU4V.U4VConn(username='smc', password='smc',

server_ip='unisphereip', port='8443', array_id=None,

verify=False)

#create a snapshot and assign returned information to variable snap_details

snap_details = (conn.replication.create_storage_group_snapshot(

storage_group_id='snapsrc', snap_name='snap4blog', ttl=1, secure=False,

hours=True))

#Get SnapIds for all snapshots for storage group with name snap4blog

all_sg_snaps = (conn.replication.get_storage_group_snapshot_snap_id_list(

storage_group_id='snapsrc', snap_name='snap4blog'))

#print details of snapshot created above

print(snap_details)

#Print only snapid from snap_details

print(snap_details.get('snapid'))

#Print all SnapIds associated with snap4blog snapshot on snapsrc storage group

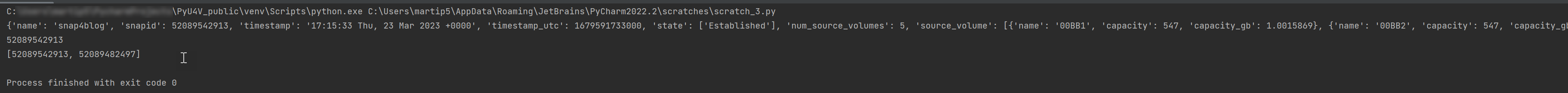

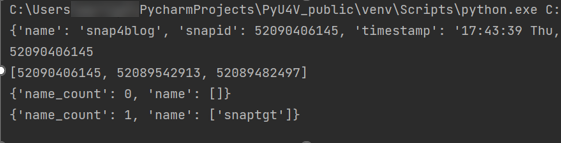

print(all_sg_snaps)The output is shown below.

- The first print statement shows the detailed information for the snapshot just created with details such as timestamp, snapid, and so on.

- The second line prints only the snapid extracted from the JSON using the key ‘snapid’.

- The third line prints the variable ‘all_sg_snaps’ showing all snapshot ids with the name snap4blog associated with the snapsrc storage group.
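If you prefer to call the REST endpoint directly rather than through PyU4V, the POST call and payload shown earlier can be assembled with the standard library. The following is a minimal sketch: the `snapshot_create_request` helper is hypothetical, the host name and identifiers in the usage example are placeholders, and authentication and sending the request are left to your HTTP client.

```python
import json
from typing import Optional, Tuple


def snapshot_create_request(unisphere: str, api_version: str, array_id: str,
                            storage_group: str, snapshot_name: str,
                            time_to_live: Optional[int] = None,
                            port: int = 8443) -> Tuple[str, str]:
    """Return the URL and JSON body for the storage group snapshot POST call."""
    url = (f"https://{unisphere}:{port}/univmax/restapi/{api_version}"
           f"/replication/symmetrix/{array_id}"
           f"/storagegroup/{storage_group}/snapshot")
    payload = {"snapshotName": snapshot_name}
    if time_to_live is not None:
        # Same key as the example payload, where 10 means 10 days.
        payload["timeToLive"] = time_to_live
    return url, json.dumps(payload)


if __name__ == "__main__":
    url, body = snapshot_create_request(
        "unisphereIP", "100", "000120200287", "snapsrc",
        "REST_SG_snapshot", time_to_live=10)
    print(url)
    print(body)
```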

To make the snapshot data available to a host, you can add some additional REST calls to link to a storage group.

The following script performs these tasks:

- Lists any storage group that has a snapshot linked to it on my test array. There are none to begin with.

- Creates a link to an existing snapshot using the snapid

- Runs the listing again. This time there is data to display showing the linked storage group.

# List any storage group that is being used as a linked target for a SnapVX snapshot
print(conn.common.get_request(
    target_uri='/100/replication/symmetrix/'
               '000120200287/storagegroup?is_link_target=True',
    resource_type=None))

# Link the snapshot named 'snap4blog' from the 'snapsrc' storage group to the
# 'snaptgt' storage group, using the snapid returned earlier. This creates the
# snaptgt storage group because it doesn't exist yet.
conn.replication.modify_storage_group_snapshot_by_snap_id(
    src_storage_grp_id='snapsrc', tgt_storage_grp_id='snaptgt',
    snap_name='snap4blog', snap_id=snap_details.get('snapid'),
    link=True)

# Print the updated list of storage groups used as snapshot targets
print(conn.common.get_request(
    target_uri='/100/replication/symmetrix/'
               '000120200287/storagegroup?is_link_target=True',
    resource_type=None))

The following is the output of the functions, showing the snaptgt group in the list after the link has been issued.

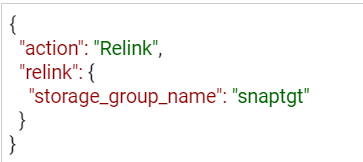

SnapVX lends a lot of flexibility when refreshing test and development environments. The ability to relink the same snapshot (essentially wiping any changes to the target copy while preserving the point-in-time image) greatly simplifies iterative testing. You can also quickly relink to a different snapshot or unlink to reuse the target volumes for another purpose. To relink a snapshot with PyU4V, change one parameter in the modify_storage_group_snapshot_by_snap_id call: instead of “link=True”, set “relink=True”.
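As a minimal sketch of that relink, the helper below wraps the same modify_storage_group_snapshot_by_snap_id call used in the link example, passing relink=True in place of link=True. The helper name relink_snapshot is hypothetical, and conn is assumed to be an existing PyU4V.U4VConn connection like the one created earlier.

```python
def relink_snapshot(conn, src_sg, tgt_sg, snap_name, snap_id):
    """Relink an existing snapshot to its target storage group.

    Hypothetical helper: `conn` is assumed to be a PyU4V.U4VConn
    connection like the one created earlier in this post.
    """
    # Same call as the link example, with relink=True instead of link=True.
    return conn.replication.modify_storage_group_snapshot_by_snap_id(
        src_storage_grp_id=src_sg,
        tgt_storage_grp_id=tgt_sg,
        snap_name=snap_name,
        snap_id=snap_id,
        relink=True)


# Example call, reusing the names from the scripts above:
# relink_snapshot(conn, 'snapsrc', 'snaptgt', 'snap4blog',
#                 snap_details.get('snapid'))
```

Keeping the relink in its own small function makes an iterative test loop easy to read: run the test, relink, run it again.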

If you are relinking directly from the API, modify the payload for the PUT call to reflect that the action is relink, as shown here:

https://UnisphereIP:8443/univmax/restapi/100/replication/symmetrix/{symmetrixId}/storagegroup/{storageGroupId}/snapshot/{snapshotId}/snapid/{snapId} (PUT)

When you no longer require your snapshots, don’t forget to terminate or unlink any linked targets to allow for automatic expiration if you’ve set a time to live. All actions use the same URI; however, the REST method will be PUT (with a modified payload) or DELETE.
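The following sketch shows one way to pick the method and build the URI for each action. The URI template is copied from the one shown above; the mapping of link, relink, and unlink to PUT and of terminate to DELETE is an assumption based on the description here, and the function name snapshot_request is hypothetical. Check the API documentation for the exact payload each PUT action expects.

```python
# Template taken from the URI shown above.
SNAP_URI = ("https://{host}:{port}/univmax/restapi/100/replication/symmetrix/"
            "{array_id}/storagegroup/{sg}/snapshot/{snapshot_id}/snapid/{snap_id}")

# Assumption: link, relink and unlink are PUT actions on this URI, and
# terminate is the DELETE. Verify against the API documentation.
PUT_ACTIONS = {"link", "relink", "unlink"}


def snapshot_request(action, host, array_id, sg, snapshot_id, snap_id,
                     port=8443):
    """Return the (HTTP method, URI) pair for a snapshot action."""
    action = action.lower()
    if action in PUT_ACTIONS:
        method = "PUT"
    elif action == "terminate":
        method = "DELETE"
    else:
        raise ValueError(f"unsupported action: {action}")
    uri = SNAP_URI.format(host=host, port=port, array_id=array_id, sg=sg,
                          snapshot_id=snapshot_id, snap_id=snap_id)
    return method, uri
```

This only assembles the request line; sending it (and building the PUT body) is left to whichever HTTP client you already use.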

It’s generally a good practice to adopt a friendly naming convention for storage groups that are being used as SnapVX targets to make them easy to identify in the UI and API output. Simply adding _TGT to the storage group name may be enough, but your naming convention can be whatever works best for your organization.

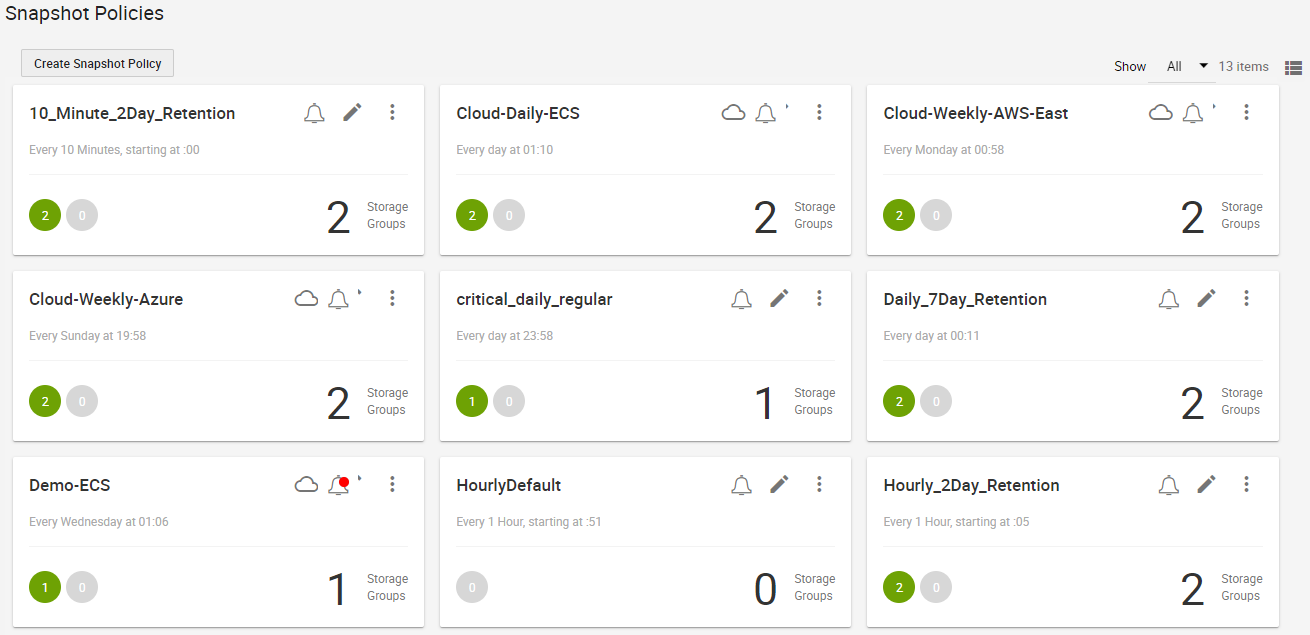

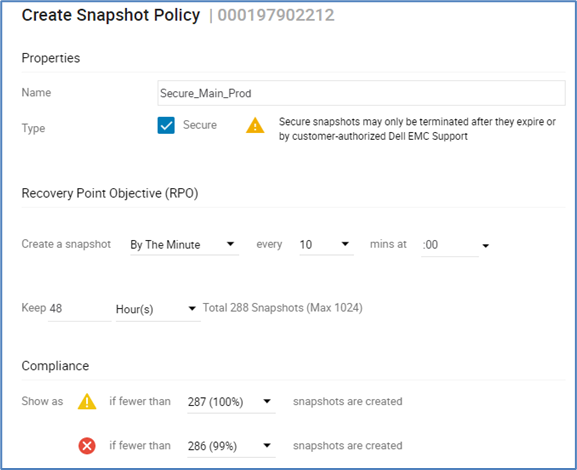

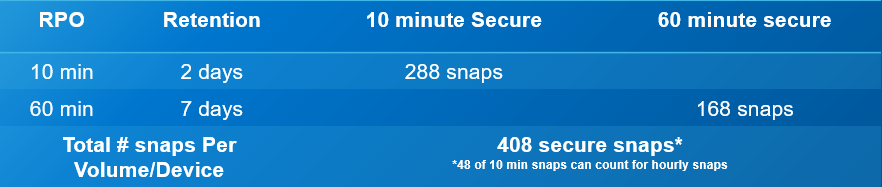

Snapshot policies

In addition to regular snapshots, SnapVX provides a mechanism for users to assign snapshot policies to storage groups. (More details about snapshot policies are described in the white paper Dell PowerMax and VMAX All Flash: TimeFinder SnapVX Local Replication.) Assigning a snapshot policy means that you will have an automated rotation and preservation of a defined number of snapshots for your storage groups with a clearly defined recovery time objective. Users don’t need to manage the scheduling -- the policy automates all of that and can be assigned when storage groups are created, or at a later time.

Associating snapshot policies with storage groups is available through the POST and PUT calls for creating and modifying storage groups. A separate set of API calls for managing policies directly is described in the Snapshot Policy section of the API documentation.

For PyU4V in Python, the code only requires an extra variable on the create and modify functions. The following example creates a snapshot policy (example_policy) that takes a snapshot every 12 minutes, retaining a maximum of 24 snapshots. The second part creates a storage group associated with this policy. Note that there are default policies already created on all arrays that users can use without having to create anything new.

import PyU4V

api = PyU4V.U4VConn(username='smc', password='smc',
                    server_ip='unisphereip', verify=None,
                    array_id='000297600841')

# Create a policy that takes a snapshot every 12 minutes and keeps 24 of them
api.snapshot_policy.create_snapshot_policy(
    snapshot_policy_name='example_policy', interval="12 Minutes",
    local_snapshot_policy_snapshot_count=24)

# Create a storage group that is associated with the new policy
api.provisioning.create_non_empty_storage_group(
    srp_id="SRP_1", storage_group_id="blog_example_sg", num_vols=1,
    vol_size=1, cap_unit="GB", snapshot_policy_ids=["example_policy"],
    service_level="Diamond", workload=None)
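To get a feel for what a policy like this retains, here is a small worked calculation of how far back the oldest snapshot reaches with a 12-minute interval and 24 snapshots. It assumes the policy drops the oldest snapshot each time a new one pushes the total past the count; the function name retention_window is hypothetical.

```python
from datetime import timedelta


def retention_window(interval, count):
    """Return (min_age, max_age) of the oldest retained snapshot.

    Assumes the oldest snapshot is dropped whenever a new one pushes the
    total past `count`.
    """
    # Right after a new snapshot, the oldest is (count - 1) intervals old.
    min_age = interval * (count - 1)
    # Just before the next snapshot, it has aged by almost one more interval.
    max_age = interval * count
    return min_age, max_age


low, high = retention_window(timedelta(minutes=12), 24)
print(low, high)  # 4:36:00 4:48:00
```

Under that assumption, the example policy always lets you go back somewhere between 4 hours 36 minutes and 4 hours 48 minutes.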

Hopefully this blog has given you the basics of working with SnapVX snapshots using REST and Python!

For more information and a list of all functions for SnapVX, see the documentation for PyU4V on readthedocs.io and the REST API documentation on the Dell developer portal here.

In upcoming blogs, I will also be looking at using clone technology with the REST API, and when this is a good alternative to SnapVX depending on your needs. Have fun automating and if you have questions, you can always start a discussion on the PyU4V GitHub -- we are always willing to help!

Author: Paul Martin

Local Replication with the PowerMax REST API, Working with Clones

Wed, 31 May 2023 19:48:07 -0000


PowerMax arrays have several local replication features for administrators to use, depending on their needs. Happily, the PowerMax REST API supports all of these. Dell Technologies also provides them in pre-written Python functions as part of PyU4V (a Python package for managing the PowerMax REST API) and as Ansible modules that support symclone functions. In this blog I provide examples and some background info about clones.

If you are not familiar with REST, you’ll enjoy reading one or both of these articles:

- https://infohub.delltechnologies.com/p/getting-started-with-rest-api-1/

- https://infohub.delltechnologies.com/p/getting-started-with-the-powermax-rest-api-time-for-a-rest/

Full API documentation is available on the developer hub here. Clone operations are under the replication resource of the API, with all endpoints prefixed https://{{base_url}}/ /replication/symmetrix/{symmetrixId}/storagegroup/{storageGroupId}/clone/.

Managing clones with the PowerMax REST API

With symclone, PowerMax administrators can create cloned images of their data on demand. If you want to study up on symclone and its history with PowerMax and Symmetrix predecessors, see the blog PowerMax Attack of the Clones.

Clone copies tend to be used in environments where the copy data might be made available to a system for an extended period and only refreshed once in a while. This is in stark contrast to snapshots, which are taken frequently, typically have shorter retention times, allow quick restoration of data, and can present near real-time copies of constantly changing data to test and dev environments.

Starting with Unisphere for PowerMax 10.0, one can use the User Interface and API to interact with symclone functionality. This opened the door to new functionality, and automation, that was previously only available through command line options. Let’s explore this functionality and concentrate on the how, and a little of the why.

Creating a clone on PowerMax with REST API

Just like with snapshots and the majority of operations with PowerMax REST API, clones are controlled at a storage group level. Users create a clone of all volumes in a storage group. Storage group operations make it simpler to manage clones because all devices are at the same point in time (using PowerMax Enginuity Consistency Assist (ECA) technology) when the clone is created.

To create a clone copy of a storage group, perform a POST operation against the source storage group. (The documentation for the Create Clone API call is here.) Here’s a sample POST call:

https://{unisphereip}:{port}/univmax/restapi/100/replication/symmetrix/{symmetrixId}/storagegroup/{storageGroupId}/clone/storagegroup

{
  "target_storage_group_name": "target_storagegroup_name",
  "establish_terminate": true,
  "consistent": true
}

The API call and payload shown here create a consistent clone of a source storage group with the clone devices present in the specified target storage group.

The additional parameter establish_terminate set to true signifies to the API to remove the clone relationship as soon as it’s activated. This action is only available on PowerMax arrays running PowerMaxOS 10 or higher.
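If you are scripting the raw REST call rather than using PyU4V, a sketch like the following can assemble the URL and JSON body for the POST above. The function name clone_create_request is hypothetical, and the /univmax prefix is assumed from the other Unisphere URIs in this post; the payload keys are the ones shown in the sample body.

```python
import json


def clone_create_request(host, port, array_id, source_sg, target_sg,
                         establish_terminate=False, consistent=True):
    """Return (url, json_body) for the create-clone POST shown above."""
    url = (f"https://{host}:{port}/univmax/restapi/100/replication/"
           f"symmetrix/{array_id}/storagegroup/{source_sg}/clone/storagegroup")
    payload = {
        "target_storage_group_name": target_sg,
        # Only valid on arrays running PowerMaxOS 10 or higher.
        "establish_terminate": establish_terminate,
        "consistent": consistent,
    }
    return url, json.dumps(payload)


url, body = clone_create_request("unisphereip", 8443, "000297600841",
                                 "REST_TEST", "REST_TEST_TGT",
                                 establish_terminate=True)
print(url)
print(body)
```

Building the body with json.dumps keeps the booleans as JSON true/false rather than Python True/False, which is an easy mistake to make when hand-writing payload strings.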

To perform the same operation in a Python script with PyU4V, the code is simple:

import PyU4V

api = PyU4V.U4VConn(username='smc', password='smc',
                    server_ip='unisphereip', verify=None,
                    array_id='000297600841')

# Create the clone and remove the clone relationship as soon as it is activated
api.clone.create_clone(storage_group_id="REST_TEST",
                       target_storage_group_id="REST_TEST_TGT",
                       establish_terminate=True)

If you want to maintain a relationship between the source and target storage group after activating your clone, you can omit the key or variable establish_terminate, which lets you create clones with different capabilities. Note that with establish_terminate, no copying of tracks is done on the array, so this option can be easier to use: the code is shorter because there are no relationships to track, and the data is available to the host as soon as the clone is created, with no need to wait for any background operations.

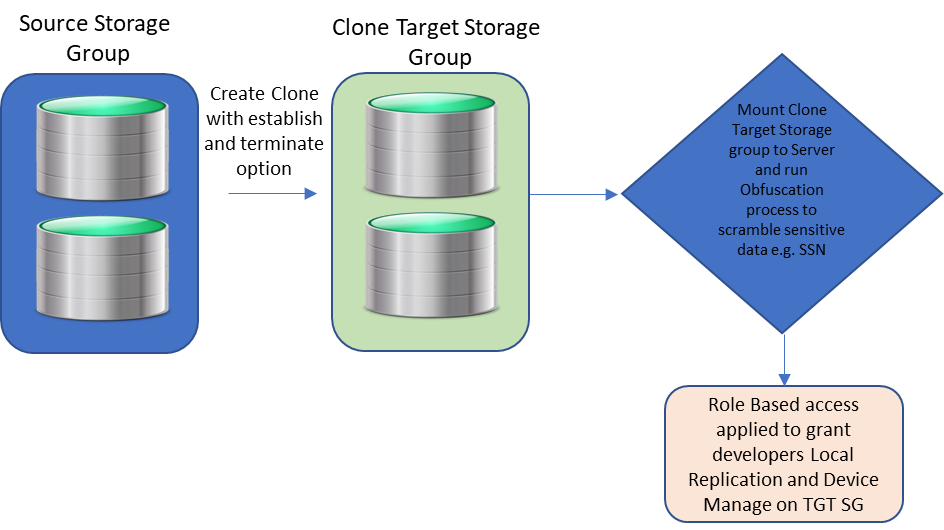

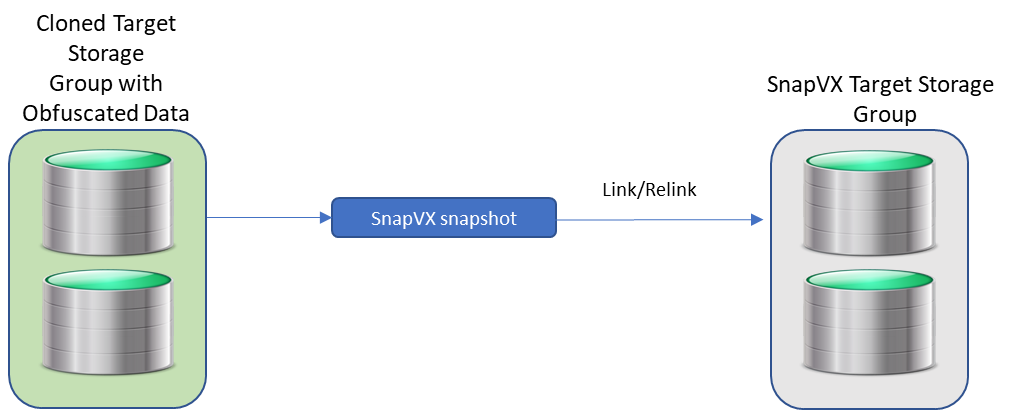

Clone and SnapVX better together

Providing developers with copies of data for building applications, to provide business advantages and to deliver user features to customers, is part and parcel of many organizations. However, the security of that data is paramount and often has legal requirements depending on location and industry.

A great way to help in situations like this is to use clone and snap technology together to create a standalone copy of the data that can be mounted to a host for obfuscation. This helps make sure that sensitive information like social security numbers is no longer intelligible. One can then take snapshots from the obfuscated clone and link them to any test or dev servers.

After the sensitive data has been scrambled by the obfuscation process and RBAC applied to the clones, developers with Local Replication rights can refresh their snapshots at will without security concerns about the data they are seeing. They will only be able to run API calls against storage groups for which they have been granted privileges.
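The workflow above could be strung together roughly as follows. This is only a sketch: it reuses the PyU4V calls shown elsewhere in this post, conn is assumed to be an existing PyU4V.U4VConn connection, and both the function name refresh_dev_copy and the obfuscate callback are hypothetical stand-ins (the masking itself happens on a host, outside the array).

```python
def refresh_dev_copy(conn, prod_sg, clone_sg, dev_sg, snap_name, obfuscate):
    """Clone production data, scramble it, then snapshot and link the result.

    `obfuscate` is a hypothetical callback supplied by the caller; it stands
    in for whatever host-side process masks the sensitive fields.
    """
    # 1. Standalone copy of production (no clone relationship left behind).
    conn.clone.create_clone(storage_group_id=prod_sg,
                            target_storage_group_id=clone_sg,
                            establish_terminate=True)
    # 2. Mask sensitive data on the clone before anyone else sees it.
    obfuscate(clone_sg)
    # 3. Snapshot the obfuscated clone.
    snap = conn.replication.create_storage_group_snapshot(
        storage_group_id=clone_sg, snap_name=snap_name)
    # 4. Link that snapshot to the developers' storage group.
    conn.replication.modify_storage_group_snapshot_by_snap_id(
        src_storage_grp_id=clone_sg, tgt_storage_grp_id=dev_sg,
        snap_name=snap_name, snap_id=snap.get('snapid'), link=True)
    return snap.get('snapid')
```

The ordering is the point of the sketch: nothing is snapshotted or linked until the obfuscation step has returned, so developers only ever see masked data.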

Restoring Data from a Clone

From time to time you may want to restore data from a clone to the source. If you are using the traditional clone workflow and have not used the establish/terminate options, you can use the modify storage group clone PUT API call (documented here) with the restore action.

The URI and body are here:

https://{unisphereIP}:8443/univmax/restapi/100/replication/symmetrix/{symmetrixId}/storagegroup/{storageGroupId}/clone/storagegroup/{targetStorageGroupId}

{
  "action": "Restore",
  "restore": {
    "force": "false",
    "star": "false"
  }
}

PyU4V has its own function for the restore action, taking the source and target storage group names as parameters:

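# Restore the source storage group from the clone
api.clone.restore_clone(
    storage_group_id="mysrc_sg",
    target_storage_group_id="mytgt_sg")

# Terminate the restored session on the source storage group
api.clone.terminate_clone(
    storage_group_id="mysrc_sg",
    target_storage_group_id="mytgt_sg",
    restored=True)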

After the restore, you must terminate the restored session on the source storage group before any additional clone commands can run.

As with any technology, clone operations follow rules, and these are well documented here. In that document, Table 5 lists the states and allowed actions. (Though this is a command line document, the rules are the same for API and UI operations.)

Hopefully this short blog has given some insight and possibly some inspiration into how to use PowerMax clone technologies with the REST API interfaces.

Watch this space for more about the PowerMax API, and if there are topics that are of interest to you and you would like to read more, send a request to @rawstorage on Twitter and I’ll try to accommodate your request!

Author: Paul Martin

PowerMax 2500 and 8500 Dynamic Fabric—A High-Performance Clustered Architecture for Enterprise Storage

Tue, 18 Apr 2023 16:15:55 -0000


Introduced in 2022, the PowerMax 2500 and 8500 systems represent the next generation of the PowerMax family. At their core, the PowerMax 2500 and 8500 have a high-performing cluster architecture solely dedicated to processing the vast amounts of storage operations and storage data from modern applications. The four key components of the PowerMax cluster architecture are the compute nodes; the storage Dynamic Media Enclosures (DMEs); the Dynamic Fabric that ties the elements together; and the internal system software, PowerMaxOS 10. The PowerMax 2500 and 8500 cluster architecture provides the following benefits natively without the need for added hardware, software, or licensing:

- High reliability and fault tolerance: All the nodes in the PowerMax can access application I/O and supply resources to process it, making it a true shared everything, active/active system. In case of a node failure, there is no failover operation needed as with active/passive systems. PowerMaxOS 10 will automatically redirect the failed node’s I/O to other nodes. Since no failover is required, there is no need to ever configure a quorum device to guard against issues such as “split brain.”

- Simplicity: All the compute nodes and DMEs in a PowerMax system appear to the user as a single entity. A user does not have to log in to separate individual nodes to manage or configure the PowerMax system. The management of the entire system is performed using the HTML5-based Unisphere for PowerMax UI and/or REST API. These tools provide an easy-to-use interface for management actions and monitoring operations that are crucial to an organization’s needs.

- Fully autonomous operations: PowerMaxOS 10 manages all system resources autonomously—continuously running diagnostics, and scrubbing and moving data within the system for maximum performance and availability 24x7. There is no need to manually configure load balancing between the nodes because PowerMaxOS 10 automatically decides which node will process the incoming I/O data based on locality and resource availability.

- Predictable performance and scalability: As compute requirements increase, you can “scale out” the PowerMax system by adding nodes nondisruptively to supply a predictable linear increase in performance and computing power. As capacity requirements increase, you can “scale up” the system granularly, using single-drive increments. This allows PowerMax to scale based on application requirements rather than system architectural requirements. The architecture also enables holistic software scalability; the PowerMax can support 64K host-presentable devices, more than 65 million snapshots, and 2,048 remote replication groups with other systems.

The compute nodes, DMEs, and PowerMaxOS 10 software supply the foundation for a true active/active, shared everything solution for the demanding requirements of enterprise data centers. The glue that ties all these components together and allows PowerMax to deliver these benefits to customers is the internal Dynamic Fabric.

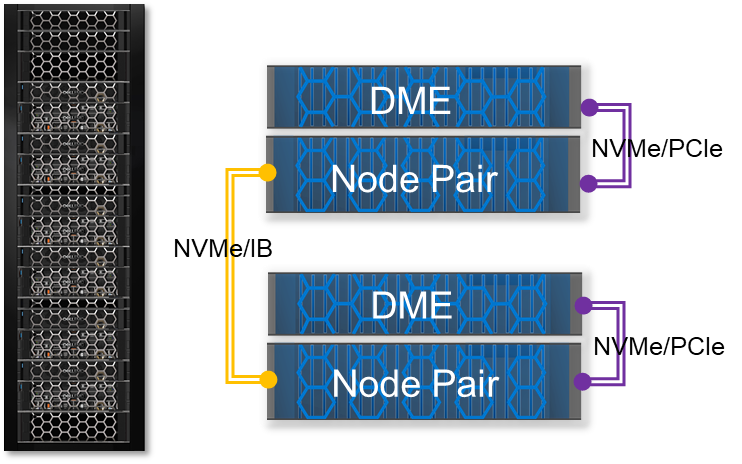

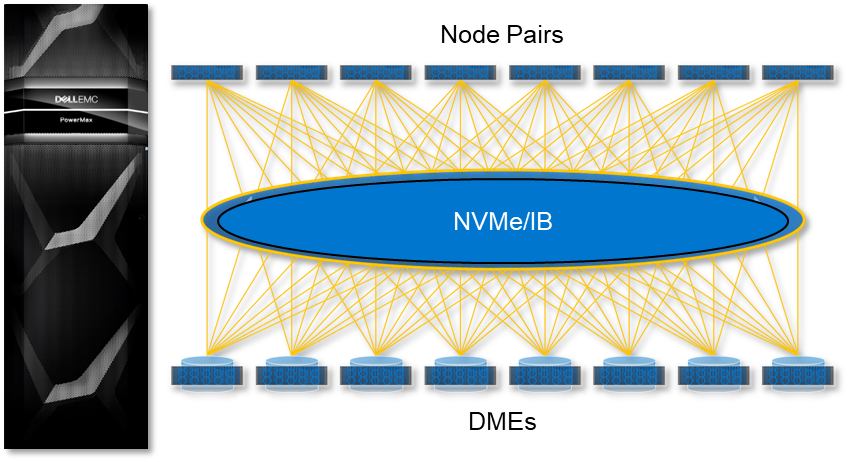

PowerMax Dynamic Fabric