Blogs

The latest news about Dell PowerStore releases and updates

When Performance Testing Your Storage, Avoid Zeros!

Tue, 20 Feb 2024 17:37:42 -0000

Storage benchmarking

Occasionally, Dell Technologies customers will want to run their own storage performance tests to ensure that their storage can meet the demands of their workload. Dell Technologies partners like Microsoft publish guidance on how to use benchmarking tools such as Diskspd to test various workloads. When running these tools on intelligent storage appliances like those offered by Dell Technologies, don’t forget to watch for how your test files are populated!

The first step in using performance benchmark tools is creating one or more test files for use when testing. The benchmark tool will then write and read data to and from these files, taking measurements to assess performance. An important detail that is often overlooked is how the test files are populated with data. If the files are not populated correctly, it can lead to misleading results and inaccurate conclusions.

We’ll use Diskspd as an example, but most tools have the same default behavior. When you run a Diskspd test, you specify several parameters, such as the test file location and size, IO block size, read/write ratio, and queue depth.

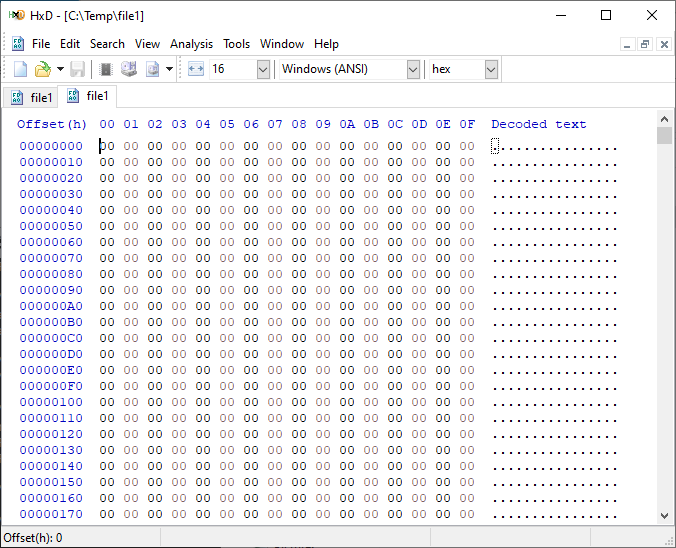

If we open a test file created with default parameters and examine it with a hexadecimal editor, this is what it looks like:

It is filled with nothing, 0x00 throughout the entire file – all “zeros”!
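If a hexadecimal editor is not at hand, the same check can be scripted. The following is a minimal sketch, not part of Diskspd or any Dell tool: the function name, block size, and sample count are assumptions chosen for illustration. It samples blocks from the start of a file and reports how many are entirely 0x00 and how well the sample compresses with zlib.

```python
import zlib
from pathlib import Path


def summarize_test_file(path, block_size=64 * 1024, max_blocks=256):
    """Sample blocks from the start of a file; report zero-fill and compressibility."""
    zero_blocks = 0
    raw_bytes = 0
    compressed_bytes = 0
    blocks = 0
    with Path(path).open("rb") as handle:
        while blocks < max_blocks:
            block = handle.read(block_size)
            if not block:
                break
            blocks += 1
            raw_bytes += len(block)
            # A block counts as "zero" when every byte in it is 0x00.
            if block.count(0) == len(block):
                zero_blocks += 1
            # zlib is only a rough stand-in for whatever the storage does.
            compressed_bytes += len(zlib.compress(block))
    return {
        "blocks_sampled": blocks,
        "zero_block_fraction": zero_blocks / blocks if blocks else 0.0,
        "compression_ratio": raw_bytes / compressed_bytes if compressed_bytes else 0.0,
    }
```

A zero block fraction near 1.0, or a very high compression ratio, suggests that the file does not resemble real data. The ratio reported here comes from zlib and will not match the data reduction reported by a storage appliance.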

OK, so what is the problem?

When storage benchmarking tools create test files, they all use synthetic data for testing. This is fine when performing IO to a storage device with no “intelligence” built in because it will perform unaltered IO directly to the storage without the data content mattering. In the past, storage devices were simple and would read and write data as commanded, so the data content was irrelevant.

However, intelligent storage appliances such as those offered by Dell Technologies look at data differently. These products are built for efficiency and performance. Compression, deduplication, zero detection, and other optimizations may be used for space savings and performance. Since an empty file would obviously compress and deduplicate well, most of this IO will not access the disks in the same manner that a file of actual data would. It is also possible that other components in the data path would behave differently than normal when repeatedly presented with an identical piece of data.

It is safe to assume that these optimizations likely exist on data being stored in the cloud as well. Many cloud providers use intelligent storage appliances or have developed proprietary software to optimize storage.

The bottom line is that your test is likely inaccurate and may not represent your storage performance under more realistic conditions. While no synthetic test can reproduce a real workload 100%, you should try to make it as realistic as possible.

Mitigations

Some tools can initialize the test files with random data. Diskspd, for example, has parameters that can be added to create a buffer of random data to be used to write to the files or specify a source file of data. Regardless of the method used, you should inspect the test files to make sure that at a minimum, random data is being used. Zero-filled files and repeating patterns should be avoided.
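As a rough illustration of the Diskspd option mentioned above, the sketch below assembles a command line that includes a random write source buffer. The helper name and all default values are hypothetical. The flags (-c, -b, -d, -o, -t, -r, -w, -Sh, -L, and -Z with a size) reflect our understanding of the Diskspd command-line help, so confirm them against the version you have installed before relying on them.

```python
def build_diskspd_args(target, file_size="10G", block_size="8K", duration_s=60,
                       queue_depth=8, threads=4, write_pct=30, random_source="1M"):
    """Assemble a Diskspd argument list (hypothetical helper, example values)."""
    args = [
        "diskspd.exe",
        f"-c{file_size}",    # create the test file with this size
        f"-b{block_size}",   # IO block size
        f"-d{duration_s}",   # test duration in seconds
        f"-o{queue_depth}",  # outstanding IOs per thread (queue depth)
        f"-t{threads}",      # worker threads per target file
        "-r",                # random IO
        f"-w{write_pct}",    # percentage of IOs that are writes
        "-Sh",               # disable software caching and hardware write caching
        "-L",                # collect latency statistics
    ]
    if random_source:
        # Write from a buffer of this size filled with random data
        # instead of a repeating or zeroed pattern.
        args.append(f"-Z{random_source}")
    args.append(target)
    return args


print(" ".join(build_diskspd_args(r"D:\testfile.dat")))
```

Whichever options you choose, inspect the resulting test file afterward to confirm that it holds the kind of data you intended.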

Random data also may not produce the expected behavior when compression and deduplication are in use, because fully random data is effectively incompressible and contains no duplicate blocks. More advanced testing tools such as vdbench can target specific compression and deduplication ratios independently.
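To make the point about compressibility concrete, here is a simplified sketch of one way to build a data block that compresses to roughly a chosen ratio: fill part of the block with random bytes and pad the rest with zeros. This is an illustration only and is not how vdbench generates data. The function names are assumptions, and zlib stands in for the storage system's own compression, which will behave differently.

```python
import os
import zlib


def make_block(block_size, target_ratio):
    """Return a block whose leading part is random and whose tail is zeros."""
    if target_ratio < 1:
        raise ValueError("target_ratio must be >= 1")
    # Random bytes are effectively incompressible, zeros compress to almost
    # nothing, so the random share roughly sets the compressed size.
    random_len = int(block_size / target_ratio)
    return os.urandom(random_len) + bytes(block_size - random_len)


def measured_ratio(block):
    """Compression ratio of the block as seen by zlib."""
    return len(block) / len(zlib.compress(block))


block = make_block(64 * 1024, target_ratio=2.0)
print(f"zlib sees roughly {measured_ratio(block):.2f}:1")
```

This approach says nothing about deduplication, which depends on how often whole blocks repeat across the dataset. That is one reason purpose-built tools are the better choice for data-reduction testing.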

Tips

Here are a few more tips when benchmarking storage performance to try to make it as realistic as possible:

- Use datasets of comparable size to real data workloads. Smaller datasets may fit entirely in the cache and skew results.

- Use IO sizes and read/write ratios that match your workload. If you are unsure of what your workload looks like, your Dell Technologies representative can assist you.

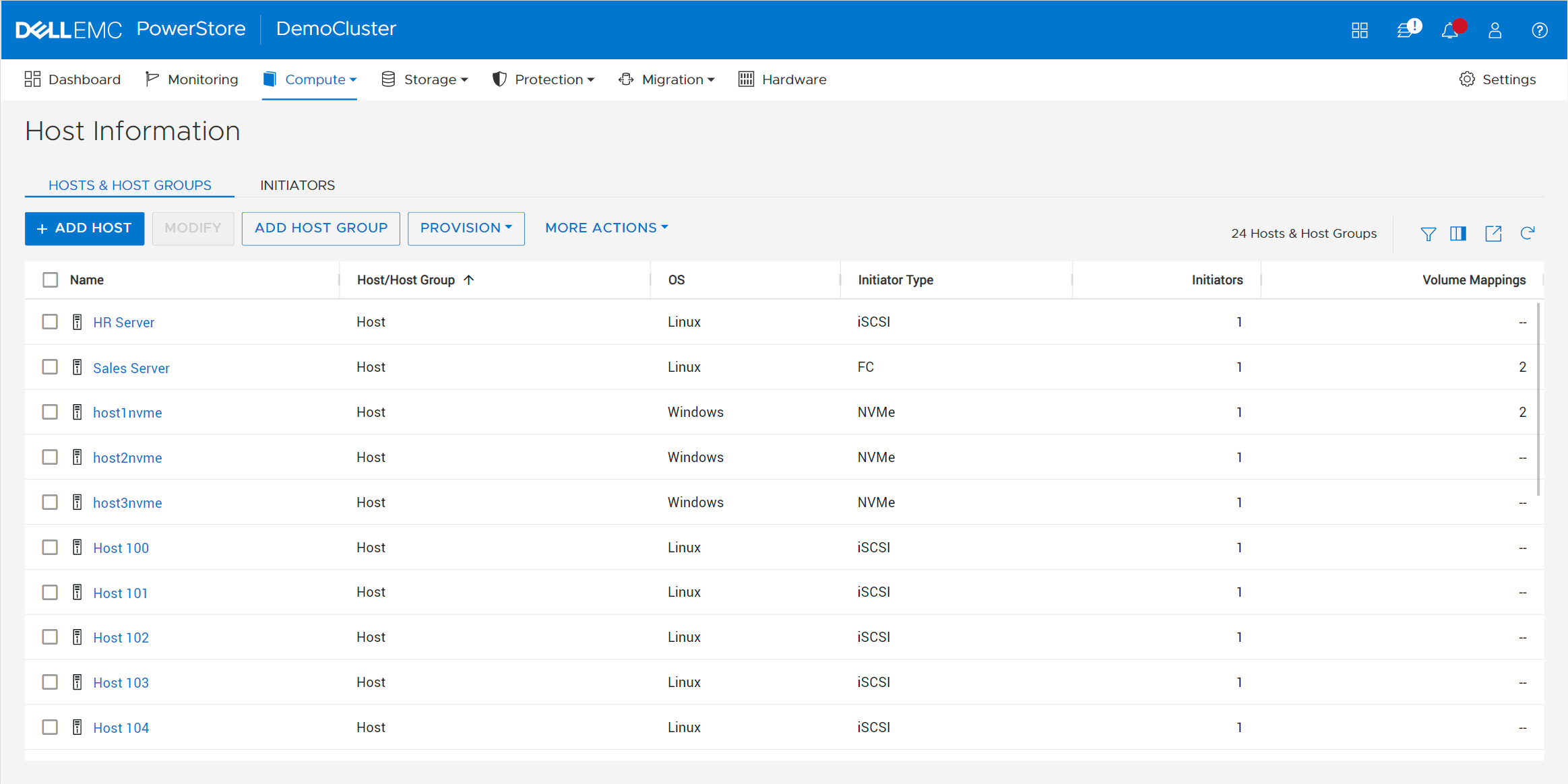

- Test with “multiples”. Intelligent storage assumes multiple files, volumes, and hosts. At a minimum, use multiple files and volumes. When testing larger block sizes, you may need to use multiple hosts and multiple host bus adapters to generate enough IO to test the full bandwidth capabilities of the storage.

- Start with a light load and scale up. Begin with one file, one worker thread, and a queue depth of one. In general, modern storage is designed for concurrency. Some amount of concurrency will be required to fully use storage system resources. As you scale up, observe the behavior. Pay attention to the measured latency. At some point as you scale the test, latency will start to increase rapidly.

- Excessive latency indicates a bottleneck. Once latencies are excessive, you have encountered a bottleneck somewhere. “Excessive” is a relative term when it comes to storage latency and is determined by your workload and business needs. Scale the test only to the point where the measured latency reaches the upper limit of your acceptable range. Increasing the test load further yields diminishing returns.

- Make sure the entire test environment can drive the wanted performance. The storage network and host configuration must be capable of desired performance levels and configured properly.

- Beware of outdated guidance. There are still articles online that are over a decade old that reference testing methods and best practices that were developed when storage was based on spinning disks. Those assumptions may be inaccurate on the latest storage devices and storage network protocols.
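The "start light and scale up" tip can be sketched in a few lines. The measurements below are hypothetical values invented for illustration and are not PowerStore results. The class and function names are also assumptions. The sketch picks the highest-throughput test step whose latency stays within a limit, and uses Little's Law (outstanding IOs = IOPS x latency) as a sanity check on each sample.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    queue_depth: int
    iops: float
    latency_ms: float


def best_within_latency(samples, max_latency_ms):
    """Return the highest-IOPS sample whose latency stays within the limit."""
    acceptable = [s for s in samples if s.latency_ms <= max_latency_ms]
    return max(acceptable, key=lambda s: s.iops) if acceptable else None


def implied_concurrency(sample):
    """Little's Law: outstanding IOs = throughput (IO/s) x latency (s)."""
    return sample.iops * sample.latency_ms / 1000.0


# Hypothetical measurements, for illustration only.
samples = [
    Sample(1, 5_000, 0.20),
    Sample(4, 19_000, 0.21),
    Sample(16, 64_000, 0.25),
    Sample(64, 120_000, 0.53),
    Sample(256, 128_000, 2.00),
]

choice = best_within_latency(samples, max_latency_ms=1.0)
print(choice)
```

In this made-up series, going from a queue depth of 64 to 256 adds little throughput while latency rises sharply, which is the pattern the tips above describe. If the implied concurrency differs greatly from the queue depth you configured, the measurement or the test setup deserves a second look.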

Summary

Storage performance benchmarking can be interesting and provide useful data points. That said, what is most important is how the storage supports actual business workloads and—most importantly—your unique workload. As such, there is no true substitute for testing with your actual workload.

Selecting the proper storage fit for your environment can be challenging, and Dell Technologies has the expertise to help. Leveraging tools like CloudIQ and LiveOptics, Dell Technologies can help you analyze your storage performance, explain storage metrics, and make recommendations to increase storage efficiency.

Author: Doug Bernhardt, Sr. Principal Engineering Technologist | LinkedIn

PowerStore validation with Microsoft Azure Arc-enabled data services updated to 1.25.0

Mon, 12 Feb 2024 20:04:34 -0000

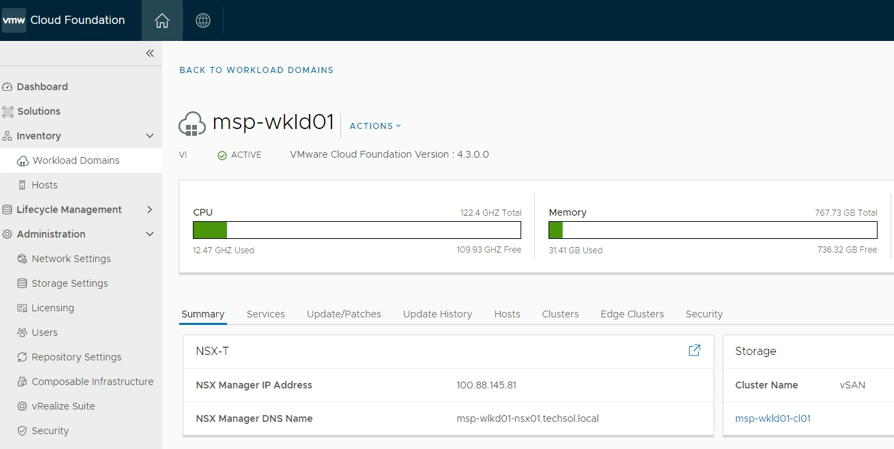

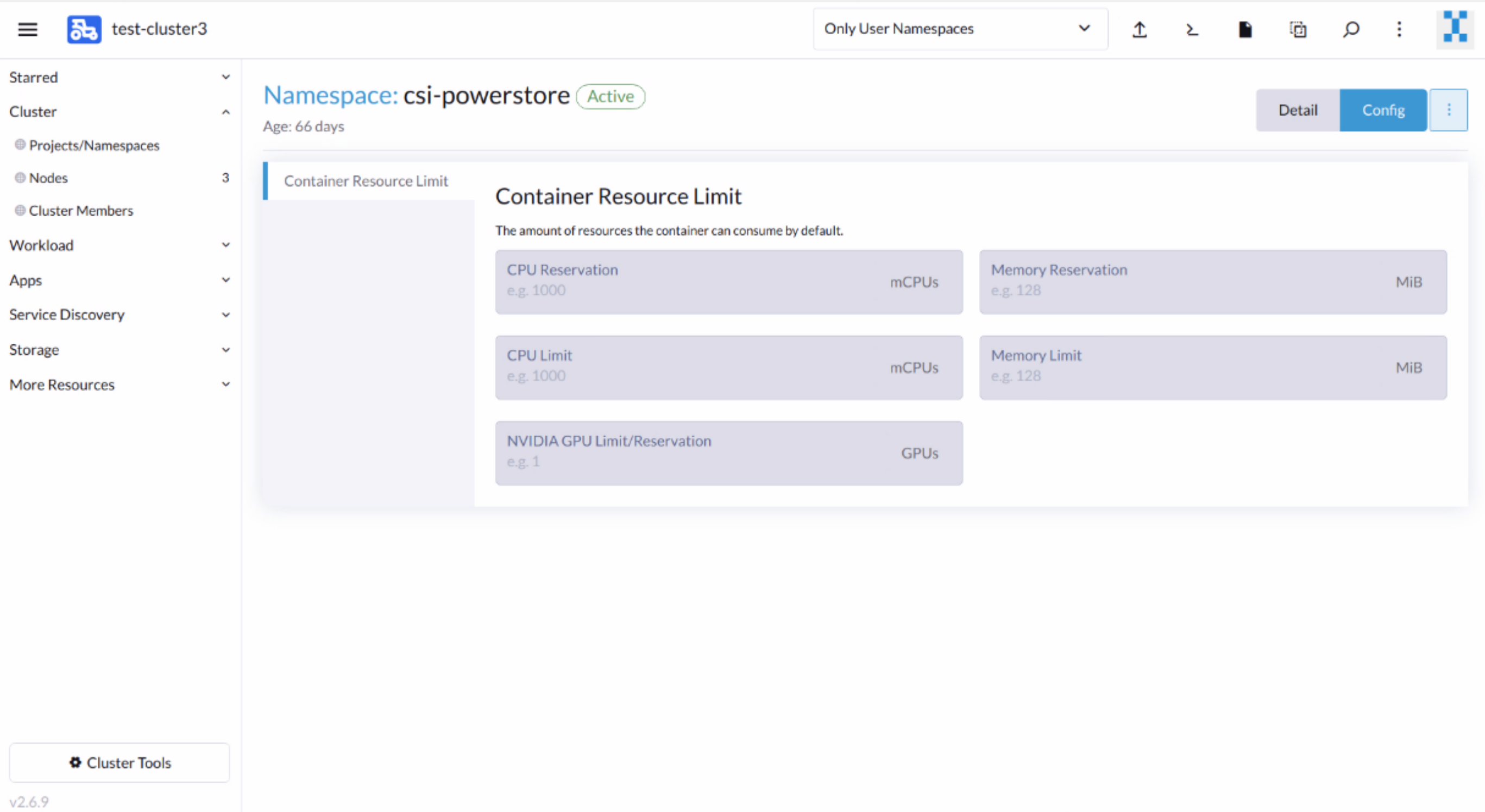

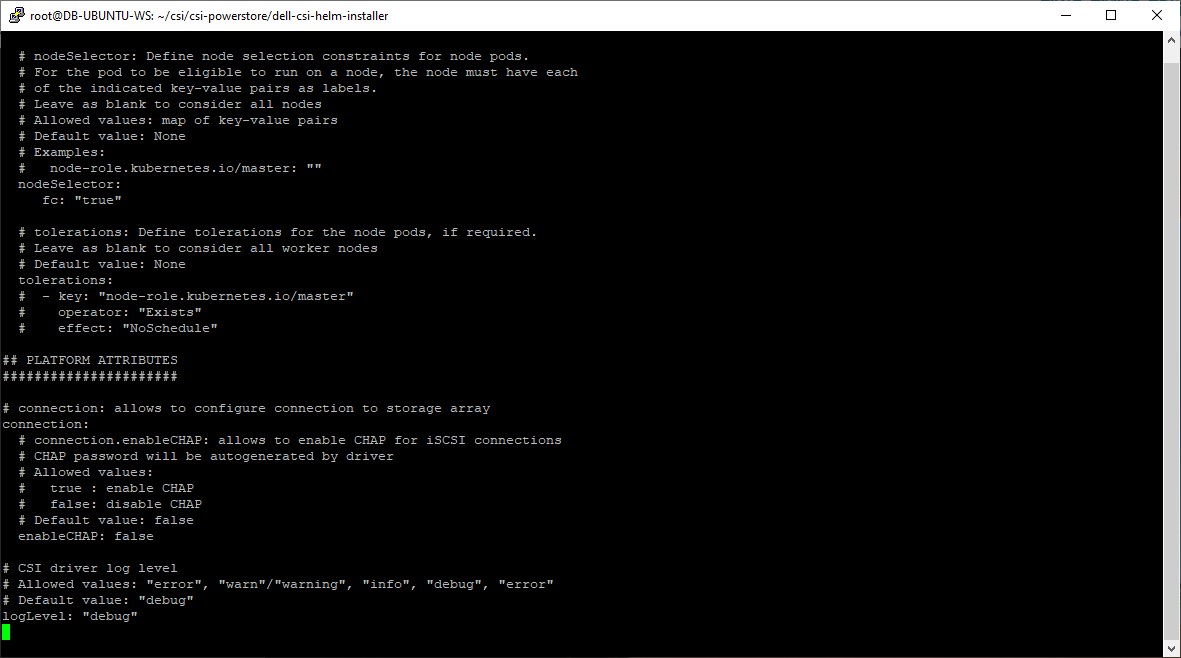

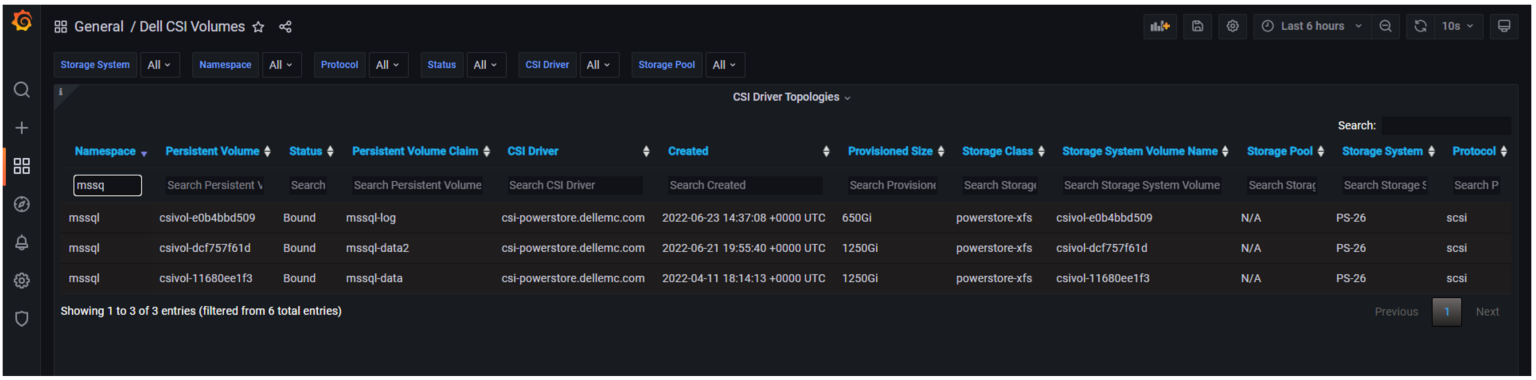

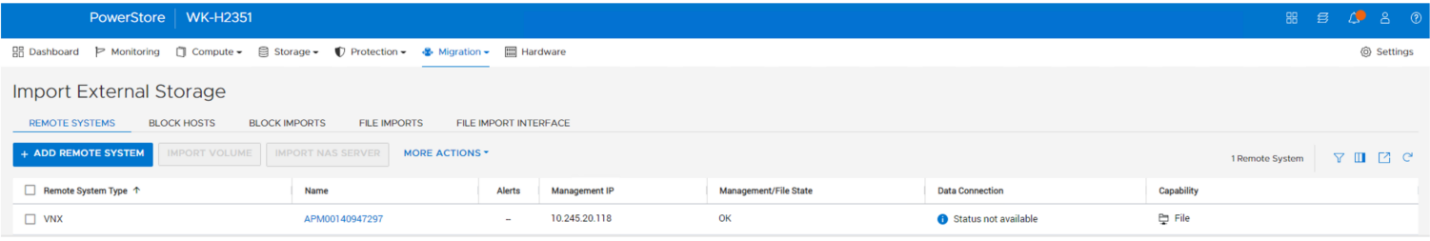

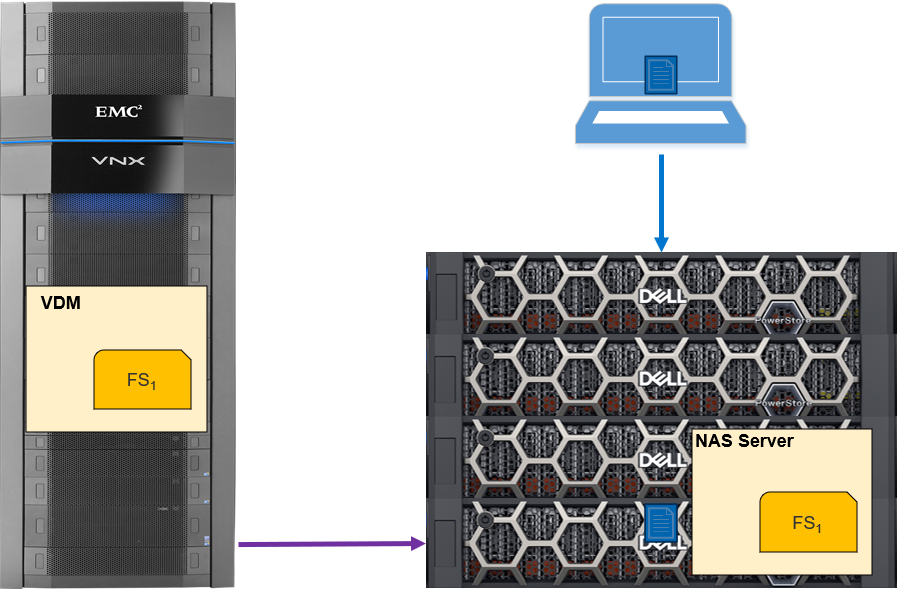

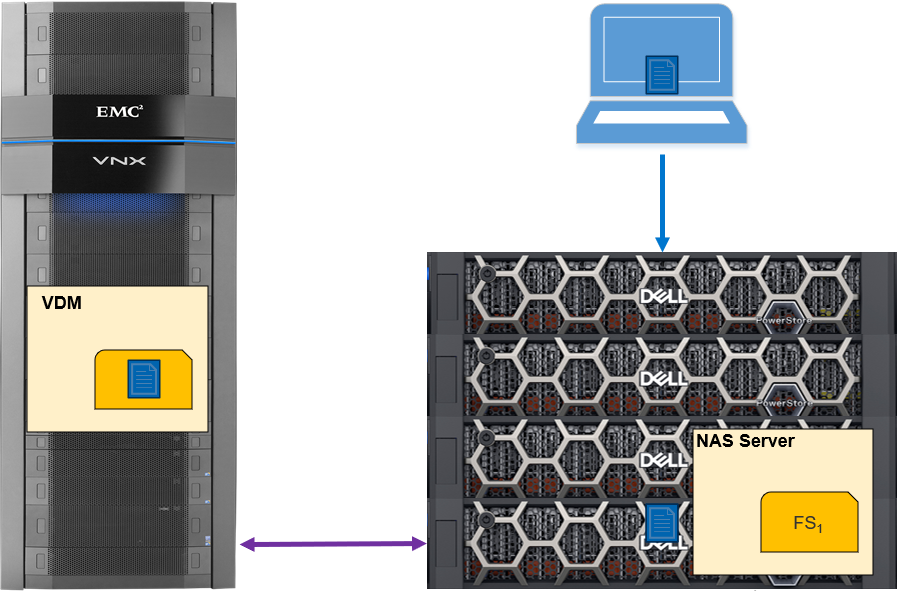

Microsoft Azure Arc-enabled data services allow you to run Azure data services on-premises, at the edge, or in the cloud. Arc-enabled data services align with Dell Technologies’ vision, by allowing you to run traditional SQL Server workloads on Kubernetes, on your infrastructure of choice. For details about a solution offering that combines PowerStore and Microsoft Azure Arc-enabled data services, see the white paper Dell PowerStore with Azure Arc-enabled Data Services.

Dell Technologies works closely with partners such as Microsoft to ensure the best possible customer experience. We are happy to announce that Dell PowerStore has been revalidated with the latest version of Azure Arc-enabled data services, 1.25.0.

Deploy with confidence

One of the deployment requirements for Azure Arc-enabled data services is that you must deploy on one of the validated solutions. At Dell Technologies, we understand that customers want to deploy solutions that have been fully vetted and tested. Key partners such as Microsoft understand this too, which is why they have created a validation program to ensure that the complete solution will work as intended.

By working through this process with Microsoft, Dell Technologies can confidently say that we have deployed and tested a full end-to-end solution and validated that it passes all tests.

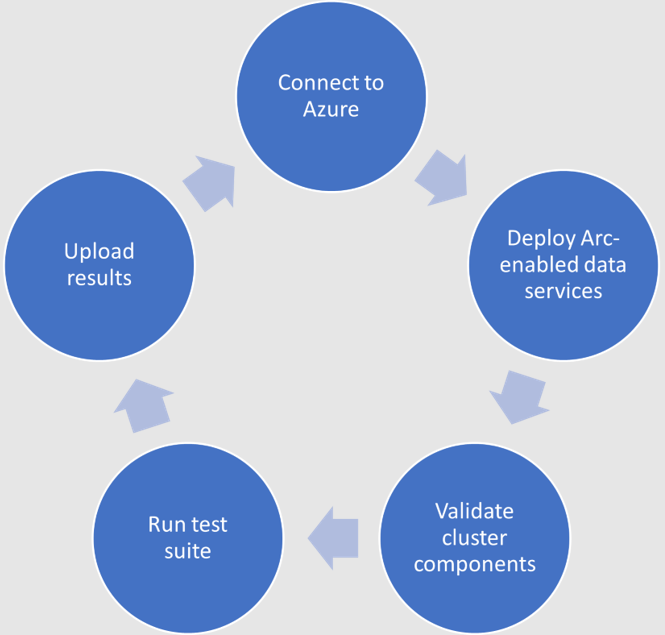

The validation process

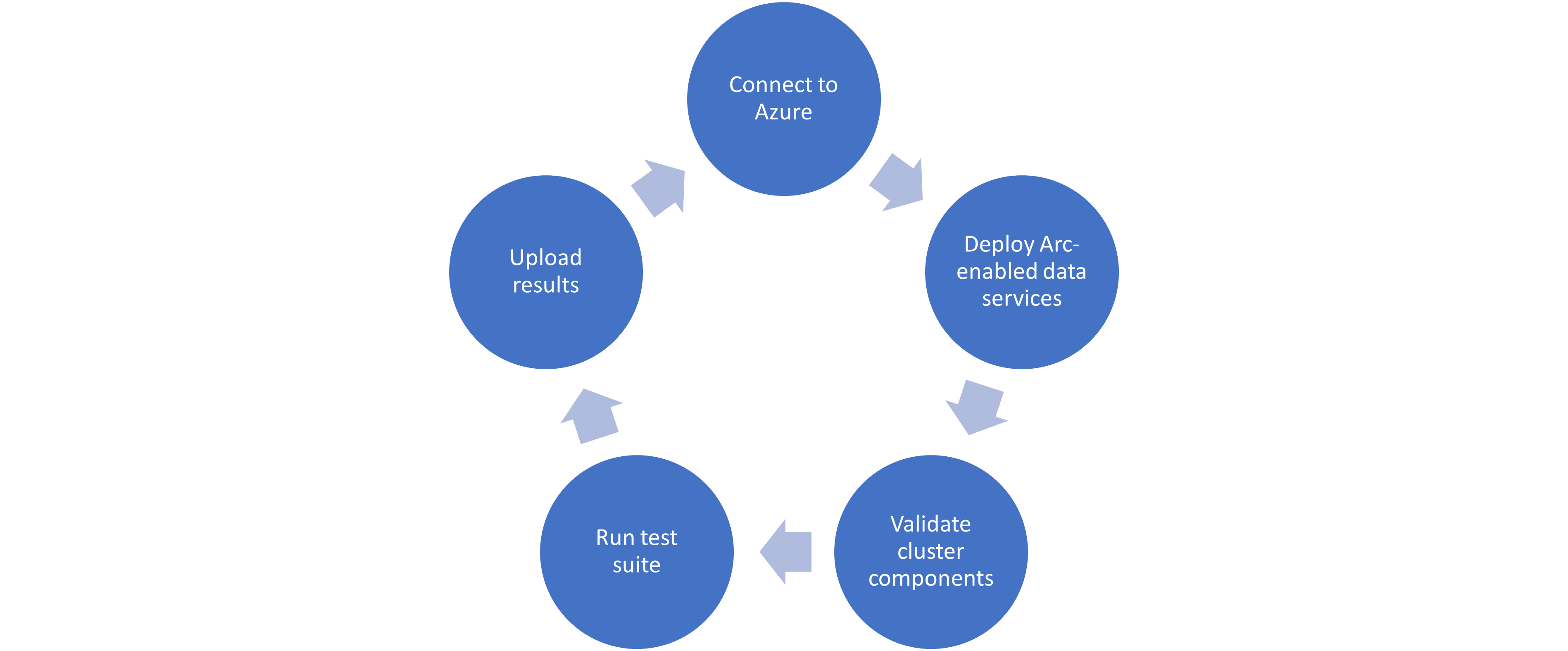

Microsoft has published tests for their continuous integration/continuous delivery (CI/CD) pipeline that partners and customers can run. For Microsoft to support an Arc-enabled data services solution, it must pass these tests. At a high level, these tests perform the following:

- Connect to an Azure subscription provided by Microsoft.

- Deploy the components for Arc-enabled data services, including SQL Managed Instance, using both direct and indirect connect modes.

- Validate Kubernetes (K8s), hosts, storage, container storage interface (CSI), and networking.

- Run Sonobuoy tests ranging from simple smoke tests to complex high-availability scenarios and chaos tests.

- Upload results to Microsoft for analysis.

When Microsoft accepts the results, they add the new or updated solution to their list of validated solutions. At that point, the solution is officially supported. This process is repeated as needed as new component versions are introduced. Complete details about the validation testing and links to the GitHub repositories are available here.

More to come

Stay tuned for more additions and updates from Dell Technologies to the list of validated solutions for Azure Arc-enabled data services. Dell Technologies is leading the way on hybrid solutions, proven by our work with partners such as Microsoft on these validation efforts. Reach out to your Dell Technologies representative for more information about these solutions and validations.

Author: Doug Bernhardt

Sr. Principal Engineering Technologist

What’s New in PowerStoreOS 3.6?

Thu, 05 Oct 2023 14:22:36 -0000

Dell PowerStoreOS 3.6 is the latest software release on the Dell PowerStore platform.

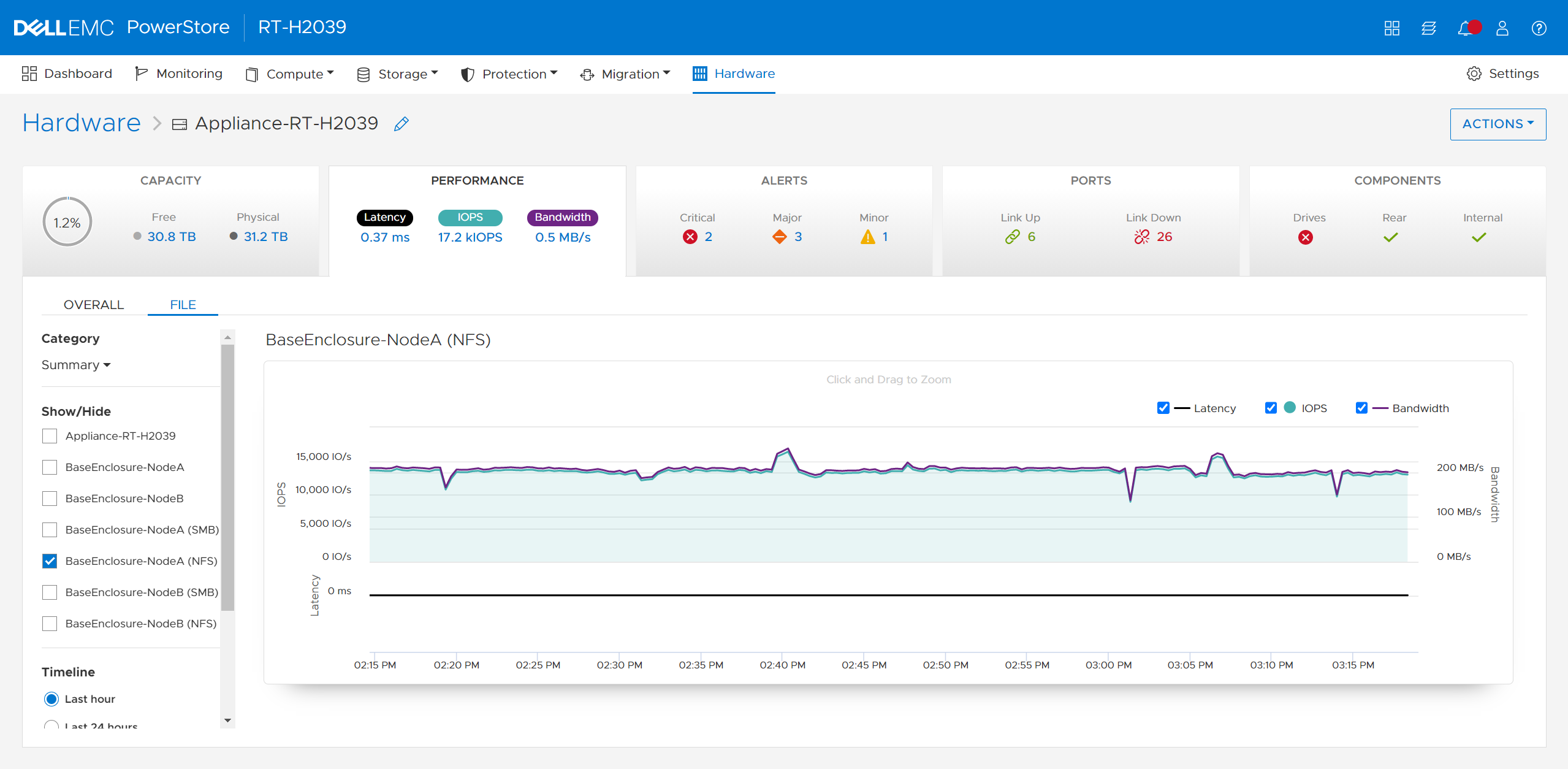

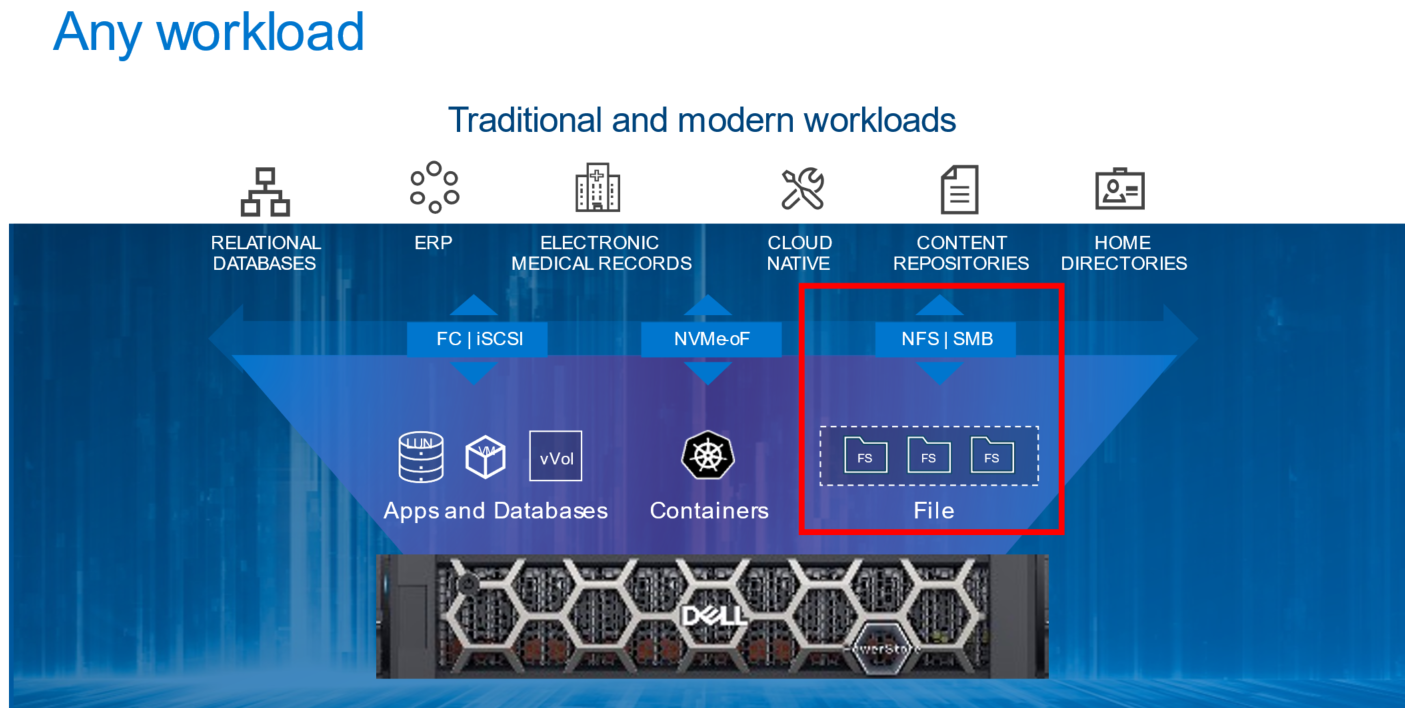

This release contains a diversified feature set in categories such as hardware, data protection, NVMe/TCP, file, and serviceability. The following list provides a brief overview of the major features in those categories:

- Hardware: PowerStoreOS 3.6 introduces the highly anticipated Data-In-Place (DIP) upgrade feature, which allows users to perform a hardware refresh while remaining online, with no downtime or host migration.

- Data Protection: PowerStoreOS 3.6 now includes support for Metro Witness Server, which allows users to configure a fully active-active configuration for metro volumes across two PowerStore clusters—with more intelligent failure handling, resiliency, and availability during an unplanned outage.

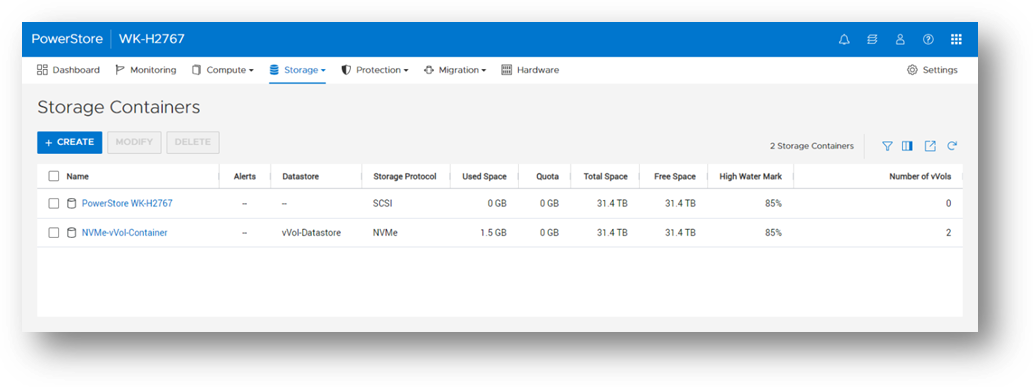

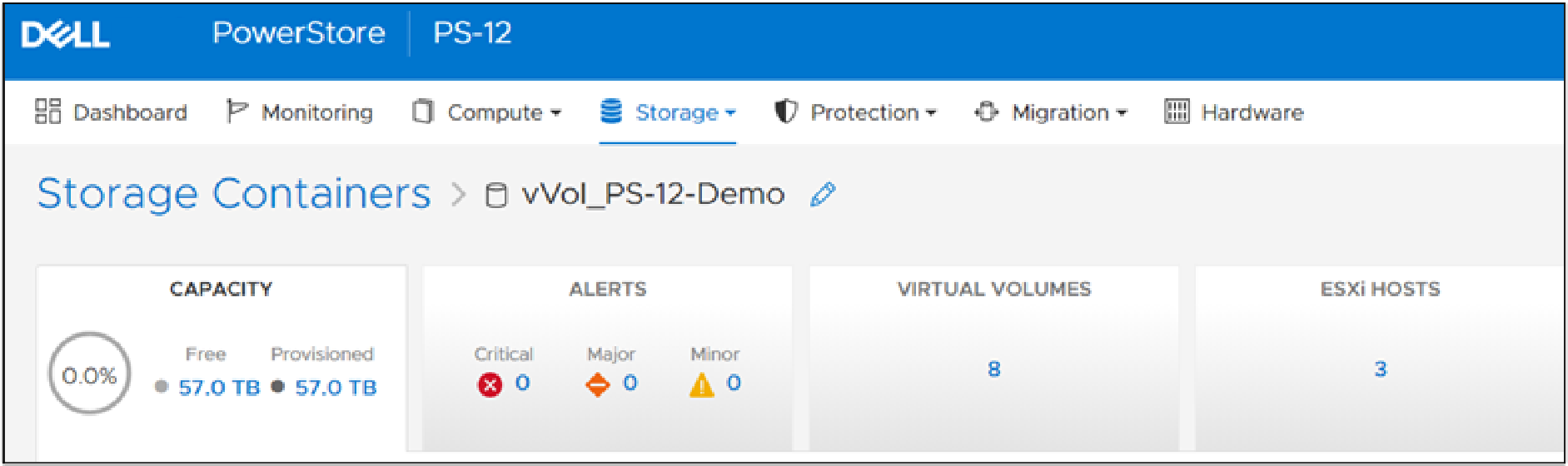

- NVMe/TCP enhancements: Users now have the option to use NVMe storage containers to support host access through the NVMe/TCP protocol for Virtual Volumes (vVols).

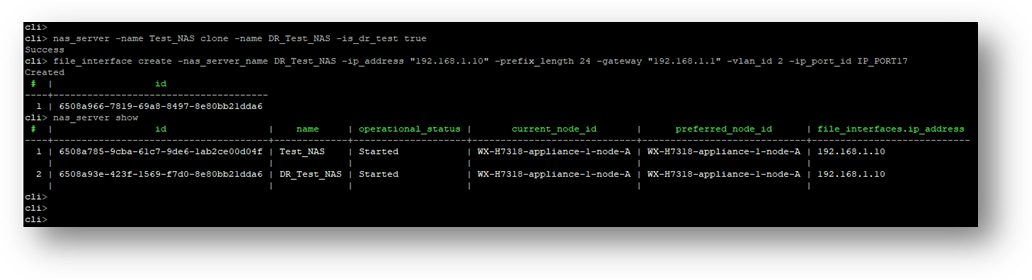

- File: Administrators can perform disaster recovery tests within a network bubble, while using an identical configuration as their production NAS server environment.

- Serviceability: To build on the existing remote syslog implementation, PowerStore alerts can now be forwarded to one or more remote syslog servers in PowerStoreOS 3.6.

The following sections also provide information about the Non-Disruptive Upgrade (NDU) paths to the PowerStoreOS 3.6 release.

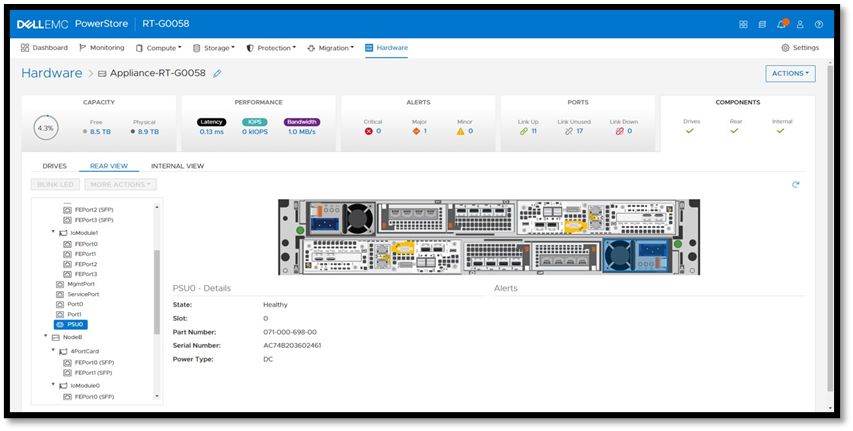

Hardware

Data-In-Place (DIP) upgrades

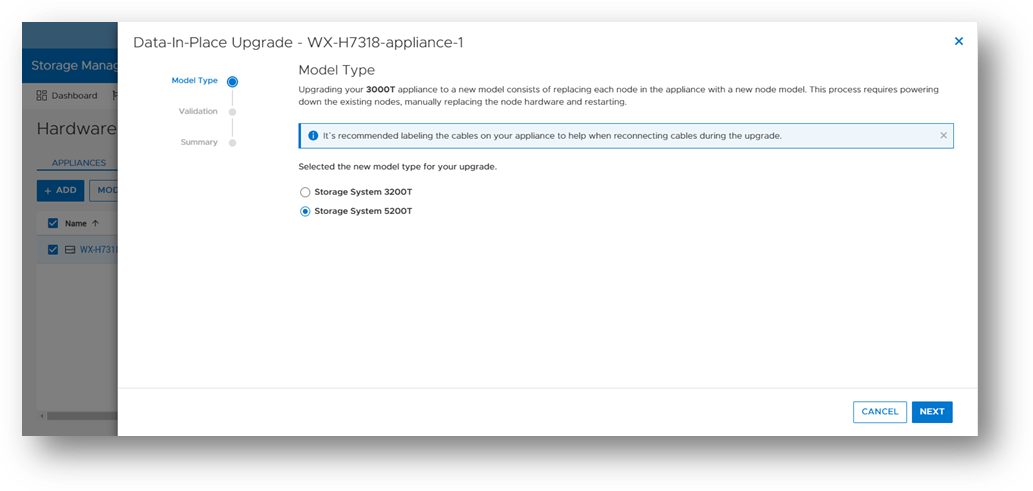

Data-In-Place upgrades allow users to convert their PowerStore Appliance from a PowerStore x000T model to a PowerStore x200T model. This is a non-disruptive process because only a single node is upgraded at a time, while the other node continues to service host I/O. Data-In-Place upgrades are performed easily through PowerStore Manager’s Hardware tab.

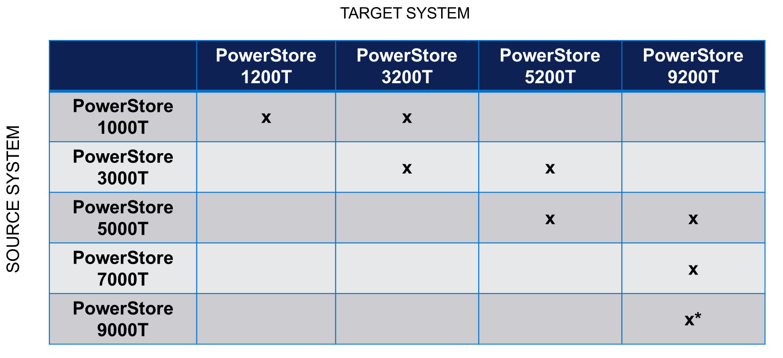

The following table outlines the supported Data-In-Place upgrade paths from the source to target models. For PowerStore 9000T models, only block-optimized upgrades are supported to the PowerStore 9200T model. When upgrading a PowerStore 3000T to a PowerStore 5200T model, additional NVRAM drives are required. When upgrading from a PowerStore 5000T model to a PowerStore 9200T model, a power supply upgrade may also be required.

Note: *Denotes only block-optimized upgrade is supported

Data Protection

Metro Witness server support

Metro Volume support was introduced in PowerStoreOS 3.0. Before PowerStoreOS 3.6, Metro Volumes required manual intervention to fail over if the preferred site went down. PowerStoreOS 3.6 introduces the Metro Witness server feature. The Metro Witness server runs software that automatically keeps the non-preferred site online and servicing I/O if the preferred site goes offline.

The Metro Witness software is distributed as an RPM package for the SLES and RHEL Linux distributions. The RPM can be deployed on a bare-metal server or a virtual machine. The Metro Witness server and software can easily be set up in minutes!
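The difference the witness makes can be pictured with a deliberately simplified toy model. This is a conceptual sketch only and is not PowerStore's actual arbitration logic. It ignores replication-link failures and witness reachability, and it assumes that the preferred site keeps serving I/O when only the non-preferred site fails. The function name and return values are hypothetical.

```python
def sites_serving_io(preferred_up, non_preferred_up, witness_configured):
    """Toy model: which sites keep serving host I/O for a metro volume."""
    if preferred_up and non_preferred_up:
        # Normal operation: both sides are active.
        return {"preferred", "non-preferred"}
    if preferred_up:
        # Assumption: the preferred site carries on alone.
        return {"preferred"}
    if non_preferred_up and witness_configured:
        # With a witness, the non-preferred site stays online automatically.
        return {"non-preferred"}
    # Without a witness, an administrator must intervene manually.
    return set()


print(sites_serving_io(False, True, witness_configured=False))  # set()
print(sites_serving_io(False, True, witness_configured=True))   # {'non-preferred'}
```

The two calls show the case described above: when the preferred site is lost, the outcome depends on whether a witness is in place.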

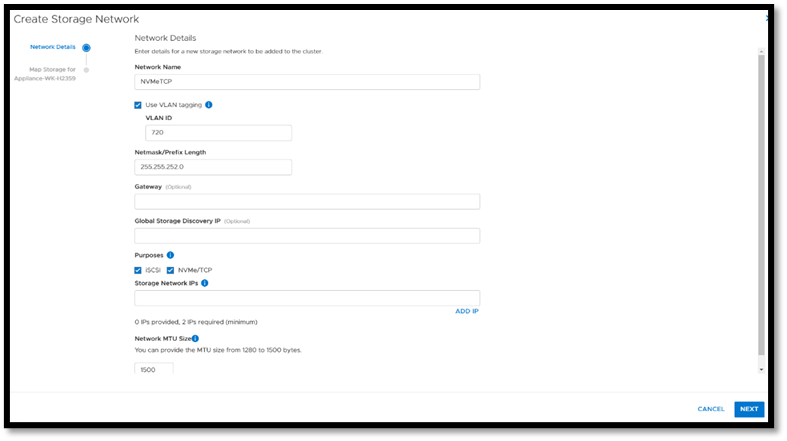

NVMe/TCP enhancements

NVMe/TCP for Virtual Volumes (vVols)

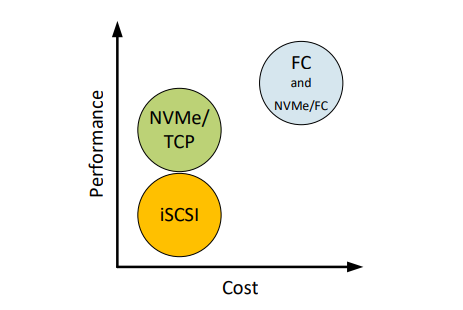

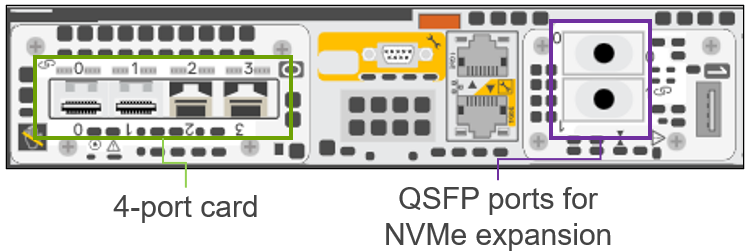

NVMe is a transfer protocol designed specifically for connecting solid-state drives (SSDs) to PCIe buses. NVMe over Fabrics (NVMe-oF) extends the NVMe protocol to network transports such as TCP and Fibre Channel (FC). PowerStore currently supports both TCP and FC as NVMe-oF transports.

With the VMware vSphere 8.0U1 release, VMware introduced NVMe/TCP support for vVols. As the demand for NVMe/TCP support grows, PowerStoreOS 3.6 expands its existing NVMe/TCP support to vVols as well! With this feature, PowerStore is the industry’s first array to support NVMe/TCP for vVols[1].

From a performance perspective, NVMe/TCP is comparable to FC. From a cost perspective, NVMe/TCP infrastructure is cheaper than FC and can leverage existing network infrastructure. NVMe/TCP has a higher performance benefit than iSCSI and has lower hardware costs than FC. With the addition of NVMe/TCP support for vVols in PowerStoreOS 3.6, we combine performance, cost, and storage/compute granularity for system administrators.

File

Disaster Recovery (DR) tests within a network bubble

Many organizations are required to run disaster recovery (DR) tests using the exact same configuration as production. This includes identical IP addresses and fully qualified domain names. Running these types of tests reduces risk, increases reproducibility, and minimizes the chance of any surprises during an actual disaster recovery event.

These DR tests are carried out in an isolated environment, which is completely siloed from the production environment. Using network segmentation for proper isolation allows there to be no impact to production or replication. This allows users to meet the requirements of using identical IP addresses and FQDNs during their DR tests.

In PowerStoreOS 3.6, the appliance offers the file capability to create a Disaster Recovery Test (DRT) NAS server with a DR test interface. These DRT NAS servers permit a user to create a NAS server with an identical configuration as production, including the ability to duplicate IP addresses.

Note: DRT NAS servers and interfaces can only be configured using the CLI or REST API.

Serviceability

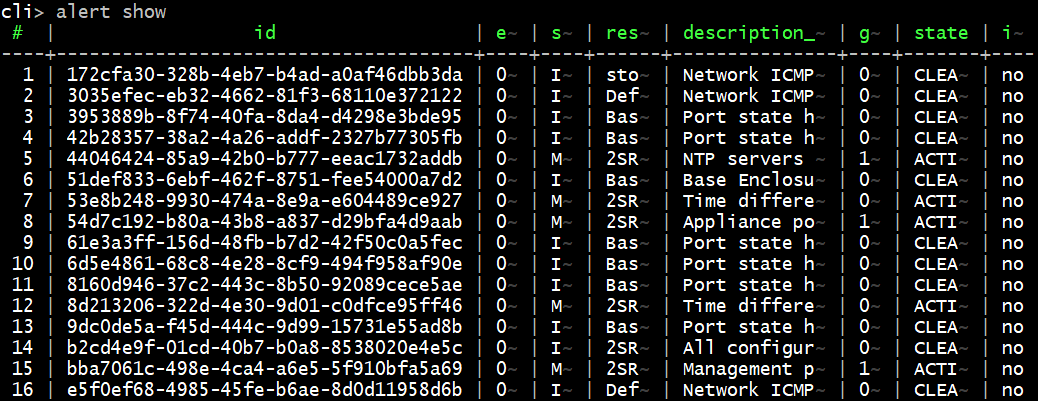

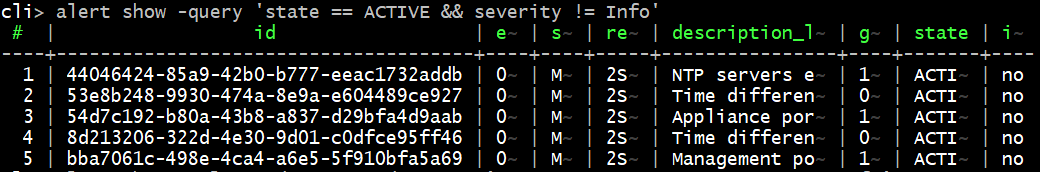

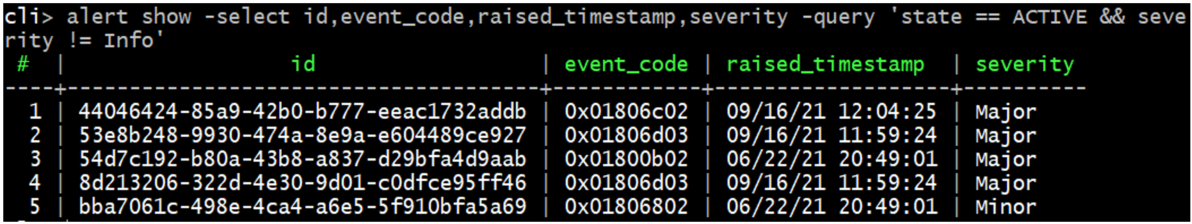

Remote Syslog support for PowerStore alerts

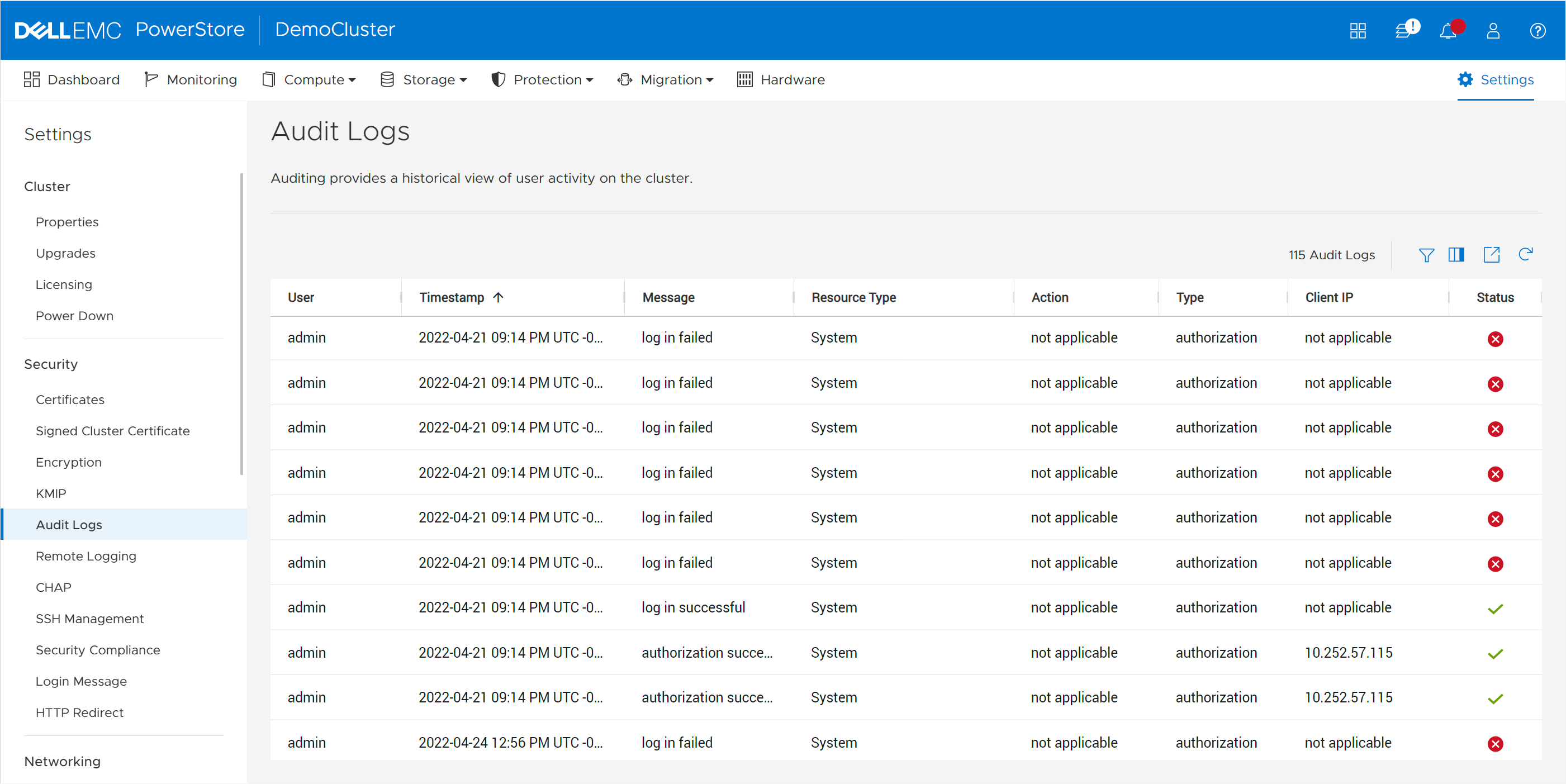

PowerStoreOS 2.0.x introduced support for remote syslog for auditing. These audit types included:

- Config

- System

- Service

- Authentication / Authorization / Logout

PowerStoreOS 3.6 has added support for forwarding of system alerts as well. This equips system administrators with more versatility to monitor their PowerStore appliances from a centralized location.
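On the receiving side, a central collector usually needs to sort forwarded messages by severity. The source does not describe the exact message format that PowerStore emits, so the sketch below relies only on the standard syslog PRI header, where the priority value equals facility x 8 + severity. The function name and the sample message are hypothetical.

```python
import re

# Severity names in numeric order (0 through 7), as defined by syslog.
SEVERITIES = ["emerg", "alert", "crit", "err", "warning", "notice", "info", "debug"]
_PRI = re.compile(r"^<(\d{1,3})>")


def parse_pri(message):
    """Split a syslog PRI header into (facility number, severity name)."""
    match = _PRI.match(message)
    if not match:
        raise ValueError("no syslog PRI header found")
    pri = int(match.group(1))
    if pri > 191:
        raise ValueError("PRI value out of range")
    facility, severity = divmod(pri, 8)
    return facility, SEVERITIES[severity]


# Hypothetical message text, for illustration only.
print(parse_pri("<134>Oct  5 14:22:36 array01 example alert text"))
```

A collector could use the severity to route critical alerts to an on-call channel while archiving informational messages.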

Upgrade Path

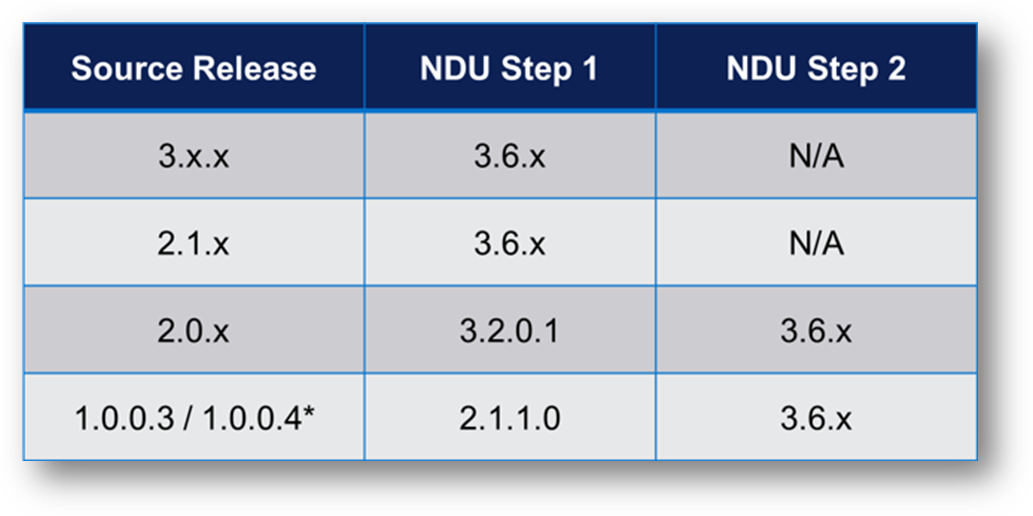

The following table outlines the NDU paths to upgrade to the PowerStoreOS 3.6 release. Depending on your source release, it may be a one or two step upgrade.

Note: *Denotes source release is not supported on PowerStore 500T models

Conclusion

The PowerStoreOS 3.6 release offers numerous feature enhancements that broaden and deepen the platform. It’s no surprise that PowerStore is deployed in over 90% of Fortune 500 vertical sectors[2]. With PowerStore continuing to deliver on hardware, data protection, NVMe/TCP, file, and serviceability in this release, it’s no secret that the product is extremely adaptable and versatile in modern IT environments.

Resources

For additional information about the features described in this blog, plus other information about the PowerStoreOS 3.6 release, see the following white papers and solution documents:

- Dell PowerStore: Introduction to the Platform

- Dell PowerStore Manager Overview

- Dell PowerStore: File Capabilities

- Dell PowerStore: Replication Technologies

- Dell PowerStore: Virtualization Integration

- Dell PowerStore: Metro Volume

- Dell PowerStore: VMware vSphere Best Practices

- Dell PowerStore: VMware Site Recovery Manager Best Practices

- Dell PowerStore: VMware vSphere with Tanzu and TKG Clusters

- NVMe Transport Performance Comparison

Other Resources

- What’s New in PowerStoreOS 3.5?

- PowerStore Simple Support Matrix

- PowerStore: Info Hub – Product Documentation & Videos

- Dell Technologies PowerStore Info Hub

Author: Louie Sasa

[1] PowerStore is the industry's first array to support NVMe/TCP for vVols. Based on Dell internal analysis, September 2023.

[2] As of January 2023, based on internal analysis of vertical industry categories from 2022 Fortune 500 rankings.

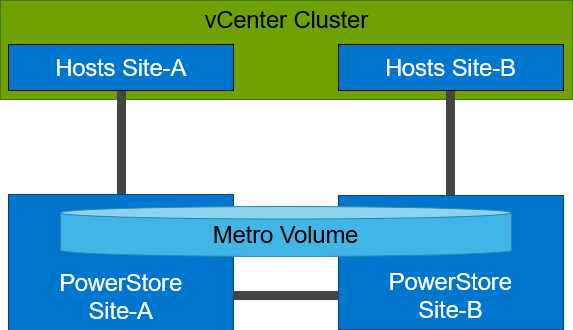

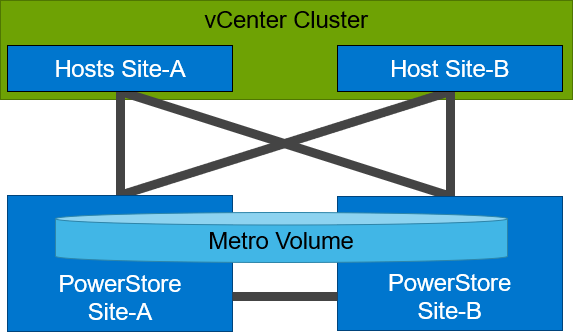

Protecting VMware Workloads with PowerStore Metro Volumes

Mon, 09 Oct 2023 18:14:42 -0000

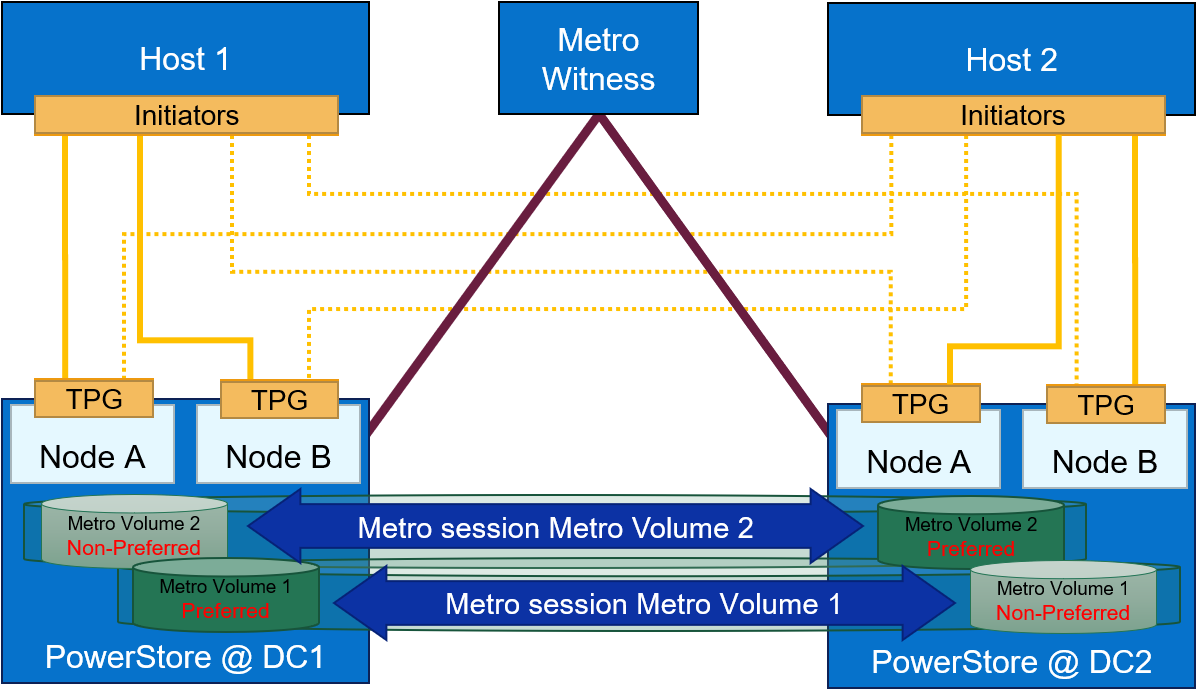

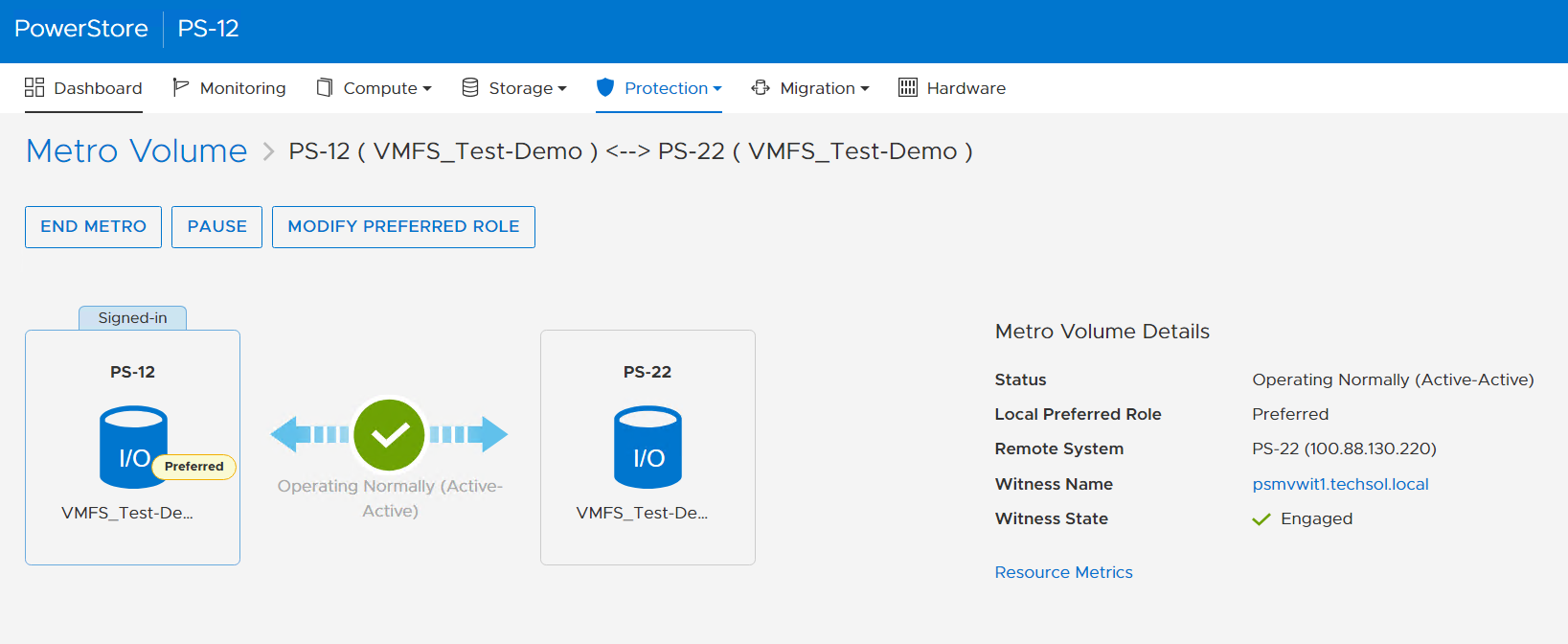

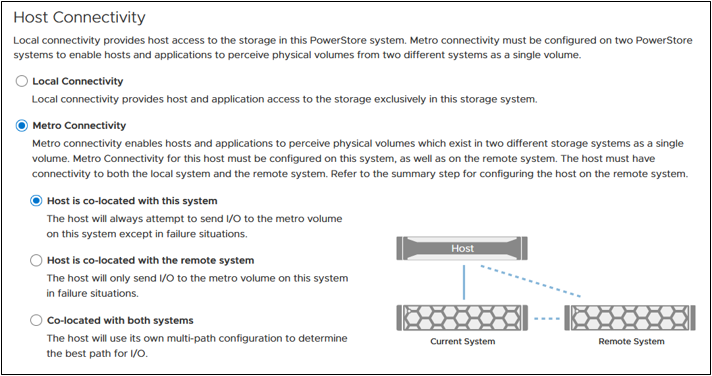

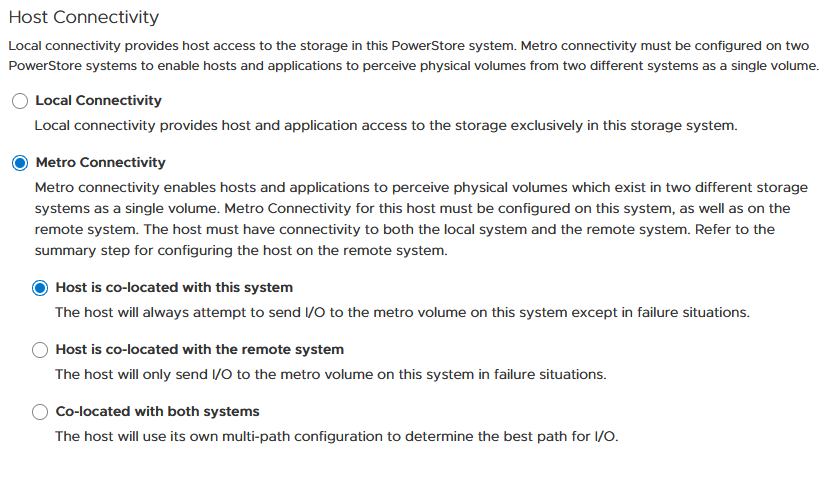

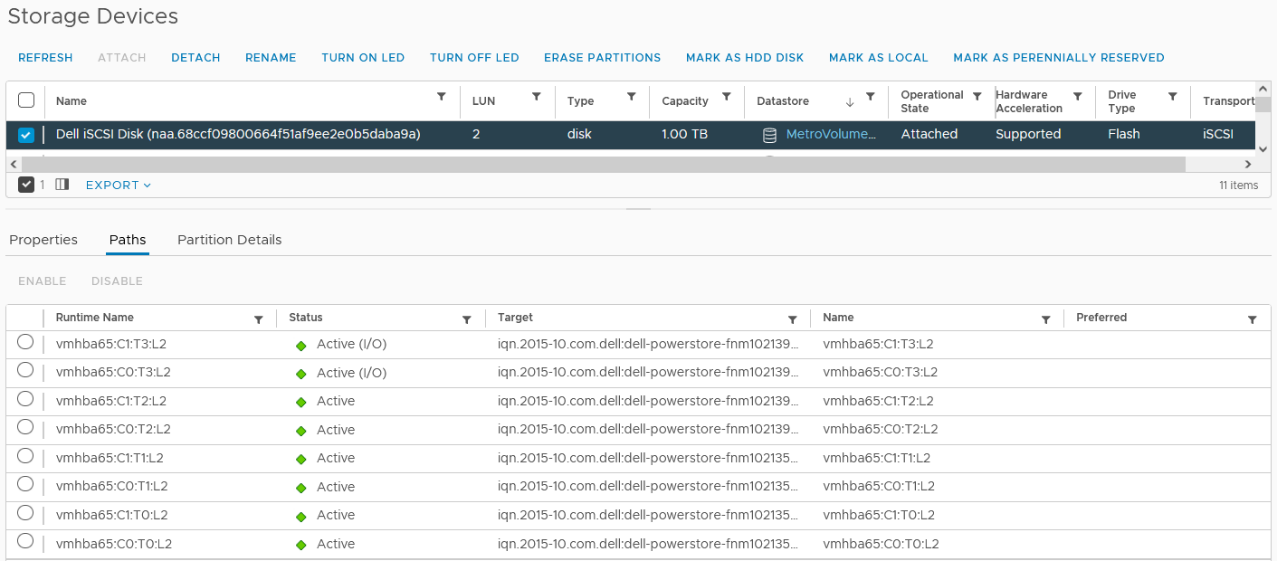

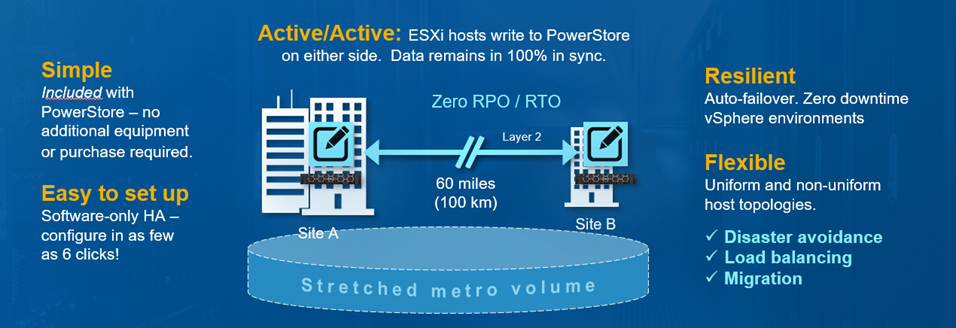

PowerStore’s Metro Volume replication allows storage administrators to create a high-availability shared SAN environment across PowerStore clusters. Metro Volume provides symmetric active/active data access to VMware environments for use cases such as planned migrations, disaster avoidance, and proactive resource rebalancing.

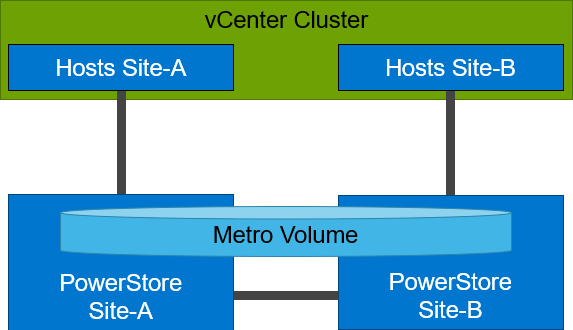

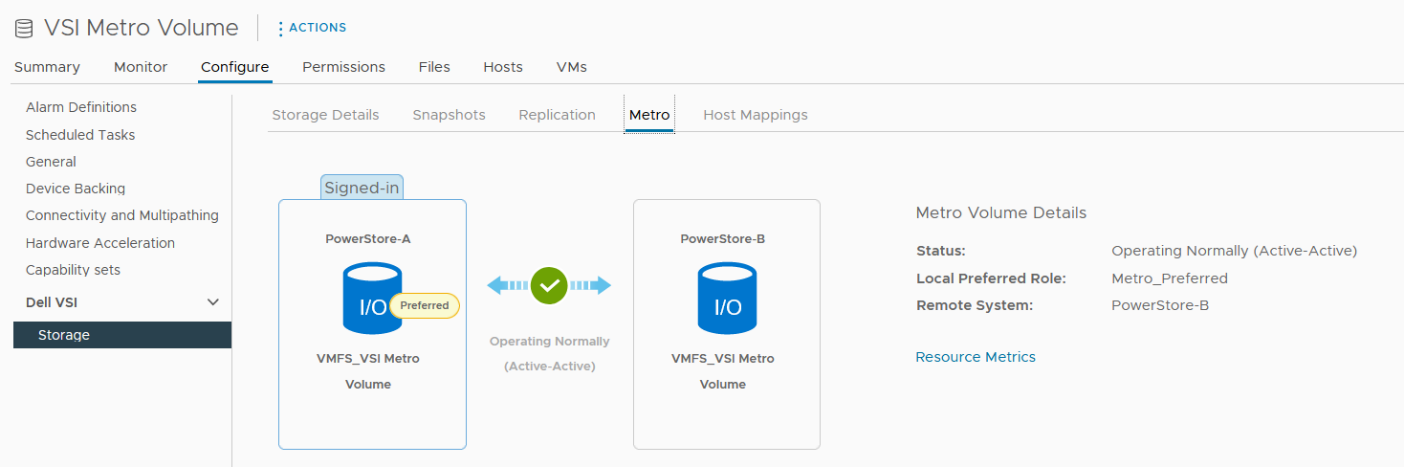

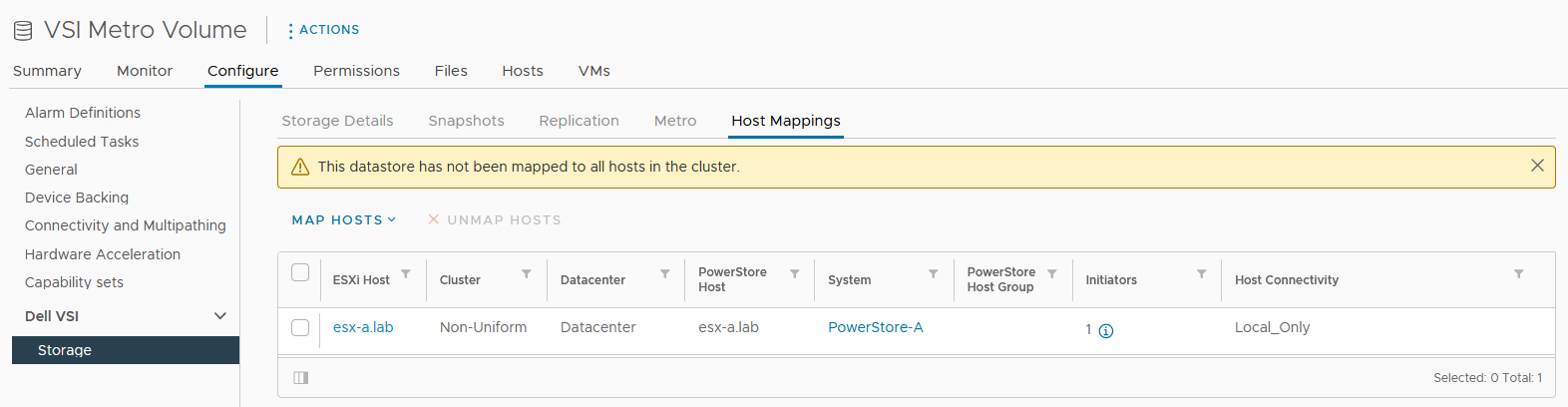

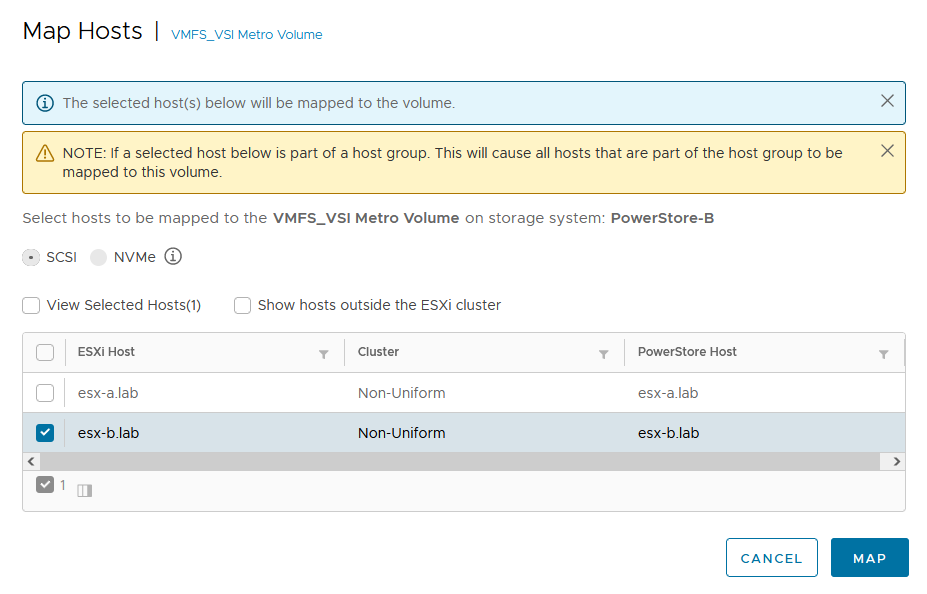

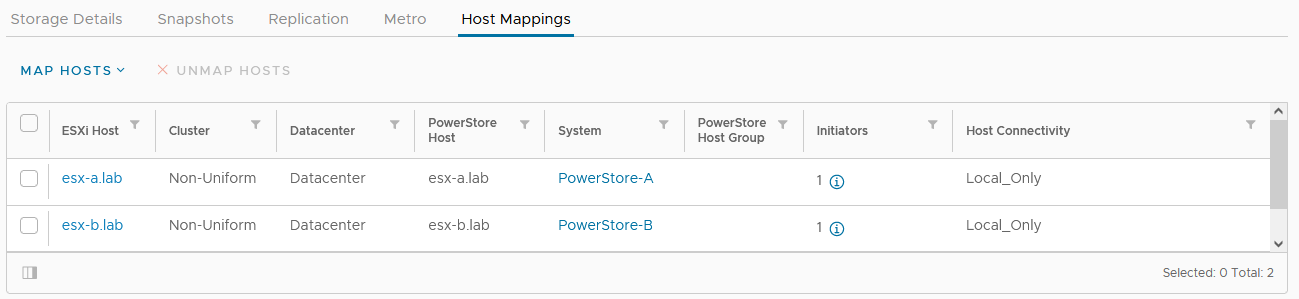

PowerStore supports two different configurations with Metro volume access: non-uniform and uniform. In this blog, our configuration is non-uniform.

Here is our sample non-uniform configuration, where each host has access only to its local PowerStore system:

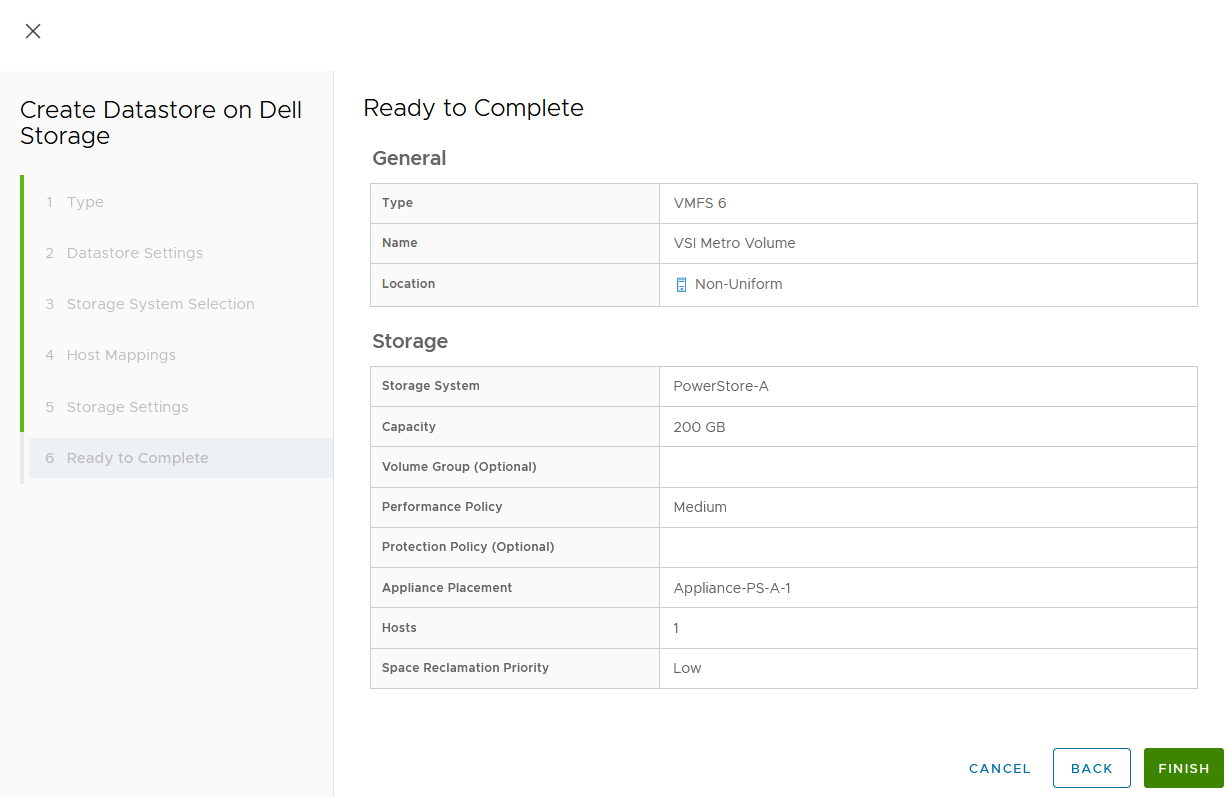

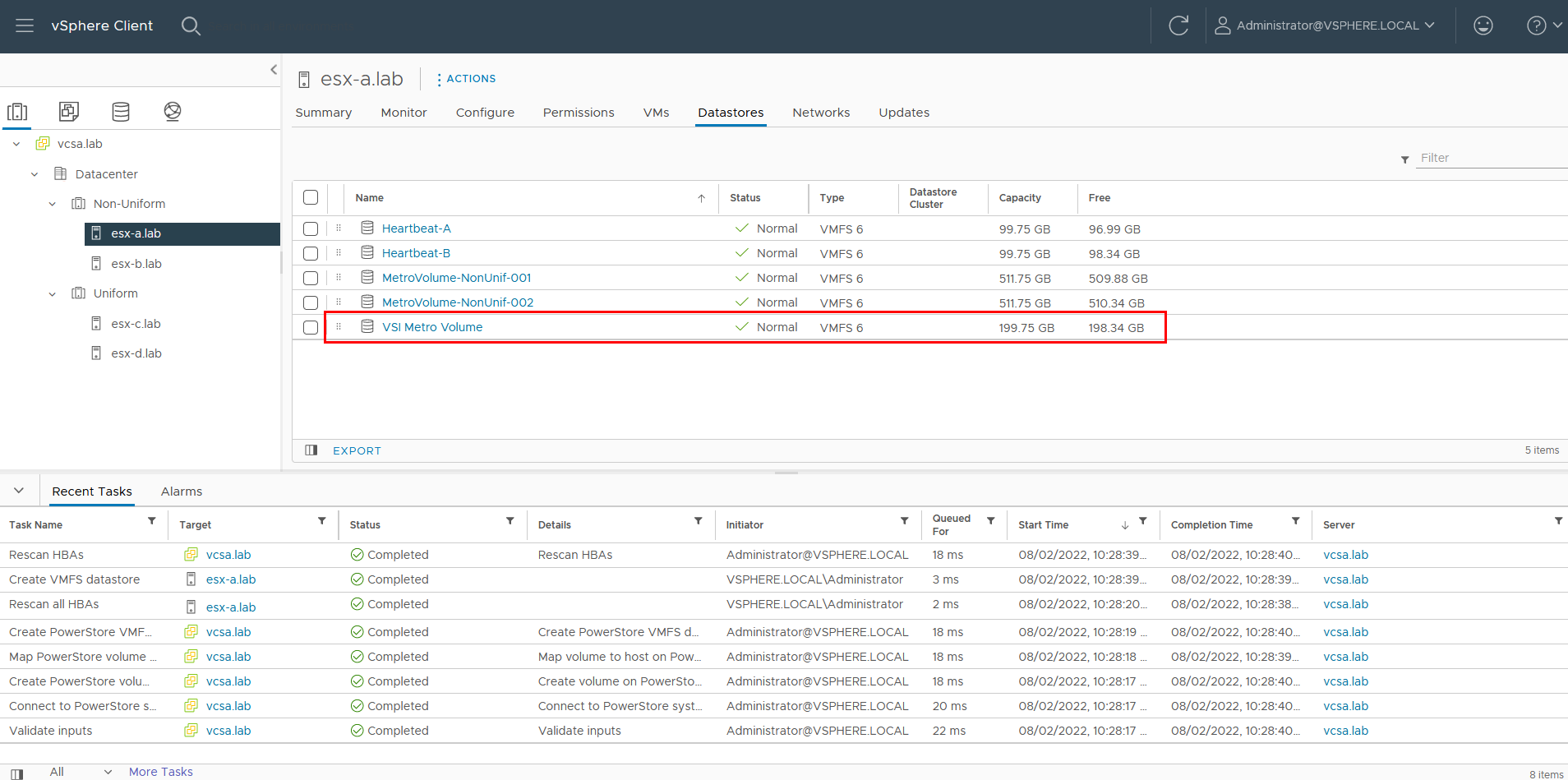

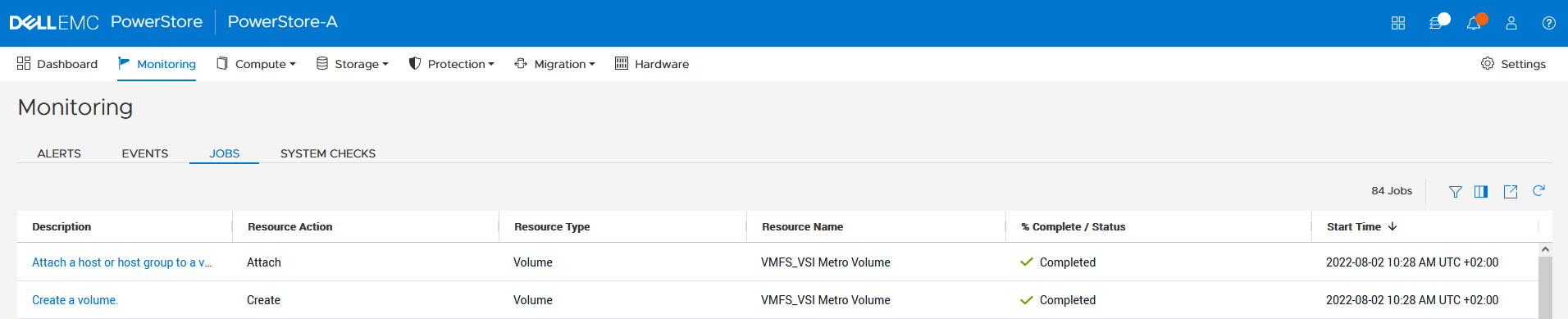

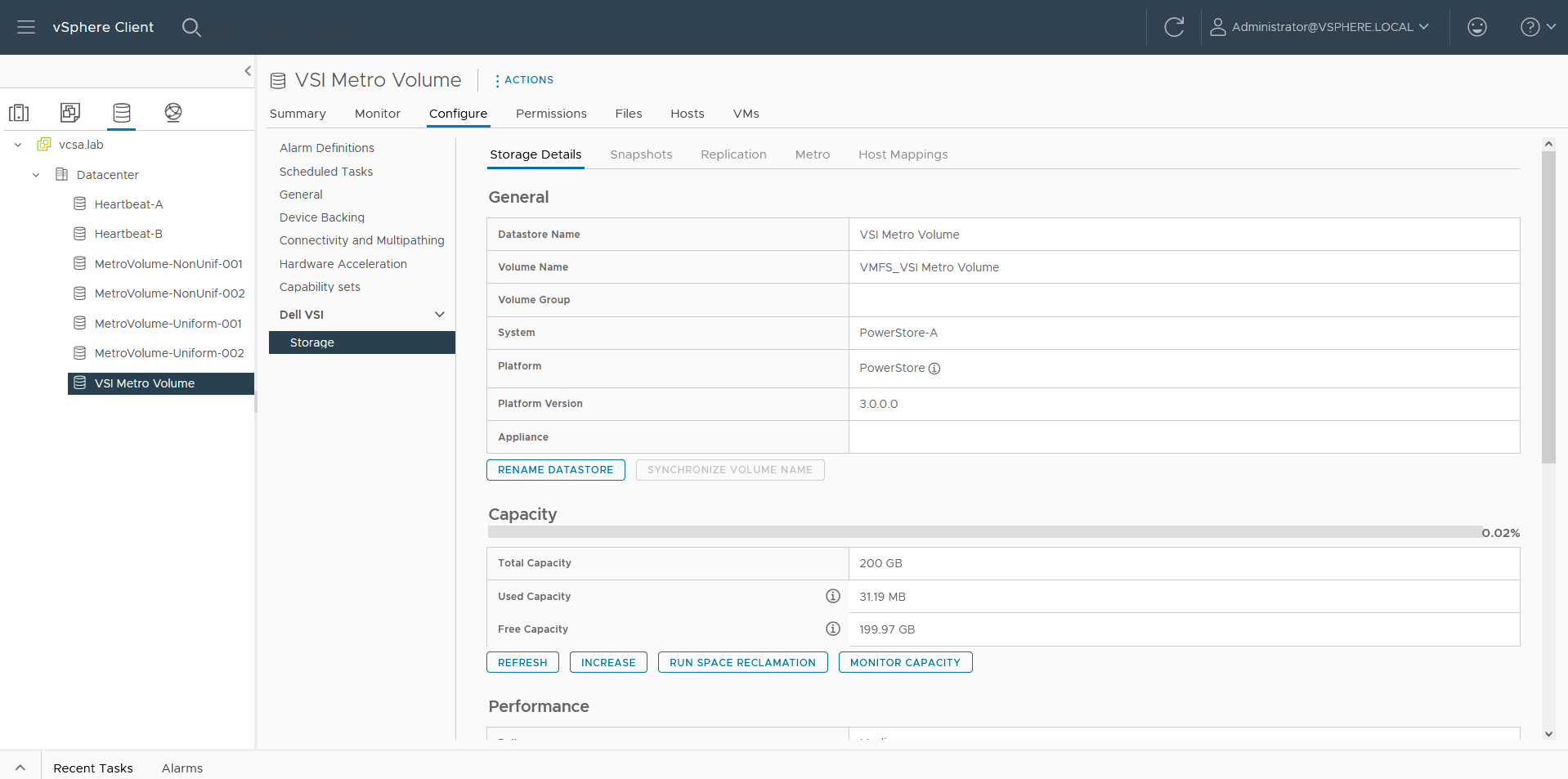

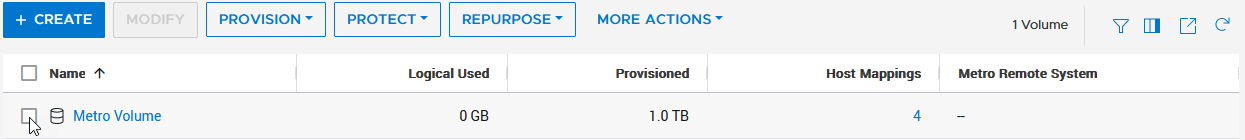

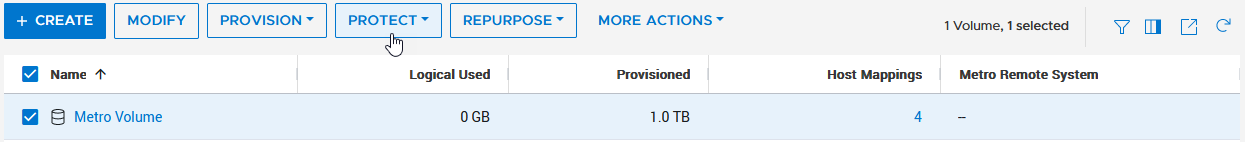

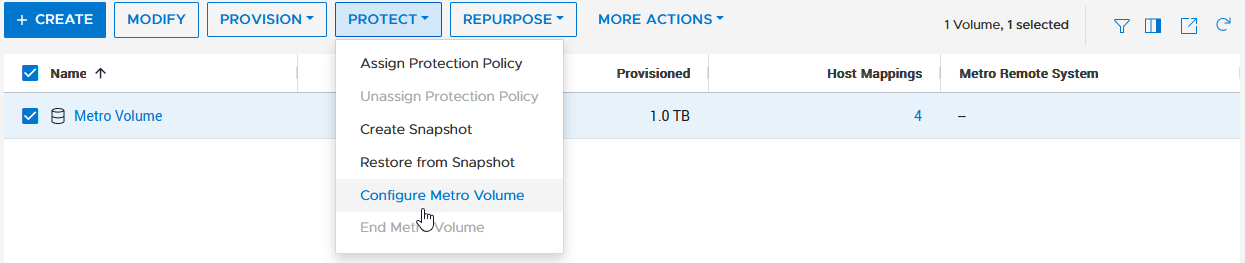

Creating the Metro Volume

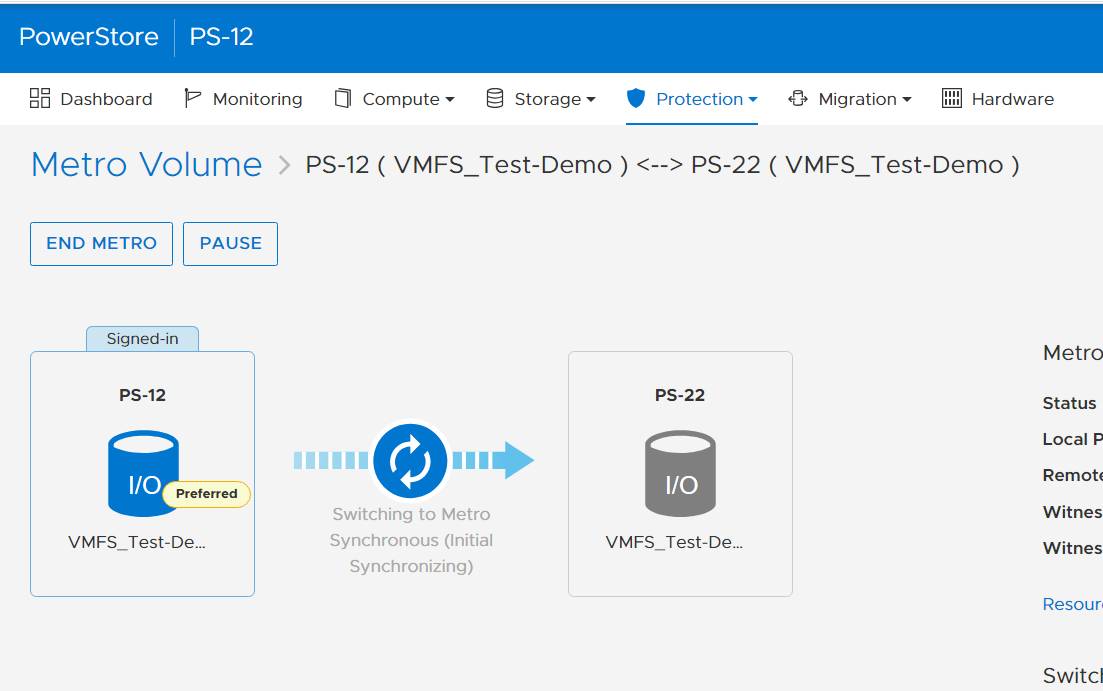

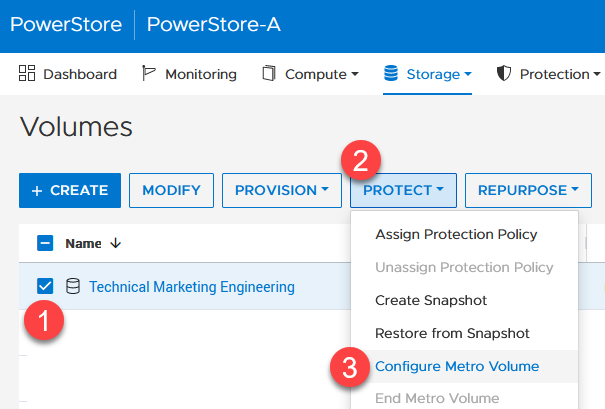

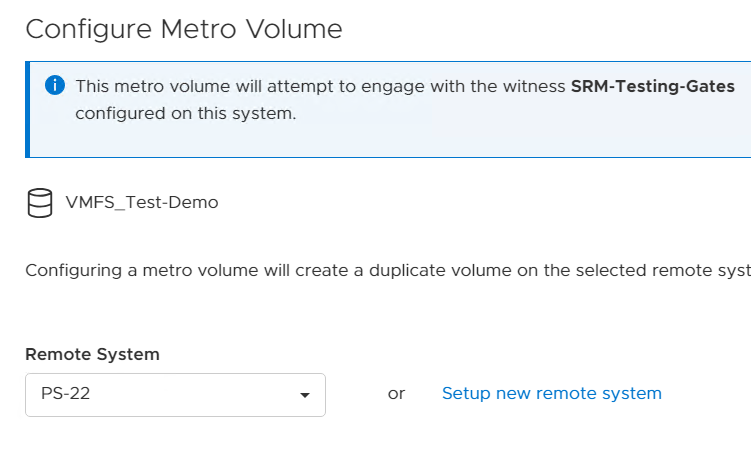

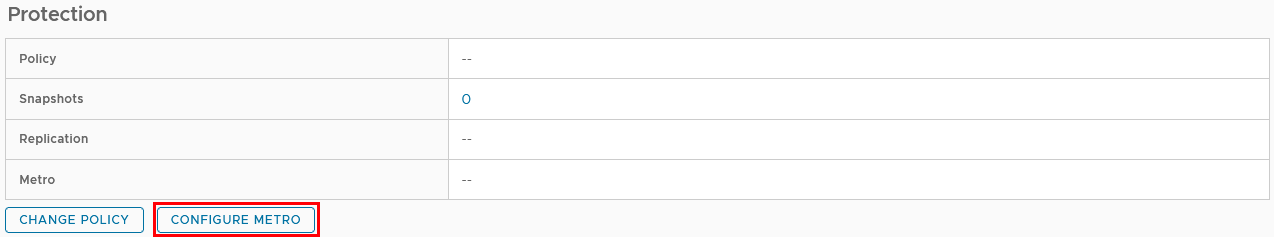

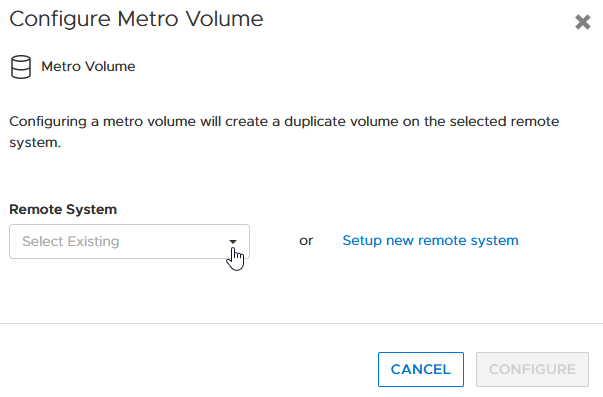

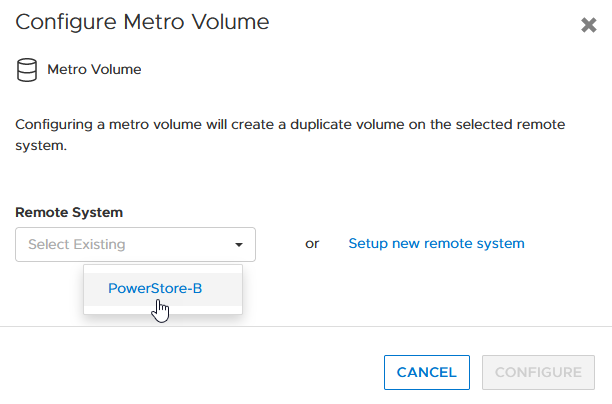

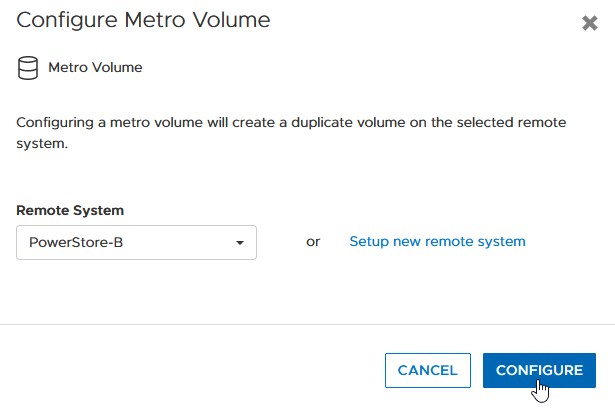

1. Creating the metro volume session is a relatively simple process that involves a few clicks. Log in to PowerStore Manager, select Storage, select the volume (here, VMFS_Test-Demo), and then select Configure Metro Volume.

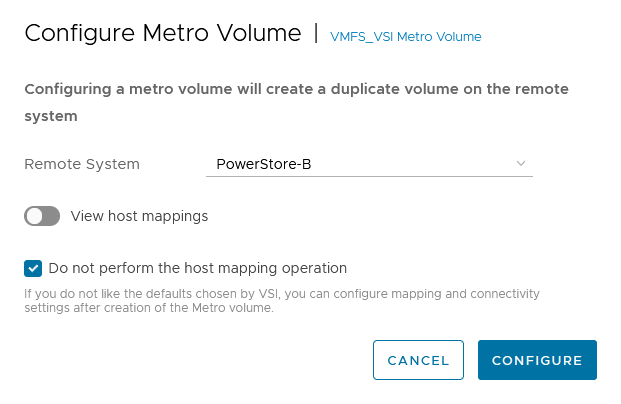

2. On the Configure Metro Volume page, select the remote PowerStore to create the duplicate volume.

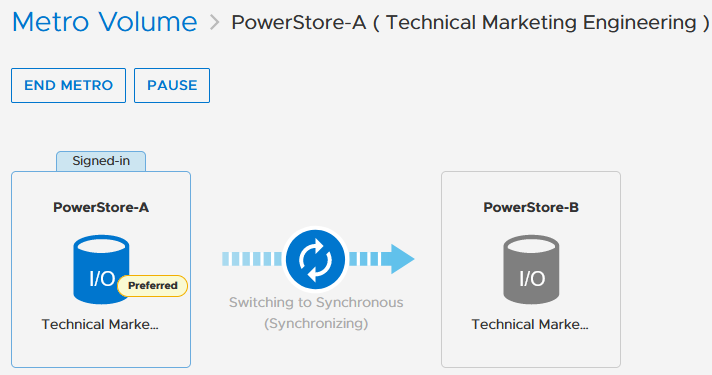

3. Now we see the volume switching to metro synchronous.

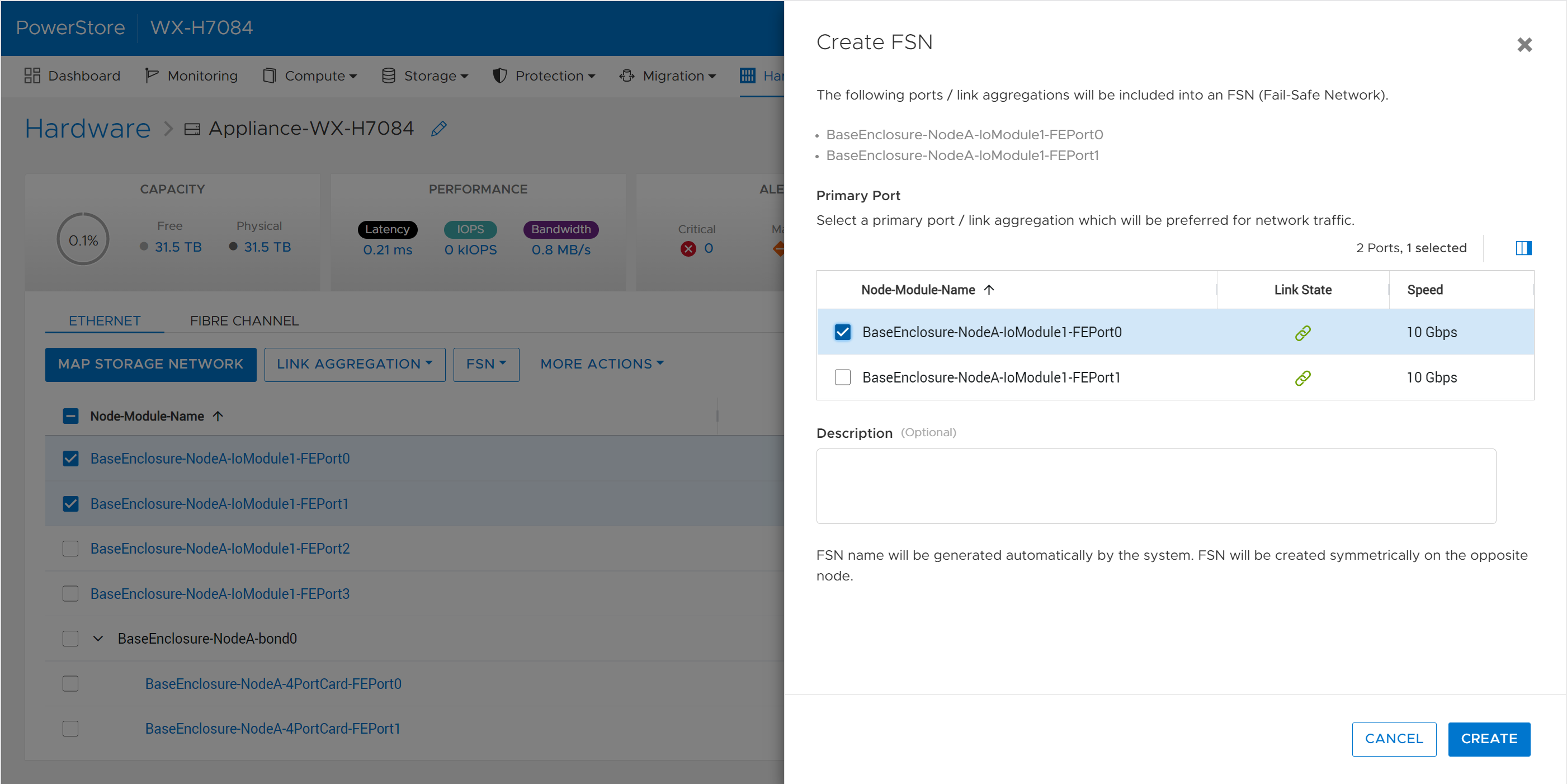

Creating the Metro Witness

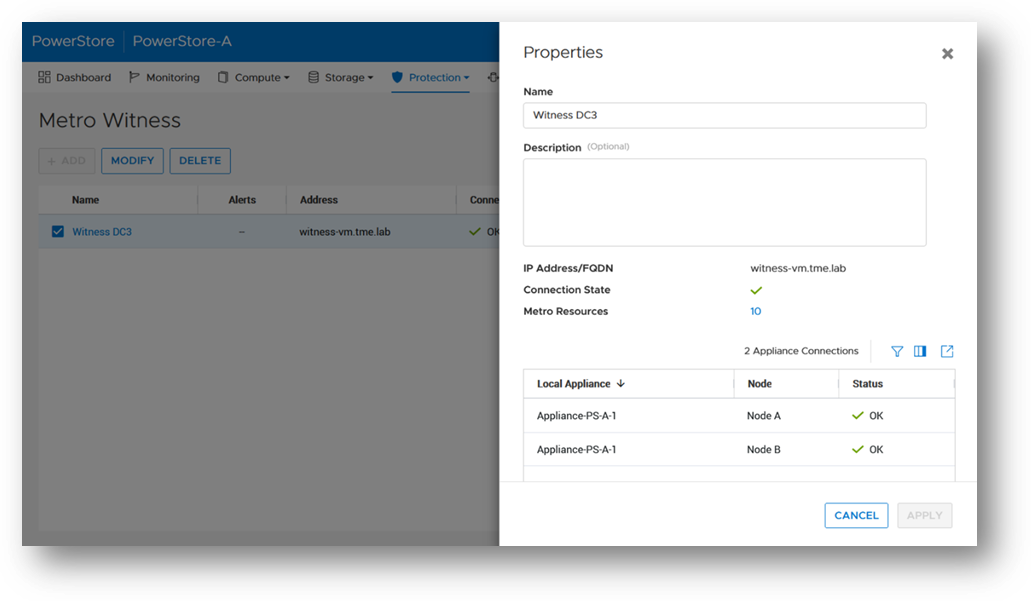

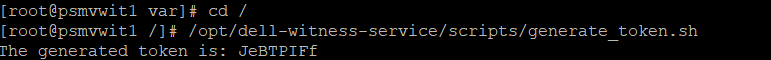

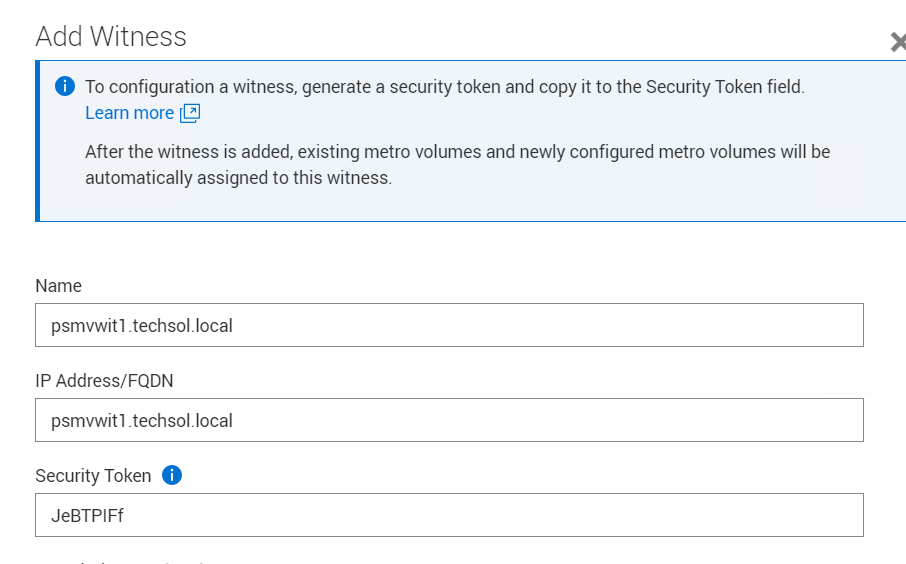

Starting with PowerStore version 3.6, Metro Volume supports a witness server. The witness server is a third party that is installed on a stand-alone host. The witness observes the status of the local and remote PowerStore systems. When a failure occurs, the witness server determines which system remains accessible to hosts and continues to service I/Os. When configuring Metro Witness on a PowerStore appliance, you must generate a unique token.

Note: You must configure the witness on each PowerStore cluster.

1. The following is an example of using the generate_token.sh script to create the token JeBTPIFf:

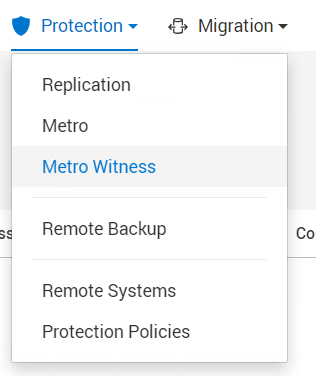

2. After gathering the token, select Protection > Metro Witness to enter the Metro witness configuration details.

3. Enter the witness details, including the DNS or IP address and security token.

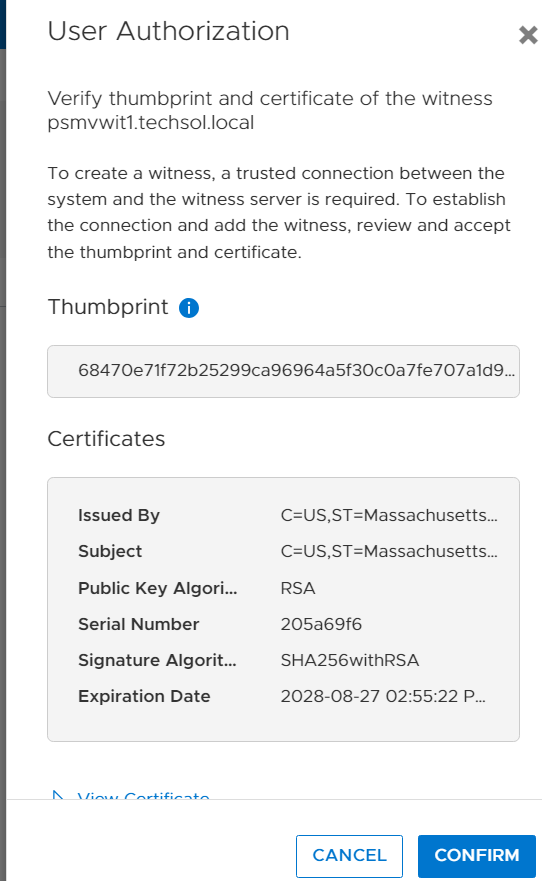

4. Confirm the witness settings.

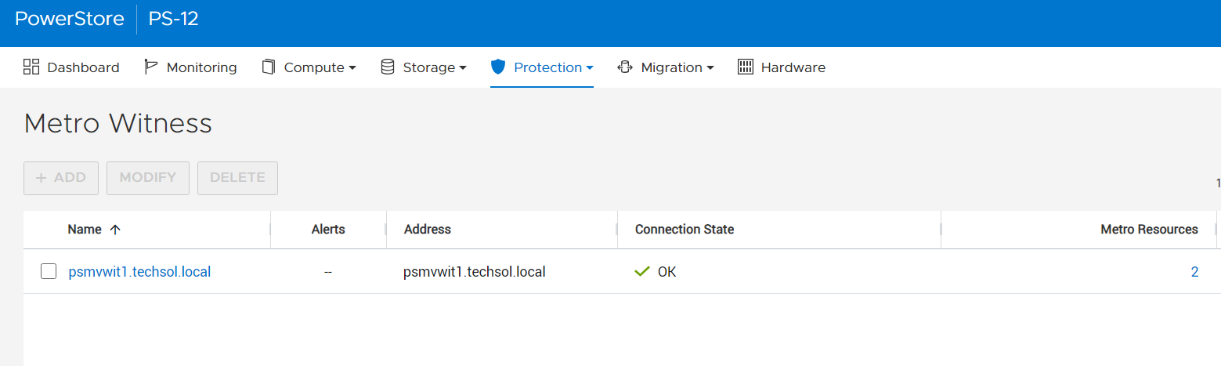

5. Witness is connected to the metro sessions.

6. The metro session is synced, and the witness is now engaged.

Metro Volume is designed to give VMware environments the ability to operate synchronously without disruption. Metro Volume integrates seamlessly with vSphere Metro Storage Cluster for PowerStore customers who must avoid disasters and data unavailability.

For more information, see the following resources.

Resources

- White paper: Dell PowerStore: Metro Volume

- White paper: Dell PowerStore: Replication Technologies

Author: Jason Gates

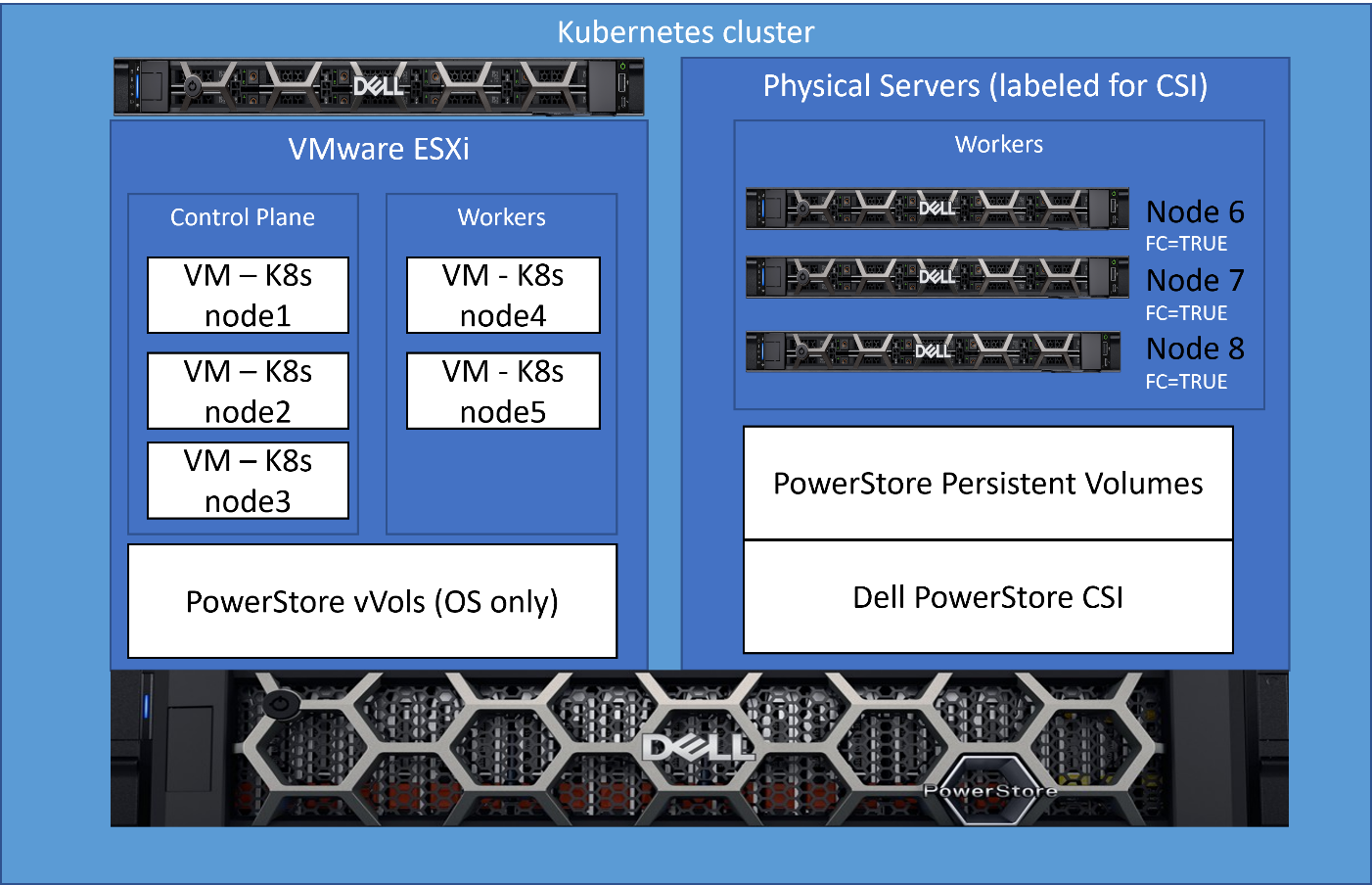

Dell PowerStore Enables Kubernetes Stretched Clusters

Thu, 05 Oct 2023 14:44:36 -0000

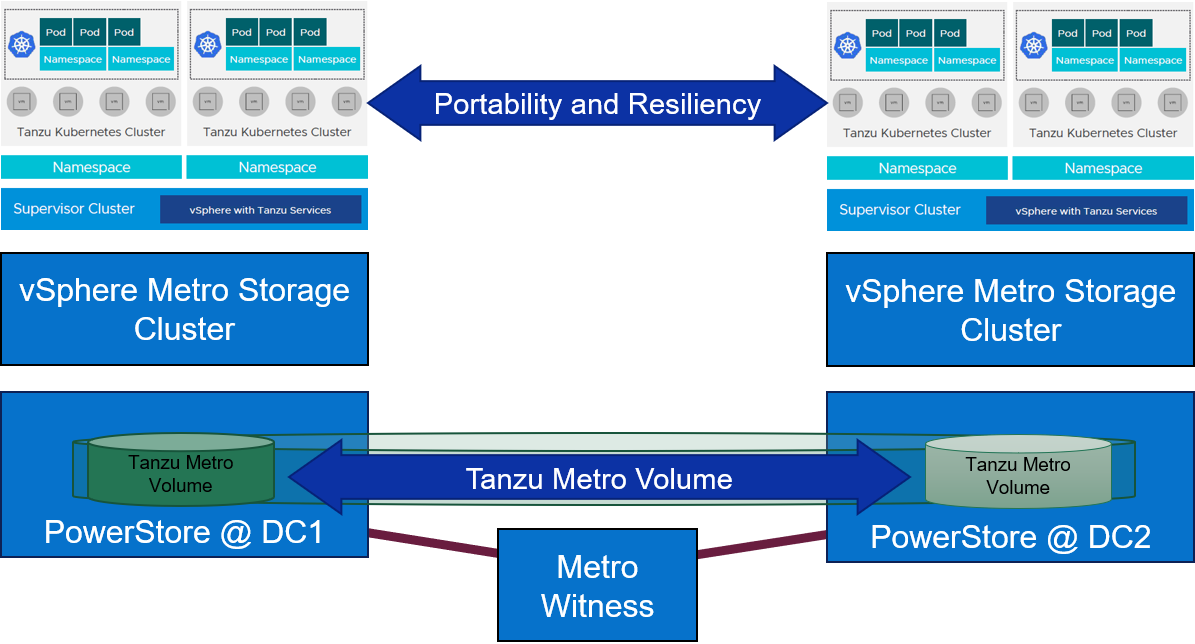

Kubernetes (K8s) is one of the hottest platforms for building enterprise applications. Keeping enterprise applications online is a major focus for IT administrators. K8s includes many features to provide high availability (HA) for enterprise applications. Dell PowerStore and its Metro volume feature can make K8s availability even better!

Enhance local availability

K8s applications should be designed to be as ephemeral as possible. However, there are some workloads such as databases that can present a challenge. If these workloads are restarted, it can cause an interruption to applications, impacting service levels.

Deploying K8s on VMware vSphere adds a layer of virtualization that allows a virtual machine, in this case a K8s node, to be migrated live (vMotion) to another host in the cluster. This can keep pods up and running and avoid a restart when host hardware changes are required. However, if those pods have a large storage footprint and multiple storage appliances are involved, storage migrations can be resource and time consuming.

Dell PowerStore Metro Volume provides synchronous data replication between two volumes on two different PowerStore clusters. The volume is an identical, active-active copy on both PowerStore clusters. This allows compute-only virtual machine migrations. Compute-only migrations occur much faster and are much more practical in most cases. Therefore, more workloads can take advantage of vMotion and availability is increased.

PowerStoreOS 3.6 introduces a witness component to the Metro Volume architecture. The functional design of the witness adds more resiliency to Metro Volume deployments and further mitigates the risk of split-brain situations. The witness enables PowerStoreOS 3.6 to make intelligent decisions across a wider variety of infrastructure outage scenarios, including unplanned outages.

K8s stretched or geo clusters

Spreading an application cluster across multiple sites is a common design for increasing availability. The compute part is easy to solve because K8s will restart workloads on the remaining nodes, regardless of location. However, if the workload requires persistent storage, the storage needs to exist in the other site.

PowerStore Metro Volume solves this requirement. Metro Volume support for VMware ESXi synchronizes volumes across PowerStore clusters to meet latency and distance requirements. In addition to the enhanced vMotion experience, PowerStore Metro volume provides active-active storage to VMware VMFS datastores that can span two PowerStore clusters. For in-depth information about PowerStore Metro Volume, see the white paper Dell PowerStore: Metro Volume.

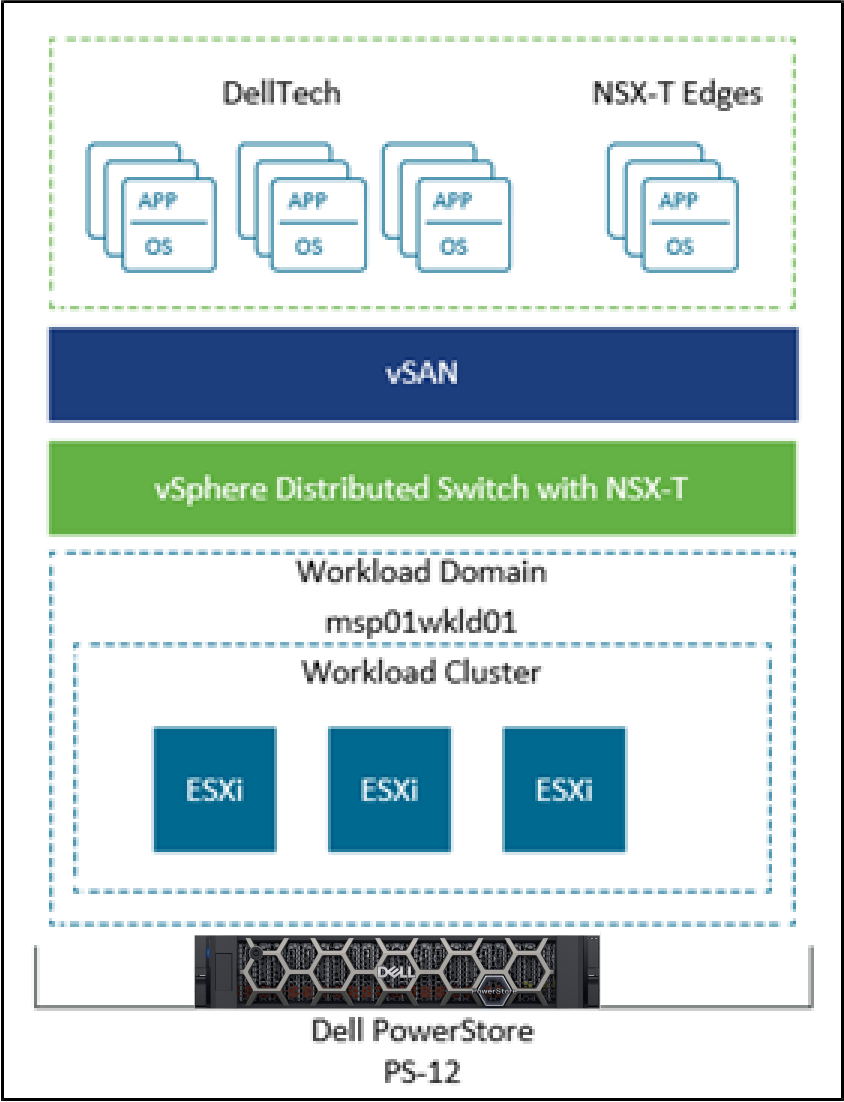

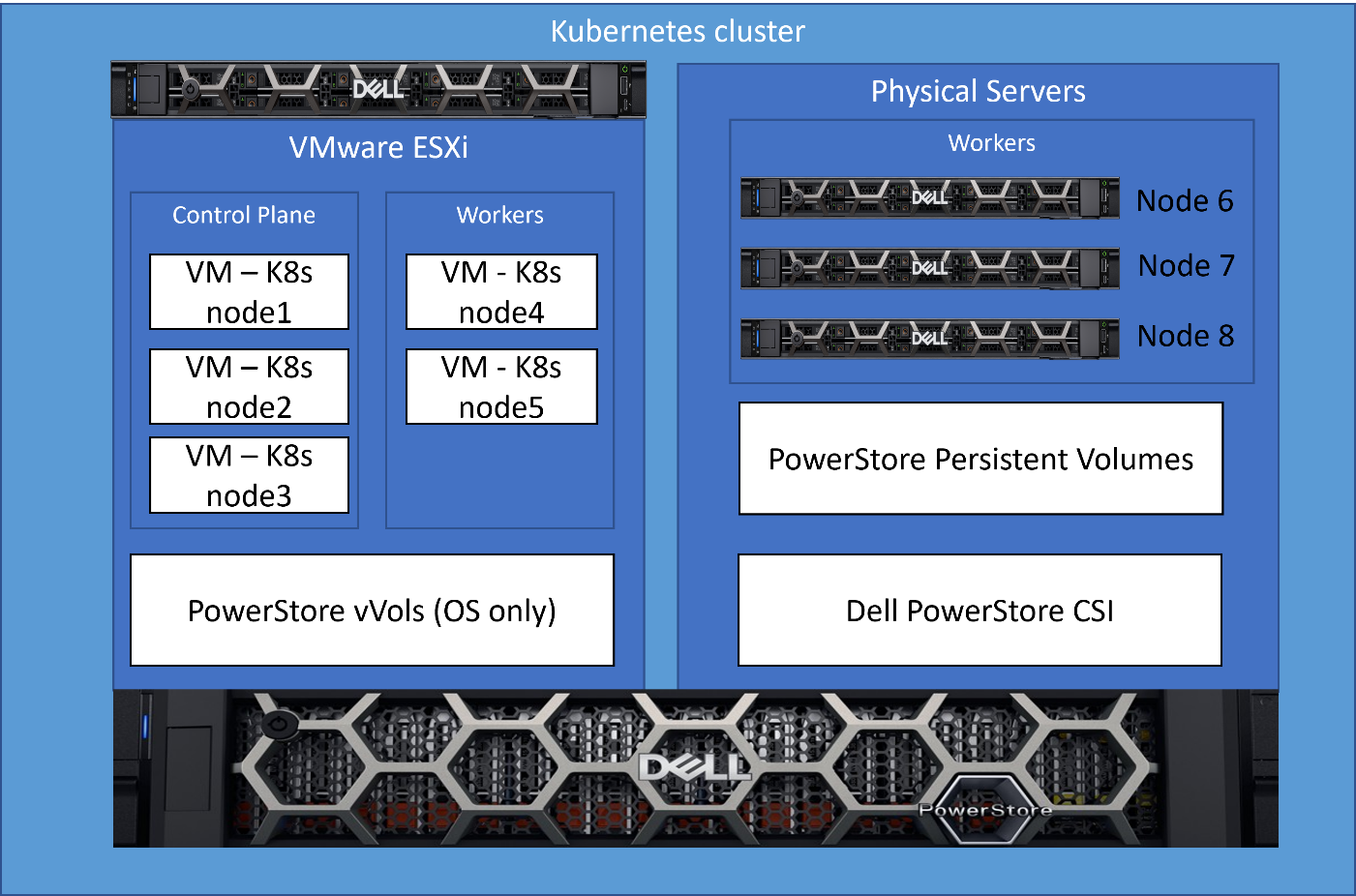

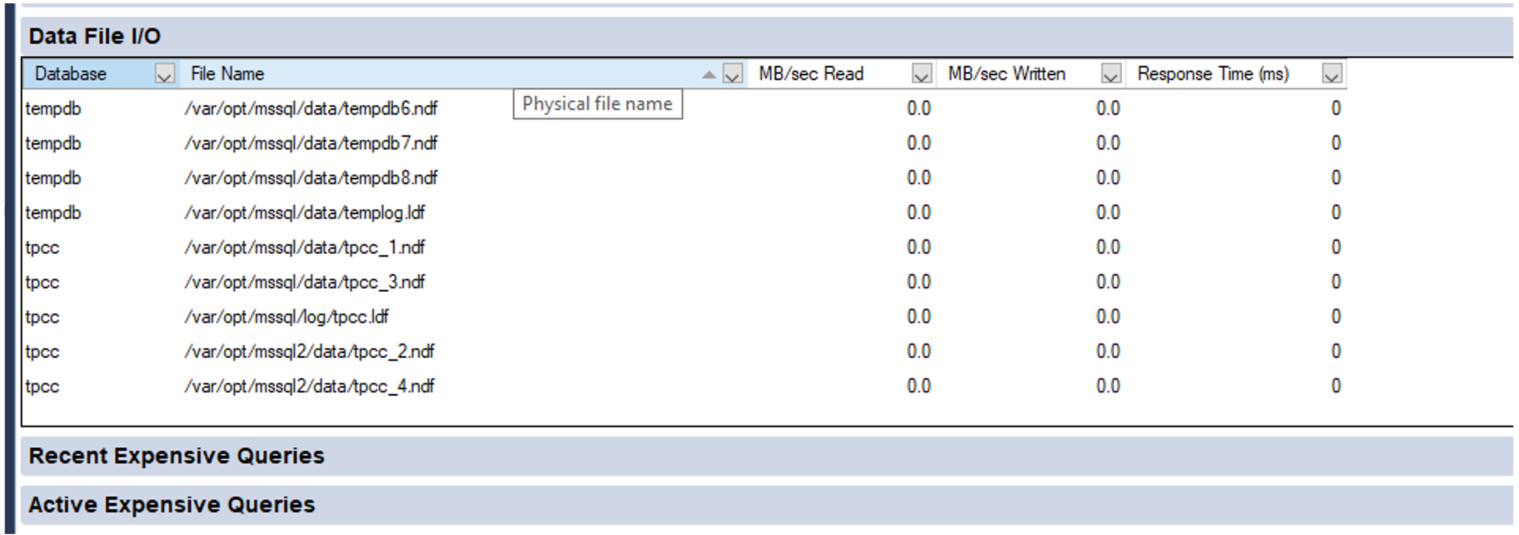

Lab testing

We tested Dell PowerStore Metro Volume with a SQL Server workload driven by HammerDB on a stretched K8s cluster running on vSphere with three physical hosts and two PowerStore clusters[1]. The K8s cluster was running Rancher RKE2 1.25.12+rke2r1 with a VMFS datastore on PowerStore Metro Volume, using the VMware CSI provider for storage access. We performed vMotion compute-only migrations and simulated storage network outages as part of the testing.

During the testing, the synchronized active-active copy of the volume was able to assume the IO workload, maintain IO access, and keep SQL Server and the HammerDB workload online. This prevented client disconnects and reconnects, application error messages, and costly recovery time to synchronize and recover data.

After we successfully completed testing on Rancher, we pivoted to another K8s platform: a VMware Tanzu Kubernetes Cluster deployed on VMware vSphere 8 Update 1. We deployed the SQL Server and HammerDB workload and performed a number of other K8s deployments in parallel. Workload test results were consistent. When we took the PowerStore cluster that was running the workload offline, both compute and storage remained available. The result was that the containerized applications were continuously available: not only during the failover, but during the failback as well.

In our Tanzu environment, Metro Volume went beyond data protection alone. It also provided infrastructure protection for objects throughout the Workload Management hierarchy. For example, the vSphere Tanzu supervisor cluster control plane nodes, pods, Tanzu Kubernetes clusters, image registry, and content library can all be assigned a VM storage policy and a corresponding storage class which is backed by PowerStore Metro Volumes. Likewise, NSX Manager and NSX Edge networking components on Metro Volume can also take advantage of this deployment model by remaining highly available during an unplanned outage.

Figure 1. Metro Volume with a witness adds portability and resiliency to Tanzu deployments

For more information about PowerStore Metro Volume, increasing availability on SQL Server, and other new features and capabilities, be sure to check out all the latest information on the Dell PowerStore Info Hub page.

Authors:

Doug Bernhardt, Sr. Principal Engineering Technologist, LinkedIn

Jason Boche, Sr. Principal Engineering Technologist, LinkedIn

[1] Based on Dell internal testing, conducted in September 2023.

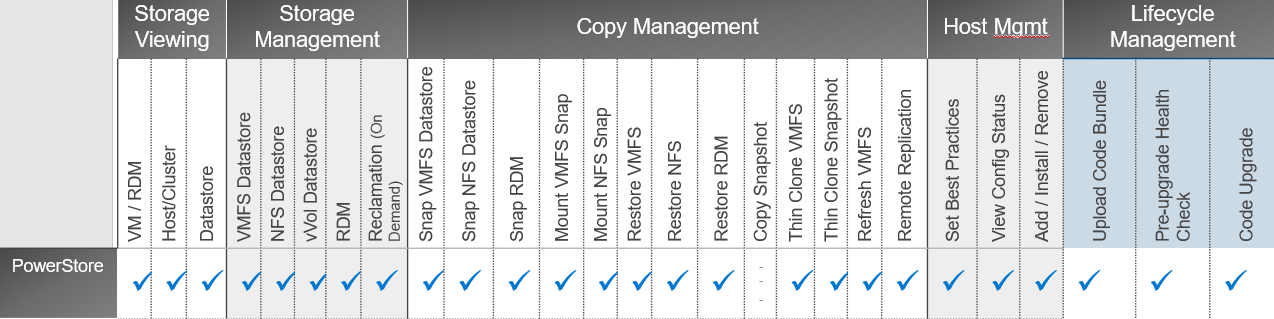

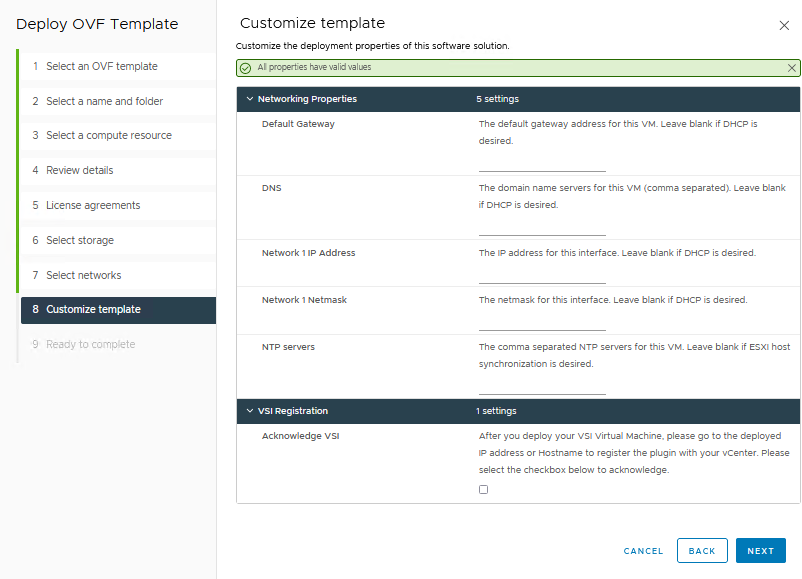

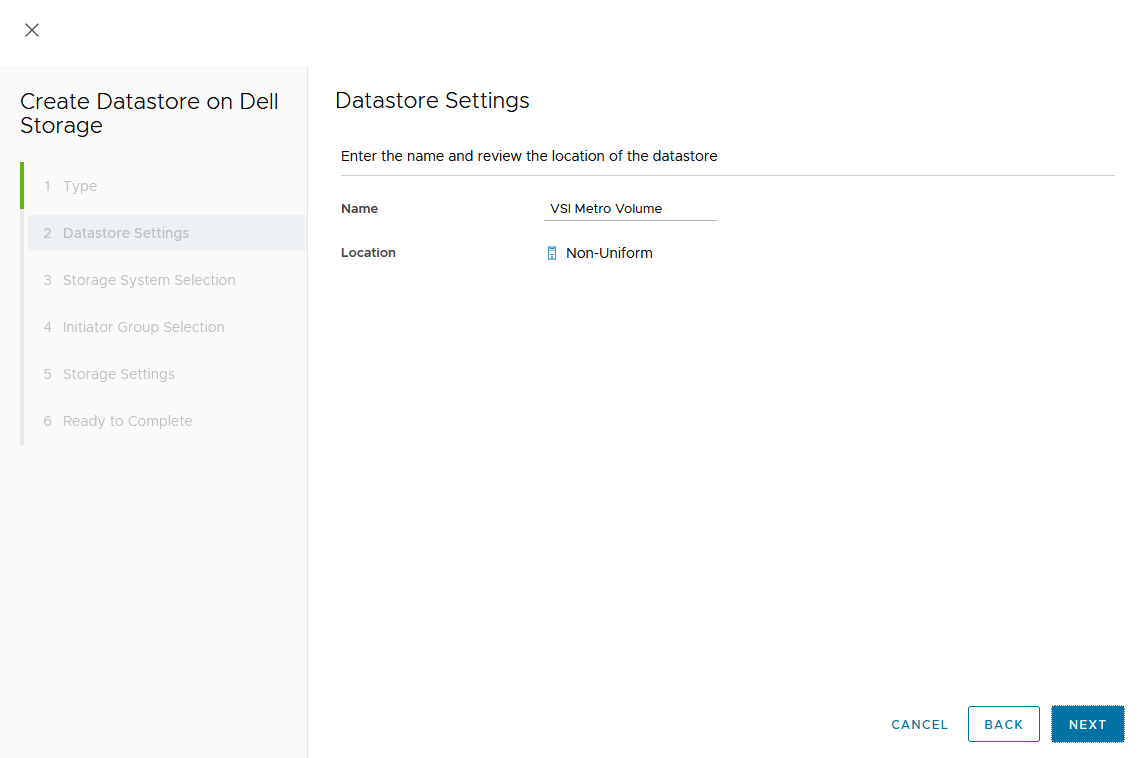

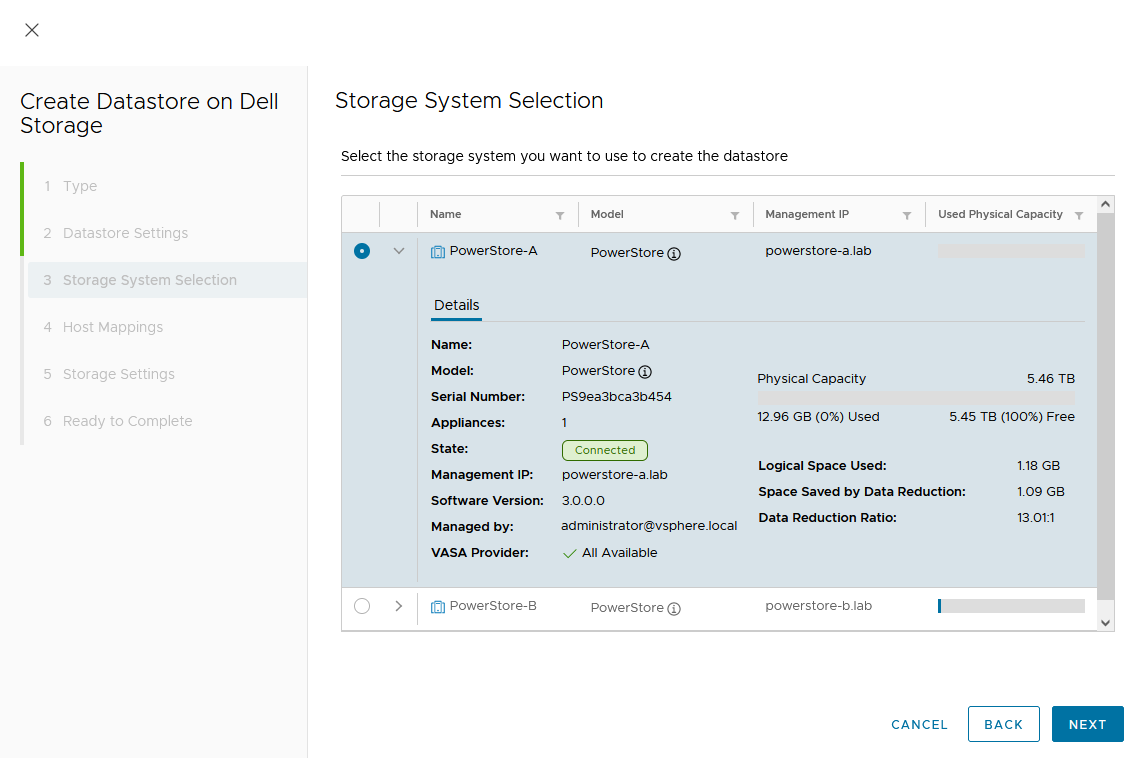

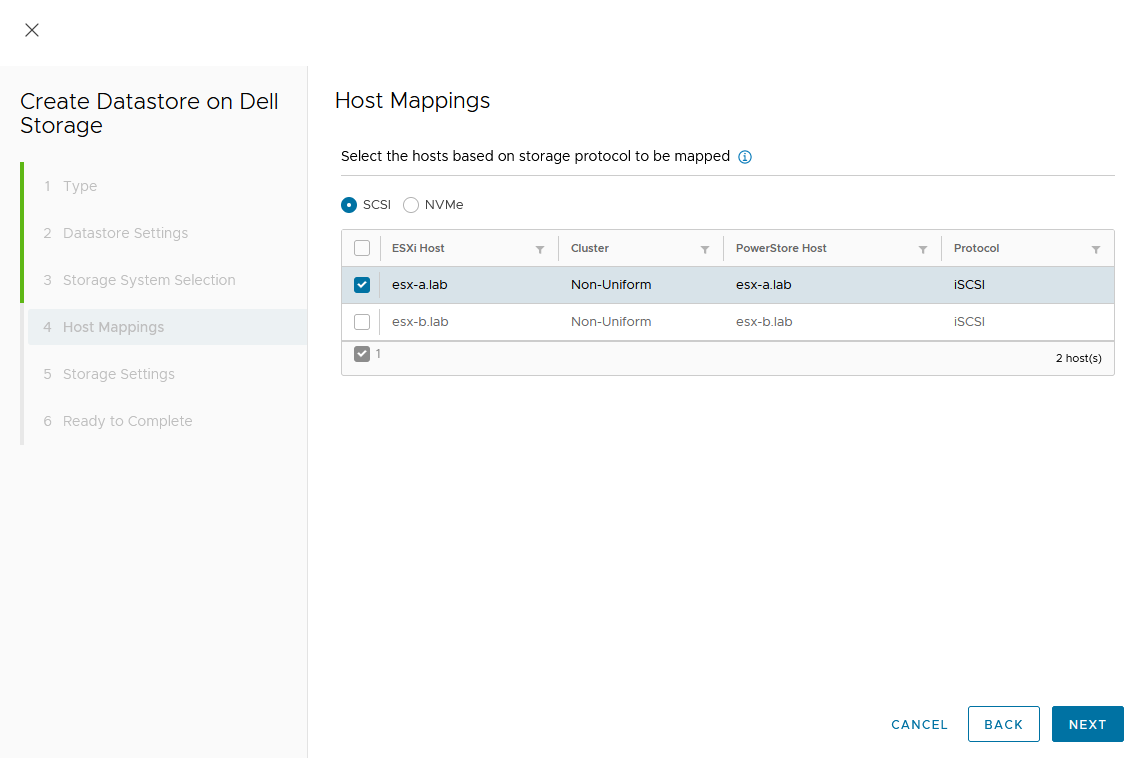

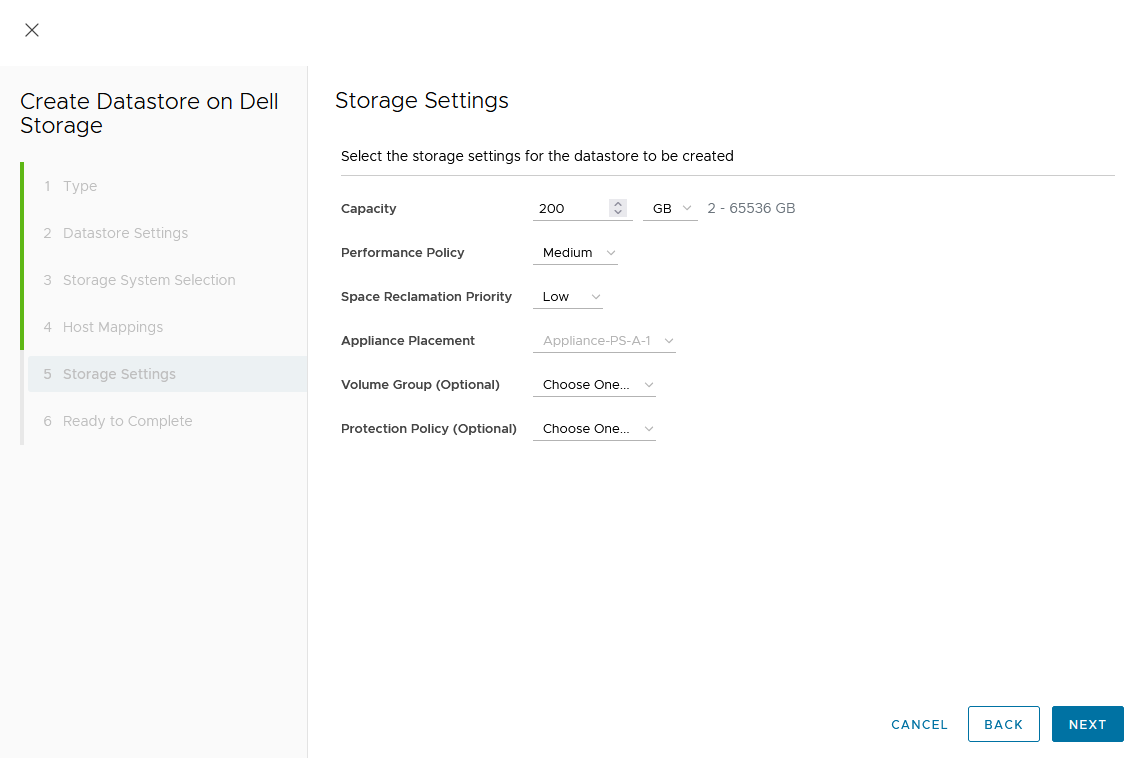

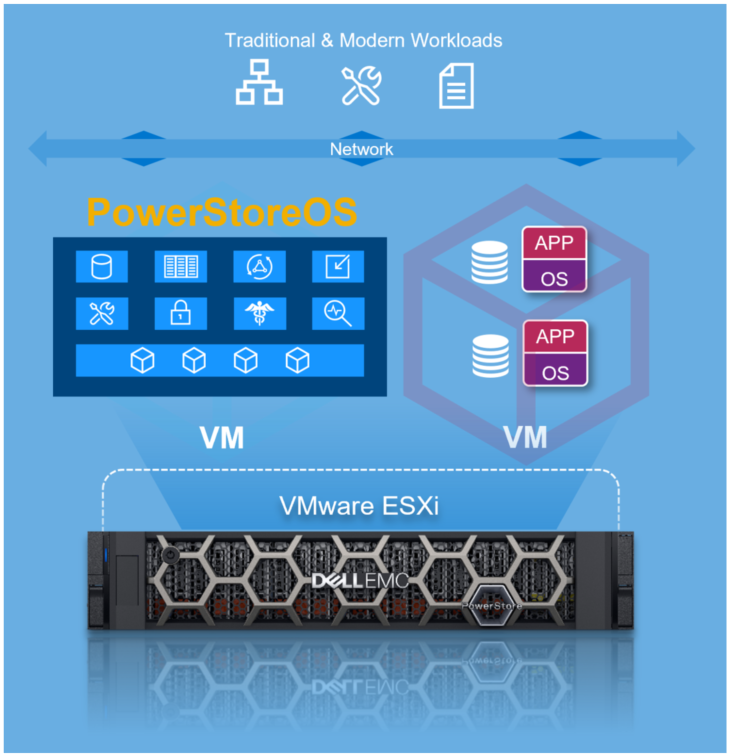

PowerStore: Lifecycle Management with Virtual Storage Integrator

Thu, 05 Oct 2023 14:39:06 -0000

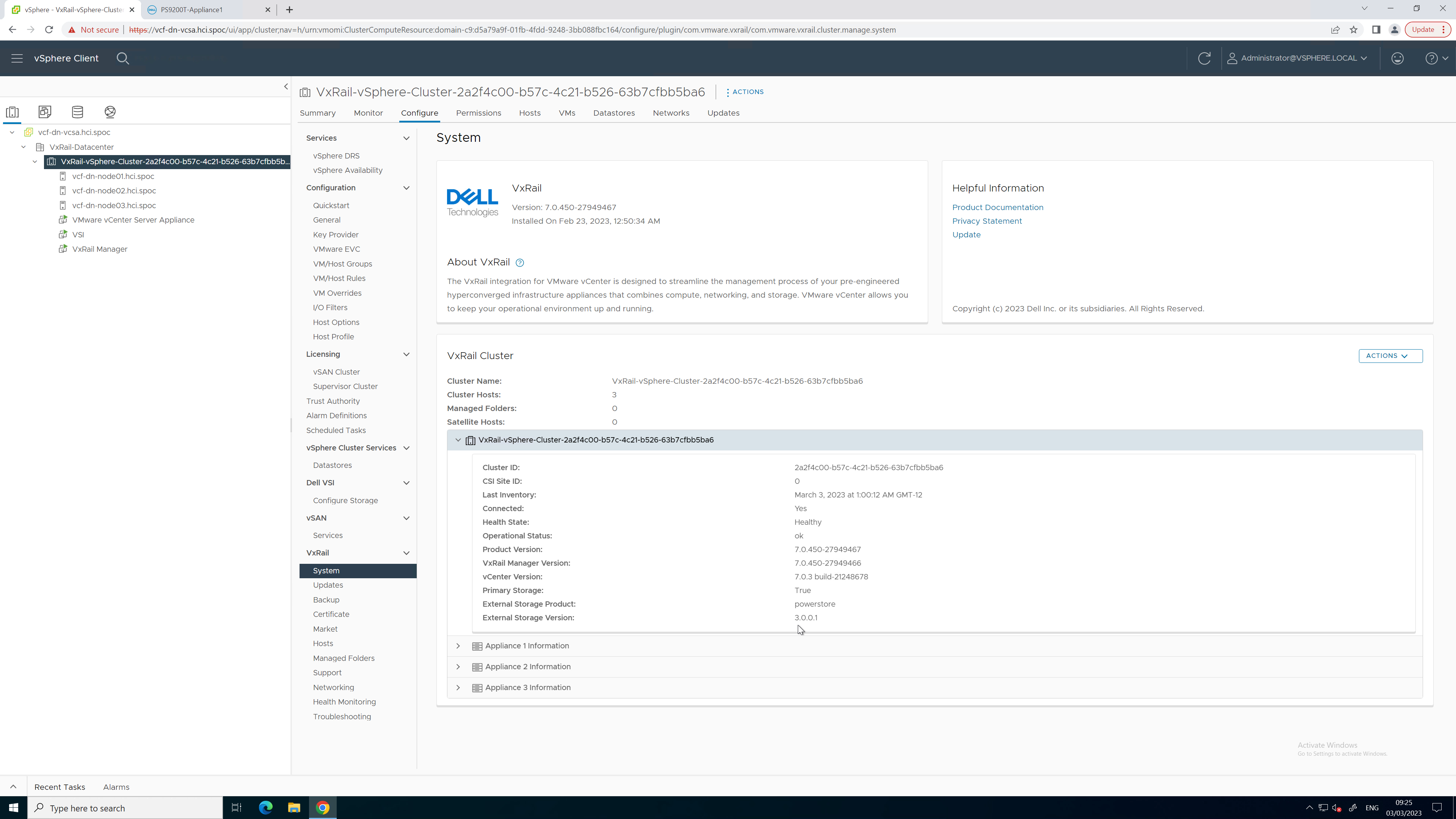

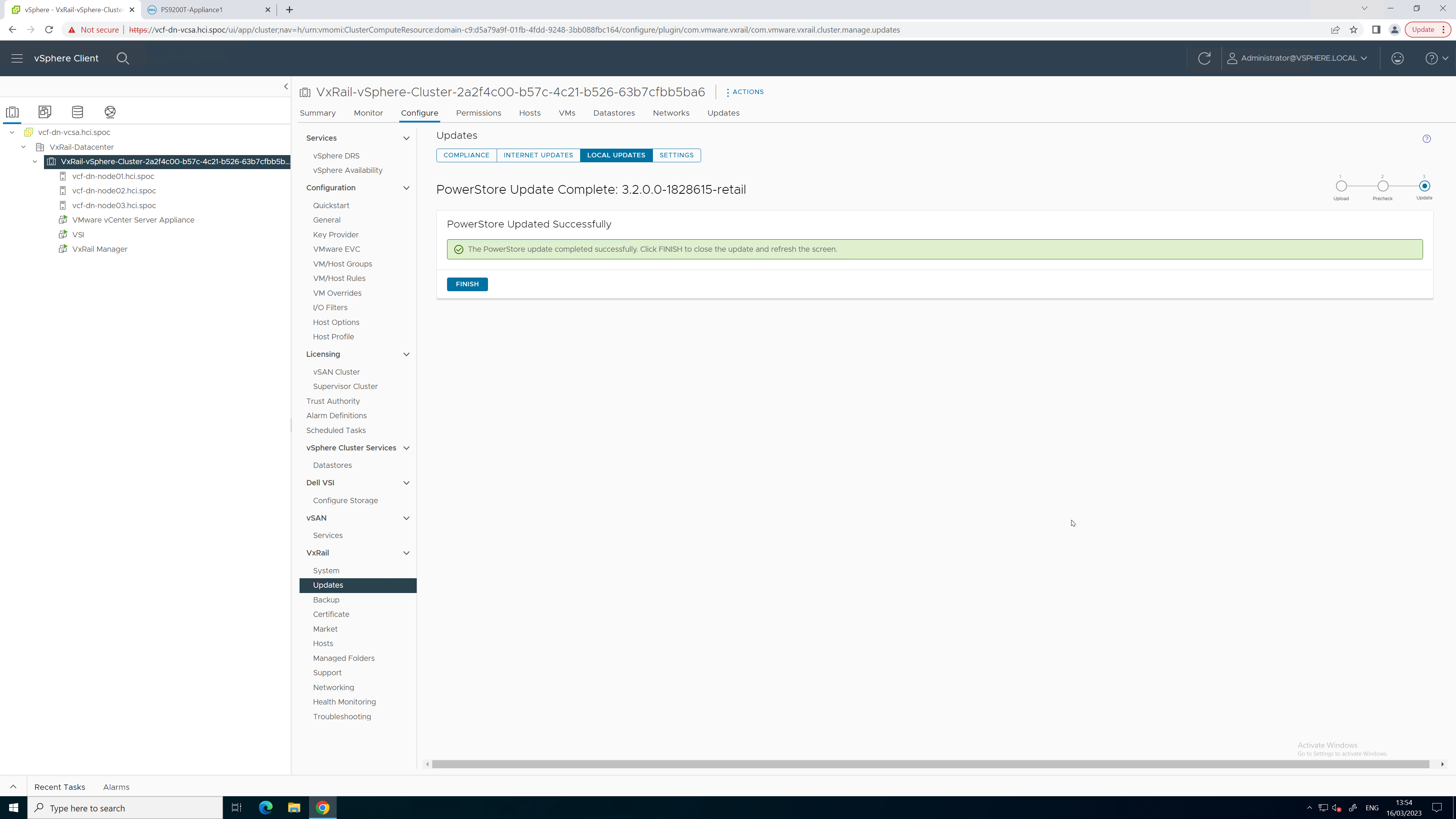

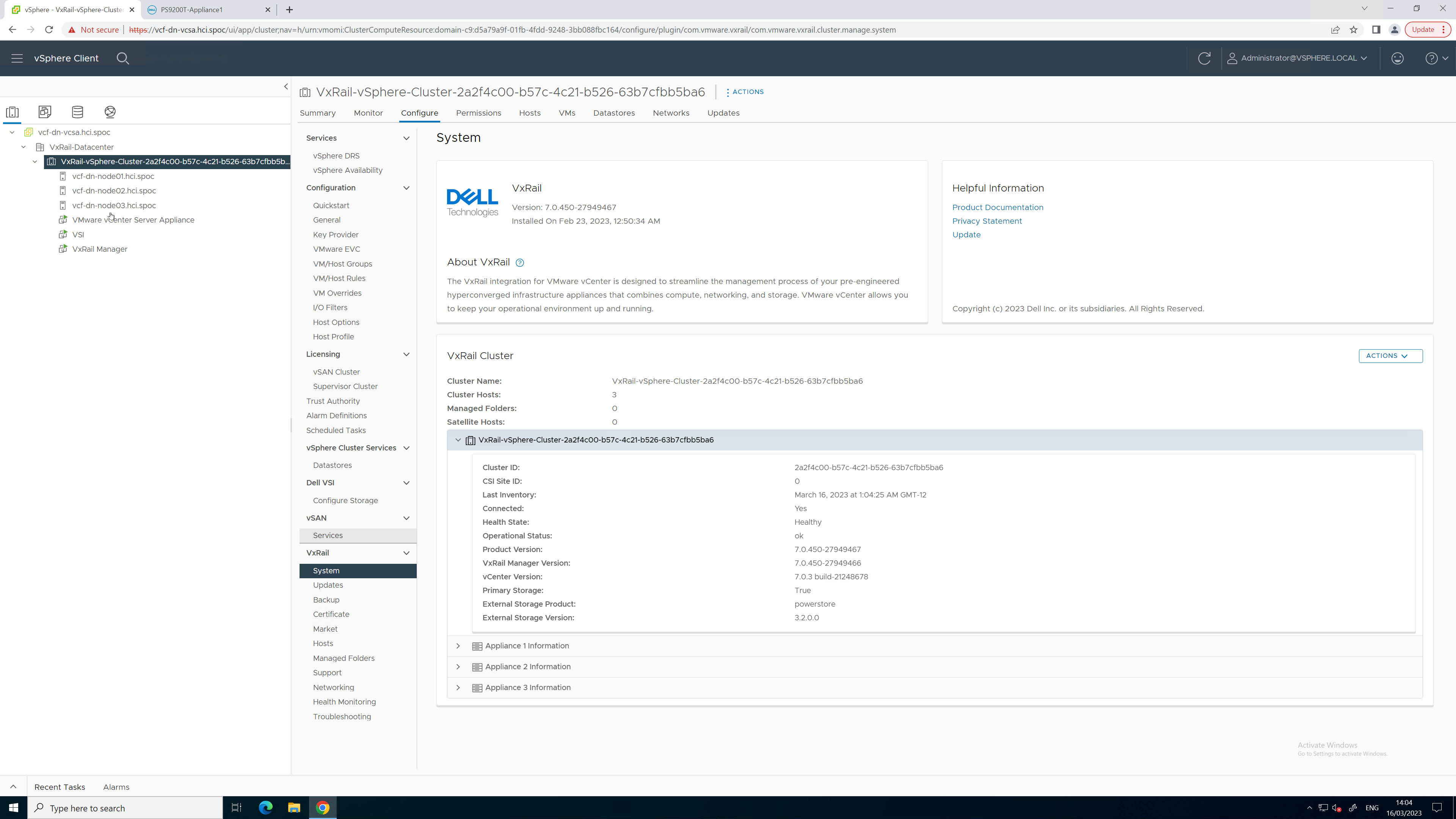

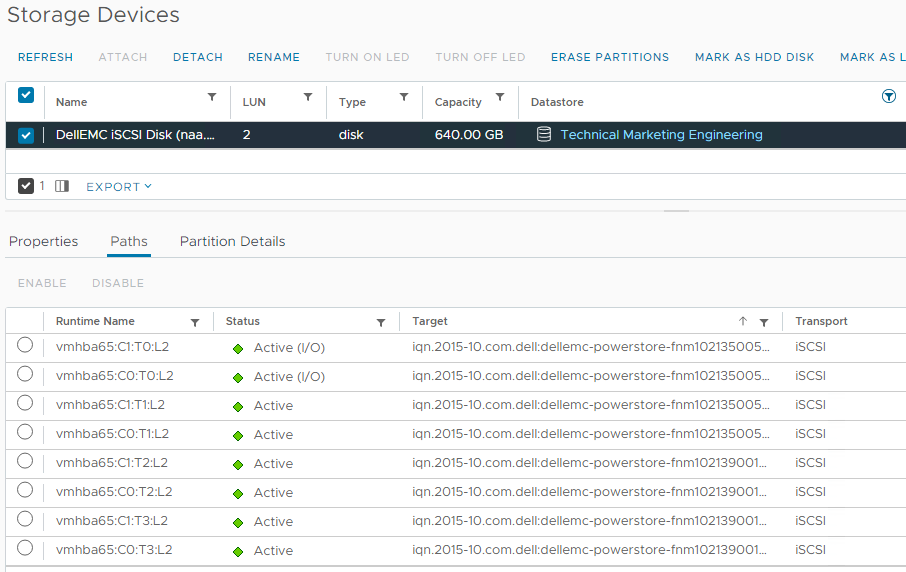

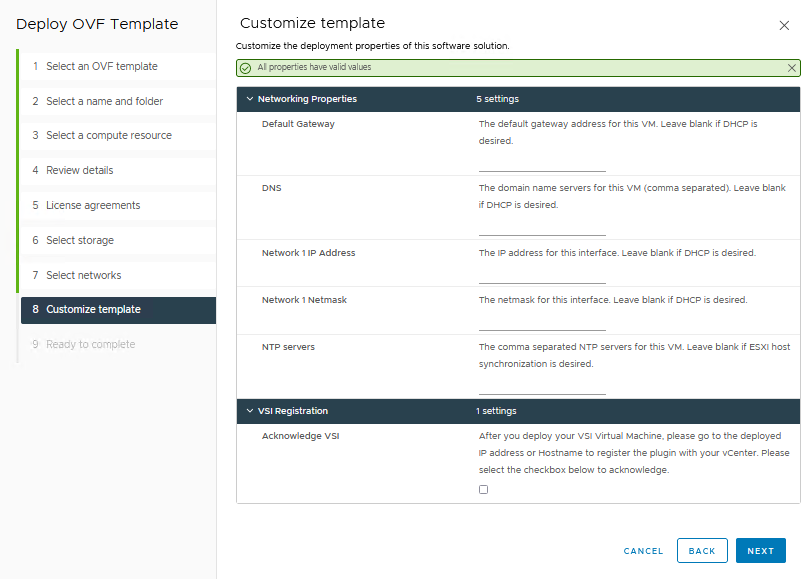

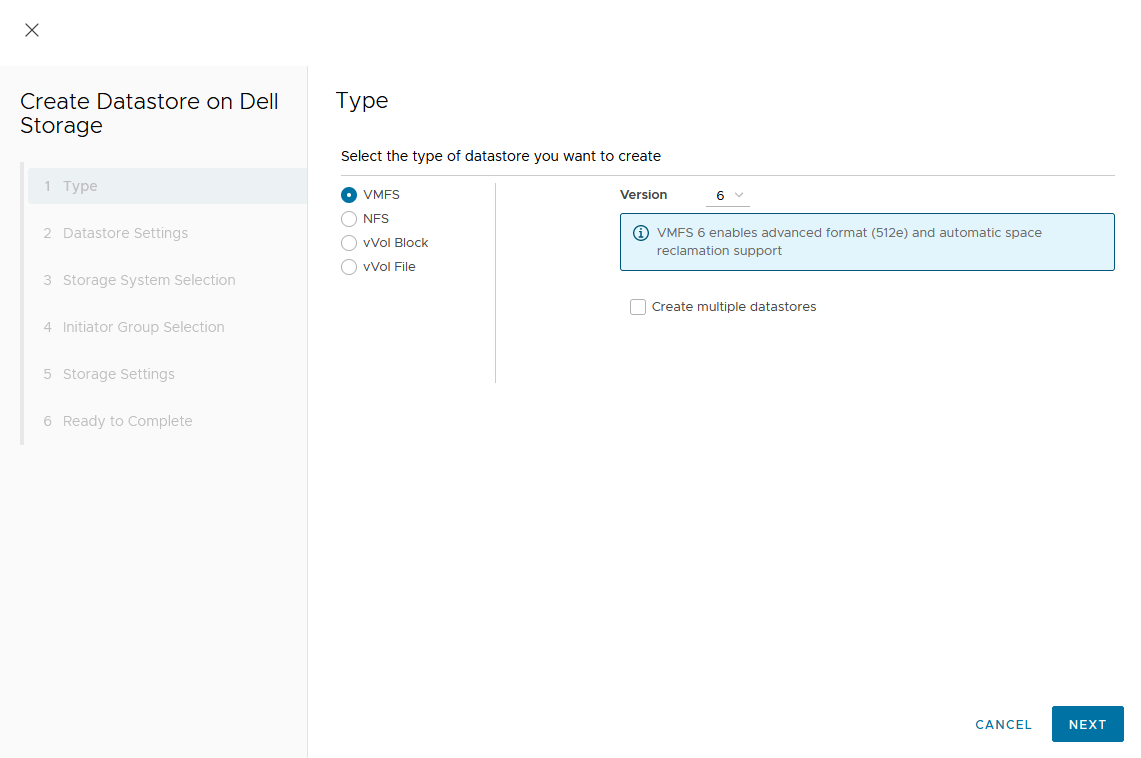

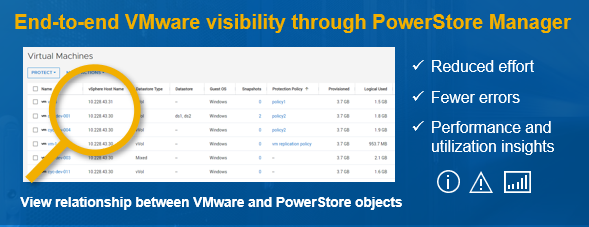

Integrated lifecycle management is available starting with Virtual Storage Integrator (VSI) version 10.3. The plug-in uploads the code, performs a health check, and updates the PowerStore system. PowerStore code version 3.0 or later is required to take advantage of this capability.

This blog provides a quick overview of how to deploy Dell VSI and how to perform non-disruptive upgrades of PowerStore systems with the VSI plug-in in vCenter.

Components of VSI

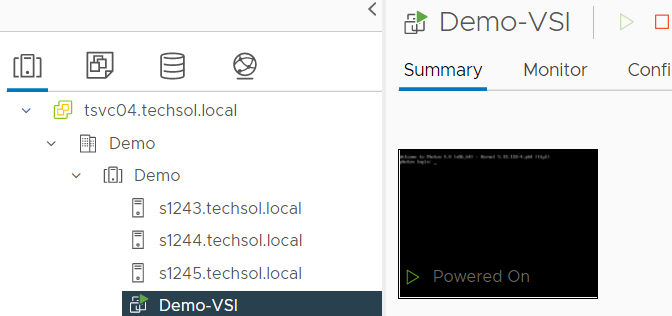

VSI consists of two components—a virtual machine (VM) and a plug-in for vCenter that is deployed when VSI is registered for the vCenter. The VSI 10.3 open virtual appliance (OVA) template is available on the Dell Technologies support website and is supported with vSphere 6.7 U2 (and later) through 8.0.x for deployments with an embedded platform services controller (PSC).

This chart shows VSI supported areas of operation for PowerStore:

Deployment

A deployed VSI VM needs 3.7 GB (thin) or 16 GB (thick) space on a datastore and is deployed with two vCPUs and 16 GB RAM. The VSI VM must be deployed on a network with access to the vCenter server and PowerStore.

When the VM is deployed and started, you can access the plug-in management with https://<VSI-IP>.

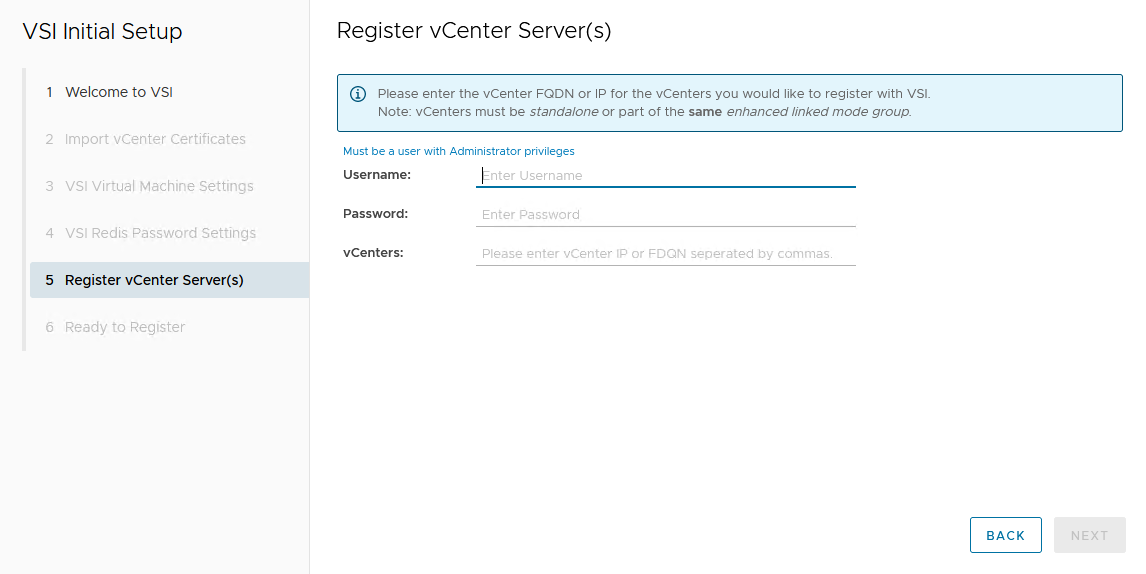

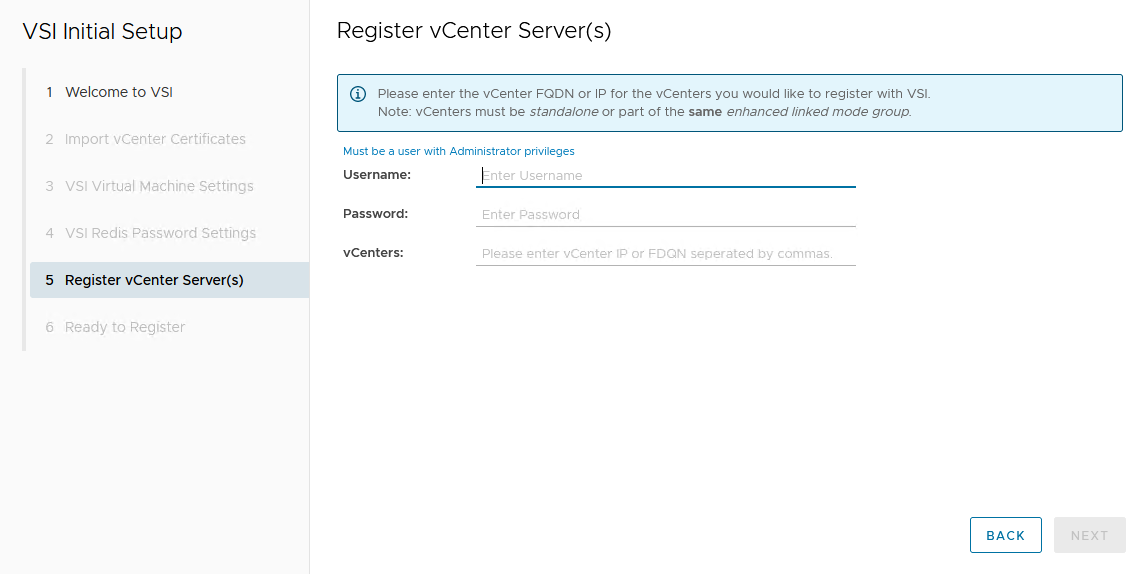

Register VSI plug-in in vCenter

A wizard helps you register the plug-in in a vCenter. Initial setup only requires that you set the VSI password for the internal database and supply the vCenter address with username/password. Multiple vCenters can be registered if they are in a linked mode group.

After the VSI VM is configured, it takes a few minutes for the VM to come online.

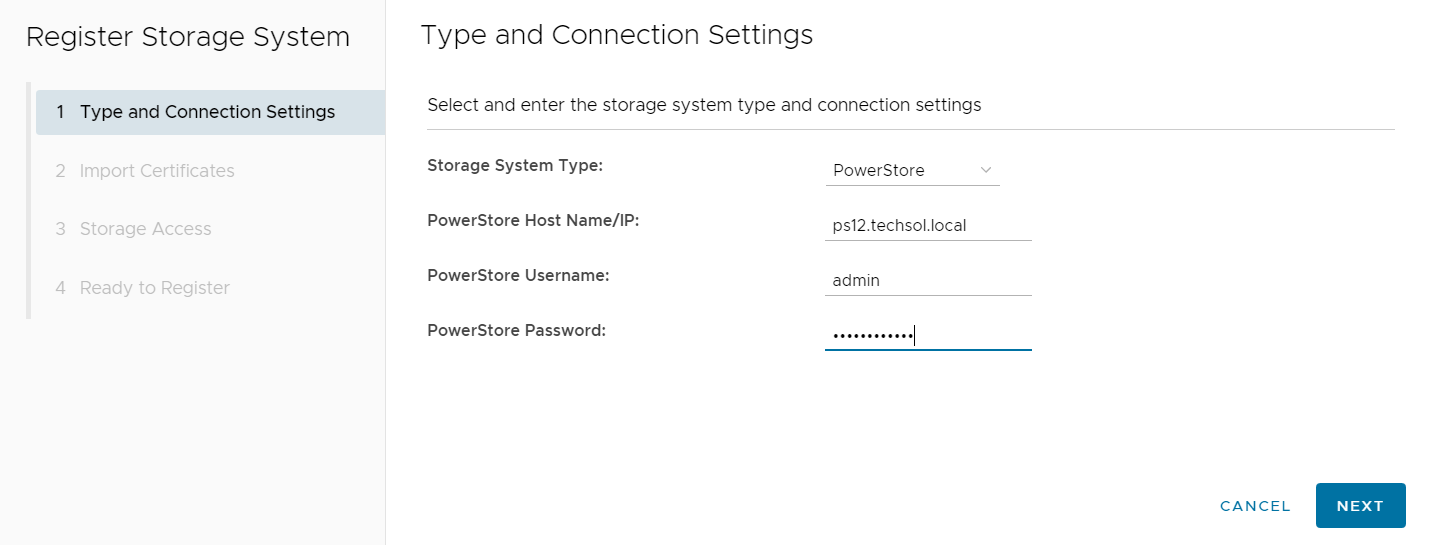

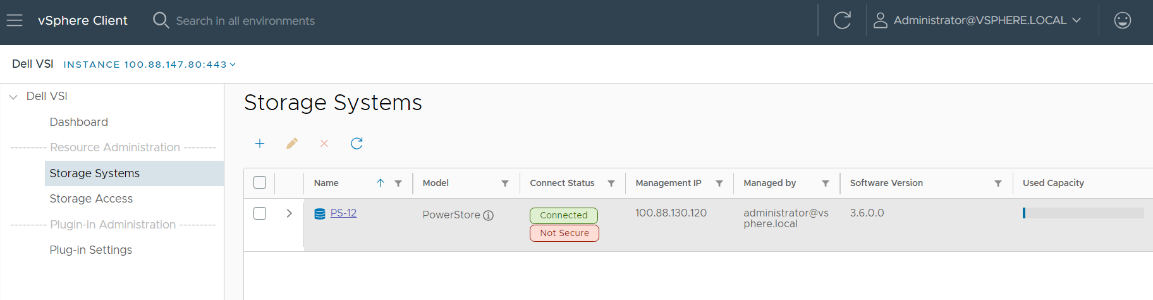

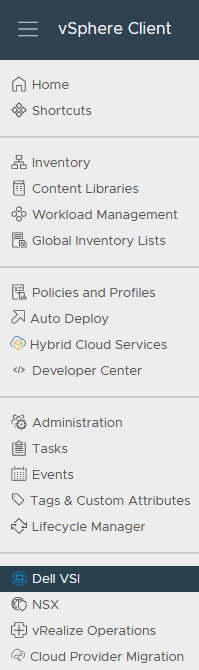

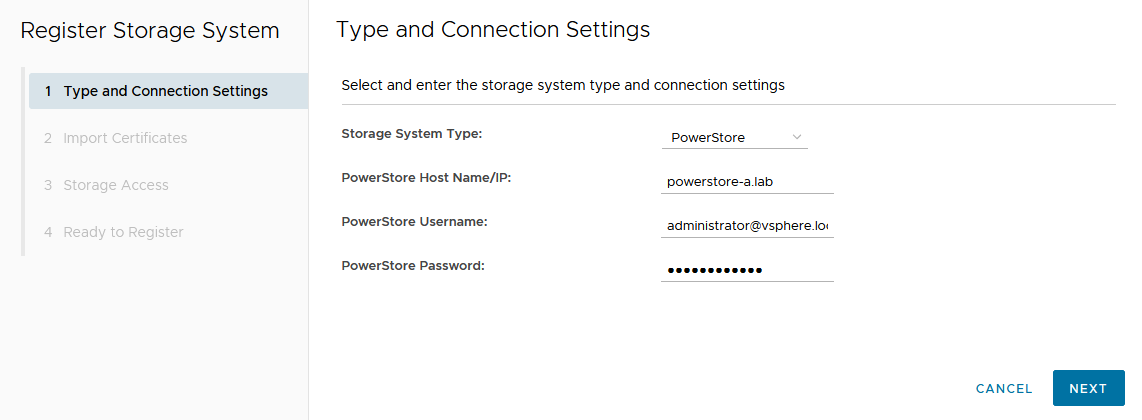

From the Dell VSI dashboard, select Storage Systems and the + sign to add the PowerStore system.

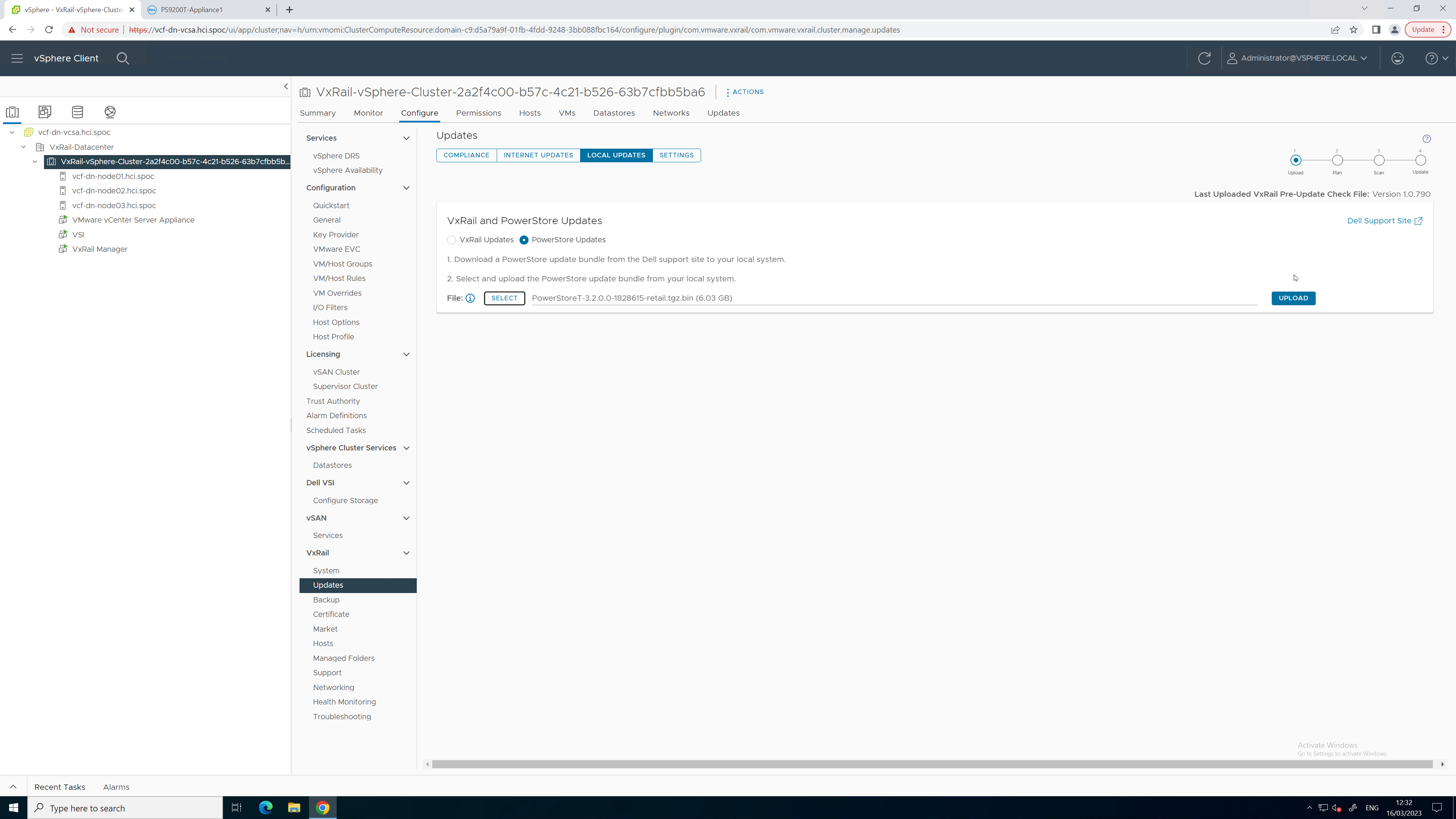

Perform a non-disruptive upgrade

VSI lifecycle management of PowerStore provides the following benefits:

- Upload the code bundle from the local device to PowerStore from the VSI plug-in to vCenter.

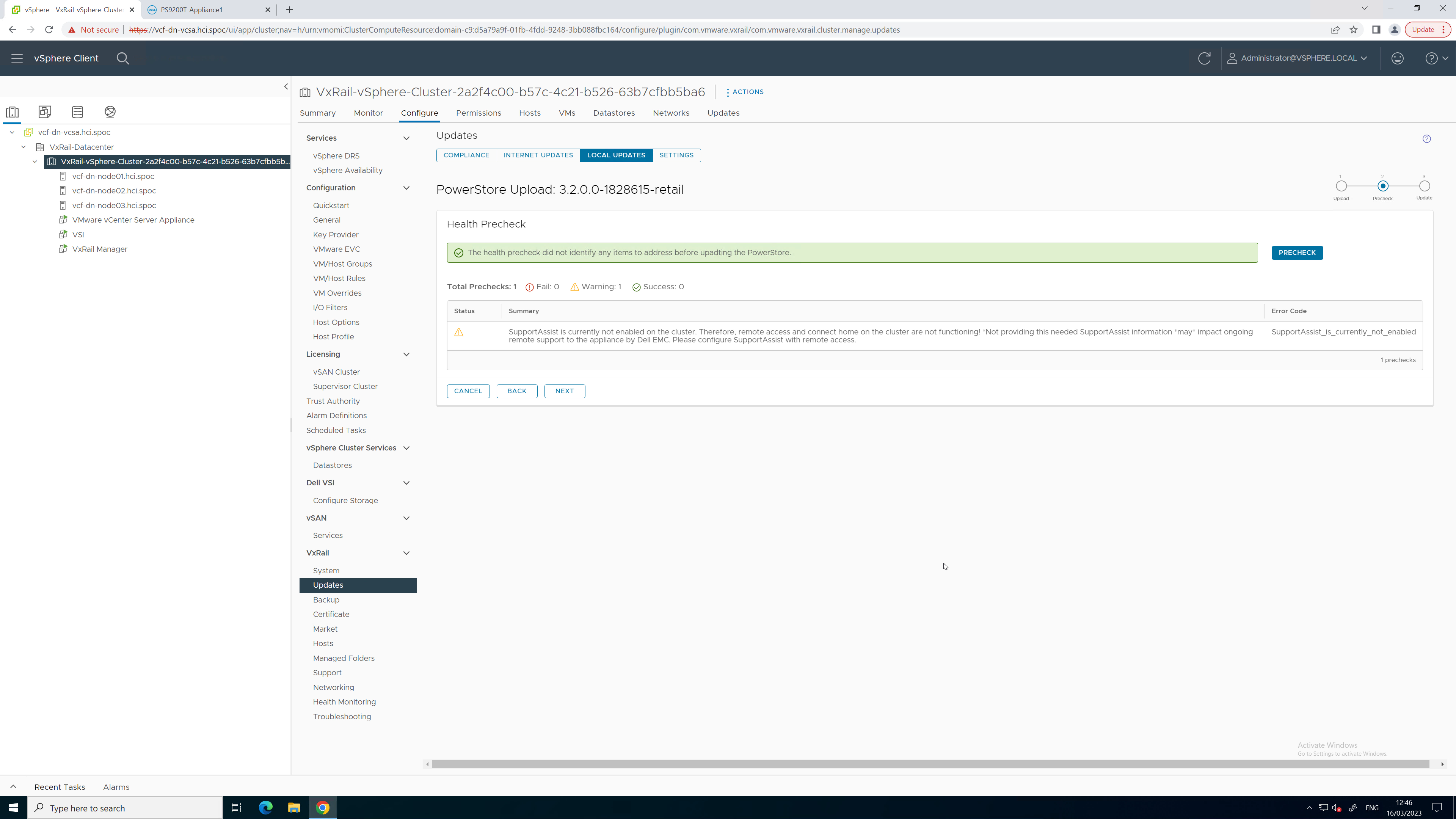

- Run a precheck after the bundle has been uploaded from the VSI plug-in to vCenter.

- Run the upgrade with the uploaded bundle from the VSI plug-in to vCenter.

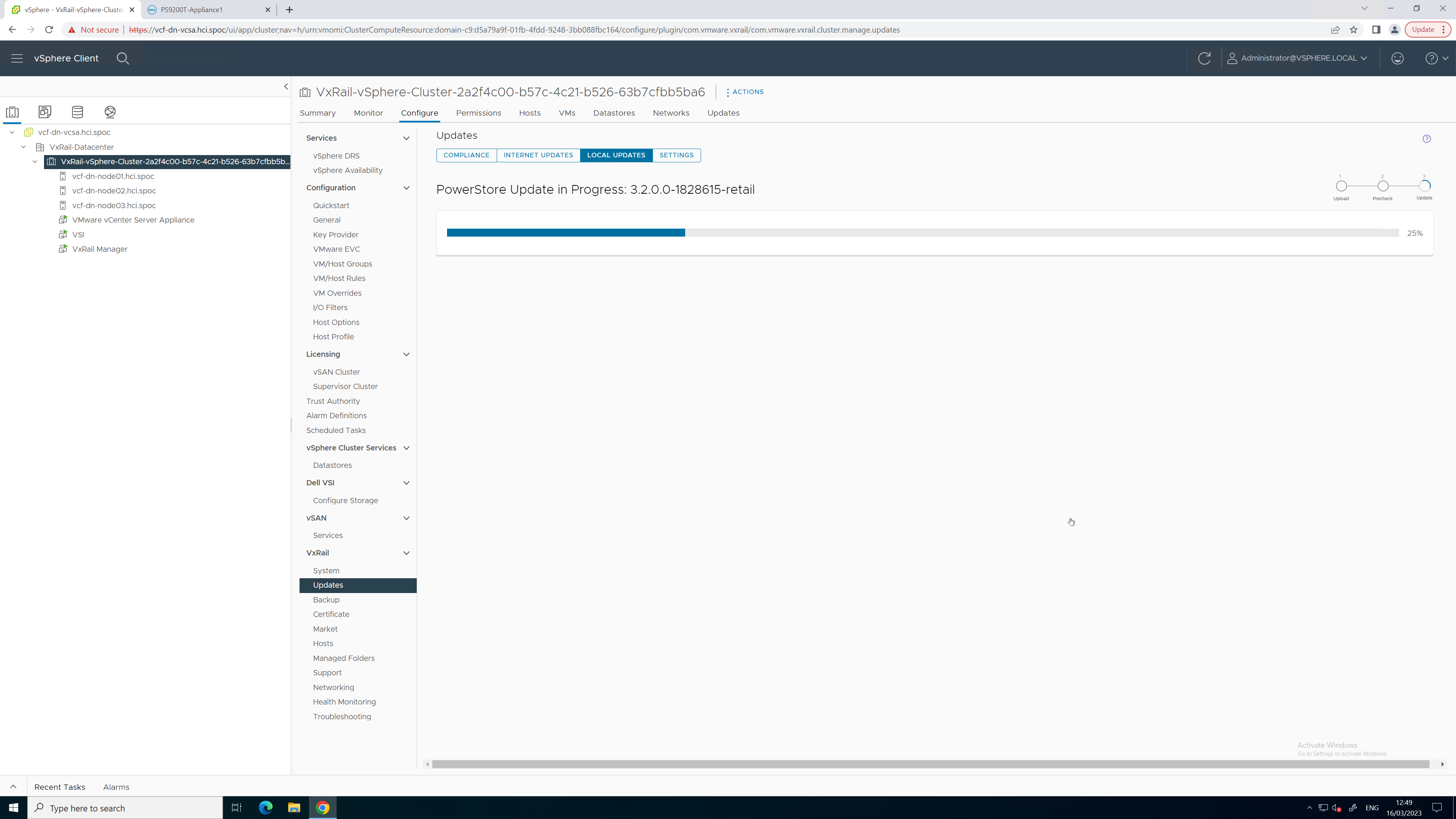

The non-disruptive upgrade process with the VSI plug-in requires three steps:

- Upload the code bundle.

- Complete a pre-upgrade health check.

- Perform a code upgrade.

To perform the non-disruptive upgrade, do the following:

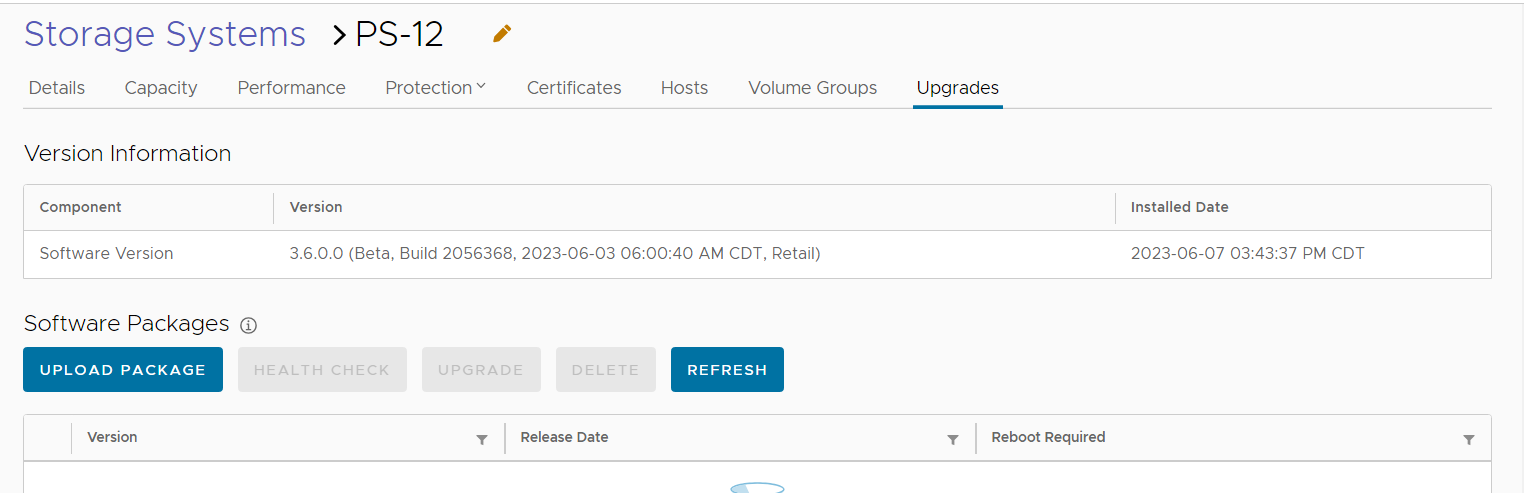

1. From the Dell VSI dashboard, select the PowerStore system.

2. After selecting the PowerStore system, select the Upgrades tab.

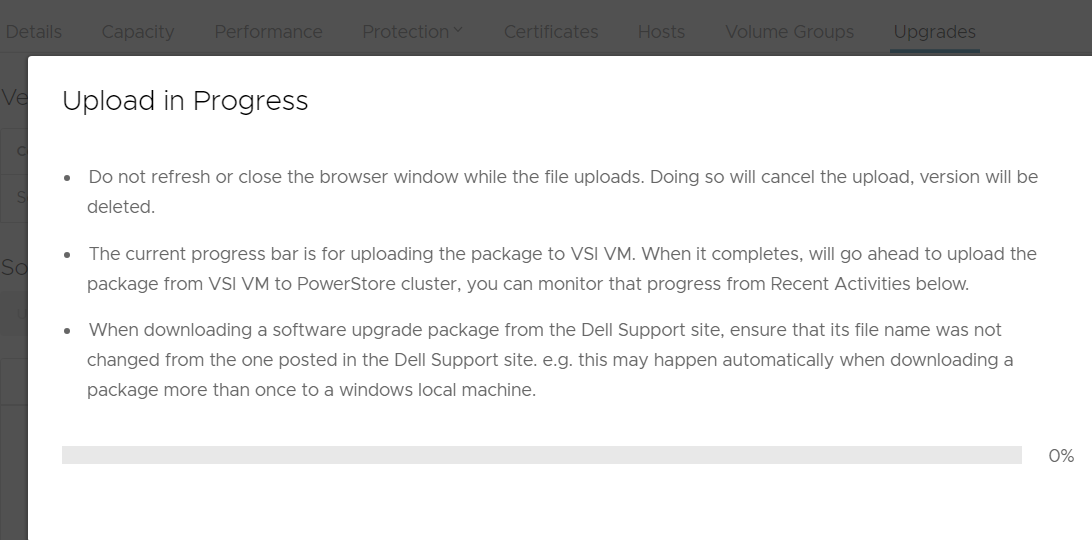

3. Under the Upgrades tab, select Upload package.

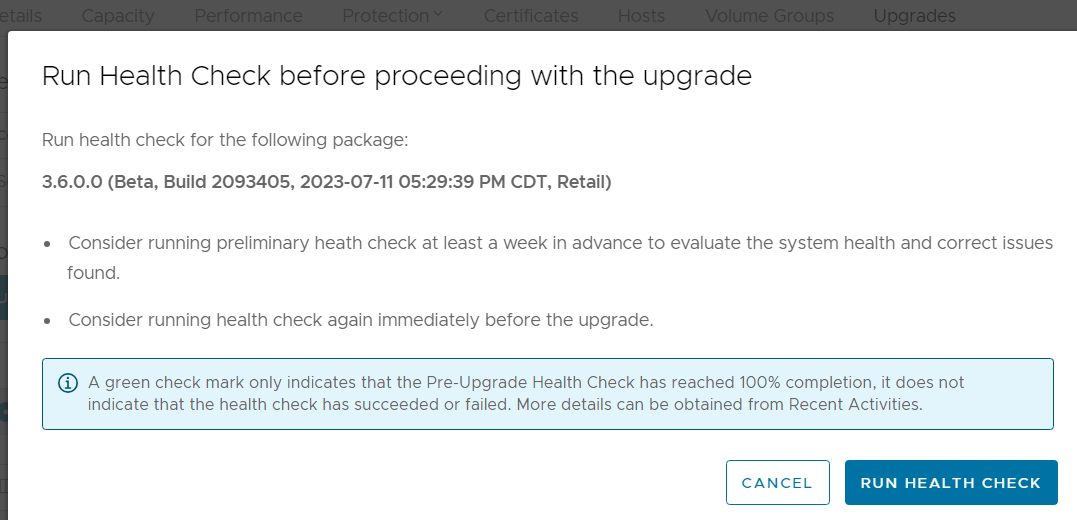

4. After uploading the code, select Run Health Check.

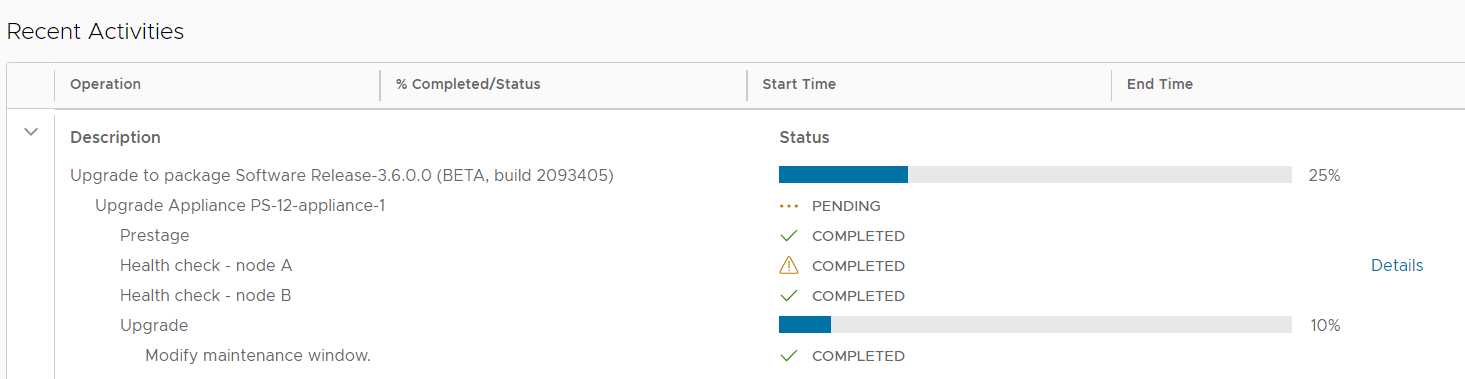

5. When the health check completes, kick off the upgrade process and monitor the status until complete.

Conclusion

Dell PowerStore extends the boundaries of mid-range storage with unmatched capabilities for enterprise storage. PowerStore integrates seamlessly with the VSI plug-in. This system integration enables the orchestration necessary to deliver non-disruptive, streamlined PowerStore updates from within VMware vCenter.

Author: Jason Gates

Configuring PowerStore File Extension Filtering to Prevent Ransomware

Wed, 06 Sep 2023 18:12:28 -0000

Overview

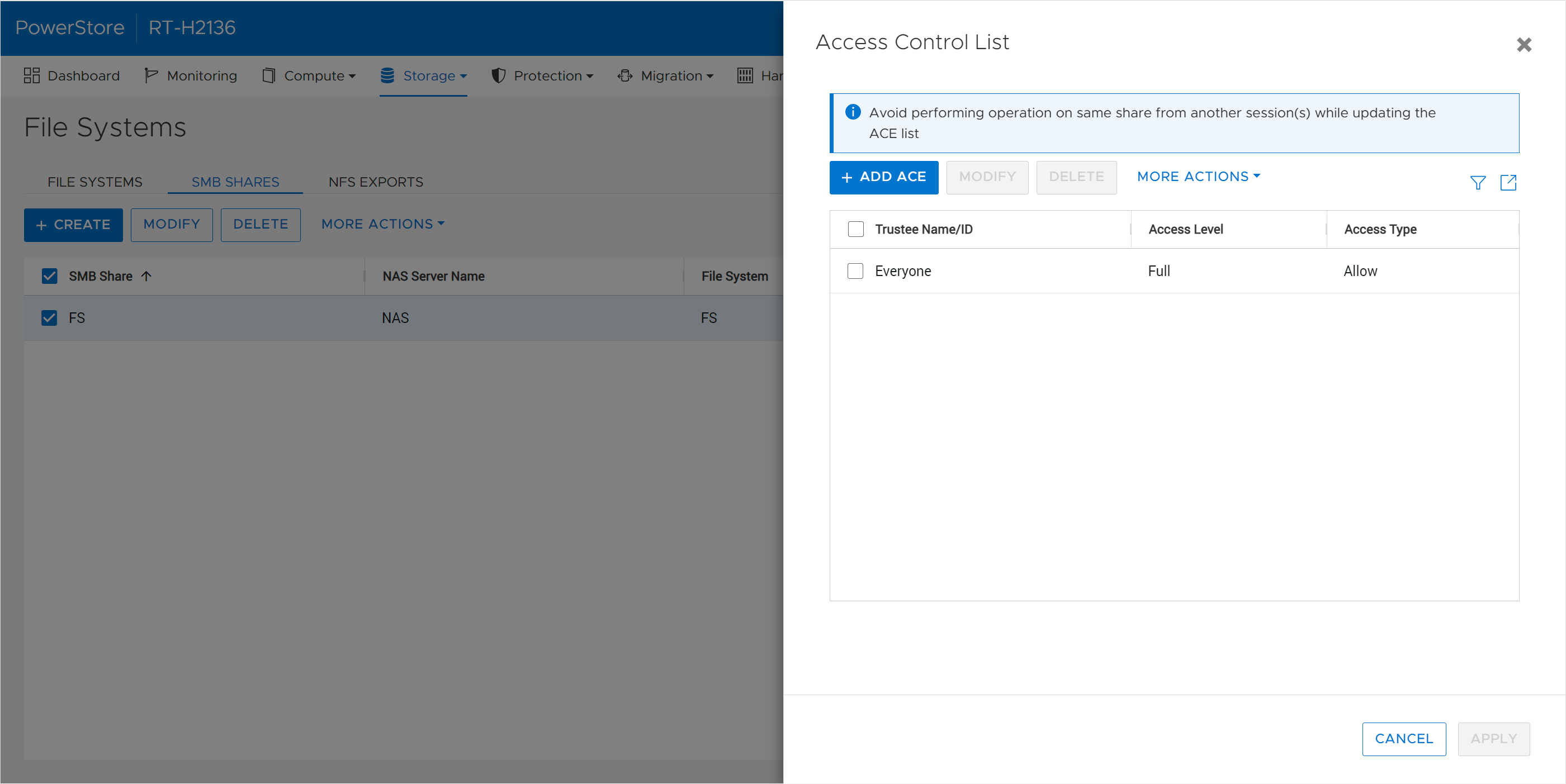

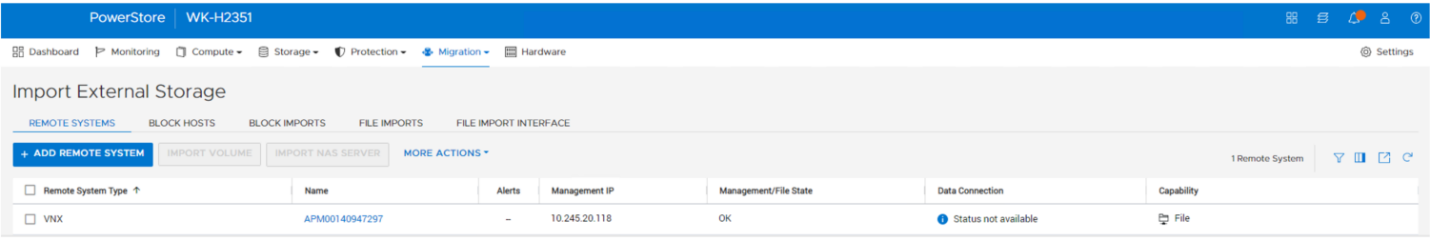

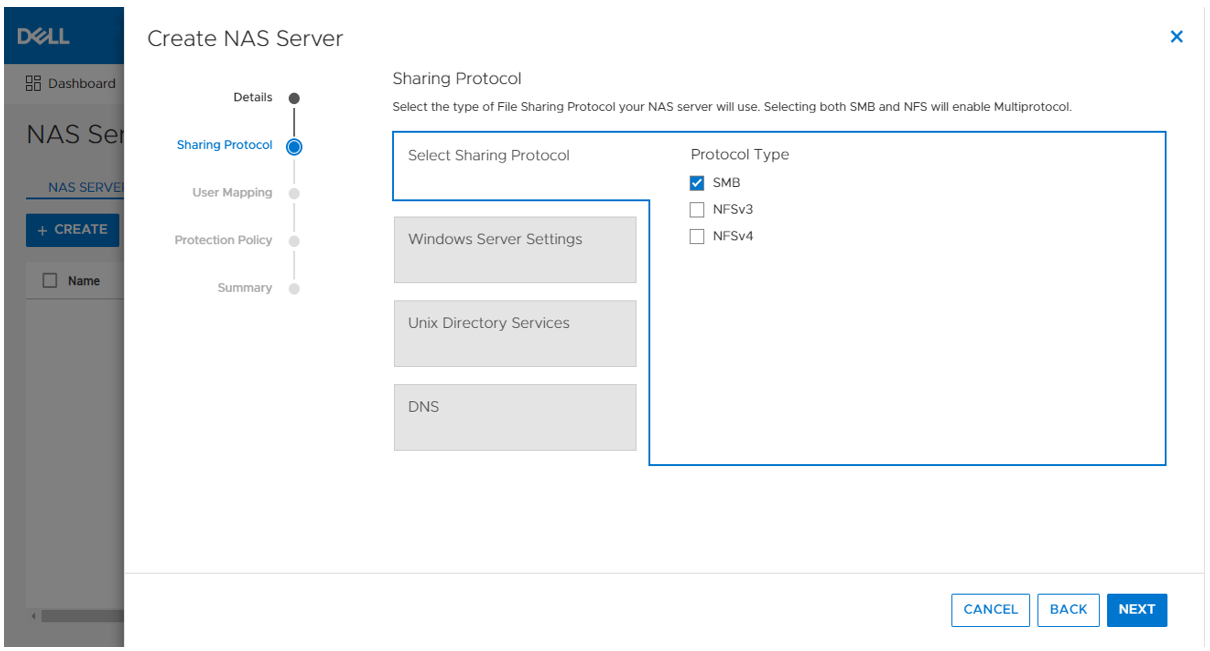

Disallowing known ransomware extensions from being written to the file system can be a simple and effective mechanism to deter or prevent ransomware. PowerStore file systems include a file extension filtering capability that restricts specific file extensions from being stored on an SMB share. Traditionally, this feature has been used to prevent users from storing non-business data on a share. However, its uses extend to blocking malicious extensions from being written to a share at all.

File extension filtering can be leveraged in conjunction with other features such as CEPA to implement a ransomware strategy with multiple layers of defense. Let’s dive into how to configure PowerStore file extension filtering to better protect your system today.

Configuration

To configure file extension filtering:

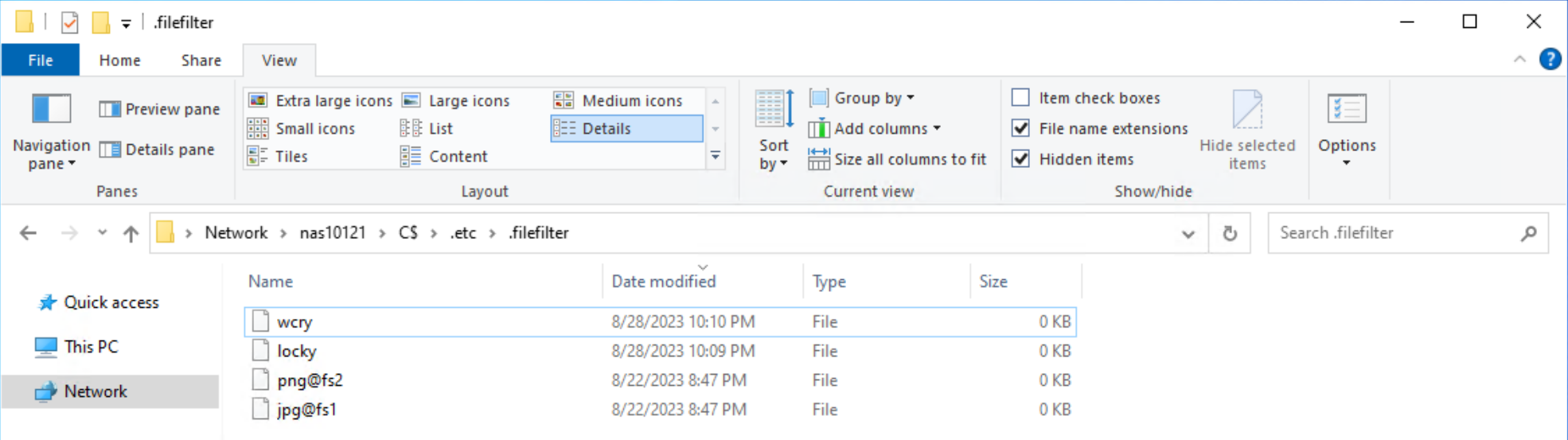

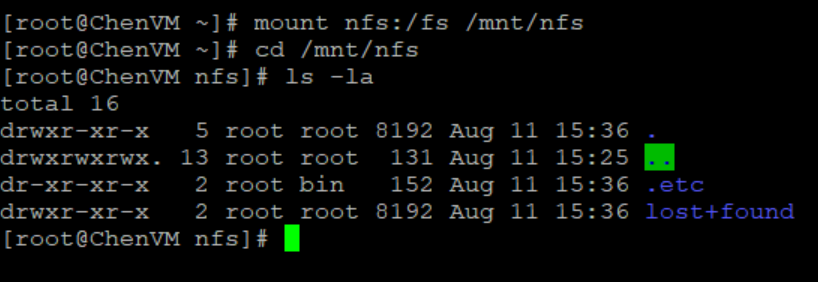

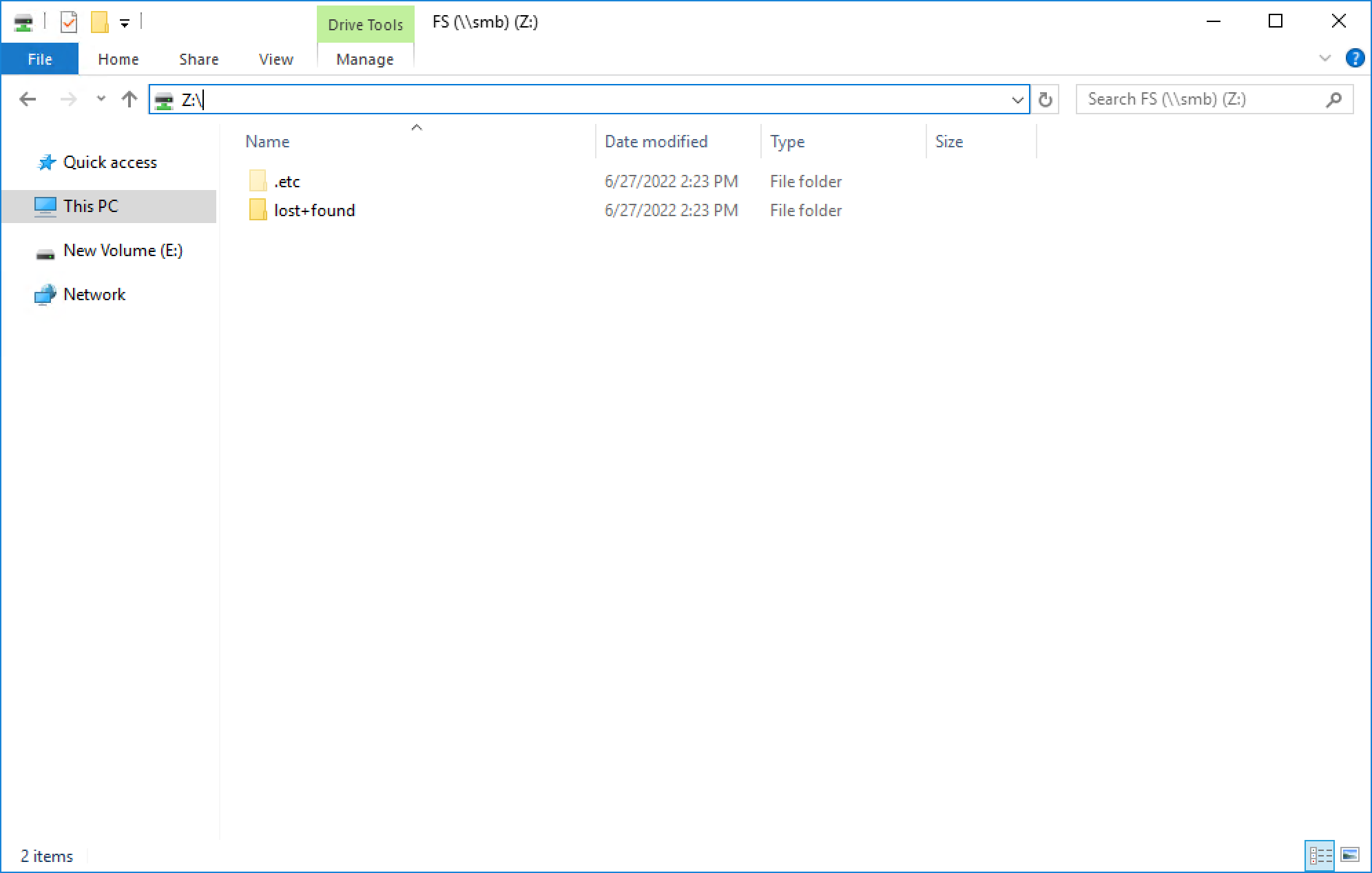

- Go to the \\<SMB_Server>\c$\.etc\.filefilter directory as an administrator

- To configure a filter, create an empty file using the naming convention extension@sharename

- For example, to filter .wcry ransomware files on the FS1 share, create a file named wcry@FS1

- To enable the filter on all shares on the SMB server, create the file with only the extension, such as wcry

You can configure multiple filters by creating additional files in this directory. For ransomware prevention use cases, create additional filters for other known ransomware extensions. Each SMB server has its own independent file extension filtering configuration, so each can be customized with its own configuration. The following figure shows an example of the configuration of the file extension filtering.

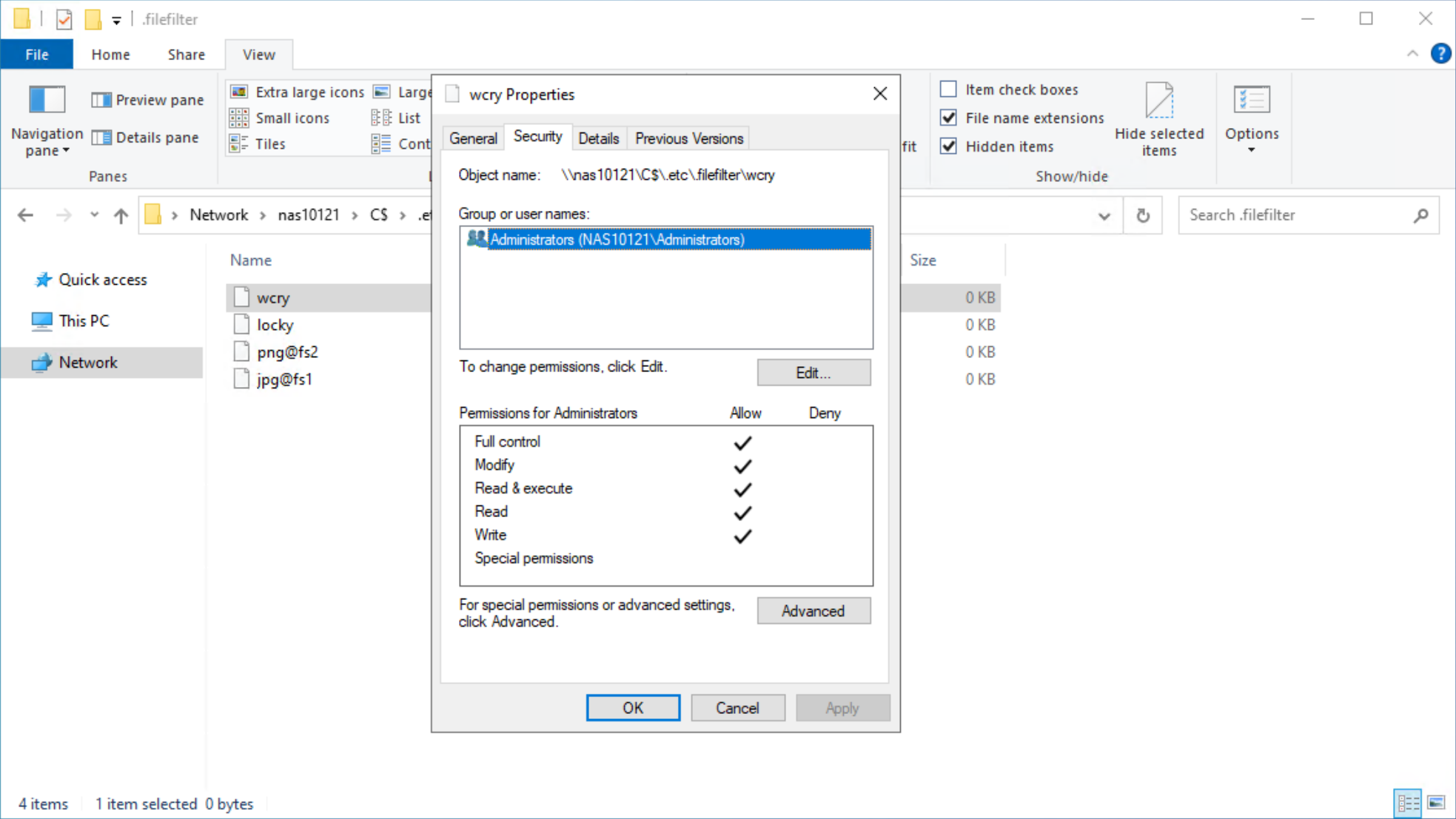
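The naming convention described above can be sketched in a few lines of Python. This is only an illustration of the extension@sharename rule: the helper names are hypothetical, the second extension is an illustrative value, and the script writes to a temporary directory as a stand-in for the .filefilter directory rather than to a real SMB server.

```python
import tempfile
from pathlib import Path
from typing import List, Optional


def filter_file_name(extension: str, share: Optional[str] = None) -> str:
    """Return 'ext@share' for one share, or just 'ext' for all shares."""
    ext = extension.lstrip(".")
    return f"{ext}@{share}" if share else ext


def create_filters(filter_dir: Path, extensions: List[str],
                   share: Optional[str] = None) -> List[Path]:
    """Create one empty filter file per extension in filter_dir."""
    created = []
    for extension in extensions:
        path = filter_dir / filter_file_name(extension, share)
        path.touch(exist_ok=True)  # filter files are empty; only the name matters
        created.append(path)
    return created


if __name__ == "__main__":
    # Assumption: a temporary directory stands in for
    # \\<SMB_Server>\c$\.etc\.filefilter, which needs administrator access.
    with tempfile.TemporaryDirectory() as tmp:
        for path in create_filters(Path(tmp), [".wcry", ".locky"], share="FS1"):
            print(path.name)
```

Passing no share name produces the server-wide form of the filter, matching the last bullet above.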

After configuring a file extension filter, you can permit exceptions for specific users or groups by changing the ACL on the filter file to provide Full Control privileges to the users or groups that should be excluded.

For example, if the Administrators group is provided Full Control permissions on the wcry filter file, then users in the Administrators group can store .wcry files on the share, while others cannot. Exceptions can be configured independently for each file filter being created, as shown in the following figure.

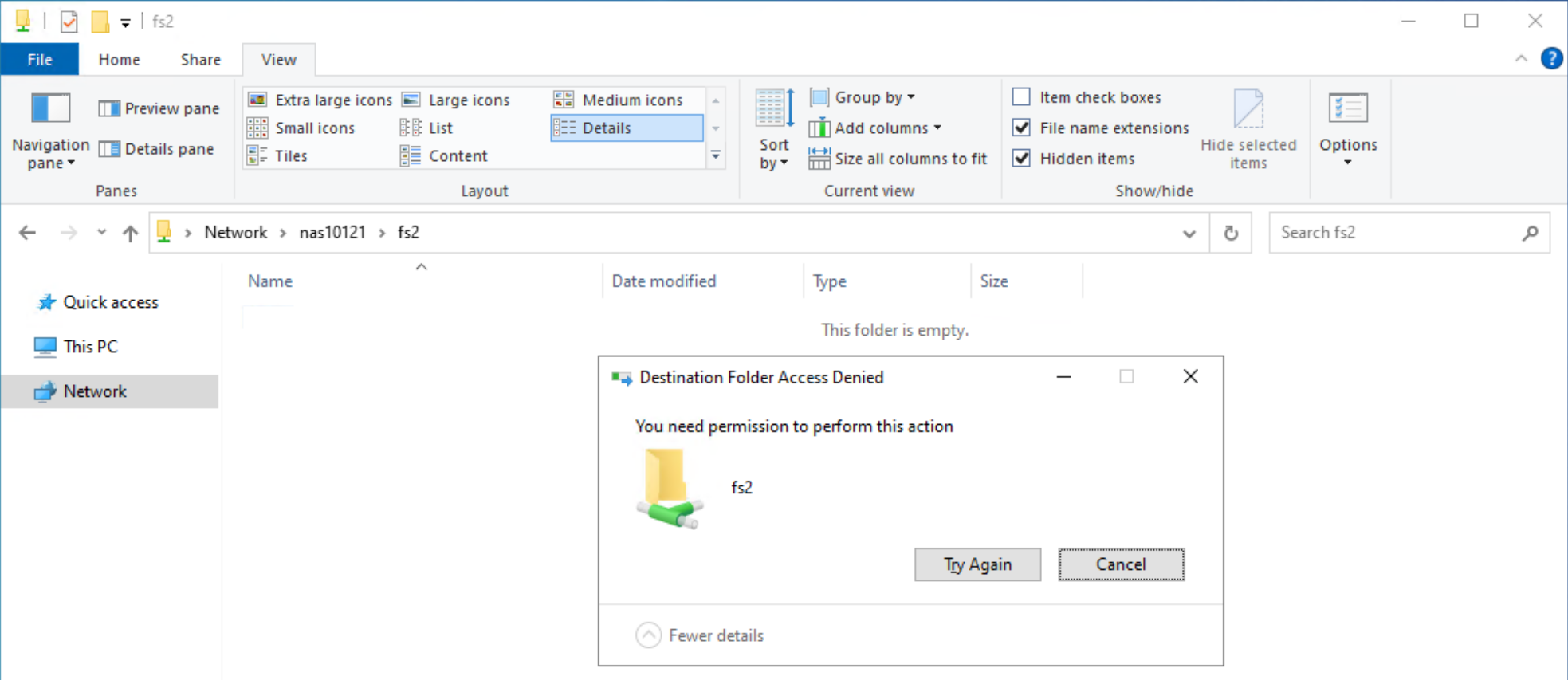

When users attempt to copy a file with a blocked extension, they receive an Access Denied error, as shown in the following figure.

Considerations

Note that this feature only works on SMB and does not filter file extensions when writing over NFS. Users could manually rename file extensions to bypass this filter, provided those other extensions are not also explicitly blocked; however, malware may not be able to adapt and work around this as easily. Because the list of filtered extensions must be checked each time a file is written, having many filters could impact performance.

Conclusion

File extension filtering is a simple and powerful capability that provides administrators the ability to control the type of data that is stored on an SMB share. Easy to configure and able to provide an additional layer of protection against ransomware activity, file extension filtering is an effective addition to any comprehensive cybersecurity strategy to protect and secure your data.

Resources

The following resources provide more information about PowerStore:

Author: Wei Chen, Technical Staff, Engineering Technologist

PowerStore and SQL Server Ledger—Your Data Has Never Been More Secure!

Thu, 10 Aug 2023 17:49:01 -0000


It’s all about security

Dell and Microsoft are constantly working together to provide the best security, availability, and performance for your valuable data assets. In the latest releases of PowerStoreOS 3.5 and SQL Server 2022, several new capabilities have been introduced that provide zero trust security for data protection.

PowerStore security

PowerStoreOS is packed with security features focused on secure platform, access controls, snapshot, auditing, and certifications. PowerStoreOS 3.5 introduces the following new features:

- STIG (Security Technical Implementation Guides) hardening, which enforces U.S. Department of Defense (DoD) specific rules regarding password complexity, timeout policies, and other practices.

- Multi-factor authentication to shield from hackers and mitigate poor password policies.

- Secure snapshots to prevent snapshots from being modified or deleted before the expiration date, even by an administrator.

- PowerProtect DD integration to create snapshots directly on Dell PowerProtect DD series appliances.

The PowerStore Zero Trust video explains these features in more detail.

To enhance database security, Microsoft has introduced a new feature in SQL Server 2022, SQL Ledger. This feature leverages cryptography and blockchain architecture to produce a tamper-proof ledger of all changes made to a database over time. SQL Ledger provides cryptographic proof of data integrity to fulfill auditing requirements and streamline the audit process.

SQL Ledger 101

There are two main storage components to SQL Ledger. First is the database with special configuration that leverages blockchain architecture for increased security. The database resides on standard block or file storage. PowerStore supports both block and file storage, making it ideal for SQL Ledger deployments.

The second is an independently stored ledger digest that includes hash values for auditing and verification. The ledger digest is used as an append-only log to store point-in-time hash values for a ledger database. The ledger digest can then be used to verify the integrity of the main database by comparing a ledger block ID and hash value against the actual block ID and hash value in the database. If there is a mismatch, then some type of corruption or tampering has taken place.
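To make the comparison concrete, here is a toy hash chain in Python. It is a conceptual sketch only and does not reproduce SQL Server's actual block format or hashing scheme: the block layout, the use of SHA-256, and all function names are assumptions chosen for illustration.

```python
import hashlib

GENESIS = "0x" + "0" * 64  # assumed starting value for the toy chain


def block_hash(block_id: int, payload: str, previous_hash: str) -> str:
    """Hash a block together with the hash of the block before it."""
    data = f"{block_id}|{payload}|{previous_hash}".encode("utf-8")
    return "0x" + hashlib.sha256(data).hexdigest().upper()


def build_chain(payloads):
    """Build a list of blocks, each linked to its predecessor by hash."""
    chain, previous = [], GENESIS
    for block_id, payload in enumerate(payloads):
        previous = block_hash(block_id, payload, previous)
        chain.append({"block_id": block_id, "payload": payload, "hash": previous})
    return chain


def recompute_hash(chain, block_id: int) -> str:
    """Recompute the hash of block_id from the current block contents."""
    previous = GENESIS
    for block in chain[: block_id + 1]:
        previous = block_hash(block["block_id"], block["payload"], previous)
    return previous


def verify(chain, digest) -> bool:
    """Compare an independently stored digest with the recomputed hash."""
    return recompute_hash(chain, digest["block_id"]) == digest["hash"]


if __name__ == "__main__":
    chain = build_chain(["insert row 1", "update row 1"])
    digest = {"block_id": 1, "hash": chain[1]["hash"]}  # stored elsewhere
    print(verify(chain, digest))                 # True
    chain[0]["payload"] = "tampered"
    print(verify(chain, digest))                 # False
```

Because each block hash depends on the previous one, changing any earlier block changes the recomputed hash, which is why a digest kept on separate storage can expose tampering.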

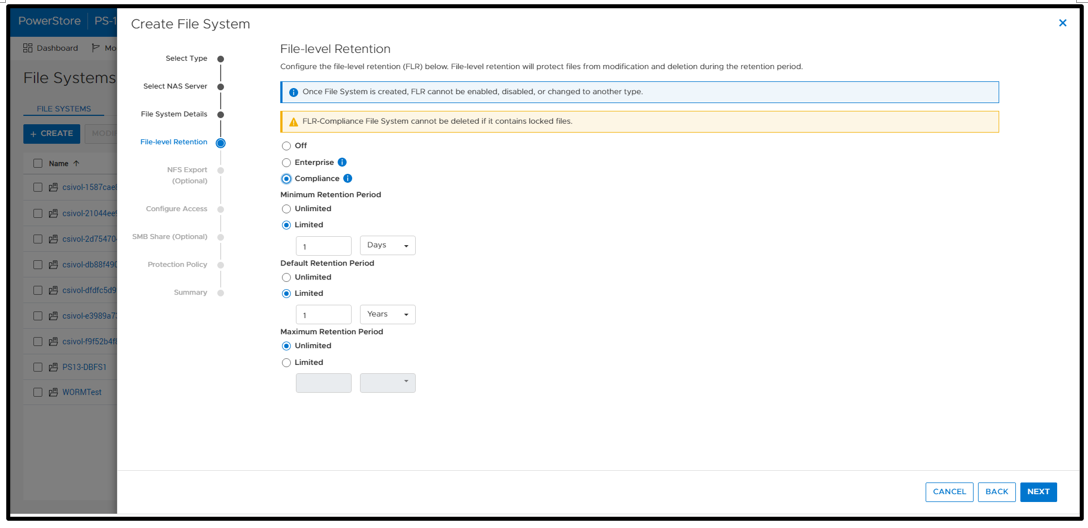

This second component, the ledger digest, requires Write Once Read Many (WORM) storage. PowerStore File-Level Retention (FLR) features can be configured to provide WORM storage for SQL Ledger. Additionally, PowerStore FLR can also be configured to include a data integrity check to detect write-tampering that complies with SEC rule 17a-4(f).

Automatic storage and generation of database digests is only available for Microsoft Azure Storage. For on-premises deployments of SQL Server, Dell Technologies has your back! Let’s look at how this is done with PowerStore.

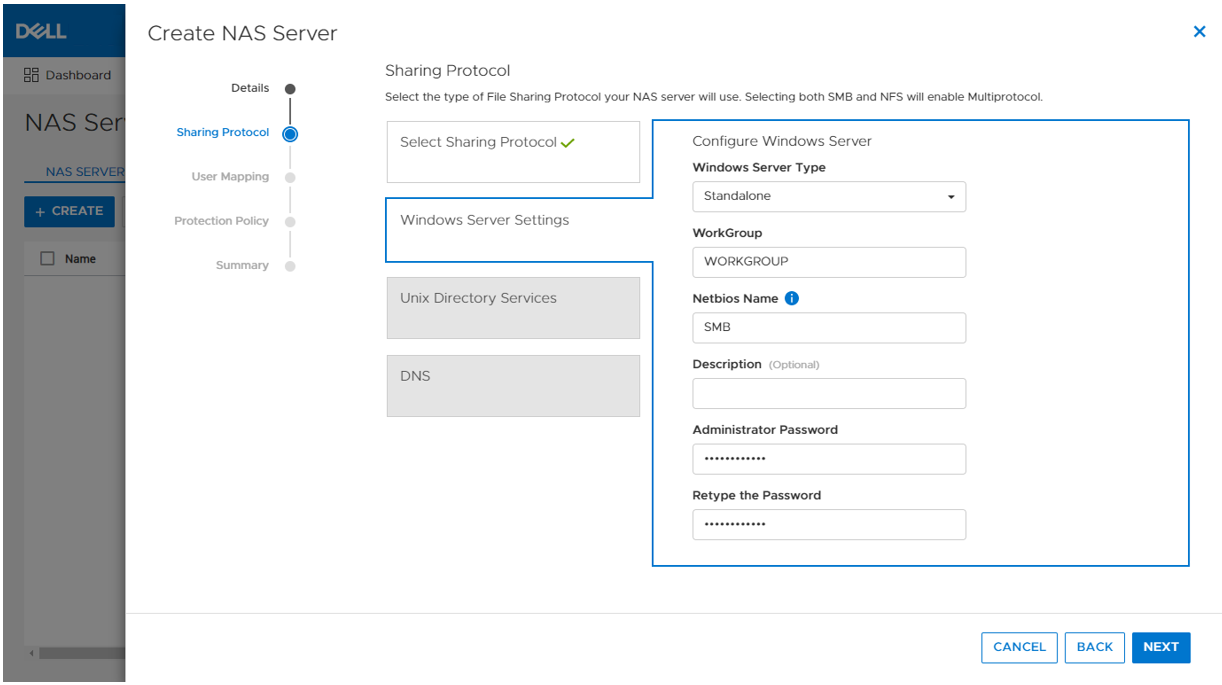

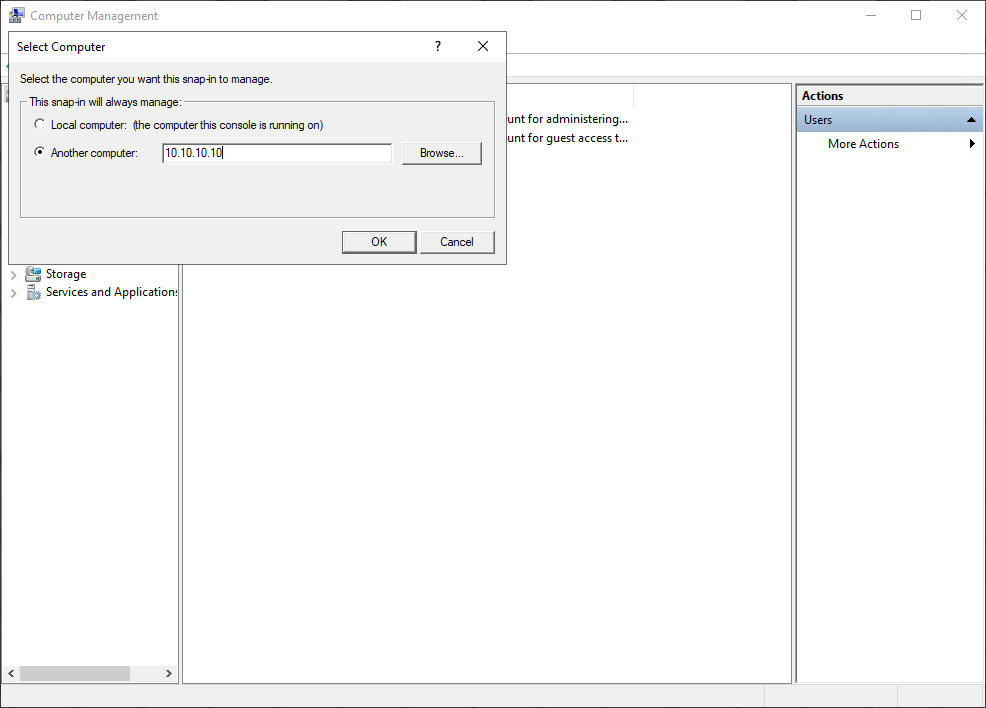

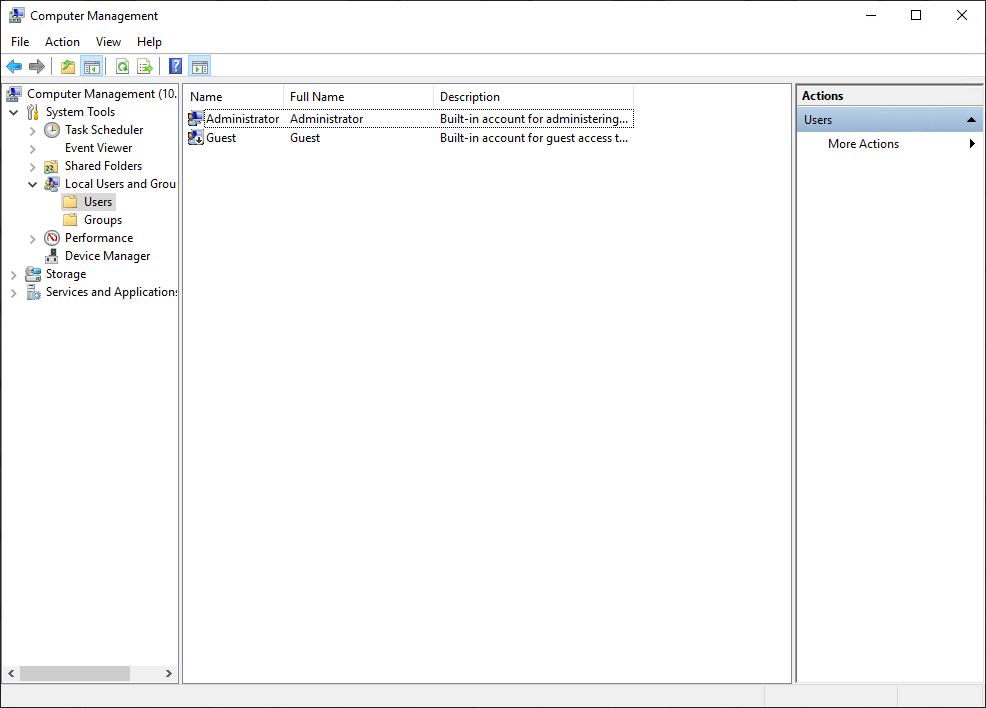

PowerStore configuration

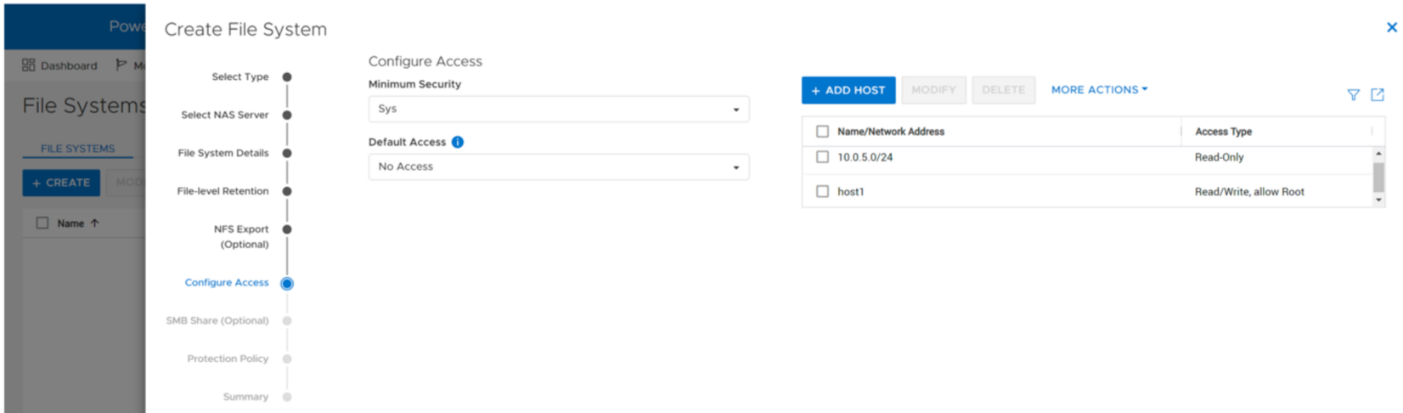

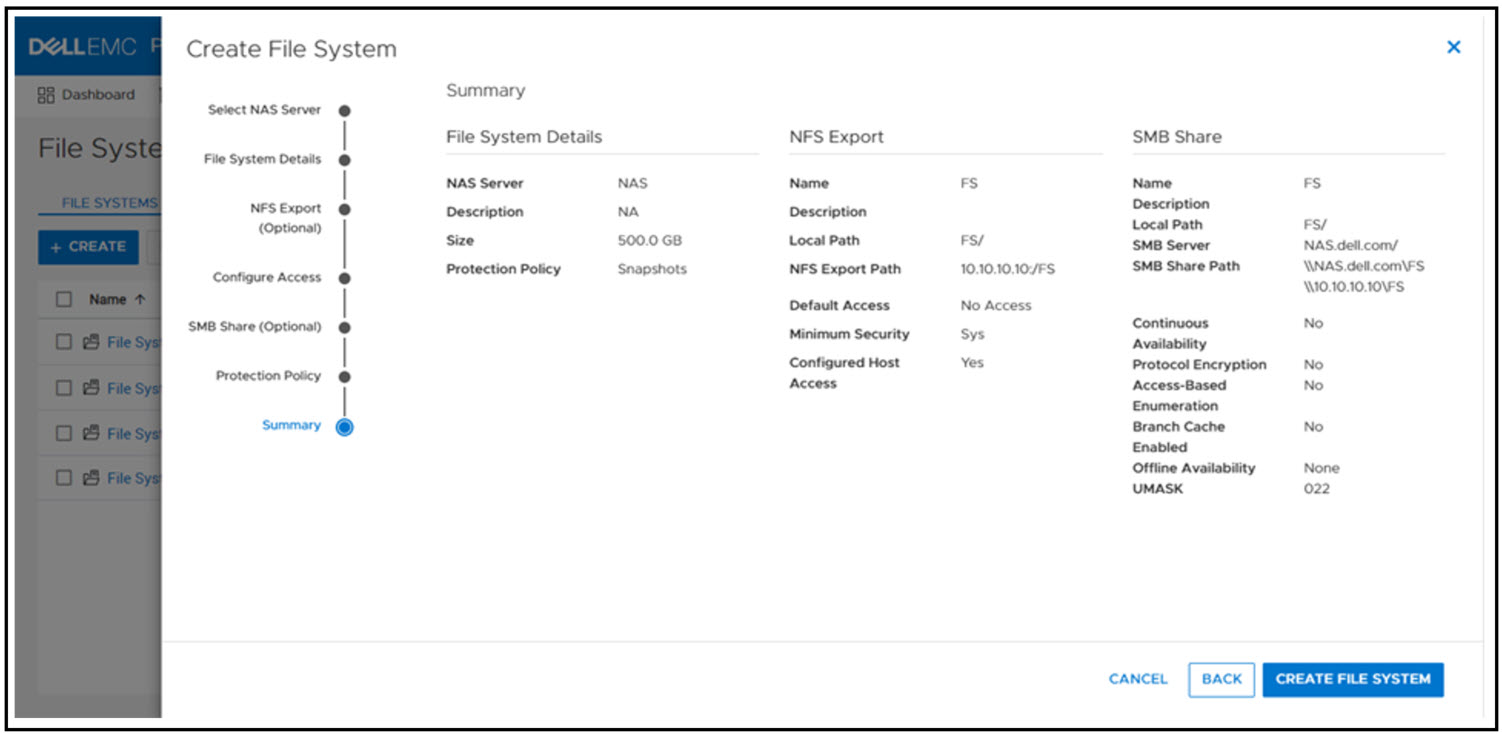

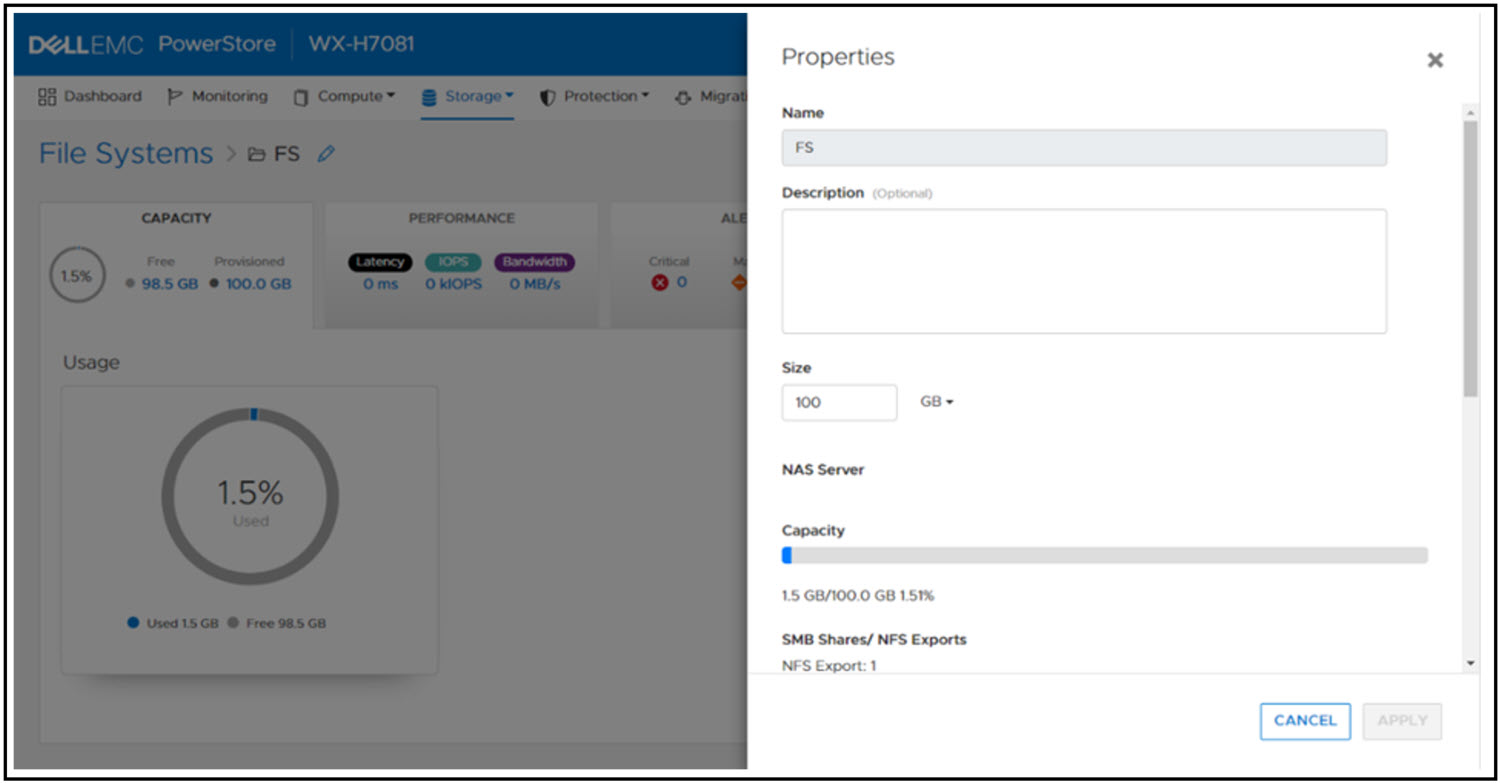

It’s time to dive into how to configure this capability with Dell PowerStore and how to implement SQL Ledger. First, we need to configure the basis for WORM storage on PowerStore for the digest portion of SQL Ledger. This is done by creating a file system that is configured for FLR. Due to the write-once feature, this is typically a separate, dedicated file system. PowerStore supports multiple file systems on the same appliance, so dedicating a separate file system to WORM is not an issue.

WORM functionality is implemented by configuring PowerStore FLR. You have the option of FLR-Enterprise (FLR-E) or FLR-Compliance (FLR-C). FLR-C is the most restrictive.

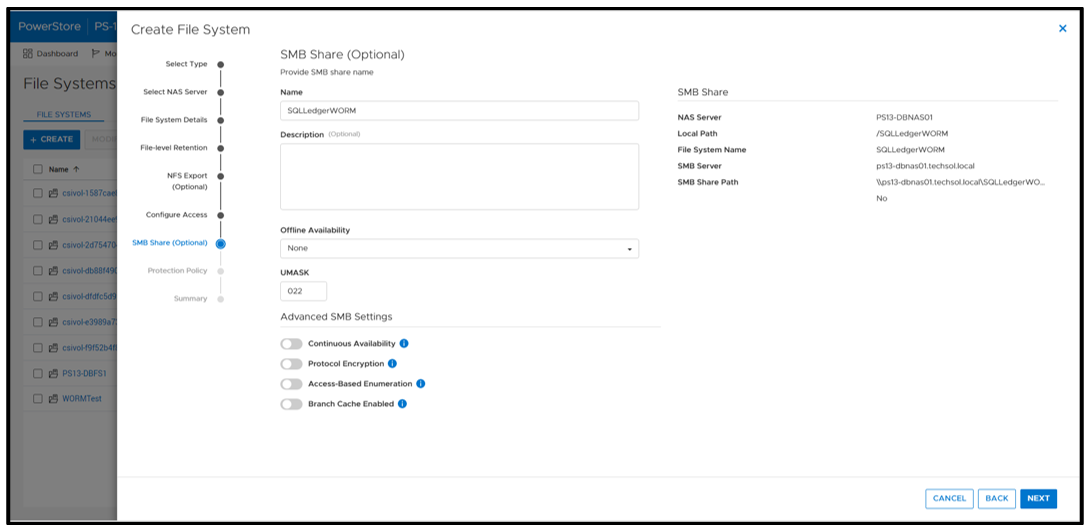

Next, configure an SMB (Server Message Block) Share for SQL Server to access the files. The settings here can be configured as needed; in this example, I am just using the defaults.

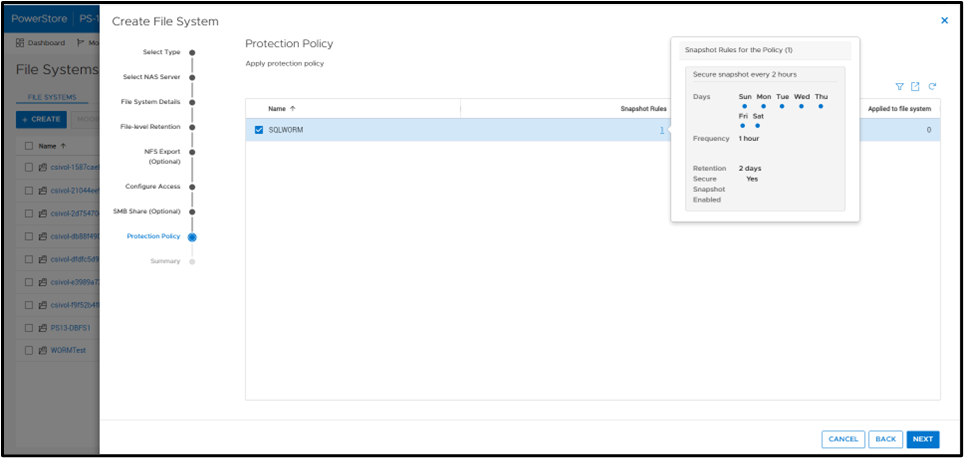

A best practice is to apply a protection policy. In this case, I am selecting a protection policy that has secure snapshot enabled. This will provide additional copies of the data that cannot be deleted prior to the retention period.

SQL Server database ops

Once the file system has been created, you can create a SQL Server database for ledger tables. Only ledger tables can exist in a ledger database. Therefore, it is common to create a separate database.

Database creation

The T-SQL CREATE DATABASE clause WITH LEDGER=ON indicates that it is a ledger database. Using a database name of SQLLedgerDemo, the most basic T-SQL syntax would be:

CREATE DATABASE [SQLLedgerDemo] WITH LEDGER = ON

Ledger requires snapshot isolation to be enabled on the database with the following T-SQL command:

ALTER DATABASE SQLLedgerDemo SET ALLOW_SNAPSHOT_ISOLATION ON

Now that the database has been created, you can create either updatable or append-only ledger tables. These are database tables enhanced for SQL Ledger. Creating ledger tables is storage agnostic, so I am going to skip over it for brevity, but full instructions can be found in the Microsoft ledger documentation.

SQL Server ledger digest storage

The next step is to create the tamperproof database digest storage. This is where the verified block address and hash will be stored with a timestamp. The PowerStore file system that we just created will be used to fill this WORM storage device requirement. For it to operate correctly, we want to configure an append-only file. Because Windows does not have the ability to easily set this property, we can signal the PowerStore file system with FLR enabled that a file should be treated as append only.

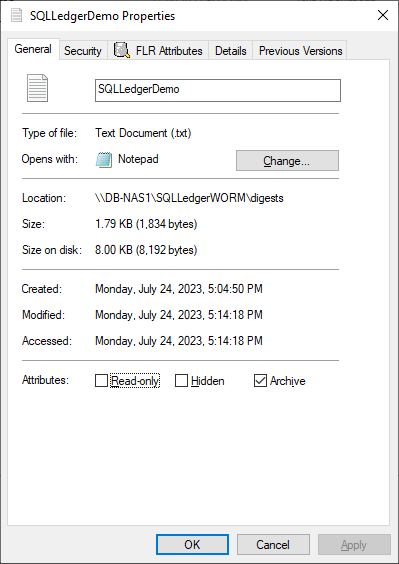

First, create an empty file. This can be done in Windows File Explorer by right-clicking, selecting New, and then selecting Text Document. Next, right-click the file and select Properties. Select the Read-only attribute and click Apply. Then clear the Read-only attribute, click Apply again, and click OK.

Note: This action must be done on an empty file. It will not work once the file has been written to.

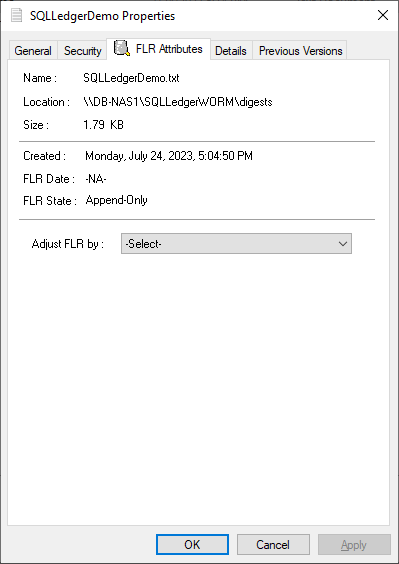

Notice that the dialog has an FLR Attributes tab. This is installed with the Dell FLR Toolkit and provides additional options not available in Windows, such as setting a file retention. Below we can also see that the FLR State of the file is set to Append-Only.

The FLR Toolkit can be downloaded from the Dell Support site. In addition to providing the ability to view the PowerStore FLR state, the FLR toolkit contains an FLR Explorer as well as additional command-line utilities.

The SQLLedgerDemo.txt file is now in the WORM state for SQL ledger digest storage: It is locked for delete and update, and can only be read or appended to.

For those of you running SQL Server on Linux, the process to create the append-only file is the same. Create an empty file and then use the chmod command to remove and then reapply write permissions.
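The same create-then-toggle sequence can be scripted. The following Python sketch assumes the digest file lives on a mount of the FLR-enabled PowerStore file system; the function name and path are hypothetical, and on an ordinary local file system the calls only toggle permission bits without producing any FLR state.

```python
import os
import stat
import tempfile


def prepare_digest_file(path: str) -> None:
    """Create an empty file, then remove and reapply write permission."""
    # Mode "x" fails if the file already exists, because the toggle
    # must be done on an empty file that has never been written to.
    with open(path, "x"):
        pass
    mode = stat.S_IMODE(os.stat(path).st_mode)
    write_bits = stat.S_IWUSR | stat.S_IWGRP | stat.S_IWOTH
    os.chmod(path, mode & ~write_bits)   # remove write permission
    os.chmod(path, mode | stat.S_IWUSR)  # reapply write permission for the owner


if __name__ == "__main__":
    # Assumption: a temporary directory stands in for the FLR file system mount.
    with tempfile.TemporaryDirectory() as mount_point:
        digest_file = os.path.join(mount_point, "SQLLedgerDemo.txt")
        prepare_digest_file(digest_file)
        print(oct(stat.S_IMODE(os.stat(digest_file).st_mode)))
```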

Before we get into populating the digest file, let’s understand what is being logged and how it is used. The digest file SQLLedgerDemo.txt will be populated by the output of a SQL Server stored procedure.

SQL Server ledger digest generation

Running the stored procedure sp_generate_database_ledger_digest on a ledger database produces the block ID and hash for our database verification. In this example, the output is:

{"database_name":"SQLLedgerDemo","block_id":0,"hash":"0xB222F775C84DC77BBA98B3C0E4E163484518102A10AE6D6DF216AFEDBD6D02E2","last_transaction_commit_time":"2023-07-24T13:52:20.3166667","digest_time":"2023-07-24T23:17:24.6960600"}

This stored procedure is then run at regular intervals to produce a timestamped entry that can be used for validation.

SQL Server ledger digest verification

Using this info, another stored procedure, sp_verify_database_ledger, will recompute the hash for the given block ID to see if they match using the preceding output. You validate the block by passing in a previously generated digest to the stored procedure:

sp_verify_database_ledger N'{"database_name":"SQLLedgerDemo","block_id":0,"hash":"0xB222F775C84DC77BBA98B3C0E4E163484518102A10AE6D6DF216AFEDBD6D02E2","last_transaction_commit_time":"2023-07-24T13:52:20.3166667","digest_time":"2023-07-24T23:17:24.6960600"}'

If it returns 0, there is a match; otherwise, you receive the following errors. You can verify this by modifying the hash value and calling sp_verify_database_ledger again, which will produce these errors.

Msg 37368, Level 16, State 1, Procedure sp_verify_database_ledger, Line 1 [Batch Start Line 10] The hash of block 0 in the database ledger does not match the hash provided in the digest for this block.

Msg 37392, Level 16, State 1, Procedure sp_verify_database_ledger, Line 1 [Batch Start Line 10] Ledger verification failed.

Automating ledger digest generation and validation

Using these stored procedures, you can put an automated process in place to generate a new ledger digest, append it to the file created above, and then verify that there is a match. If there is not a match, then you can go back to the last entry in the digest to determine when the corruption or tampering took place. If you're following the SQL Server Best Practices for PowerStore, you're taking regular snapshots of your database to enable fast point-in-time recovery. Because Dell PowerStore snapshots are secure and immutable, they serve as a fast recovery point, adding an additional layer of protection to critical data.

Because the generation and validation is driven with SQL Server stored procedures, automating the process using your favorite tools is extremely easy. Pieter Vanhove at Microsoft wrote a blog post, Ledger - Automatic digest upload for SQL Server without Azure connectivity, about how to automate the digest generation and verification using SQL Agent. The blog post contains sample scripts to create SQL Server Agent jobs to automate the entire process!
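As a rough sketch of the file-handling half of such a process, the Python below appends digest JSON to the digest file and reads the entries back. It assumes one JSON document per line, which is a layout chosen for this example rather than a SQL Server requirement, and all function names are hypothetical. Running the stored procedures against SQL Server is deliberately left out.

```python
import json
import os
import tempfile


REQUIRED_KEYS = ("database_name", "block_id", "hash", "digest_time")


def append_digest(path: str, digest_json: str) -> dict:
    """Validate a digest and append it as one line to the digest file."""
    entry = json.loads(digest_json)  # raises ValueError on malformed JSON
    for key in REQUIRED_KEYS:
        if key not in entry:
            raise ValueError(f"digest is missing {key!r}")
    # Append mode only ever adds to the end, which suits an append-only file.
    with open(path, "a", encoding="utf-8") as handle:
        handle.write(json.dumps(entry, separators=(",", ":")) + "\n")
    return entry


def read_digests(path: str) -> list:
    """Return all digest entries in the order they were appended."""
    with open(path, encoding="utf-8") as handle:
        return [json.loads(line) for line in handle if line.strip()]


def latest_digest(path: str):
    """Return the most recently appended digest, or None if the file is empty."""
    entries = read_digests(path)
    return entries[-1] if entries else None


if __name__ == "__main__":
    sample = ('{"database_name":"SQLLedgerDemo","block_id":0,'
              '"hash":"0xB222F775C84DC77BBA98B3C0E4E163484518102A10AE6D6DF216AFEDBD6D02E2",'
              '"last_transaction_commit_time":"2023-07-24T13:52:20.3166667",'
              '"digest_time":"2023-07-24T23:17:24.6960600"}')
    with tempfile.TemporaryDirectory() as tmp:
        digest_file = os.path.join(tmp, "SQLLedgerDemo.txt")
        append_digest(digest_file, sample)
        print(latest_digest(digest_file)["block_id"])
```

The entry returned by latest_digest is what a scheduled job would pass to sp_verify_database_ledger.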

Summary

Your data can never be too secure. PowerStore secure snapshot capabilities add another layer of security to any database. PowerStore FLR capabilities and SQL Server Ledger can be combined to further secure your database data and achieve compliance with the most stringent security requirements. Should the need arise, PowerStore secure, immutable snapshots can be used as a fast recovery point.

Author:

Doug Bernhardt

Sr. Principal Engineering Technologist

https://www.linkedin.com/in/doug-bernhardt-data/

Getting Tough with PowerStore and STIG Mode: Meeting Federal Compliance Requirements

Wed, 09 Aug 2023 15:22:52 -0000


US Federal Security Technical Implementation Guide (STIG) overview

Compliance with the US Federal Security Technical Implementation Guide requirements (STIG compliance) is a critical feature for many of our customers in the Federal space. STIG compliance is also a prerequisite for the Approved Product List (APL) certification, which is itself a requirement for some Department of Defense (DoD) sites.

How PowerStoreOS 3.5 is supporting STIG

The new PowerStoreOS 3.5 release now supports STIG mode. This mode applies configuration changes to the core of the product for the system to meet STIG requirements related to the operating system, embedded web server, internal databases, and various networking functions.

Enabling STIG mode

When a user wants to enable STIG mode, they need to run a REST API command against the PowerStore cluster or use the PowerStore command line interface (PowerStore CLI). The following is an example of the REST API command where <IP> is the IP of the PowerStore cluster.

curl -kv "https://<IP>:443/api/rest/security_config/1?is_async=true" --user admin:Password -X PATCH --data '{"is_stig_enabled":true}' -H "Content-Type:application/json"

You can also enable STIG mode by issuing the following command in the PowerStore CLI:

pstcli -user admin -password Password -destination <IP> security_config -id 1 set -is_stig_enabled true -async
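For scripting, the same REST call can be assembled with the Python standard library. The sketch below mirrors the curl example (URL, PATCH method, JSON body, basic authentication) and only builds the request object; it never sends it, because STIG mode cannot be disabled once enabled. The function name, host, and credentials are placeholders, and whether your environment needs additional headers or certificate handling is not covered here.

```python
import base64
import json
import urllib.request


def build_stig_request(host: str, user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) the PATCH request that enables STIG mode."""
    url = f"https://{host}:443/api/rest/security_config/1?is_async=true"
    body = json.dumps({"is_stig_enabled": True}).encode("utf-8")
    # Equivalent of curl --user: HTTP basic authentication.
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        method="PATCH",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


if __name__ == "__main__":
    # Placeholder values; replace with the cluster IP and real credentials.
    request = build_stig_request("192.0.2.10", "admin", "Password")
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
    # Sending is intentionally omitted. Passing the request to
    # urllib.request.urlopen() would start the irreversible STIG enablement.
```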

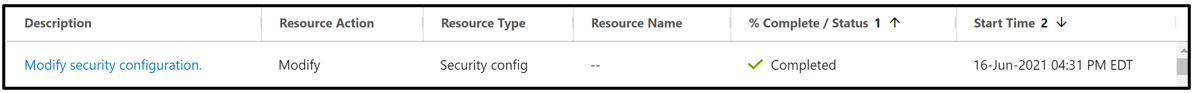

When the STIG enable process is kicked off, it takes about 45 minutes to enable STIG mode for a single appliance system. Larger clusters will take a little longer. You can confirm whether the process is running or completed by viewing the job status under Monitoring > Jobs in PowerStore Manager (Figure 1). In this example, notice that the ‘Modify security configuration’ job status is Completed.

Figure 1. STIG enablement job status

Enabling STIG is comparable to a PowerStoreOS upgrade, where the process is coordinated across the cluster, its nodes, and appliances. The process requires reboots across the nodes because of kernel changes and because additional Federal Information Processing Standard (FIPS) settings are enabled. FIPS in this case restricts the communication paths and the encryption used in those paths to be FIPS 140-2 compliant. By default, the drives in PowerStore are already leveraging FIPS encryption for storing data. STIG mode only enables the FIPS communication path part of the FIPS compliance, at the kernel level. This includes items such as data in flight and replication peers.

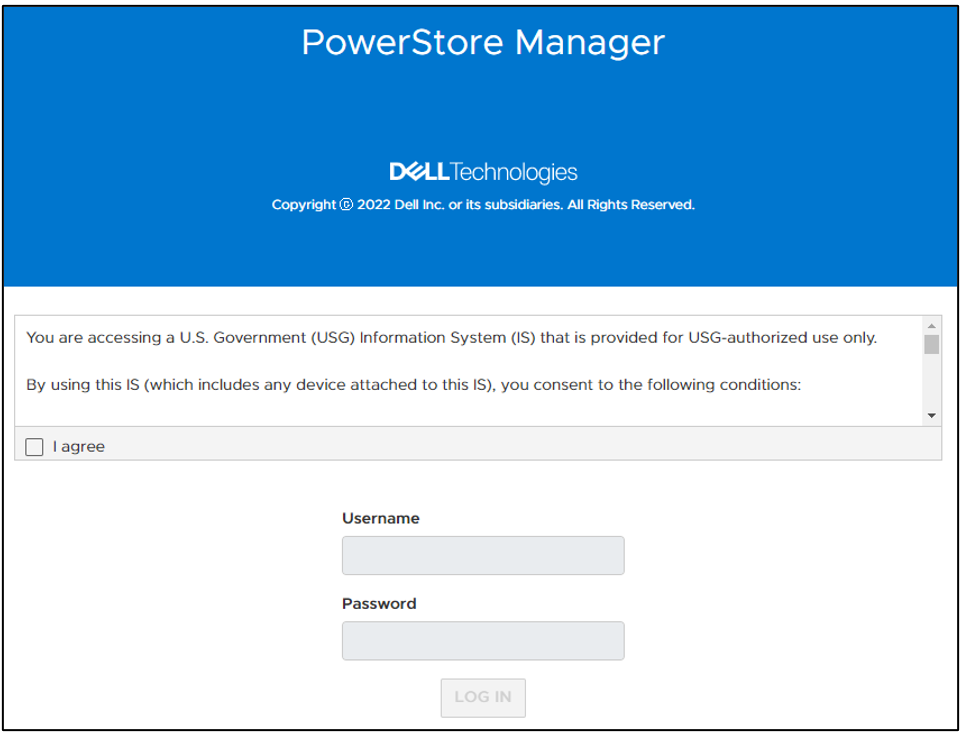

Disabling STIG mode, after it is enabled, is not supported. This is because a user enabling STIG mode is protecting top secret data, and we don’t want to enable anyone to disable this mode. The only way to remove or disable STIG mode from the PowerStore would be to perform a factory reset, which would delete all data. When STIG mode is enabled, PowerStore Manager displays a new login banner, as shown in Figure 2.

Figure 2. New STIG login banner

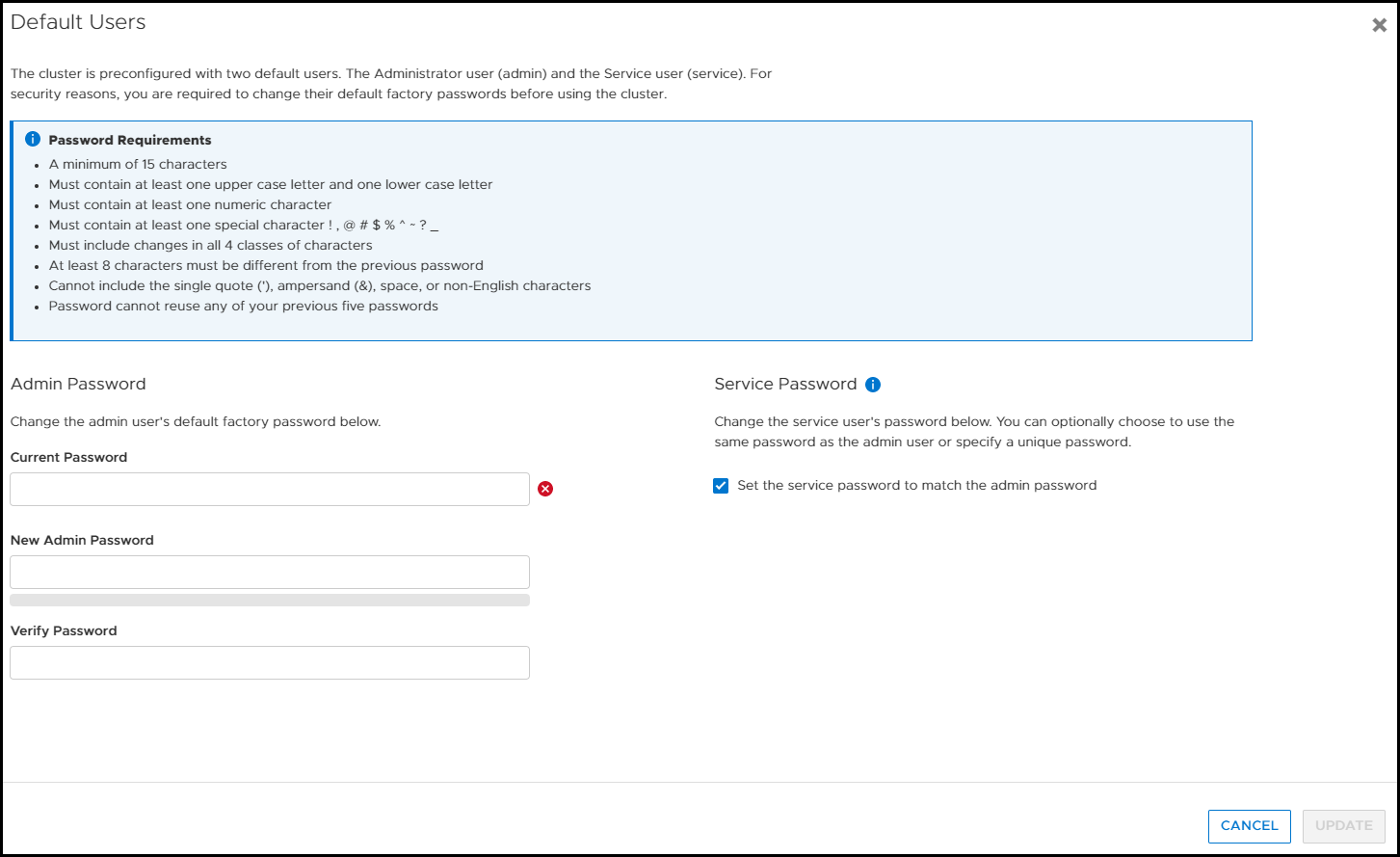

The user needs to scroll through this banner and click I agree to be able to input their credentials. They are then prompted to create a new password that meets STIG requirements and increases the security posture of the system. These requirements are outlined in the blue section of Figure 3.

Figure 3. Update password to meet STIG requirements

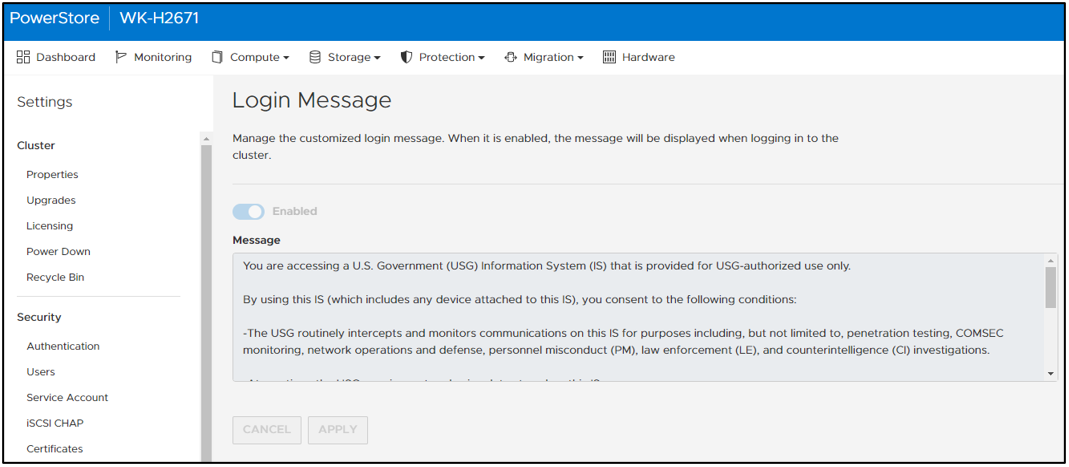

After logging in for the first time, you will notice a few of the changes that STIG mode makes in PowerStore Manager. Under Settings, the Login Message can no longer be disabled or changed. In Figure 4, notice that Enabled is grayed out and the login message is read-only. (If this system weren’t in STIG mode, users would be able to set their own login banner message, and enable or disable it as they see fit.)

Figure 4. Login message can’t be changed or disabled

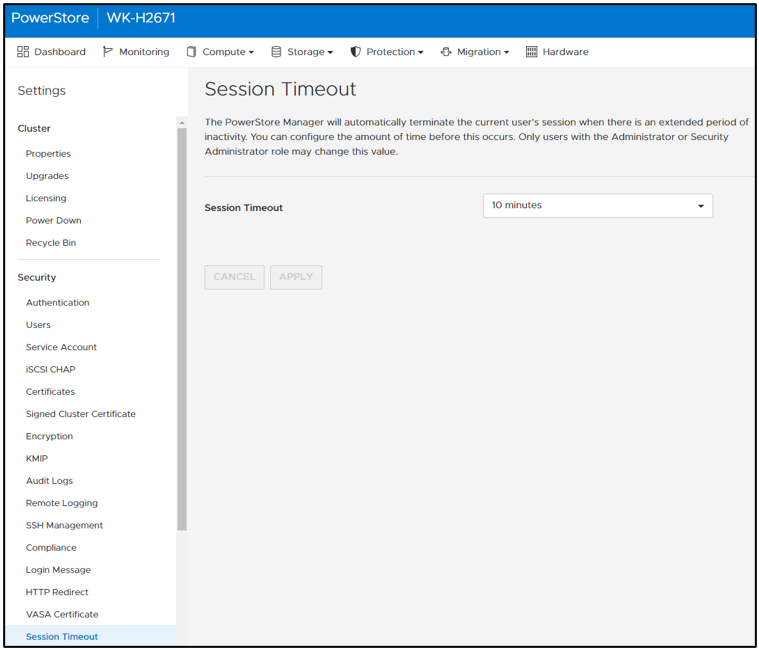

In PowerStore Manager, under Settings > Security > Session Timeout, only users with the Administrator or Security Administrator role can change the session timeout value. The options are 5, 10, and 20 minutes. Ten minutes is the default for STIG mode (Figure 5).

Figure 5. Default STIG mode session timeout

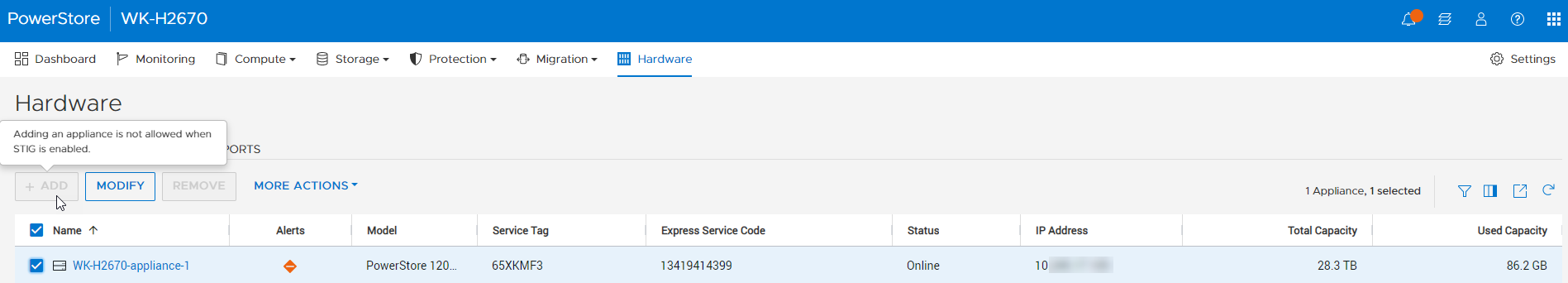

STIG mode also disables the ability for users to add PowerStore appliances to a STIG enabled cluster. Users who want to use multiple appliances must join them together before enabling STIG mode. This helps ensure a high security posture. On the Hardware page, notice that the ADD button is grayed out and that mousing over it displays a tooltip message (Figure 6).

Figure 6. Add appliance disabled

After STIG mode is enabled, the Advanced Intrusion Detection Environment (AIDE) is also enabled on PowerStore. AIDE runs a scan once a day to look for file tampering of system files. This is another method that STIG uses to protect the PowerStore. Because PowerStore system files should only be changed during upgrades, it is easy for AIDE to detect tampering. If tampering is detected, PowerStore alerts appear, and the audit log is updated.

Conclusion

This blog provided you a quick glimpse into how easy it is to enable STIG mode on PowerStore to increase the system’s security posture and meet Federal compliance requirements. We went over some of the basic changes that STIG mode makes on the surface. Many more security items are changed underneath the covers of PowerStore to make it secure for Federal environments. Federal users will benefit from these security features and still be able to take advantage of PowerStore’s intuitive interface.

Resources

For more information about PowerStoreOS 3.5, and PowerStore in general, check out these resources:

- Dell Technologies PowerStore Info Hub

- Dell PowerStore: Cybersecurity White Paper

- Dell PowerStore: Blogs

Author: Andrew Sirpis

Dell PowerStore: vVol Replication with PowerCLI

Wed, 14 Jun 2023 14:57:44 -0000


Overview

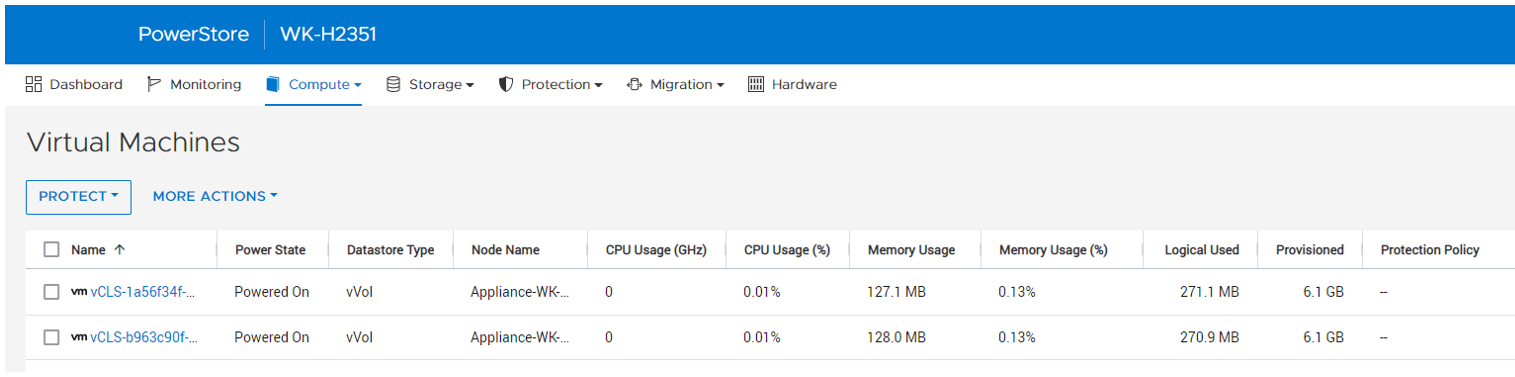

In PowerStoreOS 2.0, we introduced asynchronous replication of vVol-based VMs. In addition to using VMware SRM to manage and control the replication of vVol-based VMs, you can also use VMware PowerCLI to replicate vVols. This blog shows you how.

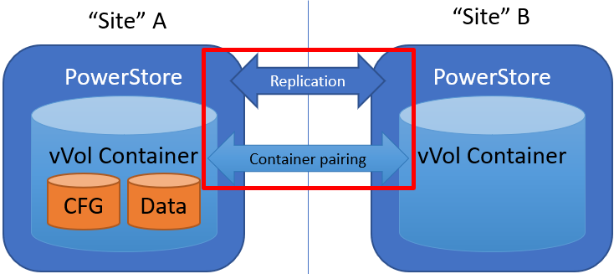

To protect vVol-based VMs, the replication leverages vSphere storage policies for datastores. Placing VMs in a vVol storage container with a vSphere storage policy creates a replication group. The solution uses VASA 3.0 storage provider configurations in vSphere to control the replication of all individual configuration-, swap-, and data vVols in a vSphere replication group on PowerStore. All vVols in a vSphere replication group are managed in a single PowerStore replication session.

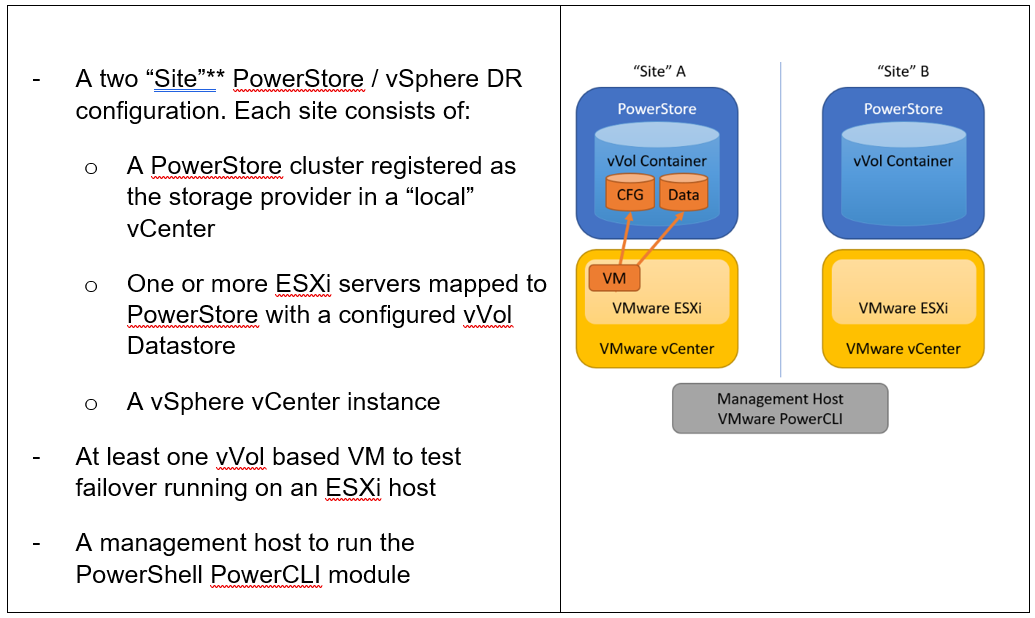

Requirements for PowerStore asynchronous vVol replication with PowerCLI:

Note: As in VMware SRM, I’m using the term “site” to differentiate between primary and DR installation. However, depending on the use case, all systems could also be located at a single location.

Let’s start with some terminology used in this blog.

PowerStore cluster | A configured PowerStore system that consists of one to four PowerStore appliances. |

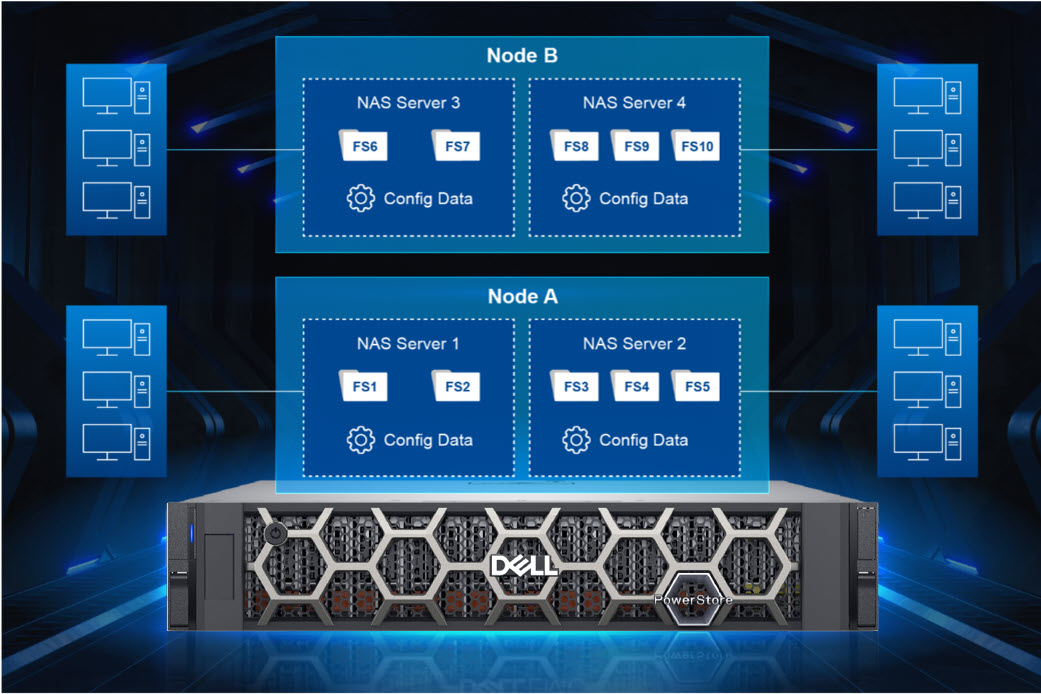

PowerStore appliance | A single PowerStore entity that comes with two nodes (node A and node B). |

PowerStore Remote Systems (pair) | Definition of a relationship of two PowerStore clusters, used for replication. |

PowerStore Replication Rule | A replication configuration used in policies to run asynchronous replication. The rule provides information about the remote systems pair and the targeted recovery point objective time (RPO). |

PowerStore Replication Session | One or more storage objects configured with a protection policy that include a replication rule. The replication session controls and manages the replication based on the replication rule configuration. |

VMware vSphere VM Storage Policy | A policy to configure the required characteristics for a VM storage object in vSphere. For vVol replication with PowerStore, the storage policy leverages the PowerStore replication rule to set up and manage the PowerStore replication session. A vVol-based VM consists of a config vVol, swap vVol, and one or more data vVols. |

VMware vSphere Replication Group | In vSphere, the replication is controlled in a replication group. For vVol replication, a replication group includes one or more vVols. The granularity for failover operations in vSphere is replication group. A replication group uses a single PowerStore replication session for all vVols in that replication group. |

VMware Site Recovery Manager (SRM) | A tool that automates failover from a production site to a DR site. |

Preparing for replication

As with VMware SRM, some preparation steps are required for replicated vVol-based VMs:

Note: When frequently switching between vSphere and PowerStore, an item may not be available as expected. In this case, a manual synchronization of the storage provider in vCenter might be required to make the item immediately available. Otherwise, you must wait for the next automatic synchronization.

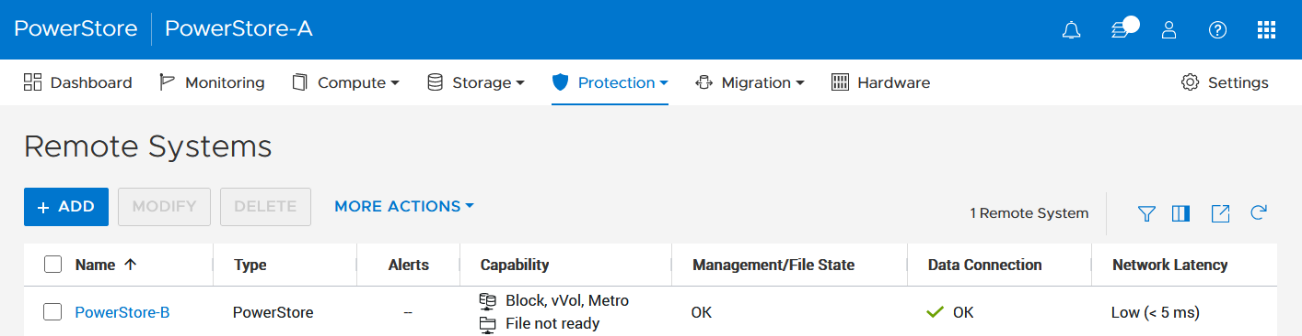

1. Using the PowerStore UI, set up a remote system relationship between participating PowerStore clusters. It’s only necessary to perform this configuration on one PowerStore system. When a remote system relationship is established, it can be used by both PowerStore systems.

Select Protection > Remote Systems > Add remote system.

When there is only a single storage container on each PowerStore in a remote system relationship, PowerStoreOS also creates the container protection pairing required for vVol replication.

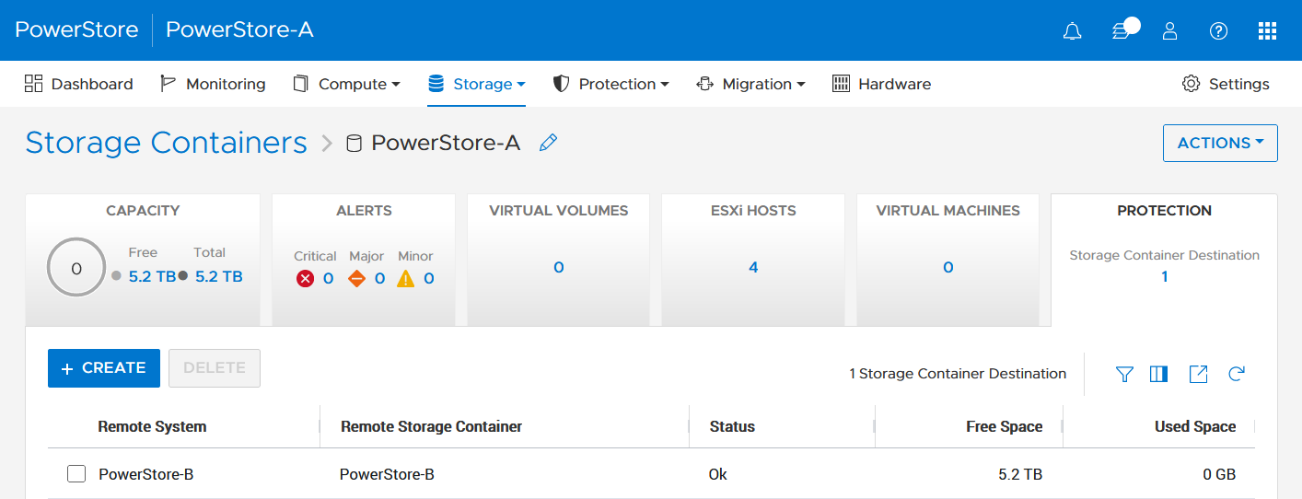

To check the configuration or create storage container protection pairing when more storage containers are configured, select Storage > Storage Containers > [Storage Container Name] > Protection.

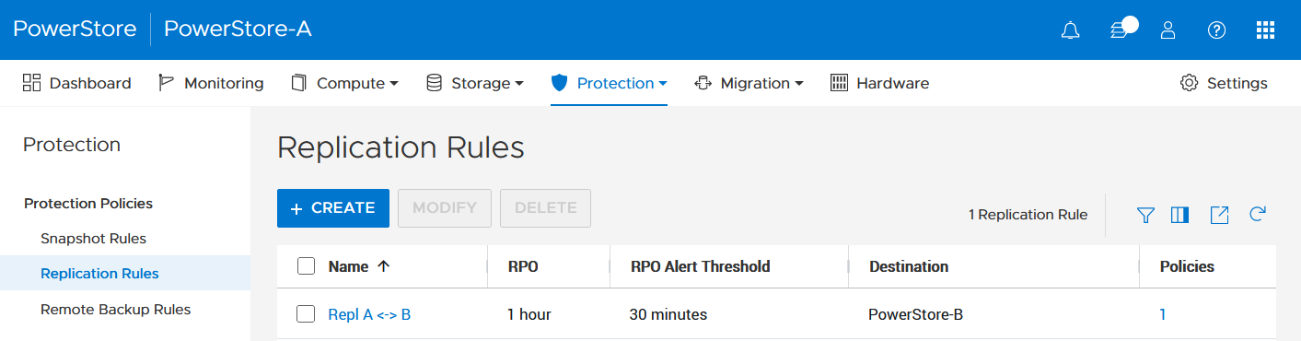

2. The VMware Storage Policy (which is created in a later step) requires existing replication rules on both PowerStore systems, ideally with the same characteristics. For this example, the replication rule replicates from PowerStore-A to PowerStore-B with an RPO of one hour, and 30 minutes for the RPO Alert Threshold.

Select Protection > Protection Policies > Replication Rules.

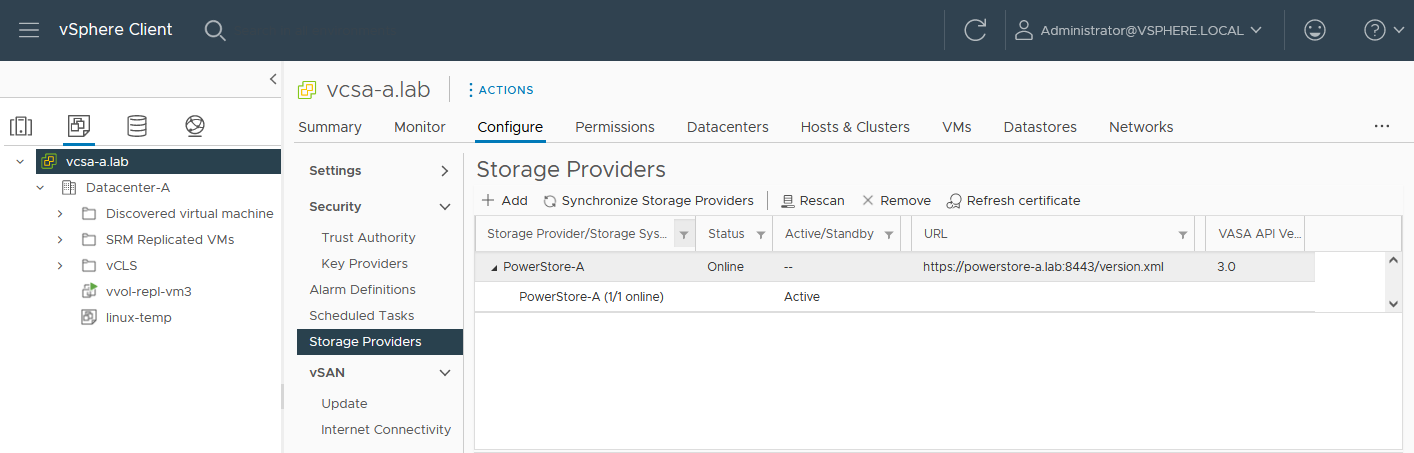

3. As mentioned in the Overview, VASA 3.0 is used for the communication between PowerStore and vSphere. If not configured already, register each local PowerStore as the storage provider in its corresponding vCenter instance.

In the vSphere Client, select [vCenter server] > Configuration > Storage Providers.

Use https://<PowerStore>:8443/version.xml as the URL with the PowerStore user and password to register the PowerStore cluster.

Alternatively, use PowerStore for a bidirectional registration. When vCenter is registered in PowerStore, PowerStoreOS gets more insight about running VMs for that vCenter. However, in the current release, PowerStoreOS can only handle a single vCenter connection for VM lookups. When PowerStore is used by more than one vCenter, it’s still possible to register a PowerStore in a second vCenter as the storage provider, as mentioned before.

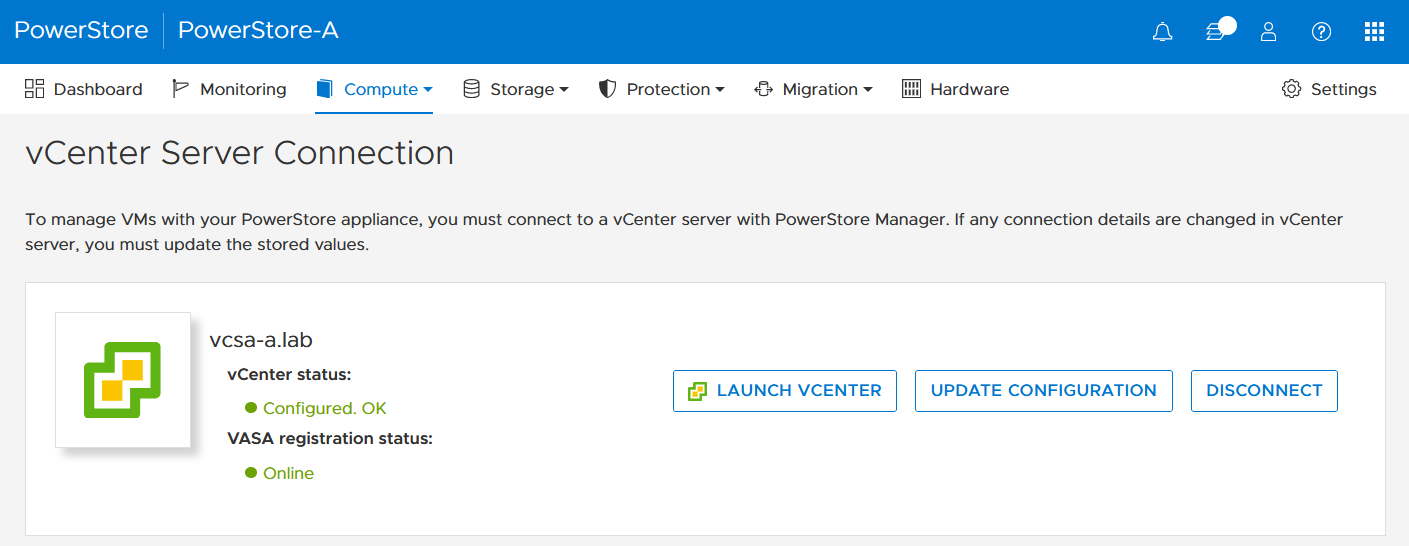

In the PowerStore UI, select Compute > vCenter Server Connection.

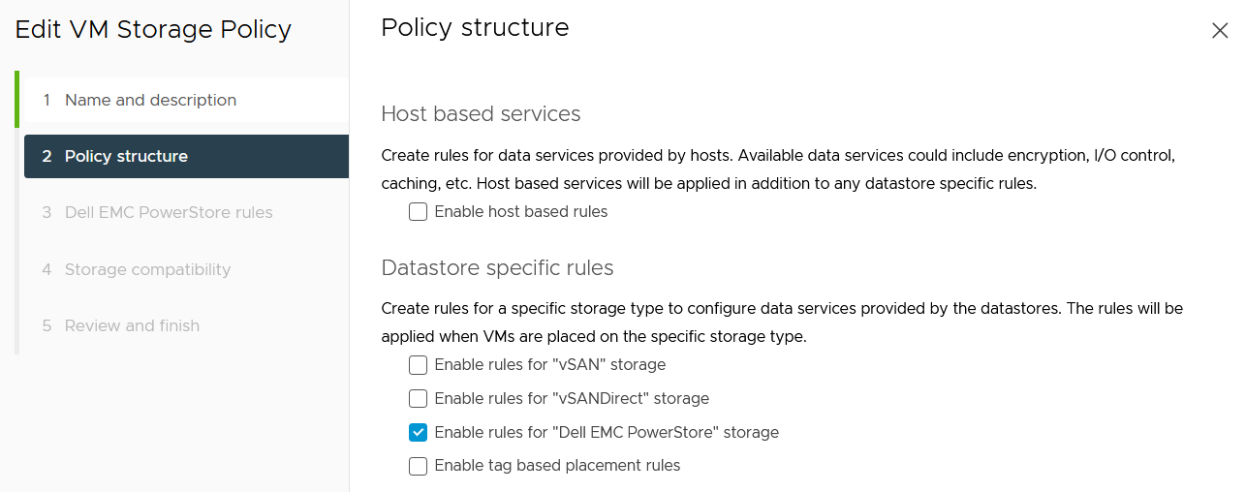

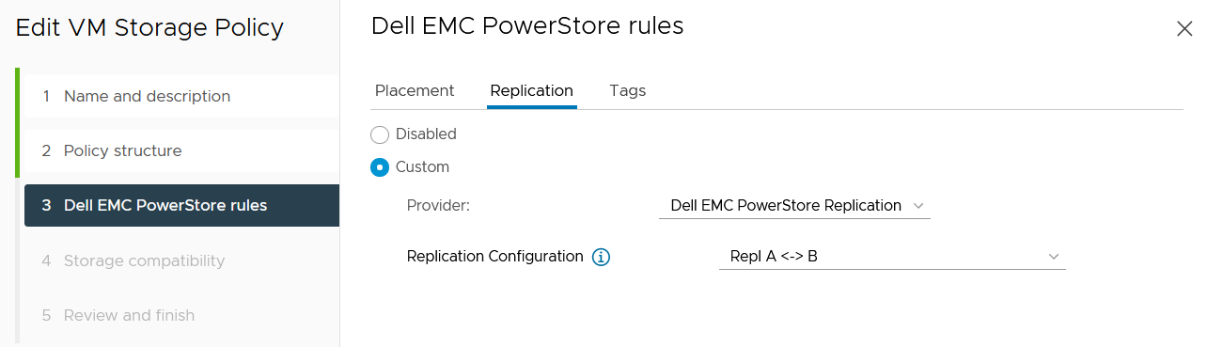

4. Set up a VMware storage policy with a PowerStore replication rule on both vCenters.

The example script in the section Using PowerCLI and on myScripts4u@github requires the same Storage Policy name for both vCenters.

In the vSphere Client, select Policies and Profiles > VM Storage Policies > Create.

Enable “Dell EMC PowerStore” storage for datastore specific rules:

then choose the PowerStore replication rule:

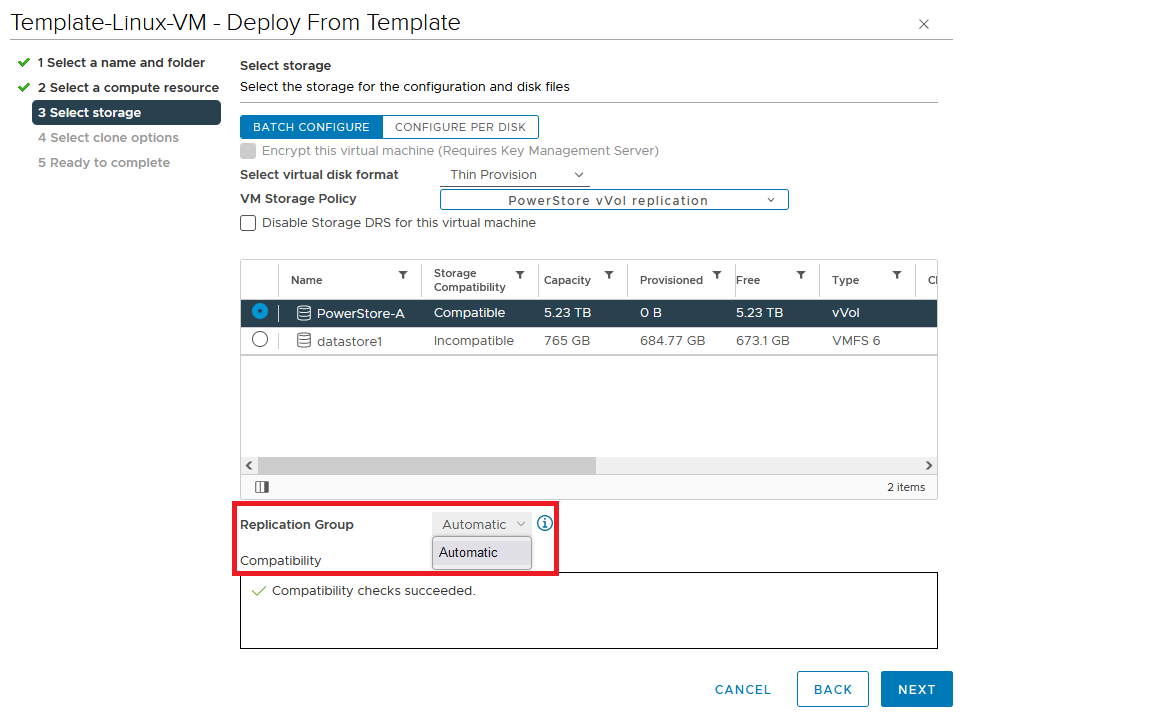

5. Create a VM on a vVol storage container and assign the storage protection policy with replication.

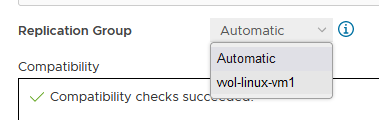

When a storage policy with replication is set up for a VM, you must specify a replication group. Selecting “automatic” creates a replication group with the name of the VM. Multiple VMs can be protected in one replication group.

When deploying another VM on the same vVol datastore, the name of the other replicated VM appears in the list for the Replication Group.

All vVol replication operations are performed at replication group granularity. For instance, it’s not possible to fail over only a single VM of a replication group.

That’s it for the preparation! Let’s continue with PowerCLI.

Using PowerCLI

Disclaimer: The PowerShell snippets shown below are developed only for educational purposes and provided only as examples. Dell Technologies and the blog author do not guarantee that this code works in your environment or can provide support in running the snippets.

To get the required PowerStore modules for PowerCLI, start PowerShell or PowerShell ISE and use Install-Module to install VMware.PowerCLI:

PS C:\> Install-Module -Name VMware.PowerCLI

The following example uses the replication group “vvol-repl-vm1”, which includes the virtual machines “vvol-repl-vm1” and “vvol-repl-vm2”. Because a replication group name might not be consistent with a VM to failover, the script uses the virtual machine name “vvol-repl-vm2” to get the replication group for failover.

Failover

This section shows an example failover of a vVol-based VM “vvol-vm2” from a source vCenter to a target vCenter.

1. Load modules, allow PowerCLI to connect to multiple vCenter instances, and set variables for the VM, vCenters, and vCenter credentials. The last two commands in this step establish the connections to both vCenters.

Import-Module VMware.VimAutomation.Core

Import-Module VMware.VimAutomation.Common

Import-Module VMware.VimAutomation.Storage

Set-PowerCLIConfiguration -DefaultVIServerMode 'Multiple' -Scope ([VMware.VimAutomation.ViCore.Types.V1.ConfigurationScope]::Session) -Confirm:$false | Out-Null

$virtualmachine = "vvol-vm2" # Enter VM name of a vVol VM which should failover

$vcUser = 'administrator@vsphere.local' # Change this to your VC username

$vcPass = 'xxxxxxxxxx' # VC password

$siteA = "vcsa-a.lab" # first vCenter

$siteB = "vcsa-b.lab" # second vCenter

Connect-VIServer -User "$vcUser" -Password "$vcPass" -Server $siteA -WarningAction SilentlyContinue

Connect-VIServer -User "$vcUser" -Password "$vcPass" -Server $siteB -WarningAction SilentlyContinue

2. Get replication group ($rg), replication group pair ($rgPair), and storage policy ($stoPol) for the VM. Because a replication group may have additional VMs, all VMs in the replication group are stored in $rgVMs.

$vm = get-vm $virtualmachine

# find source vCenter – this allows the script to failover (Site-A -> Site-B) and failback (Site-B -> Site-A)

$srcvCenter=$vm.Uid.Split(":")[0].Split("@")[1]

if ( $srcvCenter -like $siteA ) {

$siteSRC=$siteA

$siteDST=$siteB

} else {

$siteSRC=$siteB

$siteDST=$siteA

}

$rg = get-spbmreplicationgroup -server $siteSRC -VM $vm

$rgPair = Get-SpbmReplicationPair -Source $rg

$rgVMs=(Get-SpbmReplicationGroup -server $siteSRC -Name $rg| get-vm)

$stoPol = ( $vm | Get-SpbmEntityConfiguration).StoragePolicy.Name

3. Try a graceful shutdown of the VMs in $rgVMs and wait ten seconds. If a VM is still powered on after up to three further ten-second waits, power it off.

$rgVMs | ForEach-Object {

if ( (get-vm $_).PowerState -eq "PoweredOn")

{

stop-vmguest -VM $_ -confirm:$false -ErrorAction silentlycontinue | Out-Null

start-sleep -Seconds 10

$cnt=1

while ((get-vm $_).PowerState -eq "PoweredOn" -AND $cnt -le 3 ) {

Start-Sleep -Seconds 10

$cnt++

}

if ((get-vm $_).PowerState -eq "PoweredOn") {

stop-vm $_ -Confirm:$false | Out-Null

}

}

}

4. It’s now possible to prepare and execute the failover. A final synchronization before the failover ensures that all changes are replicated to the destination PowerStore. At the end, $vmxfile contains the vmx files that are required to register the VMs at the destination. When the failover is completed, the vVols at the failover destination are available for further steps.

$syncRg = Sync-SpbmReplicationGroup -PointInTimeReplicaName "prePrepSync" -ReplicationGroup $rgpair.target

$prepareFailover = start-spbmreplicationpreparefailover $rgPair.Source -Confirm:$false -RunAsync

Wait-Task $prepareFailover

$startFailover = Start-SpbmReplicationFailover $rgPair.Target -Confirm:$false -RunAsync

$vmxfile = Wait-Task $startFailover

5. For cleanup on the failover source vCenter, we remove the failed-over VM registrations. On the failover target, we search for a host ($vmhostDST) and register, start, and set the vSphere Policy on VMs. The array $newDstVMs will contain VM information at the destination for the final step.

$rgvms | ForEach-Object {

$_ | Remove-VM -ErrorAction SilentlyContinue -Confirm:$false

}

$vmhostDST = get-vmhost -Server $siteDST | select -First 1

$newDstVMs= @()

$vmxfile | ForEach-Object {

$newVM = New-VM -VMFilePath $_ -VMHost $vmhostDST

$newDstVMs += $newVM

}

$newDstVms | forEach-object {

$vmtask = start-vm $_ -ErrorAction SilentlyContinue -Confirm:$false -RunAsync

wait-task $vmtask -ErrorAction SilentlyContinue | out-null

$_ | Get-VMQuestion | Set-VMQuestion -Option 'button.uuid.movedTheVM' -Confirm:$false

$hdds = Get-HardDisk -VM $_ -Server $siteDST

Set-SpbmEntityConfiguration -Configuration $_ -StoragePolicy $stopol -ReplicationGroup $rgPair.Target | out-null

Set-SpbmEntityConfiguration -Configuration $hdds -StoragePolicy $stopol -ReplicationGroup $rgPair.Target | out-null

}

6. The final step enables protection from the new source.

start-spbmreplicationreverse $rgPair.Target | Out-Null

$newDstVMs | foreach-object {

Get-SpbmEntityConfiguration -HardDisk $hdds -VM $_ | format-table -AutoSize

}

Additional operations

Other operations for the VMs are test-failover and an unplanned failover on the destination. The test failover uses the last synchronized vVols on the destination system and allows us to register and run the VMs there. The vVols on the replication destination where the test is running are not changed. All changes are stored in a snapshot. The writeable snapshot is deleted when the test failover is stopped.

Test failover

For a test failover, follow Step 1 through Step 3 from the failover example and continue with the test failover. Again, $vmxfile contains VMX information for registering the test VMs at the replication destination.

$sync=Sync-SpbmReplicationGroup -PointInTimeReplicaName "test" -ReplicationGroup $rgpair.target

$prepareFailover = start-spbmreplicationpreparefailover $rgPair.Source -Confirm:$false -RunAsync

$startFailover = Start-SpbmReplicationTestFailover $rgPair.Target -Confirm:$false -RunAsync

$vmxfile = Wait-Task $startFailover

It’s now possible to register the test VMs. To avoid IP network conflicts, disable the NICs, as shown here.

$newDstVMs= @()

$vmhostDST = get-vmhost -Server $siteDST | select -First 1

$vmxfile | ForEach-Object {

write-host $_

$newVM = New-VM -VMFilePath $_ -VMHost $vmhostDST

$newDstVMs += $newVM

}

$newDstVms | forEach-object {

get-vm -name $_.name -server $siteSRC | Start-VM -Confirm:$false -RunAsync | out-null # Start VM on Source

$vmtask = start-vm $_ -server $siteDST -ErrorAction SilentlyContinue -Confirm:$false -RunAsync

wait-task $vmtask -ErrorAction SilentlyContinue | out-null

$_ | Get-VMQuestion | Set-VMQuestion -Option 'button.uuid.movedTheVM' -Confirm:$false

while ((get-vm -name $_.name -server $siteDST).PowerState -eq "PoweredOff" ) {

Start-Sleep -Seconds 5

}

$_ | get-networkadapter | Set-NetworkAdapter -server $siteDST -connected:$false -StartConnected:$false -Confirm:$false

}

After stopping and deleting the test VMs at the replication destination, use Stop-SpbmReplicationTestFailover to stop the failover test. In a new PowerShell or PowerCLI session, perform Steps 1 and 2 from the failover section to prepare the environment, then continue with the following commands.

$newDstVMs | foreach-object {

stop-vm -Confirm:$false $_

remove-vm -Confirm:$false $_

}

Stop-SpbmReplicationTestFailover $rgpair.target

Unplanned failover

For an unplanned failover, the Start-SpbmReplicationFailover cmdlet provides the -Unplanned option, which can be run against a replication group on the replication destination for immediate failover in a disaster recovery (DR) situation. Because each infrastructure and DR scenario is different, I only show how to run the unplanned failover of a single replication group.

To run an unplanned failover, the script requires the replication target group in $RgTarget. The group pair information is only available when connected to both vCenters. To get a mapping of replication groups, use Step 1 from the Failover section and execute the Get-SpbmReplicationPair cmdlet:

PS> Get-SpbmReplicationPair | Format-Table -autosize

Source Group Target Group
------------ ------------
vm1          c6c66ee6-e69b-4d3d-b5f2-7d0658a82292
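When several replication groups exist, it can help to look up the target group for one source group rather than reading it off the table. The following is a minimal sketch, not part of the original script: Get-TargetGroup is a hypothetical helper, and it assumes that each pair object exposes a Source and a Target property and that the source group has a Name property matching the "Source Group" column. The stand-in objects only exist so that the sketch runs without a vCenter connection.

```powershell
# Hypothetical helper: return the target group paired with a named source group.
# Assumption: pair objects expose .Source and .Target, and .Source has a .Name.
function Get-TargetGroup {
    param(
        [Parameter(Mandatory)] [object[]] $Pair,
        [Parameter(Mandatory)] [string] $SourceName
    )
    $Pair |
        Where-Object { $_.Source.Name -eq $SourceName } |
        ForEach-Object { $_.Target }
}

# Stand-in data mirroring the table above (no vCenter connection required).
$samplePairs = @(
    [pscustomobject]@{
        Source = [pscustomobject]@{ Name = 'vm1' }
        Target = [pscustomobject]@{ Name = 'c6c66ee6-e69b-4d3d-b5f2-7d0658a82292' }
    }
)

$target = Get-TargetGroup -Pair $samplePairs -SourceName 'vm1'
Write-Host "Target group for vm1: $($target.Name)"
```

In a live session connected to both vCenters, the same filter could be fed with the output of Get-SpbmReplicationPair instead of $samplePairs. Record the result before a disaster, because the pair information is not available from the DR vCenter alone.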

The following part shows how to execute an unplanned failover for a known replication group. The example connects to the DR vCenter and uses the replication group id as an identifier for the unplanned failover. After the failover is executed, the script registers the VMX in vCenter to bring the VMs online.

Import-Module VMware.VimAutomation.Core

Import-Module VMware.VimAutomation.Common

Import-Module VMware.VimAutomation.Storage

$vcUser = 'administrator@vsphere.local' # Change this to your VC username

$vcPass = 'xxxxxxxxxx' # VC password

$siteDR = "vcsa-b.lab" # DR vCenter

$RgTarget = "c6c66ee6-e69b-4d3d-b5f2-7d0658a82292" # Replication Group Target – required from replication Source before running the unplanned failover

# to get the information, run Get-SpbmReplicationPair | Format-Table -autosize when connected to both vCenters

Connect-VIServer -User "$vcUser" -Password "$vcPass" -Server $siteDR -WarningAction SilentlyContinue

# initiate the failover and preserve vmxfiles in $vmxfile

$vmxfile = Start-SpbmReplicationFailover -server $siteDR -Unplanned -ReplicationGroup $RgTarget

$newDstVMs= @()

$vmhostDST = get-vmhost -Server $siteDR | select -First 1

$vmxfile | ForEach-Object {

write-host $_

$newVM = New-VM -VMFilePath $_ -VMHost $vmhostDST

$newDstVMs += $newVM

}

$newDstVms | forEach-object {

$vmtask = start-vm $_ -server $siteDR -ErrorAction SilentlyContinue -Confirm:$false -RunAsync

wait-task $vmtask -ErrorAction SilentlyContinue | out-null

$_ | Get-VMQuestion | Set-VMQuestion -Option 'button.uuid.movedTheVM' -Confirm:$false

}

To recover from an unplanned failover after both vCenters are back up, perform the following required steps:

- Add a storage policy with the previous target recovery group to VMs and associated HDDs.

- Shut down (just in case) and remove the VMs on the previous source.

- Start reprotection of VMs and associated HDDs.

- Use Start-SpbmReplicationReverse to reestablish the protection of the VMs.

Conclusion

Even though Dell PowerStore and VMware vSphere do not provide native vVol failover handling, this example shows that vVol failover operations are doable with some script work. This blog should give you a quick introduction to a script-based vVol failover mechanism, perhaps for a proof of concept in your environment. Note that it would need to be extended, for example with additional error handling, before running in a production environment.

Resources

- GitHub - myscripts4u/PowerStore-vVol-PowerCLI: Dell PowerStore vVol failover with PowerCLI

- Dell PowerStore: Replication Technologies

- Dell PowerStore: VMware Site Recovery Manager Best Practices

- VMware PowerCLI Installation Guide

Author: Robert Weilhammer, Principal Engineering Technologist

https://www.xing.com/profile/Robert_Weilhammer

Reduce Worry, Reuse Resources—Dell PowerStore Recycles

Tue, 06 Jun 2023 17:24:02 -0000


When data loss occurs in an organization, it can be extremely devastating. Revenue loss, employee disruption, reputational damage, legal implications, and business closure are all potential ramifications.

Studies indicate that human error is one of the most common causes of data loss today. With modern technology scaling in complexity and capacity, human error is bound to occur. Human error in an IT ecosystem can come in many forms, including accidental deletion of data, data sprawl (unorganized data), and administrative errors.

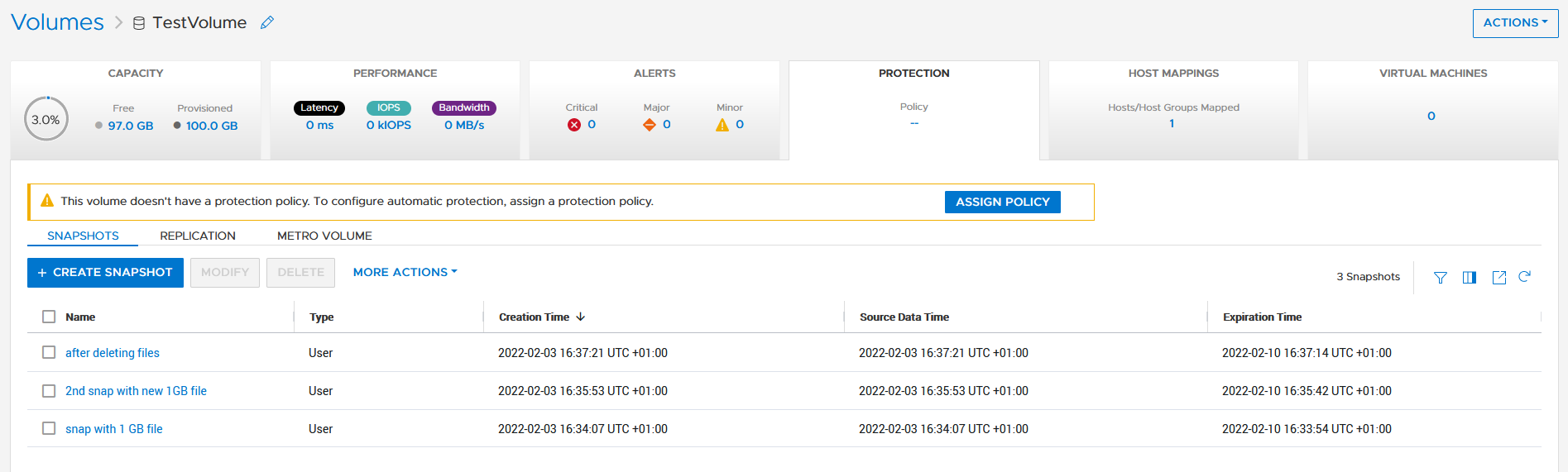

Data protection measures such as backups, snapshots, and replication may be used to rectify data loss from accidental deletion. In certain scenarios, using these measures may be time consuming and costly to a business. Users also might not have the resources to take backups or snapshots, or replicate data to another system.

Dell PowerStore Recycle Bin

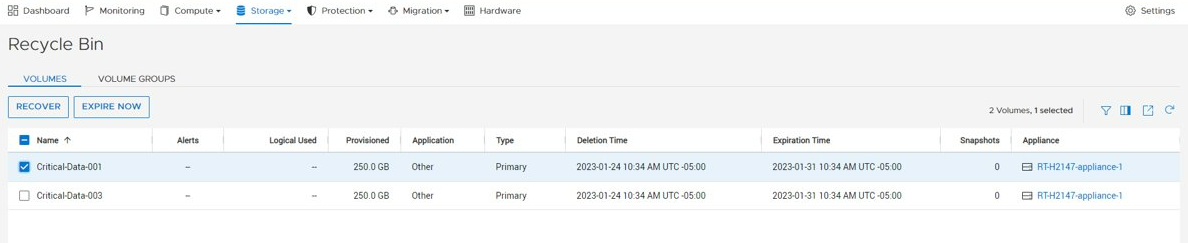

In PowerStoreOS 3.5, we introduced the Recycle Bin—a guardrail that combats the accidental deletion of block storage resources. This feature is intended to mitigate human error in an IT environment.

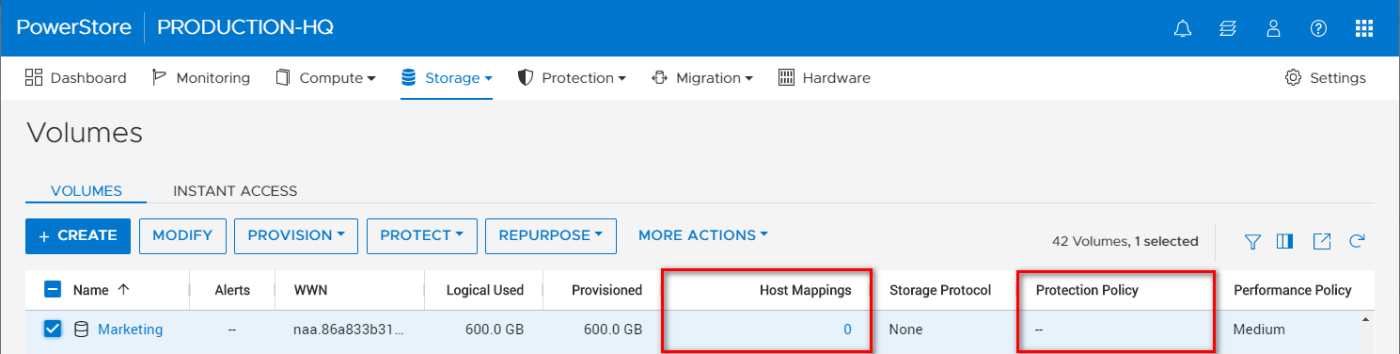

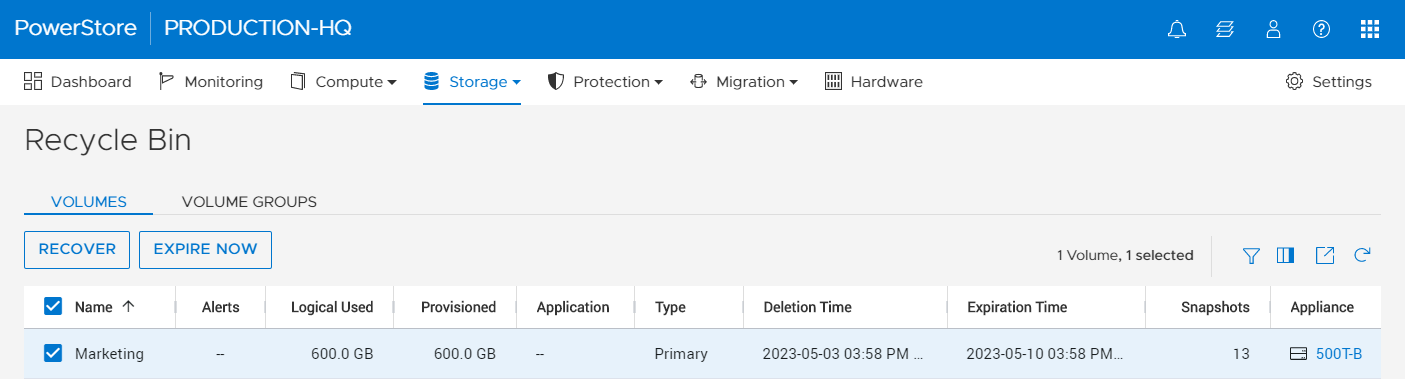

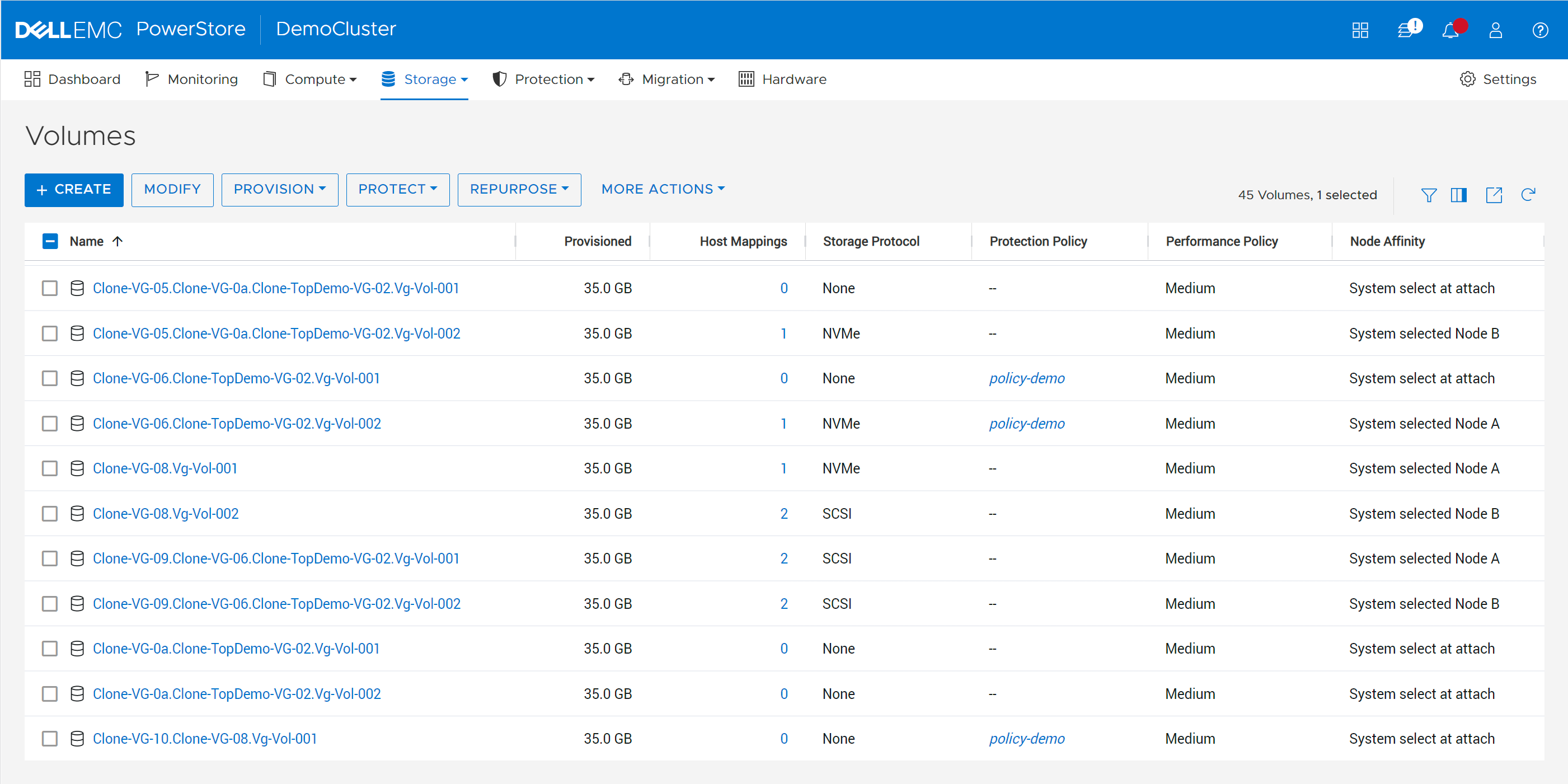

For instance, a user might mistakenly delete a mission-critical volume on a PowerStore appliance. The user initially planned on remapping and re-protecting the volume but accidentally deleted it. With the Recycle Bin automatically enabled in PowerStoreOS 3.5, the deleted resource can now be easily retrieved seconds later. The prerequisites for deleting a block storage resource in PowerStoreOS 3.5 remain the same as with previous versions, which means a block storage resource must have no associated host mappings and no protection policies. Figure 1 displays the volume named “Marketing” that fits the criteria for deletion.

Figure 1 – Volumes page

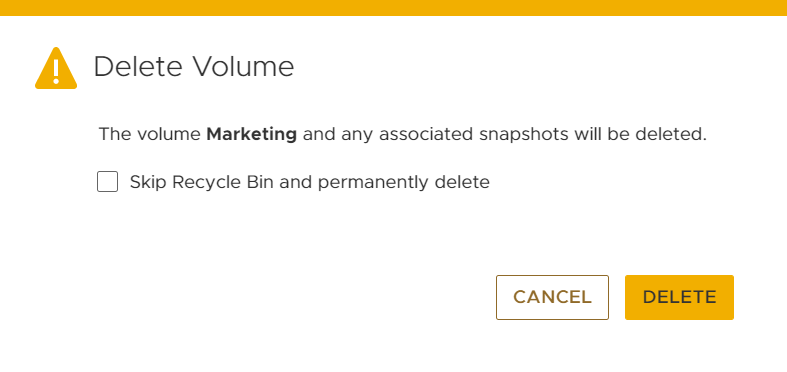

When users delete a block storage resource, they are prompted with a delete resource confirmation window, as shown in Figure 2. Users can elect to skip the Recycle Bin when deleting the block storage resource by selecting the checkbox, causing immediate deletion. By default the checkbox is not selected, and proceeding without selecting this option places the block storage resource into the Recycle Bin.

Figure 2 – Delete Volume Window

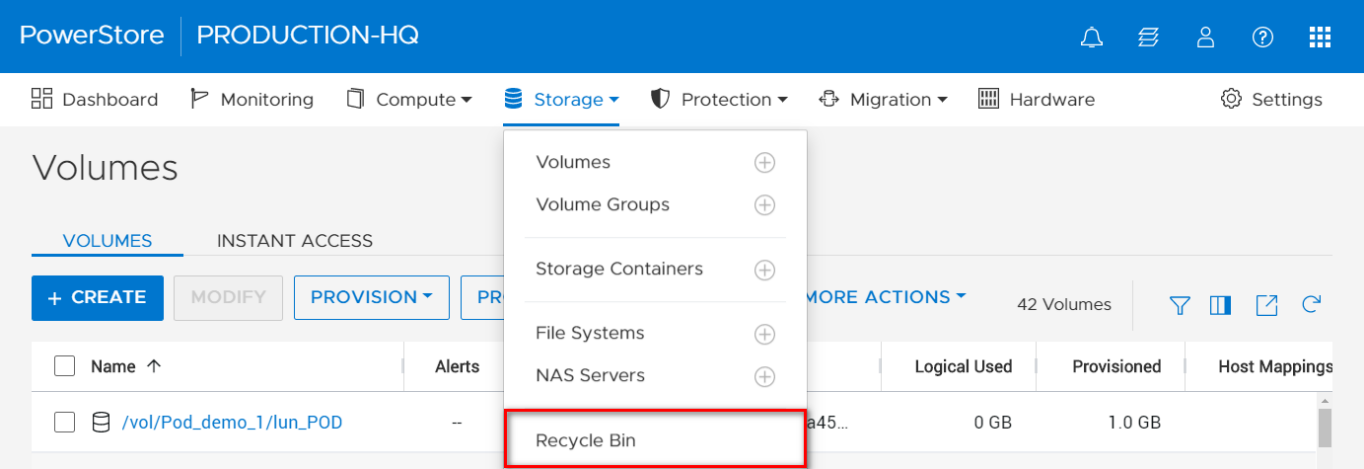

The Recycle Bin can be accessed in the PowerStore Manager UI under the Storage tab.

Figure 3 – Storage > Recycle Bin

The Recycle Bin view has sections for Volumes and Volume Groups. Once a resource is placed into the Recycle Bin, a user has the option to Recover or Expire Now, as shown in Figure 4. The Recover option recovers the block storage resource back into the storage section for use, while the Expire Now option triggers expiration to permanently delete the resource.

Figure 4 – Recycle Bin view

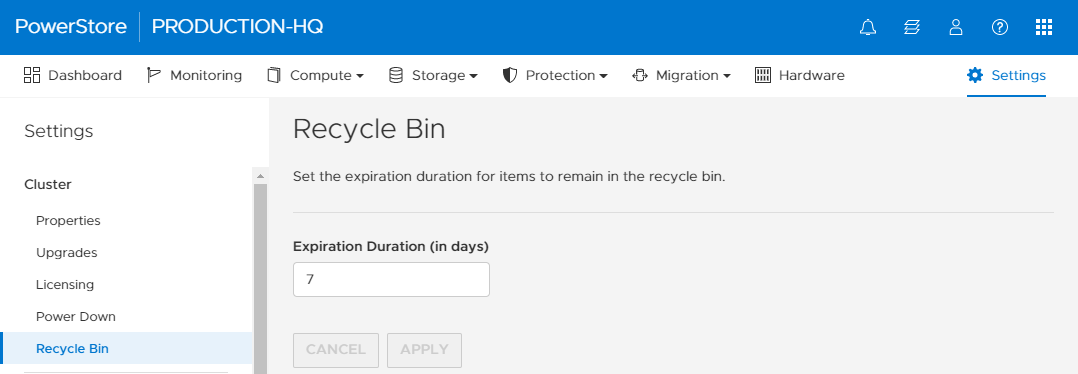

The Recycle Bin expiration duration is configured once and applies to the whole PowerStore cluster. By default, the expiration duration is set to 7 days, but it can be set to any value from 0 to 30 days. Note that resources placed in the Recycle Bin consume storage capacity and count against the PowerStore appliance limits.

Figure 5 – Settings > Cluster > Recycle Bin
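To make the effect of the expiration setting concrete, here is a minimal sketch that estimates when a recycled resource would be permanently deleted. Get-RecycleBinExpiration is a hypothetical helper, not a PowerStore cmdlet or API call, and it assumes that the expiration duration is counted from the moment of deletion. The 7-day default and the 0 to 30 day range come from the setting described above.

```powershell
# Hypothetical helper, not a PowerStore API: estimate the expiration time of a
# resource placed in the Recycle Bin.
# Assumption: the duration is counted from the time of deletion.
function Get-RecycleBinExpiration {
    param(
        [Parameter(Mandatory)] [datetime] $DeletedAt,
        [ValidateRange(0, 30)] [int] $ExpirationDays = 7   # cluster-wide setting
    )
    $DeletedAt.AddDays($ExpirationDays)
}

$deleted = [datetime]'2023-06-06T17:24:00'

# Default cluster setting (7 days)
Get-RecycleBinExpiration -DeletedAt $deleted

# Longest allowed setting (30 days)
Get-RecycleBinExpiration -DeletedAt $deleted -ExpirationDays 30
```

Values outside the 0 to 30 range are rejected by the parameter validation, which mirrors the limits of the cluster setting. A longer duration gives more time to notice a mistake, at the cost of keeping the capacity occupied for longer.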

Conclusion

Data is the core component of your organization. It’s what makes your business operational, which is why it is imperative to protect it. We’ve all had those difficult days at work, and mistakes can happen. It’s great to have technology that is forgiving when those errors occur. I hope this blog gave you a quick glimpse of Dell PowerStore Recycle Bin’s resilience and how it can benefit your organization.


Author: Louie Sasa, Senior Engineering Technologist

PowerStore and RSA SecurID – Solving your Multi-Factor Authentication Requirements

Thu, 08 Jun 2023 16:30:18 -0000


PowerStore authentication overview

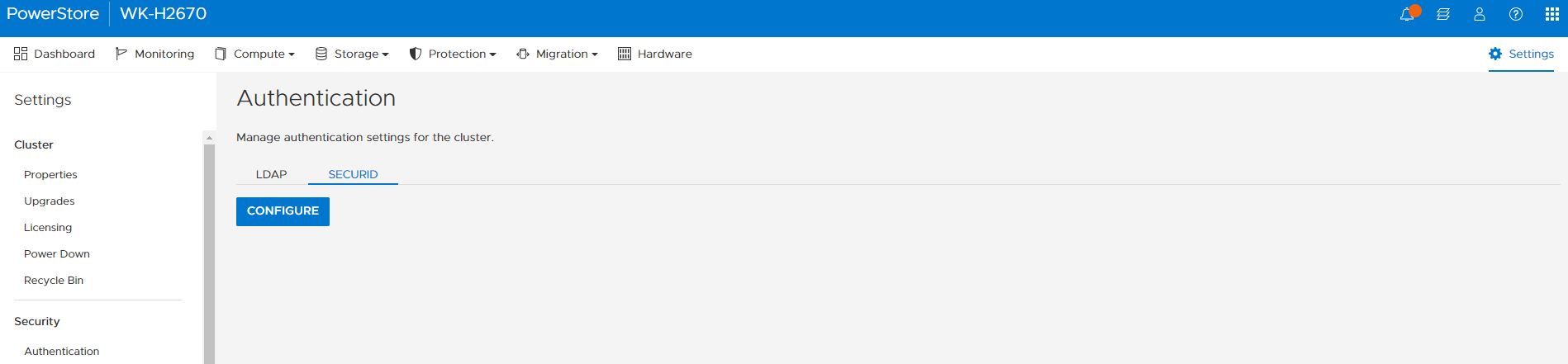

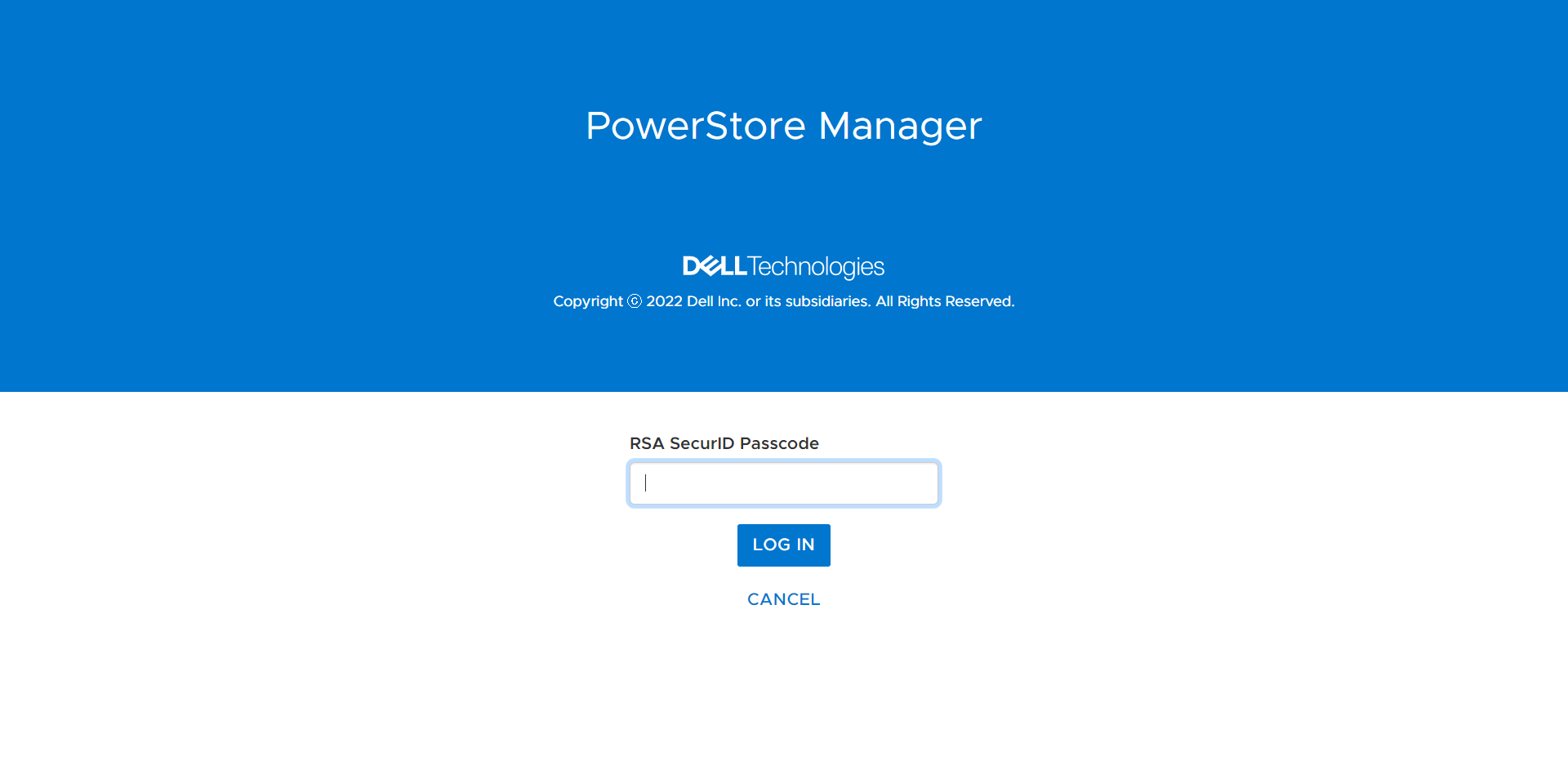

Previous versions of PowerStore supported basic or single factor authentication for both local and LDAP users. With growing security concerns in the global environment, many users are constantly looking to improve their security posture. The PowerStoreOS 3.5 release helps meet this goal by implementing multi-factor authentication support.

Improved security and authentication in PowerStoreOS 3.5

The new PowerStoreOS 3.5 release supports multi-factor authentication (MFA) with RSA SecurID. Multi-factor authentication, also known as advanced or two-factor authentication, provides an additional layer of security when logging into or performing functions on the system. It is a core component of a strong identity and access management (IAM) policy, which is critical to many organizations in today’s security climate.

Multi-factor authentication provides a higher security posture and has many advantages. It increases the security of accounts and data against hackers, mitigates the risks of poor password practices, and helps users stay compliant with regulations.

PowerStore’s multi-factor authentication software integrates with RSA SecurID, an authentication product that validates the identity of a user before allowing them access. Many users all over the world already leverage RSA for their intranet, so this release makes it easy to use that same infrastructure with PowerStore. Figure 1 shows how easy it is to configure and discover your RSA authentication manager from within PowerStore Manager.

Figure 1. Configure and Discover RSA authentication manager

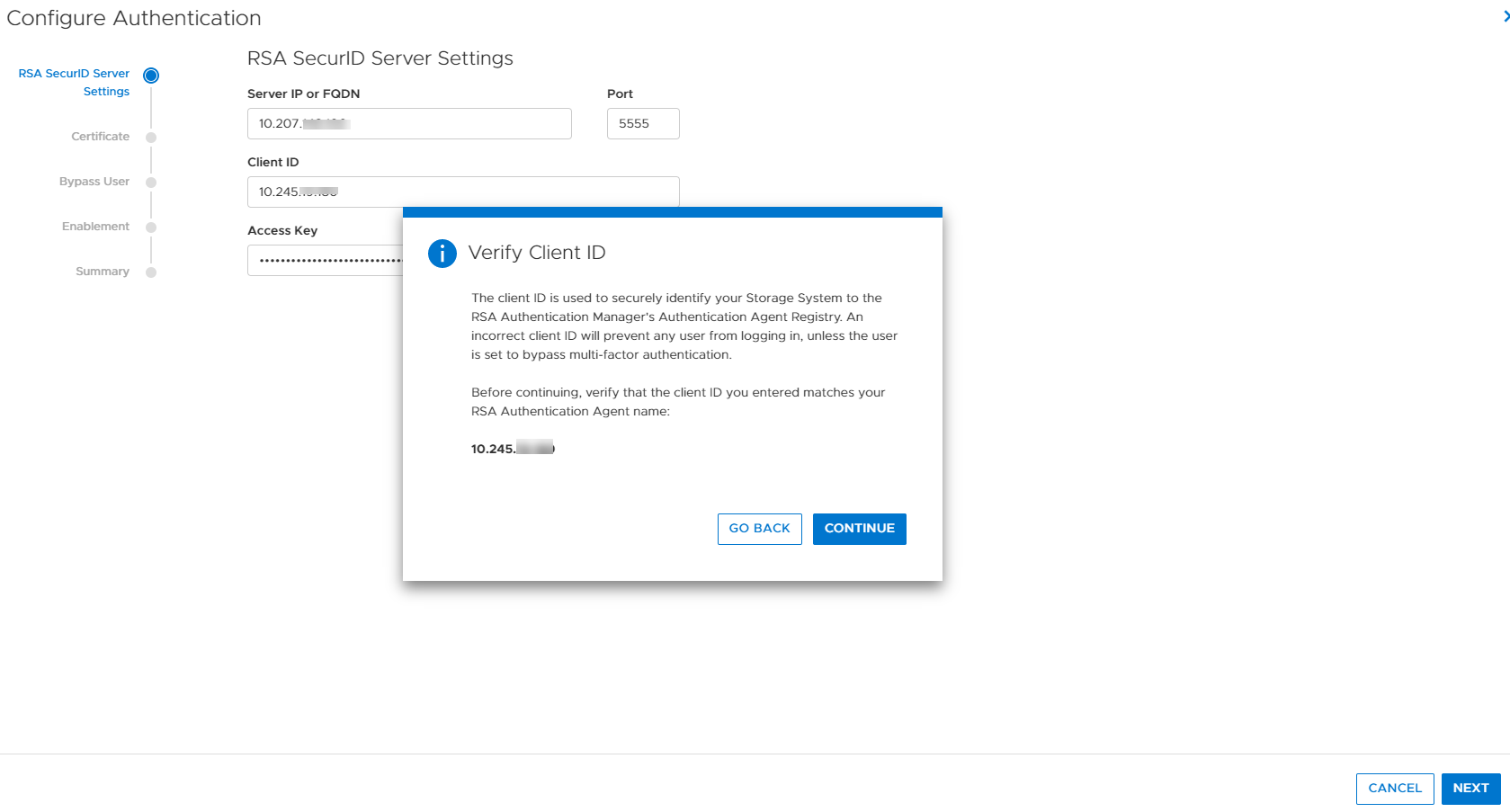

Here you click the Configure button and enter your RSA SecurID Server settings as shown in Figure 2. The required information is the Server IP or FQDN, Client ID (PowerStore cluster IP), and the access key created in your RSA server.

Figure 2. RSA SecurID Server settings

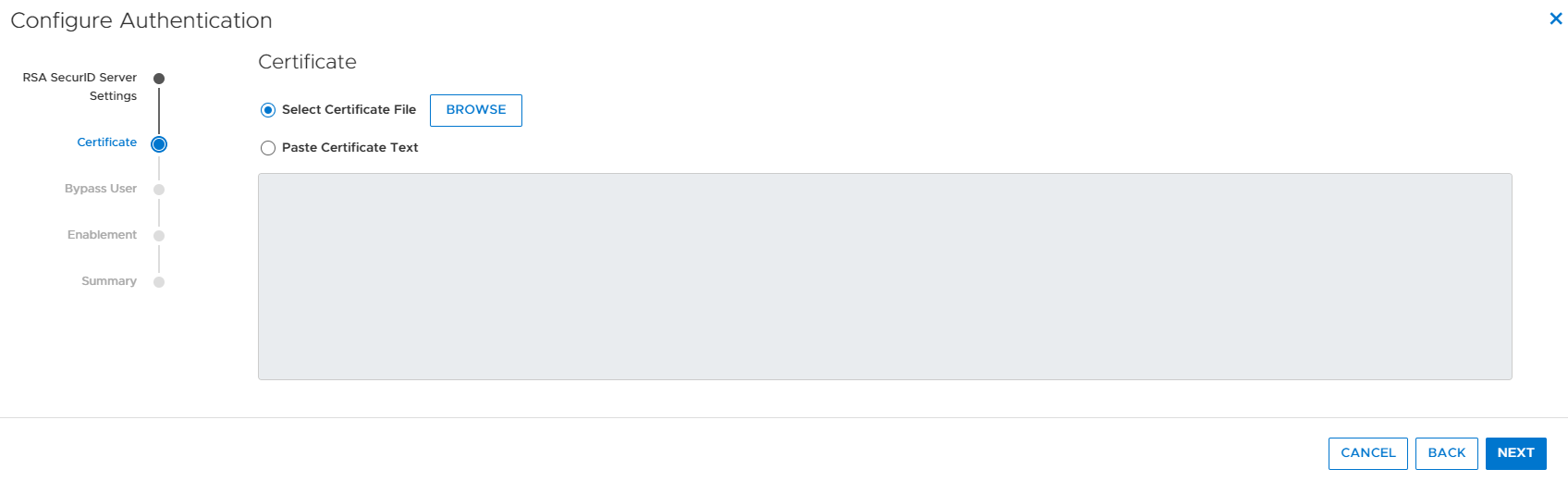

Next, you simply browse for your RSA certificate file or paste it into the box as shown in Figure 3. The RSA certificate file and access key that you enter help ensure secure communications between the RSA authentication manager and PowerStore.

Figure 3. RSA certificate

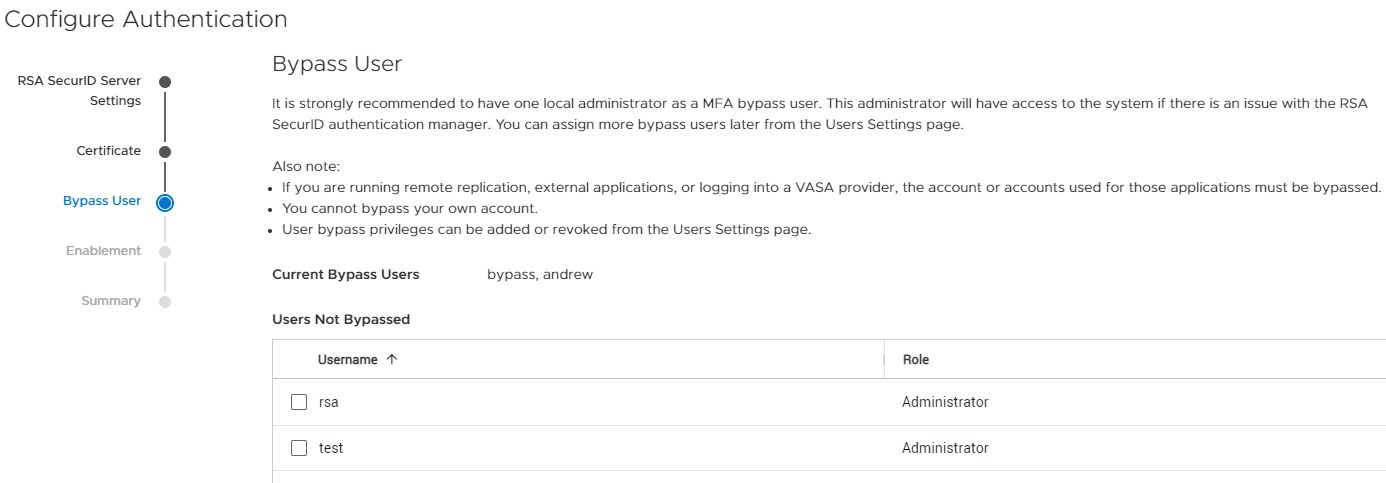

The next step in the authentication wizard is to configure a bypass user if desired. Selecting and configuring a bypass user (Figure 4) lets that user bypass the RSA MFA login process. It is highly recommended to choose a local administrator for this purpose in case you lose access to the SecurID authentication manager. If that happened and you didn’t have a valid bypass user, you wouldn’t be able to log in to the PowerStore system. A service script also allows the service user to manually bypass users, if the need arises.

Figure 4. Bypass user

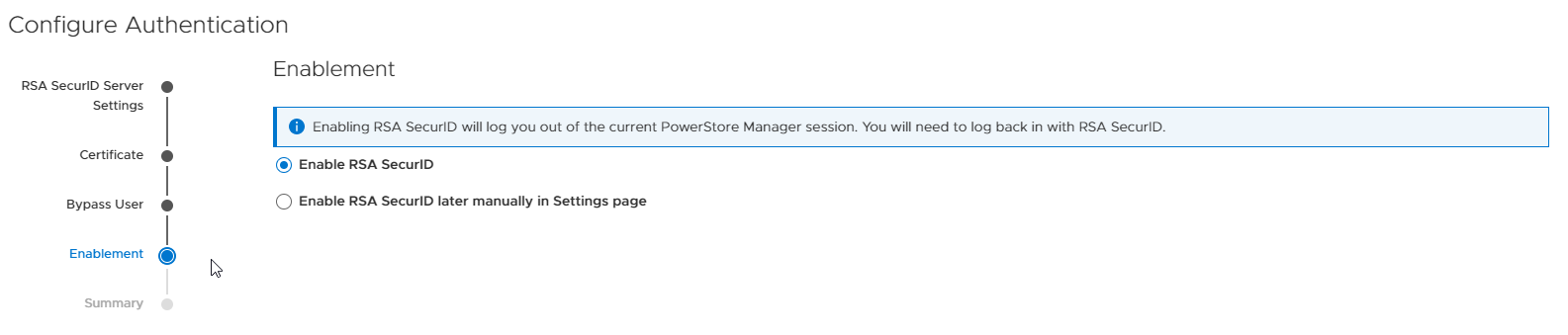

Then, you decide in the wizard whether to enable MFA with RSA right away or to enable it manually later (see Figure 5). If you choose to enable RSA SecurID right away, you will be logged out of PowerStore Manager and required to log in with MFA RSA authentication.

Figure 5. Enable RSA SecurID

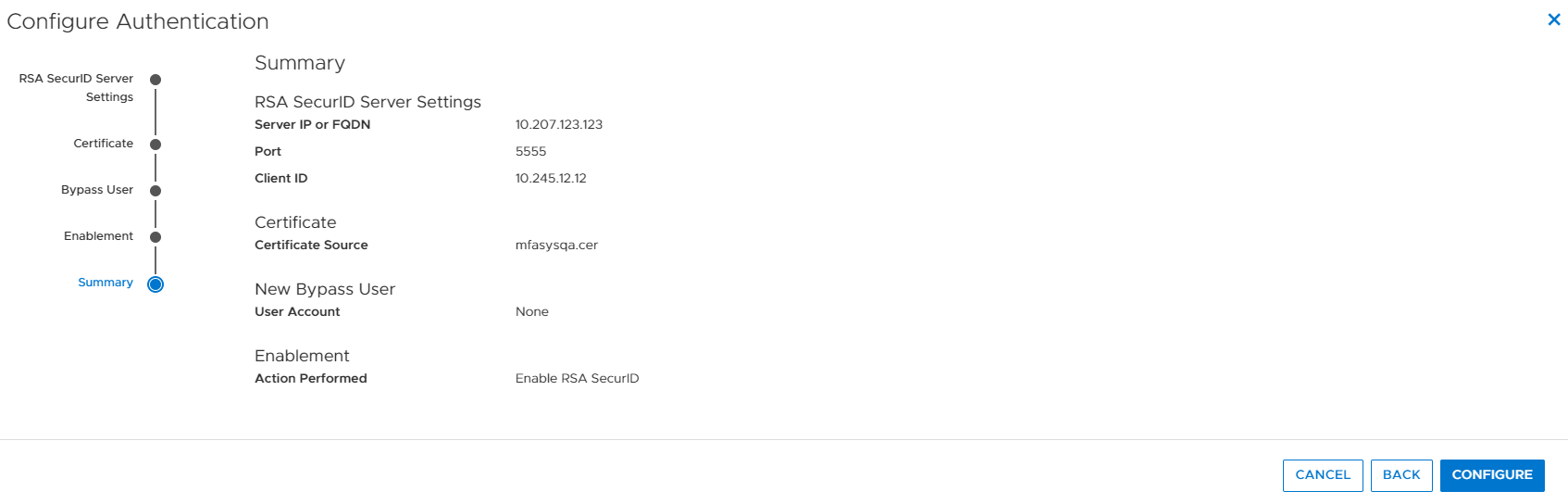

Finally, you review your configuration settings and click Configure (Figure 6).

Figure 6. Configure Authentication summary

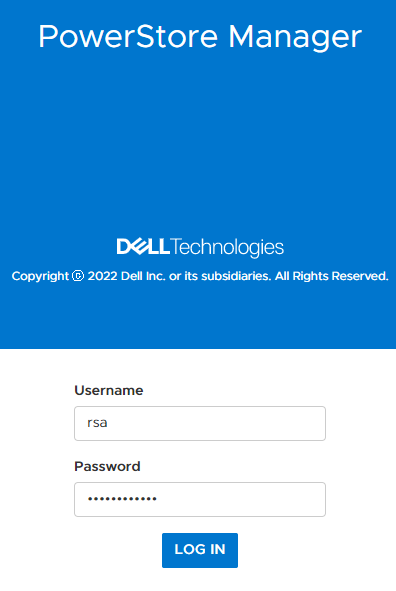

Now when you log in using MFA with RSA enabled on your PowerStore system, you log in as normal with single factor username and password authentication as shown in Figure 7, but will be prompted for the RSA SecurID passcode afterwards, as shown in Figure 8. This multi-factor authentication provides the additional security many customers and their infrastructures require. Note that this feature works with LDAP authentication as well as local users.

Figure 7. Single factor login

Figure 8. MFA with RSA SecurID login

Conclusion

I hope this blog gave you a quick glimpse into how easy it is to set up and use multi-factor authentication with RSA SecurID on PowerStore to increase your organization’s security posture.

Resources

For additional information about the features described in this blog, and other information about the PowerStoreOS 3.5 release, see the following:

Other resources

- What’s New In PowerStoreOS 3.5?

- Dell Technologies Main Info Hub

- Dell Technologies PowerStore Info Hub

- PowerStore: Info Hub - Product Documentation & Videos

- Dell PowerStore Hands-on Labs

Author: Andrew Sirpis

Time to Rethink your SQL Backup Strategy – Part 2

Wed, 10 May 2023 15:17:38 -0000


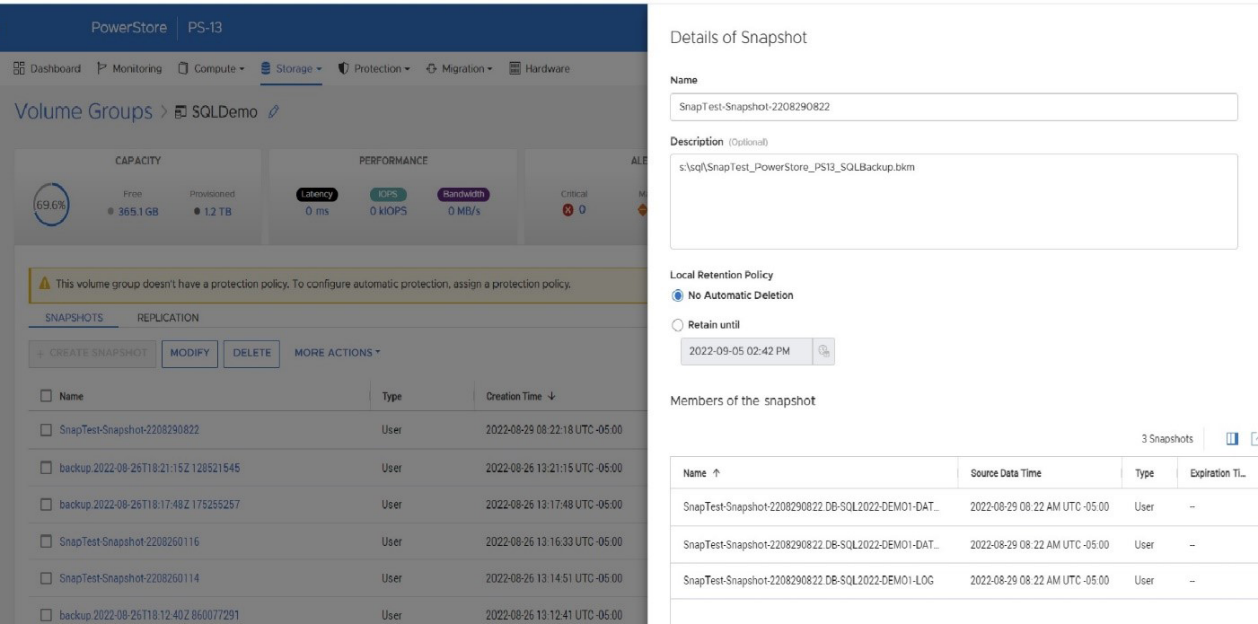

A while back, I wrote a blog about changes to backup/restore functionality in SQL Server 2022: SQL Server 2022 – Time to Rethink your Backup and Recovery Strategy. Now, more exciting features are here in PowerStoreOS 3.5 that provide additional options and enhanced flexibility for protecting, migrating, and recovering SQL Server workloads on PowerStore.

Secure your snapshots

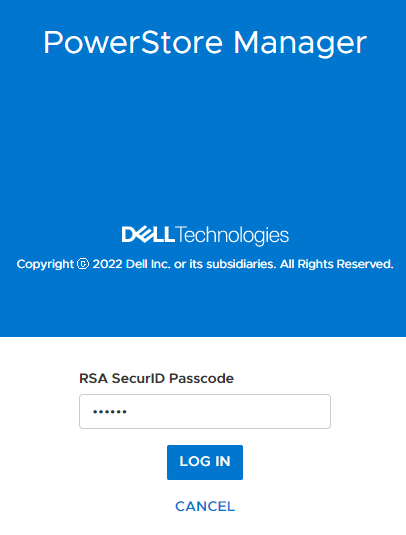

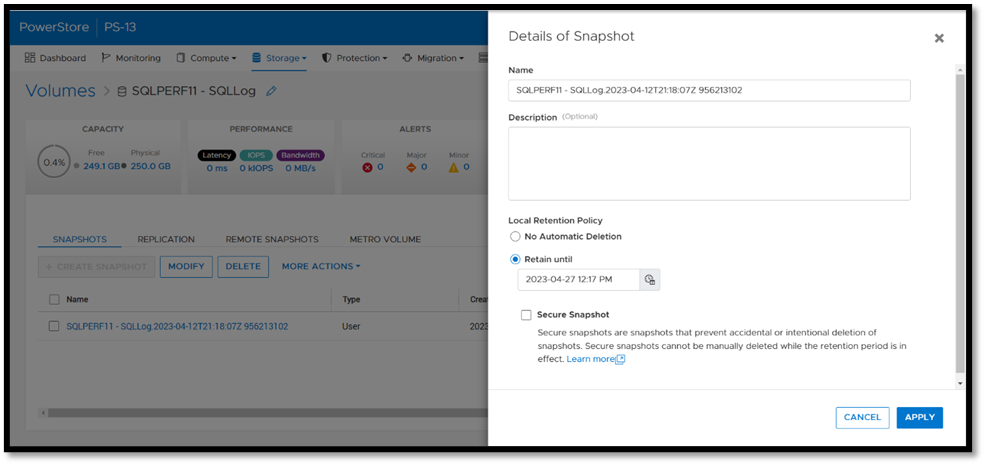

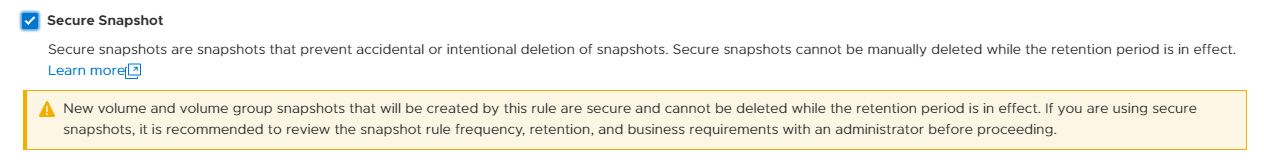

Backup copies provide zero value if they have been compromised when you need them the most. Snapshot removal could happen accidentally or intentionally as part of a malicious attack. PowerStoreOS 3.5 introduces a new feature, secure snapshot, to ensure that snapshots can't be deleted prior to their expiration date. This feature is a simple checkbox on a snapshot or protection policy that protects snapshots until they expire. Once enabled, it can't be turned off, which ensures that your critical data will be available when you need it. Secure snapshot can be enabled on new or existing snapshots. Here’s an example of the secure snapshot option on an existing snapshot.

Once this option is selected, a warning is displayed stating that the snapshot can’t be deleted until the retention period expires. To make the snapshot secure, ensure that the Secure Snapshot checkbox is selected and click Apply.

Secure snapshot can be applied to individual snapshots of volumes or volume groups. The secure snapshot option can also be enabled on one or more snapshot rules in a protection policy to ensure that snapshots taken as part of the protection policy have secure snapshot applied.

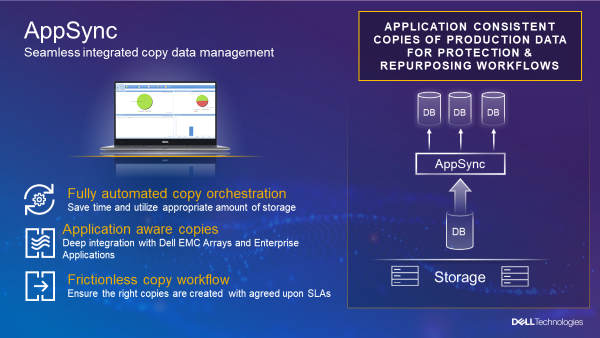

Since existing snapshots can be marked as secure, this option can be used on snapshots taken outside of PowerStore Manager or even snapshots taken with other utilities such as AppSync. Consider enabling this option on your critical snapshots to ensure that they are available when you need them!

There's no such thing as too many backups!

If you're responsible for managing and protecting SQL Server databases, you quickly learn that it's valuable to have many different backups and in various formats, for various reasons. It could be for disaster recovery, migration, reporting, troubleshooting, resetting dev/test environments, or any combination of these. Perhaps you’re trying to mitigate the risk of failure of a single platform, method, or tool. Each scenario and workflow has different requirements. PowerStoreOS 3.5 introduces direct integration with Dell PowerProtect DD series appliances, including PowerProtect DDVE which is the virtual edition for both on-premises and cloud deployments. This provides an agentless way to take crash consistent, off-array backups directly from PowerStore and send them to PowerProtect DD.

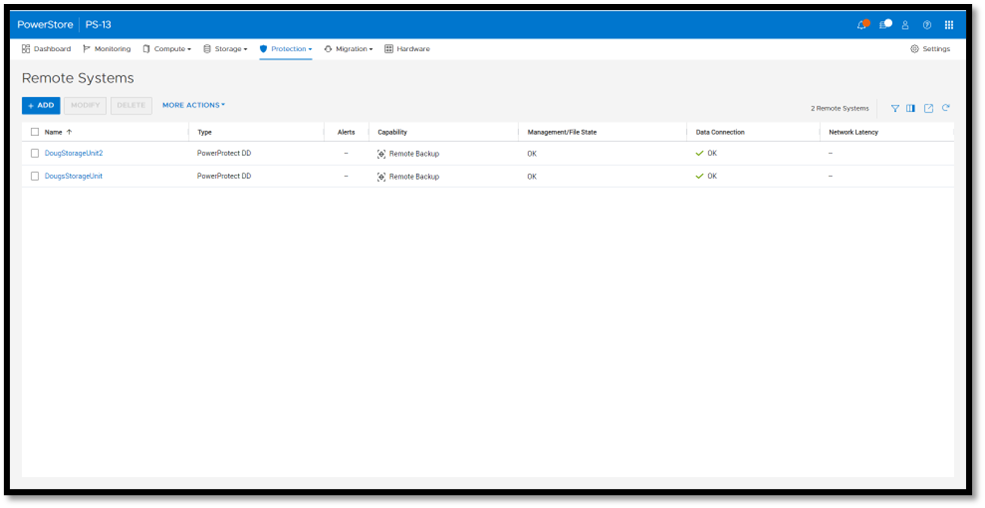

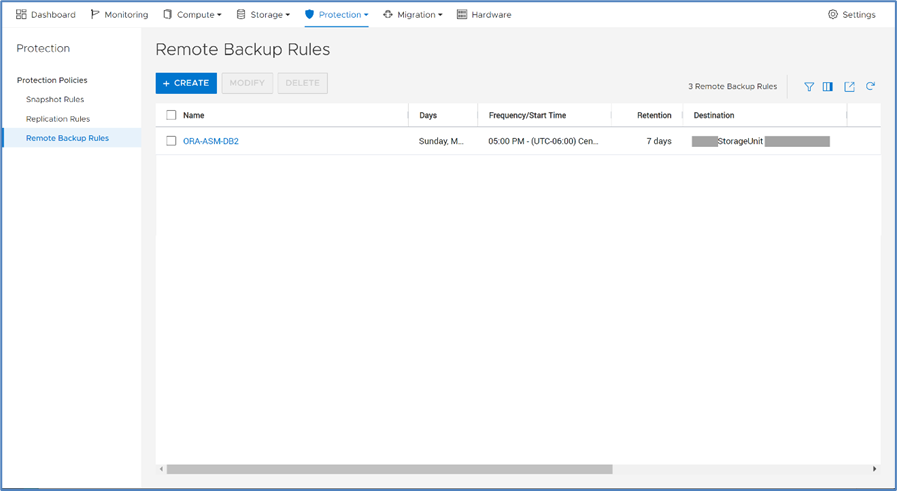

To enable PowerStore remote backup, you need to connect the PowerProtect DD appliance to your PowerStore system as a remote system.

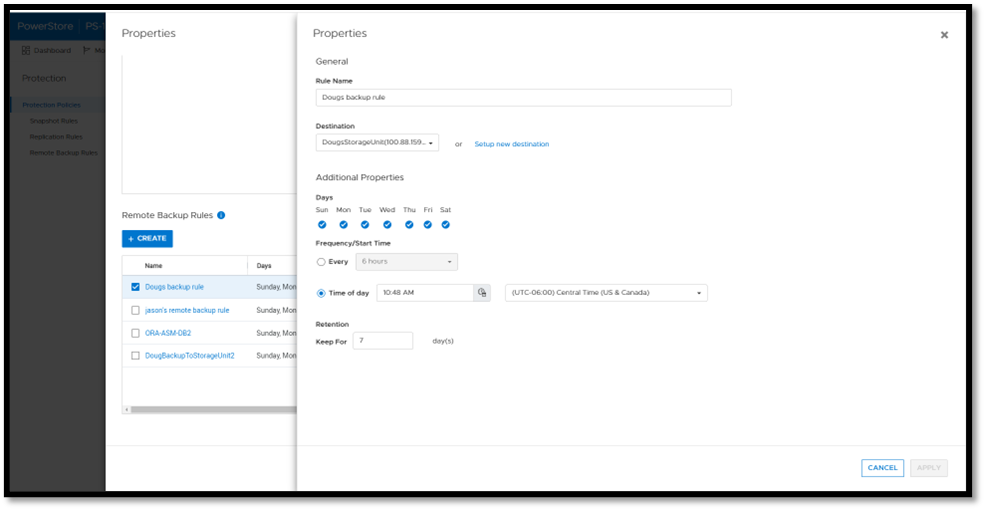

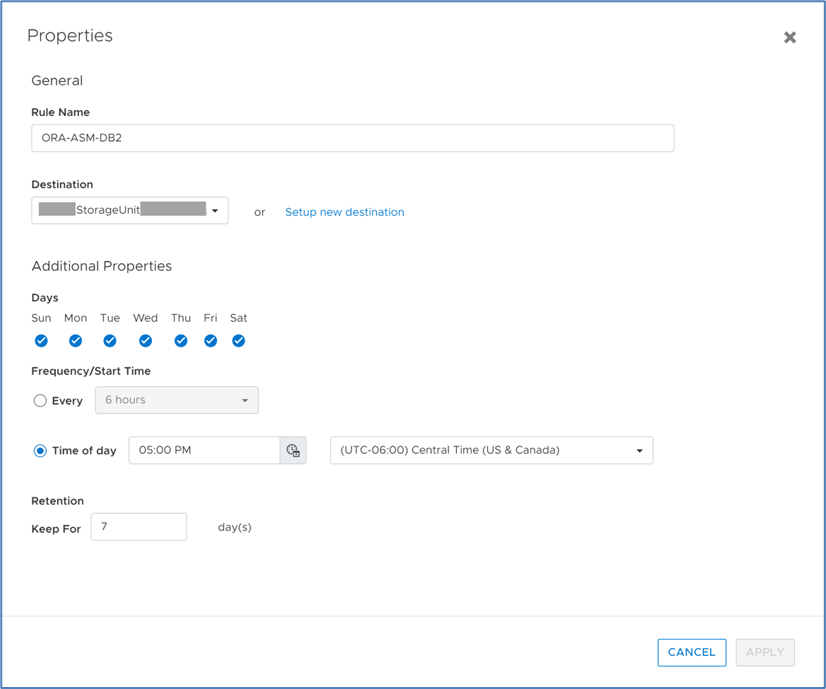

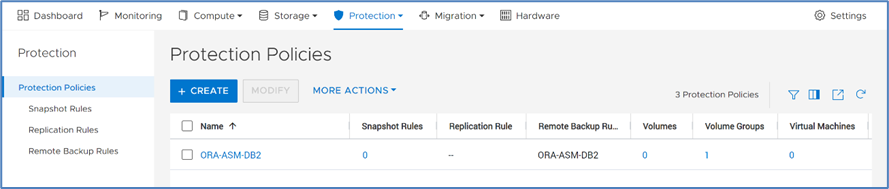

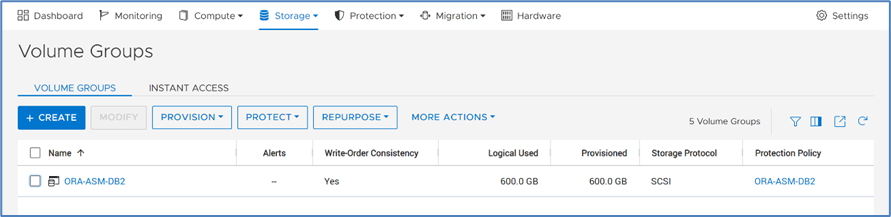

Next, you add a remote backup rule to a new or existing protection policy for the volume or volume group you want to protect, providing the destination, schedule, and retention.

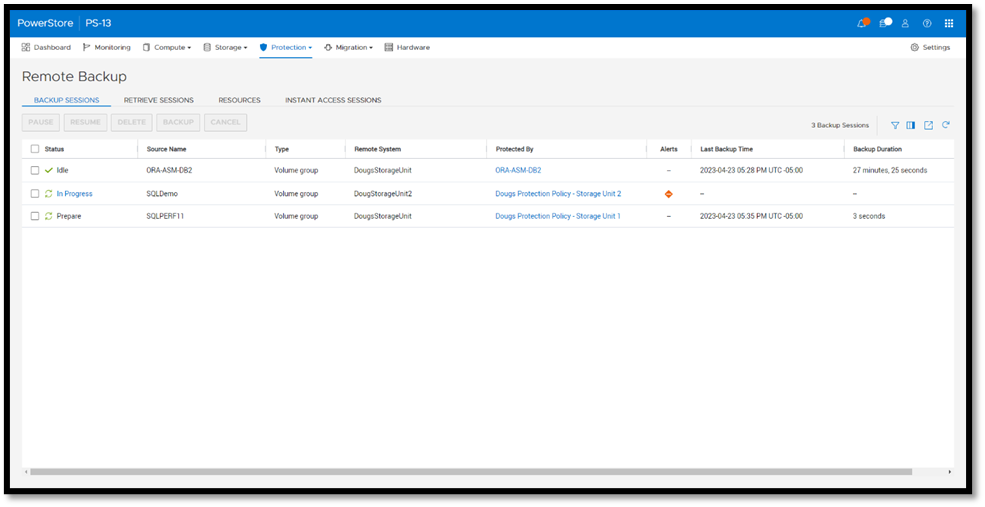

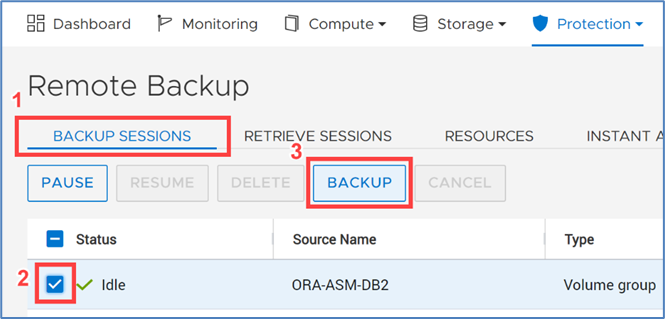

Once a protection policy is created with remote backup rules and assigned to a PowerStore volume or volume group, a backup session will appear.

Under Backup Sessions, you can see the status of all the sessions or select one to back up immediately, and click Backup.

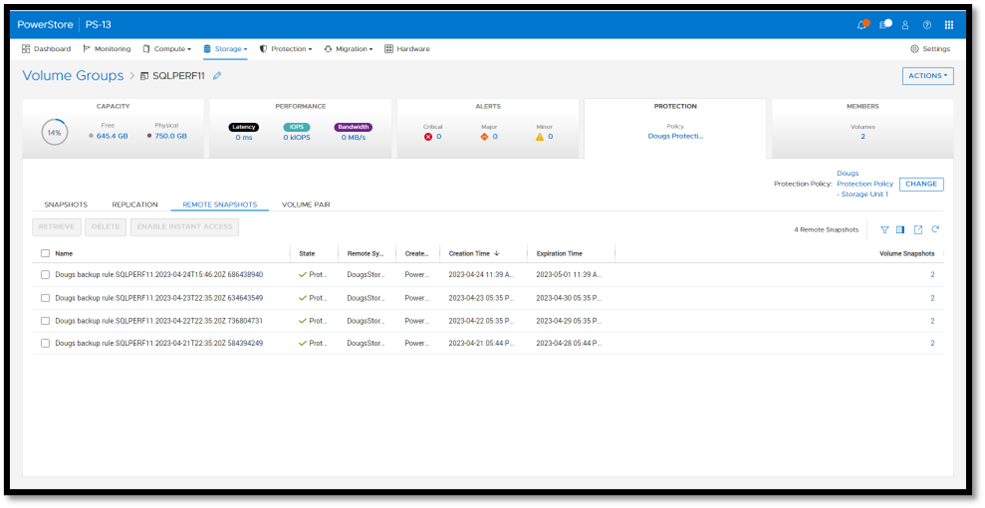

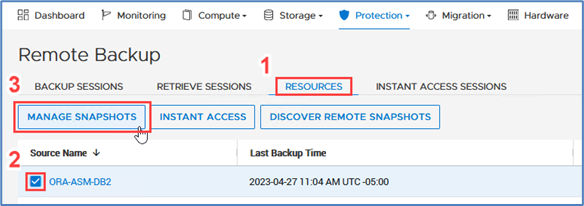

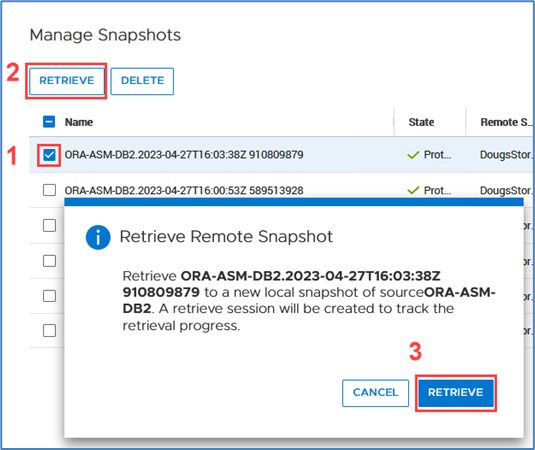

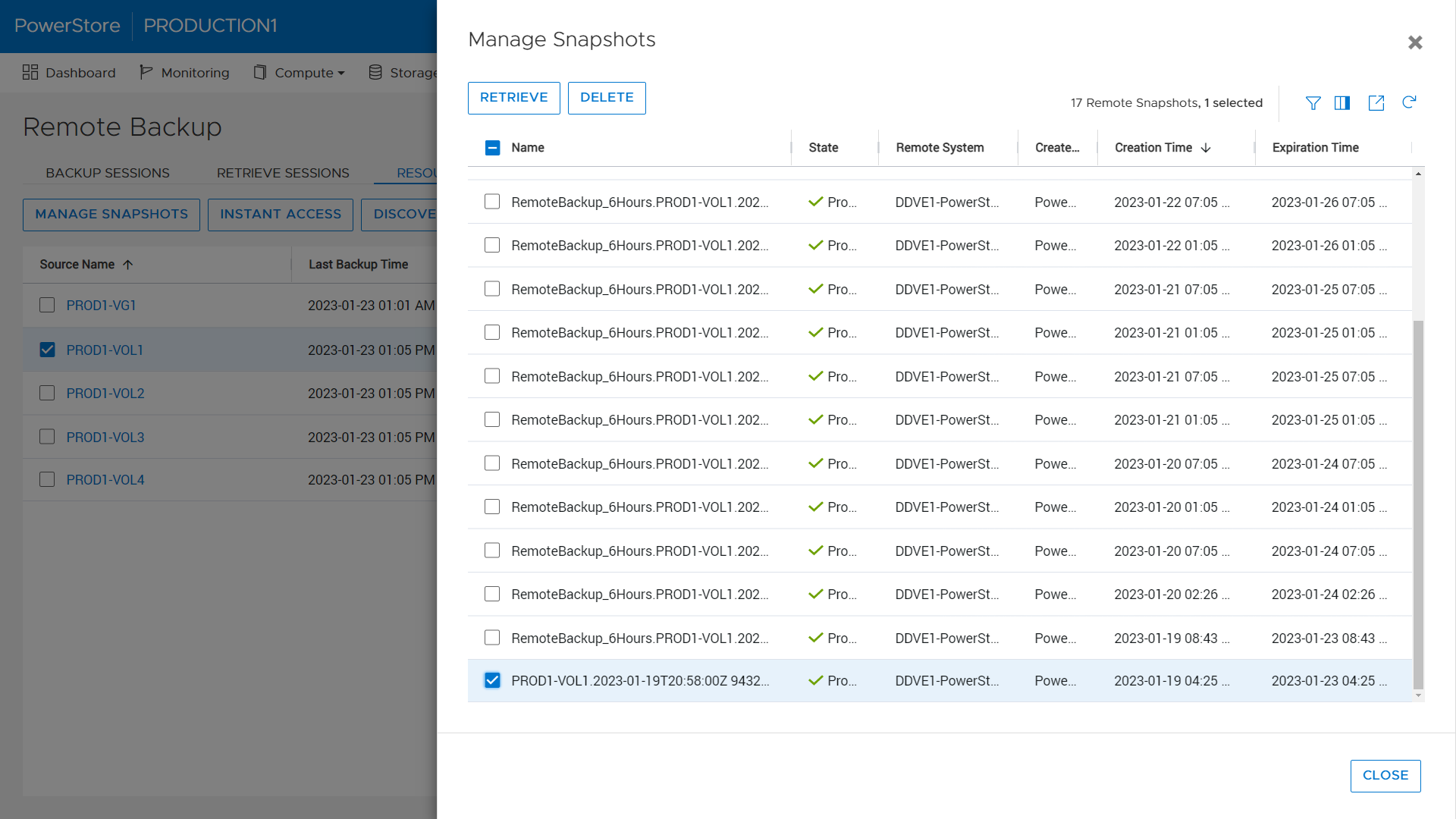

Once a remote backup is taken, it will appear under the Volume or Volume Group Protection tab as a remote snapshot.

From here, you can retrieve it and work with it as a normal snapshot on PowerStore or enable Instant Access whereby the contents can be accessed by a host directly from PowerProtect DD. You can even retrieve remote snapshots from other PowerStore clusters!

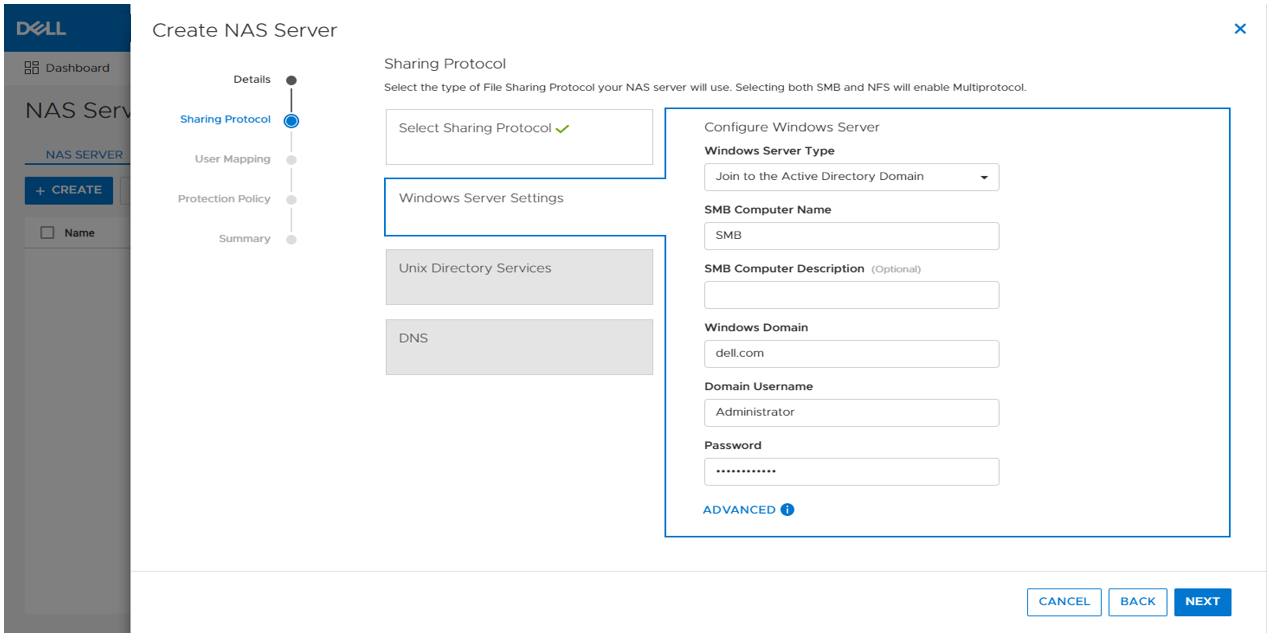

This is yet another powerful tool included with PowerStoreOS 3.5 to enhance data protection and data mobility workflows.