Blogs

Short articles related to Dell PowerScale.

OneFS and HTTP Security

Mon, 22 Apr 2024 20:35:30 -0000

To enable granular HTTP security configuration, OneFS provides an option to disable nonessential HTTP components selectively. This can help reduce the overall attack surface of your infrastructure. Disabling a specific component’s service still allows other essential services on the cluster to continue to run unimpeded. In OneFS 9.4 and later, you can disable the following nonessential HTTP services:

| Service | Description |
| --- | --- |
| PowerScaleUI | The OneFS WebUI configuration interface. |
| Platform-API-External | External access to the OneFS platform API endpoints. |
| Rest Access to Namespace (RAN) | RESTful access by HTTP to a cluster’s /ifs namespace. |
| RemoteService | Remote Support and In-Product Activation. |
| SWIFT (deprecated) | Deprecated object access to the cluster using the SWIFT protocol. This has been replaced by the S3 protocol in OneFS. |

You can enable or disable each of these services independently, using the CLI or platform API, if you have a user account with the ISI_PRIV_HTTP RBAC privilege.

You can use the isi http services CLI command set to view and modify the nonessential HTTP services:

# isi http services list

ID                    Enabled

------------------------------

Platform-API-External Yes

PowerScaleUI          Yes

RAN                   Yes

RemoteService         Yes

SWIFT                 No

------------------------------

Total: 5
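A listing like the one above is easy to check from a script, for example to confirm which services are still enabled after a hardening pass. The following is a minimal sketch, assuming the two-column layout shown above; the function name and the embedded sample text are illustrative only and are not part of OneFS.

```python
def parse_http_services(listing: str) -> dict[str, bool]:
    """Map each service ID to True/False from 'isi http services list' text."""
    services = {}
    for line in listing.splitlines():
        parts = line.split()
        # Data rows have exactly two columns: the service ID and Yes/No.
        # The header, separator, and 'Total:' lines do not match and are skipped.
        if len(parts) == 2 and parts[1] in ("Yes", "No"):
            services[parts[0]] = parts[1] == "Yes"
    return services


# Sample text copied from the listing above.
SAMPLE = """\
ID                    Enabled
------------------------------
Platform-API-External Yes
PowerScaleUI          Yes
RAN                   Yes
RemoteService         Yes
SWIFT                 No
------------------------------
Total: 5
"""

services = parse_http_services(SAMPLE)
enabled = sorted(name for name, on in services.items() if on)
print("Enabled services:", ", ".join(enabled))
```

On a live cluster, the same function could be fed the captured output of the CLI command instead of the sample string.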

For example, you can easily disable remote HTTP access to the OneFS /ifs namespace as follows:

# isi http services modify RAN --enabled=0

You are about to modify the service RAN. Are you sure? (yes/[no]): yes

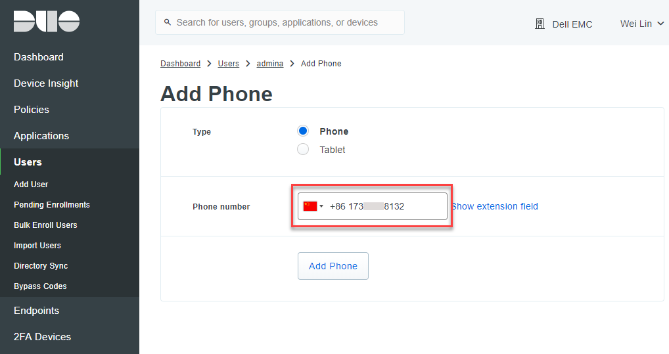

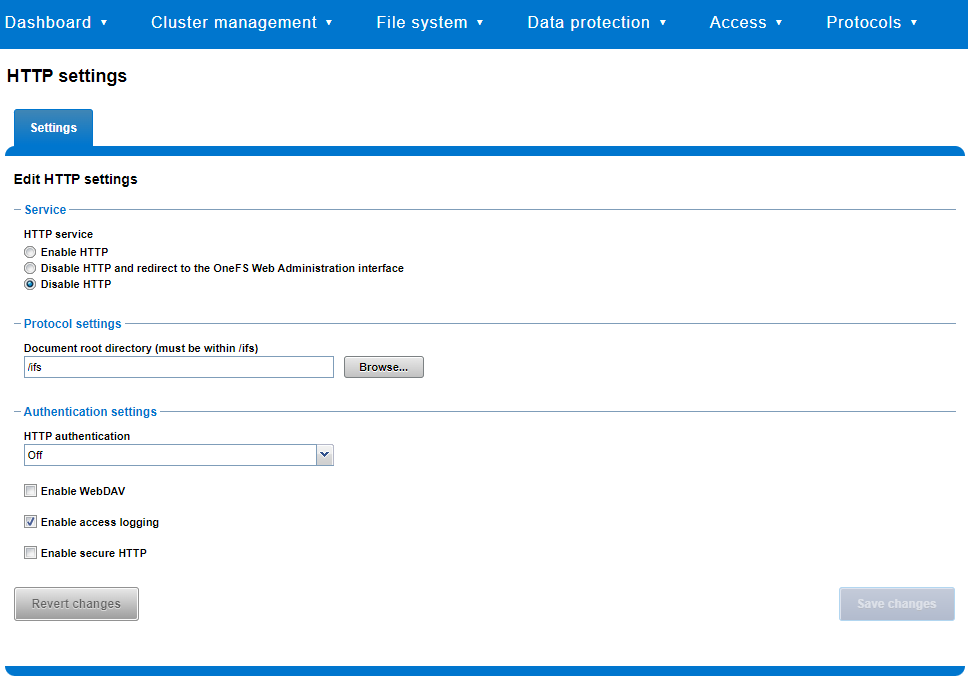

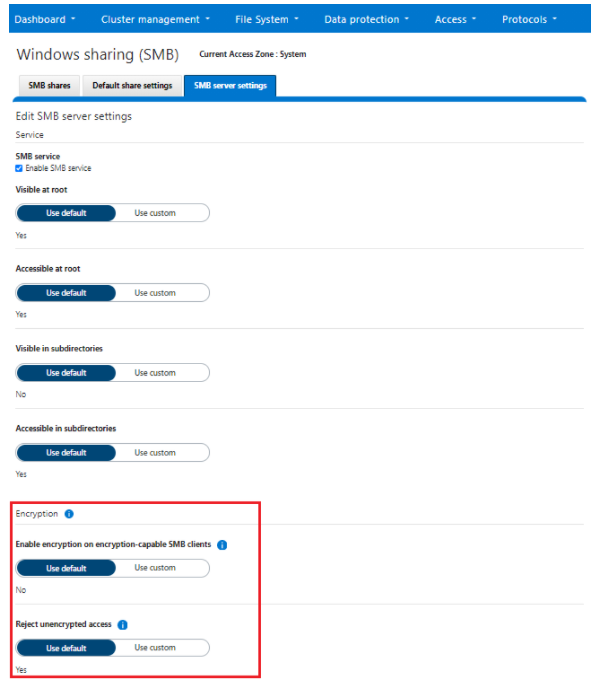

Similarly, you can also use the WebUI to view and edit a subset of the HTTP configuration settings, by navigating to Protocols > HTTP settings:

The implications and impact of disabling each of the services are as follows:

Service | Disabling impacts |

WebUI | The WebUI is completely disabled, and access attempts (default TCP port 8080) are denied with the warning Service Unavailable. Please contact Administrator. If the WebUI is re-enabled, the external platform API service (Platform-API-External) is also started if it is not running. Note that disabling the WebUI does not affect the PlatformAPI service. |

Platform API | External API requests to the cluster are denied, and the WebUI is disabled, because it uses the Platform-API-External service. Note that the Platform-API-Internal service is not impacted if/when the Platform-API-External is disabled, and internal pAPI services continue to function as expected. If the Platform-API-External service is re-enabled, the WebUI will remain inactive until the PowerScaleUI service is also enabled. |

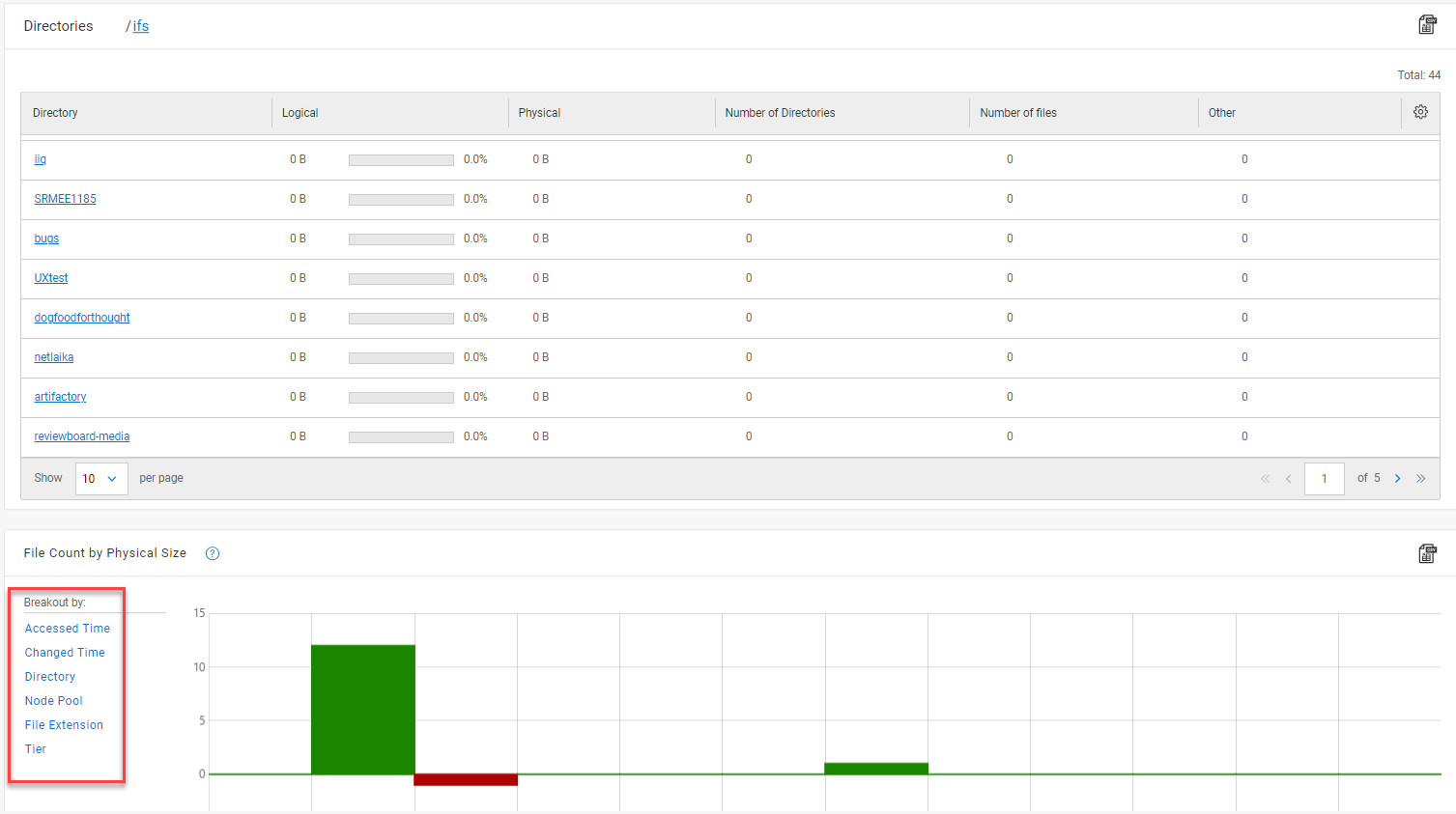

RAN | If RAN is disabled, the WebUI components for File System Explorer and File Browser are also automatically disabled. From the WebUI, attempts to access the OneFS file system explorer (File System > File System Explorer) fail with the warning message Browse is disabled as RAN service is not running. Contact your administrator to enable the service. This same warning also appears when attempting to access any other WebUI components that require directory selection. |

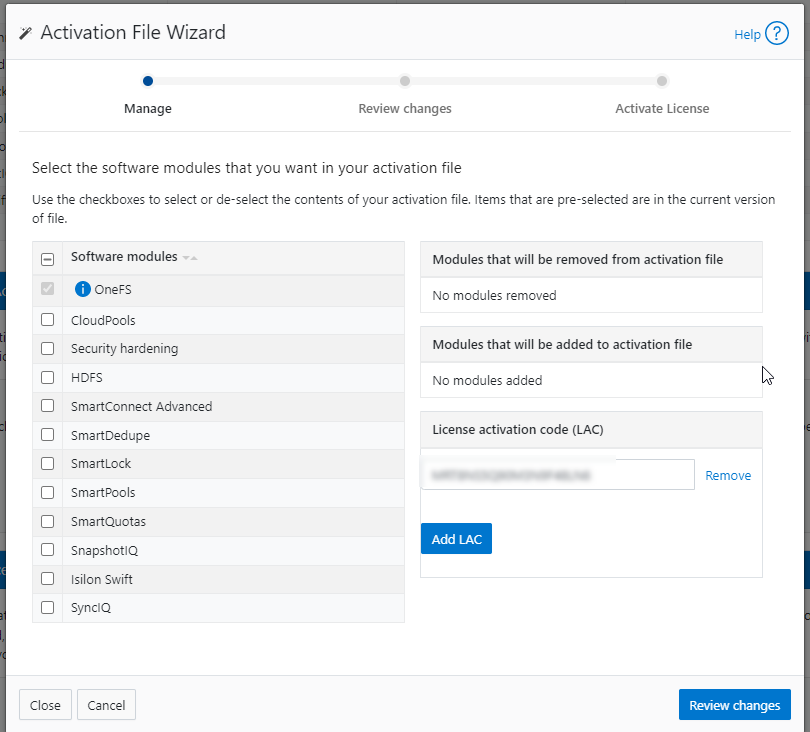

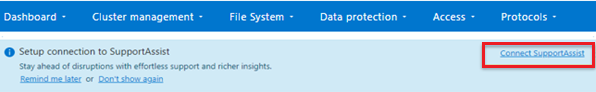

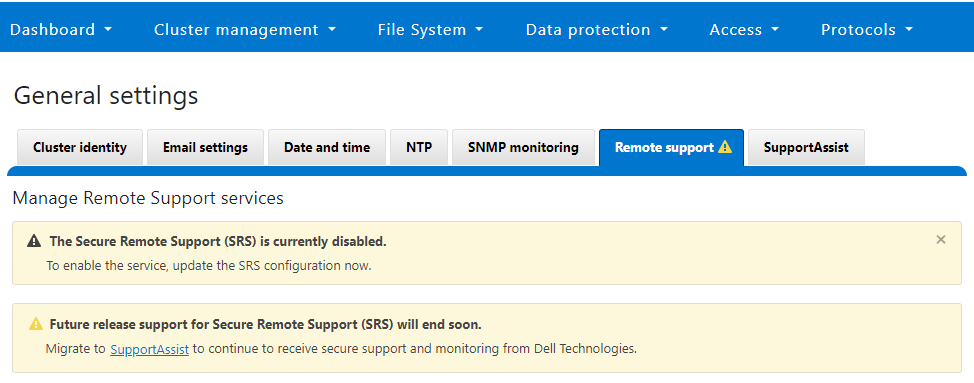

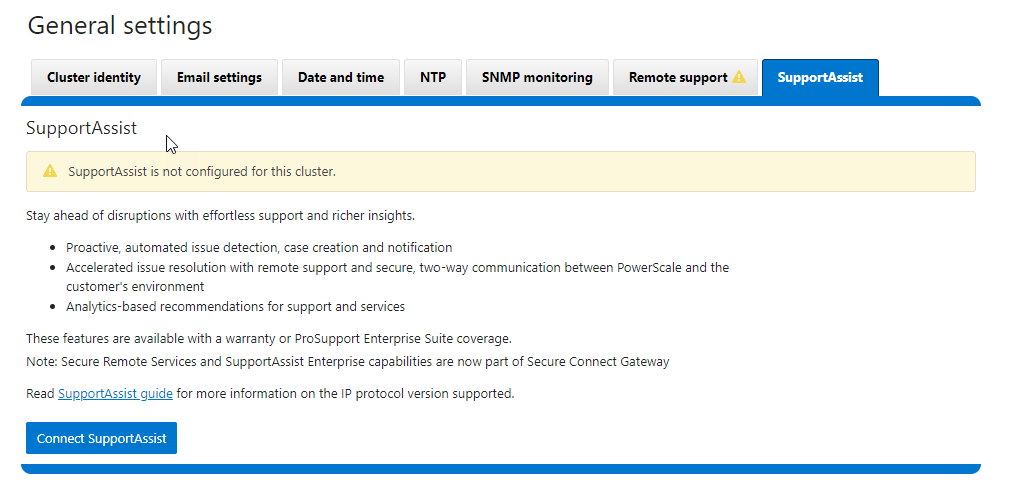

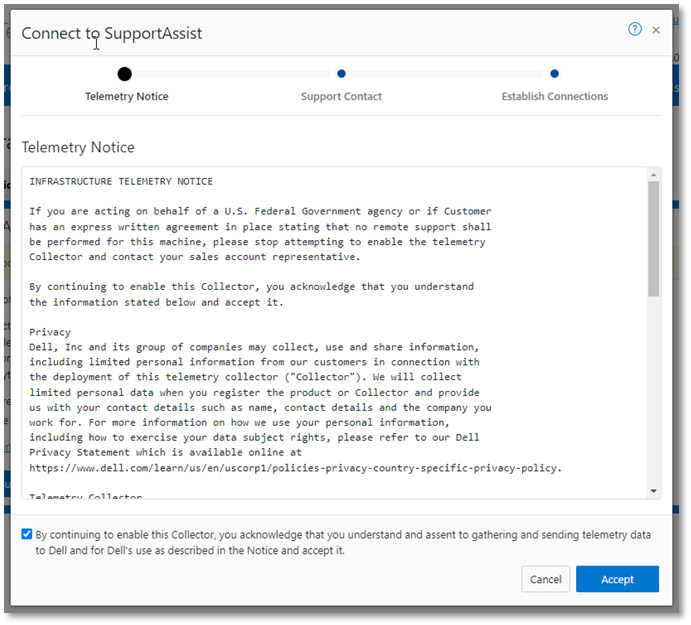

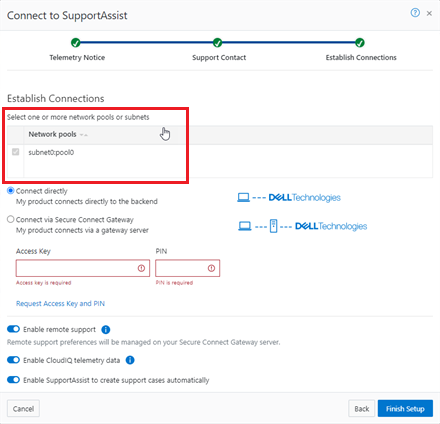

RemoteService | If RemoteService is disabled, the WebUI components for Remote Support and In-Product Activation are disabled. In the WebUI, going to Cluster Management > General Settings and selecting the Remote Support tab displays the message The service required for the feature is disabled. Contact your administrator to enable the service. In the WebUI, going to Cluster Management > Licensing and scrolling to the License Activation section displays the message The service required for the feature is disabled. Contact your administrator to enable the service. |

SWIFT | Deprecated object protocol and disabled by default. |

You can use the CLI command isi http settings view to display the OneFS HTTP configuration:

# isi http settings view

Access Control: No

Basic Authentication: No

WebHDFS Ran HTTPS Port: 8443

Dav: No

Enable Access Log: Yes

HTTPS: No

Integrated Authentication: No

Server Root: /ifs

Service: disabled

Service Timeout: 8m20s

Inactive Timeout: 15m

Session Max Age: 4H

Httpd Controlpath Redirect: No

Similarly, you can manage and change the HTTP configuration using the isi http settings modify CLI command.

For example, to reduce the maximum session age from four to two hours:

# isi http settings view | grep -i age

Session Max Age: 4H

# isi http settings modify --session-max-age=2H

# isi http settings view | grep -i age

Session Max Age: 2H
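When comparing timeout values across clusters, it can help to normalize durations such as 8m20s, 15m, and 4H to seconds. The sketch below assumes only the unit letters that appear in the sample output above (H or h for hours, lowercase m for minutes, lowercase s for seconds); OneFS may accept other duration forms that this hypothetical helper rejects.

```python
import re

# Assumed unit letters, inferred from the sample values 4H, 15m, and 8m20s.
# Uppercase M is deliberately not accepted, since it may not mean minutes.
_UNIT_SECONDS = {"h": 3600, "m": 60, "s": 1}


def duration_to_seconds(text: str) -> int:
    """Convert a duration such as '8m20s' or '4H' into seconds."""
    parts = re.findall(r"(\d+)([Hhms])", text)
    # Reject input with leftover characters that the pattern did not consume.
    if not parts or "".join(n + u for n, u in parts) != text:
        raise ValueError(f"unrecognized duration: {text!r}")
    return sum(int(n) * _UNIT_SECONDS[u.lower()] for n, u in parts)


for value in ("8m20s", "15m", "4H", "2H"):
    print(value, "=", duration_to_seconds(value), "seconds")
```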

The full set of configuration options for isi http settings includes:

| Option | Description |
| --- | --- |
| --access-control <boolean> | Enable Access Control Authentication for the HTTP service. Access Control Authentication requires at least one type of authentication to be enabled. |
| --basic-authentication <boolean> | Enable Basic Authentication for the HTTP service. |
| --webhdfs-ran-https-port <integer> | Configure Data Services Port for the HTTP service. |
| --revert-webhdfs-ran-https-port | Set value to system default for --webhdfs-ran-https-port. |
| --dav <boolean> | Comply with Class 1 and 2 of the DAV specification (RFC 2518) for the HTTP service. All DAV clients must go through a single node. DAV compliance is NOT met if you go through SmartConnect, or using 2 or more node IPs. |
| --enable-access-log <boolean> | Enable writing to a log when the HTTP server is accessed for the HTTP service. |
| --https <boolean> | Enable the HTTPS transport protocol for the HTTP service. |
| --integrated-authentication <boolean> | Enable Integrated Authentication for the HTTP service. |
| --server-root <path> | Document root directory for the HTTP service. Must be within /ifs. |
| --service (enabled \| disabled \| redirect \| disabled_basicfile) | Enable/disable the HTTP Service or redirect to WebUI or disabled BasicFileAccess. |
| --service-timeout <duration> | The amount of time (in seconds) that the server will wait for certain events before failing a request. A value of 0 indicates that the service timeout value is the Apache default. |
| --revert-service-timeout | Set value to system default for --service-timeout. |
| --inactive-timeout <duration> | Get the HTTP RequestReadTimeout directive from both the WebUI and the HTTP service. |
| --revert-inactive-timeout | Set value to system default for --inactive-timeout. |
| --session-max-age <duration> | Get the HTTP SessionMaxAge directive from both WebUI and HTTP service. |
| --revert-session-max-age | Set value to system default for --session-max-age. |
| --httpd-controlpath-redirect <boolean> | Enable or disable WebUI redirection to the HTTP service. |

Note that while the OneFS S3 service uses HTTP, it is considered a tier-1 protocol, and as such is managed using its own isi s3 CLI command set and corresponding WebUI area. For example, the following CLI command forces the cluster to only accept encrypted HTTPS/SSL traffic on TCP port 9999 (rather than the default TCP port 9021):

# isi s3 settings global modify --https-only 1 --https-port 9999

# isi s3 settings global view

HTTP Port: 9020

HTTPS Port: 9999

HTTPS only: Yes

S3 Service Enabled: Yes

Additionally, you can entirely disable the S3 service with the following CLI command:

# isi services s3 disable

The service 's3' has been disabled.

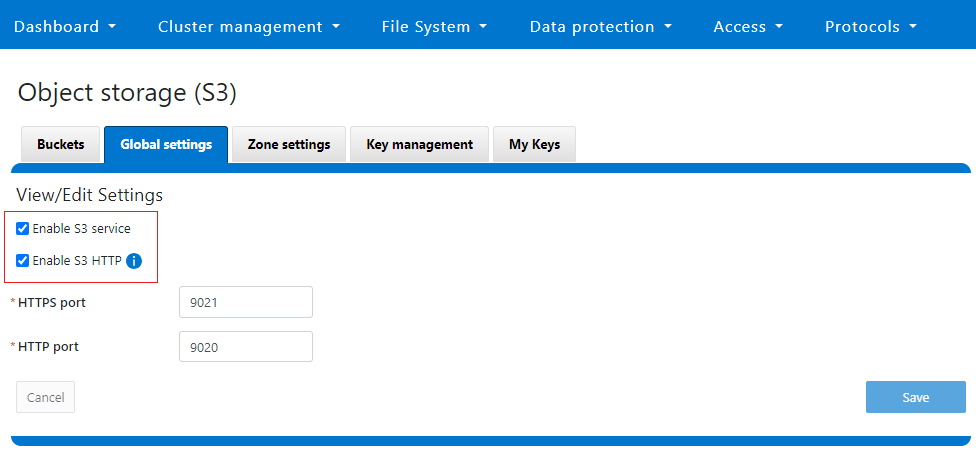

Or from the WebUI, under Protocols > S3 > Global settings:

Author: Nick Trimbee

OneFS and PowerScale F-series Management Ports

Mon, 22 Apr 2024 20:12:20 -0000

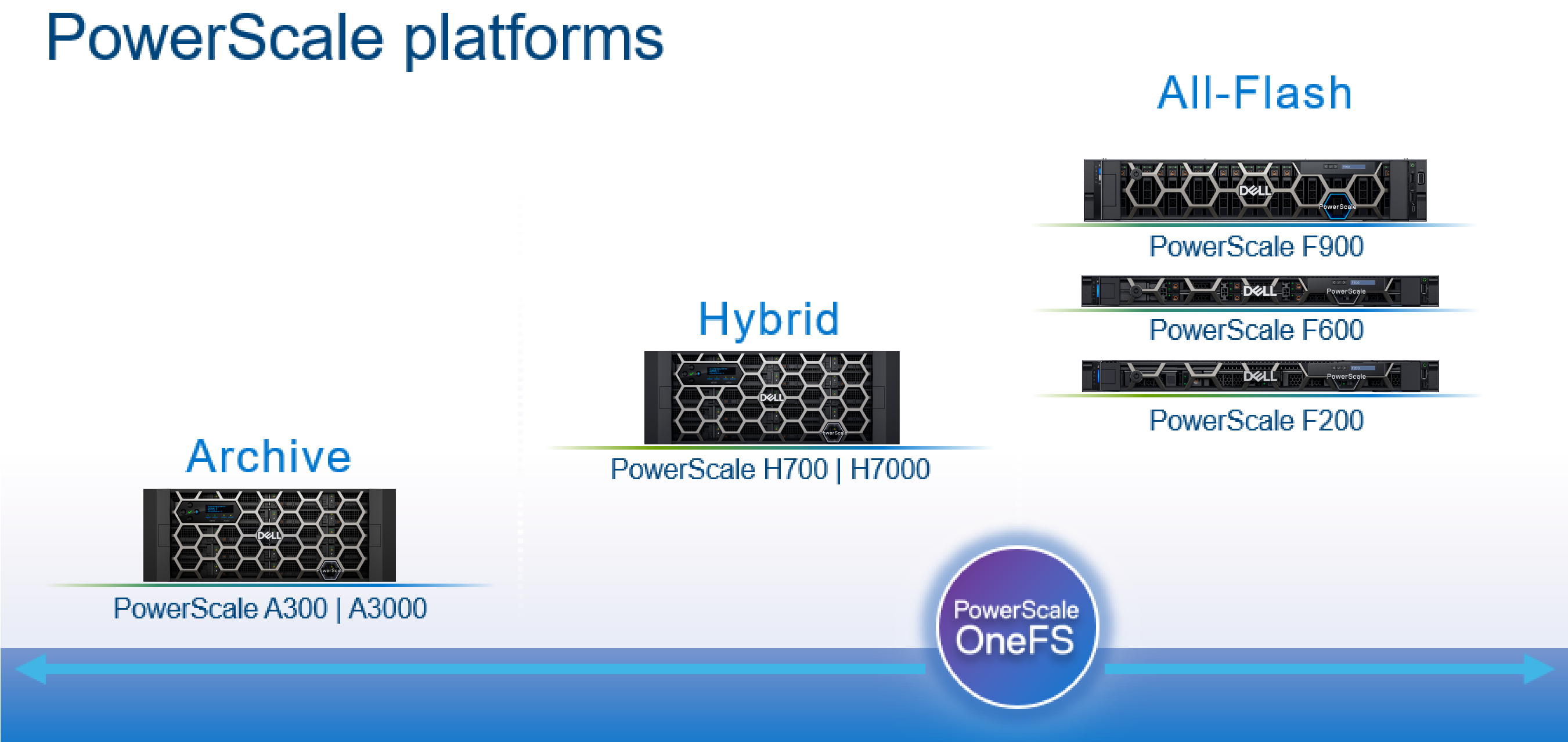

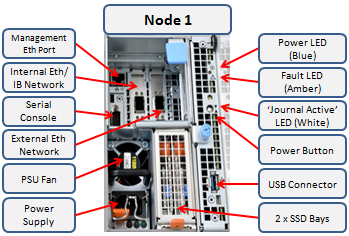

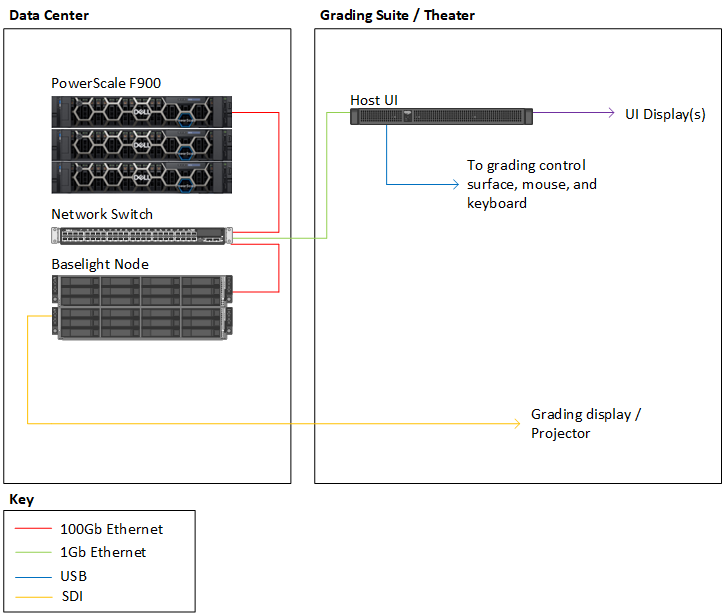

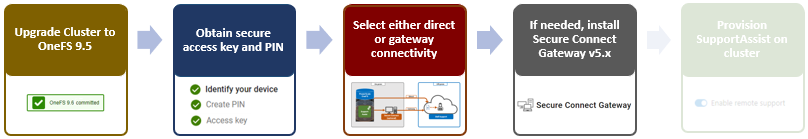

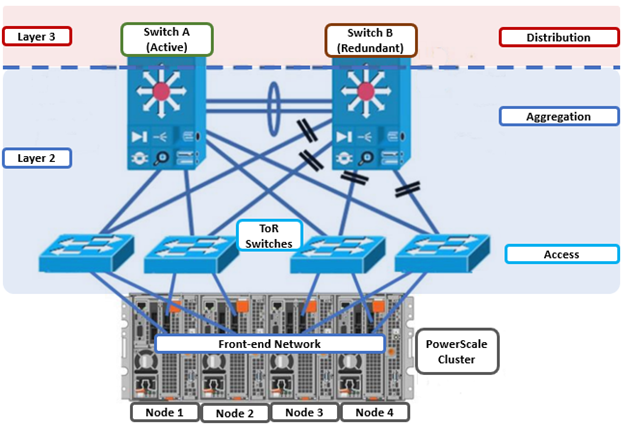

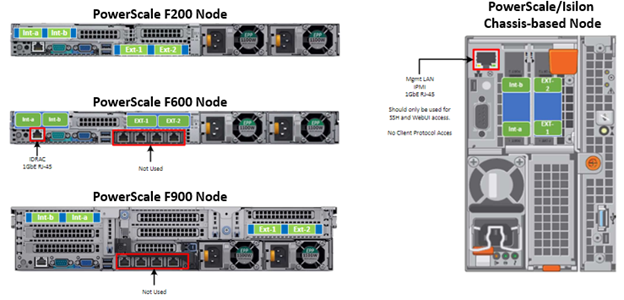

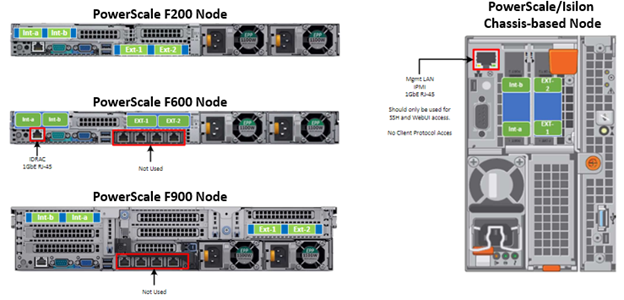

Another security enhancement that OneFS 9.5 and later releases bring to the table is the ability to configure 1GbE NIC ports dedicated to cluster management on the PowerScale F900, F710, F600, F210, and F200 all-flash storage nodes and P100 and B100 accelerators. Since these platforms were released, customers have been requesting the ability to activate the 1GbE NIC ports so that node management activity and front-end protocol traffic can be separated on physically distinct interfaces.

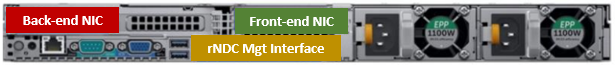

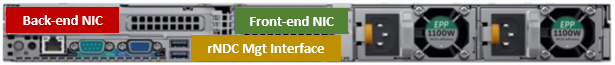

For background, since their introduction, the F600 and F900 have shipped with a quad port 1GbE rNDC (rack Converged Network Daughter Card) adapter. However, these 1GbE ports were non-functional and unsupported in OneFS releases prior to 9.5. As such, the node management and front-end traffic was co-mingled on the front-end interface.

In OneFS 9.5 and later, 1GbE network ports are supported on all of the PowerScale PowerEdge-based platforms for the purposes of node management, and are physically separate from the other network interfaces. Specifically, this enhancement applies to the F900, F600, and F200 all-flash nodes, and the P100 and B100 accelerators.

Under the hood, OneFS has been updated to recognize the 1GbE rNDC NIC ports as usable for a management interface. Note that the focus of this enhancement is on factory enablement and support for existing F600 customers that have the unused 1GbE rNDC hardware. This functionality has also been back-ported to OneFS 9.4.0.3 and later RUPs. Since the introduction of this feature, there have been several requests raised about field upgrades, but that use case is separate and will be addressed in a later release through scripts, updates of node receipts, procedures, and so on.

Architecturally, aside from some device driver and accounting work, no substantial changes were required to the underlying OneFS or platform architecture to implement this feature. This means that in addition to activating the rNDC, OneFS now supports the relocated front-end NIC in PCI slots 2 or 3 for the F200, B100, and P100.

OneFS 9.5 and later recognizes the 1GbE rNDC as usable for the management interface in the OneFS Wizard, in the same way it always has for the H-series and A-series chassis-based nodes.

All four ports in the 1GbE NIC are active, and for the Broadcom board, the interfaces are initialized and reported as bge0, bge1, bge2, and bge3.

The pciconf CLI utility can be used to determine whether the rNDC NIC is present in a node. If it is, a variety of identification and configuration details are displayed. For example, let’s look at the following output from a Broadcom rNDC NIC in an F200 node:

# pciconf -lvV pci0:24:0:0

bge2@pci0:24:0:0: class=0x020000 card=0x1f5b1028 chip=0x165f14e4 rev=0x00 hdr=0x00

class = network

subclass = ethernet

VPD ident = 'Broadcom NetXtreme Gigabit Ethernet'

VPD ro PN = 'BCM95720'

VPD ro MN = '1028'

VPD ro V0 = 'FFV7.2.14'

VPD ro V1 = 'DSV1028VPDR.VER1.0'

VPD ro V2 = 'NPY2'

VPD ro V3 = 'PMT1'

VPD ro V4 = 'NMVBroadcom Corp'

VPD ro V5 = 'DTINIC'

VPD ro V6 = 'DCM1001008d452101000d45'

We can use the ifconfig CLI utility to determine the specific IP/interface mapping on the Broadcom rNDC interface. For example:

# ifconfig bge0

TME-1: bge0: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> metric 0 mtu 1500

TME-1: ether 00:60:16:9e:X:X

TME-1: inet 10.11.12.13 netmask 0xffffff00 broadcast 10.11.12.255 zone 1

TME-1: inet 10.11.12.13 netmask 0xffffff00 broadcast 10.11.12.255 zone 0

TME-1: media: Ethernet autoselect (1000baseT <full-duplex>)

TME-1: status: active
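The ifconfig output reports the netmask in hexadecimal (0xffffff00), which is not always easy to read at a glance. As a small illustration, the following sketch uses Python's standard ipaddress module to convert the mask and confirm the network and broadcast addresses. The function name is hypothetical, and the address and mask are the ones from the output above.

```python
import ipaddress


def describe_interface(address: str, hex_netmask: str):
    """Return (network, broadcast) for an address and a hex netmask."""
    # ifconfig prints the mask as hex, for example 0xffffff00. IPv4Address
    # accepts the equivalent 32-bit integer and renders it in dotted form.
    mask = ipaddress.IPv4Address(int(hex_netmask, 16))
    iface = ipaddress.IPv4Interface(f"{address}/{mask}")
    return iface.network, iface.network.broadcast_address


network, broadcast = describe_interface("10.11.12.13", "0xffffff00")
print("network:", network)
print("broadcast:", broadcast)
```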

In this output, the first IP address of the management interface’s pool is bound to bge0, which is the first port on the Broadcom rNDC NIC.

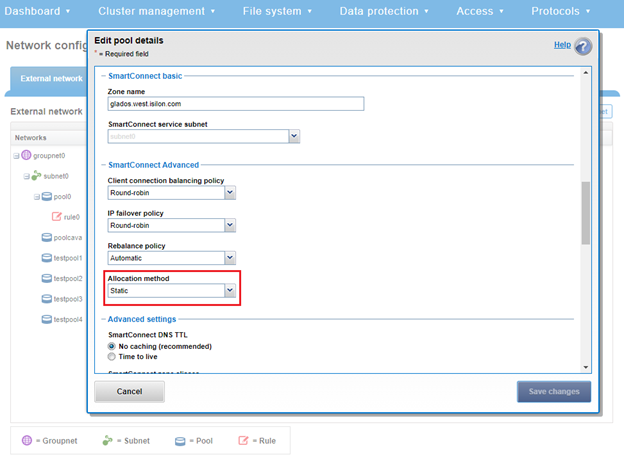

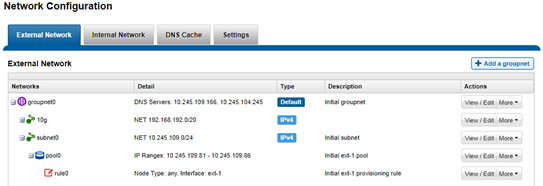

We can use the isi network pools CLI command to determine the corresponding interface. Within the system zone, the management interface is allocated an address from the configured IP range within its associated interface pool. For example:

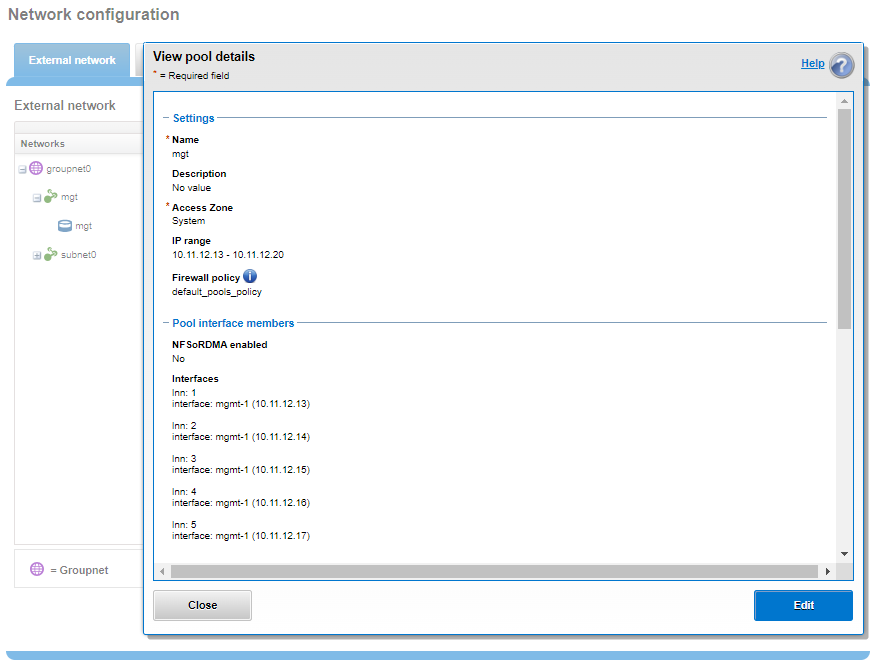

# isi network pools list

ID                  SC Zone                IP Ranges                 Allocation Method

----------------------------------------------------------------------------------------------

groupnet0.mgt.mgt   cluster_mgt_isln.com   10.11.12.13-10.11.12.20   static

# isi network pools view groupnet0.mgt.mgt | grep -i ifaces

Ifaces: 1:mgmt-1, 2:mgmt-1, 3:mgmt-1, 4:mgmt-1, 5:mgmt-1
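With a static allocation method, it is worth checking that the pool's IP range holds at least one address per member interface. The sketch below does this for the values shown above, using two hypothetical helpers: one counts the addresses in an inclusive range, and the other splits the Ifaces line into node and interface pairs.

```python
import ipaddress


def range_size(ip_range: str) -> int:
    """Count the addresses in an inclusive 'low-high' IPv4 range."""
    low, high = (ipaddress.IPv4Address(p.strip()) for p in ip_range.split("-"))
    if high < low:
        raise ValueError(f"range is reversed: {ip_range!r}")
    return int(high) - int(low) + 1


def parse_ifaces(line: str) -> list[tuple[int, str]]:
    """Split 'Ifaces: 1:mgmt-1, 2:mgmt-1' into (node number, interface) pairs."""
    # Drop the 'Ifaces' label, then split each 'node:interface' member.
    _, _, members = line.partition(":")
    pairs = []
    for member in members.split(","):
        node, _, iface = member.strip().partition(":")
        pairs.append((int(node), iface))
    return pairs


addresses = range_size("10.11.12.13-10.11.12.20")
members = parse_ifaces("Ifaces: 1:mgmt-1, 2:mgmt-1, 3:mgmt-1, 4:mgmt-1, 5:mgmt-1")
print(addresses, "addresses for", len(members), "member interfaces")
```

In this example, the range comfortably covers the five member interfaces, leaving headroom for additional nodes.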

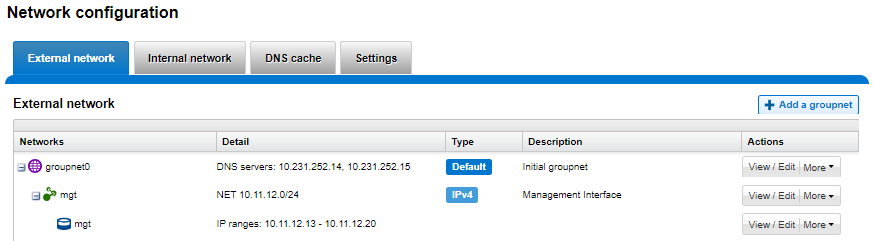

Or from the WebUI, under Network configuration > External network:

Drilling down into the mgt pool details shows the 1GbE management interfaces as the pool interface members:

Note that the 1GbE rNDC network ports are solely intended as cluster management interfaces. As such, they are not supported for use with regular front-end data traffic.

The F900 and F600 nodes already ship with a four port 1GbE rNDC NIC installed. However, the F200, B100, and P100 platform configurations have also been updated to include a quad port 1GbE rNDC card. These new configurations have been shipping by default since January 2023. This required relocating the front end network’s 25GbE NIC (Mellanox CX4) to PCI slot 2 in the motherboard. Additionally, the OneFS updates needed for this feature have also now allowed the F200 platform to be offered with a 100GbE option too. The 100GbE option uses a Mellanox CX6 NIC in place of the CX4 in slot 2.

With this 1GbE management interface enhancement, the same quad-port rNDC card (typically the Broadcom 5720) that has been shipped in the F900 and F600 since their introduction, is now included in the F200, B100 and P100 nodes as well. All four 1GbE rNDC ports are enabled and active under OneFS 9.5 and later, too.

Node port ordering continues to follow the standard, increasing numerically from left to right. However, be aware that the port labels are not visible externally because they are obscured by the enclosure’s sheet metal.

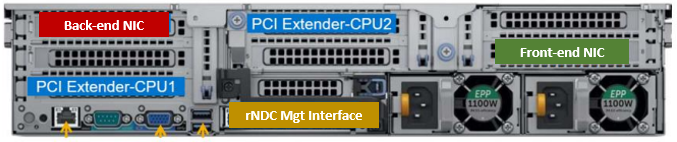

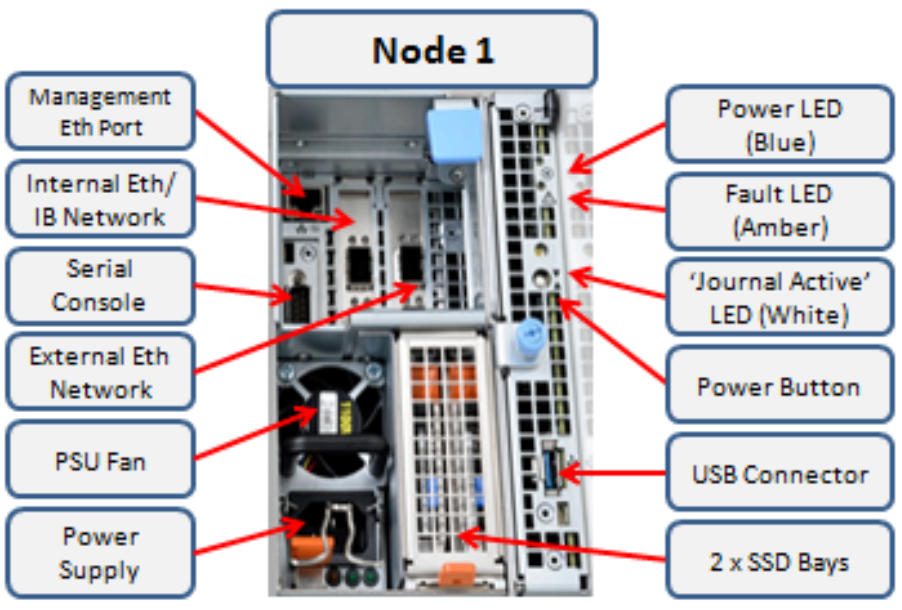

The following back-of-chassis hardware images show the new placements of the NICs in the various F-series and accelerator platforms:

F600

F900

For both the F600 and F900, the NIC placement remains unchanged, because these nodes have always shipped with the 1GbE quad port in the rNDC slot since their launch.

F200

The F200 sees its front-end NIC moved to slot 2, freeing up the rNDC slot for the quad-port 1GbE Broadcom 5720.

Because the B100 backup accelerator has a fibre-channel card in slot 2, it sees its front-end NIC moved to slot 3, freeing up the rNDC slot for the quad-port 1GbE Broadcom 5720.

Finally, the P100 accelerator sees its front-end NIC moved to slot 3, freeing up the rNDC slot for the quad-port 1GbE Broadcom 5720.

Note that, while there is currently no field hardware upgrade process for adding rNDC cards to legacy F200 nodes or B100 and P100 accelerators, this will be addressed in a future release.

Author: Nick Trimbee

OneFS Security and USB Device Control

Fri, 19 Apr 2024 17:34:44 -0000

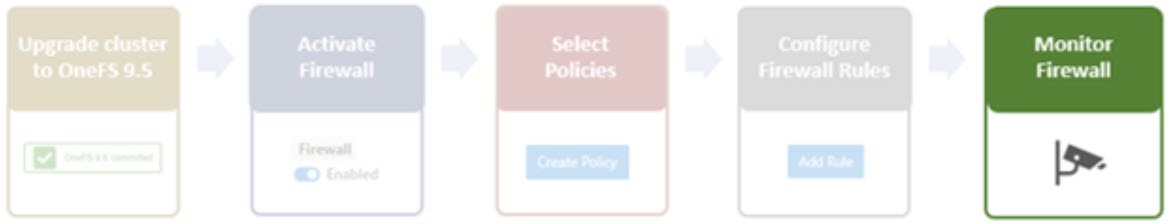

As we’ve seen over the course of the last several articles, OneFS 9.5 delivers a wealth of security-focused features. These span the realms of core file system, protocols, data services, platform, and peripherals. Among these security enhancements is the ability to disable a cluster’s USB ports, either manually from the CLI or platform API, or automatically by activating a security hardening policy.

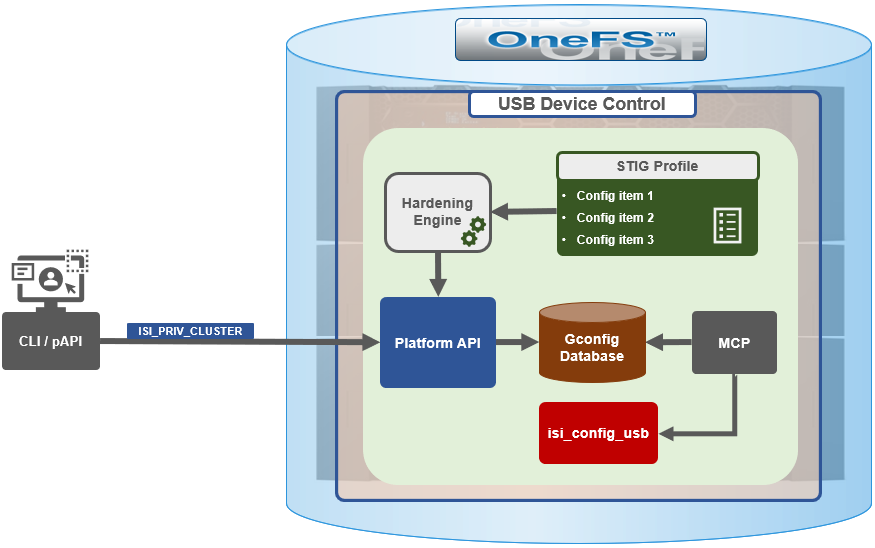

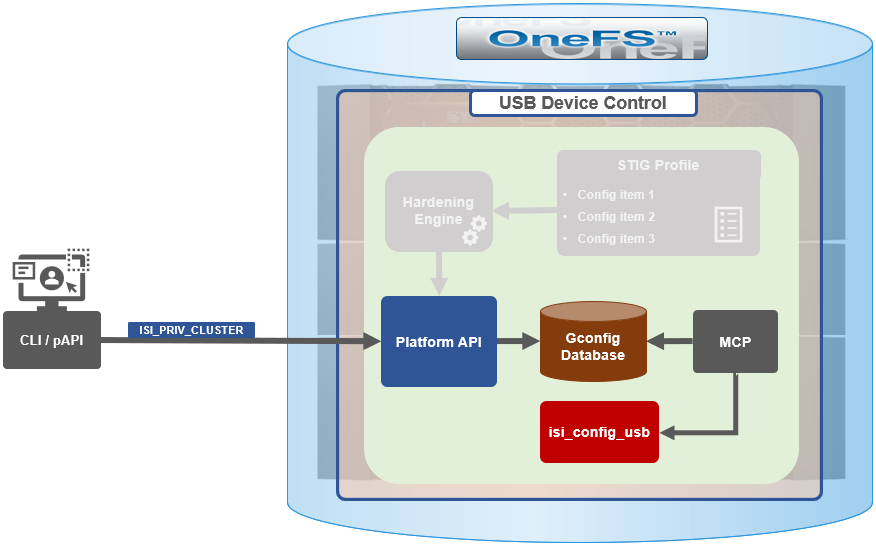

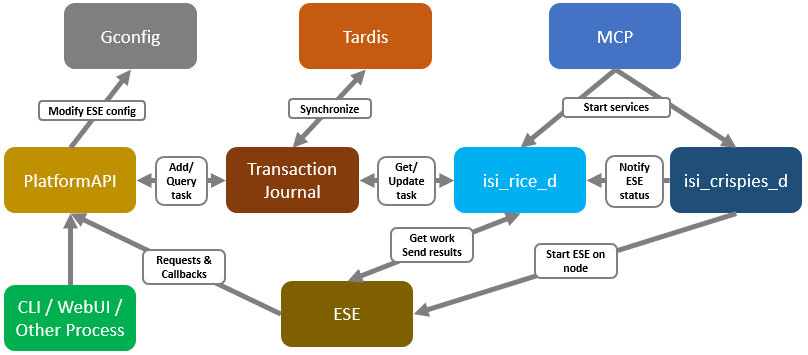

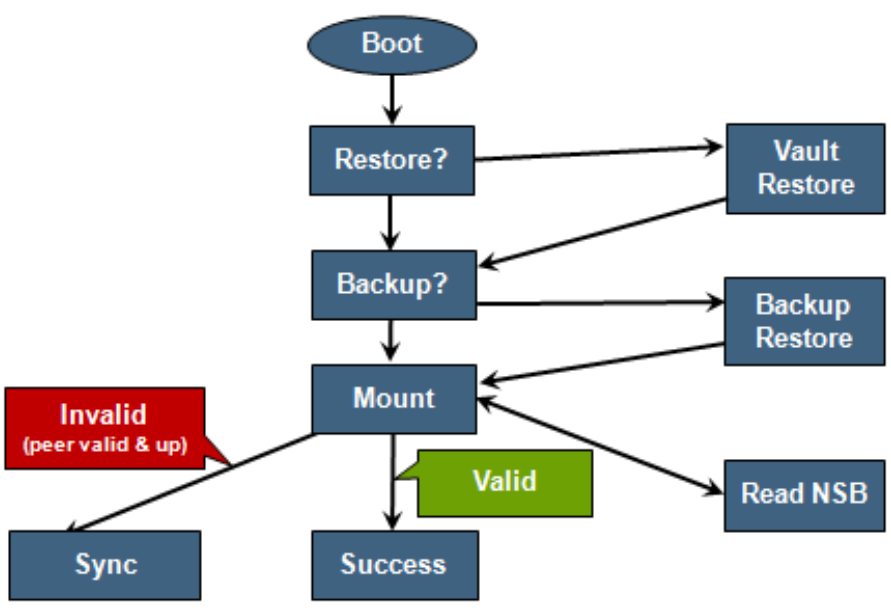

In support of this functionality, the basic USB port control architecture is as follows:

To facilitate this, OneFS 9.5 and subsequent releases see the addition of a new gconfig variable, ‘usb_ports_disabled’, in ‘security_config’, specifically to track the status of USB Ports on a cluster. On receiving an admin request either from the CLI or the platform API handler to disable the USB port, OneFS modifies the security config parameter in gconfig. For example:

# isi_gconfig -t security_config | grep -i usb

usb_ports_disabled (bool) = true

Under the hood, the MCP (master control process) daemon watches for any changes to the ‘isi_security.gcfg’ security config file on the cluster. If the value for the ‘usb_ports_disabled’ variable in the ‘isi_security.gcfg’ file is updated, then MCP executes the ‘isi_config_usb’ utility to enact the desired change. Note that because ‘isi_config_usb’ operates per-node but the MCP actions are global (executed cluster wide), isi_config_usb is invoked across each node by a Python script to enable or disable the cluster’s USB Ports.

The USB Ports enable/disable feature is only supported on PowerScale F900, F600, F200, H700/7000, and A300/3000 clusters running OneFS 9.5 and later, and PowerScale F710 and F210 running OneFS 9.7 or later.
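The support matrix above can be captured as a simple lookup, which is handy when scripting pre-checks across a mixed fleet. This is only a sketch: the table is transcribed from the paragraph above, the function name is hypothetical, and the version comparison assumes a dotted version string whose first two fields are the major and minor release.

```python
# Minimum OneFS release per platform, transcribed from the paragraph above.
MIN_RELEASE = {
    "F900": (9, 5), "F600": (9, 5), "F200": (9, 5),
    "H700": (9, 5), "H7000": (9, 5),
    "A300": (9, 5), "A3000": (9, 5),
    "F710": (9, 7), "F210": (9, 7),
}


def supports_usb_control(model: str, onefs_version: str) -> bool:
    """Return True if the model and release pair appears in the matrix."""
    minimum = MIN_RELEASE.get(model.upper())
    if minimum is None:
        return False  # platform not listed as supported
    # Compare only major.minor, for example '9.5.0.0' becomes (9, 5).
    major_minor = tuple(int(p) for p in onefs_version.split(".")[:2])
    return major_minor >= minimum


for model, version in (("F200", "9.5.0.0"), ("F710", "9.5.0.0"), ("F710", "9.7.0.0")):
    print(model, version, supports_usb_control(model, version))
```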

In OneFS 9.5 and later, USB port control can be manually configured from either the CLI or platform API.

Note that there is no WebUI option at this time.

The following table lists the CLI and platform API configuration options for USB port control in OneFS 9.5 and later:

| Action | CLI Syntax | Description |
| --- | --- | --- |
| View | isi security settings view | Report the state of a cluster’s USB ports. |
| Enable | isi security settings modify --usb-ports-disabled=False | Activate a cluster’s USB ports. |
| Disable | isi security settings modify --usb-ports-disabled=True | Disable a cluster’s USB ports. |

For example:

# isi security settings view | grep -i usb

USB Ports Disabled: No

# isi security settings modify --usb-ports-disabled=True

# isi security settings view | grep -i usb

USB Ports Disabled: Yes

Similarly, to re-enable a cluster’s USB ports:

# isi security settings modify --usb-ports-disabled=False

# isi security settings view | grep -i usb

USB Ports Disabled: No

Note that a user account with the OneFS ISI_PRIV_CLUSTER RBAC privilege is required to configure USB port changes on a cluster.

In addition to the ‘isi security settings’ CLI command, there is also a node-local CLI utility:

# whereis isi_config_usb

isi_config_usb: /usr/bin/isi_hwtools/isi_config_usb

As mentioned previously, ‘isi security settings’ acts globally on a cluster, using ‘isi_config_usb’ to effect its changes on each node.

Alternatively, cluster USB ports can also be enabled and disabled using the OneFS platform API with the following endpoints:

| API | Method | Argument | Output |
| --- | --- | --- | --- |
| /16/security/settings | GET | No argument required. | JSON object for security settings with USB ports setting. |
| /16/security/settings | PUT | JSON object with boolean value for USB ports setting. | None or Error. |
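The two calls in the table can also be prepared from a script. The sketch below only builds the request objects and does not send them, so it can be run anywhere. The base URL is an assumption that combines a local cluster address on port 8080 with the /16/security/settings path from the table, the usb_ports_disabled key is the setting name that OneFS tracks for this feature, and the function name is hypothetical. Authentication is intentionally left out.

```python
import json
import urllib.request

# Assumed URL: host and port are placeholders; the path comes from the table.
BASE = "https://localhost:8080/platform/16/security/settings"


def build_usb_request(disabled=None):
    """Build (but do not send) a GET, or a PUT when 'disabled' is given."""
    if disabled is None:
        return urllib.request.Request(BASE, method="GET")
    # The PUT body is a JSON object with a boolean USB ports setting.
    body = json.dumps({"usb_ports_disabled": bool(disabled)}).encode()
    return urllib.request.Request(
        BASE,
        data=body,
        method="PUT",
        headers={"Content-Type": "application/json"},
    )


view = build_usb_request()
disable = build_usb_request(disabled=True)
print(view.get_method(), view.full_url)
print(disable.get_method(), disable.data.decode())
```

Sending either request would additionally require credentials for an account with the appropriate RBAC privilege and a decision about certificate verification, both of which are outside the scope of this sketch.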

For example:

# curl -k -u <username>:<passwd> "https://localhost:8080/platform/16/security/settings"

{

"settings" :

{

"fips_mode_enabled" : false,

"restricted_shell_enabled" : false,

"usb_ports_disabled" : true

}

}

In addition to manual configuration, the USB ports are automatically disabled if the STIG security hardening profile is applied to a cluster.

This is governed by the following section of XML code in the isi_hardening configuration file, which can be found at /etc/isi_hardening/profiles/isi_hardening.xml:

<CONFIG_ITEM id ="isi_usb_ports" version = "1">

<PapiOperation>

<DO>

<URI>/security/settings</URI>

<BODY>{"usb_ports_disabled": true}</BODY>

<KEY>settings</KEY>

</DO>

<UNDO>

<URI>/security/settings</URI>

<BODY>{"usb_ports_disabled": false}</BODY>

<KEY>settings</KEY>

</UNDO>

<ACTION_SCOPE>CLUSTER</ACTION_SCOPE>

<IGNORE>FALSE</IGNORE>

</PapiOperation>

</CONFIG_ITEM>

The ‘isi_config_usb’ CLI utility can be used to display the USB port status on a subset of nodes. For example:

# isi_config_usb --nodes 1-10 --mode display

Node   | Current | Pending

-----------------------------------

TME-9  | UNSUP   | INFO: This platform is not supported to run this script.

TME-8  | UNSUP   | INFO: This platform is not supported to run this script.

TME-1  | On      |

TME-3  | On      |

TME-2  | On      |

TME-10 | On      |

TME-7  | AllOn   |

TME-5  | AllOn   |

TME-6  | AllOn   |

Unable to connect: TME-4

Note: In addition to port status, the output identifies any nodes that do not support USB port control (nodes 8 and 9 above) or that are unreachable (node 4 above).
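On larger clusters, it can be useful to group the nodes in this output by outcome. The following sketch assumes the pipe-separated layout and the 'Unable to connect:' line shown in the example above; the function name and bucket names are illustrative only.

```python
def summarize_usb_status(output: str) -> dict[str, list[str]]:
    """Group node names by outcome from 'isi_config_usb --mode display' text."""
    summary = {"reported": [], "unsupported": [], "unreachable": []}
    for line in output.splitlines():
        line = line.strip()
        if line.startswith("Unable to connect:"):
            summary["unreachable"].append(line.split(":", 1)[1].strip())
            continue
        cells = [c.strip() for c in line.split("|")]
        # Skip blank lines, the separator row, and the header row.
        if len(cells) < 2 or cells[0] == "Node":
            continue
        bucket = "unsupported" if cells[1] == "UNSUP" else "reported"
        summary[bucket].append(cells[0])
    return summary


# Sample text copied from the example output above.
SAMPLE = """\
Node   | Current | Pending
-----------------------------------
TME-9  | UNSUP   | INFO: This platform is not supported to run this script.
TME-8  | UNSUP   | INFO: This platform is not supported to run this script.
TME-1  | On      |
TME-3  | On      |
TME-2  | On      |
TME-10 | On      |
TME-7  | AllOn   |
TME-5  | AllOn   |
TME-6  | AllOn   |
Unable to connect: TME-4
"""

summary = summarize_usb_status(SAMPLE)
for outcome, nodes in summary.items():
    print(outcome, nodes)
```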

When investigating or troubleshooting issues with USB port control, the following log files are the first places to check:

| Log file | Description |
| --- | --- |
| /var/log/isi_papi_d.log | Will log any requests to enable or disable USB ports. |
| /var/log/isi_config_usb.log | Logs activity from the isi_config_usb script execution. |
| /var/log/isi_mcp | Logs activity related to MCP actions on invoking the API. |

Author: Nick Trimbee

OneFS System Configuration Auditing

Thu, 18 Apr 2024 04:55:18 -0000

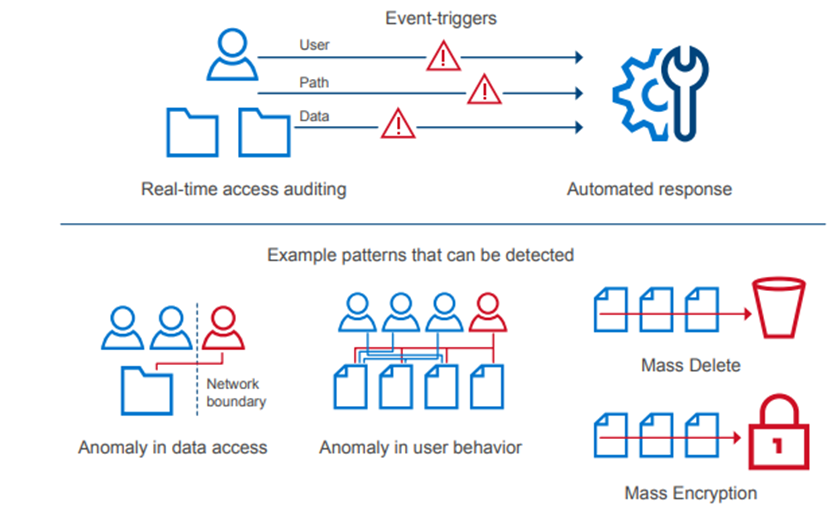

OneFS auditing can detect potential sources of data loss, fraud, inappropriate entitlements, access attempts that should not occur, and a range of other anomalies that are indicators of risk. This can be especially useful when the audit associates data access with specific user identities.

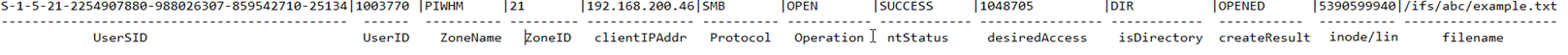

In the interests of data security, OneFS provides “chain of custody” auditing by logging specific activity on the cluster. This includes OneFS configuration changes plus NFS, SMB, and HDFS client protocol activity, which is required for organizational IT security compliance, as mandated by regulations and standards such as HIPAA, SOX, FISMA, MPAA, and more.

OneFS auditing uses Dell’s Common Event Enabler (CEE) to provide compatibility with external audit applications. A cluster can write audit events across up to five CEE servers per node in a parallel, load-balanced configuration. This allows OneFS to deliver an end to end, enterprise grade audit solution which efficiently integrates with third party solutions like Varonis DatAdvantage.

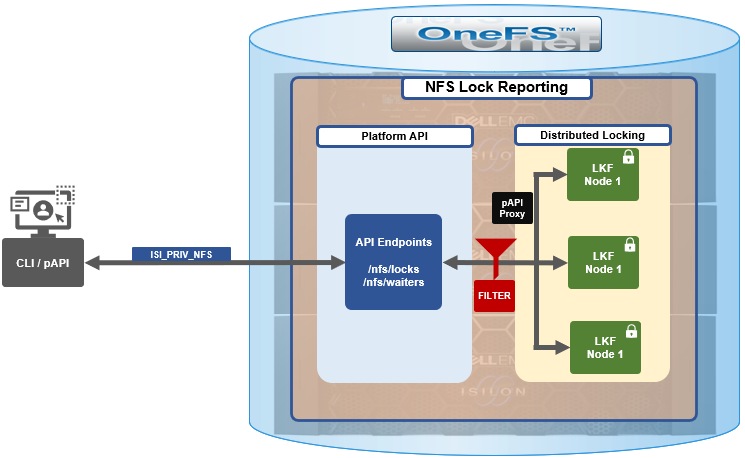

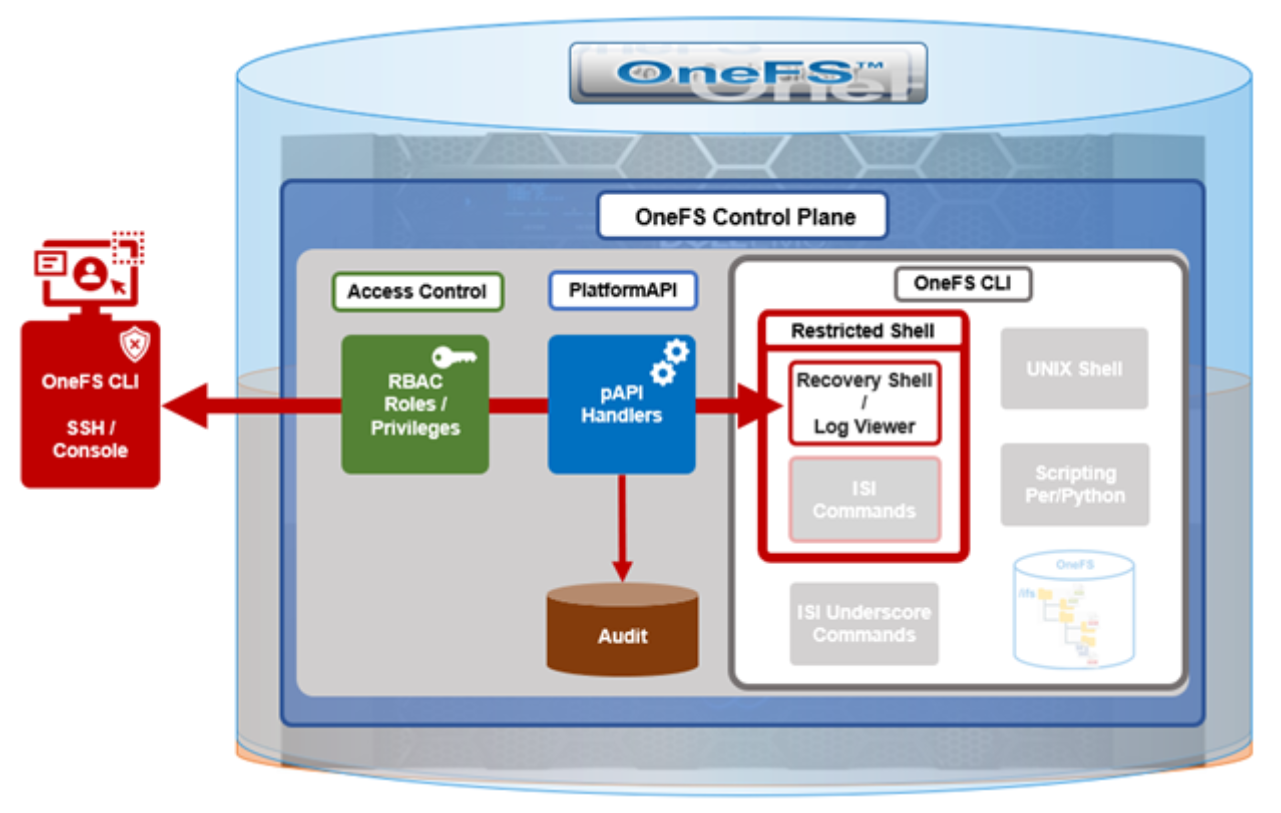

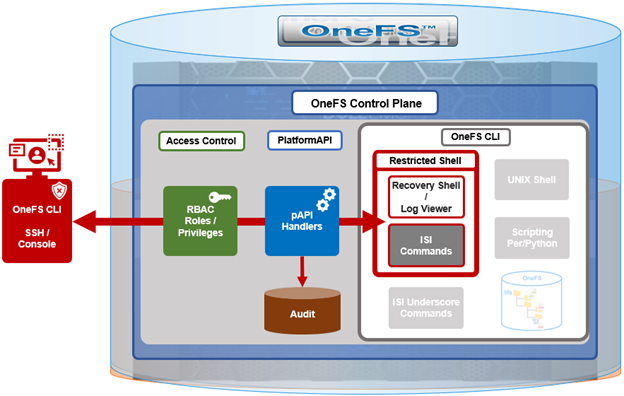

The following diagram outlines the basic architecture of OneFS audit:

Both system configuration changes, as well as protocol activity, can be easily audited on a PowerScale cluster. However, the protocol path is greyed out above, since it is outside the focus of this article. More information on OneFS protocol auditing can be found here.

As illustrated above, the OneFS audit framework is centered around three main services.

| Service | Description |
| --- | --- |
| isi_audit_cee | Service allowing OneFS to support third-party auditing applications. The main method of accessing protocol audit data from OneFS is through a third-party auditing application. |
| isi_audit_d | Responsible for per-node audit queues and managing the data store for those queues. It provides a protocol on which clients may produce event payloads within a given context. It establishes a Unix domain socket for queue producers and handles writing and rotation of log files in /ifs/.ifsvar/audit/logs/node###/{config,protocol}/*. |
| isi_audit_syslog | Daemon providing forwarding of audit config and protocol events to syslog. |

The basic configuration auditing workflow sees a cluster config change request come in via either the OneFS CLI, WebUI or platform API. The API handler infrastructure passes this request to the isi_audit_d service which intercepts it as a client thread and adds it to the audit queue. It is then processed and passed via a backend thread and written to the audit log files (IFS) as appropriate.

If audit syslog forwarding has been configured, IFS also passes the event to the isi_audit_syslog daemon, where a supervisor process instructs a writer thread to send it to the syslog which in turn updates its pertinent /var/log/ logfiles.

Similarly, if Common Event Enabler (CEE) forwarding has been enabled, IFS also passes the request to the isi_audit_cee service, where a delivery worker thread intercepts it and sends the event to the CEE server pool. The isi_audit_cee heartbeat task makes CEE servers available for audit event delivery: events are only delivered to a CEE server after it has received a successful heartbeat. Every ten seconds, the heartbeat task wakes up and sends a heartbeat to each CEE server in the configuration. While CEE servers are available and events are in memory, isi_audit_cee attempts to deliver them. At shutdown, the audit log position is only saved if all events have been delivered to CEE, because audit should not lose events. It is not critical that all events are delivered at shutdown, because any unsaved events can be resent to CEE the next time isi_audit_cee starts, and CEE handles duplicates.
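The heartbeat and delivery rules described above can be expressed as a small toy model. This is not the isi_audit_cee implementation; the class, its method names, and the round-robin choice of server are all assumptions made purely to illustrate the gating behavior: no delivery before a successful heartbeat, and no saved log position while events remain undelivered.

```python
from collections import deque


class CeeDeliveryModel:
    """Toy model: events go only to servers that passed a heartbeat."""

    def __init__(self, servers):
        self.available = {server: False for server in servers}
        self.pending = deque()
        self.delivered = []  # (server, event) pairs

    def heartbeat(self, reachable):
        # Stands in for the task that wakes every ten seconds and probes
        # each configured CEE server.
        for server in self.available:
            self.available[server] = server in reachable

    def enqueue(self, event):
        self.pending.append(event)

    def deliver(self):
        """Send pending events to available servers; return the count sent."""
        ready = [s for s, ok in self.available.items() if ok]
        if not ready:
            return 0  # no server has had a successful heartbeat yet
        sent = 0
        while self.pending:
            event = self.pending.popleft()
            # Round-robin is an assumption used to mimic load balancing.
            self.delivered.append((ready[sent % len(ready)], event))
            sent += 1
        return sent

    def can_save_position(self):
        # The log position is saved at shutdown only if nothing is pending.
        return not self.pending


model = CeeDeliveryModel(["cee1", "cee2"])
model.enqueue("config-change-1")
print("before heartbeat:", model.deliver(), model.can_save_position())
model.heartbeat({"cee1"})
print("after heartbeat:", model.deliver(), model.can_save_position())
```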

Within OneFS, all audit data is organized by topic and is securely stored in the file system.

# isi audit topics list

Name Max Cached Messages

-----------------------------

protocol 2048

config 1024

-----------------------------

Total: 2

Auditing can detect a variety of potential sources of data loss. These include unauthorized access attempts, inappropriate entitlements, plus a bevy of other fraudulent activities that plague organizations across the gamut of industries. Enterprises are increasingly required to comply with stringent regulatory mandates developed to protect against these sources of data theft and loss.

OneFS system configuration auditing is designed to track and record all configuration events that are handled by the API through the command-line interface (CLI).

# isi audit topics view config

Name: config

Max Cached Messages: 1024

Once enabled, system configuration auditing requires no additional configuration, and auditing events are automatically stored in the config audit topic directories. Audit access and management is governed by the ‘ISI_PRIV_AUDIT’ RBAC privilege, and OneFS provides a default ‘AuditAdmin’ role for this purpose.

Audit events are stored in a binary file under /ifs/.ifsvar/audit/logs. The logs automatically roll over to a new file after the size reaches 1 GB. The audit logs are consumable by auditing applications that support the Dell Common Event Enabler (CEE).

OneFS audit topics and settings can easily be viewed and modified. For example, to increase the configuration auditing maximum cached messages threshold to 2048 from the CLI:

# isi audit topics modify config --max-cached-messages 2048

# isi audit topics view config

Name: config

Max Cached Messages: 2048

Audit configuration can also be modified or viewed per access zone and/or topic.

| Operation | CLI Syntax | Method and URI |
| --- | --- | --- |
| Get audit settings | isi audit settings view | GET <cluster-ip:port>/platform/3/audit/settings |
| Modify audit settings | isi audit settings modify … | PUT <cluster-ip:port>/platform/3/audit/settings |
| View JSON schema for this resource, including query parameters and object properties info. | | GET <cluster-ip:port>/platform/3/audit/settings?describe |
| View JSON schema for this resource, including query parameters and object properties info. | | GET <cluster-ip:port>/platform/1/audit/topics?describe |

Configuration auditing can be enabled on a cluster from either the CLI or platform API. The current global audit configuration can be viewed as follows:

# isi audit settings global view

Protocol Auditing Enabled: No

Audited Zones: -

CEE Server URIs: -

Hostname:

Config Auditing Enabled: No

Config Syslog Enabled: No

Config Syslog Servers: -

Config Syslog TLS Enabled: No

Config Syslog Certificate ID:

Protocol Syslog Servers: -

Protocol Syslog TLS Enabled: No

Protocol Syslog Certificate ID:

System Syslog Enabled: No

System Syslog Servers: -

System Syslog TLS Enabled: No

System Syslog Certificate ID:

Auto Purging Enabled: No

Retention Period: 180

System Auditing Enabled: No

In this case, configuration auditing is disabled – its default setting. The following CLI syntax will enable (and verify) configuration auditing across the cluster:

# isi audit settings global modify --config-auditing-enabled 1

# isi audit settings global view | grep -i 'config audit'

Config Auditing Enabled: Yes

In the next article, we’ll look at the config audit management, event viewing, and troubleshooting.

To enable configuration change audit redirection to syslog:

# isi audit settings global modify --config-auditing-enabled true

# isi audit settings global modify --config-syslog-enabled true

# isi audit settings global view | grep -i 'config audit'

Config Auditing Enabled: Yes

Similarly, to disable configuration change audit redirection to syslog:

# isi audit settings global modify --config-syslog-enabled false

# isi audit settings global modify --config-auditing-enabled false

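The following notes outline additional audit configuration for a dedicated access zone named auditgti. The steps are grouped by area rather than listed in numeric order, and a primed step (such as 3') is an alternative to the step with the same number.

Configure audit

2. Add a CEE server:

# isi audit settings modify --add-cee-server-uris='http://seavee5.west.isilon.com:12228/cee'

4. Add the new zone to the audited zones:

# isi audit settings modify --add-audited-zones=auditgti

4'. If you do not want to audit that much, remove the System zone:

# isi audit settings modify --remove-audited-zones=System

Configure zone

3. Create the access zone with all success and failure events audited:

# isi zone zones create --all-auth-providers=true --audit-failure=all --audit-success=all --path=/ifs/data --name=auditgti

3'. If you do not want to audit that much, audit a subset of events instead:

# isi zone zones create --all-auth-providers=true --audit-failure=read,logon --audit-success=write,delete --path=/ifs/data --name=auditgti

Network pool

5. Create a network pool for the access zone:

# isi network create pool --name=subnet0:auditpool --access-zone=auditgti --iface=<your interface> --range=<your range>

5'. The System zone can also be audited by default, so this step can be skipped.

Other settings

# isi audit settings modify --hostname="<any name you want really, this just gets inserted into the payload>"

# isi audit settings modify --cee-log-time="Protocol@1900-01-01 00:00:01"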

The platform API can also be used to configure and manage auditing. For example, to enable configuration auditing on a cluster:

PUT /platform/1/audit/settings

Authorization: Basic QWxhZGRpbjpvcGVuIHN1c2FtZQ==

{

"config_auditing_enabled": true

}

Response example

The HTTP 204 response code from the cluster indicates that the request was successful, and that configuration auditing is now enabled on the cluster. No message body is returned for this request.

204 No Content

Content-type: text/plain,

Allow: 'GET, PUT, HEAD'

Similarly, to modify the config audit topic’s maximum cached messages threshold to a value of ‘1000’ via the API:

PUT /1/audit/topics/config

Authorization: Basic QWxhZGRpbjpvcGVuIHN1c2FtZQ==

{

"max_cached_messages": 1000

}

Again, no message body is returned from OneFS for this request.

204 No Content

Content-type: text/plain,

Allow: 'GET, PUT, HEAD'
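The Authorization header in these examples uses HTTP Basic authentication, which is simply the Base64 encoding of user:password and therefore offers no confidentiality without HTTPS. The sketch below shows how such a header is built and decoded, and how the topic update request could be assembled without sending it. The cluster address, credentials, and function names are placeholders, and the URI assumes the /platform prefix used elsewhere in this article.

```python
import base64
import json
import urllib.request


def basic_auth_header(user: str, password: str) -> str:
    """Encode credentials as an HTTP Basic Authorization header value."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return f"Basic {token}"


def decode_basic_auth(header: str) -> tuple[str, str]:
    """Recover (user, password) from a Basic Authorization header value."""
    scheme, _, token = header.partition(" ")
    if scheme != "Basic":
        raise ValueError("not a Basic authorization header")
    user, _, password = base64.b64decode(token).decode().partition(":")
    return user, password


def build_topic_update(base_url: str, user: str, password: str, max_cached: int):
    """Build (but do not send) the PUT that sets max_cached_messages."""
    body = json.dumps({"max_cached_messages": max_cached}).encode()
    return urllib.request.Request(
        f"{base_url}/platform/1/audit/topics/config",
        data=body,
        method="PUT",
        headers={
            "Authorization": basic_auth_header(user, password),
            "Content-Type": "application/json",
        },
    )


# Placeholder cluster address and credentials, for illustration only.
request = build_topic_update("https://cluster.example.com:8080", "admin", "secret", 1000)
print(request.get_method(), request.full_url)
print(request.data.decode())
```

Because the header is trivially reversible, as decode_basic_auth shows, real credentials should never be pasted into documentation or logs.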

Note that, in the unlikely event that a cluster experiences an outage during which it loses quorum, auditing will be suspended until it is regained. Events similar to the following will be written to the /var/log/audit_d.log file:

940b5c700]: Lost quorum! Audit logging will be disabled until /ifs is writeable again.

2023-08-28T15:37:32.132780+00:00 <1.6> TME-1(id1) isi_audit_d[6495]: [0x345940b5c700]: Regained quorum. Logging resuming.

When it comes to reading audit events on the cluster, OneFS natively provides the handy ‘isi_audit_viewer’ utility. For example, the following audit viewer output shows the events logged when the cluster admin added the ‘/ifs/tmp’ path to the SmartDedupe configuration, and created a new user named ‘test1’:

# isi_audit_viewer

[0: Tue Aug 29 23:01:16 2023] {"id":"f54a6bec-46bf-11ee-920d-0060486e0a26","timestamp":1693350076315499,"payload":{"user":{"token": {"UID":0, "GID":0, "SID": "SID:S-1-22-1-0", "GSID": "SID:S-1-22-2-0", "GROUPS": ["SID:S-1-5-11", "GID:5", "GID:10", "GID:20", "GID:70"], "protocol": 17, "zone id": 1, "client": "10.135.6.255", "local": "10.219.64.11" }},"uri":"/1/dedupe/settings","method":"PUT","args":{}

,"body":{"paths":["/ifs/tmp"]}

}}

[1: Tue Aug 29 23:01:16 2023] {"id":"f54a6bec-46bf-11ee-920d-0060486e0a26","timestamp":1693350076391422,"payload":{"status":204,"statusmsg":"No Content","body":{}}}

[2: Tue Aug 29 23:03:43 2023] {"id":"4cfce7a5-46c0-11ee-920d-0060486e0a26","timestamp":1693350223446993,"payload":{"user":{"token": {"UID":0, "GID":0, "SID": "SID:S-1-22-1-0", "GSID": "SID:S-1-22-2-0", "GROUPS": ["SID:S-1-5-11", "GID:5", "GID:10", "GID:20", "GID:70"], "protocol": 17, "zone id": 1, "client": "10.135.6.255", "local": "10.219.64.11" }},"uri":"/18/auth/users","method":"POST","args":{}

,"body":{"name":"test1"}

}}

[3: Tue Aug 29 23:03:43 2023] {"id":"4cfce7a5-46c0-11ee-920d-0060486e0a26","timestamp":1693350223507797,"payload":{"status":201,"statusmsg":"Created","body":{"id":"SID:S-1-5-21-593535466-4266055735-3901207217-1000"}

}}

The audit log entries, such as those above, typically comprise the following components:

- Timestamp (Human readable)

- Unique Entry ID

- Timestamp (Unix Epoch Time)

- Node Number

- The user tokens of the person executing the command

- User persona (Unix/Windows)

- Primary group persona (Unix/Windows)

- Supplemental group personas (Unix/Windows)

- RBAC privileges of the person executing the command

- Interface used to generate the command

- 10 = PAPI / WebUI

- 16 = Console

- 17 = SSH

- Access Zone that the command was executed against

- Where the user connected from

- The local node address where the command was executed

- Command

- Command arguments

- Command body

The ‘isi_audit_viewer’ utility reads the ‘config’ log topic by default, but can also be used to read the ‘protocol’ log topic. Its CLI command syntax is as follows:

# isi_audit_viewer -h

Usage: isi_audit_viewer [ -n <nodeid> | -t <topic> | -s <starttime>|

-e <endtime> | -v ]

-n <nodeid> : Specify node id to browse (default: local node)

-t <topic> : Choose topic to browse.

Topics are "config" and "protocol" (default: "config")

-s <start> : Browse audit logs starting at <starttime>

-e <end> : Browse audit logs ending at <endtime>

-v verbose : Prints out start / end time range before printing

records

Note that, on large clusters with heavy audit write volumes (up to hundreds of thousands of events), running the isi_audit_viewer utility across the cluster with ‘isi_for_array’ can potentially lead to memory starvation and other issues, especially if outputting to a directory under /ifs. As such, consider directing the output to a non-IFS location such as /var/temp. Also, the isi_audit_viewer ‘-s’ (start time) and ‘-e’ (end time) flags can be used to limit a search to a short window (for example, 1-5 minutes), helping reduce the size of the data returned.

In addition to reading audit events, the viewer is also a useful tool for troubleshooting auditing issues. Any errors encountered while processing audit events, or when delivering them to an external CEE server, are written to the log file ‘/var/log/isi_audit_cee.log’. Additionally, the protocol-specific logs will contain any issues the audit filter encounters while collecting audit events.

OneFS System Configuration Auditing – Part 2

Thu, 18 Apr 2024 22:28:35 -0000

|Read Time: 0 minutes

In the previous article of this series, we looked at the architecture and operation of OneFS configuration auditing. Now, we’ll turn our attention to its management, event viewing, and troubleshooting.

The CLI command set for configuring ‘isi audit’ is split between two areas:

Area | Detail | Syntax |

Events | Specifies which events get logged, across three categories: •Audit Failure •Audit Success •Syslog Audit Events | isi audit settings … |

Global | Configuration of global audit parameters, including topics, zones, CEE, syslog, purging, retention, and more. | isi audit settings global ... |

The ‘view’ argument for each command returns the following output:

Events:

# isi audit settings view

Audit Failure: create_file, create_directory, open_file_write, open_file_read, close_file_unmodified, close_file_modified, delete_file, delete_directory, rename_file, rename_directory, set_security_file, set_security_directory

Audit Success: create_file, create_directory, open_file_write, open_file_read, close_file_unmodified, close_file_modified, delete_file, delete_directory, rename_file, rename_directory, set_security_file, set_security_directory

Syslog Audit Events: create_file, create_directory, open_file_write, open_file_read, close_file_unmodified, close_file_modified, delete_file, delete_directory, rename_file, rename_directory, set_security_file, set_security_directory

Syslog Forwarding Enabled: No

Global:

# isi audit settings global view

Protocol Auditing Enabled: Yes

Audited Zones: -

CEE Server URIs: -

Hostname:

Config Auditing Enabled: Yes

Config Syslog Enabled: No

Config Syslog Servers: -

Config Syslog TLS Enabled: No

Config Syslog Certificate ID:

Protocol Syslog Servers: -

Protocol Syslog TLS Enabled: No

Protocol Syslog Certificate ID:

System Syslog Enabled: No

System Syslog Servers: -

System Syslog TLS Enabled: No

System Syslog Certificate ID:

Auto Purging Enabled: No

Retention Period: 180

System Auditing Enabled: No

While configuration auditing is disabled on OneFS by default, the following CLI syntax can be used to enable and verify config auditing across the cluster:

# isi audit settings global modify --config-auditing-enabled 1

# isi audit settings global view | grep -i 'config audit'

Config Auditing Enabled: Yes

Similarly, to enable configuration change audit redirection to syslog:

# isi audit settings global modify --config-auditing-enabled true

# isi audit settings global modify --config-syslog-enabled true

# isi audit settings global view | grep -i 'config audit'

Config Auditing Enabled: Yes

Or to disable redirection to syslog:

# isi audit settings global modify --config-syslog-enabled false

# isi audit settings global modify --config-auditing-enabled false

CEE servers can be configured as follows:

# isi audit settings global modify --add-cee-server-uris='<URL>'

For example:

# isi audit settings global modify --add-cee-server-uris='http://cee1.isilon.com:12228/cee'

Auditing can be constrained by access zone, too:

# isi audit settings global modify --add-audited-zones=audit_az1

Note that, when auditing is enabled, the System zone is included by default. However, it can be excluded, if desired:

# isi audit settings global modify --remove-audited-zones=System

An access zone’s audit parameters can also be configured via the ‘isi zone zones’ CLI command set. For example:

#isi zone zones create --all-auth-providers=true --audit-failure=all --audit-success=all --path=/ifs/data --name=audit_az1

Granular audit event type configuration can be specified, if desired, to narrow the scope and reduce the amount of audit logging.

For example, the following command syntax constrains auditing to read and logon failures and successful writes and deletes under path /ifs/data in the audit_az1 access zone:

#isi zone zones create --all-auth-providers=true --audit-failure=read,logon --audit-success=write,delete --path=/ifs/data --name=audit_az1

In addition to the CLI, the OneFS platform API can also be used to configure and manage auditing. For example, to enable configuration auditing on a cluster:

PUT /platform/1/audit/settings

Authorization: Basic QWxhZGRpbjpvcGVuIHN1c2FtZQ==

{

"config_auditing_enabled": true

}

The following ‘204’ HTTP response code from the cluster indicates that the request was successful, and that configuration auditing is now enabled on the cluster. No message body is returned for this request.

204 No Content

Content-type: text/plain,

Allow: 'GET, PUT, HEAD'

Similarly, to modify the config audit topic’s maximum cached messages threshold to a value of ‘1000’ via the API:

PUT /platform/1/audit/topics/config

Authorization: Basic QWxhZGRpbjpvcGVuIHN1c2FtZQ==

{

"max_cached_messages": 1000

}

Again, no message body is returned from OneFS for this request.

204 No Content

Content-type: text/plain,

Allow: 'GET, PUT, HEAD'
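The request above can also be assembled programmatically. The following is a minimal sketch using only the Python standard library; the cluster address, port 8080, and the credentials are placeholder assumptions, and the request is built but deliberately not sent. Note that the JSON body uses a lowercase `true`, which `json.dumps` produces from Python's `True`.

```python
import base64
import json
import urllib.request


def build_audit_put(cluster, path, body, user, password):
    """Build (but do not send) a PUT request for an audit endpoint."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url=f"https://{cluster}:8080{path}",   # port 8080 is an assumption
        data=json.dumps(body).encode(),
        method="PUT",
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/json",
        },
    )


req = build_audit_put(
    "cluster.example.com",               # hypothetical cluster address
    "/platform/1/audit/settings",        # endpoint from the example above
    {"config_auditing_enabled": True},   # serialized as lowercase true
    "admin", "password",                 # placeholder credentials
)
print(req.get_method(), req.full_url)
print(req.data.decode())
# To send it: urllib.request.urlopen(req); a 204 status indicates success.
```

Keeping request construction separate from sending makes it easy to inspect the body and headers before anything is applied to a cluster.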

Note that, in the unlikely event that a cluster experiences an outage during which it loses quorum, auditing will be suspended until it is regained. Events similar to the following will be written to the /var/log/audit_d.log file:

940b5c700]: Lost quorum! Audit logging will be disabled until /ifs is writeable again.

2023-08-28T15:37:32.132780+00:00 <1.6> TME-1(id1) isi_audit_d[6495]: [0x345940b5c700]: Regained quorum. Logging resuming.

When it comes to reading audit events on the cluster, OneFS natively provides the handy ‘isi_audit_viewer’ utility. For example, the following audit viewer output shows the events logged when the cluster admin added the ‘/ifs/tmp’ path to the SmartDedupe configuration, and created a new user named ‘test1’:

# isi_audit_viewer

[0: Tue Aug 29 23:01:16 2023] {"id":"f54a6bec-46bf-11ee-920d-0060486e0a26","timestamp":1693350076315499,"payload":{"user":{"token": {"UID":0, "GID":0, "SID": "SID:S-1-22-1-0", "GSID": "SID:S-1-22-2-0", "GROUPS": ["SID:S-1-5-11", "GID:5", "GID:10", "GID:20", "GID:70"], "protocol": 17, "zone id": 1, "client": "10.135.6.255", "local": "10.219.64.11" }},"uri":"/1/dedupe/settings","method":"PUT","args":{}

,"body":{"paths":["/ifs/tmp"]}

}}

[1: Tue Aug 29 23:01:16 2023] {"id":"f54a6bec-46bf-11ee-920d-0060486e0a26","timestamp":1693350076391422,"payload":{"status":204,"statusmsg":"No Content","body":{}}}

[2: Tue Aug 29 23:03:43 2023] {"id":"4cfce7a5-46c0-11ee-920d-0060486e0a26","timestamp":1693350223446993,"payload":{"user":{"token": {"UID":0, "GID":0, "SID": "SID:S-1-22-1-0", "GSID": "SID:S-1-22-2-0", "GROUPS": ["SID:S-1-5-11", "GID:5", "GID:10", "GID:20", "GID:70"], "protocol": 17, "zone id": 1, "client": "10.135.6.255", "local": "10.219.64.11" }},"uri":"/18/auth/users","method":"POST","args":{}

,"body":{"name":"test1"}

}}

[3: Tue Aug 29 23:03:43 2023] {"id":"4cfce7a5-46c0-11ee-920d-0060486e0a26","timestamp":1693350223507797,"payload":{"status":201,"statusmsg":"Created","body":{"id":"SID:S-1-5-21-593535466-4266055735-3901207217-1000"}

}}

The audit log entries, such as those above, typically comprise the following components:

Order | Component | Detail |

1 | Timestamp | Timestamp in human readable form |

2 | ID | Unique entry ID |

3 | Timestamp | Timestamp in UNIX epoch time |

4 | Node | Node number |

5 | User tokens | The user tokens, roles, and rights of the user executing the command: 1. User persona (Unix/Windows) 2. Primary group persona (Unix/Windows) 3. Supplemental group personas (Unix/Windows) 4. RBAC privileges of the user executing the command |

6 | Interface | Interface used to generate the command: 1. 10 = pAPI / WebUI 2. 16 = Console CLI 3. 17 = SSH CLI |

7 | Zone | Access zone that the command was executed against |

8 | Client IP | Where the user connected from |

9 | Local node | Local node address where the command was executed |

10 | Command | Command syntax |

11 | Arguments | Command arguments |

12 | Body | Command body |
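For post-processing, each viewer record can be split into its bracketed header and its JSON payload. The sketch below is illustrative only: it assumes each record arrives on a single line (the samples above wrap across lines in this article), and the `parse_record` helper is a hypothetical name, not part of OneFS. It also converts the `timestamp` field, which is expressed in microseconds since the UNIX epoch.

```python
import json
import re
from datetime import datetime, timedelta, timezone

# "[<index>: <human-readable time>] <json>"
RECORD = re.compile(r"^\[(\d+): ([^\]]+)\] (\{.*\})$")


def parse_record(line):
    """Split one isi_audit_viewer record into index, header time, and JSON."""
    match = RECORD.match(line.strip())
    if match is None:
        raise ValueError("not an audit viewer record")
    index, header_time, raw = match.groups()
    entry = json.loads(raw)
    # Integer arithmetic avoids float rounding on 16-digit timestamps.
    seconds, micros = divmod(entry["timestamp"], 1_000_000)
    when = datetime.fromtimestamp(seconds, tz=timezone.utc) + timedelta(microseconds=micros)
    return int(index), header_time, when, entry


sample = (
    '[1: Tue Aug 29 23:01:16 2023] {"id":"f54a6bec-46bf-11ee-920d-0060486e0a26",'
    '"timestamp":1693350076391422,"payload":{"status":204,'
    '"statusmsg":"No Content","body":{}}}'
)
index, header_time, when, entry = parse_record(sample)
print(index, when.isoformat(), entry["payload"]["status"])
```

Request and response records share the same `id`, so the parsed entries can be paired on that field to match a command with its result.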

The ‘isi_audit_viewer’ utility reads the ‘config’ log topic by default, but can also be used to read the ‘protocol’ log topic. Its CLI command syntax is as follows:

# isi_audit_viewer -h

Usage: isi_audit_viewer [ -n <nodeid> | -t <topic> | -s <starttime>|

-e <endtime> | -v ]

-n <nodeid> : Specify node id to browse (default: local node)

-t <topic> : Choose topic to browse.

Topics are "config" and "protocol" (default: "config")

-s <start> : Browse audit logs starting at <starttime>

-e <end> : Browse audit logs ending at <endtime>

-v verbose : Prints out start / end time range before printing

records

Note that, on large clusters with heavy audit write volumes (in the hundreds of thousands of events), running the isi_audit_viewer utility across the cluster with ‘isi_for_array’ can potentially lead to memory starvation and other issues, especially if outputting to a directory under /ifs. As such, consider directing the output to a non-IFS location such as /var/temp. Also, the isi_audit_viewer ‘-s’ (start time) and ‘-e’ (end time) flags can be used to limit a search to a short window (for example, 1-5 minutes), helping reduce the size of the data returned.

In addition to reading audit events, the viewer is also a useful tool for troubleshooting auditing issues. Any errors encountered while processing audit events, or when delivering them to an external CEE server, are written to the log file ‘/var/log/isi_audit_cee.log’. Additionally, the protocol-specific logs will contain any issues the audit filter encounters while collecting audit events.

Author: Nick Trimbee

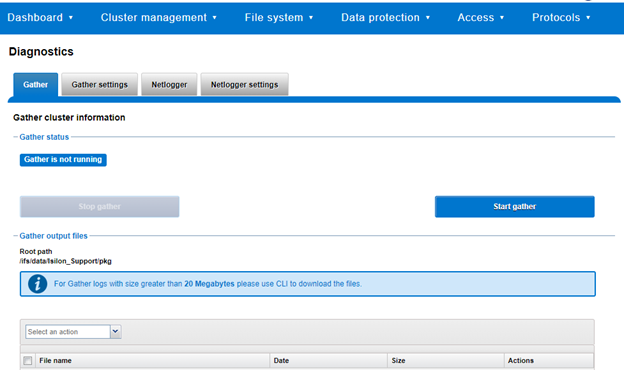

OneFS Log Gather Transmission

Wed, 17 Apr 2024 15:45:51 -0000

|Read Time: 0 minutes

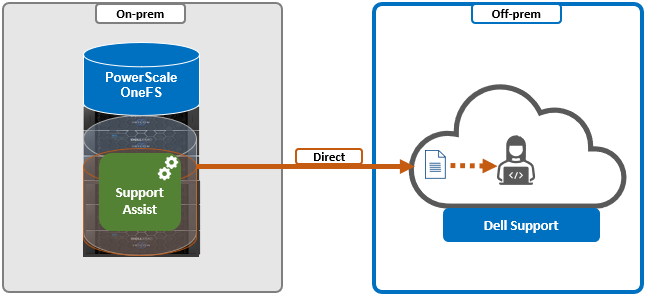

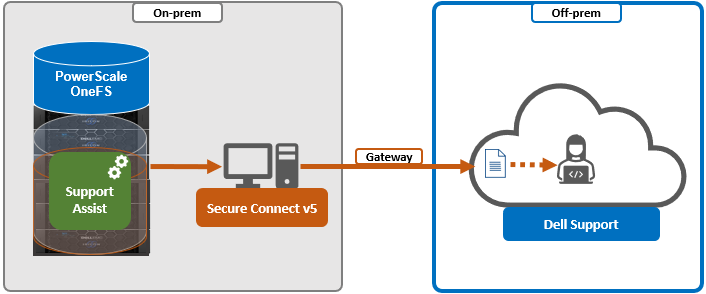

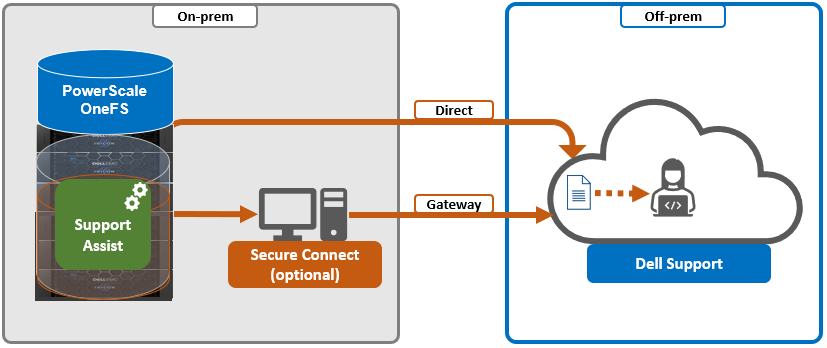

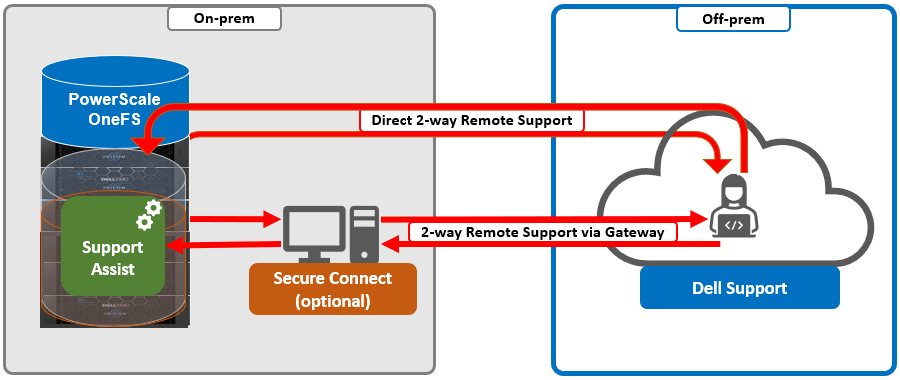

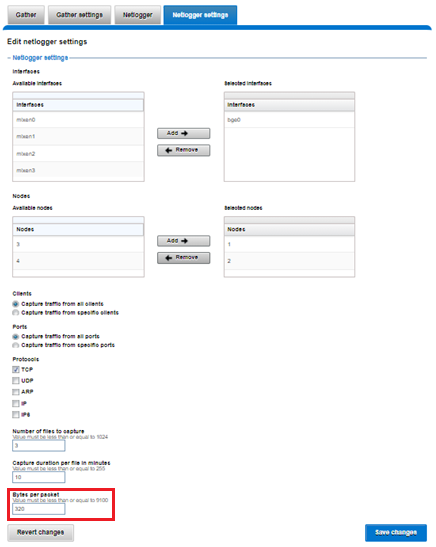

The OneFS isi_gather_info utility is the ubiquitous method for collecting and uploading a PowerScale cluster’s context and configuration to assist with the identification and resolution of bugs and issues. As such, it performs the following roles:

- Executes many commands, scripts, and utilities on a cluster, and saves their results

- Collates, or gathers, all these files into a single ‘gzipped’ package

- Optionally transmits this log gather package back to Dell using a choice of several transport methods

By default, a log gather tarfile is written to the /ifs/data/Isilon_Support/pkg/ directory. It can also be uploaded to Dell by the following means:

Upload mechanism | Description | TCP port | OneFS release support |

SupportAssist / ESRS | Uses Dell Secure Remote Support (SRS) for gather upload. | 443/8443 | Any |

FTP | Use FTP to upload the completed gather. | 21 | Any |

FTPS | Use SSH-based encrypted FTPS to upload the gather. | 22 | Default in OneFS 9.5 and later |

HTTP | Use HTTP to upload the gather. | 80/443 | Any |

As indicated in this table, OneFS 9.5 and later releases now leverage FTPS as the default option for FTP upload, thereby protecting the upload of cluster configuration and logs with an encrypted transmission session.

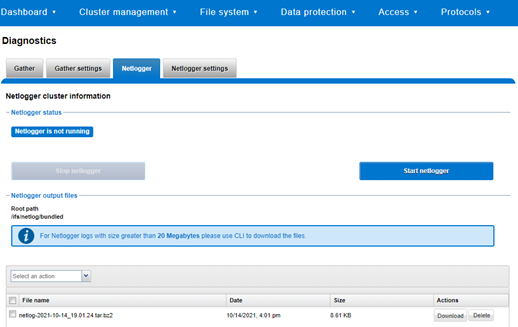

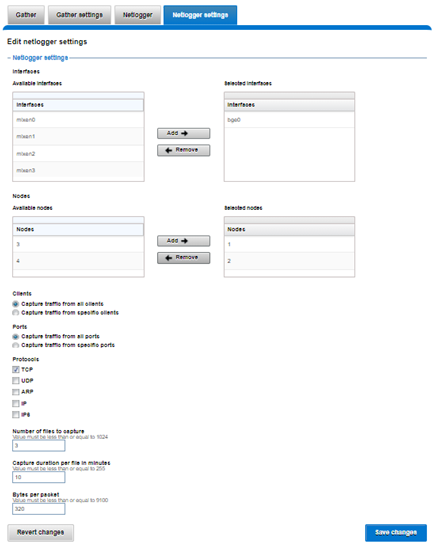

Under the hood, the log gather process comprises an eight phase workflow, with transmission comprising the penultimate ‘Upload’ phase:

The details of each phase are as follows:

Phase | Description |

1. Setup | Reads from the arguments passed in, and from any config files on disk, and sets up the config dictionary, which will be used throughout the rest of the codebase. Most of the code for this step is contained in isilon/lib/python/gather/igi_config/configuration.py. This is also the step in which the program is most likely to exit, if some config arguments end up being invalid. |

2. Run local | Executes all the cluster commands, which are run on the same node that is starting the gather. All these commands run in parallel (up to the current parallelism value). This is typically the second longest running phase. |

3. Run nodes | Executes the node commands across all of the cluster’s nodes. This runs on each node, and while these commands run in parallel (up to the current parallelism value), they do not run in parallel with the ‘Run local’ step. |

4. Collect | Ensures that all of the results end up on the overlord node (the node that started the gather). If the gather is using /ifs, it is very fast; if it is not using /ifs, it needs to SCP all the node results to a single node. |

5. Generate Extra Files | Generates nodes_info.xml and package_info.xml. These two files are present in every gather, and provide important metadata about the cluster. |

6. Packing | Packs (tars and gzips) all the results. This is typically the longest running phase, often by an order of magnitude. |

7. Upload | Transports the tarfile package to its specified destination using SupportAssist, ESRS, FTPS, FTP, HTTP, and so on. Depending on the geographic location, this phase might also be lengthy. |

8. Cleanup | Cleans up any intermediary files that were created on the cluster. This phase will run even if the gather fails, or is interrupted. |
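The key property of this workflow, that phases run strictly in order and that cleanup runs even when an earlier phase fails, can be modeled in a few lines. This is a toy sketch rather than the actual isi_gather_info code; `run_gather`, `failing_pack`, and the `actions` mapping are hypothetical names used only for illustration.

```python
PHASES = ["Setup", "Run local", "Run nodes", "Collect",
          "Generate Extra Files", "Packing", "Upload"]


def run_gather(actions, log):
    """Run phases in order; 'Cleanup' always runs, even on failure."""
    try:
        for name in PHASES:
            log.append(name)
            actions.get(name, lambda: None)()   # no-op unless an action is given
    finally:
        log.append("Cleanup")
    return log


def failing_pack():
    raise RuntimeError("disk full while packing")


ok_log = run_gather({}, [])

failed_log = []
try:
    run_gather({"Packing": failing_pack}, failed_log)
except RuntimeError as err:
    print("gather failed:", err)
print(failed_log)
```

In the failing run, the log stops at Packing, skips Upload, and still ends with Cleanup, mirroring the behavior described for phase 8.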

Because the isi_gather_info tool is primarily intended for troubleshooting clusters with issues, it runs as root (or compadmin in compliance mode) and must be able to execute under degraded conditions, such as without GMP, during an upgrade, or under cluster splits. Given these atypical requirements, isi_gather_info is built as a standalone utility, rather than using the platform API for data collection.

While FTPS is the new default and recommended transport, the legacy plaintext FTP upload method is still available in OneFS 9.5 and later. Dell’s log server, ftp.isilon.com, supports both encrypted FTPS and plaintext FTP, so FTP log uploads from clusters running older releases are not affected.

This OneFS 9.5 FTPS security enhancement encompasses three primary areas where an FTPS option is now supported:

- Directly executing the /usr/bin/isi_gather_info utility

- Running using the isi diagnostics gather CLI command set

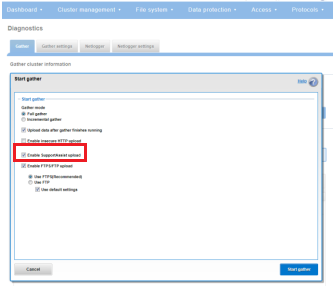

- Creating a diagnostics gather through the OneFS WebUI

For the isi_gather_info utility, two new options are included in OneFS 9.5 and later releases:

New option for isi_gather_info | Description | Default value |

--ftp-insecure | Enables the gather to use unencrypted FTP transfer. | False |

--ftp-ssl-cert | Enables the user to specify the location of a special SSL certificate file. | Empty string. Not typically required. |

Similarly, there are two corresponding options in OneFS 9.5 and later for the isi diagnostics CLI command:

New option for isi diagnostics | Description | Default value |

--ftp-upload-insecure | Enables the gather to use unencrypted FTP transfer. | No |

--ftp-upload-ssl-cert | Enables the user to specify the location of a special SSL certificate file. | Empty string. Not typically required. |

Based on these options, the following table provides some command syntax usage examples, for both FTPS and FTP uploads:

FTP upload type | Description | Example isi_gather_info syntax | Example isi diagnostics syntax |

Secure upload (default) | Upload cluster logs to the Dell log server (ftp.isilon.com) using encrypted FTP (FTPS). | # isi_gather_info Or # isi_gather_info --ftp | # isi diagnostics gather start Or # isi diagnostics gather start --ftp-upload-insecure=no |

Secure upload | Upload cluster logs to an alternative server using encrypted FTPS. | # isi_gather_info --ftp-host <FQDN> --ftp-ssl-cert <SSL_CERT_PATH> | # isi diagnostics gather start --ftp-upload-host=<FQDN> --ftp-upload-ssl-cert=<SSL_CERT_PATH> |

Unencrypted upload | Upload cluster logs to the Dell log server (ftp.isilon.com) using plaintext FTP. | # isi_gather_info --ftp-insecure | # isi diagnostics gather start --ftp-upload-insecure=yes |

Unencrypted upload | Upload cluster logs to an alternative server using plaintext FTP. | # isi_gather_info --ftp-insecure --ftp-host <FQDN> | # isi diagnostics gather start --ftp-upload-host=<FQDN> --ftp-upload-insecure=yes |
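For scripted gathers, the isi_gather_info variants in the table above can be generated from a small helper. This is a sketch under stated assumptions: `gather_command` is a hypothetical wrapper, the host and certificate path are placeholders, and only the `--ftp-insecure`, `--ftp-host`, and `--ftp-ssl-cert` options described in this article are used. Rejecting a certificate for plaintext uploads is a choice made for this example, not documented tool behavior.

```python
import shlex


def gather_command(host=None, ssl_cert=None, insecure=False):
    """Assemble an isi_gather_info command line for an FTP/FTPS upload."""
    if insecure and ssl_cert:
        raise ValueError("a certificate only applies to FTPS uploads")
    argv = ["isi_gather_info"]
    if insecure:
        argv.append("--ftp-insecure")      # plaintext FTP (deprecated)
    if host:
        argv += ["--ftp-host", host]       # omit to use the default Dell server
    if ssl_cert:
        argv += ["--ftp-ssl-cert", ssl_cert]
    return argv


print(shlex.join(gather_command()))
print(shlex.join(gather_command(host="ftps.example.com",
                                ssl_cert="/ifs/certs/ftps.pem")))
print(shlex.join(gather_command(insecure=True)))
```

Returning an argument list rather than a string keeps the result safe to pass to `subprocess.run` without shell quoting concerns.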

Note that OneFS 9.5 and later releases provide a warning if the cluster admin elects to continue using non-secure FTP for the isi_gather_info tool. Specifically, if the --ftp-insecure option is configured, the following message is displayed, informing the user that plaintext FTP upload is being used, and that the connection and data stream will not be encrypted:

# isi_gather_info --ftp-insecure

You are performing plain text FTP logs upload.

This feature is deprecated and will be removed

in a future release. Please consider the possibility

of using FTPS for logs upload. For further information,

please contact PowerScale support

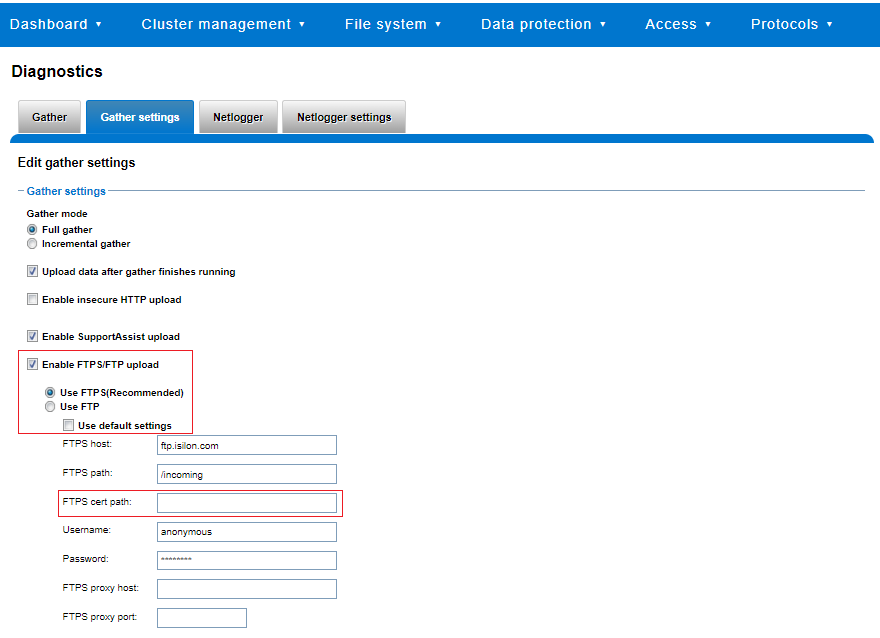

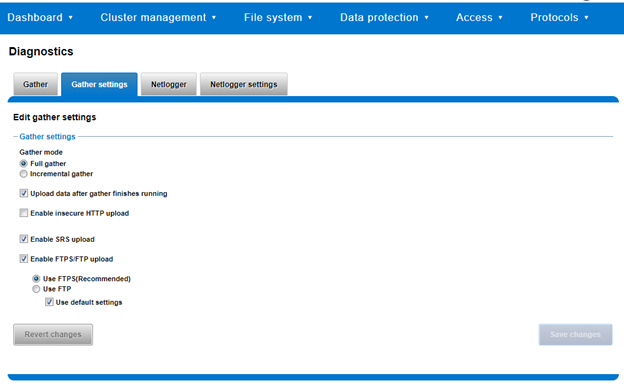

In addition to the command line, log gathers can also be configured using the OneFS WebUI by navigating to Cluster management > Diagnostics > Gather settings.

The Edit gather settings page in OneFS 9.5 and later has been updated to reflect FTPS as the default transport method, plus the addition of radio buttons and text boxes to accommodate the new configuration options.

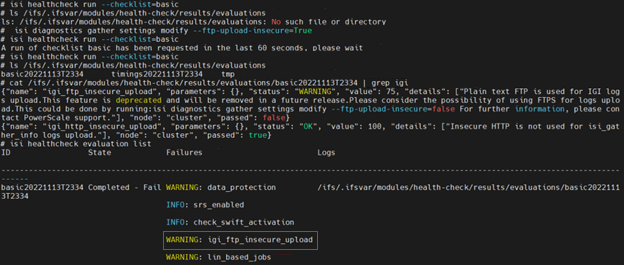

If plaintext FTP upload is configured, the healthcheck command will display a warning that plaintext upload is used and is no longer a recommended option. For example:

For reference, the OneFS 9.5 and later isi_gather_info CLI command syntax includes the following options:

Option | Description |

--upload <boolean> | Enable gather upload. |

--esrs <boolean> | Use ESRS for gather upload. |

--noesrs | Do not attempt to upload using ESRS. |

--supportassist | Attempt SupportAssist upload. |

--nosupportassist | Do not attempt to upload using SupportAssist. |

--gather-mode (incremental | full) | Type of gather: incremental or full. |

--http-insecure <boolean> | Enable insecure HTTP upload on completed gather. |

--http-host <string> | HTTP Host to use for HTTP upload. |

--http-path <string> | Path on HTTP server to use for HTTP upload. |

--http-proxy <string> | Proxy server to use for HTTP upload. |

--http-proxy-port <integer> | Proxy server port to use for HTTP upload. |

--ftp <boolean> | Enable FTP upload on completed gather. |

--noftp | Do not attempt FTP upload. |

--set-ftp-password | Interactively specify alternate password for FTP. |

--ftp-host <string> | FTP host to use for FTP upload. |

--ftp-path <string> | Path on FTP server to use for FTP upload. |

--ftp-port <string> | Specifies alternate FTP port for upload. |

--ftp-proxy <string> | Proxy server to use for FTP upload. |

--ftp-proxy-port <integer> | Proxy server port to use for FTP upload. |

--ftp-mode <value> | Mode of FTP file transfer. Valid values are both, active, and passive. |

--ftp-user <string> | FTP user to use for FTP upload. |

--ftp-pass <string> | Specify alternative password for FTP. |

--ftp-ssl-cert <string> | Specifies the SSL certificate to use in FTPS connection. |

--ftp-upload-insecure <boolean> | Whether to attempt a plaintext FTP upload. |

--ftp-upload-pass <string> | Password to use for the FTP upload user. |

--set-ftp-upload-pass | Specify the FTP upload password interactively. |

When a logfile gather arrives at Dell, it is automatically unpacked by a support process and analyzed using the logviewer tool.

Author: Nick Trimbee

PowerScale Security Baseline Checklist

Tue, 16 Apr 2024 22:36:48 -0000

|Read Time: 0 minutes

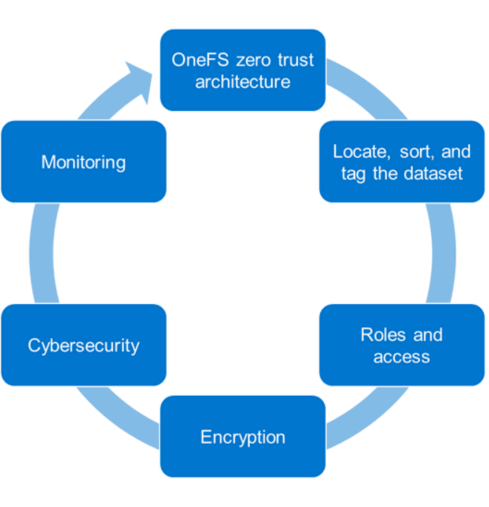

As a security best practice, a quarterly security review is recommended. An aggressive security posture for a PowerScale cluster comprises several facets, not all of which apply to every organization. An organization’s industry, clients, business, and IT administrative requirements determine what is applicable. To ensure an aggressive security posture for a PowerScale cluster, use the checklist in the following table as a baseline for security.

This table serves as a security baseline and must be adapted to specific organizational requirements. See the Dell PowerScale OneFS: Security Considerations | Dell Technologies Info Hub white paper for a comprehensive explanation of the concepts in the table below.

Further, cluster security is not a single event. It is an ongoing process: Monitor this blog for updates. As new updates become available, this post will be updated. Consider implementing an organizational security review on a quarterly basis.

The items listed in the following checklist are not in order of importance or hierarchy but rather form an aggressive security posture as more features are implemented.

Security feature | Configuration | References and notes | Complete (Y/N) | Notes |

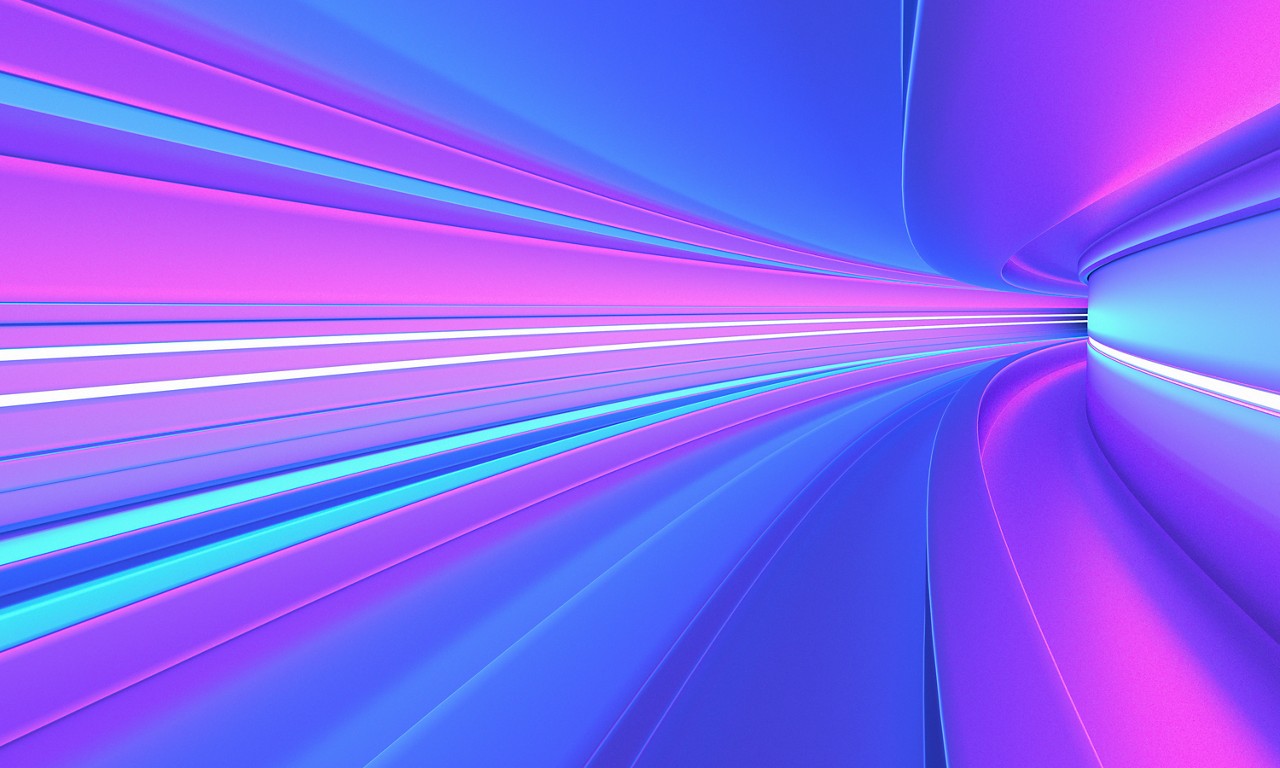

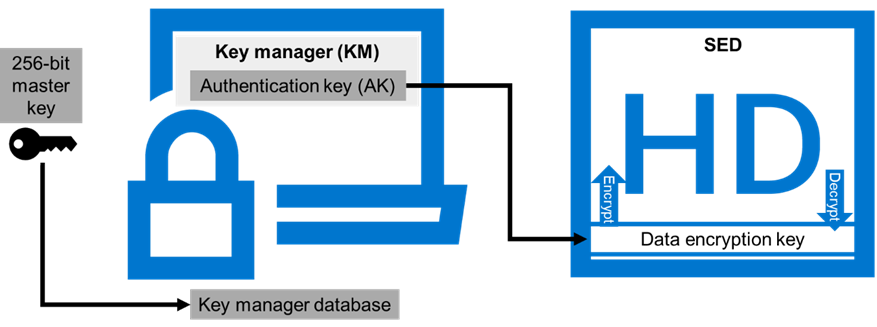

Data at Rest Encryption | Implement external key manager with SEDs | Overview | Dell PowerScale OneFS: Security Considerations | Dell Technologies Info Hub | | |

Data in flight encryption | Encrypt protocol communication and data replication | Dell PowerScale: Solution Design and Considerations for SMB Environments (delltechnologies.com); PowerScale OneFS NFS Design Considerations and Best Practices | Dell Technologies Info Hub; Dell PowerScale SyncIQ: Architecture, Configuration, and Considerations | Dell Technologies Info Hub | | |

Role Based Access Control (RBAC) | Assign the lowest possible access required for each role | PowerScale OneFS Authentication, Identity Management, and Authorization | Dell Technologies Info Hub | | |

Multifactor authentication | | | | |

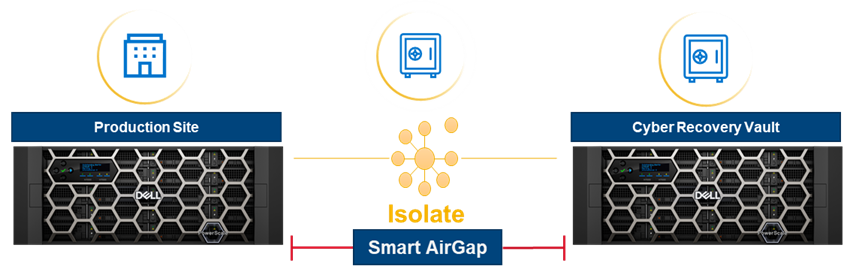

Cybersecurity | PowerScale Cyber Protection Suite Reference Architecture | Dell Technologies Info Hub | | | |

Monitoring | Monitor cluster activity | | | |

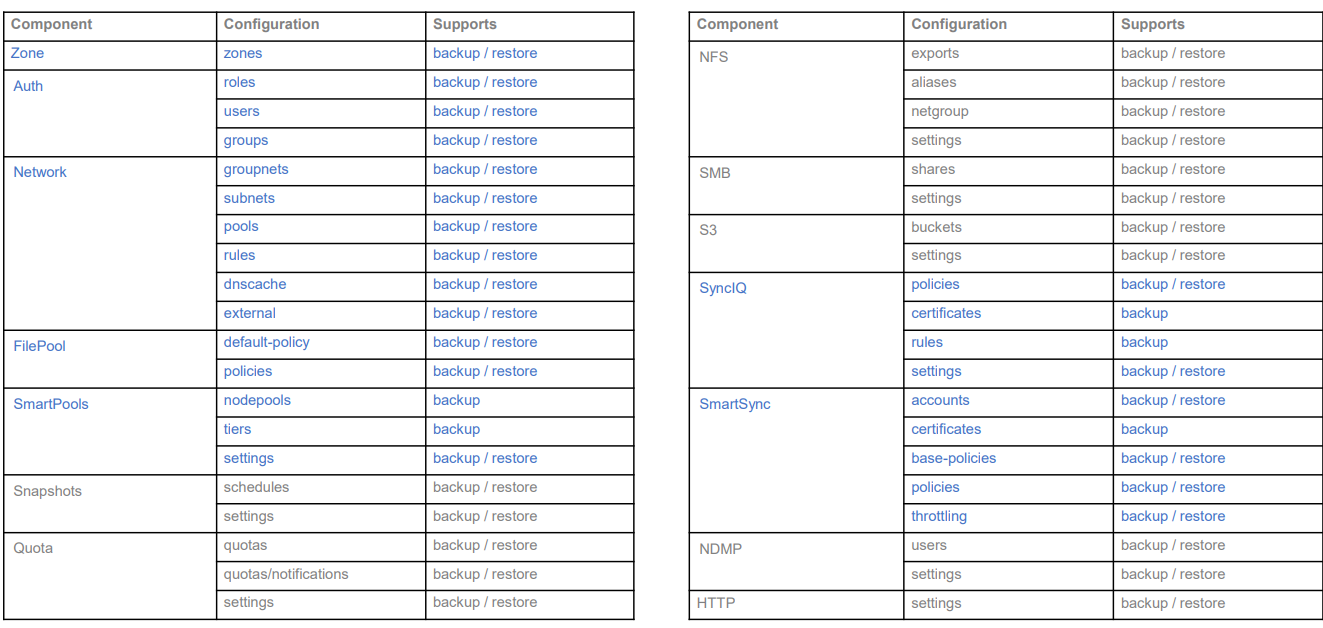

Cluster configuration backup and recovery | Ensure quarterly cluster backups | Backing Up and Restoring PowerScale Cluster Configurations in OneFS 9.7 | Dell Technologies Info Hub | | |

Secure Boot | Configure PowerScale Secure Boot | Overview | Dell PowerScale OneFS: Security Considerations | Dell Technologies Info Hub | | |

Auditing | Configure auditing | | | |

Custom applications | Create a custom application for cluster monitoring | GitHub - Isilon/isilon_sdk: Official repository for isilon_sdk | | |

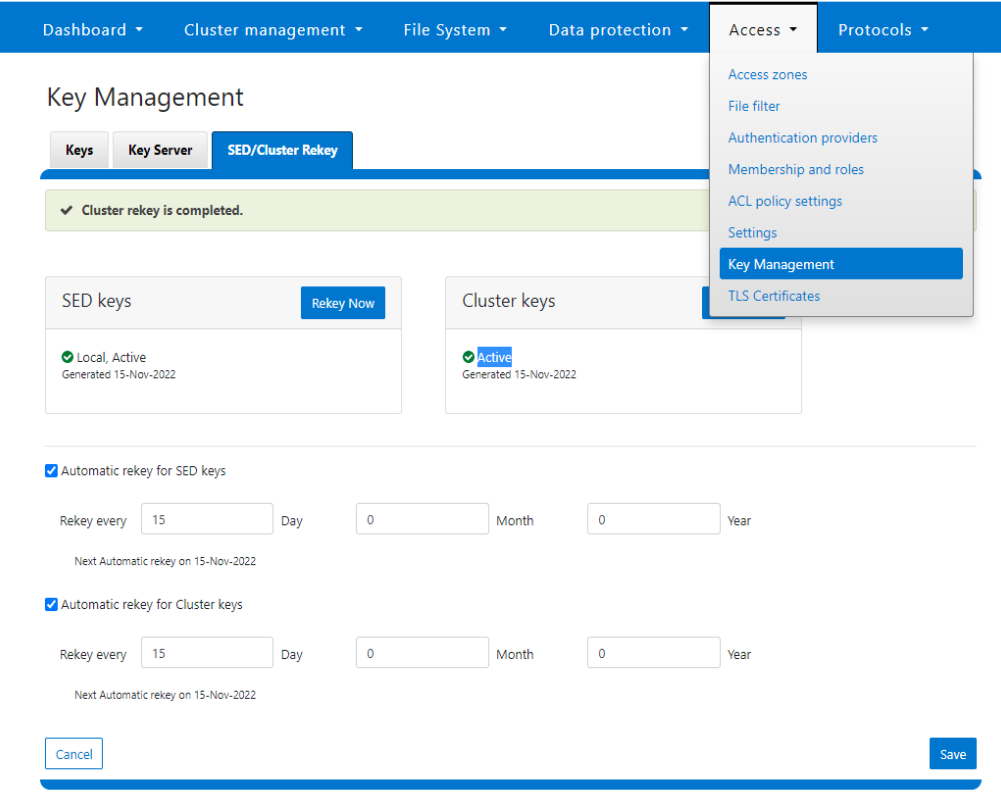

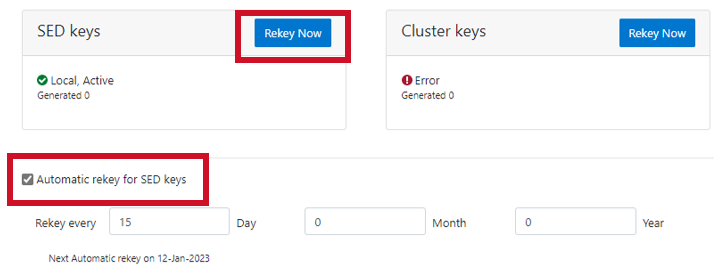

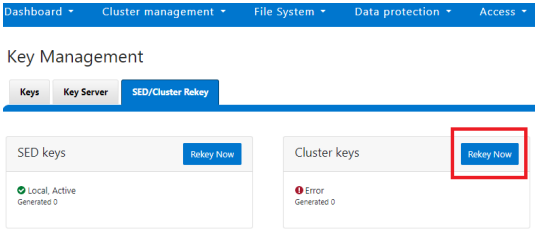

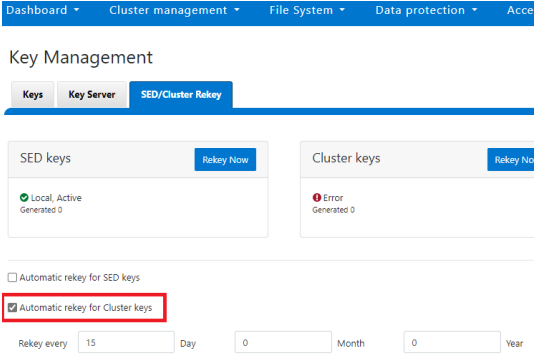

SED and cluster Universal Key rekey | Set a frequency to automatically rekey the Universal Key for SEDs and the cluster | Cluster services rekey | Dell PowerScale OneFS: Security Considerations | Dell Technologies Info Hub | | |

Perform a quarterly security review | Review all organizational security requirements and current implementation. Check this paper and checklist for updates: | | | |

General cluster security best practices | See the best practices section of the Security Configuration Guide for the relevant release, at PowerScale OneFS Info Hubs | Dell US | | | |

Login, authentication, and privileges best practices | | | | |

SNMP security best practices | | | | |

SSH security best practices | | | | |

Data-access protocols best practices | | | | |

Web interface security best practices | | | | |

Anti-virus | PowerScale: AntiVirus Solutions | Dell Technologies Info Hub | | | |

Author: Aqib Kazi – Senior Principal Engineering Technologist

OneFS SyncIQ and Windows File Create Date

Tue, 16 Apr 2024 17:15:51 -0000

|Read Time: 0 minutes

In the POSIX world, files typically possess three fundamental timestamps:

Timestamp | Alias | Description |

Access | atime | Access timestamp of the last read. |

Change | ctime | Status change timestamp of the last update to the file's metadata. |

Modify | mtime | Modification timestamp of the last write. |

These timestamps can be easily viewed from a variety of file system tools and utilities. For example, in this case running ‘stat’ from the OneFS CLI:

# stat -x tstr

File: "tstr"

Size: 0 FileType: Regular File

Mode: (0600/-rw-------) Uid: ( 0/ root) Gid: ( 0/ wheel)

Device: 18446744073709551611,18446744072690335895 Inode: 5103485107 Links: 1

Access: Mon Sep 11 23:12:47 2023

Modify: Mon Sep 11 23:12:47 2023

Change: Mon Sep 11 23:12:47 2023

A typical instance of a change, or “ctime”, timestamp update occurs when a file’s access permissions are altered. Since modifying the permissions doesn’t physically open the file (that is, access the file’s data), its “atime” field is not updated. Similarly, since no modification is made to the file’s contents, the “mtime” also remains unchanged. However, the file’s metadata has been changed, and the “ctime” field is used to record this event. As such, the “ctime” stamp allows a workflow such as a backup application to know to make a fresh copy of the file, including its updated permission values. Similarly, a file rename is another operation that modifies its “ctime” entry without affecting the other timestamps.
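This behavior is easy to observe on any POSIX system. The following sketch creates a scratch file, changes only its permissions, and compares the three timestamps before and after. It is a generic illustration rather than anything OneFS-specific, and it assumes POSIX semantics (on Windows, Python's `st_ctime` has historically reported creation time instead).

```python
import os
import tempfile
import time

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "tstr")
    with open(path, "w") as handle:
        handle.write("data")
    os.chmod(path, 0o600)
    before = os.stat(path)

    time.sleep(0.05)          # let the clock advance past timestamp granularity
    os.chmod(path, 0o644)     # metadata-only change: no read, no write
    after = os.stat(path)

mtime_unchanged = after.st_mtime_ns == before.st_mtime_ns
atime_unchanged = after.st_atime_ns == before.st_atime_ns
ctime_advanced = after.st_ctime_ns > before.st_ctime_ns
print("mtime unchanged:", mtime_unchanged)
print("atime unchanged:", atime_unchanged)
print("ctime advanced:", ctime_advanced)
```

On a POSIX file system, all three checks would be expected to hold, since the permission change touches only the inode's metadata.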

Certain other file systems also include a fourth timestamp: namely the “birthtime” of when the file was created. Birthtime (by definition) should never change. It’s also an attribute which organizations and their storage administrators may or may not care about.

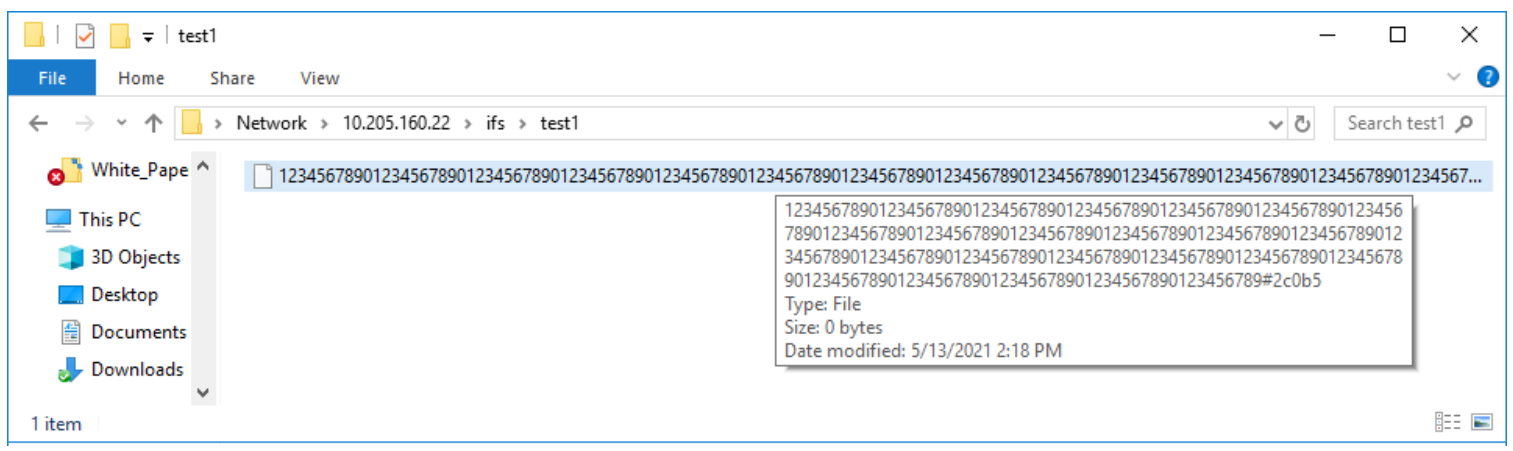

Within the Windows file system realm, this “birthtime” timestamp is affectionately known as “create date”. The create date of a file is essentially the date and time when its inode is “born”.

Note that this is not a recognized POSIX attribute, like ctime or mtime. Rather, it is something that was introduced as part of Windows compatibility requirements. And, because it’s a birthtime, linking operations do not necessarily affect it unless a new inode is created.

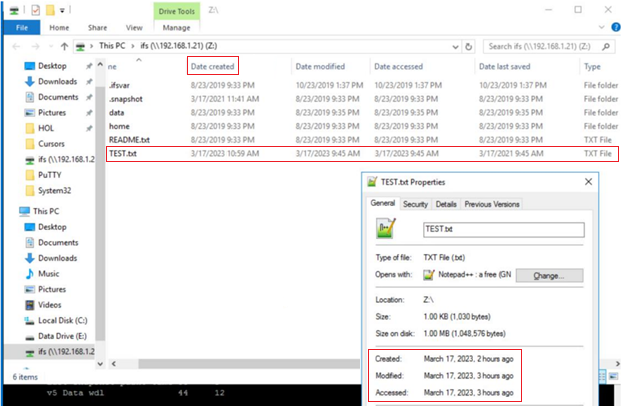

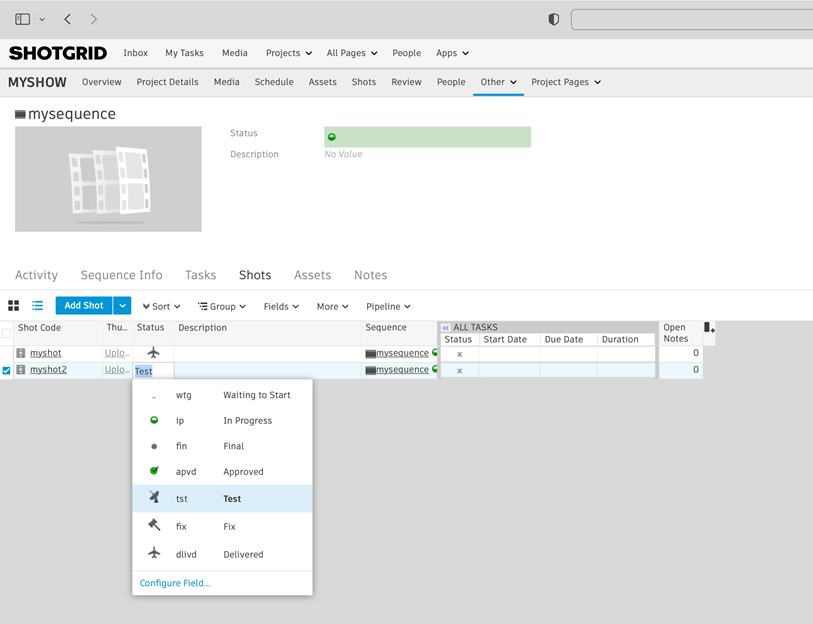

As shown below, this create, or birth, date can differ from a file’s modified or accessed dates because the creation date is when that file’s inode version originated. So, for instance, if a file is copied, the new file’s create date will be set to the current time since it has a new inode. This can be seen in the following example where a file is copied from a flash drive mounted on a Windows client’s file system under drive “E:”, to a cluster’s SMB share mounted at drive “Z:”.

The “Date created” date above is ahead in time of both the “accessed” and “modified”, because the latter two were merely inherited from the source file, whereas the create date was set when the copy was made.
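The same effect can be reproduced locally with a metadata-preserving copy. In this sketch, a source file is backdated, then copied with `shutil.copy2`, which carries over the access and modification times but cannot carry over the birth time, because the copy is a new inode. The file names are arbitrary, and `st_birthtime` is only exposed on some platforms (for example, BSD-derived systems), so the code falls back to `None` elsewhere.

```python
import os
import shutil
import tempfile

OLD = 1_600_000_000  # an arbitrary time in 2020, as seconds since the epoch

with tempfile.TemporaryDirectory() as tmp:
    src = os.path.join(tmp, "source.txt")
    dst = os.path.join(tmp, "copy.txt")
    with open(src, "w") as handle:
        handle.write("hello")
    os.utime(src, (OLD, OLD))        # backdate atime and mtime on the source

    shutil.copy2(src, dst)           # copies data plus atime/mtime metadata
    copied = os.stat(dst)

inherited_mtime = int(copied.st_mtime)
# st_birthtime is not exposed on every platform, so fall back to None.
birthtime = getattr(copied, "st_birthtime", None)
print("mtime inherited from source:", inherited_mtime == OLD)
print("birthtime of the copy:", birthtime)
```

Where a birth time is reported, it reflects the moment of the copy rather than the backdated source time, which is the same pattern seen in the Windows example.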

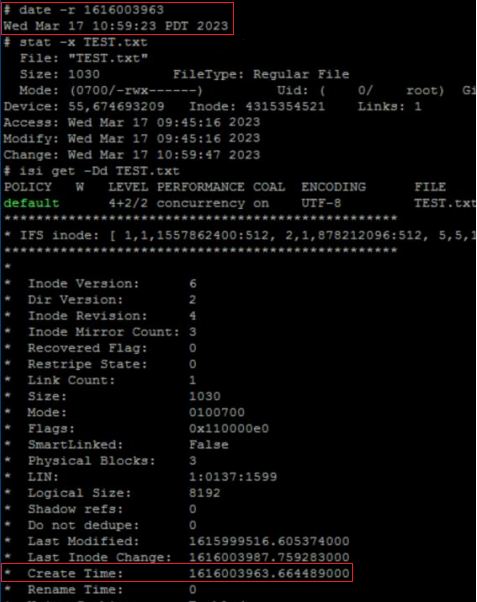

The corresponding “date”, “stat”, and “isi get” CLI output from the cluster confirms this:

# stat TEST.txt

18446744072690400151 5103485107 -rw------- 1 root wheel 18446744073709551615 0 "Sep 11 23:12:47 2023" "Sep 11 23:12:47 2023" "Sep 11 23:12:47 2023" "Sep 11 23:12:47 2023" 8192 48 0xe0 tstr

# isi get -Dd TEST.txt

POLICY W LEVEL PERFORMANCE COAL ENCODING FILE IADDRS

default 16+2/2 concurrency on UTF-8 tstr <34,12,58813849600:8192>, <35,3,58981457920:8192>, <69,12,57897025536:8192> ct: 1694473967 rt: 0

*************************************************

* IFS inode: [ 34,12,58813849600:8192, 35,3,58981457920:8192, 69,12,57897025536:8192 ]

*************************************************

*

* Inode Version: 8

* Dir Version: 2

* Inode Revision: 1

* Inode Mirror Count: 3

* Recovered Flag: 0

* Restripe State: 0

* Link Count: 1

* Size: 0

* Mode: 0100600

* Flags: 0xe0

* SmartLinked: False

* Physical Blocks: 0

* Phys. Data Blocks: 0

* Protection Blocks: 0

* LIN: 1:3031:00b3

* Logical Size: 0

* Shadow refs: 0

* Do not dedupe: 0

* In CST stats: False

* Last Modified: 1694473967.071973000

* Last Inode Change: 1694473967.071973000

* Create Time: 1694473967.071973000

* Rename Time: 0

<snip>

In releases before OneFS 9.5, when a file is replicated, its create date is timestamped when that file was copied from the source cluster. This means when the replication job ran, or, more specifically, when the individual job worker thread got around to processing that specific file.

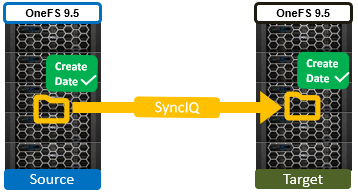

By way of contrast, OneFS 9.5 and later releases ensure that SyncIQ replicates the full array of metadata, preserving all values, including that of the birth time / create date.

The primary consideration for the new create date functionality is that it requires both source and target clusters in a replication set to be running OneFS 9.5 or later.

If either the source or the target is running pre-9.5 code, this time field retains its old behavior of being set to the time of replication (actual file creation) rather than the correct value associated with the source file.

In OneFS 9.5 and later releases, create date timestamping works exactly the same way as SyncIQ replication of other metadata (such as “mtime”, etc), occurring automatically as part of every file replication. Plus, no additional configuration is necessary beyond upgrading both clusters to OneFS 9.5 or later.

One other significant thing to note about this feature is that SyncIQ is changelist-based, using OneFS snapshots under the hood for its checkpointing and delta comparisons. This means that, if a replication relationship was configured prior to an upgrade to OneFS 9.5 or later, the source cluster will have valid birthtime data, but the target’s birthtime data will reflect the local creation time of the files it has copied.

Note that, upon upgrading both sides to OneFS 9.5 or later and running a SyncIQ job, nothing will change. This is because SyncIQ will perform its snapshot comparison, determine that no changes were made to the dataset, and so will not perform any replication work. However, if a source file is “touched” so that its mtime is changed (or any other action is performed that causes a copy-on-write, or CoW), the file will show up in the snapshot diff and therefore be replicated. As part of replicating that file, the correct birth time will be written on the target.

Note that a full replication (re)sync is not triggered as a result of upgrading a replication cluster pair to OneFS 9.5 or later and thereby enabling this functionality. Instead, any create date timestamp resolution happens opportunistically and in the background as files get touched or modified, and thereby replicated. Be aware that ‘touching’ a file does change its modification time, in addition to updating the create date, which may be undesirable.
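As a rough illustration of what “touching” a file means here, the following Python sketch bumps a file's mtime to the current time and reports the old and new values. The function name `touch_for_resync` and the sample file are hypothetical and used only for illustration. The sketch runs against a throwaway temporary file rather than cluster data, and it does not invoke SyncIQ in any way.

```python
import os
import tempfile
import time
from pathlib import Path


def touch_for_resync(path: Path) -> tuple[float, float]:
    """Bump a file's mtime to 'now' and return (old_mtime, new_mtime).

    A changed mtime is what makes the file differ between snapshots.
    Note that the file's previous mtime is lost in the process.
    """
    old_mtime = path.stat().st_mtime
    os.utime(path, None)  # None sets both atime and mtime to the current time
    return old_mtime, path.stat().st_mtime


if __name__ == "__main__":
    # Demonstrate on a throwaway file (hypothetical name) in a temp directory.
    with tempfile.TemporaryDirectory() as tmp:
        sample = Path(tmp) / "example.dat"
        sample.write_bytes(b"")
        # Backdate the file by an hour so the change is easy to see.
        backdated = time.time() - 3600
        os.utime(sample, (backdated, backdated))
        old, new = touch_for_resync(sample)
        print(f"mtime moved forward by {new - old:.0f} seconds")
```

Because the previous mtime is overwritten, a script like this should only be pointed at files whose modification time is not significant to the workflow.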

Author: Nick Trimbee

Securing PowerScale OneFS SyncIQ

Tue, 16 Apr 2024 17:55:56 -0000

In the data replication world, ensuring your PowerScale clusters' security is paramount. SyncIQ, a powerful data replication tool, requires encryption to prevent unauthorized access.

Concerns about unauthorized replication

A cluster might inadvertently become the target of numerous replication policies, potentially overwhelming its resources. There’s also the risk of an administrator mistakenly specifying the wrong cluster as the replication target.

Best practices for security

To secure your PowerScale cluster, Dell recommends enabling SyncIQ encryption as per Dell Security Advisory DSA-2020-039: Dell EMC Isilon OneFS Security Update for a SyncIQ Vulnerability | Dell US. This feature, introduced in OneFS 8.2, prevents man-in-the-middle attacks and addresses other security concerns.

Encryption in new and upgraded clusters

SyncIQ is disabled by default for new clusters running OneFS 9.1. When SyncIQ is enabled, a global encryption flag requires all SyncIQ policies to be encrypted. This flag is also set for clusters upgraded to OneFS 9.1, unless there’s an existing SyncIQ policy without encryption.

Alternative measures

For clusters running versions earlier than OneFS 8.2, configuring a SyncIQ pre-shared key (PSK) offers protection against unauthorized replication policies.

By following these security measures, administrators can ensure that their PowerScale clusters are safeguarded against unauthorized access and maintain the integrity and confidentiality of their data.

SyncIQ encryption: securing data in transit

Securing information as it moves between systems is paramount in the data-driven world. Dell PowerScale OneFS release 8.2 has brought a game-changing feature to the table: end-to-end encryption for SyncIQ data replication. This ensures that data is not only protected while at rest but also as it traverses the network between clusters.

Why encryption matters

Data breaches can be catastrophic, and because data replication involves moving large volumes of sensitive information, encryption acts as a critical shield. With SyncIQ’s encryption, organizations can enforce a global setting that mandates encryption across all SyncIQ policies, to add an extra layer of security.

Test before you implement

It’s crucial to test SyncIQ encryption in a lab environment before deploying it in production. Although encryption introduces minimal overhead, its impact on workflow can vary based on several factors, such as network bandwidth and cluster resources.

Technical underpinnings

SyncIQ encryption is powered by X.509 certificates, TLS version 1.2, and OpenSSL version 1.0.2o. These certificates are meticulously managed within the cluster’s certificate stores, ensuring a robust and secure data replication process.
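To make the combination of X.509 certificates and TLS 1.2 more concrete, here is a generic sketch using Python's standard `ssl` module. It is not how OneFS implements SyncIQ encryption. It only shows what a client-side TLS context looks like when it refuses protocol versions below 1.2 and requires the peer to present a certificate signed by a trusted CA. The function name and its file path parameters are assumptions made for illustration.

```python
import ssl


def build_mutual_tls_context(ca_file=None, cert_file=None, key_file=None):
    """Return a client-side context that refuses anything below TLS 1.2.

    ca_file:   CA certificate used to validate the peer (optional here).
    cert_file: this side's own certificate, presented to the peer.
    key_file:  private key matching cert_file.
    """
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    context.check_hostname = True
    context.verify_mode = ssl.CERT_REQUIRED  # peer must present a valid X.509 cert
    if ca_file:
        context.load_verify_locations(cafile=ca_file)
    if cert_file:
        # Presenting our own certificate is what makes the exchange mutual.
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return context


if __name__ == "__main__":
    ctx = build_mutual_tls_context()
    print(ctx.minimum_version.name, ctx.verify_mode.name)
```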

Remember, this is just the beginning of a comprehensive guide about SyncIQ encryption. Stay tuned for more insights about configuration steps and best practices for securing your data with Dell PowerScale’s innovative solutions.

Configuration

Configuring SyncIQ encryption requires a supported OneFS release, certificates, and finally, the OneFS configuration. Before enabling SyncIQ encryption in production, test it in a lab environment that mimics the production setup. Measure the impact on transmission overhead by considering network bandwidth, cluster resources, workflow, and policy configuration.

Here’s a high level summary of the configuration steps:

1. Ensure compatibility:

   - Ensure that the source and target clusters are running OneFS 8.2 or later.

   - Upgrade and commit both clusters to OneFS release 8.2 or later.

2. Create X.509 certificates:

   - Create X.509 certificates for the source and target clusters using publicly available tools.

   - The certificate creation process results in the following components:

     - Certificate Authority (CA) certificate

     - Source certificate and private key

     - Target certificate and private key

   Note: Some certificate authorities may not generate the public and private key pairs. In that case, manually generate a Certificate Signing Request (CSR) and obtain signed certificates.

3. Transfer certificates to clusters:

   - Transfer the certificates to each cluster.

4. Activate each certificate as follows:

   - Add the source cluster certificate under Data Protection > SyncIQ > Certificates.

   - Add the target server certificate under Data Protection > SyncIQ > Settings.

   - Add the Certificate Authority under Access > TLS Certificates and select Import Authority.

5. Enforce encryption:

   - Each cluster stores its certificate and its peer’s certificate.

   - The source cluster must store the target cluster’s certificate, and vice versa.

   - Storing the peer’s certificate creates a list of approved clusters for data replication.

By following these steps, you can secure your data in transit between PowerScale clusters using SyncIQ encryption. Remember to customize the certificates and settings according to your specific environment and requirements.
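The idea in the final step, that storing a peer's certificate builds a list of approved clusters, can be modeled conceptually as follows. This is a simplified sketch and not the OneFS certificate store: the class name `PeerCertificateStore`, the use of SHA-256 fingerprints, and the placeholder certificate bytes are all assumptions made for illustration.

```python
import hashlib


def fingerprint(cert_der: bytes) -> str:
    """Return the SHA-256 fingerprint of a certificate's raw bytes."""
    return hashlib.sha256(cert_der).hexdigest()


class PeerCertificateStore:
    """Hypothetical model of a per-cluster list of approved peer certificates."""

    def __init__(self):
        self._approved = set()

    def add_peer(self, cert_der: bytes) -> None:
        # Importing a peer's certificate approves that peer for replication.
        self._approved.add(fingerprint(cert_der))

    def is_approved(self, cert_der: bytes) -> bool:
        # Only peers whose certificate was previously stored are accepted.
        return fingerprint(cert_der) in self._approved


if __name__ == "__main__":
    # Placeholder bytes stand in for real DER-encoded certificates.
    source_cert = b"source-cluster-certificate"
    unknown_cert = b"some-other-cluster-certificate"

    target_store = PeerCertificateStore()
    target_store.add_peer(source_cert)

    print(target_store.is_approved(source_cert))   # approved source
    print(target_store.is_approved(unknown_cert))  # never imported
```

In this model, the same store would also exist on the source cluster holding the target's certificate, which mirrors the requirement that each side stores its peer's certificate.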

For more detailed information about configuring SyncIQ encryption, see SyncIQ encryption | Dell PowerScale SyncIQ: Architecture, Configuration, and Considerations | Dell Technologies Info Hub.

SyncIQ pre-shared key

A SyncIQ pre-shared key (PSK) is configured solely on the target cluster to restrict policies from source clusters without the PSK.

Use Cases: This is recommended for environments where SyncIQ encryption is not in use, such as clusters running releases earlier than OneFS 8.2, or where encryption cannot be enabled for other reasons.

SmartLock Compliance: Not supported by SmartLock Compliance mode clusters; upgrading and configuring SyncIQ encryption is advised.

Policy Update: After updating source cluster policies with the PSK, no further configuration is needed. Use the isi sync policies view command to verify.

Remember, configuring the PSK will cause all replicating jobs to the target cluster to fail, so ensure that all SyncIQ jobs are complete or canceled before proceeding.
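Conceptually, the PSK acts as a gate on the target: a policy is accepted only if the source presents the same key. The sketch below models that check in Python. It is not the OneFS implementation, and the function name `policy_is_accepted` and the example key values are hypothetical.

```python
import hmac
from typing import Optional


def policy_is_accepted(target_psk: str, presented_psk: Optional[str]) -> bool:
    """Return True only if the source presented the target's pre-shared key."""
    if presented_psk is None:
        # A source policy with no PSK configured is rejected outright.
        return False
    # compare_digest avoids leaking key contents through timing differences.
    return hmac.compare_digest(target_psk.encode(), presented_psk.encode())


if __name__ == "__main__":
    target_key = "example-shared-secret"  # hypothetical value
    print(policy_is_accepted(target_key, "example-shared-secret"))
    print(policy_is_accepted(target_key, "wrong-secret"))
    print(policy_is_accepted(target_key, None))
```

The `None` case in this model corresponds to the behavior described above: once the target has a PSK, jobs from source policies that have not yet been updated with it fail.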

For more detailed information about configuring a SyncIQ pre-shared key, see SyncIQ pre-shared key | Dell PowerScale SyncIQ: Architecture, Configuration, and Considerations | Dell Technologies Info Hub.

Resources

- SyncIQ encryption | Dell PowerScale SyncIQ: Architecture, Configuration, and Considerations | Dell Technologies Info Hub

- SyncIQ pre-shared key | Dell PowerScale SyncIQ: Architecture, Configuration, and Considerations | Dell Technologies Info Hub

Author: Aqib Kazi, Senior Principal Engineering Technologist

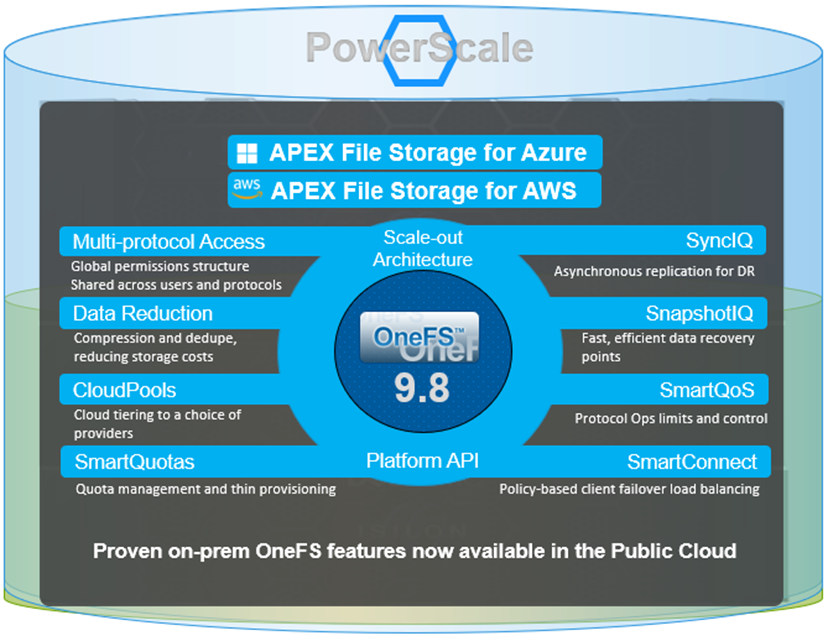

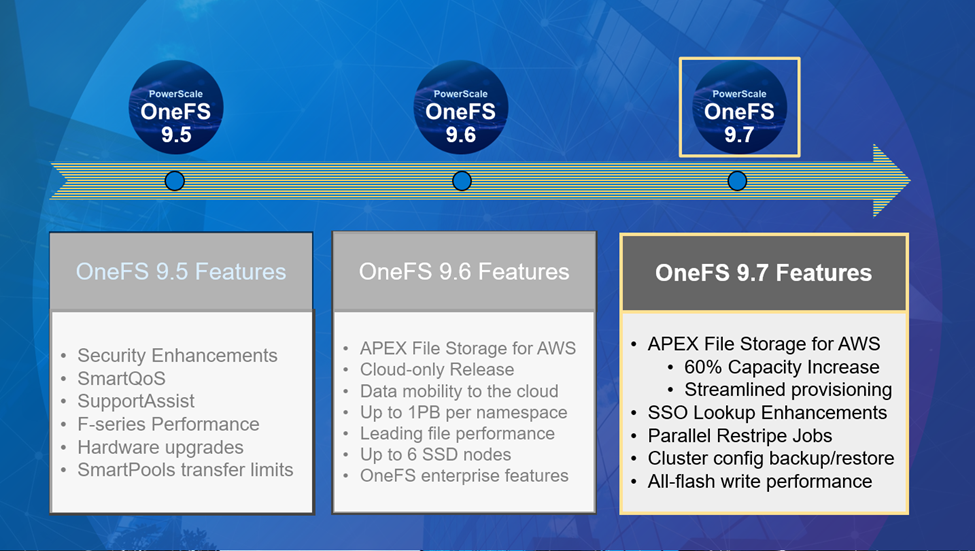

PowerScale OneFS 9.8

Tue, 09 Apr 2024 14:00:00 -0000

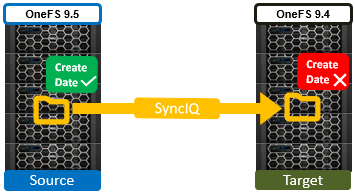

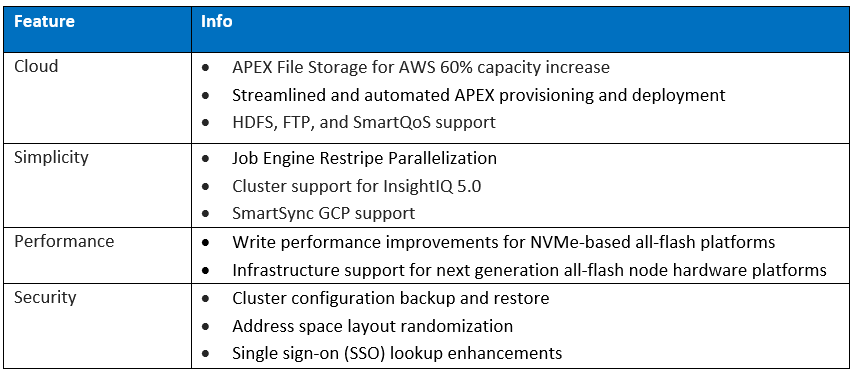

It’s launch season here at Dell Technologies, and PowerScale is already scaling up spring with the innovative OneFS 9.8 release, which shipped today, 9th April 2024. This new 9.8 release has something for everyone, introducing PowerScale innovations in cloud, performance, serviceability, and ease of use.

Figure 1. OneFS 9.8 release features

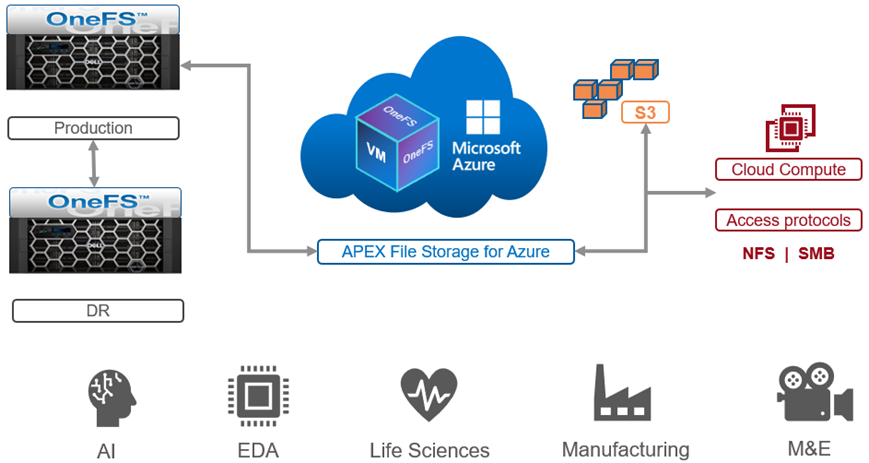

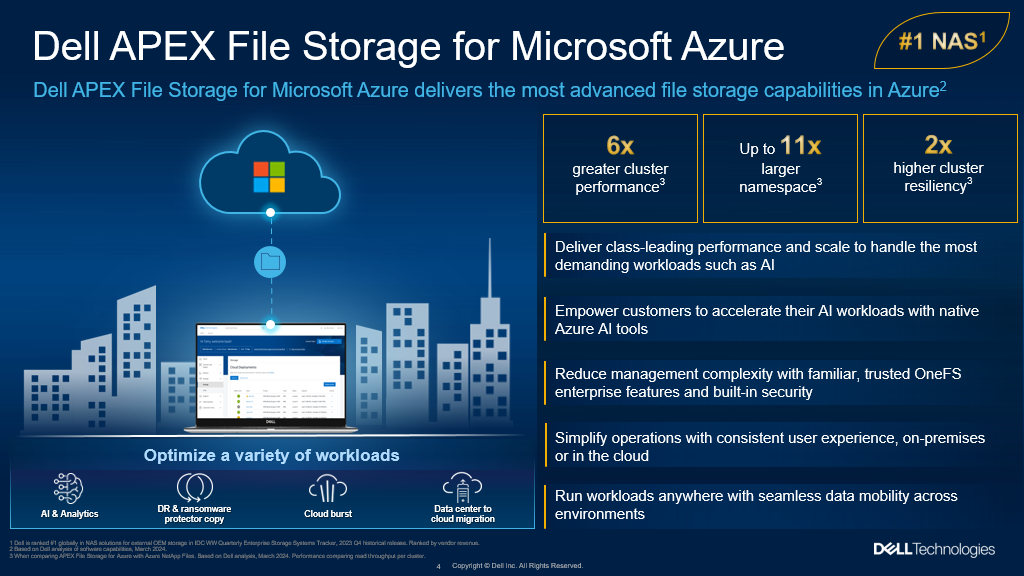

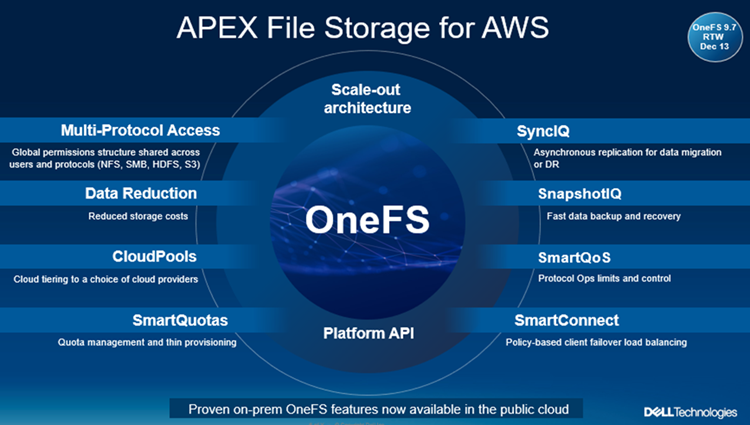

APEX File Storage for Azure

After the debut of APEX File Storage for AWS last year, OneFS 9.8 amplifies PowerScale’s presence in the public cloud by introducing APEX File Storage for Azure.

Figure 2. OneFS 9.8 APEX File Storage for Azure

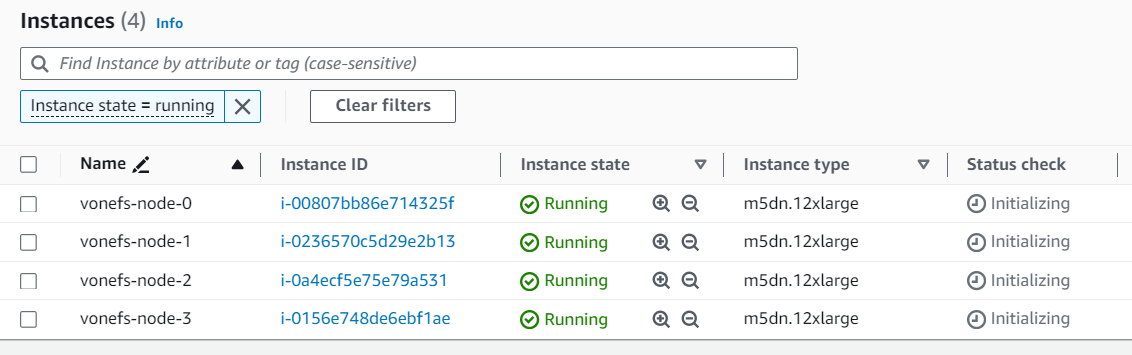

APEX File Storage for Azure provides the same OneFS software platform on-prem and in the cloud, and is customer-managed for full control. In OneFS 9.8, it offers linear capacity and performance scaling from four to eighteen SSD nodes and up to 3PB per cluster. This makes it a solid fit for AI, ML, and analytics applications, as well as traditional file shares, home directories, and vertical workloads like M&E, healthcare, life sciences, and financial services.

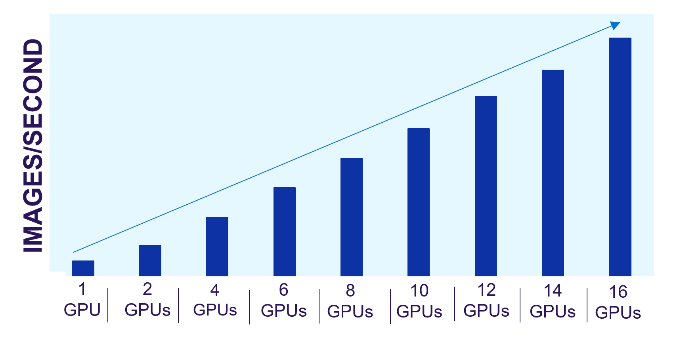

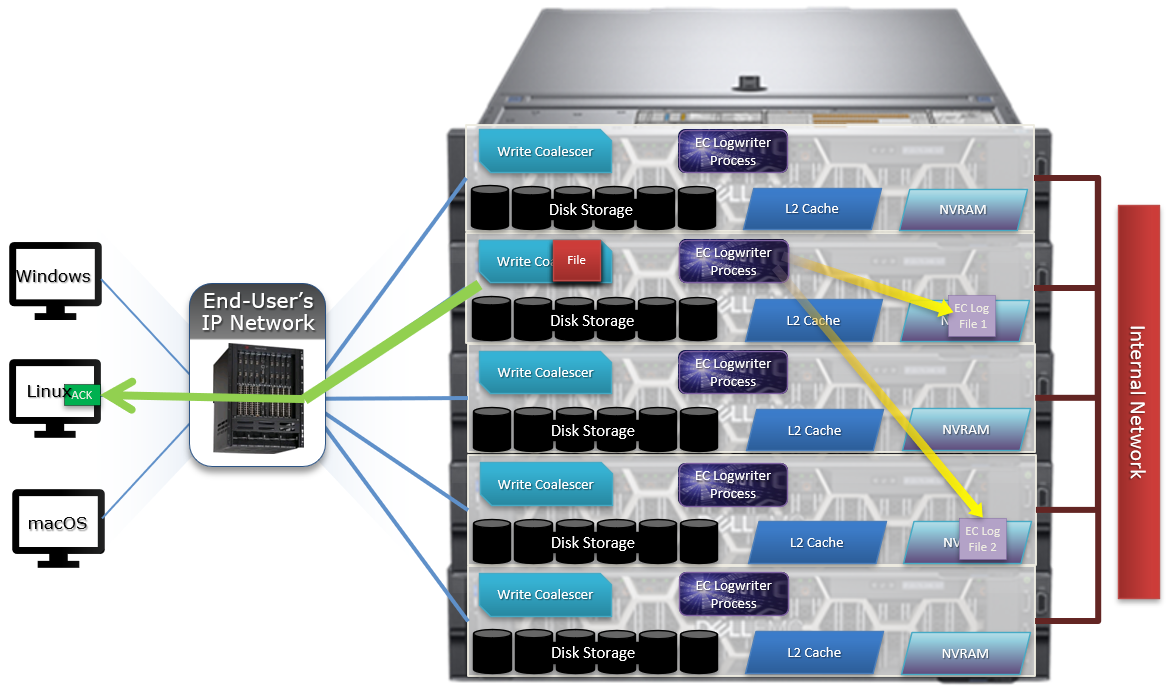

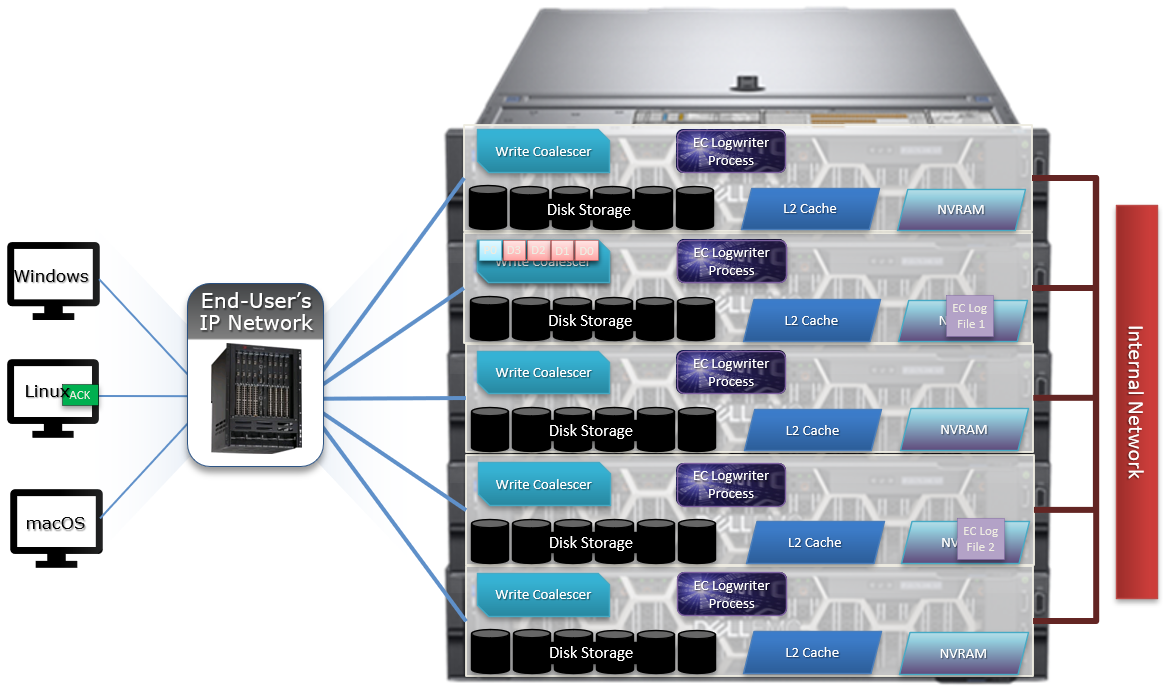

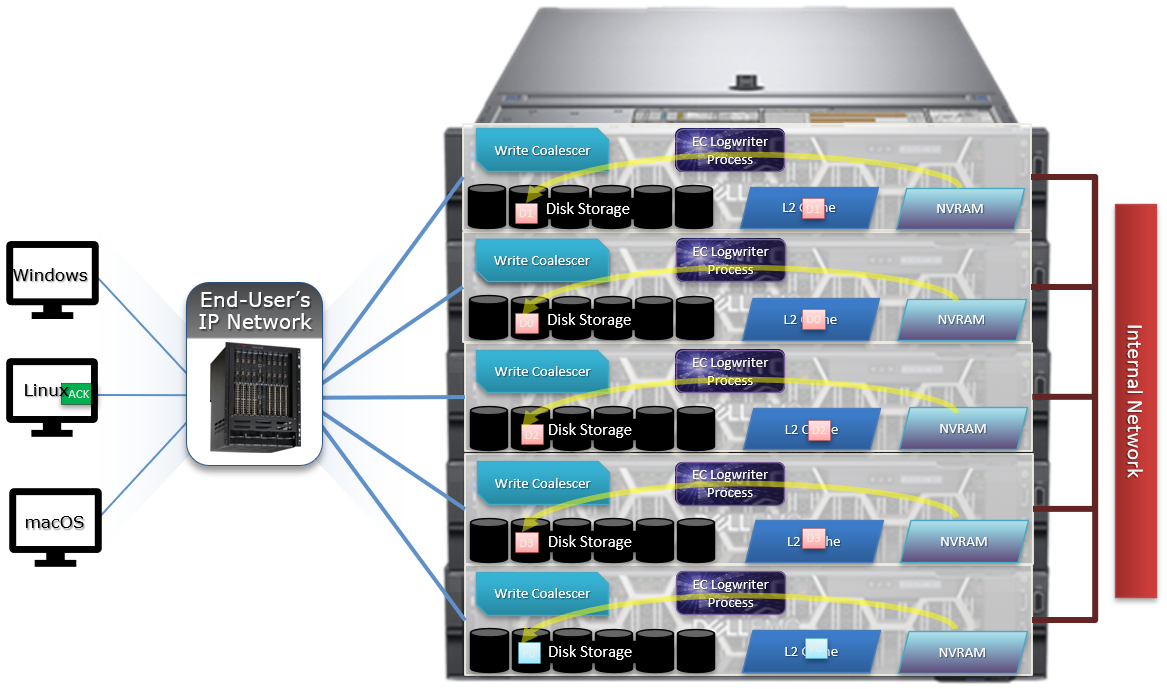

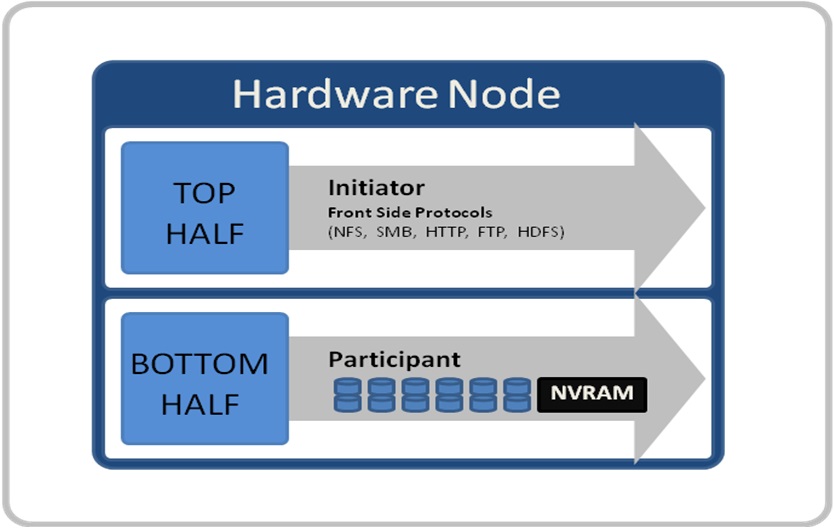

Figure 3. Dell PowerScale scale-out architecture

PowerScale’s scale-out architecture can be deployed on customer-managed AWS and Azure infrastructure, providing the capacity and performance needed to run a variety of unstructured workflows in the public cloud.

Once in the cloud, existing PowerScale investments can be further leveraged by accessing and orchestrating your data through the platform's multi-protocol access and APIs.

This includes the common OneFS control plane (CLI, WebUI, and platform API) and the same enterprise features, such as Multi-protocol, SnapshotIQ, SmartQuotas, Identity management, and so on.

Simplicity and efficiency

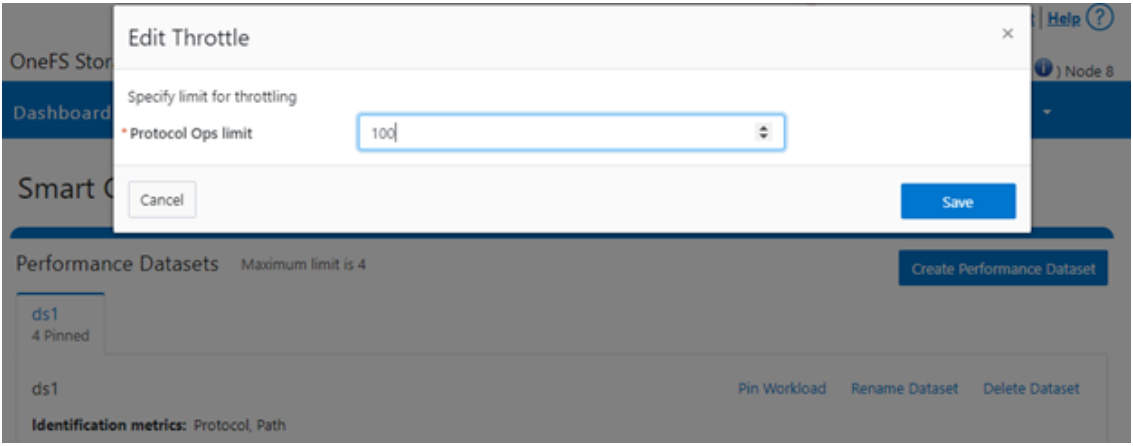

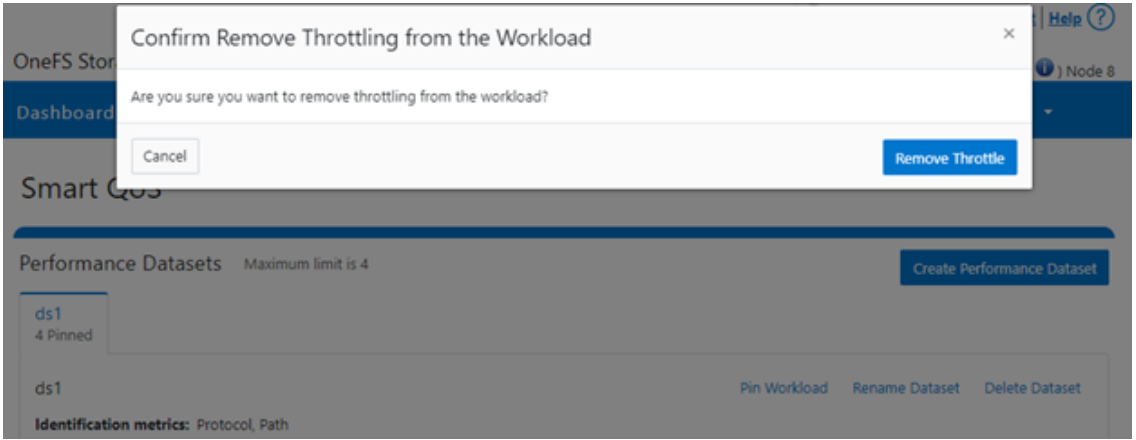

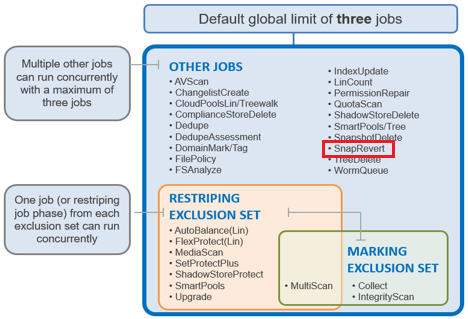

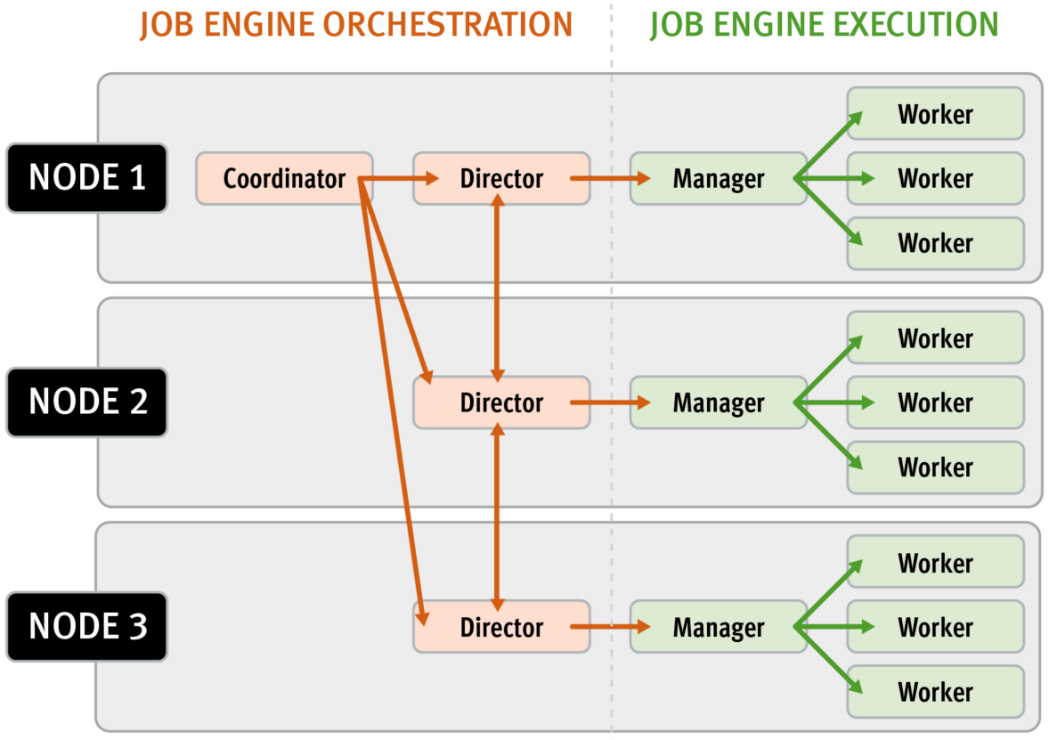

OneFS 9.8 SmartThrottling is an automated impact control mechanism for the job engine, allowing the cluster to automatically throttle job resource consumption if it exceeds pre-defined thresholds in order to prioritize client workloads.

OneFS 9.8 also delivers automatic on-cluster core file analysis, and SmartLog provides an efficient, granular log file gathering and transmission framework. Both of these new features make cluster issues dramatically easier and faster to resolve.

Performance

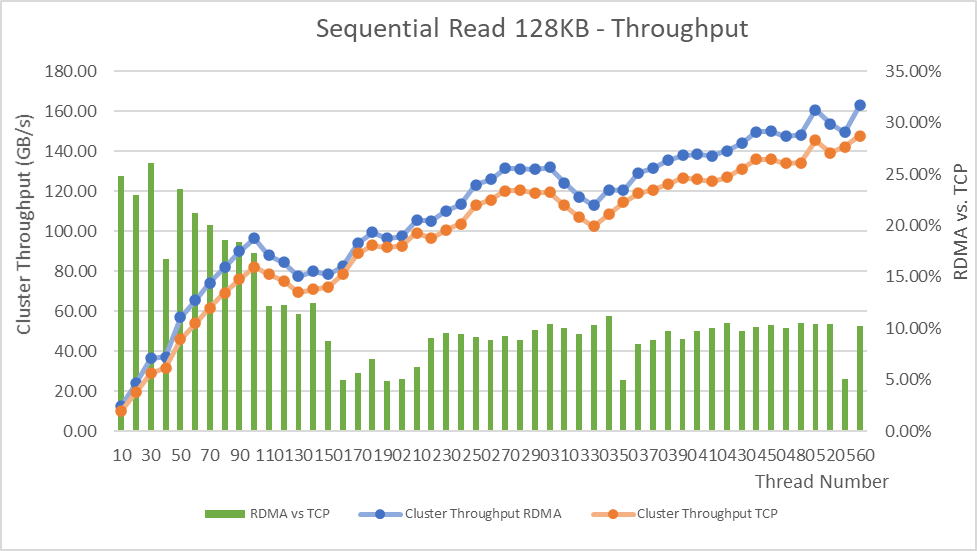

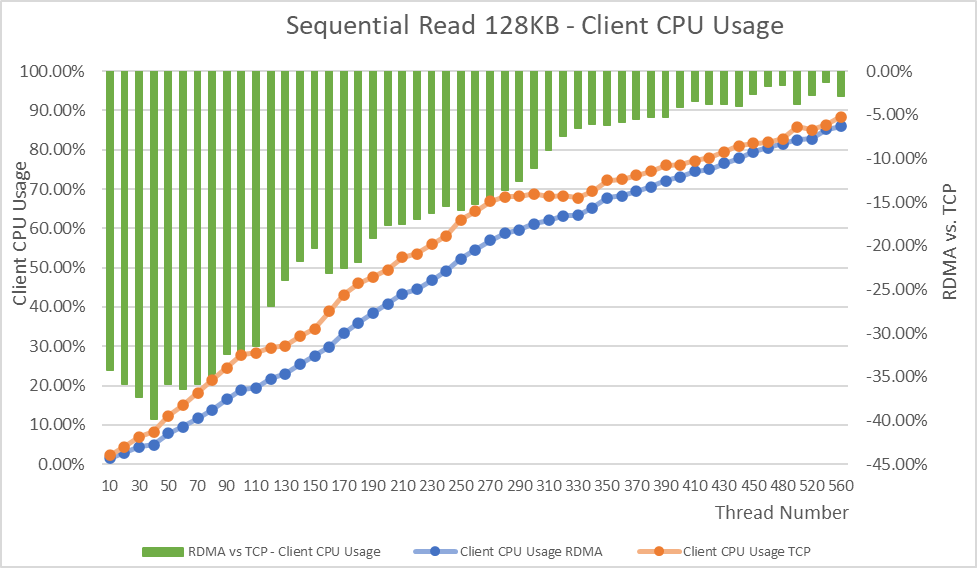

OneFS 9.8 also adds support for NFSv4.1 over Remote Direct Memory Access (RDMA) for applications and clients. This allows for substantially higher throughput, especially for single-connection and read-intensive workloads such as machine learning and generative AI model training, while also reducing both cluster and client CPU utilization. It also provides the foundation for interoperability with NVIDIA’s GPUDirect.

NFSv4.1 over RDMA in OneFS 9.8 leverages the RoCEv2 network protocol. OneFS CLI and WebUI configuration options include global enablement and IP pool configuration, filtering, and verification of RoCEv2-capable network interfaces. NFS over RDMA is available on all PowerScale platforms containing Mellanox ConnectX network adapters on the front end, with a choice of 25, 40, or 100 Gigabit Ethernet connectivity. The OneFS user interface makes it easy to identify which of a cluster’s NICs support RDMA.

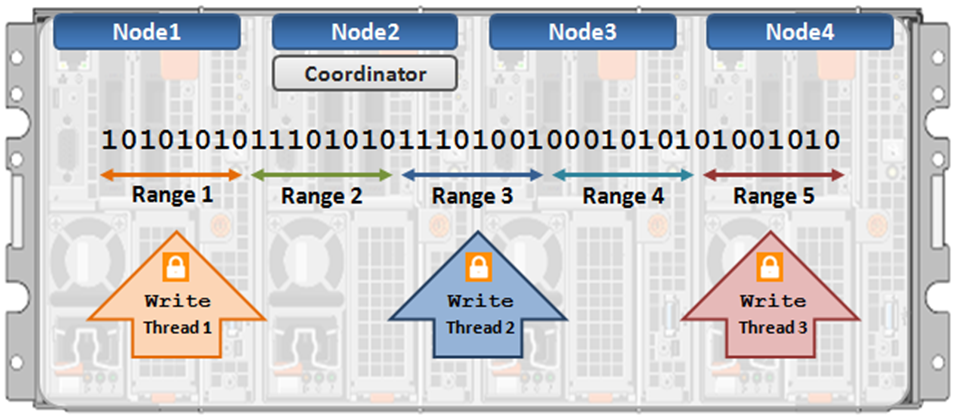

Under the hood, OneFS 9.8 introduces efficiencies such as lock sharding and parallel thread handling, delivering a substantial performance boost for streaming write-heavy workloads such as generative AI inferencing and model training. Performance scales linearly as compute is increased, keeping GPUs busy and allowing PowerScale to easily support AI and ML workflows both small and large. OneFS 9.8 also includes infrastructure support for future node hardware platform generations.

Multipath Client Driver

The addition of a new Multipath Client Driver helps expand PowerScale’s role in Dell Technologies’ strategic collaboration with NVIDIA, delivering the first and only end-to-end large scale AI system. This is based on the PowerScale F710 platform in conjunction with PowerEdge XE9680 GPU servers and NVIDIA’s Spectrum-X Ethernet switching platform to optimize performance and throughput at scale.

In summary, OneFS 9.8 brings the following new features to the Dell PowerScale ecosystem:

Feature | Info |

Cloud | |

Simplicity | |

Performance | |

Serviceability | |

We’ll be taking a deeper look at this new functionality in blog articles over the course of the next few weeks.

Meanwhile, the new OneFS 9.8 code is available on the Dell Online Support site, both as an upgrade and reimage file, allowing installation and upgrade of this new release.

Author: Nick Trimbee

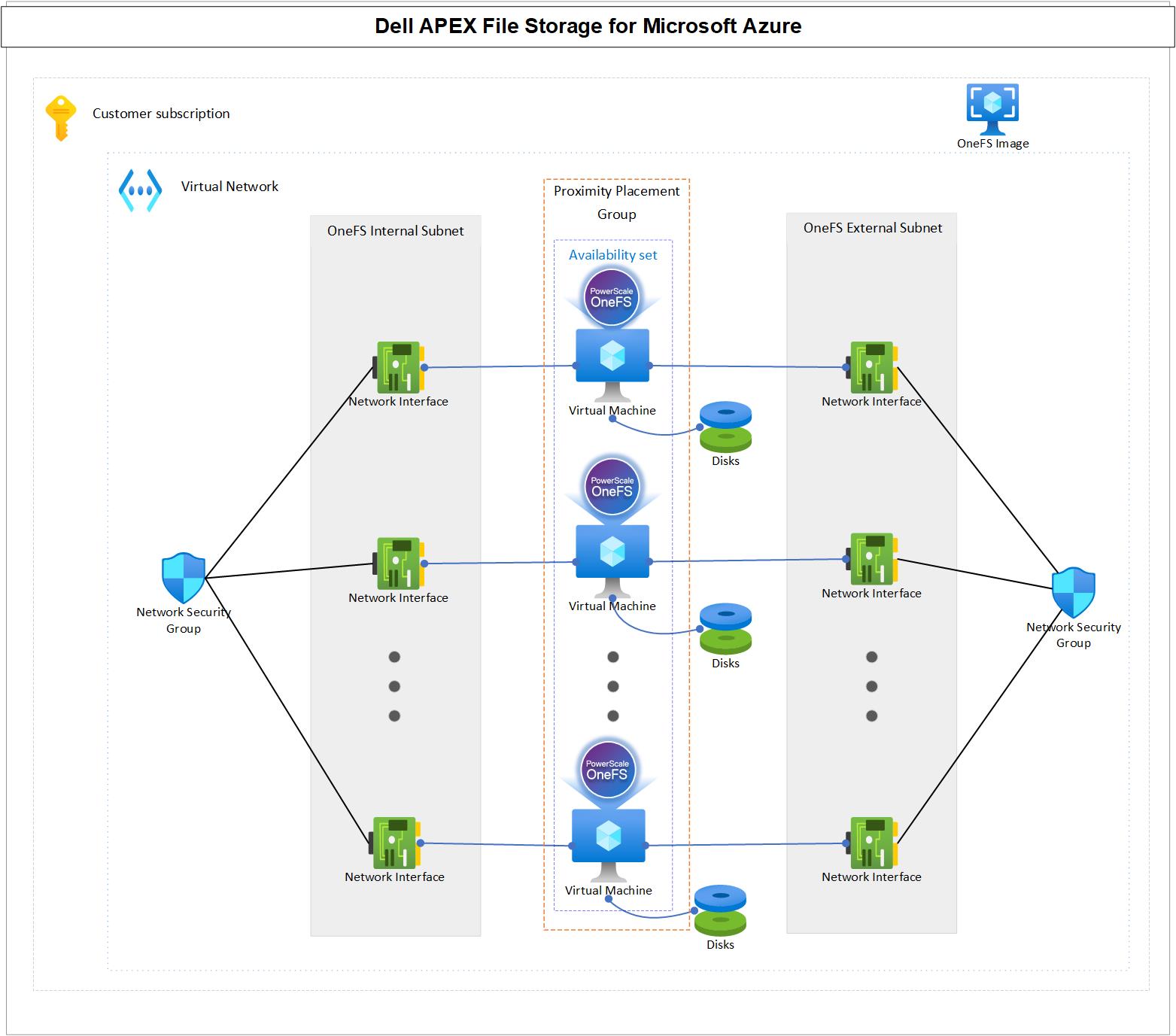

Unveiling APEX File Storage for Microsoft Azure – Running PowerScale OneFS on Azure

Tue, 09 Apr 2024 20:30:02 -0000

Overview

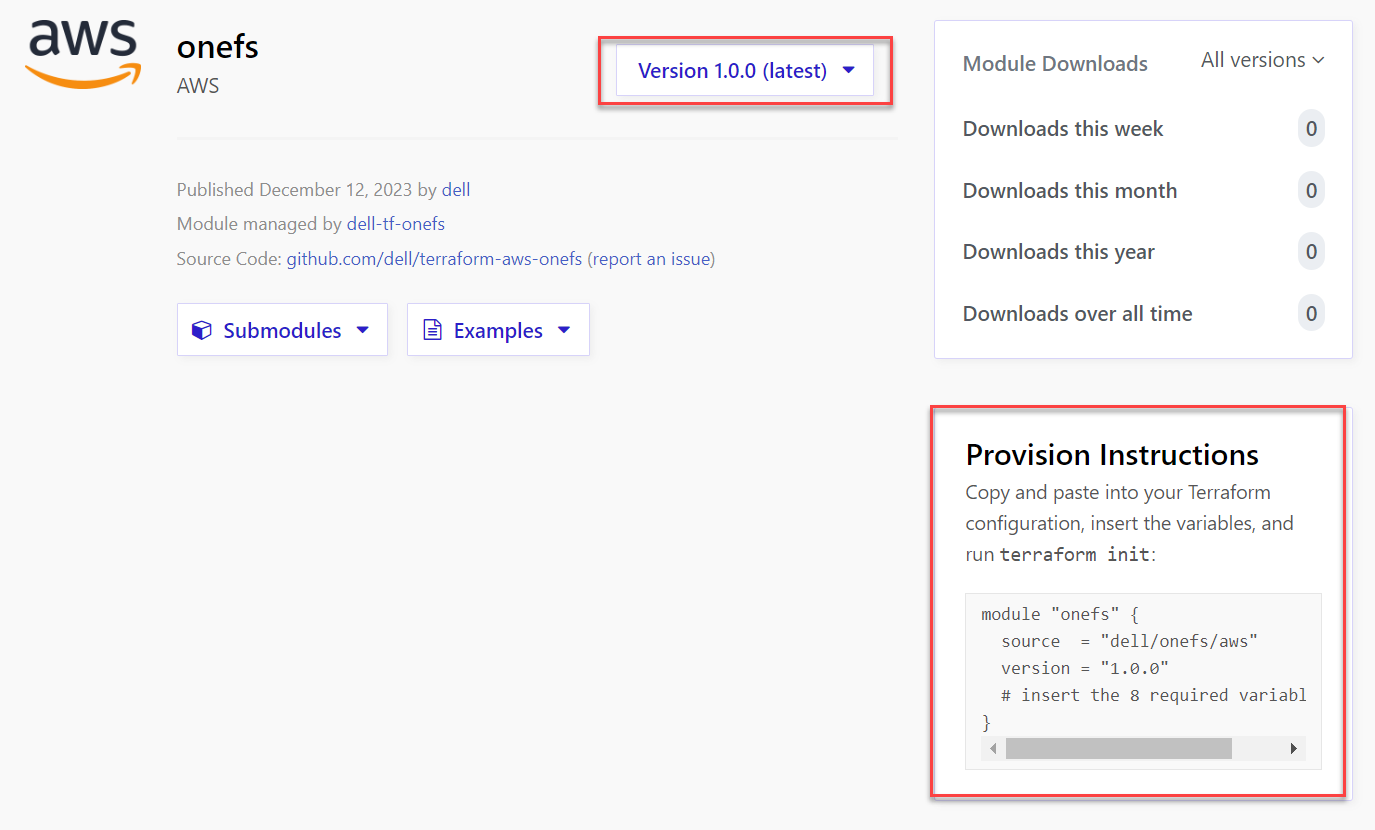

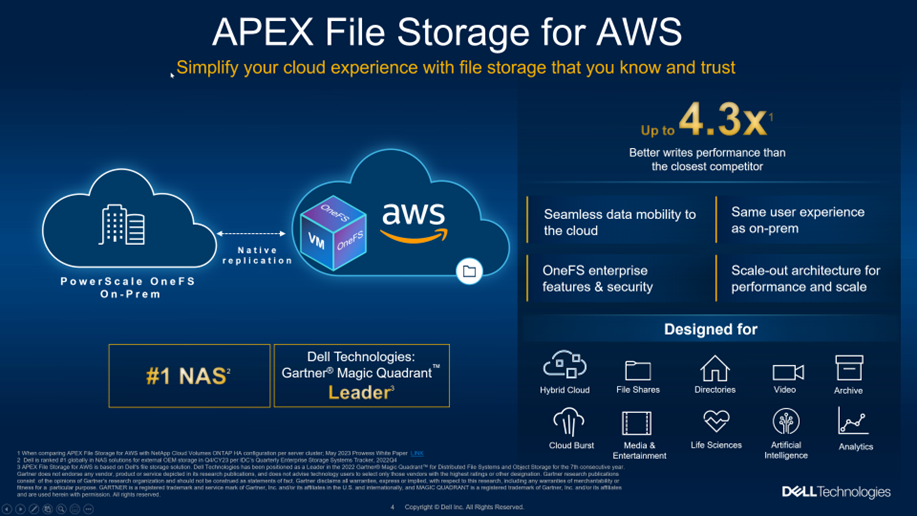

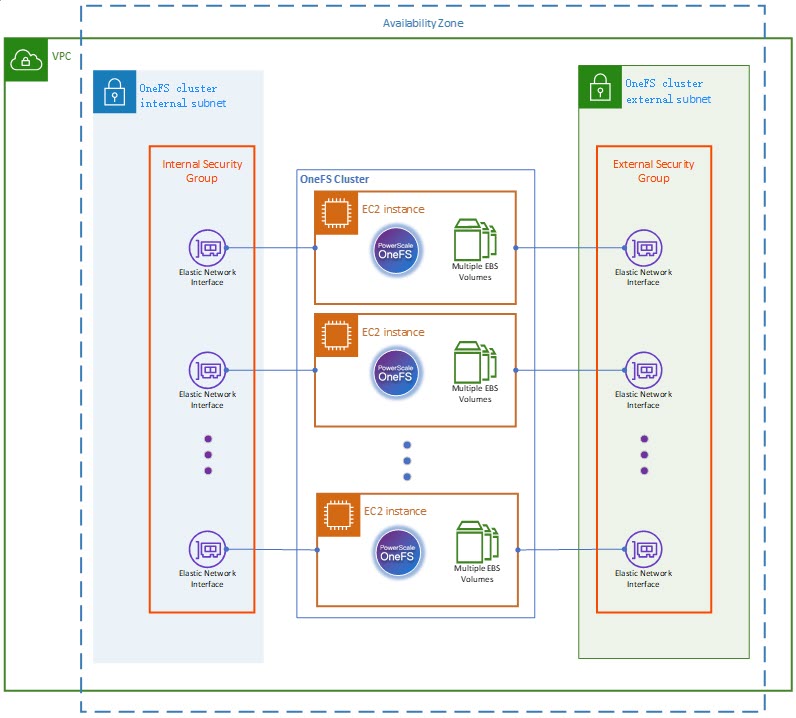

PowerScale OneFS 9.8 brings a new offering to Azure: APEX File Storage for Microsoft Azure. It is a software-defined cloud file storage service that provides high-performance, flexible, secure, and scalable file storage for Microsoft Azure environments. It is also a fully customer-managed service that is designed to meet the needs of enterprise-scale file workloads running on Azure. This offering joins another native cloud solution released last year, APEX File Storage for AWS. For more information, see https://www.dell.com/en-us/dt/apex/storage/public-cloud/file.htm?hve=explore+file

Benefits of running OneFS in Cloud

APEX File Storage for Microsoft Azure brings the OneFS distributed file system software into the public cloud, allowing users to have the same management experience in the cloud as with their on-premises PowerScale appliance.