
Converting Hugging Face Large Language Models to TensorRT-LLM

Tue, 23 Apr 2024 21:27:56 -0000


Introduction

Before getting into this blog proper, I want to take a minute to thank Fabricio Bronzati for his technical help on this topic.

Over the last couple of years, Hugging Face has become the de facto standard platform for storing anything to do with generative AI. From models to datasets to agents, it can all be found on Hugging Face.

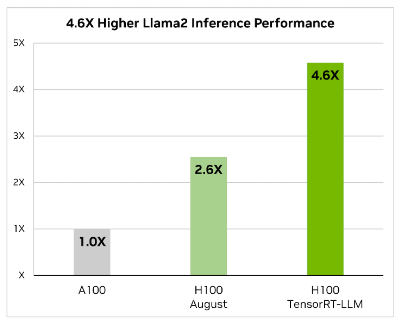

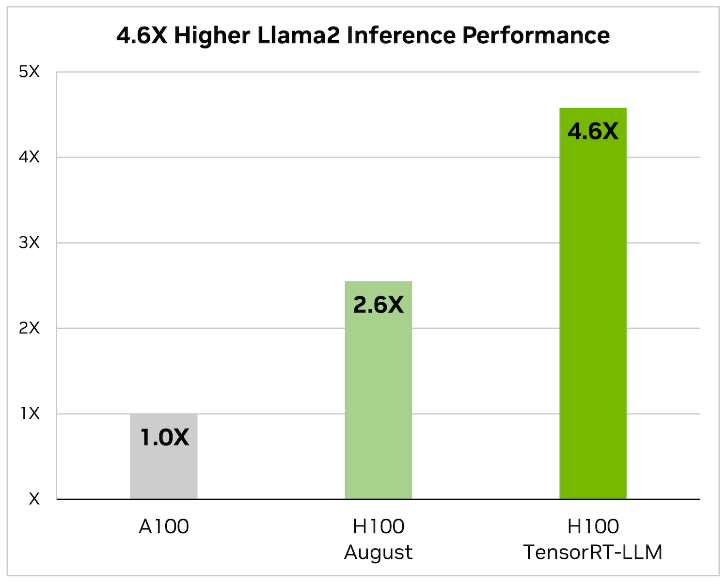

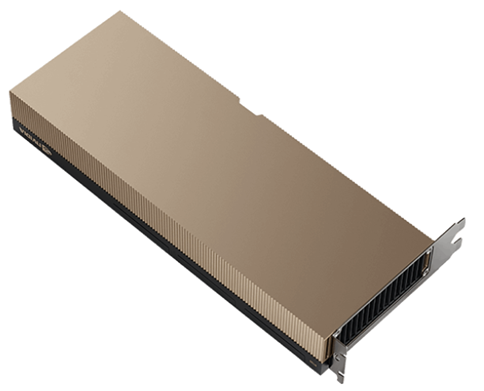

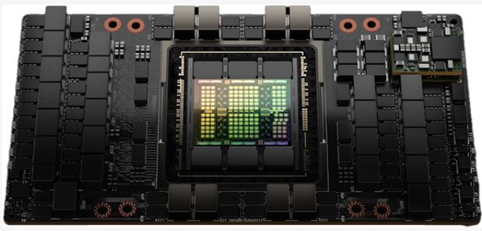

NVIDIA graphics cards have been a popular choice for powering AI workloads, and NVIDIA has also invested significantly in its software stack to help customers shorten the time to market for their generative AI-backed applications. This is where the NVIDIA AI Enterprise software stack comes into play. Two big components of the NVIDIA AI Enterprise stack are the NeMo framework and the Triton Inference Server.

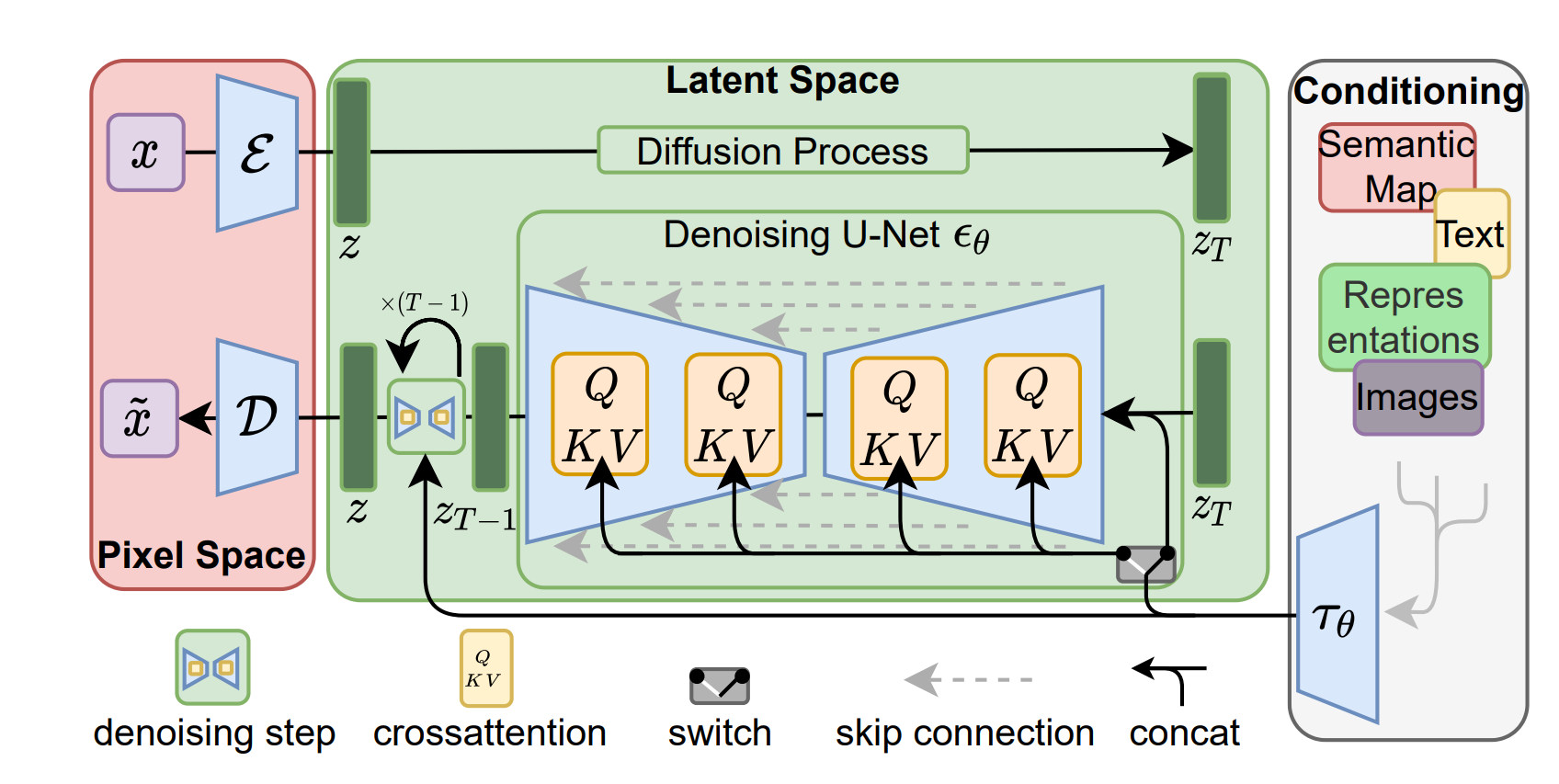

NeMo makes it really easy to spin up an LLM and start interacting with it. The perceived downside of NeMo is that it only supports a small number of LLMs, because it requires the LLM to be in a specific format. For folks looking to run LLMs that NeMo does not support, NVIDIA provides a set of scripts and containers to convert LLMs from the Hugging Face format to TensorRT-LLM, which is the underlying framework for NeMo and the Triton Inference Server. According to NVIDIA's website, TensorRT-LLM is an open-source library that accelerates and optimizes inference performance of the latest large language models (LLMs) on the NVIDIA AI platform.

The challenge with TensorRT-LLM is that you can't take a model from Hugging Face and run it directly on TensorRT-LLM. Such a model first needs to go through a conversion stage; only then can it leverage all the goodness of TensorRT-LLM.

When it comes to optimizing large language models for inference, TensorRT-LLM is the key piece. It is designed to let models deliver high performance while using GPU resources efficiently across a range of applications.

The library includes optimized kernels, pre- and post-processing steps, and multi-GPU/multi-node communication primitives. These features are specifically designed to enhance performance on NVIDIA GPUs.

The purpose of this blog is to show the steps needed to take a model from Hugging Face and convert it to TensorRT-LLM. Once a model has been converted, it can be served by the Triton Inference Server. TensorRT-LLM doesn't support every model on Hugging Face, so before attempting a conversion, I recommend checking the ever-growing list of supported models on the TensorRT-LLM GitHub page.
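Support is largely a question of model architecture. A quick way to see what a downloaded model declares is to read the `architectures` field of its Hugging Face `config.json`. Below is a minimal Python sketch of that check. `ASSUMED_SUPPORTED_ARCHITECTURES` is a hypothetical placeholder, not the real list. Take the actual list from the TensorRT-LLM GitHub page for the release you use.

```python
import json
from pathlib import Path

# Hypothetical allow-list for illustration only; the authoritative list of
# supported models is on the TensorRT-LLM GitHub page and changes per release.
ASSUMED_SUPPORTED_ARCHITECTURES = {"LlamaForCausalLM"}


def model_architectures(model_dir):
    """Return the 'architectures' entries declared in a Hugging Face config.json."""
    config = json.loads((Path(model_dir) / "config.json").read_text())
    return config.get("architectures", [])


def looks_supported(model_dir, supported=ASSUMED_SUPPORTED_ARCHITECTURES):
    """True if the model declares at least one architecture and all are in `supported`."""
    archs = model_architectures(model_dir)
    return bool(archs) and all(arch in supported for arch in archs)
```

The check only reads local files, so run it after the weights have been downloaded (covered later in this blog).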

Prerequisites

Before diving into the conversion, let's briefly talk about prerequisites. Many of the conversion steps leverage Docker, so you need docker-compose and docker-buildx. You will also be cloning repositories, so you need git. One git component that is required but not always installed by default is support for Large File Storage (LFS). Make sure git-lfs is installed, because we will be cloning fairly large files (multiple GB each), and git-lfs is the most efficient way to do that.
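You can confirm the command-line prerequisites before starting by looking them up on PATH. Below is a minimal Python sketch that uses only the standard library. It checks docker, git and git-lfs. It deliberately skips docker-compose and docker-buildx, because those are often installed as Docker CLI plugins (`docker compose`, `docker buildx`) rather than as standalone binaries.

```python
import shutil

# Executables this walkthrough calls directly. docker-compose and docker-buildx
# are commonly Docker CLI plugins, so they are not looked up as binaries here.
REQUIRED_TOOLS = ("docker", "git", "git-lfs")


def check_prerequisites(tools=REQUIRED_TOOLS):
    """Map each tool name to True if an executable with that name is on PATH."""
    return {tool: shutil.which(tool) is not None for tool in tools}


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the names of required tools that could not be found on PATH."""
    return [tool for tool, found in check_prerequisites(tools).items() if not found]
```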

Building the TensorRT-LLM library

At the time of writing, NVIDIA hasn't yet released a pre-built container with the TensorRT-LLM library, so it is unfortunately up to whoever wants to use it to build one. Let me show you how.

The first thing I need to do is clone the TensorRT-LLM library repository:

fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0$ git clone https://github.com/NVIDIA/TensorRT-LLM.git
Cloning into 'TensorRT-LLM'...
remote: Enumerating objects: 7888, done.
remote: Counting objects: 100% (1696/1696), done.
remote: Compressing objects: 100% (626/626), done.
remote: Total 7888 (delta 1145), reused 1413 (delta 1061), pack-reused 6192
Receiving objects: 100% (7888/7888), 81.67 MiB | 19.02 MiB/s, done.
Resolving deltas: 100% (5368/5368), done.
Updating files: 100% (1661/1661), done.

Then I need to initialize all the submodules contained in the repository:

fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0/TensorRT-LLM$ git submodule update --init --recursive
Submodule '3rdparty/NVTX' (https://github.com/NVIDIA/NVTX.git) registered for path '3rdparty/NVTX'
Submodule '3rdparty/cutlass' (https://github.com/NVIDIA/cutlass.git) registered for path '3rdparty/cutlass'
Submodule '3rdparty/cxxopts' (https://github.com/jarro2783/cxxopts) registered for path '3rdparty/cxxopts'
Submodule '3rdparty/json' (https://github.com/nlohmann/json.git) registered for path '3rdparty/json'
Cloning into '/aipsf600/project-helix/TensonRT-LLM/v0.8.0/TensorRT-LLM/3rdparty/NVTX'...
Cloning into '/aipsf600/project-helix/TensonRT-LLM/v0.8.0/TensorRT-LLM/3rdparty/cutlass'...
Cloning into '/aipsf600/project-helix/TensonRT-LLM/v0.8.0/TensorRT-LLM/3rdparty/cxxopts'...
Cloning into '/aipsf600/project-helix/TensonRT-LLM/v0.8.0/TensorRT-LLM/3rdparty/json'...
Submodule path '3rdparty/NVTX': checked out 'a1ceb0677f67371ed29a2b1c022794f077db5fe7'
Submodule path '3rdparty/cutlass': checked out '39c6a83f231d6db2bc6b9c251e7add77d68cbfb4'
Submodule path '3rdparty/cxxopts': checked out 'eb787304d67ec22f7c3a184ee8b4c481d04357fd'
Submodule path '3rdparty/json': checked out 'bc889afb4c5bf1c0d8ee29ef35eaaf4c8bef8a5d'

Then I need to initialize Git LFS and pull the objects stored in it:

fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0/TensorRT-LLM$ git lfs install
Updated git hooks.
Git LFS initialized.
fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0/TensorRT-LLM$ git lfs pull

At this point, I am ready to build the Docker container that will hold the TensorRT-LLM library:

fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0/TensorRT-LLM$ make -C docker release_build
make: Entering directory '/aipsf600/project-helix/TensonRT-LLM/v0.8.0/TensorRT-LLM/docker'
Building docker image: tensorrt_llm/release:latest
DOCKER_BUILDKIT=1 docker build --pull \
 --progress auto \
 --build-arg BASE_IMAGE=nvcr.io/nvidia/pytorch \
 --build-arg BASE_TAG=23.12-py3 \
 --build-arg BUILD_WHEEL_ARGS="--clean --trt_root /usr/local/tensorrt --python_bindings --benchmarks" \
 --build-arg TORCH_INSTALL_TYPE="skip" \
 --build-arg TRT_LLM_VER="0.8.0.dev20240123" \
 --build-arg GIT_COMMIT="b57221b764bc579cbb2490154916a871f620e2c4" \
 --target release \
 --file Dockerfile.multi \
 --tag tensorrt_llm/release:latest \

[+] Building 2533.0s (41/41) FINISHED docker:default
=> [internal] load build definition from Dockerfile.multi 0.0s
=> => transferring dockerfile: 3.24kB 0.0s
=> [internal] load .dockerignore 0.0s
=> => transferring context: 359B 0.0s
=> [internal] load metadata for nvcr.io/nvidia/pytorch:23.12-py3 1.0s
=> [auth] nvidia/pytorch:pull,push token for nvcr.io 0.0s
=> [internal] load build context 44.1s
=> => transferring context: 579.18MB 44.1s
=> CACHED [base 1/1] FROM nvcr.io/nvidia/pytorch:23.12-py3@sha256:da3d1b690b9dca1fbf9beb3506120a63479e0cf1dc69c9256055125460eb44f7 0.0s
=> [devel 1/14] COPY docker/common/install_base.sh install_base.sh 1.1s
=> [devel 2/14] RUN bash ./install_base.sh && rm install_base.sh 13.7s
=> [devel 3/14] COPY docker/common/install_cmake.sh install_cmake.sh 0.0s
=> [devel 4/14] RUN bash ./install_cmake.sh && rm install_cmake.sh 23.0s
=> [devel 5/14] COPY docker/common/install_ccache.sh install_ccache.sh 0.0s
=> [devel 6/14] RUN bash ./install_ccache.sh && rm install_ccache.sh 0.5s
=> [devel 7/14] COPY docker/common/install_tensorrt.sh install_tensorrt.sh 0.0s
=> [devel 8/14] RUN bash ./install_tensorrt.sh --TRT_VER=${TRT_VER} --CUDA_VER=${CUDA_VER} --CUDNN_VER=${CUDNN_VER} --NCCL_VER=${NCCL_VER} --CUBLAS_VER=${CUBLAS_VER} && 448.3s
=> [devel 9/14] COPY docker/common/install_polygraphy.sh install_polygraphy.sh 0.0s
=> [devel 10/14] RUN bash ./install_polygraphy.sh && rm install_polygraphy.sh 3.3s
=> [devel 11/14] COPY docker/common/install_mpi4py.sh install_mpi4py.sh 0.0s
=> [devel 12/14] RUN bash ./install_mpi4py.sh && rm install_mpi4py.sh 42.2s
=> [devel 13/14] COPY docker/common/install_pytorch.sh install_pytorch.sh 0.0s
=> [devel 14/14] RUN bash ./install_pytorch.sh skip && rm install_pytorch.sh 0.4s
=> [wheel 1/9] WORKDIR /src/tensorrt_llm 0.0s
=> [release 1/11] WORKDIR /app/tensorrt_llm 0.0s
=> [wheel 2/9] COPY benchmarks benchmarks 0.0s
=> [wheel 3/9] COPY cpp cpp 1.2s
=> [wheel 4/9] COPY benchmarks benchmarks 0.0s
=> [wheel 5/9] COPY scripts scripts 0.0s
=> [wheel 6/9] COPY tensorrt_llm tensorrt_llm 0.0s
=> [wheel 7/9] COPY 3rdparty 3rdparty 0.8s
=> [wheel 8/9] COPY setup.py requirements.txt requirements-dev.txt ./ 0.1s
=> [wheel 9/9] RUN python3 scripts/build_wheel.py --clean --trt_root /usr/local/tensorrt --python_bindings --benchmarks 1858.0s
=> [release 2/11] COPY --from=wheel /src/tensorrt_llm/build/tensorrt_llm*.whl . 0.2s
=> [release 3/11] RUN pip install tensorrt_llm*.whl --extra-index-url https://pypi.nvidia.com && rm tensorrt_llm*.whl 43.7s
=> [release 4/11] COPY README.md ./ 0.0s
=> [release 5/11] COPY docs docs 0.0s
=> [release 6/11] COPY cpp/include include 0.0s
=> [release 7/11] COPY --from=wheel /src/tensorrt_llm/cpp/build/tensorrt_llm/libtensorrt_llm.so /src/tensorrt_llm/cpp/build/tensorrt_llm/libtensorrt_llm_static.a lib/ 0.1s
=> [release 8/11] RUN ln -sv $(TRT_LLM_NO_LIB_INIT=1 python3 -c "import tensorrt_llm.plugin as tlp; print(tlp.plugin_lib_path())") lib/ && cp -Pv lib/libnvinfer_plugin_tensorrt_llm.so li 1.8s
=> [release 9/11] COPY --from=wheel /src/tensorrt_llm/cpp/build/benchmarks/bertBenchmark /src/tensorrt_llm/cpp/build/benchmarks/gptManagerBenchmark /src/tensorrt_llm/cpp/build 0.1s
=> [release 10/11] COPY examples examples 0.1s
=> [release 11/11] RUN chmod -R a+w examples 0.5s
=> exporting to image 40.1s
=> => exporting layers 40.1s
=> => writing image sha256:a6a65ab955b6fcf240ee19e6601244d9b1b88fd594002586933b9fd9d598c025 0.0s
=> => naming to docker.io/tensorrt_llm/release:latest 0.0s
make: Leaving directory '/aipsf600/project-helix/TensonRT-LLM/v0.8.0/TensorRT-LLM/docker'

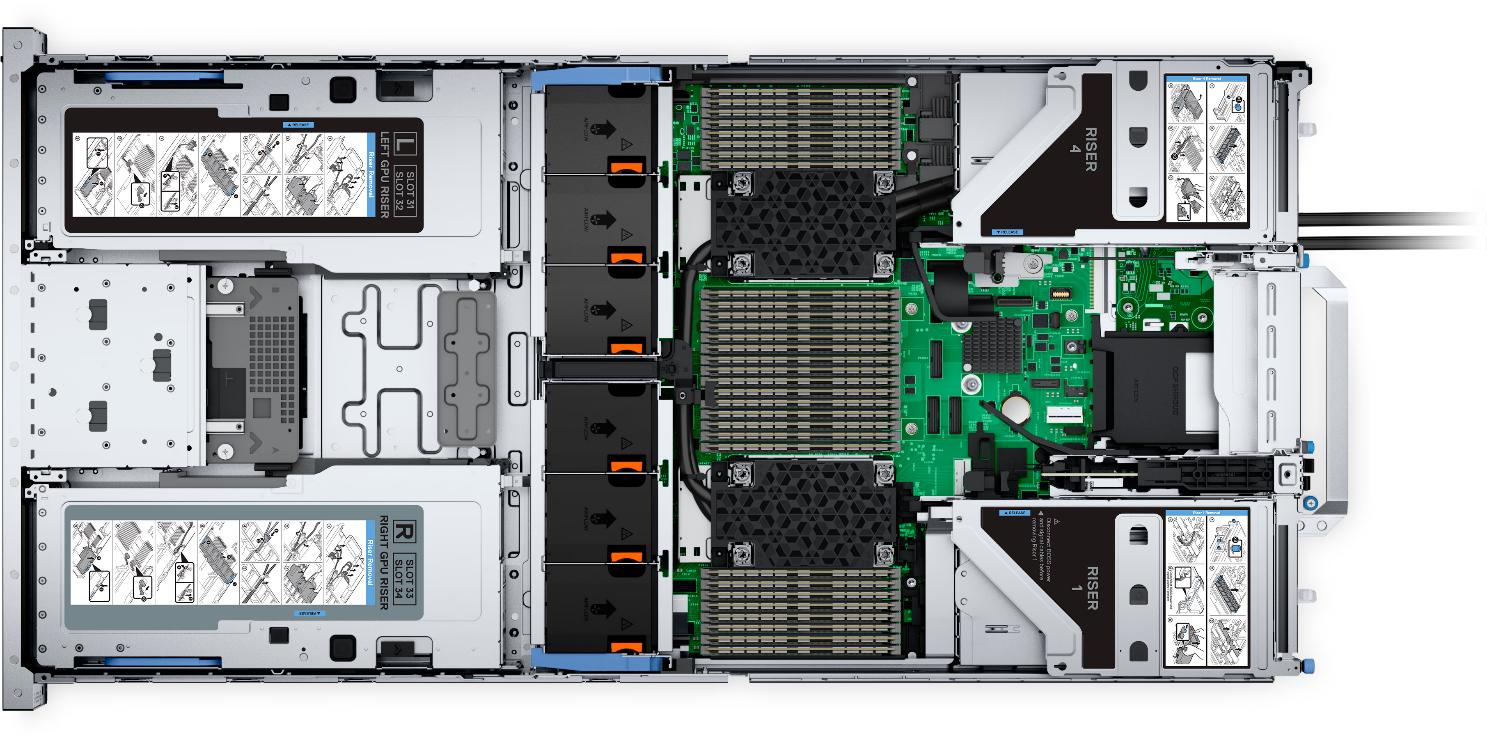

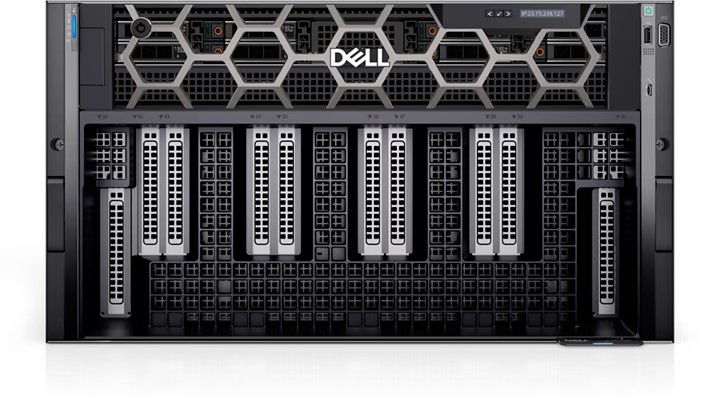

How long the container takes to build depends heavily on the resources available on the server where you run the command. In my case, the build took about 42 minutes (2533 seconds, according to the build log) on a PowerEdge XE9680, which is the fastest server in the Dell PowerEdge portfolio.
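If you need to repeat this build, for example on another server, you can wrap the clone-and-build commands from this section in a small script. Below is a minimal Python sketch. It assumes git, git-lfs and make are on PATH, and it defaults to a dry run that only prints each command with its working directory.

```python
import os
import subprocess

REPO_URL = "https://github.com/NVIDIA/TensorRT-LLM.git"


def build_steps(workdir="."):
    """Return the commands from this section as (cwd, argv) pairs, in order."""
    repo = os.path.join(workdir, "TensorRT-LLM")  # directory created by the clone
    return [
        (workdir, ["git", "clone", REPO_URL]),
        (repo, ["git", "submodule", "update", "--init", "--recursive"]),
        (repo, ["git", "lfs", "install"]),
        (repo, ["git", "lfs", "pull"]),
        (repo, ["make", "-C", "docker", "release_build"]),
    ]


def run_steps(steps, dry_run=True):
    """Print every step; only execute the commands when dry_run is False."""
    for cwd, argv in steps:
        print(f"[{cwd}] {' '.join(argv)}")
        if not dry_run:
            # check=True raises CalledProcessError and stops at the first failure
            subprocess.run(argv, cwd=cwd, check=True)
```

Calling `run_steps(build_steps("/aipsf600/project-helix/TensonRT-LLM/v0.8.0"), dry_run=False)` would run the same sequence shown above in that directory. Stopping at the first failure matters here, because a failed `git lfs pull` would otherwise surface much later as a confusing build error.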

Downloading model weights

Next, I need to download the weights for the model I am going to convert to TensorRT-LLM. Although I am doing it in this order, this step could just as well have been done before cloning the TensorRT-LLM repository.

Model weights can be downloaded in two different ways:

- Outside of the TensorRT container

- Inside the TensorRT container

The benefit of downloading them outside of the TensorRT container is that they can be reused for multiple conversions, whereas, if they are downloaded inside the container, they can only be used for that single conversion. In my case, I will download them outside of the container as I feel it will be the approach used by most people. This is how to do it:

fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0/TensorRT-LLM$ cd ..
fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0$ git lfs install
Git LFS initialized.
fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0$ git clone https://huggingface.co/meta-llama/Llama-2-70b-chat-hf
Cloning into 'Llama-2-70b-chat-hf'...
Username for 'https://huggingface.co': ******
Password for 'https://bronzafa@huggingface.co':
remote: Enumerating objects: 93, done.
remote: Counting objects: 100% (6/6), done.
remote: Compressing objects: 100% (6/6), done.
remote: Total 93 (delta 1), reused 0 (delta 0), pack-reused 87
Unpacking objects: 100% (93/93), 509.43 KiB | 260.00 KiB/s, done.
Updating files: 100% (44/44), done.
Username for 'https://huggingface.co': ******
Password for 'https://bronzafa@huggingface.co':

Filtering content: 18% (6/32), 6.30 GiB | 2.38 MiB/s

Filtering content: 100% (32/32), 32.96 GiB | 9.20 MiB/s, done.

Depending on your setup, you might see some error messages about files not being copied properly; those can be safely ignored. One thing worth noting about downloading the weights is that you need plenty of local storage: cloning this particular model needs over 500GB. The amount of storage obviously depends on the model chosen and its size, but it is definitely something to keep in mind.
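To put the 500GB figure in context, here is a rough Python sketch of the arithmetic. Raw fp16 weights take 2 bytes per parameter, so about 70 billion parameters come to about 140 GB. The two multipliers are my assumptions about why the clone is much larger than that:

- this repository ships the weights in two formats (safetensors and PyTorch .bin);
- Git LFS keeps a second copy of every large file under .git/lfs.

With both assumptions the estimate lands around 560 GB. The sketch also checks free disk space with the standard library before you start.

```python
import shutil


def weight_bytes(num_params, bytes_per_param=2):
    """Raw weight size: parameter count x bytes per parameter (2 for fp16)."""
    return int(num_params * bytes_per_param)


def clone_footprint_bytes(num_params, weight_formats=2, lfs_copies=2, bytes_per_param=2):
    """Rough on-disk size of a git-lfs clone, assuming the repository ships the
    weights in `weight_formats` formats and each LFS file exists `lfs_copies`
    times (the working-tree file plus the object kept under .git/lfs)."""
    return weight_bytes(num_params, bytes_per_param) * weight_formats * lfs_copies


def has_free_space(path, required_bytes):
    """True if the filesystem holding `path` has at least `required_bytes` free."""
    return shutil.disk_usage(path).free >= required_bytes
```

For example, `has_free_space(".", clone_footprint_bytes(70e9))` run in the directory you are about to clone into gives a quick go/no-go before a multi-hour download.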

Starting the TensorRT container

Now, I am ready to start the TensorRT container. This can be done with the following command:

fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0/TensorRT-LLM$ make -C docker release_run LOCAL_USER=1
make: Entering directory '/aipsf600/project-helix/TensonRT-LLM/v0.8.0/TensorRT-LLM/docker'
docker build --progress --pull --progress auto --build-arg BASE_IMAGE_WITH_TAG=tensorrt_llm/release:latest --build-arg USER_ID=1003 --build-arg USER_NAME=fbronzati --build-arg GROUP_ID=1001 --build-arg GROUP_NAME=ais --file Dockerfile.user --tag tensorrt_llm/release:latest-fbronzati ..
[+] Building 0.5s (6/6) FINISHED docker:default
=> [internal] load build definition from Dockerfile.user 0.0s
=> => transferring dockerfile: 531B 0.0s
=> [internal] load .dockerignore 0.0s
=> => transferring context: 359B 0.0s
=> [internal] load metadata for docker.io/tensorrt_llm/release:latest 0.0s
=> [1/2] FROM docker.io/tensorrt_llm/release:latest 0.1s
=> [2/2] RUN (getent group 1001 || groupadd --gid 1001 ais) && (getent passwd 1003 || useradd --gid 1001 --uid 1003 --create-home --no-log-init --shell /bin/bash fbronzati) 0.3s
=> exporting to image 0.0s
=> => exporting layers 0.0s
=> => writing image sha256:1149632051753e37204a6342c1859a8a8d9068a163074ca361e55bc52f563cac 0.0s
=> => naming to docker.io/tensorrt_llm/release:latest-fbronzati 0.0s
docker run --rm -it --ipc=host --ulimit memlock=-1 --ulimit stack=67108864 \
 --gpus=all \
 --volume /aipsf600/project-helix/TensonRT-LLM/v0.8.0/TensorRT-LLM:/code/tensorrt_llm \
 --env "CCACHE_DIR=/code/tensorrt_llm/cpp/.ccache" \
 --env "CCACHE_BASEDIR=/code/tensorrt_llm" \
 --workdir /app/tensorrt_llm \
 --hostname node002-release \
 --name tensorrt_llm-release-fbronzati \
 --tmpfs /tmp:exec \
 tensorrt_llm/release:latest-fbronzati

=============== PyTorch ===============

NVIDIA Release 23.12 (build 76438008)
PyTorch Version 2.2.0a0+81ea7a4

Container image Copyright © 2023, NVIDIA CORPORATION & AFFILIATES. All rights reserved.

Copyright (c) 2014-2023 Facebook Inc.
Copyright (c) 2011-2014 Idiap Research Institute (Ronan Collobert)
Copyright (c) 2012-2014 Deepmind Technologies (Koray Kavukcuoglu)
Copyright (c) 2011-2012 NEC Laboratories America (Koray Kavukcuoglu)
Copyright (c) 2011-2013 NYU (Clement Farabet)
Copyright (c) 2006-2010 NEC Laboratories America (Ronan Collobert, Leon Bottou, Iain Melvin, Jason Weston)
Copyright (c) 2006 Idiap Research Institute (Samy Bengio)
Copyright (c) 2001-2004 Idiap Research Institute (Ronan Collobert, Samy Bengio, Johnny Mariethoz)
Copyright (c) 2015 Google Inc.
Copyright (c) 2015 Yangqing Jia
Copyright (c) 2013-2016 The Caffe contributors
All rights reserved.

Various files include modifications (c) NVIDIA CORPORATION & AFFILIATES. All rights reserved.

This container image and its contents are governed by the NVIDIA Deep Learning Container License.
By pulling and using the container, you accept the terms and conditions of this license:
https://developer.nvidia.com/ngc/nvidia-deep-learning-container-license

fbronzati@node002-release:/app/tensorrt_llm$

One of the command's arguments, LOCAL_USER=1, is required to ensure proper ownership of the files that will be created later. Without it, all newly created files will belong to root, which can cause problems later on.

As you can see in the last line of the previous code block, the shell prompt has changed. Before running the command, it was fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0/TensorRT-LLM$, and after running it, it is fbronzati@node002-release:/app/tensorrt_llm$. That is because, once the command completes, you are inside the TensorRT-LLM container, and everything I need to do for the conversion from here on happens inside that container. This is why we built the container in the first place: it allows us to customize it based on the LLM being converted.
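The release_run target mounts only the host's TensorRT-LLM checkout into the container, at /code/tensorrt_llm (see the --volume flag in the docker run line above). Anything outside that checkout is invisible inside the container. This matters for the --model_dir path in the build command below. The transcript cloned the weights one level up, in v0.8.0/, so I am assuming they were moved into the checkout before the build. Below is a minimal Python sketch that makes the mapping explicit. The mount table is copied from the log; the helper itself is hypothetical.

```python
from pathlib import PurePosixPath

# Host directory -> container directory, taken from the `docker run --volume`
# line printed by `make -C docker release_run` above.
MOUNTS = {
    "/aipsf600/project-helix/TensonRT-LLM/v0.8.0/TensorRT-LLM": "/code/tensorrt_llm",
}


def to_container_path(host_path, mounts=MOUNTS):
    """Translate a host path to its in-container path, or None if no volume covers it."""
    host = PurePosixPath(host_path)
    for host_root, container_root in mounts.items():
        try:
            rel = host.relative_to(host_root)  # raises ValueError if not under host_root
        except ValueError:
            continue
        return str(PurePosixPath(container_root) / rel)
    return None
```

A `None` result is the signal that a path such as the weights directory will not be visible to build.py. Either move the files under the mounted checkout or add another volume.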

Converting the LLM

Now that I have started the TensorRT-LLM container and am inside it, I am ready to convert the LLM from the Hugging Face format to TensorRT-LLM engines, the format the Triton Inference Server consumes.

The conversion process needs to download tokens from Hugging Face, so I need to make sure that I am logged in to Hugging Face. I can do that by running this:

fbronzati@node002-release:/app/tensorrt_llm$ huggingface-cli login --token ******
Token will not been saved to git credential helper. Pass `add_to_git_credential=True` if you want to set the git credential as well.
Token is valid (permission: read).
Your token has been saved to /home/fbronzati/.cache/huggingface/token
Login successful

In place of ******, enter your Hugging Face API token. You can find it by logging in to Hugging Face and going to Settings, then Access Tokens. If the login succeeds, the last line of the output reads Login successful.

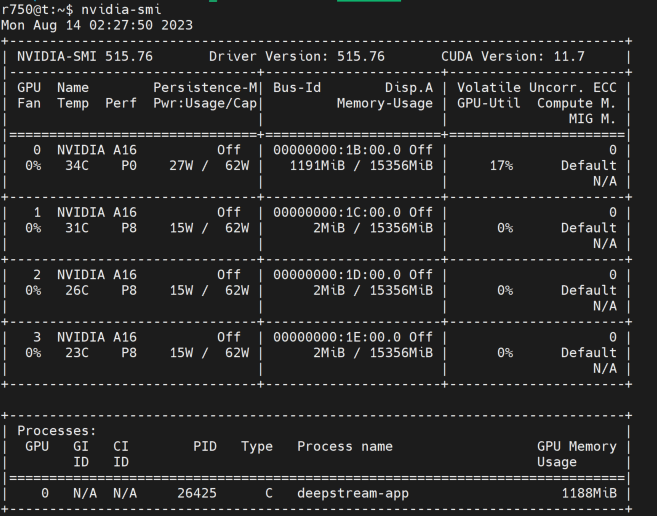

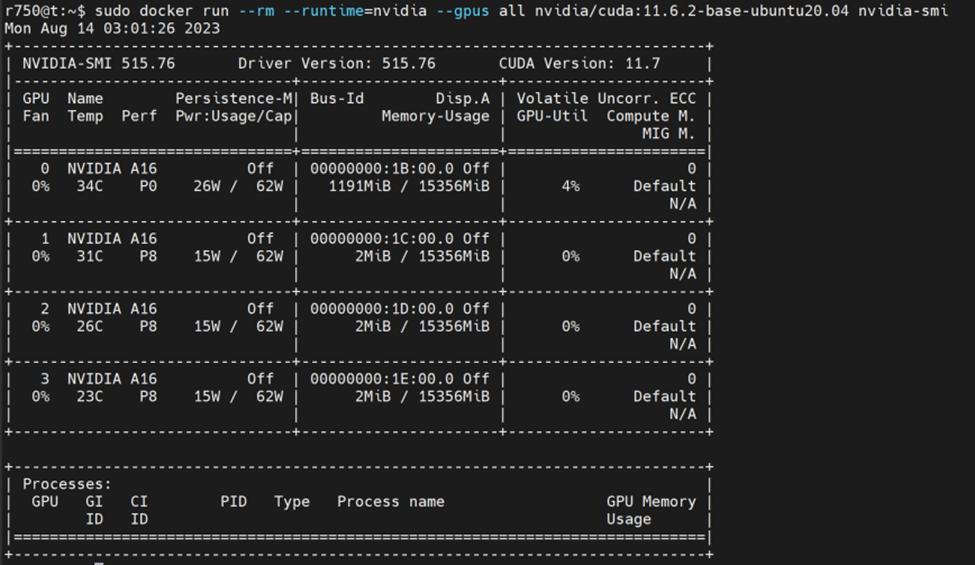
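A conversion run takes a while, so it can help to confirm the login persisted before starting it. Below is a minimal Python sketch that only checks for the token file at the path reported by the login output above. Other huggingface_hub versions or settings (for example the HF_HOME or HF_TOKEN environment variables) may store the token elsewhere, so treat the path as an assumption.

```python
from pathlib import Path


def hf_token_path(home=None):
    """Token location reported by the login output above: ~/.cache/huggingface/token."""
    base = Path(home) if home is not None else Path.home()
    return base / ".cache" / "huggingface" / "token"


def is_logged_in(home=None):
    """True if a non-empty token file exists; does not check the token is still valid."""
    path = hf_token_path(home)
    return path.is_file() and path.read_text().strip() != ""
```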

I am now ready to start generating the new TensorRT engines. This process takes the weights downloaded earlier and generates the corresponding TensorRT engines, one for each GPU the model will run on. In my case, I will create 4 TensorRT engines because I have 4 GPUs. One non-obvious advantage of the conversion process is that you can change the number of engines, and therefore GPUs, your model uses. For instance, the initial version of the Llama-2-70b-chat-hf model required 8 GPUs, but through the conversion process, I changed that from 8 to 4.

How long the conversion takes depends entirely on your hardware, but generally speaking it will take a while; in my case, the log reports 8 minutes and 36 seconds to build all 4 engines. Here is the command:
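Going from 8 GPUs to 4 is possible because tensor parallelism (--tp_size) splits the model's weights across the GPUs. Each GPU then holds roughly 1/tp_size of the weights and only needs room for its share. Below is a back-of-the-envelope Python sketch. It assumes fp16 weights, about 70 billion parameters, and pure tensor parallelism with --world_size equal to --tp_size, as in the command below. It ignores activations and the KV cache, which also need GPU memory.

```python
def per_rank_weight_bytes(num_params, tp_size, bytes_per_param=2):
    """Approximate weight bytes held by each GPU when tensor parallelism splits
    the weights evenly across tp_size GPUs (fp16 = 2 bytes per parameter)."""
    return num_params * bytes_per_param / tp_size


def check_world_size(world_size, tp_size):
    """Assumes pure tensor parallelism: one rank (and one engine file) per GPU,
    so world_size must equal tp_size."""
    if world_size != tp_size:
        raise ValueError(f"world_size={world_size} does not match tp_size={tp_size}")
    return world_size
```

With tp_size=4 that comes to roughly 35 GB per GPU, in line with the size of the engine files listed after the conversion.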

fbronzati@node002-release:/app/tensorrt_llm$ python3 examples/llama/build.py \
--model_dir /code/tensorrt_llm/Llama-2-70b-chat-hf/ \
--dtype float16 \
--use_gpt_attention_plugin float16 \
--use_gemm_plugin float16 \
--remove_input_padding \
--use_inflight_batching \
--paged_kv_cache \
--output_dir /code/tensorrt_llm/examples/llama/out \
--world_size 4 \
--tp_size 4 \
--max_batch_size 64
fatal: not a git repository (or any of the parent directories): .git
[TensorRT-LLM] TensorRT-LLM version: 0.8.0.dev20240123
[01/31/2024-13:45:14] [TRT-LLM] [W] remove_input_padding is enabled, while max_num_tokens is not set, setting to max_batch_size*max_input_len.
It may not be optimal to set max_num_tokens=max_batch_size*max_input_len when remove_input_padding is enabled, because the number of packed input tokens are very likely to be smaller, we strongly recommend to set max_num_tokens according to your workloads.
[01/31/2024-13:45:14] [TRT-LLM] [I] Serially build TensorRT engines.
[01/31/2024-13:45:14] [TRT] [I] [MemUsageChange] Init CUDA: CPU +15, GPU +0, now: CPU 141, GPU 529 (MiB)
[01/31/2024-13:45:20] [TRT] [I] [MemUsageChange] Init builder kernel library: CPU +4395, GPU +1160, now: CPU 4672, GPU 1689 (MiB)
[01/31/2024-13:45:20] [TRT-LLM] [W] Invalid timing cache, using freshly created one
[01/31/2024-13:45:20] [TRT-LLM] [I] [MemUsage] Rank 0 Engine build starts - Allocated Memory: Host 4.8372 (GiB) Device 1.6502 (GiB)
[01/31/2024-13:45:21] [TRT-LLM] [I] Loading HF LLaMA ... from /code/tensorrt_llm/Llama-2-70b-chat-hf/
Loading checkpoint shards: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 15/15 [00:00<00:00, 16.67it/s]
[01/31/2024-13:45:22] [TRT-LLM] [I] Loading weights from HF LLaMA...
[01/31/2024-13:45:34] [TRT-LLM] [I] Weights loaded. Total time: 00:00:12
[01/31/2024-13:45:34] [TRT-LLM] [I] HF LLaMA loaded. Total time: 00:00:13
[01/31/2024-13:45:35] [TRT-LLM] [I] [MemUsage] Rank 0 model weight loaded. - Allocated Memory: Host 103.0895 (GiB) Device 1.6502 (GiB)
[01/31/2024-13:45:35] [TRT-LLM] [I] Optimized Generation MHA kernels (XQA) Enabled
[01/31/2024-13:45:35] [TRT-LLM] [I] Remove Padding Enabled
[01/31/2024-13:45:35] [TRT-LLM] [I] Paged KV Cache Enabled
[01/31/2024-13:45:35] [TRT] [W] IElementWiseLayer with inputs LLaMAForCausalLM/vocab_embedding/GATHER_0_output_0 and LLaMAForCausalLM/layers/0/input_layernorm/SHUFFLE_0_output_0: first input has type Half but second input has type Float.
[01/31/2024-13:45:35] [TRT] [W] IElementWiseLayer with inputs LLaMAForCausalLM/layers/0/input_layernorm/REDUCE_AVG_0_output_0 and LLaMAForCausalLM/layers/0/input_layernorm/SHUFFLE_1_output_0: first input has type Half but second input has type Float.
....
[01/31/2024-13:52:56] [TRT] [I] Engine generation completed in 57.4541 seconds.
[01/31/2024-13:52:56] [TRT] [I] [MemUsageStats] Peak memory usage of TRT CPU/GPU memory allocators: CPU 1000 MiB, GPU 33268 MiB
[01/31/2024-13:52:56] [TRT] [I] [MemUsageChange] TensorRT-managed allocation in building engine: CPU +0, GPU +33268, now: CPU 0, GPU 33268 (MiB)
[01/31/2024-13:53:12] [TRT] [I] [MemUsageStats] Peak memory usage during Engine building and serialization: CPU: 141685 MiB
[01/31/2024-13:53:12] [TRT-LLM] [I] Total time of building llama_float16_tp4_rank3.engine: 00:01:13
[01/31/2024-13:53:13] [TRT] [I] Loaded engine size: 33276 MiB
[01/31/2024-13:53:17] [TRT] [I] [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +0, GPU +64, now: CPU 38537, GPU 35111 (MiB)
[01/31/2024-13:53:17] [TRT] [I] [MemUsageChange] Init cuDNN: CPU +1, GPU +64, now: CPU 38538, GPU 35175 (MiB)
[01/31/2024-13:53:17] [TRT] [W] TensorRT was linked against cuDNN 8.9.6 but loaded cuDNN 8.9.4
[01/31/2024-13:53:17] [TRT] [I] [MemUsageChange] TensorRT-managed allocation in engine deserialization: CPU +0, GPU +33267, now: CPU 0, GPU 33267 (MiB)
[01/31/2024-13:53:17] [TRT-LLM] [I] Activation memory size: 34464.50 MiB
[01/31/2024-13:53:17] [TRT-LLM] [I] Weights memory size: 33276.37 MiB
[01/31/2024-13:53:17] [TRT-LLM] [I] Max KV Cache memory size: 12800.00 MiB
[01/31/2024-13:53:17] [TRT-LLM] [I] Estimated max memory usage on runtime: 80540.87 MiB
[01/31/2024-13:53:17] [TRT-LLM] [I] Serializing engine to /code/tensorrt_llm/examples/llama/out/llama_float16_tp4_rank3.engine...
[01/31/2024-13:53:48] [TRT-LLM] [I] Engine serialized. Total time: 00:00:31
[01/31/2024-13:53:49] [TRT-LLM] [I] [MemUsage] Rank 3 Engine serialized - Allocated Memory: Host 7.1568 (GiB) Device 1.6736 (GiB)
[01/31/2024-13:53:49] [TRT-LLM] [I] Rank 3 Engine build time: 00:02:05 - 125.77239561080933 (sec)
[01/31/2024-13:53:49] [TRT] [I] Serialized 59 bytes of code generator cache.
[01/31/2024-13:53:49] [TRT] [I] Serialized 242287 bytes of compilation cache.
[01/31/2024-13:53:49] [TRT] [I] Serialized 14 timing cache entries
[01/31/2024-13:53:49] [TRT-LLM] [I] Timing cache serialized to /code/tensorrt_llm/examples/llama/out/model.cache
[01/31/2024-13:53:51] [TRT-LLM] [I] Total time of building all 4 engines: 00:08:36

I have removed redundant lines from the build output, so expect yours to be much longer. In my command, I set the output directory to /code/tensorrt_llm/examples/llama/out, so let's check the contents of that directory:

fbronzati@node002-release:/app/tensorrt_llm$ ll /code/tensorrt_llm/examples/llama/out/
total 156185008
drwxr-xr-x 2 fbronzati ais 250 Jan 31 13:53 ./
drwxrwxrwx 3 fbronzati ais 268 Jan 31 13:45 ../
-rw-r--r-- 1 fbronzati ais 2188 Jan 31 13:46 config.json
-rw-r--r-- 1 fbronzati ais 34892798724 Jan 31 13:47 llama_float16_tp4_rank0.engine
-rw-r--r-- 1 fbronzati ais 34892792516 Jan 31 13:49 llama_float16_tp4_rank1.engine
-rw-r--r-- 1 fbronzati ais 34892788332 Jan 31 13:51 llama_float16_tp4_rank2.engine
-rw-r--r-- 1 fbronzati ais 34892800860 Jan 31 13:53 llama_float16_tp4_rank3.engine
-rw-r--r-- 1 fbronzati ais 243969 Jan 31 13:53 model.cache

Sure enough, here are my 4 engine files. What can I do with them, though? The NVIDIA Triton Inference Server can use them to run inference. Let's take a look at how I can do that.
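You can also check the output directory programmatically rather than by eye. Below is a minimal Python sketch. It assumes the file naming shown in the listing above (`<prefix>_rank<N>.engine` plus `config.json`). It also flags files not owned by the current user, which is the symptom the LOCAL_USER=1 argument is meant to prevent. The ownership check relies on `os.getuid`, so it is POSIX only.

```python
import os
from pathlib import Path


def check_engine_dir(out_dir, world_size):
    """Sanity-check a TensorRT-LLM output directory.

    Reports ranks with no '*_rank<N>.engine' file, whether config.json exists,
    and entries not owned by the current user (e.g. root-owned files).
    """
    out = Path(out_dir)
    missing = [r for r in range(world_size) if not any(out.glob(f"*_rank{r}.engine"))]
    uid = os.getuid()
    foreign = sorted(p.name for p in out.iterdir() if p.stat().st_uid != uid)
    return {
        "missing_ranks": missing,
        "has_config": (out / "config.json").is_file(),
        "foreign_files": foreign,
    }
```

For this run, `check_engine_dir("/code/tensorrt_llm/examples/llama/out", 4)` inside the container (or the matching TensorRT-LLM/examples/llama/out path on the host) should report no missing ranks and no foreign files.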

Now that I have finished the conversion, I can exit the TensorRT container:

fbronzati@node002-release:/app/tensorrt_llm$ exit
exit
make: Leaving directory '/aipsf600/project-helix/TensonRT-LLM/v0.8.0/TensorRT-LLM/docker'
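Before moving on, one detail in the build log above is worth unpacking: the per-GPU runtime memory estimate. For rank 3, the three components printed just before "Estimated max memory usage on runtime" add up exactly to that estimate, so you can see at a glance how much of each GPU a configuration will claim. I am inferring the relationship from the logged numbers, not from TensorRT-LLM's source. The small Python sketch below simply redoes the sum.

```python
# Per-rank figures (MiB) copied from the rank 3 lines of the build log above.
ACTIVATION_MIB = 34464.50
WEIGHTS_MIB = 33276.37
MAX_KV_CACHE_MIB = 12800.00


def estimated_runtime_mib(activation, weights, kv_cache):
    """Sum of activation, weight and max KV-cache memory, rounded like the log."""
    return round(activation + weights + kv_cache, 2)


total = estimated_runtime_mib(ACTIVATION_MIB, WEIGHTS_MIB, MAX_KV_CACHE_MIB)
print(f"{total} MiB = {total / 1024:.2f} GiB per GPU")
```

Because the KV-cache term is the one most directly tied to batch size and sequence lengths, it is the natural knob to revisit if the total does not fit your GPUs.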

Deploying engine files to Triton Inference Server

Because NVIDIA does not offer a version of the Triton Inference Server container that takes the LLM as a parameter, I need to build one myself so that it can leverage the engine files produced by the conversion. The process is pretty similar to what I did with the TensorRT-LLM container. At a high level, here is the process:

- Clone the Triton Inference Server backend repository

- Copy the engine files to the cloned repository

- Update some of the configuration parameters for the templates

- Build the Triton Inference Server container

Let's clone the Triton Inference Server backend repository:

fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0/TensorRT-LLM$ cd ..
fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0$ git clone https://github.com/triton-inference-server/tensorrtllm_backend.git
Cloning into 'tensorrtllm_backend'...
remote: Enumerating objects: 870, done.
remote: Counting objects: 100% (348/348), done.
remote: Compressing objects: 100% (165/165), done.
remote: Total 870 (delta 229), reused 242 (delta 170), pack-reused 522
Receiving objects: 100% (870/870), 387.70 KiB | 973.00 KiB/s, done.
Resolving deltas: 100% (439/439), done.

Let's initialize all the third-party submodules and Git Large File Storage support:

fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0$ cd tensorrtllm_backend/
fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0/tensorrtllm_backend$ git submodule update --init --recursive
Submodule 'tensorrt_llm' (https://github.com/NVIDIA/TensorRT-LLM.git) registered for path 'tensorrt_llm'
Cloning into '/aipsf600/project-helix/TensonRT-LLM/v0.8.0/tensorrtllm_backend/tensorrt_llm'...
Submodule path 'tensorrt_llm': checked out 'b57221b764bc579cbb2490154916a871f620e2c4'
Submodule '3rdparty/NVTX' (https://github.com/NVIDIA/NVTX.git) registered for path 'tensorrt_llm/3rdparty/NVTX'
Submodule '3rdparty/cutlass' (https://github.com/NVIDIA/cutlass.git) registered for path 'tensorrt_llm/3rdparty/cutlass'
Submodule '3rdparty/cxxopts' (https://github.com/jarro2783/cxxopts) registered for path 'tensorrt_llm/3rdparty/cxxopts'
Submodule '3rdparty/json' (https://github.com/nlohmann/json.git) registered for path 'tensorrt_llm/3rdparty/json'
Cloning into '/aipsf600/project-helix/TensonRT-LLM/v0.8.0/tensorrtllm_backend/tensorrt_llm/3rdparty/NVTX'...
Cloning into '/aipsf600/project-helix/TensonRT-LLM/v0.8.0/tensorrtllm_backend/tensorrt_llm/3rdparty/cutlass'...
Cloning into '/aipsf600/project-helix/TensonRT-LLM/v0.8.0/tensorrtllm_backend/tensorrt_llm/3rdparty/cxxopts'...
Cloning into '/aipsf600/project-helix/TensonRT-LLM/v0.8.0/tensorrtllm_backend/tensorrt_llm/3rdparty/json'...
Submodule path 'tensorrt_llm/3rdparty/NVTX': checked out 'a1ceb0677f67371ed29a2b1c022794f077db5fe7'
Submodule path 'tensorrt_llm/3rdparty/cutlass': checked out '39c6a83f231d6db2bc6b9c251e7add77d68cbfb4'
Submodule path 'tensorrt_llm/3rdparty/cxxopts': checked out 'eb787304d67ec22f7c3a184ee8b4c481d04357fd'
Submodule path 'tensorrt_llm/3rdparty/json': checked out 'bc889afb4c5bf1c0d8ee29ef35eaaf4c8bef8a5d'

fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0/tensorrtllm_backend$ git lfs install
Updated git hooks.
Git LFS initialized.

fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0/tensorrtllm_backend$ git lfs pull

I am now ready to copy the engine files to the cloned repository:

fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0/tensorrtllm_backend$ cp ../TensorRT-LLM/examples/llama/out/* all_models/inflight_batcher_llm/tensorrt_llm/1/
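The engine files are about 35 GB each, so it can be worth confirming the copy completed. Below is a minimal Python sketch that compares file names and sizes between the source and destination directories of the cp command above. A size match is cheap to check, but it will not catch corruption that preserves size; a checksum would, at the cost of reading every byte.

```python
from pathlib import Path


def copy_mismatches(src_dir, dst_dir, pattern="*"):
    """List files in src_dir that are missing from dst_dir or differ in size."""
    src, dst = Path(src_dir), Path(dst_dir)
    problems = []
    for f in sorted(src.glob(pattern)):
        if not f.is_file():
            continue  # skip subdirectories
        target = dst / f.name
        if not target.is_file() or target.stat().st_size != f.stat().st_size:
            problems.append(f.name)
    return problems
```

Run from the tensorrtllm_backend directory, `copy_mismatches("../TensorRT-LLM/examples/llama/out", "all_models/inflight_batcher_llm/tensorrt_llm/1")` should return an empty list when the copy is complete.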

The next step can be done either by manually modifying the config.pbtxt files under the various model directories or by using the fill_template.py script to write the modifications for us. I am going to use fill_template.py, but that is purely my preference. Let me update those parameters:

fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0/tensorrtllm_backend$ export HF_LLAMA_MODEL=meta-llama/Llama-2-70b-chat-hf

fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0/tensorrtllm_backend$ cp all_models/inflight_batcher_llm/ llama_ifb -r

fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0/tensorrtllm_backend$ python3 tools/fill_template.py -i llama_ifb/preprocessing/config.pbtxt tokenizer_dir:${HF_LLAMA_MODEL},tokenizer_type:llama,triton_max_batch_size:64,preprocessing_instance_count:1

fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0/tensorrtllm_backend$ python3 tools/fill_template.py -i llama_ifb/postprocessing/config.pbtxt tokenizer_dir:${HF_LLAMA_MODEL},tokenizer_type:llama,triton_max_batch_size:64,postprocessing_instance_count:1

fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0/tensorrtllm_backend$ python3 tools/fill_template.py -i llama_ifb/tensorrt_llm_bls/config.pbtxt triton_max_batch_size:64,decoupled_mode:False,bls_instance_count:1,accumulate_tokens:False

fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0/tensorrtllm_backend$ python3 tools/fill_template.py -i llama_ifb/ensemble/config.pbtxt triton_max_batch_size:64

fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0/tensorrtllm_backend$ python3 tools/fill_template.py -i llama_ifb/tensorrt_llm/config.pbtxt triton_max_batch_size:64,decoupled_mode:False,max_beam_width:1,engine_dir:/llama_ifb/tensorrt_llm/1/,max_tokens_in_paged_kv_cache:2560,max_attention_window_size:2560,kv_cache_free_gpu_mem_fraction:0.5,exclude_input_in_output:True,enable_kv_cache_reuse:False,batching_strategy:inflight_batching,max_queue_delay_microseconds:600
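As far as I can tell, tools/fill_template.py treats each `key:value` pair in its comma-separated argument as a substitution for a `${key}` placeholder in the config.pbtxt template. The Python sketch below re-implements that idea with the standard library's `string.Template` so the mechanics are visible. It is not the script itself, and the tiny template used in the test is made up. Splitting each pair on the first colon only is my choice, so that values such as paths stay intact.

```python
from string import Template


def parse_substitutions(arg):
    """Parse 'key:value,key:value' into a dict, splitting each pair on the first ':'."""
    pairs = (item.split(":", 1) for item in arg.split(",") if item)
    return {key: value for key, value in pairs}


def fill_template(text, arg):
    """Replace ${key} placeholders; placeholders with no value are left untouched."""
    return Template(text).safe_substitute(parse_substitutions(arg))
```

Using `safe_substitute` rather than `substitute` means a placeholder you did not pass (for example, one you intend to fill in a later call) survives unchanged instead of raising `KeyError`. This is also why it is worth grepping the filled config.pbtxt files for leftover `${` before starting the server.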

I am now ready to build the Triton Inference Server Docker container that will serve my newly converted LLM (this step won't be required after the 24.02 launch):

fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0/tensorrtllm_backend$ DOCKER_BUILDKIT=1 docker build -t triton_trt_llm -f dockerfile/Dockerfile.trt_llm_backend .
[+] Building 2572.9s (33/33) FINISHED docker:default
=> [internal] load build definition from Dockerfile.trt_llm_backend 0.0s
=> => transferring dockerfile: 2.45kB 0.0s
=> [internal] load .dockerignore 0.0s
=> => transferring context: 2B 0.0s
=> [internal] load metadata for nvcr.io/nvidia/tritonserver:23.12-py3 0.7s
=> [internal] load build context 47.6s
=> => transferring context: 580.29MB 47.6s
=> [base 1/6] FROM nvcr.io/nvidia/tritonserver:23.12-py3@sha256:363924e9f3b39154bf2075586145b5d15b20f6d695bd7e8de4448c3299064af0 0.0s
=> CACHED [base 2/6] RUN apt-get update && apt-get install -y --no-install-recommends rapidjson-dev python-is-python3 ccache git-lfs 0.0s
=> [base 3/6] COPY requirements.txt /tmp/ 2.0s
=> [base 4/6] RUN pip3 install -r /tmp/requirements.txt --extra-index-url https://pypi.ngc.nvidia.com 28.1s
=> [base 5/6] RUN apt-get remove --purge -y tensorrt* 1.6s
=> [base 6/6] RUN pip uninstall -y tensorrt 0.9s
=> [dev 1/10] COPY tensorrt_llm/docker/common/install_tensorrt.sh /tmp/ 0.0s
=> [dev 2/10] RUN bash /tmp/install_tensorrt.sh && rm /tmp/install_tensorrt.sh 228.0s
=> [dev 3/10] COPY tensorrt_llm/docker/common/install_polygraphy.sh /tmp/ 0.0s
=> [dev 4/10] RUN bash /tmp/install_polygraphy.sh && rm /tmp/install_polygraphy.sh 2.5s
=> [dev 5/10] COPY tensorrt_llm/docker/common/install_cmake.sh /tmp/ 0.0s
=> [dev 6/10] RUN bash /tmp/install_cmake.sh && rm /tmp/install_cmake.sh 3.0s
=> [dev 7/10] COPY tensorrt_llm/docker/common/install_mpi4py.sh /tmp/ 0.0s
=> [dev 8/10] RUN bash /tmp/install_mpi4py.sh && rm /tmp/install_mpi4py.sh 38.7s
=> [dev 9/10] COPY tensorrt_llm/docker/common/install_pytorch.sh install_pytorch.sh 0.0s
=> [dev 10/10] RUN bash ./install_pytorch.sh pypi && rm install_pytorch.sh 96.6s
=> [trt_llm_builder 1/4] WORKDIR /app 0.0s
=> [trt_llm_builder 2/4] COPY scripts scripts 0.0s
=> [trt_llm_builder 3/4] COPY tensorrt_llm tensorrt_llm 3.0s
=> [trt_llm_builder 4/4] RUN cd tensorrt_llm && python3 scripts/build_wheel.py --trt_root="/usr/local/tensorrt" -i -c && cd .. 1959.1s
=> [trt_llm_backend_builder 1/3] WORKDIR /app/ 0.0s
=> [trt_llm_backend_builder 2/3] COPY inflight_batcher_llm inflight_batcher_llm 0.0s
=> [trt_llm_backend_builder 3/3] RUN cd inflight_batcher_llm && bash scripts/build.sh && cd .. 68.3s
=> [final 1/5] WORKDIR /app/ 0.0s
=> [final 2/5] COPY --from=trt_llm_builder /app/tensorrt_llm/build /app/tensorrt_llm/build 0.1s
=> [final 3/5] RUN cd /app/tensorrt_llm/build && pip3 install *.whl 22.8s
=> [final 4/5] RUN mkdir /opt/tritonserver/backends/tensorrtllm 0.4s
=> [final 5/5] COPY --from=trt_llm_backend_builder /app/inflight_batcher_llm/build/libtriton_tensorrtllm.so /opt/tritonserver/backends/tensorrtllm 0.0s
=> exporting to image 69.3s
=> => exporting layers 69.3s
=> => writing image sha256:03f4164551998d04aefa2817ea4ba9f53737874fc3604e284faa8f75bc99180c 0.0s
=> => naming to docker.io/library/triton_trt_llm

If I check my Docker images, I can see that I now have a new image for the Triton Inference Server (this step will no longer be required after the 24.02 launch, because there will be no need to build a custom Triton Inference Server container anymore):

fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0/tensorrtllm_backend$ docker images
REPOSITORY       TAG      IMAGE ID       CREATED       SIZE
triton_trt_llm   latest   03f416455199   2 hours ago   53.1GB

I can now start the newly created docker container:

fbronzati@node002:/aipsf600/project-helix/TensonRT-LLM/v0.8.0/tensorrtllm_backend$ docker run --rm -it --net host --shm-size=2g --ulimit memlock=-1 --ulimit stack=67108864 --gpus all -v $(pwd)/llama_ifb:/llama_ifb -v $(pwd)/scripts:/opt/scripts triton_trt_llm:latest bash

=============================== Triton Inference Server ===============================

NVIDIA Release 23.12 (build 77457706)
Triton Server Version 2.41.0

Copyright (c) 2018-2023, NVIDIA CORPORATION & AFFILIATES. All rights reserved.

Various files include modifications (c) NVIDIA CORPORATION & AFFILIATES. All rights reserved.

This container image and its contents are governed by the NVIDIA Deep Learning Container License.
By pulling and using the container, you accept the terms and conditions of this license:
https://developer.nvidia.com/ngc/nvidia-deep-learning-container-license

root@node002:/app#

After the launch of version 24.02, the name of the container image (triton_trt_llm here) will change, so you will need to keep an eye out for the new name. I will update this blog with the changes post-launch.

Once the container is started, I am again at a shell prompt, this time inside the container. I need to log in to Hugging Face again:

root@node002:/app# huggingface-cli login --token ******
Token will not been saved to git credential helper. Pass `add_to_git_credential=True` if you want to set the git credential as well.
Token is valid (permission: read).
Your token has been saved to /root/.cache/huggingface/token
Login successful

And I can now run the Triton Inference server:

root@node002:/app# python /opt/scripts/launch_triton_server.py --model_repo /llama_ifb/ --world_size 4
root@node002:/app# I0131 16:54:40.234909 135 pinned_memory_manager.cc:241] Pinned memory pool is created at '0x7ffd8c000000' with size 268435456
I0131 16:54:40.243088 133 pinned_memory_manager.cc:241] Pinned memory pool is created at '0x7ffd8c000000' with size 268435456
I0131 16:54:40.252026 133 cuda_memory_manager.cc:107] CUDA memory pool is created on device 0 with size 67108864
I0131 16:54:40.252033 133 cuda_memory_manager.cc:107] CUDA memory pool is created on device 1 with size 67108864
I0131 16:54:40.252035 133 cuda_memory_manager.cc:107] CUDA memory pool is created on device 2 with size 67108864
I0131 16:54:40.252037 133 cuda_memory_manager.cc:107] CUDA memory pool is created on device 3 with size 67108864
I0131 16:54:40.252040 133 cuda_memory_manager.cc:107] CUDA memory pool is created on device 4 with size 67108864
I0131 16:54:40.252042 133 cuda_memory_manager.cc:107] CUDA memory pool is created on device 5 with size 67108864
I0131 16:54:40.252044 133 cuda_memory_manager.cc:107] CUDA memory pool is created on device 6 with size 67108864
I0131 16:54:40.252046 133 cuda_memory_manager.cc:107] CUDA memory pool is created on device 7 with size 67108864
.....
I0131 16:57:04.101557 132 server.cc:676]
+------------------+---------+--------+
| Model            | Version | Status |
+------------------+---------+--------+
| ensemble         | 1       | READY  |
| postprocessing   | 1       | READY  |
| preprocessing    | 1       | READY  |
| tensorrt_llm     | 1       | READY  |
| tensorrt_llm_bls | 1       | READY  |
+------------------+---------+--------+

I0131 16:57:04.691252 132 metrics.cc:817] Collecting metrics for GPU 0: NVIDIA H100 80GB HBM3
I0131 16:57:04.691303 132 metrics.cc:817] Collecting metrics for GPU 1: NVIDIA H100 80GB HBM3
I0131 16:57:04.691315 132 metrics.cc:817] Collecting metrics for GPU 2: NVIDIA H100 80GB HBM3
I0131 16:57:04.691325 132 metrics.cc:817] Collecting metrics for GPU 3: NVIDIA H100 80GB HBM3
I0131 16:57:04.691335 132 metrics.cc:817] Collecting metrics for GPU 4: NVIDIA H100 80GB HBM3
I0131 16:57:04.691342 132 metrics.cc:817] Collecting metrics for GPU 5: NVIDIA H100 80GB HBM3
I0131 16:57:04.691350 132 metrics.cc:817] Collecting metrics for GPU 6: NVIDIA H100 80GB HBM3
I0131 16:57:04.691358 132 metrics.cc:817] Collecting metrics for GPU 7: NVIDIA H100 80GB HBM3
I0131 16:57:04.728148 132 metrics.cc:710] Collecting CPU metrics
I0131 16:57:04.728434 132 tritonserver.cc:2483]
+----------------------------------+------------------------------------------+
| Option                           | Value                                    |
+----------------------------------+------------------------------------------+
| server_id                        | triton                                   |
| server_version                   | 2.41.0                                   |
| server_extensions                | classification sequence model_repository |
|                                  | model_repository(unload_dependents)      |
|                                  | schedule_policy model_configuration      |
|                                  | system_shared_memory cuda_shared_memory  |
|                                  | binary_tensor_data parameters statistics |
|                                  | trace logging                            |
| model_repository_path[0]         | /llama_ifb/                              |
| model_control_mode               | MODE_NONE                                |
| strict_model_config              | 1                                        |
| rate_limit                       | OFF                                      |
| pinned_memory_pool_byte_size     | 268435456                                |
| cuda_memory_pool_byte_size{0}    | 67108864                                 |
| cuda_memory_pool_byte_size{1}    | 67108864                                 |
| cuda_memory_pool_byte_size{2}    | 67108864                                 |
| cuda_memory_pool_byte_size{3}    | 67108864                                 |
| cuda_memory_pool_byte_size{4}    | 67108864                                 |
| cuda_memory_pool_byte_size{5}    | 67108864                                 |
| cuda_memory_pool_byte_size{6}    | 67108864                                 |
| cuda_memory_pool_byte_size{7}    | 67108864                                 |
| min_supported_compute_capability | 6.0                                      |
| strict_readiness                 | 1                                        |
| exit_timeout                     | 30                                       |
| cache_enabled                    | 0                                        |
+----------------------------------+------------------------------------------+

I0131 16:57:04.738042 132 grpc_server.cc:2495] Started GRPCInferenceService at 0.0.0.0:8001
I0131 16:57:04.738303 132 http_server.cc:4619] Started HTTPService at 0.0.0.0:8000
I0131 16:57:04.779541 132 http_server.cc:282] Started Metrics Service at 0.0.0.0:8002

Again, I have removed some of the output lines to keep things within a reasonable size. Once the start sequence has completed, I can see that the Triton Inference server is listening on port 8000, so let's test it, right?
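Before sending a prompt, it can help to confirm that the server reports itself as ready. The following is a minimal Python sketch of such a check, assuming the server is reachable at http://localhost:8000 as in this walkthrough and that it exposes the `/v2/health/ready` endpoint from the KServe v2 HTTP protocol that Triton implements. The helper names `ready_url` and `is_server_ready` are hypothetical, chosen only for this example.

```python
import urllib.error
import urllib.request


def ready_url(base_url: str) -> str:
    """Build the readiness URL (KServe v2 protocol path, assumed to be served by Triton)."""
    return base_url.rstrip("/") + "/v2/health/ready"


def is_server_ready(base_url: str = "http://localhost:8000", timeout: float = 2.0) -> bool:
    """Return True only if the readiness probe answers with HTTP 200."""
    try:
        with urllib.request.urlopen(ready_url(base_url), timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or a non-2xx reply (HTTPError is a URLError).
        return False


if __name__ == "__main__":
    print("ready" if is_server_ready() else "not ready")
```

Run from inside the container, this should print `ready` once every model in the model table is listed as READY; from another machine, pass that host's URL as `base_url`.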

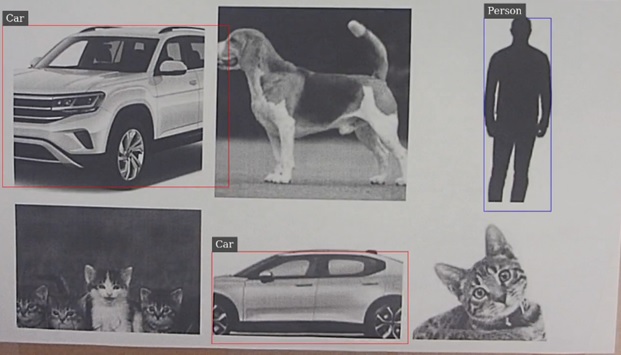

Let's ask the Llama 2 model running within the Triton Inference Server what the capital of Texas in the US is:

root@node002:/app# curl -X POST localhost:8000/v2/models/ensemble/generate -d '{"text_input": " <s>[INST] <<SYS>> You are a helpful assistant <</SYS>> What is the capital of Texas?[/INST]","parameters": {"max_tokens": 100,"bad_words":[""],"stop_words":[""],"temperature":0.2,"top_p":0.7}}'

Because I am running the curl command directly from inside the container running the Triton Inference Server, I am using localhost as the endpoint. If you are running the curl command from outside the container, localhost will need to be replaced by the proper hostname. This is the response I got:

{"context_logits":0.0,"cum_log_probs":0.0,"generation_logits":0.0,"model_name":"ensemble","model_version":"1","output_log_probs":[0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0],"sequence_end":false,"sequence_id":0,"sequence_start":false,"text_output":" Sure, I'd be happy to help! The capital of Texas is Austin."}
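For readers who prefer to script the request rather than use curl, here is a minimal Python sketch of the same call using only the standard library. It assumes the endpoint, the model name (`ensemble`), and the JSON body exactly as used in the curl command above; `build_generate_payload`, `generate`, and `extract_text` are hypothetical helper names, and the prompt template simply reproduces the string from the curl example rather than the full Llama 2 chat format.

```python
import json
import urllib.request


def build_generate_payload(question, system="You are a helpful assistant",
                           max_tokens=100, temperature=0.2, top_p=0.7):
    """Reproduce the prompt string and "parameters" object from the curl example."""
    prompt = f" <s>[INST] <<SYS>> {system} <</SYS>> {question}[/INST]"
    return {
        "text_input": prompt,
        "parameters": {
            "max_tokens": max_tokens,
            "bad_words": [""],
            "stop_words": [""],
            "temperature": temperature,
            "top_p": top_p,
        },
    }


def generate(payload, base_url="http://localhost:8000", model="ensemble", timeout=120.0):
    """POST the payload to the model's generate endpoint and decode the JSON reply."""
    url = f"{base_url.rstrip('/')}/v2/models/{model}/generate"
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def extract_text(reply):
    """Return the generated answer; strip surrounding whitespace (the sample reply starts with a space)."""
    return reply["text_output"].strip()
```

From inside the container, `extract_text(generate(build_generate_payload("What is the capital of Texas?")))` should return text similar to the `text_output` field shown above; from another host, pass the server's hostname in `base_url`.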

Yay! It works and I got the right answer from the LLM.

Conclusion

If you have reached this point in the blog, thank you for staying with me. Taking a large language model from Hugging Face (as long as it is one of the supported models) and running it in the NVIDIA Triton Inference Server allows customers to leverage the automation and simplicity built into the NVIDIA Triton Inference Server, all while retaining the flexibility to choose the large language model that best meets their needs. It is almost like having your cake and eating it too.

Until next time, thank you for reading.

MLPerf™ Inference 4.0 on Dell PowerEdge Server with Intel® 5th Generation Xeon® CPU

Mon, 22 Apr 2024 05:40:38 -0000


Introduction

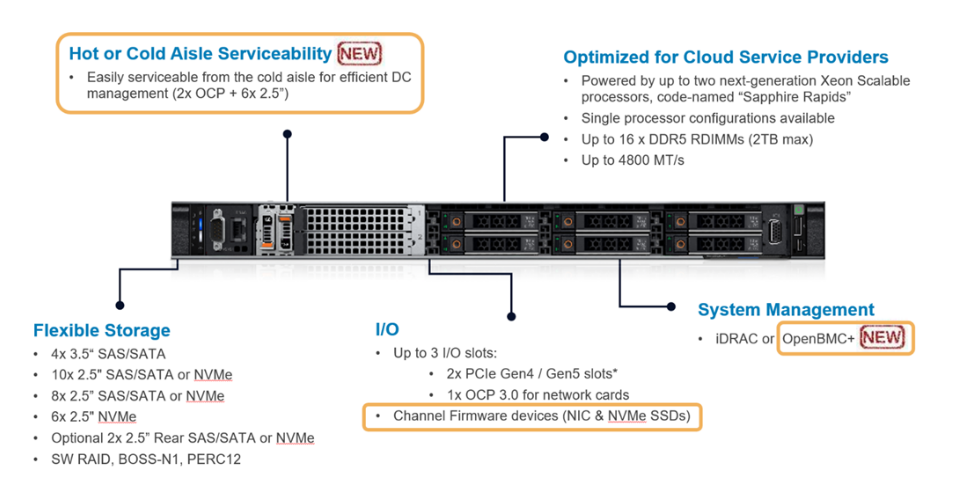

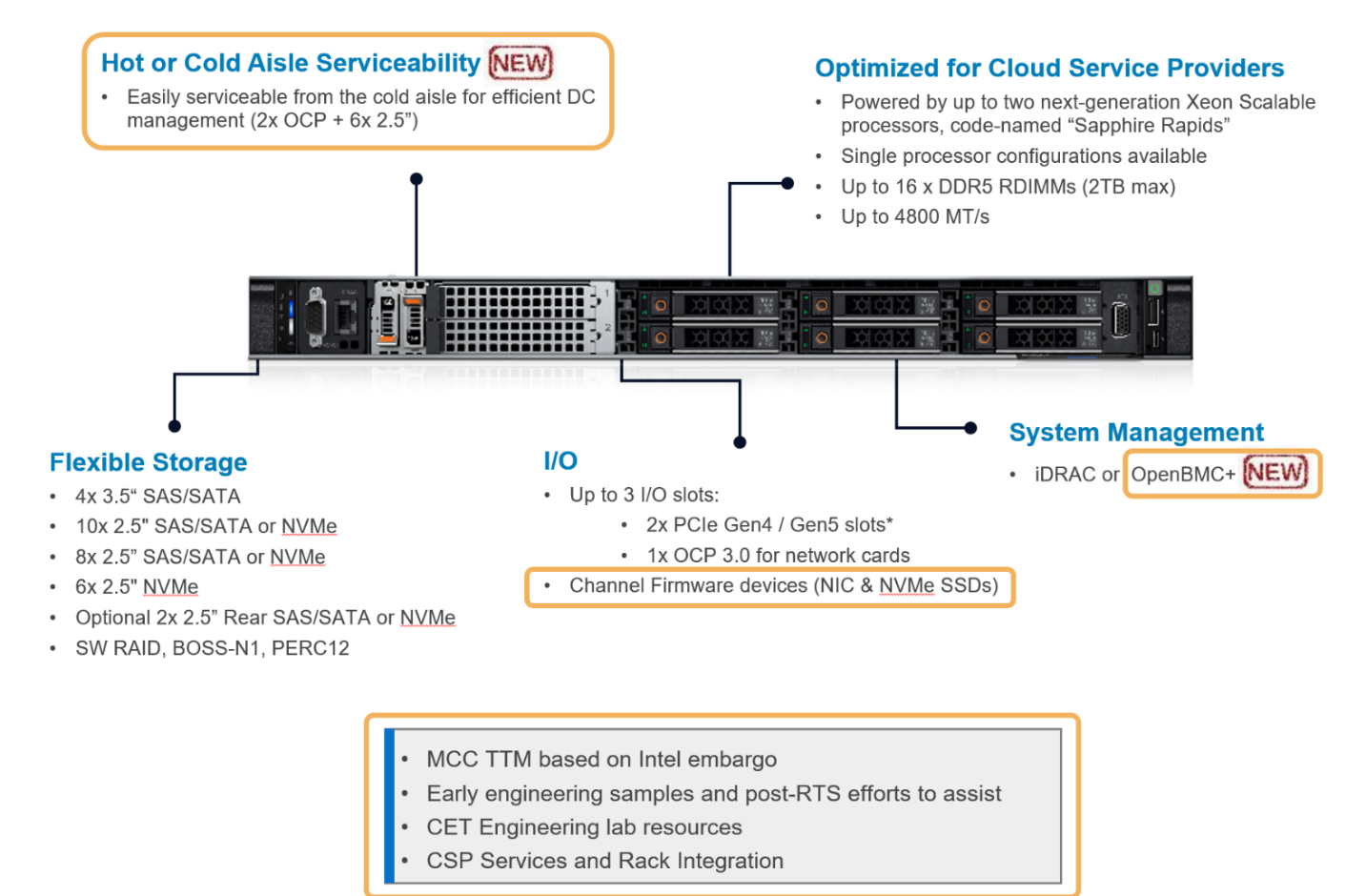

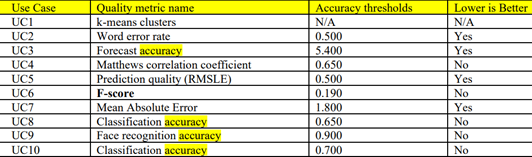

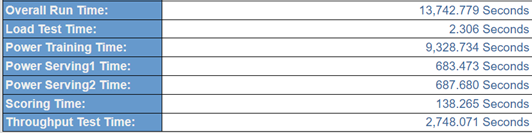

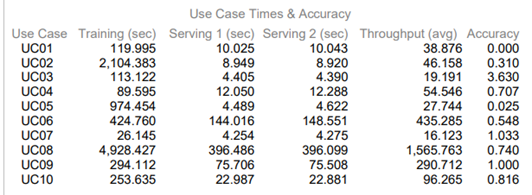

In this blog, we present the MLPerf™ v4.0 Data Center Inference results obtained on a Dell PowerEdge R760 with the latest 5th Generation Intel® Xeon® Scalable Processors (a CPU-only system).

These new Intel® Xeon® processors use an Intel® AMX matrix multiplication engine in each core to boost overall inference performance. With a focus on ease of use, Dell Technologies delivers exceptional CPU performance out of the box with an optimized BIOS profile that fully unleashes the power of Intel’s oneDNN library, which is integrated with both the PyTorch and TensorFlow frameworks. The server configuration and the CPU specifications used in the benchmark experiments are shown in Tables 1 and 2, respectively.

Table 1. Dell PowerEdge R760 Server Configuration

| Parameter | Value |
| --- | --- |
| System Name | PowerEdge R760 |
| Status | Available |
| System Type | Data Center |
| Number of Nodes | 1 |
| Host Processor Model | 5th Generation Intel® Xeon® Scalable Processors |
| Host Processors per Node | 2 |
| Host Processor Core Count | 64 |
| Host Processor Frequency | 1.9 GHz, 3.9 GHz Turbo Boost |
| Host Memory Capacity | 2 TB, 16 x 128 GB 5600 MT/s |
| Host Storage Capacity | 7.68 TB, NVMe |

Table 2. 5th Generation Intel® Xeon® Scalable Processor Technical Specifications

| Specification | Value |
| --- | --- |
| Product Collection | 5th Generation Intel® Xeon® Scalable Processors |
| Processor Name | Platinum 8592+ |
| Status | Launched |
| # of CPU Cores | 64 |
| # of Threads | 128 |
| Base Frequency | 1.9 GHz |
| Max Turbo Speed | 3.9 GHz |
| Cache L3 | 320 MB |
| Memory Type | DDR5 5600 MT/s |
| ECC Memory Supported | Yes |

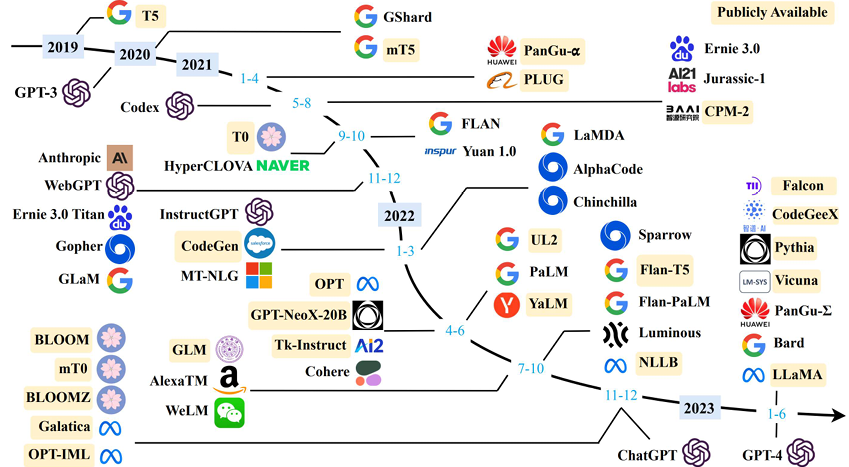

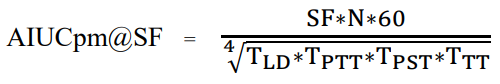

MLPerf™ Inference v4.0 - Datacenter

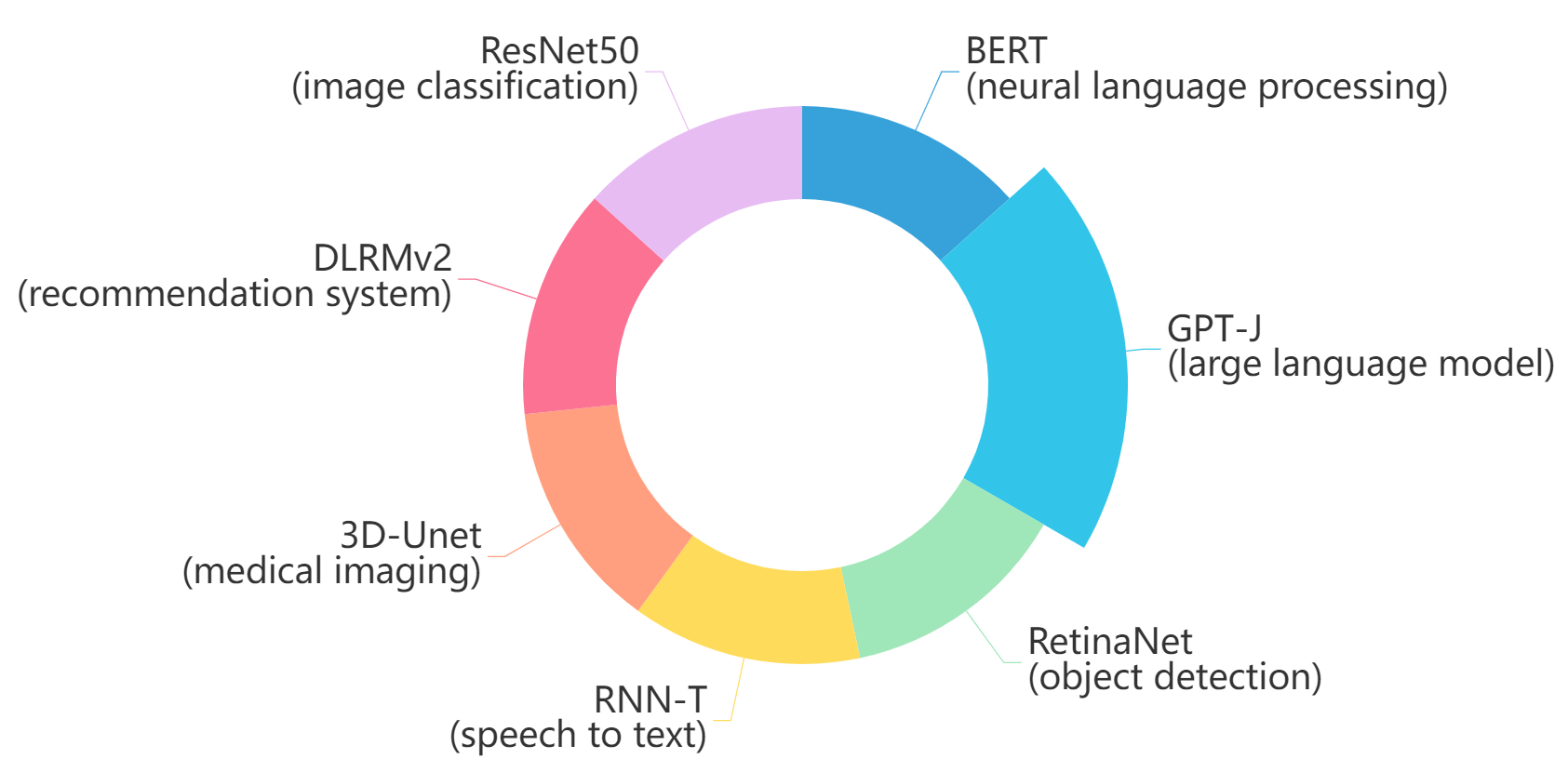

The MLPerf™ inference benchmark measures how fast a system can perform ML inference using a trained model on new data in a variety of deployment scenarios. There are two benchmark suites, one for Datacenter systems and one for Edge. Figure 1 shows the seven models in the official v4.0 release for the Datacenter category, each targeting a different task, that were run on this PowerEdge R760 and submitted in the closed division. The dataset and quality target defined for each benchmark are listed in Table 3.

Figure 1. Benchmarked models for MLPerf™ datacenter inference v4.0

Table 3. Datacenter Suite Benchmarks. Source: MLCommons™

Area | Task | Model | Dataset | QSL Size | Quality | Server latency constraint |

Vision | Image classification | ResNet50-v1.5 | ImageNet (224x224) | 1024 | 99% of FP32 (76.46%) | 15 ms |

Vision | Object detection | RetinaNet | OpenImages (800x800) | 64 | 99% of FP32 (0.20 mAP) | 100 ms |

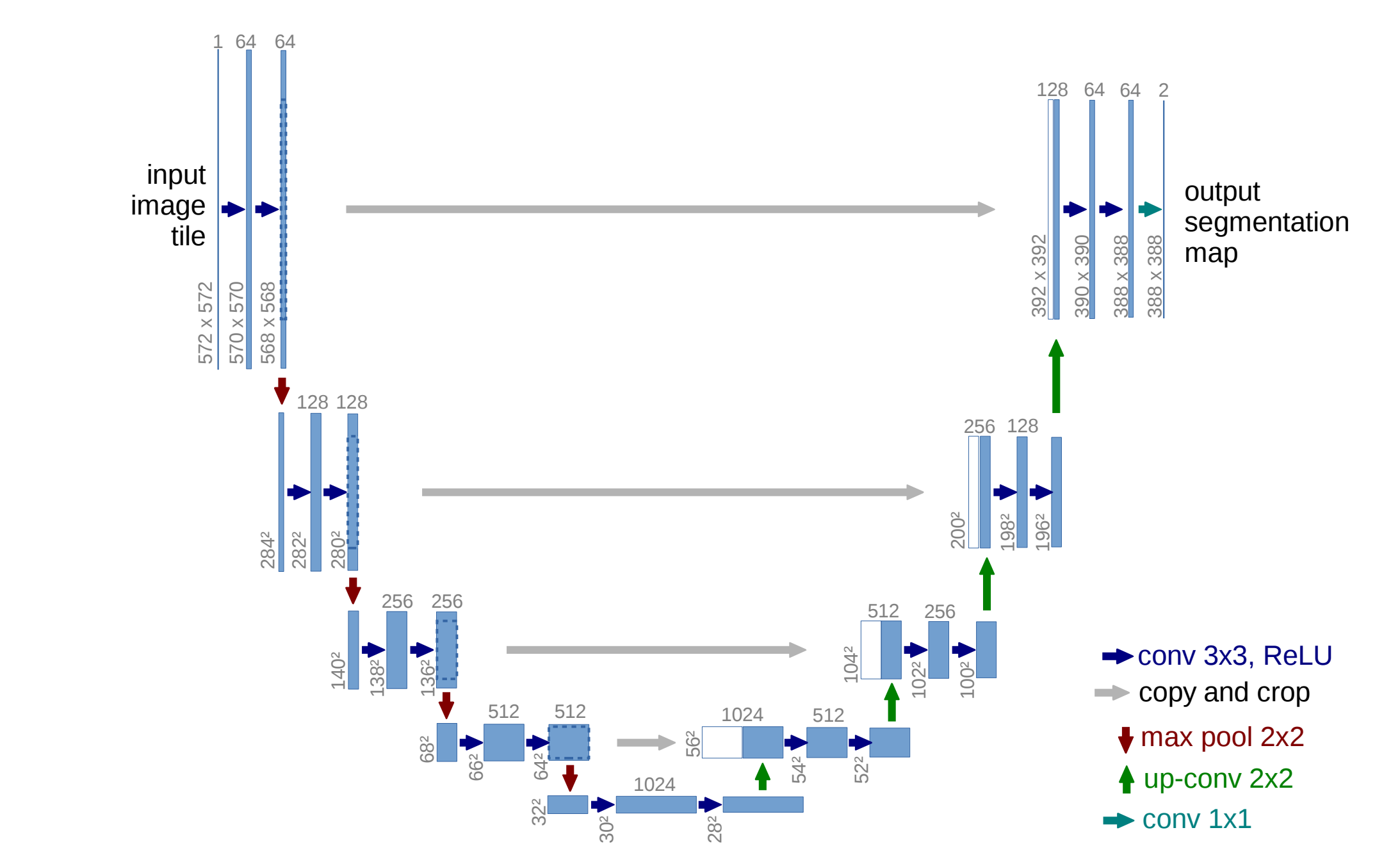

Vision | Medical imaging | 3D-Unet | KITS 2019 (602x512x512) | 16 | 99.9% of FP32 (0.86330 mean DICE score) | N/A |

Speech | Speech-to-text | RNN-T | Librispeech dev-clean (samples < 15 seconds) | 2513 | 99% of FP32 (1 - WER, where WER=7.452253714852645%) | 1000 ms |

Language | Language processing | BERT-large | SQuAD v1.1 (max_seq_len=384) | 10833 | 99% of FP32 and 99.9% of FP32 (f1_score=90.874%) | 130 ms |

Language | Summarization | GPT-J | CNN Dailymail (v3.0.0, max_seq_len=2048) | 13368 | 99% of FP32 (f1_score=80.25% rouge1=42.9865, rouge2=20.1235, rougeL=29.9881). | 20 s |

Commerce | Recommendation | DLRMv2 | Criteo 4TB multi-hot | 204800 | 99% of FP32 (AUC=80.25%) | 60 ms |

Scenarios

The models are deployed in a variety of critical inference applications, or use cases, known as “scenarios.” Each scenario requires different metrics that reflect performance in a production environment. Each scenario is described below, and Table 4 shows the scenarios required for each Datacenter benchmark included in this v4.0 submission.

Offline scenario: represents applications that process input in batches, where all data is available immediately and there is no latency constraint. Performance is measured in samples per second.

Server scenario: represents the deployment of online applications that receive randomly timed input queries. Performance is measured in queries per second (QPS) subject to a latency bound. The Server scenario is more demanding because of its latency constraints and the way input queries are generated, which is reflected in lower throughput compared to the Offline scenario.
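To make the difference between the two scenarios concrete, here is a small, self-contained Python simulation. It is not LoadGen, the official MLPerf load generator; it only assumes that Server-scenario queries arrive as a Poisson process (exponentially distributed gaps) and that a run passes if a chosen latency percentile stays under the bound. The 99th-percentile default, the single first-in, first-out worker, and the fixed service time are illustrative assumptions, and all function names are hypothetical.

```python
import math
import random


def poisson_arrivals(target_qps, n_queries, seed=0):
    """Arrival times in seconds for a Poisson process with rate target_qps."""
    rng = random.Random(seed)
    t, times = 0.0, []
    for _ in range(n_queries):
        t += rng.expovariate(target_qps)  # exponential gap between queries
        times.append(t)
    return times


def simulate_fifo(arrivals, service_s):
    """Per-query latency on one first-in, first-out worker with a fixed service time."""
    free_at, latencies = 0.0, []
    for arrival in arrivals:
        start = max(arrival, free_at)  # wait if the worker is still busy
        free_at = start + service_s
        latencies.append(free_at - arrival)
    return latencies


def percentile(values, pct):
    """Nearest-rank percentile, pct in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def meets_latency_bound(latencies_s, bound_s, pct=99.0):
    """True if the chosen latency percentile is within the bound."""
    return percentile(latencies_s, pct) <= bound_s
```

For example, `simulate_fifo(poisson_arrivals(50.0, 10_000), 0.01)` models a worker that needs 10 ms per query at an offered load of 50 QPS. Raising the QPS until `meets_latency_bound(..., 0.015)` turns false illustrates why Server throughput ends up below Offline throughput, where the same worker could process samples back to back.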

Each Datacenter benchmark requires the following scenarios:

Table 4. Datacenter Suite Benchmark Scenarios. Source: MLCommons™

| Area | Task | Required Scenarios |
| --- | --- | --- |
| Vision | Image classification | Server, Offline |
| Vision | Object detection | Server, Offline |
| Vision | Medical imaging | Offline |
| Speech | Speech-to-text | Server, Offline |
| Language | Language processing | Server, Offline |
| Language | Summarization | Server, Offline |
| Commerce | Recommendation | Server, Offline |

Software stack and system configuration

The software stack and system configuration used for this submission is summarized in Table 5.

Table 5. System Configuration

| Parameter | Value |
| --- | --- |
| OS | CentOS Stream 8 (GNU/Linux x86_64) |
| Kernel | 6.7.4-1.el8.elrepo.x86_64 |
| Intel® Optimized Inference SW for MLPerf™ | MLPerf™ Intel® oneDNN integrated with Intel® Extension for PyTorch (IPEX) |
| ECC memory mode | ON |
| Host memory configuration | 2 TB, 16 x 128 GB, 1 DIMM per channel, well balanced |
| Turbo mode | ON |
| CPU frequency governor | Performance |

What is Intel® AMX (Advanced Matrix Extensions)?

Intel® AMX is a built-in accelerator that enables 5th Gen Intel® Xeon® Scalable processors to optimize deep learning (DL) training and inferencing workloads. With the high-speed matrix multiplications enabled by Intel® AMX, 5th Gen Intel® Xeon® Scalable processors can quickly pivot between optimizing general computing and AI workloads.

Imagine an automobile that could excel at city driving and then quickly shift to deliver Formula 1 racing performance. 5th Gen Intel® Xeon® Scalable processors deliver this level of flexibility. Developers can code AI functionality to take advantage of the Intel® AMX instruction set as well as code non-AI functionality to use the processor instruction set architecture (ISA). Intel® has integrated the oneAPI Deep Neural Network Library (oneDNN) – its oneAPI DL engine – into popular open-source tools for AI applications, including TensorFlow, PyTorch, PaddlePaddle, and ONNX.
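On a Linux host, the kernel reports AMX support through CPU flags in /proc/cpuinfo. The following is a minimal Python sketch for checking them, assuming the flag names `amx_tile`, `amx_bf16`, and `amx_int8` that recent Linux kernels expose. It only reads the flags; it does not verify that oneDNN or PyTorch will actually dispatch to AMX kernels. The function names are hypothetical.

```python
from pathlib import Path

AMX_FLAGS = {"amx_tile", "amx_bf16", "amx_int8"}


def amx_flags(cpuinfo_text):
    """Return the AMX flags found on the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            _, _, flags = line.partition(":")
            return AMX_FLAGS & set(flags.split())
    return set()


def host_amx_flags(path="/proc/cpuinfo"):
    """Read the local cpuinfo file if it exists (Linux only); otherwise report no flags."""
    cpuinfo = Path(path)
    return amx_flags(cpuinfo.read_text()) if cpuinfo.exists() else set()


if __name__ == "__main__":
    found = host_amx_flags()
    print("AMX flags:", ", ".join(sorted(found)) if found else "none")
```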

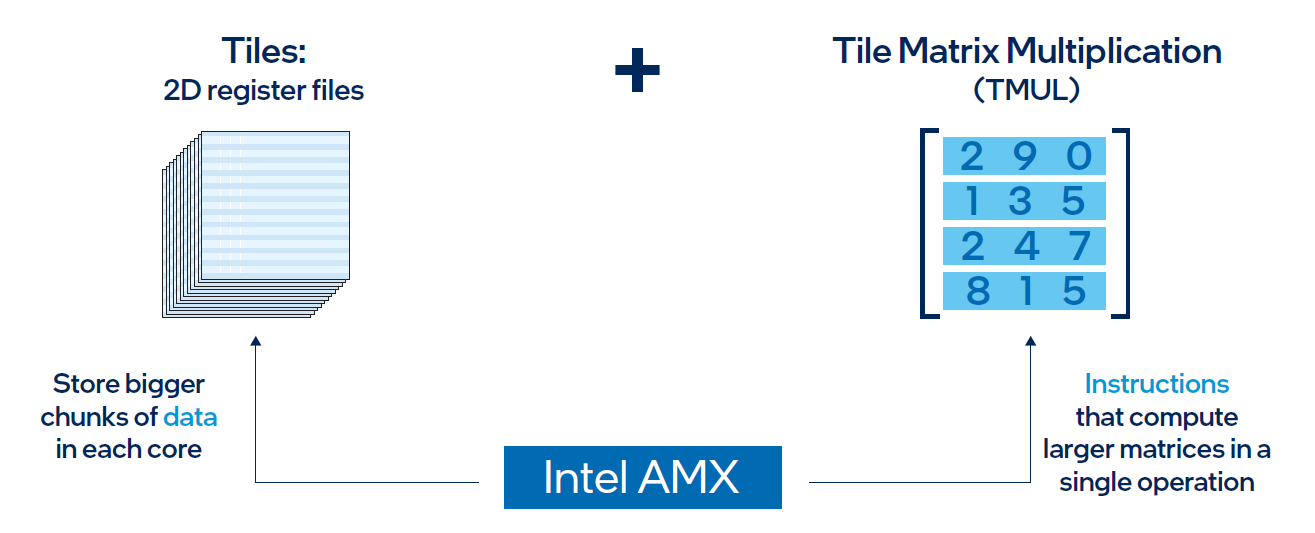

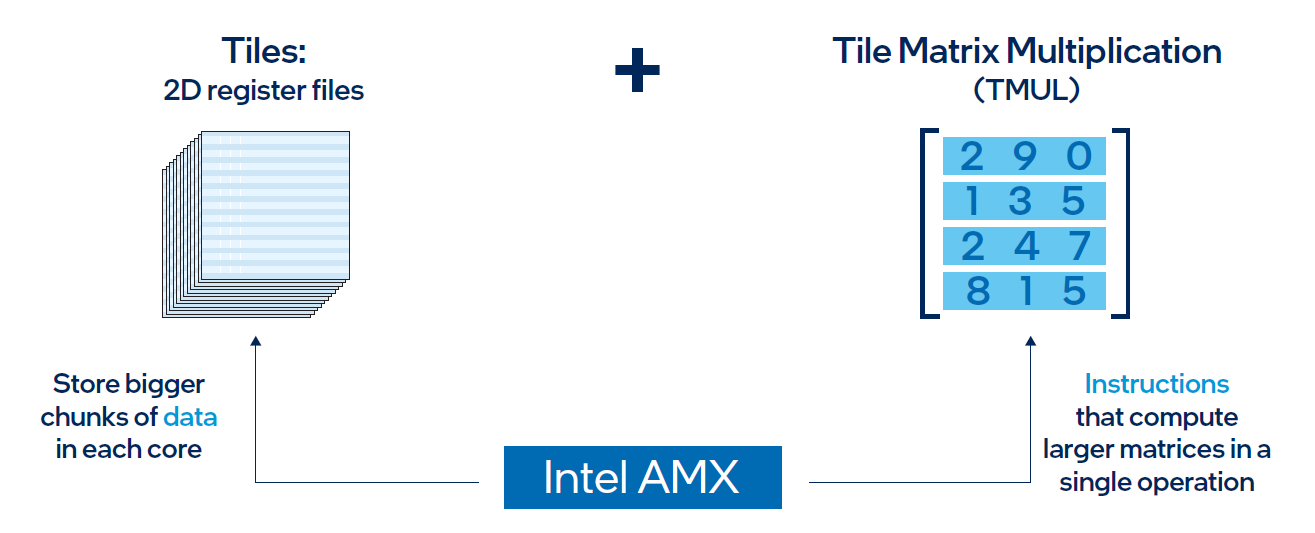

AMX architecture

Intel® AMX architecture consists of two components, as shown in Figure 2:

- Tiles consist of eight two-dimensional registers, each 1 kilobyte in size. They store large chunks of data.

- Tile Matrix Multiplication (TMUL) is an accelerator engine attached to the tiles that performs matrix-multiply computations for AI.

Figure 2. Intel® AMX architecture consists of 2D register files (tiles) and TMUL
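The 1 KB tile size determines how a matrix multiplication is broken up. Assuming BF16 inputs and FP32 accumulation, one 16-row tile holds a 16 x 32 block of BF16 values (16 x 32 x 2 bytes = 1 KB) or a 16 x 16 block of FP32 results (16 x 16 x 4 bytes = 1 KB). The pure-Python sketch below only illustrates that blocking pattern; it does not execute AMX instructions, and production kernels come from libraries such as oneDNN.

```python
TILE_BYTES = 1024                  # each AMX tile register holds 1 KB
TILE_ROWS = 16                     # 16 rows x 64 bytes per row
ROW_BYTES = TILE_BYTES // TILE_ROWS
BF16_BYTES, FP32_BYTES = 2, 4

M_T = TILE_ROWS                    # output rows per accumulator tile
N_T = ROW_BYTES // FP32_BYTES      # 16 FP32 outputs per row
K_T = ROW_BYTES // BF16_BYTES      # 32 BF16 inputs per row of an A tile


def matmul_naive(a, b):
    """Reference matrix product a @ b for lists of lists."""
    k, m = len(b), len(b[0])
    return [[sum(row[p] * b[p][j] for p in range(k)) for j in range(m)] for row in a]


def matmul_tiled(a, b):
    """Compute a @ b one 16 x 16 output tile at a time, streaming K in blocks of 32."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    for i0 in range(0, n, M_T):
        for j0 in range(0, m, N_T):
            # The C block [i0:i0+16, j0:j0+16] plays the role of an accumulator tile.
            for p0 in range(0, k, K_T):
                # One A block (16 x 32) times one B block (32 x 16), added into C.
                for i in range(i0, min(i0 + M_T, n)):
                    for j in range(j0, min(j0 + N_T, m)):
                        acc = c[i][j]
                        for p in range(p0, min(p0 + K_T, k)):
                            acc += a[i][p] * b[p][j]
                        c[i][j] = acc
    return c
```

The edge handling with `min(...)` lets matrices whose sizes are not multiples of the tile shape reuse the same loop; real kernels typically pad or use partial tile configurations instead.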

Results

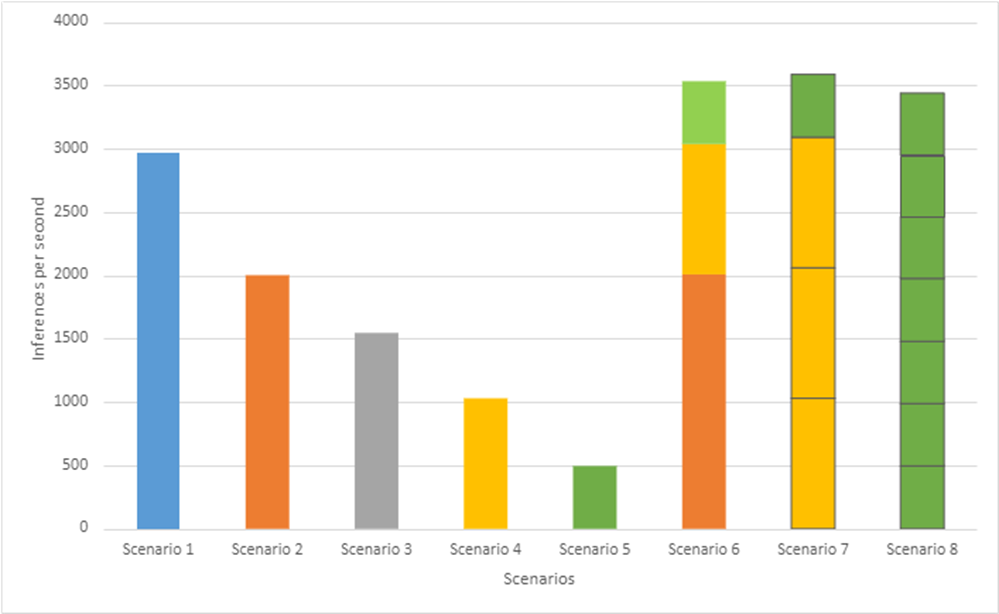

Both the MLPerf™ v3.1 and MLPerf™ v4.0 results are based on the Dell PowerEdge R760 server, but with different generations of Xeon® CPUs (4th Generation Intel® Xeon® CPUs for MLPerf™ v3.1 versus 5th Generation Intel® Xeon® CPUs for MLPerf™ v4.0) and different optimized software stacks. In this section, we show the two sets of results side by side so that the improvement over the previous submission is easy to see.

Comparing Performance from MLPerf™ v4.0 to MLPerf™ v3.1

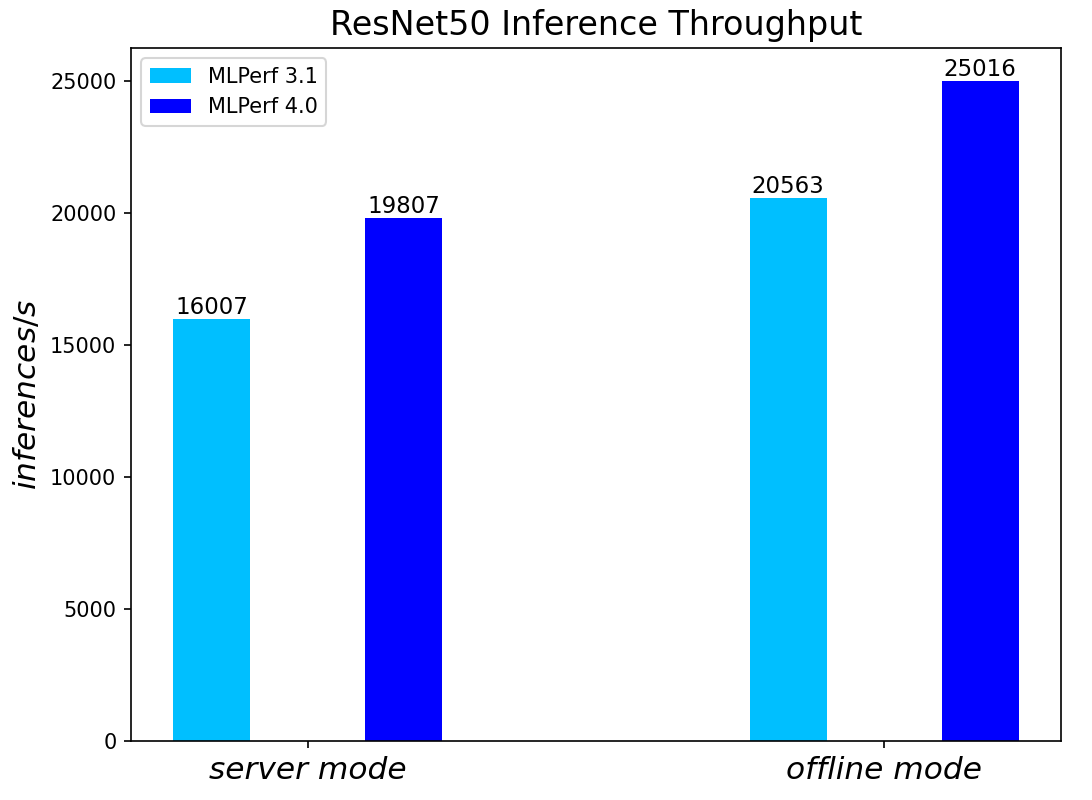

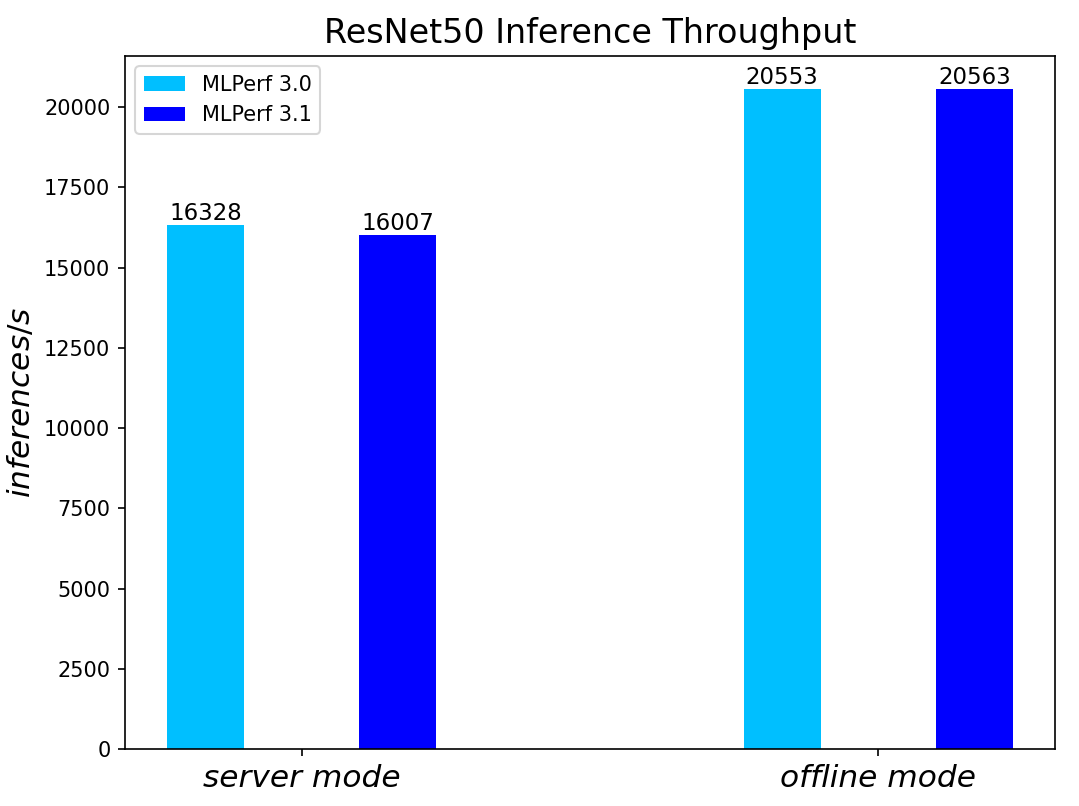

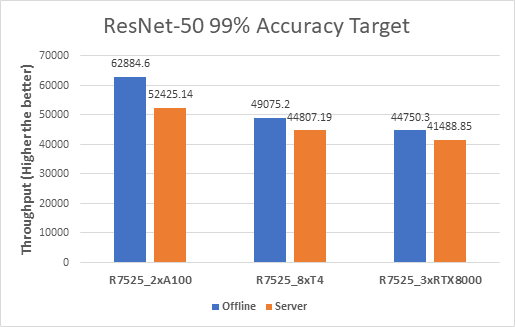

ResNet50 server & offline scenarios:

Figure 3. ResNet50 inference throughput in server and offline scenarios

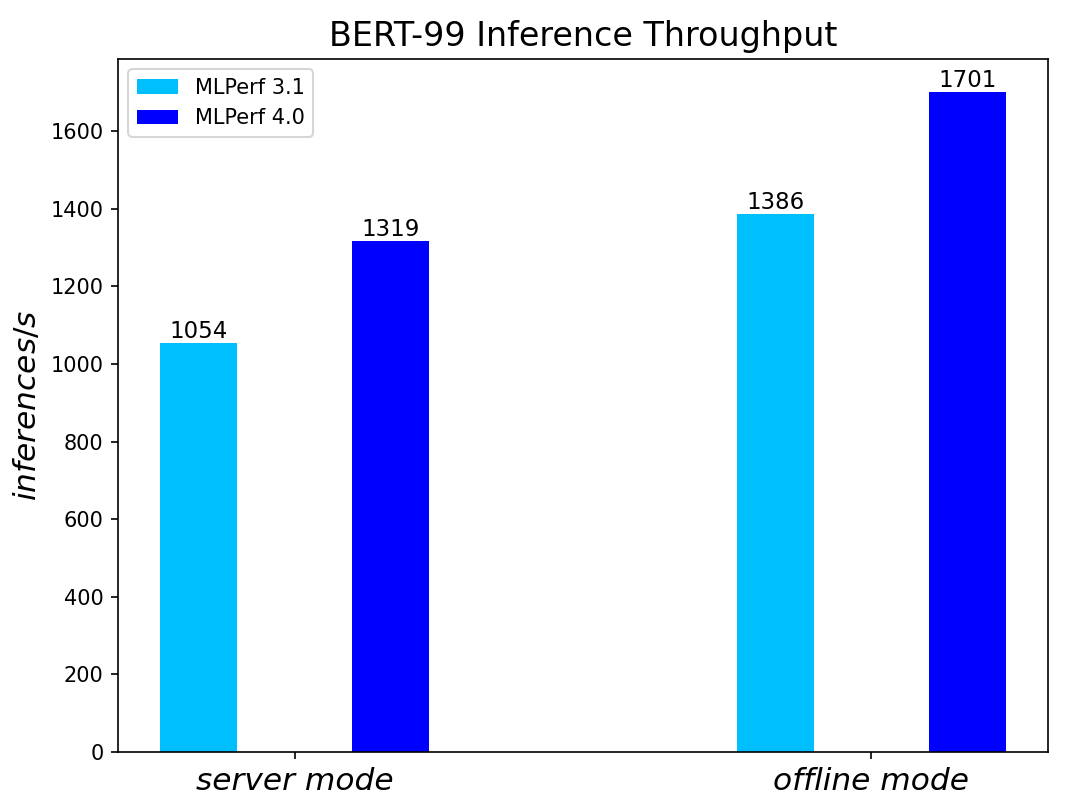

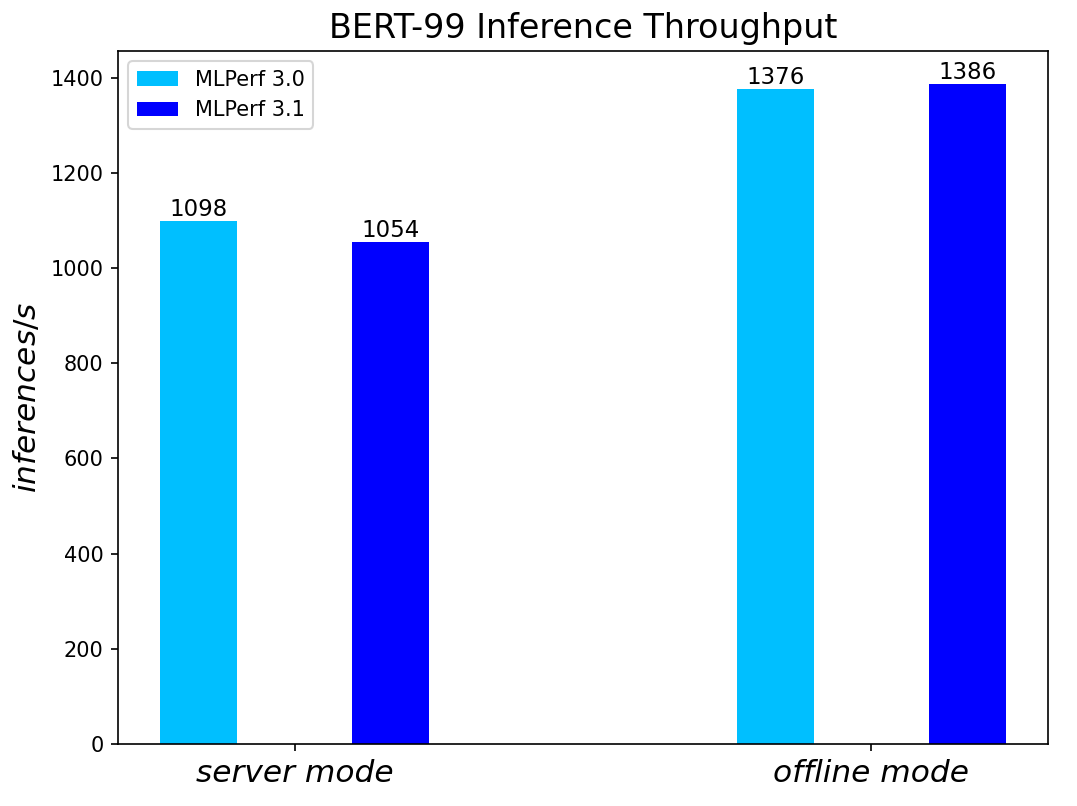

BERT Large Language Model server & offline scenarios:

Figure 4. BERT Inference results for server and offline scenarios

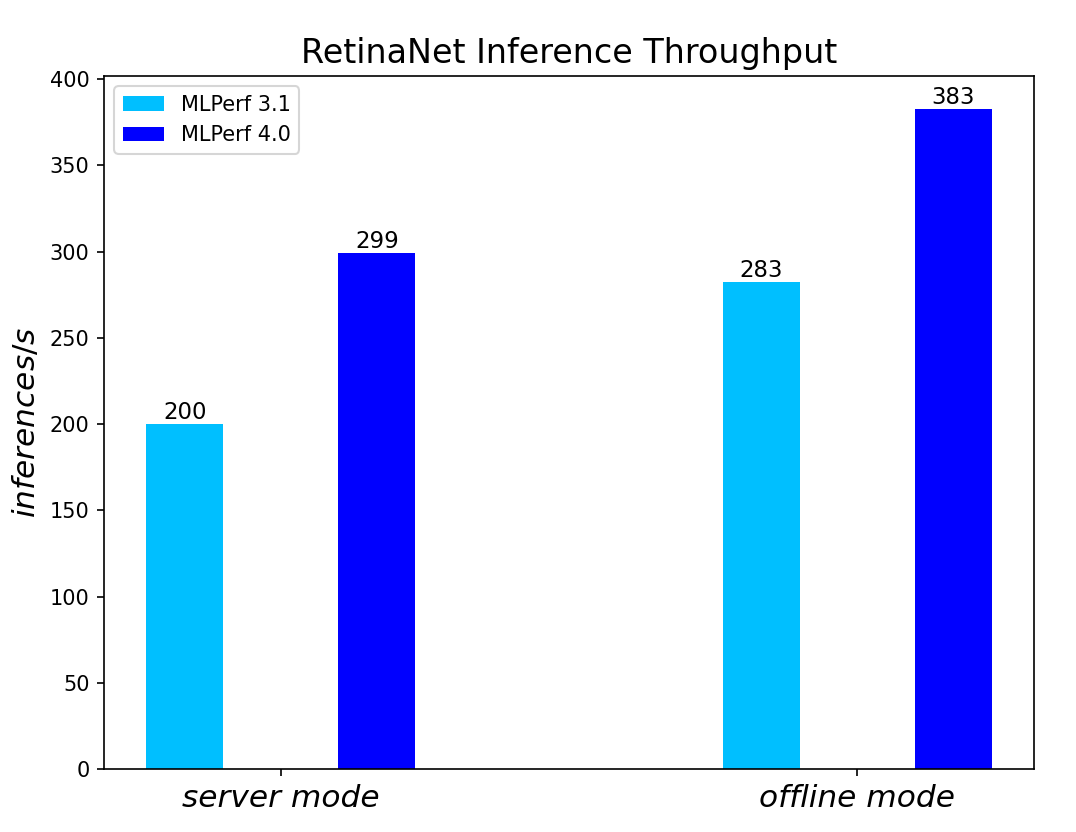

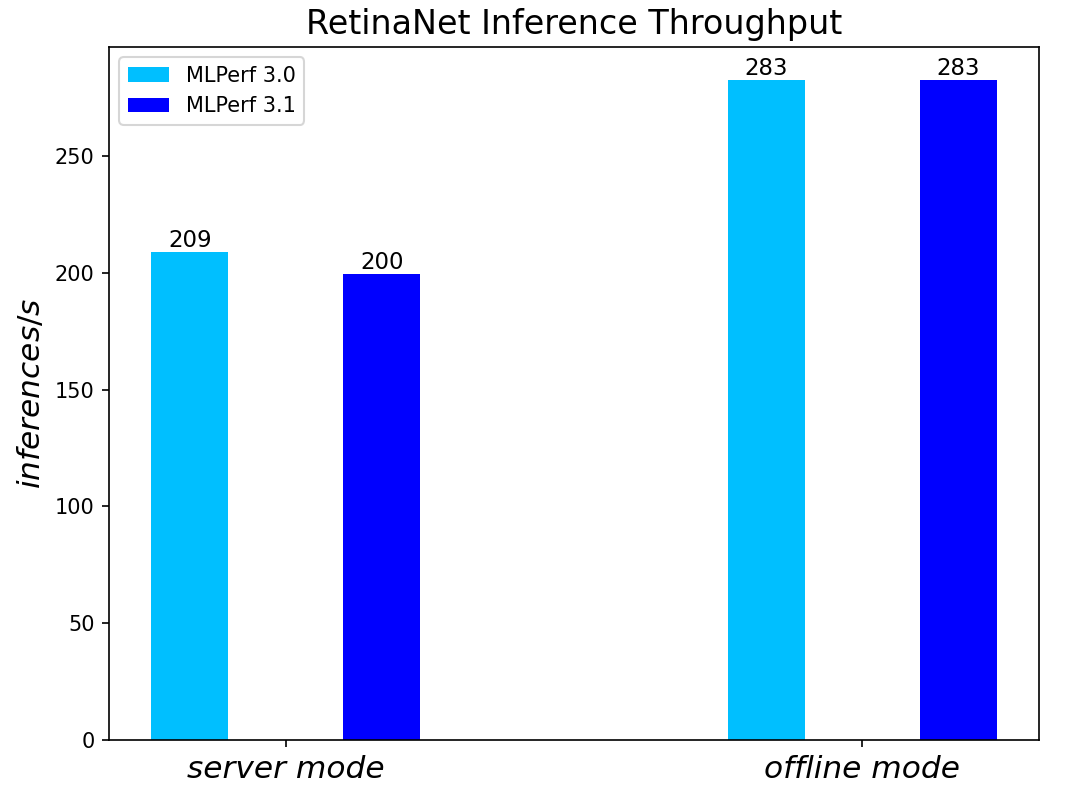

RetinaNet Object Detection Model server & offline scenarios:

Figure 5. RetinaNet Object Detection Model Inference results for server and offline scenarios

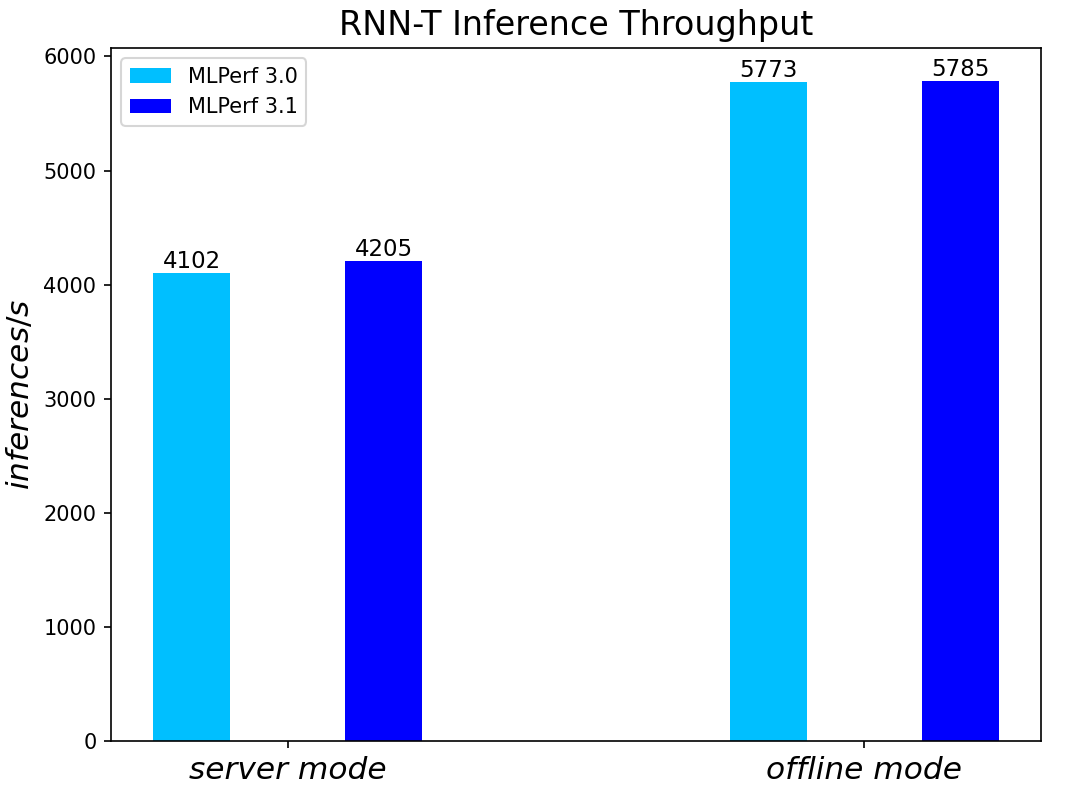

RNN-T Speech-to-Text Model server & offline scenarios:

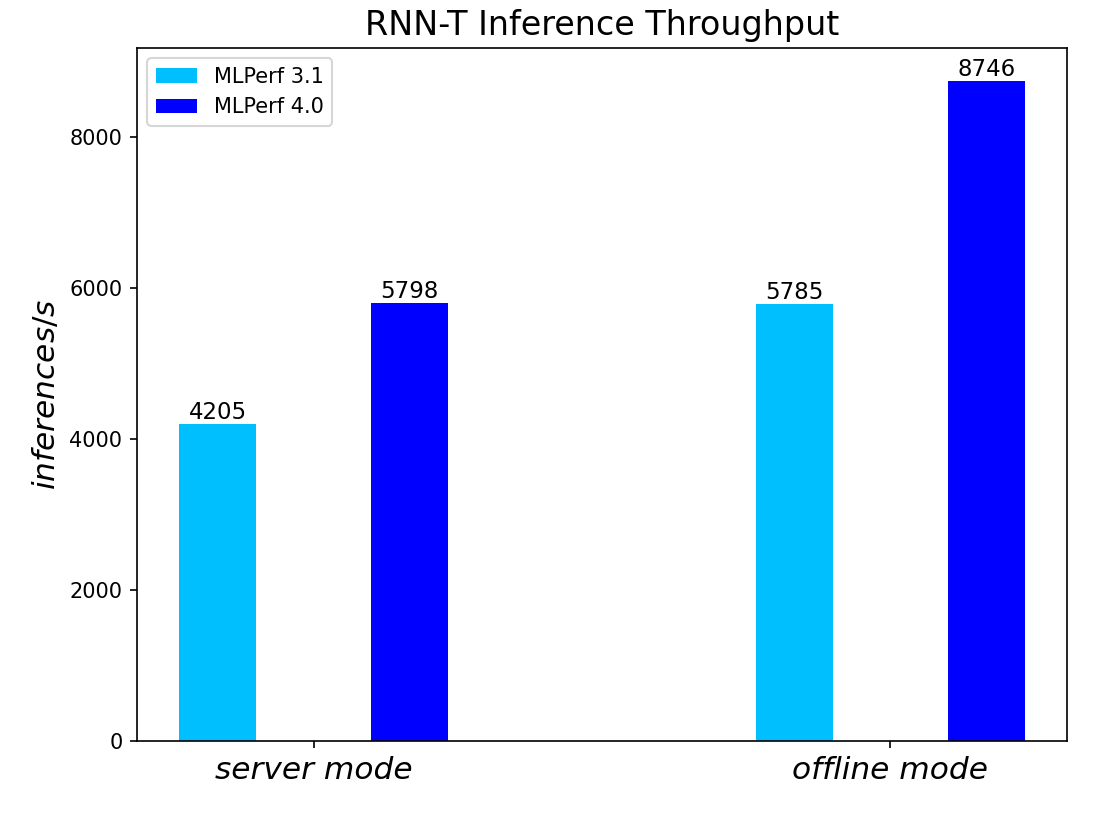

Figure 6. RNN-T Speech-to-Text Model Inference results for server and offline scenarios

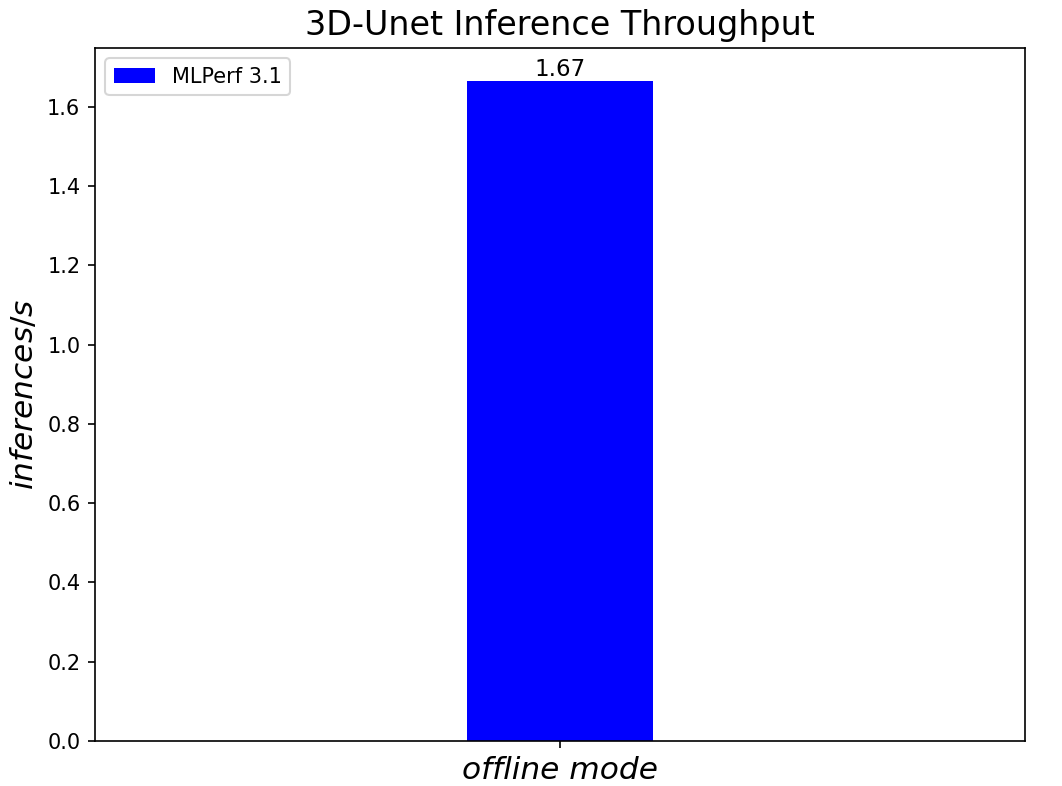

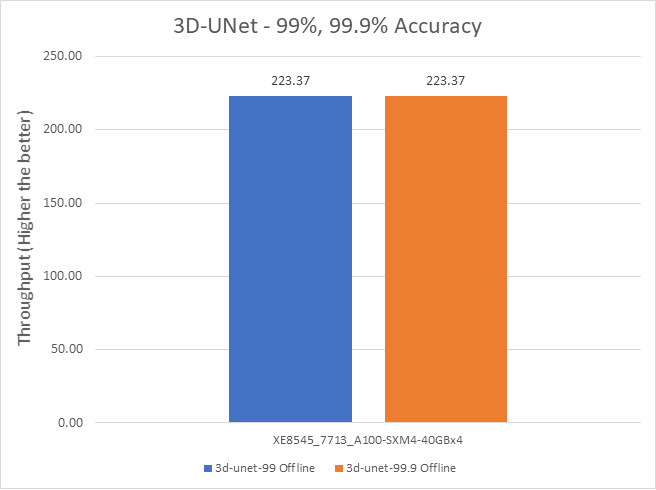

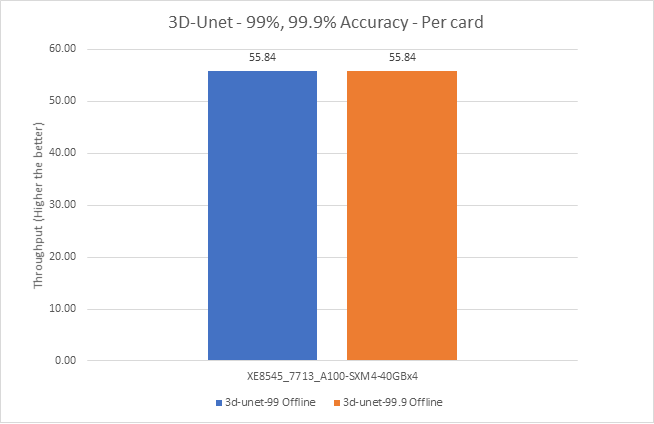

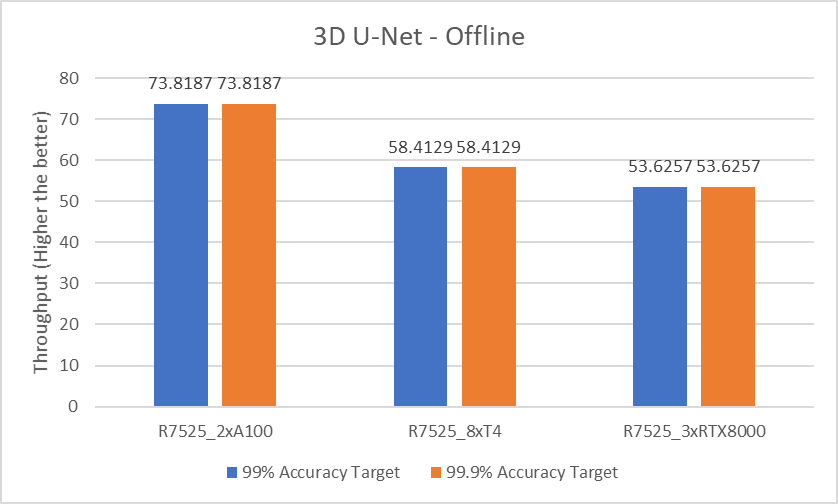

3D-Unet Medical Imaging Model offline scenarios:

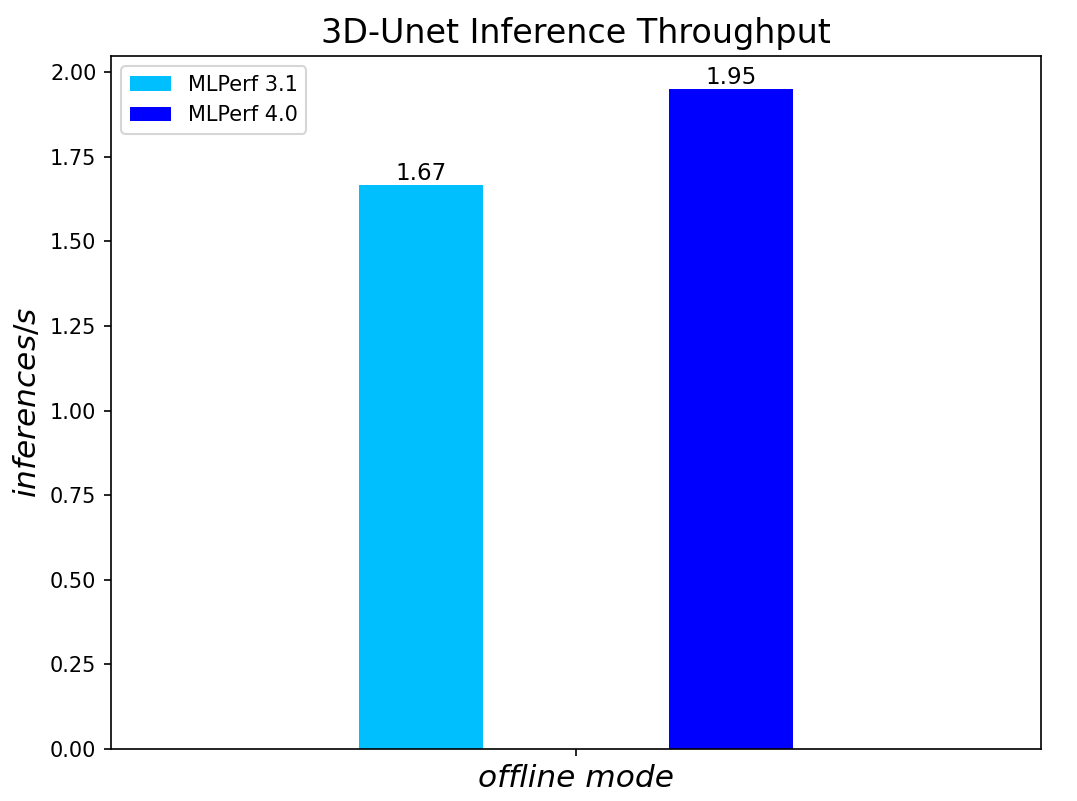

Figure 7. 3D-Unet Medical Imaging Model Inference results for the offline scenario

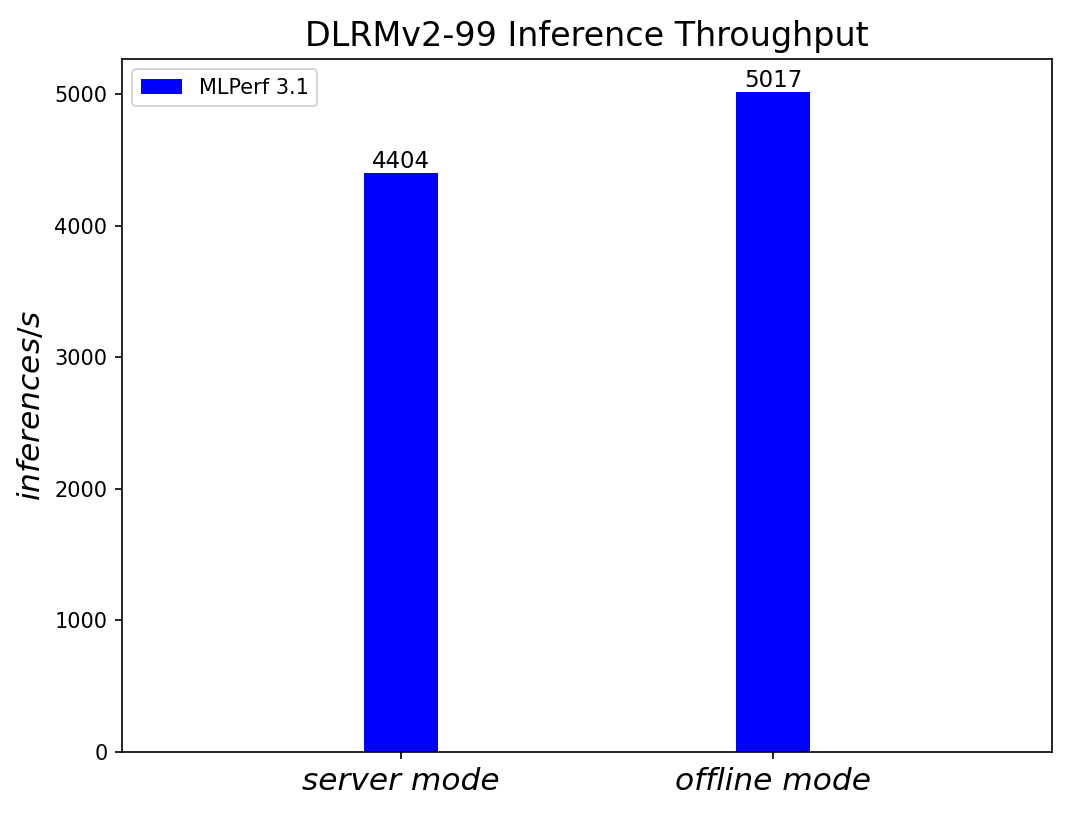

DLRMv2-99 Recommendation Model server & offline scenarios:

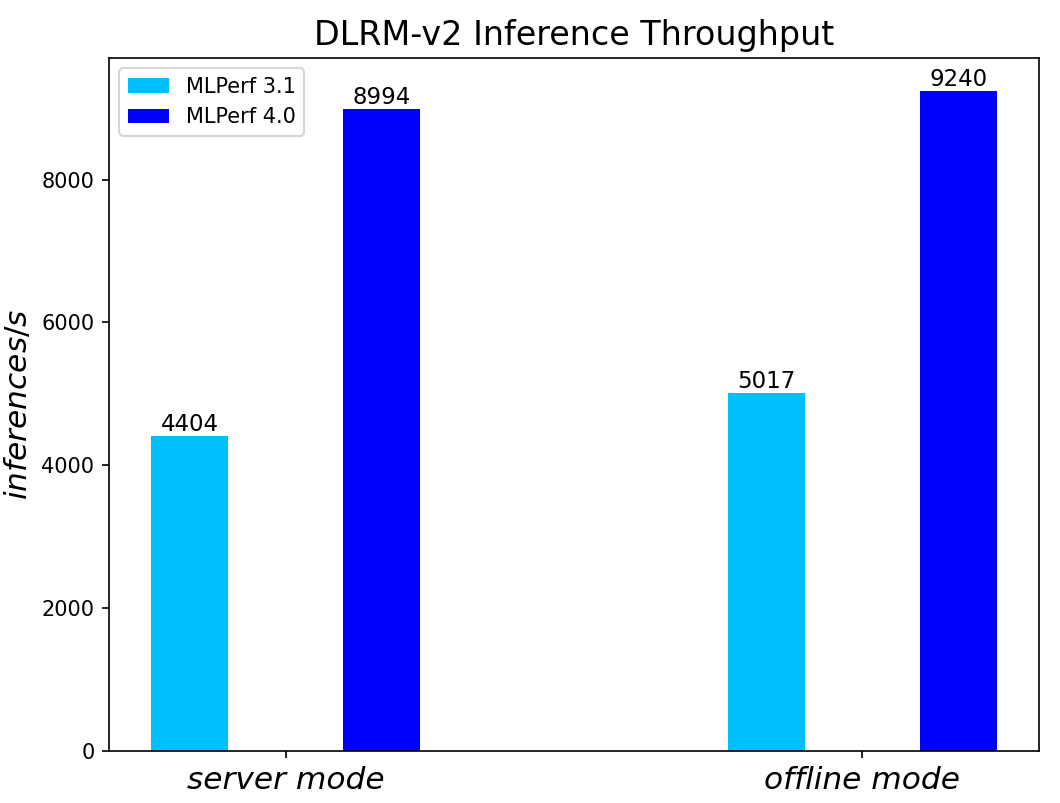

Figure 8. DLRMv2-99 Recommendation Model Inference results for server and offline scenarios

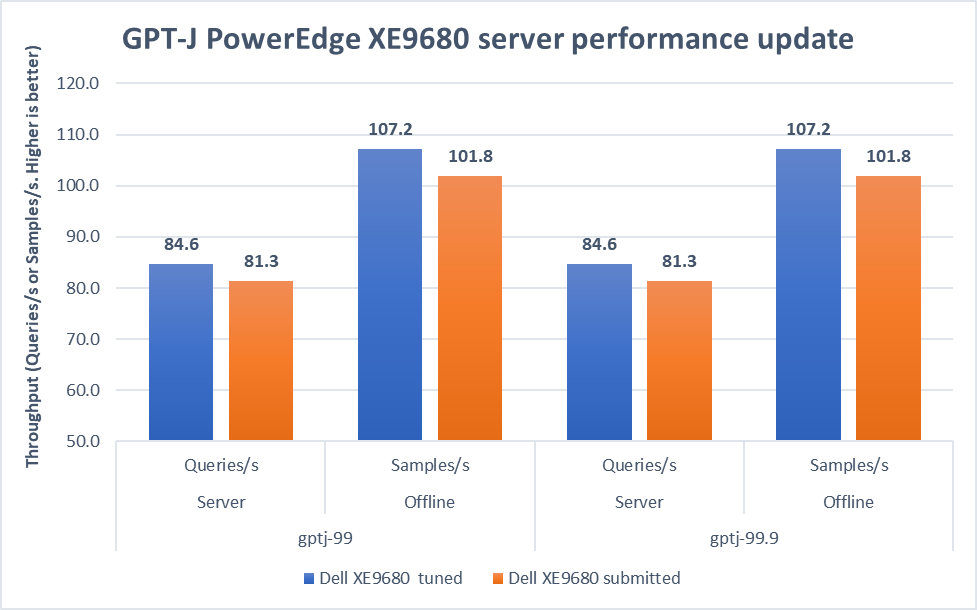

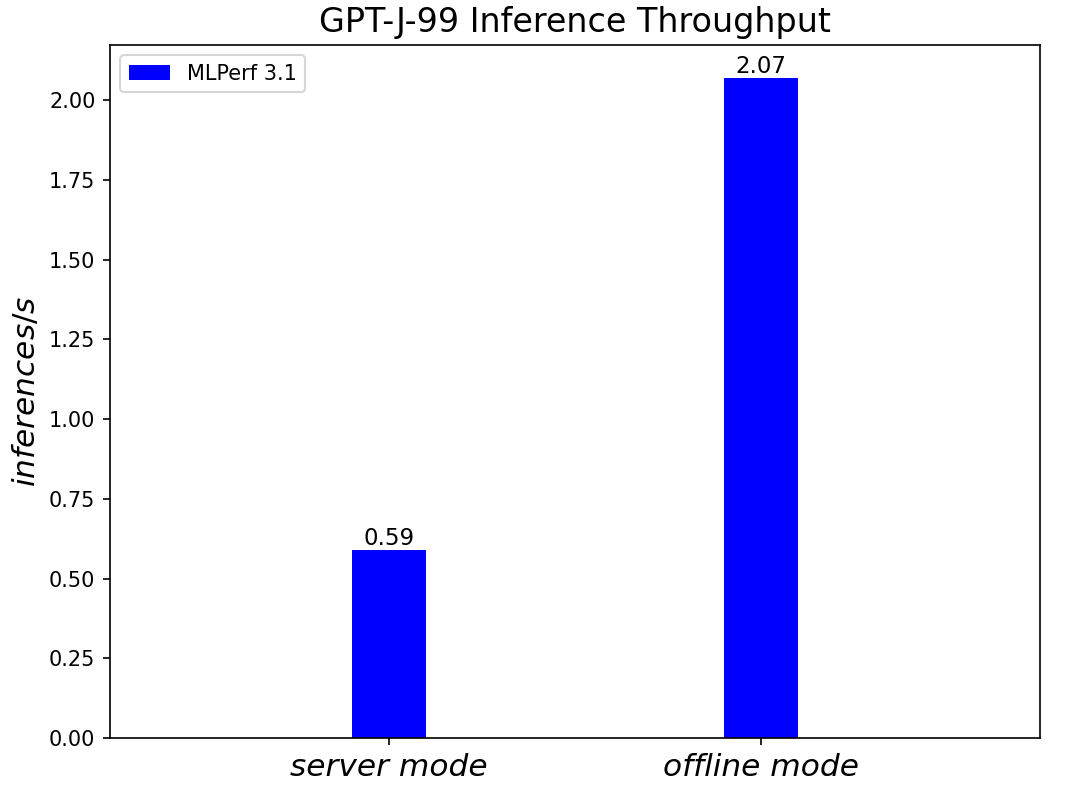

GPT-J-99 Summarization Model server & offline scenarios:

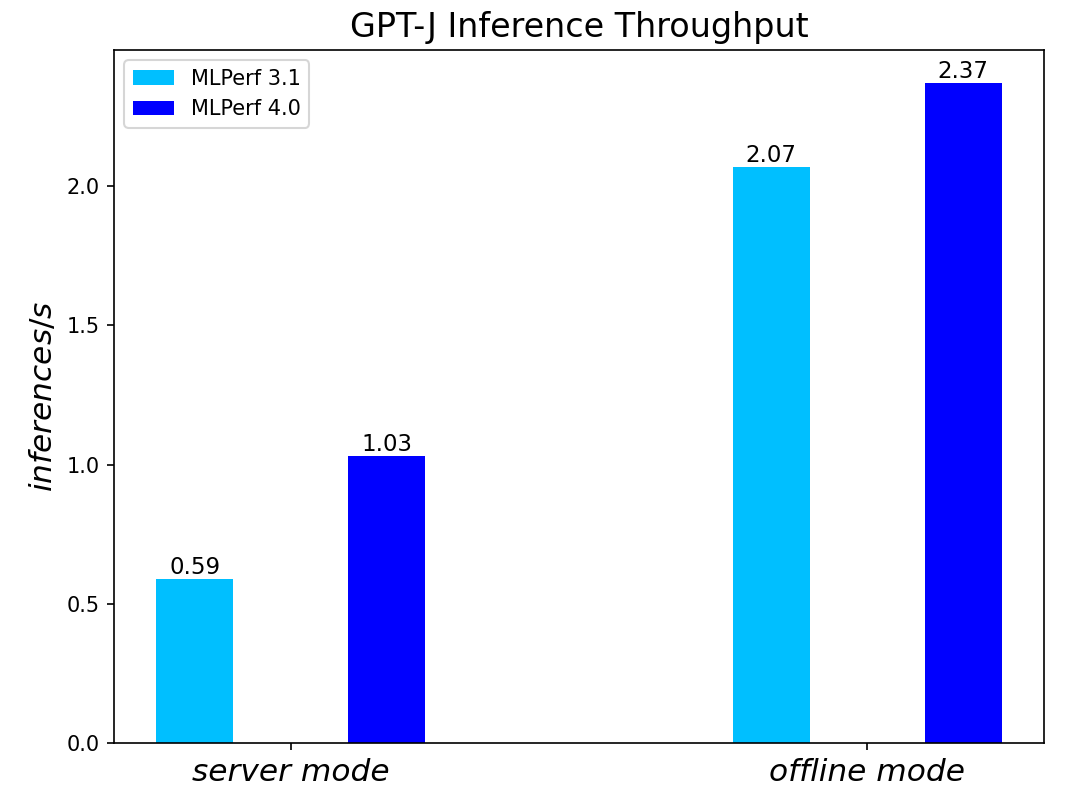

Figure 9. GPT-J-99 Summarization Model Inference results for server and offline scenarios

Conclusion

- The PowerEdge R760 server with 5th Generation Intel® Xeon® Scalable Processors produces strong data center inference performance, confirmed by the official version 4.0 MLPerf™ benchmarking results from MLCommons™.

- The high performance and versatility are demonstrated across natural language processing, image classification, object detection, medical imaging, speech-to-text inference, recommendation, and summarization systems.

- Compared to its prior v3.0 and v3.1 submissions based on 4th Generation Intel® Xeon® Scalable Processors, the R760 with 5th Generation Intel® Xeon® Scalable Processors shows significant performance improvement across different models, including generative AI models such as GPT-J.

- The R760 supports the different deep learning inference scenarios in the MLPerf™ benchmark as well as other complex workloads such as databases and advanced analytics. It is an ideal solution for data center modernization to drive operational efficiency, increase productivity, and minimize total cost of ownership (TCO).

References

MLCommons™ MLPerf™ v4.0 Inference Benchmark Submission IDs

| ID | Submitter | System |
| --- | --- | --- |
| 4.0-0026 | Dell | Dell PowerEdge Server R760 (2x Intel® Xeon® Platinum 8592+) |

MLPerf™ Inference v4.0 Performance on Dell PowerEdge R760xa and R7615 Servers with NVIDIA L40S GPUs

Fri, 05 Apr 2024 17:41:56 -0000


Abstract

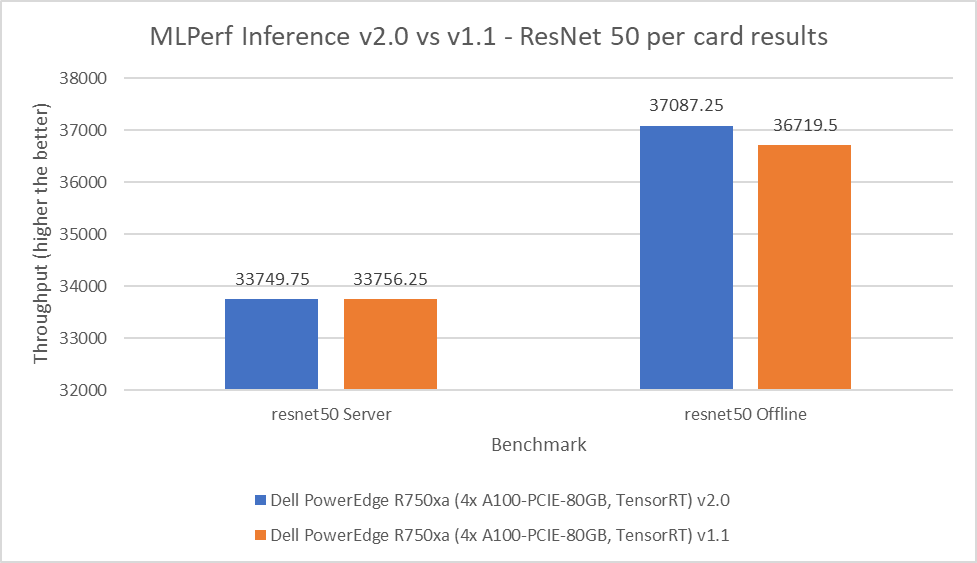

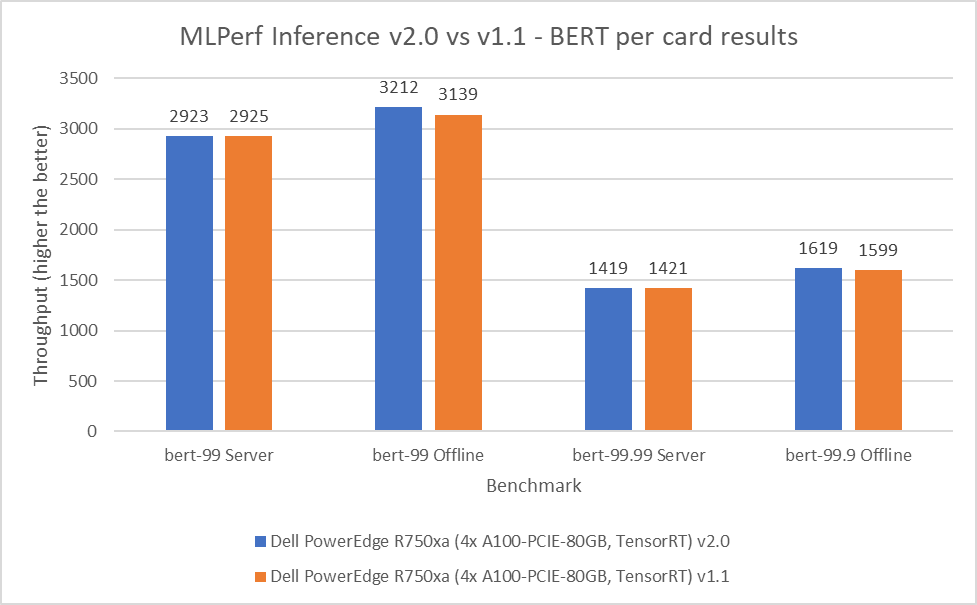

Dell Technologies recently submitted results to the MLPerf™ Inference v4.0 benchmark suite. This blog highlights Dell Technologies’ closed division submission made for the Dell PowerEdge R760xa, Dell PowerEdge R7615, and Dell PowerEdge R750xa servers with NVIDIA L40S and NVIDIA A100 GPUs.

Introduction

This blog provides relevant conclusions about the performance improvements that are achieved on the PowerEdge R760xa and R7615 servers with the NVIDIA L40S GPU compared to the PowerEdge R750xa server with the NVIDIA A100 GPU. In the following comparisons, we held the GPU constant across the PowerEdge R760xa and PowerEdge R7615 servers to show the excellent performance of the NVIDIA L40S GPU. Additionally, we compared the PowerEdge R750xa server with the NVIDIA A100 GPU to its successor, the PowerEdge R760xa server with the NVIDIA L40S GPU.

System Under Test configuration

The following table shows the System Under Test (SUT) configuration for the PowerEdge servers.

Table 1: SUT configuration of the Dell PowerEdge R750xa, R760xa, and R7615 servers for MLPerf Inference v4.0

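| Server | PowerEdge R750xa | PowerEdge R760xa | PowerEdge R7615 |
| --- | --- | --- | --- |
| MLPerf Version | v4.0 | v4.0 | v4.0 |
| GPU | NVIDIA A100 PCIe 80 GB | NVIDIA L40S | NVIDIA L40S |
| Number of GPUs | 4 | 4 | 2 |
| MLPerf System ID | R750xa_A100_PCIe_80GBx4_TRT | R760xa_L40Sx4_TRT | R7615_L40Sx2_TRT |
| CPU | 2 x Intel Xeon Gold 6338 CPU @ 2.00GHz | 2 x Intel Xeon Platinum 8470Q | 1 x AMD EPYC 9354 32-Core Processor |
| Memory | 512 GB | 512 GB | 512 GB |
| Software Stack | TensorRT 9.3.0, CUDA 12.2, cuDNN 8.9.2, Driver 535.54.03 / 535.104.12, DALI 1.28.0 | TensorRT 9.3.0, CUDA 12.2, cuDNN 8.9.2, Driver 535.54.03 / 535.104.12, DALI 1.28.0 | TensorRT 9.3.0, CUDA 12.2, cuDNN 8.9.2, Driver 535.54.03 / 535.104.12, DALI 1.28.0 |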

The following table lists the technical specifications of the NVIDIA L40S and NVIDIA A100 GPUs.

Table 2: Technical specifications of the NVIDIA A100 and NVIDIA L40S GPUs

| Model | NVIDIA A100 (SXM4) | NVIDIA A100 (PCIe) | NVIDIA L40S |
| --- | --- | --- | --- |
| Form factor | SXM4 | PCIe Gen4 | PCIe Gen4 |
| GPU architecture | Ampere | Ampere | Ada Lovelace |
| CUDA cores | 6912 | 6912 | 18176 |
| Memory size | 80 GB | 80 GB | 48 GB |
| Memory type | HBM2e | HBM2e | GDDR6 |
| Base clock | 1275 MHz | 1065 MHz | 1110 MHz |
| Boost clock | 1410 MHz | 1410 MHz | 2520 MHz |
| Memory clock | 1593 MHz | 1512 MHz | 2250 MHz |
| MIG support | Yes | Yes | No |
| Peak memory bandwidth | 2039 GB/s | 1935 GB/s | 864 GB/s |
| Total board power | 500 W | 300 W | 350 W |

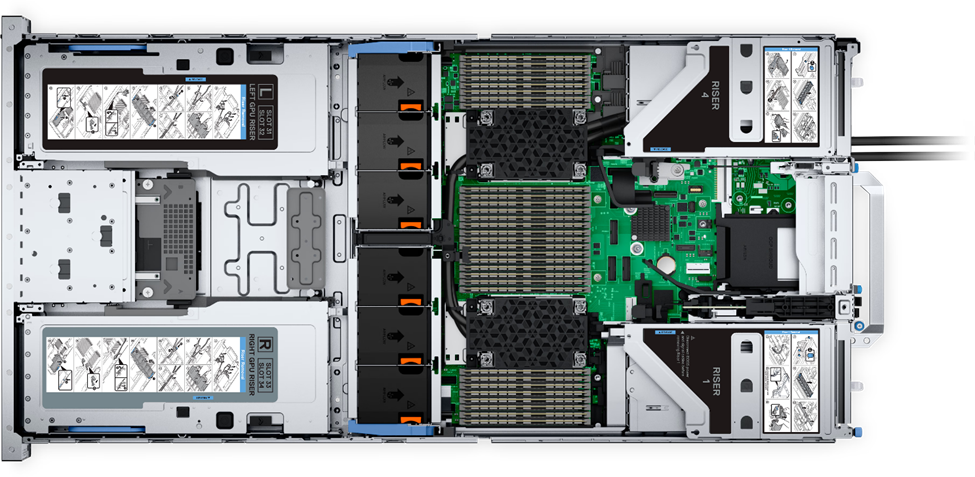

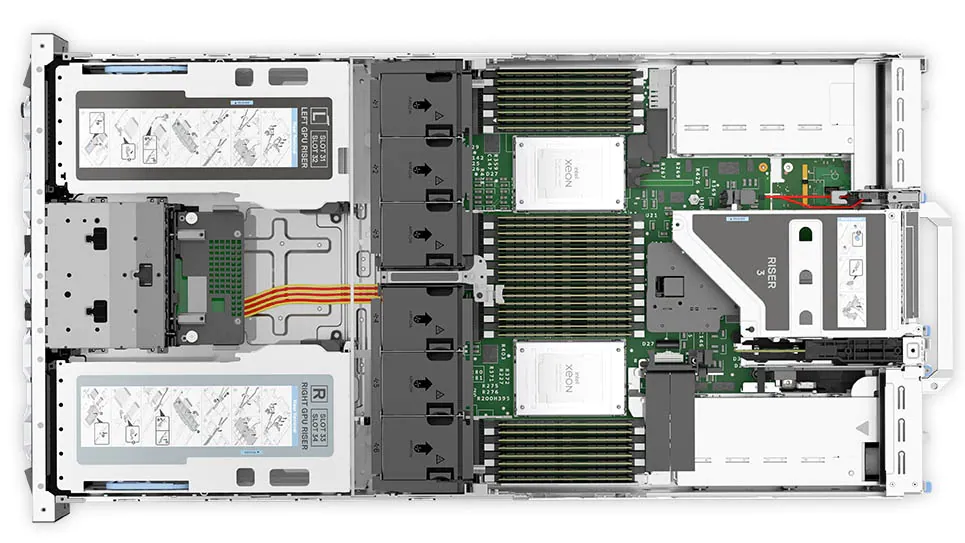

Dell PowerEdge R760xa server

The PowerEdge R760xa server shines as an Artificial Intelligence (AI) workload server with its cutting-edge inferencing capabilities. This server represents the pinnacle of performance in the AI inferencing space with its processing prowess enabled by Intel Xeon Platinum processors and NVIDIA L40S GPUs. Coupled with NVIDIA TensorRT and CUDA 12.2, the PowerEdge R760xa server is positioned perfectly for any AI workload including, but not limited to, Large Language Models, computer vision, Natural Language Processing, robotics, and edge computing. Whether you are processing image recognition tasks, natural language understanding, or deep learning models, the PowerEdge R760xa server provides the computational muscle for reliable, precise, and fast results.

Figure 1: Front view of the Dell PowerEdge R760xa server

Figure 2: Top view of the Dell PowerEdge R760xa server

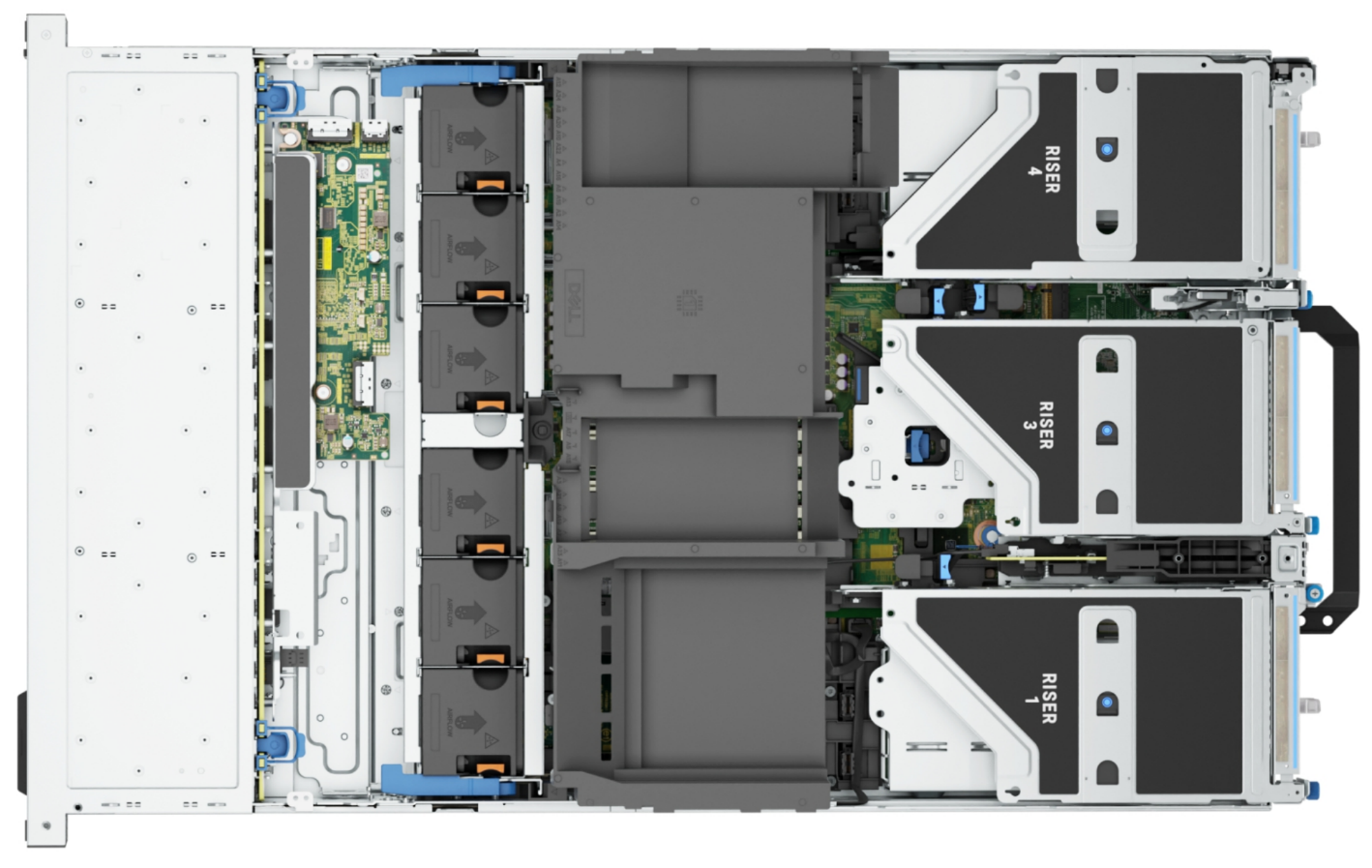

Dell PowerEdge R7615 server

The PowerEdge R7615 server stands out as an excellent choice for AI, machine learning (ML), and deep learning (DL) workloads due to its robust performance capabilities and optimized architecture. With its powerful processing capabilities, including up to three NVIDIA L40S GPUs supported by TensorRT, this server can handle complex neural network inference and training tasks with ease. Powered by a single AMD EPYC processor, this server performs well for demanding AI workloads.

Figure 3: Front view of the Dell PowerEdge R7615 server

Figure 4: Top view of the Dell PowerEdge R7615 server

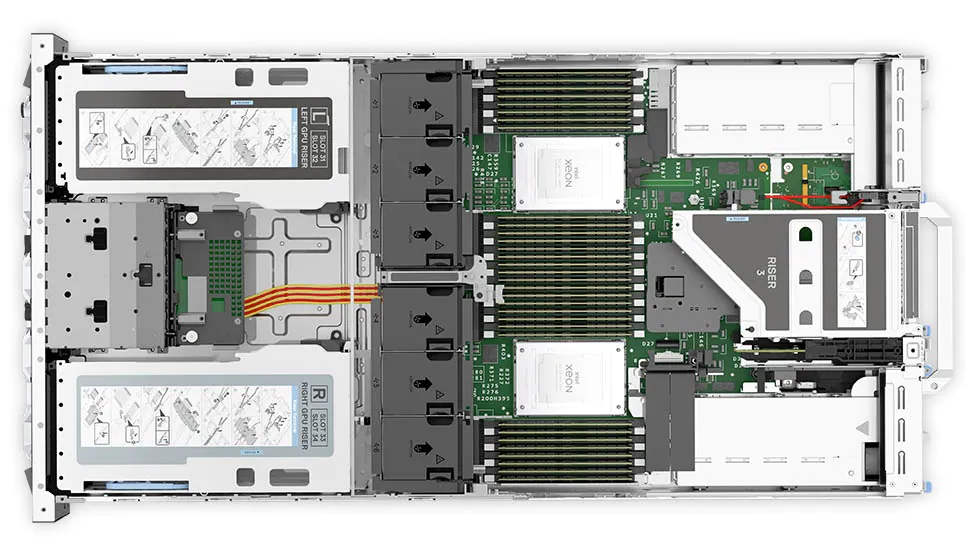

Dell PowerEdge R750xa server

The PowerEdge R750xa server is a perfect blend of technological prowess and innovation. This server is equipped with Intel Xeon Gold processors and the latest NVIDIA GPUs. The PowerEdge R750xa server is designed for the most demanding AI, ML, and DL workloads as it is compatible with the latest NVIDIA TensorRT engine and CUDA version. With up to nine PCIe Gen4 slots and availability in a 1U or 2U configuration, the PowerEdge R750xa server is an excellent option for any demanding workload.

Figure 5: Front view of the Dell PowerEdge R750xa server

Figure 6: Top view of the Dell PowerEdge R750xa server

Performance results

Classical Deep Learning models performance

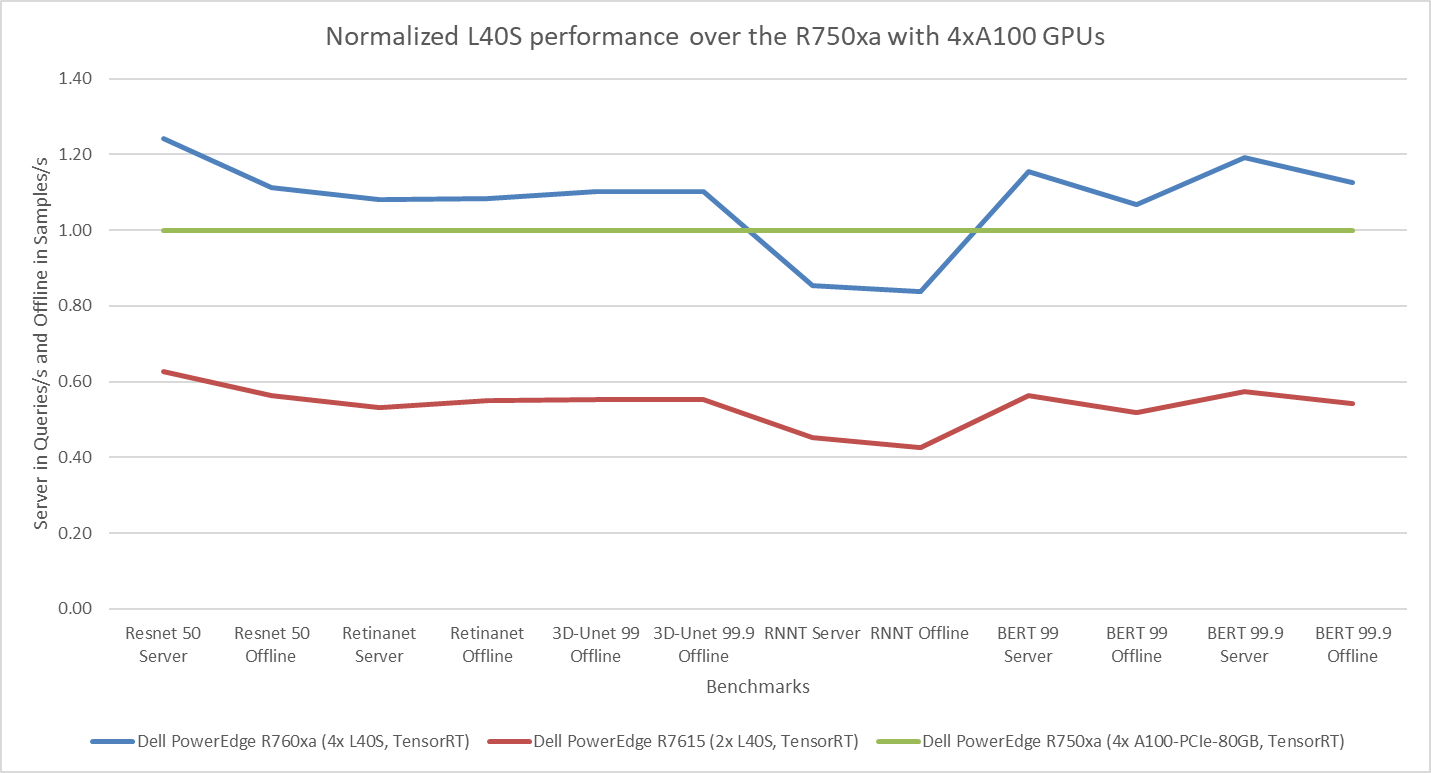

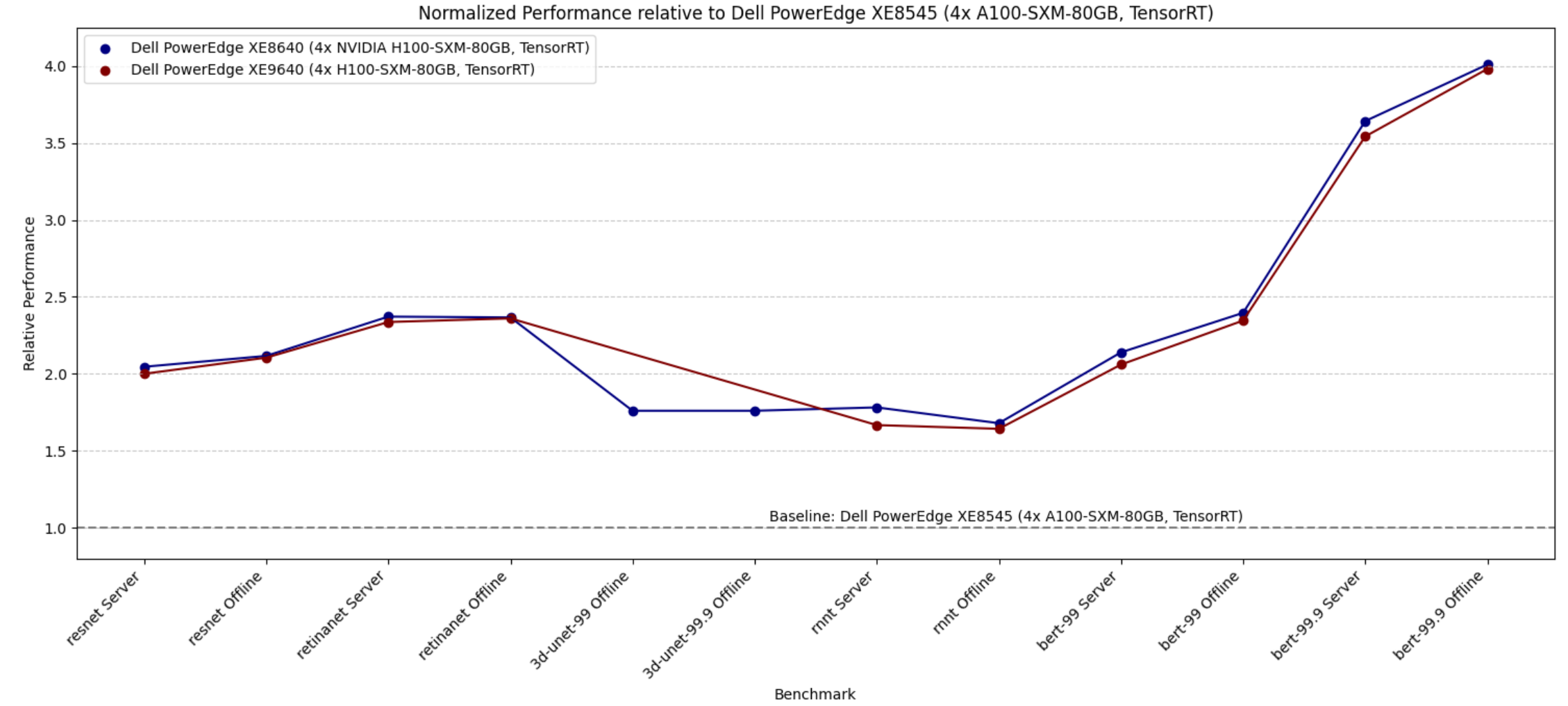

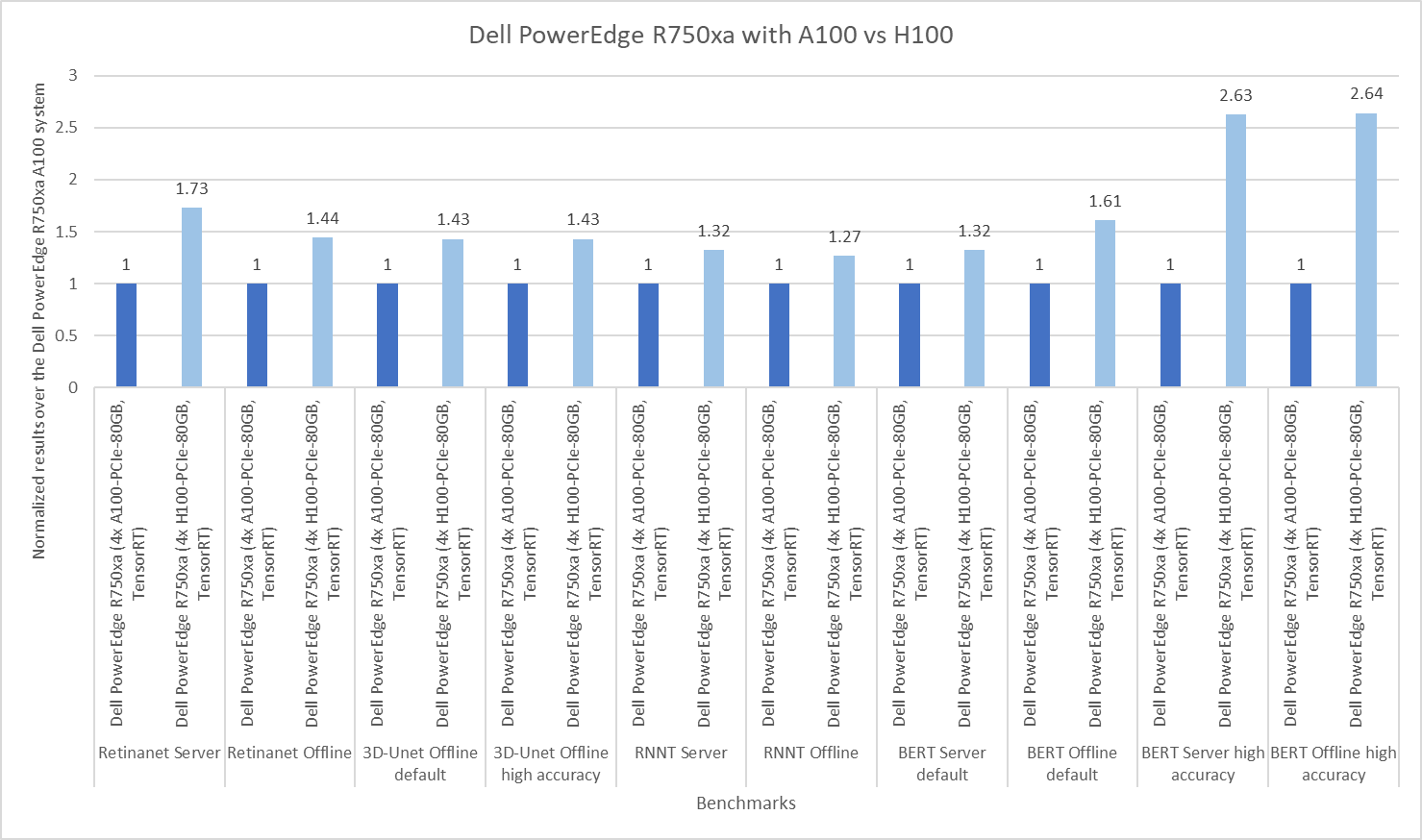

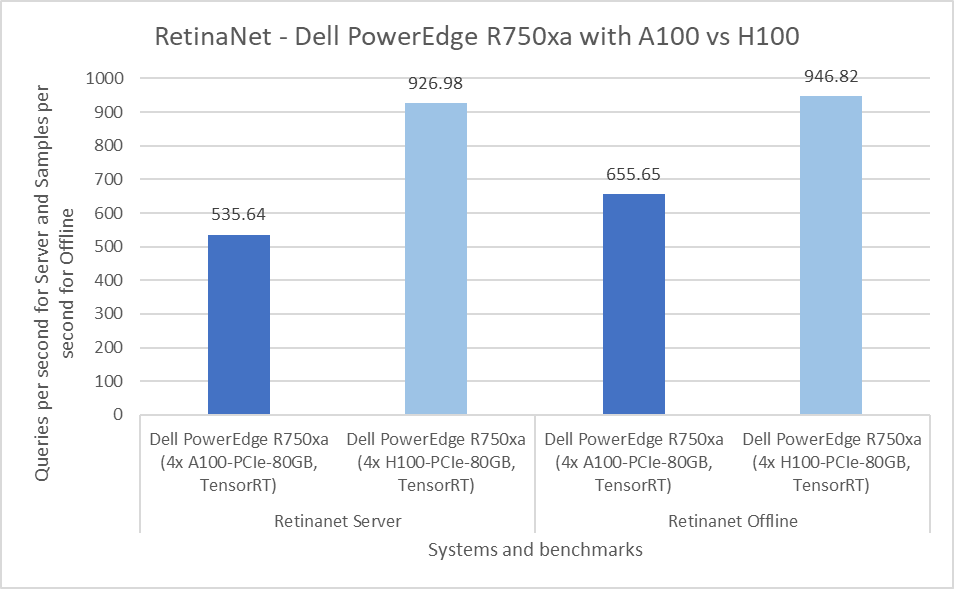

The following figure presents each system's results normalized to those of the Dell PowerEdge R750xa server with four NVIDIA A100 GPUs. This normalization provides an easy-to-read comparison of three systems across several benchmarks.

Figure 7: Normalized NVIDIA L40S GPU performance over the PowerEdge R750xa server with four A100 GPUs

The green trendline represents the performance of the Dell PowerEdge R750xa server with four NVIDIA A100 GPUs. With a score of 1.00 for each benchmark value, the results have been divided by themselves to serve as the baseline in green for this comparison. The blue trendline represents the performance of the PowerEdge R760xa server with four NVIDIA L40S GPUs that has been normalized by dividing each benchmark result by the corresponding score achieved by the PowerEdge R750xa server. In most cases, the performance achieved on the PowerEdge R760xa server outshines the results of the PowerEdge R750xa server with NVIDIA A100 GPUs, proving the expected improvements from the NVIDIA L40S GPU. The red trendline has also been normalized over the PowerEdge R750xa server and represents the performance of the PowerEdge R7615 server with two NVIDIA L40S GPUs. It is interesting that the red line almost mimics the blue line. This result suggests that the PowerEdge R7615 server, despite having half the compute resources, still performs comparably well in most cases, showing its efficiency.
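The normalization described above is plain division by the baseline result for the same benchmark. Here is a minimal Python sketch, assuming results are stored as dictionaries that map benchmark names to throughput; the helper names are hypothetical, and the per-GPU variant is an extra view (not one used in the figure) that makes the two-GPU PowerEdge R7615 easier to compare with four-GPU systems.

```python
def normalize(results, baseline):
    """Divide each benchmark result by the baseline system's result for that benchmark."""
    return {name: value / baseline[name] for name, value in results.items() if name in baseline}


def per_gpu(results, num_gpus):
    """Throughput per accelerator, useful when systems carry different GPU counts."""
    return {name: value / num_gpus for name, value in results.items()}
```

Normalizing the baseline against itself yields 1.00 for every benchmark, which is the green trendline; normalizing another system's results against the same baseline yields values like those on the blue and red trendlines. Benchmarks missing from the baseline are skipped rather than raising an error.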

Generative AI performance

The latest submission saw the introduction of the new Stable Diffusion XL benchmark. In the context of generative AI, Stable Diffusion is a text-to-image model that generates coherent images from text prompts. It works gradually: starting from random noise, the model refines the image over many denoising steps until a coherent picture emerges. The underlying diffusion process is like dropping food coloring into a large bucket of water. Initially, only a small, concentrated portion of the water turns color, but gradually the coloring is evenly distributed in the bucket; a diffusion model learns to run this kind of spreading process in reverse.
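The food-coloring analogy matches the forward half of a diffusion model, in which noise is mixed into an image a little at a time. The sketch below shows that forward noising step with a generic DDPM-style linear noise schedule (1,000 steps, beta rising from 1e-4 to 0.02). These values are assumptions for illustration, not the exact schedule or latent-space setup used by Stable Diffusion XL; the generative model itself is the network trained to run this process in reverse.

```python
import math
import random


def linear_betas(steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances rising linearly (a common DDPM-style choice)."""
    return [beta_start + (beta_end - beta_start) * t / (steps - 1) for t in range(steps)]


def alpha_bars(betas):
    """Cumulative fraction of signal variance left after each step: prod(1 - beta)."""
    out, remaining = [], 1.0
    for beta in betas:
        remaining *= 1.0 - beta
        out.append(remaining)
    return out


def noisy_sample(x0, alpha_bar, rng):
    """Jump straight to a step: sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * Gaussian noise."""
    keep, mix = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [keep * x + mix * rng.gauss(0.0, 1.0) for x in x0]
```

Evaluating `alpha_bars(linear_betas())` shows the retained signal shrinking from almost 1 at the first step to nearly 0 at the last, which corresponds to the evenly colored bucket at the end of the analogy.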

The following table shows the excellent performance of the PowerEdge R760xa server with the powerful NVIDIA L40S GPU for the GPT-J and Stable Diffusion XL benchmarks. The PowerEdge R760xa takes the top spot in GPT-J and Stable Diffusion XL when compared to other NVIDIA L40S results.

Table 3: Benchmark results for the PowerEdge R760xa server with the NVIDIA L40S GPU

| Benchmark | Dell PowerEdge R760xa L40S result (Server in Queries/s and Offline in Samples/s) | Dell’s % gain to the next best non-Dell results (%) |
| --- | --- | --- |
| Stable Diffusion XL Server | 0.65 | 5.24 |
| Stable Diffusion XL Offline | 0.67 | 2.28 |
| GPT-J 99 Server | 12.75 | 4.33 |
| GPT-J 99 Offline | 12.61 | 1.88 |
| GPT-J 99.9 Server | 12.75 | 4.33 |
| GPT-J 99.9 Offline | 12.61 | 1.88 |
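The last column compares each Dell result with the next best non-Dell result for the same benchmark. Assuming it is computed as a relative difference (Dell result minus next best, divided by next best), the arithmetic looks like the Python sketch below; the helper names are hypothetical. Because the published values are rounded, `implied_next_best` can only recover an approximate competitor figure.

```python
def percent_gain(ours, next_best):
    """Percentage by which `ours` exceeds `next_best` (for higher-is-better metrics)."""
    return (ours - next_best) / next_best * 100.0


def implied_next_best(ours, gain_pct):
    """Invert percent_gain: the competitor score implied by a reported gain."""
    return ours / (1.0 + gain_pct / 100.0)
```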

Conclusion

The MLPerf Inference submissions enable insightful like-for-like comparisons. This blog highlights the impressive performance of the NVIDIA L40S GPU in the Dell PowerEdge R760xa and PowerEdge R7615 servers. Both servers performed well when compared to the performance of the Dell PowerEdge R750xa server with the NVIDIA A100 GPU. The outstanding performance improvements in the NVIDIA L40S GPU coupled with the Dell PowerEdge server position Dell customers to succeed in AI workloads. With the advent of the GPT-J and Stable Diffusion XL models, the Dell PowerEdge server is well positioned to handle generative AI workloads.

Dell PowerEdge Servers Unleash Another Round of Excellent Results with MLPerf™ v4.0 Inference

Wed, 27 Mar 2024 15:12:53 -0000


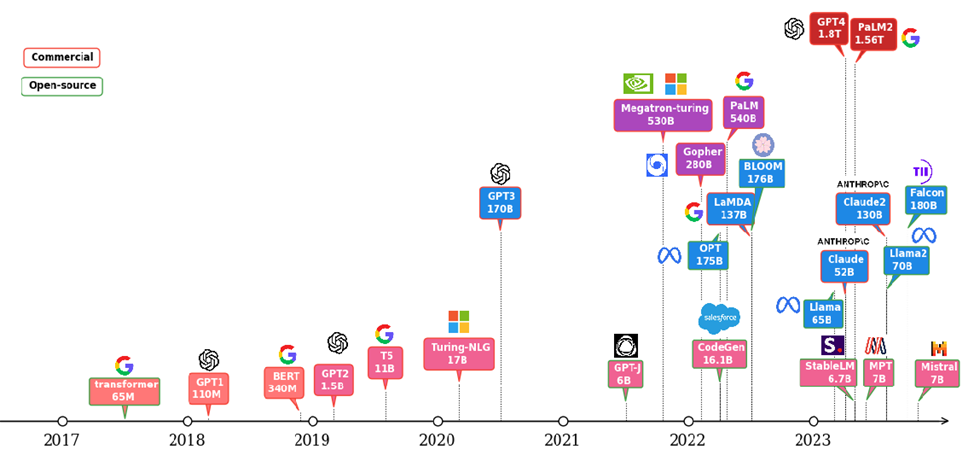

Today marks the unveiling of MLPerf v4.0 Inference results, which have emerged as an industry benchmark for AI systems. These benchmarks assess the system-level performance of state-of-the-art hardware and software stacks. The benchmarking suite contains image classification, object detection, natural language processing, speech recognition, recommenders, medical image segmentation, LLM 6B summarization, LLM 70B question answering, and text-to-image benchmarks that aim to replicate different deployment scenarios such as the data center and edge.

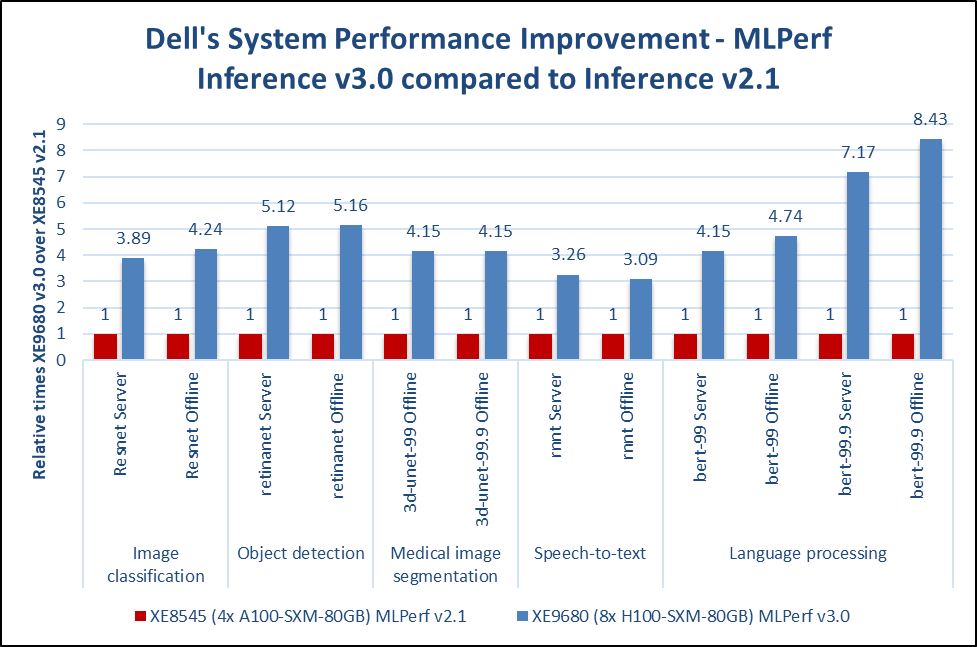

Dell Technologies is a founding member of MLCommons™ and has been actively making submissions since the inception of the Inference and Training benchmarks. See our MLPerf™ Inference v2.1 with NVIDIA GPU-Based Benchmarks on Dell PowerEdge Servers white paper that introduces the MLCommons Inference benchmark.

Our performance results are outstanding and serve as a clear indicator of our resolve to deliver exceptional system performance. These improvements enable higher system performance when it is most needed, for example, for demanding generative AI (GenAI) workloads.

What is new with Inference 4.0?

Inference 4.0 and Dell’s submission include the following:

- Newly introduced Llama 2 question answering and Stable Diffusion text-to-image benchmarks, with submissions across different Dell PowerEdge XE platforms.

- Improved GPT-J (225 percent improvement) and DLRM-DCNv2 (100 percent improvement) performance. Higher throughput on the GPT-J and DLRM-DCNv2 workloads means, respectively, faster natural language processing tasks such as summarization and faster, more relevant recommendations that can boost revenue.

- First-time submission of server results with the recently released PowerEdge R7615 and PowerEdge XR8620t servers with NVIDIA accelerators.

- Intel-based CPU-only results, in addition to accelerator-based results.

- Results for PowerEdge servers with Qualcomm accelerators.

- Power results showing high performance/watt scores for the submissions.

- Virtualized results on Dell servers with Broadcom.

Overview of results

Dell Technologies delivered 187 data center, 28 data center power, 42 edge, and 24 edge power results. Some of the more impressive results were generated by our:

- Dell PowerEdge XE9680, XE9640, and XE8640 servers with NVIDIA H100 Tensor Core GPUs

- Dell PowerEdge R7615, R750xa, and R760xa servers with NVIDIA L40S and A100 Tensor Core GPUs

- Dell PowerEdge XR7620 and XR8620t servers with NVIDIA L4 Tensor Core GPUs

- Dell PowerEdge R760 server with Intel Emerald Rapids CPUs

- Dell PowerEdge R760 with Qualcomm QAIC100 Ultra accelerators

NVIDIA-based results include the following GPUs:

- Eight-way NVIDIA H100 GPU (SXM)

- Four-way NVIDIA H100 GPU (SXM)

- Four-way NVIDIA A100 GPU (PCIe)

- Four-way NVIDIA L40S GPU (PCIe)

- NVIDIA L4 GPU

These accelerators were benchmarked on different servers such as PowerEdge XE9680, XE8640, XE9640, R760xa, XR7620, and XR8620t servers across data center and edge suites.

Dell contributed about one quarter of the closed data center and edge submissions. The large number of result choices offers end users an opportunity to make data-driven purchase decisions and set performance and data center design expectations.

Interesting Dell data points

The most interesting data points include:

- Performance results across different benchmarks are excellent and show that Dell servers meet the increasing need to serve different workload types.

- Among 20 submitters, Dell Technologies was one of the few companies that covered all benchmarks in the closed division for data center suites.

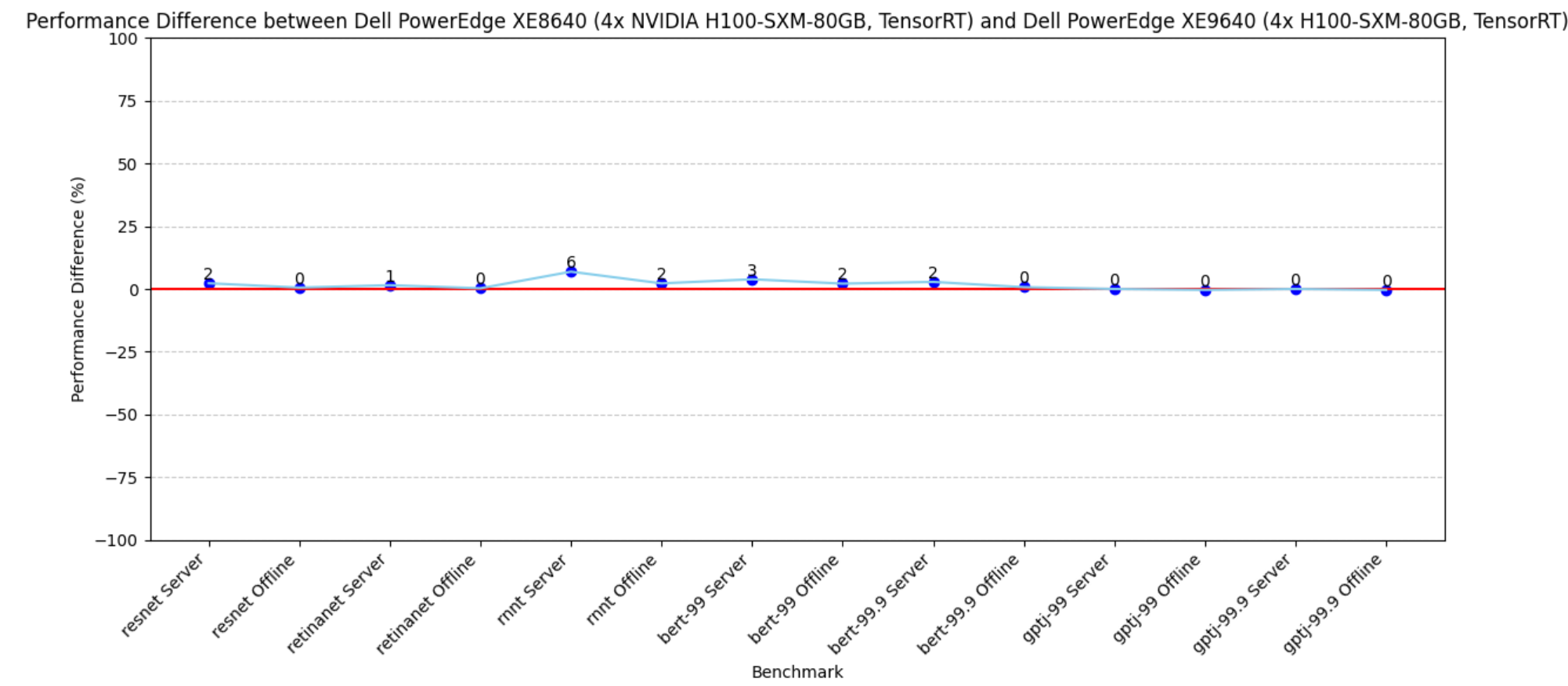

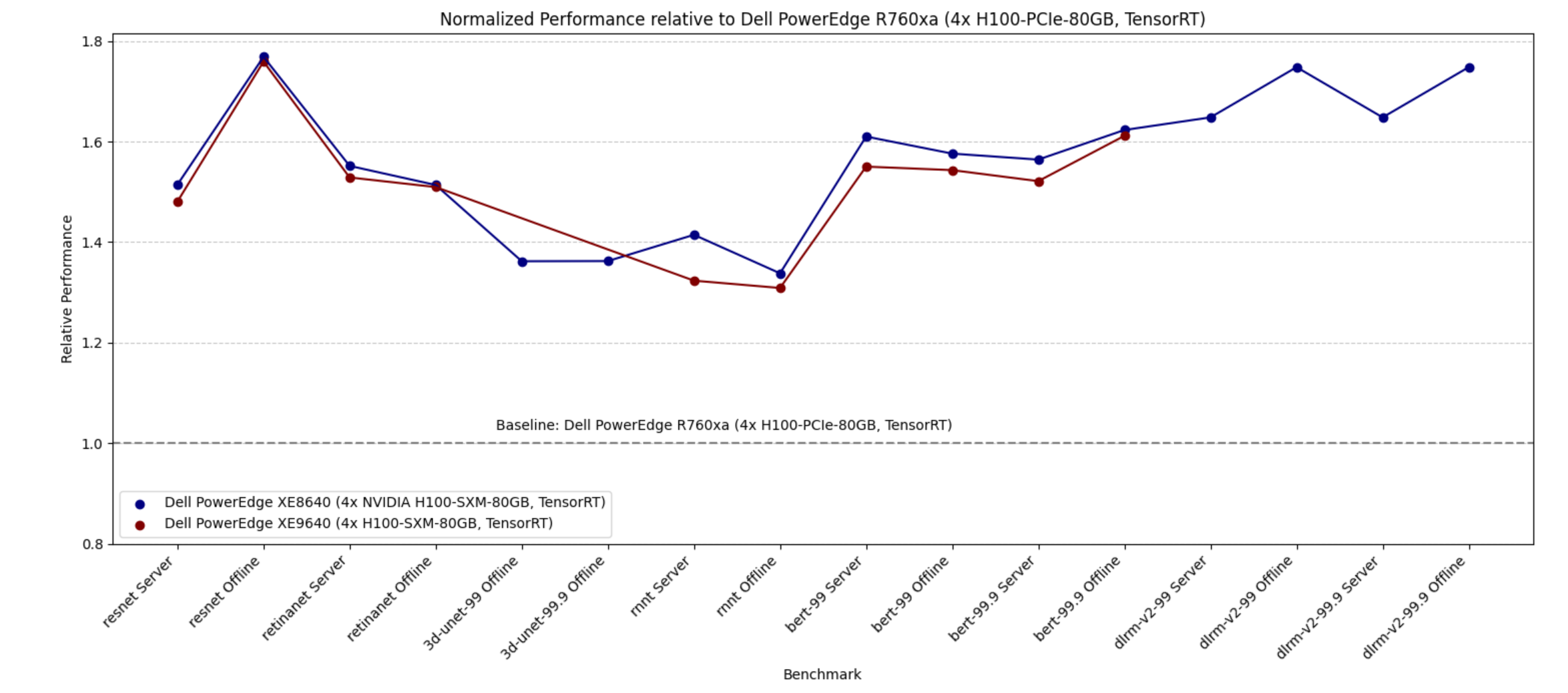

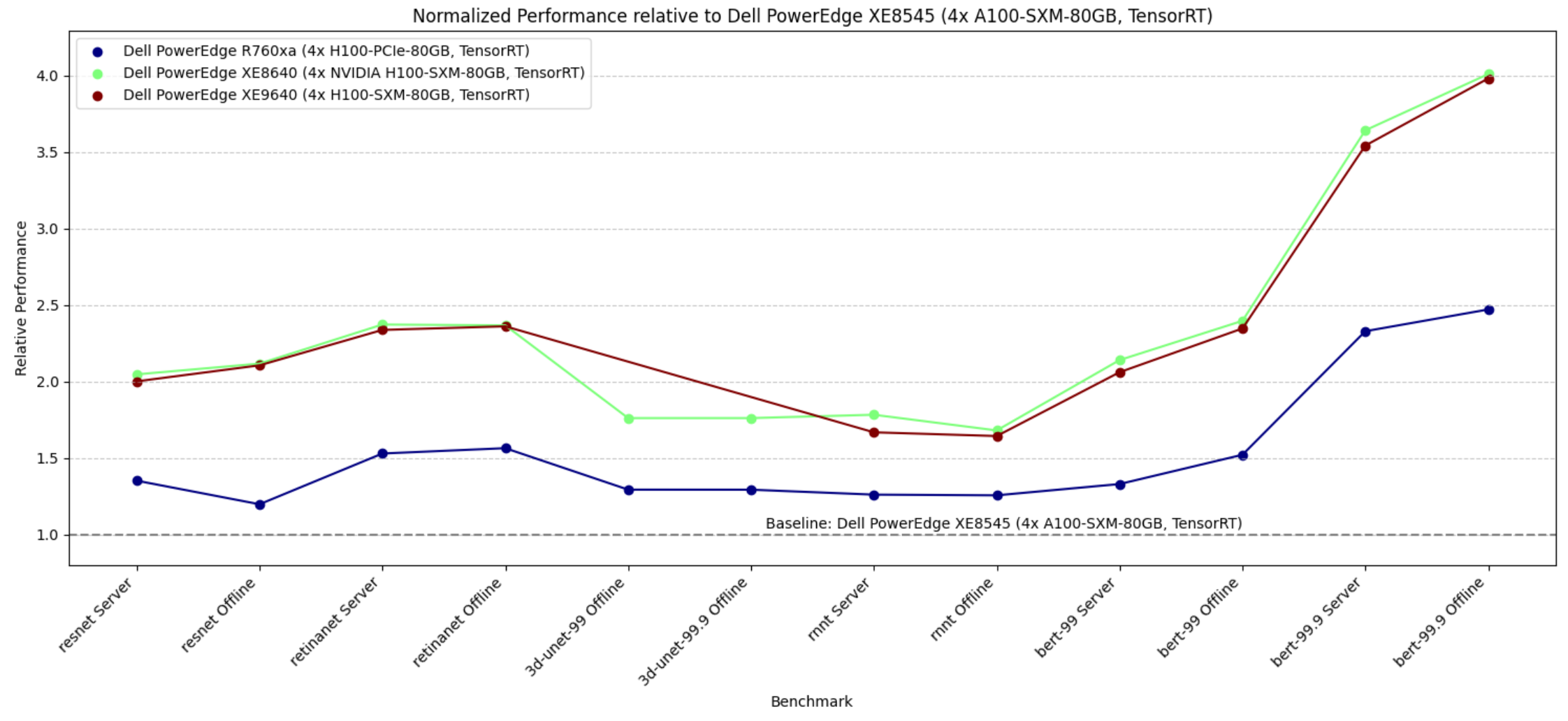

- The PowerEdge XE8640 and PowerEdge XE9640 servers compared to other four-way systems procured winning titles across all the benchmarks including the newly launched stable diffusion and Llama 2 benchmark.

- Compared to other eight-way systems, the PowerEdge XE9680 server secured several winning titles for benchmarks such as ResNet Server, 3D-Unet, BERT-99, and BERT-99.9 Server.

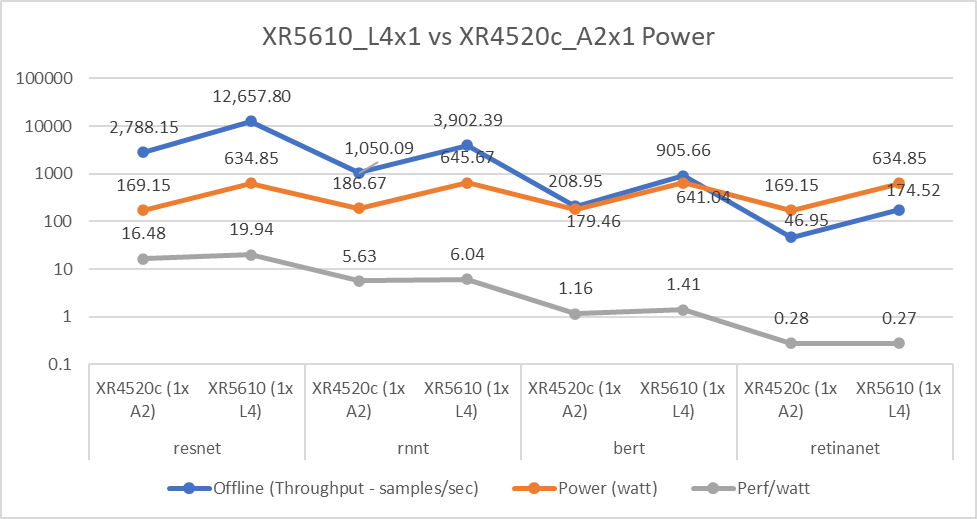

- Compared to other submitters with eight-way NVIDIA H100 GPUs, the PowerEdge XE9680 server delivered the highest performance/watt for ResNet Server, GPTJ Server, and Llama 2 Offline.

- On the edge benchmarks, the PowerEdge XR8620t server with NVIDIA L4 GPUs outperformed other submissions.

- The PowerEdge R750xa server with NVIDIA A100 PCIe GPUs outperformed other submissions on the ResNet, RetinaNet, 3D-Unet, RNN-T, BERT-99.9, and BERT-99 benchmarks.

- The PowerEdge R760xa server with NVIDIA L40S GPUs outperformed other submissions on the ResNet Server, RetinaNet Server, RetinaNet Offline, 3D-UNet 99, RNN-T, BERT-99, BERT-99.9, DLRM-v2-99, DLRM-v2-99.9, GPTJ-99, GPTJ-99.9, Stable Diffusion XL Server, and Stable Diffusion XL Offline benchmarks.

Highlights

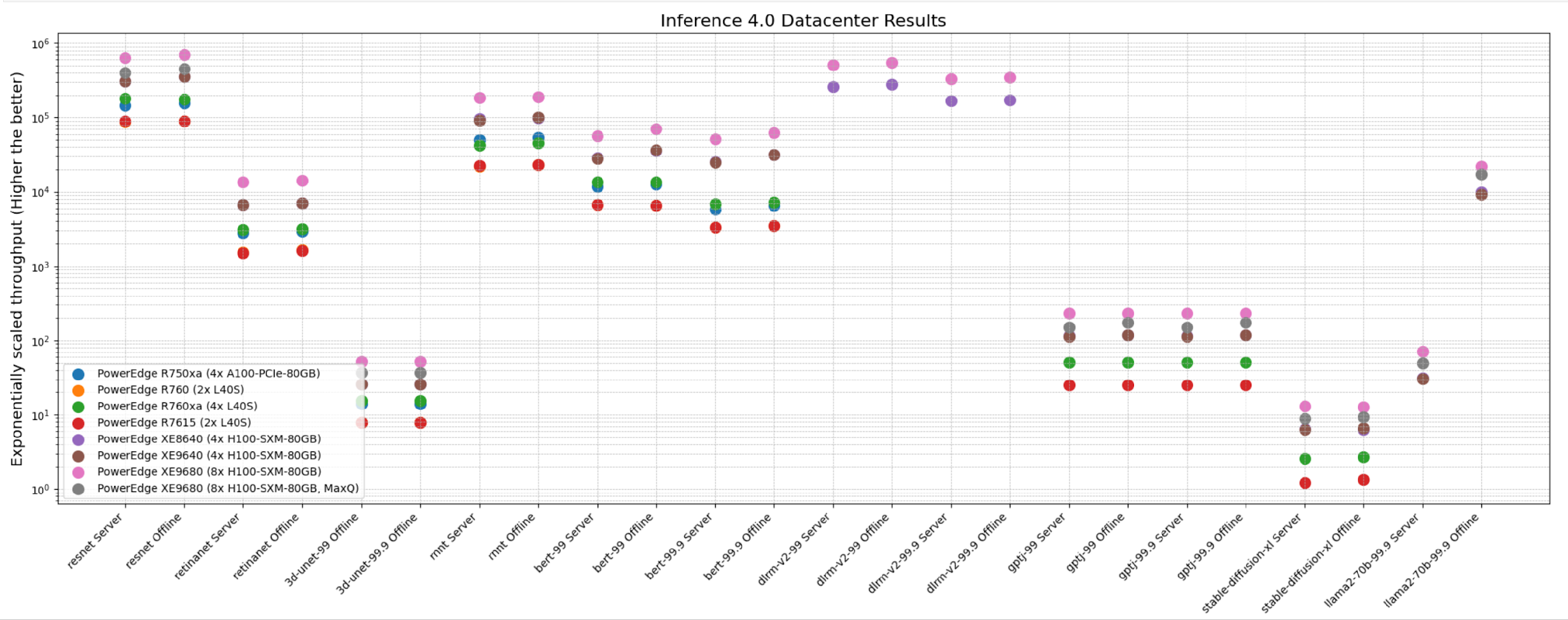

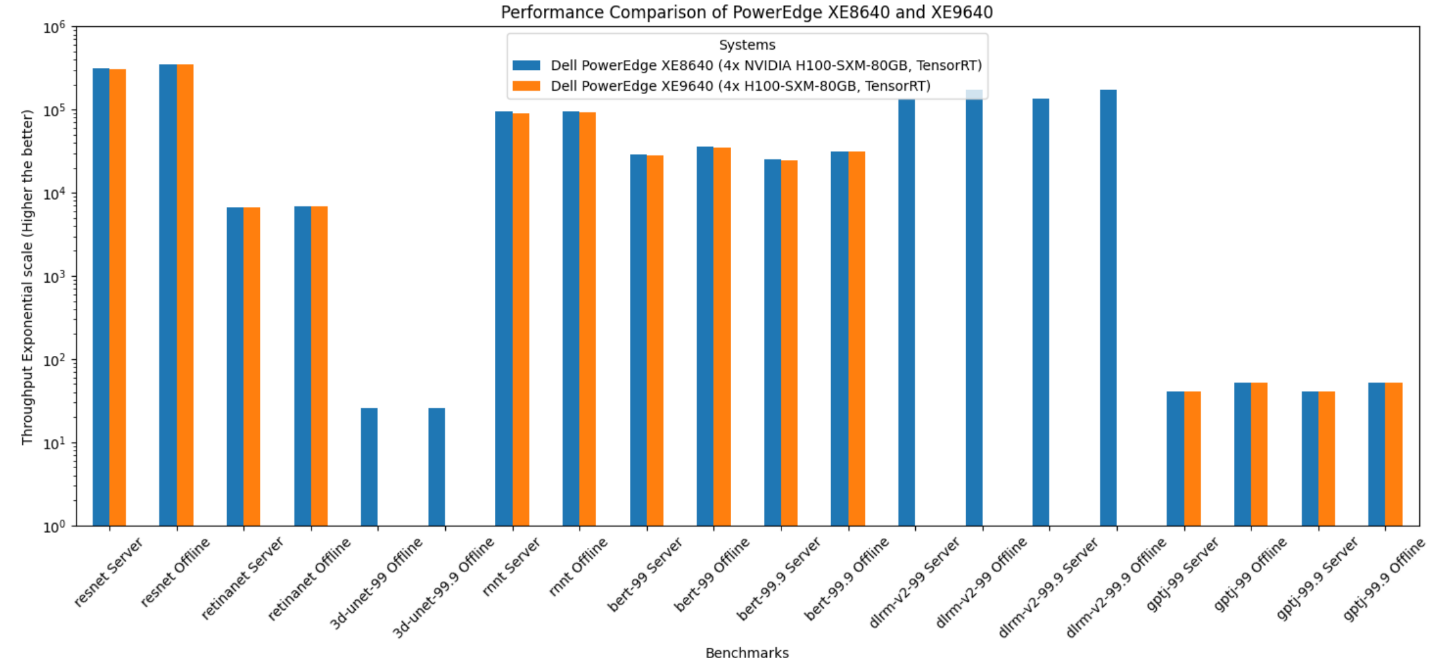

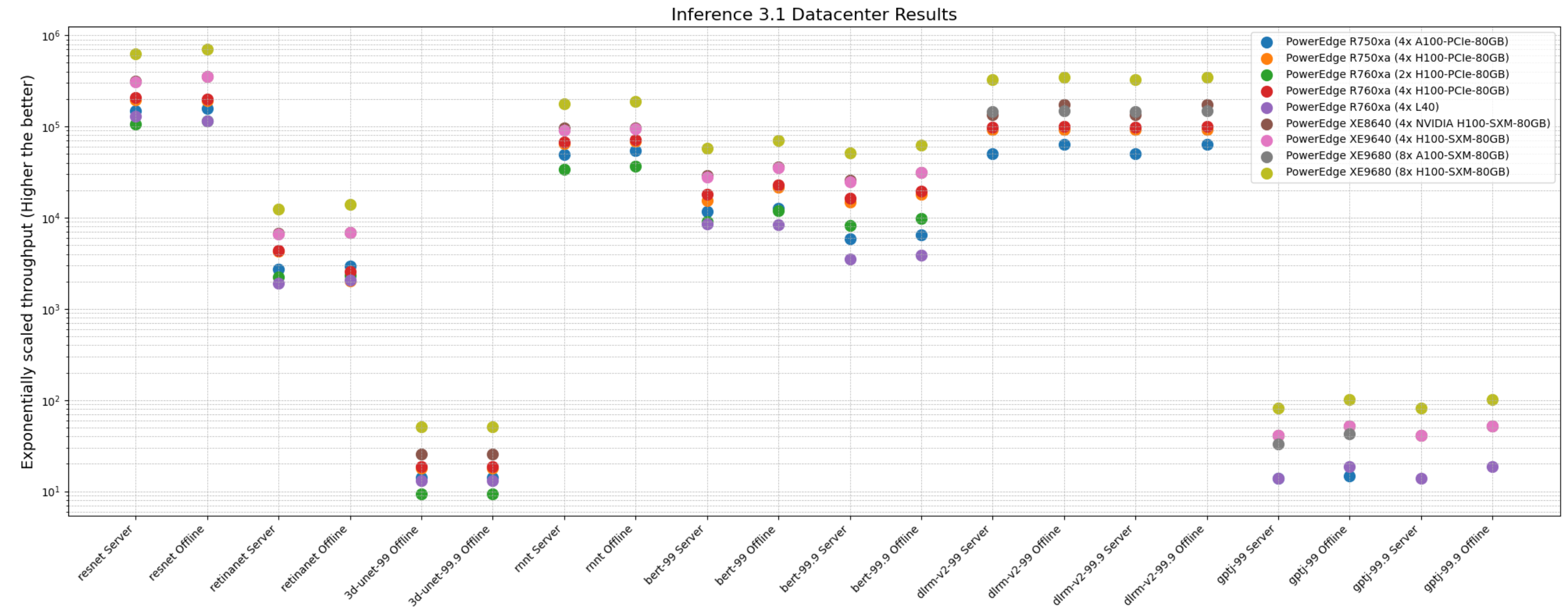

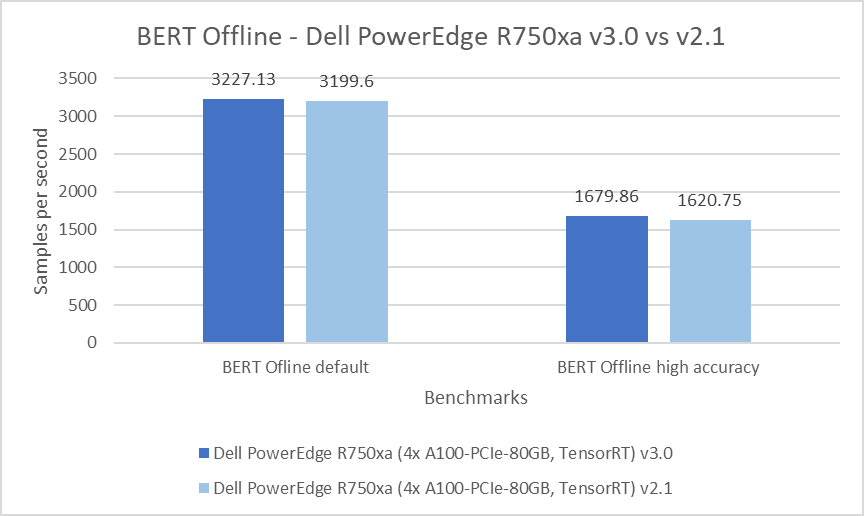

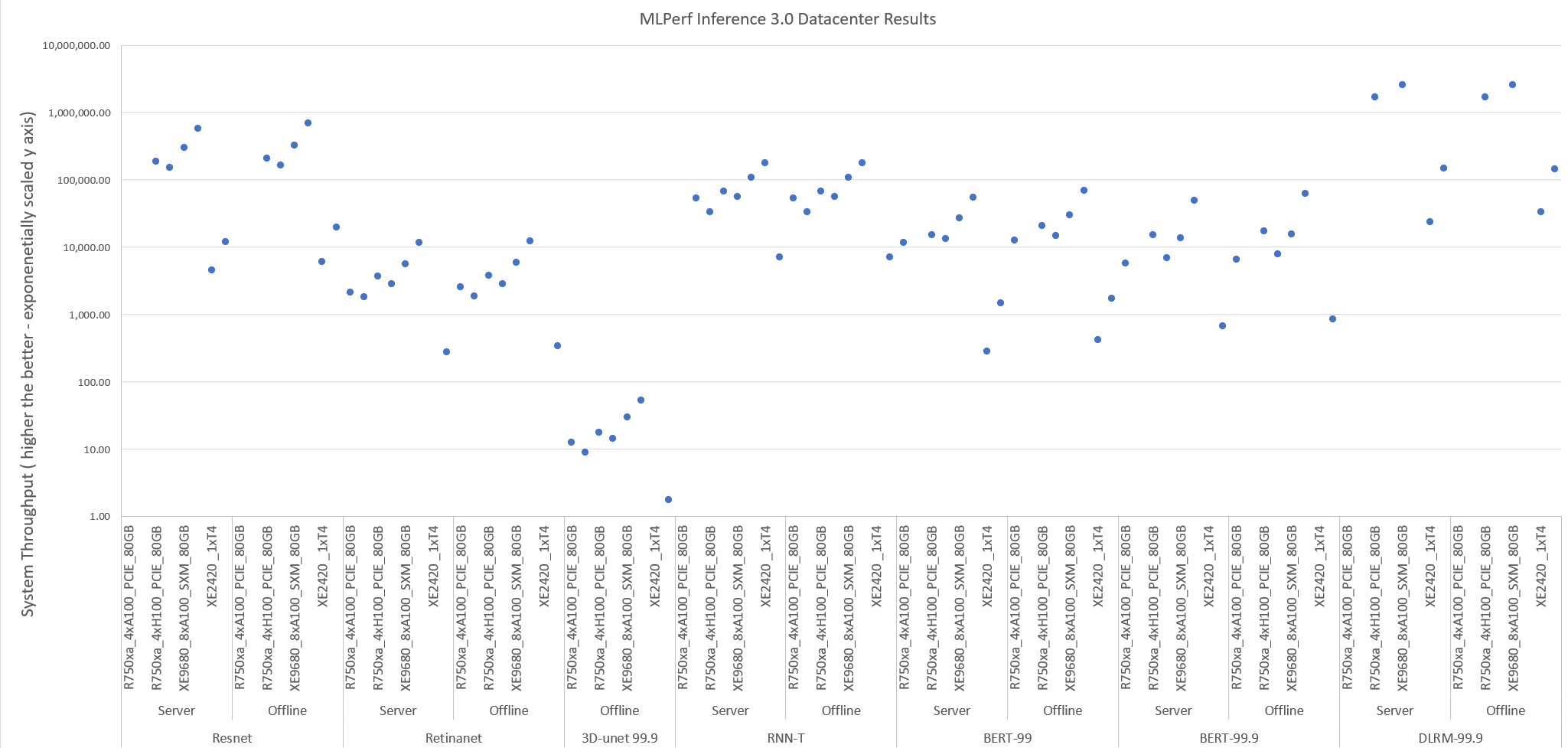

The following figure shows the different Offline and Server performance scenarios in the data center suite. These results provide an overview; follow-up blogs will provide more details about the results.

The following figure shows that these servers delivered excellent performance for all models in the benchmark, including ResNet, RetinaNet, 3D-UNet, RNN-T, BERT, DLRM-v2, GPT-J, Stable Diffusion XL, and Llama 2. Because the benchmarks operate on different scales, the figure plots them all on a logarithmically scaled y-axis:

Figure 1: System throughput for submitted systems for the data center suite.

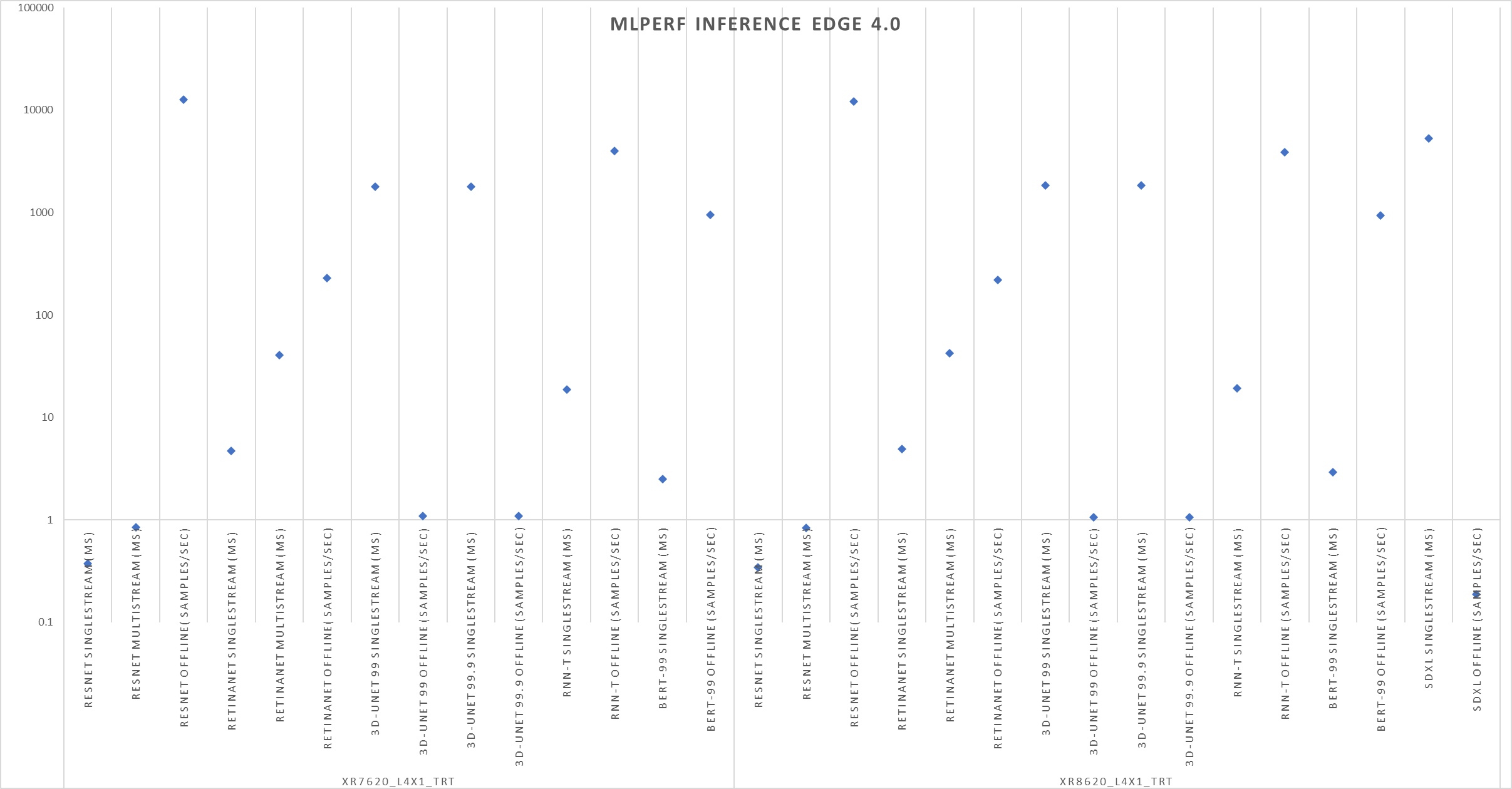

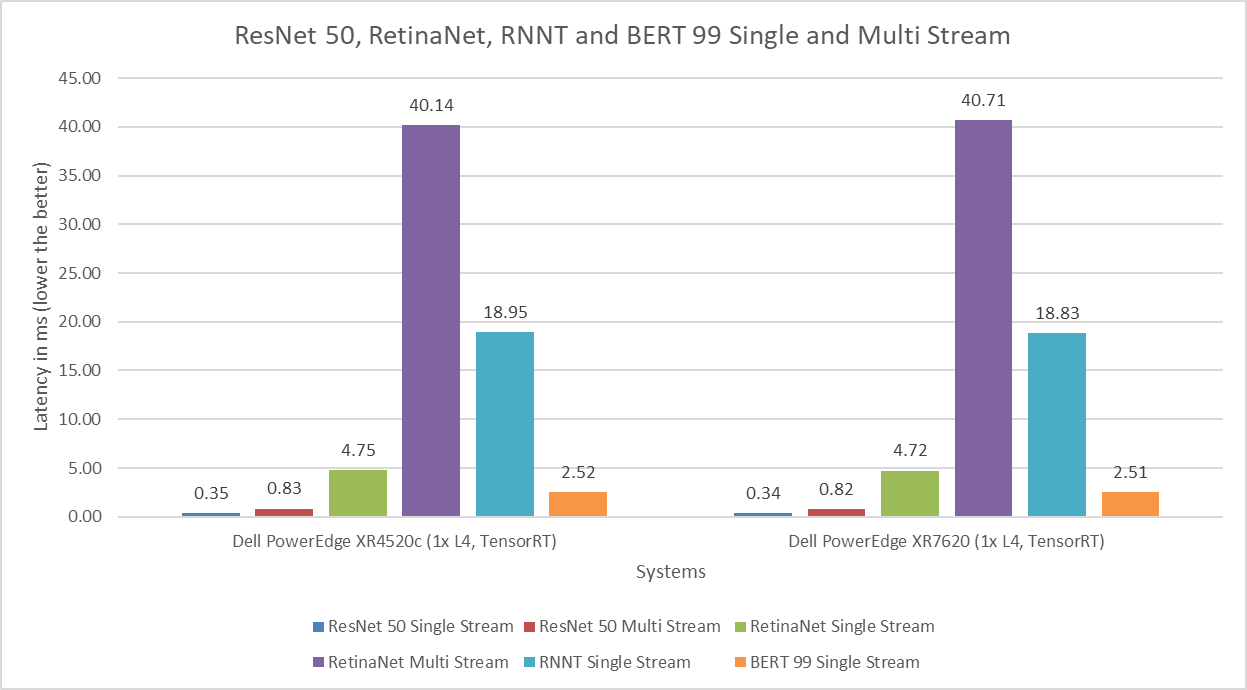

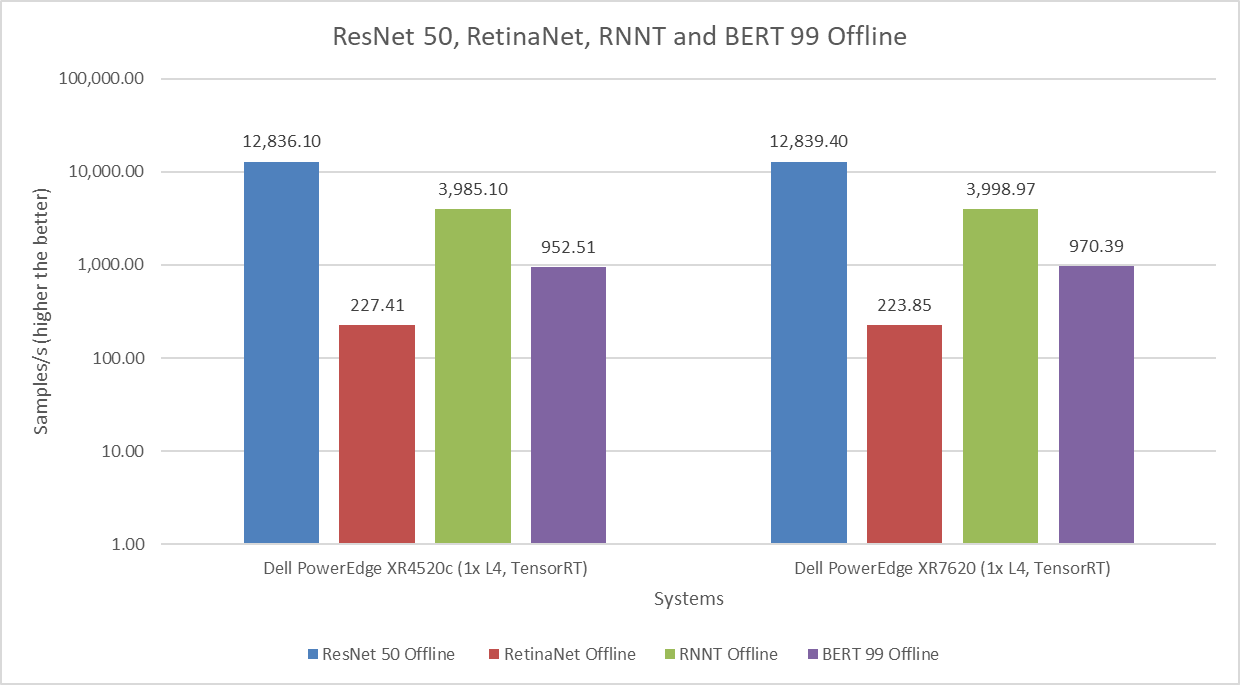

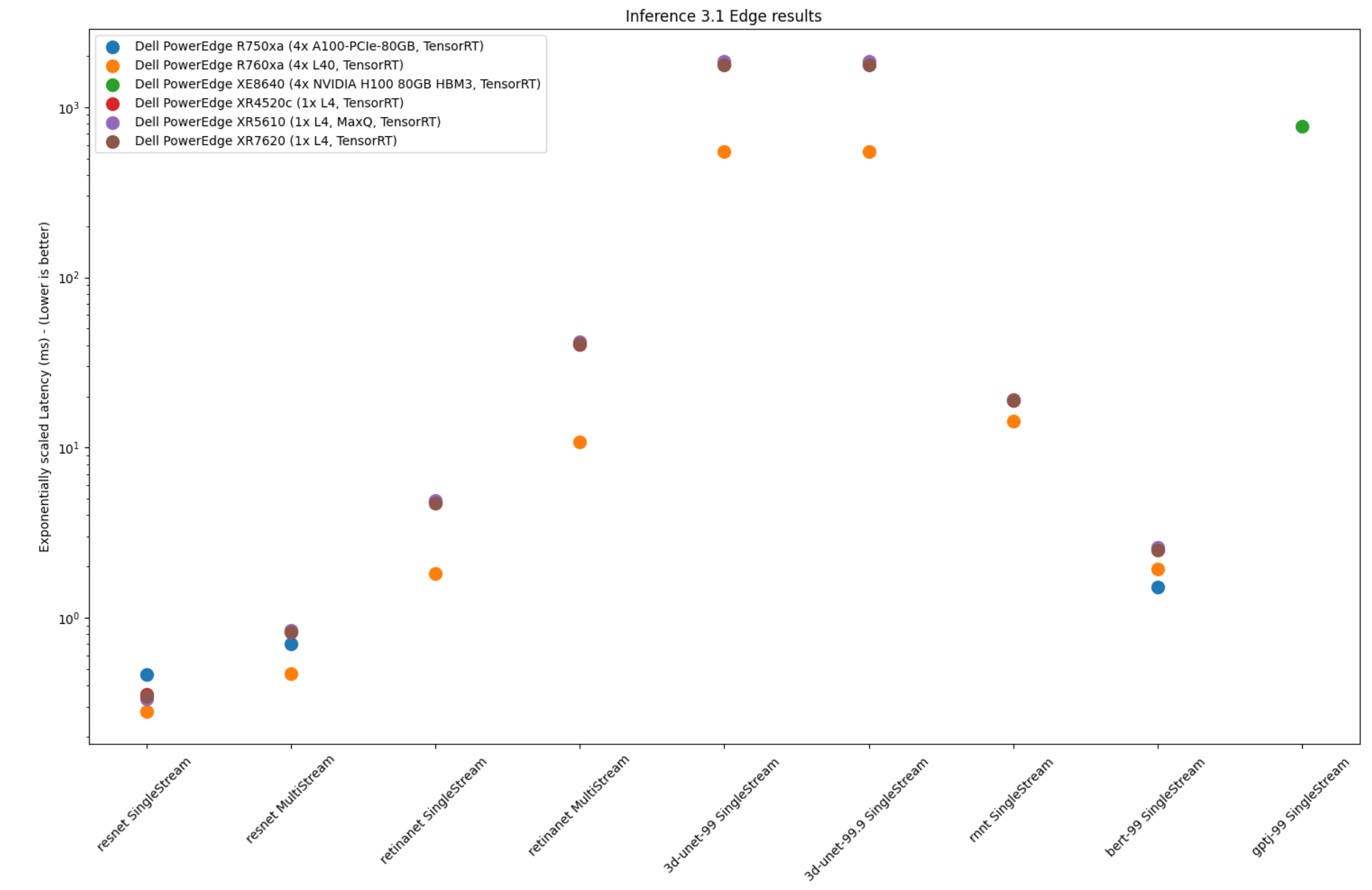

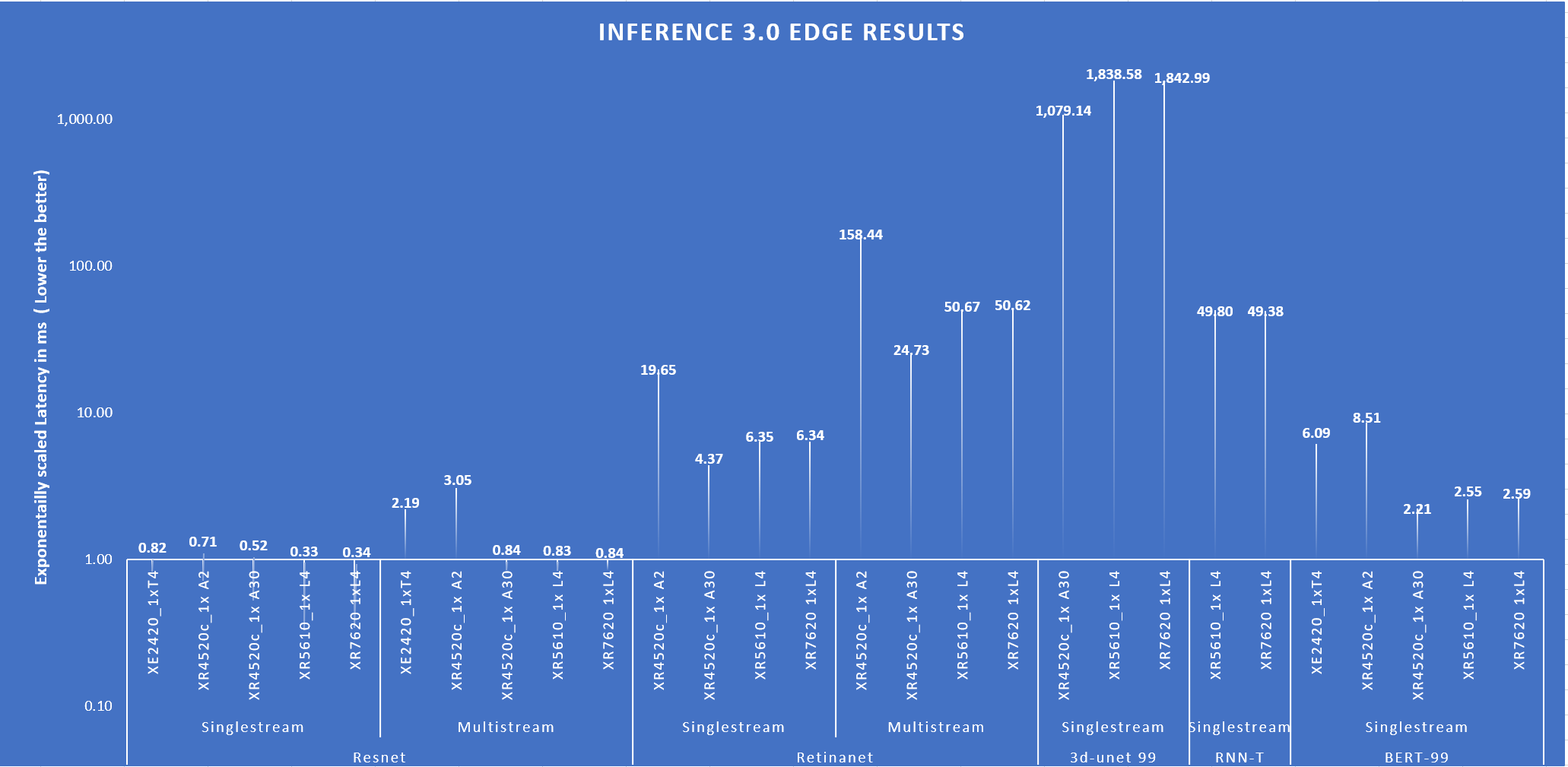

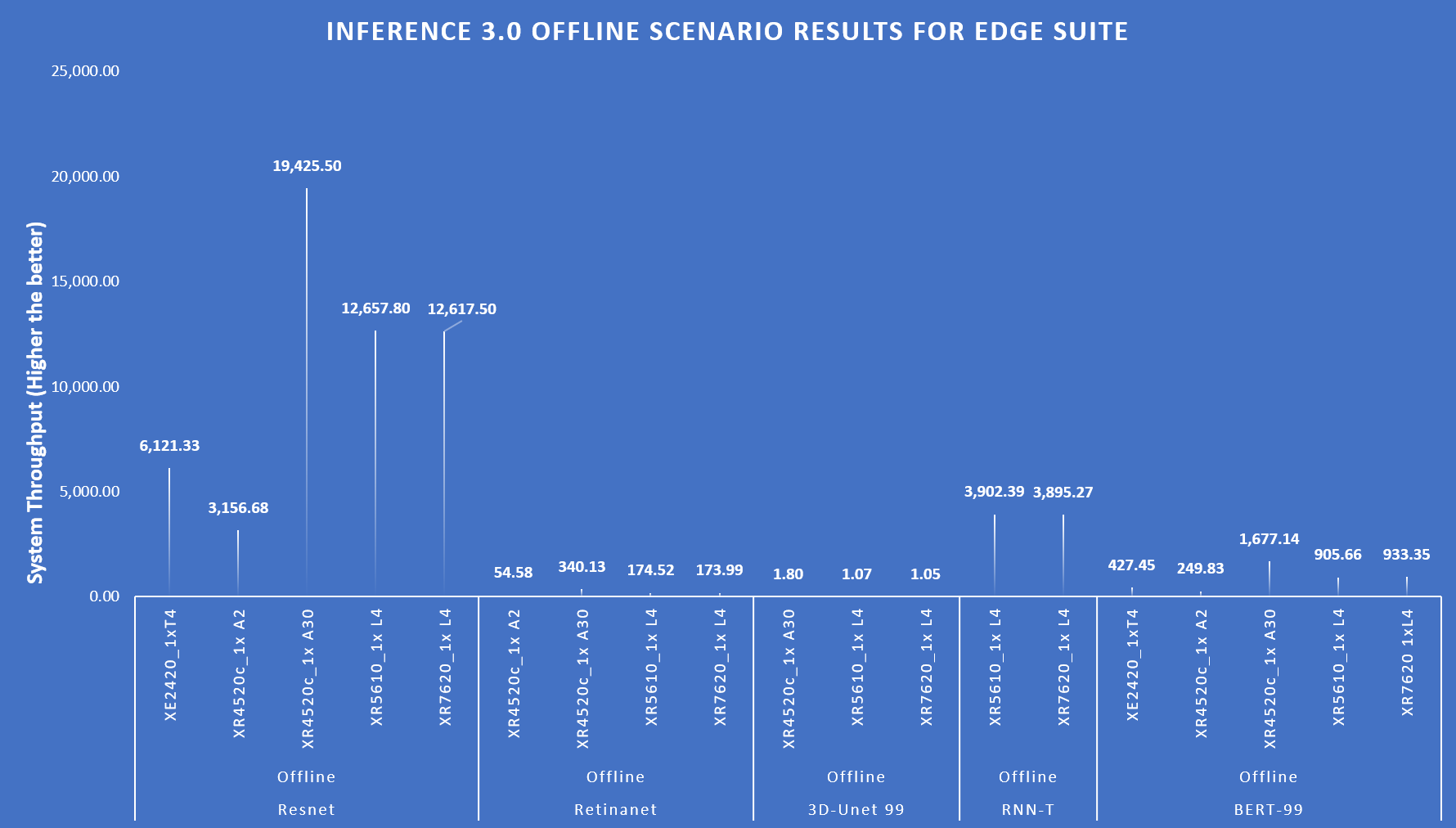

The following figure shows single-stream and multistream scenario results in the edge suite for the ResNet, RetinaNet, 3D-Unet, RNN-T, BERT 99, GPTJ, and Stable Diffusion XL benchmarks. For the single-stream and multistream scenarios, lower latency is better; for the Offline scenario, higher throughput is better.

Figure 2: Overview of edge results with PowerEdge XR7620 and XR8620t servers

Conclusion

The preceding results were officially submitted to MLCommons. They are MLPerf-compliant results for the Inference v4.0 benchmark across various benchmarks and suites, covering all the tasks in the benchmark: image classification, object detection, natural language processing, speech recognition, recommenders, medical image segmentation, LLM 6B and LLM 70B question answering, and text to image. These results demonstrate that Dell PowerEdge XE9680, XE8640, XE9640, and R760xa servers are capable of delivering high performance for inference workloads. Dell Technologies secured several #1 titles, making Dell PowerEdge servers an excellent choice for data center and edge inference deployments. End users can draw on this wide range of submissions to make server performance and sizing decisions, which ultimately supports enterprises' AI transformation and shows Dell's commitment to delivering higher performance.

MLCommons Results

https://mlcommons.org/en/inference-datacenter-40/

https://mlcommons.org/en/inference-edge-40/

The preceding graphs are MLCommons results for MLPerf IDs from 4.0-0025 to 4.0-0035 on the closed datacenter, 4.0-0036 to 4.0-0038 on the closed edge, 4.0-0033 in the closed datacenter power, and 4.0-0037 in closed edge power.

Get started building RAG pipelines in your enterprise with Dell Technologies and NVIDIA (Part 1)

Wed, 24 Apr 2024 17:21:42 -0000


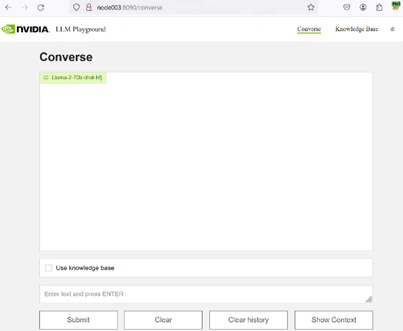

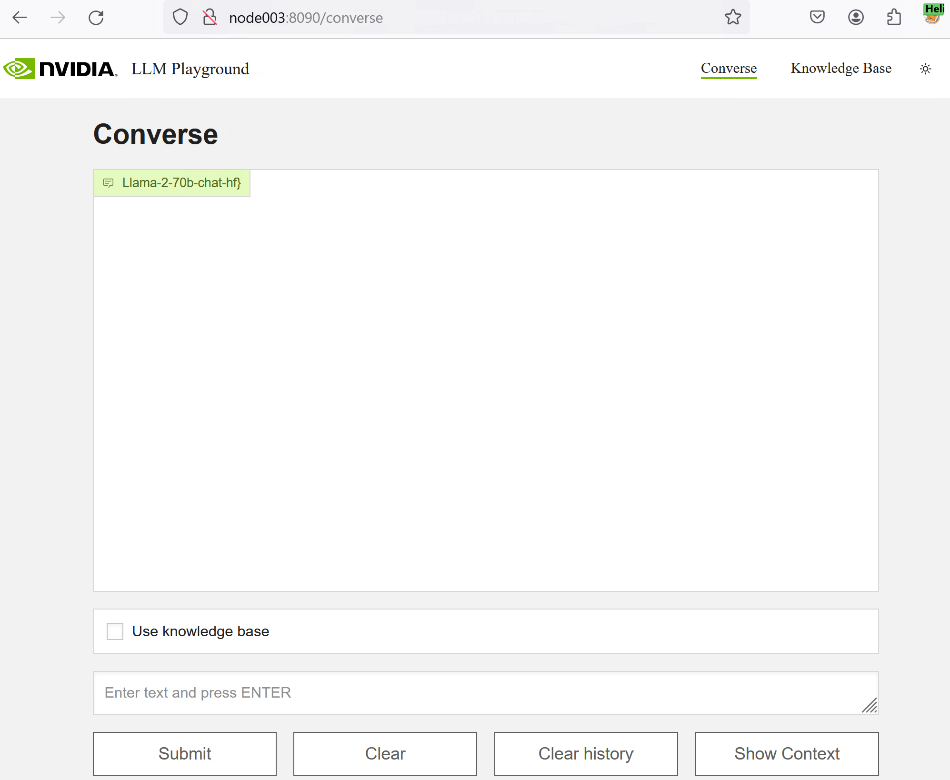

In our previous blog, we showcased running Llama 2 on the PowerEdge XE9680 using NVIDIA's LLM Playground (part of the NeMo framework), an innovative platform for experimenting with and deploying large language models (LLMs) for various enterprise applications.

In practice, running straight inference with foundational models is rarely enough in an enterprise context, because it presents several challenges, such as a lack of domain-specific knowledge, the potential for outdated or incomplete information, and the risk of generating inaccurate or misleading responses.

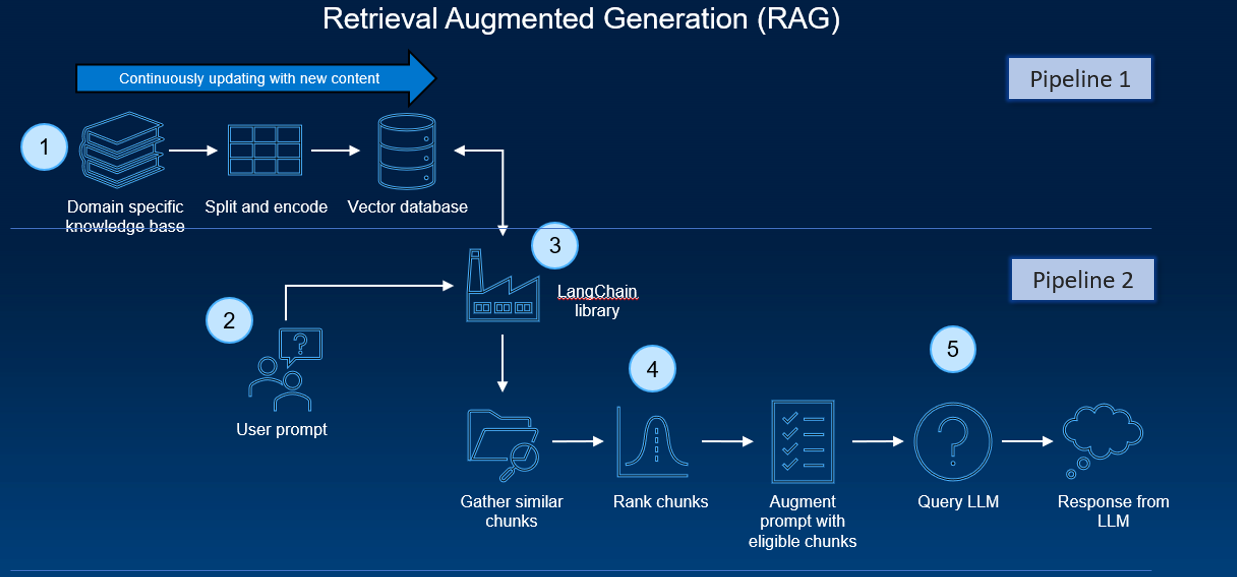

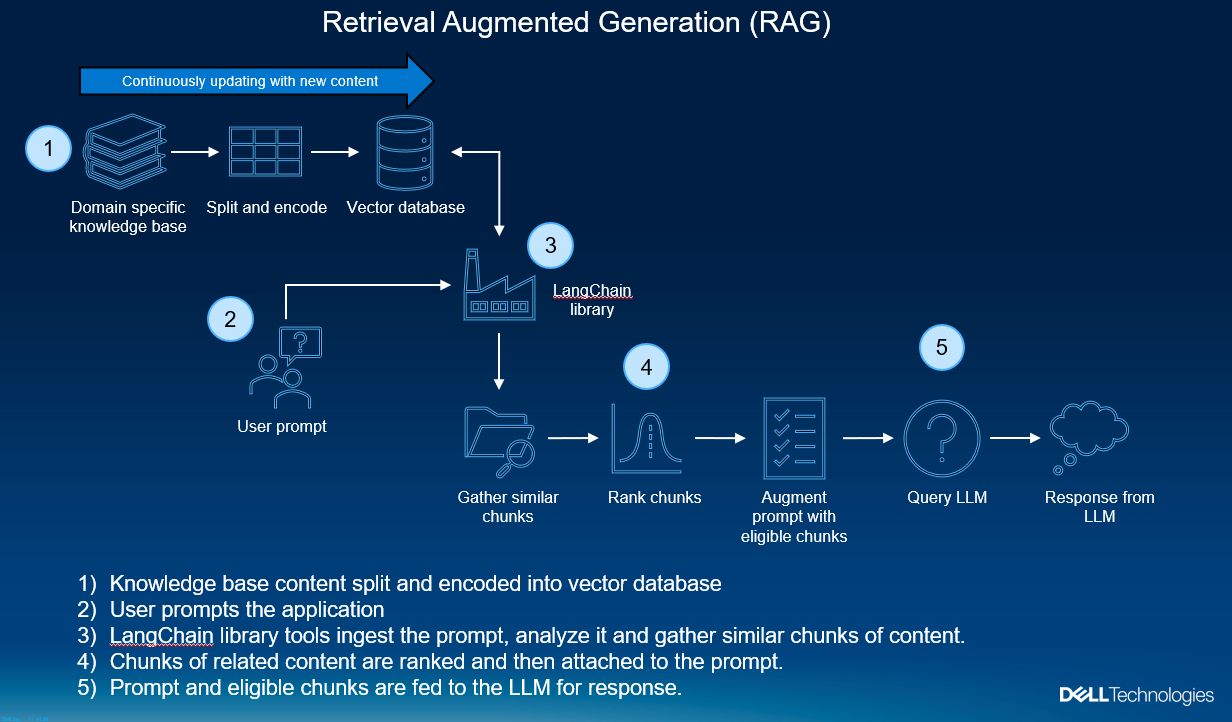

Retrieval-Augmented Generation (RAG) represents a pivotal innovation within the generative AI space.

RAG combines generative AI foundational models with advanced information retrieval techniques to create interactive systems that are both responsive and deeply informative. Because RAG is so flexible, RAG systems can be designed in many different ways. In a recently published blog, David O'Dell showed how RAG can be built from scratch.

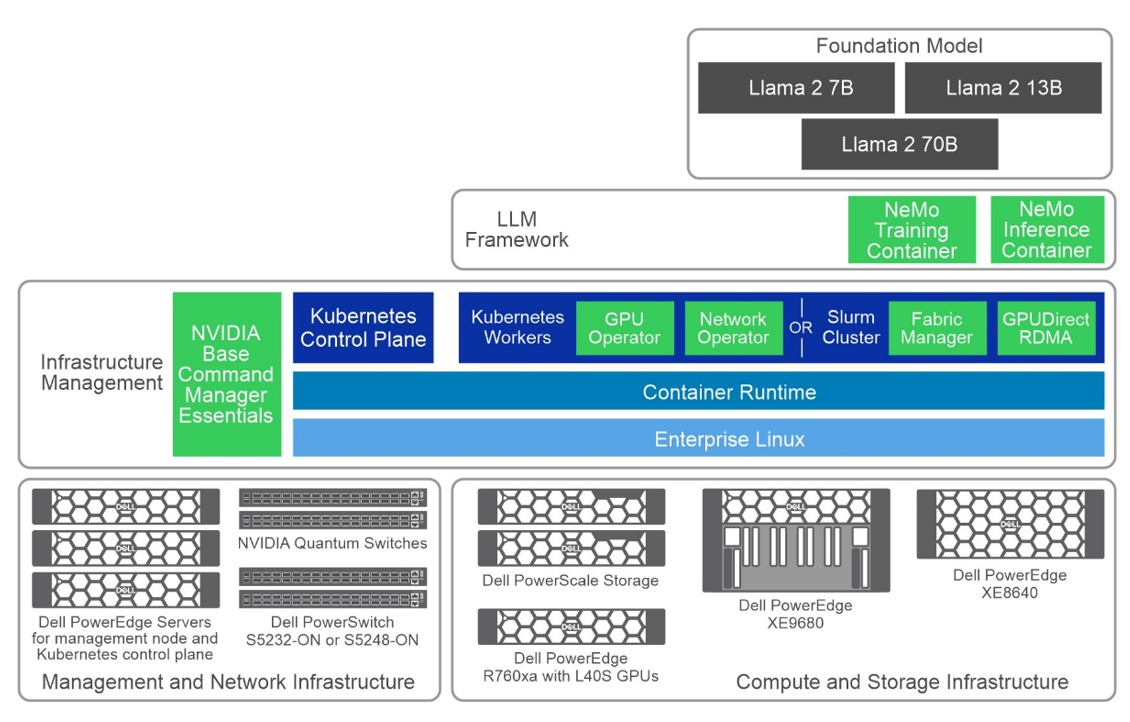

This blog also serves as a follow-on companion to the Technical White Paper NVIDIA RAG On Dell, available here, which highlights the solution built on Dell Data Center Hardware, K8s, Dell CSI PowerScale for Kubernetes, and the NVIDIA AI Enterprise suite. Check out the Technical White Paper to learn more about the solution's architecture and logical approach.

In this blog, we show how this new NVIDIA approach provides a more automated way of deploying RAG, which customers looking for a more standardized approach can leverage.

We will take you through step-by-step instructions for getting up and running with NVIDIA's LLM Playground software so you can experiment with your own RAG pipelines. In future blog posts (once we are familiar with the LLM Playground basics), we will dig deeper into RAG pipelines so you can further customize them and explore other implementations using NVIDIA's software components.

But first, let's cover the basics.

Building Your Own RAG Pipeline (Getting Started)

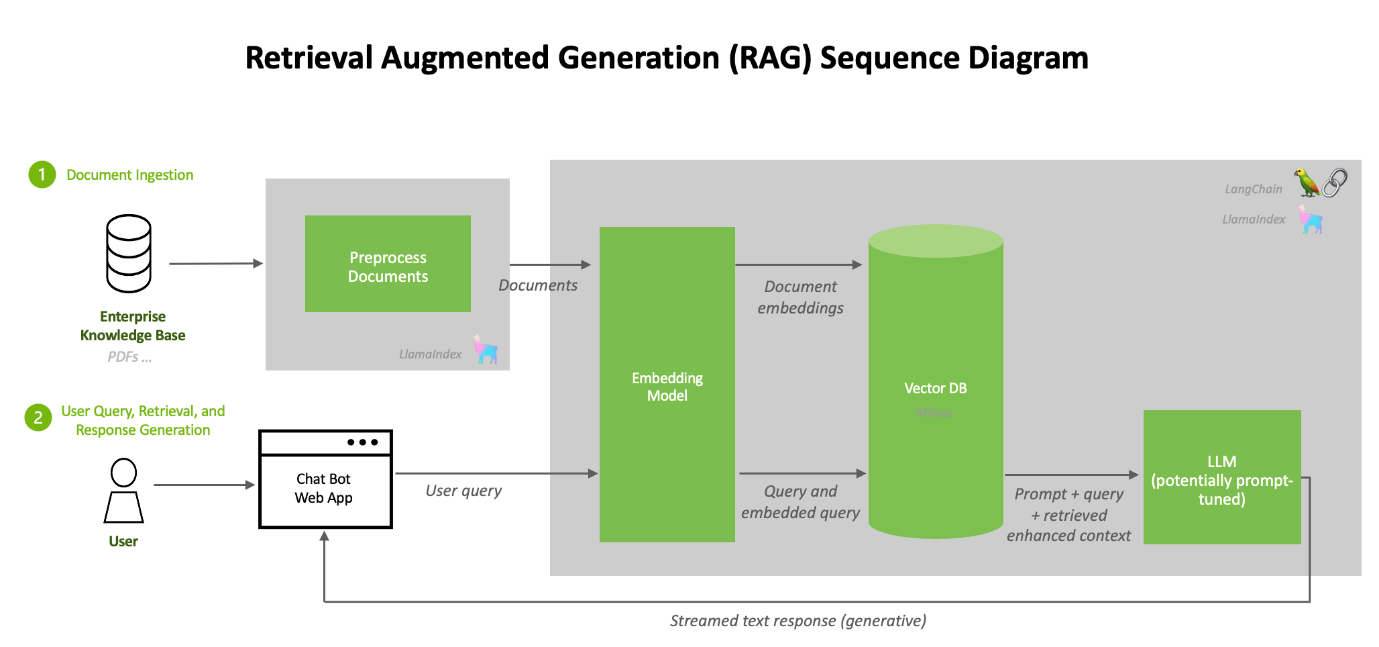

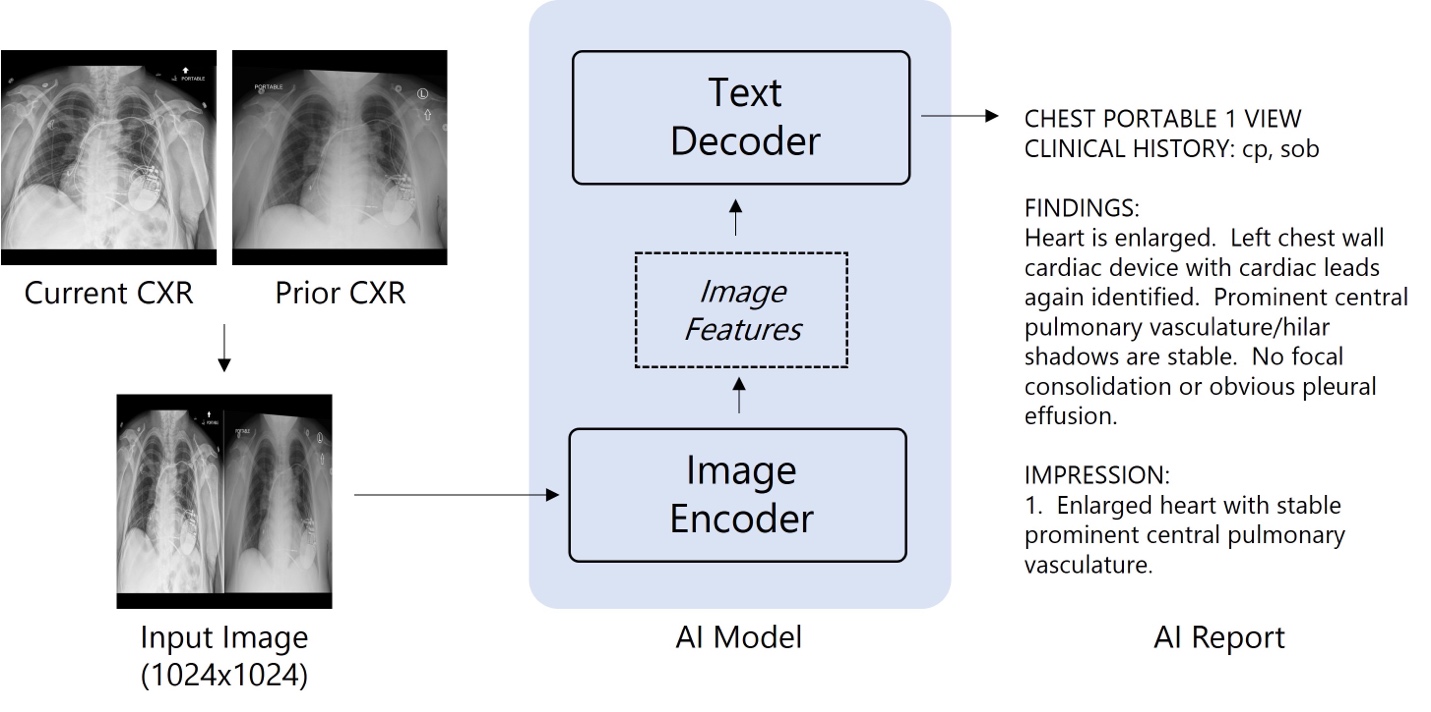

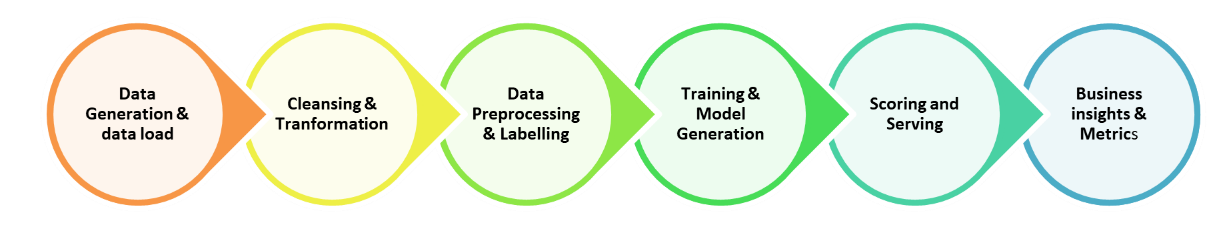

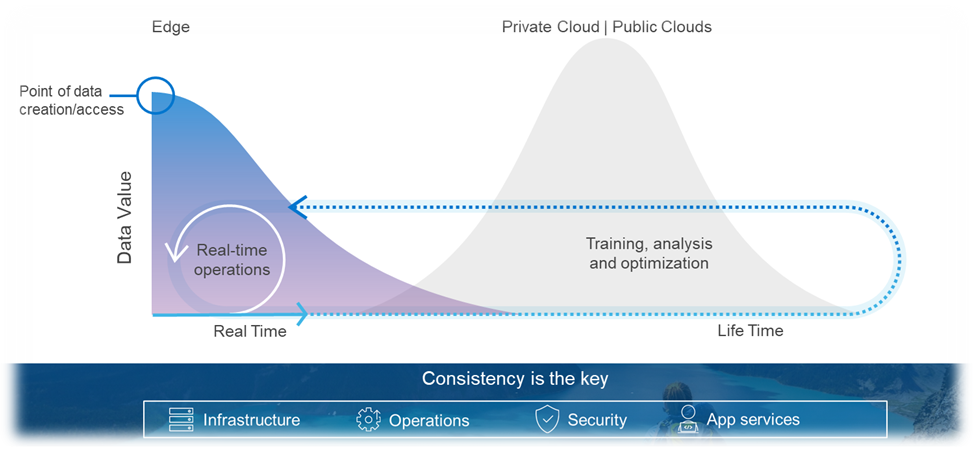

A typical RAG pipeline consists of several phases. Document ingestion occurs offline; when an online query comes in, the pipeline retrieves the relevant documents and generates a response.

At a high level, the architecture of a RAG system can be distilled down to two pipelines:

- A recurring pipeline of document preprocessing, ingestion, and embedding generation

- An inference pipeline with a user query and response generation
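The two pipelines can be sketched as plain Python functions. This is a minimal, framework-free illustration under stated assumptions: `InMemoryStore`, `chunk`, `ingest`, and `answer` are hypothetical names, a Python list stands in for the vector database, and the `embed` and `llm` callables stand in for a real embedding model and LLM endpoint. It is not how NVIDIA's workflow is implemented.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Vector = List[float]
Embedder = Callable[[str], Vector]


@dataclass
class InMemoryStore:
    """Hypothetical stand-in for a vector database such as Milvus."""
    rows: List[Tuple[Vector, str]] = field(default_factory=list)

    def add(self, vector: Vector, text: str) -> None:
        self.rows.append((vector, text))

    def search(self, query: Vector, k: int) -> List[str]:
        # Rank stored chunks by dot product with the query vector (highest first).
        ranked = sorted(
            self.rows,
            key=lambda row: sum(a * b for a, b in zip(row[0], query)),
            reverse=True,
        )
        return [text for _, text in ranked[:k]]


def chunk(text: str, size: int = 200) -> List[str]:
    """Preprocessing: split a document into windows of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def ingest(docs: List[str], embed: Embedder, store: InMemoryStore) -> int:
    """Pipeline 1 (recurring, offline): preprocess, embed, and store each chunk."""
    stored = 0
    for doc in docs:
        for piece in chunk(doc):
            store.add(embed(piece), piece)
            stored += 1
    return stored


def answer(query: str, embed: Embedder, store: InMemoryStore,
           llm: Callable[[str], str], k: int = 3) -> str:
    """Pipeline 2 (online): embed the query, retrieve context, generate a response."""
    context = store.search(embed(query), k)
    prompt = "Context:\n" + "\n".join(context) + f"\n\nQuestion: {query}\nAnswer:"
    return llm(prompt)
```

Keeping the embedder and the LLM as injected callables mirrors the separation in the text: the ingestion pipeline can rerun whenever documents change without touching the inference path.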

Several software components and tools are typically employed. These components work together to enable the efficient processing and handling of the data, and the actual execution of inferencing tasks.

These software components, in combination with the hardware setup (such as GPUs and virtual machines/containers), create an infrastructure for running AI inferencing tasks within a typical RAG pipeline. Integrating these tools allows an AI model to process custom datasets (such as PDFs) and generate sophisticated, human-like responses.

As previously stated, David O’Dell has provided an extremely useful guide to get a RAG pipeline up and running. One of the key components is the pipeline function.

The pipeline function in Hugging Face’s Transformers library is a high-level API designed to simplify the process of using pre-trained models for various NLP tasks. It abstracts away the complexities of model loading, data pre-processing (like tokenization), inference, and post-processing. The pipeline interfaces directly with the model to perform inference, but it is focused on ease of use and accessibility rather than on scaling and optimizing resource usage.

It’s ideal for quickly implementing NLP tasks, prototyping, and applications where ease of use and simplicity are key.
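To make concrete what the pipeline function abstracts away, here is a toy, self-contained class with the same three stages: tokenization, a model forward pass, and post-processing into a labelled result. It is purely illustrative and is not Transformers code; the class name, vocabulary, and weights are hypothetical. (Transformers' own `Pipeline` classes are organized around similar `preprocess`, `_forward`, and `postprocess` steps, and a single call such as `pipeline("sentiment-analysis")` hides all of them.)

```python
from typing import Dict, List


class ToySentimentPipeline:
    """Toy illustration of the stages a high-level pipeline hides (not Transformers code)."""

    def __init__(self) -> None:
        # Hypothetical "model": a tiny vocabulary with one weight per token id.
        self.vocab = {"<unk>": 0, "great": 1, "fast": 2, "slow": 3, "broken": 4}
        self.weights = [0.0, 1.0, 0.5, -0.5, -1.0]

    def preprocess(self, text: str) -> List[int]:
        # Tokenization: map lowercase words to ids; unknown words map to <unk>.
        return [self.vocab.get(word, 0) for word in text.lower().split()]

    def forward(self, ids: List[int]) -> float:
        # "Inference": sum the per-token weights into a single score.
        return sum(self.weights[i] for i in ids)

    def postprocess(self, score: float) -> Dict[str, object]:
        # Turn the raw score into a labelled, user-friendly result.
        return {"label": "POSITIVE" if score >= 0 else "NEGATIVE", "score": score}

    def __call__(self, text: str) -> Dict[str, object]:
        return self.postprocess(self.forward(self.preprocess(text)))


if __name__ == "__main__":
    classify = ToySentimentPipeline()
    print(classify("great and fast"))  # → {'label': 'POSITIVE', 'score': 1.5}
```

The caller only ever sees `classify(text)`, which is exactly the convenience (and the limitation) the text describes: nothing in this interface deals with batching, multiple GPUs, or serving many users at once.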

But is it easy to implement?

Setting up and maintaining RAG pipelines requires considerable technical expertise in AI, machine learning, and system administration. While some components (such as the ‘pipeline function’) have been designed for ease of use, typically, they are not designed to scale.

So, we need robust software that can scale and is easier to use.

NVIDIA's solutions are designed for high performance and scalability, which are essential for handling large-scale AI workloads and real-time interactions.

NVIDIA provides extensive documentation, sample Jupyter notebooks, and a sample chatbot web application, which are invaluable for understanding and implementing the RAG pipeline.

The system is optimized for NVIDIA GPUs, ensuring efficient use of some of the most powerful available hardware.

NVIDIA’s Approach to Simplification: Building a RAG System with NVIDIA’s Tools

NVIDIA’s approach is to streamline the RAG pipeline and make it much easier to get up and running.

By offering a suite of optimized tools and pre-built components, NVIDIA has developed an AI workflow for retrieval-augmented generation that includes a sample chatbot and the elements users need to create their own applications with this new method. It simplifies the once daunting task of creating sophisticated AI chatbots, ensuring scalability and high performance.

Getting Started with NVIDIA’s LLM playground

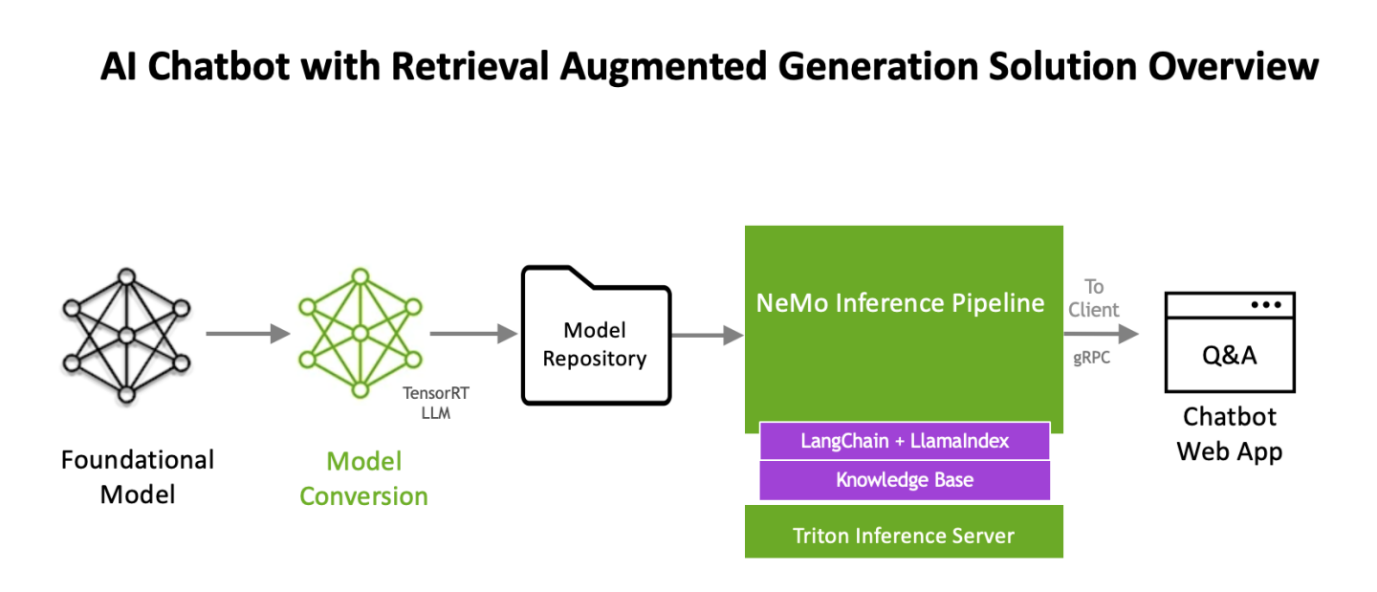

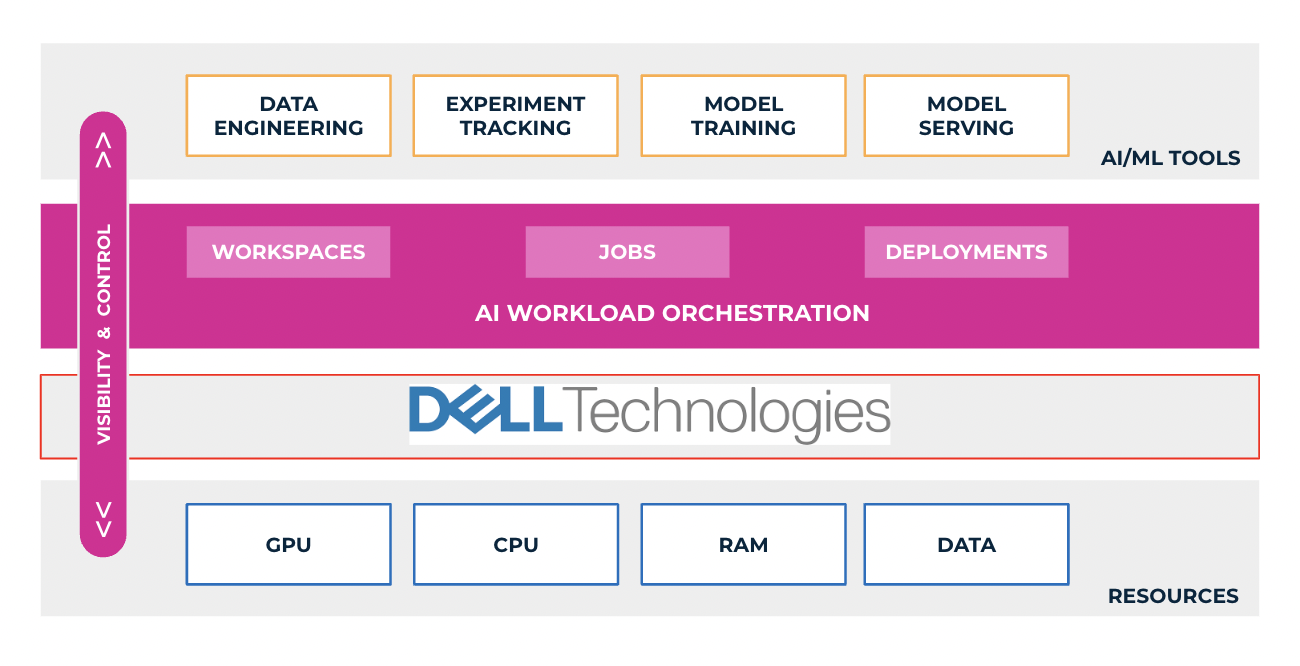

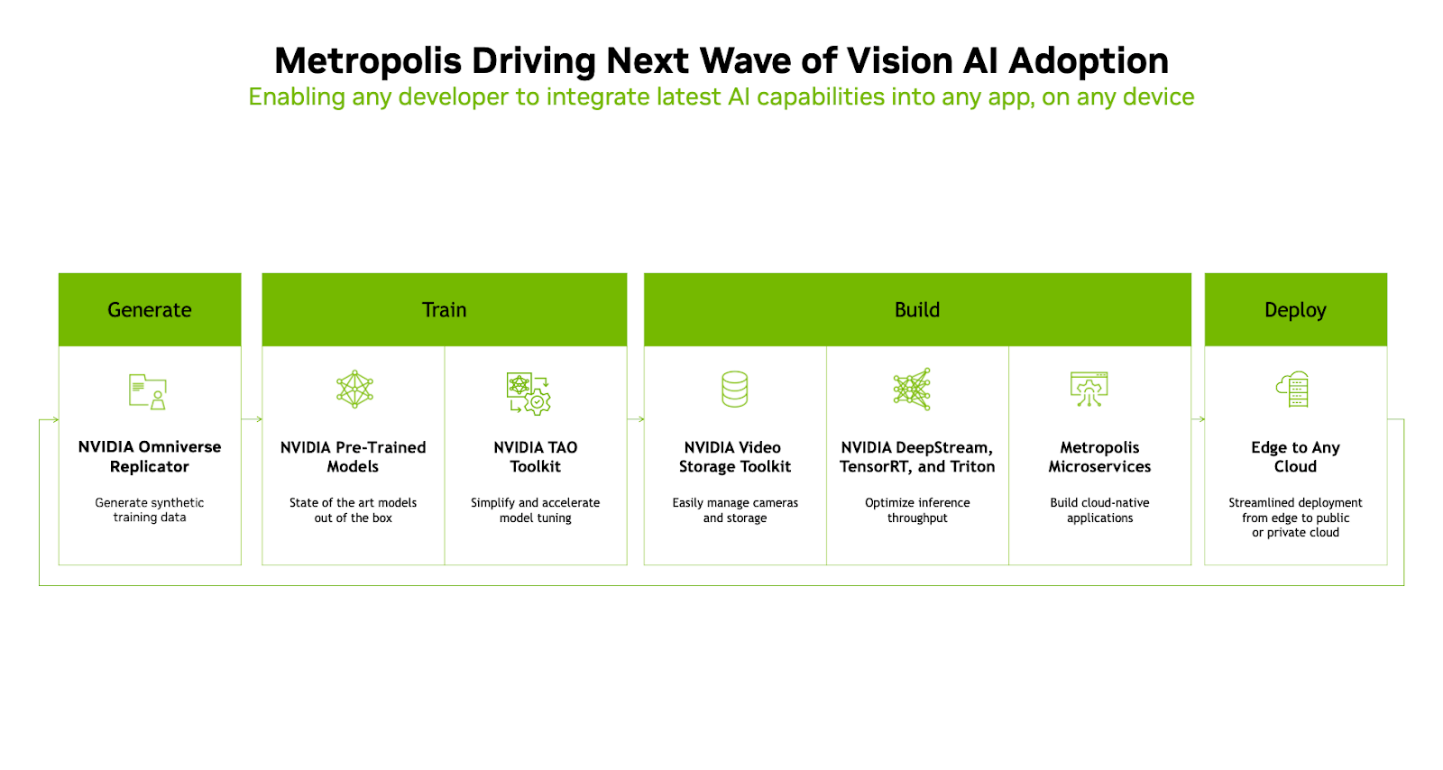

The workflow uses NVIDIA NeMo, a framework for developing and customizing generative AI models, as well as software like NVIDIA Triton Inference Server and NVIDIA TensorRT-LLM for running generative AI models in production.

The software components are all part of NVIDIA AI Enterprise, a software platform that accelerates the development and deployment of production-ready AI with the security, support, and stability businesses need.

NVIDIA has published a retrieval-augmented generation workflow as an example application at:

https://resources.nvidia.com/en-us-generative-ai-chatbot-workflow/knowledge-base-chatbot-technical-brief

NVIDIA also maintains a GitHub repository with up-to-date information on how to deploy it on Linux with Docker, on Kubernetes, and on Windows:

https://github.com/NVIDIA/GenerativeAIExamples

Next, we walk through, at a high level, the procedure for using the NVIDIA AI Enterprise Suite RAG pipeline implementation.

This procedure is based on the documentation at https://github.com/NVIDIA/GenerativeAIExamples/tree/v0.2.0/RetrievalAugmentedGeneration

Deployment

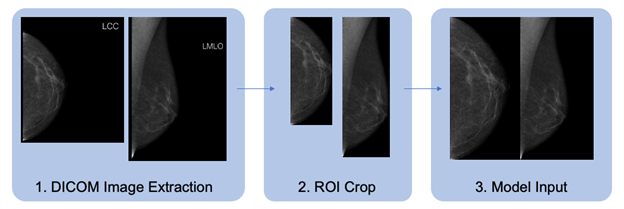

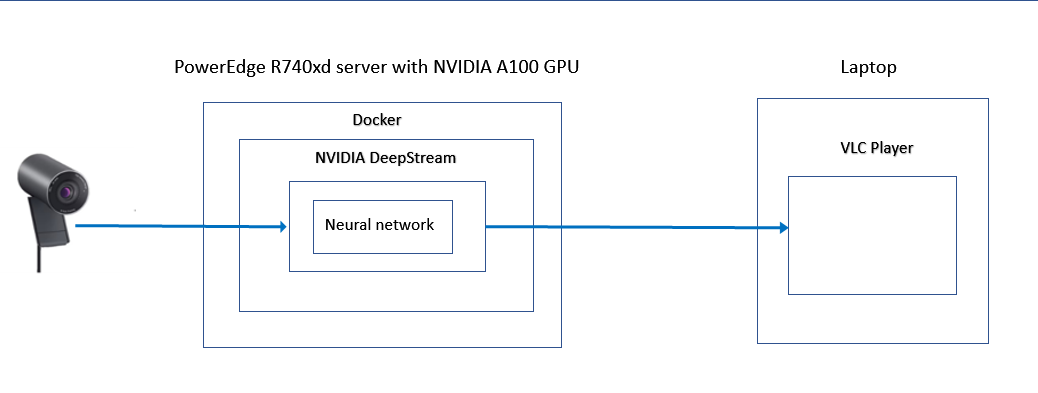

The NVIDIA developer guide provides detailed instructions for building a Retrieval-Augmented Generation (RAG) chatbot using the Llama 2 model on TensorRT-LLM (TRT-LLM). It lists prerequisites such as an NVIDIA GPU, Docker, the NVIDIA Container Toolkit, an NGC account, and the Llama 2 model weights. The guide covers components such as the Triton Model Server, the vector database, the API server, and Jupyter notebooks for development.

Key steps involve setting up these components, uploading documents, and generating answers. The process is designed for enterprise chatbots, emphasizing customization and leveraging NVIDIA’s AI technologies. For complete details and instructions, please refer to the official guide.
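Before following the guide, a quick preflight check for those prerequisites can save time. The sketch below only looks for tools on `PATH` and checks a weights directory; it does not validate versions or GPU health. The function name, the `NGC_API_KEY` environment variable, and the use of `nvidia-ctk` (the CLI shipped with recent NVIDIA Container Toolkit releases) as the toolkit signal are assumptions, not requirements taken from the guide.

```python
import os
import shutil
from typing import Dict


def check_prerequisites(model_dir: str) -> Dict[str, bool]:
    """Hypothetical preflight check; returns one boolean per prerequisite."""
    return {
        # Docker CLI available on PATH.
        "docker": shutil.which("docker") is not None,
        # nvidia-smi ships with the NVIDIA driver, so it hints that a GPU driver is installed.
        "nvidia_driver": shutil.which("nvidia-smi") is not None,
        # Assumption: nvidia-ctk on PATH signals that the Container Toolkit is installed.
        "container_toolkit": shutil.which("nvidia-ctk") is not None,
        # Assumption: the NGC credential is exported under this variable name.
        "ngc_api_key": bool(os.environ.get("NGC_API_KEY")),
        # The Llama 2 weights directory exists and is not empty.
        "llama2_weights": os.path.isdir(model_dir) and bool(os.listdir(model_dir)),
    }


if __name__ == "__main__":
    # Placeholder path: point this at wherever your Llama 2 weights live.
    results = check_prerequisites("/path/to/llama2-weights")
    missing = [name for name, ok in results.items() if not ok]
    print("All prerequisites found" if not missing else f"Missing: {missing}")
```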

Key Software Components and Architectural Workflow (for Getting Up and Running with LLM Playground)

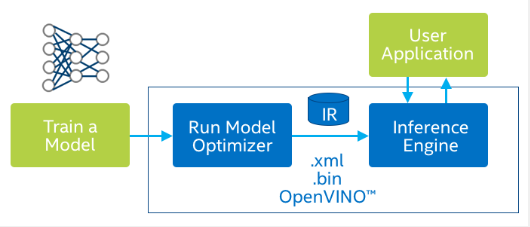

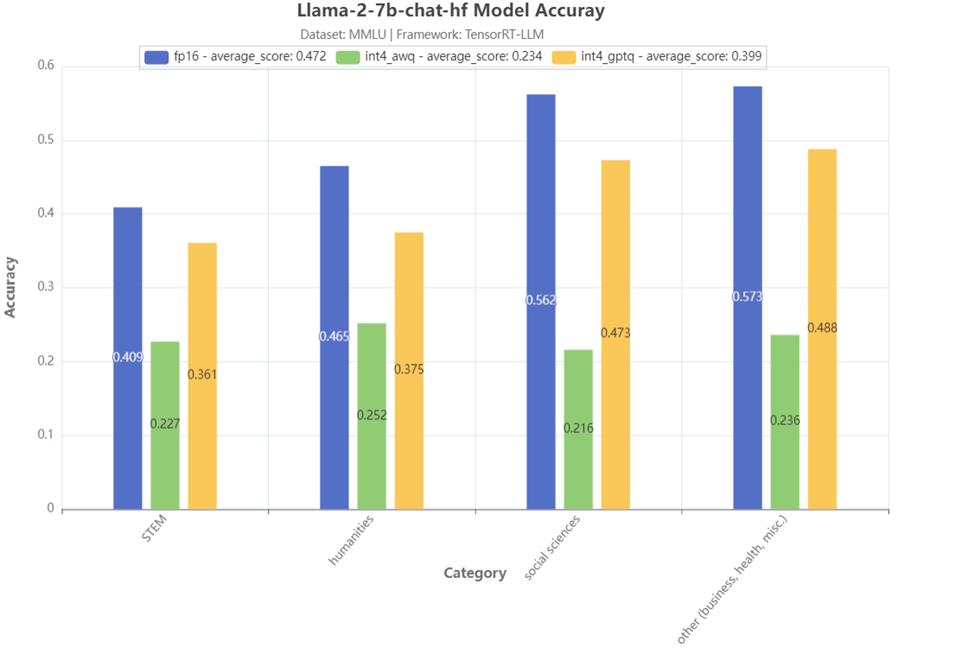

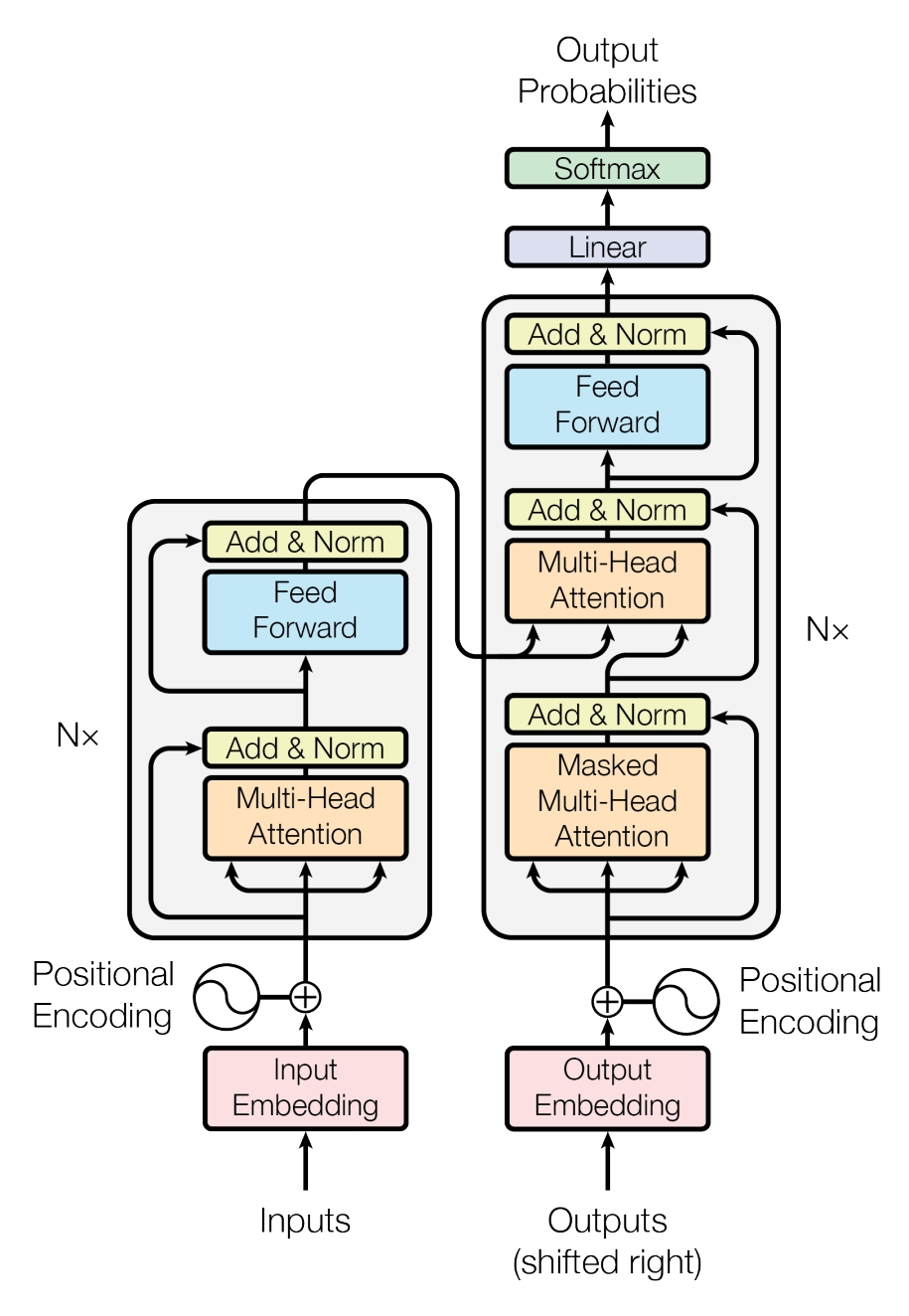

1. Llama2: Llama2 offers advanced language processing capabilities, essential for sophisticated AI chatbot interactions. It will be converted into TensorRT-LLM format.

Remember, we cannot take a model from Hugging Face and run it directly on TensorRT-LLM. Such a model must first go through a conversion stage before it can leverage all the goodness of TensorRT-LLM. We recently published a detailed blog on how to do this manually here. However, fear not: as part of the LLM Playground Docker Compose process, all we need to do is point one of our environment variables to the Llama model, and the conversion happens automatically. (The steps are outlined in the implementation section of the blog.)

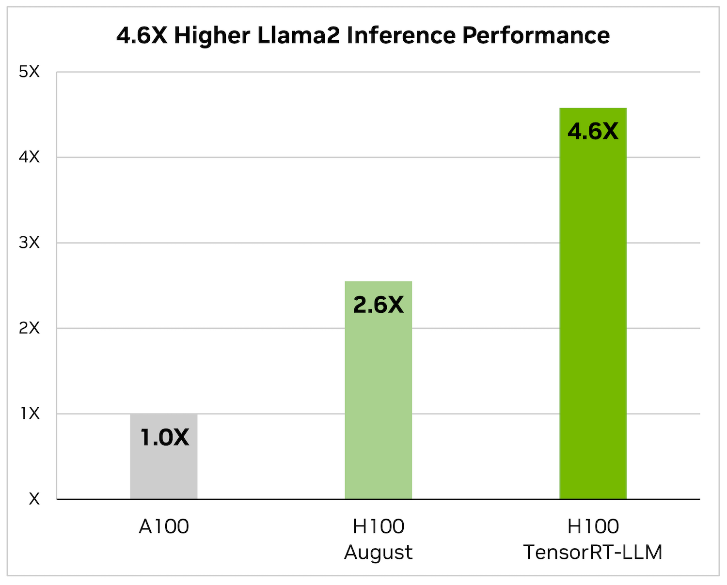

2. NVIDIA TensorRT-LLM: When it comes to optimizing large language models, TensorRT-LLM is the key. It ensures that models deliver high performance and maintain efficiency in various applications.

- The library includes optimized kernels, pre- and post-processing steps, and multi-GPU/multi-node communication primitives. These features are specifically designed to enhance performance on NVIDIA GPUs.

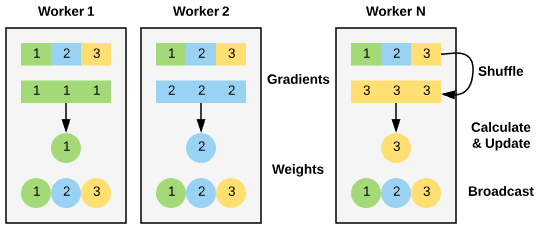

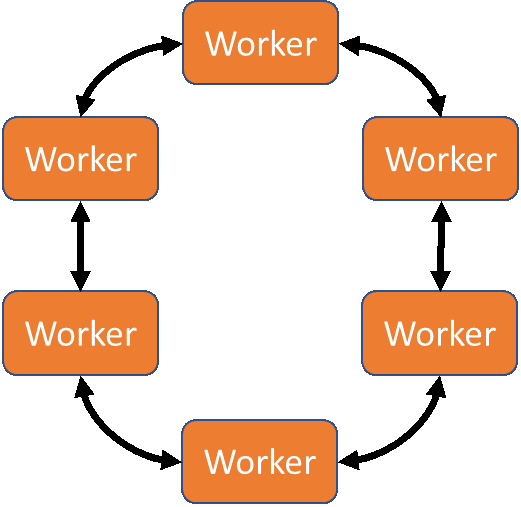

It utilizes tensor parallelism for efficient inference across multiple GPUs and servers, without the need for developer intervention or model changes.
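The idea behind tensor parallelism can be checked with a small worked example. If a weight matrix is split by columns as W = [W_1 | W_2 | ... | W_N], then XW = [XW_1 | XW_2 | ... | XW_N], so each GPU can compute its slice independently. The sketch below simulates this one common scheme (column-wise sharding of a linear layer, as popularized by Megatron-LM) in a single process. The function names are hypothetical; TensorRT-LLM performs the real sharding and cross-GPU communication internally.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    # Plain matrix product: (m x k) @ (k x n) -> (m x n).
    return [
        [sum(a[i][p] * b[p][j] for p in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def split_columns(w: Matrix, parts: int) -> List[Matrix]:
    # Shard the weight matrix column-wise: one shard per simulated GPU.
    n = len(w[0])
    if n % parts:
        raise ValueError("number of columns must divide evenly across devices")
    step = n // parts
    return [[row[s:s + step] for row in w] for s in range(0, n, step)]


def column_parallel_linear(x: Matrix, w: Matrix, parts: int) -> Matrix:
    # Each simulated GPU multiplies the full input by its own weight shard...
    partials = [matmul(x, shard) for shard in split_columns(w, parts)]
    # ...then an all-gather step concatenates the partial outputs column-wise.
    return [sum((p[i] for p in partials), []) for i in range(len(x))]


if __name__ == "__main__":
    x = [[1.0, 2.0]]
    w = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0]]
    print(matmul(x, w))                     # → [[11.0, 14.0, 17.0, 20.0]]
    print(column_parallel_linear(x, w, 2))  # → [[11.0, 14.0, 17.0, 20.0]]
```

Because each shard holds only 1/N of the weights, a model too large for one GPU's memory can still be served, at the cost of the communication step that gathers the partial results.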

We will be updating our Generative AI in the Enterprise – Inferencing – Design Guide to reflect the new sizing requirements based on TensorRT-LLM.

3. LLM-inference-server: NVIDIA Triton Inference Server (container): The Triton Inference Server streamlines the deployment of AI models. It supports scalable and flexible model serving, which is essential for handling complex AI workloads. In this workflow, the Triton Inference Server hosts the Llama 2 TensorRT-LLM model.
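Once Triton is hosting the model, clients send it prompts over HTTP. The sketch below only builds a request for Triton's generate endpoint (`POST /v2/models/<model>/generate`, with Triton's HTTP service on port 8000 by default) without sending it. The model name `ensemble` and the `text_input`/`max_tokens` fields follow the TensorRT-LLM backend's example ensemble and are assumptions here; the LLM Playground deployment may use different names, and its chain server normally makes these calls for you.

```python
import json
import urllib.request


def build_generate_request(prompt: str, max_tokens: int = 128,
                           host: str = "localhost:8000",
                           model: str = "ensemble") -> urllib.request.Request:
    """Build (but do not send) a request for Triton's HTTP generate endpoint."""
    url = f"http://{host}/v2/models/{model}/generate"
    # Assumption: input names as in the TensorRT-LLM backend's example ensemble.
    body = {"text_input": prompt, "max_tokens": max_tokens}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

With a server running, `urllib.request.urlopen(req)` would send the request; in the TensorRT-LLM backend's example ensemble the generated text comes back in a `text_output` field, which is again worth verifying against your own deployment.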

Now that we have our optimized foundational model, we need to build up the rest of the RAG workflow.

4. Chain server: LangChain and LlamaIndex (container): Required for the RAG pipeline to function. These are tools for chaining LLM components together. LangChain connects the various elements, such as the PDF loader and the vector database, and orchestrates the embedding steps that are crucial to the RAG process.

5. Milvus (container): As an AI-focused vector database, Milvus stands out for managing the vast amounts of data required in AI applications. Milvus is an open-source vector database capable of NVIDIA GPU-accelerated vector searches.

6. e5-large-v2 (container): A text embedding model. When content from the knowledge base is passed to the embedding model (e5-large-v2), it converts the content to vectors (referred to as “embeddings”). These embeddings are stored in the Milvus vector database.

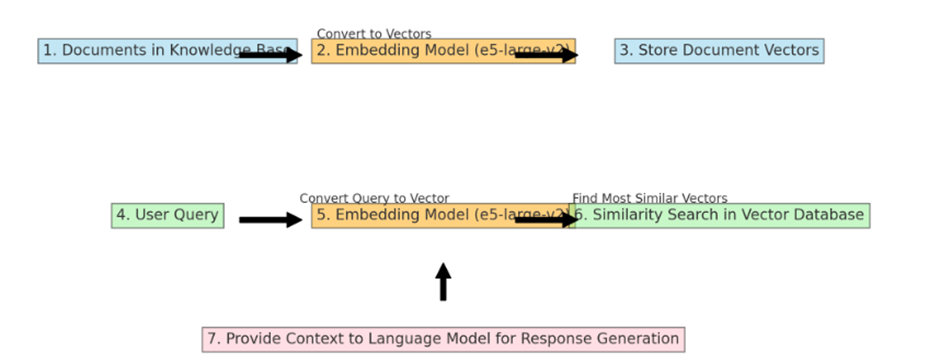

An embedding model such as “e5-large-v2” is used twice in a typical RAG (Retrieval-Augmented Generation) workflow, but for slightly different purposes at each step. Here is how it works:

Using the same embedding model for both documents and user queries ensures that the comparisons and similarity calculations are consistent and meaningful, leading to more relevant retrieval results.

We will discuss how the “provide context to the language model for response generation” step works in the prompt workflow section, but first, let’s look at how the two embedding workflows work.

Converting and Storing Document Vectors: First, an embedding model processes the entire collection of documents in the knowledge base, converting each document into a vector. These vectors are numerical representations of the documents that capture their semantic content in a format computers can process efficiently. Once created, the vectors are stored in the Milvus vector database. This step runs ahead of any queries, usually when the knowledge base is first set up and again whenever it is updated with new information.

Processing User Queries: The same embedding model is also used to process user queries. When a user submits a query, the embedding model converts this query into a vector, much like it did for the documents. The key is that the query and the documents are converted into vectors in the same vector space, allowing for meaningful comparisons.
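Both embedding workflows can be sketched in a few lines under stated assumptions: a bag-of-words embedder built from the corpus stands in for e5-large-v2 (which actually produces dense 1024-dimensional vectors, and whose model card asks for `passage: ` and `query: ` prefixes on inputs), and a Python list stands in for Milvus. The function names are hypothetical. What the sketch shows is the point made above: the same `embed` function is applied to documents and to queries, so their vectors share one space and cosine similarity between them is meaningful.

```python
import math
import re
from typing import Callable, List

Vector = List[float]


def tokenize(text: str) -> List[str]:
    # Lowercase and keep alphanumeric runs only.
    return re.findall(r"[a-z0-9]+", text.lower())


def make_embedder(corpus: List[str]) -> Callable[[str], Vector]:
    """Hypothetical stand-in for e5-large-v2: L2-normalized bag-of-words vectors."""
    vocab = sorted({w for doc in corpus for w in tokenize(doc)})
    index = {w: i for i, w in enumerate(vocab)}

    def embed(text: str) -> Vector:
        vec = [0.0] * len(vocab)
        for w in tokenize(text):
            if w in index:  # words outside the vocabulary are ignored
                vec[index[w]] += 1.0
        norm = math.sqrt(sum(v * v for v in vec)) or 1.0
        return [v / norm for v in vec]

    return embed


def cosine(a: Vector, b: Vector) -> float:
    # Embeddings are L2-normalized (or all zeros), so the dot product is the cosine similarity.
    return sum(x * y for x, y in zip(a, b))


def retrieve(query: str, doc_vectors: List[Vector], docs: List[str],
             embed: Callable[[str], Vector], k: int = 1) -> List[str]:
    # Query workflow: embed the query with the SAME model, then rank the documents.
    q = embed(query)
    ranked = sorted(range(len(docs)), key=lambda i: cosine(q, doc_vectors[i]), reverse=True)
    return [docs[i] for i in ranked[:k]]


if __name__ == "__main__":
    docs = [
        "Milvus stores embedding vectors for similarity search.",
        "Triton Inference Server hosts the Llama 2 model.",
        "PowerScale provides shared storage for Kubernetes.",
    ]
    embed = make_embedder(docs)
    # Document workflow: embed every document once (and again when the knowledge base changes).
    doc_vectors = [embed(d) for d in docs]
    print(retrieve("Which server hosts the model?", doc_vectors, docs, embed))
    # → ['Triton Inference Server hosts the Llama 2 model.']
```

If the query were embedded with a different model than the documents, the vector dimensions would not correspond to the same features and the similarity scores would be meaningless, which is why the workflow reuses one embedding model for both steps.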