Blogs

Blogs by subject matter experts on various aspects of Azure Stack Hub.

The challenging Edge: Dell Technologies to the rescue with Azure Stack Hub – Tactical

Mon, 26 Jul 2021 12:46:04 -0000

Some context for a turbulent environment

On August 31, 2017, Microsoft launched Azure Stack Hub, enabling a true hybrid cloud operating model that extends Azure services on-premises. It was an awesome and long-awaited milestone at the time!

Implementing Azure Stack Hub in our customers’ datacenters under normal circumstances is a pretty straightforward process today if you choose our Dell EMC Integrated System for Microsoft Azure Stack Hub.

But there are certain cases where delivering Azure Stack Hub may be complex (or even impossible), especially in scenarios such as:

- Edge scenarios: semi-permanent or permanent sites with no planned decommissioning, which can include:

  - IoT applications: device provisioning, tracking, and management applications

  - Efficient field operations: disaster relief, humanitarian efforts, embassies

  - Smarter management of mobile fleet assets: utility and maintenance vehicles

- Tactical scenarios: strategic sites stood up for a specific task, temporary or permanent, that can experience:

  - Limited or restricted connectivity: submarines and aircraft

  - Harsh conditions: combat zones, oil rigs, mine shafts

The final outcome in these environments remains the same: provide always-on cloud services everywhere from a minimal set of local resources.

The question is… how do we make this possible?

The answer is: Dell EMC Integrated System for Microsoft Azure Stack Hub – Tactical

Dell Technologies, in partnership with Microsoft and Tracewell Systems, has developed Dell EMC Integrated System for Microsoft Azure Stack Hub – Tactical (aka Azure Stack Hub – Tactical): a unique ruggedized and field-deployable solution for Azure Stack tactical edge environments.

Azure Stack Hub – Tactical extends Azure-based solutions beyond the traditional data center to a wide variety of non-standard environments, providing a local, Azure-consistent cloud for environments with:

- Limited or no network connectivity

- Full mobility or high portability (“2-person lift”) requirements

- Harsh conditions requiring military-specification solutions

- High security requirements, with optional connectivity to Azure Government and Azure Government Secret

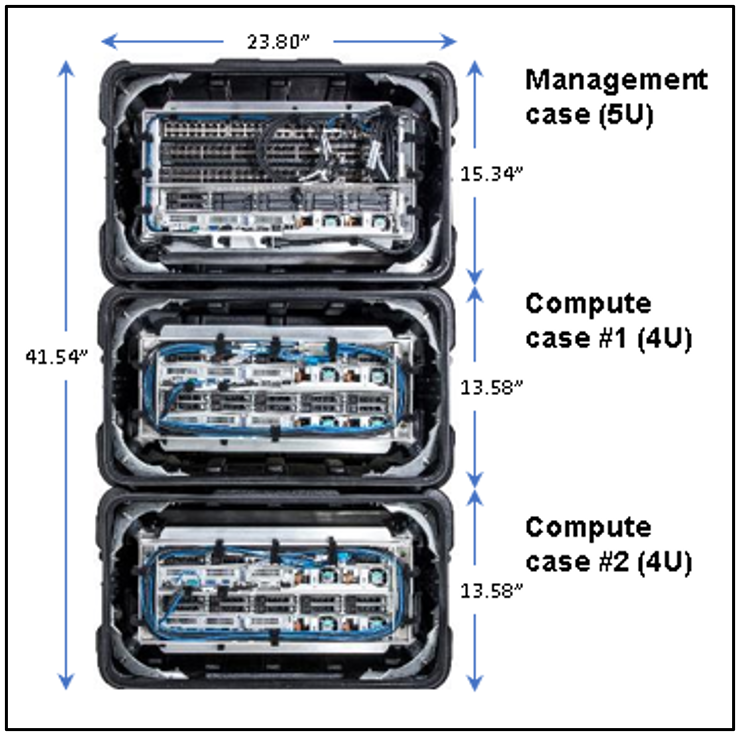

Azure Stack Hub – Tactical is functionally and electrically identical to Azure Stack Hub All-Flash to ensure interoperability. It includes custom-engineered modifications that make the whole solution fit into just three ruggedized cases that are only 23.80 inches wide, 41.54 inches high, and 25.63 inches deep.

The smallest Azure Stack Hub – Tactical configuration comprises one management case plus two compute cases, each of them containing:

- Management case:

  - 1 x T-R640 HLH management server (2U)

  - 1 x N3248TE-ON management switch (1U)

  - 2 x S5248F-ON Top-of-Rack switches (1U each)

  - Total weight: 146 lbs.

- Compute case:

  - 2 x T-R640 servers, based on the Dell EMC PowerEdge R640 All-Flash server adapted for tactical use (2U each)

  - Two configuration options for compute servers:

    - Low:

      - 2 x Intel Xeon Gold 5118 12-core processors

      - 384 GB memory

      - 19.2 TB total raw SSD capacity

    - High:

      - 2 x Intel Xeon Gold 6130 16-core processors

      - 768 GB memory

      - 38.4 TB total raw SSD capacity

  - Total weight: 116 lbs.

- Heater option for extended temperature operation support:

  - The Tactical devices are designed to meet the MIL-STD-810G specification.

  - Dell Technologies, in collaboration with Tracewell Systems, has designed a fully automated heater that, when fully integrated, can provide supplemental heating to the device when needed.

Compute cases can be added up to a maximum of eight, for a total of 16 servers (in 4-node increments), which is the scale unit maximum mandated by Microsoft.
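The case counts and weights above lend themselves to a quick sizing calculation. The sketch below is our own illustration, not a Dell sizing tool. It assumes exactly one management case per deployment and treats each 4-node increment as a pair of compute cases. It uses only the per-case figures listed in this post and ignores the optional heater.

```shell
#!/usr/bin/env bash
# Illustrative sizing sketch for Azure Stack Hub - Tactical, using the per-case
# figures quoted in this post. Assumption: one management case per deployment.
set -euo pipefail

readonly MGMT_CASE_LBS=146
readonly COMPUTE_CASE_LBS=116
readonly SERVERS_PER_COMPUTE_CASE=2

# tactical_config <compute_cases>
# Prints "cases=<n> servers=<n> weight_lbs=<n>", or fails for an invalid count.
tactical_config() {
  local compute_cases=$1
  local servers=$(( compute_cases * SERVERS_PER_COMPUTE_CASE ))
  # Valid sizes: 4 to 16 servers, growing in 4-node (two-case) steps.
  if (( servers < 4 || servers > 16 || servers % 4 != 0 )); then
    echo "invalid: ${compute_cases} compute case(s) -> ${servers} servers" >&2
    return 1
  fi
  local weight=$(( MGMT_CASE_LBS + compute_cases * COMPUTE_CASE_LBS ))
  echo "cases=$(( compute_cases + 1 )) servers=${servers} weight_lbs=${weight}"
}

for n in 2 4 6 8; do
  tactical_config "$n"
done
```

With two compute cases, the function prints `cases=3 servers=4 weight_lbs=378`, which is the three-case minimum configuration described above. With eight compute cases, it reaches 16 servers and 1,074 lbs.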

You can read the full specifications here.

Azure Stack Hub – Tactical is a turnkey, end-to-end engineered solution that is designed, tested, and sustained through the entire lifespan of all its hardware and software components.

It includes non-disruptive operations and automated full-stack lifecycle management for ongoing component maintenance, fully coordinated with Microsoft’s update process.

Customers also benefit from a simplified one-call support model across all solution components.

Conclusion

Desperate “edge-cuts” must have desperate “tactical-cures”, and that is exactly what Dell EMC Integrated System for Microsoft Azure Stack Hub – Tactical delivers to our customers for edge environments and extreme conditions.

Azure Stack Hub – Tactical resolves the challenges of providing Azure cloud services everywhere by allowing our customers to add/remove deployments with relative ease through an automated, repeatable, and predictable process requiring minimal local IT resources.

Thanks for reading and stay tuned for more blog updates in this space by visiting Info Hub!

GPU-Accelerated AI and ML Capabilities

Mon, 14 Dec 2020 15:37:06 -0000

Dell EMC Integrated System for Microsoft Azure Stack Hub has been extending Microsoft Azure services to customer-owned data centers for over three years. Our platform has enabled organizations to create a hybrid cloud ecosystem that drives application modernization and to address business concerns around data sovereignty and regulatory compliance.

Dell Technologies, in collaboration with Microsoft, is excited to announce upcoming enhancements that will unlock valuable, real-time insights from local data using GPU-accelerated AI and ML capabilities. Actionable information can be derived from large on-premises data sets at the intelligent edge without sacrificing security.

Partnership with NVIDIA

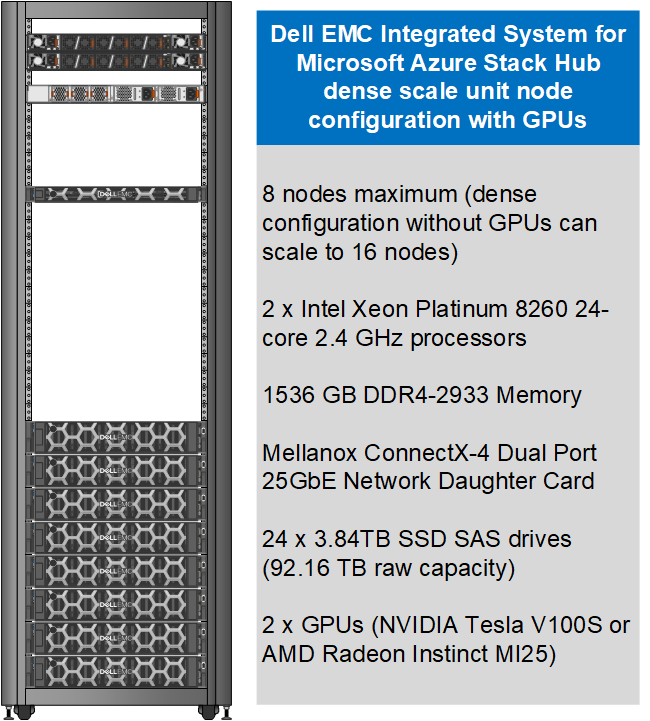

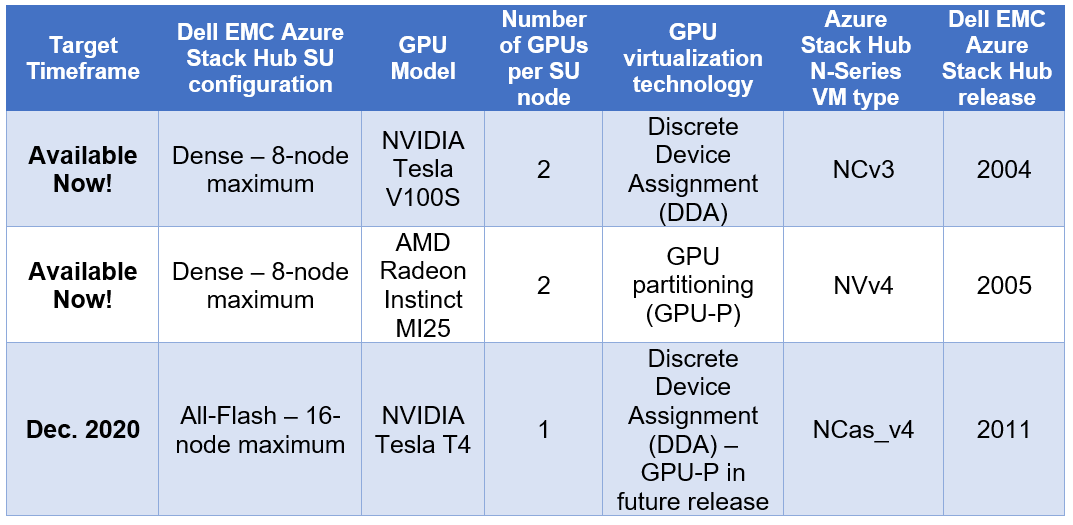

Today, customers can order our Azure Stack Hub dense scale unit configuration with NVIDIA Tesla V100S GPUs for running compute-intensive AI processes like inferencing, training, and visualization from virtual machine or container-based applications. Some customers choose to run Kubernetes clusters on their hardware-accelerated Azure Stack Hub scale units to process and analyze data sent from IoT devices or Azure Stack Edge appliances. Powered by the Dell EMC PowerEdge R840 rack server, these NVIDIA Tesla V100S GPUs use Discrete Device Assignment (DDA), also known as GPU pass-through, to dedicate one or more GPUs to an Azure Stack Hub NCv3 VM.

The following figure illustrates the resources installed in each GPU-equipped Azure Stack Hub dense configuration scale unit node.

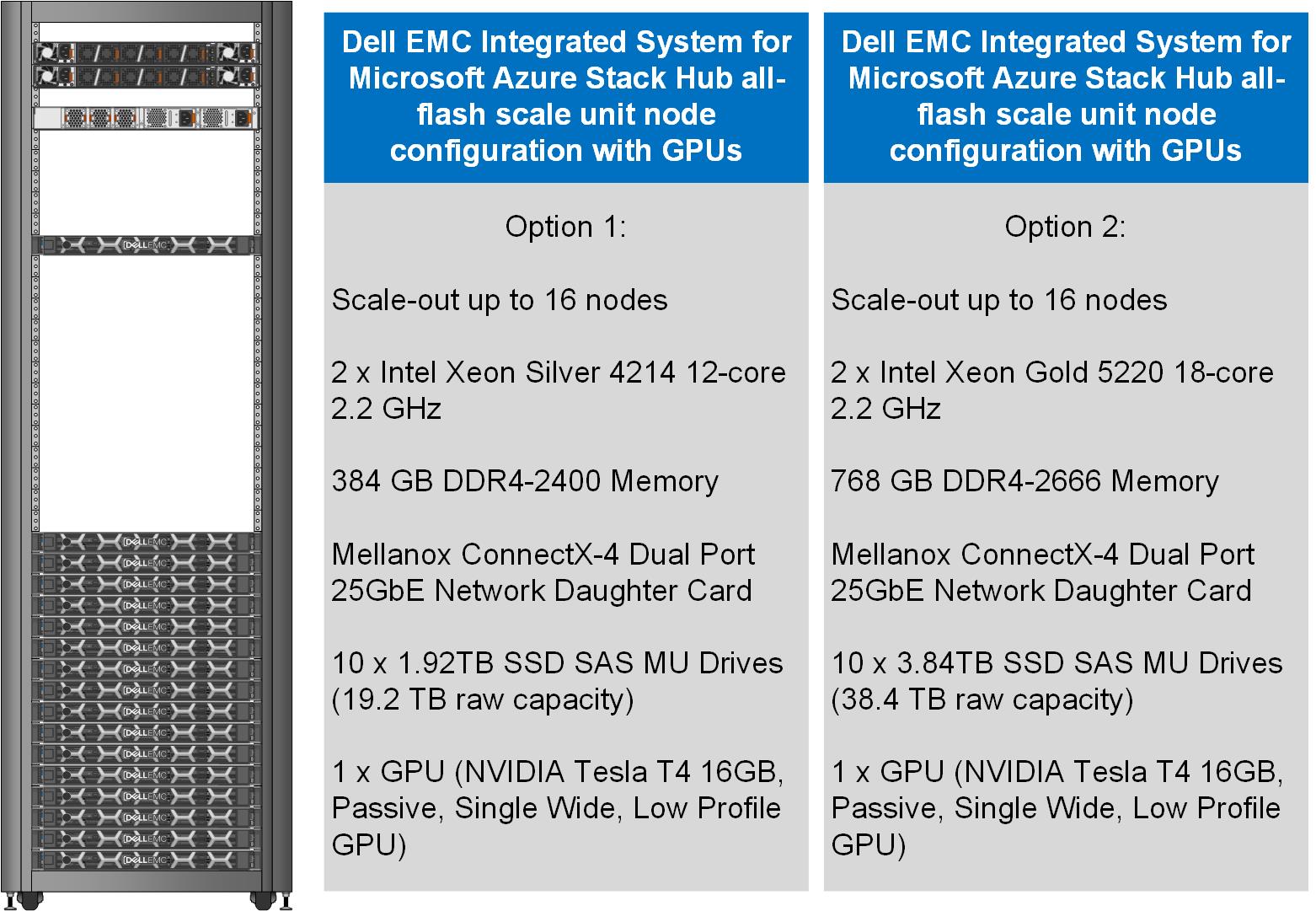

This month, our Dell EMC Azure Stack Hub release 2011 will also support the NVIDIA T4 GPU – a single-slot, low-profile adapter powered by NVIDIA Turing Tensor Cores. These GPUs are perfect for accelerating diverse cloud-based workloads, including light machine learning, inference, and visualization. These adapters can be ordered with Dell EMC Azure Stack Hub all-flash scale units powered by Dell EMC PowerEdge R640 rack servers. Like the NVIDIA Tesla V100S, these GPUs use DDA to dedicate one adapter’s powerful capabilities to a single Azure Stack Hub NCas_v4 VM. A future Azure Stack Hub release will also enable GPU partitioning on the NVIDIA T4.

The following figure illustrates the resources installed in each GPU-equipped Azure Stack Hub all-flash configuration scale unit node.

Partnership with AMD

We are also pleased to announce a partnership with AMD to deliver GPU capabilities in our Dell EMC Integrated System for Microsoft Azure Stack Hub. Customers can order our dense scale unit configuration today with AMD Radeon Instinct MI25 GPUs, which are aimed at graphics-intensive visualization workloads like simulation, CAD applications, and gaming. The MI25 uses GPU partitioning (GPU-P) technology to allow users of an Azure Stack Hub NVv4 VM to consume only a portion of the GPU’s resources, based on their workload requirements.

The following table is a summary of our hardware acceleration capabilities.

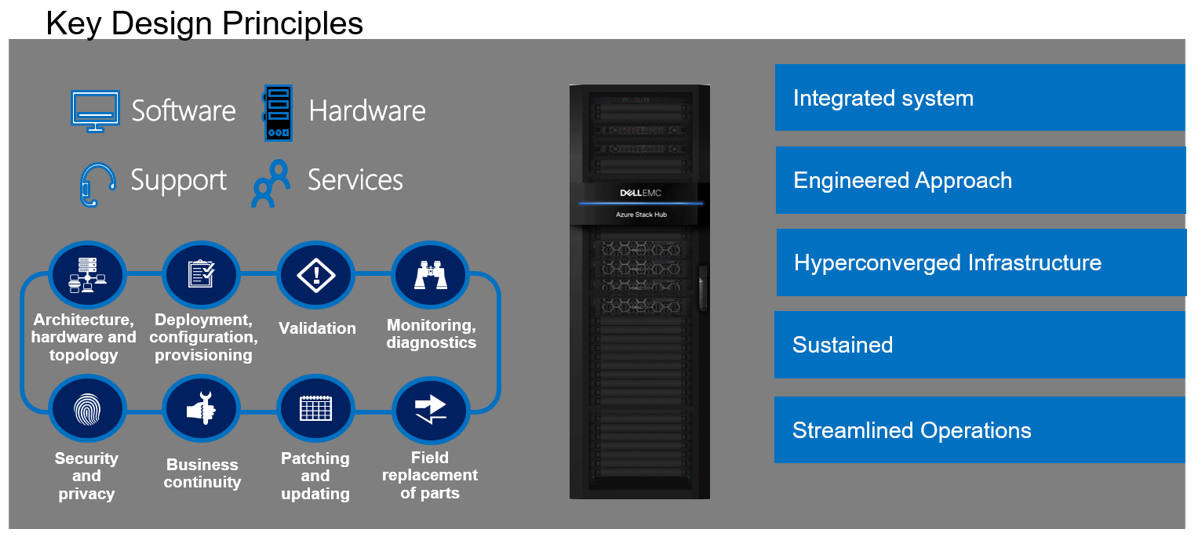
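To keep the three GPU options straight, here is the mapping from this post expressed as a small shell lookup. The function name and output format are illustrative assumptions. The values themselves (scale unit configuration, sharing mode, and VM series) come from the paragraphs above.

```shell
#!/usr/bin/env bash
# Illustrative lookup of the GPU options described in this post.
# DDA dedicates whole GPUs to one VM; GPU-P lets a VM consume a slice of a GPU.
gpu_option() {
  case "$1" in
    V100S) echo "config=dense sharing=DDA vm=NCv3" ;;
    T4)    echo "config=all-flash sharing=DDA vm=NCas_v4" ;;  # GPU-P planned for a future release
    MI25)  echo "config=dense sharing=GPU-P vm=NVv4" ;;
    *)     echo "unknown GPU: $1" >&2; return 1 ;;
  esac
}

for gpu in V100S T4 MI25; do
  printf '%-6s %s\n' "$gpu" "$(gpu_option "$gpu")"
done
```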

An engineered approach

Following our stringent engineered approach, Dell Technologies goes far beyond treating GPUs as just additional hardware components in the Dell EMC Integrated System for Microsoft Azure Stack Hub portfolio. We apply our pedigree as leaders in appliance-based solutions to the entire lifecycle of all our scale unit configurations. The dense and all-flash scale unit configurations with integrated GPUs are designed around best practices and use cases that are specific to Azure-based workloads, rather than workloads running on traditional virtualization platforms. Dell Technologies is also committed to ensuring a simplified experience for initial deployment, patching and updates, support, and streamlined operations and monitoring for these new configurations.

Additional considerations

There are a couple of additional details worth mentioning about our new Azure Stack Hub dense and all-flash scale unit configurations with hardware acceleration:

- The use of the GPU-backed N-Series VMs in Azure Stack Hub for compute-intensive AI and ML workloads is still in preview. Dell Technologies is very interested in speaking with customers about their use cases and workloads supported by this configuration. Please contact us at mhc.preview@dell.com to speak with one of our engineering technologists.

- The Dell EMC Integrated System for Microsoft Azure Stack Hub configurations with GPUs can be delivered fully racked and cabled in our Dell EMC rack. Customers can also elect to have the scale unit components re-racked and cabled in their own existing cabinets with the assistance of Dell Technologies Services.

Resources for further study

- At the time of publishing this blog post, only the NCv3 and NVv4 VMs are available in the Azure Stack Hub marketplace. The NCas_v4 VM is currently not visible in the portal. See the Azure Stack Hub User Documentation for more information on these VM sizes.

- Customers may want to explore the Train Machine Learning (ML) model at the edge design pattern in the Azure Hybrid Documentation. This may prove to be a good starting point for putting this technology to work for their organization.

- Customers considering running AI and ML workloads on Dell EMC Integrated System for Microsoft Azure Stack Hub can also greatly benefit from storage-as-a-service with Dell EMC PowerScale. PowerScale can help enable faster training and validation of AI models, improve model accuracy, drive higher GPU utilization, and increase data science productivity. Visit Artificial Intelligence with Dell EMC PowerScale for more information.

Azure Stack with PowerScale

Mon, 17 Aug 2020 18:44:45 -0000

Dell EMC Integrated System for Microsoft Azure Stack Hub has been at the forefront in bringing Azure to customer datacenters, enabling customers to operate their own region of Azure in a secure environment that addresses their data sovereignty and performance needs.

As data growth explodes at the edge, many of our customers are looking to process petabyte (PB)-scale data for file and image/video processing, analytics, simulation, and learning. Because Azure Stack Hub is built on hyperconverged infrastructure (HCI), external storage is critical to handling this growth in data. Additionally, the Azure Files storage service is currently not supported on Azure Stack Hub, which matters for applications that use file storage over CIFS/NFS today.

As we set out to identify the right storage subsystem that met our customers’ needs (with performance, multi-tenancy, multi-petabyte scale-out storage, and advanced data management features), we did not have to look far. Dell Technologies has a large product portfolio that enables us not only to integrate with other infrastructures but to innovate in other areas to deliver the Azure consistent experience our customers expect.

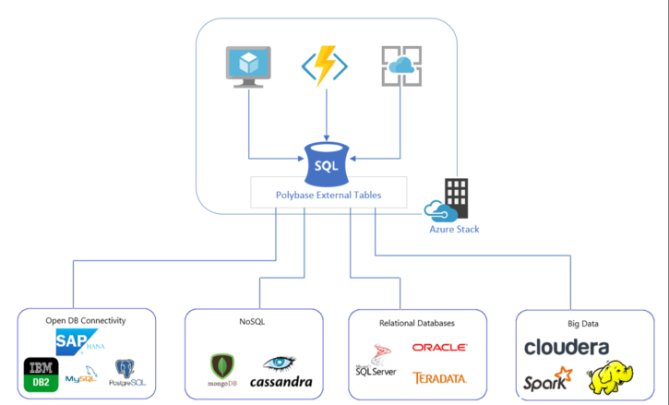

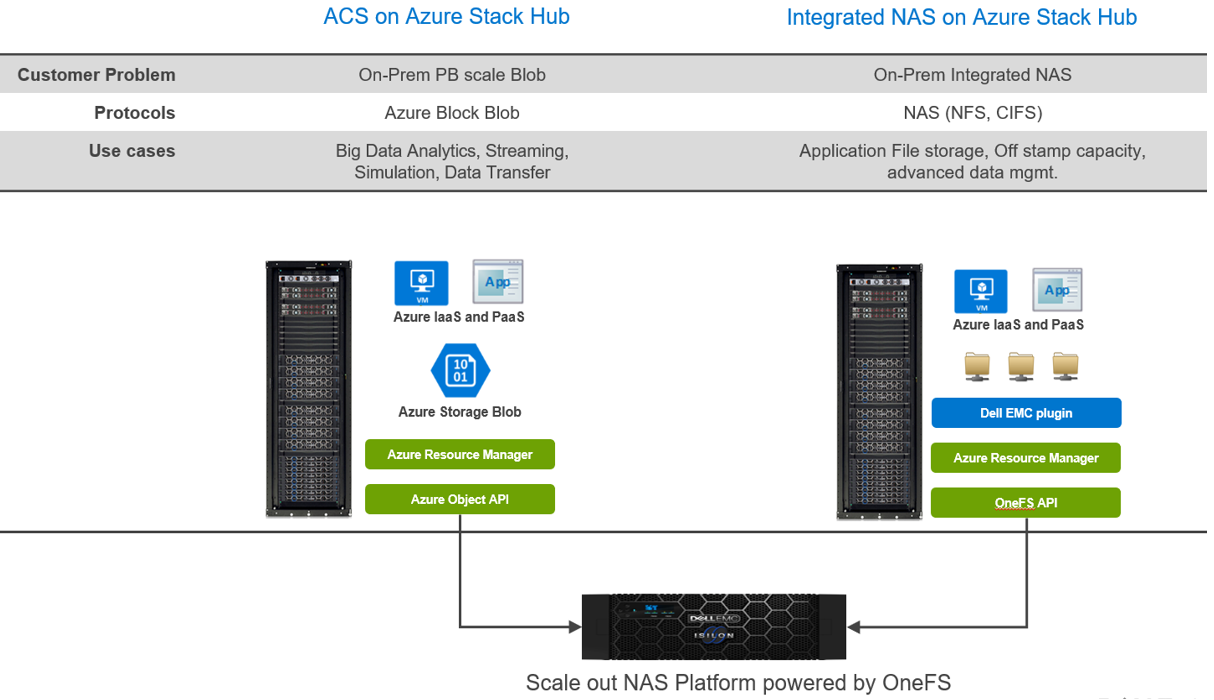

With newly announced Azure Stack Hub integration with Dell EMC PowerScale, customers can run their Azure IaaS and PaaS on-premises while connecting to data that is generated and stored locally. In the context of Azure consistency, depending on your application needs, there are two ways to consume this storage.

- Azure Consistent Storage (ACS): Applications that are using Azure Block Blob storage

- Integrated NAS (File Storage): NFS and CIFS

Here are some highlights about the choices and differences:

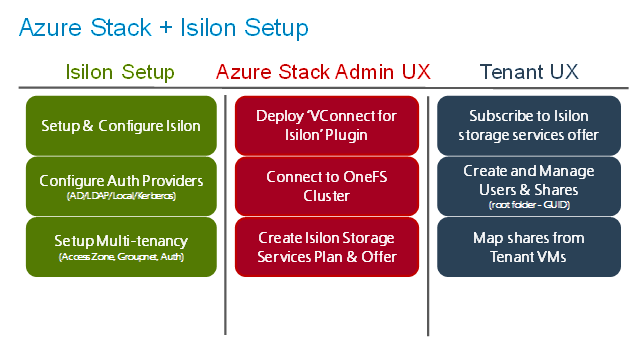
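As a concrete illustration of the Integrated NAS path, a Linux tenant VM typically consumes an NFS export through an /etc/fstab entry. The sketch below only builds that entry. The host name, export path, and mount point are hypothetical placeholders, because the real values depend on what the Azure Stack Hub cloud administrator offers.

```shell
#!/usr/bin/env bash
# Sketch: build an /etc/fstab line for mounting a PowerScale NFS export on a
# tenant VM. Host, export path, and mount point below are hypothetical.
set -euo pipefail

# nfs_fstab_entry <nas_host> <export_path> <mount_point>
# _netdev tells the system to wait for networking before mounting.
nfs_fstab_entry() {
  local host=$1 export_path=$2 mount_point=$3
  printf '%s:%s %s nfs defaults,_netdev 0 0\n' "$host" "$export_path" "$mount_point"
}

entry=$(nfs_fstab_entry powerscale.example.local /ifs/tenant01/data /mnt/data)
echo "$entry"
# On the VM (as root), the entry could then be persisted and mounted:
#   mkdir -p /mnt/data && echo "$entry" >> /etc/fstab && mount /mnt/data
```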

Regardless of your protocol of choice, two personas are involved:

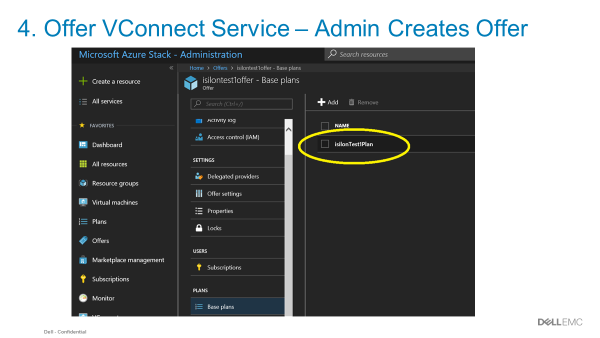

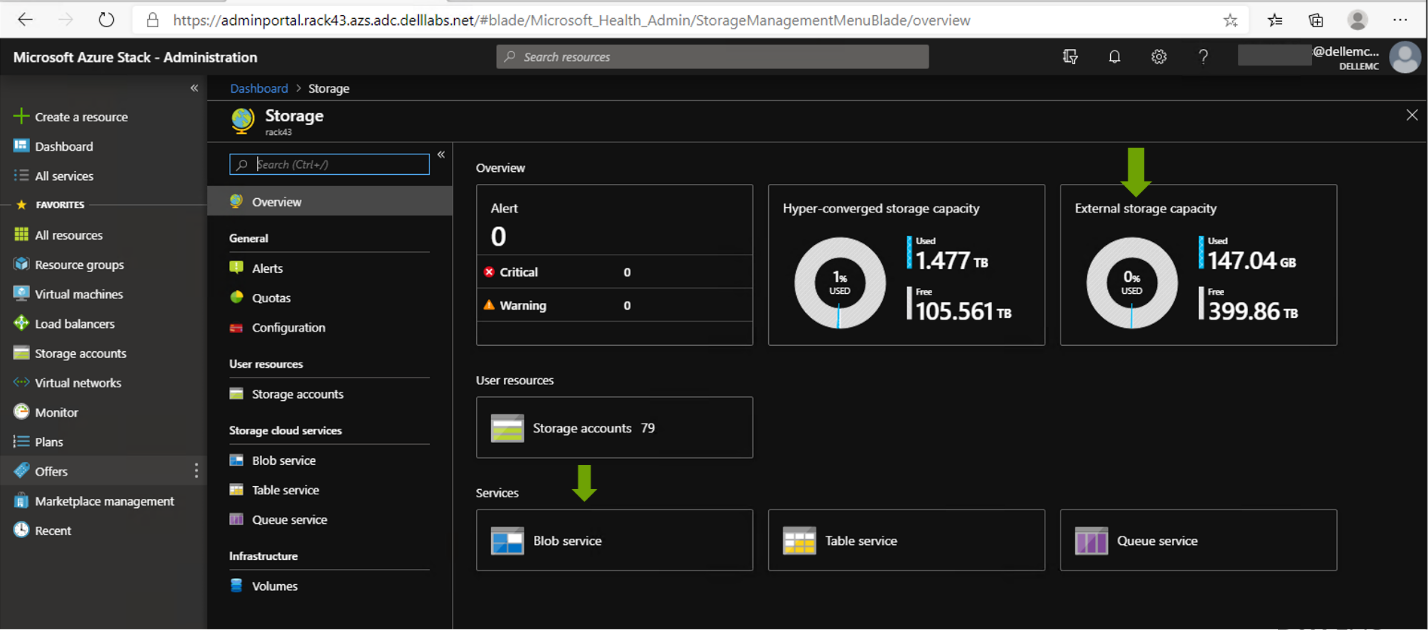

- The Azure Stack Hub Cloud administrator (screen below) is responsible for creating offers, quotas, and plans to offer the underlying storage, via subscriptions, to Azure tenants.

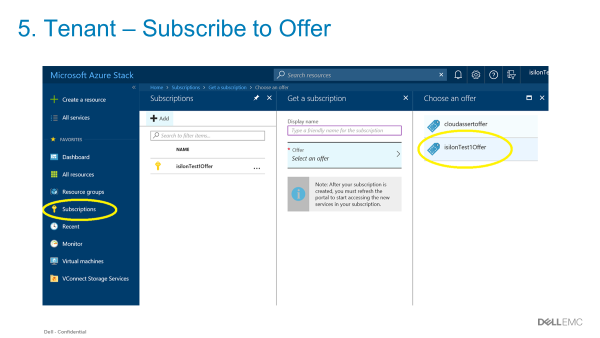

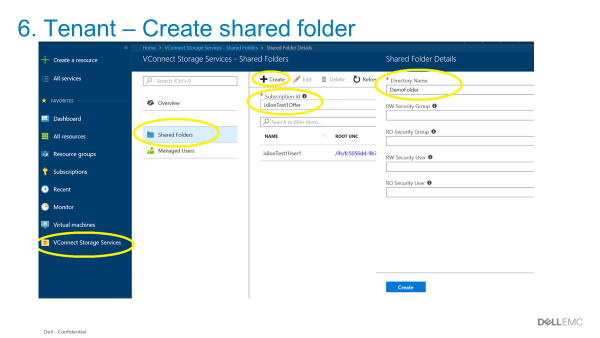

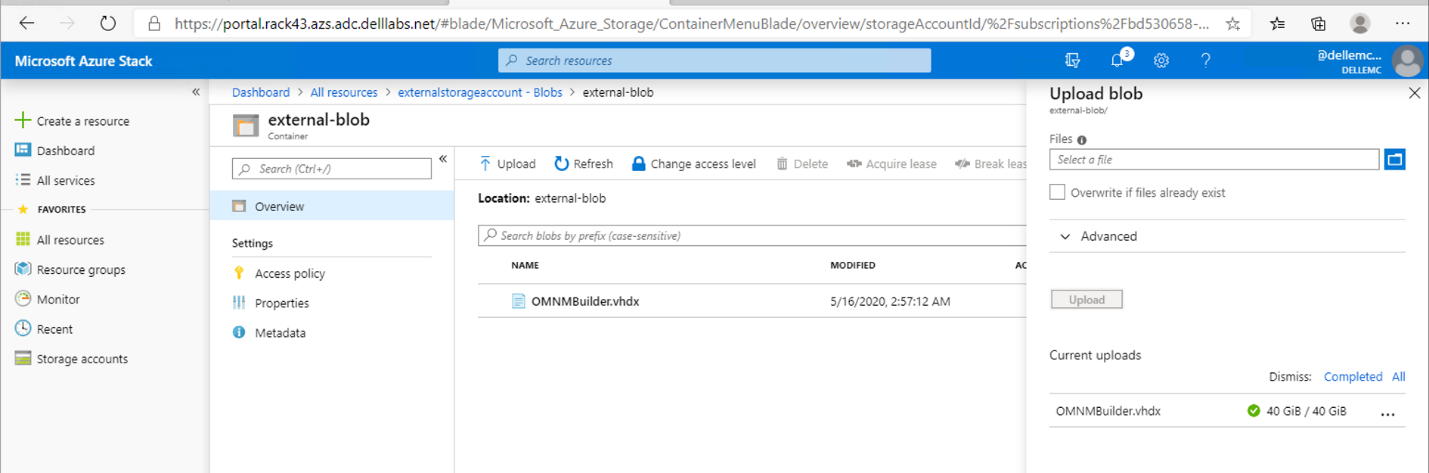

- The Azure Stack Hub tenant can consume storage and be metered and billed consistently with other Azure services, all without having to manage anything in PowerScale.

With this strategy, our customers can tap into external PB storage to consume Azure Block Blob or Files via CIFS/NFS while maintaining the Azure consistent experience. Additionally, for customers looking to keep their applications in the public cloud while maintaining their data on-premises, Dell Technologies Cloud PowerScale extends OneFS running on-prem to Azure.

To read more about it, see this solution brief:

Dell Technologies Cloud PowerScale: Microsoft Azure

With the work Dell Technologies has been doing with Azure and Azure Stack Hub, your data is secure and compliant. You also have the choice to run your application in Azure or Azure Stack Hub and connect to your on-prem data without sacrificing bandwidth or latency.

Automated Detection of Server Configuration Drift

Mon, 17 Aug 2020 18:44:45 -0000

Security and compliance are key design principles of Microsoft Azure Stack Hub. The Dell EMC Integrated System for Microsoft Azure Stack Hub is engineered to meet our customers’ compliance, regulatory, and policy requirements.

The security posture of the Dell EMC Integrated System for Microsoft Azure Stack Hub is built into our automated lifecycle management. Our goal is to extend and complement Microsoft’s strategy of baselining and remediating its security posture with a comprehensive drift detection and remediation strategy for all of our Azure Stack Hub elements.

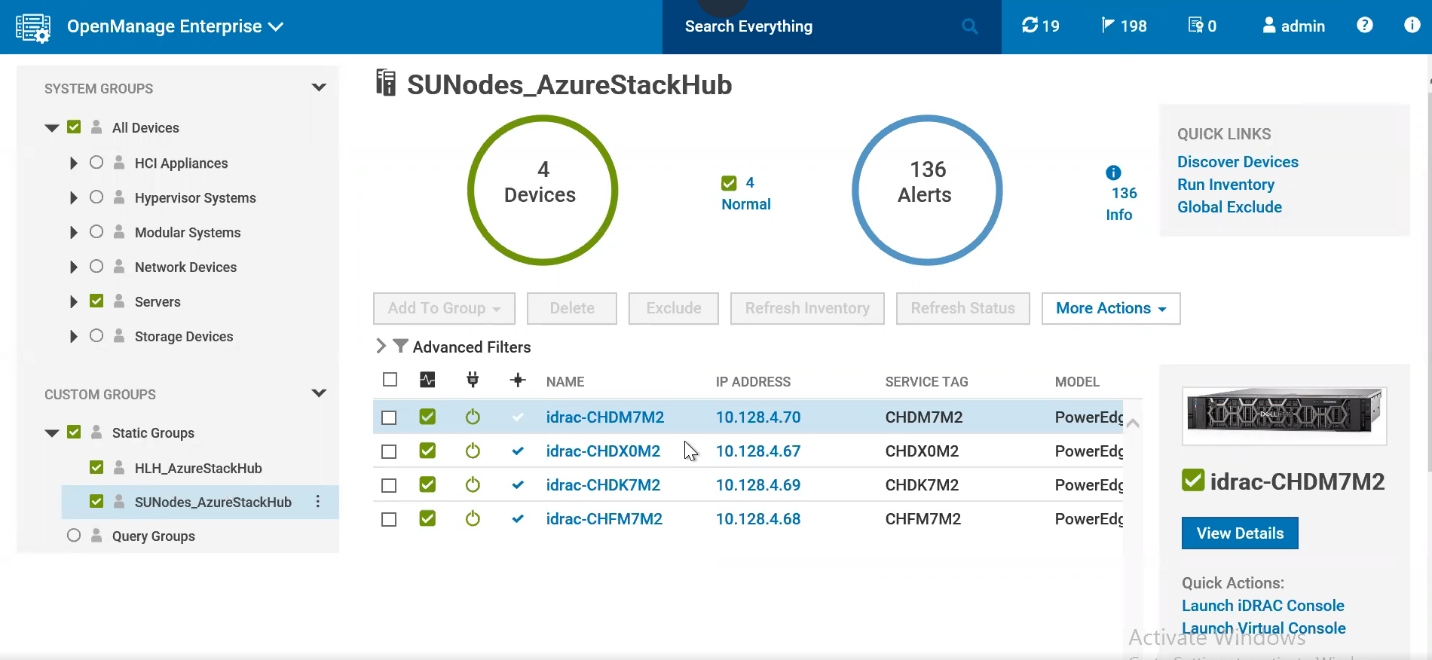

The Automated Server Config Drift Detection feature, enabled on Dell EMC OpenManage Enterprise as part of the Dell EMC Patch and Update Automation 2004 release, ensures configuration compliance as instituted by Microsoft and Dell EMC.

The feature has three key outcomes: it monitors and detects, notifies about, and remediates server configuration drift on Azure Stack Hub.

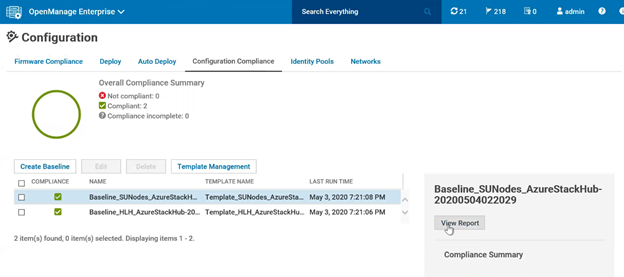

- Compliance monitoring is kicked off by the automated discovery of HLH and scale unit nodes in Dell EMC OpenManage Enterprise (Figure 1, below).

- Configuration integrity is maintained by enabling compliance baseline templates for the HLH and scale unit nodes in OpenManage Enterprise to track drift (Figure 2).

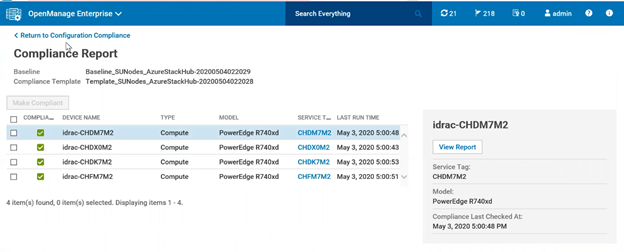

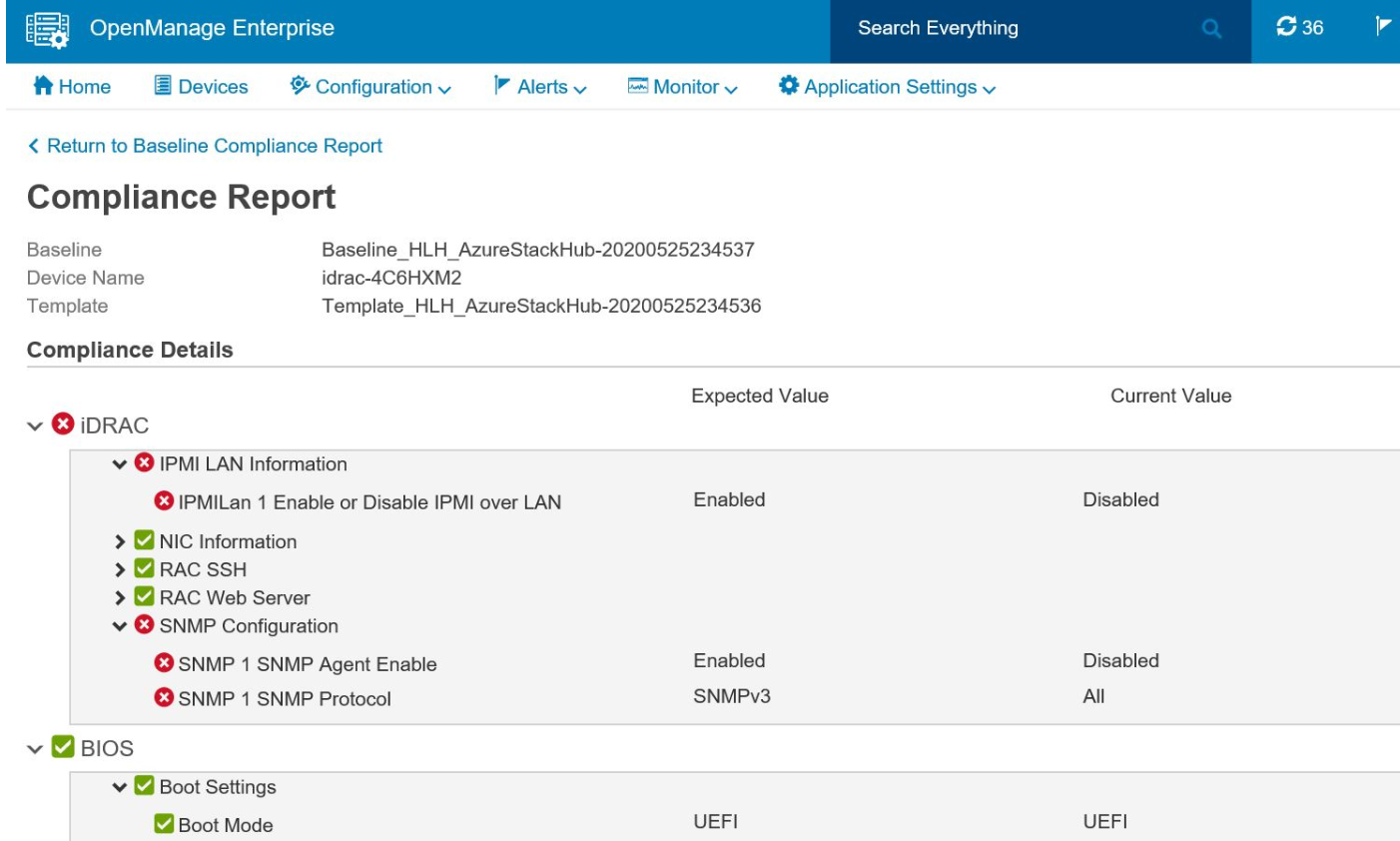

- Customers can view compliance reports that show whether server settings conform to the configuration baseline (Figure 3).

- Drift from any of the server settings applied at initial deployment on the HLH or scale unit nodes is automatically detected, and the node is tagged as Non-Compliant (Figure 4).

- Server drift notification alerts generated in OpenManage Enterprise are proactively sent through Dell EMC SupportAssist Enterprise (SAE) to Dell Technologies support.

- Customers can call Dell EMC Support to remediate non-compliance so that the health and compliance status of their Azure Stack Hub stays green.

Figure 1: Monitor HLH and SU nodes discovered on OpenManage Enterprise for alerts

Figure 2: Configuration Compliance status of HLH and SU nodes against configuration baseline

Figure 3: Compliance report indicating SU Node level Compliance status

Figure 4: Drill down view of Compliance report in case of Compliance failures
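To make the core idea of drift detection concrete, the sketch below compares a node's current settings against a baseline and reports a Compliant or Non-Compliant verdict. This is only an illustration of the concept, not how OpenManage Enterprise implements the feature. The key=value file format and the setting names used in it are hypothetical.

```shell
#!/usr/bin/env bash
# Illustrative drift check: compare current settings against a baseline.
# NOT the OpenManage Enterprise implementation; the file format is hypothetical.
set -euo pipefail

# check_drift <baseline_file> <current_file>
# Both files hold "Setting=Value" lines. Prints each drifted setting and a
# final verdict; returns 1 when any setting has drifted.
check_drift() {
  local baseline=$1 current=$2 key expected actual drift=0
  [[ -r "$current" ]] || { echo "cannot read: $current" >&2; return 2; }
  while IFS='=' read -r key expected || [[ -n "$key" ]]; do
    [[ -z "$key" || "$key" == \#* ]] && continue   # skip blank and comment lines
    # Exact key match; everything after the first '=' is the value.
    actual=$(awk -F= -v k="$key" '$1 == k { sub(/^[^=]*=/, ""); print; exit }' "$current")
    if [[ "$actual" != "$expected" ]]; then
      echo "DRIFT ${key}: expected '${expected}', found '${actual:-<missing>}'"
      drift=1
    fi
  done < "$baseline"
  if (( drift )); then
    echo "Non-Compliant"
    return 1
  fi
  echo "Compliant"
}
```

Returning a non-zero status on drift lets the check feed an alerting step, which mirrors the notify stage described above.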

Future updates to the compliance baseline are seamlessly applied by means of the Dell EMC Patch and Update Automation as customers update to the latest Dell EMC Customer Toolkit.

Stay tuned as we move the needle towards a well-rounded compliance experience for our customers with similar features on ToR and Management switches in upcoming releases.

Running containerized applications on Microsoft Azure's hybrid ecosystem - Introduction

Mon, 17 Aug 2020 18:48:17 -0000

Introduction

A vast array of services and tooling has evolved in support of microservices and container-based application development patterns. One indispensable asset in the technology value stream found in most of these patterns is Kubernetes (K8s). Technology professionals like K8s because it has become the de facto standard for container orchestration. Business leaders like it for its potential to help disrupt their chosen marketplace. However, deploying and maintaining a Kubernetes cluster and its complementary technologies can be a daunting task for the uninitiated.

Enter Microsoft Azure’s portfolio of services, tools, and documented guidance for developing and maintaining containerized applications. Microsoft continues to invest heavily in simplifying this application modernization journey without sacrificing features and functionality. The differentiators of the Microsoft approach are two-fold. First, the applications can be hosted wherever the business requirements dictate – i.e. public cloud, on-premises, or spanning both. More importantly, there is a single control plane, Azure Resource Manager (ARM), for managing and governing these highly distributed applications.

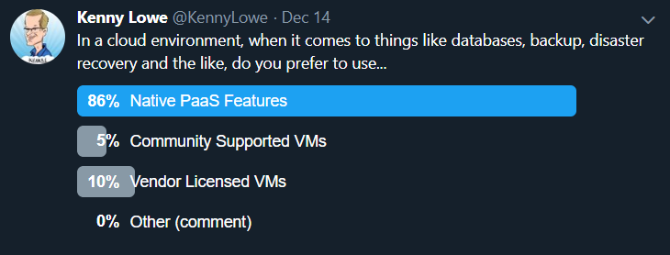

In this blog series, we share the results of hands-on testing in the Dell Technologies labs with container-related services that span both Public Azure and on-premises with Azure Stack Hub. Azure Stack Hub provides a discrete instance of ARM, which allows us to leverage a consistent control plane even in environments with no connectivity to the Internet. It might be helpful to review articles rationalizing the myriad of announcements made at Microsoft Ignite 2019 about Microsoft’s hybrid approach from industry experts like Kenny Lowe, Thomas Maurer, and Mary Branscombe before delving into the hands-on activities in this blog.

Services available in Public Azure

Azure Kubernetes Service (AKS) is a fully managed platform service hosted in Public Azure. AKS makes it simple to define, deploy, debug, and upgrade even the most complex Kubernetes applications. With AKS, organizations can accelerate past the effort of deploying and maintaining the clusters to leveraging the clusters as target platforms for their CI/CD pipelines. DevOps professionals only need to concern themselves with the management and maintenance of the K8s agent nodes and leave the management of the master nodes to Public Azure.

AKS is just one of Public Azure’s container-related services. Azure Monitor, Azure Active Directory, and Kubernetes role-based access controls (RBAC) provide the critical governance needed to successfully operate AKS. Serverless Kubernetes using Azure Container Instances (ACI) can add compute capacity without any concern about the underlying infrastructure. In fact, ACI can be used to elastically burst from AKS clusters when workload demand spikes. Azure Container Registry (ACR) delivers a fully managed private registry for storing, securing, and replicating container images and artifacts. This is perfect for organizations that do not want to store container images in publicly available registries like Docker Hub.

Leveraging the hybrid approach

Microsoft is working diligently to deliver the fully managed AKS resource provider to Azure Stack Hub. The first step in this journey is to use AKS engine to bootstrap K8s clusters on Azure Stack Hub. AKS engine provides a command-line tool that helps you create, upgrade, scale, and maintain clusters. Customers interested in running production-grade and fully supported self-managed K8s clusters on Azure Stack Hub will want to use AKS engine for deployment and not the Kubernetes Cluster (preview) marketplace gallery item. This marketplace item is only for demonstration and POC purposes.

AKS engine can also upgrade and scale the K8s cluster it deployed on Azure Stack Hub. However, unlike the fully managed AKS in Public Azure, the master nodes and the agent nodes need to be maintained by the Azure Stack Hub operator. In other words, this is not a fully managed solution today. The same warning applies to the self-hosted Docker Container Registry that can be deployed to an on-premises Azure Stack Hub via a QuickStart template. Unlike ACR in Public Azure, Azure Stack Hub operators must consider backup and recovery of the images. They would also need to deploy new versions of the QuickStart template as they become available to upgrade the OS or the container registry itself.

If no requirements prohibit the sending of monitoring data to Public Azure and the proper connectivity exists, Azure Monitor for containers can be leveraged for feature-rich monitoring of the K8s clusters deployed on Azure Stack Hub with AKS engine. In addition, Azure Arc for Data Services can be leveraged to run containerized images of Azure SQL Managed Instances or Azure PostgreSQL Hyperscale on this same K8s cluster. The Azure Monitor and Azure Arc for Data Services options would not be available in submarine scenarios where there would be no connectivity to Azure whatsoever. In the disconnected scenario, the customer would have to determine how best to monitor and run data services on their K8s cluster independent of Public Azure.

Here is a summary of the articles in this blog post series:

Part 1: Running containerized applications on Microsoft Azure’s hybrid ecosystem – Provides an overview of the concepts covered in the blog series.

Part 2: Deploy K8s Cluster into an Azure Stack Hub user subscription – Set up an AKS engine client VM, deploy a cluster using AKS engine, and onboard the cluster to Azure Monitor for containers.

Part 3: Deploy a self-hosted Docker Container Registry – Use one of the Azure Stack Hub QuickStart templates to set up a container registry and push images to this registry. Then, pull these images from the registry into the K8s cluster deployed with AKS engine in Part 2.

Deploy K8s clusters into Azure Stack Hub user subscriptions

Mon, 24 Jul 2023 15:06:01 -0000

Welcome to Part 2 of a three-part blog series on running containerized applications on Microsoft Azure’s hybrid ecosystem. Part 1 provided the conceptual foundation and necessary context for the hands-on efforts documented in parts 2 and 3. This article details the testing we performed with AKS engine and Azure Monitor for containers in the Dell Technologies labs.

Here are the links to all the series articles:

Part 1: Running containerized applications on Microsoft Azure’s hybrid ecosystem – Provides an overview of the concepts covered in the blog series.

Part 2: Deploy K8s Cluster into an Azure Stack Hub user subscription – Set up an AKS engine client VM, deploy a cluster using AKS engine, and onboard the cluster to Azure Monitor for containers.

Part 3: Deploy a self-hosted Docker Container Registry – Use one of the Azure Stack Hub QuickStart templates to set up a container registry and push images to this registry. Then, pull these images from the registry into the K8s cluster deployed with AKS engine in Part 2.

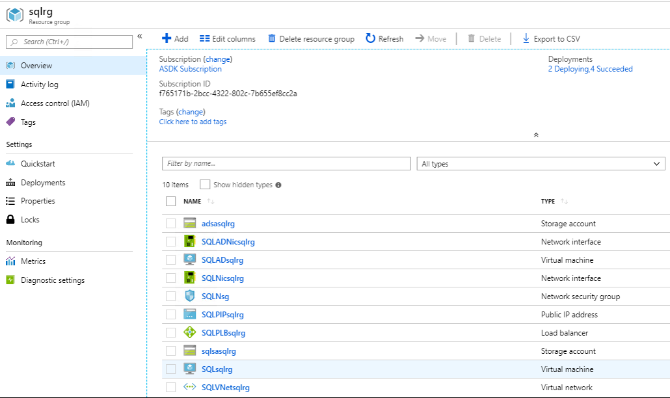

Introduction to the test lab

Before proceeding with the results of the testing with AKS engine and Azure Monitor for containers, we’d like to begin by providing a tour of the lab we used for testing all the container-related capabilities on Azure Stack Hub. Please refer to the following two tables. The first table lists the Dell EMC for Microsoft Azure Stack hardware and software. The second table details the various resource groups we created to logically organize the components in the architecture. The resource groups were provisioned into a single user subscription.

| Scale unit information | Value |
|---|---|
| Number of scale unit nodes | 4 |
| Total memory capacity | 1 TB |
| Total storage capacity | 80 TB |
| Logical cores | 224 |
| Azure Stack Hub version | 1.1908.8.41 |
| Connectivity mode | Connected to Azure |
| Identity store | Azure Active Directory |

| Resource group | Purpose |
|---|---|
| demoaksengine-rg | Contains the resources associated with the client VM running AKS engine. |
| demoK8scluster-rg | Contains the cluster artifacts deployed by AKS engine. |
| demoregistryinfra-rg | Contains the storage account and key vault supporting the self-hosted Docker Container Registry. |
| demoregistrycompute-rg | Contains the VM running Docker Swarm and the self-hosted container registry containers, plus the other supporting resources for networking and storage. |
| kubecodecamp-rg | Contains the VM and other resources used for building the Sentiment Analysis application that was instantiated on the K8s cluster. |

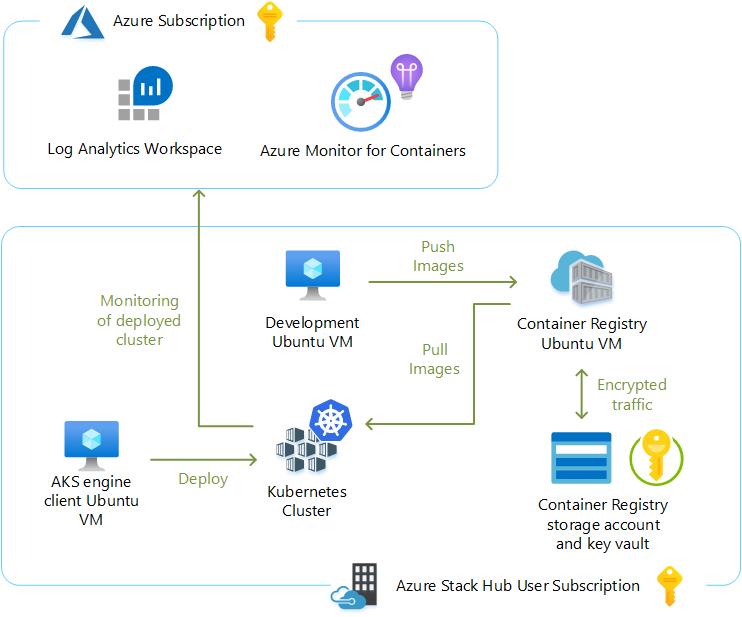

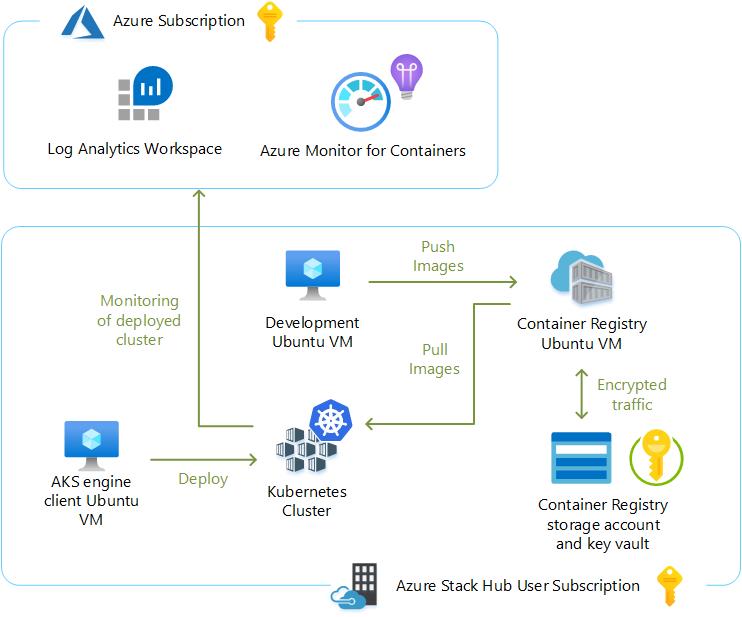

Please also refer to the following graphic for a high-level overview of the architecture.

Prerequisites

All the step-by-step instructions and sample artifacts used to deploy AKS engine can be found in the Azure Stack Hub User Documentation and GitHub. We do not intend to repeat what has already been written – unless we want to specifically highlight a key concept. Instead, we will share lessons we learned while following along in the documentation.

We decided to test the supported scenario whereby AKS engine deploys all cluster artifacts into a new resource group rather than deploying the cluster to an existing VNET. We also chose to deploy a Linux-based cluster using the kubernetes-azurestack.json API model file found in the AKS engine GitHub repository. A Windows-based K8s cluster cannot be deployed by AKS engine to Azure Stack Hub at the time of this writing (November 2019). Do not attempt to use the kubernetes-windows.json file in the GitHub repository, as this will not be fully functional.

Addressing the prerequisites for AKS engine was very straightforward:

- Ensured that the Azure Stack Hub system was completely healthy by running Test-AzureStack.

- Verified sufficient available memory, storage, and public IP address capacity on the system.

- Verified that the quotas embedded in the user subscription’s offers provided enough capacity for all the Kubernetes capabilities.

- Used marketplace syndication to download the appropriate gallery items. We made sure to match the version of AKS engine to the correct version of the AKS Base Image. In our testing, we used AKS engine v0.43.0, which depends on version 2019.10.24 for the AKS Base Image.

- Collected the name and ID of the Azure Stack Hub user subscription created in the lab.

- Created the Azure AD service principal (SPN) through the Public Azure portal. The documentation also links to programmatic methods for creating the SPN.

- Assigned the SPN to the Contributor role of the user subscription.

- Generated the SSH public key and private key file using PuTTY Key Generator (PuTTYgen) for each of the Linux VMs used during testing. We associated the PPK file extension with PuTTYgen on the management workstation so that saved private key files open in PuTTYgen for easy copying and pasting. We used these keys with PuTTY and WinSCP throughout the testing.
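The quota checks above come down to simple arithmetic. The sketch below adds up the vCPUs a cluster deployment needs and compares the total against the subscription quota. The node counts, the VM sizes, and the quota value of 20 are all illustrative assumptions, so substitute the masterProfile and agentPoolProfiles values from your own API model. Standard_D2_v2 has 2 vCPUs and Standard_DS1_v2 has 1 vCPU.

```shell
#!/usr/bin/env bash
# Back-of-the-envelope vCPU check before running aks-engine deploy.
# Node counts, VM sizes, and the quota value are illustrative assumptions.
set -euo pipefail

# required_vcpus <masters> <agents> <vcpus_per_node> <client_vm_vcpus>
required_vcpus() {
  echo $(( ($1 + $2) * $3 + $4 ))
}

# fits_quota <required> <quota>: succeeds when the quota covers the request.
fits_quota() {
  (( $1 <= $2 ))
}

need=$(required_vcpus 3 3 2 1)   # 3 masters + 3 agents (Standard_D2_v2) + client VM (Standard_DS1_v2)
quota=20                          # hypothetical vCPU quota from the user subscription's offer
if fits_quota "$need" "$quota"; then
  echo "OK: ${need} vCPUs needed, quota ${quota}"
else
  echo "Insufficient quota: ${need} vCPUs needed, quota ${quota}" >&2
fi
```

The same pattern extends to memory and public IP addresses. Leaving some headroom is prudent if you plan to scale the cluster later with AKS engine.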

At this point, we built a Linux client VM for running the AKS engine command-line tool used to deploy and manage the K8s cluster. Here are the specifications of the VM provisioned:

- Resource group: demoaksengine-rg

- VM size: Standard_DS1_v2 (1 vCPU + 3.5 GB memory)

- Managed disk: 30 GB default managed disk

- Image: Latest Ubuntu 16.04-LTS gallery item from the Azure Stack Hub marketplace

- Authentication type: SSH public key

- Assigned a Public IP address so we could easily SSH to it from the management workstation

- Since this VM was required for ongoing management and maintenance of the K8s cluster, we took the appropriate precautions to ensure that we could recover this VM in case of a disaster. A critical file that needed to be protected on this VM after the cluster was created is the apimodel.json file. This will be discussed later in this blog series.

Our Azure Stack Hub runs with certificates generated from an internal standalone CA in the lab. This means we needed to import our CA’s root certificate into the client VM’s OS certificate store so it could properly connect to the Azure Stack Hub management endpoints before going any further. We thought we would share the steps to import the certificate:

- The standalone CA provides a file with a .cer extension when requesting the root certificate. To work with the Ubuntu certificate store, we had to convert it to a .crt file by issuing the following command from Git Bash on the management workstation:

openssl x509 -inform DER -in certnew.cer -out certnew.crt

- Created a directory for the extra CA certificate in /usr/share/ca-certificates on the Ubuntu client VM:

sudo mkdir /usr/share/ca-certificates/extra

- Transferred the certnew.crt file to the /home/azureuser directory on the Ubuntu VM using WinSCP. Then, we copied the file from the home directory to the /usr/share/ca-certificates/extra directory:

sudo cp certnew.crt /usr/share/ca-certificates/extra/certnew.crt

- Appended a line to /etc/ca-certificates.conf:

sudo nano /etc/ca-certificates.conf

Add the following line to the very end of the file:

extra/certnew.crt

- Updated the certificates non-interactively:

sudo update-ca-certificates

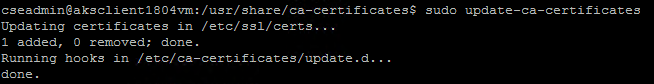

This command produced the following output that verified that the lab’s CA certificate was successfully added:

Note: We learned that if this procedure to import a CA’s root certificate is ever carried out on a server that is already running Docker, you have to stop and restart Docker at this point. This is done on Ubuntu with the following commands:

sudo systemctl stop docker

sudo systemctl start docker
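If you need to repeat the certificate import on several VMs, the steps above can be collected into one re-runnable script. This is a sketch under a few assumptions: it runs as root on Ubuntu (for example, `sudo bash import-ca.sh`), the DER-to-PEM conversion with openssl has already produced the .crt file, and the function names are our own. The helper appends the ca-certificates.conf entry only once, so running the script twice does not duplicate the entry.

```shell
#!/usr/bin/env bash
# Sketch: the CA import steps above as a re-runnable script (run as root).
# Assumes the .crt file is already PEM-encoded (converted with openssl x509).
set -euo pipefail

# append_once <line> <file>: append the line only if it is not already present.
append_once() {
  local line=$1 file=$2
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# import_root_ca <path/to/certnew.crt>
import_root_ca() {
  local crt=$1 name
  name=$(basename "$crt")
  mkdir -p /usr/share/ca-certificates/extra
  cp "$crt" "/usr/share/ca-certificates/extra/${name}"
  append_once "extra/${name}" /etc/ca-certificates.conf
  update-ca-certificates
  # Per the note above, a running Docker daemon must be restarted to pick up the CA.
  if systemctl is-active --quiet docker; then
    systemctl restart docker
  fi
}

# Usage (as root): import_root_ca certnew.crt
```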

We then SSH’d into the client VM. While in the home directory, we executed the commands prescribed in the documentation to download the get-akse.sh AKS engine installation script, make it executable, and install AKS engine v0.43.0:

curl -o get-akse.sh https://raw.githubusercontent.com/Azure/aks-engine/master/scripts/get-akse.sh

chmod 700 get-akse.sh

./get-akse.sh --version v0.43.0

Once installed, we issued the aks-engine version command to verify a successful installation of AKS engine.

Deploy K8s cluster

There was one more step that needed to be taken before issuing the command to deploy the K8s cluster. We needed to customize a cluster specification using an example API Model file. Since we were deploying a Linux-based Kubernetes cluster, we downloaded the kubernetes-azurestack.json file into the home directory of the client VM. Though we could have used nano on the Linux VM to customize the file, we decided to use WinSCP to copy this file over to the management workstation so we could use VS Code to modify it instead. Here are a few notes on this:

- By issuing the aks-engine get-versions command, we noticed that cluster versions up to 1.17 were supported by AKS engine v0.43.0. However, the Azure Monitor for containers solution only supported versions 1.15 and earlier. We decided to leave the orchestratorRelease key value in the kubernetes-azurestack.json file at the default value of 1.14.

- Inserted https://portal.rack04.azs.mhclabs.com as the portalURL.

- Used labcluster as the dnsPrefix. This was the DNS name for the actual cluster. We confirmed that this hostname was unique in the lab environment.

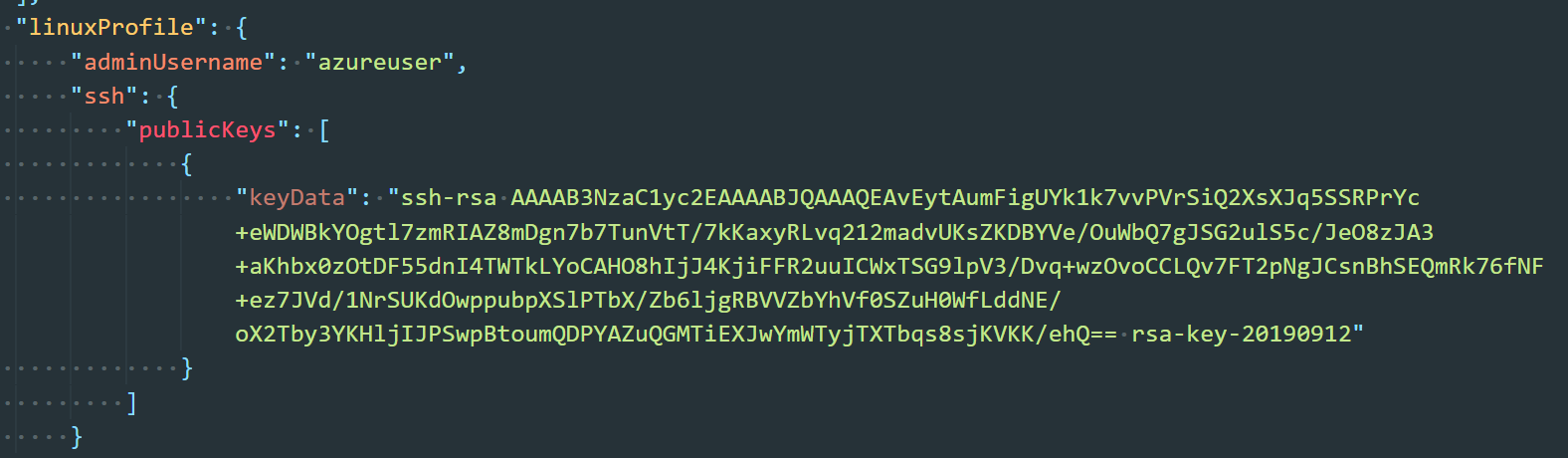

- Left the adminUsername at azureuser. Then, we copied and pasted the public key from PUTTYGEN into the keyData field. The following screen shot shows what was pasted.

- Copied the modified file back to the home directory of the client VM.
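The edits above touch only a handful of keys, so they can also be applied with a short script instead of a round trip through WinSCP and VS Code. The following Python sketch is a minimal illustration, assuming the vlabs API model layout we believe kubernetes-azurestack.json uses (properties.orchestratorProfile, customCloudProfile, masterProfile, and linuxProfile); check those paths against your copy before relying on it. It also refuses a release newer than 1.15, the highest one Azure Monitor for containers supported during our testing. The function names are our own.

```python
import json
from pathlib import Path

# Lab values from the notes above; replace with your own.
SETTINGS = {
    "orchestratorRelease": "1.14",
    "portalURL": "https://portal.rack04.azs.mhclabs.com",
    "dnsPrefix": "labcluster",
    "adminUsername": "azureuser",
}

# Highest release Azure Monitor for containers supported at the time of our testing.
MONITOR_MAX_RELEASE = "1.15"


def version_tuple(release: str) -> tuple:
    """Turn "1.14" into (1, 14) so releases compare numerically, not as strings."""
    return tuple(int(part) for part in release.split("."))


def customize_api_model(model: dict, ssh_public_key: str, settings: dict = SETTINGS) -> dict:
    """Apply our settings to an aks-engine API model dict in place and return it.

    The key paths are an assumption about the vlabs API model layout.
    """
    release = settings["orchestratorRelease"]
    if version_tuple(release) > version_tuple(MONITOR_MAX_RELEASE):
        raise ValueError(f"{release} is newer than Azure Monitor supports ({MONITOR_MAX_RELEASE})")
    props = model["properties"]
    props["orchestratorProfile"]["orchestratorRelease"] = release
    props["customCloudProfile"]["portalURL"] = settings["portalURL"]
    props["masterProfile"]["dnsPrefix"] = settings["dnsPrefix"]
    props["linuxProfile"]["adminUsername"] = settings["adminUsername"]
    # PuTTYgen's OpenSSH-format public key is a single line; drop any trailing newline.
    props["linuxProfile"]["ssh"]["publicKeys"][0]["keyData"] = ssh_public_key.strip()
    return model


def customize_file(path: Path, ssh_public_key: str) -> None:
    """Rewrite kubernetes-azurestack.json on disk with the settings applied."""
    model = json.loads(path.read_text())
    customize_api_model(model, ssh_public_key)
    path.write_text(json.dumps(model, indent=2) + "\n")
```

Comparing releases as integer tuples matters once minor versions reach two digits: as plain strings, "1.9" would sort after "1.14".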

While still in the home directory of the client VM, we issued the command to deploy the K8s cluster. Here is the command that was executed. Remember that the client ID and client secret are associated with the SPN, and the subscription ID is that of the Azure Stack Hub user subscription.

aks-engine deploy \
  --azure-env AzureStackCloud \
  --location Rack04 \
  --resource-group demoK8scluster-rg \
  --api-model ./kubernetes-azurestack.json \
  --output-directory demoK8scluster-rg \
  --client-id xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx \
  --client-secret xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx \
  --subscription-id xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx
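Because the client secret otherwise ends up in shell history, some operators prefer to launch the deployment from a script. Here is a minimal Python sketch that assembles exactly the flags used in the command above and reads the SPN values from environment variables. The variable names AZS_CLIENT_ID, AZS_CLIENT_SECRET, and AZS_SUBSCRIPTION_ID are hypothetical choices for this example.

```python
import os
import subprocess

# Hypothetical environment variable names holding the SPN and subscription values.
REQUIRED = ("AZS_CLIENT_ID", "AZS_CLIENT_SECRET", "AZS_SUBSCRIPTION_ID")


def build_deploy_args(env=os.environ) -> list:
    """Return the aks-engine deploy argument list used in our lab."""
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise KeyError("missing environment variables: " + ", ".join(missing))
    return [
        "aks-engine", "deploy",
        "--azure-env", "AzureStackCloud",
        "--location", "Rack04",
        "--resource-group", "demoK8scluster-rg",
        "--api-model", "./kubernetes-azurestack.json",
        "--output-directory", "demoK8scluster-rg",
        "--client-id", env["AZS_CLIENT_ID"],
        "--client-secret", env["AZS_CLIENT_SECRET"],
        "--subscription-id", env["AZS_SUBSCRIPTION_ID"],
    ]


def run_deploy() -> None:
    # A list argument (no shell) avoids quoting issues and keeps the secret out of
    # shell history; it is still visible in the process list while aks-engine runs.
    subprocess.run(build_deploy_args(), check=True)
```

Run it from the home directory of the client VM, where kubernetes-azurestack.json lives, so that the relative --api-model path resolves the same way as in the interactive command.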

Once the deployment was complete and verified by deploying mysql with Helm, we copied the apimodel.json file, found in the home directory of the client VM under a subdirectory named after the cluster’s resource group (in this case demoK8scluster-rg), to a safe location outside of the Azure Stack Hub scale unit. This file is used as input for all subsequent AKS engine operations. The generated apimodel.json contains the service principal, secret, and SSH public key used in the input API Model. It also has all the other metadata AKS engine needs to perform all other operations. If this file is lost, AKS engine won't be able to configure the cluster down the road.
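Since losing apimodel.json means AKS engine can no longer manage the cluster, it is worth verifying the copy rather than just making it. The following minimal Python sketch copies the file under a timestamped name and compares SHA-256 checksums; it assumes the backup directory is already mounted from storage outside the scale unit, and the function names are our own. Because the file contains the SPN secret, restrict access to the destination.

```python
import hashlib
import shutil
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hex SHA-256 digest of a file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def backup_api_model(source: Path, backup_dir: Path) -> Path:
    """Copy apimodel.json to backup_dir under a timestamped name and verify the copy."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    target = backup_dir / f"{source.stem}-{stamp}{source.suffix}"
    shutil.copy2(source, target)  # copy2 also preserves timestamps
    if sha256_of(source) != sha256_of(target):
        raise OSError(f"checksum mismatch copying {source} to {target}")
    return target
```

For example, backup_api_model(Path.home() / "demoK8scluster-rg" / "apimodel.json", Path("/mnt/safe-backups")), where /mnt/safe-backups is a hypothetical mount point. Keeping timestamped copies also preserves earlier versions after later AKS engine operations update the file.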

Onboarding the new K8s cluster to Azure Monitor for containers

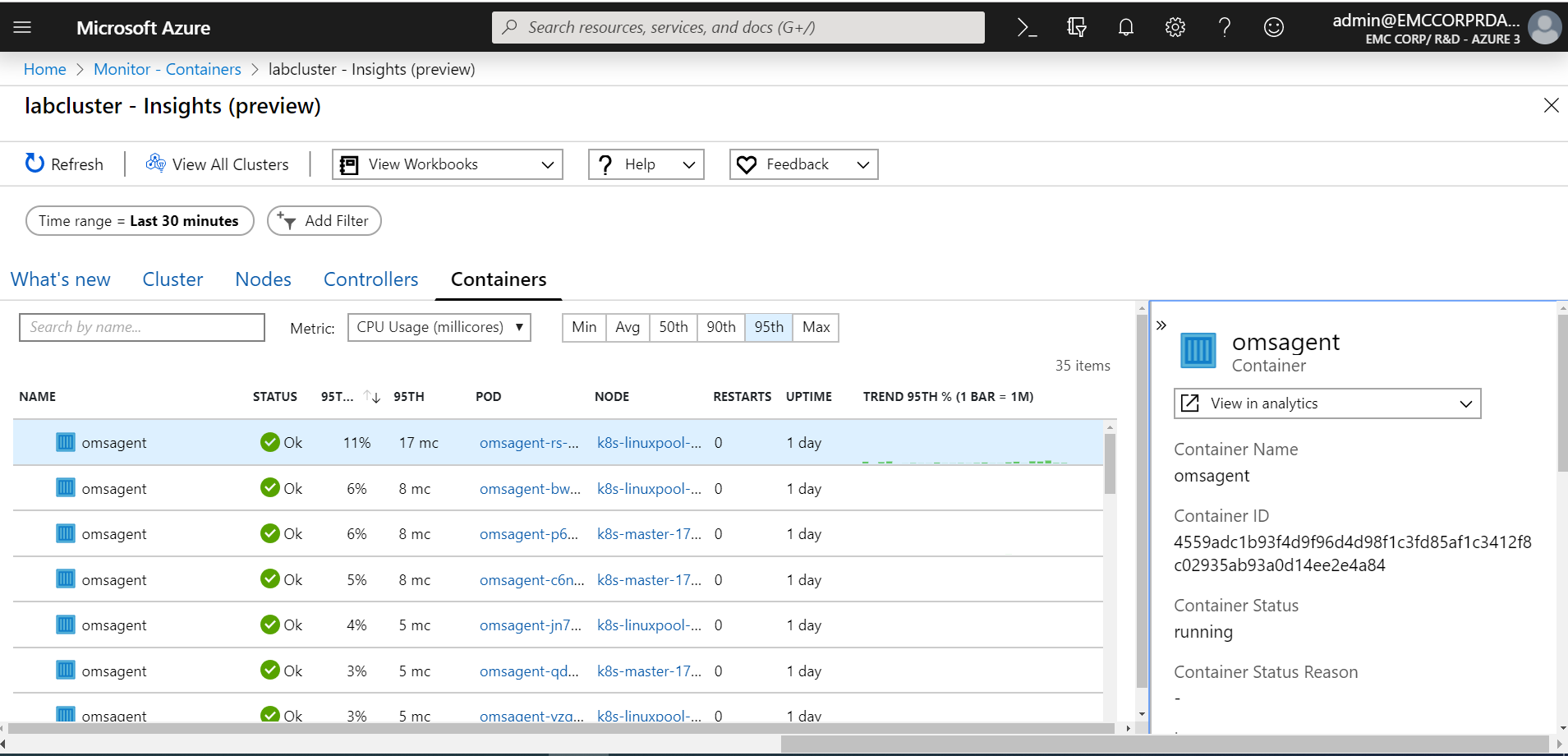

Before introducing our first microservices-based application to the K8s cluster, we preferred to onboard the cluster to Azure Monitor for containers. Azure Monitor for containers not only provides a rich monitoring experience for AKS in Public Azure but also for K8s clusters deployed in Azure Stack using AKS engine. We wanted to see what resources were being used only by the Kubernetes system functions before deploying any applications. The steps we performed in this section were performed on one of the K8s primary nodes using an SSH connection.

The prerequisites were fairly straightforward, but we did make a couple of observations while stepping through them:

- We decided to perform the onboarding using the HELM chart. For this option, the latest version of the HELM client was required. We found that as long as we performed the steps under Verify your cluster in the documentation, we did not encounter any issues.

- Since we were only running a single cluster in our environment, we found we didn’t have to do anything with regard to configuring kubectl or the HELM client to use the K8s cluster context.

- We did not have to make any changes in the environment to allow the various ports to communicate properly within the cluster or externally with Public Azure.

Note: As an alternative to the HELM chart, Microsoft also supports enabling monitoring on a K8s cluster deployed on Azure Stack Hub with AKS engine by using the API definition. The API definition option doesn’t have a dependency on Tiller or any other component. Using this option, monitoring can be enabled during cluster creation itself. The only manual step for this option is to add the Container Insights solution to the Log Analytics workspace specified in the API definition.

We already had a Log Analytics Workspace at our disposal for this testing. We did make one mistake during the onboarding preparations, though. We intended to manually add the Container Insights solution to the workspace but installed the legacy Container Monitoring solution instead of Container Insights. To be on the safe side, we ran the onboarding_azuremonitor_for_containers.ps1 PowerShell script and supplied the values for our resources as parameters. The script skipped the creation of the workspace since we already had one and just installed the Container Insights solution via an ARM template in GitHub identified in the script.

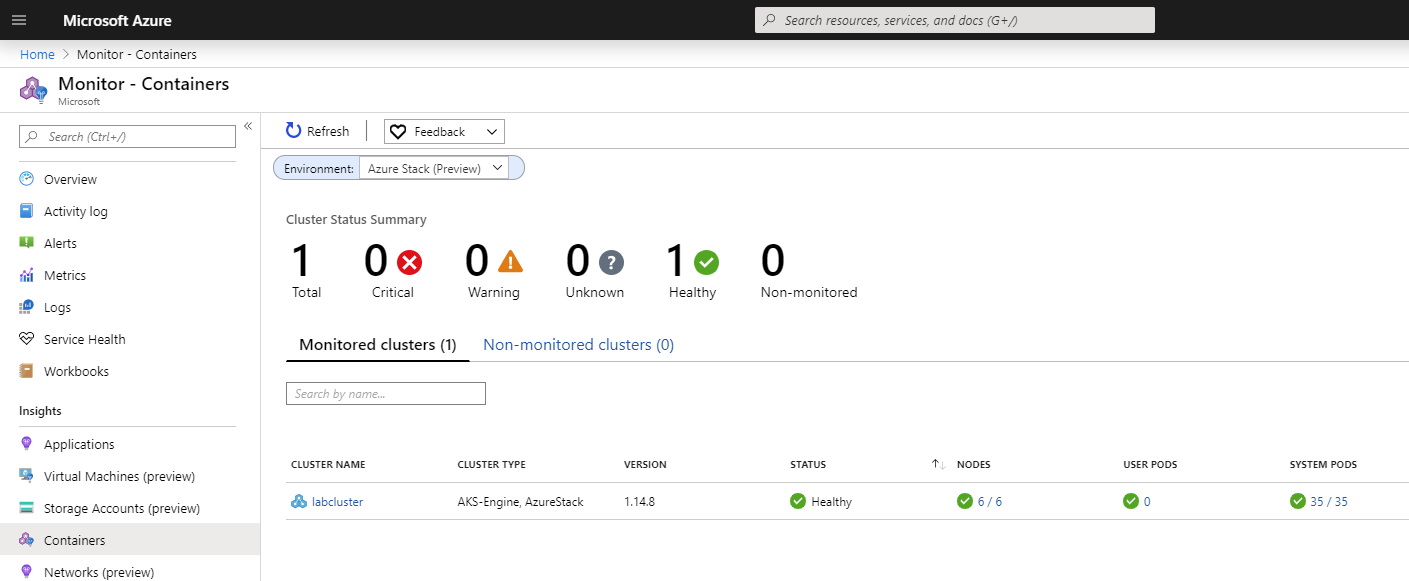

At this point, we could simply issue the HELM commands under the Install the chart section of the documentation. Besides inserting the workspace ID and workspace key, we also replaced the --name parameter value with azuremonitor-containers. We did not observe anything out of the ordinary during the onboarding process. Once complete, we had to wait about 10 minutes before we could go to the Public Azure portal and see our cluster appear. We had to remember to click on the drop-down menu for Environment in the Container Insights section in Azure Monitor and select “Azure Stack (Preview)” for the cluster to appear.

We hope this blog post proves to be insightful to anyone deploying a K8s cluster on Azure Stack Hub using AKS engine. We also trust that the information provided will assist in the onboarding of that new cluster to Azure Monitor for containers. In Part 3, we will step through our experience deploying a self-hosted Docker Container Registry into Azure Stack Hub.

Microsoft Azure Stack and CSP Uncovered

Mon, 17 Aug 2020 18:55:28 -0000


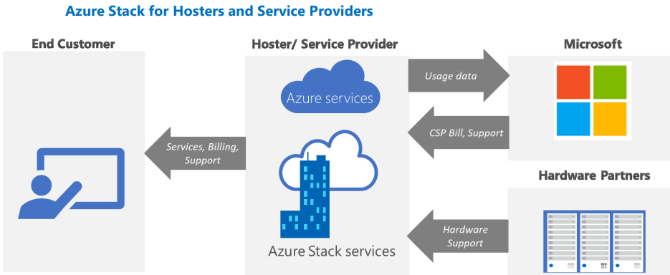

Microsoft’s Cloud Solution Provider (CSP) program allows partners to completely own their end-to-end customer lifecycle, which may include activities such as deployment of new services, provisioning, management, pricing and billing. The CSP program helps value-added resellers (VARs) and managed service providers (MSPs) sell Microsoft software and Cloud Service licenses with additional support so that you can become more involved with your customer base. This means that every cloud solution from Azure to Office 365 could be resold to your customers at a price that you set, and with unique value added by you. The goal of the CSP program is not simply to resell Microsoft services, but to enhance them and deliver them in a way that makes sense for you and your customers.

What's all the fuss about Azure Stack CSPs?

As an Azure Stack Product Technologist, one question I am frequently asked by customers who are existing Microsoft CSPs is how to operate and offer services on Microsoft Azure Stack in a CSP model. Although this information is available through the Microsoft Azure Stack online documentation and videos from various events, this blog post consolidates that information, explains the various CSP operating models in Azure Stack, and outlines the steps required to successfully manage and operate Azure Stack as a CSP.

The key difference between operating as an Azure CSP and as an Azure Stack CSP is the additional responsibility of managing the Azure Stack integrated system. Really? Well, that depends on the CSP operating model, which we will discuss in detail in the following sections.

What's the Business Opportunity here?

Today, Azure services are available in 54 regions spread across various geographical locations, which also means that Azure services are not available in every corner of the globe. Customers in those locations may have poor or no internet connectivity, may want to operate in a disconnected mode, or may simply need to meet regulatory compliance requirements and the policy guidelines and laws of their country. Most importantly, it is your geographical presence that differentiates you as a CSP who can deliver consistent Azure services in that region. With the rise of edge computing, it becomes more and more critical that data is processed as close to the edge as possible, and as a result we see more and more cloud services moving back towards the edge. So, as a CSP, not only is your geographical location important, but you can also provide differentiated or specialized applications that serve industries with specific requirements.

What are the various CSP operating models for Azure Stack?

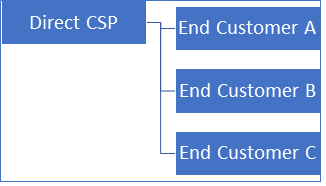

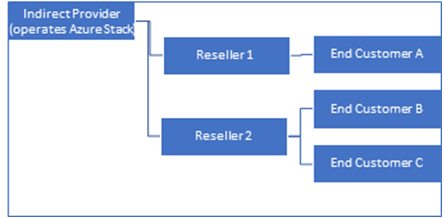

When you make the decision to be an Azure Stack CSP, you will need to explore and understand the two CSP operating models. You must ensure that you understand which model aligns best with your organization. So let's try and understand the two CSP operating models.

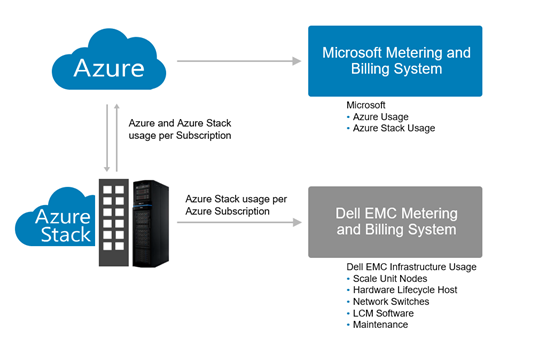

In the Direct CSP model, the CSP operates Azure Stack and has a direct billing relationship with Microsoft. All usage of Azure Stack is billed directly to the CSP. The CSP in turn generates a bill for each customer or tenant consuming the services offered by the CSP. The billing period, the amount you bill, and what you bill for are entirely in your hands as a CSP.

In the Indirect CSP model, the Indirect CSP, also referred to as a Distributor, is responsible for operating Azure Stack. Here, a network of resellers can help sell the CSP's services to end customers. The Indirect CSP has a direct billing relationship with Microsoft, so all usage of Azure Stack resources is billed to the Indirect CSP. The Indirect CSP in turn bills either the reseller or the end customer.
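To make the billing flow concrete: in both models, Microsoft bills the operating CSP for Azure Stack usage, and the CSP then prices that usage for each tenant. The following Python sketch totals usage per tenant and applies a markup chosen by the CSP. The record shape, tenant names, meter names, unit costs, and the flat-markup pricing rule are all hypothetical illustrations, not Azure Stack's usage API or meter definitions.

```python
from collections import defaultdict
from decimal import Decimal


def build_invoices(records, markup):
    """Total each tenant's usage cost and apply the CSP's markup.

    records: iterable of (tenant, meter, quantity, unit_cost) tuples (hypothetical shape).
    markup: e.g. Decimal("0.10") for a 10% margin on top of the CSP's cost.
    Returns {tenant: amount rounded to cents}.
    """
    totals = defaultdict(Decimal)
    for tenant, _meter, quantity, unit_cost in records:
        # Decimal avoids binary floating-point rounding errors in currency math.
        totals[tenant] += Decimal(quantity) * Decimal(unit_cost)
    return {
        tenant: (cost * (1 + markup)).quantize(Decimal("0.01"))
        for tenant, cost in totals.items()
    }


usage = [
    ("contoso", "vm-hours", "10", "0.10"),
    ("contoso", "storage-gb", "100", "0.02"),
    ("fabrikam", "vm-hours", "5", "0.10"),
]
invoices = build_invoices(usage, Decimal("0.10"))
# invoices == {"contoso": Decimal("3.30"), "fabrikam": Decimal("0.55")}
```

The markup is just one possible rule; because the billing period, price, and billable items are up to the CSP, a real billing system or a third-party billing service would replace it with your own pricing model.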

Now that you've familiarized yourself with Azure Stack CSP operating models, it is important to understand the two types of CSP subscriptions that are available.

- Azure Partner Shared Services (APSS) Subscription

According to Microsoft's definition, Azure Partner Shared Services (APSS) subscriptions are the preferred choice for registration when a direct CSP or an indirect CSP (also known as a CSP distributor) operates Azure Stack. This means a CSP directly purchases an Azure subscription from Microsoft for their own use. This creates an opportunity for CSPs to build differentiated solutions and offer them to their tenants.

- CSP Subscription

This is the most common subscription model. In this model, either a CSP reseller or the customer operates the Azure Stack admin and tenant spaces, or, in many cases, the responsibility is split, with the CSP managing the admin space and the customer managing the tenant space.

Roles and Responsibilities in a CSP Model:

With a myriad of options available in a CSP model, involving personas such as Direct CSPs, Indirect CSPs, Resellers, and End Customers along with two types of CSP subscriptions, the chart below summarizes the roles and responsibilities associated with each persona and the CSP subscription applicable to each scenario.

| Persona | Subscription Type | Azure Stack Operator | Usage and Billing | Selling | Support |
|---|---|---|---|---|---|
| Direct CSP | APSS | Direct CSP | Direct CSP | Direct CSP | Direct CSP |
| Indirect CSP or Distributor | APSS | Distributor | Distributor | Reseller | Distributor or Reseller |
| Reseller | CSP | Reseller | Indirect CSP or Distributor | Reseller | Distributor or Reseller |
| End Customer | CSP | End Customer | Indirect CSP or Distributor | Reseller or Distributor | Distributor or Reseller |

Note: When End Customer operates Azure Stack, multi-tenancy is not required. The end customer needs a CSP subscription from the CSP partner, then uses it for the initial (default) registration. Usage is billed to the Distributor or Indirect CSP.

How do you get started?

Once you have decided which CSP model you will use to operate Azure Stack, it's time to dive into the nitty-gritty of making Azure Stack operational in that model. To successfully operate and run Azure Stack, you will need to plan how you want to offer services and configure Azure Stack. Let's look at some of the key steps in this planning phase.

- Billing: During this phase, you will need to plan how you want to bill your end customers based on their usage of the services you offer. This is where you plan how to register Azure Stack and how to integrate your billing system. You will also need to come up with an appropriate pricing model for the services you offer. During this phase, you may also want to explore some of the third-party billing services offered by ISVs.

- Services: In this phase, you will plan which native Azure Stack services you want to offer your end customers. You may also plan to offer differentiated, value-added services. This will determine how you configure quotas, plans, and offers on the Azure Stack system. For more information on how to create quotas, plans, and offers, please refer to this video.

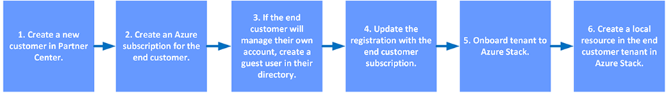

- Onboarding End Customers: Once you have planned billing and services for your end customers, you will need to onboard new customers and users to your Azure Stack system. Let's look at how to do this in the following section.

Note: This blog post assumes that you already have access to Microsoft Partner Center and have some knowledge of the CSP program. If you need access to training materials on the CSP program, please refer to the Microsoft Partner Center documentation.

Customer Onboarding Flow

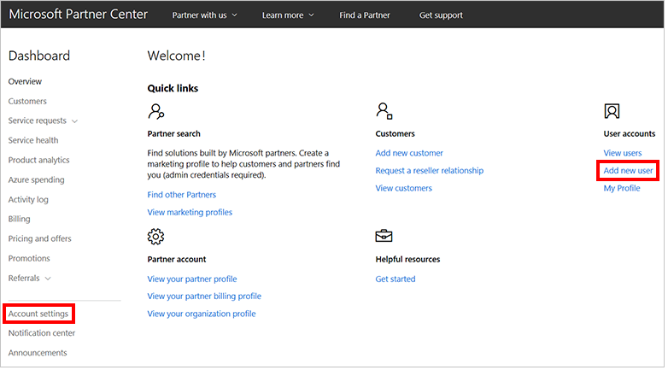

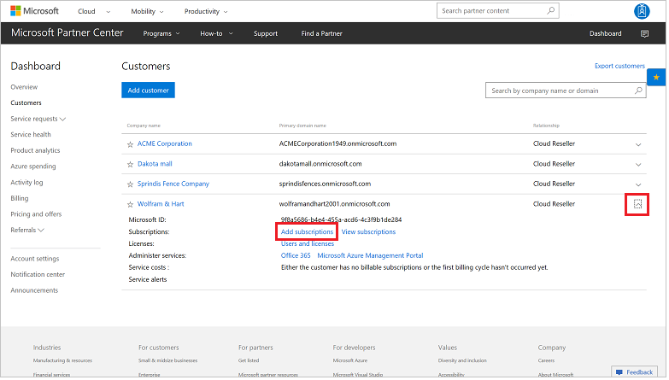

1. First, from Microsoft Partner Center, create a CSP or APSS subscription, depending on the CSP operating model that best fits your organization:

   - Azure Partner Shared Services (APSS) subscription: please go through the Microsoft documentation on How to create APSS Subscription.

   - CSP subscription: please go through the Microsoft documentation on How to create CSP Subscription.

2. Register Azure Stack against the subscription you created in Step 1. Steps on how to register Azure Stack can be found here.

3. After Azure Stack is registered, you need to enable multi-tenancy if you are planning to offer Azure Stack services to multiple tenants. If you don't enable multi-tenancy, all usage will be reflected against the subscription that was used for Azure Stack registration. You can refer to the Microsoft documentation on How to Enable multi-tenancy.

4. Once multi-tenancy is enabled, you will need to first create End Customers or tenants in the Partner Center portal so that, when those End Customers consume the services you offer, Azure Stack reports the usage to their CSP subscription. You can refer to the Microsoft documentation on How to add tenants in Partner Center.

5. In Partner Center, create an Azure subscription for each End Customer you created in the previous step. You can refer to Creating New Subscriptions.

6. Create guest users in the End Customer's directory. You do this because, by default, you as a CSP will not have access to the End Customer's Azure Stack subscription. If the End Customer wants you to manage their resources, they can add your account as owner/contributor to their Azure Stack subscription. To do that, they will need to add your account as a guest user in their Azure AD tenant. It is recommended that you as a CSP use an account other than your CSP account to manage your End Customer's Azure Stack subscription.

7. Update the registration in Azure Stack with the End Customer's Azure Stack subscription. By doing this, the End Customer's usage is tracked using the customer's identity in Partner Center, which makes usage tracking and billing easier. You can refer to this link on How to update Azure Stack registration.

8. Onboard tenants to Azure Stack to enable users from multiple Azure AD tenant directories to use the services you offer on Azure Stack. You can refer to the link How to Enable multi-tenancy.

9. As a last step, ensure that you are able to create a resource in the End Customer's Azure Stack subscription using the guest user account created in Step 6.

By now you should be all set to successfully operate and offer services on Azure Stack. To track usage and billing, CSPs can use APIs or Partner Center. CSPs that need more flexibility in their pricing and billing model can also work with third-party billing solution providers such as Cloud Assert or Exivity for a more customized billing solution.

Sources:

Manage usage and billing for Azure Stack as a Cloud Solution Provider

All you need to know about CSP by Alfredo Pizzirani and Tiberiu Radu

How to Register and Manage Tenants on Azure Stack for CSPs

Deploy a self-hosted Docker Container Registry on Azure Stack Hub

Thu, 22 Jul 2021 10:35:43 -0000


Introduction

Welcome to Part 3 of a three-part blog series on running containerized applications on Microsoft Azure’s hybrid ecosystem. In this part, we step through how we deployed a self-hosted, open-source Docker Registry v2 to an Azure Stack Hub user subscription. We also discuss how we pushed a microservices-based application to the self-hosted registry and then pulled those images from that registry onto the K8s cluster we deployed using AKS engine.

Here are the links to all the series articles:

Part 1: Running containerized applications on Microsoft Azure’s hybrid ecosystem – Provides an overview of the concepts covered in the blog series.

Part 2: Deploy K8s Cluster into an Azure Stack Hub user subscription – Set up an AKS engine client VM, deploy a cluster using AKS engine, and onboard the cluster to Azure Monitor for containers.

Part 3: Deploy a self-hosted Docker Container Registry – Use one of the Azure Stack Hub QuickStart templates to set up a container registry and push images to this registry. Then, pull these images from the registry into the K8s cluster deployed with AKS engine in Part 2.

Here is a diagram depicting the high-level architecture in the lab for review.

There are a few reasons why an organization may want to deploy and maintain a container registry on-premises rather than leveraging a publicly accessible registry like Docker Hub. This approach may be particularly appealing to customers in air-gapped deployments where there is unreliable connectivity to Public Azure or no connectivity at all. This can work well with K8s clusters that have been deployed using AKS engine at military installations or other such locales. There may also be regulatory compliance, data sovereignty, or data gravity issues that can be addressed with a local container registry. Some customers may simply require tighter control over where their images are being stored or need to integrate image storage and distribution tightly into their in-house development workflows.

Prerequisites

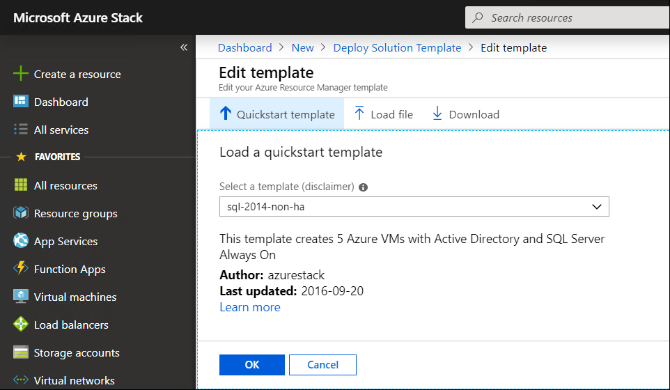

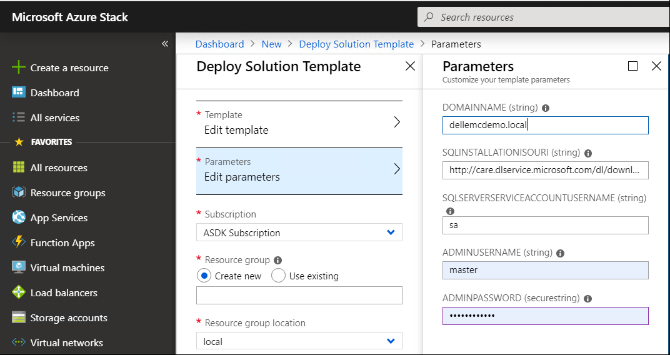

The deployment of Docker Registry v2 into an Azure Stack Hub user subscription was performed using the 101-vm-linux-docker-registry Azure Stack Hub QuickStart template. There are two phases to this deployment:

1. Creation of a storage account and key vault in a discrete resource group by running the setup.ps1 PowerShell script provided in the template repo.

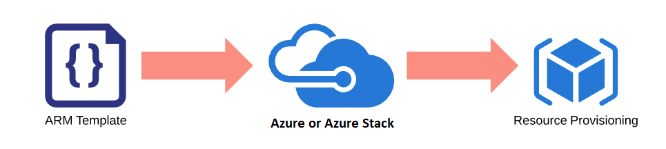

2. The setup.ps1 script also created an azuredeploy.parameters.json file that is used in the PowerShell command to deploy the ARM template that describes the container registry VM and its associated resources. These resources get provisioned into a separate resource group.

Be aware that there is another experimental repository in GitHub called msazurestackworkloads for deploying Docker Registry. The main difference is that the code in the msazurestackworkloads repo includes artifacts for creating a marketplace gallery item that an Azure Stack Hub user could deploy into their subscription. Again, this is currently experimental and is not supported for deploying the Docker Registry.

One of the prerequisite steps was to obtain an X.509 SSL certificate in PFX format for loading into key vault. We pointed to its location during the running of the setup.ps1 script. We used our lab’s internal standalone CA to create the certificate, which is the same CA used for deploying the K8s cluster with AKS engine. We thought we’d share the steps we took to obtain this certificate in case any readers aren’t familiar with the process. All these steps must be completed from the same workstation to ensure access to the private key.

We performed these steps on the management workstation:

1. Created an INF file that looked like the following. The subject’s CN is the DNS name we provided for the registry VM’s public IP address.

[Version]
Signature="$Windows NT$"
[NewRequest]
Subject = "CN=cseregistry.rack04.cloudapp.azs.mhclabs.com,O=CSE Lab,L=Round Rock,S=Texas,C=US"
Exportable = TRUE
KeyLength = 2048
KeySpec = 1
KeyUsage = 0xA0
MachineKeySet = True
ProviderName = "Microsoft RSA SChannel Cryptographic Provider"
HashAlgorithm = SHA256
RequestType = PKCS10
[Strings]
szOID_SUBJECT_ALT_NAME2 = "2.5.29.17"
szOID_ENHANCED_KEY_USAGE = "2.5.29.37"
szOID_PKIX_KP_SERVER_AUTH = "1.3.6.1.5.5.7.3.1"
szOID_PKIX_KP_CLIENT_AUTH = "1.3.6.1.5.5.7.3.2"
[Extensions]
%szOID_SUBJECT_ALT_NAME2% = "{text}dns=cseregistry.rack04.cloudapp.azs.mhclabs.com"
%szOID_ENHANCED_KEY_USAGE% = "{text}%szOID_PKIX_KP_SERVER_AUTH%,%szOID_PKIX_KP_CLIENT_AUTH%"
[RequestAttributes]

2. We used the certreq.exe command to generate a CSR, which we then submitted to the CA.

certreq.exe -new cseregistry_req.inf cseregistry_csr.req

3. We received a .cer file back from the standalone CA and followed the instructions here to convert this .cer file to a .pfx file for use with the container registry.

Prepare Azure Stack PKI certificates for deployment or rotation
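Because the subject CN and the DNS subject alternative name must both match the registry VM's public DNS name, generating the INF file from a single value avoids a mismatch between the two. The Python sketch below reproduces the INF from step 1 for any FQDN; the function names and the default organization fields (taken from our lab) are our own choices.

```python
from pathlib import Path


def build_cert_request_inf(fqdn: str, org: str = "CSE Lab", locality: str = "Round Rock",
                           state: str = "Texas", country: str = "US") -> str:
    """Render a certreq INF whose subject CN and DNS SAN both use `fqdn`."""
    subject = f"CN={fqdn},O={org},L={locality},S={state},C={country}"
    lines = [
        "[Version]",
        'Signature="$Windows NT$"',
        "[NewRequest]",
        f'Subject = "{subject}"',
        "Exportable = TRUE",
        "KeyLength = 2048",
        "KeySpec = 1",
        "KeyUsage = 0xA0",
        "MachineKeySet = True",
        'ProviderName = "Microsoft RSA SChannel Cryptographic Provider"',
        "HashAlgorithm = SHA256",
        "RequestType = PKCS10",
        "[Strings]",
        'szOID_SUBJECT_ALT_NAME2 = "2.5.29.17"',
        'szOID_ENHANCED_KEY_USAGE = "2.5.29.37"',
        'szOID_PKIX_KP_SERVER_AUTH = "1.3.6.1.5.5.7.3.1"',
        'szOID_PKIX_KP_CLIENT_AUTH = "1.3.6.1.5.5.7.3.2"',
        "[Extensions]",
        # Plain concatenation (not an f-string) keeps the literal {text} token intact.
        '%szOID_SUBJECT_ALT_NAME2% = "{text}dns=' + fqdn + '"',
        '%szOID_ENHANCED_KEY_USAGE% = "{text}%szOID_PKIX_KP_SERVER_AUTH%,%szOID_PKIX_KP_CLIENT_AUTH%"',
        "[RequestAttributes]",
    ]
    return "\n".join(lines) + "\n"


def write_inf(path: Path, fqdn: str) -> None:
    """Write the INF as plain ASCII for certreq.exe."""
    path.write_text(build_cert_request_inf(fqdn), encoding="ascii")
```

For example, write_inf(Path("cseregistry_req.inf"), "cseregistry.rack04.cloudapp.azs.mhclabs.com") produces the file that the certreq.exe command in step 2 consumes.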

We also needed the CA’s root certificate in .crt file format. We originally obtained this during the K8s cluster deployment using AKS engine. It needs to be imported into the certificate store of any device that intends to interact with the container registry.

Deploy the container registry supporting infrastructure

We used the setup.ps1 PowerShell script included in the QuickStart template’s GitHub repo for creating the supporting infrastructure for the container registry. We named the new resource group created by this script demoregistryinfra-rg. This resource group contains a storage account and key vault. The registry is configured to use the Azure storage driver to persist the container images in the storage account blob container. The key vault stores the credentials required to authenticate to the registry as a secret and secures the certificate. A service principal (SPN) created prior to executing the setup.ps1 script is leveraged to access the storage account and key vault.

Here are the values we used for the variables in our setup.ps1 script for reference (sensitive information removed, of course). Notice that the $dnsLabelName value is only the hostname of the container registry server and not the fully qualified domain name.

$location = "rack04"
$resourceGroup = "registryinfra-rg"
$saName = "registrystorage"
$saContainer = "images"
$kvName = "registrykv"
$pfxSecret = "registrypfxsecret"
$pfxPath = "D:\file_system_path\cseregistry.pfx"
$pfxPass = "mypassword"
$spnName = "Application (client) ID"
$spnSecret = "Secret"
$userName = "cseadmin"
$userPass = "!!123abc"
$dnsLabelName = "cseregistry"
$sshKey = "SSH key generated by PuttyGen"
$vmSize = "Standard_F8s_v2"
$registryTag = "2.7.1"
$registryReplicas = "5"
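Because setup.ps1 creates named resources, a typo in these values tends to surface only partway through the script. The following Python sketch pre-checks three of them against Azure naming rules as we understand them from public Azure documentation: storage account names are 3 to 24 lowercase letters and digits; key vault names are 3 to 24 alphanumerics and hyphens, starting with a letter, ending with a letter or digit, with no consecutive hyphens; public IP DNS labels are lowercase and start with a letter. These patterns are assumptions to verify for your Azure Stack Hub version, and the sketch does not check name uniqueness.

```python
import re

# Naming patterns as we understand them from public Azure documentation (assumptions).
STORAGE_ACCOUNT = re.compile(r"[a-z0-9]{3,24}")
KEY_VAULT = re.compile(r"[a-zA-Z](?!.*--)[a-zA-Z0-9-]{1,22}[a-zA-Z0-9]")
DNS_LABEL = re.compile(r"[a-z][a-z0-9-]{1,61}[a-z0-9]")


def check_setup_values(values: dict) -> list:
    """Return a list of problems found in the setup.ps1 values (empty list means OK)."""
    problems = []
    if not STORAGE_ACCOUNT.fullmatch(values["saName"]):
        problems.append("saName: use 3-24 lowercase letters and digits")
    if not KEY_VAULT.fullmatch(values["kvName"]):
        problems.append("kvName: 3-24 alphanumerics/hyphens, start with a letter, no '--'")
    if not DNS_LABEL.fullmatch(values["dnsLabelName"]):
        # A dot here usually means the FQDN was entered instead of just the hostname.
        problems.append("dnsLabelName: use the hostname only, not the fully qualified domain name")
    return problems


lab_values = {"saName": "registrystorage", "kvName": "registrykv", "dnsLabelName": "cseregistry"}
```

With our lab values, check_setup_values(lab_values) returns an empty list, while passing the full cseregistry.rack04.cloudapp.azs.mhclabs.com name as dnsLabelName is flagged.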

Deploy the container registry using the QuickStart template

We deployed the QuickStart template using a similar PowerShell command as the one indicated in the README.md of the GitHub repo. Again, the azuredeploy.parameters.json file was created automatically by the setup.ps1 script. This was very straightforward. The only thing to mention is that we created a new resource group when deploying the QuickStart template. We could have also selected an existing resource group that did not contain any resources.

Testing the Docker Registry with the Sentiment Analyzer application

At this point, it was time to test the K8s cluster and self-hosted container registry running on Azure Stack Hub from end-to-end. For this, we followed a brilliant blog article entitled Learn Kubernetes in Under 3 Hours: A Detailed Guide to Orchestrating Containers written by Rinor Maloku. This was a perfect introduction to the world of creating a microservices-based application running in multiple containers. It covers Docker and Kubernetes fundamentals and is an excellent primer for anyone just getting started in the world of containers and container orchestration. The name of the application is Sentiment Analyser, and it uses text analysis to ascertain the emotion of a sentence.

Learn Kubernetes in Under 3 Hours: A Detailed Guide to Orchestrating Containers

We won’t share all the notes we took while walking through the article. However, there are a couple tips we wanted to highlight as they pertain to testing the K8s cluster and new self-hosted Docker Registry in the lab:

- The first thing we did to prepare for creating the Sentiment Analyser application was to set up a development Ubuntu 18.04 VM in a unique resource group in our lab’s user subscription on Azure Stack Hub. We installed Firefox on the VM and Xming on the management workstation so we could test the functionality of the application at the required points in the process. Then, it was just a matter of setting up PuTTY properly with X11 forwarding.

- Before installing anything else on the VM, we imported the root certificate from our lab’s standalone CA. This was critical to facilitate the secure connection between this VM and the registry VM for when we started pushing the images.

- Whenever Rinor’s article talked about pushing the container images to Docker Hub, we instead targeted the self-hosted registry running on Azure Stack Hub. We had to take these steps on the development VM to facilitate those procedures:

- Logged into the container registry using the credentials for authentication that we specified in the setup.ps1 script.

- Tagged the image to target the container registry.

sudo docker tag <image ID> cseregistry.rack04.cloudapp.azs.mhclabs.com/sentiment-analysis-frontend

- Pushed the image to the container registry.

sudo docker push cseregistry.rack04.cloudapp.azs.mhclabs.com/sentiment-analysis-frontend

- Once the images were pushed to the registry, we found out that we could view them from the management workstation by browsing to the following URL and authenticating to the registry:

https://cseregistry.rack04.cloudapp.azs.mhclabs.com/v2/_catalog

The following output was given after we pushed all the application images:

{"repositories":["sentiment-analysis-frontend","sentiment-analysis-logic","sentiment-analysis-web-app"]}

- We noticed that we did not have to import the lab’s standalone CA’s root certificate on the primary node before attempting to pull the image from the container registry. We assumed that the cluster picked up the root certificate from the Azure Stack Hub system, as there was a file named /etc/ssl/certs/azsCertificate.pem on the primary node from where we were running kubectl.

- Prior to attempting to create the K8s pod for the Sentiment Analyser frontend, we had to create a Kubernetes cluster secret. This is always necessary when pulling images from private repositories – whether they are private repos on Docker Hub or privately hosted on-premises using a self-hosted container registry. We followed the instructions here to create the secret:

Pull an Image from a Private Registry

In the section of this article entitled Create a Secret by providing credentials on the command line, we discovered a couple items to note:

- When issuing the kubectl create secret docker-registry command, we had to enclose the password in single quotes because it was a complex password.

kubectl create secret docker-registry regcred \
  --docker-server=cseregistry.rack04.cloudapp.azs.mhclabs.com \
  --docker-username=cseadmin \
  --docker-password='!!123abc' \
  --docker-email=<email address>

We’ve now gotten into the habit of verifying the secret after we create it by using the following command:

kubectl get secret regcred --output="jsonpath={.data.\.dockerconfigjson}" | base64 --decode

- Then, when we came to modifying the YAML files in the article, we learned just how strict YAML is about formatting. When we added the imagePullSecrets: object, we had to ensure it lined up exactly with the containers: object. Also note that the numbered comments in the right-hand column (# 1, # 2, and so on) did not need to be duplicated.

Here is the content of the file that worked:

apiVersion: v1
kind: Pod # 1
metadata:
  name: sa-frontend
  labels:
    app: sa-frontend # 2
spec: # 3
  containers:
    - image: cseregistry.rack04.cloudapp.azs.mhclabs.com/sentiment-analysis-frontend # 4
      name: sa-frontend # 5
      ports:
        - containerPort: 80 # 6
  imagePullSecrets:
    - name: regcred
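Two of the manual checks in this section, decoding the regcred secret and reading the registry catalog, can also be scripted. The Python sketch below assumes the documented layout of a kubernetes.io/dockerconfigjson secret (an auths map keyed by registry server, with auth holding base64 of username:password) and the Docker Registry v2 _catalog response shape shown earlier; the helper names are our own.

```python
import base64
import json


def docker_config_json(server: str, username: str, password: str, email: str = "") -> str:
    """Build a .dockerconfigjson payload like the one kubectl stores for a docker-registry secret."""
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    entry = {"username": username, "password": password, "auth": auth}
    if email:
        entry["email"] = email
    return json.dumps({"auths": {server: entry}})


def decoded_auth(config_json: str, server: str) -> tuple:
    """Return (username, password) from a decoded .dockerconfigjson for one registry."""
    auth = json.loads(config_json)["auths"][server]["auth"]
    # Split on the first colon only; passwords may themselves contain colons.
    username, _, password = base64.b64decode(auth).decode().partition(":")
    return username, password


def missing_repositories(catalog_json: str, expected) -> list:
    """List pushed repositories that do not appear in the registry's /v2/_catalog response."""
    present = set(json.loads(catalog_json).get("repositories", []))
    return sorted(set(expected) - present)
```

For example, passing the decoded output of the kubectl get secret regcred command to decoded_auth() confirms that the complex password survived shell quoting, which is the problem the single quotes around !!123abc solve.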

At this point, we were able to observe the fully functional Sentiment Analyser application running on our K8s cluster on Azure Stack Hub. We were not only running this application on-premises in a highly prescriptive, self-managed Kubernetes cluster, but we were also able to do so while leveraging a self-hosted Docker Registry for the transferring of the images. We could also proceed to Azure Monitor for containers using the Public Azure portal to monitor our running containerized application and create thresholds for timely alerting on any potential issues.

Articles in this blog series

We hope this blog post proves to be insightful to anyone deploying a self-hosted container registry on Azure Stack Hub. It has been a great learning experience stepping through the deployment of a containerized application using the Microsoft Azure toolset. There are so many other things we want to try like Deploying Azure Cognitive Services to Azure Stack Hub and using Azure Arc to run Azure data services on our K8s cluster on Azure Stack Hub. We look forward to sharing more of our testing results on these exciting capabilities in the near future.

Azure Stack Development Kit - Removing Network Restrictions

Mon, 24 Jul 2023 15:06:15 -0000


This process has been confirmed to work with Azure Stack version 1910.

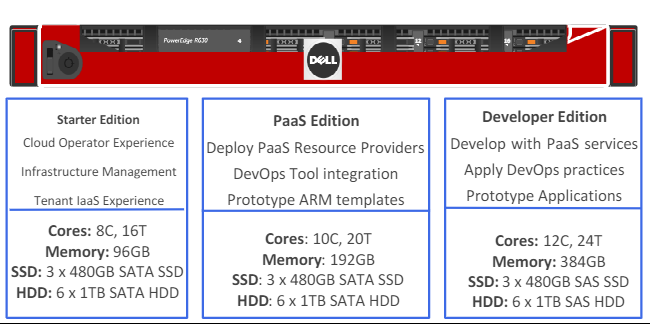

So you've got your hands on an Azure Stack Development Kit (ASDK), hopefully at least of the spec of the PaaS Edition Dell EMC variant below or higher, and you've been testing it for a while now. You've had a kick of the tyres, you've fired up some VMs, syndicated from the marketplace, deployed a Kubernetes cluster from template, deployed Web and API Apps, and had some fun with Azure Functions.

All of this is awesome and can give you a great idea of how Azure Stack can work for you, but there comes a time when you want to see how it'll integrate with the rest of your corporate estate. One of the design limitations of the ASDK is that it's enclosed in a Software Defined Networking (SDN) boundary, which restricts access to the Azure Stack infrastructure, and to any tenant workloads deployed in it, to the ASDK host. Tenant workloads are able to route out to your corporate network; however, nothing can talk back in.

There's a documented process for allowing VPN access to the ASDK so that multiple people can access the tenant and admin portals from their own machines at the same time, but this doesn't allow access to deployed resources, nor does it allow your other existing server environments to connect to them.

There are a few blogs out there which have existed since the technical preview days of Azure Stack, however they're either now incomplete or inaccurate, don't work in all environments, or require advanced networking knowledge to follow. The goal of this blog is to provide a method to open up the ASDK environment to deliver the same tenant experience you'll get with a full multi-node Azure Stack deployed in your corporate network.

Note: When you deploy an Azure Stack production environment, you have to supply a 'Public VIP' network range which will function as external IPs for services deployed in Azure Stack. This range can either be internal to your corporate network, or a true public IP range. Most enterprises deploy within their corporate network while most service providers deploy with public IPs, to replicate the Azure experience. The output of this process will deliver a similar experience to an Azure Stack deployed in your internal network.

The rest of this blog assumes you have already deployed your ASDK and finished all normal post-deployment activities such as registration and deployment of PaaS resource providers.

Removing Network Restrictions

This process is designed to be non-disruptive to the ASDK environment, and can be fully rolled back without needing a re-deployment.

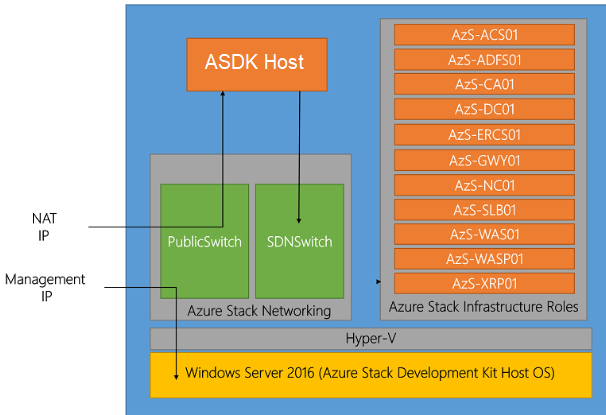

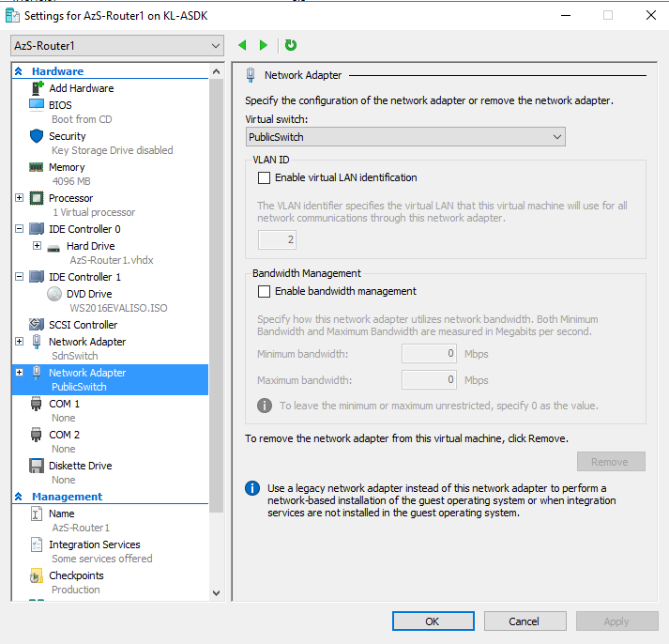

Within the ASDK environment there are two Hyper-V switches: a Public Switch and an SDN Switch.

- The Public Switch is attached to your internal/corporate network, and provides you the ability to RDP to the host to manage the ASDK.

- The SDN Switch is a Hyper-V 2016 SDN-managed switch which provides networking for all ASDK infrastructure VMs and for any tenant VMs deployed now or in the future.

The ASDK host has NICs attached to both the Public and SDN switches, and has NAT configured to allow outbound access to the corporate network and (in a connected scenario) the internet.

Rather than make changes to the host which might be a pain to roll back later, we'll deploy a brand new VM running a second NAT that operates in the opposite direction. This makes rollback a simple case of decommissioning that VM in the future.

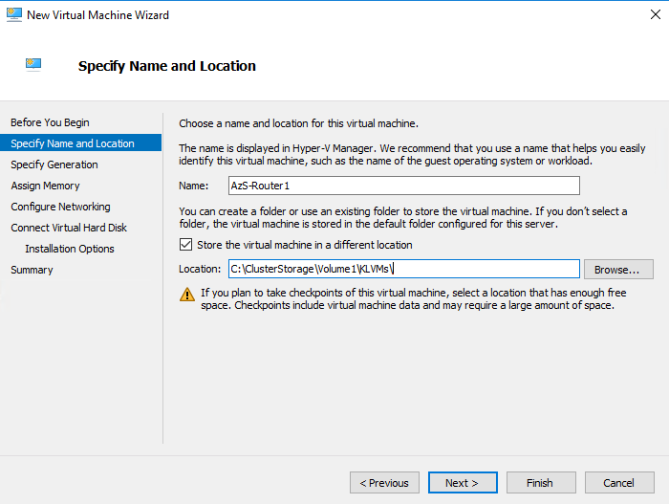

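On the ASDK host, open Hyper-V Manager and deploy a new Windows Server 2016 VM. You can place the VM files in a new folder in the C:\ClusterStorage\Volume1 CSV. The PowerShell in this post refers to this VM as AzS-Router1, so either use that name or substitute your own.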

The VM can be Generation 1 or Generation 2, it doesn't make a difference for our purposes here. I've just used the Gen 1 default as it's consistent with Azure.

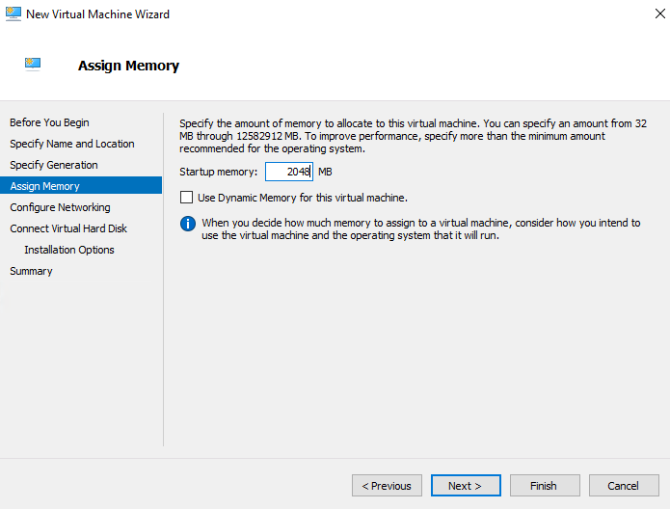

Set the Startup Memory to at least 2048MB and do not use Dynamic Memory.

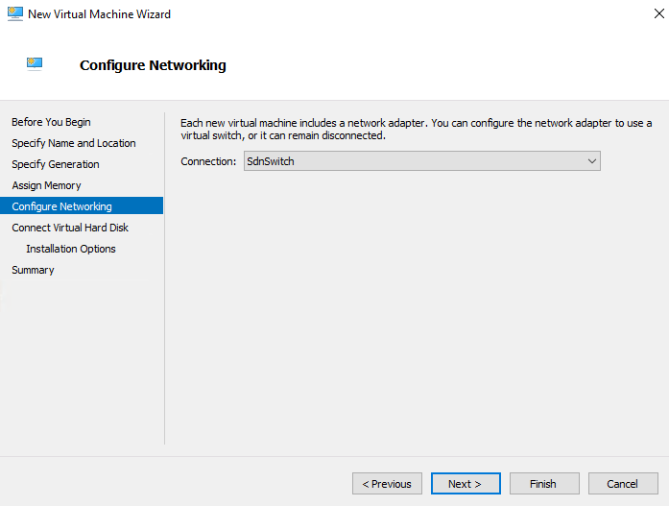

Attach the network to the SdnSwitch.

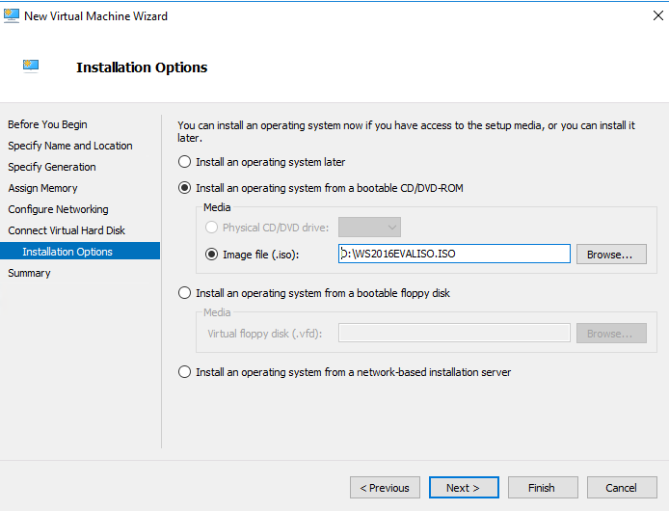

Click through the Hard Disk options, and then on the Installation Options, specify a Server 2016 ISO. Typically you'll have one on-host already from doing the ASDK deployment, so just use that.

Finish the wizard, but do not power on the VM.

Although we've attached the VM's NIC to the SDN switch, that network is managed by a Server 2016 SDN infrastructure, so by default the VM won't be able to communicate with any other VMs attached to it. First we have to make this VM part of that SDN family.

In an elevated PowerShell window on your ASDK host, run the following:

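```powershell
# Read the isolation settings of an existing SDN-attached VM (AzS-DC01)
$Isolation = Get-VM -VMName 'AzS-DC01' | Get-VMNetworkAdapter | Get-VMNetworkAdapterIsolation

# The new VM created above, and its (single) network adapter
$VM = Get-VM -VMName 'AzS-Router1'
$VMNetAdapter = $VM | Get-VMNetworkAdapter

# Give the new NIC AzS-DC01's default isolation ID, in VLAN isolation mode
$IsolationSettings = @{
    IsolationMode        = 'Vlan'
    AllowUntaggedTraffic = $true
    DefaultIsolationID   = $Isolation.DefaultIsolationID
    MultiTenantStack     = 'off'
}
$VMNetAdapter | Set-VMNetworkAdapterIsolation @IsolationSettings

# Apply an SDN port profile with an empty (all-zero) GUID as the resource ID
Set-PortProfileId -resourceID ([System.Guid]::Empty.tostring()) -VMName $VM.Name -VMNetworkAdapterName $VMNetAdapter.Name
```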

Now that this NIC is part of the SDN infrastructure, we can go ahead and add a second NIC and connect it to the Public Switch.

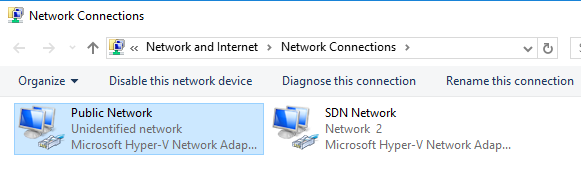

Now you can power on the VM and install the Server 2016 operating system; this VM does not need to be domain joined. Once done, open a console to the VM from Hyper-V Manager.

Open the network settings, and rename the NICs to make them easier to identify.

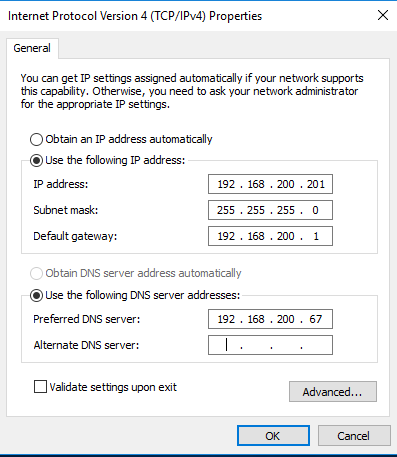

Give the SDN NIC the following settings:

IP Address: 192.168.200.201

Subnet: 255.255.255.0

Default Gateway: 192.168.200.1

DNS Server: 192.168.200.67

The IP address is an unused address in the infrastructure range (192.168.200.0/24).

The Default Gateway is the IP Address of the ASDK Host, which still handles outbound traffic.

The DNS Server is the IP Address of AzS-DC01, which handles DNS resolution for all Azure Stack services.
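The three rules above (an unused address in the infrastructure range, the ASDK host as gateway, AzS-DC01 as DNS) can be captured as a quick sanity check. This is a minimal Python sketch, not part of the original process: the function name `check_sdn_nic` is hypothetical, and the "known in use" set only contains the infrastructure addresses this post mentions (the ASDK host, AzS-SLB01 at 192.168.200.64, and AzS-DC01), so an empty result does not prove the address is actually free.

```python
import ipaddress

# Values from the SDN NIC settings in this post. KNOWN_IN_USE only lists the
# infrastructure addresses the post mentions; it is NOT a full inventory.
INFRA_NET = ipaddress.ip_network("192.168.200.0/24")
ASDK_HOST = ipaddress.ip_address("192.168.200.1")   # default gateway
AZS_SLB01 = ipaddress.ip_address("192.168.200.64")  # Software Load Balancer
AZS_DC01 = ipaddress.ip_address("192.168.200.67")   # DNS server
KNOWN_IN_USE = {ASDK_HOST, AZS_SLB01, AZS_DC01}


def check_sdn_nic(ip, gateway, dns, also_in_use=()):
    """Return a list of problems with a proposed SDN NIC config (empty if none found)."""
    problems = []
    addr = ipaddress.ip_address(ip)
    in_use = KNOWN_IN_USE | {ipaddress.ip_address(a) for a in also_in_use}
    if addr not in INFRA_NET:
        problems.append(f"{ip} is outside {INFRA_NET}")
    elif addr in in_use:
        problems.append(f"{ip} is already in use")
    if ipaddress.ip_address(gateway) != ASDK_HOST:
        problems.append("gateway should be the ASDK host (192.168.200.1)")
    if ipaddress.ip_address(dns) != AZS_DC01:
        problems.append("DNS server should be AzS-DC01 (192.168.200.67)")
    return problems


print(check_sdn_nic("192.168.200.201", "192.168.200.1", "192.168.200.67"))  # prints []
```

Passing any other addresses you know are taken via `also_in_use` makes the check a little stricter.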

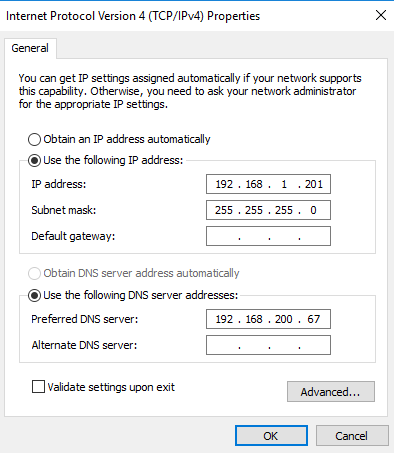

Give the Public Network NIC an unused IP address on your corporate network. Don't use DHCP for this, as you don't want a default gateway to be set on this NIC. In my case, my internal network is 192.168.1.0/24, and I've used the same final octet as the SDN NIC so it's easier to remember.

On the VM, open an elevated PowerShell window, and run the following command:

```powershell
New-NetNat -Name "NATSwitch" -InternalIPInterfaceAddressPrefix "192.168.1.0/24" -Verbose
```

Replace 192.168.1.0/24 with your own internal network's subnet prefix.
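The prefix passed to New-NetNat is the network address of your corporate subnet, not the VM's own IP. A small Python sketch using the standard `ipaddress` module shows how to derive it from the Public NIC's IP and subnet mask; the function name is hypothetical, and 192.168.1.201 is an assumed address (the author's network plus the .201 final octet), so substitute your own values.

```python
import ipaddress


def nat_internal_prefix(ip: str, mask: str) -> str:
    """Return the CIDR prefix of the network that ip/mask belongs to."""
    return str(ipaddress.ip_interface(f"{ip}/{mask}").network)


# Example values (assumed): the new VM's Public NIC IP and your subnet mask.
prefix = nat_internal_prefix("192.168.1.201", "255.255.255.0")
print(f'New-NetNat -Name "NATSwitch" -InternalIPInterfaceAddressPrefix "{prefix}"')
# prints: New-NetNat -Name "NATSwitch" -InternalIPInterfaceAddressPrefix "192.168.1.0/24"
```

For a larger corporate network, for example 10.20.30.40 with mask 255.255.252.0, the same function returns 10.20.28.0/22.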

While the new VM has a default route to the ASDK host, Azure Stack also uses a Software Load Balancer (AzS-SLB01) as part of the SDN infrastructure. For everything to work correctly, we need to add static routes on the new VM that send traffic for the addresses below to the SLB at 192.168.200.64.

Run the following on your new VM to add the appropriate static routes:

```powershell
# Persistent /32 host routes for 192.168.102.2-48 and 192.168.105.1-8 via AzS-SLB01
foreach ($r in 2..48) { route -p add "192.168.102.$r" mask 255.255.255.255 192.168.200.64 }
foreach ($r in 1..8) { route -p add "192.168.105.$r" mask 255.255.255.255 192.168.200.64 }
```
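These host routes work because Windows, like most routers, uses the most specific route that matches a destination. The Python model below only illustrates that longest-prefix-match behaviour on the new VM. It is not how Windows stores routes, it ignores metrics, and the 192.168.1.0/24 corporate subnet is the author's example network rather than a required value.

```python
import ipaddress

ASDK_HOST = "192.168.200.1"   # default gateway on the SDN NIC
AZS_SLB01 = "192.168.200.64"  # next hop used by the static routes above


def build_table():
    """Simplified route table for the new VM as (network, next_hop) pairs."""
    table = [
        (ipaddress.ip_network("0.0.0.0/0"), ASDK_HOST),          # default route
        (ipaddress.ip_network("192.168.200.0/24"), "on-link"),   # SDN NIC subnet
        (ipaddress.ip_network("192.168.1.0/24"), "on-link"),     # example corporate subnet
    ]
    hosts = [f"192.168.102.{r}" for r in range(2, 49)]  # 2..48, as in the script
    hosts += [f"192.168.105.{r}" for r in range(1, 9)]  # 1..8, as in the script
    table += [(ipaddress.ip_network(f"{h}/32"), AZS_SLB01) for h in hosts]
    return table


def next_hop(table, dest):
    """Longest-prefix match: the most specific route containing dest wins."""
    addr = ipaddress.ip_address(dest)
    return max((net.prefixlen, hop) for net, hop in table if addr in net)[1]


table = build_table()
print(next_hop(table, "192.168.102.10"))  # prints 192.168.200.64, i.e. AzS-SLB01
print(next_hop(table, "10.0.0.5"))        # prints 192.168.200.1, i.e. the ASDK host
```

Any 192.168.102.x address outside the scripted range, such as .49, still falls through to the default route via the ASDK host.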

That's all the setup on the new VM complete.

Next you will need to add appropriate routing to your internal network or clients. How you do this is up to you, but each of the following subnets needs to use the Public Switch IP of the new VM you deployed as its gateway:

- 192.168.100.0/24

- 192.168.101.0/24

- 192.168.102.0/24

- 192.168.103.0/24

- 192.168.104.0/24

- 192.168.105.0/24

- 192.168.200.0/24
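If your router or clients accept summary routes, the seven /24 networks above can be collapsed into three prefixes that cover exactly the same addresses. A Python sketch using the standard `ipaddress` module; the gateway 192.168.1.201 is an assumed Public Switch IP for the new VM, so replace it with your own.

```python
import ipaddress

# The seven ASDK subnets that must be routed via the new VM.
ASDK_SUBNETS = [f"192.168.{n}.0/24" for n in (100, 101, 102, 103, 104, 105, 200)]
ROUTER_VM_PUBLIC_IP = "192.168.1.201"  # assumption: the new VM's Public Switch IP


def summarize(subnets):
    """Collapse adjacent networks into the fewest covering prefixes."""
    nets = [ipaddress.ip_network(s) for s in subnets]
    return [str(n) for n in ipaddress.collapse_addresses(nets)]


for prefix in summarize(ASDK_SUBNETS):
    print(f"{prefix} via {ROUTER_VM_PUBLIC_IP}")
# prints:
# 192.168.100.0/22 via 192.168.1.201
# 192.168.104.0/23 via 192.168.1.201
# 192.168.200.0/24 via 192.168.1.201
```

Configuring the seven individual /24 routes works equally well; summarizing just means fewer entries to maintain.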

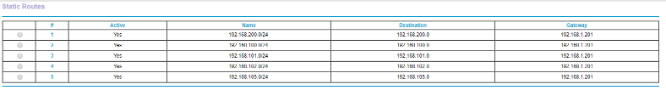

In my case, I configured these routes on my router, as shown in the screenshot.

You will need DNS to resolve entries in the ASDK environment from your corporate network. You can either set up a forwarder from your existing DNS infrastructure to 192.168.200.67 (AzS-DC01), or add 192.168.200.67 as an additional DNS server in your clients' or servers' network settings.

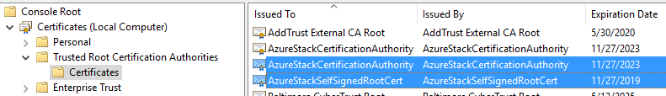

Finally, on the ASDK host, open an MMC and add the Certificates snap-in for the local computer account.

Export the two certificates shown in the screenshot, and import them into the Trusted Root Certification Authorities store on any machine you'll use to access ASDK services.

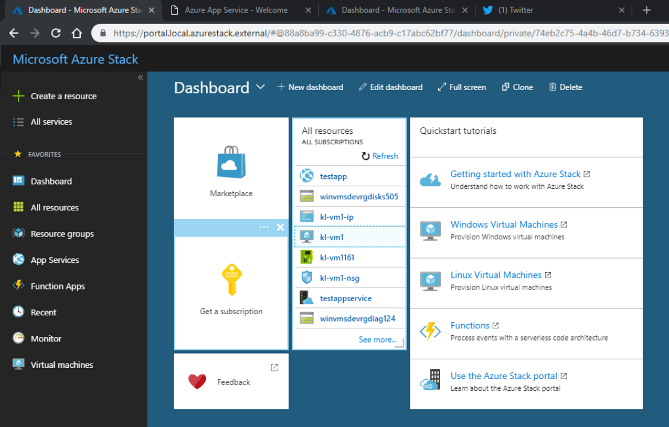

You should now be able to navigate to https://portal.local.azurestack.external from your internal network.

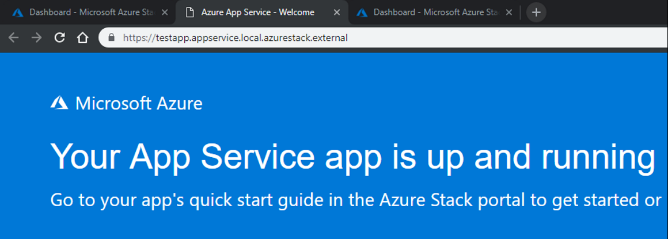

If you deploy any Azure Stack services, for example an App Service WebApp, you will also be able to access those over your internal network.

Even deploying an HTTPTrigger Function App from Visual Studio now works the same way from your internal network to Azure Stack as it does to public Azure.

If at any time you want to roll the environment back to the default configuration, simply power off the new VM you deployed.

This setup enables the testing of many new scenarios that aren't available out of the box with an ASDK, and can significantly enhance the value of having an Azure Stack Development Kit running in your datacenter, enabling new interoperability, migration, integration, hybrid, and multi-cloud scenarios.

Back Up Azure Stack Workloads with Native Data Domain Integration

Mon, 17 Aug 2020 19:03:53 -0000


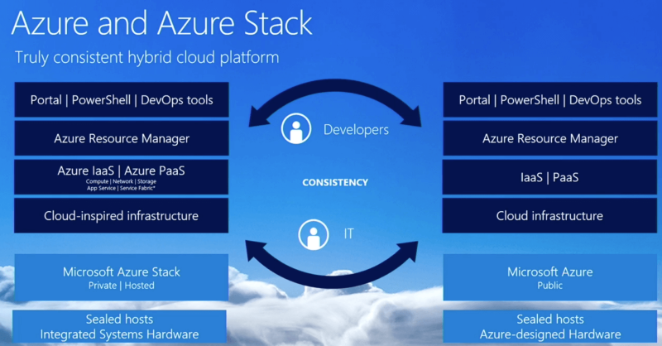

Azure Stack

Azure Stack is designed to help organizations deliver Azure services from their own datacenter. By allowing end users to 'develop once, deploy anywhere' (public Azure or on premises), it lets customers take full advantage of Azure services in scenarios where they otherwise could not, whether due to regulations, data sensitivity, latency, edge use cases, or data location requirements that prevent them from using the public cloud.

Dell EMC co-engineers this solution with Microsoft, adding value through automated deployments, patches, and updates, along with integration of key solutions to meet our customers’ holistic needs. One such value-add, which we're proud to have now launched, is off-stack backup storage integration with Data Domain.

Backup in Azure Stack

Backup of tenant workloads in Azure Stack requires consideration from both an administrator (Azure Stack Operator) and a tenant perspective. From an administrator perspective, tenants must be given a mechanism to perform backups, enabling them to protect their workloads and restore them when needed. Ideally the storage used to hold this backup data long term will not reside on the Azure Stack itself, as this would a) waste valuable Azure Stack storage, and b) not provide protection in the event of an outage or disaster which affects the Azure Stack itself.

In an ideal world, an Azure Stack administrator wants to be able to provide their tenants with resilient, cost effective, off-stack backup storage, which is integrated into the Azure Stack tenant portal, and which enforces admin-defined quotas. Finally, the backup storage target should not force tenants down one particular path when it comes to what backup software they choose to use.

From a tenant perspective, protection and recovery of IaaS workloads in Azure Stack is done today by in-guest agents, often using Azure Stack storage to hold the backup data.

Azure Backup

Microsoft provides native integration of Azure Backup into Azure Stack, enabling tenants to back up their workloads to the public Azure cloud. While this solution suits a subset of Azure Stack customers, many are unable to use Azure Backup due to:

- Lack of connectivity or bandwidth to Public Azure

- Regulatory compliance requirements mandating data resides on-premises

- Cost of recovery - data egress from Azure has an associated cost

- Time for recovery - restoring multiple TB of data from Azure can just take too long

Data Domain

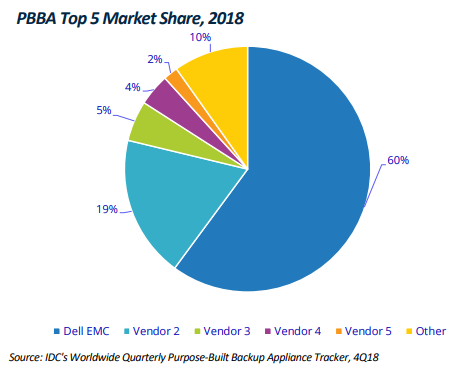

Today, Dell EMC Data Domain leads the purpose-built backup appliance market, holding more than 60% of the market share. Data Domain provides cost-effective, resilient, scalable, reliable storage specifically for holding and protecting backup data on-premises. Many backup vendors make use of Data Domain as a back-end storage target, and many Azure Stack customers have existing Data Domain investments in their datacentres.

Data Domain and Azure Stack Integration

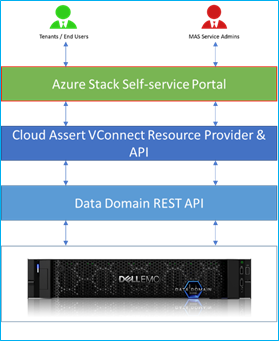

Building on our previous announcement of native Isilon integration into Azure Stack, Dell EMC has continued to work with our partner CloudAssert to develop a native resource provider for Azure Stack which enables the management and provisioning of Data Domain storage from within Azure Stack.

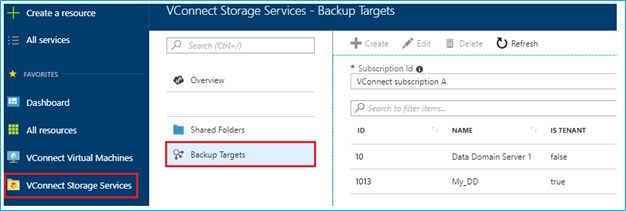

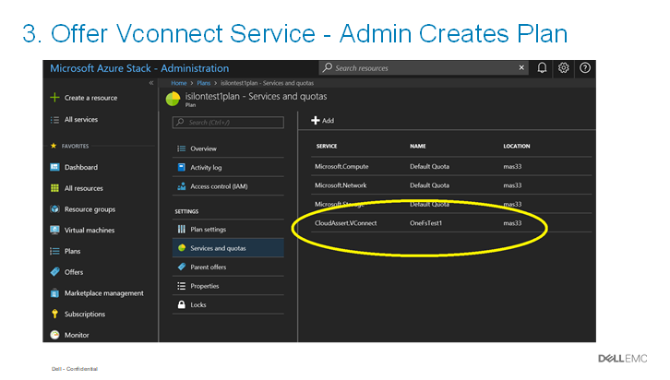

This resource provider enables Azure Stack Operators to provide their tenants with set quotas of Data Domain storage, which they can then use to protect their IaaS workloads. Just like with other Azure Stack services, the Operator assigns a Data Domain quota to a Plan, which is then enforced in tenant Subscriptions.

In the tenant space, Azure Stack tenants are able to deploy their choice of validated backup software - currently Networker, Avamar, Veeam, or Commvault - and then connect that backup software to the Data Domain, with multi-tenancy* and quota management handled transparently.

With a choice of backup software vendors, industry leading data protection with Data Domain, and full integration into the Azure Stack Admin and Tenant portals, Dell EMC is the only Azure Stack vendor to provide a native, multi-tenancy-aware, off-stack backup solution integrated into Azure Stack.

Delving Deeper

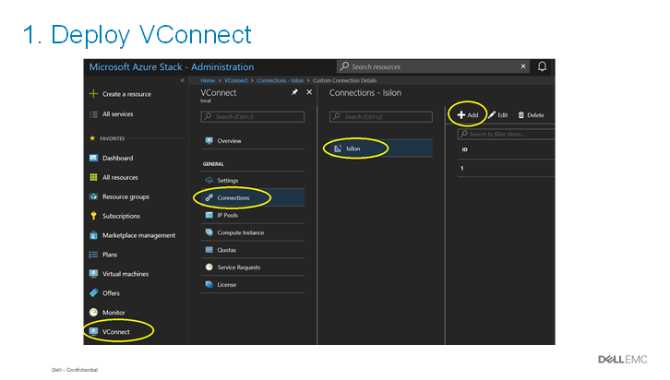

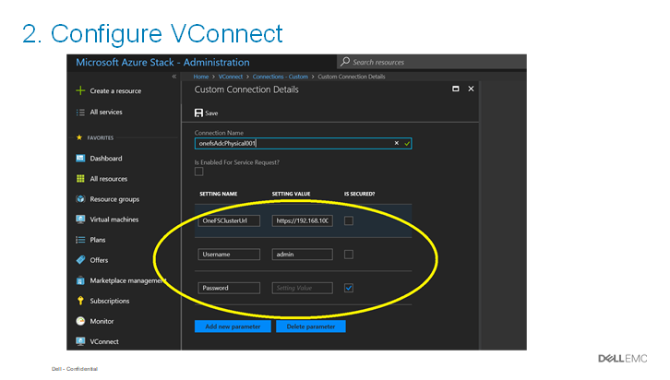

Data Domain data protection services are offered as a solution within the Azure Stack admin and tenant portals, by integrating with the VConnect Resource Provider for Azure Stack. Data Domain integration with the VConnect Resource Provider delivers the following capabilities:

- Managing Data Domain storage quotas like maximum number of MTrees allowed per Tenant, or storage hard limits per MTree

- Managing CIFS shares, NFS exports and DD Boost Storage units

- Honoring role-based access control for the built-in roles of Azure Stack - Owner, Contributor, and Reader

- Tracking the usage consumption of MTree storage and reporting the usage to the Azure Stack pipeline
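To make the quota semantics concrete, here is a hypothetical Python model of the two limits listed above: a maximum number of MTrees per tenant and a hard storage limit per MTree. It is purely illustrative; the class and method names are invented and do not reflect the VConnect Resource Provider's or Data Domain's actual API.

```python
from dataclasses import dataclass, field


@dataclass
class Quota:
    """Hypothetical plan-level quota (names invented for illustration)."""
    max_mtrees: int
    hard_limit_gib: int  # per-MTree hard limit


@dataclass
class Tenant:
    quota: Quota
    mtrees: dict = field(default_factory=dict)  # MTree name -> GiB used

    def create_mtree(self, name):
        if len(self.mtrees) >= self.quota.max_mtrees:
            raise PermissionError("MTree count quota reached")
        self.mtrees[name] = 0

    def write(self, name, gib):
        # A hard limit rejects the write outright rather than just warning.
        if self.mtrees[name] + gib > self.quota.hard_limit_gib:
            raise PermissionError(f"hard limit reached on MTree {name}")
        self.mtrees[name] += gib

    def usage_gib(self):
        """Total consumption, the kind of figure reported to the usage pipeline."""
        return sum(self.mtrees.values())


tenant = Tenant(Quota(max_mtrees=2, hard_limit_gib=100))
tenant.create_mtree("sql-backups")
tenant.write("sql-backups", 60)
print(tenant.usage_gib())  # prints 60
```

In the real integration these limits come from the Operator's Plan and are enforced per tenant Subscription; the model only shows the shape of the checks.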

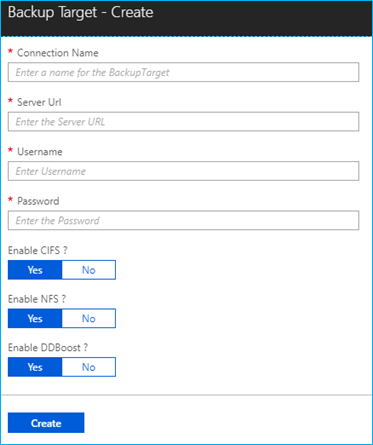

From within the Azure Stack tenant portal, a tenant can configure and manage their own backup targets. In the create backup target wizard, the tenant specifies the connection URL and credentials used to connect to the Data Domain infrastructure.

Each of the three protocols supported by Data Domain (CIFS, NFS, and DD Boost) can be enabled or disabled, and creation of CIFS shares, NFS exports, and DD Boost storage units is allowed based on this configuration.
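The rule that object creation follows the protocol configuration can be sketched as a simple lookup. This is a hypothetical Python model, not the wizard's real schema: the object-type keys, the `BackupTarget` class, and the example URL are invented for illustration.

```python
from dataclasses import dataclass, field

# Which protocol each object type depends on (keys are illustrative names).
PROTOCOL_FOR = {
    "cifs_share": "CIFS",
    "nfs_export": "NFS",
    "ddboost_storage_unit": "DD Boost",
}


@dataclass
class BackupTarget:
    """Hypothetical backup target as configured in the tenant portal."""
    url: str
    enabled: set = field(default_factory=lambda: {"CIFS", "NFS", "DD Boost"})

    def can_create(self, kind: str) -> bool:
        # Creation is allowed only if the object's protocol is enabled.
        return PROTOCOL_FOR[kind] in self.enabled


target = BackupTarget("https://dd01.example.local", enabled={"DD Boost"})
print(target.can_create("ddboost_storage_unit"), target.can_create("nfs_export"))
# prints: True False
```

A target with only DD Boost enabled, as in this example, would allow DD Boost storage units but refuse CIFS shares and NFS exports.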

A full walkthrough of how to configure and manage Data Domain functionality in Azure Stack is included in the Dell EMC Cloud for Microsoft Azure Stack Data Domain Integration whitepaper.

Backup Vendors

While Data Domain functions as the backend storage target for IaaS backups, backup software still needs to be deployed into the tenant space to manage the backup process and scheduling. In this release, we support the following backup vendors:

| Backup Provider | Targeted Version |
| --- | --- |
| NetWorker | NetWorker 9.x |
| Avamar* | Avamar 18.1 |
| Commvault | Commvault Simpana v11 |
| Veeam | Veeam Backup & Replication 9.5 |

*Avamar is currently supported for single-tenant scenarios only, not multi-tenancy.

A tenant, or a cloud operator providing a fully managed service, will deploy their backup software of choice in their tenant space, configure it to use Data Domain as the backend storage target, and immediately have the ability to store their backup data off-stamp, in a secure, protected, and cost-effective platform which respects Azure Stack storage quotas.

Conclusion