The Value of Azure Stack as an IaaS Platform

Mon, 17 Aug 2020 19:08:33 -0000


Out in the field with customers and partners, we hear an awful lot about what people think Azure Stack is and isn't, what its capabilities are, and where it should or shouldn't be used when needs arise for an on-premises solution.

"There's no point in just moving existing VMs into Azure Stack, there are already established virtualization platforms with rich ecosystems which will run them just the same and for a lower cost."

"Sure Azure Stack can run VMs, but it's really designed as a PaaS first platform, not for IaaS."

"Azure Stack is a platform for modern applications, there's no point in just using it for VM workloads."

"I already have an IaaS platform that I'm depreciating over the next four years, I don't need another more expensive one to do the same thing."

Each of the above is a commonly held belief about Azure Stack, and each is built around a grain of truth while missing much of the larger picture around Azure Stack, and indeed Azure and other public cloud platforms.

To be fair this isn't anyone's fault in particular; Azure Stack is a nascent product, and as such messaging around it has pivoted multiple times since it was first announced over three years ago. It wouldn't have been seemly to have been seen to be competing with Hyper-V in any way, and so it was announced and positioned as a PaaS-first play, bringing the rich goodness of Azure platform services back to the edge! While that's still true, at the same time it really does do Azure Stack a massive disservice.

To be clear - Azure Stack is a brilliant IaaS platform for running VM workloads, and takes us leaps and bounds beyond traditional virtualization.

Ok, in retrospect, that wasn't so clear - let's break it down.

Virtualization, Advanced Virtualization, and IaaS are three distinct capabilities which are often conflated.

Infrastructure as a Service isn't simply the ability to run virtual machines. It's a set of management constructs on top of a hypervisor, on top of a fully automated infrastructure, which together support and deliver the essential characteristics of a cloud computing platform.

This isn't a new message from Dell EMC, in fact it's been consistent for quite some time now. Rather than rehash previously trodden ground, I'll instead just recap with a link to Greg's excellent blog below.

https://blog.dellemc.com/en-us/is-that-iaas-or-just-really-good-virtualization/

A lot has changed in Azure Stack in the last year and a half though, and that in and of itself isn't surprising, nor should it be. Azure Stack updates are released on a regular basis, bringing with them new capabilities and improvements, including of course in the IaaS space.

Over and above the monthly updates, and far more importantly though, customers have now had their hands on Azure Stack for over a year since official GA launch, and we have a much better idea of the interesting, innovative, and sometimes downright cool scenarios they're using Azure Stack for in the real world.

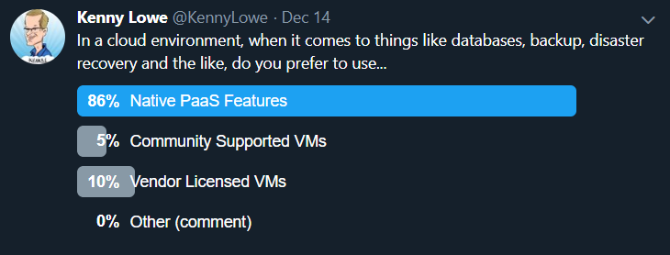

Of course the available Azure PaaS Services are used with gusto, that goes without saying - people prefer to use native PaaS features wherever they can! The Azure App Service and Azure Functions are my personal favourites. Below is a quick Twitter poll I ran, just to gauge if indeed this was general sentiment, and while decidedly unscientific, the results were interesting.

But while Azure Stack is indeed a great PaaS platform, it is definitely not a PaaS only platform, and nor for that matter is Azure by any stretch of the imagination.

Indeed, one of the fastest growing parts of Public Azure today is the migration of existing (appropriate) workloads into Azure as VMs, and don't worry, we'll cover what appropriate means a bit later on. This doesn't involve any day one workload transformation or changes to how applications run. It does typically bring some immediate benefits though, and those benefits are largely the same, or dare I say… consistent, in Azure Stack.

Benefit #1

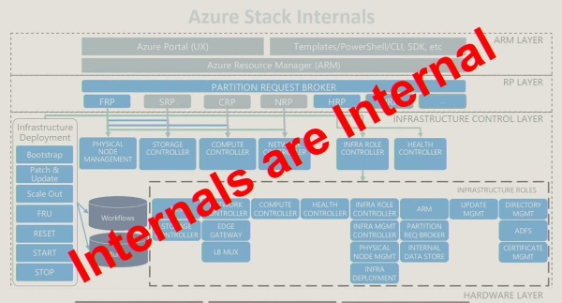

Firstly, and probably most importantly, you don't need to design, deploy, or manage any of the underlying hypervisor or software defined constructs. All of the extremely complex virtual infrastructure which goes into running a platform like Azure Stack is delivered as an appliance, consistent every time.

In most businesses, large portions (if not the majority) of an ops team's time and energy go into the ongoing management of the infrastructure which supports their workloads. Azure Stack is designed to give pretty much all of that time back. In Azure Stack, all of the hypervisor components, the host OS, the software defined networking, the software defined storage, and everything that goes around and supports them is delivered as a turnkey solution, and then patched and updated automatically as part of regular Azure Stack updates, albeit scheduled by you, the admin.

Each of the dozens of Virtual Machines running on top of the platform to support and deliver Azure Stack itself is locked down and delivered to you as a service. Azure Stack updates are delivered on a mostly-monthly cadence, and while they take some time to run, they are fully automated end to end. This includes not just the hypervisor and software defined constructs, but also the ongoing patch and update of all of the virtual infrastructure required to deliver Azure Stack itself.

Through Dell EMC, OEM updates are also automated through our Azure Stack patch and update tool, a unique capability in the Azure Stack OEM market. One of the greatest benefits a true cloud platform delivers is automating you away from the humdrum to where you can spend your time most valuably. Where any part of that solution from bare metal to cloud isn't automated, not only is there potential for human error and configuration drift, but your valuable time is also being wasted. Today, uniquely, Dell EMC provides you both that consistency and that time back.

Benefit #2

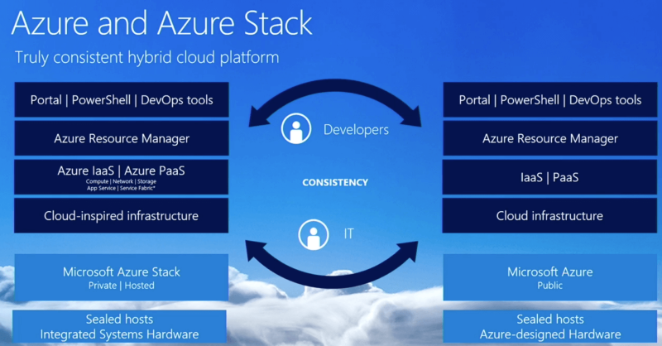

The second benefit to Azure Stack comes through its consistency with Public Azure. Any time and skills investment into learning Public Azure automatically translates to Azure Stack, and vice versa. Any infrastructure as code templates developed through the likes of ARM or Terraform can, with some caveats, work across each platform. Potentially most importantly though, Azure is a mature platform which has been around for many years now, and as such has a robust and well established community.

Many of the challenges you'll encounter, solutions you'll want to deploy, or knowledge you want to gain have already been round the houses in Public Azure. There are many, many public repositories of infrastructure templates out there in the community just waiting to be deployed, and many knowledgebases and courses ready to be scoured for knowledge.

Take the Azure Stack Quickstart Template Gallery for example. There are dozens of pre-built templates there covering a plethora of IaaS application use cases, everything from a single Windows or Linux VM to an Ethereum consortium blockchain infrastructure, and a huge amount in between. Each of these templates can be used as a starting point, or in some cases a finishing point, for deploying your own IaaS infrastructure in Azure Stack.

When you enter the Azure Stack community, you also enter the wider Azure community, and having a community that well established and open is of incredible value.

Benefit #3

The Azure Marketplace is an IaaS-centric 'app store' for the cloud, where software vendors certify through Microsoft and then make available golden images of their software to anyone who wants to deploy it into Azure. Sometimes the resultant VMs are managed by you, sometimes they're delivered as more of an appliance-based offering. Sometimes they're a single VM, sometimes a whole infrastructure will be deployed to deliver the service requested.

The marketplace is one of the most well used parts of Azure, and again note that its focus is not on other Azure PaaS services. It's on delivering as much value from IaaS as possible, giving you images you know you can trust, which have been battle-tested at hyperscale, and which are typically kept up to date by the very people who create the software running on them.

This same marketplace experience exists in Azure Stack, and through it you have the ability to choose which marketplace images you want to syndicate to your own Azure Stack. Not every image is downloaded by default, as they take up some space, so you choose which ones make sense to you, and then make use of them knowing that they're the exact same images as you would deploy in Public Azure.

Benefit #4… to #n

There are many more benefits to 'just' running virtual machines in Azure Stack, from the automated patching of SQL workloads, to the continued three year support of Windows and SQL Server 2008/R2 workloads, to being able to access cloud constructs like object, table, and queue storage, to leveraging VM Scale Sets for horizontal scalability of traditional workloads, to pre-validation for compliance standards like PCI DSS, to service measurement for chargeback, to built-in load balancers, to built-in site to site VPN capabilities, to managed disks and the removal of VM disk and storage management, to extensions providing features to VMs like anti-virus and VM patch and update, to integration of those IaaS workloads with higher tier PaaS capabilities… and so it goes on. The benefits are myriad, and in retrospect should be unsurprising given the popularity of Public Azure for IaaS workloads.

An Azure Stack Operator does still have administrative tasks to carry out, it's true, but they are not the same as those of a virtualization admin, or even an advanced virtualization admin. As we've said, all of the underlying infrastructure that delivers the Azure services is delivered 'as a service', so as ever in cloud your attention is pushed higher, focusing on more rapid update cadence, capacity management, offering services, chargeback, and other more cloud-centric operating tasks.

Azure Stack doesn't replace virtualization

There are two core routes to IaaS workloads entering an Azure Stack:

Fresh Deployment

If you're deploying a fresh infrastructure, you may be doing it to create a new application, or to deploy your own in-house application, or to install a third party vendor's application. For the first two of these, Azure Stack can provide a great platform assuming you follow cloud application development patterns for resiliency. For the third, you're largely in the hands of the vendor. If they require specific hypervisor features, or shared storage between VMs, or specific CPU:RAM:Storage ratios, or high performance OS disks, or… well, all the same reasons an application can't be deployed into Azure apply to Azure Stack.

There's an enormous mass of software out there which will never be rewritten for a cloud-native environment, and yet more which is suited best to environments with more customizability than Azure or Azure Stack provides. For those workloads, existing virtualization platforms with their rich and well established ecosystems remain the best place to run them, even if deploying fresh.

If you are deploying a fresh infrastructure over which you have application control, there's a whole host of cloud-native tooling available to transform how you design, manage, and maintain that application. Infrastructure as Code, VM Scale Sets, Service Fabric and Kubernetes templates, and more all exist to allow you to apply the same DevOps principles to your VMs in Azure Stack as you can in Azure.

Migration

Migrating VMs to Azure Stack is largely the same in principle as migrating VMs to Azure, and just like in Azure, consideration needs to be given to the workload and how (and indeed if) it will run well within the cloud environment. Typically some resizing will need to be done to fit an Azure Stack VM 't-shirt' size, and testing will need to happen to ensure the workload performs as expected. For these reasons and more, when evaluating Azure Stack as a platform it's critical that you evaluate it against the workloads you'll be running, not just the aggregate CPU/RAM/Storage you think you'll need.

Probably the most important consideration for any deployment or migration into Azure or Azure Stack is that these are platforms designed for cloud workloads. The most fundamental difference between a cloud workload and a traditional workload is that workload availability should be delivered and accounted for by the application, not by the infrastructure. That's not to say that Azure Stack isn't a highly available and resilient platform within a rack; it is. However, if a workload needs to be resilient (in particular across sites or racks) but cannot be without traditional hypervisor or storage technologies, then it may not be best suited to run in a cloud platform.

Never Forget: Azure Stack is Azure

Azure Stack is undoubtedly an incredibly powerful IaaS platform, boasting features like the Azure Marketplace that don't even exist in other on-premises platforms. If your workload can be deployed or migrated into Azure Stack, and it does perform well, then all of the above benefits will apply to it. You'll find yourself with an up to date, patched and secure environment, which gives you the time back to start working on higher tier PaaS services without being additive to existing tasks.

Ultimately though the core of the matter is that when you deploy or migrate virtual machines into an Azure Stack environment, you're not just making use of a hypervisor, you're gaining the power, the ecosystem, and the community of the Azure Cloud, and that's a glorious place to be.

Related Blog Posts

Azure Stack Development Kit - Removing Network Restrictions

Mon, 24 Jul 2023 15:06:15 -0000


This process is confirmed working for Azure Stack version 1910.

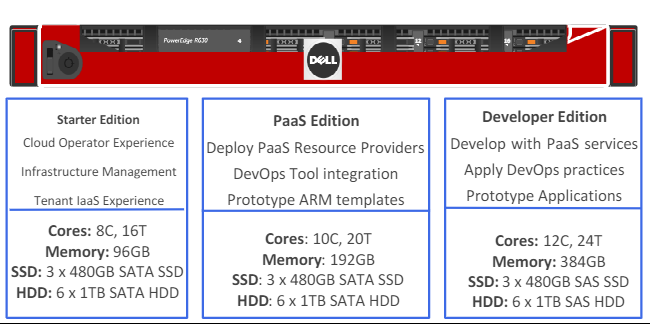

So you've got your hands on an Azure Stack Development Kit (ASDK), hopefully at least of the spec of the PaaS Edition Dell EMC variant below or higher, and you've been testing it for a while now. You've had a kick of the tyres, you've fired up some VMs, syndicated from the marketplace, deployed a Kubernetes cluster from template, deployed Web and API Apps, and had some fun with Azure Functions.

All of this is awesome and can give you a great idea of how Azure Stack can work for you, but there comes a time where you want to see how it'll integrate with the rest of your corporate estate. One of the design limitations for the ASDK is that it's enclosed in a Software Defined Networking (SDN) boundary, which limits access to the Azure Stack infrastructure and any tenant workloads deployed in it to being accessed from the ASDK host. Tenant workloads are able to route out to your corporate network, however nothing can talk back in.

There's a documented process for allowing VPN Access to the ASDK to allow multiple people to access the tenant and admin portals from their own machines at the same time, but this doesn't allow access to deployed resources, and nor does it allow your other existing server environments to connect to them.

There are a few blogs out there which have existed since the technical preview days of Azure Stack, however they're either now incomplete or inaccurate, don't work in all environments, or require advanced networking knowledge to follow. The goal of this blog is to provide a method to open up the ASDK environment to deliver the same tenant experience you'll get with a full multi-node Azure Stack deployed in your corporate network.

Note: When you deploy an Azure Stack production environment, you have to supply a 'Public VIP' network range which will function as external IPs for services deployed in Azure Stack. This range can either be internal to your corporate network, or a true public IP range. Most enterprises deploy within their corporate network while most service providers deploy with public IPs, to replicate the Azure experience. The output of this process will deliver a similar experience to an Azure Stack deployed in your internal network.

The rest of this blog assumes you have already deployed your ASDK and finished all normal post-deployment activities such as registration and deployment of PaaS resource providers.

Removing Network Restrictions

This process is designed to be non-disruptive to the ASDK environment, and can be fully rolled back without needing a re-deployment.

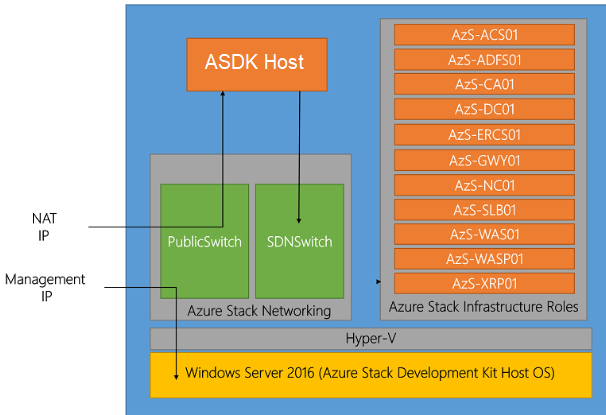

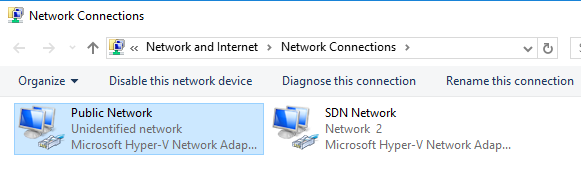

Within the ASDK environment there are two Hyper-V switches: a Public Switch and an SDN Switch.

- The Public Switch is attached to your internal/corporate network, and provides you the ability to RDP to the host to manage the ASDK.

- The SDN Switch is a Hyper-V 2016 SDN managed switch which provides all of the networking for all ASDK infrastructure and tenant VMs which are and will be deployed.

The ASDK Host has NICs attached to both Public and SDN switches, and has a NAT set up to allow access outbound to the corporate network and (in a connected scenario) the internet.

Rather than make any changes to the host which might be a pain to roll back later, we'll deploy a brand new VM which will have a second NAT, operating in the opposite direction. This makes rollback a simple case of decommissioning that VM in the future.

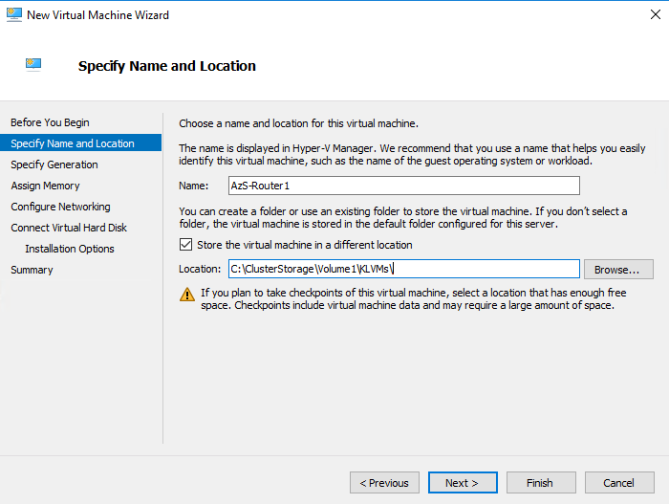

On the ASDK Host open up Hyper-V Manager, and deploy a new Windows Server 2016 VM. You can place the VM files in a new folder in the C:\ClusterStorage\Volume1 CSV.

The VM can be Generation 1 or Generation 2, it doesn't make a difference for our purposes here. I've just used the Gen 1 default as it's consistent with Azure.

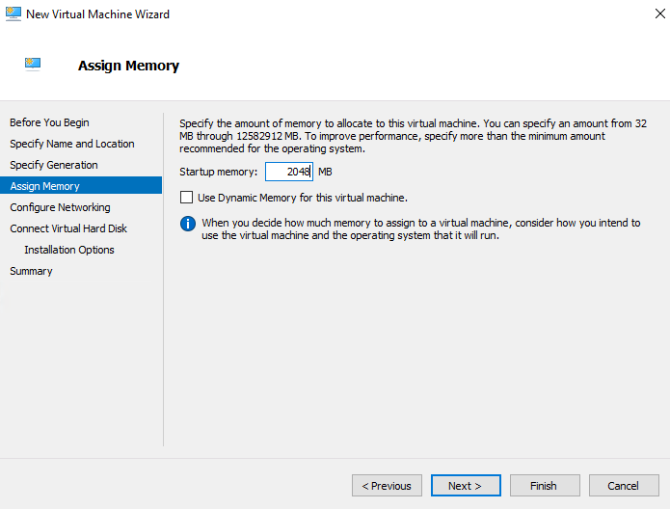

Set the Startup Memory to at least 2048MB and do not use Dynamic Memory.

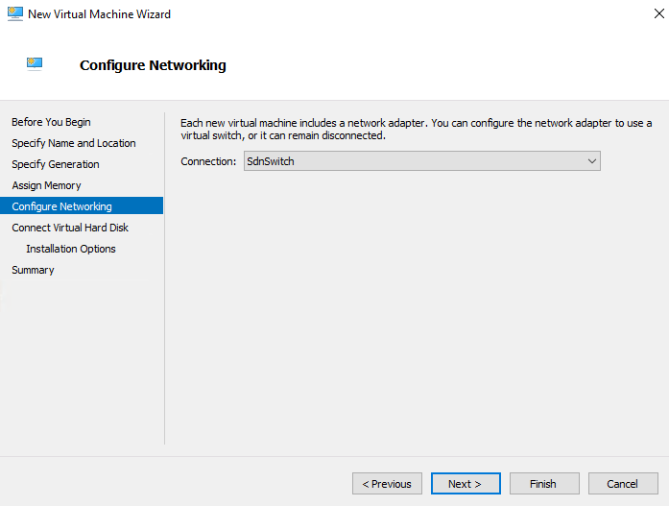

Attach the network to the SdnSwitch.

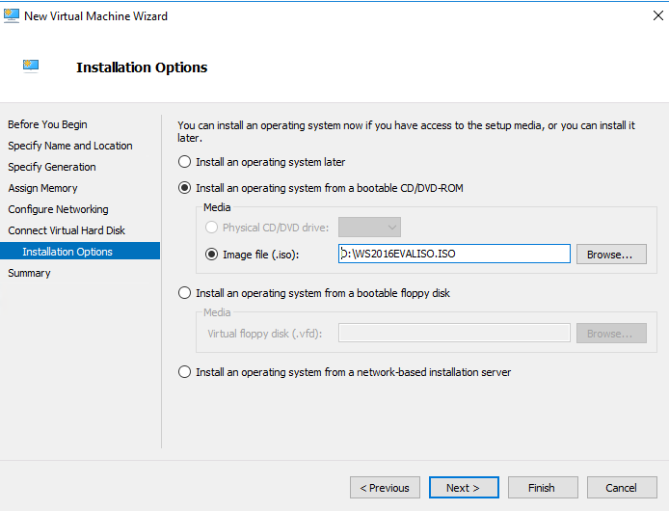

Click through the Hard Disk options, and then on the Installation Options, specify a Server 2016 ISO. Typically you'll have one on-host already from doing the ASDK deployment, so just use that.

Finish the wizard, but do not power on the VM.
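If you'd rather script the wizard steps above, the following is a rough PowerShell equivalent using the Hyper-V module on the ASDK host. Treat it as a sketch under assumptions rather than a tested recipe: the ISO path and the 60GB disk size are hypothetical, and the VM name AzS-Router1 is simply the name used in the isolation script later in this post.

```powershell
# Sketch only: the ISO path and disk size are assumptions, adjust them to your host.
$vmName  = 'AzS-Router1'                      # name referenced by the isolation script later on
$vmRoot  = 'C:\ClusterStorage\Volume1'        # CSV suggested above; New-VM creates a subfolder per VM
$isoPath = 'C:\ISO\WindowsServer2016.iso'     # hypothetical location of your Server 2016 ISO

# Generation 1 VM, 2GB startup memory, NIC attached to the SDN switch.
New-VM -Name $vmName `
       -Generation 1 `
       -MemoryStartupBytes 2GB `
       -Path $vmRoot `
       -NewVHDPath "$vmRoot\$vmName\$vmName.vhdx" `
       -NewVHDSizeBytes 60GB `
       -SwitchName 'SdnSwitch'

# Make sure Dynamic Memory is off, as recommended above.
Set-VMMemory -VMName $vmName -DynamicMemoryEnabled $false

# Mount the install ISO in the VM's DVD drive.
Set-VMDvdDrive -VMName $vmName -Path $isoPath

# Deliberately no Start-VM here: the VM stays powered off until the SDN settings are applied.
```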

While we've attached the VM's NIC to the SDN Network, because that network is managed by a Server 2016 SDN infrastructure, it won't be able to communicate with any other VM resources attached to it by default. First we have to make this VM part of that SDN family.

In an elevated PowerShell window on your ASDK host, run the following:

$Isolation = Get-VM -VMName 'AzS-DC01' | Get-VMNetworkAdapter | Get-VMNetworkAdapterIsolation

$VM = Get-VM -VMName 'AzS-Router1'

$VMNetAdapter = $VM | Get-VMNetworkAdapter

$IsolationSettings = @{

IsolationMode = 'Vlan'

AllowUntaggedTraffic = $true

DefaultIsolationID = $Isolation.DefaultIsolationID

MultiTenantStack = 'off'

}

$VMNetAdapter | Set-VMNetworkAdapterIsolation @IsolationSettings

Set-PortProfileId -resourceID ([System.Guid]::Empty.tostring()) -VMName $VM.Name -VMNetworkAdapterName $VMNetAdapter.Name

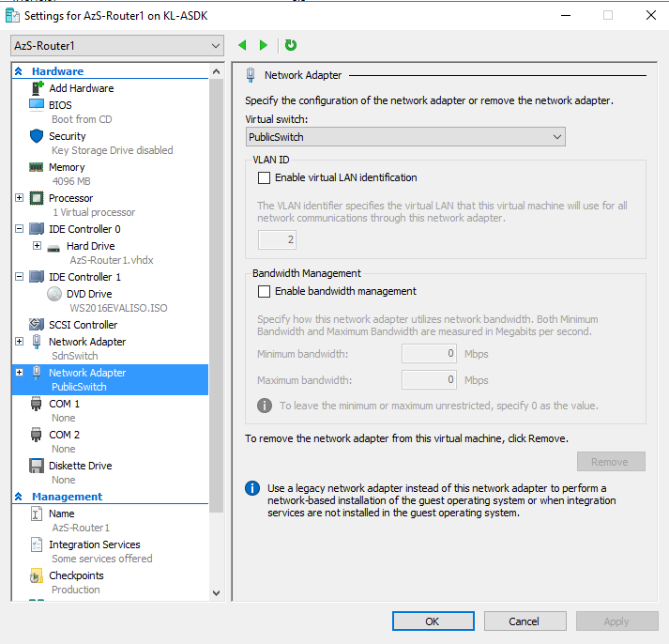

Now that this NIC is part of the SDN infrastructure, we can go ahead and add a second NIC and connect it to the Public Switch.
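Adding the second NIC can also be done from the same elevated PowerShell window on the host. This is a minimal sketch: the switch name 'PublicSwitch' and the adapter name 'Public' are assumptions, so check the real switch name with Get-VMSwitch first.

```powershell
# List the switches on the host so you can confirm the public switch's actual name.
Get-VMSwitch | Select-Object Name, SwitchType

# 'PublicSwitch' and 'Public' are assumed names; substitute what Get-VMSwitch reports.
Add-VMNetworkAdapter -VMName 'AzS-Router1' -SwitchName 'PublicSwitch' -Name 'Public'
```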

Now you can power on the VM, and install the Server 2016 operating system - this VM does not need to be domain joined. Once done, open a console to the VM from Hyper-V Manager.

Open the network settings, and rename the NICs to make them easier to identify.

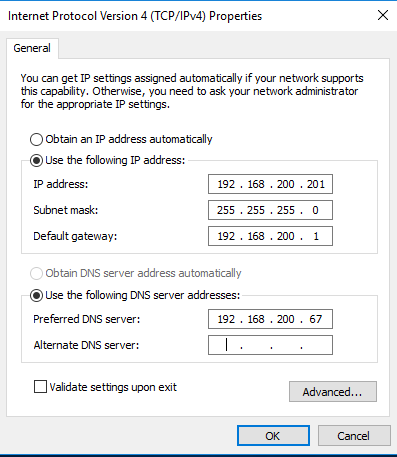

Give the SDN NIC the following settings:

IP Address: 192.168.200.201

Subnet: 255.255.255.0

Default Gateway: 192.168.200.1

DNS Server: 192.168.200.67

The IP Address is an unused IP on the infrastructure range.

The Default Gateway is the IP Address of the ASDK Host, which still handles outbound traffic.

The DNS Server is the IP Address of AzS-DC01, which handles DNS resolution for all Azure Stack services.

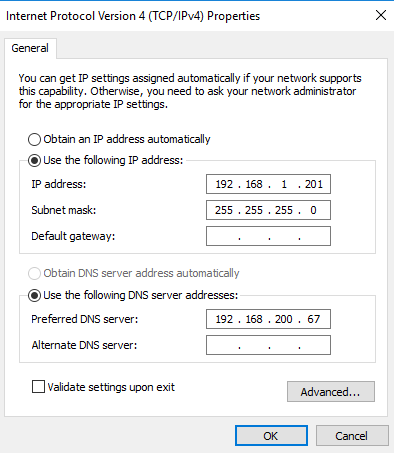

Give the Public Network NIC an unused IP Address on your corporate network. Don't use DHCP for this, as you don't want a default gateway to be set. In my case, my internal network is 192.168.1.0/24, and I've given the same final octet as the SDN NIC so it's easier for me to remember.
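The same NIC settings can be applied from an elevated PowerShell window inside the VM instead of the GUI. This sketch assumes you renamed the adapters to 'SDN' and 'Public' (hypothetical names), and that your corporate network is 192.168.1.0/24 as in my example.

```powershell
# Adapter aliases 'SDN' and 'Public' are assumptions; check yours with Get-NetAdapter.

# SDN NIC: static IP on the infrastructure range, gateway is the ASDK host.
New-NetIPAddress -InterfaceAlias 'SDN' `
                 -IPAddress 192.168.200.201 `
                 -PrefixLength 24 `
                 -DefaultGateway 192.168.200.1

# DNS for the SDN NIC points at AzS-DC01.
Set-DnsClientServerAddress -InterfaceAlias 'SDN' -ServerAddresses 192.168.200.67

# Public NIC: static IP on the corporate network, intentionally with no default gateway.
New-NetIPAddress -InterfaceAlias 'Public' `
                 -IPAddress 192.168.1.201 `
                 -PrefixLength 24
```

Leaving -DefaultGateway off the second call is the point of avoiding DHCP here: the VM should only have one default route, via the ASDK host.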

On the VM, open an elevated PowerShell window, and run the following command:

New-NetNAT -Name "NATSwitch" -InternalIPInterfaceAddressPrefix "192.168.1.0/24" -Verbose

Replace the IP range in this command with the subnet of your own internal network.

While we have a default route set up to the ASDK Host, Azure Stack also uses a Software Load Balancer as part of the SDN infrastructure, AzS-SLB01. In order for all to work correctly, we need to set up some static routes from the new VM to pass appropriate traffic to the SLB.

Run the following on your new VM to add the appropriate static routes:

$range = 2..48

foreach ($r in $range) { route add -p "192.168.102.$($r)" mask 255.255.255.255 192.168.200.64 }

$range = 1..8

foreach ($r in $range) { route add -p "192.168.105.$($r)" mask 255.255.255.255 192.168.200.64 }

That's all the setup on the new VM complete.

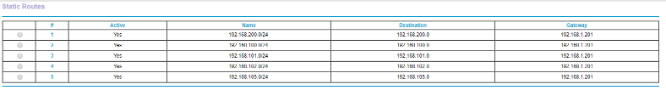

Next you will need to add appropriate routing to your internal network or clients. How you do this is up to you, however you'll need to set up the following routes:

Each of the following networks needs to use the Public Switch IP of the new VM you deployed as its gateway:

- 192.168.100.0/24

- 192.168.101.0/24

- 192.168.102.0/24

- 192.168.103.0/24

- 192.168.104.0/24

- 192.168.105.0/24

- 192.168.200.0/24
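If you only want to test from a single Windows client rather than change your router, the routes listed above could be added locally along these lines. This is a sketch under assumptions: 192.168.1.201 is the Public NIC address from my example network, and 'Ethernet' is a hypothetical interface alias for the client's corporate NIC.

```powershell
# Assumed values: substitute your new VM's Public NIC IP and your client's adapter alias.
$gateway = '192.168.1.201'
$ifAlias = 'Ethernet'

$prefixes = @(
    '192.168.100.0/24',
    '192.168.101.0/24',
    '192.168.102.0/24',
    '192.168.103.0/24',
    '192.168.104.0/24',
    '192.168.105.0/24',
    '192.168.200.0/24'
)

# Add one route per ASDK network, each pointing at the new VM as next hop.
foreach ($prefix in $prefixes) {
    New-NetRoute -DestinationPrefix $prefix -NextHop $gateway -InterfaceAlias $ifAlias
}
```

Run this from an elevated PowerShell window. Setting the routes once on your router or core switch is still the tidier option if more than a handful of machines need access.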

In my case, I configured this on my router as below.

You will need DNS to be able to resolve entries in the ASDK environment from your corporate network. You can either set up a forwarder from your existing DNS infrastructure to 192.168.200.67 (AzS-DC01), or you can add 192.168.200.67 as an additional DNS server in your client or server's network settings.
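If your existing DNS runs on Windows Server, a conditional forwarder is one way to implement the first option. The sketch below assumes the DnsServer PowerShell module is available on that server, and that the zone to forward is local.azurestack.external (inferred from the default ASDK portal URL; adjust it if your deployment uses a different external domain).

```powershell
# Run on your corporate Windows DNS server in an elevated session.
# The zone name is an assumption based on the default ASDK portal URL.
Add-DnsServerConditionalForwarderZone -Name 'local.azurestack.external' `
                                      -MasterServers 192.168.200.67
```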

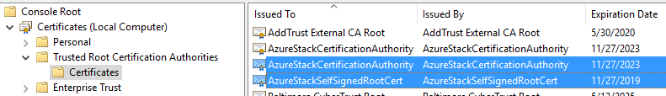

Finally, on the ASDK Host, open up an MMC and add the Local Certificates snap-in.

Export the following two certificates, and import them to the Trusted Root CA container on any machine you'll be accessing ASDK services from.

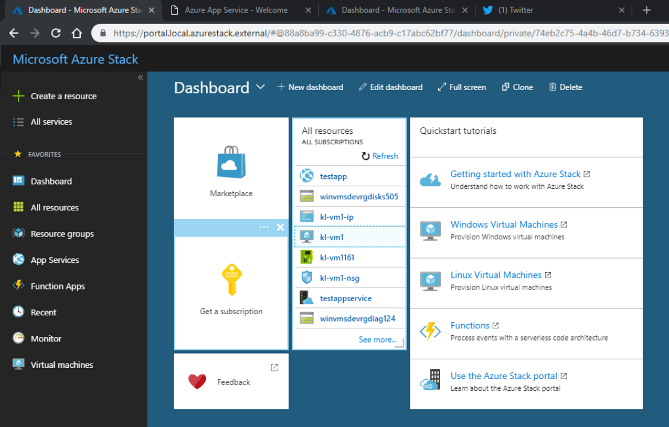

You should now be able to navigate to https://portal.local.azurestack.external from your internal network.
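Before opening a browser, a quick sanity check from a machine on your internal network can confirm that both name resolution and routing are in place. A minimal sketch using built-in cmdlets:

```powershell
# Check that the portal name resolves when asking AzS-DC01 directly.
Resolve-DnsName -Name 'portal.local.azurestack.external' -Server 192.168.200.67

# Check that HTTPS to the portal is reachable through the new routes.
Test-NetConnection -ComputerName 'portal.local.azurestack.external' -Port 443
```

If the first command fails, look at the routes to 192.168.200.0/24; if the second fails, look at the remaining routes and the static routes on the new VM.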

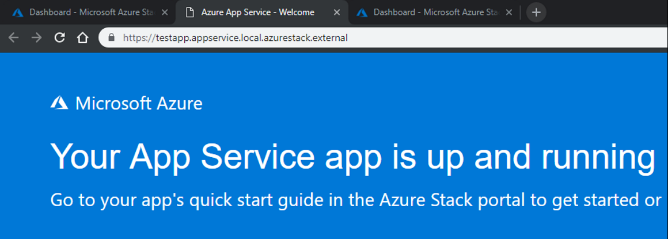

If you deploy any Azure Stack services, for example an App Service WebApp, you will also be able to access those over your internal network.

Even deployment of an HTTPTrigger Function App from Visual Studio now works the same from your internal network to Azure Stack as it does to Public Azure.

If at any time you want to roll the environment back to the default configuration, simply power off the new VM you deployed.
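From the ASDK host, the rollback could look something like the following. It assumes the VM is named AzS-Router1, as in the isolation script earlier.

```powershell
# Shut down the router VM; inbound access to the ASDK networks stops with it.
Stop-VM -Name 'AzS-Router1'

# Confirm that it is off.
Get-VM -Name 'AzS-Router1' | Select-Object Name, State
```

Any routes or DNS forwarders you added on your corporate network are harmless once the VM is off, but you may want to remove them to keep things tidy.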

This setup enables the testing of many new scenarios that aren't available out of the box with an ASDK, and can significantly enhance the value of having an Azure Stack Development Kit running in your datacenter, enabling new interoperability, migration, integration, hybrid, and multi-cloud scenarios.

Back Up Azure Stack Workloads with Native Data Domain Integration

Mon, 17 Aug 2020 19:03:53 -0000


Azure Stack

Azure Stack is designed to help organizations deliver Azure services from their own datacenter. By allowing end users to 'develop once and deploy anywhere' (public Azure or on premises), customers can now take full advantage of Azure services in various scenarios that they otherwise could not, whether due to regulations, data sensitivity, latency, edge use cases, or location of data that prevents them from using public cloud.

Dell EMC co-engineers this solution with Microsoft, with added value in automation of deployments, patches and updates, along with integration of various key solutions to meet our customers’ holistic needs. One such value add which we're proud to have now launched is off-stack backup storage integration, with Data Domain.

Backup in Azure Stack

Backup of tenant workloads in Azure Stack requires consideration both from an administrator (Azure Stack Operator), and tenant perspective. From an administrator perspective, a mechanism has to be provided to tenants in order to perform backups, enabling them to protect their workloads in the event that they need to be restored. Ideally the storage used to hold this backup data long term will not reside on the Azure Stack itself, as this will a) waste valuable Azure Stack storage, and b) not provide protection in the event of outage or a disaster scenario which affects the Azure Stack itself.

In an ideal world, an Azure Stack administrator wants to be able to provide their tenants with resilient, cost effective, off-stack backup storage, which is integrated into the Azure Stack tenant portal, and which enforces admin-defined quotas. Finally, the backup storage target should not force tenants down one particular path when it comes to what backup software they choose to use.

From a tenant perspective, protection and recovery of IaaS workloads in Azure Stack is done by in-guest agent today, often making use of Azure Stack storage to hold the backup data.

Azure Backup

Microsoft provides native integration of Azure Backup into Azure Stack, enabling tenants to back up their workloads to the Azure Public cloud. While this solution suits a subset of Azure Stack customers, there are many who are unable to use Azure Backup, due to…

- Lack of connectivity or bandwidth to Public Azure

- Regulatory compliance requirements mandating data resides on-premises

- Cost of recovery - data egress from Azure has an associated cost

- Time for recovery - restoring multiple TB of data from Azure can just take too long

Data Domain

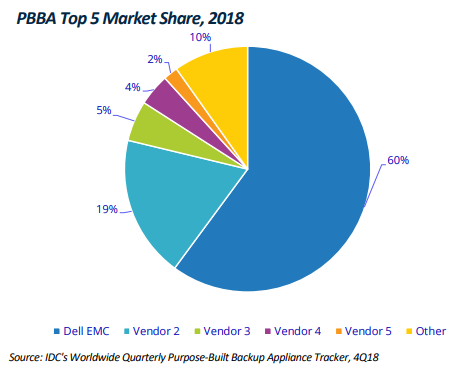

Today, Dell EMC Data Domain leads the purpose-built backup appliance market, holding more than 60% of the market share. Data Domain provides cost-effective, resilient, scalable, reliable storage specifically for holding and protecting backup data on-premises. Many backup vendors make use of Data Domain as a back-end storage target, and many Azure Stack customers have existing Data Domain investments in their datacentres.

Data Domain and Azure Stack Integration

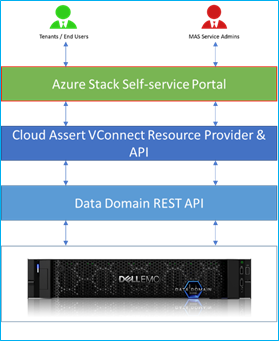

Extending on our previous announcement of native Isilon integration into Azure Stack, Dell EMC have continued work with our partner CloudAssert, to develop a native resource provider for Azure Stack which enables the management and provisioning of Data Domain storage from within Azure Stack.

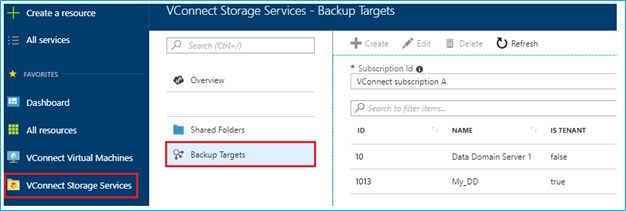

This resource provider enables Azure Stack Operators to provide their tenants with set quotas of Data Domain storage, which they can then use to protect their IaaS workloads. Just like with other Azure Stack services, the Operator assigns a Data Domain quota to a Plan, which is then enforced in tenant Subscriptions.

In the tenant space, Azure Stack tenants are able to deploy their choice of validated backup software - currently NetWorker, Avamar, Veeam, or Commvault - and then connect that backup software to the Data Domain, with multi-tenancy* and quota management handled transparently.

With a choice of backup software vendors, industry leading data protection with Data Domain, and full integration into the Azure Stack Admin and Tenant portals, Dell EMC is the only Azure Stack vendor to provide a native, multi-tenancy-aware, off-stack backup solution integrated into Azure Stack.

Delving Deeper

Data Domain data protection services are offered as a solution within the Azure Stack admin and tenant portals, by integrating with the VConnect Resource Provider for Azure Stack. Data Domain integration with the VConnect Resource Provider delivers the following capabilities:

- Managing Data Domain storage quotas like maximum number of MTrees allowed per Tenant, or storage hard limits per MTree

- Managing CIFS shares, NFS exports and DD Boost Storage units

- Honoring role-based access control for the built-in roles of Azure Stack - Owner, Contributor, and Reader

- Tracking the usage consumption of MTree storage and reporting the usage to the Azure Stack pipeline

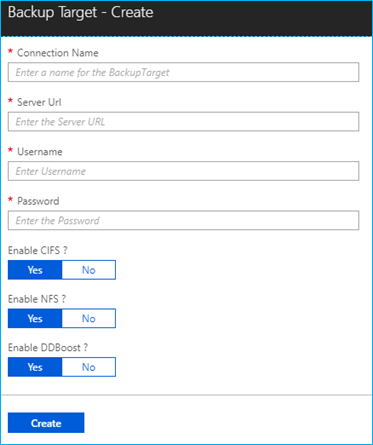

From within the Azure Stack Tenant portal, a tenant can configure and manage their own backup targets. From the create backup target wizard, the connection URL and credentials are specified which will be used to connect to the Data Domain infrastructure.

The three protocols supported by Data Domain - namely CIFS, NFS and DD Boost - can each be enabled or disabled. Creation of CIFS shares, NFS exports, and DD Boost storage units is allowed based on this configuration.

A full walkthrough of how to configure and manage Data Domain functionality in Azure Stack is included in the Dell EMC Cloud for Microsoft Azure Stack Data Domain Integration whitepaper.

Backup Vendors

While Data Domain functions as the backend storage target for IaaS backups, backup software still needs to be deployed into the tenant space to manage the backup process and scheduling. In this release, we support the following backup vendors:

| Backup Provider | Targeted Version |
| --- | --- |
| NetWorker | NetWorker 9.x |
| Avamar* | Avamar 18.1 |
| Commvault | Commvault Simpana v11 |
| Veeam | Veeam Backup & Replication 9.5 |

*Avamar is currently supported for single-tenant scenarios only, not multi-tenancy.

A tenant, or a cloud operator providing a fully managed service, will deploy their backup software of choice in their tenant space, configure it to use Data Domain as the backend storage target, and immediately have the ability to store their backup data off-stamp, in a secure, protected, and cost-effective platform which respects Azure Stack storage quotas.

Conclusion

With the release of the VConnect resource provider for Data Domain in Azure Stack, Dell EMC is reaffirming its commitment to the Azure Stack market and to our customers by continuing to lead the market in Azure Stack innovation. The understanding that Azure Stack does not live in a silo, but instead needs to integrate and extend into the wider datacentre landscape, is a key tenet of the Dell EMC Azure Stack vision, and we're committed to continuing to uniquely innovate in this important space.

To find out more about Data Domain integration for Dell EMC Cloud for Microsoft Azure Stack, please contact kenny.lowe@dell.com.