Supercharge Inference Performance at the Edge using the Dell EMC PowerEdge XE2420

Wed, 05 Jul 2023 13:43:30 -0000


Deployment of compute at the Edge enables the real-time insights that inform competitive decision making. Application data is increasingly coming from outside the core data center (“the Edge”) and harnessing all that information requires compute capabilities outside the core data center. It is estimated that 75% of enterprise-generated data will be created and processed outside of a traditional data center or cloud by 2025.[1]

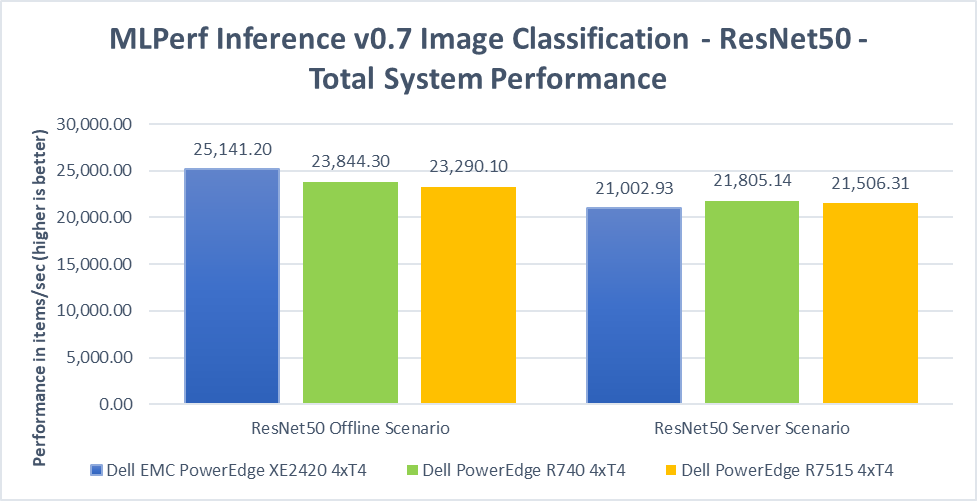

This blog demonstrates that the Dell EMC PowerEdge XE2420, a high-performance Edge server, performs AI inference efficiently by supporting up to four NVIDIA T4 GPUs in an edge-friendly, short-depth chassis. The XE2420 with four NVIDIA T4 GPUs classifies images at 25,141 images/second, performance equal to that of conventional 2U rack servers, and this parity holds across the range of benchmarks.

XE2420 Features and Capabilities

The Dell EMC PowerEdge XE2420 is a 16” (400mm) deep, high-performance server that is purpose-built for the Edge. The XE2420 has features that provide dense compute, simplified management and robust security for harsh edge environments.

Built for performance: Powerful 2U, two-socket performance with the flexibility to add up to four accelerators per server and a maximum local storage of 132TB.

Designed for harsh edge environments: Tested to Network Equipment-Building System (NEBS) guidelines, with extended operating temperature tolerance of 5˚-45˚C without sacrificing performance, and an optional filtered bezel to guard against dust. Short depth for edge convenience and lower latency.

Integrated security and consistent management: Robust, integrated security with cyber-resilient architecture, and the new iDRAC9 with Datacenter management experience. Front accessible and cold-aisle serviceable for easy maintenance.

The XE2420 allows for flexibility in the type of GPUs you use, in order to accelerate a wide variety of workloads including high-performance computing, deep learning training and inference, machine learning, data analytics, and graphics. It can support up to 2x NVIDIA V100/S PCIe, 2x NVIDIA RTX6000, or up to 4x NVIDIA T4.

Edge Inferencing with the T4 GPU

The NVIDIA T4 is optimized for mainstream computing environments and uniquely suited for Edge inferencing. Packaged in an energy-efficient 70-watt, small PCIe form factor, it features multi-precision Turing Tensor Cores and new RT Cores to deliver power-efficient inference performance. Combined with accelerated containerized software stacks from NGC, the XE2420 with NVIDIA T4 GPUs is a powerful solution for deploying AI applications at scale on the edge.

Fig 1: NVIDIA T4 Specifications

Fig 2: Dell EMC PowerEdge XE2420 w/ 4x T4 & 2x 2.5” SSDs

Dell EMC PowerEdge XE2420 MLPerf Inference Tested Configuration

| Component | Configuration |
|---|---|
| Processors | 2x Intel Xeon Gold 6252 CPU @ 2.10GHz |
| Storage | 1x 2.5" SATA 250GB; 1x 2.5" NVMe 4TB |
| Memory | 12x 32GB 2666MT/s DDR4 DIMM |
| GPUs | 4x NVIDIA T4 |
| OS | Ubuntu 18.04.5 |
| Software | TensorRT 7.2; CUDA 11.0 Update 1; cuDNN 8.0.2; DALI 0.25.0 |
| Hardware Settings | ECC off |

Inference Use Cases at the Edge

As computing further extends to the Edge, higher performance and lower latency become vastly more important in order to decrease response time and reduce bandwidth. One suite of diverse and useful inference workload benchmarks is MLPerf. MLPerf Inference demonstrates performance of a system under a variety of deployment scenarios and aims to provide a test suite to enable balanced comparisons between competing systems along with reliable, reproducible results.

The MLPerf Inference v0.7 suite covers a variety of workloads, including image classification, object detection, natural language processing, speech-to-text, recommendation, and medical image segmentation. Specific scenarios covered include “offline”, which represents batch processing applications such as mass image classification on existing photos, and “server”, which represents an application where query arrival is random, and latency is important. An example of server is essentially any consumer-facing website where a consumer is waiting for an answer to a question. Many of these workloads are directly relevant to Telco & Retail customers, as well as other Edge use cases where AI is becoming more prevalent.

Measuring Inference Performance using MLPerf

We demonstrate inference performance for the XE2420 + 4x NVIDIA T4 accelerators across the 6 benchmarks of MLPerf Inference v0.7 in order to showcase the versatility of the system. The inference benchmarking was performed on:

- Offline and Server scenarios at 99% accuracy for ResNet50 (image classification), RNNT (speech-to-text), and SSD-ResNet34 (object detection)

- Offline and Server scenarios at 99% and 99.9% accuracy for BERT (NLP) and DLRM (recommendation)

- Offline scenario at 99% and 99.9% accuracy for 3D-Unet (medical image segmentation)

These results and the corresponding code are available at the MLPerf website.[1]

Key Highlights

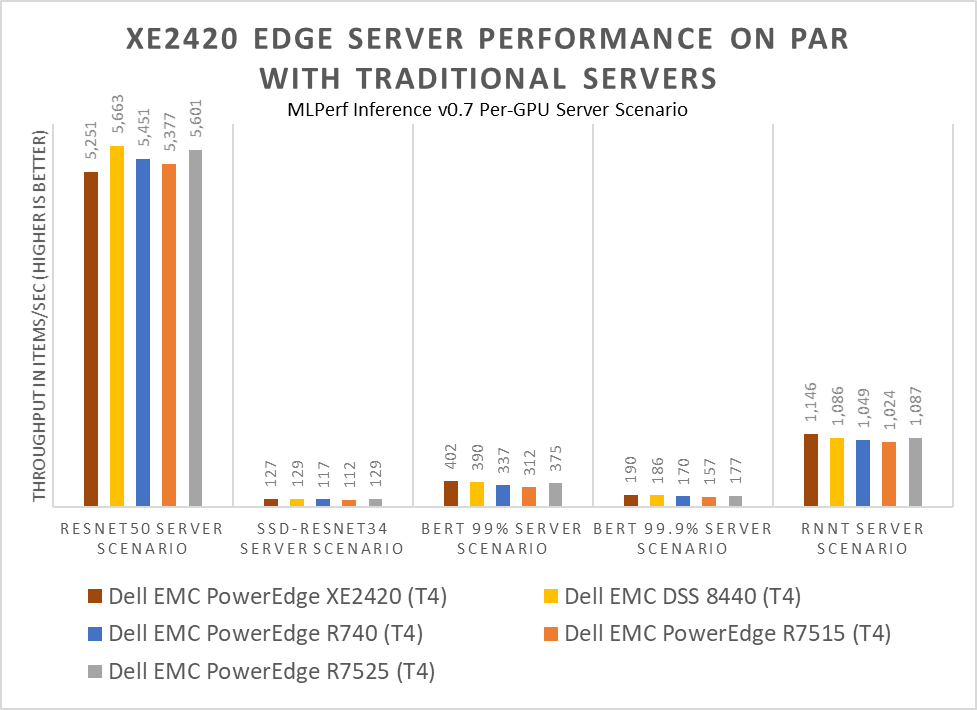

The XE2420 is a compact server that supports 4x 70W T4 GPUs in an efficient manner, reducing overall power consumption without sacrificing performance. This high density and efficient power draw give it increased performance-per-dollar, especially on a per-GPU basis.

Additionally, the PowerEdge XE2420 is part of the NVIDIA NGC-Ready and NGC-Ready for Edge validation programs[i]. At Dell, we understand that performance is critical, but customers are not willing to compromise quality and reliability to achieve maximum performance. Customers can confidently deploy inference and other software applications from the NVIDIA NGC catalog knowing that the PowerEdge XE2420 meets the requirements set by NVIDIA to deploy customer workloads on-premises or at the Edge.

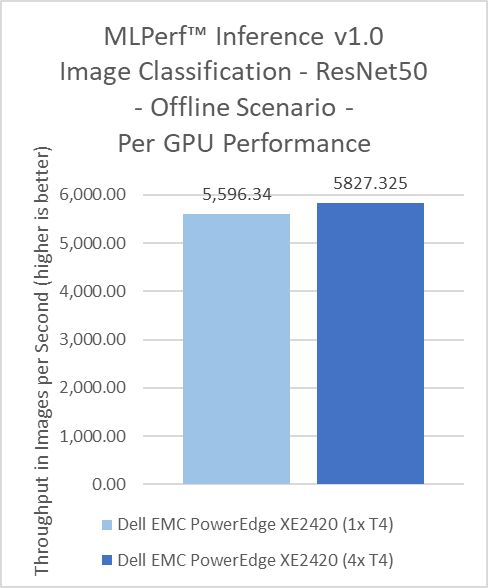

In the chart above, per-GPU (that is, 1x T4) performance numbers are derived by dividing each system's total performance on MLPerf Inference v0.7 by the total number of accelerators in that system. The XE2420 + T4 shows equivalent per-card performance to other Dell EMC + T4 offerings across the range of MLPerf tests.
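As a rough illustration of that per-GPU derivation, the sketch below divides the XE2420 totals from the v0.7 results table at the end of this post by the four installed T4s. The dictionary and function names are made up for this example; only the throughput figures come from the post.

```python
# Total-system throughput (items per second) for the XE2420 with 4x T4,
# copied from the MLPerf Inference v0.7 results table in this post.
V07_TOTALS = {
    ("ResNet50", "Offline"): 25141,
    ("ResNet50", "Server"): 21002,
    ("RNNT", "Offline"): 6239,
    ("RNNT", "Server"): 4584,
    ("SSD-ResNet-34", "Offline"): 568,
    ("SSD-ResNet-34", "Server"): 509,
}
NUM_GPUS = 4  # accelerators in the tested configuration


def per_gpu(total_throughput, num_gpus):
    """Average throughput contributed by one accelerator."""
    return total_throughput / num_gpus


per_gpu_results = {
    key: per_gpu(total, NUM_GPUS) for key, total in V07_TOTALS.items()
}

for (benchmark, scenario), value in per_gpu_results.items():
    print(f"{benchmark:14s} {scenario:8s} {value:10.2f} items/s per T4")
```

This is an average, not a measurement of a single-GPU system, so it says nothing about how throughput would change if GPUs were removed.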

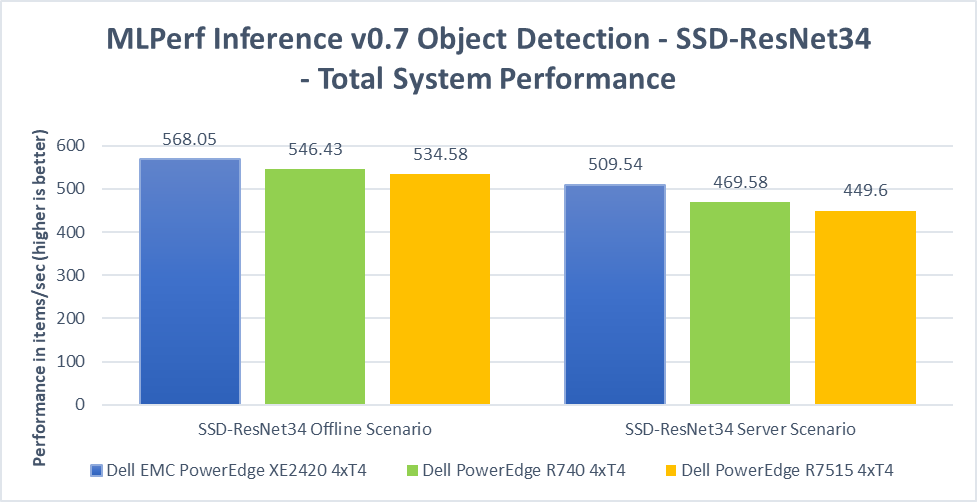

When placed side by side with the Dell EMC PowerEdge R740 (4x T4) and R7515 (4x T4), the XE2420 (4x T4) showed performance on par across all MLPerf submissions. This demonstrates that operating capabilities and performance were not sacrificed to achieve the smaller depth and form-factor.

Conclusion: Better Density and Flexibility at the Edge without sacrificing Performance

MLPerf inference benchmark results clearly demonstrate that the XE2420 is truly a high-performance, half-depth server ideal for edge computing use cases and applications. The capability to pack four NVIDIA T4 GPUs enables it to perform AI inference operations at par with traditional mainstream 2U rack servers that are deployed in core data centers. The compact design provides customers new, powerful capabilities at the edge to do more, faster without extra components. The XE2420 is capable of true versatility at the edge, demonstrating performance not only for common retail workloads but also for the full range of tested workloads. Dell EMC offers a complete portfolio of trusted technology solutions to aggregate, analyze and curate data from the edge to the core to the cloud and XE2420 is a key component of this portfolio to meet your compute needs at the Edge.

XE2420 MLPerf Inference v0.7 Full Results

The raw results from the MLPerf Inference v0.7 published benchmarks are displayed below, where the metric is throughput (items per second).

| Benchmark | Scenario | Result |
|---|---|---|
| ResNet50 | Offline | 25,141 |
| ResNet50 | Server | 21,002 |
| RNNT | Offline | 6,239 |
| RNNT | Server | 4,584 |
| SSD-ResNet-34 | Offline | 568 |
| SSD-ResNet-34 | Server | 509 |

| Benchmark | Scenario | Accuracy % | Result |
|---|---|---|---|
| BERT | Offline | 99 | 1,796 |
| BERT | Offline | 99.9 | 839 |
| BERT | Server | 99 | 1,608 |
| BERT | Server | 99.9 | 759 |
| DLRM | Offline | 99 | 140,217 |
| DLRM | Offline | 99.9 | 140,217 |
| DLRM | Server | 99 | 126,513 |
| DLRM | Server | 99.9 | 126,513 |

| Benchmark | Scenario | Accuracy % | Result |
|---|---|---|---|
| 3D-Unet | Offline | 99 | 30.32 |
| 3D-Unet | Offline | 99.9 | 30.32 |

Related Blog Posts

Supercharge Inference Performance at the Edge using the Dell EMC PowerEdge XE2420 (June 2021 revision)

Mon, 07 Jun 2021 13:42:14 -0000


Deployment of compute at the Edge enables the real-time insights that inform competitive decision making. Application data is increasingly coming from outside the core data center (“the Edge”) and harnessing all that information requires compute capabilities outside the core data center. It is estimated that 75% of enterprise-generated data will be created and processed outside of a traditional data center or cloud by 2025.[1]

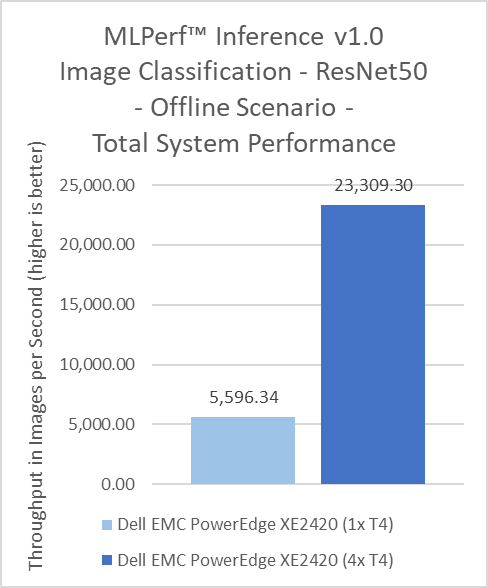

This blog demonstrates the high power-performance potential of the Dell EMC PowerEdge XE2420, an edge-friendly, short-depth server. Using up to four NVIDIA T4 GPUs, the XE2420 can perform AI inference operations faster while efficiently managing power draw. The XE2420 can classify images at 23,309 images/second while drawing an average of 794 watts, and it maintains performance equal to that of conventional rack servers.

XE2420 Features and Capabilities

The Dell EMC PowerEdge XE2420 is a 16” (400mm) deep, high-performance server that is purpose-built for the Edge. The XE2420 has features that provide dense compute, simplified management and robust security for harsh edge environments.

- Built for performance: Powerful 2U, two-socket performance with the flexibility to add up to four accelerators per server and a maximum local storage of 132TB.

- Designed for harsh edge environments: Tested to Network Equipment-Building System (NEBS3) guidelines, with extended operating temperature tolerance of 5˚-45˚C, and an optional filtered bezel to guard against dust. Short depth for edge convenience and lower latency.

- Integrated security and consistent management: Robust, integrated security with cyber-resilient architecture, and the new iDRAC9 with Datacenter management experience. Front accessible and cold-aisle serviceable for easy maintenance.

- Power efficiency: High-end capacity supporting 2x 2000W AC PSUs or 2x 1100W DC PSUs for demanding configurations, while maintaining efficient operation and minimizing power draw.

The XE2420 allows for flexibility in the type of GPUs you use in order to accelerate a wide variety of workloads including high-performance computing, deep learning training and inference, machine learning, data analytics, and graphics. It can support up to 2x NVIDIA V100/S PCIe, 2x NVIDIA RTX6000, or up to 4x NVIDIA T4.

Edge Inferencing with the T4 GPU

The NVIDIA T4 is optimized for mainstream computing environments and uniquely suited for Edge inferencing. Packaged in an energy-efficient 70-watt, small PCIe form factor, it features multi-precision Turing Tensor Cores and RT Cores to deliver power-efficient inference performance. Together with accelerated containerized software stacks from NGC, the XE2420 with NVIDIA T4s is a powerful solution for deploying AI applications at scale on the edge.

Fig 1: NVIDIA T4 Specifications

Fig 2: Dell EMC PowerEdge XE2420 w/ 4x T4 & 2x 2.5” SSDs

Dell EMC PowerEdge XE2420 MLPerf™ Inference v1.0 Tested Configuration

| Component | Configuration |
|---|---|
| Processors | 2x Intel Xeon Gold 6252 CPU @ 2.10GHz |
| Storage | 1x 2.5" SATA 250GB; 1x 2.5" NVMe 4TB |
| Memory | 12x 32GB 2666MT/s DDR4 DIMM |
| GPUs | 4x NVIDIA T4 |
| OS | Ubuntu 18.04.4 |
| Software | TensorRT 7.2.3; CUDA 11.1; cuDNN 8.1.1; Driver 460.32.03; DALI 0.30.0 |
| Hardware Settings | ECC on |

Inference Use Cases at the Edge

As computing further extends to the Edge, higher performance and lower latency become vastly more important in order to increase throughput, while decreasing response time and power draw. One suite of diverse and useful inference workload benchmarks is the MLPerf™ suite from MLCommons™. MLPerf™ Inference demonstrates performance of a system under a variety of deployment scenarios, aiming to provide a test suite to enable balanced comparisons between competing systems along with reliable, reproducible results.

The MLPerf™ Inference v1.0 suite covers a variety of workloads, including image classification, object detection, natural language processing, speech-to-text, recommendation, and medical image segmentation. Specific datacenter scenarios covered include “Offline”, which represents batch processing applications such as mass image classification on existing photos, and “Server”, which represents an application where query arrival is random, and latency is important. An example of Server is any consumer-facing website where a consumer is waiting for an answer to a question. For MLPerf™ Inference v1.0, we also submitted using the edge scenario of “SingleStream”, representing an application that delivers single queries one after another, sending the next only when the previous one is finished; latency is important to this scenario. One example of SingleStream is smartphone voice transcription: each word is rendered as it is spoken, and the next word is not rendered until the previous one is done. Many of these workloads are directly relevant to Telco & Retail customers, as well as other Edge use cases where AI is becoming more prevalent.
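The SingleStream pattern described above can be sketched in a few lines: issue one query, wait for it to complete, record the latency, and only then issue the next. This is not the official MLPerf harness (submitted numbers come from MLPerf LoadGen); `fake_infer` is a hypothetical stand-in for a real model call, and the choice of the 90th percentile as the summary statistic is an assumption made for illustration.

```python
import math
import time


def fake_infer(query):
    """Hypothetical stand-in for a real inference call (about 1 ms of work)."""
    time.sleep(0.001)
    return query


def run_single_stream(infer, queries):
    """Issue queries strictly one at a time and record each latency in ms."""
    latencies_ms = []
    for query in queries:
        start = time.perf_counter()
        infer(query)  # blocks; the next query is not sent until this returns
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    return latencies_ms


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


latencies = run_single_stream(fake_infer, range(20))
print(f"queries: {len(latencies)}")
print(f"p90 latency: {percentile(latencies, 90):.2f} ms")
```

Because each query waits for the previous one, throughput in this pattern is simply the inverse of latency, which is why the scenario is reported in milliseconds rather than items per second.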

MLPerf™ Inference v1.0 now includes power benchmarking. This addition allows power draw to be measured under active test for any of the benchmarks, providing accurate and precise power metrics across a range of scenarios. It is accomplished by using PTDaemon®, the proprietary measurement tool belonging to SPECPower. SPECPower is an industry-standard benchmark built to measure power and performance characteristics of single or multi-node compute servers. Dell EMC regularly submits PowerEdge systems to SPECPower to provide customers the data they need to effectively plan server deployment. The inclusion of comparable power benchmarking in MLPerf™ Inference further emphasizes Dell’s commitment to customer needs.

Measuring Inference Performance using MLPerf™

We demonstrate inference performance for the XE2420 + 4x NVIDIA T4 accelerators across the 6 benchmarks of MLPerf™ Inference v1.0 with Power v1.0 in order to showcase the workload versatility of the system. Dell tuned the XE2420 for best performance and measured power under that scenario to showcase the optimized NVIDIA T4 power cooling algorithms. The inference benchmarking was performed on:

- Offline, Server, and SingleStream scenarios at 99% accuracy for ResNet50 (image classification), RNNT (speech-to-text), and SSD-ResNet34 (object detection), including power

- Offline and Server scenarios at 99% and 99.9% accuracy for DLRM (recommendation), including power

- Offline and SingleStream scenarios at 99% and 99.9% accuracy for 3D-Unet (medical image segmentation)

These results and the corresponding code are available at the MLPerf™ website. We have submitted results to both the Datacenter[2] & the Edge suites[3].

Key Highlights

At Dell, we understand that performance is critical, but customers do not want to compromise quality and reliability to achieve maximum performance. Customers can confidently deploy inference workloads and other software applications with efficient power usage while maintaining high performance, as demonstrated below.

The XE2420 is a compact server that supports 4x 70W NVIDIA T4 GPUs in an efficient manner, reducing overall power consumption without sacrificing performance. This high density and efficient power draw give it increased performance-per-dollar, especially on a per-GPU basis.

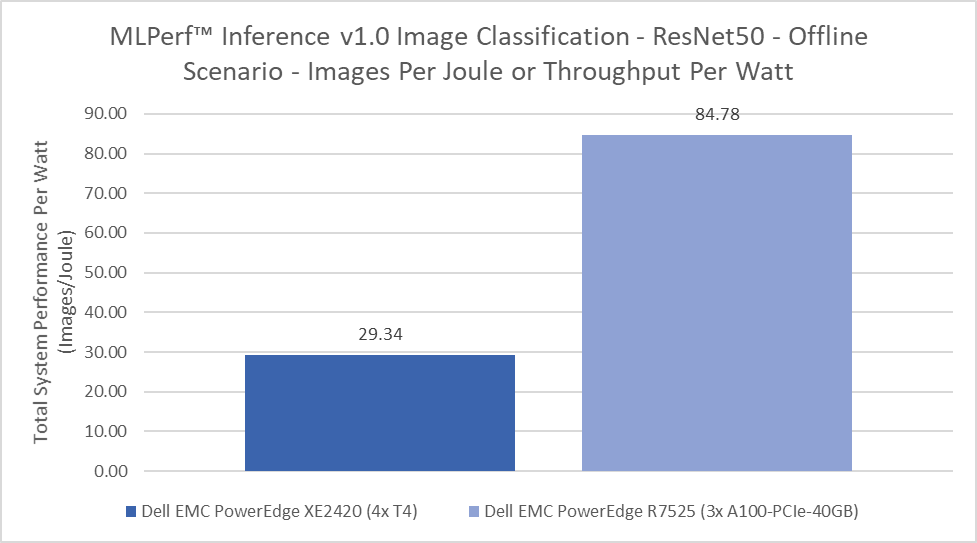

Dell is a leader in the new addition of MLPerf™ Inference v1.0 Power measurements. Due to the leading-edge nature of the measurement, limited datasets are available for comparison. Dell also has power measurements for the core datacenter R7525, configured with 3x NVIDIA A100-PCIe-40GB. On a cost per throughput per watt comparison, XE2420 configured with 4x NVIDIA T4s gets better power performance in a smaller footprint and at a lower price, all factors that are important for an edge deployment.
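To make the throughput-per-watt part of that comparison concrete, here is a minimal sketch that divides measured throughput by measured average power for several XE2420 (4x T4) results from the v1.0 results table in this post. Price is left out because the post gives no cost figures, and the names used are illustrative only.

```python
# (throughput in items per second, average power in watts) for the
# XE2420_T4x4_TRT system, copied from the v1.0 results table in this post.
V10_RESULTS = {
    "resnet50 Offline": (23309.30, 794.46),
    "resnet50 Server": (21691.30, 792.69),
    "ssd-resnet34 Offline": (557.43, 792.85),
    "rnnt Offline": (5704.60, 856.80),
    "dlrm-99 Offline": (135189.00, 830.13),
}


def throughput_per_watt(throughput, watts):
    """Items processed per second for each watt of system power."""
    return throughput / watts


efficiency = {
    name: throughput_per_watt(throughput, watts)
    for name, (throughput, watts) in V10_RESULTS.items()
}

for name, value in efficiency.items():
    print(f"{name:22s} {value:8.2f} items/s per watt")
```

Values are only comparable within the same benchmark and scenario, since an image classification query and a recommendation query represent very different amounts of work.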

Inference benchmarks tend to scale linearly within a server, as this type of workload does not require GPU P2P communication. However, the quality of the system can affect that scaling. The XE2420 showcases above-average scaling; 4 GPUs provide more than 4x performance increase! This demonstrates that operating capabilities and performance were not sacrificed to support 4 GPUs in a smaller depth and form-factor.
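The scaling claim can be checked against the two benchmarks for which the v1.0 results table in this post reports both a 1x T4 and a 4x T4 Offline result. The sketch below computes the ratio of the two; the variable names are illustrative.

```python
# Offline throughput (imgs/sec) from the v1.0 results table in this post:
# (XE2420_T4x1_TRT, XE2420_T4x4_TRT)
OFFLINE_THROUGHPUT = {
    "resnet50": (5596.34, 23309.30),
    "ssd-resnet34": (129.28, 557.43),
}
GPU_COUNT = 4


def scaling_factor(one_gpu, many_gpu):
    """How many times faster the multi-GPU system is than the 1-GPU system."""
    return many_gpu / one_gpu


scaling = {
    name: scaling_factor(one_gpu, four_gpu)
    for name, (one_gpu, four_gpu) in OFFLINE_THROUGHPUT.items()
}

for name, factor in scaling.items():
    print(f"{name:13s} {factor:.2f}x with {GPU_COUNT} GPUs "
          f"({factor / GPU_COUNT:.0%} of linear)")
```

Both ratios come out above 4, which matches the statement in the text; the other benchmarks have no 1x T4 result in the table, so they cannot be checked this way.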

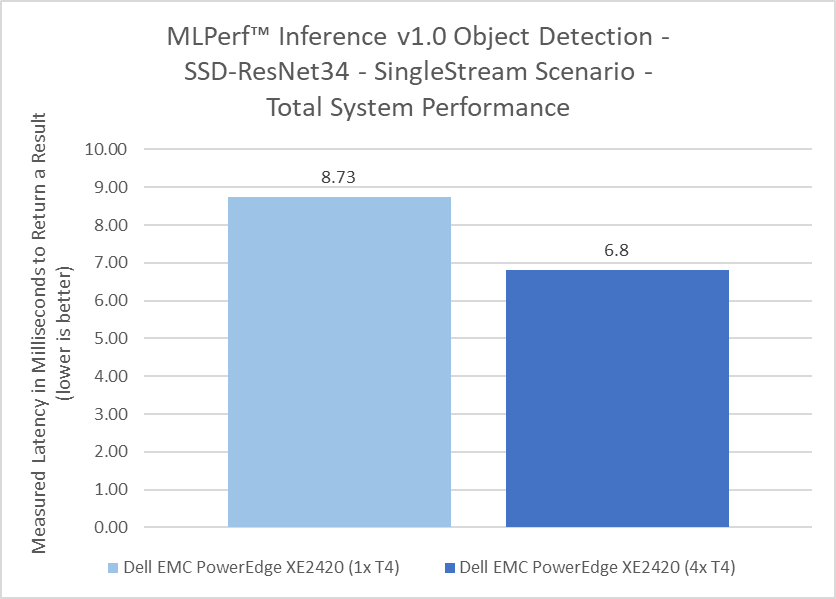

Dell submitted to the Edge benchmark suite of MLPerf™ Inference v1.0 for the third round of MLPerf Inference testing. The unique scenario in this suite is “SingleStream”, discussed above. With SingleStream, system latency is paramount, as the server cannot move on to the second query until the first is finished. The fewer milliseconds, the faster the system, and the better suited it is for the Edge! System architecture affects latency, so depending on where the GPU is located, latency may increase or decrease. This figure can be read as a best- and worst-case scenario; i.e., for the SSD-ResNet34 benchmark the XE2420 returns results in 6.8 to 8.73 milliseconds on average, below the range of human-recognizable delay. Not every server will meet this bar on every benchmark, and the XE2420 scores below this range on many of the submissions.

Comparisons to MLPerf™ Inference v0.7 XE2420 results will show that v1.0 results are slightly different in terms of total system and per-GPU throughput. This is due to a changed requirement between the two test suites. In v0.7, ECC could be turned off, which is common to improve performance of GDDR6 based GPUs. In v1.0, ECC is turned on. This better reflects most customer environments and use cases, since administrators will typically be alerted to any memory errors that could affect accuracy of results.
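For readers who want to quantify the difference, the following sketch computes the relative change between the published v0.7 and v1.0 XE2420 (4x T4) results for the benchmark and scenario pairs that appear in both rounds. It only computes the delta; it does not separate the ECC effect from the software version changes listed in the two tested-configuration tables, and the names are illustrative.

```python
# (v0.7 result, v1.0 result) in items per second for the XE2420 with 4x T4,
# copied from the two full-results sections referenced in this post.
PAIRS = {
    "ResNet50 Offline": (25141, 23309.30),
    "ResNet50 Server": (21002, 21691.30),
    "RNNT Offline": (6239, 5704.60),
    "RNNT Server": (4584, 4202.02),
    "SSD-ResNet34 Offline": (568, 557.43),
    "SSD-ResNet34 Server": (509, 500.96),
}


def percent_change(old, new):
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100.0


changes = {
    name: percent_change(old, new) for name, (old, new) in PAIRS.items()
}

for name, change in changes.items():
    print(f"{name:22s} {change:+6.2f}%")
```

Most entries come out lower in v1.0, but not all of them do, so the ECC setting should be read as one contributing factor rather than the only one.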

Conclusion: Better Performance-per-Dollar and Flexibility at the Edge without sacrificing Performance

MLPerf™ inference benchmark results clearly demonstrate that the XE2420 is truly a high-performance, efficient, half-depth server ideal for edge computing use cases and applications. The capability to support four NVIDIA T4 GPUs in a short-depth, edge-optimized form factor, while keeping them sufficiently cool enables customers to perform AI inference operations at the Edge on par with traditional mainstream 2U rack servers deployed in core data centers. The compact design provides customers new, powerful capabilities at the edge to do more even faster without extra cost or increased power requirements. The XE2420 is capable of true versatility at the edge, demonstrating strong performance not only for mundane workloads but also for a broad range of tested workloads, applicable in a number of Edge industries from Retail to Manufacturing to Autonomous driving. Dell EMC offers a complete portfolio of trusted technology solutions to aggregate, analyze and curate data from the edge to the core to the cloud and XE2420 is a key component of this portfolio to meet your compute needs at the Edge.

XE2420 MLPerf™ Inference v1.0 Full Results

The raw results from the MLPerf™ Inference v1.0 published benchmarks are displayed below, where the performance metric is throughput (items per second) for Offline and Server and latency (length of time to return a result, in milliseconds) for SingleStream. The power metric is Watts for Offline and Server and Energy (Joules) per Stream for SingleStream.

| System | Metric | 3d-unet-99 Offline | 3d-unet-99 SingleStream | 3d-unet-99.9 Offline | 3d-unet-99.9 SingleStream |
|---|---|---|---|---|---|
| XE2420_T4x1_TRT | Performance | - | - | - | - |
| XE2420_T4x1_TRT | Power/Energy | - | - | - | - |
| XE2420_T4x4_TRT | Performance | 31.22 (imgs/sec) | 171.73 (ms) | 31.22 (imgs/sec) | 171.73 (ms) |
| XE2420_T4x4_TRT | Power/Energy | - | - | - | - |

| System | Metric | dlrm-99.9 Offline | dlrm-99.9 Server | dlrm-99 Offline | dlrm-99 Server |
|---|---|---|---|---|---|
| XE2420_T4x1_TRT | Performance | - | - | - | - |
| XE2420_T4x1_TRT | Power/Energy | - | - | - | - |
| XE2420_T4x4_TRT | Performance | 135,149.00 (imgs/sec) | 126,531.00 (imgs/sec) | 135,189.00 (imgs/sec) | 126,531.00 (imgs/sec) |
| XE2420_T4x4_TRT | Power/Energy | 829.09 (W) | 835.52 (W) | 830.13 (W) | 835.91 (W) |

| System | Metric | resnet50 Offline | resnet50 Server | resnet50 SingleStream |
|---|---|---|---|---|
| XE2420_T4x1_TRT | Performance | 5,596.34 (imgs/sec) | - | 0.83 (ms) |
| XE2420_T4x1_TRT | Power/Energy | - | - | - |
| XE2420_T4x4_TRT | Performance | 23,309.30 (imgs/sec) | 21,691.30 (imgs/sec) | 0.91 (ms) |
| XE2420_T4x4_TRT | Power/Energy | 794.46 (W) | 792.69 (W) | 0.59 (Joules/Stream) |

| System | Metric | rnnt Offline | rnnt Server | rnnt SingleStream |
|---|---|---|---|---|
| XE2420_T4x1_TRT | Performance | - | - | - |
| XE2420_T4x1_TRT | Power/Energy | - | - | - |
| XE2420_T4x4_TRT | Performance | 5,704.60 (imgs/sec) | 4,202.02 (imgs/sec) | 71.75 (ms) |
| XE2420_T4x4_TRT | Power/Energy | 856.80 (W) | 862.46 (W) | 31.77 (Joules/Stream) |

| System | Metric | ssd-resnet34 Offline | ssd-resnet34 Server | ssd-resnet34 SingleStream |
|---|---|---|---|---|
| XE2420_T4x1_TRT | Performance | 129.28 (imgs/sec) | - | 8.73 (ms) |
| XE2420_T4x1_TRT | Power/Energy | - | - | - |
| XE2420_T4x4_TRT | Performance | 557.43 (imgs/sec) | 500.96 (imgs/sec) | 6.80 (ms) |
| XE2420_T4x4_TRT | Power/Energy | 792.85 (W) | 790.83 (W) | 4.81 (Joules/Stream) |

Multinode Performance of Dell PowerEdge Servers with MLPerf™ Training v1.1

Mon, 07 Mar 2022 19:51:12 -0000


The Dell MLPerf v1.1 submission included multinode results. This blog showcases performance across multiple nodes on Dell PowerEdge R750xa and XE8545 servers and demonstrates that the multinode scaling performance was excellent.

The compute requirement for deep learning training is growing at a rapid pace. It is imperative to train models across multiple nodes to attain a faster time-to-solution. Therefore, it is critical to showcase the scaling performance across multiple nodes. To demonstrate to customers the performance that they can expect across multiple nodes, our v1.1 submission includes multinode results. The following figures show multinode results for PowerEdge R750xa and XE8545 systems.

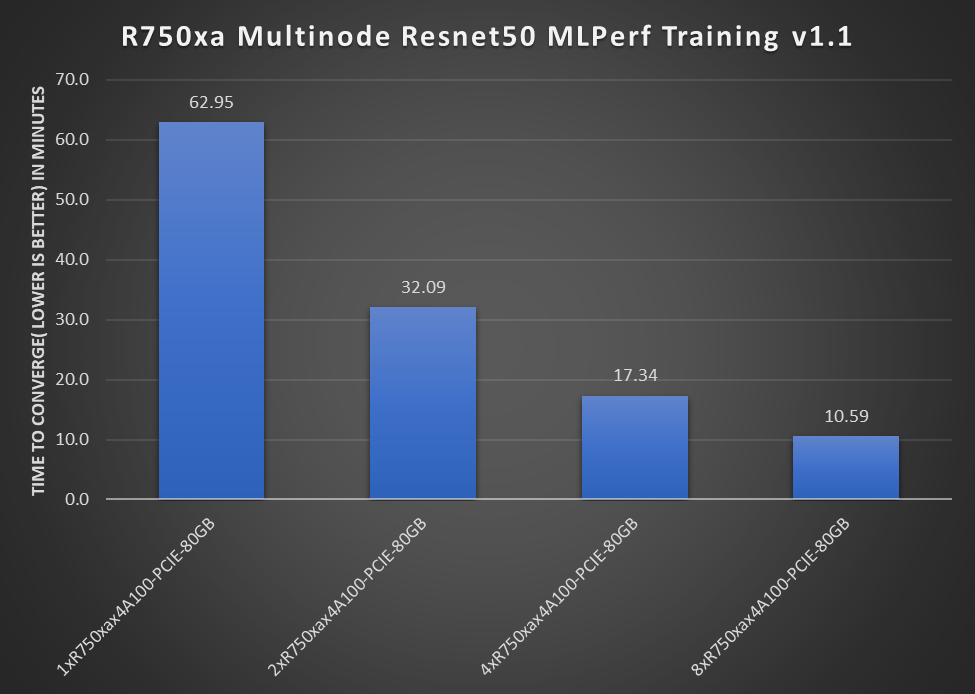

Figure 1: One-, two-, four-, and eight-node results with PowerEdge R750xa Resnet50 MLPerf v1.1 scaling performance

Figure 1 shows the performance of the PowerEdge R750xa server with Resnet50 training. These numbers scale from one node to eight nodes, from four NVIDIA A100-PCIE-80GB GPUs to 32 NVIDIA A100-PCIE-80GB GPUs. We can see that the scaling is almost linear across nodes. MLPerf training requires passing Reference Convergence Points (RCP) for compliance, and meeting these RCPs kept the eight-node case from showing fully linear scaling. The near-linear scaling makes a PowerEdge R750xa node an excellent choice for a multinode training setup.
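Since MLPerf Training reports time to train, "almost linear" scaling can be expressed as a speedup and an efficiency relative to the single-node time. The sketch below shows the arithmetic; the timing values in it are hypothetical placeholders chosen for illustration, not the figures from the submission, which are only shown in the chart.

```python
def scaling_efficiency(single_node_minutes, multi_node_minutes, nodes):
    """Return (speedup, efficiency) for a time-to-train result.

    speedup    = single-node time / multinode time
    efficiency = speedup / number of nodes (1.0 means perfectly linear)
    """
    speedup = single_node_minutes / multi_node_minutes
    return speedup, speedup / nodes


# HYPOTHETICAL time-to-train values in minutes, keyed by node count.
# These are placeholders, not measured results from the submission.
hypothetical_minutes = {1: 80.0, 2: 41.0, 4: 21.5, 8: 12.0}

baseline = hypothetical_minutes[1]
for nodes, minutes in hypothetical_minutes.items():
    speedup, efficiency = scaling_efficiency(baseline, minutes, nodes)
    print(f"{nodes} node(s): speedup {speedup:.2f}x, "
          f"efficiency {efficiency:.0%}")
```

An efficiency that drops as node count grows, as in the placeholder numbers, is the usual shape of a near-linear curve.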

The workload was distributed by using Singularity on PowerEdge R750xa servers. Singularity is a secure containerization solution that is primarily used in traditional HPC GPU clusters. Our submission includes setup scripts with Singularity that help traditional HPC customers run workloads without the need to fully restructure their existing cluster setup. The submission also includes Slurm Docker-based scripts.

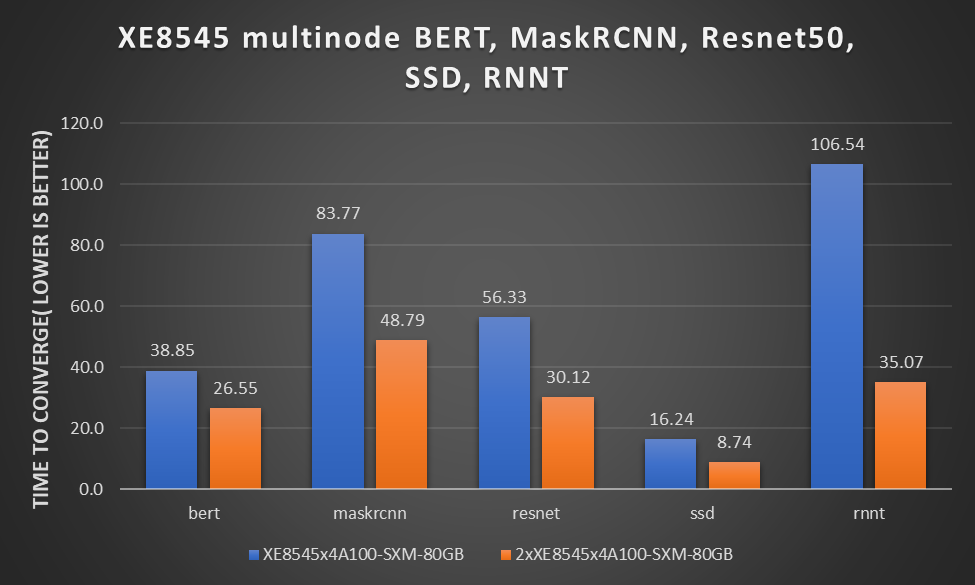

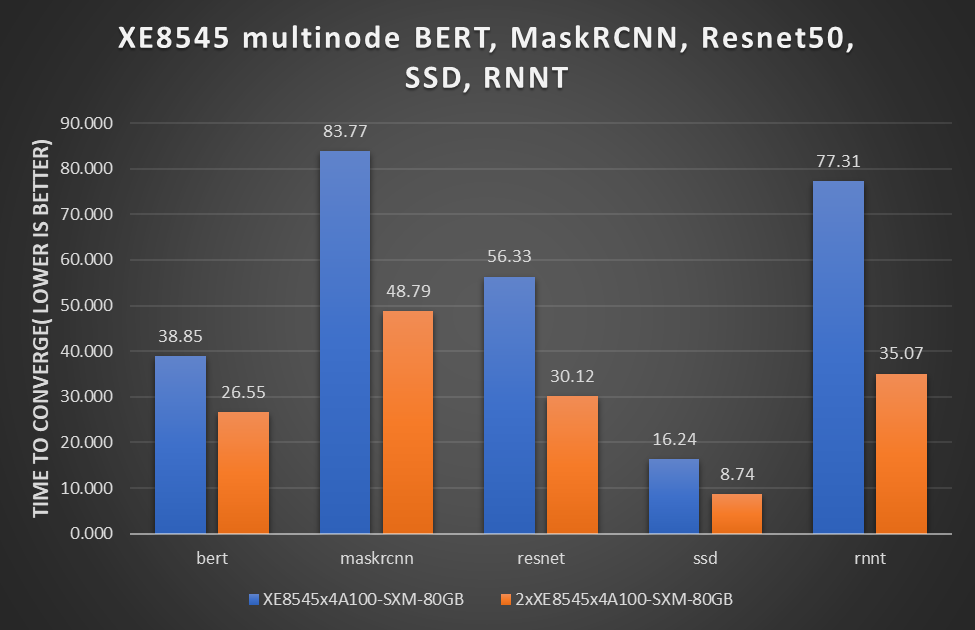

Figure 2: Multinode submission results for PowerEdge XE8545 server with BERT, MaskRCNN, Resnet50, SSD, and RNNT

Figure 2 shows the submitted performance of the PowerEdge XE8545 server with BERT, MaskRCNN, Resnet50, SSD, and RNNT training. These numbers scale from one node to two nodes, from four NVIDIA A100-SXM-80GB GPUs to eight NVIDIA A100-SXM-80GB GPUs. All GPUs operate at 500W TDP for maximum performance. They were distributed using Slurm and Docker on PowerEdge XE8545 servers. The performance is nearly linear.

Note: The RNN-T single-node results submitted for the PowerEdge XE8545x4A100-SXM-80GB system used a different set of hyperparameters than for two nodes. After the submission, we ran the RNN-T benchmark again on the PowerEdge XE8545x4A100-SXM-80GB system with the same hyperparameters and found that the new time to converge is approximately 77.37 minutes. Because we only had the resources to update the results for the 2xXE8545x4A100-SXM-80GB system before the submission deadline, the MLCommons results show 105.6 minutes for a single-node XE8545x4A100-SXM-80GB system.

The following figure shows the adjusted representation of performance for the PowerEdge XE8545x4A100-SXM-80GB system. RNN-T provides an unverified score of 77.31 minutes[1]:

Figure 3: Revised multinode results with PowerEdge XE8545 BERT, MaskRCNN, Resnet50, SSD, and RNNT

Figure 3 shows the linear scaling abilities of the PowerEdge XE8545 server across different workloads such as BERT, MaskRCNN, ResNet, SSD, and RNNT. This linear scaling ability makes the PowerEdge XE8545 server an excellent choice to run large-scale multinode workloads.

Note: This rnnt.zip file includes log files for 10 runs that show that the averaged performance is 77.31 minutes.

Conclusion

- It is critical to measure deep learning performance across multiple nodes to assess the scalability component of training as deep learning workloads are growing rapidly.

- Our MLPerf training v1.1 submission includes multinode results that are linear and perform extremely well.

- Scaling numbers for the PowerEdge XE8545 and PowerEdge R750xa server make them excellent platform choices for enabling large scale deep learning training workloads across different areas and tasks.

[1] MLPerf v1.1 Training RNN-T; Result not verified by the MLCommons™ Association. The MLPerf name and logo are trademarks of MLCommons Association in the United States and other countries. All rights reserved. Unauthorized use strictly prohibited. See http://www.mlcommons.org for more information.