Running the MLPerf™ Inference v1.1 Benchmark on Dell EMC Systems

Fri, 24 Sep 2021 16:51:50 -0000


This blog is a guide for running the MLPerf inference v1.1 benchmark. Information about how to run the benchmark is spread across several online sources; this blog provides all the steps in one place.

MLPerf is a benchmarking suite that measures the performance of Machine Learning (ML) workloads. It focuses on the most important aspects of the ML life cycle: training and inference. For more information, see Introduction to MLPerf™ Inference v1.1 Performance with Dell EMC Servers.

This blog focuses on inference setup and describes the steps to run closed data center MLPerf inference v1.1 tests on Dell Technologies servers with NVIDIA GPUs. It enables you to run the tests and reproduce the results that we observed in our HPC & AI Innovation Lab. For details about the hardware and the software stack for different systems in the benchmark, see this list of systems.

The MLPerf inference v1.1 suite contains the following benchmarks:

- Resnet50

- SSD-Resnet34

- BERT

- DLRM

- RNN-T

- 3D U-Net

Note: The BERT, DLRM, and 3D U-Net models have 99 percent (default accuracy) and 99.9 percent (high accuracy) targets.

This blog describes the steps to run all these benchmarks.

1 Getting started

A system under test consists of a defined set of hardware and software resources that will be measured for performance. The hardware resources may include processors, accelerators, memories, disks, and interconnect. The software resources may include an operating system, compilers, libraries, and drivers that significantly influence the running time of a benchmark. In this case, the system on which you clone the MLPerf repository and run the benchmark is known as the system under test (SUT).

For storage, SSD RAID or local NVMe drives are sufficient to run all the subtests without any performance penalty, because inference does not require fast parallel storage. However, parallel file systems such as BeeGFS or Lustre, or a storage solution such as PixStor, are helpful when you need to make multiple copies of large datasets.

2 Prerequisites

Prerequisites for running the MLPerf inference v1.1 tests include:

- An x86_64 Dell EMC system

- Docker installed with the NVIDIA runtime hook

- Ampere-based NVIDIA GPUs (Turing GPUs have legacy support but are no longer maintained for optimizations.)

- NVIDIA driver version 470.xx or later

- ECC turned on (required as of inference v1.0)

To turn on ECC, run the following command. The new ECC setting takes effect after the system is rebooted.

sudo nvidia-smi --ecc-config=1
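Before you continue, it can help to confirm that every GPU actually reports ECC as enabled. The following Python sketch parses the CSV that we assume `nvidia-smi --query-gpu=index,ecc.mode.current --format=csv,noheader` prints (one `index, mode` pair per line, such as `0, Enabled`). The function names are hypothetical helpers, not part of the MLPerf repository.

```python
from __future__ import annotations


def parse_ecc_modes(csv_text: str) -> dict[int, bool]:
    """Map each GPU index to True when its current ECC mode is "Enabled".

    Assumes lines like "0, Enabled" from:
    nvidia-smi --query-gpu=index,ecc.mode.current --format=csv,noheader
    Any other value (for example "Disabled" or "[N/A]") counts as not enabled.
    """
    modes: dict[int, bool] = {}
    for line in csv_text.splitlines():
        if not line.strip():
            continue  # ignore blank lines
        index, mode = (field.strip() for field in line.split(",", 1))
        modes[int(index)] = mode.lower() == "enabled"
    return modes


def gpus_without_ecc(csv_text: str) -> list[int]:
    """Return the sorted indices of GPUs that still need --ecc-config=1 (and a reboot)."""
    return sorted(i for i, enabled in parse_ecc_modes(csv_text).items() if not enabled)
```

On the system under test, you could pass in the output captured with `subprocess.run([...], capture_output=True, text=True, check=True).stdout`; an empty list means that all GPUs already report ECC as enabled.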

3 Preparing to run the MLPerf inference v1.1 benchmark

Before you can run the MLPerf inference v1.1 tests, perform the following tasks to prepare your environment.

3.1 Clone the MLPerf repository

- Clone the repository to your home directory or another acceptable path:

cd
git clone https://github.com/mlperf/inference_results_v1.1

- Go to the closed/DellEMC directory:

cd inference_results_v1.1/closed/DellEMC

- Create a “scratch” directory with at least 3 TB of space in which to store the models, datasets, preprocessed data, and so on:

mkdir scratch

- Set $MLPERF_SCRATCH_PATH to the absolute path of the scratch directory and export it (adjust the path to match your own home directory):

export MLPERF_SCRATCH_PATH=/home/user/inference_results_v1.1/closed/DellEMC/scratch
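A quick sanity check of the scratch directory can save a failed download later. This Python sketch reads MLPERF_SCRATCH_PATH and reports problems; `check_scratch` is a hypothetical helper, and it assumes that "at least 3 TB" means 3 decimal terabytes of free space.

```python
import os
import shutil

# "At least 3 TB" from the guide, interpreted here as 3 decimal terabytes of free space.
MIN_SCRATCH_BYTES = 3 * 10**12


def check_scratch(path=None, min_bytes=MIN_SCRATCH_BYTES):
    """Return a list of problems with the scratch directory; an empty list means it looks usable."""
    path = path or os.environ.get("MLPERF_SCRATCH_PATH")
    if not path:
        return ["MLPERF_SCRATCH_PATH is not set"]
    problems = []
    if not os.path.isabs(path):
        problems.append(f"{path} is not an absolute path")
    if not os.path.isdir(path):
        problems.append(f"{path} does not exist")
        return problems  # nothing more to check
    free = shutil.disk_usage(path).free
    if free < min_bytes:
        problems.append(f"only {free / 1e12:.2f} TB free, at least {min_bytes / 1e12:.2f} TB required")
    return problems
```

Running `print(check_scratch())` after the export prints an empty list when the directory exists, is absolute, and has enough free space.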

3.2 Set up the configuration file

Each benchmark and scenario directory under closed/DellEMC/configs includes an __init__.py file that lists configurations for the Dell EMC servers that were submitted to the MLPerf Inference v1.1 benchmark. If necessary, modify the configs/<benchmark>/<Scenario>/__init__.py file to include the system that will run the benchmark.

Note: If your system is already present in the configuration file, there is no need to add another configuration.

In the configs/<benchmark>/<Scenario>/__init__.py file, select a similar configuration and modify it based on the current system, matching the number and type of GPUs in your system.

For this blog, we used a Dell EMC PowerEdge R7525 server with one A100 GPU as the example and chose R7525_A100_PCIE_40GBx1 as the name for this new system. Because the R7525_A100_PCIE_40GBx1 system is not already in the list of systems, we added the R7525_A100_PCIE_40GBx1 configuration.

Because the R7525_A100_PCIE_40GBx3 reference system is the most similar, we modified that configuration and picked Resnet50 Server as the example benchmark.

The following example shows the reference configuration for three GPUs for the Resnet50 Server benchmark in the configs/resnet50/Server/__init__.py file:

@ConfigRegistry.register(HarnessType.LWIS, AccuracyTarget.k_99, PowerSetting.MaxP)
class R7525_A100_PCIE_40GBx3(BenchmarkConfiguration):
    system = System("R7525_A100-PCIE-40GB", Architecture.Ampere, 3)
    active_sms = 100
    input_dtype = "int8"
    input_format = "linear"
    map_path = "data_maps/<dataset_name>/val_map.txt"
    precision = "int8"
    tensor_path = "${PREPROCESSED_DATA_DIR}/<dataset_name>/ResNet50/int8_linear"
    use_deque_limit = True
    deque_timeout_usec = 5742
    gpu_batch_size = 205
    gpu_copy_streams = 11
    gpu_inference_streams = 9
    server_target_qps = 91250
    use_cuda_thread_per_device = True
    use_graphs = True
    scenario = Scenario.Server
    benchmark = Benchmark.ResNet50
    start_from_device = True

This example shows the modified configuration for one GPU:

@ConfigRegistry.register(HarnessType.LWIS, AccuracyTarget.k_99, PowerSetting.MaxP)
class R7525_A100_PCIE_40GBx1(BenchmarkConfiguration):
    system = System("R7525_A100-PCIE-40GB", Architecture.Ampere, 1)
    active_sms = 100
    input_dtype = "int8"
    input_format = "linear"
    map_path = "data_maps/<dataset_name>/val_map.txt"
    precision = "int8"
    tensor_path = "${PREPROCESSED_DATA_DIR}/<dataset_name>/ResNet50/int8_linear"
    use_deque_limit = True
    deque_timeout_usec = 5742
    gpu_batch_size = 205
    gpu_copy_streams = 11
    gpu_inference_streams = 9
    server_target_qps = 30400
    use_cuda_thread_per_device = True
    use_graphs = True
    scenario = Scenario.Server
    benchmark = Benchmark.ResNet50
    start_from_device = True

We modified the queries per second (QPS) parameter (server_target_qps) to match the number of GPUs. Because server_target_qps scales approximately linearly with the number of GPUs, QPS = number of GPUs × QPS per GPU.

Apart from the class name and the GPU count in the System definition, the only modified parameter is server_target_qps. We set it to 30400, approximately one third of the three-GPU value of 91250, in accordance with the expected performance of one GPU.
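The linear scaling rule can be written as a small Python helper. This is a sketch under one assumption of ours: the result is rounded down to a multiple of 100 so that the target stays slightly conservative. With that assumption it reproduces 30400 from the three-GPU value of 91250, but we do not claim this is exactly how the authors chose the number. `scale_server_qps` is a hypothetical name.

```python
import math


def scale_server_qps(reference_qps, reference_gpus, target_gpus, round_to=100):
    """Scale server_target_qps linearly with the GPU count.

    QPS = number of GPUs x QPS per GPU, rounded down to a multiple of round_to
    (the rounding is an assumption: a slightly lower target is less likely to
    produce an INVALID Server run).
    """
    per_gpu = reference_qps / reference_gpus
    return math.floor(per_gpu * target_gpus / round_to) * round_to
```

For example, `scale_server_qps(91250, 3, 1)` gives the 30400 used in the one-GPU configuration, and `scale_server_qps(91250, 3, 2)` would be a starting point for a two-GPU variant.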

3.3 Add the new system

After you add the new system to the __init__.py file as shown in the preceding example, add the new system to the list of available systems. The list of available systems is in the code/common/system_list.py file. This entry informs the benchmark that a new system exists and ensures that the benchmark selects the correct configuration.

Note: If your system is already added, there is no need to add it to the code/common/system_list.py file.

Add the new system to the list of available systems in the code/common/system_list.py file.

At the end of the file, there is a class called KnownSystems. This class defines a list of SystemClass objects that describe supported systems as shown in the following example:

SystemClass(<system ID>, [<list of names reported by nvidia-smi>], [<known PCI IDs of this system>], <architecture>, [<list of known GPU counts>])

Where:

- For <system ID>, enter the system ID with which you want to identify this system.

- For <list of names reported by nvidia-smi>, run the nvidia-smi -L command and use the name that is returned.

- For <known PCI IDs of this system>, run the following command:

$ CUDA_DEVICE_ORDER=PCI_BUS_ID nvidia-smi --query-gpu=gpu_name,pci.device_id --format=csv

name, pci.device_id

A100-PCIE-40GB, 0x20F110DE

This pci.device_id field is in the 0x<PCI ID>10DE format, where 10DE is the NVIDIA PCI vendor ID. Use the four hexadecimal digits between 0x and 10DE as the PCI ID for the system. In this case, it is 20F1.

- For <architecture>, use the architecture Enum, which is at the top of the file. In this case, A100 is Ampere architecture.

- For <list of known GPU counts>, enter the GPU counts of the system variants you want to support (for example, [1, 2, 4] to support the 1x, 2x, and 4x GPU variants of this system). Because the system_list.py file already has a 3x variant, we only need to add 1 as an additional entry.
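Extracting the PCI ID by hand is easy to get wrong when a system has several GPU models. The following Python sketch applies the 0x<PCI ID>10DE rule described above to the CSV printed by the nvidia-smi query; the function names are hypothetical helpers, not part of the repository.

```python
NVIDIA_VENDOR_ID = "10DE"


def pci_id_from_device_id(device_id: str) -> str:
    """Extract the four-hex-digit PCI ID from a pci.device_id value such as 0x20F110DE."""
    value = device_id.strip().upper()
    if not value.startswith("0X") or not value.endswith(NVIDIA_VENDOR_ID):
        raise ValueError(f"unexpected pci.device_id format: {device_id!r}")
    pci_id = value[2:-len(NVIDIA_VENDOR_ID)]  # digits between 0x and 10DE
    if len(pci_id) != 4:
        raise ValueError(f"expected four hex digits, got {pci_id!r}")
    return pci_id


def parse_query_output(csv_text: str) -> dict:
    """Map each GPU name to its PCI ID, skipping the "name, pci.device_id" header line."""
    lines = [line for line in csv_text.strip().splitlines() if line.strip()]
    result = {}
    for line in lines[1:]:
        name, device_id = (field.strip() for field in line.rsplit(",", 1))
        result[name] = pci_id_from_device_id(device_id)
    return result
```

Given the sample output shown above, `parse_query_output` returns `{"A100-PCIE-40GB": "20F1"}`, which supplies both the nvidia-smi name and the PCI ID for the SystemClass entry.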

Note: Because a configuration is already present for the PowerEdge R7525 server, we added the number 1 for our configuration, as shown in the following example. If your system does not exist in the system_list.py file, add the entire configuration and not just the number.

class KnownSystems:
    """ Global List of supported systems """
    # before the addition of 1 - this config only supports R7525_A100-PCIE-40GB x3
    # R7525_A100_PCIE_40GB = SystemClass("R7525_A100-PCIE-40GB", ["A100-PCIE-40GB"], ["20F1"], Architecture.Ampere, [3])
    # after the addition - this config now supports R7525_A100-PCIE-40GB x1 and R7525_A100-PCIE-40GB x3 versions.
    R7525_A100_PCIE_40GB = SystemClass("R7525_A100-PCIE-40GB", ["A100-PCIE-40GB"], ["20F1"], Architecture.Ampere, [1, 3])
    DSS8440_A100_PCIE_80GB = SystemClass("DSS8440_A100-PCIE-80GB", ["A100-PCIE-80GB"], ["20B5"], Architecture.Ampere, [10])
    DSS8440_A30 = SystemClass("DSS8440_A30", ["A30"], ["20B7"], Architecture.Ampere, [8], valid_mig_slices=[MIGSlice(1, 6), MIGSlice(2, 12), MIGSlice(4, 24)])
    R750xa_A100_PCIE_40GB = SystemClass("R750xa_A100-PCIE-40GB", ["A100-PCIE-40GB"], ["20F1"], Architecture.Ampere, [4])
    R750xa_A100_PCIE_80GB = SystemClass("R750xa_A100-PCIE-80GB", ["A100-PCIE-80GB"], ["20B5"], Architecture.Ampere, [4], valid_mig_slices=[MIGSlice(1, 10), MIGSlice(2, 20), MIGSlice(3, 40)])

Note: You must provide separate configurations in the configs/resnet50/Server/__init__.py file for the x1 and x3 variants. In the preceding example, the R7525_A100_PCIE_40GBx3 configuration is different from the R7525_A100_PCIE_40GBx1 configuration.
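To illustrate why the GPU-count list matters, the following Python sketch models a KnownSystems entry as a small dataclass and looks up a configuration name from the GPU name, PCI ID, and GPU count. `SystemEntry` and `config_name` are hypothetical; the real SystemClass in code/common/system_list.py has its own fields and detection logic, which this sketch does not reproduce.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SystemEntry:
    """Hypothetical, simplified stand-in for a SystemClass entry in KnownSystems."""

    system_id: str
    gpu_names: tuple  # names reported by nvidia-smi -L
    pci_ids: tuple  # four-hex-digit PCI IDs, upper case
    architecture: str
    gpu_counts: tuple  # supported GPU counts, for example (1, 3)

    def matches(self, gpu_name, pci_id, gpu_count):
        """True when the detected GPUs fit this entry, including the GPU count."""
        return (
            gpu_name in self.gpu_names
            and pci_id.upper() in self.pci_ids
            and gpu_count in self.gpu_counts
        )


def config_name(entries, gpu_name, pci_id, gpu_count):
    """Return "<system_id>x<count>" for the first matching entry, or None if none match."""
    for entry in entries:
        if entry.matches(gpu_name, pci_id, gpu_count):
            return f"{entry.system_id}x{gpu_count}"
    return None
```

In this simplified model, an R7525 entry with counts (1, 3) resolves a one-GPU system to `R7525_A100-PCIE-40GBx1`, while the same entry with only (3,) returns None, which mirrors why we added 1 to the R7525 entry.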

3.4 Build the Docker image and required libraries

Build the Docker image and then launch an interactive container. Then, in the interactive container, build the required libraries for inferencing.

- To build the Docker image, run the make prebuild command inside the closed/DellEMC folder:

Command:

make prebuild

The following example shows sample output:

Launching Docker session
nvidia-docker run --rm -it -w /work \
  -v /home/user/article_inference_v1.1/closed/DellEMC:/work -v /home/user:/mnt//home/user \
  --cap-add SYS_ADMIN \
  -e NVIDIA_VISIBLE_DEVICES=0 \
  --shm-size=32gb \
  -v /etc/timezone:/etc/timezone:ro -v /etc/localtime:/etc/localtime:ro \
  --security-opt apparmor=unconfined --security-opt seccomp=unconfined \
  --name mlperf-inference-user -h mlperf-inference-user --add-host mlperf-inference-user:127.0.0.1 \
  --user 1002:1002 --net host --device /dev/fuse \
  -v =/home/user/inference_results_v1.0/closed/DellEMC/scratch:/home/user/inference_results_v1.1/closed/DellEMC/scratch \
  -e MLPERF_SCRATCH_PATH=/home/user/inference_results_v1.0/closed/DellEMC/scratch \
  -e HOST_HOSTNAME=node009 \
  mlperf-inference:user

The Docker container is launched with all the necessary packages installed.

- Access the interactive terminal on the container.

- To build the required libraries for inferencing, run the make build command inside the interactive container:

Command:

make build

The following example shows sample output:

(mlperf) user@mlperf-inference-user:/work$ make build
…….
[ 26%] Linking CXX executable /work/build/bin/harness_default
make[4]: Leaving directory '/work/build/harness'
make[4]: Leaving directory '/work/build/harness'
make[4]: Leaving directory '/work/build/harness'
[ 36%] Built target harness_bert
[ 50%] Built target harness_default
[ 55%] Built target harness_dlrm
make[4]: Leaving directory '/work/build/harness'
[ 63%] Built target harness_rnnt
make[4]: Leaving directory '/work/build/harness'
[ 81%] Built target harness_triton
make[4]: Leaving directory '/work/build/harness'
[100%] Built target harness_triton_mig
make[3]: Leaving directory '/work/build/harness'
make[2]: Leaving directory '/work/build/harness'
Finished building harness.
make[1]: Leaving directory '/work'
(mlperf) user@mlperf-inference-user:/work

The required libraries are now built inside the container, and you can run the benchmarks from it.

3.5 Download and preprocess validation data and models

To run the MLPerf inference v1.1 benchmarks, download the datasets and models, and then preprocess them. MLPerf provides scripts that download the trained models. The scripts also download the datasets for all benchmarks except Resnet50, DLRM, and 3D U-Net.

For Resnet50, DLRM, and 3D U-Net, register for an account and then download the datasets manually:

- Resnet50: Download the widely used 2012 image classification validation set (https://github.com/mlcommons/training/tree/master/image_classification) and extract the downloaded file to $MLPERF_SCRATCH_PATH/data/<dataset_name>/

- DLRM: Download the Criteo Terabyte dataset and extract the downloaded file to $MLPERF_SCRATCH_PATH/data/criteo/

- 3D U-Net: Download the BraTS challenge data and extract the downloaded file to $MLPERF_SCRATCH_PATH/data/BraTS/MICCAI_BraTS_2019_Data_Training
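Because preprocessing fails if a manually downloaded dataset is missing, a quick check of the three target directories can help. In this Python sketch, `missing_manual_datasets` is a hypothetical helper, and the `resnet_dataset_name` parameter stands in for the `<dataset_name>` placeholder that this guide leaves unspecified.

```python
import os


def missing_manual_datasets(scratch_path, resnet_dataset_name):
    """Return {benchmark: directory} for manually downloaded datasets that are missing or empty.

    resnet_dataset_name fills the <dataset_name> placeholder used in this guide.
    """
    expected = {
        "resnet50": os.path.join(scratch_path, "data", resnet_dataset_name),
        "dlrm": os.path.join(scratch_path, "data", "criteo"),
        "3d-unet": os.path.join(scratch_path, "data", "BraTS", "MICCAI_BraTS_2019_Data_Training"),
    }
    missing = {}
    for benchmark, path in expected.items():
        # A directory that exists but is still empty also counts as missing.
        if not os.path.isdir(path) or not os.listdir(path):
            missing[benchmark] = path
    return missing
```

Calling it with `os.environ["MLPERF_SCRATCH_PATH"]` before running `make preprocess_data` lists any dataset that still needs to be downloaded and extracted.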

After you manually download the Resnet50, DLRM, and 3D U-Net datasets, run the following commands to download all the models and the remaining datasets, and then preprocess the data:

$ make download_model # Downloads models and saves them to $MLPERF_SCRATCH_PATH/models
$ make download_data # Downloads datasets and saves them to $MLPERF_SCRATCH_PATH/data
$ make preprocess_data # Preprocesses data and saves it to $MLPERF_SCRATCH_PATH/preprocessed_data

Note: These commands download all the datasets, which might not be required if the objective is to run one specific benchmark. To run a specific benchmark rather than all the benchmarks, see the following sections for information about the specific benchmark.

The following listing shows the top-level directories inside the container:

(mlperf) user@mlperf-inference-user:/work$ tree -d -L 1
.
├── build
├── code
├── compliance
├── configs
├── data_maps
├── docker
├── measurements
├── power
├── results
├── scripts
└── systems

The directories contain the following:

- build: Logs, preprocessed data, engines, models, plugins, and so on

- code: Source code for all the benchmarks

- compliance: Passed compliance checks

- configs: Configurations that run different benchmarks for different system setups

- data_maps: Data maps for different benchmarks

- docker: Docker files to support building the container

- measurements: Measurement values for different benchmarks

- power: Files specific to power submissions (needed only for power submissions)

- results: Final result logs

- scratch: Storage for models, preprocessed data, and the dataset that is symlinked to the preceding build directory

- scripts: Support scripts

- systems: Hardware and software details of systems in the benchmark

4 Running the benchmarks

After you have performed the preceding tasks to prepare your environment, run any of the benchmarks that are required for your tests.

The Resnet50, SSD-Resnet34, and RNN-T benchmarks have 99 percent (default accuracy) targets.

The BERT, DLRM, and 3D U-Net benchmarks have 99 percent (default accuracy) and 99.9 percent (high accuracy) targets. For information about running these benchmarks, see the Running high accuracy target benchmarks section below.

If you downloaded and preprocessed all the datasets (as shown in the previous section), there is no need to do so again. Skip the download and preprocessing steps in the procedures for the following benchmarks.

NVIDIA TensorRT is the inference engine for the backend. It includes a deep learning inference optimizer and runtime that delivers low latency and high throughput for deep learning applications.

4.1 Run the Resnet50 benchmark

To set up the Resnet50 dataset and model to run the inference:

- If you already downloaded and preprocessed the datasets, skip to the step that generates the TensorRT engines.

- Download the required validation dataset (https://github.com/mlcommons/training/tree/master/image_classification).

- Extract the images to $MLPERF_SCRATCH_PATH/data/<dataset_name>/

- Run the following commands:

make download_model BENCHMARKS=resnet50
make preprocess_data BENCHMARKS=resnet50

- Generate the TensorRT engines:

# generates the TRT engines with the specified config. In this case it generates engines for both the Offline and Server scenarios
make generate_engines RUN_ARGS="--benchmarks=resnet50 --scenarios=Offline,Server --config_ver=default"

- Run the benchmark:

# run the performance benchmark
make run_harness RUN_ARGS="--benchmarks=resnet50 --scenarios=Offline --config_ver=default --test_mode=PerformanceOnly"
make run_harness RUN_ARGS="--benchmarks=resnet50 --scenarios=Server --config_ver=default --test_mode=PerformanceOnly"
# run the accuracy benchmark
make run_harness RUN_ARGS="--benchmarks=resnet50 --scenarios=Offline --config_ver=default --test_mode=AccuracyOnly"
make run_harness RUN_ARGS="--benchmarks=resnet50 --scenarios=Server --config_ver=default --test_mode=AccuracyOnly"

The following example shows the output for a PerformanceOnly mode and displays a “VALID” result:

======================= Perf harness results: =======================
R7525_A100-PCIe-40GBx1_TRT-default-Server: resnet50: Scheduled samples per second : 30400.32 and Result is : VALID
======================= Accuracy results: =======================
R7525_A100-PCIe-40GBx1_TRT-default-Server: resnet50: No accuracy results in PerformanceOnly mode.
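When you run many scenarios, scanning logs for "VALID" by eye gets tedious. The following Python sketch extracts the system, benchmark, metric, value, and status from result lines in the format shown in the preceding example. It assumes that format only; other scenarios may print a different metric name, which the pattern captures generically, and `parse_perf_results` is a hypothetical helper.

```python
import re

# Matches lines such as:
# R7525_A100-PCIe-40GBx1_TRT-default-Server: resnet50: Scheduled samples per second : 30400.32 and Result is : VALID
RESULT_LINE = re.compile(
    r"^(?P<system>[^:\s]+):\s*(?P<benchmark>[^:\s]+):\s*"
    r"(?P<metric>[^:]+?)\s*:\s*(?P<value>\d+(?:\.\d+)?)\s+"
    r"and Result is\s*:\s*(?P<status>VALID|INVALID)\s*$"
)


def parse_perf_results(log_text):
    """Return one dict per performance result line; other lines are ignored."""
    results = []
    for line in log_text.splitlines():
        match = RESULT_LINE.match(line.strip())
        if match:
            row = match.groupdict()
            row["value"] = float(row["value"])
            results.append(row)
    return results
```

Applied to the output above, it returns a single row with status `VALID` and value `30400.32`; the "No accuracy results" line is skipped because it carries no value.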

4.2 Run the SSD-Resnet34 benchmark

To set up the SSD-Resnet34 dataset and model to run the inference:

- If necessary, download and preprocess the dataset:

make download_model BENCHMARKS=ssd-resnet34
make download_data BENCHMARKS=ssd-resnet34
make preprocess_data BENCHMARKS=ssd-resnet34

- Generate the TensorRT engines:

# generates the TRT engines with the specified config. In this case it generates engines for both the Offline and Server scenarios
make generate_engines RUN_ARGS="--benchmarks=ssd-resnet34 --scenarios=Offline,Server --config_ver=default"

- Run the benchmark:

# run the performance benchmark
make run_harness RUN_ARGS="--benchmarks=ssd-resnet34 --scenarios=Offline --config_ver=default --test_mode=PerformanceOnly"
make run_harness RUN_ARGS="--benchmarks=ssd-resnet34 --scenarios=Server --config_ver=default --test_mode=PerformanceOnly"
# run the accuracy benchmark
make run_harness RUN_ARGS="--benchmarks=ssd-resnet34 --scenarios=Offline --config_ver=default --test_mode=AccuracyOnly"
make run_harness RUN_ARGS="--benchmarks=ssd-resnet34 --scenarios=Server --config_ver=default --test_mode=AccuracyOnly"

4.3 Run the RNN-T benchmark

To set up the RNN-T dataset and model to run the inference:

- If necessary, download and preprocess the dataset:

make download_model BENCHMARKS=rnnt
make download_data BENCHMARKS=rnnt
make preprocess_data BENCHMARKS=rnnt

- Generate the TensorRT engines:

# generates the TRT engines with the specified config. In this case it generates engines for both the Offline and Server scenarios
make generate_engines RUN_ARGS="--benchmarks=rnnt --scenarios=Offline,Server --config_ver=default"

- Run the benchmark:

# run the performance benchmark
make run_harness RUN_ARGS="--benchmarks=rnnt --scenarios=Offline --config_ver=default --test_mode=PerformanceOnly"
make run_harness RUN_ARGS="--benchmarks=rnnt --scenarios=Server --config_ver=default --test_mode=PerformanceOnly"
# run the accuracy benchmark
make run_harness RUN_ARGS="--benchmarks=rnnt --scenarios=Offline --config_ver=default --test_mode=AccuracyOnly"
make run_harness RUN_ARGS="--benchmarks=rnnt --scenarios=Server --config_ver=default --test_mode=AccuracyOnly"

5 Running high accuracy target benchmarks

The BERT, DLRM, and 3D U-Net benchmarks have high accuracy targets.

5.1 Run the BERT benchmark

To set up the BERT dataset and model to run the inference:

- If necessary, download and preprocess the dataset:

make download_model BENCHMARKS=bert
make download_data BENCHMARKS=bert
make preprocess_data BENCHMARKS=bert

- Generate the TensorRT engines:

# generates the TRT engines with the specified config. In this case it generates engines for both the Offline and Server scenarios and for both the default and high accuracy targets.
make generate_engines RUN_ARGS="--benchmarks=bert --scenarios=Offline,Server --config_ver=default,high_accuracy"

- Run the benchmark:

# run the performance benchmark
make run_harness RUN_ARGS="--benchmarks=bert --scenarios=Offline --config_ver=default --test_mode=PerformanceOnly"
make run_harness RUN_ARGS="--benchmarks=bert --scenarios=Server --config_ver=default --test_mode=PerformanceOnly"
make run_harness RUN_ARGS="--benchmarks=bert --scenarios=Offline --config_ver=high_accuracy --test_mode=PerformanceOnly"
make run_harness RUN_ARGS="--benchmarks=bert --scenarios=Server --config_ver=high_accuracy --test_mode=PerformanceOnly"
# run the accuracy benchmark
make run_harness RUN_ARGS="--benchmarks=bert --scenarios=Offline --config_ver=default --test_mode=AccuracyOnly"
make run_harness RUN_ARGS="--benchmarks=bert --scenarios=Server --config_ver=default --test_mode=AccuracyOnly"
make run_harness RUN_ARGS="--benchmarks=bert --scenarios=Offline --config_ver=high_accuracy --test_mode=AccuracyOnly"
make run_harness RUN_ARGS="--benchmarks=bert --scenarios=Server --config_ver=high_accuracy --test_mode=AccuracyOnly"
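The run commands follow a regular pattern: one make run_harness call per combination of test mode, accuracy target (config_ver), and scenario. The following Python sketch generates those command lines in the same order as the BERT list above so that no combination is missed. `run_harness_commands` is a hypothetical helper; it only builds strings from the flags used in this blog, including the --fast flag described in the Limitations and Best Practices section.

```python
import itertools


def run_harness_commands(benchmark, scenarios, config_vers,
                         test_modes=("PerformanceOnly", "AccuracyOnly"), fast=False):
    """Build one make run_harness command per (test mode, config_ver, scenario) combination."""
    commands = []
    for mode, config_ver, scenario in itertools.product(test_modes, config_vers, scenarios):
        args = [
            f"--benchmarks={benchmark}",
            f"--scenarios={scenario}",
            f"--config_ver={config_ver}",
            f"--test_mode={mode}",
        ]
        if fast:
            args.insert(0, "--fast")  # shortened run, as in the best practices section
        # Keep RUN_ARGS in double quotes; omitting them causes errors.
        commands.append(f'make run_harness RUN_ARGS="{" ".join(args)}"')
    return commands
```

For BERT, `run_harness_commands("bert", ["Offline", "Server"], ["default", "high_accuracy"])` yields the eight commands listed above. Inside the container, you could print them, or run each one with `subprocess.run(cmd, shell=True, check=True)`.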

5.2 Run the DLRM benchmark

To set up the DLRM dataset and model to run the inference:

- If you already downloaded and preprocessed the datasets, skip to the step that generates the TensorRT engines.

- Download the Criteo Terabyte dataset.

- Extract the downloaded files to the $MLPERF_SCRATCH_PATH/data/criteo/ directory.

- Run the following commands:

make download_model BENCHMARKS=dlrm
make preprocess_data BENCHMARKS=dlrm

- Generate the TensorRT engines:

# generates the TRT engines with the specified config. In this case it generates engines for both the Offline and Server scenarios and for both the default and high accuracy targets.
make generate_engines RUN_ARGS="--benchmarks=dlrm --scenarios=Offline,Server --config_ver=default,high_accuracy"

- Run the benchmark:

# run the performance benchmark
make run_harness RUN_ARGS="--benchmarks=dlrm --scenarios=Offline --config_ver=default --test_mode=PerformanceOnly"
make run_harness RUN_ARGS="--benchmarks=dlrm --scenarios=Server --config_ver=default --test_mode=PerformanceOnly"
make run_harness RUN_ARGS="--benchmarks=dlrm --scenarios=Offline --config_ver=high_accuracy --test_mode=PerformanceOnly"
make run_harness RUN_ARGS="--benchmarks=dlrm --scenarios=Server --config_ver=high_accuracy --test_mode=PerformanceOnly"
# run the accuracy benchmark
make run_harness RUN_ARGS="--benchmarks=dlrm --scenarios=Offline --config_ver=default --test_mode=AccuracyOnly"
make run_harness RUN_ARGS="--benchmarks=dlrm --scenarios=Server --config_ver=default --test_mode=AccuracyOnly"
make run_harness RUN_ARGS="--benchmarks=dlrm --scenarios=Offline --config_ver=high_accuracy --test_mode=AccuracyOnly"
make run_harness RUN_ARGS="--benchmarks=dlrm --scenarios=Server --config_ver=high_accuracy --test_mode=AccuracyOnly"

5.3 Run the 3D U-Net benchmark

Note: This benchmark only has the Offline scenario.

To set up the 3D U-Net dataset and model to run the inference:

- If you already downloaded and preprocessed the datasets, skip to the step that generates the TensorRT engines.

- Download the BraTS challenge data.

- Extract the images to the $MLPERF_SCRATCH_PATH/data/BraTS/MICCAI_BraTS_2019_Data_Training directory.

- Run the following commands:

make download_model BENCHMARKS=3d-unet
make preprocess_data BENCHMARKS=3d-unet

- Generate the TensorRT engines:

# generates the TRT engines with the specified config. In this case it generates engines for the Offline scenario and for both the default and high accuracy targets.
make generate_engines RUN_ARGS="--benchmarks=3d-unet --scenarios=Offline --config_ver=default,high_accuracy"

- Run the benchmark:

# run the performance benchmark
make run_harness RUN_ARGS="--benchmarks=3d-unet --scenarios=Offline --config_ver=default --test_mode=PerformanceOnly"
make run_harness RUN_ARGS="--benchmarks=3d-unet --scenarios=Offline --config_ver=high_accuracy --test_mode=PerformanceOnly"
# run the accuracy benchmark
make run_harness RUN_ARGS="--benchmarks=3d-unet --scenarios=Offline --config_ver=default --test_mode=AccuracyOnly"
make run_harness RUN_ARGS="--benchmarks=3d-unet --scenarios=Offline --config_ver=high_accuracy --test_mode=AccuracyOnly"

6 Limitations and Best Practices for Running MLPerf

Note the following limitations and best practices:

- To build the engine and run the benchmark with a single command, use the make run RUN_ARGS… shortcut. The shortcut is a valid alternative to running the make generate_engines … && make run_harness … commands.

- Include the --fast flag in RUN_ARGS to shorten test runs by setting the run time to one minute. For example:

make run_harness RUN_ARGS="--fast --benchmarks=<bmname> --scenarios=<scenario> --config_ver=<cver> --test_mode=PerformanceOnly"

The benchmark runs for one minute instead of the default 10 minutes.

- A Server scenario run reports “INVALID” results when the latency constraints are not met during the run. In that case, reduce server_target_qps.

- An Offline scenario run reports “INVALID” results when the system can deliver a significantly higher QPS than the value set in offline_expected_qps. In that case, increase offline_expected_qps.

- If the batch size changes, rebuild the engine.

- Only the BERT, DLRM, and 3D U-Net benchmarks support high accuracy targets.

- 3D U-Net only has the Offline scenario.

- To run with Triton Inference Server, pass triton or high_accuracy_triton in the config_ver argument for the default and high accuracy targets, respectively.

- When running a command with RUN_ARGS, enclose the arguments in quotation marks. Omitting the quotation marks can cause errors.
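The two INVALID rules above can be captured in a small helper that suggests the next value to try. This is a hypothetical sketch: the 5 percent step is our own assumption, not a value from the MLPerf rules, and you still need to edit server_target_qps or offline_expected_qps in the configuration file yourself.

```python
def next_target_qps(scenario, current_qps, result, step=0.05):
    """Suggest the next QPS setting after a run, following the INVALID guidance.

    Server INVALID  -> lower server_target_qps (latency constraints were missed).
    Offline INVALID -> raise offline_expected_qps (the system is faster than configured).
    VALID           -> keep the current value.
    step is a hypothetical 5 percent adjustment.
    """
    if result == "VALID":
        return current_qps
    if result != "INVALID":
        raise ValueError(f"unknown result: {result!r}")
    if scenario == "Server":
        return current_qps * (1 - step)
    if scenario == "Offline":
        return current_qps * (1 + step)
    raise ValueError(f"unsupported scenario: {scenario!r}")
```

Combined with a short --fast run, this gives a simple loop for finding a target that produces a VALID result before committing to a full-length run.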

Conclusion

This blog provided the step-by-step procedures to run and reproduce closed data center MLPerf inference v1.1 results on Dell EMC servers with NVIDIA GPUs.

Related Blog Posts

MLPerf™ Inference v4.0 Performance on Dell PowerEdge R760xa and R7615 Servers with NVIDIA L40S GPUs

Fri, 05 Apr 2024 17:41:56 -0000


Abstract

Dell Technologies recently submitted results to the MLPerf™ Inference v4.0 benchmark suite. This blog highlights Dell Technologies’ closed division submission made for the Dell PowerEdge R760xa, Dell PowerEdge R7615, and Dell PowerEdge R750xa servers with NVIDIA L40S and NVIDIA A100 GPUs.

Introduction

This blog presents the performance improvements achieved on the PowerEdge R760xa and R7615 servers with the NVIDIA L40S GPU compared to the PowerEdge R750xa server with the NVIDIA A100 GPU. In the following comparisons, we held the GPU constant across the PowerEdge R760xa and PowerEdge R7615 servers to show the excellent performance of the NVIDIA L40S GPU. We also compared the PowerEdge R750xa server with the NVIDIA A100 GPU to its successor, the PowerEdge R760xa server with the NVIDIA L40S GPU.

System Under Test configuration

The following table shows the System Under Test (SUT) configuration for the PowerEdge servers.

Table 1: SUT configuration of the Dell PowerEdge R750xa, R760xa, and R7615 servers for MLPerf Inference v4.0

| Server | PowerEdge R750xa | PowerEdge R760xa | PowerEdge R7615 |
|---|---|---|---|
| MLPerf version | v4.0 | v4.0 | v4.0 |
| GPU | NVIDIA A100 PCIe 80 GB | NVIDIA L40S | NVIDIA L40S |
| Number of GPUs | 4 | 4 | 2 |
| MLPerf system ID | R750xa_A100_PCIe_80GBx4_TRT | R760xa_L40Sx4_TRT | R7615_L40Sx2_TRT |
| CPU | 2 x Intel Xeon Gold 6338 CPU @ 2.00GHz | 2 x Intel Xeon Platinum 8470Q | 1 x AMD EPYC 9354 32-Core Processor |
| Memory | 512 GB | 512 GB | 512 GB |
| Software stack | TensorRT 9.3.0, CUDA 12.2, cuDNN 8.9.2, Driver 535.54.03 / 535.104.12, DALI 1.28.0 | TensorRT 9.3.0, CUDA 12.2, cuDNN 8.9.2, Driver 535.54.03 / 535.104.12, DALI 1.28.0 | TensorRT 9.3.0, CUDA 12.2, cuDNN 8.9.2, Driver 535.54.03 / 535.104.12, DALI 1.28.0 |

The following table lists the technical specifications of the NVIDIA L40S and NVIDIA A100 GPUs.

Table 2: Technical specifications of the NVIDIA A100 and NVIDIA L40S GPUs

| Model | NVIDIA A100 | NVIDIA A100 | NVIDIA L40S |
|---|---|---|---|
| Form factor | SXM4 | PCIe Gen4 | PCIe Gen4 |
| GPU architecture | Ampere | Ampere | Ada Lovelace |
| CUDA cores | 6912 | 6912 | 18176 |
| Memory size | 80 GB | 80 GB | 48 GB |
| Memory type | HBM2e | HBM2e | GDDR6 |
| Base clock | 1275 MHz | 1065 MHz | 1110 MHz |
| Boost clock | 1410 MHz | 1410 MHz | 2520 MHz |
| Memory clock | 1593 MHz | 1512 MHz | 2250 MHz |
| MIG support | Yes | Yes | No |
| Peak memory bandwidth | 2039 GB/s | 1935 GB/s | 864 GB/s |
| Total board power | 500 W | 300 W | 350 W |

Dell PowerEdge R760xa server

The PowerEdge R760xa server shines as an Artificial Intelligence (AI) workload server with its cutting-edge inferencing capabilities. This server represents the pinnacle of performance in the AI inferencing space with its processing prowess enabled by Intel Xeon Platinum processors and NVIDIA L40S GPUs. Coupled with NVIDIA TensorRT and CUDA 12.2, the PowerEdge R760xa server is positioned perfectly for any AI workload including, but not limited to, Large Language Models, computer vision, Natural Language Processing, robotics, and edge computing. Whether you are processing image recognition tasks, natural language understanding, or deep learning models, the PowerEdge R760xa server provides the computational muscle for reliable, precise, and fast results.

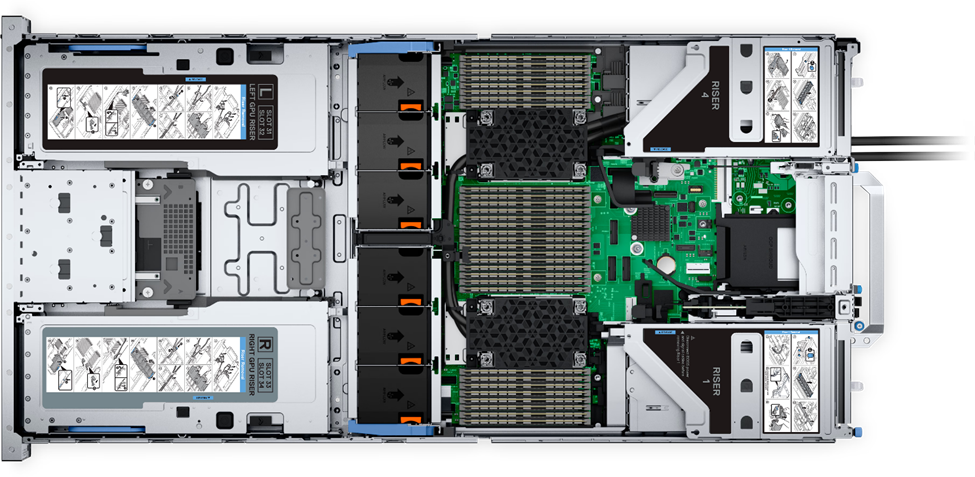

Figure 1: Front view of the Dell PowerEdge R760xa server

Figure 2: Top view of the Dell PowerEdge R760xa server

Dell PowerEdge R7615 server

The PowerEdge R7615 server stands out as an excellent choice for AI, machine learning (ML), and deep learning (DL) workloads due to its robust performance capabilities and optimized architecture. With its powerful processing capabilities including up to three NVIDIA L40S GPUs supported by TensorRT, this server can handle complex neural network inference and training tasks with ease. Powered by a single AMD EPYC processor, this server performs well for any demanding AI workloads.

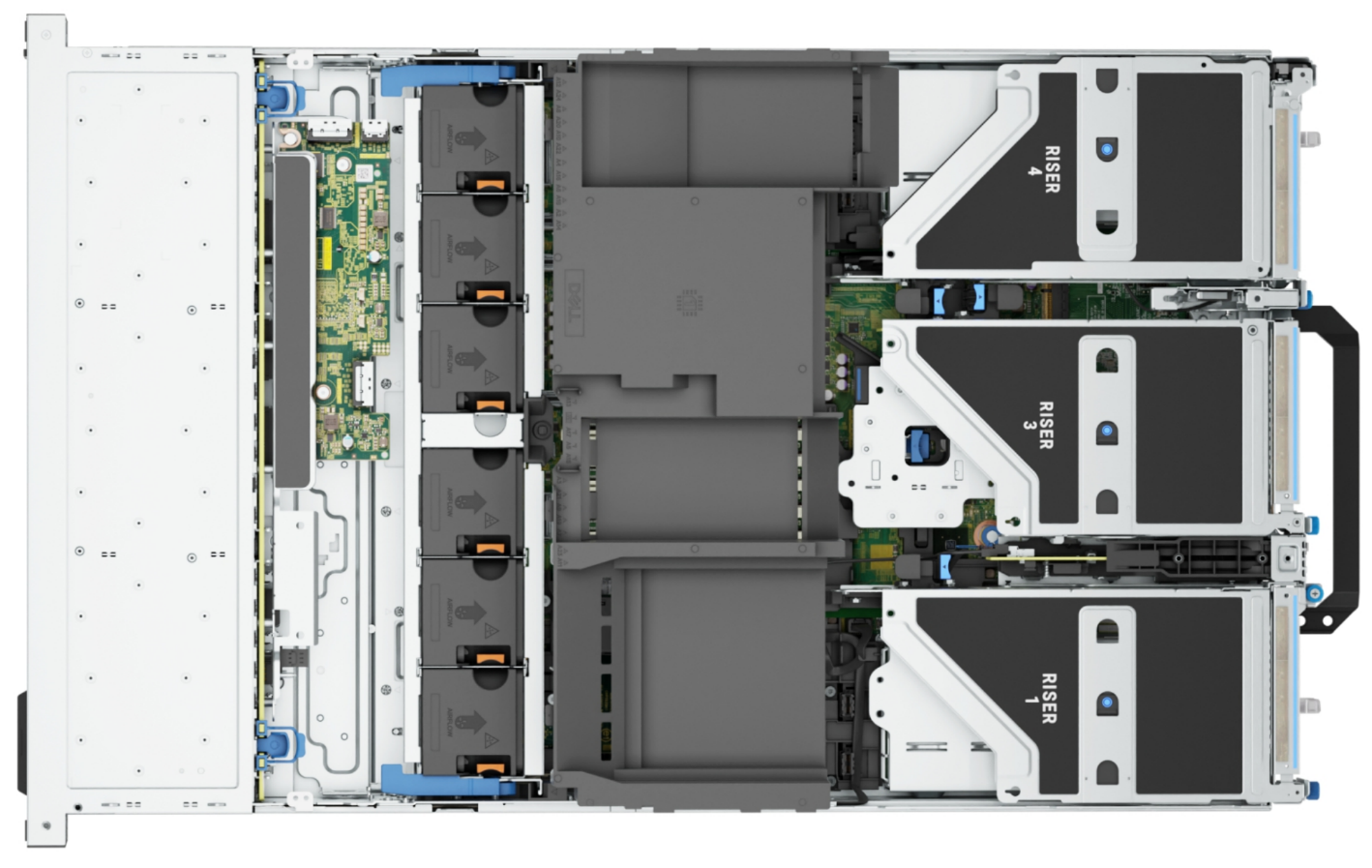

Figure 3: Front view of the Dell PowerEdge R7615 server

Figure 4: Top view of the Dell PowerEdge R7615 server

Dell PowerEdge R750xa server

The PowerEdge R750xa server is a perfect blend of technological prowess and innovation. This server is equipped with Intel Xeon Gold processors and the latest NVIDIA GPUs. The PowerEdge R750xa server is designed for the most demanding AI, ML, and DL workloads because it is compatible with the latest NVIDIA TensorRT engine and CUDA version. With up to nine PCIe Gen4 slots and availability in a 1U or 2U configuration, the PowerEdge R750xa server is an excellent option for any demanding workload.

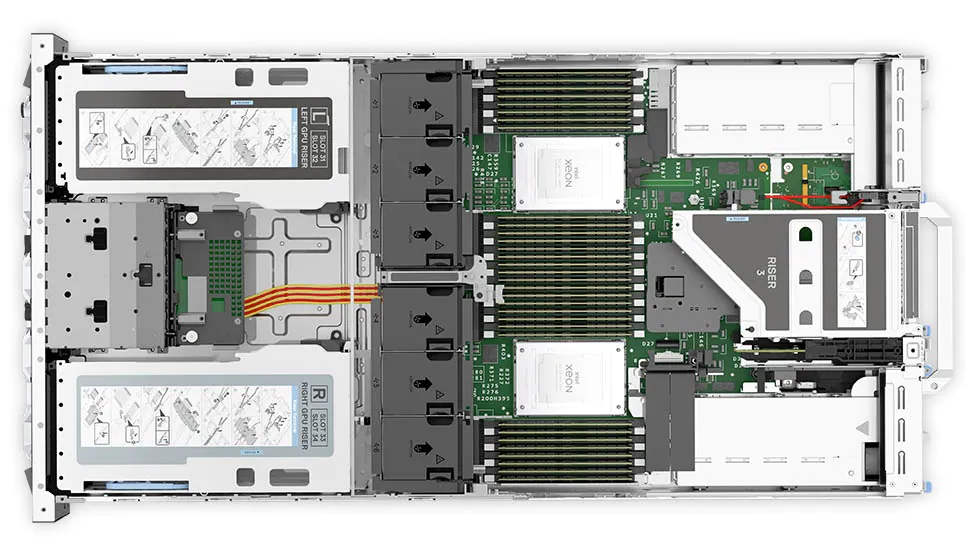

Figure 5: Front view of the Dell PowerEdge R750xa server

Figure 6: Top view of the Dell PowerEdge R750xa server

Performance results

Classical Deep Learning models performance

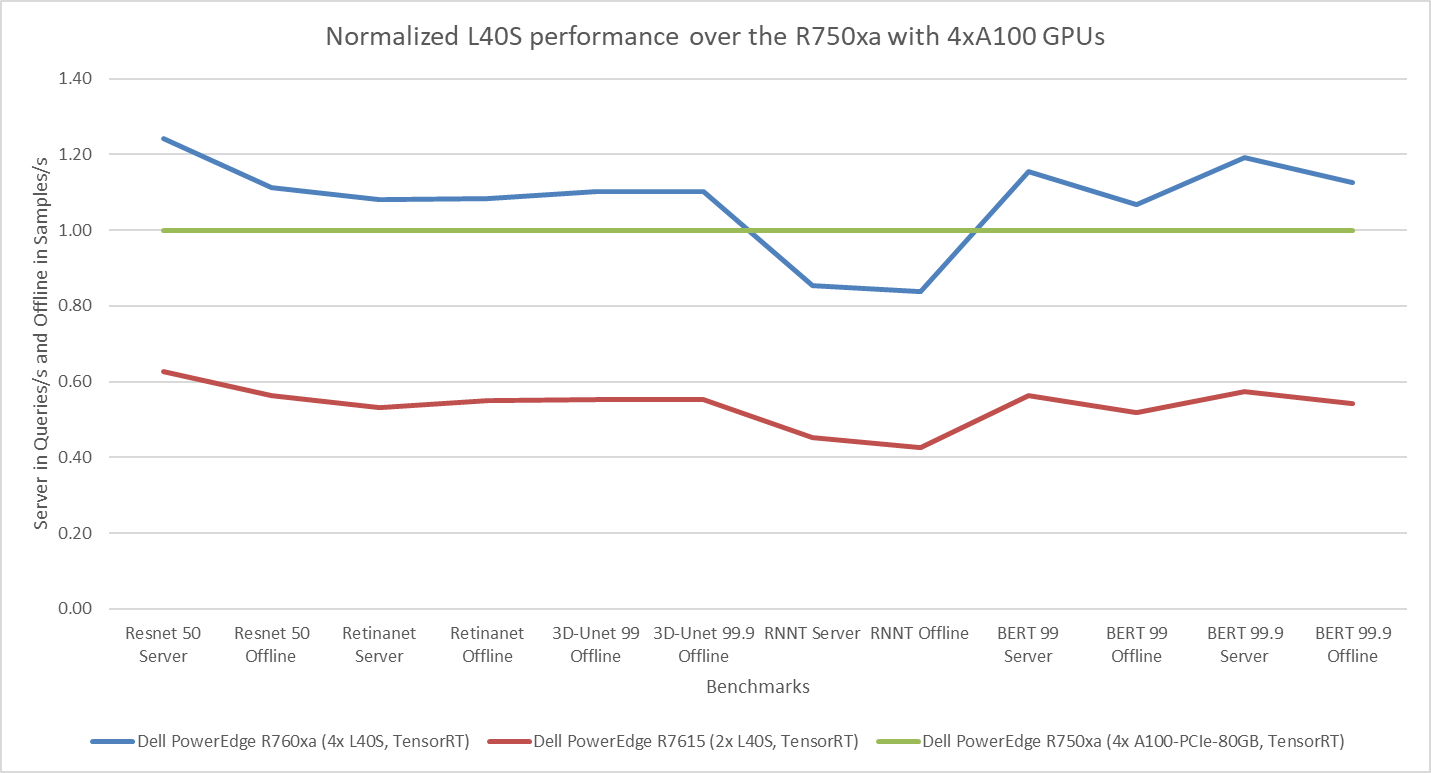

The following figure presents each result normalized to the corresponding result of the Dell PowerEdge R750xa server with four NVIDIA A100 GPUs. This normalization provides an easy-to-read comparison of three systems across several benchmarks.

Figure 7: Normalized NVIDIA L40S GPU performance over the PowerEdge R750xa server with four A100 GPUs

The green trendline represents the performance of the Dell PowerEdge R750xa server with four NVIDIA A100 GPUs. Because each of its results is divided by itself, it scores 1.00 for every benchmark and serves as the baseline for this comparison. The blue trendline represents the performance of the PowerEdge R760xa server with four NVIDIA L40S GPUs, normalized by dividing each benchmark result by the corresponding score of the PowerEdge R750xa server. In most cases, the PowerEdge R760xa server outperforms the PowerEdge R750xa server with NVIDIA A100 GPUs, confirming the expected improvements from the NVIDIA L40S GPU. The red trendline, also normalized over the PowerEdge R750xa server, represents the performance of the PowerEdge R7615 server with two NVIDIA L40S GPUs. Interestingly, the red line closely tracks the blue line. This result suggests that the PowerEdge R7615 server, despite having half as many GPUs, still performs comparably well in most cases, showing its efficiency.
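The normalization behind Figure 7 amounts to dividing each system's result by the baseline system's result for the same benchmark. The following Python sketch shows that calculation; `normalize` is a hypothetical helper, and the values in the usage example are made-up placeholders, not MLPerf results.

```python
def normalize(results, baseline):
    """Divide each benchmark result by the baseline result for the same benchmark.

    Benchmarks missing from the baseline are skipped. Normalizing the baseline
    against itself gives 1.00 for every benchmark.
    """
    return {name: value / baseline[name] for name, value in results.items() if name in baseline}
```

With placeholder values `baseline = {"benchmark_a": 200.0, "benchmark_b": 50.0}` and `other = {"benchmark_a": 300.0, "benchmark_b": 40.0}`, `normalize(other, baseline)` gives 1.5 and 0.8: values above 1.00 mean the system beats the baseline on that benchmark.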

Generative AI performance

The latest submission saw the introduction of the new Stable Diffusion XL benchmark. In the context of generative AI, Stable Diffusion is a text-to-image model that generates coherent image samples. Its name comes from diffusion, the gradual spreading out of information. Consider the example of dropping food coloring into a large bucket of water. Initially, only a small, concentrated portion of the water turns color, but gradually the coloring is evenly distributed in the bucket. A diffusion model learns to run a similar process in reverse, refining random noise step by step into a coherent image.

The following table shows the excellent performance of the PowerEdge R760xa server with the powerful NVIDIA L40S GPU for the GPT-J and Stable Diffusion XL benchmarks. The PowerEdge R760xa takes the top spot in GPT-J and Stable Diffusion XL when compared to other NVIDIA L40S results.

Table 3: Benchmark results for the PowerEdge R760xa server with the NVIDIA L40S GPU

| Benchmark | Dell PowerEdge R760xa L40S result (Server in queries/s, Offline in samples/s) | Dell’s gain over the next-best non-Dell result (%) |
|---|---|---|
| Stable Diffusion XL Server | 0.65 | 5.24 |
| Stable Diffusion XL Offline | 0.67 | 2.28 |
| GPT-J 99 Server | 12.75 | 4.33 |
| GPT-J 99 Offline | 12.61 | 1.88 |
| GPT-J 99.9 Server | 12.75 | 4.33 |
| GPT-J 99.9 Offline | 12.61 | 1.88 |
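The last column compares Dell's result with the next-best non-Dell result for the same benchmark. The table does not state the formula; assuming the gain is computed relative to the competing result, it corresponds to the following Python sketch. Both function names are hypothetical, and because the published values are rounded, any competing result recovered with `implied_next_best` is only an estimate, not a published number.

```python
def percent_gain(dell_result: float, next_best_result: float) -> float:
    """Percentage by which Dell's result exceeds the next-best non-Dell result.

    Assumes higher is better (throughput in queries/s or samples/s) and that
    the gain is measured relative to the competing result.
    """
    if next_best_result <= 0:
        raise ValueError("next_best_result must be positive")
    return (dell_result - next_best_result) / next_best_result * 100.0


def implied_next_best(dell_result: float, gain_pct: float) -> float:
    """Invert percent_gain to estimate the competing result from a published gain.

    Both inputs are rounded in the table, so the output is approximate.
    """
    return dell_result / (1.0 + gain_pct / 100.0)


if __name__ == "__main__":
    # Inputs taken from the GPT-J 99 Server row of Table 3.
    estimate = implied_next_best(12.75, 4.33)
    print(f"Estimated next-best result: {estimate:.2f} queries/s")
    print(f"Round-trip gain: {percent_gain(12.75, estimate):.2f}%")
```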

Conclusion

The MLPerf Inference submissions enable insightful like-for-like comparisons. This blog highlights the impressive performance of the NVIDIA L40S GPU in the Dell PowerEdge R760xa and PowerEdge R7615 servers. Both servers performed well when compared to the Dell PowerEdge R750xa server with the NVIDIA A100 GPU. The performance improvements of the NVIDIA L40S GPU, coupled with the Dell PowerEdge server, position Dell customers to succeed in AI workloads. With the advent of the GPT-J and Stable Diffusion XL models, the Dell PowerEdge server is well positioned to handle generative AI workloads.

Dell PowerEdge Servers Unleash Another Round of Excellent Results with MLPerf™ v4.0 Inference

Wed, 27 Mar 2024 15:12:53 -0000


Today marks the unveiling of MLPerf v4.0 Inference results. MLPerf has emerged as an industry benchmark for AI systems. These benchmarks assess the system-level performance of state-of-the-art hardware and software stacks. The benchmarking suite contains image classification, object detection, natural language processing, speech recognition, recommenders, medical image segmentation, LLM 6B summarization, LLM 70B question answering, and text-to-image benchmarks that aim to replicate different deployment scenarios such as the data center and edge.

Dell Technologies is a founding member of MLCommons™ and has been actively making submissions since the inception of the Inference and Training benchmarks. See our MLPerf™ Inference v2.1 with NVIDIA GPU-Based Benchmarks on Dell PowerEdge Servers white paper that introduces the MLCommons Inference benchmark.

Our performance results are outstanding and reflect our commitment to delivering high system performance. These improvements enable higher system performance when it is most needed, for example, for demanding generative AI (GenAI) workloads.

What is new with Inference 4.0?

Inference 4.0 and Dell’s submission include the following:

- Newly introduced Llama 2 question answering and Stable Diffusion XL text-to-image benchmarks, with submissions across different Dell PowerEdge XE platforms.

- Improved GPT-J (225 percent improvement) and DLRM-DCNv2 (100 percent improvement) performance. Higher GPT-J throughput means faster natural language processing tasks such as summarization, and higher DLRM-DCNv2 throughput means faster relevant recommendations that can boost revenue.

- First-time submission of server results with the recently released PowerEdge R7615 and PowerEdge XR8620t servers with NVIDIA accelerators.

- Intel-based CPU-only results, in addition to accelerator-based results.

- Results for PowerEdge servers with Qualcomm accelerators.

- Power results showing high performance/watt scores for the submissions.

- Virtualized results on Dell servers with Broadcom.
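The list does not state what the GPT-J and DLRM-DCNv2 improvements are measured against. Assuming each percentage is the increase in throughput relative to an earlier result (for example, a previous Dell submission), a percent improvement $p$ corresponds to a throughput multiplier of $1 + p/100$:

```latex
\[
\frac{T_{\text{new}}}{T_{\text{old}}} = 1 + \frac{p}{100},
\qquad
p = 225 \;\Rightarrow\; 3.25\times,
\qquad
p = 100 \;\Rightarrow\; 2\times
\]
```

Under this reading, a 225 percent improvement means more than three times the earlier throughput, not 2.25 times.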

Overview of results

Dell Technologies delivered 187 data center, 28 data center power, 42 edge, and 24 edge power results. Some of the more impressive results were generated by our:

- Dell PowerEdge XE9680, XE9640, and XE8640 servers with NVIDIA H100 Tensor Core GPUs

- Dell PowerEdge R7615, R750xa, and R760xa servers with NVIDIA L40S and A100 Tensor Core GPUs

- Dell PowerEdge XR7620 and XR8620t servers with NVIDIA L4 Tensor Core GPUs

- Dell PowerEdge R760 server with Intel Emerald Rapids CPUs

- Dell PowerEdge R760 server with Qualcomm QAIC100 Ultra accelerators

NVIDIA-based results include the following GPUs:

- Eight-way NVIDIA H100 GPU (SXM)

- Four-way NVIDIA H100 GPU (SXM)

- Four-way NVIDIA A100 GPU (PCIe)

- Four-way NVIDIA L40S GPU (PCIe)

- NVIDIA L4 GPU

These accelerators were benchmarked on different servers such as PowerEdge XE9680, XE8640, XE9640, R760xa, XR7620, and XR8620t servers across data center and edge suites.

Dell contributed about one-fourth of the closed data center and edge submissions. The large number of results offers end users an opportunity to make data-driven purchase decisions and set performance and data center design expectations.

Interesting Dell data points

The most interesting data points include:

- Performance results across different benchmarks are excellent and show that Dell servers meet the increasing need to serve different workload types.

- Among 20 submitters, Dell Technologies was one of the few companies that covered all benchmarks in the closed division for data center suites.

- Compared to other four-way systems, the PowerEdge XE8640 and PowerEdge XE9640 servers won titles across all the benchmarks, including the newly launched Stable Diffusion XL and Llama 2 benchmarks.

- Compared to other eight-way systems, the PowerEdge XE9680 server won several titles for benchmarks such as ResNet Server, 3D-Unet, BERT-99, and BERT-99.9 Server.

- The PowerEdge XE9680 server delivers the highest performance/watt compared to other submitters with eight-way NVIDIA H100 GPUs for ResNet Server, GPT-J Server, and Llama 2 Offline.

- For edge benchmarks, the Dell PowerEdge XR8620t server with NVIDIA L4 GPUs outperformed other submissions.

- The PowerEdge R750xa server with NVIDIA A100 PCIe GPUs outperformed other submissions on the ResNet, RetinaNet, 3D-Unet, RNN-T, BERT 99.9, and BERT 99 benchmarks.

- The PowerEdge R760xa server with NVIDIA L40S GPUs outperformed other submissions on the ResNet Server, RetinaNet Server, RetinaNet Offline, 3D-UNet 99, RNN-T, BERT-99, BERT-99.9, DLRM-v2-99, DLRM-v2-99.9, GPTJ-99, GPTJ-99.9, Stable Diffusion XL Server, and Stable Diffusion XL Offline benchmarks.

Highlights

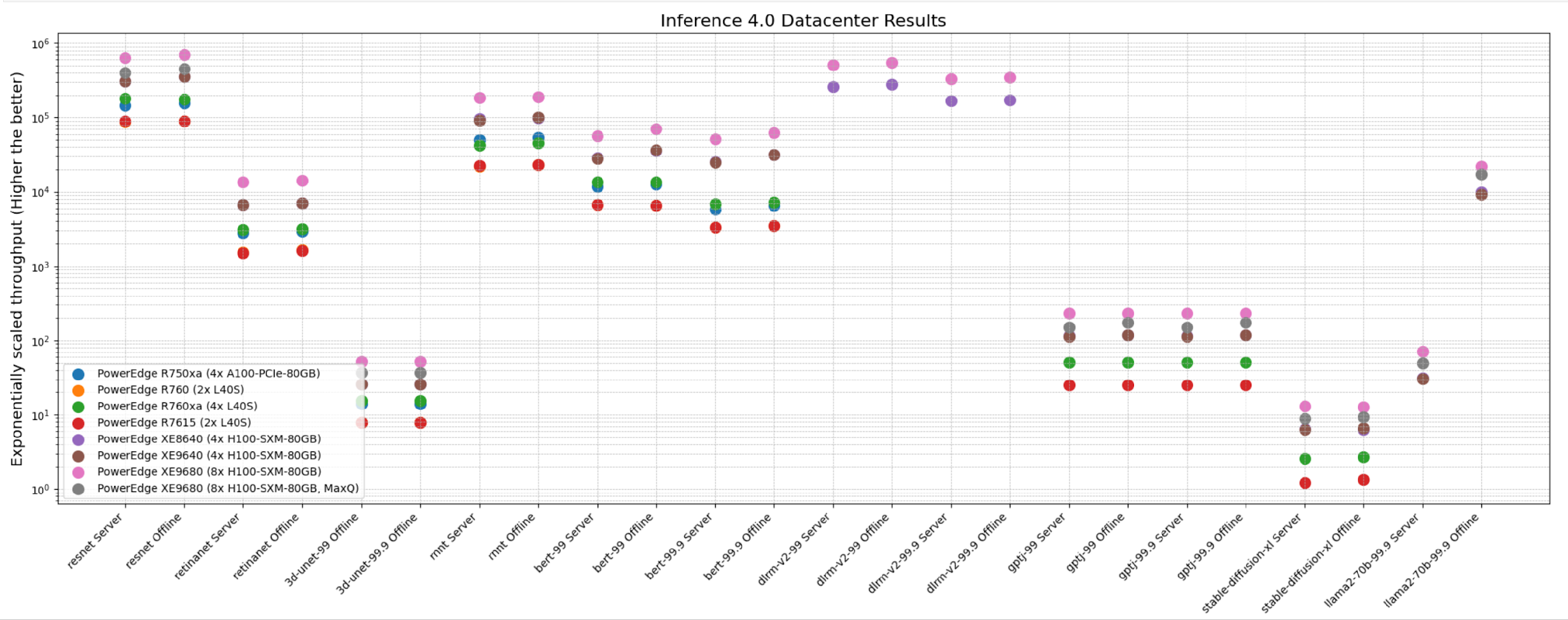

The following figure shows Offline and Server scenario results for the data center suite. These results provide an overview; follow-up blogs will provide more details about the results.

The following figure shows that these servers delivered excellent performance for all models in the benchmark, such as ResNet, RetinaNet, 3D-UNet, RNN-T, BERT, DLRM-v2, GPT-J, Stable Diffusion XL, and Llama 2. Because the benchmarks report results on very different scales, the figure uses a logarithmic y-axis:

Figure 1: System throughput for submitted systems for the data center suite.

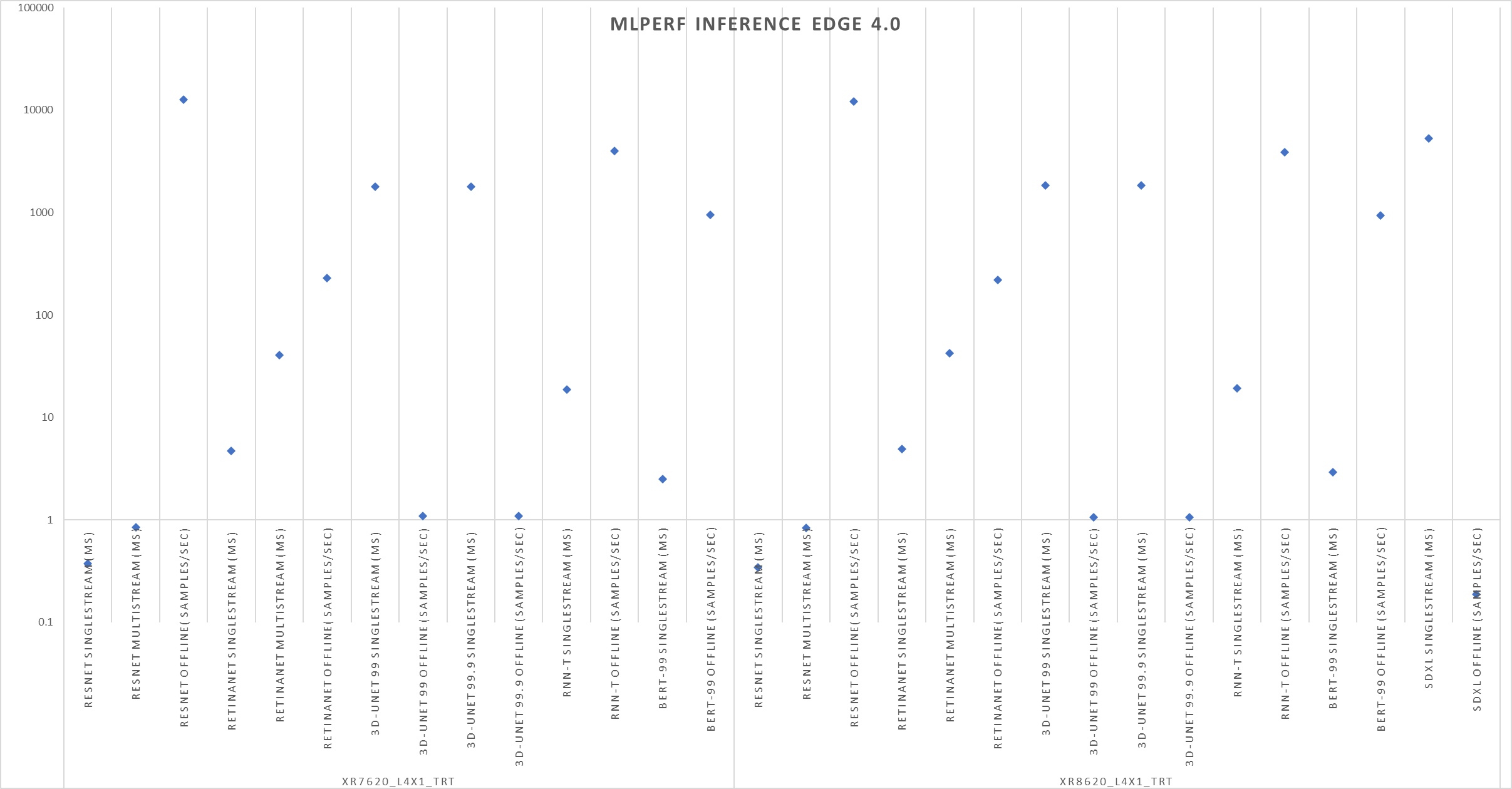

The following figure shows single-stream and multistream scenario results for the edge suite for the ResNet, RetinaNet, 3D-Unet, RNN-T, BERT 99, GPT-J, and Stable Diffusion XL benchmarks. For the single-stream and multistream scenarios, lower latency is better; for the Offline scenario, higher throughput is better.

Figure 2: Edge results with PowerEdge XR7620 and XR8620t servers overview
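Because MLPerf scenarios report different metrics, "better" depends on the scenario. The following Python sketch encodes that rule for the four standard MLPerf scenarios: SingleStream and MultiStream report latency (lower is better), while Offline and Server report throughput (higher is better). The `Scenario` enum and the helper functions are hypothetical illustrations, not part of the MLPerf LoadGen API, and the example values are placeholders, not submitted results.

```python
from enum import Enum


class Scenario(Enum):
    SINGLE_STREAM = "SingleStream"
    MULTI_STREAM = "MultiStream"
    OFFLINE = "Offline"
    SERVER = "Server"


# Latency-based scenarios: a smaller reported value is better.
LATENCY_SCENARIOS = {Scenario.SINGLE_STREAM, Scenario.MULTI_STREAM}


def is_better(scenario: Scenario, candidate: float, reference: float) -> bool:
    """Return True if `candidate` beats `reference` for the given scenario."""
    if scenario in LATENCY_SCENARIOS:
        return candidate < reference  # latency: lower wins
    return candidate > reference  # throughput: higher wins


def best(scenario: Scenario, results: dict[str, float]) -> str:
    """Return the name of the system with the best result for the scenario."""
    if scenario in LATENCY_SCENARIOS:
        return min(results, key=results.__getitem__)
    return max(results, key=results.__getitem__)


if __name__ == "__main__":
    # Hypothetical placeholder values, not submitted results.
    latencies_ms = {"system-a": 1.8, "system-b": 2.4}
    print(best(Scenario.SINGLE_STREAM, latencies_ms))  # system-a
    throughputs = {"system-a": 900.0, "system-b": 1100.0}
    print(best(Scenario.OFFLINE, throughputs))  # system-b
```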

Conclusion

The preceding results were officially submitted to MLCommons. They are MLPerf-compliant results for the Inference v4.0 benchmark across various benchmarks and suites for all the tasks in the benchmark, such as image classification, object detection, natural language processing, speech recognition, recommenders, medical image segmentation, LLM 6B summarization, LLM 70B question answering, and text to image. These results demonstrate that Dell PowerEdge XE9680, XE8640, XE9640, and R760xa servers deliver high performance for inference workloads. Dell Technologies secured several #1 titles that make Dell PowerEdge servers an excellent choice for data center and edge inference deployments. End users can use the large number of submissions to make server performance and sizing decisions that support their enterprise AI transformation, and the results show Dell's commitment to delivering higher performance.

MLCommons Results

https://mlcommons.org/en/inference-datacenter-40/

https://mlcommons.org/en/inference-edge-40/

The preceding graphs are MLCommons results for MLPerf IDs from 4.0-0025 to 4.0-0035 on the closed datacenter, 4.0-0036 to 4.0-0038 on the closed edge, 4.0-0033 in the closed datacenter power, and 4.0-0037 in closed edge power.