Overview of MLPerf™ Inference v2.0 Results on Dell Servers

Fri, 09 Sep 2022 15:15:36 -0000


Dell Technologies has been an active participant in the MLCommons™ Inference benchmark submission since day one. We have completed five rounds of inference submission.

This blog provides an overview of the latest results of MLPerf Inference v2.0 closed data center, closed data center power, closed edge, and closed edge power categories on Dell servers from our HPC & AI Innovation Lab. It shows inference performance and power efficiency (performance per watt) for Dell GPU-based servers (DSS 8440, PowerEdge R750xa, PowerEdge XE2420, PowerEdge XE8545, and PowerEdge XR12). The previous blog about MLPerf Inference v1.1 performance results can be found here.

What is new?

- This round had 3,800 performance results, compared to 1,800 performance results for v1.1. The number of submitted systems also more than doubled, from 424 systems in v1.1 to 885 systems in v2.0.

- For the 3D U-Net benchmark, the dataset now used is the KiTS 2019 Kidney Tumor Segmentation set.

- Early stopping was introduced in this round to replace a deterministic minimum query count with a function that dynamically determines when further runs are not required to identify additional performance gain.

Results at a glance

Dell Technologies submitted 167 results to the various categories. The Dell team made 86 submissions to the closed data center category, 28 submissions to the closed data center power category, and 53 submissions to the closed edge category. For the closed data center category, the Dell team submitted the second most results. In fact, Dell Technologies submitted results from 17 different system configurations with the NVIDIA TensorRT and NVIDIA Triton inference engines. Among these 17 configurations, the PowerEdge XE2420 server with T4 and A30 GPUs and the PowerEdge XR12 server with the A2 GPU were two new systems that had not been submitted before. Additionally, Dell Technologies submitted to the reintroduced MultiStream scenario. Only Dell Technologies submitted results for different host operating systems.

Noteworthy results

Noteworthy results include:

- The PowerEdge XE8545 and R750xa servers yield Number One results for performance per accelerator with NVIDIA A100 GPUs. The use cases for this top classification include Image Classification, Object Detection, Speech-to-text, Medical Imaging, Natural Language Processing, and Recommendation.

- The DSS 8440 server yields Number Two results for system performance among all submissions for multiple benchmarks, including the Speech-to-text, Object Detection, Natural Language Processing, and Medical Image Segmentation use cases.

- The PowerEdge R750xa server yields Number One results for the highest system performance for multiple benchmarks including Image Classification, Object Detection, Speech-to-text, Natural Language Processing, and Recommendation use cases among all the PCIe-based GPU servers.

- The PowerEdge XE8545 server yields Number One results for the lowest MultiStream latency with NVIDIA Multi-Instance GPU (MIG) in the edge category for the Image Classification and Object Detection use cases.

- The PowerEdge XE2420 server yields Number One results for the highest T4 GPU inference results for the Image Classification, Speech-to-text, and Recommendation use cases.

- The PowerEdge XR12 server yields Number One results for the highest performance per watt among NVIDIA A2 GPU results in the power category for the Image Classification, Object Detection, Speech-to-text, Natural Language Processing, and Recommendation use cases.

MLPerf Inference v2.0 benchmark results

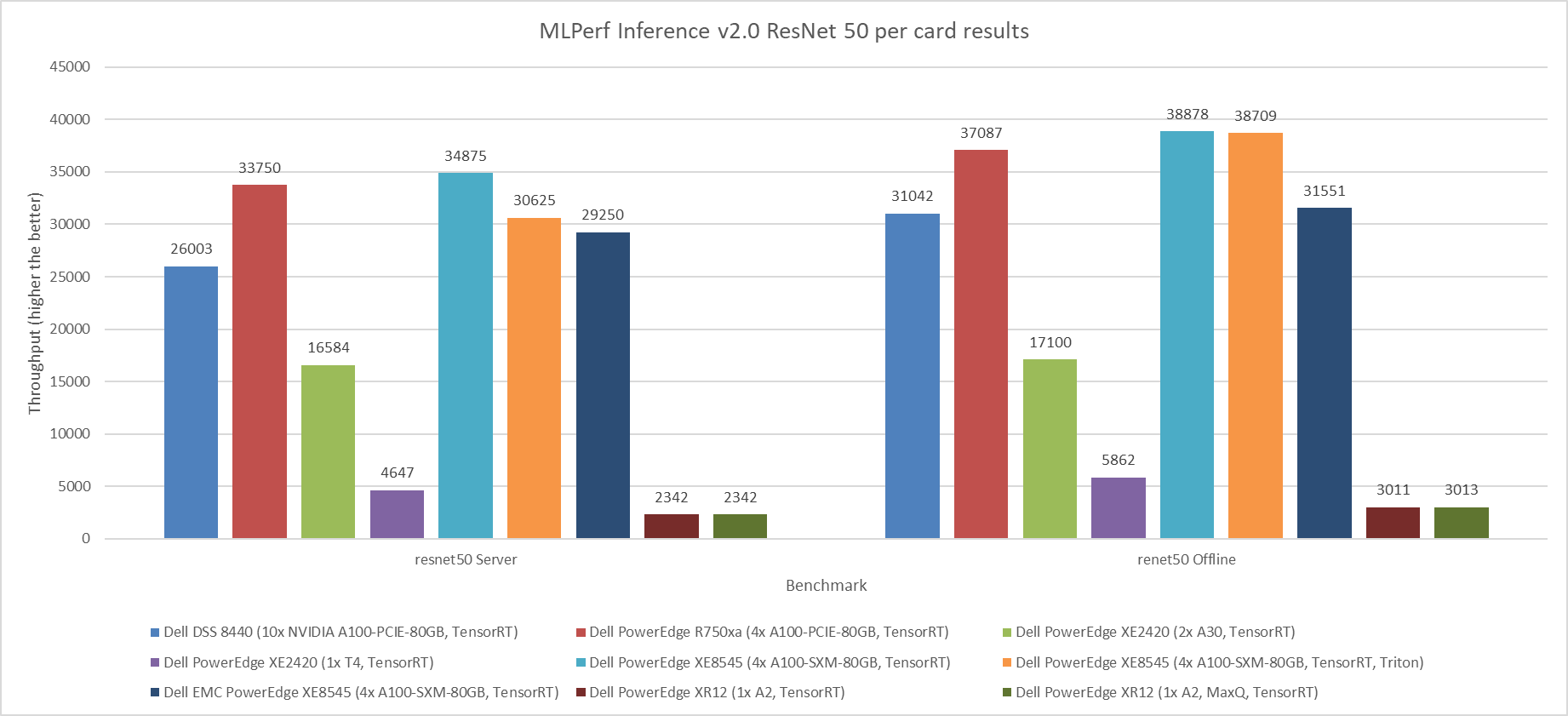

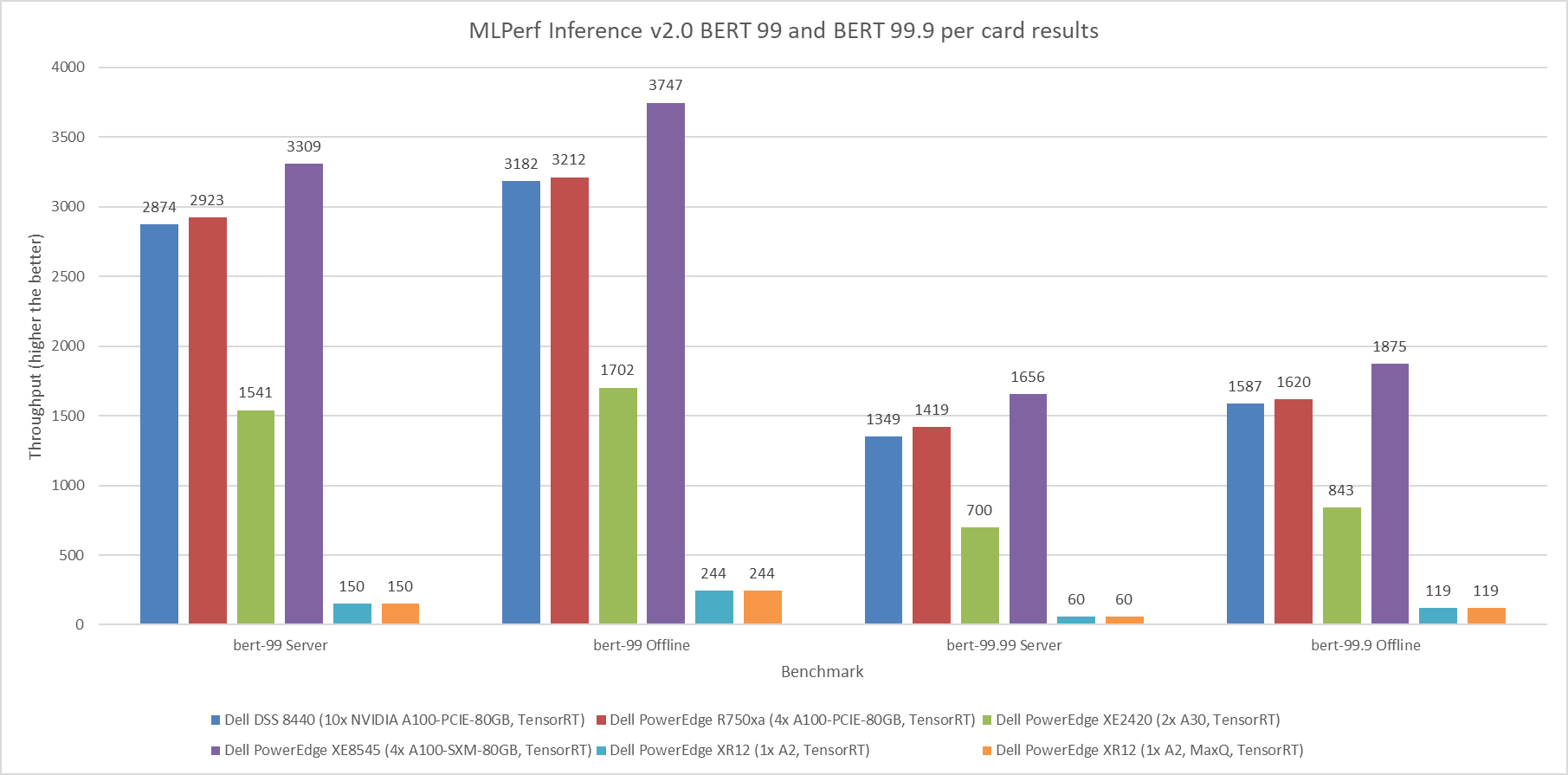

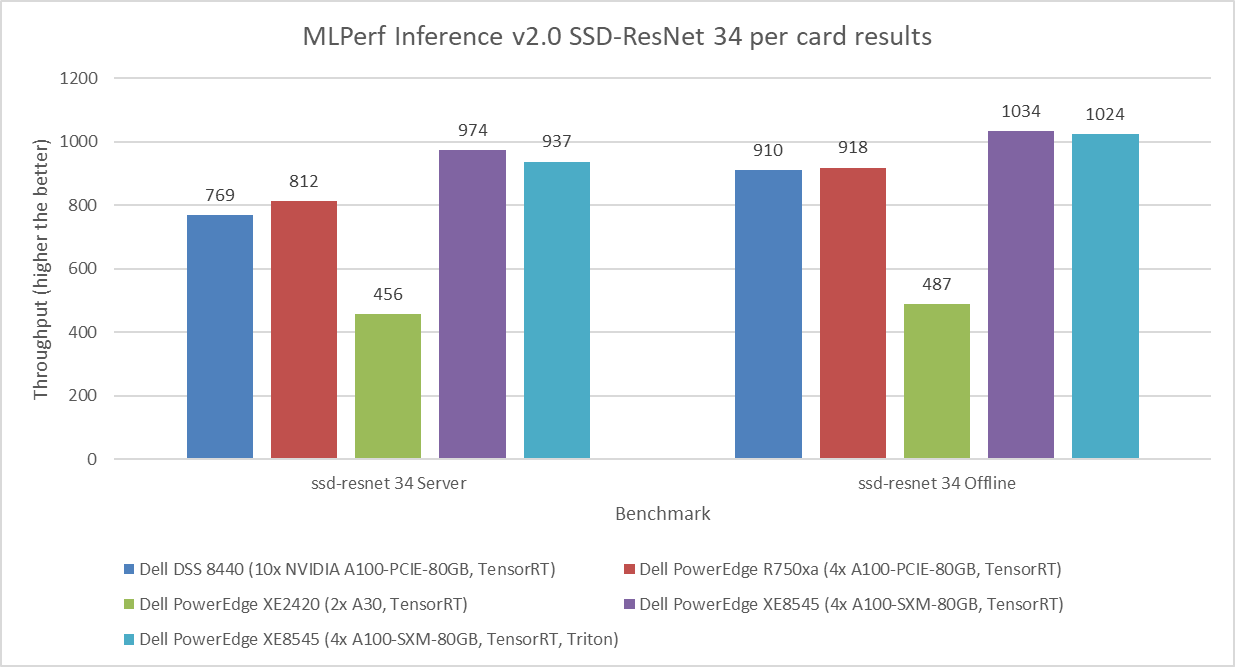

The following graphs highlight the performance metrics for the Server and Offline scenarios across the various benchmarks from MLCommons. Dell Technologies presents results as a method for our customers to identify options to suit their deep learning application demands. Additionally, this performance data serves as a reference point to enable sizing of deep learning clusters. Dell Technologies strives to submit as many results as possible so that customers can find answers to their questions.

For the Server scenario, the performance metric is queries per second (QPS). For the Offline scenario, the performance metric is Offline samples per second. In general, the metrics represent throughput, and a higher throughput indicates a better result. In the following graphs, the Y axis is a logarithmically scaled axis representing throughput and the X axis represents the systems under test (SUTs) and their corresponding models. The SUTs are described in the appendix.

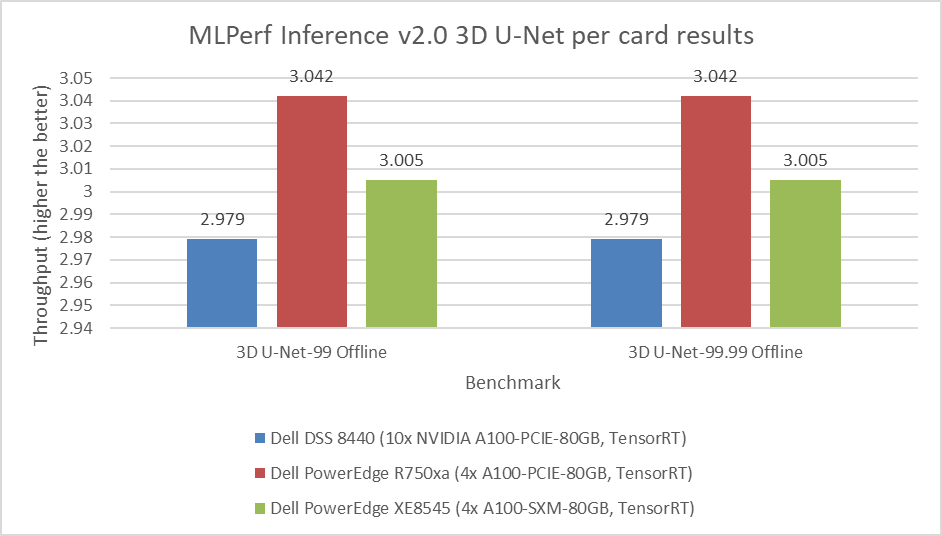

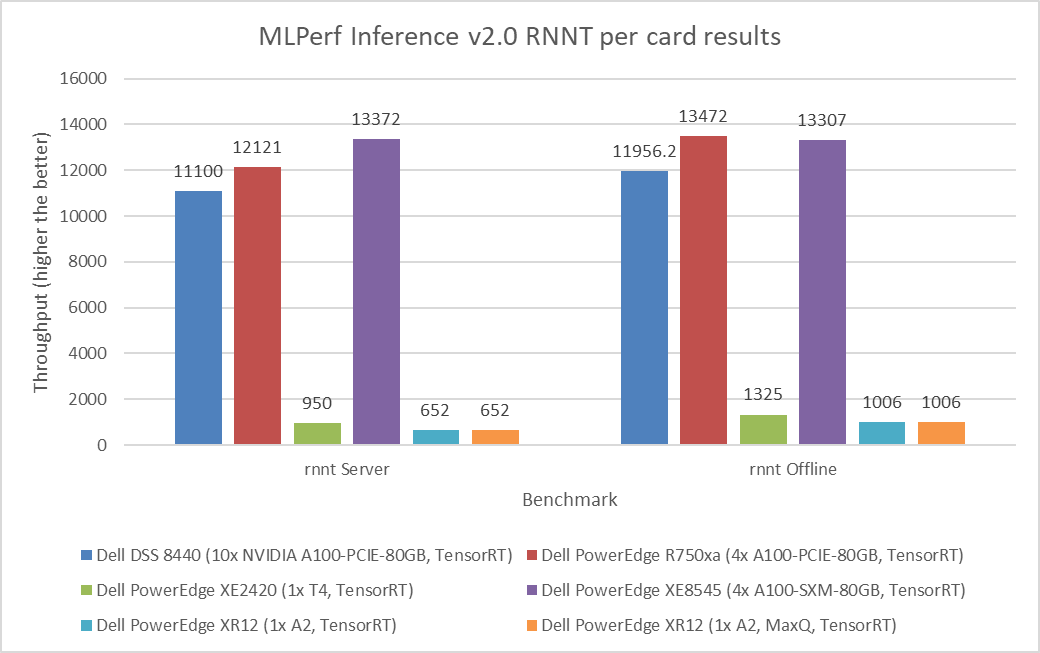

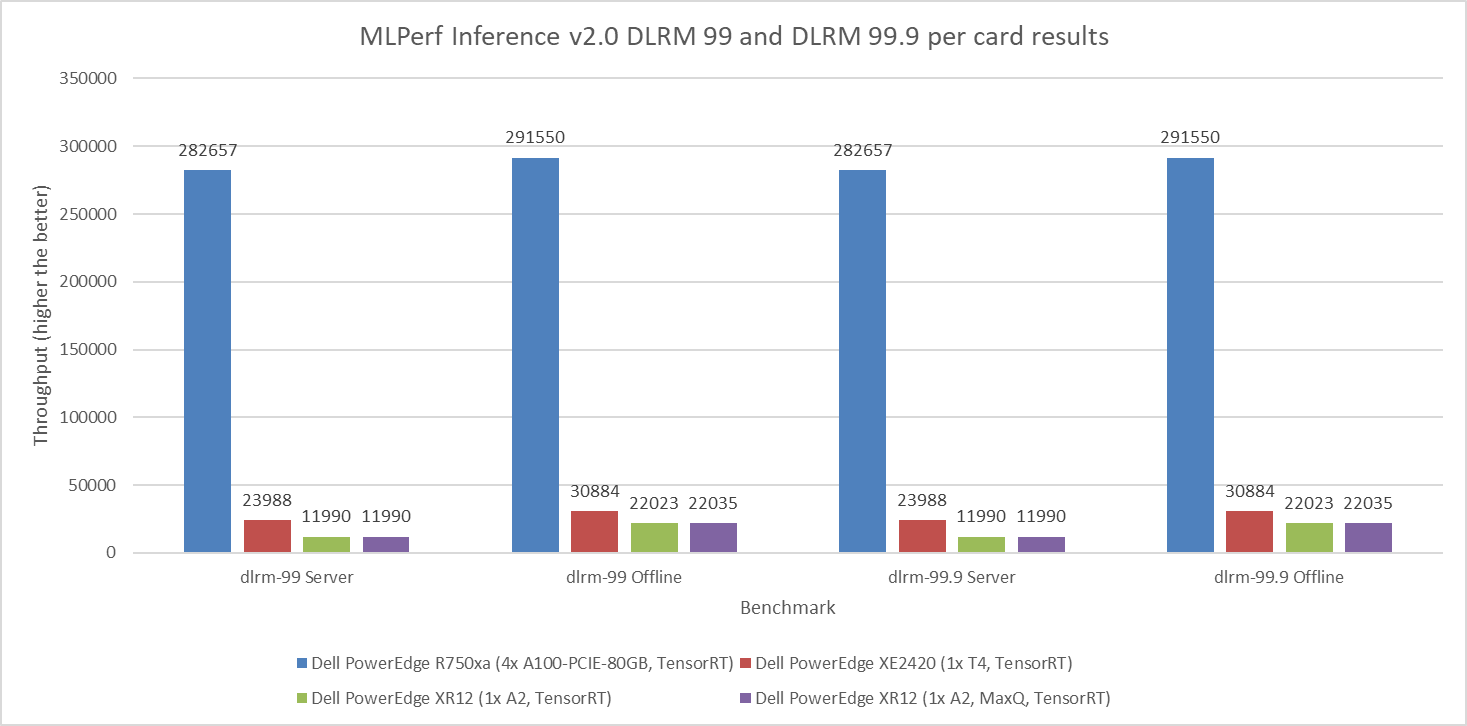

Figure 1 through Figure 6 show the per card performance of the various SUTs on the ResNet 50, BERT, SSD-ResNet 34, 3D U-Net, RNNT, and DLRM models respectively in the Server and Offline scenarios:

Figure 1: MLPerf Inference v2.0 ResNet 50 per card results

Figure 2: MLPerf Inference v2.0 BERT default and high accuracy per card results

Figure 3: MLPerf Inference v2.0 SSD-ResNet 34 per card results

Figure 4: MLPerf Inference v2.0 3D U-Net per card results

Figure 5: MLPerf Inference v2.0 RNNT per card results

Figure 6: MLPerf Inference v2.0 DLRM default and high accuracy per card results
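Per card results let systems with different GPU counts be compared side by side. The following Python sketch shows one plausible way to derive such a number, assuming that per card performance is simply the system throughput divided by the number of GPUs. The function name and the throughput value are hypothetical placeholders, not MLPerf results; only the GPU counts (4 and 10) come from Table 1 in the appendix.

```python
def per_card_throughput(system_throughput: float, gpu_count: int) -> float:
    """Divide a system-level throughput (QPS or samples/s) by the GPU count."""
    if gpu_count <= 0:
        raise ValueError("gpu_count must be a positive integer")
    return system_throughput / gpu_count


# Placeholder throughput, chosen only to illustrate the arithmetic.
example_throughput = 1000.0

# GPU counts as listed for the R750xa (4) and DSS 8440 (10) SUTs.
print(per_card_throughput(example_throughput, 4))
print(per_card_throughput(example_throughput, 10))
```

Normalizing in this way removes the advantage that a larger GPU count gives to system-level totals, which is why per card and per system rankings can differ.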

Observations

The results in this blog have been officially submitted to and accepted by the MLCommons organization. These results have passed compliance tests, been peer reviewed, and adhered to the constraints enforced by MLCommons. Customers and partners can reproduce our results by following steps to run MLPerf Inference v2.0 in its GitHub repository.

Submissions from Dell Technologies included approximately 140 performance results and 28 performance and power results. Across the various workload tasks including Image Classification, Object Detection, Medical Image Segmentation, Speech-to-text, Language Processing, and Recommendation, server performance from Dell Technologies was promising.

Dell servers delivered strong performance and power results. They were configured with different GPUs such as:

- NVIDIA A30 Tensor Core GPU

- NVIDIA A100 (PCIe and SXM)

- NVIDIA T4 Tensor Core GPU

- NVIDIA A2 Tensor Core GPU, which is newly released

More information about performance for specific configurations that are not discussed in this blog can be found in the v1.1 or v1.0 results.

The submission included results from different inference backends such as NVIDIA TensorRT and NVIDIA Triton. The appendix provides a summary of the full hardware and software stacks.

Conclusion

This blog quantifies the performance of Dell servers in the MLPerf Inference v2.0 round of submission. Readers can use these results to make informed planning and purchasing decisions for their AI workload needs.

Appendix

Software stack

The NVIDIA Triton Inference Server is an open-source inferencing software tool that aids in the deployment and execution of AI models at scale in production. Triton works not only with all major frameworks but also with customizable backends, further enabling developers to focus on their AI development. It is a versatile tool because it supports any inference type and can be deployed on any platform including CPU, GPU, data center, cloud, or edge. Additionally, Triton supports the rapid and reliable deployment of AI models at scale by integrating well with Kubernetes, Kubeflow, Prometheus, and Grafana. Triton supports the HTTP/REST and gRPC protocols that allow remote clients to request inferencing for any model that the server manages.
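To illustrate what a remote HTTP/REST inference request can look like, the following Python sketch builds (but does not send) a request in the shape of the KServe v2 inference protocol that Triton implements. It is a minimal sketch under stated assumptions: the host and port, the model name `example_model`, the input tensor name `INPUT0`, and the tensor shape and values are all hypothetical and must match a model that a real server actually hosts.

```python
import json
from urllib.request import Request


def build_infer_request(base_url, model_name, input_name, shape, datatype, data):
    """Build an HTTP POST request carrying one input tensor as JSON."""
    body = {
        "inputs": [
            {
                "name": input_name,      # hypothetical tensor name
                "shape": list(shape),
                "datatype": datatype,    # for example "FP32"
                "data": list(data),      # flattened tensor values
            }
        ]
    }
    return Request(
        url=f"{base_url}/v2/models/{model_name}/infer",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Assumed local server address; nothing is sent over the network here.
req = build_infer_request(
    "http://localhost:8000", "example_model", "INPUT0", [1, 4], "FP32",
    [0.1, 0.2, 0.3, 0.4],
)
print(req.get_method(), req.full_url)
```

Passing the request to `urllib.request.urlopen` would send it to a running server. In practice, a dedicated client library is usually a more convenient choice than hand-built JSON.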

The NVIDIA TensorRT SDK delivers high-performance deep learning inference that includes an inference optimizer and runtime. It is powered by CUDA and offers a unified solution to deploy on various platforms including edge or data center. TensorRT supports the major frameworks including PyTorch, TensorFlow, ONNX, and MATLAB. It can import models trained in these frameworks by using integrated parsers. For inference, TensorRT performs orders of magnitude faster than its CPU-only counterparts.

NVIDIA MIG can partition GPUs into several instances that extend compute resources among users. MIG enables predictable performance and maximum GPU use by running jobs simultaneously on the different instances with dedicated resources for compute, memory, and memory bandwidth.

SUT configuration

The following tables describe the SUTs from this round of inference submission:

Table 1: MLPerf Inference v2.0 system configurations for DSS 8440 and PowerEdge R750xa servers

| Platform | DSS 8440 10xA100 TensorRT | R750xa 4xA100 TensorRT |
|---|---|---|
| MLPerf system ID | DSS8440_A100_PCIE_80GBx10_TRT | R750xa_A100_PCIE_80GBx4_TRT |
| Operating system | CentOS 8.2 | CentOS 8.2 |
| CPU | Intel Xeon Gold 6248R CPU @ 3.00 GHz | Intel Xeon Gold 6338 CPU @ 2.00 GHz |
| Memory | 768 GB | 1 TB |
| GPU | NVIDIA A100 | NVIDIA A100 |
| GPU form factor | PCIe | PCIe |
| GPU count | 10 | 4 |
| Software stack | TensorRT 8.4.0, CUDA 11.6, cuDNN 8.3.2, Driver 510.39.01, DALI 0.31.0 | TensorRT 8.4.0, CUDA 11.6, cuDNN 8.3.2, Driver 510.39.01, DALI 0.31.0 |

Table 2: MLPerf Inference v2.0 system configurations for PowerEdge XE2420 servers

Platform | PowerEdge XE2420 1xA30 TensorRT | PowerEdge XE2420 2xA30 TensorRT | PowerEdge XE2420 1xA30 TensorRT MaxQ | PowerEdge XE2420 1xT4 TensorRT |

MLPerf system ID | XE2420_A30x1_TRT | XE2420_A30x2_TRT | XE2420_A30x1_TRT_MaxQ | XE2420_T4x1_TRT |

Operating system | Ubuntu 20.04.4 | CentOS 8.2.2004 | ||

CPU | Intel Xeon Gold 6252 CPU @ 2.10 GHz | Intel Xeon Gold 6252N CPU @ 2.30 GHz | Intel Xeon Silver 4216 CPU @ 2.10 GHz | Intel Xeon Gold 6238 CPU @ 2.10 GHz |

Memory | 1 TB | 64 GB | 256 GB | |

GPU | NVIDIA A30 | NVIDIA T4 | ||

GPU form factor | PCIe | |||

GPU count | 1 | 2 | 1 | 1 |

Software stack | TensorRT 8.4.0 CUDA 11.6 cuDNN 8.3.2 Driver 510.39.01 DALI 0.31.0 | |||

Table 3: MLPerf Inference v2.0 system configurations for PowerEdge XE8545 servers

| Platform | PowerEdge XE8545 4xA100 TensorRT | PowerEdge XE8545 4xA100 TensorRT, Triton | PowerEdge XE8545 1xA100 MIG 1x1g.10gb TensorRT |
|---|---|---|---|
| MLPerf system ID | XE8545_A100_SXM_80GBx4_TRT | XE8545_A100_SXM_80GBx4_TRT_Triton | XE8545_A100_SXM_80GB_1xMIG_TRT |
| Operating system | Ubuntu 20.04.3 | Ubuntu 20.04.3 | Ubuntu 20.04.3 |
| CPU | AMD EPYC 7763 | AMD EPYC 7763 | AMD EPYC 7763 |
| Memory | 1 TB | 1 TB | 1 TB |
| GPU | NVIDIA A100-SXM-80 GB | NVIDIA A100-SXM-80 GB | NVIDIA A100-SXM-80 GB (1x1g.10gb MIG) |
| GPU form factor | SXM | SXM | SXM |
| GPU count | 4 | 4 | 1 |
| Software stack | TensorRT 8.4.0, CUDA 11.6, cuDNN 8.3.2, Driver 510.47.03, DALI 0.31.0 | TensorRT 8.4.0, CUDA 11.6, cuDNN 8.3.2, Driver 510.47.03, DALI 0.31.0, Triton 22.01 | TensorRT 8.4.0, CUDA 11.6, cuDNN 8.3.2, Driver 510.47.03, DALI 0.31.0 |

Table 4: MLPerf Inference v2.0 system configurations for PowerEdge XR12 servers

| Platform | PowerEdge XR12 1xA2 TensorRT | PowerEdge XR12 1xA2 TensorRT MaxQ |
|---|---|---|
| MLPerf system ID | XR12_A2x1_TRT | XR12_A2x1_TRT_MaxQ |
| Operating system | CentOS 8.2 | CentOS 8.2 |
| CPU | Intel Xeon Gold 6312U CPU @ 2.40 GHz | Intel Xeon Gold 6312U CPU @ 2.40 GHz |
| Memory | 256 GB | 256 GB |
| GPU | NVIDIA A2 | NVIDIA A2 |
| GPU form factor | PCIe | PCIe |
| GPU count | 1 | 1 |
| Software stack | TensorRT 8.4.0, CUDA 11.6, cuDNN 8.3.2, Driver 510.39.01, DALI 0.31.0 | TensorRT 8.4.0, CUDA 11.6, cuDNN 8.3.2, Driver 510.39.01, DALI 0.31.0 |
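The MLPerf system IDs in the tables above appear to follow a loose pattern of platform, GPU model, GPU count, and backend suffixes. The following Python sketch extracts a few fields from such an ID. The pattern is inferred from the IDs listed in this blog and is not an official MLCommons naming specification; the `SystemId` type and the `parse_system_id` function are hypothetical helpers.

```python
import re
from typing import NamedTuple, Optional


class SystemId(NamedTuple):
    platform: str
    gpu_count: Optional[int]  # None when no "<name>x<count>" token is present
    uses_triton: bool
    max_q: bool


def parse_system_id(system_id: str) -> SystemId:
    """Split an ID on underscores and pick out the fields we recognize."""
    tokens = system_id.split("_")
    gpu_count = None
    for token in tokens[1:]:
        # Tokens such as "80GBx10" or "A30x1" end in a lowercase x and a count.
        match = re.fullmatch(r".+x(\d+)", token)
        if match:
            gpu_count = int(match.group(1))
            break
    return SystemId(tokens[0], gpu_count, "Triton" in tokens, "MaxQ" in tokens)


for sid in (
    "DSS8440_A100_PCIE_80GBx10_TRT",
    "XE8545_A100_SXM_80GBx4_TRT_Triton",
    "XR12_A2x1_TRT_MaxQ",
    "XE8545_A100_SXM_80GB_1xMIG_TRT",  # MIG ID: count token has a different form
):
    print(parse_system_id(sid))
```

The MIG system ID does not match the assumed count pattern, so the sketch reports its GPU count as `None` instead of guessing.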

Related Blog Posts

Promising MLPerf™ Inference 3.1 Performance of Dell PowerEdge XE8640 and XE9640 Servers with NVIDIA H100 GPUs

Wed, 04 Oct 2023 20:54:55 -0000


Abstract

The recent release of MLPerf Inference v3.1 showcased the latest performance results from Dell's new PowerEdge XE8640 and PowerEdge XE9640 servers, and another submission from the PowerEdge R760xa server. The data underscores the outstanding performance of PowerEdge servers. These benchmarks illustrate the surging demand for compute power, with PowerEdge servers consistently emerging on top across various models, claiming numerous top titles. This blog examines the expected performance for image classification, object detection, question answering, speech recognition, medical image segmentation and summarization, focusing specifically on the capabilities of the PCIe and SXM form factor NVIDIA H100 Tensor Core GPUs in the new generation PowerEdge systems.

Overview of top title results

The PowerEdge XE8640 and XE9640 servers won several #1 titles.

For instance, the PowerEdge XE8640 server emerged as a winner in all benchmarks in the data center suite such as image classification, object detection, question answering, speech recognition, medical image segmentation, and summarization relative to other systems having four NVIDIA H100 SXM GPUs. The PowerEdge XE9640 server received #1 titles for all benchmarks previously mentioned relative to other liquid-cooled systems having four NVIDIA H100 SXM GPUs.

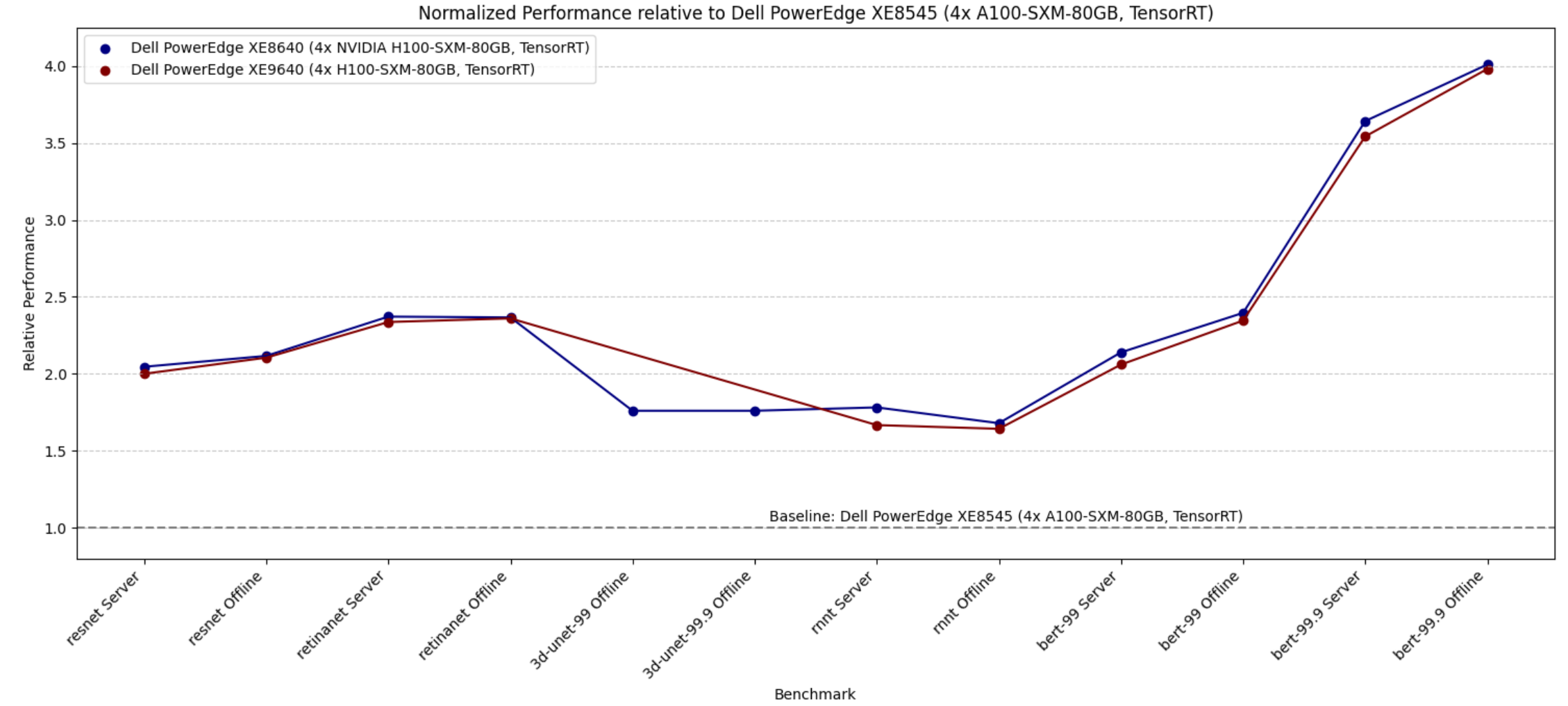

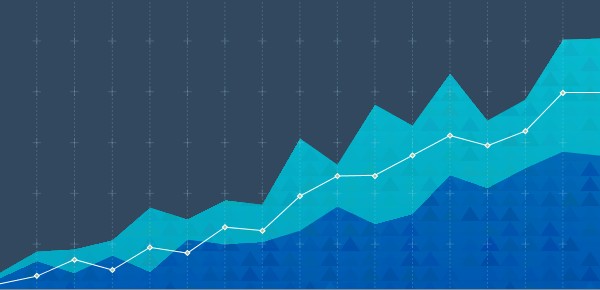

Comparison from the previous rounds of submission

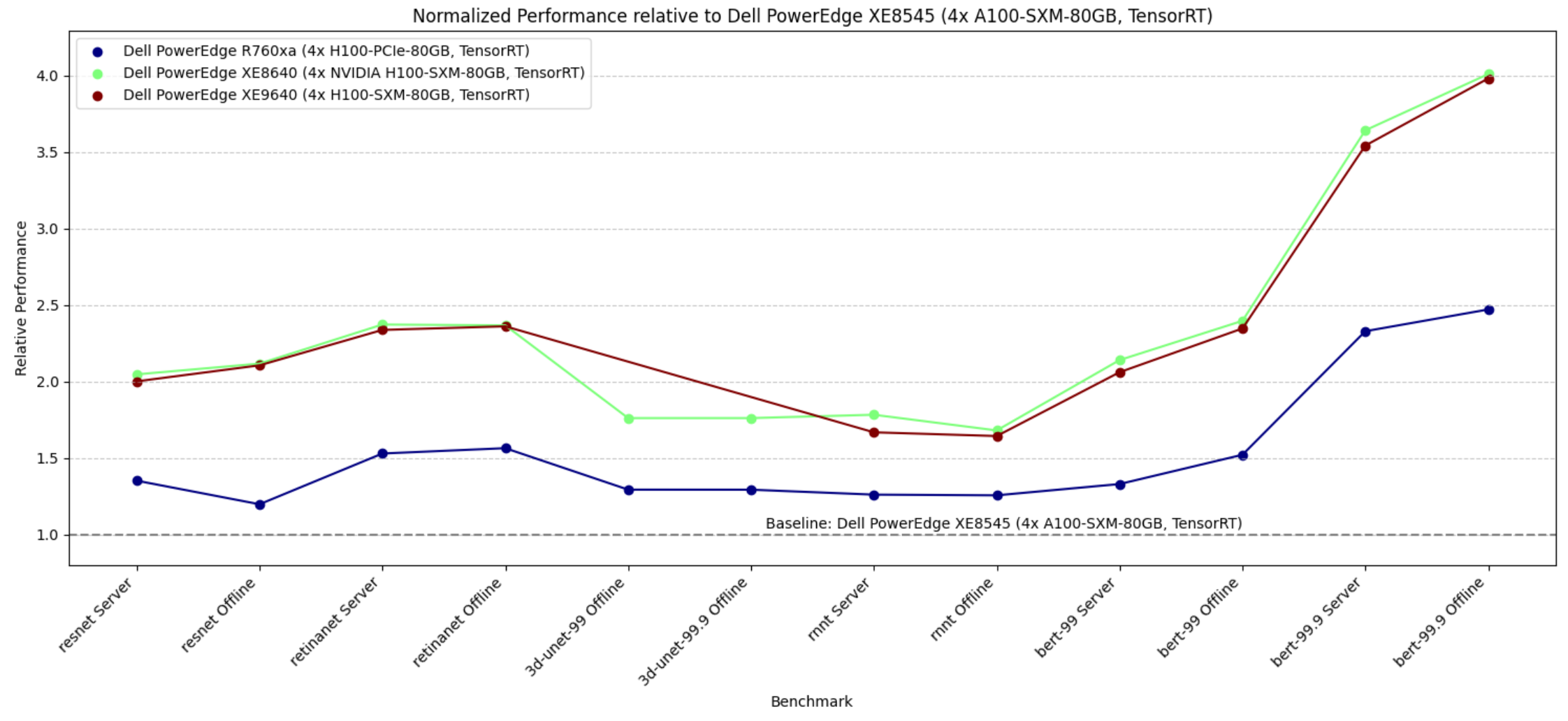

The following figure shows the improvement customers can derive by using the new generation PowerEdge XE8640 and XE9640 servers from our previous generation PowerEdge XE8545 server.

Figure 1. Relative performance of PowerEdge XE8640 and PowerEdge XE9640 servers using the PowerEdge XE8545 server as a baseline reference (for the Y axis, the higher the better)

The graph shows the relative performance improvement of the new generation servers, the PowerEdge XE8640 and PowerEdge XE9640 servers with NVIDIA H100 Tensor Core GPUs, over the baseline PowerEdge XE8545 server with four NVIDIA A100 SXM Tensor Core GPUs (from MLPerf Inference v3.0). The improvement in performance is substantial, as evident from the graph. End users can derive a two- to four-times improvement in performance for different tasks in MLPerf Inference benchmarks. We see relatively higher performance with BERT benchmarks because of the NVIDIA H100 GPU’s FP8 support.
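A relative-performance chart of this kind can be derived by dividing each benchmark's throughput on the new system by the throughput of the same benchmark on the baseline system. The following Python sketch illustrates that normalization. The function name, the benchmark keys, and the numeric values are placeholders chosen for illustration; they are not MLPerf results.

```python
def relative_performance(results: dict, baseline: dict) -> dict:
    """Express each benchmark's throughput as a multiple of the baseline."""
    return {
        name: results[name] / baseline[name]
        for name in results
        if name in baseline  # skip benchmarks the baseline did not run
    }


# Placeholder throughputs only; the real values are in the MLCommons results.
baseline_system = {"benchmark_a": 100.0, "benchmark_b": 50.0}
new_system = {"benchmark_a": 250.0, "benchmark_b": 200.0, "benchmark_c": 10.0}

for name, factor in relative_performance(new_system, baseline_system).items():
    print(f"{name}: {factor:.2f}x the baseline")
```

A value of 1.0 means parity with the baseline. Benchmarks that exist on only one system are left out because no ratio can be formed for them.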

Comparing air-cooled and liquid-cooled servers

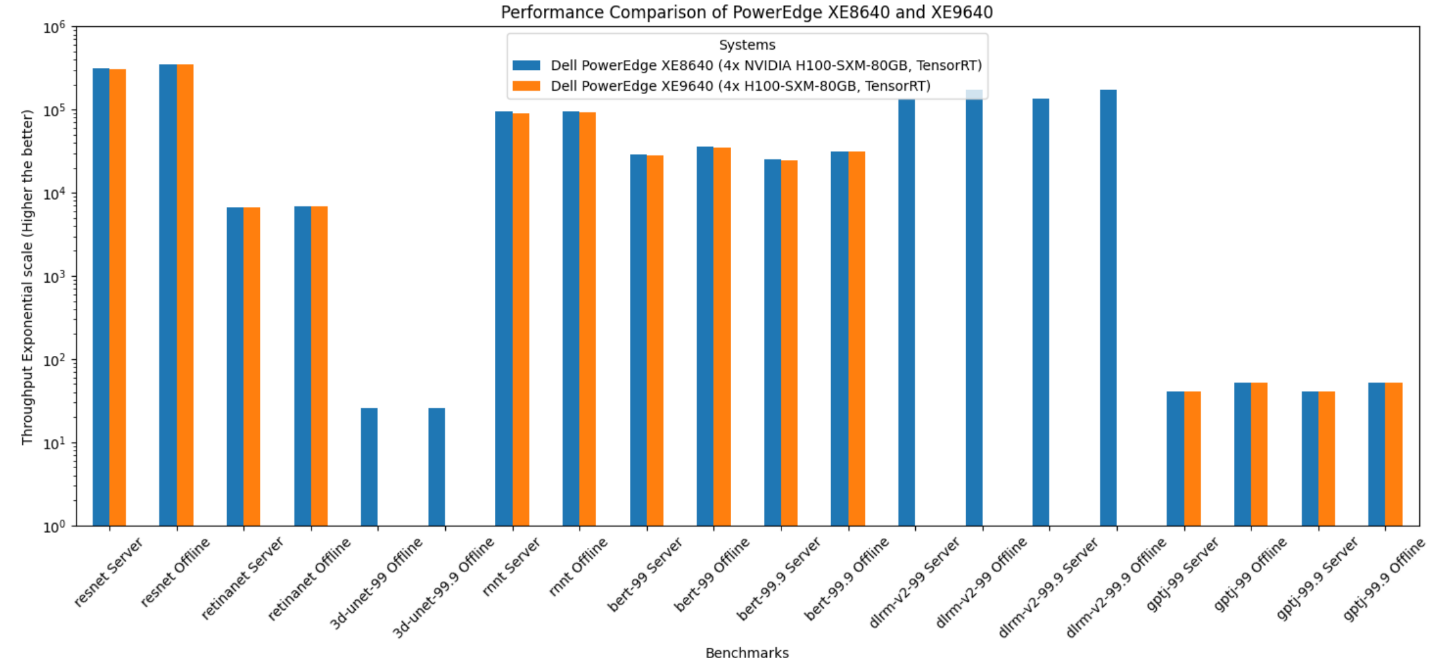

The following figure shows the raw performance of PowerEdge XE8640 and XE9640 servers, whereas the other graphs in this blog provide relative scores. The graph includes all the benchmarks in the Inference closed data center suite that we submitted. Because different benchmarks have different scales and all the benchmarks are presented in one graph, the y-axis is logarithmic.

Figure 2. Performance of PowerEdge XE8640 and PowerEdge XE9640 servers

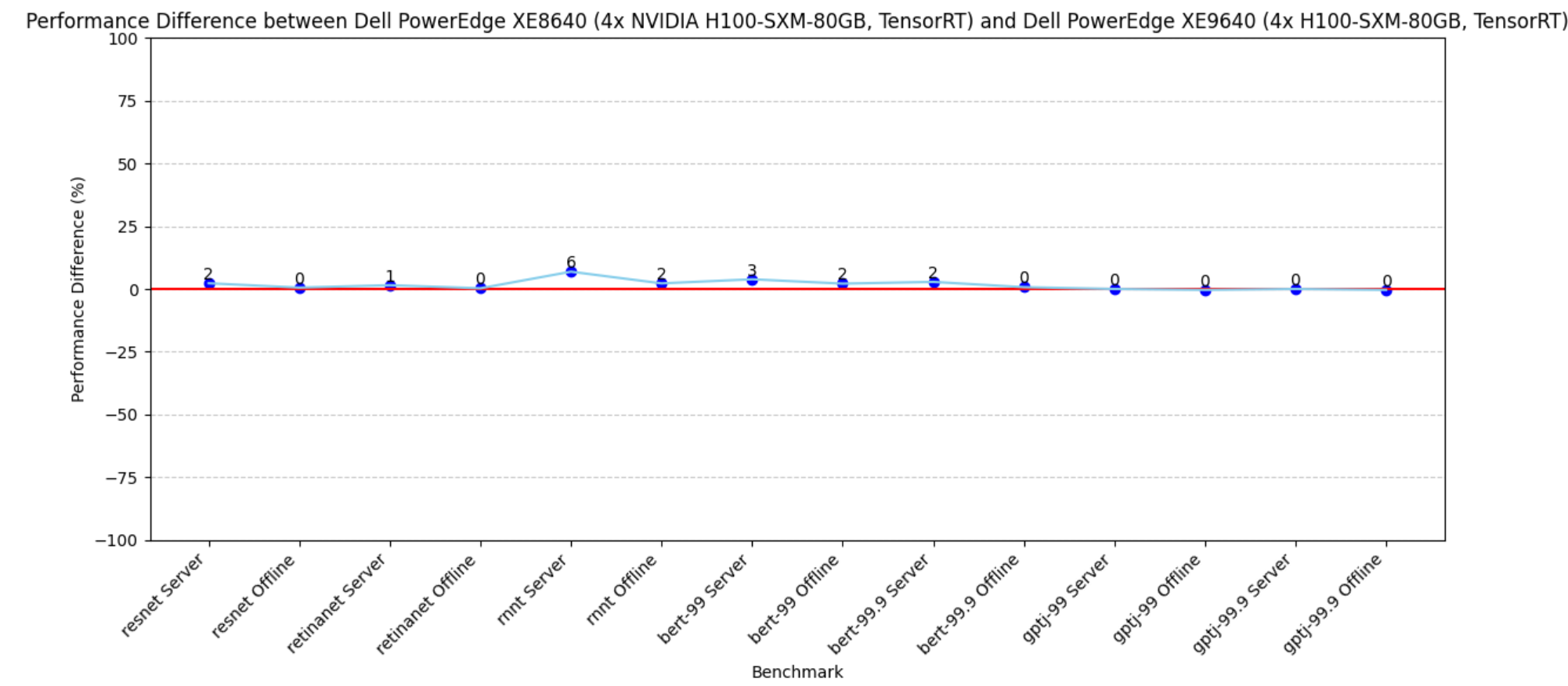

PowerEdge XE8640 and XE9640 servers are both great choices for inference workloads with four NVIDIA H100 SXM Tensor Core GPUs. The PowerEdge XE9640 server is a liquid-cooled server and the PowerEdge XE8640 server is an air-cooled server. The following figure shows the difference in performance between these systems. Both systems have similar effective throughput because their CPU and GPU configurations are the same.

Figure 3. Performance difference between PowerEdge XE9640 and XE8640 servers using the PowerEdge XE9640 server as a baseline

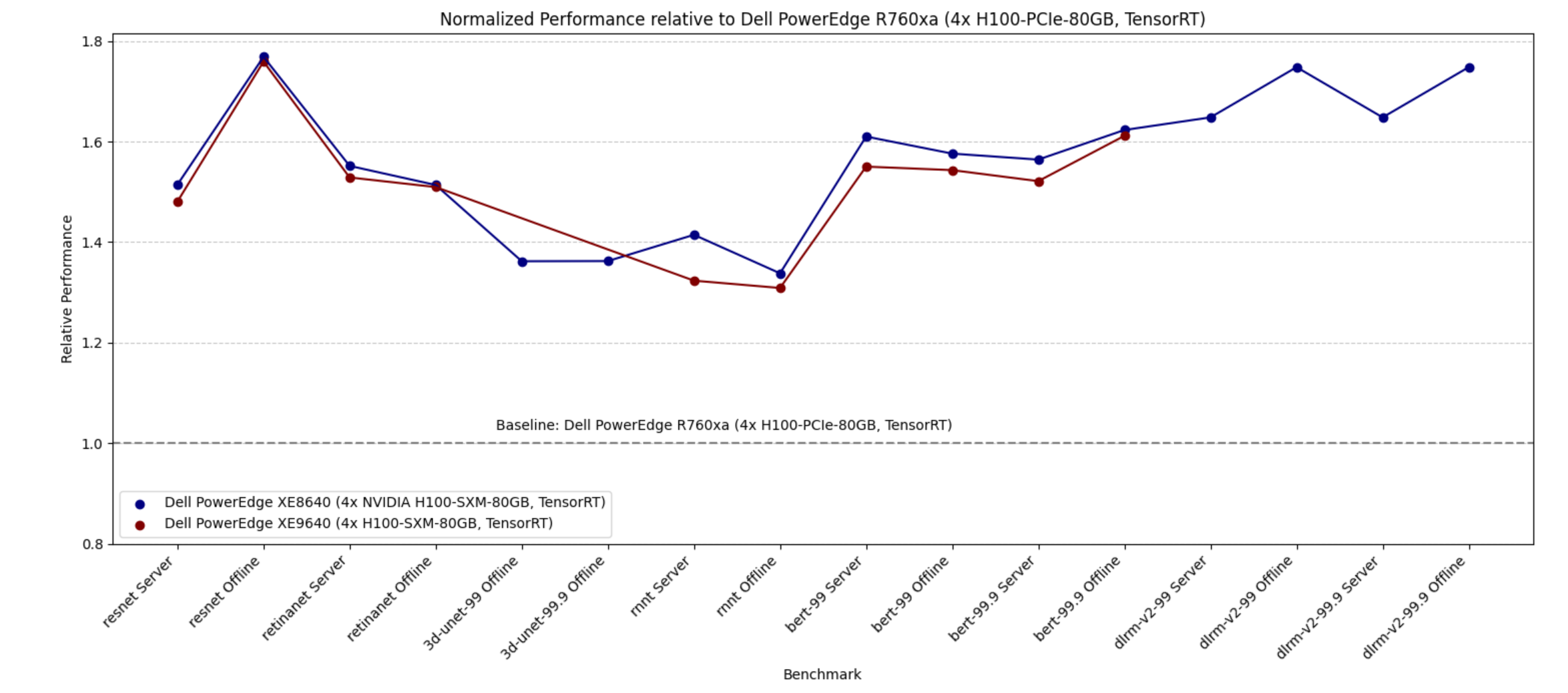

Impact of SXM over PCIe form factors

The following figure shows the performance of the PowerEdge R760xa server with NVIDIA H100 PCIe GPUs as the baseline and shows the performance improvement of PowerEdge XE9640 and PowerEdge XE8640 servers with NVIDIA H100 Tensor Core SXM GPUs. The graph demonstrates that the PowerEdge XE8640 server with NVIDIA H100 SXM GPUs performs approximately 1.25 to 1.7 times better than the PowerEdge R760xa server with NVIDIA H100 PCIe GPUs.

Figure 4. Performance difference between PowerEdge XE9640 and XE8640 servers with 4x H100 SXM and PowerEdge R760xa server with 4x H100 PCIe as a baseline

Because NVIDIA H100 SXM GPUs have a higher Thermal Design Power (TDP), they are a great choice when high performance is imperative.

Comparing efficiency of new and previous generation servers

The following figure shows the performance of the previous generation PowerEdge XE8545 server with NVIDIA A100 SXM GPUs compared to the new generation servers such as the PowerEdge R760xa server with the NVIDIA H100 PCIe form factor and the PowerEdge XE8640 and XE9640 servers with the NVIDIA H100 SXM form factor. We see that all the new generation servers rendered higher performance. Furthermore, our new generation PowerEdge R760xa server with four NVIDIA H100 PCIe GPUs is more power efficient than our previous generation PowerEdge XE8545 server with four NVIDIA A100 SXM GPUs. This result occurs because NVIDIA A100 SXM GPUs have a higher TDP relative to the NVIDIA H100 PCIe GPU.

Figure 5. Relative performance of PowerEdge R760xa, PowerEdge XE9640, and PowerEdge XE8640 servers using the PowerEdge XE8545 server as a baseline

Hardware overview

The following sections describe the system components. The appendix lists the system configurations in the benchmark.

Dell PowerEdge XE8640 server

The PowerEdge XE8640 server is an air-cooled 4U server that accelerates traditional AI training and inferencing, modeling, simulation, and other high-performance computing (HPC) applications with optimized compute, turning data and automating insights into outcomes with a four-way GPU platform. Its architecture combines two 4th Generation Intel Xeon processors, with a high core count of up to 56 cores, and the latest on-chip innovations to boost AI and machine learning operations.

The following figure shows the PowerEdge XE8640 server:

Figure 6. Dell PowerEdge XE8640 server

Dell PowerEdge XE9640 server

The PowerEdge XE9640 server is a purpose-built direct liquid-cooled (DLC) 2U server for AI and HPC workloads. NVIDIA NVLink and Intel Xe Link technologies in the PowerEdge XE9640 server allow seamless communication between the GPUs, pooling their memory and cores to tackle memory-coherent workloads such as large language models (LLMs) efficiently.

The following figure shows the PowerEdge XE9640 server:

Figure 7. Dell PowerEdge XE9640 server

NVIDIA H100 Tensor Core GPU

The NVIDIA H100 GPU is an integral part of the NVIDIA data center platform. Built for AI, HPC, and data analytics, the platform accelerates over 3,000 applications, and is available everywhere from the data center to the edge, delivering both dramatic performance gains and cost-saving opportunities. The NVIDIA H100 Tensor Core GPU delivers unprecedented performance, scalability, and security for every workload. With NVIDIA® NVLink® Switch System, up to 256 NVIDIA H100 GPUs can be connected to accelerate exascale workloads, while the dedicated Transformer Engine supports trillion-parameter language models. The NVIDIA H100 GPU uses breakthrough innovations in the NVIDIA Hopper™ architecture to deliver industry-leading conversational AI, speeding up large language models by 30 times over the previous generation.

The following figure shows the NVIDIA H100 PCIe accelerator:

Figure 8. NVIDIA H100 PCIe accelerator

The following figure shows the NVIDIA H100 SXM accelerator:

Figure 9. NVIDIA H100 SXM accelerator

Conclusion

The key takeaways include:

- Both the Dell PowerEdge XE8640 and Dell PowerEdge XE9640 servers are excellent choices for inference. The performance of the air-cooled PowerEdge XE8640 server is almost identical to that of the liquid-cooled PowerEdge XE9640 server. While the PowerEdge XE9640 server is a 2U server, it requires additional cooling unit attachments. It is a good choice if there are space and temperature constraints; otherwise, the PowerEdge XE8640 server is a great choice.

- PowerEdge XE8640 and PowerEdge XE9640 servers have received several top titles. They are clear leaders in inference compute.

- New generation PowerEdge XE8640 and PowerEdge XE9640 servers with NVIDIA H100 GPUs have delivered a 2- to 4-times improvement relative to the previous generation PowerEdge XE8545 server with NVIDIA A100 GPUs. Upgrading from the PowerEdge XE8545 server would render higher performance.

- The PowerEdge XE9640 and PowerEdge XE8640 servers with four NVIDIA H100 SXM form-factor GPUs perform 1.25 to 1.7 times better than the PowerEdge R760xa server with four NVIDIA H100 PCIe GPUs.

Our submission results to MLPerf Inference since its inception have continuously demonstrated significant performance improvements. We have submitted to different tasks to provide customers with a wide spectrum of possible results to review. This round marked the first submission to MLPerf with PowerEdge XE8640 and XE9640 servers. Customers can rely on these high compute machines for their fast, low-latency inference needs. If constrained by TDP or other factors, the PowerEdge R760xa server with the PCIe form factor is an excellent choice on which to run inference workloads.

Appendix

The following table lists the system configuration details for the servers described in this blog:

Table 1. System configurations

| System | Dell PowerEdge XE8640 (4x NVIDIA H100-SXM-80GB, TensorRT) | Dell PowerEdge XE9640 (4x H100-SXM-80GB, TensorRT) | Dell PowerEdge R760xa (4x H100-PCIe-80GB, TensorRT) | Dell PowerEdge XE8545 (4x A100-SXM-80GB, TensorRT) |
|---|---|---|---|---|
| MLPerf submission ID | 3.1-0066 | 3.1-0067 | 3.1-0064 | 3.0-0011 |
| MLPerf system ID | XE8640_H100_SXM_80GBx4_TRT | XE9640_H100_SXM_80GBx4_TRT | R760xa_H100_PCIe_80GBx4_TRT | XE8545_A100_SXM4_80GBx4_TRT |
| Operating system | Rocky Linux 9.1 | Ubuntu 22.04 | Ubuntu 20.04.4 | Ubuntu 22.04 |
| CPU | Intel Xeon Platinum 8480 | Intel Xeon Platinum 8480+ | Intel Xeon Platinum 8480+ | AMD EPYC 7763 |
| Memory | 1 TB | 1 TB | 2 TB | 2 TB |
| GPU | NVIDIA H100 SXM 80 GB | NVIDIA H100 SXM 80 GB | NVIDIA H100 PCIe 80 GB | NVIDIA A100 SXM 80 GB CTS |
| GPU count | 4 | 4 | 4 | 4 |
| Software stack | TensorRT 9.0.0, CUDA 12.2 | TensorRT 9.0.0, CUDA 12.2 | TensorRT 9.0.0, CUDA 12.2 | TensorRT 8.6.0, CUDA 12.2 |

MLCommons results

MLPerf submission IDs:

- ID 3.0-0011

- ID 3.1-0064

- ID 3.1-0066

- ID 3.1-0067

Note: We reran the RetinaNet Offline benchmark for the PowerEdge R760xa server and the DLRMv2 benchmark for the PowerEdge XE8640 server to reflect the correct performance that the servers can render. Only these two results are not official due to MLCommons rules.

The MLPerf™ name and logo are trademarks of MLCommons Association in the United States and other countries. All rights reserved. Unauthorized use strictly prohibited. See www.mlcommons.org for more information.

Comparing the NVIDIA H100 and A100 GPUs in Dell PowerEdge R760xa and R750xa Servers

Wed, 04 Oct 2023 16:47:00 -0000


Abstract

Dell Technologies recently submitted results to the MLPerf™ Inference v3.1 benchmark suite. This blog highlights Dell Technologies’ closed division submission made for the Dell PowerEdge R760xa and Dell PowerEdge R750xa servers with NVIDIA H100 and NVIDIA A100 GPUs.

Introduction

This blog provides comparisons that draw relevant conclusions about the performance improvements achieved on the Dell PowerEdge R760xa server with the NVIDIA H100 GPU compared to its predecessor, the Dell PowerEdge R750xa server with the NVIDIA A100 GPU. In the Dell PowerEdge R760xa server section of this blog, we compare the performance of the PowerEdge R760xa server to the PowerEdge R750xa server while keeping the NVIDIA H100 GPU constant to demonstrate the improvement of the new generation of PowerEdge servers. We also compare the performance of the PowerEdge R760xa server with the NVIDIA H100 GPU to the PowerEdge R750xa server with the NVIDIA A100 GPU to showcase the combined server and GPU generation-to-generation improvements. In the Dell PowerEdge R750xa server section of this blog, we keep the server constant and compare the performance of the NVIDIA H100 GPU to the NVIDIA A100 GPU. For an additional angle, we hold the PowerEdge R750xa server and the NVIDIA A100 GPU constant to showcase the performance improvements delivered by software stack updates.

System Under Test (SUT) configuration

Table 1: SUT configuration of the Dell PowerEdge R760xa and Dell PowerEdge R750xa servers for MLPerf Inference v3.1 and v3.0

| Platform | R750xa | R750xa | R760xa |
|---|---|---|---|
| MLPerf Version | V3.0 | V3.1 | V3.1 |
| GPU | NVIDIA A100 PCIe 80 GB | NVIDIA A100 PCIe 80 GB, NVIDIA H100 PCIe 80 GB | NVIDIA H100 PCIe 80 GB |
| GPU Count | 4 | 4 | 4 |
| MLPerf System ID | R750xa_A100_PCIE_80GBx4_TRT | R750xa_A100_PCIe_80GBx4_TRT, R750xa_H100_PCIe_80GBx4_TRT | R760xa_H100_PCIe_80GBx4_TRT |
| CPU | Intel Xeon Gold 6338 CPU @ 2.00 GHz | Intel Xeon Gold 6338 CPU @ 2.00 GHz | Intel Xeon Platinum 8480+ |
| Memory | 512 GB | 512 GB, 1 TB | 2 TB |
| Software Stack | TensorRT 8.6, CUDA 12.0, cuDNN 8.8.0, Driver 525.85.12, DALI 1.17.0 | TensorRT 9.0.0, CUDA 12.2, cuDNN 8.9.2, Driver 535.86.10, DALI 1.28.0 | TensorRT 9.0.0, CUDA 12.2, cuDNN 8.9.2, Driver 535.86.10, DALI 1.28.0 |

The following table shows the technical specifications of the NVIDIA H100 and NVIDIA A100 GPUs:

Table 2: Technical specification comparison of the NVIDIA H100 and NVIDIA A100 GPUs

GPU | NVIDIA A100 | NVIDIA H100 | ||||||

Form factor | SXM4 | PCIe Gen4 | SXM4 | PCIe Gen4 | PCIe Gen5 | NVL PCIe Gen5 | SXM5 | |

GPU architecture | Ampere | Hopper | ||||||

CUDA cores | 6912 | 14592 | 2x 16896 | 16896 | ||||

Memory size | 40 GB | 80 GB | 80 GB | 2x 94 GB (188 GB) | 80 GB | 94 GB | ||

Memory type | HBM2e | HBM2 | HBM2e | HBM2e | HBM3 | HBM2e | ||

Base clock | 1095 MHz | 765 MHz | 1275 MHz | 1065 MHz | 1095 MHz | 1080 MHz | 1590 MHz | 1605 MHz |

Boost clock | 1410 MHz | 1755 MHz | 1785 MHz | 1980 MHz | ||||

Memory clock | 1215 MHz | 1593 MHz | 1512 MHz | 1593 MHz | 2619 MHz | 1593 MHz | ||

MIG support | Yes | Yes/2nd Gen | ||||||

Peak memory bandwidth | 1555 GB/s | 2039 GB/s | 1935 GB/s | 2039 GB/s | 3938 GB/s | 3352 GB/s | 2359 GB/s | |

Total board power | 400 W | 250 W | 400 W | 300 W | 310/350 W | 400 W | 700 W | |

Dell PowerEdge R760xa server

The PowerEdge R760xa server shines as an Artificial Intelligence (AI) workload server with its cutting-edge inferencing capabilities. This server represents the pinnacle of performance in the AI inferencing space with its processing prowess enabled by Intel Xeon Platinum processors and NVIDIA H100 PCIe 80 GB GPUs. Coupled with NVIDIA TensorRT and CUDA 12.2, the PowerEdge R760xa server is positioned perfectly for any AI workload including but not limited to Large Language Models, computer vision, Natural Language Processing, robotics, and edge computing. Whether you are processing image recognition tasks, natural language understanding, or deep learning models, the PowerEdge R760xa server provides the computational muscle for reliable, precise, and fast results.

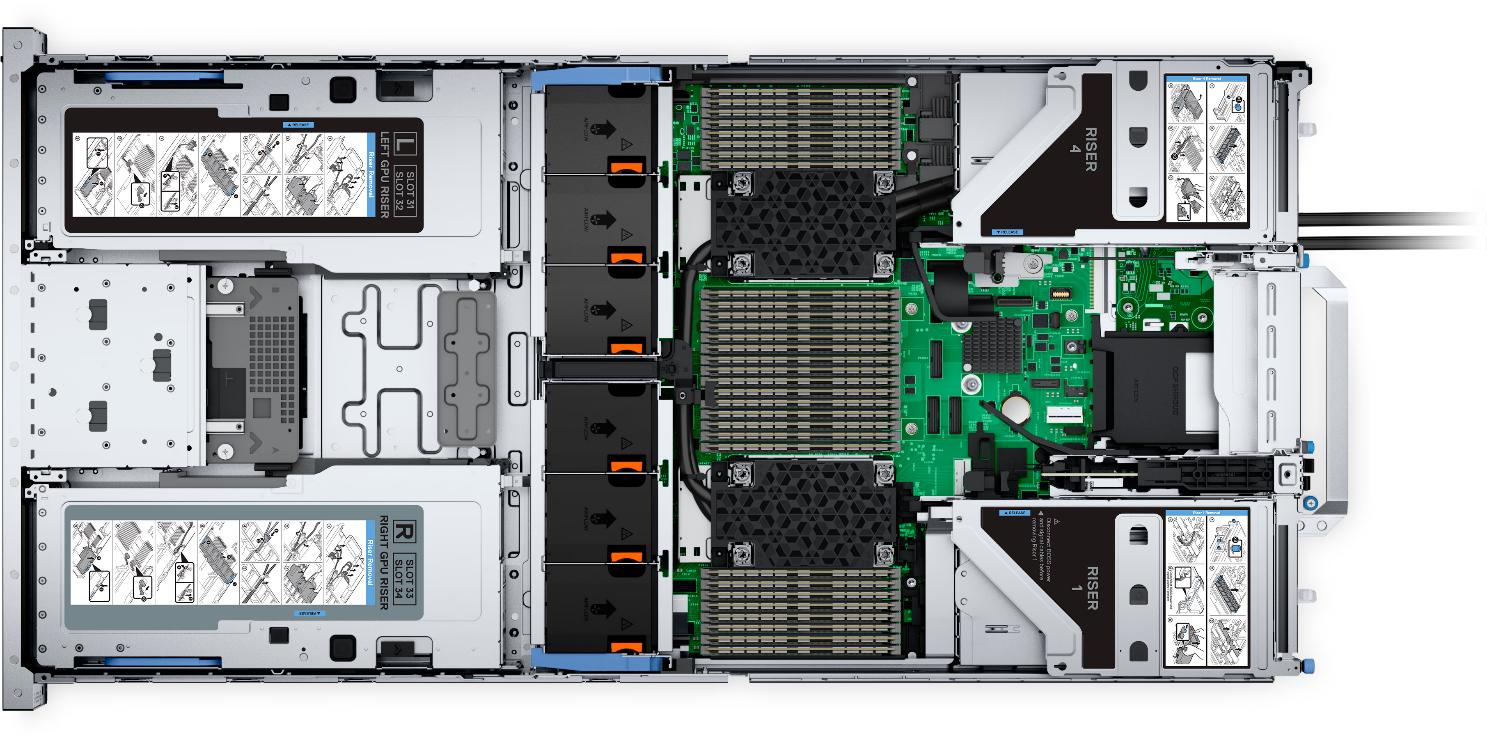

Figure 1: Front view of the Dell PowerEdge R760xa server


Figure 2: Top view of the Dell PowerEdge R760xa server

The results in the following figures are represented as percentage differences while maintaining a single SUT as the baseline. To determine the percentage difference between two results, we subtracted the performance value achieved on the first (baseline) server from the performance value achieved on the second server. We divided the difference by the performance achieved on the first server and multiplied it by 100 to get a percentage. By applying this formula, we obtain the performance delta between the second and first server. This result provides an easy-to-read comparison across two systems and several benchmarks.
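The percentage-difference calculation described above can be sketched in Python as follows. This is a minimal illustration, not the script used for the submission. The function names and sample values are hypothetical, and the sketch assumes that the baseline value is the denominator, since that is the only reading under which improvements above 100 percent (such as those reported later in this blog) are possible.

```python
def percent_difference(baseline: float, candidate: float) -> float:
    """Percentage by which candidate exceeds baseline (negative on a regression)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (candidate - baseline) / baseline * 100.0


def as_speedup(percent: float) -> float:
    """Convert a percentage difference into a multiplicative factor."""
    return 1.0 + percent / 100.0


# Placeholder throughputs, not MLPerf results.
baseline_qps = 200.0
candidate_qps = 300.0

delta = percent_difference(baseline_qps, candidate_qps)
print(f"{delta:.1f}% difference, {as_speedup(delta):.2f}x the baseline")
```

Under this convention, a 178 percent improvement corresponds to about 2.78 times the baseline throughput, and a negative value indicates a regression.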

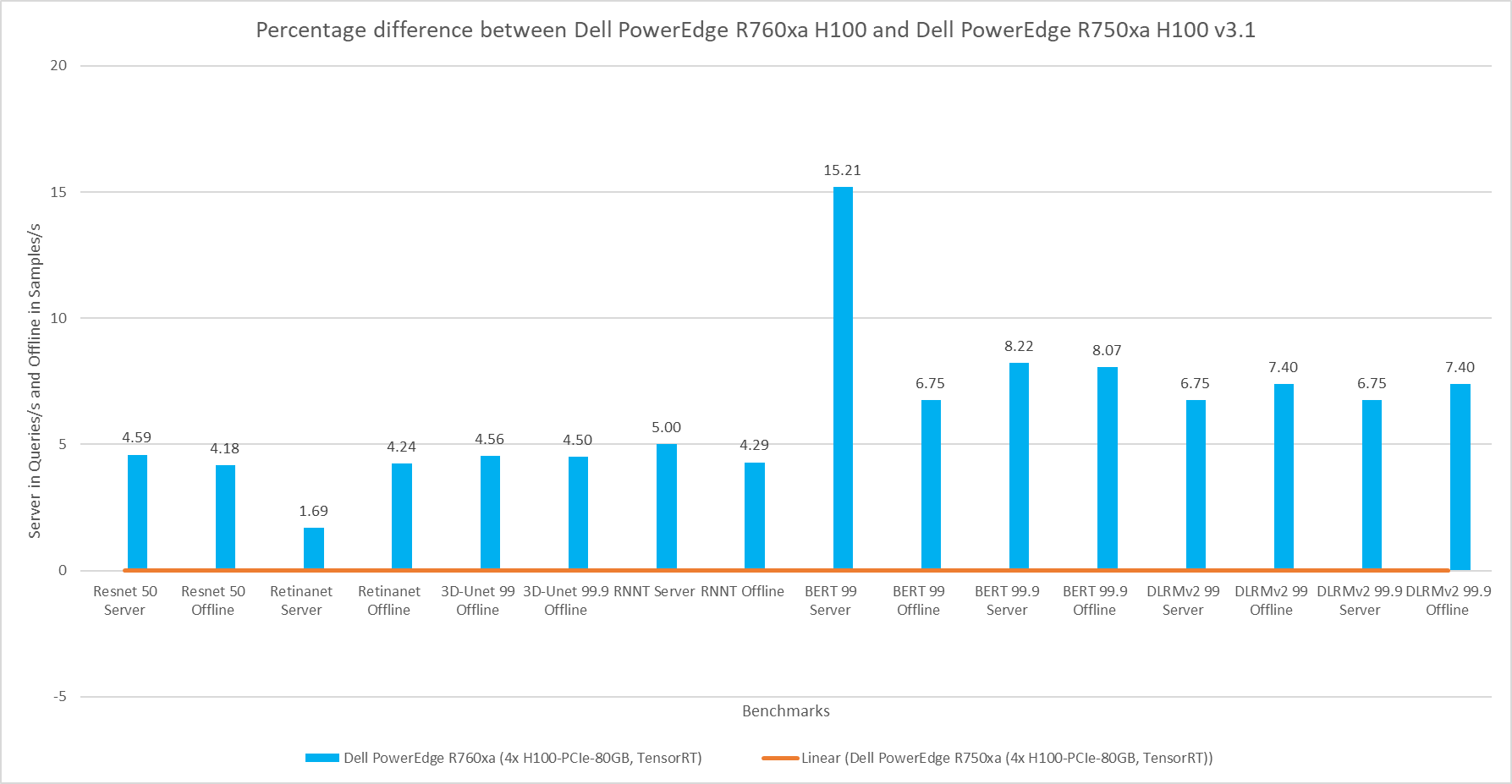

The following figure shows the percent difference between the PowerEdge R760xa and PowerEdge R750xa servers while keeping the NVIDIA H100 GPU constant. Both results were collected from the latest official MLPerf Inference v3.1 submission with the identical software stack. Across all the benchmarks, the PowerEdge R760xa server comprehensively outperformed its predecessor. The PowerEdge R760xa server shined in the Natural Language Processing task with a noticeable 15 percent improvement. On average, it performed approximately 6 percent better for all workloads.

Figure 3: Percentage difference between the Dell PowerEdge R760xa server with the NVIDIA H100 GPU and the Dell PowerEdge R750xa server with the NVIDIA H100 GPU for the v3.1 submission

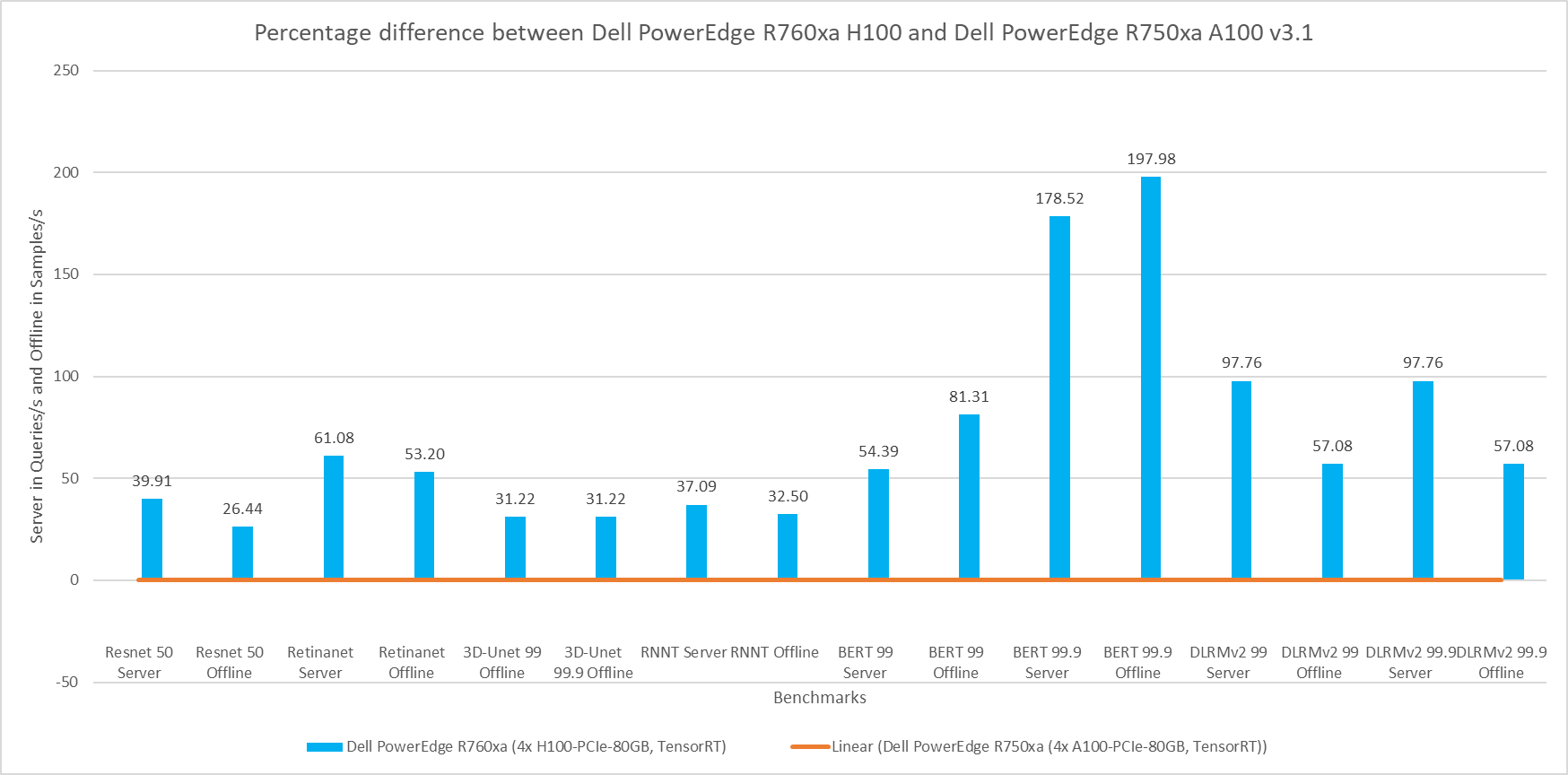

The following figure shows a comparison of the PowerEdge R760xa server with the NVIDIA H100 GPU to the PowerEdge R750xa server with the NVIDIA A100 GPU. This comparison is expected to yield the highest delta in performance due to the hardware upgrades of both the server and GPU. Both submissions were made to the MLPerf Inference v3.1 round in which the software stack was kept the same. The PowerEdge R760xa server paired with the NVIDIA H100 GPU thoroughly outperformed its predecessor in all workloads. In the high accuracy category of the Natural Language Processing workload, the PowerEdge R760xa server boasts an impressive 178 percent and 197 percent performance improvement in the Server and Offline modes respectively. On average, the newer configuration showcased a noteworthy 71 percent improvement across all the benchmarks.

Figure 4: Percentage difference between the Dell PowerEdge R760xa server with the NVIDIA H100 GPU and the Dell PowerEdge R750xa server with the NVIDIA A100 GPU for v3.1

Dell PowerEdge R750xa server

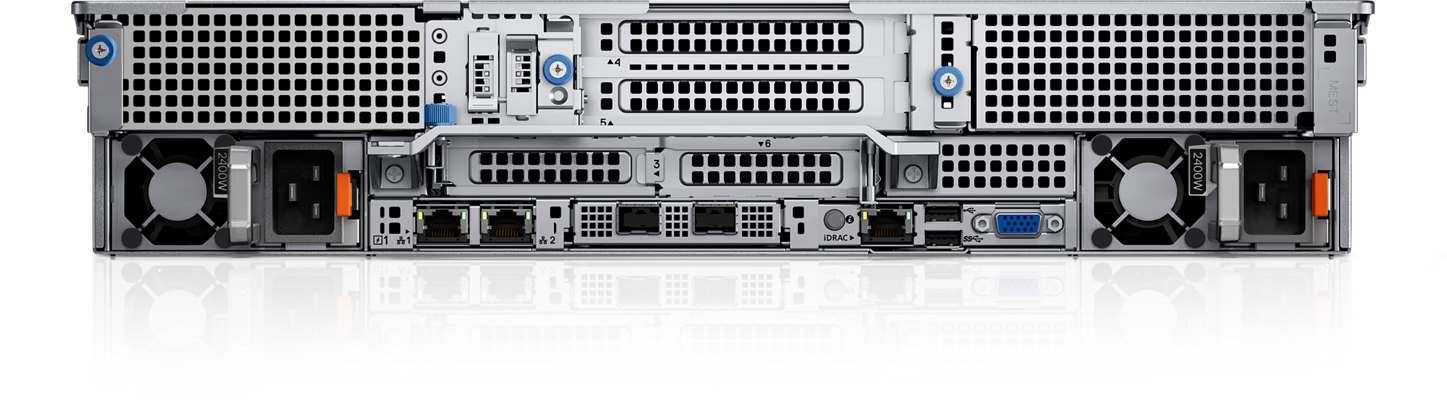

The PowerEdge R750xa server is a perfect blend of technological prowess and innovation. This server is equipped with Intel Xeon Gold processors as well as with the latest NVIDIA GPUs. The PowerEdge R750xa server has been designed for the most demanding AI/ML/DL workloads as it is compatible with the latest NVIDIA TensorRT engine and CUDA version. With up to nine PCIe Gen4 slots and availability in a 1U or 2U configuration, the PowerEdge R750xa server is an excellent option for any demanding workload.

Figure 5: Front view of the Dell PowerEdge R750xa server

Figure 6: Rear view of the Dell PowerEdge R750xa server

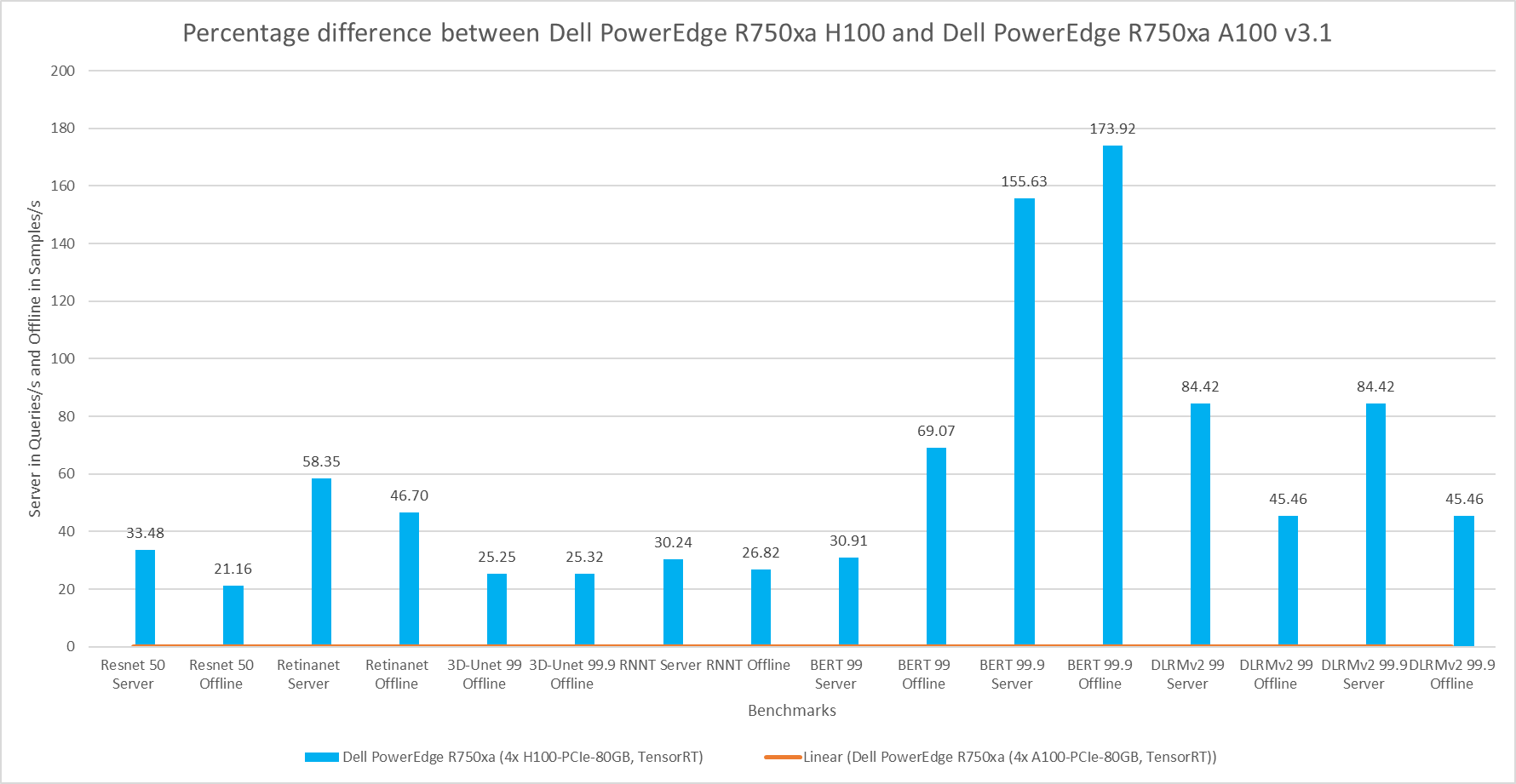

For the following comparison, the Dell PowerEdge R750xa server is held constant but the GPU is updated from the NVIDIA A100 GPU to the NVIDIA H100 GPU. This comparison is useful if you are interested in keeping the server that you already have but are upgrading the GPU. As expected, the server with the NVIDIA H100 GPU shows significant performance improvements across all the workloads. Similar to the previous comparison, the high accuracy Natural Language Processing task on the NVIDIA H100 GPU shows promising performance improvements. In the high accuracy Server scenario for BERT, the NVIDIA H100 GPU showed a 156 percent improvement and in the Offline scenario a 174 percent improvement. On average, the PowerEdge R750xa server paired with the NVIDIA H100 GPU performed approximately 60 percent better than its GPU predecessor.

Figure 7: Percentage difference between the Dell PowerEdge R750xa H100 and Dell PowerEdge R750xa A100 for MLPerf Inference v3.1

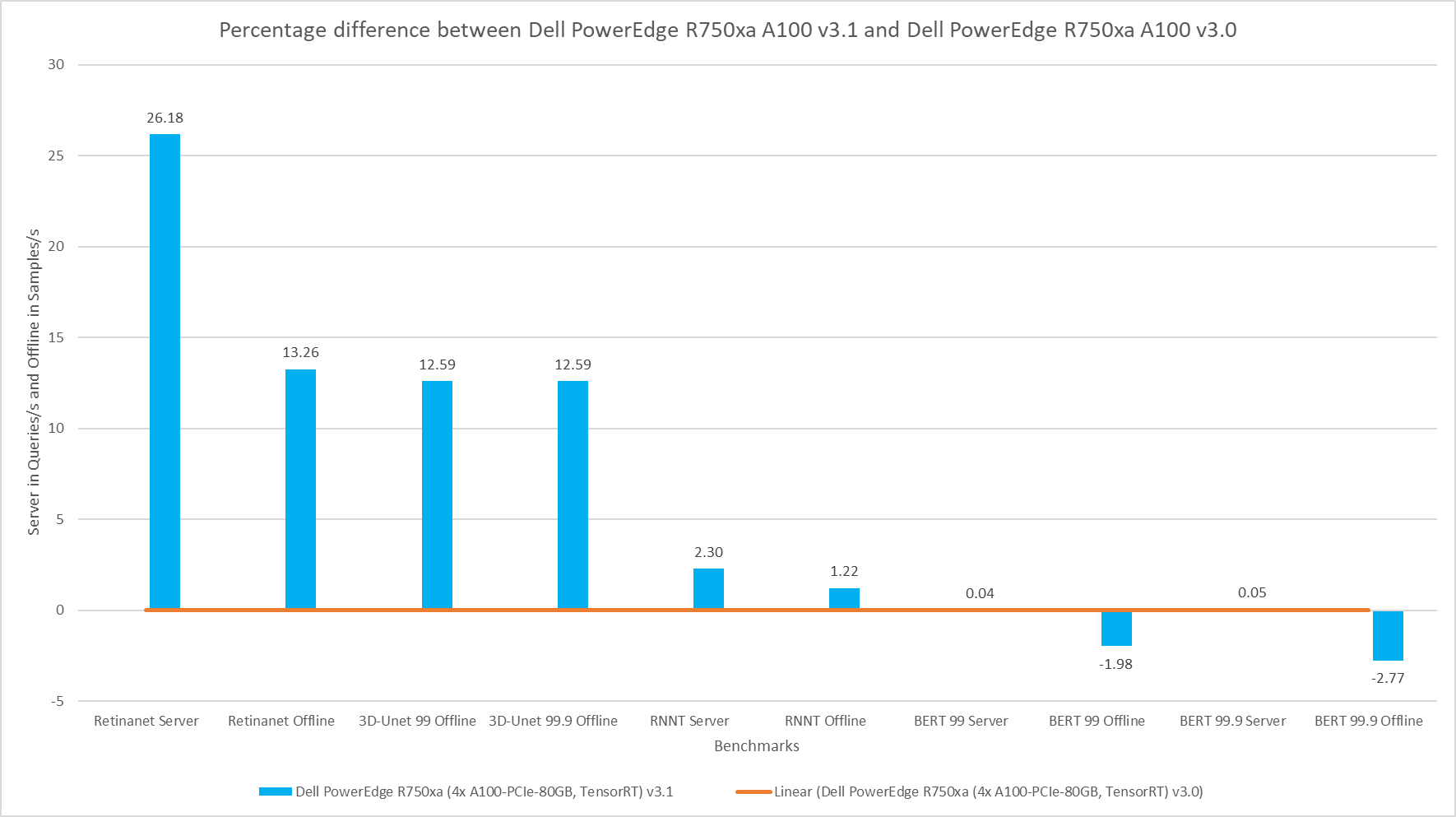

The following figure shows a comparison across two rounds of submissions. The hardware for the SUT is identical: the Dell PowerEdge R750xa server paired with the NVIDIA A100 GPU. The performance delta in this graph can be attributed to the changes in the software stack. For the vision tasks, RetinaNet and 3D-UNet, the NVIDIA A100 GPU showed a considerable improvement in performance. For the BERT Server scenario, the performance is approximately the same. However, for the BERT Offline scenario in both the default and high accuracy modes, there was a slight regression in performance. This result can be attributed to regressions in the BERT model.

Figure 8: Percentage difference between the Dell PowerEdge R750xa server with the NVIDIA A100 GPU v3.1 submission and the Dell PowerEdge R750xa server with the NVIDIA A100 GPU v3.0 submission

Conclusion

The MLPerf Inference submissions always elicit insightful comparisons. This blog highlighted these comparisons between the MLPerf Inference v3.1 and v3.0 rounds of submission:

- A generation-to-generation comparison of the Dell PowerEdge R760xa server and the Dell PowerEdge R750xa server while keeping the GPU constant on average boasts an impressive 6.22 percent performance improvement.

- An upgrade of the server as well as the GPU from the Dell PowerEdge R750xa server paired with the NVIDIA A100 GPU to the Dell PowerEdge R760xa server paired with the NVIDIA H100 GPU shows a noteworthy boost in performance. You can expect an average increase of about 71 percent in performance across benchmarks by upgrading both the server and the GPU.

- While maintaining the Dell PowerEdge R750xa server and upgrading the GPU from the NVIDIA A100 GPU to the NVIDIA H100 GPU, you can expect an approximate 60 percent increase in performance across benchmarks.

- While maintaining the same SUT across rounds with the Dell PowerEdge R750xa server and the NVIDIA A100 GPU, you can expect on average an 11.36 percent improvement for RetinaNet, 3D-UNet, and RNNT tasks, thanks to software improvements. However, there are minor regressions in performance in the BERT benchmark.

Across the first three comparisons, a pattern of improvement in the Natural Language Processing task was noticeable. With the advent of new Large Language Models, the Dell PowerEdge server is positioned well to handle Generative AI workloads. For the last comparison, we kept the Dell PowerEdge R750xa server and NVIDIA A100 GPU consistent but looked at the performance across different rounds of submission.

MLCommons™ results

Note: We ran the RetinaNet Offline results for the Dell PowerEdge R760xa and Dell PowerEdge R750xa servers with the NVIDIA H100 GPU again after the submission with a larger GPU batch size. These results significantly improved the performance and are a true representation of Dell servers as we saw a 78 percent and 114 percent increase in performance on the PowerEdge R760xa server and PowerEdge R750xa servers respectively. For the Dell PowerEdge R760xa server with four NVIDIA H100 GPUs, the RetinaNet Offline results improved from 2069.79 to 4550.67. The RetinaNet Offline results for the system ID 3.1-0063 and 3.1-0065 submissions are not official due to MLCommons rules because they were rerun after the submission and not officially submitted before the deadline.

MLPerf Inference v3.1 and v3.0 system IDs:

- 3.1-0058, 3.1-0061 Dell PowerEdge R750xa (4x A100-PCIe-80GB, TensorRT)

- 3.1-0062 Dell PowerEdge R750xa (4x H100-PCIe-80GB, TensorRT)

- 3.1-0064 Dell PowerEdge R760xa (4x H100-PCIe-80GB, TensorRT)

- 3.0-0008 Dell PowerEdge R750xa (4x A100-PCIe-80GB, TensorRT)

The MLPerf™ name and logo are trademarks of MLCommons Association in the United States and other countries. All rights reserved. Unauthorized use strictly prohibited. See www.mlcommons.org for more information.