Edge Intelligence Trends in the Retail Industry

Tue, 07 Feb 2023 22:58:02 -0000

Retail and Edge Computing

In today's digital landscape, the integration of retail and edge computing is becoming increasingly important. Retail encompasses the sale of goods and services both online and in physical stores, while edge computing processes and analyzes data close to where it is collected rather than in a centralized location. Edge computing in retail environments can improve customer experiences, optimize store operations, and support new technologies such as self-checkout and augmented reality systems. These uses demand real-time data analysis, which allows a more personalized and seamless shopping experience for customers, as well as cost reduction and increased revenue for retailers. In this post, we examine the advantages of edge computing and how they align with modern AI trends in the retail industry, and we describe Dell's positioning and solutions that help retail organizations use AI effectively to meet business needs.

Leading Retail Edge AI Trends in 2023

Focus on AI use cases with High ROI

Edge AI holds vast potential in a variety of industries, with a high ROI in the form of automation of repetitive tasks, increased efficiency, and revenue growth. Edge AI can be used to analyze customer data and predict future purchasing patterns, leading to optimized product offerings and improved sales. Personalization through tailored marketing campaigns and personalized product recommendations can boost customer loyalty and repeat business. Edge AI can track point-of-sale (POS) and online shopping transactions alongside inventory levels in real time, identify discrepancies, and prevent stockouts, leading to cost savings and increased revenue. It can also be used to detect and prevent theft in retail environments, increasing profitability. The technology can be applied in smart shelves and robotics for inventory management and stocking shelves, resulting in increased efficiency and reduced labor costs.

Growth in Human and Machine Collaboration

Edge AI boosts human-machine collaboration in retail by automating tasks and powering virtual assistants, predictive analytics, sensors, and robots. These AI tools free up store staff for tasks requiring human skills, offer personalized information, optimize product offerings, track inventory, detect theft, and provide real-time data analysis and recommendations to improve the customer experience.

New AI Use cases for safety

Edge AI can be used to improve safety in retail environments in various ways. Edge AI-powered cameras and sensors can be used for real-time surveillance, helping to detect potential safety hazards such as spills or broken equipment. Edge AI can also be used for crowd management, analyzing data on foot traffic to identify patterns and optimize store layouts. In case of emergencies, Edge AI can detect potential incidents such as fires or gas leaks and send real-time alerts to emergency responders. Edge AI can be used to track inventory levels in real-time and monitor individual movements, detecting falls or accidents and alerting store staff or emergency responders. Edge AI can also be used for temperature monitoring in areas such as refrigerated storage to ensure safe temperatures and prevent food-borne illnesses. Real-time alerting can be done using Edge AI to ensure quick and effective responses to safety incidents.

IT Focus on Cybersecurity at the Edge

The retail industry is rapidly adopting Edge AI technologies to improve the customer experience and enhance operational efficiency. However, this increased reliance on Edge AI also creates new cybersecurity risks, as cyber criminals seek to exploit vulnerabilities in these systems. To address these challenges, retailers are increasing their investment in cybersecurity for Edge AI technologies. Some of the key trends in Edge AI cybersecurity for retail include the implementation of advanced security protocols, such as encryption and multi-factor authentication, to protect sensitive customer data. Another trend is the use of artificial intelligence to detect and prevent cyber threats in real-time, by analyzing data from Edge AI devices and network traffic. As Edge AI continues to become more widespread in the retail industry, the need for robust cybersecurity measures will only continue to grow, making it essential for retailers to stay ahead of the latest trends and best practices.

Connecting Digital Twins to the Edge

Edge AI can be utilized to create digital twins for various aspects of the retail environment, leading to improvements in store operations and customer experience. Retailers can create digital twins of store layouts to optimize product placement and simulate customer movement. Digital twins can also be created for inventory systems, smart shelves, and automated systems to optimize stock levels, predict demand patterns, and improve task efficiency and accuracy. Predictive maintenance can be performed by creating digital twins of equipment and infrastructure to avoid downtime. Edge AI also enables real-time monitoring of retail environments by creating digital twins and analyzing data, such as foot traffic and temperature, to inform better decision-making. Virtual reality applications can also be enhanced by creating digital twins of the store, providing virtual try-ons and product demonstrations to customers.

Importance of Edge Computing

Edge computing is becoming a crucial technology as the amount of data generated from devices and sensors continues to grow. By decentralizing and distributing computing, edge computing offers several advantages, including real-time processing with lower latency, improved efficiency, enhanced security, cost-effectiveness, and increased scalability.

Benefits

Low Latency: Edge computing enables near real-time processing for applications like self-driving cars, industrial automation, and IoT devices.

Improved Efficiency: By processing data near source, edge computing reduces data transmission, improving system efficiency and reducing data storage costs.

Improved Security: Edge computing protects sensitive data by processing it near source and analyzing it before transmitting to a centralized location, reducing data breach risks.

Cost-effective: Edge computing reduces costs by reducing the need for powerful servers and large data centers, and reducing data transmission and storage costs.

Increased Scalability: Edge computing allows decentralized, distributed computing, making it easier to scale systems as needed without infrastructure constraints.

Dell Solutions for Retail AI Edge Computing

Dell Technologies collaborates with a multitude of business partners to offer market-leading software integrated with its latest PowerEdge XR4000 infrastructure for Retail AI Edge Computing. These comprehensive solutions are carefully curated and validated through Dell's Validated Designs to support retailers in realizing their AI objectives and applications. In this post, we look at three solutions in the following areas: Manage and Scale AI at the Edge, Retail Loss Prevention, and Retail Analytics.

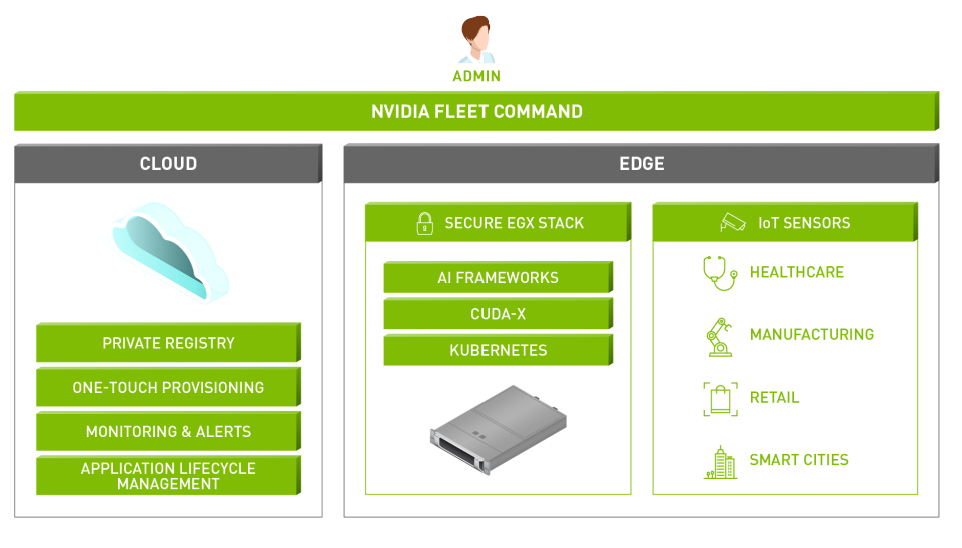

NVIDIA Fleet Command – Manage Configurations and Scale AI at the Edge

Edge AI deployment introduces new opportunities with real-time insights at the decision point, reducing latency and costs compared to data center and cloud transfer. However, bringing Edge AI to retail pipelines can be challenging due to resource limitations. Fleet Command is a hybrid cloud platform for managing large-scale AI, including edge devices, through a single web-based control plane. With Fleet Command and Dell EMC PowerEdge servers, IT administrators can remotely control AI deployments securely and efficiently, streamlining deployment and ensuring resilient AI across the network.

Adaptive Compute

Dell Technologies' systems management enables fast response to business opportunities through intelligent systems that collaborate and act independently to align outcomes with business goals, freeing IT to focus on innovation. Fleet Command simplifies AI management through centralization and one-touch provisioning, reducing the learning curve and accelerating the path to AI.

Autonomous Management

Dell Technologies' systems management enables quick response to business opportunities with smart systems that act independently to align with business goals, freeing IT to focus on innovation. Fleet Command streamlines AI management with centralized, straightforward management and one-touch provisioning.

Proactive Resilience

Dell EMC PowerEdge servers prioritize security throughout the infrastructure and IT setup, detecting potential risks. Fleet Command adds extra protection with integrated security features that secure application and sensor data, including self-healing capabilities to minimize downtime and reduce maintenance expenses.

Figure 1. Fleet Command Platform UI

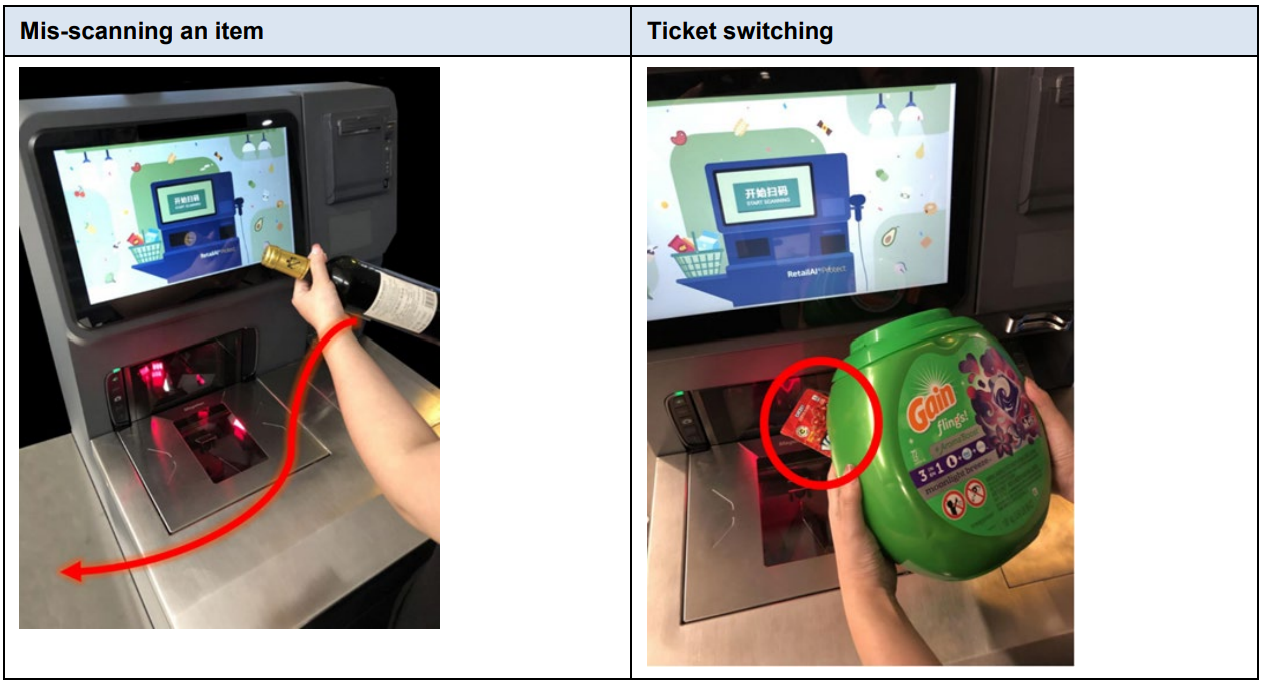

RetailAI Protect by Malong Technologies – Retail Loss Prevention

The Retail Loss Prevention solution runs on a Dell EMC PowerEdge server in-store and uses advanced product recognition technology from Malong RetailAI® Protect. The aim of the Retail Loss Prevention architecture is to prevent fraud while maintaining a seamless customer experience. It is an AI-powered system that can detect mis-scans and ticket switching in near real-time, covering a wide range of stock keeping units (SKUs). The solution components are chosen for their compatibility with, and enhancement of, existing POS scanners. The Malong RetailAI Protect solution addresses two sources of retail loss: ticket switching and mis-scans. The overhead fixed-dome camera records an item with a suspect UPC barcode or an item that wasn't scanned. The video is processed on a GPU and fed into the Malong RetailAI model to predict the item's UPC. If the item wasn't scanned, the Malong RetailAI Protect system alerts the self-checkout (SCO) system after a set time interval. If the scanned UPC doesn't match the correct code, the system immediately raises an alert for the retail associate to take appropriate action.
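The two alerting rules described above can be sketched in a few lines of Python. This is only an illustration of the decision logic, not Malong's implementation: the `Observation` type, the `classify` function, and the 5-second grace period are hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Alert(Enum):
    NONE = "none"
    MIS_SCAN = "mis-scan"            # item seen by the camera but never scanned
    TICKET_SWITCH = "ticket-switch"  # scanned UPC differs from the predicted UPC


@dataclass
class Observation:
    predicted_upc: str           # UPC predicted by the vision model (assumed output)
    scanned_upc: Optional[str]   # UPC reported by the POS scanner, None if not scanned
    seconds_since_seen: float    # time since the camera first saw the item


def classify(obs: Observation, grace_period_s: float = 5.0) -> Alert:
    """Apply the two rules from the text to a single observed item."""
    if obs.scanned_upc is None:
        # No scan yet: alert only after the configured interval has passed.
        if obs.seconds_since_seen >= grace_period_s:
            return Alert.MIS_SCAN
        return Alert.NONE
    if obs.scanned_upc != obs.predicted_upc:
        # Scanned code does not match the recognized product: alert immediately.
        return Alert.TICKET_SWITCH
    return Alert.NONE
```

In this sketch, the unscanned-item rule waits for the interval while the mismatch rule fires at once, mirroring the different timing of the two alerts.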

Figure 2. Examples of mis-scanning and ticket switching

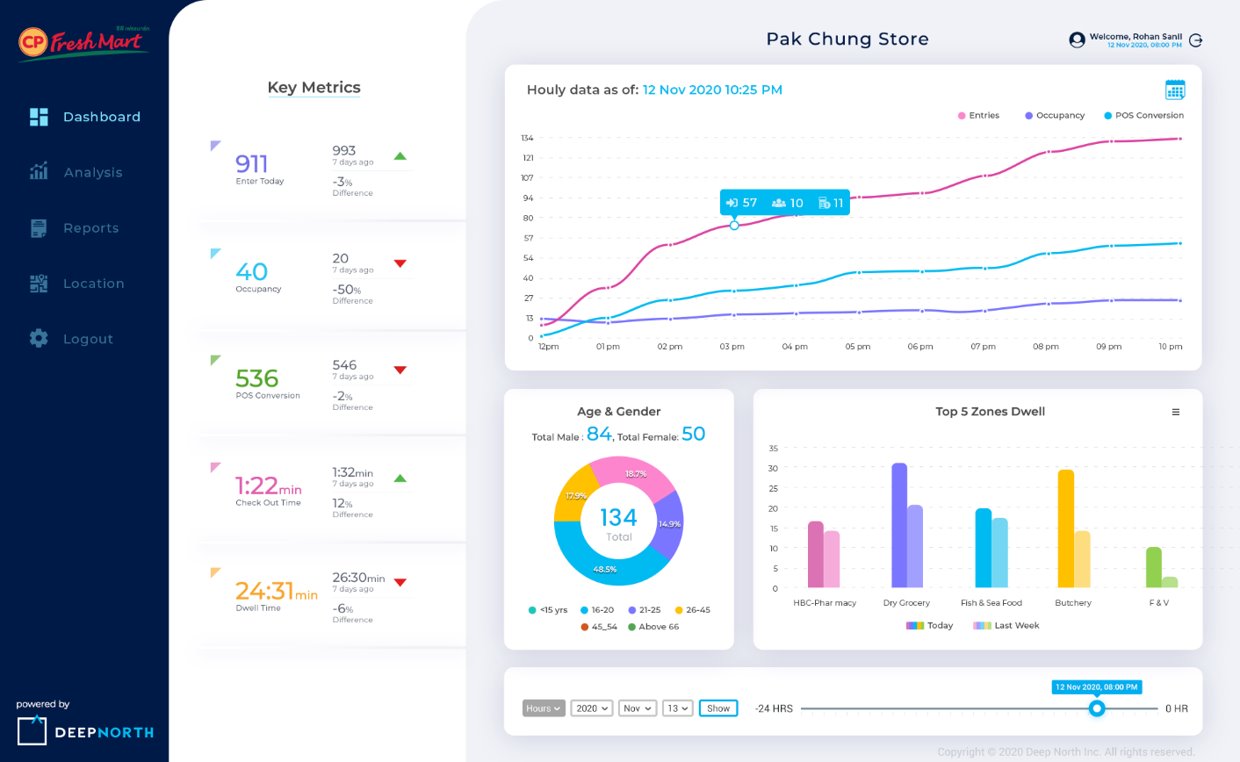

Deep North Video Analytics – Retail Analytics

Deep North Video Analytics is a leading-edge platform that performs real-time and batch processing of images that are captured by cameras that are typically located in the ceiling of a retail environment. The Deep North platform ingests the images and feeds them directly into the memory of GPU cards that are installed in a Dell PowerEdge or Dell VxRail node. Each camera stream is analyzed frame by frame, and then the Deep North inferencing algorithms produce specific metadata. This metadata is then sent to the Deep North Analytics Cloud and converted into a dashboard that provides valuable information to the store owner.

Figure 3. Visualizations in a Deep North Analytics Dashboard

Dell is your partner in your AI Edge for Retail Journey

As AI continues to evolve, keeping up with the design, development, deployment, and management of AI solutions can be a challenge for organizations lacking AI expertise. That's where Dell Technologies comes in, as your trusted partner in the AI journey. With a decade of experience as a leader in advanced computing, Dell offers industry-leading products, solutions, and expertise to empower your organization. Our specialized team of AI, HPC, and Data Analytics experts are dedicated to helping you stay ahead of the curve, with a focus on Edge AI for Retail. Our experts can assist you in leveraging Edge AI to drive business outcomes, improve customer experiences, and increase operational efficiency. Trust Dell to help you stay at the forefront of AI innovation.

Customer Solution Center

The Customer Solution Center is a dedicated resource designed to provide customers with a comprehensive range of information, recommendations, and demonstrations of technologies and platforms that support AI. Our experienced staff are well-versed in the challenges faced by customers and offer valuable insight and guidance to help organizations leverage AI to its full potential.

AI and HPC Innovation Lab

The AI and HPC Innovation Lab is a state-of-the-art infrastructure staffed by an exceptional team of computer scientists, engineers, and Ph.D.-level experts. These specialists work in close collaboration with customers and the wider AI and HPC community to advance the field through early access to emerging technologies, performance optimization of clusters, benchmarking of applications, best practice development, and publication of industry thought leadership. By engaging with the Lab, organizations have direct access to Dell's leading experts, enabling them to tailor a customized solution for their unique AI or HPC needs.

Conclusion

Edge computing has become a crucial technology in the retail industry, allowing for real-time data processing and analysis close to the information source. This leads to improved customer experiences, optimized store operations, and new technology implementation, such as self-checkout and augmented reality systems. As data generation continues to grow, edge computing offers low latency, improved efficiency, enhanced security, cost-effectiveness, and increased scalability. Edge AI is at the forefront of retail trends, offering high ROI in the form of intelligent automation and improved efficiency, human-machine collaboration, new AI use cases for safety, a focus on cybersecurity at the edge, and the creation of digital twins for improved intelligent decision making.

Related Blog Posts

Using Edge Computing to Enhance Customer Experience and Operational Efficiency - Part 3

Thu, 10 Aug 2023 12:41:15 -0000

As discussed in Part 1 and Part 2, this blog provides a comprehensive analysis of potential edge computing solutions for current problems in the retail sector, including retail shrink, stocking, and item locations.

In this part, we will be looking at a consolidated solution for the problems discussed in Part 1 and Part 2.

Consolidated solution

Solution overview

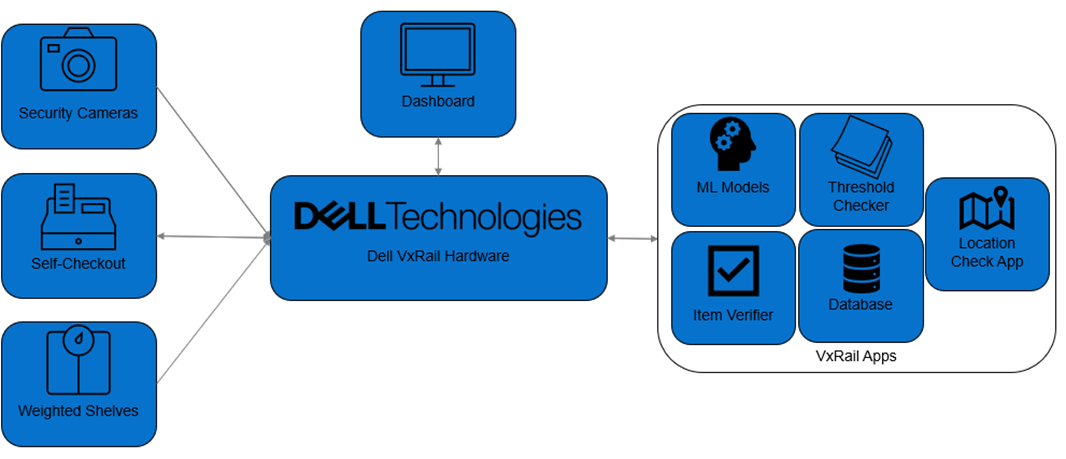

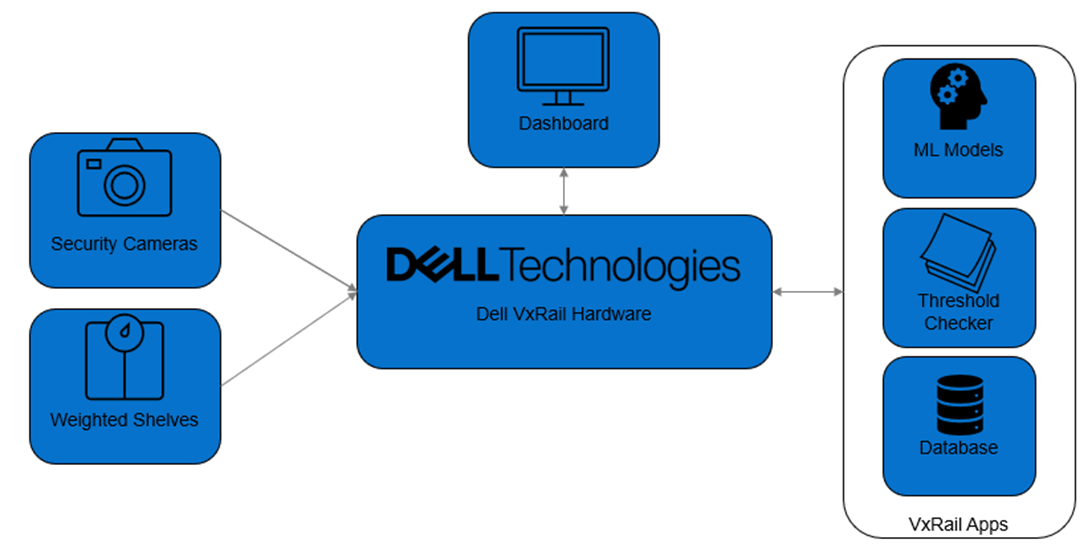

All three solutions I have created for the retail problems involve the use of smart cameras and computer vision to analyze products and their whereabouts in the store. Since there are so many common pieces between the solutions, it would be easy to create a fourth solution that encompasses and solves the three problems.

For this solution, there are many different items you will need. The retail store’s pre-existing or newly installed cameras must be placed throughout the store aisles, the self-checkout stations, and the storefront. You also need the Dell VxRail cluster, which provides the compute power, storage, machine learning models, and computer vision applications. The computer vision applications use real-time video analysis to identify, track, and count products on the shelves, validate self-checkout items, and monitor where the items are located. The self-checkout machines must be connected to the cluster. Optionally, you can use shelf weight sensors to complement the cameras in the aisle for better estimates of the number of items on the shelves.

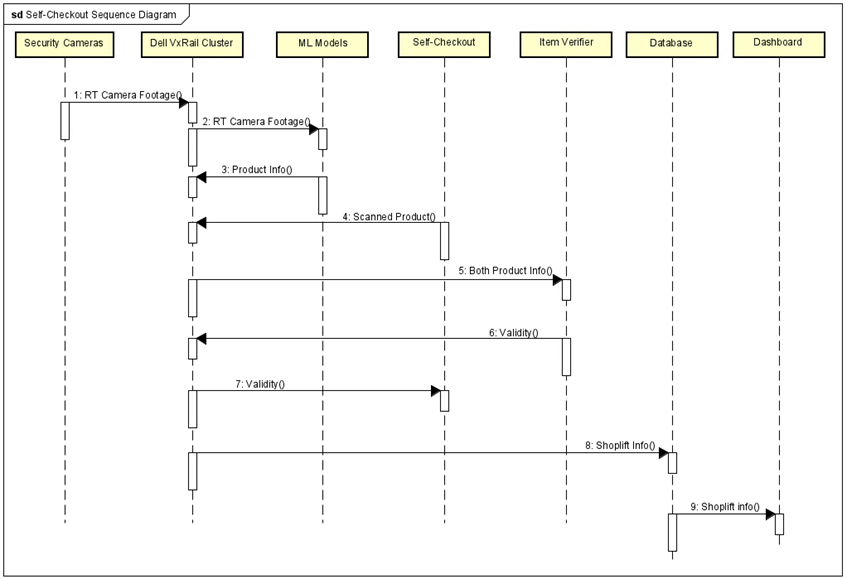

There are many different problems that are solved with this solution, the first being shoplifting. At the self-checkout machines, the customers scan their items like normal, but the cameras send the feed to the ML models to verify in real-time whether the item is correctly labeled. If a mismatch is detected, then the customer is prompted to try again. If the customer is unable to fix it, then the system can alert the cashiers to assist. The system can also track products throughout the store to make sure nobody is storing items in their clothing or refusing to scan them at the self-checkout. If they do, the system can alert a staff member to handle it.
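The retry-then-escalate flow at the self-checkout can be sketched as follows. This is a minimal illustration under stated assumptions: the function name `checkout_outcome`, the action strings, and the limit of two attempts are hypothetical, and the predicted label is assumed to come from the ML models on the cluster.

```python
from typing import List, Tuple


def checkout_outcome(attempts: List[Tuple[str, str]], max_attempts: int = 2) -> str:
    """Return the next action after a series of (scanned_label, predicted_label) attempts.

    "accept"       - the latest attempt matched the model's prediction
    "prompt_retry" - mismatch, but the customer still has attempts left
    "alert_staff"  - the mismatch persisted through max_attempts
    """
    if not attempts:
        raise ValueError("at least one scan attempt is required")
    scanned, predicted = attempts[-1]
    if scanned == predicted:
        return "accept"
    if len(attempts) < max_attempts:
        return "prompt_retry"
    return "alert_staff"
```

For example, a single mismatch yields `"prompt_retry"`, and a second consecutive mismatch yields `"alert_staff"`, so a cashier is only called when the customer cannot resolve the problem alone.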

The next problem being solved with this solution is the monitoring of stock in the shelves. The cameras stationed throughout the aisles record and send real-time footage back to the cluster, which can count how many items there are on the shelf. The store can set thresholds to determine low-stock situations, and when the amount of the product enters that threshold, the system will alert the employees to go and restock the shelf. Additionally, the customer can add weight sensors to the shelf to aid the system in determining how many items there are on the shelf. This will enhance the inventory management, and it will minimize the amount of time a product is unable to be purchased due to empty shelves.

The last problem to be solved with this solution is misplaced items in the store. Using the same cameras and real time footage from the last problem, the models on the cluster will determine what products there are on the shelf. The cameras will be assigned an aisle when they are installed, and the store will enter beforehand what camera corresponds with which aisle and tray. A separate store database will contain information on what item goes where in the store, and the application will check the item location against the store database to make sure it is in the right spot. If it is not, the system can alert an employee to return the item to its proper location.
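A location check of this kind could look like the sketch below. The data structures are assumptions made for illustration: `camera_locations` stands in for the camera-to-aisle-and-tray mapping entered at installation, and `item_locations` stands in for the separate store database of where each item belongs.

```python
from typing import Dict, List, Tuple

Location = Tuple[str, str]  # (aisle, tray)


def find_misplaced(
    camera_id: str,
    detected_items: List[str],
    camera_locations: Dict[str, Location],
    item_locations: Dict[str, Location],
) -> List[str]:
    """Return the detected items that do not belong where this camera is looking.

    Items missing from the store database are also reported, since their
    proper location cannot be confirmed.
    """
    here = camera_locations[camera_id]
    return [item for item in detected_items if item_locations.get(item) != here]
```

Each item returned by `find_misplaced` would then trigger an alert asking an employee to return it to its proper location.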

By combining all three solutions, the store can also gain valuable data about itself and its items. The system can record which items people attempt to shoplift, and if enough people are trying to steal certain items, the store may want to take precautions by putting them behind glass or storing them in the back. The store will also gain data on how fast a product’s available stock is being sold, so that it can determine which items sell slowly and have too much shelf space, and which items need more shelf space so that more can be put in the aisles. The system will also record which items are frequently misplaced, which the store owners can use to reorganize the layout and optimize the shelf arrangements to encourage more sales.

Figure 1. Technological diagram

This solution would use the same sequence diagrams as the other three, since they are all different use cases that would be running concurrently.

Figure 2. Self-checkout sequence diagram

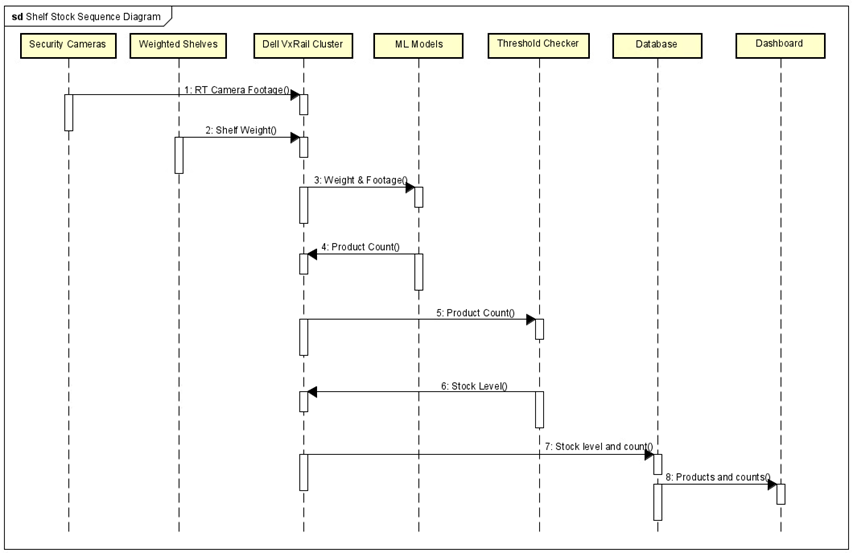

Figure 3. Shelf stock sequence diagram

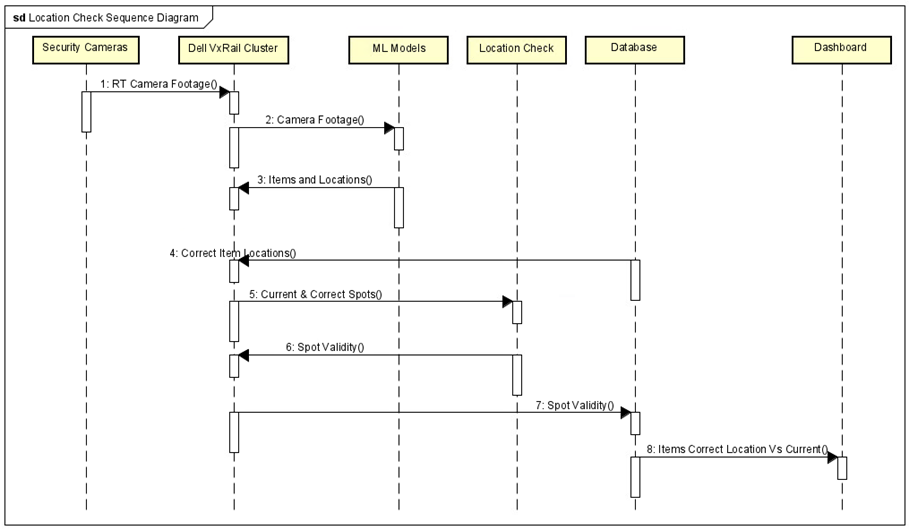

Figure 4. Location check sequence diagram

Why edge?

Why do we need to use edge computing in these solutions as opposed to something like the cloud? While you can run apps and databases in the cloud, there are two main reasons why it is imperative that we use edge computing to create these solutions.

The first of these reasons is that we need real-time analysis of the camera footage. Having to transfer your camera footage to machine learning models in the cloud and back would be extremely time consuming, and you would lose all the benefits of running the analysis on-site. For example, in the time it would take for you to receive your data back about the stock levels of a product, you may have lost out on many sales because the product was not available.

The second reason is the cost of using a cloud service. Not only is transferring all your camera footage to another server in the cloud extremely slow, but it would also be very expensive. Stores have a lot of cameras and sending the video to another site would take all of your bandwidth, and it would cost a lot of money to get it transferred over there. The price it would take to hold all your camera footage while you did analysis on it would cost more than it would be worth to get the analysis.
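A rough calculation shows how quickly the bandwidth adds up. The figures used here (50 cameras streaming at 4 Mbps each, around the clock) are illustrative assumptions, not measurements from a real store, and the helper names are hypothetical.

```python
def uplink_mbps(num_cameras: int, bitrate_mbps: float) -> float:
    """Sustained uplink needed to stream every camera off-site, in megabits per second."""
    return num_cameras * bitrate_mbps


def daily_upload_gb(num_cameras: int, bitrate_mbps: float, hours_per_day: float = 24.0) -> float:
    """Total data uploaded per day, in decimal gigabytes."""
    seconds = hours_per_day * 3600
    megabits = num_cameras * bitrate_mbps * seconds
    return megabits / 8 / 1000  # megabits -> megabytes -> gigabytes


# Assumed store: 50 cameras at 4 Mbps each.
print(uplink_mbps(50, 4.0))      # 200.0 Mbps sustained
print(daily_upload_gb(50, 4.0))  # 2160.0 GB per day
```

Under these assumptions, the store would need a sustained 200 Mbps uplink and would send about 2,160 GB to the cloud every day, before any cloud storage or processing charges are counted.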

More possible problems

Introduction

I took the time to interview people about what problems they encountered when they went to the store. By interviewing actual customers of retail environments, I could gather more insight into what problems affected the lives of shoppers, problems that could possibly lose the business of the customer. If the customer has their problems solved, it may result in more business for the store. These problems are not directly solved by the edge computing solutions I designed, but I believe the impact the solutions make on store efficiency and sales will help to lessen the impact of these problems.

Interviewees and their problems

Interviewee 1: Undergraduate intern, Dell Technologies

Problem: Every store is different

Having a consistent layout in stores can be important because it can help customers find what they are looking for more easily. When walking into a brand-new store, even if the franchise is the same, the layout of the store will be different. This can make it harder for customers to find what they want, which could make the customer go to another store the next time they need to buy something. In addition, having things hard to find can negatively affect the customer experience. Customers may become frustrated if they cannot find what they are looking for and may leave the store without making a purchase.

On the other hand, having things hard to find can also cause the customers to spend more time in the store looking around, which can make them more likely to pick up and buy things that they do not need.

Interviewee 2: Undergraduate intern, Dell Technologies

Problem 1: Occupancy is high

High occupancy can be a problem for stores because it can negatively affect the customer experience. When stores are crowded, customers may feel uncomfortable and may not want to spend as much time in the store. In addition, high occupancy can also lead to safety concerns. If there are too many people in the store at once, it can be difficult for customers to move around and for employees to keep track of everyone.

Problem 2: Time it takes to get checked out is high

Having a long checkout time will negatively impact the sales of the business. Waiting customers could give up and abandon their carts, and they may even go to a different store where the checkout time is shorter.

Interviewee 3: Undergraduate intern, Dell Technologies

Problem 1: Labels of what items in the aisle are not detailed enough

When aisle signs are not detailed enough, it can affect the customer flow, the customer experience, and the sales performance of the store. If aisle signs do not correctly communicate what is in the aisle, then customers will spend more time wandering in the store, when they could be getting checked out to make room for more customers.

Problem 2: Calling for help needs to be easier

Sometimes, customers need to call upon staff for help. If a customer does not know where something is in a store, it is possible that one of the retail workers does. In some stores, getting help requires the customer to search the store for an available attendant.

Interviewee 4: Undergraduate intern, Dell Technologies

Problem: Too expensive

Having an item at a high price means that the company will get more money when it is sold, but sometimes it means that the company will have a harder time selling the item. If an item is priced too highly, a customer may choose to go to a competing store where the prices are lower instead.

Interviewee 5: Undergraduate intern, Dell Technologies

Problem: It is too hard to find things in the store

When it is hard to navigate a store, customers will not be able to find what they are looking for and may leave the store without making a purchase. This can lead to the store losing sales. If a customer leaves the store once because it is too confusing, it is unlikely that they will enter the store again.

Conclusion

In conclusion, edge computing is a valuable resource that can help retail owners elevate their business and excel. Edge computing is becoming increasingly valuable for retailers looking to do real-time analysis of data and management of different machines, allowing them to solve new problems and generate more sales. I looked over three different problems in the retail sector that impacted sales, those being retail shrink, empty shelves, and misplaced items, and discussed how those could be solved using edge computing through a text overview and diagrams. I then went over how these solutions all had similar parts, and how you could combine them into one solution to solve all three problems at once. I then went over why edge computing was the right tool for the job and how it was a better choice than other computing options. Finally, I described the problems that multiple shoppers reported when I interviewed them about retail stores. Overall, edge computing is a powerful tool that can help retailers improve their business and provide better customer experiences.

Read more

For more information about using edge computing to enhance the customer experience and operational efficiency, see Part 1 and Part 2 of this blog.

Using Edge Computing to Enhance Customer Experience and Operational Efficiency - Part 2

Thu, 10 Aug 2023 12:41:15 -0000

As discussed in Part 1, this blog provides a comprehensive analysis of potential edge computing solutions for current problems in the retail sector, including retail shrink, stocking, and item locations.

In this part, we will be looking at more potential problems as well as suggested solutions for them.

Problem 2: Stocking (Empty store shelves)

Overview

Stocking store shelves is an essential part of keeping up sales in a retail store. Customers expect to find what they need when they visit a store, and they may switch to a competitor if they encounter frequent out-of-stocks. It is essential for retailers to adopt an efficient and effective replenishment strategy that ensures optimal inventory levels and minimizes out-of-stocks. According to retail research firm IHL Group, shoppers encounter out-of-stocks as often as one in three shopping trips, with about 32% of those being empty shelves.

A common replenishment strategy that some retailers use is to stock store shelves only on specific days, such as once or twice a week. This approach may seem convenient and cost effective, since it would reduce the labor needed to frequently restock the shelves. However, this strategy also has some drawbacks that can harm the sales performance and profitability of the retailer.

Firstly, products sell at different rates. Some products may sell faster than others, and some days may even have higher sales than other days. This means that some products may sell out of stock before the next time the shelves are restocked, while other products take up too much space that can be used to hold other, more sought-after products.

Secondly, not having store shelves stocked continuously lowers the customer satisfaction with the store. The store may not seem reliable if they never have the items in stock, and customers may feel frustrated or disappointed with the store. It is even possible that customers will then switch to a competing retailer to meet their needs.

Real-world scenario

Once, I went to the store to purchase some potato chips. I went on a Friday afternoon after class, one day before the typical restocking. Upon entering the store, I found that all the potato chips were sold out. There were not even corn chips available. Instead of waiting another day for the store to restock, I went to another store and purchased some other brand of chips. As a result of this experience, the first store not only lost the sale of my bag of potato chips but also likely lost the sales from other customers who were looking for chips that day.

Benefits of addressing the problem

Restocking products when they need to be restocked can improve the sales performance and profitability of the retailer in two main ways: you can attract and retain customers, and you can optimize your inventory management.

Customers are more likely to enter and stay in a store that has well-stocked and appealing shelves. They can easily locate the products they want to buy, and they can also discover new products that are tempting to purchase. This can increase the likelihood of impulse purchases and repeat visits, since the customer would be happy with the store.

Restocking shelves can help retailers to monitor their inventory levels and prevent overstocking and understocking. By keeping track of what products are selling well and what products are not, retailers can adjust their orders and promotions accordingly. This can reduce inventory costs, waste, and shrinkage, and improve cash flow and profitability.

Possible solution

As in the last solution, you will need a camera system, a Dell VxRail cluster for both compute power and storage, and computer vision applications for analyzing the incoming video feeds. Additionally, the cluster can connect to shelves that measure the weight of the items on top, to determine more accurately how many items there are on the shelf.

In this solution, there will once again be cameras placed in the aisles of the store. The cameras will feed real-time footage to the VxRail cluster, where pre-trained models with knowledge of the products will analyze the footage and determine how many of each product there are left. When the stock of a product in an aisle is running low, the system can alert the employees that the item needs to be restocked.

Sometimes, the shelves are built in a way that makes it difficult to see how many of a certain item are on a shelf from how they are lined up. To solve this, the customer can use weighted shelves that record how heavy the items on the shelf are. The sensors in the shelf report back to the VxRail cluster, and their readings, combined with the computer vision view of the shelves, can be used to get an accurate reading of how many of each item there are on the shelves.
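One simple way to combine the two signals is to let the vision model identify which product is on the shelf and let the weight sensor supply the count. The sketch below assumes each weighted shelf section holds a single product with a known unit weight; the `estimate_count` function and the catalog of unit weights are hypothetical.

```python
from typing import Mapping


def estimate_count(product: str, shelf_weight_g: float, unit_weights_g: Mapping[str, float]) -> int:
    """Estimate how many units of `product` sit on a shelf from its total weight.

    `product` is assumed to come from the computer vision model and
    `shelf_weight_g` from the shelf's weight sensor.
    """
    unit = unit_weights_g[product]
    if unit <= 0:
        raise ValueError("unit weight must be positive")
    # Round to the nearest whole item and never report a negative count.
    return max(0, round(shelf_weight_g / unit))


# Hypothetical unit weights in grams.
catalog = {"potato chips": 150.0, "corn chips": 200.0}
print(estimate_count("potato chips", 1500.0, catalog))  # 10
```

Rounding absorbs small sensor errors, although a real deployment would also need to handle shelves that hold a mix of products.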

This solution gives the store valuable data regarding store traffic and the items the store needs to purchase more of. If an item is frequently running out of stock, the store may want to look into expanding the shelf space that the item takes up or keeping more of the items in the back. That way, this solution can also help you with inventory management.

Figure 1. Technological diagram

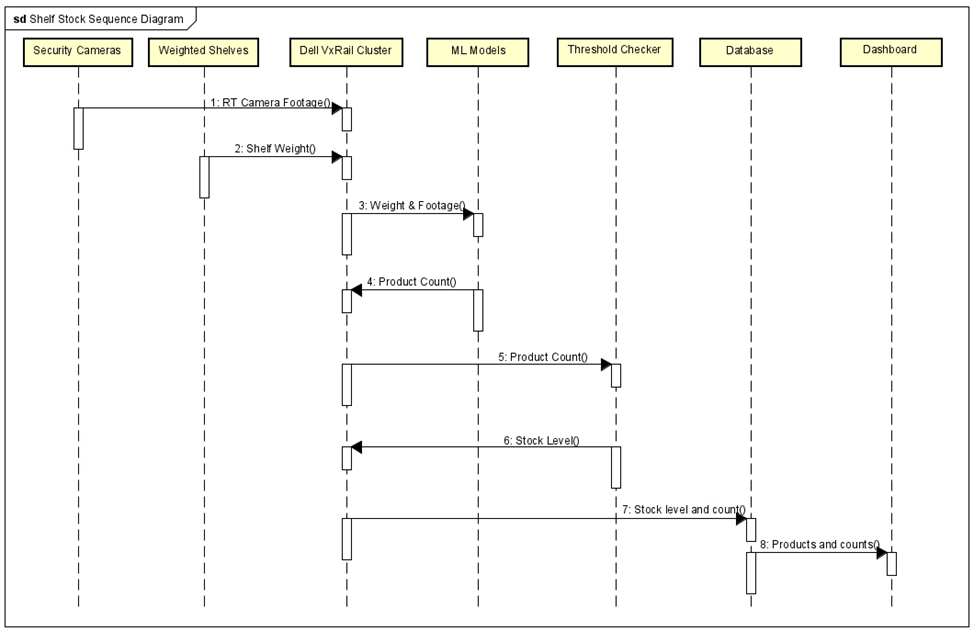

Figure 2. Shelf-stock sequence diagram

In this diagram, the security cameras feed the real-time camera footage of the aisles over to the VxRail cluster. At the same time, the weight sensors in the shelves will send the weight info over to the cluster as well. Both the camera footage and the weight information will be passed over to the ML Models nodes, which can then use the information to count how many of the products there are on the shelves. This count can then be passed back to the cluster, which can hand it off to the Threshold Checker application. The Threshold Checker application, which the store owners can customize to change what amount of an item on a shelf is considered low stock, then checks the status of the amount of the item against the threshold and gives the value back to the cluster, which stores it in the Database. The Dashboard can then show all of the items and their amounts, and which items are in need of restocking.
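The Threshold Checker step can be sketched as a small function. This is an illustration only: the name `low_stock_items`, the per-item threshold dictionary, and the default threshold of 5 are assumptions, and "low stock" is taken here to mean a count at or below the threshold.

```python
from typing import Dict, List


def low_stock_items(
    counts: Dict[str, int],
    thresholds: Dict[str, int],
    default_threshold: int = 5,
) -> List[str]:
    """Return the items whose shelf count is at or below their low-stock threshold.

    `counts` stands in for the per-item counts produced by the ML models;
    `thresholds` holds the store's customized values, with a default for
    items that have no specific threshold.
    """
    return sorted(
        item
        for item, count in counts.items()
        if count <= thresholds.get(item, default_threshold)
    )


counts = {"potato chips": 2, "corn chips": 12, "salsa": 5}
thresholds = {"potato chips": 4, "corn chips": 6}
print(low_stock_items(counts, thresholds))  # ['potato chips', 'salsa']
```

The returned list is what a dashboard would highlight as needing a restock, while the full `counts` mapping would be stored for later analysis.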

Problem 3: Storing (Misplaced items)

Overview

One of the challenges that retailers face is customers misplacing items in the store. This can lead to lost sales, because customers may not be able to find the products they are looking for or may purchase a different product instead. Customers often pick up a product at the store, but then leave it in a place where it does not belong when they realize they do not want it anymore. This creates clutter, confusion, and inefficiency in the store layout and inventory management. Moreover, now that stores have mobile apps that can tell you what aisle an item is supposed to be in, customers may get frustrated or confused if the app does not match the reality of the store layout. This can damage the customer’s trust and loyalty and reduce the likelihood of repeat purchases. Sometimes when inventory is missing and not accounted for, it has simply been mistaken for another item. Therefore, it is important for retailers to maintain a consistent and accurate inventory system. For more information, see 5 Most Common Reasons for Inventory Discrepancy and How to Resolve Them.

Real-world scenario

I was once shopping for cayenne pepper seasoning for a recipe. I went to a local store and checked the store’s mobile app, which indicated that cayenne pepper was in stock, so I went to the aisle it listed. Despite going through all the rows multiple times, I could not find it. I moved on to pick up the other ingredients, and only then noticed the cayenne pepper sitting in the wrong location. If I had not happened to find where it had been misplaced, the store would have lost my sale.

Benefits of addressing the problem

Solving this problem can have many benefits for both the retailers and the customers. Some of the benefits are improved customer satisfaction, increased sales and revenue, and reduced costs and waste.

Customers would be able to find the products they want more easily and quickly, without having to search the aisles for misplaced items. They can also trust that the products they see on the shelves are fresh, available, and correctly priced.

When products are in their proper locations, they are more likely to catch the attention of potential buyers and generate impulse purchases. Customers are also more likely to buy more items when they have a good shopping experience and can see all the options available.

Misplaced products can cause inventory errors, shrinkage, and spoilage. By keeping the products in the areas that they belong in, retailers can avoid these losses and save money on labor, storage, and disposal. They can also optimize their space utilization and merchandising strategies.

Possible solution

As with the other two solutions, you will need a camera setup, a Dell VxRail cluster for compute power and storage, and computer vision applications for analyzing the incoming video feeds.

Like the first two solutions, this solution requires cameras placed in the aisles to monitor the items there. The cameras send live footage to the VxRail cluster, which hosts pre-trained models that recognize all of the store's items. Each camera is identified by the aisle it is in, so the applications on the VxRail cluster also know which aisle an item was seen in. The machine learning models report the items in the aisle to a separate application on the VxRail cluster, which checks a database of items and their locations to make sure that each item is in its proper spot. If an item is not, the application can alert an employee, who can retrieve the item and return it to its proper location.
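As a rough sketch of the location check described above, the comparison could look something like the following. Everything here is illustrative: the camera IDs, aisle names, and the `Detection`, `Alert`, and `check_locations` names are hypothetical, and two in-memory dictionaries stand in for the camera registry and the item-location database.

```python
from dataclasses import dataclass

# Hypothetical mapping from camera ID to the aisle that camera monitors.
CAMERA_AISLES = {"cam-01": "aisle 1", "cam-07": "aisle 7"}

# Hypothetical stand-in for the database of items and their proper locations.
EXPECTED_AISLE = {"cayenne pepper": "aisle 7", "pasta": "aisle 1"}


@dataclass(frozen=True)
class Detection:
    camera_id: str  # which camera saw the item
    item: str       # item name reported by the ML model


@dataclass(frozen=True)
class Alert:
    item: str
    found_in: str
    belongs_in: str


def check_locations(detections, camera_aisles=CAMERA_AISLES, expected=EXPECTED_AISLE):
    """Return one alert for every detected item that is in the wrong aisle."""
    alerts = []
    for detection in detections:
        found_in = camera_aisles[detection.camera_id]
        belongs_in = expected.get(detection.item)
        if belongs_in is None:
            continue  # Item not in the database: skipped in this sketch.
        if found_in != belongs_in:
            alerts.append(Alert(detection.item, found_in, belongs_in))
    return alerts


# Example: the aisle 1 camera sees pasta (correct) and cayenne pepper (misplaced).
detections = [Detection("cam-01", "pasta"), Detection("cam-01", "cayenne pepper")]
for alert in check_locations(detections):
    print(f"Move {alert.item} from {alert.found_in} to {alert.belongs_in}")
```

Deriving the aisle from the camera ID, rather than asking the model to infer it, reflects the point that each camera is identified by the aisle it monitors.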

This solution can also gather a lot of useful data. For example, you can find out which products are being left in which places. If many units of the same product are being misplaced in the same location, then there may be another product nearby that customers would rather have. The store can then identify these competing brands and decide how to reorganize its layout to encourage more sales. The store could also find products that are more likely to be removed from the cart, and use that data to determine which products to stock less of.
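One simple way to surface these patterns is to count how often each product turns up in each wrong location. The sketch below assumes that misplacement events are logged as `(item, found_in)` pairs; the function name `misplacement_hotspots`, the `min_count` cutoff, and the sample data are all hypothetical.

```python
from collections import Counter


def misplacement_hotspots(events, min_count=3):
    """Return (item, found_in) pairs seen at least min_count times, most frequent first.

    events: iterable of (item, found_in) tuples, one per misplaced item found.
    """
    counts = Counter(events)
    return [(pair, n) for pair, n in counts.most_common() if n >= min_count]


# Example log: brand A salsa keeps getting abandoned next to brand B salsa.
events = (
    [("brand A salsa", "aisle 4, next to brand B salsa")] * 4
    + [("cayenne pepper", "aisle 1")] * 1
)
for (item, found_in), n in misplacement_hotspots(events):
    print(f"{item} was left at '{found_in}' {n} times")
```

A recurring pair is only a hint that a nearby product is winning the comparison; the store would still need to review the layout before acting on it.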

Figure 3. Technological diagram

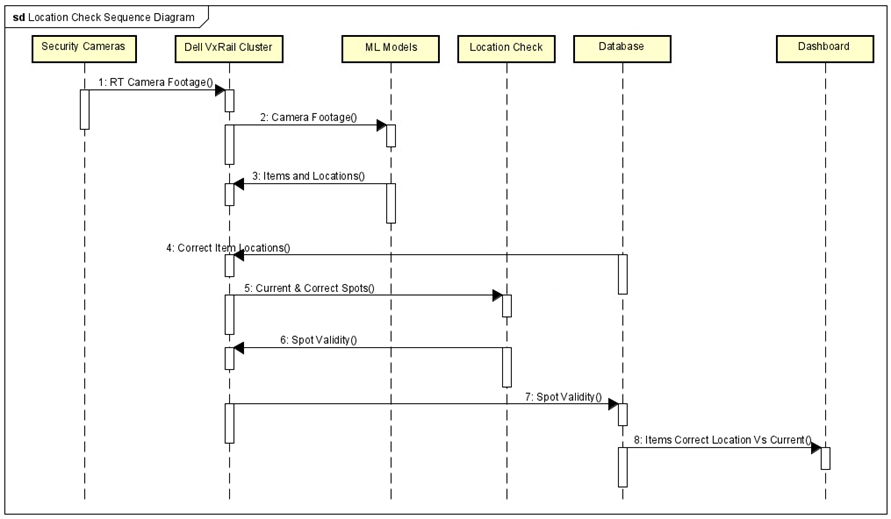

Figure 4. Location check sequence diagram

In this diagram, the security cameras feed real-time footage to the Dell VxRail cluster. The cluster sends that footage to the ML Models, which report the items they see and their current locations. The cluster retrieves the correct location information from the database, then passes it, together with the items and their current locations, to the location check app. The app verifies whether each item is in the right spot and sends the state of the item’s location back to the cluster, which stores it in the database. The store owner’s dashboard can then show the locations of the items and which ones are in the wrong spot.
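The last two steps of this sequence, storing each item's location state and querying it for the dashboard, might be sketched as follows. This is only an illustration that uses an in-memory SQLite database as a stand-in for whatever database runs on the cluster; the `item_status` table, its columns, and the function names are assumptions, not part of the solution as described.

```python
import sqlite3


def open_store():
    """Create an in-memory database with one row per item and aisle where it was seen."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE item_status ("
        " item TEXT NOT NULL,"
        " expected_aisle TEXT NOT NULL,"
        " current_aisle TEXT NOT NULL,"
        " PRIMARY KEY (item, current_aisle))"
    )
    return conn


def record_status(conn, item, expected_aisle, current_aisle):
    """Store the result of a location check, replacing any earlier row for the same sighting."""
    conn.execute(
        "INSERT OR REPLACE INTO item_status (item, expected_aisle, current_aisle)"
        " VALUES (?, ?, ?)",
        (item, expected_aisle, current_aisle),
    )
    conn.commit()


def misplaced_items(conn):
    """Dashboard query: items whose current aisle differs from their expected aisle."""
    rows = conn.execute(
        "SELECT item, current_aisle, expected_aisle FROM item_status"
        " WHERE current_aisle <> expected_aisle ORDER BY item"
    )
    return rows.fetchall()


# Example: one item in place, one misplaced.
conn = open_store()
record_status(conn, "pasta", "aisle 1", "aisle 1")
record_status(conn, "cayenne pepper", "aisle 7", "aisle 1")
for item, current, expected in misplaced_items(conn):
    print(f"{item}: seen in {current}, belongs in {expected}")
```

Storing the expected and current locations side by side keeps the dashboard query to a single comparison.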

Read more

For more information about using edge computing to enhance the customer experience and operational efficiency, see Part 1 and Part 3 of this blog.