Dell Technologies PowerEdge MX with Cisco ACI Integration

Thu, 09 Feb 2023 20:05:01 -0000


Introduction

This blog provides an example of integrating the Dell PowerEdge MX platform running Dell SmartFabric Services (SFS) with Cisco Application Centric Infrastructure (ACI).

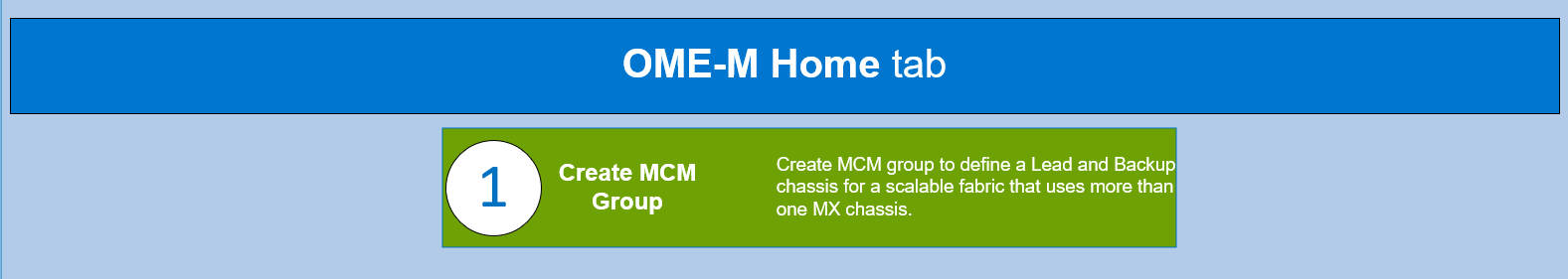

The example in this blog assumes that the PowerEdge MX7000 chassis are configured in a Multi Chassis Management Group (MCM) and that you have a basic understanding of the PowerEdge MX platform.

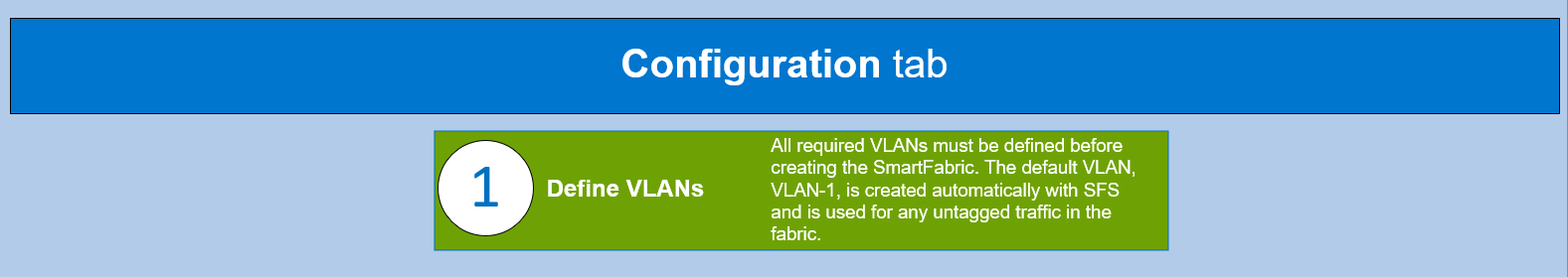

As part of the PowerEdge MX platform, the SmartFabric OS10 network operating system includes SmartFabric Services, a network automation and orchestration solution that is fully integrated with the MX platform.

Configuration Requirements

Configuration of SmartFabric on PowerEdge MX with Cisco ACI makes the following assumptions:

- All MX7000 chassis and management modules are cabled correctly and in an MCM group.

- VLTi cables between MX Fabric Switching Engines (FSE) and Fabric Expander Modules (FEM) are connected correctly.

- PowerEdge and Cisco ACI platforms are in healthy status and are running updated software.

The example setup is validated using the following software versions:

- MX chassis: 2.00.00

- MX IOMs (MX9116n): 10.5.4.1.29

- Cisco APIC: 5.2(6e)

- Cisco leaf switches: 4.2(7u)

Refer to the Dell Networking Support and Interoperability Matrix for the latest validated versions.
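As a minimal sketch, the validated versions listed above can be kept in a small lookup table and compared against a hand-collected inventory. The component keys, the function name, and the example inventory are hypothetical and exist only for this illustration. A mismatch does not necessarily mean that a version is unsupported, so check the matrix before drawing conclusions.

```python
# Validated versions copied from the list above. The component keys are
# hypothetical labels chosen for this sketch.
VALIDATED_VERSIONS = {
    "mx_chassis": "2.00.00",
    "mx_iom_mx9116n": "10.5.4.1.29",
    "cisco_apic": "5.2(6e)",
    "cisco_leaf": "4.2(7u)",
}


def find_version_mismatches(inventory):
    """Return {component: (found, validated)} for each component whose
    version differs from the validated one. A component that is missing
    from the inventory is reported with found=None."""
    mismatches = {}
    for component, validated in VALIDATED_VERSIONS.items():
        found = inventory.get(component)
        if found != validated:
            mismatches[component] = (found, validated)
    return mismatches


# Hypothetical inventory, entered by hand for illustration only.
example_inventory = {
    "mx_chassis": "2.00.00",
    "mx_iom_mx9116n": "10.5.4.1.29",
    "cisco_apic": "5.2(6e)",
    "cisco_leaf": "4.2(7f)",
}

print(find_version_mismatches(example_inventory))
```

Only the leaf switch entry is reported here, because it is the only version in the example inventory that differs from the validated list.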

Hardware and Logical Topology

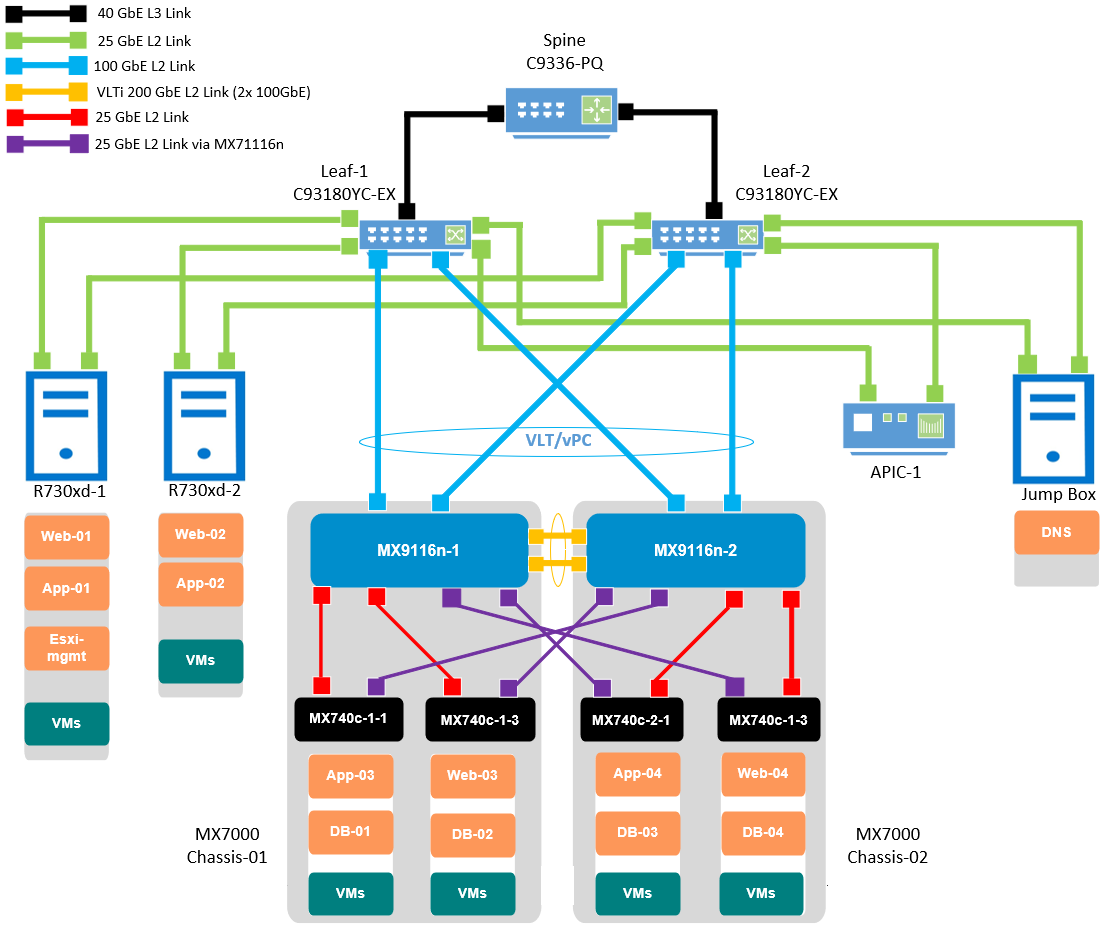

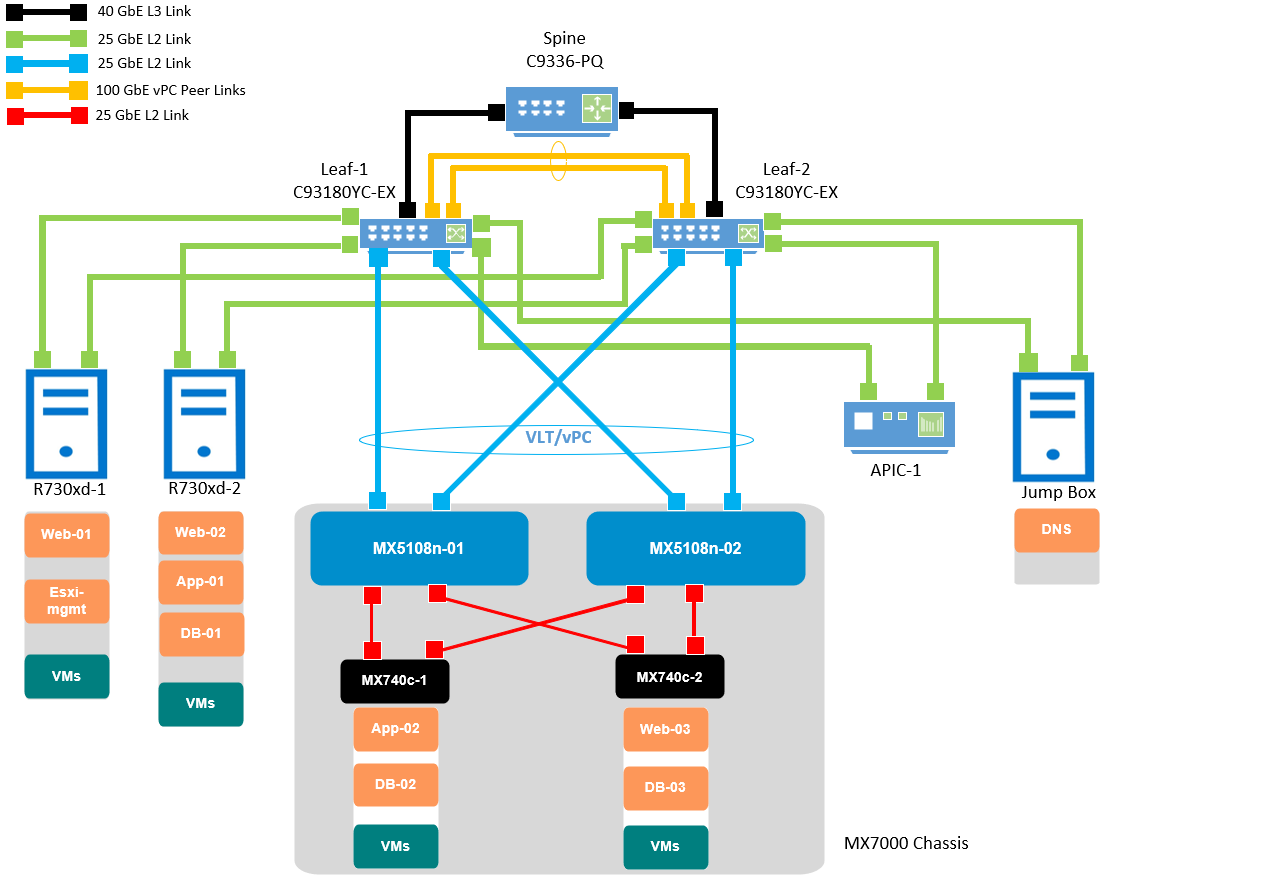

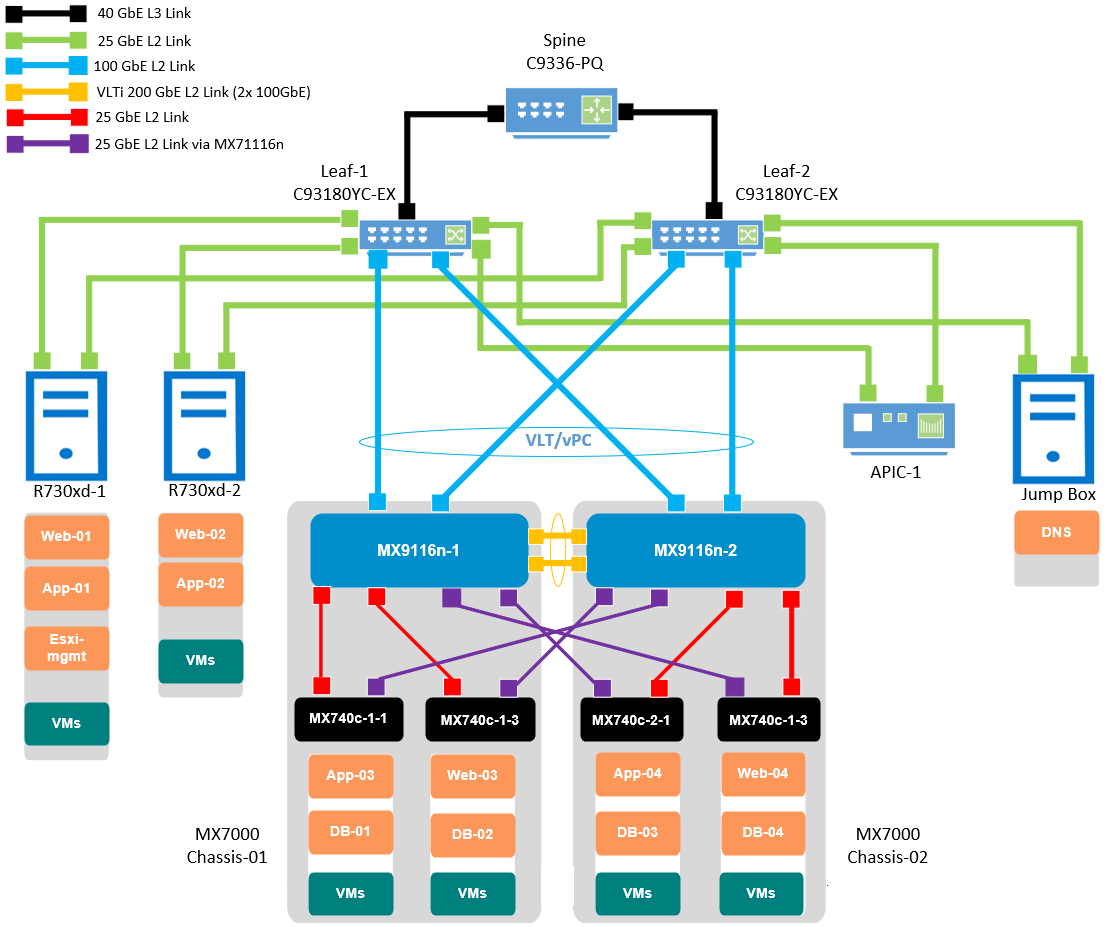

The validated Cisco ACI environment includes a pair of Nexus C93180YC-EX switches as leafs. These switches are connected to a single Nexus C9336-PQ switch as the spine using 40GbE connections. MX9116n FSE switches are connected to the C93180YC-EX leafs using 100GbE cables.

The following section provides an overview of the topology and configuration steps. For detailed configuration instructions, refer to the Dell EMC PowerEdge MX SmartFabric and Cisco ACI Integration Guide.

Caution: The connection of an MX switch directly to the ACI spine is not supported.

Figure 1 Validated SmartFabric and ACI environment logical topology

This blog is organized into four major parts:

- Cisco Application Policy Infrastructure Controller (APIC)

- Dell PowerEdge MX OpenManage Enterprise-Modular (OME-M)

- VMware vCenter Server Appliance (VCSA)

- Dell OpenManage Network Integration (OMNI)

Cisco APIC

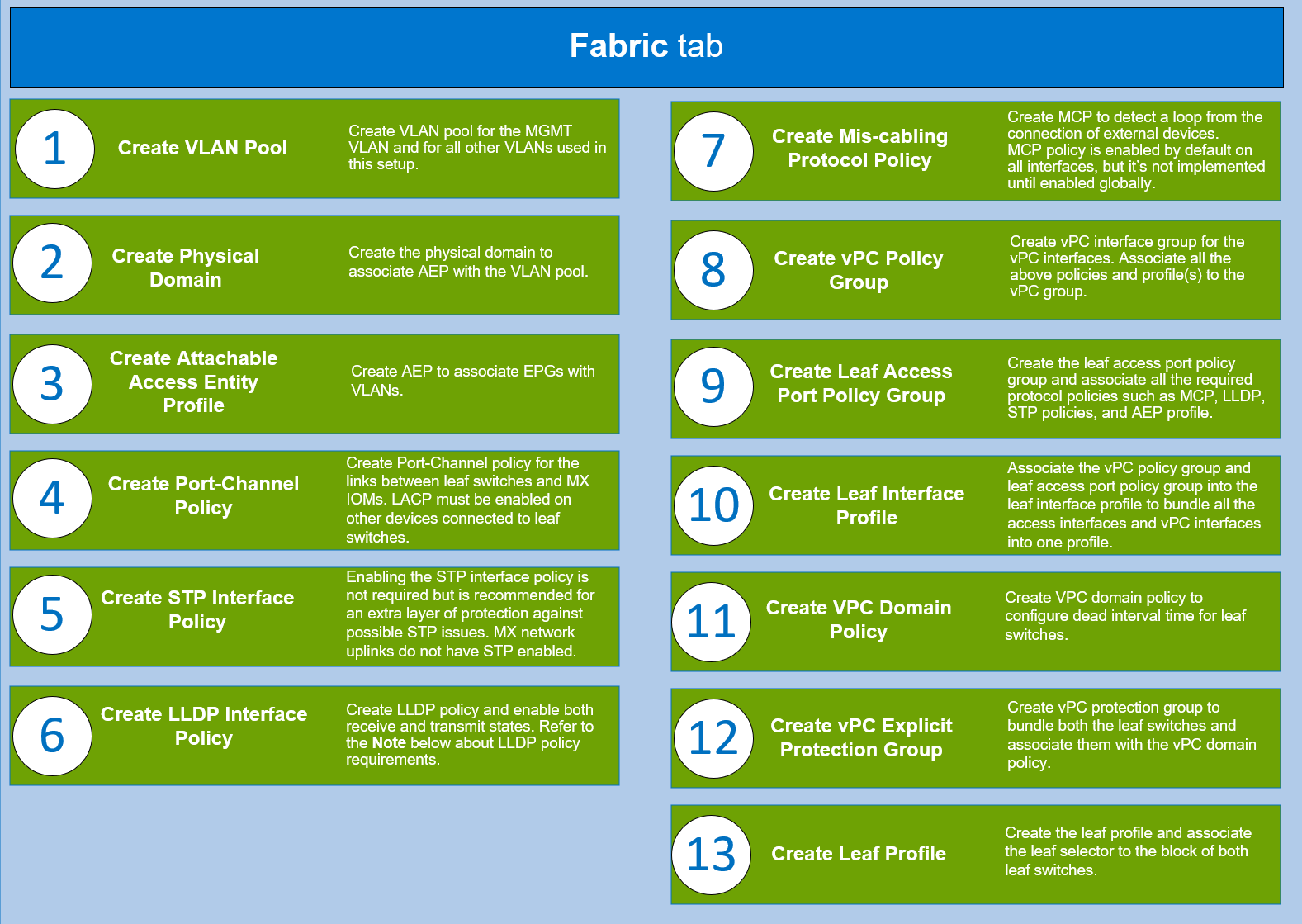

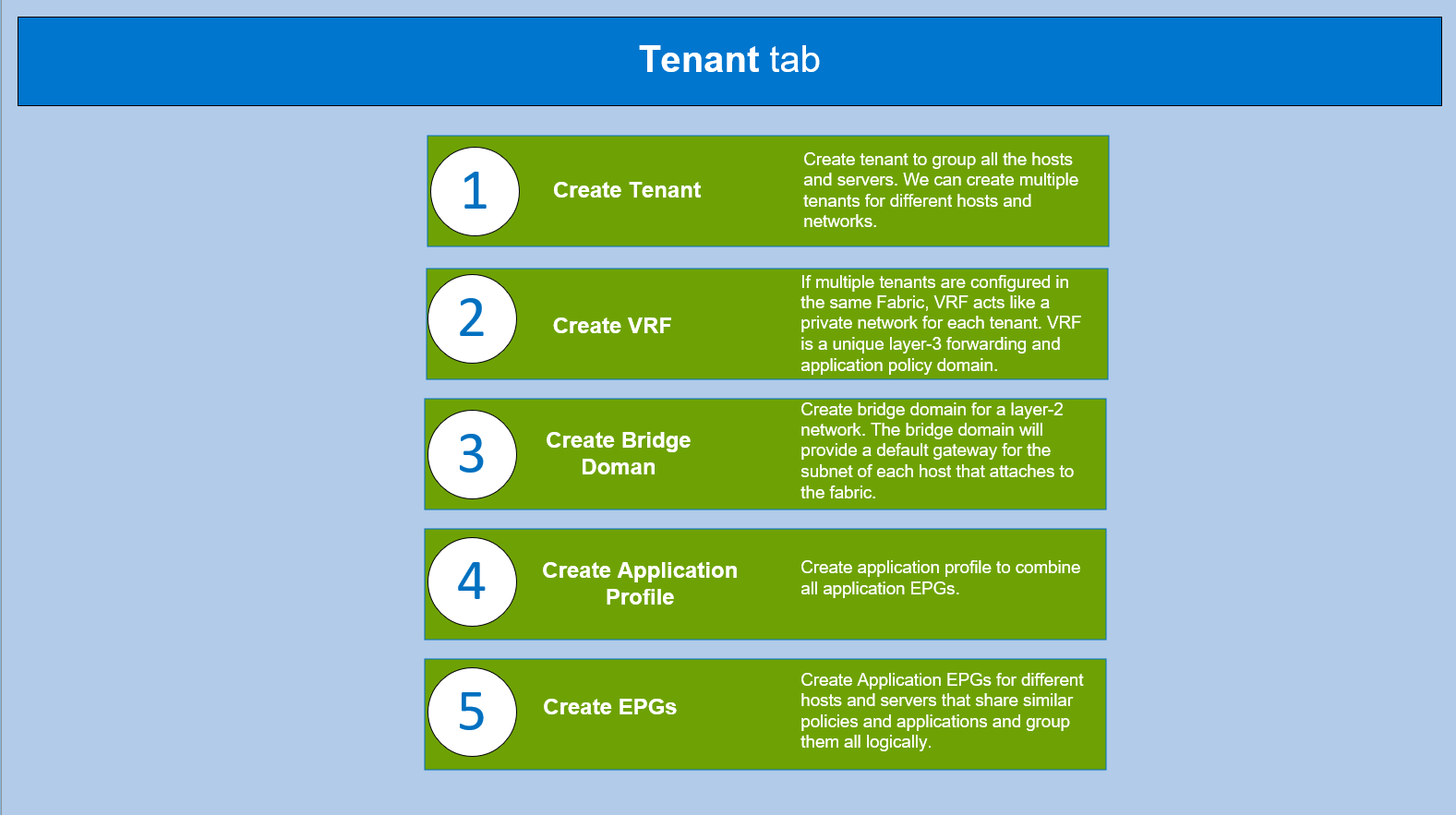

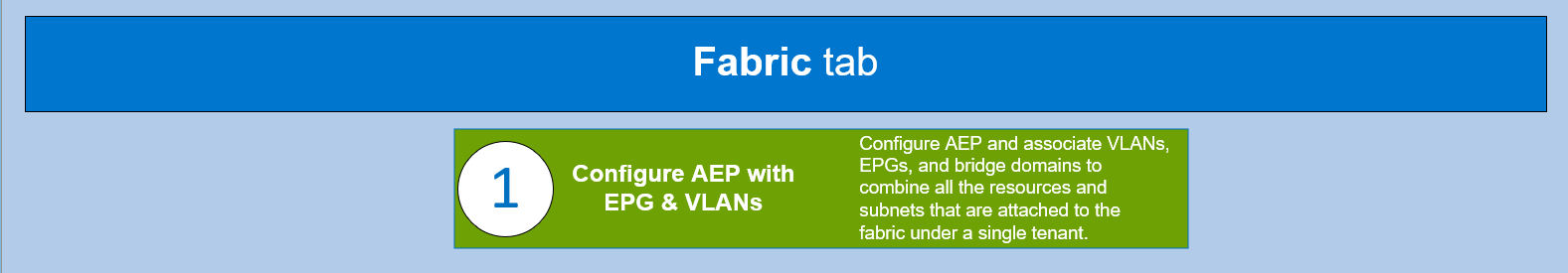

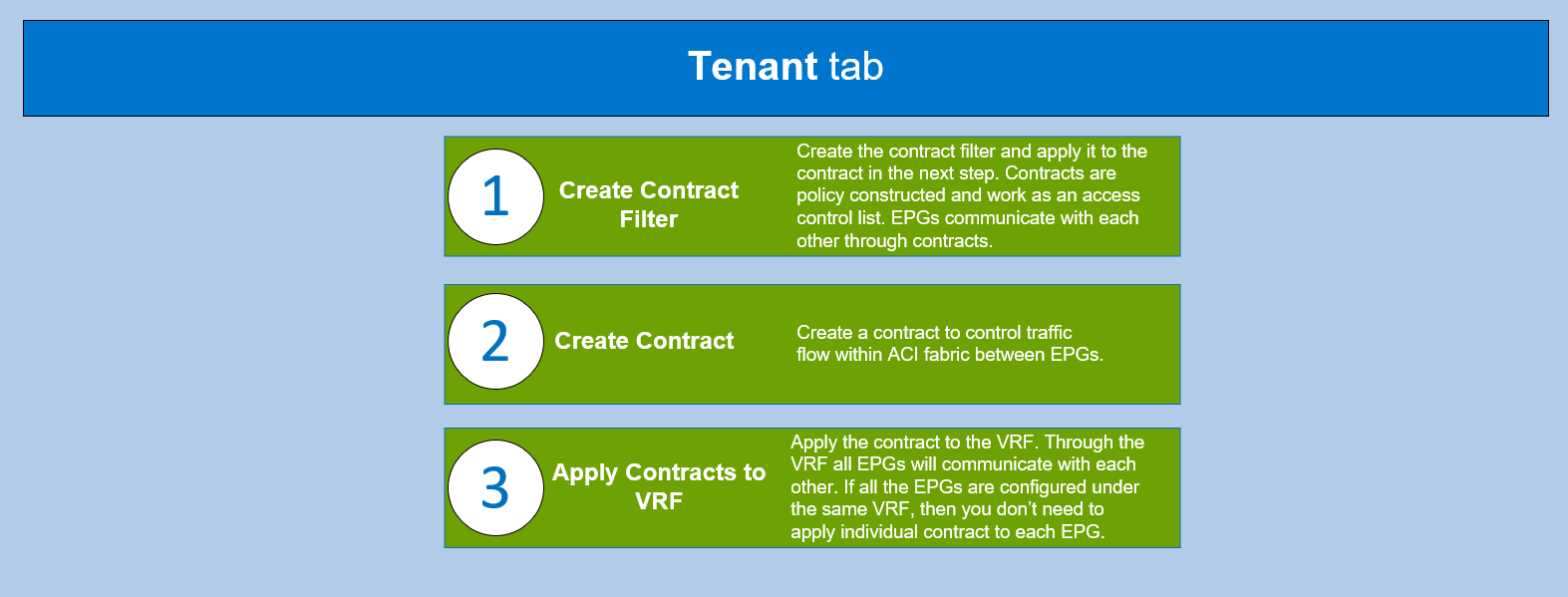

Cisco APIC provides a single point of automation and fabric element management in both virtual and physical environments. It helps operators build fully automated and scalable multi-tenant networks.

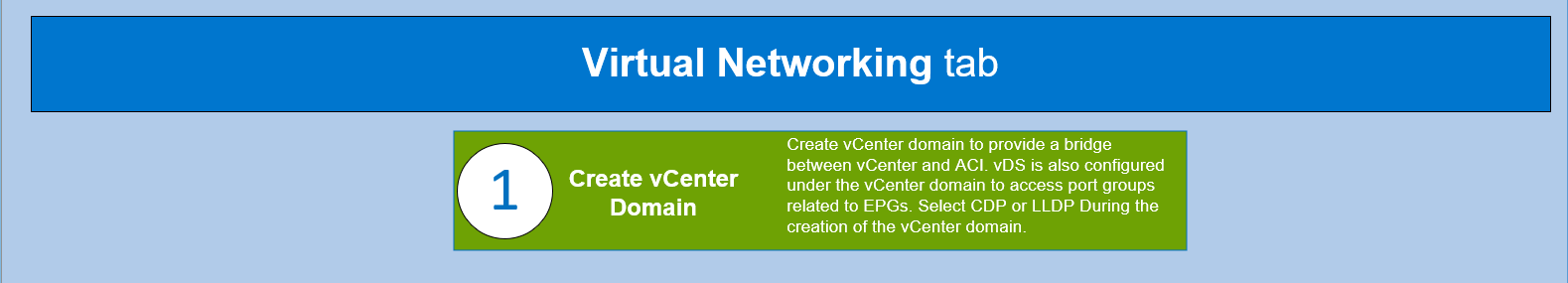

To understand the required protocols, policies, and features that you must configure to set up the Cisco ACI, log in to the Cisco APIC controller and complete the steps shown in the following flowcharts.

Caution: Ensure that all the required hardware is in place and that all connections are made as shown in the logical topology above.

Note: If a storage area network protocol (such as FCoE) is configured, Dell Technologies suggests that you use CDP as the discovery protocol on ACI and vCenter, while LLDP remains disabled on the MX SmartFabric.

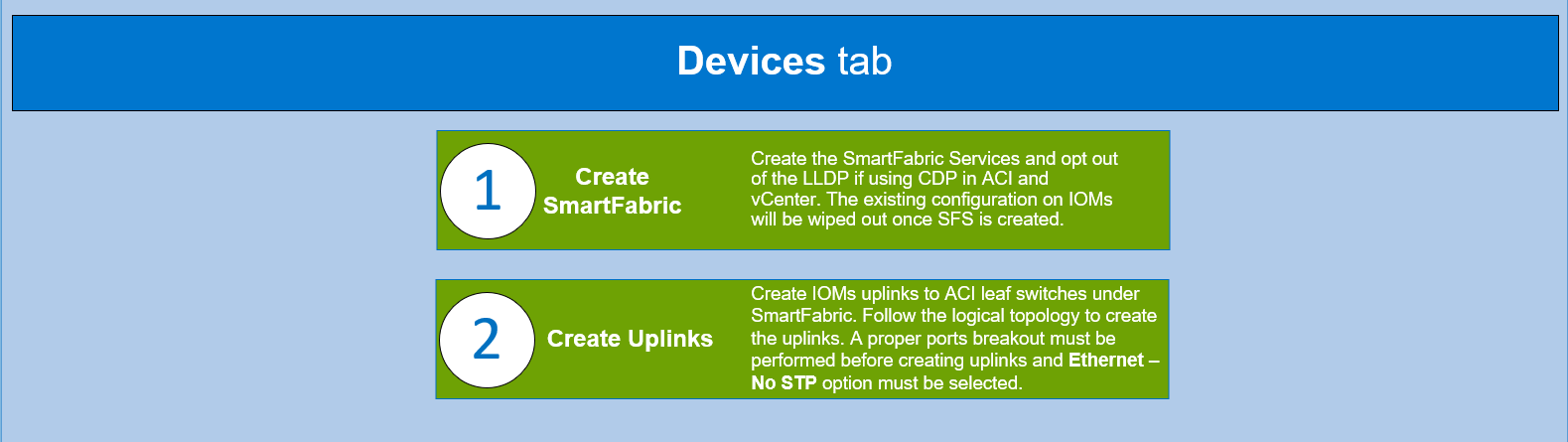

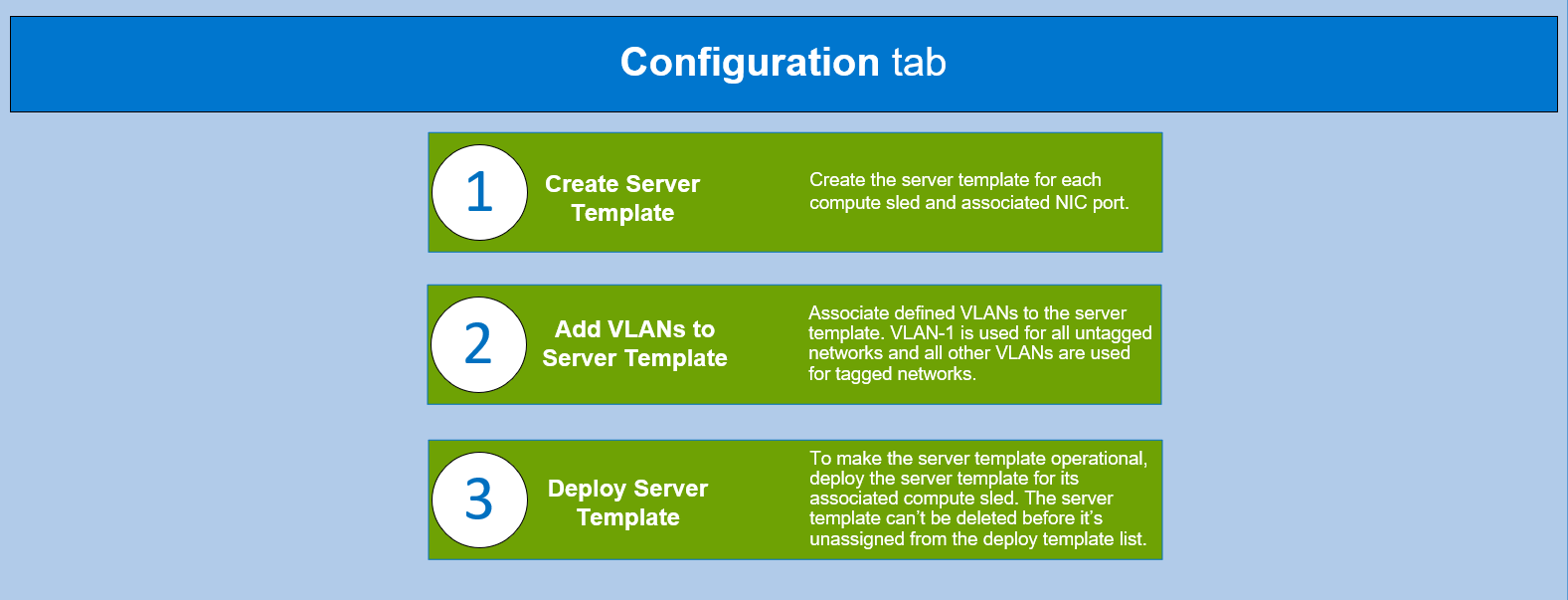

PowerEdge MX OME-M

The PowerEdge MX platform is a unified, high-performance data center infrastructure. It provides the agility, resiliency, and efficiency to optimize a wide variety of traditional and new, emerging data center workloads and applications. With its kinetic architecture and agile management, PowerEdge MX dynamically configures compute, storage, and fabric; increases team effectiveness; and accelerates operations. The responsive design delivers the innovation and longevity that customers need for their IT and digital business transformations.

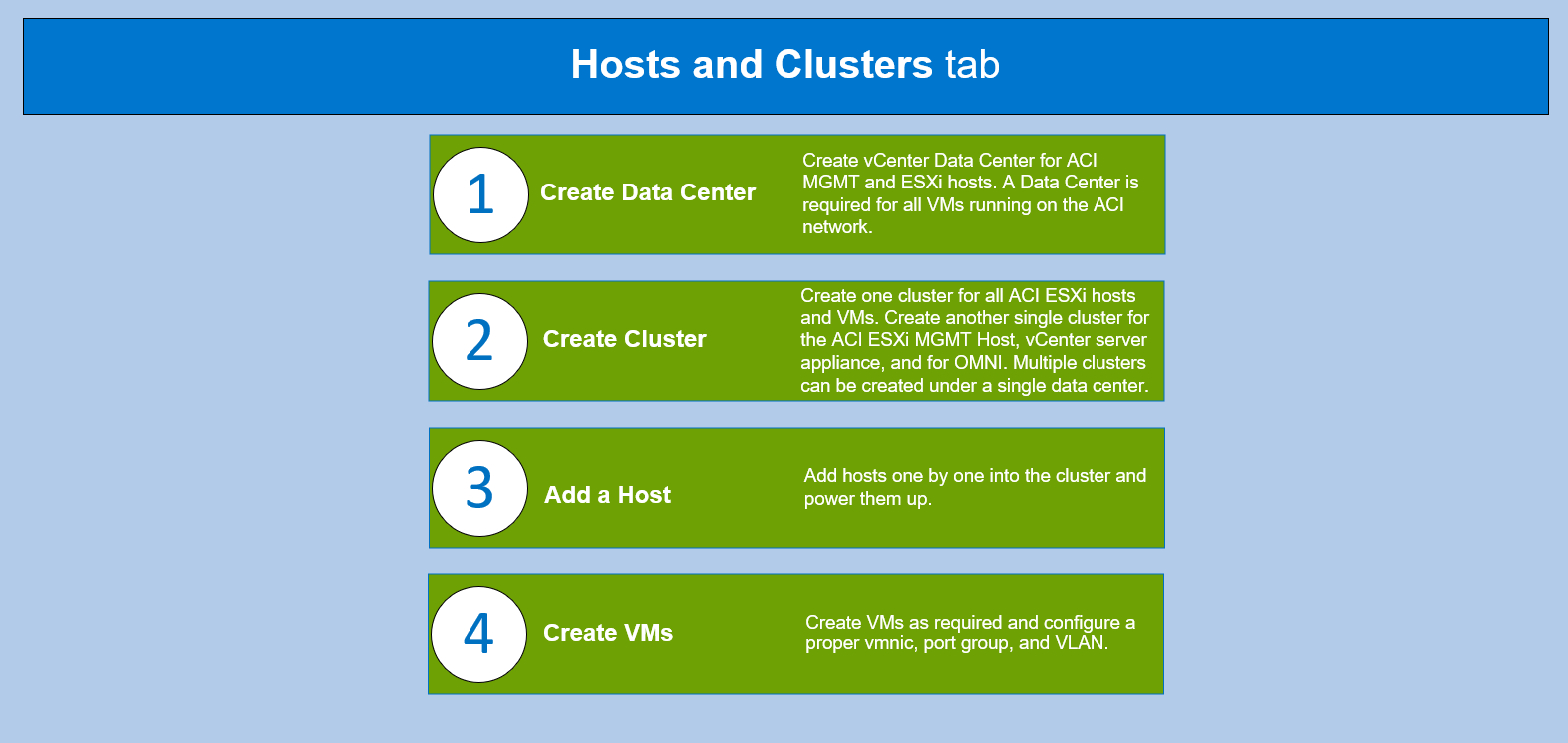

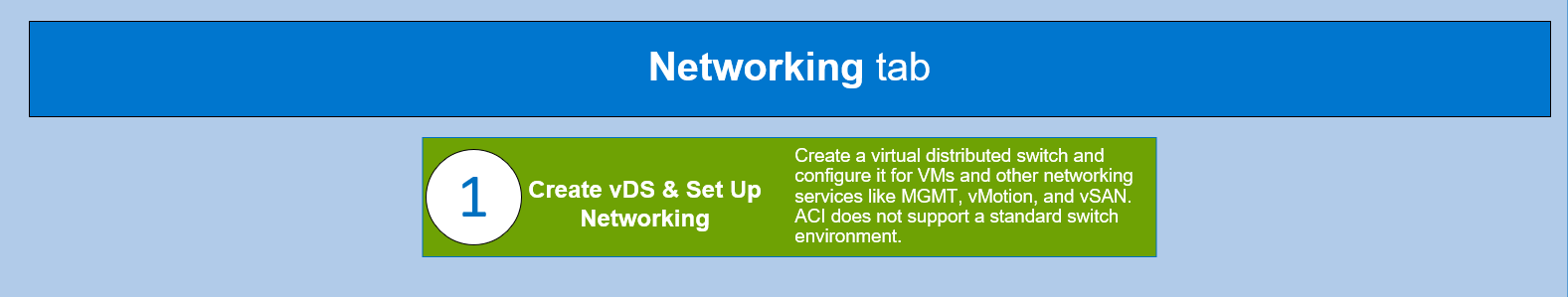

VMware vCenter

VMware vCenter is an advanced, centralized management platform. The flowchart below assumes that you have completed the following prerequisites:

- Install the vCenter server appliance on the ESXi MGMT server.

- Install the ESXi VMvisor on the ESXi host servers for the MX SmartFabric and Cisco ACI integration environment.

OMNI

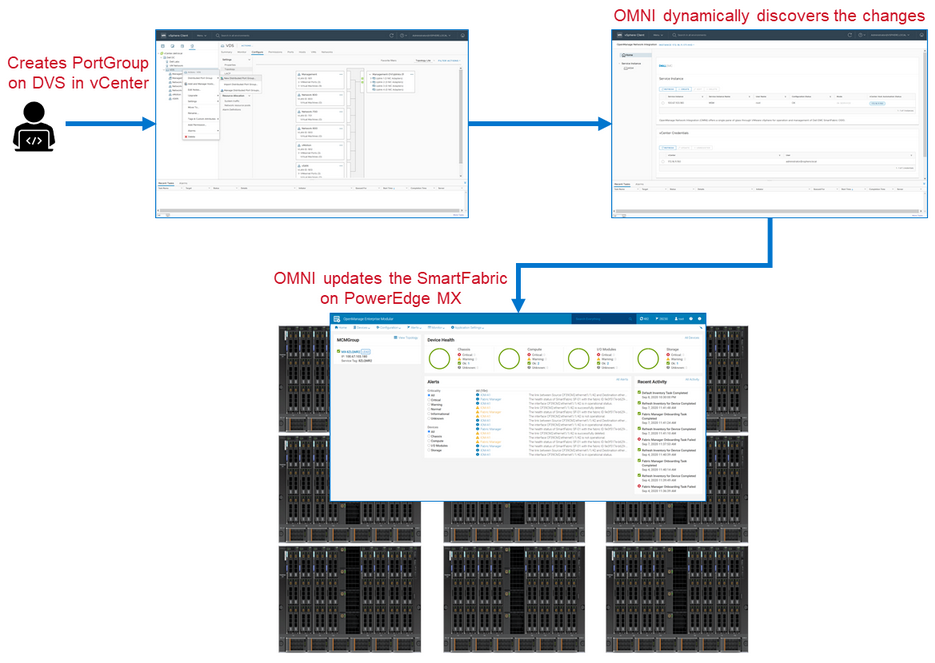

OMNI is an external plug-in for VMware vCenter that is designed to complement SFS by integrating with VMware vCenter to perform fabric automation. This integration automates VLAN changes that occur in VMware vCenter and propagates those changes into the related SFS instances running on the MX platform, as shown in the following figure.

The combination of OMNI and Cisco ACI vCenter integration creates a fully automated solution. OMNI and the Cisco APIC recognize and allow a VLAN change to be made in vCenter, and this change will flow through the entire solution without any manual intervention.
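The idea of propagating VLAN changes can be illustrated with a small reconciliation sketch: compare the VLANs wanted on the vCenter side with the VLANs currently present on the fabric, and derive what to add and what to remove. This is not OMNI's implementation or API. The function name and the VLAN IDs are hypothetical and serve only to show the concept.

```python
def plan_vlan_sync(vcenter_vlans, fabric_vlans):
    """Return the VLAN IDs to add to and remove from the fabric so that
    it matches the VLANs defined on the vCenter side.

    Both arguments are iterables of integer VLAN IDs. This is a
    conceptual sketch only; it does not call vCenter, OMNI, or SFS.
    """
    desired = set(vcenter_vlans)
    current = set(fabric_vlans)
    return {
        "add": sorted(desired - current),
        "remove": sorted(current - desired),
    }


# Hypothetical VLAN IDs for illustration.
print(plan_vlan_sync(vcenter_vlans=[1611, 1612, 1613], fabric_vlans=[1611, 1614]))
```

In this example, VLANs 1612 and 1613 would be added to the fabric and VLAN 1614 would be removed, while VLAN 1611 is left untouched.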

For more information about OMNI, see the SmartFabric Services for OpenManage Network Integration User Guide on the Dell EMC OpenManage Network Integration for VMware vCenter documentation page.

Figure 2 OMNI integration workflow

MX Single Chassis Deployment for ACI Integration

A single MX7000 chassis may also join an existing Cisco ACI environment by using the MX5108n Ethernet switch. The MX chassis in this example has two MX5108n Ethernet switches and two MX compute sleds.

The connections between the ACI environment and the MX chassis are made using a double-sided multi-chassis link aggregation group (MLAG). The MLAG is called a vPC on the Cisco ACI side and a VLT on the PowerEdge MX side. The following figure shows the environment.

Figure 3 SmartFabric and ACI environment using MX5108n Ethernet switches logical topology

Reference

List of Acronyms

- ACI: Cisco Application Centric Infrastructure

- AEP: Attachable Access Entity Profile

- APIC: Cisco Application Policy Infrastructure Controller

- CDP: Cisco Discovery Protocol

- EPG: End Point Group

- LLDP: Link Layer Discovery Protocol

- MCP: Mis-Cabling Protocol

- MCM: Multi Chassis Management Group

- MLAG: Multi-chassis link aggregation group

- MX FSE: Dell MX Fabric Switching Engines

- MX FEM: Dell MX Fabric Expander Modules

- MX IOMs: Dell MX I/O Modules

- MX MCM: Dell MX Multichassis Management Group

- OME-M: Dell OpenManage Enterprise-Modular

- OMNI: Dell OpenManage Network Integration

- PC: Port Channel

- STP: Spanning Tree Protocol

- VCSA: VMware vCenter Server Appliance

- vDS: Virtual Distributed Switch

- VLAN: Virtual Local Area Network

- VM: Virtual Machine

- VMM: VMware Virtual Machine Manager

- vPC: Virtual Port Channel

- VRF: Virtual Routing and Forwarding

Documentation and Support

Dell EMC PowerEdge MX Networking Deployment Guide

Dell EMC PowerEdge MX SmartFabric and Cisco ACI Integration Guide

Networking Support & Interoperability Matrix

Dell EMC PowerEdge MX VMware ESXi with SmartFabric Services Deployment Guide

Related Blog Posts

Integrate Dell PowerEdge MX with Cisco ACI

Thu, 09 Feb 2023 20:05:01 -0000


You can integrate the Dell PowerEdge MX platform running Dell SmartFabric Services (SFS) with Cisco Application Centric Infrastructure (ACI). This blog shows an example setup using PowerEdge MX7000 chassis that are configured in a Multi Chassis Management Group (MCM).

We validated the example setup using the following software versions:

- MX chassis: 2.00.00

- MX IOMs (MX9116n): 10.5.4.1.29

- Cisco APIC: 5.2(6e)

- Cisco leaf switches: 4.2(7u)

The validated Cisco ACI environment includes a pair of Nexus C93180YC-EX switches as leafs. We connected these switches to a single Nexus C9336-PQ switch as the spine using 40GbE connections. We connected MX9116n FSE switches to the C93180YC-EX leafs using 100GbE cables.

Configuration

The Dell Technologies PowerEdge MX with Cisco ACI Integration blog provides an overview of the configuration steps for each of the components:

- Cisco Application Policy Infrastructure Controller (APIC)

- Dell PowerEdge MX OpenManage Enterprise-Modular (OME-M)

- VMware vCenter Server Appliance (VCSA)

- Dell OpenManage Network Integration (OMNI)

For more detailed configuration instructions, refer to the Dell EMC PowerEdge MX SmartFabric and Cisco ACI Integration Guide.

Resources

Dell EMC PowerEdge MX Networking Deployment Guide

Dell EMC PowerEdge MX SmartFabric and Cisco ACI Integration Guide

Networking Support & Interoperability Matrix

Dell EMC PowerEdge MX VMware ESXi with SmartFabric Services Deployment Guide

Dell Technologies PowerEdge MX 100 GbE solution with external Fabric Switching Engine

Mon, 26 Jun 2023 20:31:38 -0000


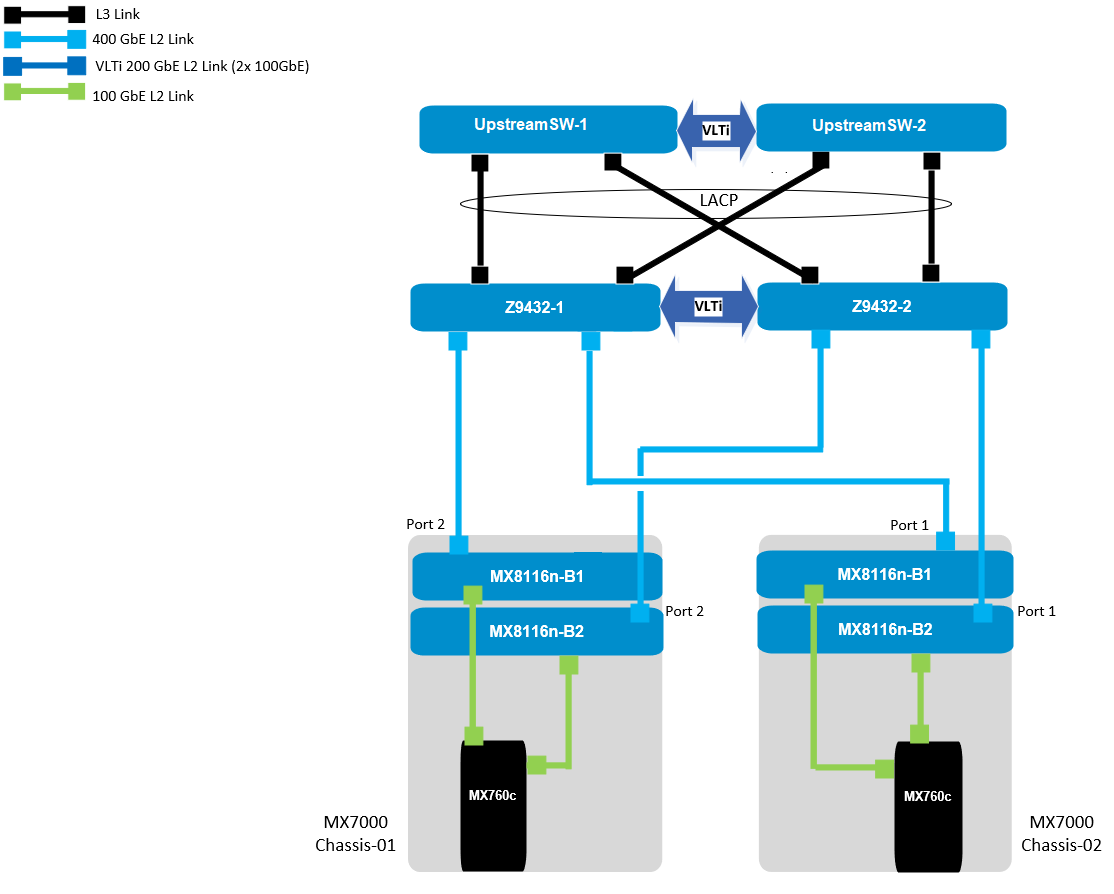

The Dell PowerEdge MX platform is advancing its position as the leading high-performance data center infrastructure by introducing a 100 GbE networking solution. This evolved networking architecture not only provides the benefit of 100 GbE speed but also increases the number of MX7000 chassis within a Scalable Fabric. The 100 GbE networking solution brings a new type of architecture, starting with an external Fabric Switching Engine (FSE).

PowerEdge MX 100 GbE solution design example

The diagram shows only one connection on each MX8116n for simplicity. For the complete connectivity, see the port-mapping section in the networking deployment guide.

Figure 1. 100 GbE solution example topology

Components for 100 GbE networking solution

The key hardware components for 100 GbE operation within the MX platform are briefly described below.

Dell Networking MX8116n Fabric Expander Module

The MX8116n FEM includes two QSFP56-DD interfaces, each providing up to 4x 100 Gbps connections to the chassis. It also provides 8x 100 GbE internal server-facing ports for 100 GbE NICs and 16x 25 GbE internal server-facing ports for 25 GbE NICs.

The MX7000 chassis supports up to four MX8116n FEMs in Fabric A and Fabric B.

Figure 2. MX8116n FEM

The following MX8116n FEM components are labeled in the preceding figure:

- Express service tag

- Power and indicator LEDs

- Module insertion and removal latch

- Two QSFP56-DD fabric expander ports

Dell PowerEdge MX760c compute sled

- The MX760c is ideal for dense virtualization environments and can serve as a foundation for collaborative workloads.

- Businesses can install up to eight MX760c sleds in a single MX7000 chassis and combine them with compute sleds from different generations.

- Single or dual CPU (up to 56 cores per processor/socket with four x UPI @ 24 GT/s) and 32x DIMM slots DDR5 with eight memory channels.

- 8x E3.S NVMe (Gen5 x4) or 6 x 2.5" SAS/SATA SSDs or 6 x NVMe (Gen4) SSDs and iDRAC9 with lifecycle controller.

Note: The 100 GbE Dual Port Mezzanine card is also available on the MX750c.

Figure 3. Dell PowerEdge MX760c sled with eight E3.S SSD drives

Dell PowerSwitch Z9432F-ON external Fabric Switching Engine

The Z9432F-ON provides state-of-the-art, high-density 100/400 GbE ports and a broad range of functionality to meet the growing demands of modern data center environments. Its key features include:

- A compact 1RU design with an industry-leading density of 32 ports of 400 GbE in QSFP56-DD, 128 ports of 100 GbE, or up to 144 ports of 10/25/50 GbE (through breakout).

- Up to 25.6 Tbps non-blocking (full duplex) switching fabric that delivers line-rate performance under full load.

- L2 multipath support using Virtual Link Trunking (VLT) and Routed VLT.

- Scalable L2 and L3 Ethernet switching with QoS and a full complement of standards-based IPv4 and IPv6 features, including OSPF and BGP routing support.
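The port and bandwidth figures quoted for the Z9432F-ON are consistent with simple arithmetic on its 32 ports of 400 GbE. The short check below is a sketch that assumes each 400 GbE port breaks out into four 100 GbE ports, which matches the 128-port figure in the text. It does not attempt to derive the 144-port 10/25/50 GbE figure.

```python
# Figures taken from the Z9432F-ON description above.
PORTS_400G = 32
GBPS_PER_400G_PORT = 400

# Assumption for this sketch: one 400 GbE port breaks out to 4x 100 GbE.
BREAKOUT_100G_PER_PORT = 4

one_way_gbps = PORTS_400G * GBPS_PER_400G_PORT       # aggregate in one direction
full_duplex_gbps = 2 * one_way_gbps                  # both directions counted
ports_100g = PORTS_400G * BREAKOUT_100G_PER_PORT     # 100 GbE ports via breakout

print(f"One direction: {one_way_gbps / 1000} Tbps")
print(f"Full duplex:   {full_duplex_gbps / 1000} Tbps")
print(f"100 GbE ports: {ports_100g}")
```

The full-duplex total of 25,600 Gbps corresponds to the 25.6 Tbps switching fabric figure, and 32 x 4 gives the 128 ports of 100 GbE.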

Figure 4. Dell PowerSwitch Z9432F-ON

Note: Mixed dual port 100 GbE and quad port 25 GbE mezzanine cards connecting to the same MX8116n are not a supported configuration.

100 GbE deployment options

There are four deployment options for the 100 GbE solution, and every option requires servers with a dual port 100 GbE mezzanine card. You can install the mezzanine card in mezzanine slot A, slot B, or both. When you use the Broadcom 575 KR dual port 100 GbE mezzanine card, set the Z9432F-ON port-group to unrestricted mode and configure the port mode as 100g-4x.

PowerSwitch CLI example:

port-group 1/1/1

profile unrestricted

port 1/1/1 mode Eth 100g-4x

port 1/1/2 mode Eth 100g-4x
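When the same settings must be repeated for several port groups, the command lines can be generated rather than typed by hand. The helper below is a hypothetical sketch that only reproduces the command pattern shown in the example above. Which ports belong to which port group is not derived here, so take that mapping from the switch documentation and pass it in explicitly.

```python
def render_port_group_config(port_group, ports,
                             profile="unrestricted", mode="Eth 100g-4x"):
    """Render CLI lines that follow the pattern of the example above.

    port_group -- port-group identifier, for example "1/1/1"
    ports      -- ports that belong to that port group (caller-supplied;
                  this sketch does not know the real group-to-port mapping)
    """
    lines = [f"port-group {port_group}", f"profile {profile}"]
    lines += [f"port {port} mode {mode}" for port in ports]
    return "\n".join(lines)


# Reproduces the example shown above.
print(render_port_group_config("1/1/1", ["1/1/1", "1/1/2"]))
```

Review the generated text against the switch before applying it, because the helper performs no validation of port names or supported modes.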

Note: In a 100 GbE solution deployment, a maximum of 14 chassis is supported in a single fabric, and a maximum of 7 chassis is supported in a dual fabric, using the same pair of FSEs.
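The chassis limits in the note can be captured in a small planning check. This is a sketch only: the function name and the "single"/"dual" mode labels are hypothetical, and the limits are the ones stated in the note for one pair of FSEs.

```python
# Maximum chassis per pair of FSEs, as stated in the note above.
MAX_CHASSIS = {"single": 14, "dual": 7}


def chassis_count_is_supported(fabric_mode, chassis_count):
    """Return True if the planned chassis count is within the stated limit.

    fabric_mode   -- "single" or "dual" (labels chosen for this sketch)
    chassis_count -- number of MX7000 chassis attached to one FSE pair
    """
    if fabric_mode not in MAX_CHASSIS:
        raise ValueError(f"unknown fabric mode: {fabric_mode!r}")
    if chassis_count < 1:
        raise ValueError("chassis_count must be at least 1")
    return chassis_count <= MAX_CHASSIS[fabric_mode]


print(chassis_count_is_supported("single", 14))  # within the limit
print(chassis_count_is_supported("dual", 8))     # exceeds the limit
```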

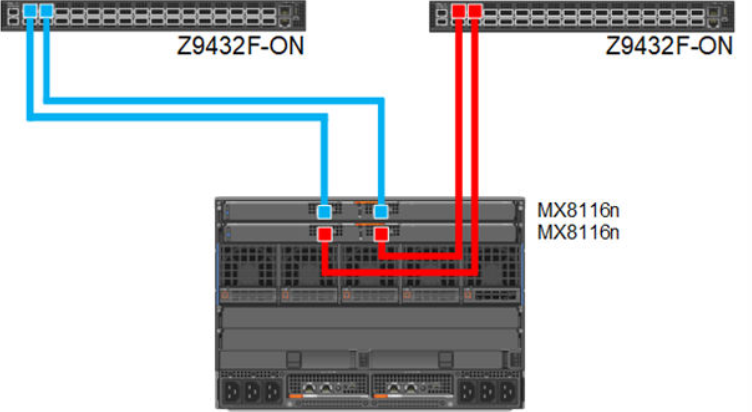

Single fabric

In a single fabric deployment, two MX8116n can be installed in either Fabric A or Fabric B, and the 100 GbE mezzanine card is installed in the corresponding mezzanine slot (slot A or slot B) of the sled.

Figure 5. 100 GbE Single Fabric

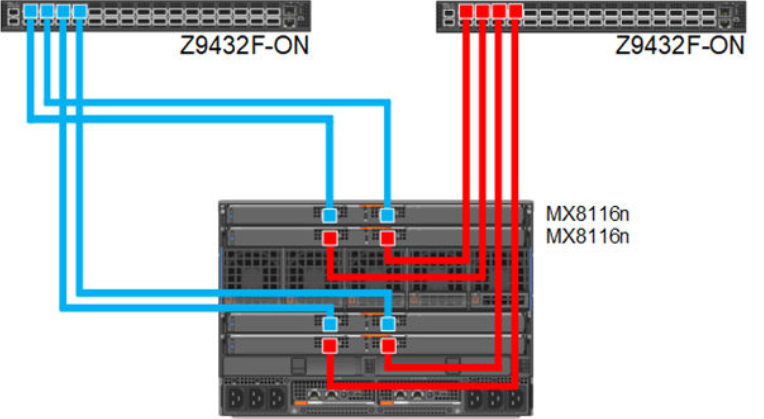

Dual fabric combined fabrics

In this option, four MX8116n (2x in Fabric A and 2x in Fabric B) can be installed and combined to connect to the Z9432F-ON external FSE.

Figure 6. 100 GbE Dual Fabric combined Fabrics

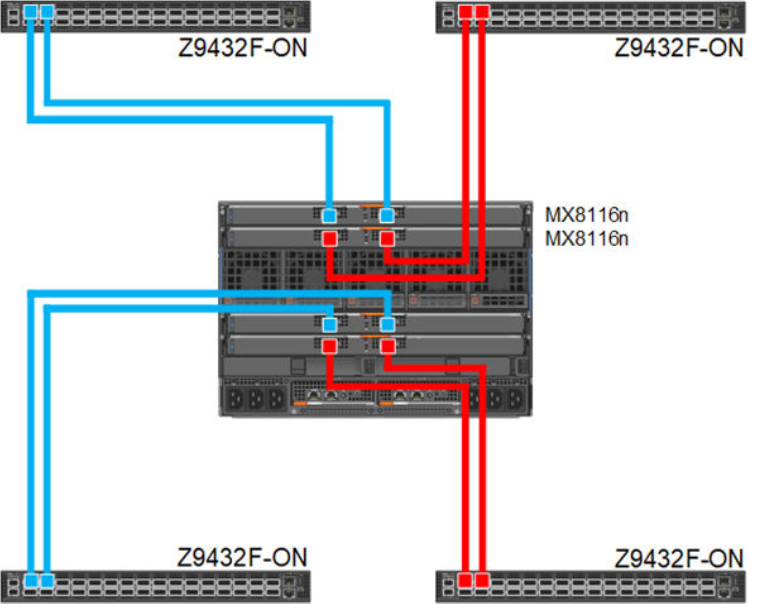

Dual fabric separate fabrics

In this option, four MX8116n (2x in Fabric A and 2x in Fabric B) can be installed and connected to two different networks. In this case, the MX760c server module has two mezzanine cards, with each card connected to a separate network.

Figure 7. 100 GbE Dual Fabric separate Fabrics

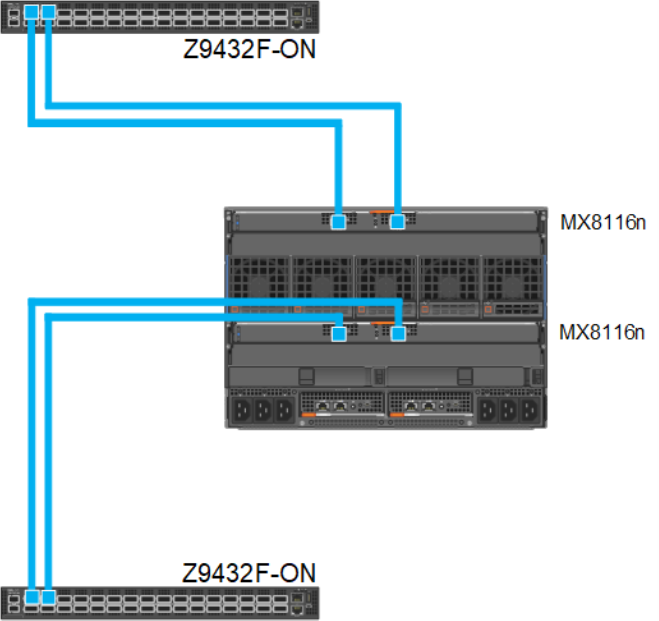

Dual fabric, single MX8116n in each fabric, separate fabrics

In this option, two MX8116n (1x in Fabric A and 1x in Fabric B) can be installed and connected to two different networks. In this case, the MX760c server module has two mezzanine cards, each connected to a separate network.

Figure 8. 100 GbE Dual Fabric single FEM in separate Fabrics
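The four deployment options described above differ in how many fabrics are used, how many MX8116n FEMs sit in each fabric, and whether the fabrics connect to separate networks. The sketch below summarizes them as data so that they can be compared side by side. The class, field names, and option labels are hypothetical. The four-FEM ceiling is this sketch's reading of the statement that the chassis supports up to four MX8116n FEMs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeploymentOption:
    name: str
    fabrics_used: int        # 1 = Fabric A or Fabric B, 2 = both
    fems_per_fabric: int     # MX8116n FEMs installed in each used fabric
    separate_networks: bool  # True if each fabric connects to its own network

    @property
    def total_fems(self):
        return self.fabrics_used * self.fems_per_fabric


# Values transcribed from the option descriptions above.
OPTIONS = [
    DeploymentOption("Single fabric", 1, 2, False),
    DeploymentOption("Dual fabric combined fabrics", 2, 2, False),
    DeploymentOption("Dual fabric separate fabrics", 2, 2, True),
    DeploymentOption("Dual fabric, single MX8116n in each fabric", 2, 1, True),
]

MAX_FEMS_PER_CHASSIS = 4  # assumed ceiling, see the lead-in

for option in OPTIONS:
    assert option.total_fems <= MAX_FEMS_PER_CHASSIS
    networks = 2 if option.separate_networks else 1
    print(f"{option.name}: {option.total_fems} FEMs, {networks} network(s)")
```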

References

Dell PowerEdge Networking Deployment Guide

A chapter about 100 GbE solution with external Fabric Switching Engine