Deep Learning on Spark is Getting Interesting

Mon, 03 Aug 2020 15:53:44 -0000

The year 2012 will be remembered as a breakout year for data analytics. Deep learning's meteoric rise to prominence can largely be attributed to the 2012 introduction of convolutional neural networks (CNNs) for image classification on the ImageNet dataset during the Large-Scale Visual Recognition Challenge (LSVRC) [1]. It was a historic event after a very long incubation period for deep learning that started with mathematical theory work in the 1940s, 50s, and 60s. The prior history of neural networks and deep learning development is fascinating and should not be forgotten, but it is not an overstatement to say that 2012 was the breakout year for deep learning.

Coincidentally, 2012 was also a breakout year for in-memory distributed computing. A group of researchers from the University of California, Berkeley AMPLab published a paper with an unusual title that changed the world of data analytics: “Resilient distributed datasets: A fault-tolerant abstraction for in-memory cluster computing” [2]. This paper describes how the initial creators developed an efficient, general-purpose, and fault-tolerant in-memory data abstraction for sharing data in cluster applications. The effort was motivated by the shortcomings of both MapReduce and other distributed-memory programming models for processing iterative algorithms and interactive data mining jobs.
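
The central idea of the paper, that a dataset remembers the coarse-grained transformations used to build it and can therefore recompute a lost partition instead of replicating it, can be sketched in a few lines of plain Python. This is a toy, single-process illustration only: the `ToyRDD` class and its `lose_partition` method are hypothetical names invented for this sketch and are not part of the Spark API.

```python
class ToyRDD:
    """Toy stand-in for an RDD (hypothetical, not the Spark API).

    Each dataset keeps its lineage: a reference to its parent and the
    transformation that derives one of its partitions from the parent's.
    """

    def __init__(self, source=None, parent=None, transform=None):
        self._source = source        # partitioned input, set only on the root
        self._parent = parent        # lineage: where the data came from
        self._transform = transform  # lineage: how a partition is derived
        self._cache = {}             # in-memory partitions that may be lost

    def num_partitions(self):
        if self._parent is None:
            return len(self._source)
        return self._parent.num_partitions()

    def map(self, fn):
        return ToyRDD(parent=self, transform=lambda part: [fn(x) for x in part])

    def filter(self, pred):
        return ToyRDD(parent=self, transform=lambda part: [x for x in part if pred(x)])

    def compute(self, index):
        """Return one partition, rebuilding it from lineage if it is not cached."""
        if index in self._cache:
            return self._cache[index]
        if self._parent is None:
            part = list(self._source[index])
        else:
            part = self._transform(self._parent.compute(index))
        self._cache[index] = part
        return part

    def lose_partition(self, index):
        """Simulate losing an in-memory partition, for example when a node fails."""
        self._cache.pop(index, None)

    def collect(self):
        out = []
        for i in range(self.num_partitions()):
            out.extend(self.compute(i))
        return out


numbers = ToyRDD(source=[[1, 2, 3], [4, 5, 6]])
evens_squared = numbers.map(lambda x: x * x).filter(lambda x: x % 2 == 0)

first = evens_squared.collect()
evens_squared.lose_partition(1)   # drop a cached partition
second = evens_squared.collect()  # rebuilt from lineage, not from a replica
```

Only the lost partition is recomputed on the second `collect()`; the other partition is still served from the in-memory cache.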

The ongoing development of so many application libraries that leverage Spark’s RDD abstraction, including GraphX for graphs and graph-parallel computation, Spark Streaming for scalable fault-tolerant streaming applications, and MLlib for scalable machine learning, is proof that Spark achieved the original goal of being a general-purpose programming environment. The rest of this article describes the development and integration of deep learning libraries, a now extremely useful class of iterative algorithms of the kind Spark was designed to address. The importance of deep learning to data analytics and artificial intelligence was just starting to emerge at the same time Spark was created, so the combination of the two developments has been interesting to watch.

MLlib – The original machine learning library for Spark

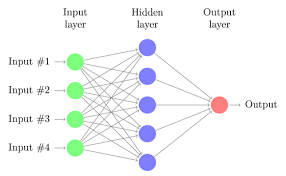

MLlib development started not long after the AMPLab code was transferred to the Apache Software Foundation in 2013. It is not really a deep learning library; however, there is an option for developing multilayer perceptron classifiers [3] based on a feedforward artificial neural network, with backpropagation used to learn the model. Fully connected neural networks were quickly abandoned after the development of more sophisticated models constructed using convolutional, recursive, and recurrent networks.
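
To make "feedforward network with backpropagation" concrete, here is a minimal sketch in plain Python of a multilayer perceptron with one hidden layer, trained by full-batch gradient descent on the XOR function. It is not MLlib code and does not use the Spark API; the function names, network size, learning rate, and step count are all assumptions chosen for illustration.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_network(n_in, n_hidden, rng):
    """Random weights for one hidden layer and one output unit.
    The last weight in each list is the bias."""
    hidden = [[rng.uniform(-1, 1) for _ in range(n_in + 1)] for _ in range(n_hidden)]
    output = [rng.uniform(-1, 1) for _ in range(n_hidden + 1)]
    return hidden, output


def forward(net, x):
    """Feedforward pass: returns hidden activations and the output."""
    hidden, output = net
    h = [sigmoid(sum(w * v for w, v in zip(ws[:-1], x)) + ws[-1]) for ws in hidden]
    y = sigmoid(sum(w * v for w, v in zip(output[:-1], h)) + output[-1])
    return h, y


def mean_squared_error(net, data):
    return sum((forward(net, x)[1] - t) ** 2 for x, t in data) / len(data)


def train_step(net, data, lr):
    """One full-batch gradient-descent step using backpropagation."""
    hidden, output = net
    grad_hidden = [[0.0] * len(ws) for ws in hidden]
    grad_output = [0.0] * len(output)
    for x, t in data:
        h, y = forward(net, x)
        # Error term at the output: derivative of (y - t)^2 through the sigmoid.
        delta_out = 2.0 * (y - t) * y * (1.0 - y)
        for j, hj in enumerate(h):
            grad_output[j] += delta_out * hj
        grad_output[-1] += delta_out
        # Propagate the error term back to each hidden unit.
        for j, hj in enumerate(h):
            delta_h = delta_out * output[j] * hj * (1.0 - hj)
            for i, xi in enumerate(x):
                grad_hidden[j][i] += delta_h * xi
            grad_hidden[j][-1] += delta_h
    n = len(data)
    # Apply the averaged gradients only after all of them are computed.
    for j, ws in enumerate(hidden):
        for i in range(len(ws)):
            ws[i] -= lr * grad_hidden[j][i] / n
    for j in range(len(output)):
        output[j] -= lr * grad_output[j] / n


XOR = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]

net = init_network(n_in=2, n_hidden=3, rng=random.Random(0))
loss_before = mean_squared_error(net, XOR)
for _ in range(2000):
    train_step(net, XOR, lr=0.5)
loss_after = mean_squared_error(net, XOR)
```

The sketch only shows the mechanics of the forward and backward passes; how far the loss falls depends on the initial weights and the settings above.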

Fully connected shallow and deep networks are making a comeback as alternatives to tree-based models for both regression and classification. There is also a lot of current interest in various forms of autoencoders, which learn latent (hidden) compressed representations of data for dimension reduction and self-supervised classification. MLlib, therefore, can best be characterized as a machine learning library with some limited neural network capability.

BigDL – Intel open sources a full-featured deep learning library for Spark

BigDL is a distributed deep learning library for Apache Spark. BigDL implements distributed, data-parallel training directly on top of the functional compute model using the core Spark features of copy-on-write and coarse-grained operations. The framework has been referenced in applications as diverse as transfer learning-based image classification, object detection and feature extraction, sequence-to-sequence prediction for precipitation nowcasting, neural collaborative filtering for recommendations, and more. Contributors and users span a wide range of organizations, including Mastercard, World Bank, Cray, Talroo, University of California San Francisco (UCSF), JD, UnionPay, Telefonica, and GigaSpaces [4].
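
The general pattern behind synchronous data-parallel training can be sketched in plain Python: every worker computes a gradient on its own data partition against the same copy of the weights, and the averaged gradient is applied once per step. This is an illustrative sketch for a two-parameter linear model, not BigDL code; it does not reproduce how BigDL synchronizes parameters on Spark, and the function names, data, and learning rate are assumptions.

```python
def local_gradient(weights, partition):
    """Gradient of mean squared error for y ~ w0 + w1 * x on one partition."""
    g0 = g1 = 0.0
    for x, y in partition:
        err = (weights[0] + weights[1] * x) - y
        g0 += 2.0 * err
        g1 += 2.0 * err * x
    n = len(partition)
    return [g0 / n, g1 / n]


def data_parallel_step(weights, partitions, lr):
    """One synchronous step: each 'worker' sees the same weights and its own
    partition; the per-partition gradients are then averaged and applied."""
    # In a cluster these gradient computations would run in parallel.
    grads = [local_gradient(weights, p) for p in partitions]
    avg = [sum(g[i] for g in grads) / len(grads) for i in range(len(weights))]
    return [w - lr * g for w, g in zip(weights, avg)]


# Toy data drawn from y = 1 + 2x, split into three equal-sized partitions.
data = [(k / 10.0, 1.0 + 2.0 * (k / 10.0)) for k in range(30)]
partitions = [data[i::3] for i in range(3)]

weights = [0.0, 0.0]
for _ in range(2000):
    weights = data_parallel_step(weights, partitions, lr=0.05)
```

Because the partitions here are the same size, averaging the per-partition gradients gives the same update as a single-machine gradient over all the data, which is why the result matches ordinary gradient descent.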

Engineers from Dell EMC and Intel recently completed a white paper demonstrating the use of deep learning development tools from the Intel Analytics Zoo [5] to build an integrated pipeline on Apache Spark, ending with a deep neural network model that predicts diseases from chest X-rays [6]. Tools and examples in the Analytics Zoo give data scientists the ability to train and deploy BigDL, TensorFlow, and Keras models on Apache Spark clusters. Application developers can also use the resources from the Analytics Zoo to deploy production-class intelligent applications through model extractions capable of being served in any Java, Scala, or other Java virtual machine (JVM) language.

The researchers conclude that modern deep learning applications can be developed and deployed at scale on an existing Hadoop and Spark cluster. This approach avoids the need to move data to a different deep learning cluster and eliminates the operational complexities of provisioning and maintaining yet another distributed computing environment. The open-source software described in the white paper is available from GitHub [7].

H2O.ai – Sparkling Water for Spark

H2O is a fast, scalable, open-source machine learning and deep learning platform for smarter applications. Much like MLlib, the H2O algorithms cover a wide range of useful machine learning techniques but only fully connected MLPs for deep learning. With H2O, enterprises like PayPal, Nielsen Catalina, Cisco, and others can use all their data without sampling to get accurate predictions faster [8]. Dell EMC, Intel, and H2O.ai recently developed a joint reference architecture that outlines both technical considerations and sizing guidance for an on-premises enterprise AI platform [9].

The engineers show how running H2O.ai software on optimized Dell EMC infrastructure with the latest Intel® Xeon® Scalable processors and NVMe storage enables organizations to use AI to improve customer experiences, streamline business processes, and decrease waste and fraud. Validated software included the H2O Driverless AI enterprise platform and the H2O and H2O Sparkling Water open-source software platforms. Sparkling Water is designed to be executed as a regular Spark application. It provides a way to initialize H2O services on Spark and access data stored in both Spark and H2O data structures. H2O Sparkling Water algorithms are designed to take advantage of the distributed in-memory computing of existing Spark clusters. Results from H2O can easily be deployed using H2O low-latency pipelines or within Spark for scoring.

H2O Sparkling Water cluster performance was evaluated on three- and five-node clusters. In this mode, H2O launches through Spark workers, and Spark manages the job scheduling and communications between the nodes. Three and five Dell EMC PowerEdge R740xd Servers with Intel Xeon Gold 6248 processors were used to train XGBoost and GBM models using the mortgage data set derived from the Fannie Mae Single-Family Loan Performance data set.

Spark and GPUs

Many data scientists familiar with Spark for machine learning have been waiting for official support for GPUs. The advantages of modern neural network models like the CNN entry in the 2012 LSVRC would not have been fully realized without the work of NVIDIA and others on new acceleration hardware. NVIDIA’s GPU technology, such as the Volta V100, has morphed into a class of advanced, enterprise-class ML/DL accelerators that reduce training time for all types of neural network configurations, including CNNs, RNNs (recurrent neural networks), and GANs (generative adversarial networks), to mention just a few of the most popular forms. Deep learning researchers see many advantages to building end-to-end model training “pipelines” that take advantage of the generalized distributed computing capability of Spark for everything from data cleaning and shaping through to scale-out training using integration with GPUs.

NVIDIA recently announced that it has been working with the Apache Spark open source community to bring native GPU acceleration to the next version of the big data processing framework, Spark 3.0 [10]. The Apache Spark community is distributing a preview release of Spark 3.0 to encourage wide-scale community testing of the upcoming release. The preview is not a stable release of the expected API specification or functionality. No firm date for the general availability of Spark 3.0 has been released, but organizations exploring options for distributed deep learning with GPUs should start evaluating the proposed features and advantages of Spark 3.0.
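
As a rough sketch of what evaluating GPU-aware scheduling might involve, the Python snippet below collects resource properties of the kind described in the Spark 3.0 resource-scheduling documentation and estimates how many tasks one executor could run at once. The property names are taken from that documentation and should be checked against the release being evaluated; the values, the discovery script path, and both helper functions are assumptions made for this example.

```python
# Property names follow the Spark 3.0 resource-scheduling documentation.
# All values and the script path are illustrative assumptions.
gpu_conf = {
    "spark.executor.cores": "8",
    "spark.task.cpus": "1",
    "spark.executor.resource.gpu.amount": "2",
    "spark.task.resource.gpu.amount": "1",
    "spark.executor.resource.gpu.discoveryScript": "/path/to/getGpusResources.sh",
}


def concurrent_tasks_per_executor(conf):
    """A task needs both its CPU share and its GPU share, so the scarcer
    resource limits how many tasks an executor can run at the same time."""
    cpu_slots = int(conf["spark.executor.cores"]) // int(conf["spark.task.cpus"])
    gpu_slots = (int(conf["spark.executor.resource.gpu.amount"])
                 // int(conf["spark.task.resource.gpu.amount"]))
    return min(cpu_slots, gpu_slots)


def to_submit_args(conf):
    """Render the properties as repeated '--conf key=value' arguments."""
    args = []
    for key in sorted(conf):
        args += ["--conf", f"{key}={conf[key]}"]
    return args


slots = concurrent_tasks_per_executor(gpu_conf)
submit_args = to_submit_args(gpu_conf)
```

With these example values the GPUs, not the CPU cores, are the limiting resource, so six of the eight cores would sit idle during GPU stages; sizing executors so the two limits are closer is one of the things worth testing in an evaluation.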

Cloudera is also giving developers and data scientists an opportunity to test and evaluate the preview release of Spark 3.0. The current GA version of the Cloudera Runtime includes the Apache Spark 3.0 preview 2 as part of its CDS 3 (Experimental) Powered by Apache Spark release [11]. Full Spark 3.0 preview 2 documentation, including many code samples, is available from the Apache Spark website [12].

What’s next

It’s been eight years since the breakout events for deep learning and distributed computing with Spark. We have seen tremendous adoption of both deep learning and Spark for all types of analytics use cases, from medical imaging to language processing to manufacturing control and beyond. We are just now poised to see new breakthroughs in the merging of Spark and deep learning, especially with the addition of support for hardware accelerators. IT professionals and data scientists are still too heavily burdened with the hidden technical debt of managing machine learning systems [13]. The integration of accelerated deep learning with the power of the Spark generalized distributed computing platform will give both the IT and data science communities a capable and manageable environment to develop and host end-to-end data analysis pipelines in a common framework.

References

[1] Alom, M. Z., Taha, T. M., Yakopcic, C., Westberg, S., Sidike, P., Nasrin, M. S., ... & Asari, V. K. (2018). The history began from AlexNet: A comprehensive survey on deep learning approaches. arXiv preprint arXiv:1803.01164.

[2] Zaharia, M., Chowdhury, M., Das, T., Dave, A., Ma, J., McCauley, M., ... & Stoica, I. (2012). Resilient distributed datasets: A fault-tolerant abstraction for in-memory cluster computing. In 9th USENIX Symposium on Networked Systems Design and Implementation (NSDI 12) (pp. 15-28).

[3] Apache Spark (June 2020) Multilayer perceptron classifier https://spark.apache.org/docs/latest/ml-classification-regression.html#multilayer-perceptron-classifier

[4] Dai, J. J., Wang, Y., Qiu, X., Ding, D., Zhang, Y., Wang, Y., ... & Wang, J. (2019, November). BigDL: A distributed deep learning framework for big data. In Proceedings of the ACM Symposium on Cloud Computing (pp. 50-60).

[5] Intel Analytics Zoo (June 2020) https://software.intel.com/content/www/us/en/develop/topics/ai/analytics-zoo.html

[6] Chandrasekaran, Bala (Dell EMC); Yang, Yuhao (Intel); Govindan, Sajan (Intel); Abd, Mehmood (Dell EMC) (2019). Deep Learning on Apache Spark and Analytics Zoo.

[7] Dell AI Engineering (June 2020) BigDL Image Processing Examples https://github.com/dell-ai-engineering/BigDL-ImageProcessing-Examples

[8] Candel, A., Parmar, V., LeDell, E., and Arora, A. (Apr 2020). Deep Learning with H2O https://www.h2o.ai/wp-content/themes/h2o2016/images/resources/DeepLearningBooklet.pdf

[9] Reference Architectures for H2O.ai (February 2020) https://www.dellemc.com/resources/en-us/asset/white-papers/products/ready-solutions/dell-h20-architectures-pdf.pdf Dell Technologies

[10] Woodie, Alex (May 2020) Spark 3.0 to Get Native GPU Acceleration https://www.datanami.com/2020/05/14/spark-3-0-to-get-native-gpu-acceleration/ datanami

[11] CDS 3 (Experimental) Powered by Apache Spark Overview (June 2020) https://docs.cloudera.com/runtime/7.0.3/cds-3/topics/spark-spark-3-overview.html

[12] Spark Overview (June 2020) https://spark.apache.org/docs/3.0.0-preview2/

[13] Sculley, D., Holt, G., Golovin, D., Davydov, E., Phillips, T., Ebner, D., ... & Dennison, D. (2015). Hidden technical debt in machine learning systems. In Advances in neural information processing systems (pp. 2503-2511).

Related Blog Posts

Omnia: Open-source deployment of high-performance clusters to run simulation, AI, and data analytics workloads

Mon, 12 Dec 2022 18:31:28 -0000

High Performance Computing (HPC), in which clusters of machines work together as one supercomputer, is changing the way we live and how we work. These clusters of CPU, memory, accelerators, and other resources help us forecast the weather and understand climate change, understand diseases, design new drugs and therapies, develop safe cars and planes, improve solar panels, and even simulate life and the evolution of the universe itself. The cluster architecture model that makes this compute-intensive research possible is also well suited for high performance data analytics (HPDA) and developing machine learning models. With the Big Data era in full swing and the Artificial Intelligence (AI) gold rush underway, we have seen marketing teams with their own Hadoop clusters attempting to transition to HPDA and finance teams managing their own GPU farms. Everyone has the same goals: to gain new, better insights faster by using HPDA and by developing advanced machine learning models using techniques such as deep learning and reinforcement learning. Today, everyone has a use for their own high-performance computing cluster. It’s the age of the clusters!

Today's AI-driven IT Headache: Siloed Clusters and Cluster Sprawl

Unfortunately, cluster sprawl has taken over our data centers and consumes inordinate amounts of IT resources. Large research organizations and businesses have a cluster for this and a cluster for that. Perhaps each group has a little “sandbox” cluster, or each type of workload has a different cluster. Many of these clusters look remarkably similar, but they each need a dedicated system administrator (or set of administrators), have different authorization credentials, different operating models, and sit in different racks in your data center. What if there was a way to bring them all together?

That’s why Dell Technologies, in partnership with Intel, started the Omnia project.

The Omnia Project

The Omnia project is an open-source initiative with a simple aim: to make consolidated infrastructure easy and painless to deploy using open source and free-to-use software. By bringing the best open source software tools together with the domain expertise of Dell Technologies' HPC & AI Innovation Lab, HPC & AI Centers of Excellence, and the broader HPC Community, Omnia gives customers decades of accumulated expertise in deploying state-of-the-art systems for HPC, AI, and Data Analytics – all in a set of easily executable Ansible playbooks. In a single day, a stack of servers, networking switches, and storage arrays can be transformed into one consolidated cluster for running all your HPC, AI, and Data Analytics workloads.

Omnia project logo

Simple by Design

Omnia’s design philosophy is simplicity. We look for the best, most straightforward approach to solving each task.

- Need to run the Slurm workload manager? Omnia assembles Ansible plays which build the right rpm files and deploy them correctly, making sure all the correct dependencies are installed and functional.

- Need to run the Kubernetes container orchestrator? Omnia takes advantage of community-supported package repositories for Linux (currently CentOS) and automates all the steps for creating a functional multi-node Kubernetes cluster.

- Need a multi-user, interactive Python/R/Julia development environment? Omnia takes advantage of best-of-breed deployments for Kubernetes through Helm and OperatorHub, provides configuration files for dynamic and persistent storage, points to optimized containers in DockerHub, NVIDIA GPU Cloud (NGC), or other container registries for unaccelerated and accelerated workloads, and automatically deploys machine learning platforms like Kubeflow.

Before we go through the process of building something from scratch, we will make sure there isn’t already a community actively maintaining that toolset. We’d rather leverage others' great work than reinvent the wheel.

Inclusive by Nature

Omnia’s contribution philosophy is inclusivity. From code and documentation updates to feature requests and bug reports, every user’s contributions are welcomed with open arms. We provide an open forum for conversations about feature ideas and potential implementation solutions, making use of issue threads on GitHub. And as the project grows and expands, we expect the technical governance committee to grow to include the top contributors and stakeholders from the community.

What's Next?

Omnia is just getting started. Right now, we can easily deploy Slurm and Kubernetes clusters from a stack of pre-provisioned, pre-networked servers, but our aim is higher than that. We are currently adding capabilities for performing bare-metal provisioning and supporting new and varying types of accelerators. In the future, we want to collect information from the iDRAC out-of-band management system on Dell EMC PowerEdge servers, configure Dell EMC PowerSwitch Ethernet switches, and much more.

What does the future hold? While we have near-term plans for additional feature integrations, we are looking to partner with the community to define and develop future integrations. Omnia will grow and develop based on community feedback and your contributions. In the end, the Omnia project will not only install and configure the open source software we at Dell Technologies think is important, but the software you – the community – want it to, as well! We can’t think of a better way for our customers to easily set up clusters for HPC, AI, and HPDA workloads, all while leveraging the expertise of the entire Dell Technologies' HPC Community.

Omnia is available today on GitHub at https://github.com/dellhpc/omnia. Join the community now and help guide the design and development of the next generation of open-source consolidated cluster deployment tools!

Optimizing AI: Meeting Unstructured Storage Demands Efficiently

Thu, 21 Mar 2024 14:46:23 -0000

The surge in artificial intelligence (AI) and machine learning (ML) technologies has sparked a revolution across industries, pushing the boundaries of what's possible. However, this innovation comes with its own set of challenges, particularly when it comes to storage. The heart of AI's potential lies in its ability to process and learn from vast amounts of data, most of which is unstructured. This has placed unprecedented demands on storage solutions, becoming a critical bottleneck for advancing AI technologies.

Navigating the complex landscape of unstructured data storage is no small feat. Traditional storage systems struggle to keep up with the scale and flexibility required by AI workloads. Enterprises find themselves at a crossroads, seeking solutions that can provide scalable, affordable, and fault-tolerant storage. The quest for such a platform is not just about meeting current needs but also paving the way for the future of AI-driven innovation.

The current state of ML and AI

The evolution of ML and AI technologies has reshaped industries far and wide, setting new expectations for data processing and analysis capabilities. These advancements are directly tied to an organization's capacity to handle vast volumes of unstructured data, a domain where traditional storage solutions are being outpaced.

ML and AI applications demand unprecedented levels of data ingestion and computational power, necessitating scalable and flexible storage solutions. Traditional storage systems—while useful for conventional data storage needs—grapple with scalability issues, particularly when faced with the immense file quantities AI and ML workloads generate.

Although traditional object storage methods are capable of managing data as objects within a pool, they fall short of the agility and accessibility requirements essential for AI and ML processes. These storage models struggle both to scale and to provide the rapid data access and processing that deep learning and AI algorithms depend on.

The need for a new kind of storage solution is evident, as the current infrastructure is unable to cope with the silos of unstructured data. These silos make it challenging to access, process, and unify data sources, which in turn cripples the effectiveness of AI and ML projects. Furthermore, the maximum capacity of traditional storage, which tops out at tens of terabytes, is insufficient for AI-driven initiatives that often require petabytes of data to train sophisticated models.

As ML and AI continue to advance, the quest for a storage solution that can support the growing demands of these technologies remains pivotal. The industry is in dire need of systems that provide ample storage and ensure the flexibility, reliability, and performance efficiency necessary to propel AI and ML into their next phase of innovation.

Understanding unstructured storage demands for AI

The advent of AI and ML has brought unprecedented advancements across industries, enhancing efficiency, accuracy, and the ability to manage and process large datasets. However, the core of these technologies relies on the capability to store, access, and analyze unstructured data efficiently. Understanding the storage demands essential for AI applications is crucial for businesses looking to harness the full power of AI technology.

High throughput and low latency

For AI and ML applications, time is of the essence. The ability to process data at high throughput and to access it with low latency is a non-negotiable requirement. These applications often involve complex computations performed on vast datasets, necessitating quick access to data to maintain a seamless process. For instance, in real-time AI applications such as voice recognition or instant fraud detection, any delay in data processing can critically impact performance and accuracy. Therefore, storage solutions must be designed to accommodate these needs, delivering data as swiftly as possible to the application layer.

Scalability and flexibility

As AI models evolve and the volume of data increases, the need for scalability in storage solutions becomes paramount. The storage architecture must accommodate growth without compromising on performance or efficiency. This is where the flexibility of the storage solutions comes into play. An ideal storage system for AI would scale in capacity and performance, adapting to the changing demands of AI applications over time. Combining the best of on-premises and cloud storage, hybrid storage solutions offer a viable path to achieving this scalability and flexibility. They enable businesses to leverage the high performance of on-premises solutions and the scalability and cost-efficiency of cloud storage, ensuring the storage infrastructure can grow with the AI application needs.

Data durability and availability

Ensuring the durability and availability of data is critical for AI systems. Data is the backbone of any AI application, and its loss or unavailability can lead to significant setbacks in development and performance. Storage solutions must, therefore, provide robust data protection mechanisms and redundancies to safeguard against data loss. Additionally, high availability is essential to ensure that data is always accessible when needed, particularly for AI applications that require continuous operation. Implementing a storage system with built-in redundancy, failover capabilities, and disaster recovery plans is essential to maintain continuous data availability and integrity.

In the context of AI where data is continually ingested, processed, and analyzed, the demands on storage solutions are unique and challenging. Key considerations include maintaining high throughput and low latency for real-time processing, establishing scalability and flexibility to adapt to growing data volumes, and ensuring data durability and availability to support continuous operation. Addressing these demands is critical for businesses aiming to leverage AI technologies effectively, paving the way for innovation and success in the digital era.

What needs to be stored for AI?

The evolution of AI and its underlying models depends significantly on various types of data and artifacts generated and used throughout its lifecycle. Understanding what needs to be stored is crucial for ensuring the efficiency and effectiveness of AI applications.

Raw data

Raw data forms the foundation of AI training. It's the unmodified, unprocessed information gathered from diverse sources. For AI models, this data can be in the form of text, images, audio, video, or sensor readings. Storing vast amounts of raw data is essential as it provides the primary material for model training and the initial step toward generating actionable insights.

Preprocessed data

Once raw data is collected, it undergoes preprocessing to transform it into a more suitable format for training AI models. This process includes cleaning, normalization, and transformation. As a refined version of raw data, preprocessed data needs to be stored efficiently to streamline further processing steps, saving time and computational resources.
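
As a small illustration of the cleaning and normalization steps described above, the following Python sketch drops missing values from a list of raw readings and rescales the rest to the range 0 to 1. The function names and the sample readings are assumptions made for this example; real pipelines typically apply many more steps.

```python
def clean(values):
    """Cleaning: drop missing readings (None) before scaling."""
    return [v for v in values if v is not None]


def min_max_normalize(values):
    """Normalization: rescale values linearly so the smallest maps to 0.0
    and the largest maps to 1.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values are identical, so there is no range to scale by.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical raw sensor readings with two missing values.
raw = [12.0, None, 18.0, 15.0, None, 24.0]
preprocessed = min_max_normalize(clean(raw))
```

Storing `preprocessed` alongside `raw` means later training runs can skip these steps, at the cost of keeping two copies of the data.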

Training datasets

Training datasets are a selection of preprocessed data used to teach AI models how to make predictions or perform tasks. These datasets must be diverse and comprehensive, representing real-world scenarios accurately. Storing these datasets allows AI models to learn and adapt to the complexities of the tasks they are designed to perform.

Validation and test datasets

Validation and test datasets are critical for evaluating an AI model's performance. These datasets are separate from the training data and are used to tune the model's parameters and test its generalizability to new, unseen data. Proper storage of these datasets ensures that models are both accurate and reliable.
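
One common way to keep the three datasets separate is to shuffle the preprocessed records once with a fixed seed and cut the result into non-overlapping slices. The sketch below shows this in plain Python; the 70/15/15 proportions, the seed, and the function name are assumptions for illustration rather than recommendations.

```python
import random


def split_dataset(records, train_frac=0.7, val_frac=0.15, seed=42):
    """Shuffle once with a fixed seed, then cut into three disjoint slices.
    Whatever is left after the train and validation slices becomes the test set."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Record identifiers stand in for real preprocessed examples.
train, val, test = split_dataset(range(100))
```

Storing the seed (or the resulting lists of record identifiers) with the datasets makes the split repeatable, so a later experiment is evaluated on exactly the same held-out records.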

Model parameters and weights

An AI model learns to make decisions through its parameters and weights. These elements are fine-tuned during training and crucial for the model's decision-making processes. Storing these parameters and weights allows models to be reused, updated, or refined without retraining from scratch.

Model architecture

The architecture of an AI model defines its structure, including the arrangement of layers and the connections between them. Storing the model architecture is essential for understanding how the model processes data and for replicating or scaling the model in future projects.

Hyperparameters

Hyperparameters are the configuration settings used to optimize model performance. Unlike parameters, hyperparameters are not learned from the data but set prior to the training process. Storing hyperparameter values is necessary for model replication and comparison of model performance across different configurations.
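
A lightweight way to store hyperparameters for replication and comparison is to write them, together with the resulting metrics, as one JSON record per training run. The sketch below is a minimal illustration in Python; the record layout, the `run_record` function, and the hyperparameter and metric values are all made up for this example.

```python
import hashlib
import json


def run_record(hyperparameters, metrics):
    """Bundle one training run's settings and results into a storable record.
    The run_id is derived from the hyperparameters alone, so two runs with the
    same settings get the same id regardless of key order."""
    canonical = json.dumps(hyperparameters, sort_keys=True)
    run_id = hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]
    return {"run_id": run_id, "hyperparameters": hyperparameters, "metrics": metrics}


# Illustrative values only, not results from a real experiment.
record_a = run_record({"learning_rate": 0.01, "batch_size": 64, "epochs": 20},
                      {"val_accuracy": 0.91})
record_b = run_record({"epochs": 20, "batch_size": 64, "learning_rate": 0.01},
                      {"val_accuracy": 0.91})

# Text form that could be written to a file or object store.
serialized = json.dumps(record_a, sort_keys=True, indent=2)
```

Keying records by a hash of the settings makes it easy to spot repeated configurations and to compare metrics across different ones.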

Feature engineering artifacts

Feature engineering involves creating new input features from the existing data to improve model performance. The artifacts from this process, including the newly created features and the logic used to generate them, need to be stored. This ensures consistency and reproducibility in model training and deployment.

Results and metrics

The results and metrics obtained from model training, validation, and testing provide insights into model performance and effectiveness. Storing these results allows for continuous monitoring, comparison, and improvement of AI models over time.

Inference data

Inference data refers to new, unseen data that the model processes to make predictions or decisions after training. Storing inference data is key for analyzing the model's real-world application and performance and making necessary adjustments based on feedback.

Embeddings

Embeddings are dense representations of high-dimensional data in lower-dimensional spaces. They play a crucial role in processing textual data, images, and more. Storing embeddings allows for more efficient computation and retrieval of similar items, enhancing model performance in recommendation systems and natural language processing tasks.
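
Retrieval of similar items from stored embeddings usually comes down to comparing vectors, for example with cosine similarity. The Python sketch below ranks a tiny in-memory store against a query vector; the three-dimensional vectors, item names, and function names are invented for illustration, and real embeddings typically have hundreds of dimensions and are searched with specialized indexes.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(query, store, k=2):
    """Return the names of the k stored embeddings closest to the query."""
    ranked = sorted(store, key=lambda name: cosine_similarity(query, store[name]),
                    reverse=True)
    return ranked[:k]


# Hypothetical stored embeddings, keyed by item name.
embedding_store = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.8, 0.2, 0.1],
    "truck": [0.0, 0.1, 0.9],
}

nearest = most_similar([0.85, 0.15, 0.05], embedding_store, k=2)
```

Because the embeddings are stored, answering a new query requires only one embedding computation for the query itself followed by cheap vector comparisons.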

Code and scripts

The code and scripts used to create, train, and deploy AI models are essential for understanding and replicating the entire AI process. Storing this information ensures that models can be retrained, refined, or debugged as necessary.

Documentation and metadata

Documentation and metadata provide context, guidelines, and specifics about the AI model, including its purpose, design decisions, and operating conditions. Proper storage of this information supports ethical AI practices, model interpretability, and compliance with regulatory standards.

Challenges of unstructured data in AI

In the realm of AI, handling unstructured data presents a unique set of challenges that must be navigated carefully to harness its full potential. As AI systems strive to mimic human understanding, they face the intricate task of processing and deriving meaningful insights from data that lacks a predefined format. This section delves into the core challenges associated with unstructured data in AI, primarily focusing on data variety, volume, and velocity.

Data variety

Data variety refers to the myriad types of unstructured data that AI systems are expected to process, ranging from texts and emails to images, videos, and audio files. Each data type possesses its unique characteristics and demands specific preprocessing techniques to be effectively analyzed by AI models.

- Richer Insights but Complicated Processing: While the diverse data types can provide richer insights and enhance model accuracy, they significantly complicate the data preprocessing phase. AI tools must be equipped with sophisticated algorithms to identify, interpret, and normalize various data formats.

- Innovative AI Applications: The advantage of mastering data variety lies in the development of innovative AI applications. By handling unstructured data from different domains, AI can contribute to advancements in natural language processing, computer vision, and beyond.

Data volume

The sheer volume of unstructured data generated daily is staggering. As digital interactions increase, so does the amount of data that AI systems need to analyze.

- Scalability Challenges: The exponential growth in data volume poses scalability challenges for AI systems. Storage solutions must not only accommodate current data needs but also be flexible enough to scale with future demands.

- Efficient Data Processing: AI must leverage parallel processing and cloud storage options to keep up with the volume. Systems designed for high-throughput data analysis enable quicker insights, which are essential for timely decision-making and maintaining relevance in a rapidly evolving digital landscape.

Data velocity

Data velocity refers to the speed at which new data is generated and the pace at which it needs to be processed to remain actionable. In the age of real-time analytics and instant customer feedback, high data velocity is both an opportunity and a challenge for AI.

- Real-Time Processing Needs: AI systems are increasingly required to process information in real-time or near-real-time to provide timely insights. This necessitates robust computational infrastructure and efficient data streaming technologies.

- Constant Adaptation: The dynamic nature of unstructured data, coupled with its high velocity, demands that AI systems constantly adapt and learn from new information. Maintaining accuracy and relevance in fast-moving data environments is critical for effective AI performance.

In addressing these challenges, AI and ML technologies are continually evolving, developing more sophisticated systems capable of handling the complexity of unstructured data. The key to unlocking the value hidden within this data lies in innovative approaches to data management where flexibility, scalability, and speed are paramount.

Strategies to manage unstructured data in AI

The explosion of unstructured data poses unique challenges for AI applications. Organizations must adopt effective data management strategies to harness the full potential of AI technologies. This section covers key strategies, such as data classification and tagging and the use of PowerScale clusters, for managing unstructured data in AI efficiently.

Data classification and tagging

Data classification and tagging are foundational steps in organizing unstructured data and making it more accessible for AI applications. This process involves identifying the content and context of data and assigning relevant tags or labels, which is crucial for enhancing data discoverability and usability in AI systems.

- Automated tagging tools can significantly reduce the manual effort required to label data, employing AI algorithms to understand the content and context automatically.

- Custom metadata tags allow for the creation of a rich set of file classification information. This not only aids in the classification phase but also simplifies later iterations and workflow automation.

- Effective data classification enhances data security by accurately categorizing sensitive or regulated information, enabling compliance with data protection regulations.
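As a rough illustration of the tagging points above, the following sketch derives a small set of metadata tags from a file path. It is a simple rule-based stand-in, not the AI-driven tagging the text describes and not a PowerScale or OneFS API. The extension map, the keyword list, and the `classify` function are hypothetical.

```python
from pathlib import PurePath

# Hypothetical mapping from file extension to a coarse content type.
CONTENT_TYPES = {
    ".txt": "text",
    ".pdf": "document",
    ".jpg": "image",
    ".png": "image",
    ".mp4": "video",
    ".wav": "audio",
}

# Hypothetical keywords that flag a file as sensitive or regulated.
SENSITIVE_KEYWORDS = ("invoice", "patient", "payroll")


def classify(path):
    """Return a dict of metadata tags derived from the file name alone."""
    p = PurePath(path)
    name = p.name.lower()
    return {
        "content_type": CONTENT_TYPES.get(p.suffix.lower(), "unknown"),
        "sensitive": any(keyword in name for keyword in SENSITIVE_KEYWORDS),
    }


print(classify("/data/scans/Patient_0042.PNG"))
```

In practice the resulting tags would be written to whatever metadata store the organization uses, so that later workflow steps can filter on them instead of re-inspecting each file.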

Implementing these strategies for managing unstructured data prepares organizations for the challenges of today's data landscape and positions them to capitalize on the opportunities presented by AI technologies. By prioritizing data classification and leveraging solutions like PowerScale clusters, businesses can build a strong foundation for AI-driven innovation.

Best practices for implementing AI storage solutions

Implementing the right AI storage solutions is crucial for businesses seeking to harness the power of artificial intelligence. With the explosive growth of unstructured data, adhering to best practices that optimize performance, scalability, and cost is imperative. This section delves into key practices to ensure your AI storage infrastructure meets the demands of modern AI workloads.

Assess workload requirements

Before choosing a storage solution, thoroughly assess your AI workload requirements. Understanding the specific needs of your AI applications (such as data volume, the need for high throughput or low latency, and scalability and availability requirements) is fundamental. This step ensures that you select the storage solution best suited to your application's needs.

AI workloads are diverse, with each having unique demands on storage infrastructure. For instance, training a machine learning model could require rapid access to vast amounts of data, whereas inference workloads may prioritize low latency. An accurate assessment leads to an optimized infrastructure, ensuring that storage solutions are neither overprovisioned nor underperforming, thereby supporting AI applications efficiently and cost-effectively.
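One way to turn such an assessment into a number is a back-of-the-envelope throughput estimate. The sketch below assumes a training job that reads the whole data set once per epoch. The function name and the example figures are illustrative assumptions, not measurements from any system.

```python
def required_read_throughput_gb_per_s(dataset_tb, epoch_minutes):
    """Sustained read rate (GB/s) needed to stream the data set once per epoch."""
    if epoch_minutes <= 0:
        raise ValueError("epoch_minutes must be positive")
    dataset_gb = dataset_tb * 1000  # decimal units: 1 TB = 1000 GB
    return dataset_gb / (epoch_minutes * 60)


# Hypothetical example: a 12 TB data set and a 20-minute epoch target.
print(required_read_throughput_gb_per_s(12, 20))  # 10.0
```

An estimate like this ignores caching, data augmentation, and concurrent jobs, so it is best treated as a starting point for sizing rather than a guarantee.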

Leverage PowerScale

For managing large volumes and varieties of unstructured data, PowerScale nodes offer a scalable and efficient solution. They are designed to handle the complexities of AI and machine learning workloads, with optimized performance, scalability, and data mobility. Combined into clusters, these nodes allow organizations to store and process vast amounts of data efficiently for a range of AI use cases, for the following reasons:

- Scalability is a key feature, with PowerScale clusters capable of growing with the organization's data needs. They support massive capacities, allowing businesses to store petabytes of data seamlessly.

- Performance is optimized for the demanding workloads of AI applications with the ability to process large volumes of data at high speeds, reducing the time for data analyses and model training.

- Data mobility within PowerScale clusters on-premises and in the cloud ensures that data can be accessed when and where needed, supporting various AI and machine learning use cases across different environments.

PowerScale clusters allow businesses to start small and grow capacity as needed, ensuring that storage infrastructure can scale alongside AI initiatives without compromising on performance. The ability to handle multiple data types and protocols within a single storage infrastructure simplifies management and reduces operational costs, making PowerScale nodes an ideal choice for dynamic AI environments.

Utilize PowerScale OneFS 9.7.0.0

PowerScale OneFS 9.7.0.0 is the latest version of the Dell PowerScale operating system for scale-out network-attached storage (NAS). OneFS 9.7.0.0 introduces several enhancements in data security, performance, cloud integration, and usability.

OneFS 9.7.0.0 extends and simplifies the PowerScale offering in the public cloud, providing more features across various instance types and regions. Some of the key features in OneFS 9.7.0.0 include:

- Cloud Innovations: Extends cloud capabilities and features, building upon the debut of APEX File Storage for AWS

- Performance Enhancements: Enhancements to overall system performance

- Security Enhancements: Enhancements to data security features

- Usability Improvements: Enhancements to make managing and using PowerScale easier

Employ PowerScale F210 and F710

PowerScale, through its continuous innovation, extends into the AI era by introducing the next generation of PowerEdge-based nodes: the PowerScale F210 and F710. These new all-flash nodes are built on the Dell PowerEdge R660, unlocking enhanced performance capabilities.

On the software front, both the F210 and F710 nodes benefit from significant performance improvements in PowerScale OneFS 9.7. By combining these hardware and software advancements, the nodes address the most demanding workloads and are well-suited for a wide range of others. For more information on the F210 and F710, see PowerScale All-Flash F210 and F710 | Dell Technologies Info Hub.

Ensure data security and compliance

Given the sensitivity of the data used in AI applications, robust security measures are paramount. Businesses must implement comprehensive security strategies that include encryption, access controls, and adherence to data protection regulations. Safeguarding data protects sensitive information and reinforces customer trust and corporate reputation.

Compliance with data protection laws and regulations is critical to AI storage solutions. As regulations can vary significantly across regions and industries, understanding and adhering to these requirements is essential to avoid significant fines and legal challenges. By prioritizing data security and compliance, organizations can mitigate risks associated with data breaches and non-compliance.

Monitor and optimize

Continuous monitoring and optimization of the storage environment are essential for maintaining high performance and efficiency. Monitoring tools can provide insights into usage patterns, performance bottlenecks, and potential security threats, enabling proactive management of the storage infrastructure.

Regular optimization efforts can help fine-tune storage performance, ensuring that the infrastructure remains aligned with the evolving needs of AI applications. Optimization might involve adjusting storage policies, reallocating resources, or upgrading hardware to improve efficiency, reduce costs, and ensure that storage solutions continue to effectively meet the demands of AI workloads.
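As a small illustration of proactive monitoring, the sketch below classifies storage utilization against warning and critical thresholds. The function name and the 80% and 90% thresholds are assumptions chosen for the example. Real deployments would rely on the monitoring tools provided with the storage platform.

```python
def capacity_status(used_bytes, total_bytes, warn_at=0.80, critical_at=0.90):
    """Return (level, utilization) for a storage pool.

    level is "ok", "warning", or "critical" based on the fraction in use.
    """
    if total_bytes <= 0:
        raise ValueError("total_bytes must be positive")
    utilization = used_bytes / total_bytes
    if utilization >= critical_at:
        level = "critical"
    elif utilization >= warn_at:
        level = "warning"
    else:
        level = "ok"
    return level, utilization


# Hypothetical pool: 850 TB used out of 1000 TB.
level, utilization = capacity_status(850, 1000)
print(f"{level}: {utilization:.0%} used")
```

For a locally mounted file system, the inputs could come from Python's standard `shutil.disk_usage(path)`, which reports total, used, and free bytes.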

By following these best practices, businesses can build and maintain a storage infrastructure that supports their current AI applications and is poised for future growth and innovation.

Conclusion

Navigating the storage demands of unstructured data for AI is no small feat. Yet, by adhering to the outlined best practices, businesses stand to benefit greatly. The foundational steps include assessing workload requirements, selecting the right storage solutions, and implementing robust security measures. Furthermore, integrating PowerScale nodes and committing to continuous monitoring and optimization are key to sustaining high performance and efficiency. As the landscape of AI continues to evolve, these practices will not only support current applications but also pave the way for future growth and innovation. In the dynamic world of AI, staying ahead means being prepared, and these strategies offer a roadmap to success.

Frequently asked questions

How big are AI data centers?

Data centers catering to AI, such as those by Amazon and Google, are immense, comparable to the scale of football stadiums.

How does AI process unstructured data?

AI processes unstructured data including images, documents, audio, video, and text by extracting and organizing information. This transformation turns unstructured data into actionable insights, propelling business process automation and supporting AI applications.

How much storage does an AI need?

AI applications, especially those working with extensive data sets, may require significant system memory, potentially 1 TB or more. That much memory allows entire data sets to be processed and statistically analyzed efficiently.

Can AI handle unstructured data?

Yes, AI is capable of managing both structured and unstructured data types from a variety of sources. This flexibility allows AI to analyze and draw insights from an expansive range of data, further enhancing its utility across diverse applications.

Author: Aqib Kazi, Senior Principal Engineer, Technical Marketing