Database security methodologies of SQL Server

Mon, 03 Aug 2020 16:06:37 -0000

In general, security touches every aspect and activity of an information system. The subject is vast, and security can never be perfect. Every organization has a unique way of dealing with security based on its requirements. In this blog, I describe database security models and briefly review SQL Server security principles.

A few definitions:

- Database: A collection of information stored in a computer

- Security: Freedom from danger

- Database security: The mechanism that protects the database against intentional or accidental threats or that protects it against malicious attempts to steal (view) or modify data

Database security models

Today’s organizations rely on database systems as the key data management technology for a large variety of tasks, ranging from regular business operations to critical decision making. The information in the databases is used, shared, and accessed by various users. This information must be protected and managed because any change to a database can affect that database or other databases.

The main role of a security system is to preserve the integrity of an operational system by enforcing a security policy that is defined by a security model. These security models are the basic theoretical tools to start with when developing a security system.

Database security models include the following elements:

- Subject: Individual who performs some activity on the database

- Object: A database unit that requires authorization before it can be manipulated

- Access mode/action: Any activity that might be performed on an object by a subject

- Authorization: Specification of access modes for each subject on each object

- Administrative rights: Who has rights in system administration and what responsibilities administrators have

- Policies: Enterprise-wide accepted security rules

- Constraint: A more specific rule regarding an aspect of an object and action
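To make these elements concrete, here is a minimal Python sketch of how they might fit together. It is only an illustration, not how any particular DBMS stores its security metadata: the class names, the `alice` subject, the `orders` object, and the example constraint are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class AccessMode(Enum):
    """Activities a subject might perform on an object."""
    READ = "read"
    INSERT = "insert"
    UPDATE = "update"
    DELETE = "delete"


@dataclass(frozen=True)
class Authorization:
    """One rule: a subject may apply an access mode to an object."""
    subject: str
    obj: str
    mode: AccessMode


@dataclass
class SecurityModel:
    authorizations: set = field(default_factory=set)
    # Constraints are extra predicates that can veto an authorized request.
    constraints: list = field(default_factory=list)

    def authorize(self, subject, obj, mode):
        self.authorizations.add(Authorization(subject, obj, mode))

    def is_allowed(self, subject, obj, mode):
        if Authorization(subject, obj, mode) not in self.authorizations:
            return False
        return all(check(subject, obj, mode) for check in self.constraints)


model = SecurityModel()
model.authorize("alice", "orders", AccessMode.READ)
model.authorize("alice", "orders", AccessMode.UPDATE)

# Hypothetical constraint: updates to the orders object are frozen for everyone.
model.constraints.append(
    lambda subject, obj, mode: not (obj == "orders" and mode is AccessMode.UPDATE)
)

print(model.is_allowed("alice", "orders", AccessMode.READ))    # authorized
print(model.is_allowed("alice", "orders", AccessMode.UPDATE))  # vetoed by the constraint
```

The authorization set plays the role of the "specification of access modes for each subject on each object," while the constraint narrows what an existing authorization permits.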

Database security approaches

A typical DBMS supports three basic approaches to data security: discretionary control, mandatory control, and role-based access control.

Discretionary control: A given user typically has different access rights, also known as privileges, on different objects. Discretionary access control requires a language for defining those rights, such as SQL.
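As a rough illustration of discretionary control, the following Python sketch models an object owner who hands out privileges at their own discretion, loosely in the spirit of SQL's GRANT statement and its WITH GRANT OPTION clause. The class, the users, and the `sales` object are hypothetical, and the sketch leaves out revocation entirely.

```python
class DiscretionaryAccess:
    """Toy DAC model: owners hold every privilege and may grant them to others."""

    def __init__(self):
        self.owner = {}   # object -> owning user
        self.grants = {}  # (user, object) -> {privilege: with_grant_option}

    def create(self, user, obj):
        self.owner[obj] = user

    def _can_grant(self, user, obj, privilege):
        if self.owner.get(obj) == user:
            return True
        # A non-owner may pass a privilege on only if it was granted with the grant option.
        return self.grants.get((user, obj), {}).get(privilege, False)

    def grant(self, grantor, grantee, obj, privilege, with_grant_option=False):
        if not self._can_grant(grantor, obj, privilege):
            raise PermissionError(f"{grantor} cannot grant {privilege} on {obj}")
        self.grants.setdefault((grantee, obj), {})[privilege] = with_grant_option

    def has_privilege(self, user, obj, privilege):
        if self.owner.get(obj) == user:
            return True
        return privilege in self.grants.get((user, obj), {})


dac = DiscretionaryAccess()
dac.create("alice", "sales")
dac.grant("alice", "bob", "sales", "SELECT")  # granted without the grant option

try:
    dac.grant("bob", "carol", "sales", "SELECT")
    bob_could_regrant = True
except PermissionError:
    bob_could_regrant = False
```

Here `bob` can read `sales` but cannot extend that right to `carol`, because the decision stays with whoever holds the grant option.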

Mandatory control: Each data object is labeled with a certain classification level, and a given object can be accessed only by a user with a sufficient clearance level. Mandatory access control is applicable to the databases in which data has a rather static or rigid classification structure—for example, military or government environments.
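A minimal Python sketch of the mandatory check might look like the following. The level names, users, and objects are hypothetical examples, and the sketch covers only the "sufficient clearance" comparison described above, not a full multilevel security model.

```python
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical classification levels, ordered from least to most sensitive."""
    UNCLASSIFIED = 0
    CONFIDENTIAL = 1
    SECRET = 2
    TOP_SECRET = 3


# Labels are assigned centrally, not at the discretion of the data owner.
object_labels = {
    "public_notices": Level.UNCLASSIFIED,
    "deployment_plans": Level.SECRET,
}
user_clearances = {
    "analyst": Level.CONFIDENTIAL,
    "commander": Level.TOP_SECRET,
}


def can_access(user, obj):
    """Allow access only when the user's clearance meets the object's label."""
    return user_clearances[user] >= object_labels[obj]


print(can_access("analyst", "public_notices"))
print(can_access("analyst", "deployment_plans"))
print(can_access("commander", "deployment_plans"))
```

Unlike the discretionary case, no user can widen access here; changing who may read an object means changing a label or a clearance.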

In both discretionary and mandatory control cases, the unit of data and the data object to be protected can range from the entire database to a single, specific tuple.

Role-based access control (RBAC): Permissions are associated with roles, and users are made members of appropriate roles. Unlike a user group, which is typically defined as a set of users only, a role brings together a set of users on one side and a set of permissions on the other.

Role-based security provides the flexibility to define permissions at a high level of granularity in Microsoft SQL Server, thus greatly reducing the attack surface area of the database system.

RBAC mechanisms are a flexible alternative to mandatory access control (MAC) and discretionary access control (DAC).

RBAC terminology:

- Objects: Any system resource, such as a file, printer, terminal, or database record

- Operations: An executable image of a program, which upon invocation performs some function for the user.

- Permissions: An approval to perform an operation on one or more RBAC-protected objects

- Role: A job function within the context of an organization with some associated semantics regarding the authority and responsibility conferred on the user assigned to the role.
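The terms above can be sketched in a few lines of Python. This is an illustrative toy, not a reference RBAC implementation: the class, the role names, and the users are hypothetical, and a permission is modeled simply as an (operation, object) pair.

```python
class RoleBasedAccess:
    """Toy RBAC model: users get permissions only through the roles they hold."""

    def __init__(self):
        self.role_permissions = {}  # role -> set of (operation, object) pairs
        self.user_roles = {}        # user -> set of roles

    def add_permission(self, role, operation, obj):
        self.role_permissions.setdefault(role, set()).add((operation, obj))

    def assign_role(self, user, role):
        self.user_roles.setdefault(user, set()).add(role)

    def is_permitted(self, user, operation, obj):
        return any(
            (operation, obj) in self.role_permissions.get(role, set())
            for role in self.user_roles.get(user, set())
        )


rbac = RoleBasedAccess()
rbac.add_permission("sales_clerk", "read", "orders")
rbac.add_permission("sales_manager", "read", "orders")
rbac.add_permission("sales_manager", "update", "orders")

rbac.assign_role("dana", "sales_clerk")
rbac.assign_role("erin", "sales_manager")

print(rbac.is_permitted("dana", "read", "orders"))
print(rbac.is_permitted("dana", "update", "orders"))
print(rbac.is_permitted("erin", "update", "orders"))
```

When someone changes jobs, only their role membership changes; the permissions attached to each role stay as they are.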

For more information, see Database Security Models — A Case Study.

Note: Access control mechanisms regulate who can access which data. The need for such mechanisms follows from the variety of actors that work with a database system, for example, DBAs, application administrators and programmers, and users. Based on actor characteristics, access control mechanisms can be divided into three categories: DAC, RBAC, and MAC.

Principles of SQL Server security

A SQL Server instance contains a hierarchical collection of entities, starting with the server. Each server contains multiple databases, and each database contains a collection of securable objects. Every SQL Server securable has associated permissions that can be granted to a principal, which is an individual, group, or process granted access to SQL Server.

For each security principal, you can grant rights that allow that principal to access or modify a set of the securables, which are the objects that make up the database and server environment. They can include anything from functions to database users to endpoints. SQL Server scopes the objects hierarchically at the server, database, and schema levels:

- Server-level securables include databases as well as objects such as logins, server roles, and availability groups.

- Database-level securables include schemas as well as objects such as database users, database roles, and full-text catalogs.

- Schema-level securables include objects such as tables, views, functions, and stored procedures.
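One way to picture this hierarchy is as a path from database to schema to object, where a permission granted on a containing scope also covers the securables nested inside it. The Python sketch below is a deliberately simplified model under that assumption: the principal, database, and schema names are hypothetical, and it ignores DENY and the fact that real SQL Server permission names vary by scope.

```python
def covers(granted_scope, target):
    """A grant on a scope covers that scope and everything nested inside it."""
    return tuple(target[:len(granted_scope)]) == tuple(granted_scope)


def has_permission(grants, principal, permission, target):
    """Check whether any scope granted to the principal covers the target securable."""
    return any(
        covers(scope, target)
        for scope in grants.get((principal, permission), [])
    )


# Securables are written as paths: (database,), (database, schema),
# or (database, schema, object).
grants = {
    ("report_reader", "SELECT"): [("SalesDb", "reporting")],  # schema-level grant
    ("sales_admin", "SELECT"): [("SalesDb",)],                # database-level grant
}

orders = ("SalesDb", "dbo", "Orders")
summary = ("SalesDb", "reporting", "MonthlySummary")
employees = ("HrDb", "dbo", "Employees")

print(has_permission(grants, "report_reader", "SELECT", summary))
print(has_permission(grants, "report_reader", "SELECT", orders))
print(has_permission(grants, "sales_admin", "SELECT", orders))
```

Granting at the schema level keeps `report_reader` confined to one schema, while the database-level grant reaches every schema in `SalesDb` but nothing in `HrDb`.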

SQL Server security rests on two processes, authentication and authorization:

- Authentication: Authentication is the SQL Server login process by which a principal requests access by submitting credentials that the server evaluates. Authentication establishes the identity of the user or process being authenticated. It helps ensure that only users with valid credentials can access the database server. SQL Server supports two authentication modes, Windows authentication mode and mixed mode.

  - Windows authentication is often referred to as integrated security because this SQL Server security model is tightly integrated with Windows.

  - Mixed mode supports authentication both by Windows and by SQL Server, using usernames and passwords.

- Authorization: Authorization is the process of determining which securable resources a principal can access and which operations are allowed for those resources. Microsoft SQL Server-based technologies support this principle by providing mechanisms to define granular object-level permissions and by implementing role-based security. Granting permissions to roles rather than users simplifies security administration.

  - It is a best practice to use server-level roles for managing server-level access and security, and database roles for managing database-level access.

Here are a few additional recommended best practices for SQL Server authentication:

- Use Windows authentication.

  - Enables centralized management of SQL Server principals via Active Directory

  - Uses the Kerberos security protocol to authenticate users

  - Supports integrated password policy enforcement, including complexity validation for strong passwords, password expiration, and account lockout

- Use separate accounts to authenticate users and applications.

  - Enables limiting the permissions granted to users and applications

  - Reduces the risks of malicious activity such as SQL injection attacks

- Use contained database users.

  - Isolates the user or application account to a single database

  - Improves performance, as contained database users authenticate directly to the database without an extra network hop to the master database

  - Supports both SQL Server and Azure SQL Database, as well as Azure SQL Data Warehouse
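From the client side, the choice between Windows authentication and SQL Server authentication typically shows up in the connection string. The Python sketch below builds ODBC-style connection strings for both cases. The helper function, server name, database name, and account are hypothetical, and the default driver name assumes that the Microsoft ODBC Driver 17 for SQL Server is installed.

```python
def build_connection_string(server, database, username=None, password=None,
                            driver="ODBC Driver 17 for SQL Server"):
    """Build an ODBC connection string for SQL Server.

    With no username, the string requests Windows authentication
    (Trusted_Connection=yes). Otherwise it carries a SQL Server login,
    which requires the instance to run in mixed mode.
    Simplification: values containing ';' or braces are not escaped.
    """
    parts = [f"Driver={{{driver}}}", f"Server={server}", f"Database={database}"]
    if username is None:
        parts.append("Trusted_Connection=yes")
    else:
        parts.append(f"UID={username}")
        parts.append(f"PWD={password}")
    return ";".join(parts) + ";"


# Windows authentication: no credentials appear in the string.
windows_auth = build_connection_string("sqlhost", "Sales")

# SQL Server authentication with a dedicated (hypothetical) application account.
sql_auth = build_connection_string("sqlhost", "Sales", "app_orders", "example-password")

print(windows_auth)
```

With Windows authentication the string contains no password at all, which is one practical reason it is the recommended default.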

Conclusion

Database security is an important goal of any data management system. Each organization should have a data security policy, which is a set of high-level guidelines determined by:

- User requirements

- Environmental aspects

- Internal regulations

- Government laws

Database security is based on three important constructs—confidentiality, integrity, and availability. The goal of database security is to protect your critical and confidential data from unauthorized access.

References

https://docs.microsoft.com/en-us/dotnet/framework/data/adonet/sql/overview-of-sql-server-security

https://sqlsunday.com/2014/07/20/the-sql-server-security-model-part-1/

Related Blog Posts

Manage and analyze humongous amounts of data with SQL Server 2019 Big Data Cluster

Mon, 22 Apr 2024 11:50:19 -0000

Data is a collection of facts and statistics gathered for reference or analysis, and “big data” refers to very large collections of such data. The big data concept has been around for many years, and the volume of data is growing like never before, which is why data is a hugely valued asset in this connected world. Effective big data management enables an organization to locate valuable information with ease, regardless of how large or unstructured the data is. The data is collected from various sources including system logs, social media sites, and call detail records.

The four V's associated with big data are Volume, Variety, Velocity, and Veracity:

- Volume is about the size—how much data you have.

- Variety is about how diverse the data is: you have many different types of data structures.

- Velocity is about how fast the data reaches you.

- Veracity, the final V, is a difficult one. The issue with big data is that it can be very unreliable.

SQL Server Big Data Clusters make it easy to manage this complex assortment of data.

You can use SQL Server 2019 to create a secure, hybrid, machine learning architecture starting with preparing data, training a machine learning model, operationalizing your model, and using it for scoring. SQL Server Big Data Clusters make it easy to unite high-value relational data with high-volume big data.

Big Data Clusters bring together multiple instances of SQL Server with Spark and HDFS, making it much easier to unite relational and big data and use them in reports, predictive models, applications, and AI.

In addition, using PolyBase, you can connect to many different external data sources such as MongoDB, Oracle, Teradata, SAP HANA, and more. Hence, SQL Server 2019 Big Data Cluster is a scalable, performant, and maintainable SQL platform, data warehouse, data lake, and data science platform that doesn’t require compromising between cloud and on-premises. Components include:

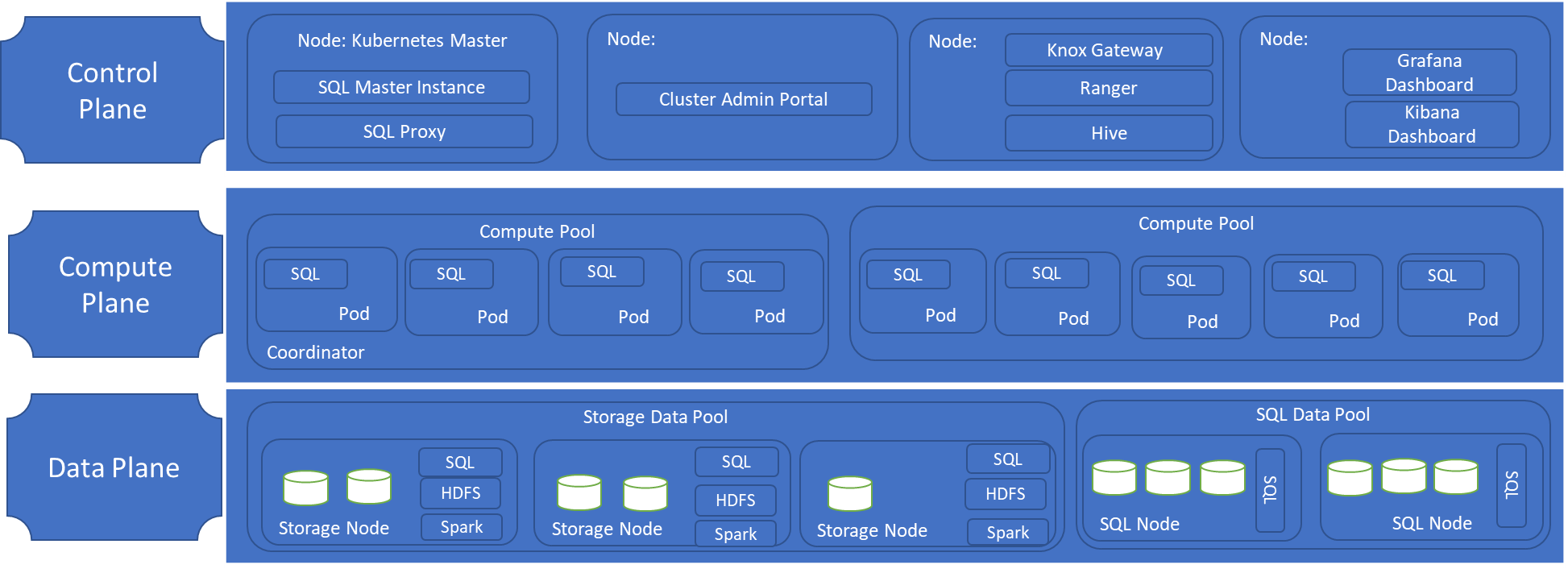

| Component | Description |
| --- | --- |
| Controller | The controller provides management and security for the cluster. It contains the control service, the configuration store, and other cluster-level services such as Kibana, Grafana, and Elasticsearch. |
| Compute pool | The compute pool provides computational resources to the cluster. It contains nodes running SQL Server on Linux pods. The pods in the compute pool are divided into SQL compute instances for specific processing tasks. |
| Data pool | The data pool is used for data persistence and caching. The data pool consists of one or more pods running SQL Server on Linux. It is used to ingest data from SQL queries or Spark jobs. SQL Server Big Data Cluster data marts are persisted in the data pool. |
| Storage pool | The storage pool consists of storage pool pods comprising SQL Server on Linux, Spark, and HDFS. All the storage nodes in a SQL Server Big Data Cluster are members of an HDFS cluster. |

Following is the reference architecture of SQL Server 2019 on Big Data Cluster:

Big data analysis

Data analytics is the science of examining raw data to uncover underlying information. The primary goal is to ensure that the resulting information is of high data quality and accessible for business intelligence as well as big data analytics applications. Big Data Clusters make machine learning easier and more accurate by handling the four Vs of big data:

| V | The impact of the Vs on analytics | How a Big Data Cluster helps |
| --- | --- | --- |
| Volume | The greater the volume of data processed by a machine learning algorithm, the more accurate the predictions will be. | Increases the data volume available for AI by capturing data in scalable, inexpensive big data storage in HDFS and by integrating data from multiple sources using PolyBase connectors. |
| Variety | The greater the variety of different sources of data, the more accurate the predictions will be. | Increases the number of varieties of data available for AI by integrating multiple data sources through the PolyBase connectors. |
| Velocity | Real-time predictions depend on up-to-date data flowing quickly through the data processing pipelines. | Increases the velocity of data to enable AI by using elastic compute and caching to speed up queries. |
| Veracity | Accurate machine learning depends on the quality of the data going into the model training. | Increases the veracity of data available for AI by sharing data without copying or moving data, which introduces data latency and data quality issues. SQL Server and Spark can both read and write into the same data files in HDFS. |

Cluster management

Azure Data Studio is the tool that data engineers, data scientists, and DBAs use to manage databases and write queries. Cluster admins use the admin portal, which runs as a pod inside the same namespace as the whole cluster and provides information such as the status of all pods and overall storage capacity.

Azure Data Studio is a cross-platform management tool for Microsoft databases. It’s like SQL Server Management Studio on top of the popular VS Code editor engine, a rich T-SQL editor with IntelliSense and plug-in support. Currently, it’s the easiest way to connect to the different SQL Server 2019 endpoints (SQL, HDFS, and Spark). To do so, you need to install Data Studio and the SQL Server 2019 extension.

If you have a Kubernetes infrastructure, even a single-server cluster, you can deploy a Big Data Cluster with a single command and have it running in about 30 minutes.

If you want to install SQL Server 2019 Big Data Cluster on your on-premises Kubernetes cluster, you can find an official deployment guide for Big Data Clusters on Minikube in Microsoft docs.

Conclusion

Planning is everything, and good planning will get a lot of problems out of the way, especially if you are thinking about streaming data and real-time analytics.

When it comes to technology, organizations have many different types of big data management solutions to choose from. Dell Technologies solutions for SQL Server help organizations achieve some of the key benefits of SQL Server 2019 Big Data Clusters:

- Insights to everyone: Access to management services, an admin portal, and integrated security in Azure Data Studio, which makes it easy to manage and create a unified development and administration experience for big data and SQL Server users

- Enriched data: Data enriched using advanced analytics and artificial intelligence that’s built into the platform

- Overall data intelligence:

  - Unified access to all data with unparalleled performance

  - Easy and secure management of data (big or small)

  - Ability to build intelligent apps and AI with all data

- Management of any data, any size, anywhere: Simplified management and analysis through unified deployment, governance, and tooling

  - Easy deployment and management using a Kubernetes-based big data solution built into SQL Server

To make better decisions and to gain insights from data, small, medium-size, and large enterprises use big data analysis. For information about how the SQL solutions team at Dell helps customers store, analyze, and protect data with Microsoft SQL Server 2019 on Big Data Cluster technologies, see the following links:

https://www.delltechnologies.com/en-us/big-data/solutions.htm#dropdown0=0

https://infohub.delltechnologies.com/t/sql-server/

How IT organizations benefit from the Dell EMC Live Optics monitoring tool

Mon, 03 Aug 2020 16:10:08 -0000

How can a monitoring tool bring value to any organization? Why is it, in fact, essential for any IT organization? And do IT organizations need to invest in monitoring to see the value proposition?

This blog provides an overview of how organizations benefit from monitoring. Later in the blog, you will get to know about the Live Optics free online software that Dell EMC introduced in 2017 and the value proposition that this monitoring software brings to your organization.

Traditional Monitoring Challenges

Traditionally, infrastructure was managed and monitored by IT engineers who would log in to each device or server and check the disk space, memory, processor, network gear, and so on. This required a lot of manual effort and time to identify the issues. It was difficult for IT engineers to proactively predict the issues, and so their efforts were typically reactive. Later, due to the rapid changes and evolution of technologies, consolidated monitoring tools were introduced to help IT administrators analyze the environment, foresee threats, detect anomalies, and provide end-to-end dashboard reports of the environment.

Today’s digital transformation has triggered a growth in the number of products, resources, and technologies. The challenge for organizations is investing budget and time into monitoring solutions that can enable greater efficiencies on premises, in the public cloud, or in a blended hybrid environment. The goal is to move away from the traditional labor-intensive monitoring that uses scripts and relies on knowledge experts to automated monitoring that enables IT engineers to focus more on innovation.
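To give a sense of what the traditional script-based approach looks like, here is a small Python sketch of a disk-space check of the kind an engineer might once have run by hand on each server. It is an illustration only and has nothing to do with how Live Optics or any other product works. The function name and the 90 percent threshold are assumptions, and the `usage_fn` parameter exists only so that the check can be exercised with made-up numbers.

```python
import shutil
from collections import namedtuple


def disk_alerts(paths, threshold=0.9, usage_fn=shutil.disk_usage):
    """Return an alert message for every path whose disk is at least `threshold` full."""
    alerts = []
    for path in paths:
        usage = usage_fn(path)  # exposes .total, .used, and .free in bytes
        used_fraction = usage.used / usage.total
        if used_fraction >= threshold:
            alerts.append(f"{path}: {used_fraction:.0%} full")
    return alerts


# Made-up usage numbers so the example does not depend on the local machine.
FakeUsage = namedtuple("FakeUsage", "total used free")


def fake_usage(path):
    return FakeUsage(100, 95, 5) if path == "/data" else FakeUsage(100, 10, 90)


sample_alerts = disk_alerts(["/", "/data"], threshold=0.9, usage_fn=fake_usage)
print(sample_alerts)
```

A script like this has to be written, scheduled, and maintained for every resource type and every host, which is the labor-intensive pattern that consolidated monitoring tools aim to replace.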

Modern Monitoring Tool Benefits

Monitoring plays a major role in organizational growth. Its benefits include:

- Never miss a beat: Helps to prevent and reduce downtime and business losses by actively monitoring the heartbeat of the server’s IT infrastructure

- Faster alerts: Identifies business interruptions and actively monitors and alerts via email, mobile calls, text, and instant messages

- Comprehensive view: Helps to resolve uncertainty and provides understanding on how end-to-end infrastructure and its applications work and perform

- Insights: Recommends upgrades, identifies architectural or technical hiccups, and tracks the smooth transitions (technology upgrades, migrations, and third-party integrations)

- Budgeting and planning: Enables the IT organization to develop a plan for future projects and costs

- Protecting against threats: Helps to detect early threats or problems to mitigate risks

- Analytics: Incorporates analytics and machine learning techniques to analyze live data and to bring about greater improvements in productivity and performance

- Rich dashboard reporting: Powers BI integration and capturing of consolidated dashboard reports for management leads

In a monitoring context, artificial intelligence plays a major role by proactively tuning or fixing issues, sending notifications to the appropriate team or individual, or even automatically creating a ticket in a service desk and assigning it to a queue.

Dell EMC Live Optics

We live in a world of constantly changing products. New features are added, competitive features are enhanced, new alternatives are introduced, and prices are changed. To address these changes, Dell EMC offers Live Optics, free online software that helps you to collect, visualize, and share data about your IT environment and workloads. Live Optics is an agentless monitoring tool that you can set up in minutes.

Eliminate overspending and speed decision-making in your IT environment. Live Optics captures performance, software, OS distribution, and VM data for time frames ranging from a few hours to one week. Live Optics lets you share IT performance and workload data characteristics securely and anonymously. You can collaborate with peers, vendors, or channel partners without compromising security. With Live Optics, you can even model project requirements to gain a deeper knowledge of workloads and their resource requirements.

What is the customer value proposition? Live Optics brings together customer intelligence, competitive insight, and product valuation. Here are ways in which Dell EMC Live Optics can bring value to any IT organization:

Host Optics:

- Software for data collection—platform and hardware agnostic; physical or virtual

- Support for all major operating systems and virtualization platforms

- Quite often, provides all the information you need to make a recommendation

Workload or File Optics:

- Intense workload-specific assessments for diagnostic issues

- Rapid file characterization of unstructured data

- Data archival candidacy

- Data compression estimates using proprietary algorithms

Hardware Optics:

- Performance and configuration retrieved on supported platforms via API and/or file processing

- Custom options for support of proprietary, OEM-specific APIs

Please reach out to our Live Optics support team at https://support.liveoptics.com/hc/en-us for assistance. We can help in the following ways:

- Consultation

- Deployment

- Adoption

- Support

- Optimization

One of my favorite idioms is "Health is wealth." In the same way, the wealth of an IT environment is measured by the health of an organization’s IT infrastructure.

Important links:

https://www.liveoptics.com

https://support.liveoptics.com/hc/en-us

https://www.youtube.com/LiveOptics

https://app.liveoptics.com/Account/Login?ReturnUrl=%2f

https://support.liveoptics.com/hc/en-us/community/topics