Built to Scale with VCF on VxRail and Oracle 19C RAC

Wed, 11 Nov 2020 19:58:39 -0000


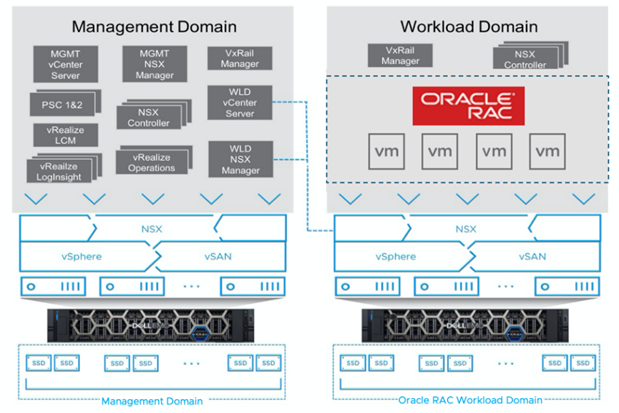

The newly released Oracle RAC on Dell EMC VxRail with VMware Cloud Foundation (VCF) Reference Architecture (RA) guides customers in building an efficient, high-performing hyperconverged infrastructure to run their OLTP workloads. Scalability was the primary goal of this RA, and performance stood out as the numbers were generated. As Oracle RAC scaled, throughput increased to over 1 million transactions per minute (TPM), while read latency stayed sub-millisecond (0.64-0.70 ms). The performance achieved with VxRail is a great added benefit to the core design points for Oracle RAC environments, whose primary focus is the availability and resiliency of the solution. Links to a reference architecture (“Oracle RAC on VMware Cloud Foundation on Dell EMC VxRail”) and a solution brief (“Deploying Oracle RAC on Dell EMC VxRail”) are available here and at the end of this post.

The RAC solution with VxRail scaled out easily: you simply add a new node to an existing VxRail cluster. VxRail Manager provides a simple path that automatically discovers and non-disruptively adds each new node. VMware vSphere and vSAN can then rebalance resources and workloads across the cluster, creating a single resource pool for compute and storage.

The VxRail clusters were built with eight P570F nodes; four for the VCF Management Domain and four for the Oracle RAC Workload Domain.

Specifics on the build, including the hardware and software used, are detailed within the reference architecture. It also provides information on the testing, tools used, and results.

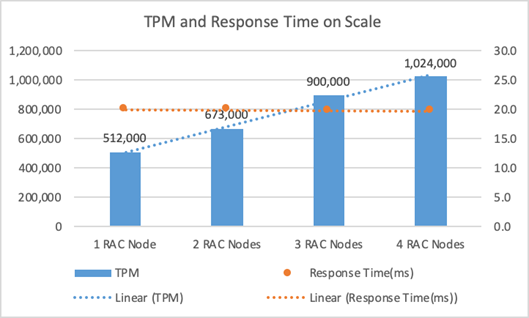

This graph shows TPM and response time as the RAC node count increases from one to four. Notice that the average TPM increased along a near-linear trendline (shown by the dotted line) as additional RAC nodes were added, while total application response time was maintained at 20 milliseconds or less.

Note: The near-linear TPM trendline is shown in the graph above (blue dotted line). As additional RAC nodes are added, performance increases, as does RAC high availability. Perfectly linear TPM growth (equal performance added per node) is not achieved because RAC nodes depend on concurrency of access, instance, network, and other factors. See the RA for additional performance-related information.
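To make "near-linear" concrete, scaling efficiency can be expressed as the measured TPM at N nodes divided by N times the single-node TPM. The sketch below is illustrative only: the function name and the TPM values are hypothetical and are not the RA's measured results.

```python
def scaling_efficiency(tpm_by_nodes):
    """Return {node_count: efficiency} relative to perfect linear scaling.

    Efficiency is measured TPM divided by (node_count * single-node TPM),
    so 1.0 means perfectly linear and lower values mean sub-linear growth.
    """
    base = tpm_by_nodes[1]
    return {n: tpm / (n * base) for n, tpm in sorted(tpm_by_nodes.items())}


# Hypothetical values for illustration only; see the RA for measured results.
example_tpm = {1: 300_000, 2: 570_000, 3: 810_000, 4: 1_020_000}

for nodes, eff in scaling_efficiency(example_tpm).items():
    print(f"{nodes} node(s): {eff:.0%} of linear")
```

An efficiency that drifts slightly below 100% as nodes are added is what a near-linear trendline looks like numerically.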

Summary of performance

Different-sized databases kept the TPM at the same level (about one million transactions) while keeping the application response time at 20 ms or below. When increasing the database size, the physical read and write IOPS increased near-linearly, as reported by Oracle AWR. This indicated that more read and write I/O requests were served by the backend storage under the same configuration. Overall, with peak client IOPS of up to 100,000, vSAN still provided excellent storage performance, with sub-millisecond read latency and single-digit-millisecond write latency.

Sidebar about Oracle licensing: While not mentioned in the RA, VxRail offers several facilities that help control Oracle licenses and, in some cases, eliminate the need for costly licensed options. These include a broad choice of CPU core configurations, some with fewer cores and higher processing power per core, to maximize the customer’s Oracle workload performance while minimizing the license requirements. Costly add-on options such as encryption and compression can be provided via vSAN and are handled by VxRail. Further, vSphere hypervisor features such as DRS allow Oracle VMs to be confined to licensed nodes only.

You can speak to a Dell Technologies’ Oracle specialist for more details on how to control Oracle licensing costs for VMware environments.

Conclusion

Oracle Database 19c on VxRail offers customers performance, scalability, reliability, and security for all their operational and analytical workloads. The Oracle RAC on VxRail test environment was first created to highlight the architecture. It also had the added benefit of showcasing the great performance VxRail delivers. If you need more performance, it is simple to adjust the configuration by adding more VxRail nodes to the cluster. If you need more storage, add more drives to meet the scale required of the database. Dell Technologies has Oracle specialists to ensure the VxRail cluster will meet the scale and performance outcomes desired for Oracle environments.

Additional Resources:

Reference Architecture - Oracle RAC on VMware Cloud Foundation on Dell EMC VxRail

Solution Brief - Deploying Oracle RAC on Dell EMC VxRail

Author: Vic Dery, Senior Principal Engineer, VxRail Technical Marketing

Special thank you to David Glynn for assisting with the reviews

Related Blog Posts

Simpler Cloud Operations and Even More Deployment Options Please!

Wed, 03 Aug 2022 21:32:14 -0000


The latest VMware Cloud Foundation on Dell EMC VxRail release debuts LCM and storage enhancements, support for transitioning from VCF Consolidated to VCF Standard Architecture, AMD-based VxRail hardware platforms, and more!

Dell Technologies and VMware are happy to announce the availability of VMware Cloud Foundation 4.2.0 on VxRail 7.0.131.

This release brings about support for the latest versions of VCF and Dell EMC VxRail that provide a simple and consistent operational experience for developer ready infrastructure across core, edge, and cloud. Let’s review these new updates and enhancements.

Some Important Updates:

VCF on VxRail Management Operations

Ability For Customers to Perform Their Own VxRail Cluster Expansion Operations in VCF on VxRail Workload Domains. Sometimes some of the best announcements that come with a new release have nothing to do with a new technical feature but instead are about new customer driven serviceability operations. The VCF on VxRail team is happy to announce this new serviceability enhancement. Customers no longer must purchase a professional services engagement simply to expand a single site layer 2 configured VxRail WLD cluster deployment by adding nodes to it. This should save time and money and give customers the freedom to perform these operations on their own.

This aligns with the support that already exists for customers performing similar cluster expansion operations on VxRail systems deployed as standard clusters in non-VCF use cases.

Note: There are some restrictions on which cluster configurations support customer driven expansion serviceability. Stretched VxRail cluster deployments and layer 3 VxRail cluster configurations will still require engagement with professional services as these are more advanced deployment scenarios. Please reach out to your local Dell Technologies account team for a complete list of the cluster configurations that are supported for customer driven expansions.

VCF on VxRail Deployment and Services

Support for Transitioning From VCF on VxRail Consolidated Architecture to VCF on VxRail Standard Architecture. Continuing the operations improvements, the VCF on VxRail team is also happy to announce this new capability. We introduced support for VCF Consolidated Architecture deployments in VCF on VxRail back in May 2020. You can read about it here. VCF Consolidated Architecture deployments provide customers a way to familiarize themselves with VCF on VxRail in their core datacenters without a significant investment in cost and infrastructure footprint. Now, with support for transitioning from VCF Consolidated Architecture to VCF Standard Architecture, customers can expand as their scale demands it in their core, edge, or distributed datacenters! Now that’s flexible!

Please reach out to your local Dell Technologies account team for details on the transition engagement process requirements.

And Some Notable Enhancements:

VxRail Hardware Platform

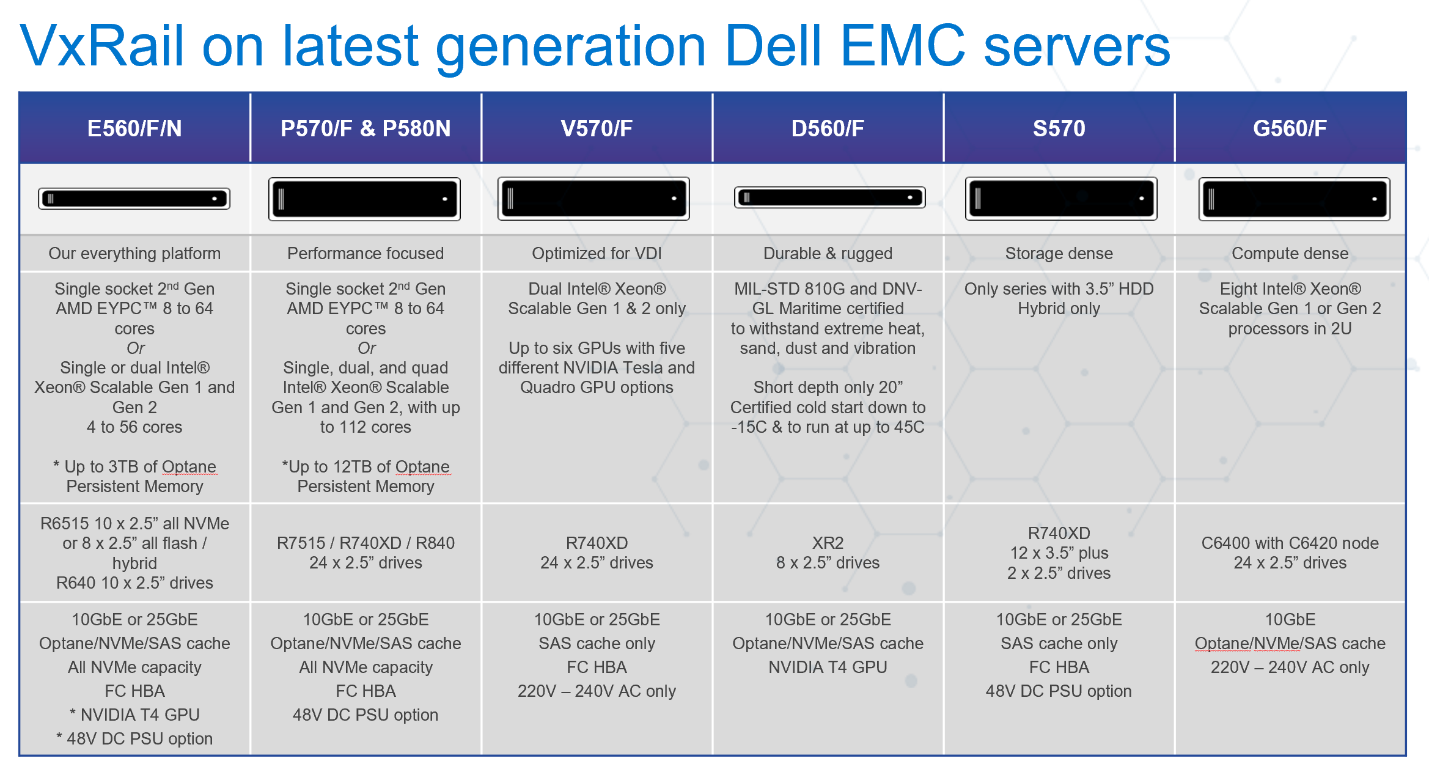

AMD-based VxRail Platform Support in VCF 4.x Deployments. With the latest VxRail 7.0.131 HCI System Software release, ALL available AMD-based VxRail series models are now supported in VCF 4.x deployments. These models include VxRail E-Series and P-Series and support single socket 2nd Gen AMD EPYC™ processors with 8 to 64 cores, allowing for extremely high core densities per socket.

The figure below shows the latest VxRail HW platform family.

For more info on these AMD platforms, check out my colleague David Glynn’s blog post on the subject here when AMD platform support was first introduced to the VxRail family last year. (Note: New 2U P-Series options have been released since that post.)

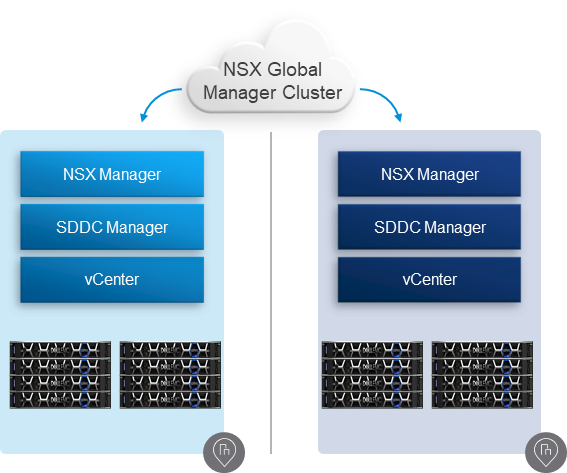

VCF on VxRail Multi-Site Architecture

NSX-T 3.1 Federation Now Supported with VCF 4.2 on VxRail 7.0.131. NSX-T Federation provides a cloud-like operating model for network administrators by simplifying the consumption of networking and security constructs. NSX-T Federation includes centralized management, consistent networking and security policy configuration with enforcement and synchronized operational state across large scale federated NSX-T deployments. With NSX-T Federation, VCF on VxRail customers can leverage stretched networks and unified security policies across multi-region VCF on VxRail deployments, providing workload mobility and simplified disaster recovery. This initial support will be through prescriptive manual guidance that will be made available soon after VCF on VxRail solution general availability. For a detailed explanation of NSX-T Federation, check out this VMware blog post here.

The figure below depicts what the high-level architecture would look like.

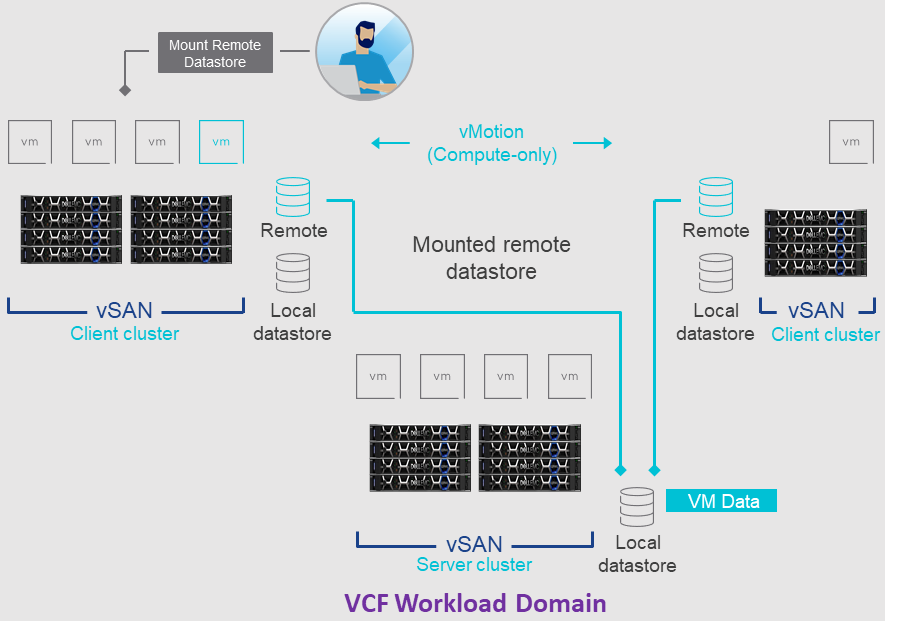

VCF on VxRail Storage

VCF 4.2 on VxRail 7.0.131 Support for VMware HCI Mesh. VMware HCI Mesh is a vSAN feature that provides for “Disaggregated HCI” exclusively through software. In the context of VCF on VxRail, HCI Mesh allows an administrator to easily define a relationship between two or more vSAN clusters contained within a workload domain. It also allows a vSAN cluster to borrow capacity from other vSAN clusters, improving the agility and efficiency in an environment. This disaggregation allows the administrator to separate compute from storage. HCI Mesh uses vSAN’s native protocols for optimal efficiency and interoperability between vSAN clusters. HCI Mesh accomplishes this by using a client/server mode architecture. vCenter is used to configure the remote datastore on the client side. Various configuration options are possible that can allow for multiple clients to access the same datastore on a server. VMs can be created that utilize the storage capacity provided by the server. This can enable other common features, such as performing a vMotion of a VM from one vSAN cluster to another.

The figure below depicts this architecture.

VCF on VxRail Networking

This release continues to extend networking flexibility to further adapt to various customer environments and to reduce deployment efforts.

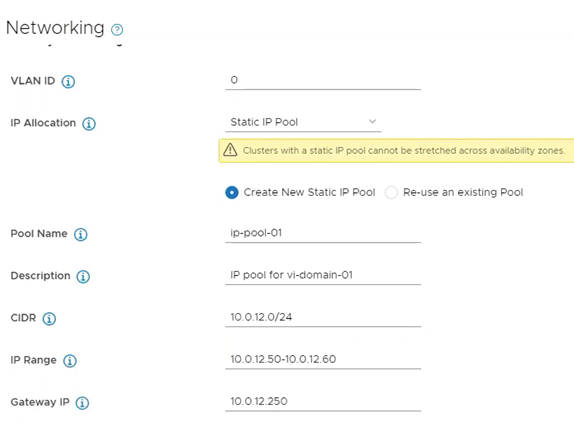

Customer-Defined IP Pools for NSX-T TEP IP Addresses for the Management Domain and Workload Domain Hosts. To extend networking flexibility, this release introduces NSX-T TEP IP Address Pools. This enhances the existing support for using DHCP to assign NSX-T TEP IPs. This new feature allows customers to avoid deploying and maintaining a separate DHCP server for this purpose. Admins can select to use IP Pools as part of VCF Bring Up by entering this information in the Cloud Builder template configuration file. The IP Pool will then be automatically configured during Bring Up by Cloud Builder. There is also a new option to choose DHCP or IP Pools during new workload domain deployments in the SDDC Manager.

The figure below illustrates what this looks like. Once domains are deployed, IP address blocks are managed through each domain’s NSX Manager respectively.

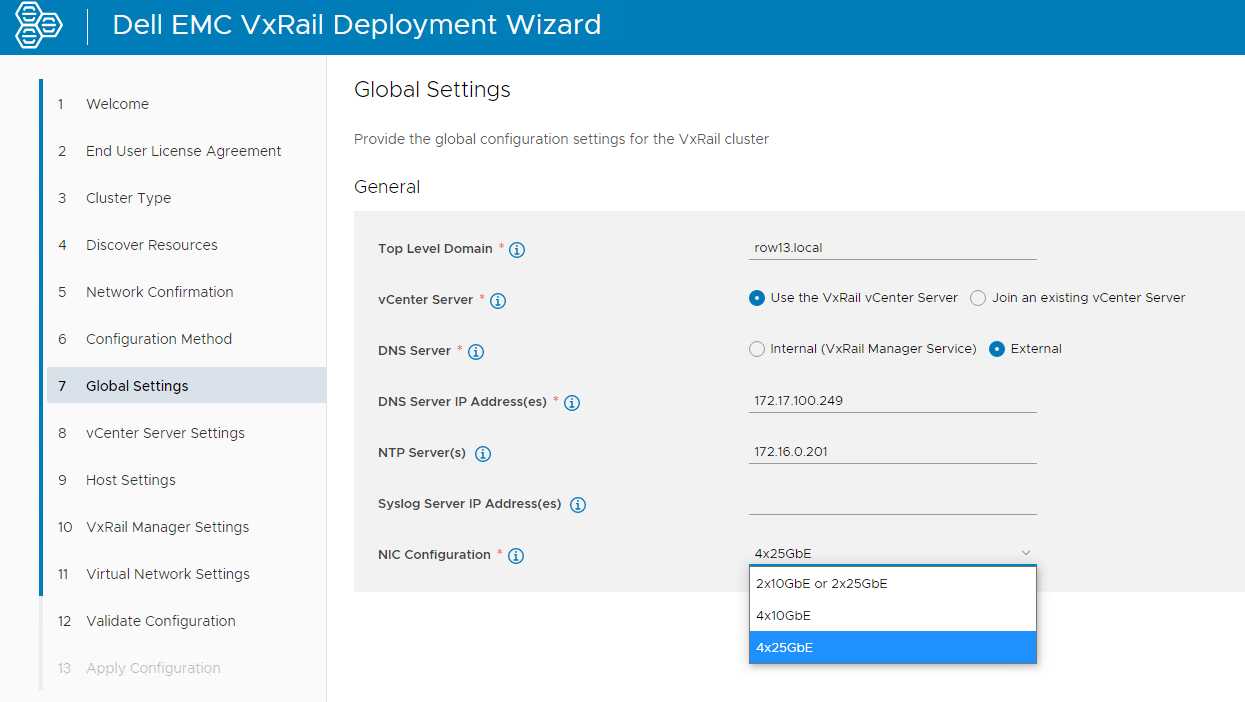
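When planning a static IP pool in place of DHCP, one practical check is whether the range holds enough addresses for every host's TEPs. The sketch below uses Python's standard `ipaddress` module. The function names, the address range, and the default of two TEPs per host are assumptions for illustration and do not come from the Cloud Builder template.

```python
import ipaddress


def pool_size(start, end):
    """Number of addresses in an inclusive start-end range."""
    first = ipaddress.ip_address(start)
    last = ipaddress.ip_address(end)
    if last < first:
        raise ValueError("end address precedes start address")
    return int(last) - int(first) + 1


def pool_is_sufficient(start, end, host_count, teps_per_host=2):
    """True if the range can give every host its TEP addresses.

    teps_per_host=2 is an assumption for illustration; use the value
    that matches your host uplink design.
    """
    return pool_size(start, end) >= host_count * teps_per_host


# Hypothetical range: 40 addresses for 16 hosts with 2 TEPs each.
print(pool_size("172.16.14.10", "172.16.14.49"))                          # 40
print(pool_is_sufficient("172.16.14.10", "172.16.14.49", host_count=16))  # True
```

Leaving headroom in the range for future cluster expansion avoids having to revisit the pool later.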

pNIC-Level Redundancy Configuration During VxRail First Run. Flexible network configurations are further extended with this feature in VxRail 7.0.131. It allows an administrator to configure the VxRail System VDS traffic across NDC and PCIe pNICs automatically during VxRail First Run using a new VxRail Custom NIC Profile option. Not only does this help provide additional high availability network configurations for VCF on VxRail domain clusters, it also helps to further simplify operations by removing the need for additional Day 2 activities to reach the same host configuration outcome.

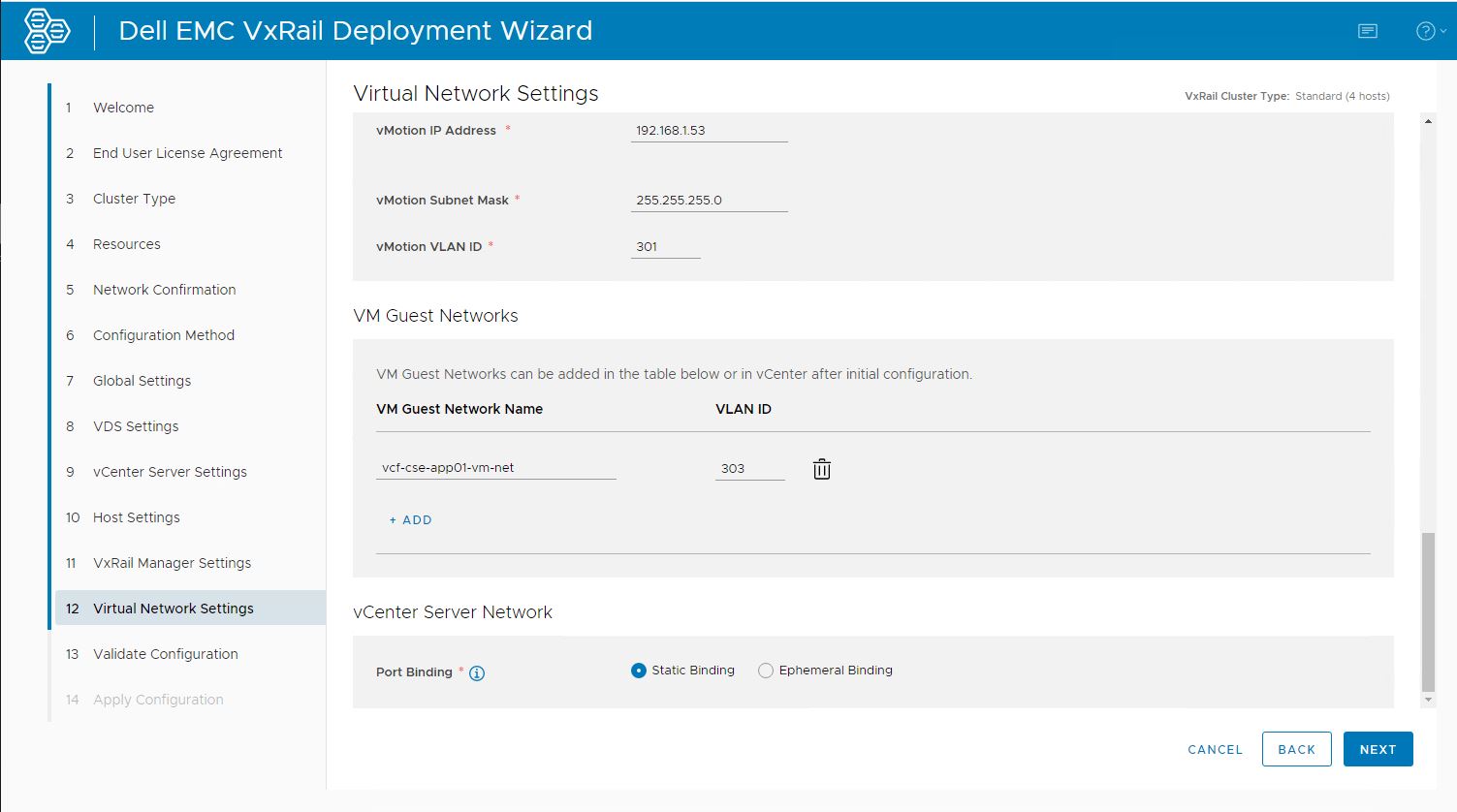

Specify the VxRail Network Port Group Binding Mode During VxRail First Run. To further accelerate and simplify VCF on VxRail deployments, VxRail 7.0.131 has introduced this new enhancement designed with VCF in mind. VCF requires all host Port Group Binding Modes be set to Ephemeral. VxRail First Run now enables admins to have this parameter configured automatically, reducing the number of manual steps needed to prep VxRail hosts for VCF on VxRail use. Admins can set this parameter using the VxRail First Run JSON configuration file or manually enter it into the UI during deployment.

The figure below illustrates an example of what this looks like in the Dell EMC VxRail Deployment Wizard UI.

VCF on VxRail LCM

New SDDC Manager LCM Manifest Architecture. This new LCM Manifest architecture changes the way SDDC Manager handles the metadata required to enable upgrade operations as compared to the legacy architecture used up until this release.

With the legacy LCM Manifest architecture:

- The metadata used to determine upgrade sequencing and availability was published as part of the LCM bundle itself or was part of SDDC Manager VM.

- The metadata could not be changed after the bundle was published. This limited VMware’s ability to modify upgrade sequencing without requiring an upgrade to a new VCF release.

The newly updated LCM Manifest architecture helps address these challenges by enabling dynamic updates to LCM metadata, enabling future functionality such as recalling upgrade bundles or modifying skip level upgrade sequencing.

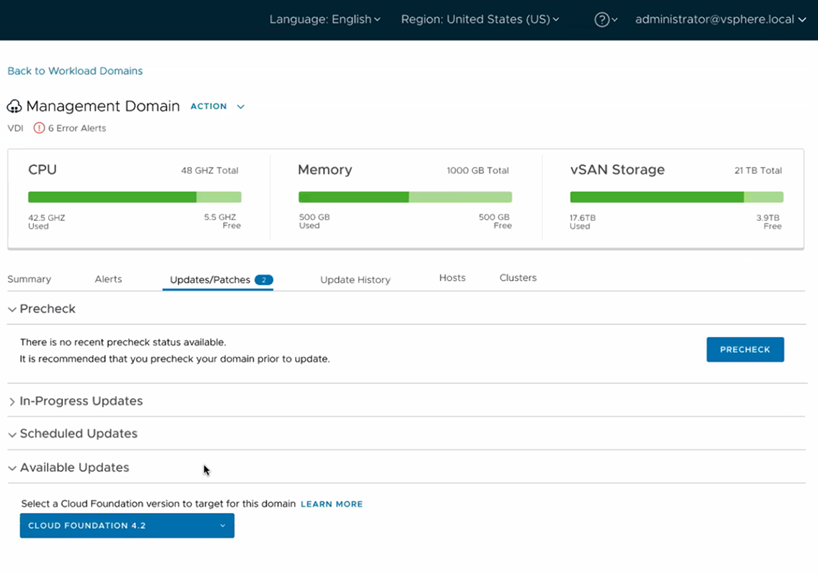

VCF Skip-Level Upgrades Using SDDC Manager UI and Public API. Keeping up with new releases can be challenging, and maintenance windows for performing upgrades may not be readily available to every customer. The goal behind this enhancement is to give VCF on VxRail administrators the flexibility to reduce the number of stepwise upgrades needed to get to the latest SDDC Manager/VCF release if they are multiple versions behind. All required upgrade steps are now automated as a single SDDC Manager orchestrated LCM workflow that is built upon the new SDDC Manager LCM Manifest architecture. VCF skip-level upgrades allow admins to quickly and directly adopt code versions of choice and to reduce maintenance window requirements.

Note: To take advantage of VCF skip level upgrades for future VCF releases, customers must be at a minimum of VCF 4.2.
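One way to picture why separating the metadata from the bundles matters: if the allowed upgrade jumps live in a manifest that can be updated on its own, the shortest path to a target release can be recomputed whenever the manifest changes. The sketch below is a conceptual illustration only. The manifest format, the release names, and the `upgrade_path` function are hypothetical and do not represent how SDDC Manager stores or processes its LCM manifest.

```python
from collections import deque


def upgrade_path(manifest, current, target):
    """Return the shortest list of releases from current to target.

    manifest maps a release to the releases it may upgrade to directly.
    Returns None when no path exists.
    """
    queue = deque([[current]])
    seen = {current}
    while queue:
        path = queue.popleft()
        if path[-1] == target:
            return path
        for nxt in manifest.get(path[-1], []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


# Hypothetical manifest: release names and allowed jumps are made up.
manifest = {
    "r1": ["r2"],
    "r2": ["r3", "r4"],  # r2 -> r4 is a skip-level jump
    "r3": ["r4"],
}
print(upgrade_path(manifest, "r1", "r4"))  # ['r1', 'r2', 'r4']
```

Removing the `r2 -> r4` entry from the manifest would change the result to the stepwise path through `r3`, with no change to the bundles themselves.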

The figure below shows what this option looks like in the SDDC Manager UI.

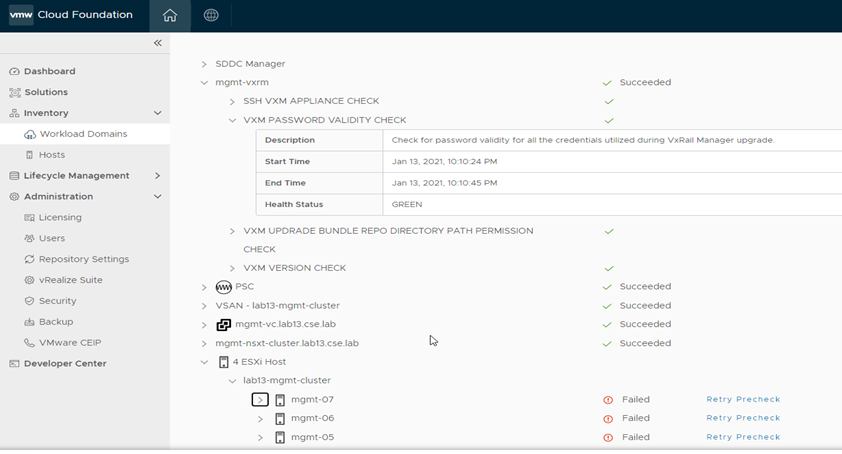

Improvements to Upgrade Resiliency Through VCF Password Prechecks. Other LCM enhancements in this release come in the area of password prechecks. When performing an upgrade, VCF needs to communicate with various components to complete various actions. To do this, SDDC Manager needs valid credentials. If the passwords have expired or have been changed outside of VCF, the patching operation fails. To avoid any potential issues, VCF now checks that the required credentials are valid before commencing the patching operation. These checks occur both during the pre-check validation and during an upgrade of a resource, such as ESXi, NSX-T, vCenter, or VxRail Manager. Check out what this looks like in the figure below.

Automated In-Place vRSLCM Upgrades. Upgrading vRSLCM in the past required the deployment of a net new vRSLCM appliance. With VCF 4.2, the SDDC Manager keeps the existing vRSLCM appliance, takes a snapshot of it, then transfers the upgrade packages directly to it and upgrades everything in place. This provides a more simplified and streamlined LCM experience.

VCF API Performance Enhancements. Administrators who use a programmatic approach will experience a quicker retrieval of information through the caching of certain information when executing API calls.

VCF on VxRail Security

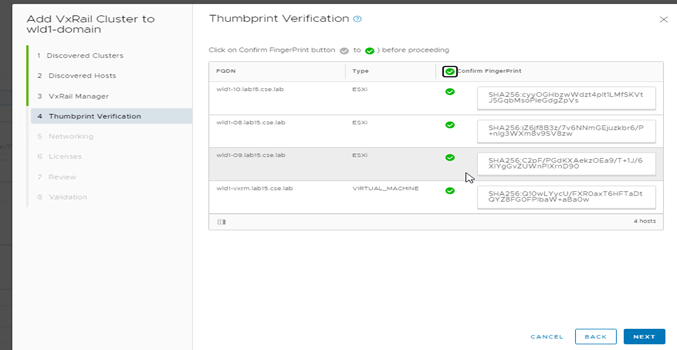

Mitigate Man-In-The-Middle Attacks. Want to prevent Man-In-The-Middle Attacks on your VCF on VxRail cloud infrastructure? This release is for you. Introduced in VCF 4.2, SSH RSA fingerprint and SSL thumbprint enforcement capabilities are built natively into SDDC Manager to verify the authenticity of cloud infrastructure components (vCenter, ESXi, and VxRail Manager). Customers can choose to enable this feature for their VCF on VxRail deployment during VCF Bring Up by filling in the affiliated parameter fields in the Cloud Builder configuration file.

An SSH RSA Fingerprint comes from the host SSH public key while an SSL Thumbprint comes from the host’s certificates. One or more of these data points can be used to validate the authenticity of VCF on VxRail infrastructure components when being added and configured into the environment. For the Management Domain, both SSH fingerprints and SSL thumbprints are available to use while Workload Domains have SSH Fingerprints available. See what this looks like in the figure below.
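For readers who want to see where these values come from, the sketch below derives both from raw inputs using only the Python standard library. It assumes SHA-256 for both values; the hash and format that the Cloud Builder fields expect are not stated here, so verify them against the product documentation. The function names are hypothetical, and `fetch_ssl_thumbprint` is defined but not called because it needs a reachable host.

```python
import base64
import hashlib
import ssl


def ssh_sha256_fingerprint(public_key_line):
    """Fingerprint of an OpenSSH public key line ('ssh-rsa AAAA... comment').

    Produces the 'SHA256:...' form that `ssh-keygen -lf` prints.
    """
    key_blob = base64.b64decode(public_key_line.split()[1])
    digest = hashlib.sha256(key_blob).digest()
    return "SHA256:" + base64.b64encode(digest).decode().rstrip("=")


def ssl_sha256_thumbprint(der_cert_bytes):
    """Colon-separated SHA-256 thumbprint of a DER-encoded certificate."""
    hexdigest = hashlib.sha256(der_cert_bytes).hexdigest().upper()
    return ":".join(hexdigest[i:i + 2] for i in range(0, len(hexdigest), 2))


def fetch_ssl_thumbprint(host, port=443):
    """Retrieve a host's certificate and return its thumbprint.

    The certificate is fetched without validation, so compare the result
    against a value obtained through a trusted channel.
    """
    pem = ssl.get_server_certificate((host, port))
    return ssl_sha256_thumbprint(ssl.PEM_cert_to_DER_cert(pem))
```

The point of enforcement is that these values are recorded ahead of time and compared when a component is added, so a value fetched over the same untrusted network at that moment provides no protection by itself.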

Natively Integrated Dell Technologies Next Gen SSO Support With SDDC Manager. Dell Technologies Next Gen SSO is a newly implemented backend service used in authenticating with Dell Technologies support repositories where VxRail update bundles are published. With the native integration that SDDC Manager has with monitoring and downloading the latest supported VxRail upgrade bundles from this depot, SDDC Manager now utilizes this new SSO service for its authentication. While this is completely transparent to customers, existing VCF on VxRail customers may need to log SDDC Manager out of their current depot connection and re-authenticate with their existing credentials to ensure future VxRail updates are accessible by SDDC Manager.

New Advanced Security Add-on for VMware Cloud Foundation License SKUs: Though not necessarily affiliated with the VCF 4.2 on VxRail 7.0.131 BOM directly, new VMware security license SKUs for Cloud Foundation are now available for customers who want to bring their own VCF licenses to VCF on VxRail deployments.

The Advanced Security Add-on for VMware Cloud Foundation now includes advanced threat protection, and workload and endpoint security that provides the following capabilities:

- Carbon Black Workload Advanced: This includes Next Generation Anti-Virus, Workload Audit/Remediation, and Workload EDR.

- Advanced Threat Prevention Add-on for NSX Data Center: Coming in Advanced and Enterprise Plus editions, this includes NSX Firewall, NSX Distributed IDS/IPS, NSX Intelligence, and Advanced Threat Prevention.

- NSX Advanced Load Balancer with Web Application Firewall

Updated VMware Cloud Foundation and VxRail BOM

VMware Cloud Foundation 4.2.0 on VxRail 7.0.131 introduces support for the latest versions of the SDDC and VxRail. For the complete list of component versions in the release, please refer to the VCF on VxRail release notes. A link is available at the end of this post.

Well, that about covers it for this release. The innovation continues with co-engineered features coming from all layers of the VCF on VxRail stack. This further illustrates the commitment that Dell Technologies and VMware have to drive simplified turnkey customer outcomes. Until next time, feel free to check out the links below to learn more about VCF on VxRail.

Jason Marques

Twitter - @vwhippersnapper

Additional Resources

- VMware Cloud Foundation on Dell EMC VxRail Release Notes

- VxRail page on DellTechnologies.com

- VxRail Videos

- VCF on VxRail Interactive Demos

- Blog: 2nd Gen AMD EPYC now available to power your favorite hyperconverged platform: VxRail

- Blog: The Dell Technologies Cloud Platform – Smaller in Size, Big on Features

- Blog: Introducing NSX-T Federation support in VMware Cloud Foundation

Take VMware Tanzu to the Cloud Edge with Dell Technologies Cloud Platform

Wed, 12 Jul 2023 16:23:35 -0000


Dell Technologies and VMware are happy to announce the availability of VMware Cloud Foundation 4.1.0 on VxRail 7.0.100.

This release brings support for the latest versions of VMware Cloud Foundation and Dell EMC VxRail to the Dell Technologies Cloud Platform and provides a simple and consistent operational experience for developer ready infrastructure across core, edge, and cloud. Let’s review these new features.

Updated VMware Cloud Foundation and VxRail BOM

Cloud Foundation 4.1 on VxRail 7.0.100 introduces support for the latest versions of the SDDC listed below:

- vSphere 7.0 U1

- vSAN 7.0 U1

- NSX-T 3.0 P02

- vRealize Suite Lifecycle Manager 8.1 P01

- vRealize Automation 8.1 P02

- vRealize Log Insight 8.1.1

- vRealize Operations Manager 8.1.1

- VxRail 7.0.100

For the complete list of component versions in the release, please refer to the VCF on VxRail release notes. A link is available at the end of this post.

VMware Cloud Foundation Software Feature Updates

VCF on VxRail Management Enhancements

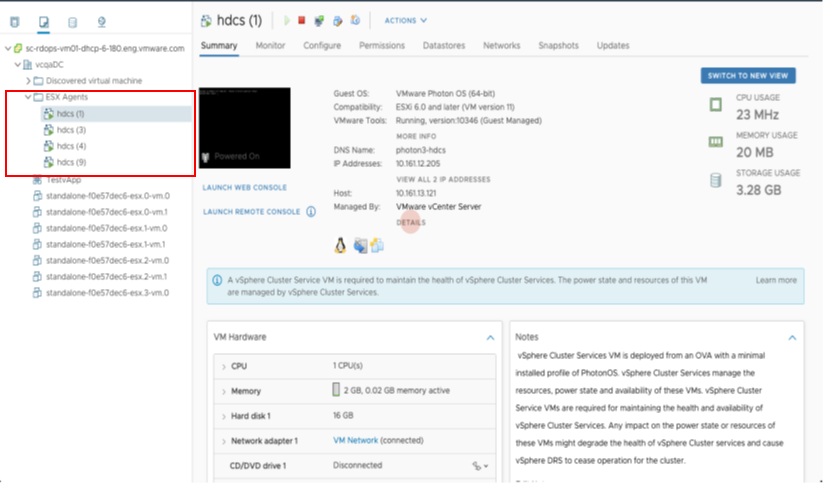

vSphere Cluster Services (vCLS)

vSphere Cluster Services is a new capability introduced in the vSphere 7 Update 1 release that is included as a part of VCF 4.1. It runs as a set of virtual machines deployed on top of every vSphere cluster. Its initial functionality provides foundational capabilities that are needed to create a decoupled and distributed control plane for clustering services in vSphere. vCLS ensures cluster services like vSphere DRS and vSphere HA are all available to maintain the resources and health of the workloads running in the clusters independent of the availability of vCenter Server. The figure below shows the components that make up vCLS from the vSphere Web Client.

Figure 1

Not only is vSphere 7 providing modernized data services like embedded vSphere Native Pods with vSphere with Tanzu, but features like vCLS are also beginning the evolution toward distributed control planes!

VCF Managed Resources and VxRail Cluster Object Renaming Support

VCF can now rename resource objects post creation, including the ability to rename domains, datacenters, and VxRail clusters.

The domain is managed by the SDDC Manager. As a result, you will find that there are additional options within the SDDC Manager UI that will allow you to rename these objects.

VxRail Cluster objects are managed by a given vCenter server instance. In order to change cluster names, you will need to change the name within vCenter Server. Once you do, you can go back to the SDDC Manager and after a refresh of the UI, the new cluster name will be retrieved by the SDDC Manager and shown.

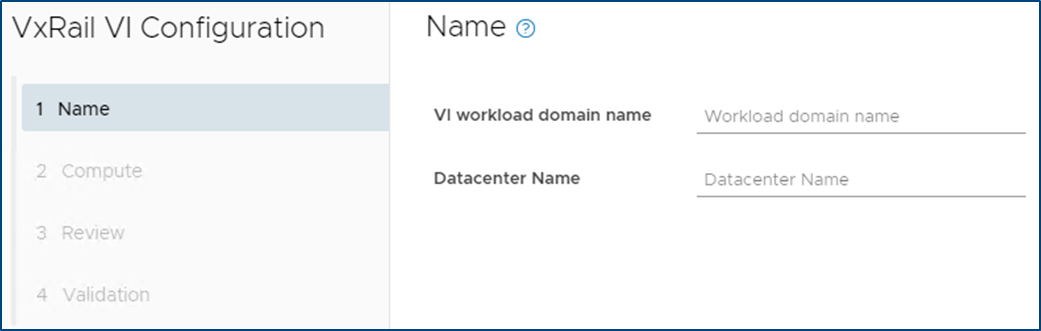

In addition to the domain and VxRail cluster object rename, SDDC Manager now supports the use of a customized Datacenter object name. The enhanced VxRail VI WLD creation wizard now includes an input for Datacenter Name, and the name is automatically imported into the SDDC Manager inventory during the VxRail VI WLD creation workflow. Note: Make sure the Datacenter name matches the one used during the VxRail Cluster First Run. The figure below shows the Datacenter Input step in the enhanced VxRail VI WLD creation wizard from within SDDC Manager.

Figure 2

Being able to customize resource object names makes VCF on VxRail more flexible in aligning with an IT organization’s naming policies.

VxRail Integrated SDDC Manager WLD Cluster Node Removal Workflow Optimization

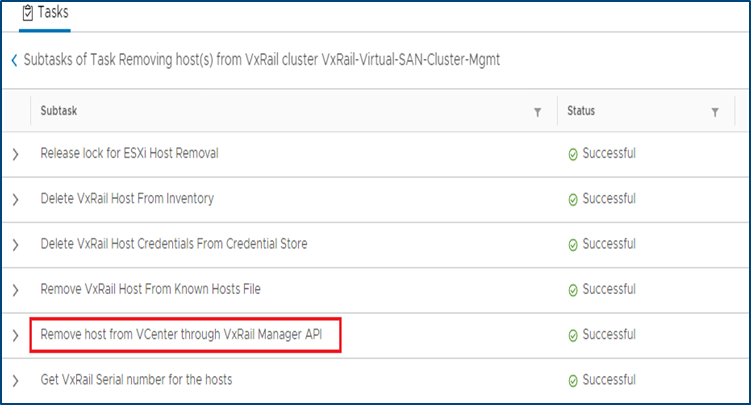

Furthering the Dell Technologies and VMware co-engineering integration efforts for VCF on VxRail, new workflow optimizations have been introduced in VCF 4.1 that take advantage of VxRail Manager APIs for VxRail cluster host removal operations.

When the time comes for VCF on VxRail cloud administrators to remove hosts from WLD clusters and repurpose them for other domains, admins will use the SDDC Manager “Remove Host from WLD Cluster” workflow to perform this task. This remove host operation has now been fully integrated with native VxRail Manager APIs to automate removing physical VxRail hosts from a VxRail cluster as a single end-to-end automated workflow that is kicked off from the SDDC Manager UI or VCF API. This integration further simplifies and streamlines VxRail infrastructure management operations all from within common VMware SDDC management tools. The figure below illustrates the SDDC Manager sub tasks that include new VxRail API calls used by SDDC Manager as a part of the workflow.

Figure 3

Note: Removed VxRail nodes require reimaging prior to repurposing them into other domains. This reimaging currently requires Dell EMC support to perform.

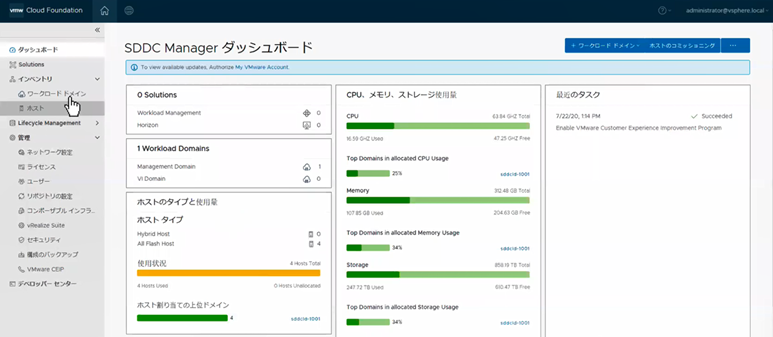

I18N Internationalization and Localization (SDDC Manager)

SDDC Manager now has international language support that meets the I18N Internationalization and Localization standard. Options to select the desired language are available in the Cloud Builder UI, which installs SDDC Manager using the selected language settings. SDDC Manager will have localization support for the following languages – German, Japanese, Chinese, French, and Spanish. The figure below illustrates an example of what this would look like in the SDDC Manager UI.

Figure 4

vRealize Suite Enhancements

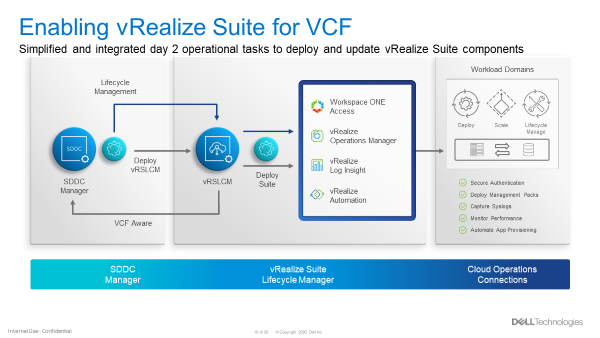

VCF Aware vRSLCM

New in VCF 4.1, the vRealize Suite is fully integrated into VCF. SDDC Manager deploys vRSLCM and creates a two-way communication channel between the two components. When deployed, vRSLCM is now VCF aware and reports back to SDDC Manager which vRealize products are installed. The installation of vRealize Suite components uses standardized VVD best-practice deployment designs that leverage Application Virtual Networks (AVNs).

Software Bundles for the vRealize Suite are all downloaded and managed through the SDDC Manager. When patches or updates become available for the vRealize Suite, lifecycle management of the vRealize Suite components is controlled from the SDDC Manager, calling on vRSLCM to execute the updates as part of SDDC Manager LCM workflows. The figure below showcases the process for enabling vRealize Suite for VCF.

Figure 5

VCF Multi-Site Architecture Enhancements

VCF Remote Cluster Support

VCF Remote Cluster Support enables customers to extend their VCF on VxRail operational capabilities to ROBO and Cloud Edge sites, enabling consistent operations from core to edge. Pair this with an awesome selection of VxRail hardware platform options and Dell Technologies has your Edge use cases covered. More on hardware platforms later. For a detailed explanation of this exciting new feature, check out the link to a VMware blog post on the topic at the end of this post.

VCF LCM Enhancements

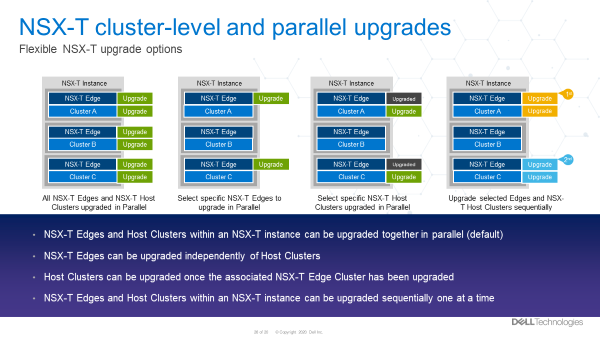

NSX-T Edge and Host Cluster-Level and Parallel Upgrades

With previous VCF on VxRail releases, NSX-T upgrades were all-encompassing, meaning that a single update required updates to all the transport hosts as well as the NSX Edge and Manager components in one operation.

With VCF 4.1, support has been added to perform staggered NSX updates to help minimize maintenance windows. Now, an NSX upgrade can consist of three distinct parts:

- Updating the edges

  - Can be one job or multiple jobs. Rerun the wizard.

  - Must be done before moving to the hosts

- Updating the transport hosts

- Updating the NSX Managers, once the hosts within the clusters have been updated

Multiple NSX edge and/or host transport clusters within the NSX-T instance can be upgraded in parallel. The administrator can choose a subset of clusters without having to choose all of them. Clusters within an NSX-T fabric can also be upgraded sequentially, one at a time.

NSX-T components can be updated in several ways:

- NSX-T Edges and Host Clusters within an NSX-T instance can be upgraded together in parallel (default)

- NSX-T Edges can be upgraded independently of NSX-T Host Clusters

- NSX-T Host Clusters can be upgraded independently of NSX-T Edges only after the Edges are upgraded first

- NSX-T Edges and Host Clusters within an NSX-T instance can be upgraded sequentially one after another.

The figure below visually depicts these options.

Figure 6

These options provide Cloud admins with a ton of flexibility so they can properly plan and execute NSX-T LCM updates within their respective maintenance windows. More flexible and simpler operations. Nice!
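The ordering rules described above can be expressed as a small validation check over a staged plan. This is a minimal sketch of those rules as stated in this post, not SDDC Manager logic: the `plan_is_valid` function and the plan representation (a list of sets, one set per stage) are hypothetical.

```python
def plan_is_valid(steps):
    """Check a staged upgrade plan against the ordering described above.

    steps is a list of sets; each set names the component types
    ("edges", "hosts", "managers") upgraded together in that stage.
    """
    def stages(kind):
        return [i for i, step in enumerate(steps) if kind in step]

    edges, hosts, managers = stages("edges"), stages("hosts"), stages("managers")

    # Hosts may run alongside edges but never in an earlier stage.
    if edges and hosts and min(hosts) < max(edges):
        return False

    # Managers come only after every edge and host stage has finished.
    last_dataplane = max(edges + hosts, default=-1)
    if managers and min(managers) <= last_dataplane:
        return False

    return True


# Edges and hosts in parallel, then managers.
print(plan_is_valid([{"edges", "hosts"}, {"managers"}]))    # True
# Fully sequential: edges, then hosts, then managers.
print(plan_is_valid([{"edges"}, {"hosts"}, {"managers"}]))  # True
# Hosts before edges violates the ordering.
print(plan_is_valid([{"hosts"}, {"edges"}]))                # False
```

Splitting the work into more stages simply adds more sets to the list, which is how a plan would be spread across several maintenance windows.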

VCF Security Enhancements

Read-Only Access Role, Local and Service Accounts

A new ‘view-only’ role has been added to VCF 4.1. For some context, let’s talk a bit now about what happens when logging into the SDDC Manager.

First, you provide a username and password. This information is sent to SDDC Manager, which then sends it to the SSO domain for verification. Once verified, SDDC Manager can see which role the account holds.

In previous versions of Cloud Foundation, the role would either be for an Administrator or it would be for an Operator.

Now, there is a third role available called ‘Viewer’. As its name suggests, this is a view-only role that has no ability to create, delete, or modify objects. Users who are assigned this role may not see certain items in the SDDC Manager UI, such as the User screen. They may also see a message saying they are unauthorized to perform certain actions.

Also new, VCF now has a local account that can be used during an SSO failure. To help understand why this is needed let’s consider this: What happens when the SSO domain is unavailable for some reason? In this case, the user would not be able to login. To address this, administrators now can configure a VCF local account called admin@local. This account will allow the performing of certain actions until the SSO domain is functional again. This VCF local account is defined in the deployment worksheet and used in the VCF bring up process. If bring up has already been completed and the local account was not configured, then a warning banner will be displayed on the SDDC Manager UI until the local account is configured.

Lastly, SDDC Manager now uses new service accounts to streamline communications between SDDC Manager and the products within Cloud Foundation. These new service accounts follow VVD guidelines for pre-defined usernames and are administered through the admin user account to improve inter-VCF communications within SDDC Manager.

VCF Data Protection Enhancements

As described in this blog, with VCF 4.1, SDDC Manager backup-recovery workflows and APIs have been improved to add capabilities such as backup management, backup scheduling, retention policy, on-demand backup, and automatic retries on failure. The improvement also includes public APIs for the third-party ecosystem and certified backup solutions from Dell PowerProtect.

VxRail Software Feature Updates

VxRail Networking Enhancements

VxRail 4 x 25Gbps pNIC redundancy

VxRail engineering continues to innovate in areas that drive more value to customers. The latest VCF on VxRail release follows through on delivering just that. New in this release, customers can use the automated VxRail First Run process to deploy VCF on VxRail nodes using 4 x 25Gbps physical port configurations to run the VxRail System vDS for system traffic such as Management, vSAN, and vMotion. The physical port configuration of the VxRail nodes would include 2 x 25Gbps NDC ports and an additional 2 x 25Gbps PCIe NIC ports.

In this 4 x 25Gbps set up, NSX-T traffic would run on the same System vDS. But what is great here (and where the flexibility comes in) is that customers can also choose to separate NSX-T traffic on its own NSX-T vDS that uplinks to separate physical PCIe NIC ports by using SDDC Manager APIs. This ability was first introduced in the last release and can also be leveraged here to expand the flexibility of VxRail host network configurations.

The figure below illustrates the option to select the base 4 x 25Gbps port configuration during VxRail First Run.

Figure 7

By allowing customers to run the VxRail System VDS across the NDC NIC ports and PCIe NIC ports, customers gain an extra layer of physical NIC redundancy and high availability. This has already been supported with 10Gbps based VxRail nodes. This release now brings the same high availability option to 25Gbps based VxRail nodes. Extra network high availability AND 25Gbps performance!? Sign me up!

VxRail Hardware Platform Updates

Recently introduced support for ruggedized D-Series VxRail hardware platforms (D560/D560F) continues to expand the available VxRail hardware platforms supported in the Dell Technologies Cloud Platform.

These ruggedized and durable platforms are designed to meet the demand for more compute, performance, storage, and more importantly, operational simplicity that deliver the full power of VxRail for workloads at the edge, in challenging environments, or for space-constrained areas.

These D-Series systems are a perfect match when paired with the latest VCF Remote Cluster features introduced in Cloud Foundation 4.1.0 to enable Cloud Foundation with Tanzu on VxRail to reach these space-constrained and challenging ROBO/Edge sites to run cloud native and traditional workloads, extending existing VCF on VxRail operations to these locations! Cool right?!

To read more about the technical details of VxRail D-Series, check out the VxRail D-Series Spec Sheet.

Well that about covers it all for this release. The innovation train continues. Until next time, feel free to check out the links below to learn more about DTCP (VCF on VxRail).

Jason Marques

Twitter - @vwhippersnapper

Additional Resources

VMware Blog Post on VCF Remote Clusters

Cloud Foundation on VxRail Release Notes

VxRail page on DellTechnologies.com

VCF on VxRail Interactive Demos