Bandwidth Guarantees for Telecom Services using SR-IOV and Containers

Mon, 12 Dec 2022 19:14:38 -0000

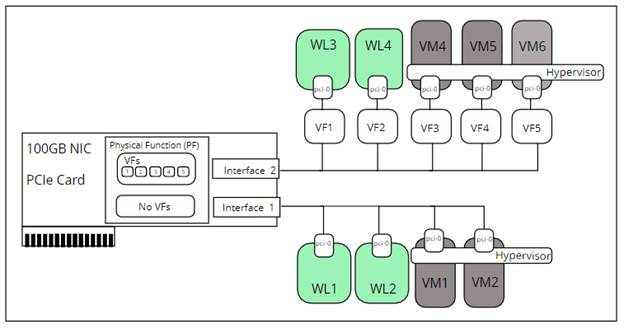

With the emergence of Container-native Virtualization (CNV), the ability to run and manage virtual machines alongside container workloads, Single Root I/O Virtualization (SR-IOV) takes on an important role in the communications industry. Most telecom services require capacity guarantees, e.g., a number of simultaneous TCP connections, concurrent voice calls, or other similar metrics. Each telecom service capacity requirement can be translated into the amount of upload/download data that must be handled and the maximum amount of time that can pass before a service is deemed non-operational. These bounds on data and time must be met end-to-end as a telecom service is delivered, and SR-IOV plays a crucial role in meeting them.

With SR-IOV available to workloads and VMs, telecom customers can divide the bandwidth provided by a physical PCIe device (the NIC) into virtual functions (VFs), or virtual NICs. Virtual NICs with dedicated bandwidth can then be assigned to individual workloads or VMs, ensuring that SLAs can be fulfilled.

In the illustration above, say we have a 100 Gbps NIC that is shared among workloads and VMs on a single hardware server. The bandwidth of a single interface is typically shared among the workloads and VMs, as shown for interface 1. If one workload or VM is extremely bandwidth-hungry, it could consume a large portion of the bandwidth, say 50%, leaving the other workloads or VMs to share the remaining 50%, which could jeopardize the SLAs under contract with the Telco's customers.

To ensure this doesn't happen, SR-IOV allows the PCIe NIC to be sliced into virtual NICs, or VFs, as shown with interface 2 above. Once the NIC interface is sliced into VFs, bandwidth can be specified per VF. For example, 30 Gbps could be specified for each of VF1 and VF2 for the workloads, while VF3–5 could split the remaining bandwidth evenly or perhaps receive only 5 Gbps each (15 Gbps in total), keeping the rest in reserve for future VMs or workloads. By specifying bandwidth at the VF level, Telco companies can guarantee bandwidth for workloads or VMs and thus meet the SLAs agreed with their customers.
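On OpenShift, the SR-IOV Network Operator is one way to express this slicing declaratively. The following is a sketch, assuming that operator is installed and assuming 30 Gbps per VF for VF1 and VF2; the interface name `ens1f0`, the resource name `nic100g`, the namespace `telco-apps`, and the network names are all hypothetical. It creates five VFs on the physical function and defines two SriovNetwork objects: one that pins each attached VF at 30 Gbps (`minTxRate` and `maxTxRate`) and one that caps each attached VF at 5 Gbps (`maxTxRate`). Rates are in Mbps, and `minTxRate` is only honored on NICs that support it, so check your adapter.

```yaml
# Sketch, assuming the OpenShift SR-IOV Network Operator; names are hypothetical.
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetworkNodePolicy
metadata:
  name: policy-nic100g
  namespace: openshift-sriov-network-operator
spec:
  resourceName: nic100g            # hypothetical resource name
  nodeSelector:
    feature.node.kubernetes.io/network-sriov.capable: "true"
  numVfs: 5                        # VF1..VF5 from the illustration
  nicSelector:
    pfNames: ["ens1f0"]            # hypothetical 100 Gbps physical function
  deviceType: netdevice
---
# VF1 and VF2: guaranteed and capped at 30 Gbps per VF (values in Mbps)
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetwork
metadata:
  name: vf-guaranteed-30g
  namespace: openshift-sriov-network-operator
spec:
  resourceName: nic100g
  networkNamespace: telco-apps     # hypothetical workload namespace
  minTxRate: 30000
  maxTxRate: 30000
---
# VF3 to VF5: capped at 5 Gbps each
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetwork
metadata:
  name: vf-capped-5g
  namespace: openshift-sriov-network-operator
spec:
  resourceName: nic100g
  networkNamespace: telco-apps
  maxTxRate: 5000
```

A Pod in `telco-apps` would then request a VF through the Multus annotation `k8s.v1.cni.cncf.io/networks: vf-guaranteed-30g`; each Pod that attaches gets its own VF with that network's rate settings.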

While this high-level description illustrates the mechanics of the two aspects, SR-IOV for workloads and SR-IOV for VMs, Dell Technologies has published a white paper, SR-IOV Enablement for Container Pods in OpenShift 4.3 Ready Stack, which provides step-by-step details for enabling this technology.

Related Blog Posts

CSI drivers 2.0 and Dell EMC Container Storage Modules GA!

Thu, 26 Jan 2023 19:18:02 -0000

The quarterly update for the Dell CSI drivers is here! Today also marks a significant milestone because we are announcing the availability of Dell EMC Container Storage Modules (CSM). In this blog, we cover the new modules first and then the new CSI driver features.

Container Storage Modules

Dell Container Storage Modules is a set of modules that aims to extend Kubernetes storage features beyond what is available in the CSI specification.

The CSM modules expose enterprise storage features directly within Kubernetes, so developers can leverage them seamlessly in their deployments.

Most of these modules are released as sidecar containers that work with the CSI driver for the Dell storage array technology you use.

CSM modules are open source and freely available from https://github.com/dell/csm.

Volume Group Snapshot

Many stateful apps can run on top of multiple volumes. For example, we can have a transactional DB like Postgres with a volume for its data and another for the redo log, or Cassandra that is distributed across nodes, each having a volume, and so on.

When you want to take a recoverable snapshot, it is vital to snapshot all of the volumes consistently, at the exact same point in time.

Dell CSI Volume Group Snapshotter solves that problem for you. With the help of a CustomResourceDefinition and an additional sidecar to the Dell CSI drivers, and by leveraging vanilla Kubernetes snapshots, you can manage the life cycle of crash-consistent snapshots. This means you can define a group of volumes for which the drivers create snapshots simultaneously, and then restore or move them in one shot!

To take a crash-consistent snapshot, you can either put labels on your PersistentVolumeClaims or be explicit and pass the list of PVCs that you want to snapshot. For example:

apiVersion: volumegroup.storage.dell.com/v1alpha2
kind: DellCsiVolumeGroupSnapshot
metadata:
  # Name must be 13 characters or less in length
  name: "vg-snaprun1"
spec:
  driverName: "csi-vxflexos.dellemc.com"
  memberReclaimPolicy: "Retain"
  volumesnapshotclass: "poweflex-snapclass"
  pvcLabel: "vgs-snap-label"
  # pvcList:
  #   - "pvcName1"
  #   - "pvcName2"

For the first release, CSI for PowerFlex supports Volume Group Snapshot.

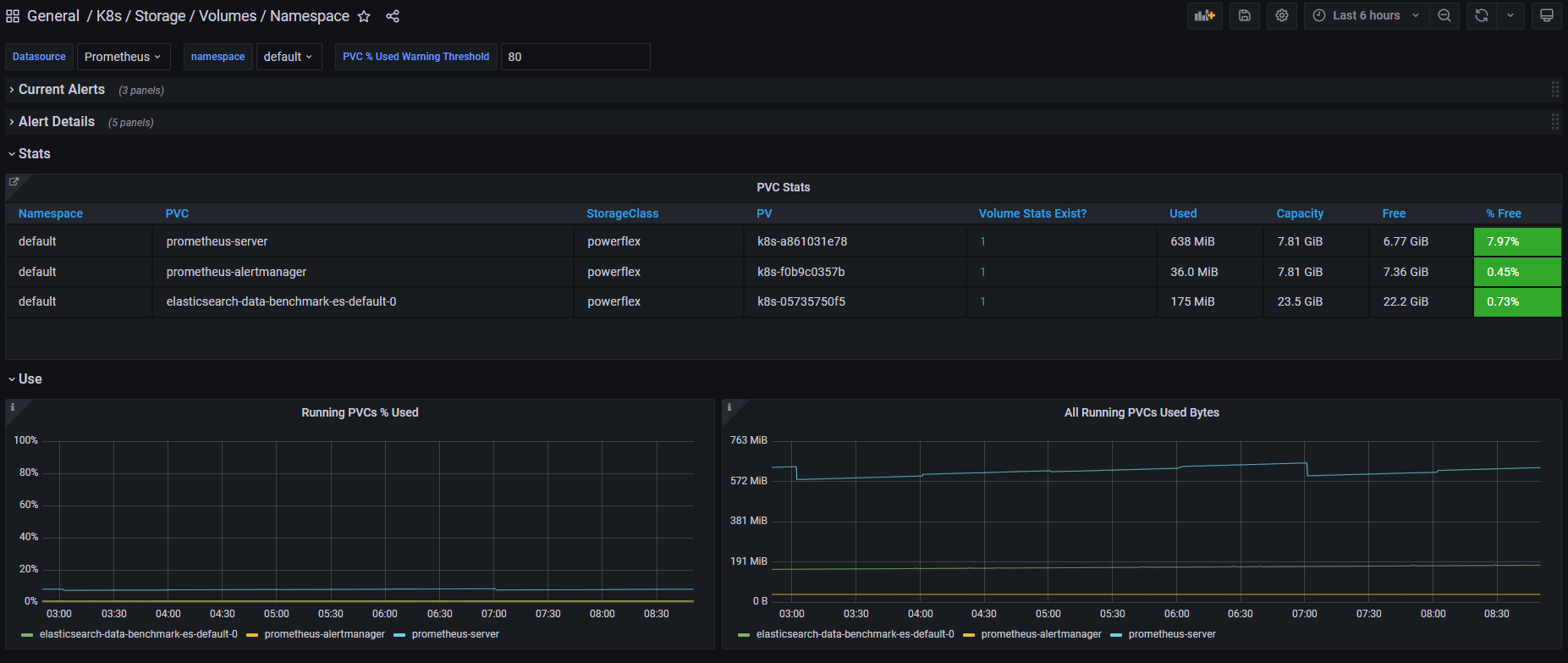

Observability

The CSM Observability module is delivered as an OpenTelemetry agent that collects array-level metrics and exposes them so that Prometheus can scrape and store them.

The integration is as easy as creating a ServiceMonitor for Prometheus. For example:

apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: otel-collector
  namespace: powerstore
spec:
  endpoints:
  - path: /metrics
    port: exporter-https
    scheme: https
    tlsConfig:
      insecureSkipVerify: true
  selector:
    matchLabels:
      app.kubernetes.io/instance: karavi-observability
      app.kubernetes.io/name: otel-collector

With the observability module, you gain visibility into the capacity of the volumes you manage with Dell CSI drivers and into their performance, in terms of bandwidth, IOPS, and response time.

Thanks to pre-canned Grafana dashboards, you can browse the history of these metrics and follow the topology from a Kubernetes PersistentVolume (PV) down to the LUN or file share that backs it on the array.

The Kubernetes admin can also collect array-level metrics to check overall capacity and performance directly from the familiar Prometheus and Grafana tools.

For the first release, Dell EMC PowerFlex and Dell EMC PowerStore support CSM Observability.

Replication

Each Dell storage array supports replication capabilities. It can be asynchronous with an associated recovery point objective, synchronous replication between sites, or even active-active.

Each replication type serves a different purpose related to the use-case or the constraint you have on your data centers.

The Dell CSM Replication module allows you to create persistent volumes with any of three replication types (synchronous, asynchronous, or metro), provided the underlying storage array supports it.

The Kubernetes architecture can be built on a stretched cluster between two sites or on two or more independent clusters. The module itself is composed of three main components:

- The Replication controller, whose role is to manage the CustomResourceDefinition that abstracts the concept of replication across the Kubernetes cluster

- The Replication sidecar for the CSI driver that will convert the Replication controller request to an actual call on the array side

- The repctl utility, to simplify managing replication objects across multiple Kubernetes clusters

The usual workflow is to create a replicated PVC the classic Kubernetes way, simply by picking the right StorageClass. You can then use repctl or edit the DellCSIReplicationGroup custom resource to launch operations like Failover, Failback, Reprotect, Suspend, Synchronize, and so on.
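As a sketch of that workflow, the manifests below pair a replication-enabled StorageClass with an ordinary PVC that uses it. The `replication.storage.dell.com/...` parameter keys reflect our reading of the CSM Replication documentation and should be treated as assumptions to verify for your driver and release; the class name, cluster ID, and claim name are hypothetical, and array-specific parameters are deliberately left out.

```yaml
# Sketch only: verify the replication parameter keys against your CSM release.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: powerstore-replicated          # hypothetical
provisioner: csi-powerstore.dellemc.com
reclaimPolicy: Delete
volumeBindingMode: Immediate
parameters:
  replication.storage.dell.com/isReplicationEnabled: "true"
  # StorageClass playing the same role on the target cluster
  replication.storage.dell.com/remoteStorageClassName: powerstore-replicated
  # Hypothetical ID of the target cluster
  replication.storage.dell.com/remoteClusterID: target-cluster
  # Array-specific settings (array IDs, RPO, remote system, ...) omitted
---
# An ordinary PVC becomes replicated simply by using that StorageClass
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: db-data                        # hypothetical
spec:
  accessModes: ["ReadWriteOnce"]
  storageClassName: powerstore-replicated
  resources:
    requests:
      storage: 8Gi
```

Nothing replication-specific appears in the PVC itself, which is the point of the design: application teams keep writing standard claims while the StorageClass carries the replication policy.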

For the first release, Dell EMC PowerMax and Dell EMC PowerStore support CSM Replication.

Authorization

With CSM Authorization we are giving back more control of storage consumption to the storage administrator.

The authorization module is an independent service, installed and owned by the storage admin.

Within that module, the storage administrator will create access control policies and storage quotas to make sure that Kubernetes consumers are not overconsuming storage or trying to access data that doesn’t belong to them.

CSM Authorization makes multi-tenant architecture real by enforcing Role-Based Access Control on storage objects coming from multiple and independent Kubernetes clusters.

The authorization module acts as a proxy between the CSI driver and the backend array. Access is granted with an access token that can be revoked at any point in time. Quotas can be changed on the fly to limit or increase storage consumption from the different tenants.

For the first release, Dell EMC PowerMax and Dell EMC PowerFlex support CSM Authorization.

Resiliency

When dealing with stateful apps, vanilla Kubernetes is pretty conservative if a node goes down.

Indeed, from the Kubernetes control plane, the failing node is only seen as not ready. That can be because the node is down, because of a network partition between the control plane and the node, or simply because the kubelet is down. In the latter two scenarios, the stateful app may still be running and writing data to disk. Therefore, Kubernetes takes no action and leaves it to the admin to trigger a Pod deletion manually if desired.

The CSM Resiliency module (sometimes named PodMon) aims to improve that behavior with the help of collected metrics from the array.

Because the driver has access to the storage backend from pretty much all other nodes, we can see the volume status (mapped or not) and its activity (whether there are IOPS or not). So when a node goes into the NotReady state and we see no IOPS on the volume, Resiliency relocates the Pod to a new node and cleans up any leftover objects.

The entire process, from the moment a node is seen as down to the rescheduling of the Pod, takes only seconds.

To protect an app with the Resiliency module, you only have to add the podmon.dellemc.com/driver label to its Pods.
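A minimal sketch of that opt-in is a single-replica StatefulSet whose Pod template carries the label. The label value is assumed here to be the driver name (`csi-vxflexos` for PowerFlex); the image, StorageClass, and object names are hypothetical.

```yaml
# Sketch: a StatefulSet opted into CSM Resiliency; names and image are hypothetical.
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: demo-db
spec:
  serviceName: demo-db
  replicas: 1
  selector:
    matchLabels:
      app: demo-db
  template:
    metadata:
      labels:
        app: demo-db
        # Opt-in label for CSM Resiliency (podmon);
        # value assumed to be the driver name, csi-vxflexos for PowerFlex
        podmon.dellemc.com/driver: csi-vxflexos
    spec:
      containers:
      - name: db
        image: registry.example.com/demo-db:1.0
        volumeMounts:
        - name: data
          mountPath: /data
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      accessModes: ["ReadWriteOnce"]
      storageClassName: powerflex-sc   # hypothetical PowerFlex StorageClass
      resources:
        requests:
          storage: 8Gi
```

Putting the label in the Pod template, rather than on a Pod created by hand, means every Pod the StatefulSet recreates after a relocation stays protected.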

For more details on the module’s design, check the CSM Resiliency documentation.

For the first release, Dell EMC PowerFlex and Dell EMC Unity support CSM Resiliency.

Installer

Each module above is released either as an independent Helm chart or as an option within the CSI drivers.

For more complex deployments, which may involve multiple Kubernetes clusters or a mix of modules, you can use the CSM Installer.

The CSM Installer, built on top of Carvel, gives users a single command line to create their CSM/CSI applications and to manage them from outside the Kubernetes cluster.

For the first release, all drivers and modules support the CSM Installer.

New CSI features

Across portfolio

For each driver, this release provides:

- Support for OpenShift 4.8

- Support for Kubernetes 1.22

- Support for Rancher Kubernetes Engine 2

- Normalized configurations between drivers

- Dynamic Logging Configuration

- New CSM installer

VMware Tanzu Kubernetes Grid

VMware Tanzu offers storage management by means of its CNS-CSI driver, but it doesn’t support ReadWriteMany access mode.

If your workload needs concurrent access to the file system, you can now rely on the CSI drivers for PowerStore, PowerScale, and Unity through the NFS protocol. All three platforms are officially supported and qualified on Tanzu.
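To illustrate what ReadWriteMany buys you, here is a sketch of a shared claim mounted by three replicas at once. It only uses standard Kubernetes objects; the StorageClass name `powerstore-nfs` stands for a hypothetical NFS-backed class served by one of the Dell drivers, and the web server image is just an example.

```yaml
# Sketch: one NFS-backed volume shared by several Pods; class name is hypothetical.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: shared-content
spec:
  accessModes: ["ReadWriteMany"]   # concurrent read-write access from many nodes
  storageClassName: powerstore-nfs
  resources:
    requests:
      storage: 10Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 3                      # all three Pods mount the same volume
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: web
        image: nginx:1.25
        volumeMounts:
        - name: content
          mountPath: /usr/share/nginx/html
      volumes:
      - name: content
        persistentVolumeClaim:
          claimName: shared-content
```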

NFS behind NAT

The CSI drivers for PowerStore, PowerScale, and Unity have all been tested with NFS and work when the Kubernetes cluster sits behind a private network (NAT).

PowerScale

By default, the CSI driver creates volume directories with 777 POSIX permissions.

Now, with the isiVolumePathPermissions parameter, you can use ACLs or any other POSIX mode.

The isiVolumePathPermissions parameter can be configured in the ConfigMap that holds the PowerScale settings or at the StorageClass level. The accepted values are private_read, private, public_read, public_read_write, and public for ACLs, or any POSIX mode in octal notation.
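At the StorageClass level, the setting is a single parameter. The sketch below restricts new volume directories to mode 0750; the class name is hypothetical, and the other PowerScale parameters are left out so that they fall back to the driver configuration.

```yaml
# Sketch: per-StorageClass permissions for PowerScale volumes; class name is hypothetical.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: isilon-restricted
provisioner: csi-isilon.dellemc.com
reclaimPolicy: Delete
volumeBindingMode: Immediate
parameters:
  # A built-in ACL value (private_read, private, public_read,
  # public_read_write, public) or an octal POSIX mode such as "0750"
  isiVolumePathPermissions: "0750"
```

Setting it per StorageClass lets you offer, for example, a restricted class and a shared class side by side, while the ConfigMap value acts as the cluster-wide default.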

Useful links

For more details you can:

- Watch CSM demos on our VP YouTube channel: https://www.youtube.com/user/itzikreich/

- Ask for help from the Dell container community website

- Read the FAQs

- Subscribe to GitHub notifications to be informed of the latest releases on https://github.com/dell/csm

- Chat with us on Slack

Author: Florian Coulombel

Looking Ahead: Dell Container Storage Modules 1.2

Thu, 26 Jan 2023 19:15:43 -0000

The quarterly update for Dell CSI Drivers & Dell Container Storage Modules (CSM) is here! Here’s what we’re planning.

CSM Features

New CSM Operator!

Dell Container Storage Modules (CSM) add data services and features that are not in the scope of the CSI specification today. The new CSM Operator simplifies the deployment of CSMs. With an ever-growing ecosystem and added features, deploying a driver and its affiliated modules needs careful study before you begin.

The new CSM Operator:

- Serves as a one-stop shop for deploying all Dell CSI drivers and Container Storage Modules

- Simplifies the install and upgrade operations

- Leverages the Operator framework to give a clear status of the deployment of the resources

- Is certified by Red Hat OpenShift

In the short to medium term, the CSM Operator will deprecate the experimental CSM Installer.

Replication support with PowerScale

For disaster recovery protection, PowerScale implements data replication between appliances by means of the SyncIQ feature. SyncIQ replicates the data between two sites, where one is read-write while the other is read-only, similar to other Dell storage backends with async or sync replication.

The role of the CSM replication module and underlying CSI driver is to provision the volume within Kubernetes clusters and prepare the export configurations, quotas, and so on.

CSM Replication for PowerScale has been designed and implemented in such a way that it won’t collide with your existing Superna Eyeglass DR utility.

A live-action demo will be posted in the coming weeks on our VP YouTube channel: https://www.youtube.com/user/itzikreich/.

CSI features

Across the portfolio

In this release, each CSI driver:

- Supports OpenShift 4.9

- Supports Kubernetes 1.23

- Supports the CSI Spec 1.5

- Updates to the latest UBI-minimal image

- Supports fsGroupPolicy

fsGroupPolicy support

Kubernetes v1.19 introduced fsGroupPolicy to let a CSI driver control whether the fsGroup setting from a Pod's securityContext is applied to its volumes.

There are three possible options:

- None: the fsGroup directive from the securityContext is ignored.

- File: the fsGroup directive is applied to the volume. This is the default setting for NAS systems such as PowerScale or Unity-File.

- ReadWriteOnceWithFSType: the fsGroup directive is applied to the volume only if the volume has an fsType defined and is ReadWriteOnce. This is the default setting for block systems such as PowerMax and PowerStore-Block.

In all cases, Dell CSI drivers let the kubelet perform the ownership change operations rather than doing them at the driver level.
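Both halves of the mechanism are standard Kubernetes objects: fsGroupPolicy lives on the CSIDriver object and fsGroup on the Pod securityContext. The sketch below shows them together for the PowerScale driver name; in practice the CSIDriver object is created by the driver installer, only the field of interest is shown, and the Pod and claim names are hypothetical.

```yaml
# Sketch: fsGroupPolicy on the CSIDriver object, fsGroup on a Pod.
# The CSIDriver object is normally rendered by the driver installer.
apiVersion: storage.k8s.io/v1
kind: CSIDriver
metadata:
  name: csi-isilon.dellemc.com
spec:
  fsGroupPolicy: File              # apply fsGroup to this driver's volumes
---
apiVersion: v1
kind: Pod
metadata:
  name: fsgroup-demo               # hypothetical
spec:
  securityContext:
    runAsUser: 1000
    fsGroup: 2000                  # kubelet changes group ownership of the volume to 2000
  containers:
  - name: app
    image: busybox:1.36
    command: ["sh", "-c", "ls -ld /data && sleep 3600"]
    volumeMounts:
    - name: data
      mountPath: /data
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: demo-claim        # hypothetical PVC on PowerScale
```

With `File`, the directory listing printed by the container should show group 2000 on `/data`; with `None`, the volume keeps whatever ownership the array exported.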

Standalone Helm install

Drivers for PowerFlex and Unity can now be installed with the help of the install scripts we provide under the dell-csi-installer directory.

A standalone Helm chart makes it easy to integrate the driver installation with Continuous Deployment agents such as Flux or Argo CD.

Note: To ensure that you install the driver on a supported Kubernetes version, the Helm charts take advantage of the kubeVersion field. Some Kubernetes distributions add suffixes to the version reported by kubectl version (such as v1.21.3-mirantis-1 and v1.20.7-eks-1-20-7), which require editing that field manually.
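The kubeVersion constraint follows Helm's semantic-versioning rules, under which a version carrying a suffix such as `-mirantis-1` counts as a pre-release and is rejected by a plain range. A sketch of the manual edit, using a hypothetical chart name and an illustrative version range, is to append `-0` to the bounds so that suffixed versions match.

```yaml
# Chart.yaml sketch; chart name and version range are illustrative only.
apiVersion: v2
name: example-csi-driver
version: 1.0.0
# A plain ">= 1.21.0 < 1.24.0" rejects versions like v1.21.3-mirantis-1;
# the "-0" suffixes make the range accept such pre-release-style versions.
kubeVersion: ">= 1.21.0-0 < 1.24.0-0"
```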

Volume Health Monitoring support

Drivers for PowerFlex and Unity implement Volume Health Monitoring.

This feature is currently in alpha in Kubernetes (as of Q1 2022) and is disabled in a default installation.

Once enabled, the drivers expose standard storage metrics, such as capacity usage and inode usage, through the kubelet /metrics endpoint. The metrics flow natively into popular dashboards, such as the ones built into OpenShift Monitoring.
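Enabling the alpha feature involves both the cluster and the driver. On a vanilla Kubernetes node whose kubelet is configured through a KubeletConfiguration file, the cluster side is a sketch like the one below, turning on the `CSIVolumeHealth` feature gate. The driver side (the health monitor settings in the Dell Helm values) is not shown, and OpenShift manages feature gates through its own mechanism rather than this file.

```yaml
# Sketch: kubelet-side feature gate for alpha CSI volume health.
# How kubelet configuration is applied depends on your distribution.
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
featureGates:
  CSIVolumeHealth: true
```

Per-volume series then show up in Prometheus under names such as `kubelet_volume_stats_capacity_bytes`, `kubelet_volume_stats_used_bytes`, and `kubelet_volume_stats_inodes_used`.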

Pave the way for full open source!

All Dell drivers and dependencies, like gopowerstore, gobrick, and more, are now on GitHub and will be fully open-sourced. The umbrella project is and remains https://github.com/dell/csm, from which you can open tickets and see the roadmap.

Google Anthos 1.9

The Dell partnership with Google continues, and the latest CSI drivers for PowerScale and PowerStore support Anthos v1.9.

NFSv4 POSIX and ACL support

Both CSI PowerScale and CSI PowerStore now allow setting the default permissions for newly created volumes. To do this, you can use POSIX octal notation or ACLs.

- In PowerScale, you can use plain ACLs, built-in values such as private_read, private, public_read, public_read_write, and public, or custom ones.

- In PowerStore, you can use custom ones such as A::OWNER@:RWX, A::GROUP@:RWX, and A::OWNER@:rxtncy.
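For PowerStore, a sketch of an NFS StorageClass with default permissions might look like the following. The parameter keys `csi.storage.k8s.io/fstype` and `nfsAcls` are assumptions based on the driver's sample StorageClasses and should be verified for your release; the class name is hypothetical.

```yaml
# Sketch: default permissions for new PowerStore NFS volumes.
# Class name is hypothetical; verify the fstype and nfsAcls keys for your release.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: powerstore-nfs-acl
provisioner: csi-powerstore.dellemc.com
reclaimPolicy: Delete
volumeBindingMode: Immediate
parameters:
  csi.storage.k8s.io/fstype: "nfs"
  # POSIX octal mode, or NFSv4 ACEs such as A::OWNER@:RWX
  nfsAcls: "0755"
```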

Useful links

For more details you can:

- Watch these great CSM demos on our VP YouTube channel: https://www.youtube.com/user/itzikreich/

- Read the FAQs

- Subscribe to GitHub notifications to be informed of the latest releases on https://github.com/dell/csm

- Ask for help or chat with us on Slack

Author: Florian Coulombel