Greenplum architecture

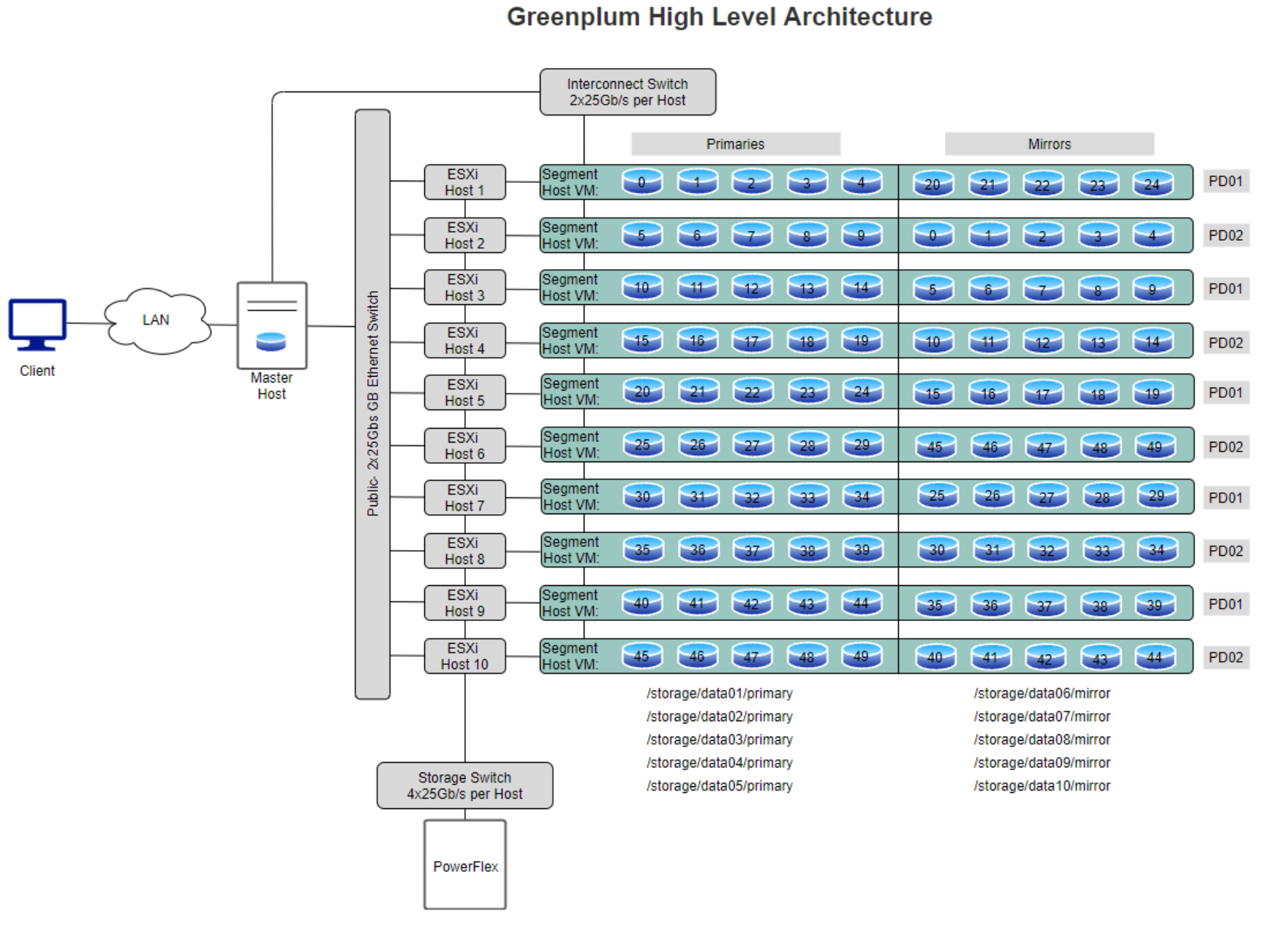

After PowerFlex is installed, the Greenplum database is configured by creating VMs on the compute nodes. As shown in Figure 4, each ESXi host holds a single VM with five primary and five mirror segments. The Greenplum master is configured on one of the ESXi hosts, with no master mirror configured. The configuration details of the master and segments are listed in the node configuration section of the Appendix. The Greenplum interconnect uses a standard Ethernet switching fabric, in this case a 2 x 25 Gb switch. Half of the primary segments use protection domain 01 and the other half use protection domain 02. Their corresponding mirrors use the opposite protection domain. The mirrors therefore act as an additional level of data protection if an entire PowerFlex protection domain goes down.

Figure 4. Greenplum logical architecture
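
The placement rule described above can be illustrated with a short Python model. This is a minimal sketch, not the configuration used in the paper: the host count, the names `PD01` and `PD02`, the alternating assignment of primaries to protection domains, and the ring-style placement of each mirror on the next host are all assumptions made for illustration. Only the figures of five primaries and five mirrors per VM and the "mirror uses the opposite protection domain" rule come from the text.

```python
from collections import Counter

# Hypothetical labels for the two PowerFlex protection domains.
PROTECTION_DOMAINS = ("PD01", "PD02")
SEGMENTS_PER_HOST = 5  # five primaries (and five mirrors) per VM, per the text


def build_layout(num_hosts):
    """Return a list of dicts describing every primary and mirror segment.

    Assumptions (not from the paper): primaries alternate between the two
    protection domains by content ID, and each mirror is hosted on the
    next VM in a ring.
    """
    layout = []
    for content_id in range(num_hosts * SEGMENTS_PER_HOST):
        primary_host = content_id // SEGMENTS_PER_HOST
        mirror_host = (primary_host + 1) % num_hosts
        primary_pd = PROTECTION_DOMAINS[content_id % 2]
        # The mirror always uses the opposite protection domain.
        mirror_pd = PROTECTION_DOMAINS[(content_id + 1) % 2]
        layout.append({"content": content_id, "role": "primary",
                       "host": primary_host, "pd": primary_pd})
        layout.append({"content": content_id, "role": "mirror",
                       "host": mirror_host, "pd": mirror_pd})
    return layout


def survives_domain_loss(layout, failed_pd):
    """True if every content ID keeps at least one copy outside failed_pd."""
    all_ids = {seg["content"] for seg in layout}
    surviving = {seg["content"] for seg in layout if seg["pd"] != failed_pd}
    return surviving == all_ids


if __name__ == "__main__":
    layout = build_layout(num_hosts=4)  # host count is a hypothetical value
    primaries = Counter(s["pd"] for s in layout if s["role"] == "primary")
    print("Primaries per protection domain:", dict(primaries))
    for pd in PROTECTION_DOMAINS:
        print(f"Data available if {pd} fails:", survives_domain_loss(layout, pd))
```

Because each content ID has its primary and mirror in different protection domains, losing either domain still leaves one copy of every segment reachable, which is the additional level of protection the paragraph describes.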