Performance of Dell Servers Running with NVIDIA Accelerators on MLPerf™ Training v2.0

GPUs

NVIDIA A100 GPUs

The NVIDIA A100 Tensor Core GPU delivers acceleration at every scale to power the world's highest-performing elastic data centers for AI, data analytics, and high-performance computing (HPC) applications. As the engine of the NVIDIA data center platform, A100 provides up to 20X higher performance than the prior NVIDIA Volta™ generation. A100 can scale up efficiently or be partitioned into as many as seven isolated GPU instances with Multi-Instance GPU (MIG). This gives elastic data centers a unified platform that can adjust dynamically to shifting workload demands.

The following figure shows the NVIDIA A100 PCIe accelerator:

Figure 7. NVIDIA A100 PCIe accelerator

The following figure shows the NVIDIA A100 SXM accelerator:

Figure 8. NVIDIA A100 SXM accelerator

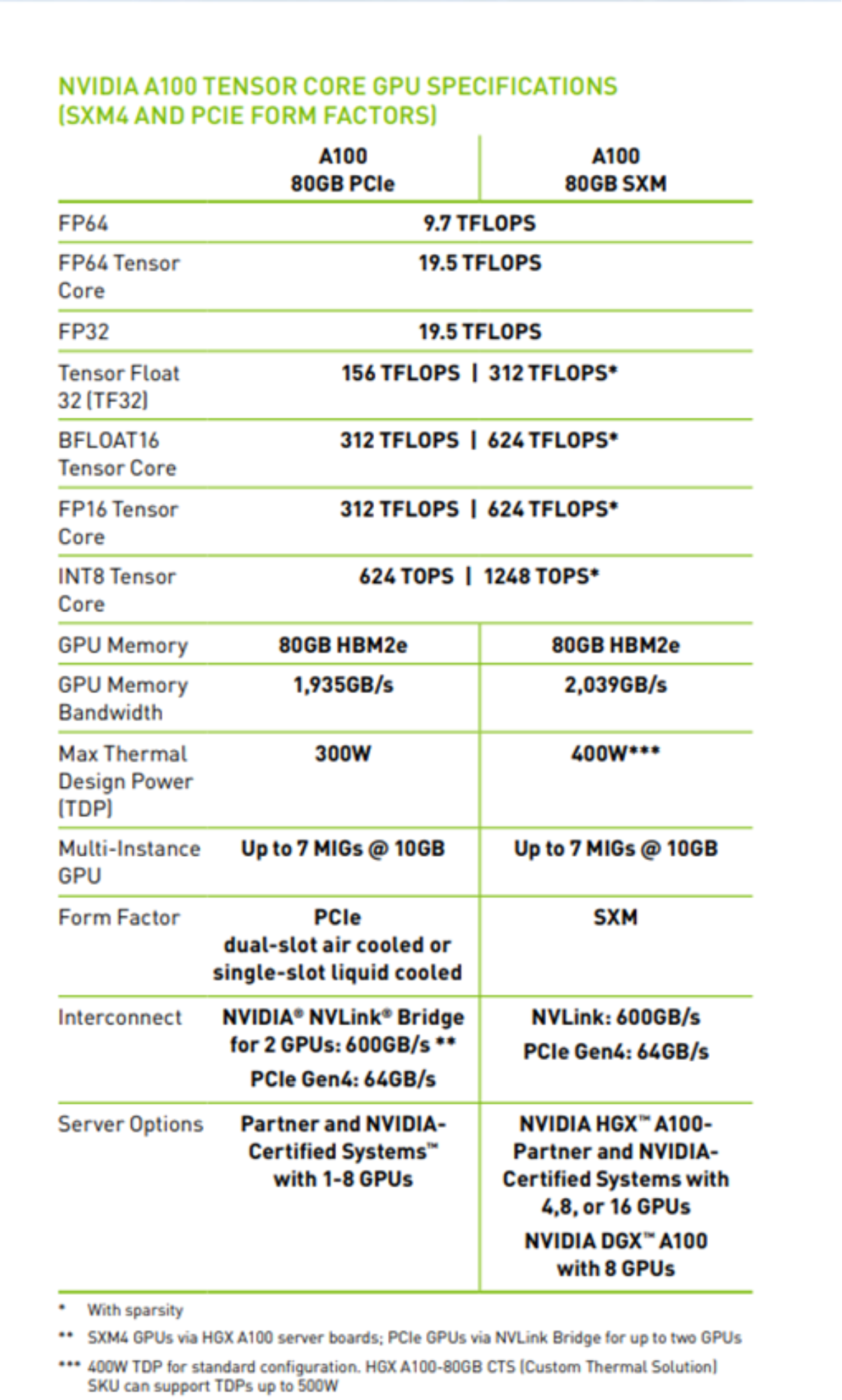

The following figure lists the configuration details of the NVIDIA A100-PCIe-80GB and A100-SXM4-80GB accelerators:

Figure 9. NVIDIA A100-PCIe-80GB and A100-SXM4-80GB accelerator configurations

NVIDIA A30 GPU

The NVIDIA A30 Tensor Core GPU is the most versatile mainstream compute GPU for AI inference and enterprise workloads. Built on NVIDIA Ampere architecture Tensor Core technology, it supports a broad range of math precisions, so a single accelerator can speed up a wide variety of workloads.

The following figure shows the NVIDIA A30 GPU:

Figure 10. NVIDIA A30 GPU

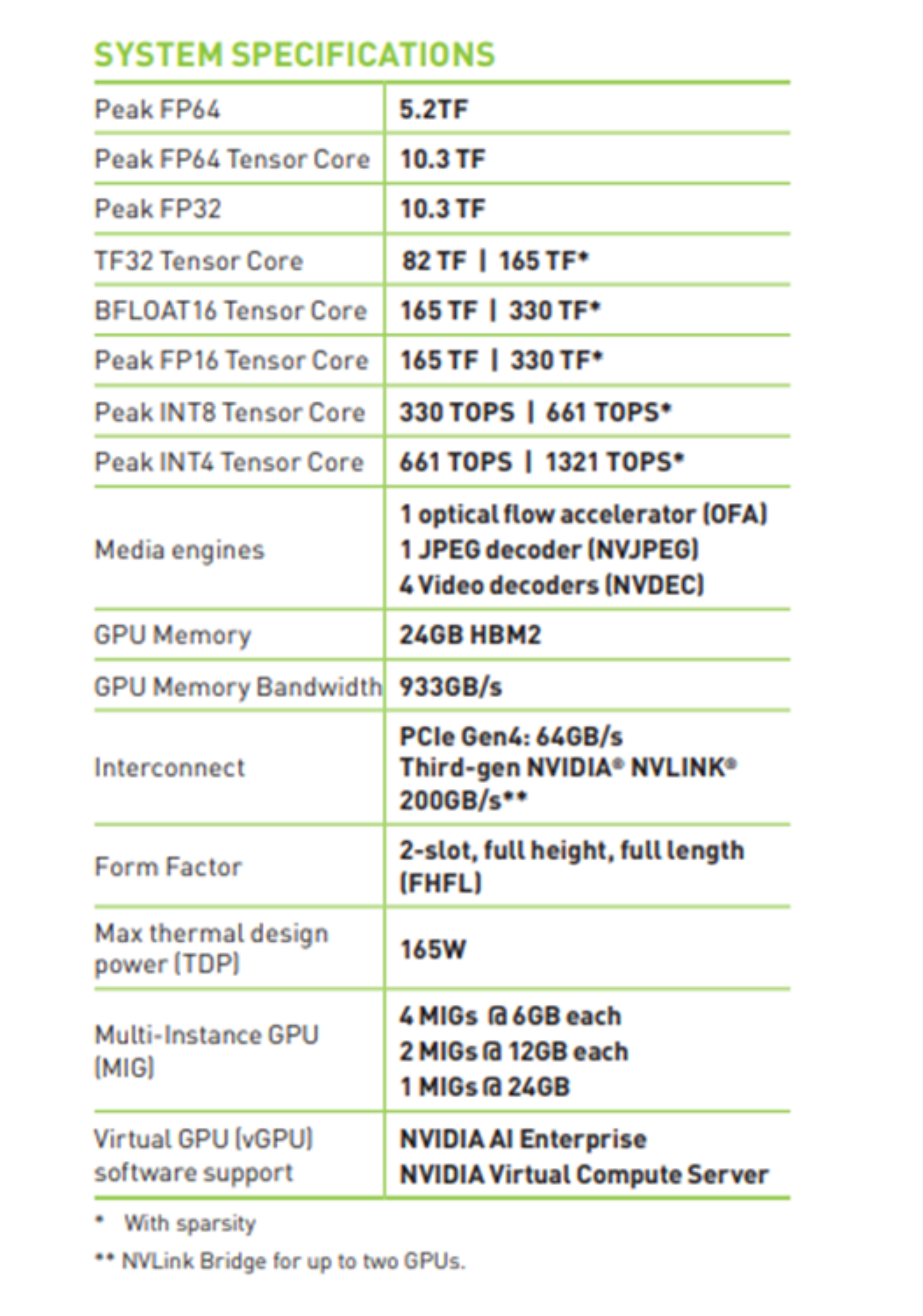

The following figure lists the configuration details of the NVIDIA A30 accelerator:

Figure 11. NVIDIA A30 accelerator configuration