Validated hardware configuration options

Overview

For validation test work in our laboratories, we used various server configurations for OpenShift Container Platform 4.8. Dell Technologies recommends selecting server configurations that are known to provide a satisfactory deployment experience and to meet or exceed Day-2 operating experience expectations. The following sections of this chapter provide guidelines for AMD microprocessor selection, memory configuration, local (on-server) disk storage, and network configuration.

Selecting the server processors

The AMD EPYC™ processor family provides performance, advanced reliability, and hardware-enhanced security for demanding compute, network, and storage workloads.

AMD EPYC™ processors are recommended for PowerEdge R6525 and R7525 servers.

While many sites prefer to use a single-server configuration for all node types, that option is not always cost-effective or practical.

When selecting a processor, consider the following criteria:

- Processor core count: The processor core count must be sufficient to ensure satisfactory performance of the workload operations and base services that are running on each node.

- Thermal design power (TDP): The TDP of the CPU must not exceed the amount of heat that the server's heat sinks and cooling airflow can remove.

- Ability to dissipate heat: During validation work with high-core-count and high-TDP processors, the thermal delta (air discharge temperature minus air intake temperature) across a server was recorded at 65°F (18°C). Excessive air discharge (egress) temperature from the server can lead to a premature server-component or system failure.

- Compute node configurations: Compute nodes in your OpenShift Container Platform cluster can use many different configurations. Compute nodes can use Intel® or AMD-based CPU platforms. The choice of processor architecture and per-node core count can significantly affect the acquisition and operating cost of the cluster that is required to run your application workload.

When ordering and configuring your PowerEdge servers, see the corresponding guide:

For CPU information, see AMD EPYC™ Processors.

Per-node memory configuration

The Dell Technologies engineering team designated 192 GB, 384 GB, or 768 GB RAM as the best choices of memory configuration based on memory usage, DIMM capacity at current cost, and likely obsolescence over a five-year server life cycle. We chose a mid-range memory configuration of 384 GB RAM so that, for each CPU, the DIMM slots are populated in multiples of three banks, which ensures maximum memory-access cycle speed. You can modify the memory configuration to meet your budgetary constraints and operating needs.

Consult architectural guidance for OpenShift Container Platform and consider your own observations from running your workloads on OpenShift Container Platform 4.8.

Disk drive capacities

The performance of disk drives significantly limits the performance of many aspects of OpenShift Container Platform cluster deployment and operation. We validated the deployment and operation of OpenShift Container Platform using magnetic storage drives (spinners), SATA SSD drives, SAS SSD drives, and NVMe SSD drives.

We selected an all-NVMe SSD configuration after comparing cost per GB of capacity against observed performance criteria such as cluster deployment time and application deployment characteristics and performance. While there are no universal guidelines, users gain insight over time into the capacities that best enable them to meet their requirements. Optionally, you can deploy the cluster with only hard disk drives. In tests, this configuration has been shown to have few adverse performance consequences.

Network controllers and switches

When selecting the switches to include in the OpenShift Container Platform cluster infrastructure, consider the overall balance of I/O pathways in server nodes, the network switches, and the NICs for your cluster. When you choose to include high-I/O bandwidth drives as part of your platform, consider your choice of network switches and NICs so that sufficient network I/O is available to support high-speed, low-latency drives:

- Hard drives: These drives have lower throughput per drive. You can use 10 GbE for this configuration.

- SATA/SAS SSD drives: These drives have high I/O capability. SATA SSD drives operate at approximately four times the I/O level of a spinning hard drive. SAS SSDs operate at up to 10 times the I/O level of a spinning hard drive. With SSD drives, configure your servers with 25 GbE.

- NVMe SSD drives: These drives have high I/O capability, up to three times the I/O rate of SAS SSDs. We populated each node with 4 x 25 GbE NICs, or 2 x 100 GbE NICs, to provide optimal I/O bandwidth.
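The relative I/O figures in the list above compound, which is easy to miss when reading them one bullet at a time. The following is a minimal sketch of that arithmetic, assuming a spinning hard drive as the 1x baseline. The function name and dictionary keys are illustrative and do not come from the guide.

```python
# Relative I/O levels quoted in the list above, with a hard drive as the baseline.
HDD_BASELINE = 1.0

def relative_io(hdd=HDD_BASELINE):
    """Return each drive type's I/O level as a multiple of a spinning hard drive."""
    sata_ssd = 4 * hdd      # "approximately four times" a spinning hard drive
    sas_ssd = 10 * hdd      # "up to 10 times" a spinning hard drive
    nvme_ssd = 3 * sas_ssd  # "up to three times the I/O rate of SAS SSDs"
    return {"hdd": hdd, "sata_ssd": sata_ssd, "sas_ssd": sas_ssd, "nvme_ssd": nvme_ssd}

for drive, factor in relative_io().items():
    print(f"{drive}: up to {factor:g}x a hard drive")
```

Taken together, the quoted ratios put an NVMe SSD at up to 30 times the I/O level of a hard drive, which is why the NIC recommendation grows with the drive type.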

The following table provides information about selecting NICs to ensure adequate I/O bandwidth and to take advantage of available disk I/O bandwidth:

Table 6. NIC and storage selection to optimize I/O bandwidth

| NIC selection | Compute node storage device type |
| --- | --- |
| 2 x 25 GbE | Spinning magnetic media (hard drive) |
| 2 x 25 GbE or 4 x 25 GbE | SATA or SAS SSD drives |
| 4 x 25 GbE or 2 x 100 GbE | NVMe SSD drives |
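Table 6 can also be expressed as a small lookup, which makes the aggregate bandwidth of each NIC option explicit. This is a sketch only: the dictionary keys and function name are hypothetical, and the aggregate figure is simply the port count multiplied by the port speed, not a measured throughput.

```python
# Table 6 as data: storage type -> validated NIC options as (port_count, speed_in_gbe).
NIC_OPTIONS = {
    "hdd": [(2, 25)],
    "sata_sas_ssd": [(2, 25), (4, 25)],
    "nvme_ssd": [(4, 25), (2, 100)],
}

def nic_options(storage_type):
    """Return (port_count, speed_gbe, aggregate_gbe) for each option in Table 6."""
    return [(ports, speed, ports * speed) for ports, speed in NIC_OPTIONS[storage_type]]

for ports, speed, total in nic_options("nvme_ssd"):
    print(f"{ports} x {speed} GbE = {total} Gb/s nominal aggregate")
```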

True network HA fail-safe design demands that each NIC is duplicated, permitting a pair of ports to be split across two physically separated switches. A pair of PowerSwitch S5248F-ON switches provides 96 x 25 GbE ports, enough for approximately 20 servers. This switch is cost-effective for a compact cluster. While you could add another pair of S5248F-ON switches to scale the cluster to a full rack, consider using PowerSwitch S5232F-ON switches for a larger cluster.

The PowerSwitch S5232F-ON provides 32 x 100 GbE ports. When used with four-way QSFP28-to-SFP28 breakout cables, a pair of these switches provides up to 256 x 25 GbE endpoints, more than enough for a rackful of servers in the cluster before more complex network topologies are required.
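The port counts above follow from simple arithmetic, sketched below. The per-switch figure of 48 x 25 GbE ports for the S5248F-ON is inferred from the 96-port total for a pair. The `reserved_ports` parameter is a hypothetical allowance for uplinks or other non-server connections; the guide does not state how many ports are set aside.

```python
def endpoints_per_pair(ports_per_switch, breakout=1):
    """Server-facing endpoints offered by a pair of identical switches."""
    return 2 * ports_per_switch * breakout

def max_servers(total_endpoints, nic_ports_per_server, reserved_ports=0):
    """Upper bound on servers, given NIC ports per server and any reserved ports."""
    return (total_endpoints - reserved_ports) // nic_ports_per_server

s5248_pair = endpoints_per_pair(48)               # 96 x 25 GbE
s5232_pair = endpoints_per_pair(32, breakout=4)   # 256 x 25 GbE with four-way breakout

print(s5248_pair, max_servers(s5248_pair, 4))
print(s5232_pair, max_servers(s5232_pair, 4))
```

With 4 x 25 GbE NIC ports per server, 96 ports give an upper bound of 24 servers. The figure of approximately 20 servers in the text is lower, which would be consistent with some ports being kept for other uses, although that reading is an assumption.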

Low latency in an NFV environment

NFV-centric data centers require low latency in all aspects of container ecosystem design for application deployment. This requirement means that you must give attention to selecting low-latency components throughout the OpenShift cluster. We strongly recommend using only NVMe drives, NFV-centric versions of AMD CPUs, and, at a minimum, the PowerSwitch S5232F-ON switch. For specific guidance, consult the Dell Technologies Service Provider Support team.

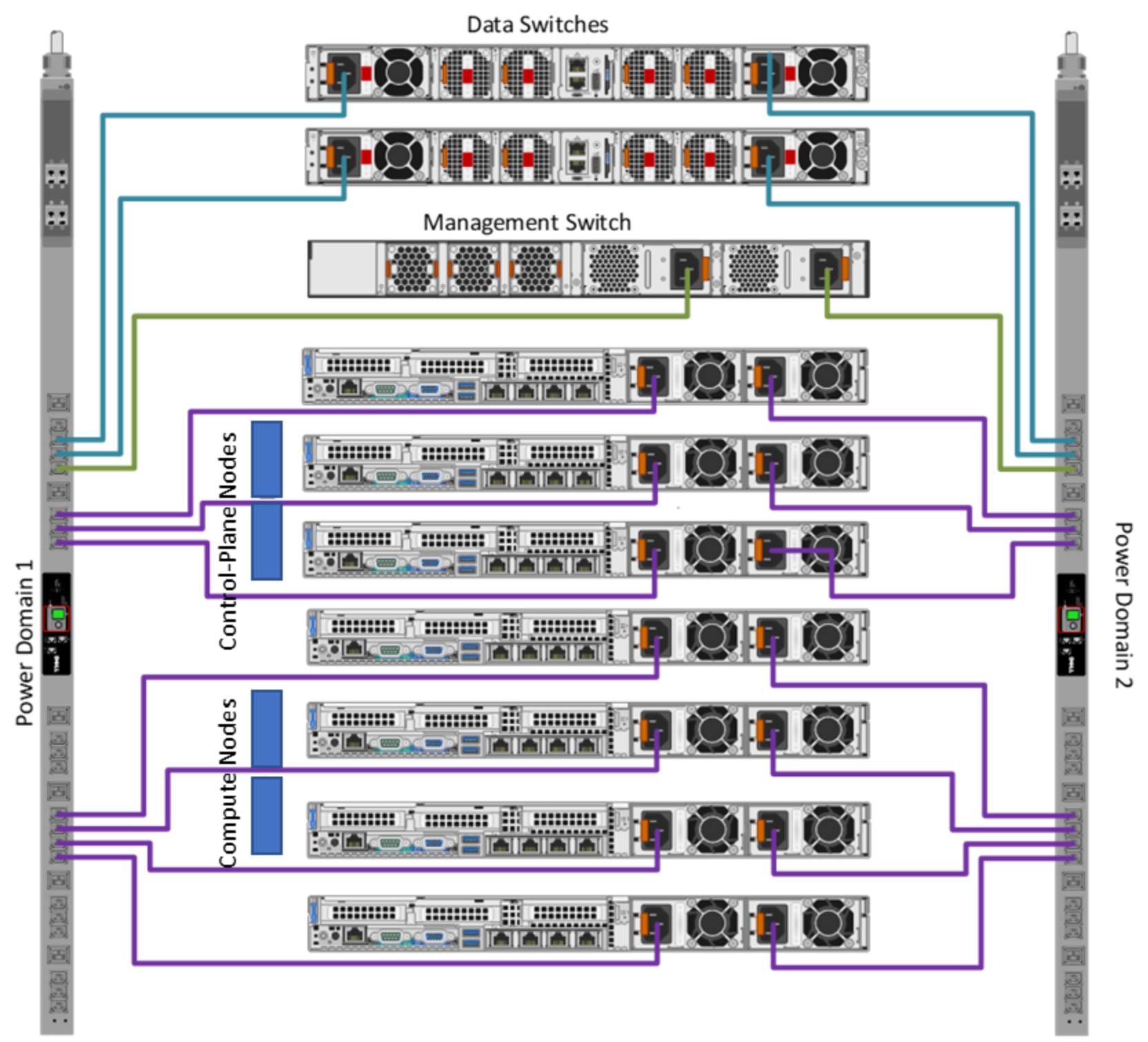

Power configuration

Dell Technologies strongly recommends that all servers are equipped with redundant power supplies and that power cabling provides redundant power to the servers. Configure each rack with pairs of power distribution units (PDUs). For consistency, connect all right-most power supply units (PSUs) to a right-side PDU and all left-most PSUs to a left-side PDU. Use as many PDUs as you need, in pairs. Each PDU must have an independent connection to the data center power bus.

The following figure shows an example of the power configuration that is designed to ensure a redundant power supply for each cluster device:

Figure 11. PSU to PDU power template
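The cabling rule in this section (left-most PSUs to a left-side PDU, right-most PSUs to a right-side PDU, and both sides present on every server) lends itself to a simple consistency check. The following is a minimal sketch under the assumption that a cabling plan is available as a list of records. The `PsuLink` type, the field names, and the server and PDU names are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class PsuLink:
    server: str    # hypothetical server name
    psu_side: str  # "left" or "right": physical position of the PSU
    pdu: str       # name of the PDU that this PSU is cabled to

def power_problems(links, pdu_sides):
    """Return a list of cabling problems; an empty list means the plan is consistent."""
    problems = []
    sides_by_server = defaultdict(set)
    for link in links:
        sides_by_server[link.server].add(link.psu_side)
        # A PSU must be cabled to a PDU on the same side of the rack.
        if pdu_sides.get(link.pdu) != link.psu_side:
            problems.append(f"{link.server}: {link.psu_side} PSU is cabled to {link.pdu}")
    for server, sides in sorted(sides_by_server.items()):
        # Every server needs both a left and a right feed for redundancy.
        if sides != {"left", "right"}:
            problems.append(f"{server}: missing a redundant PSU feed")
    return problems

pdus = {"pdu-a": "left", "pdu-b": "right"}
plan = [
    PsuLink("node1", "left", "pdu-a"),
    PsuLink("node1", "right", "pdu-b"),
    PsuLink("node2", "left", "pdu-a"),
]
print(power_problems(plan, pdus))
```

The check does not verify that each PDU has an independent connection to the data center power bus; that part of the recommendation has to be confirmed on site.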