
Server considerations


Partition alignment

Although it is not required on Linux, Oracle recommends creating one partition on each ASM device. Oracle Linux (OL) and Red Hat Enterprise Linux (RHEL) release 7 and later create partitions with a 1 MB offset by default. However, earlier releases of OL and RHEL defaulted to a 63-sector partition offset, or 63 x 512 bytes = 31.5 KB.

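Because PowerMax uses a 128 KB track size, both a 0 offset (when no partition is created) and a 1 MB offset are perfectly aligned: 1 MB is exactly eight 128 KB tracks. A 31.5 KB offset, by contrast, is not a multiple of 128 KB.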

If you create partitions for ASM devices on an older Linux release that defaults to a 31.5 KB offset, we strongly recommend aligning the partition offset to 1 MB.
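The alignment rule above is simple arithmetic: a partition is track-aligned when its start offset in bytes is an exact multiple of 131072 (128 KB). The following minimal shell sketch, assuming 512-byte sectors as in the examples in this section, checks a start sector against the PowerMax track size. The function name track_aligned is hypothetical.

```shell
#!/bin/sh
# Minimal sketch: is a partition start (in 512-byte sectors) aligned to the
# 128 KB PowerMax track? 128 KB = 131072 bytes = 256 sectors of 512 bytes.
SECTOR_BYTES=512
TRACK_BYTES=131072

# Returns 0 (true) when the start offset is a whole number of tracks.
track_aligned() {
    [ $(( ($1 * SECTOR_BYTES) % TRACK_BYTES )) -eq 0 ]
}

# 0 = no partition, 63 = legacy default (31.5 KB), 2048 = 1 MB (8 tracks)
for start in 0 63 2048; do
    if track_aligned "$start"; then
        echo "start sector $start ($((start * SECTOR_BYTES)) bytes): aligned"
    else
        echo "start sector $start ($((start * SECTOR_BYTES)) bytes): NOT aligned"
    fi
done
```

The 63-sector case fails because 32256 bytes is only a fraction of one track, while 2048 sectors lands exactly on the eighth track boundary.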

To create and align partitions, use the parted or fdisk commands. Parted is often easier to use and script.

The following steps show how to use the fdisk command:

- Create a single primary partition on the device.

- Use x to enter fdisk expert mode.

- Use b to change the partition beginning offset.

- Enter 2048 for a 1 MB offset (2048 x 512-byte sectors).

- Optionally, use p to print the partition table layout.

- Use w to write the partition table.

The following example shows how to use the Linux parted command with PowerPath devices (a similar script can be written for other multipath devices, such as Device Mapper devices):

```shell
for i in {a..h}; do
    parted -s /dev/emcpower$i mklabel msdos
    parted -s /dev/emcpower$i mkpart primary 2048s 100%
    chown oracle:oracle /dev/emcpower${i}1
done

fdisk -lu   # lists server devices and their partition offsets
```
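To confirm the offsets after partitioning, one option is to read each partition's start sector from sysfs, where the kernel reports it in 512-byte units under /sys/class/block/<partition>/start. The sketch below assumes that layout. The function name check_partition_alignment and the SYSFS_ROOT override (added only so the function can be exercised against a test directory) are hypothetical.

```shell
#!/bin/sh
# Sketch: report whether existing partitions start on a 128 KB boundary.
# The kernel exposes each partition's start in 512-byte sectors at
# /sys/class/block/<partition>/start. SYSFS_ROOT is a hypothetical override
# for testing; leave it unset on a real server.
SYSFS_ROOT=${SYSFS_ROOT:-/sys}

check_partition_alignment() {
    rc=0
    for part in "$@"; do
        start_file="$SYSFS_ROOT/class/block/$part/start"
        if [ ! -r "$start_file" ]; then
            echo "$part: no partition start found"
            rc=1
            continue
        fi
        start=$(cat "$start_file")
        # Aligned when start * 512 bytes is a multiple of 131072 (128 KB).
        if [ $(( (start * 512) % 131072 )) -eq 0 ]; then
            echo "$part: start sector $start, aligned"
        else
            echo "$part: start sector $start, NOT aligned to 128 KB"
            rc=1
        fi
    done
    return $rc
}
```

For example, check_partition_alignment emcpowera1 emcpowerb1 prints one line per partition and returns a non-zero status if any of them is missing or misaligned.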

When RAC is used, the other cluster nodes are not aware of the new partitions. To resolve this, either reboot the other nodes or read and rewrite the partition table on each of them, for example:

```shell
for i in {a..h}; do
    fdisk /dev/emcpower$i << EOF
w
EOF
    chown oracle:oracle /dev/emcpower${i}1
done

fdisk -lu   # lists server devices and their partition offsets
```

Multipathing software

Multipathing software is critical for any database deployment for several reasons:

- Load balancing and performance: Each storage device may need to handle thousands of IOPS. With all-flash storage, customers tend to use fewer, larger devices, so the IOPS demand per device increases. Multipathing software processes read and write I/Os to each device across multiple HBA ports (initiators) and storage ports (targets), spreading the load across the ports (load balancing) to avoid the I/O limits of any single path or port.

- Path failover: The multipathing software creates a pseudo device name or alias, which the application uses, while I/Os to the pseudo device are serviced by all available paths between initiators and targets. If a path fails, the software automatically redirects I/Os to the remaining paths; when the path is restored, the software automatically resumes sending I/Os to it. Throughout, the application continues to use the same pseudo device, regardless of which paths are active.

- Multipath pseudo devices work well with Oracle ASM: Oracle ASM requires a single presentation of each storage device and does not list a device as an ASM candidate if it finds multiple paths to that device. When ASM uses the multipath pseudo name (or user-friendly alias), it sees a single presentation, and the multipathing software spreads the I/Os across the paths of that pseudo device.

FC-NVMe multipathing options

Because FC-NVMe is relatively new, both the Linux OS release and the multipathing software require careful consideration. Although some older Linux releases may claim support for FC-NVMe, this section explains why they may not be an optimal choice.

To deliver the high performance and low latency of FC-NVMe, the multipathing software must address the changes and optimizations made in the protocol. Dell EMC PowerPath 7 is the first PowerPath release that provides FC-NVMe multipath support, with full multipathing functionality including path failover, load balancing, and high performance.

When considering Linux native multipathing software for FC-NVMe, there are two options:

- Linux Device Mapper (DM)

- Native FC-NVMe multipathing (built into the Linux NVMe driver)

While DM can work with NVMe and FC-NVMe devices, and even with older Linux releases, it is not optimized for that purpose, and in all our tests performance was degraded. As a result, we don’t recommend using DM for FC-NVMe.

Therefore, for native multipathing, we recommend that you use the new FC-NVMe multipath software. Consider the following:

- In early Linux releases that support FC-NVMe native multipathing, only path failover was enabled, without load balancing. The path I/O policy was set to ‘numa’, which allows only a single active path; all other paths remain in a standby state, ready for failover. This configuration does not deliver high performance and is not recommended.

- Later Linux releases added a ‘round-robin’ path I/O policy to FC-NVMe native multipathing. This policy provides both load balancing and failover and supports high performance.

Important: For the reasons cited above, we recommend using either PowerPath (with FC-NVMe support) or Linux native FC-NVMe multipathing (not to be confused with DM) implementing the ‘round-robin’ path I/O policy.
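On kernels with native NVMe multipathing, the path I/O policy of each NVMe subsystem is exposed in sysfs at /sys/class/nvme-subsystem/<subsystem>/iopolicy. The sketch below, assuming that interface and a kernel that accepts the round-robin value, lists the current policy and can switch it to round-robin. The function name nvme_iopolicy and the SYSFS_ROOT override are hypothetical.

```shell
#!/bin/sh
# Sketch: show, and optionally set, the native NVMe multipath I/O policy.
# Assumes /sys/class/nvme-subsystem/<subsystem>/iopolicy exists and accepts
# 'round-robin'. SYSFS_ROOT is a hypothetical override for testing only.
SYSFS_ROOT=${SYSFS_ROOT:-/sys}

nvme_iopolicy() {
    # Usage: nvme_iopolicy [show|set-round-robin]
    action=${1:-show}
    for f in "$SYSFS_ROOT"/class/nvme-subsystem/*/iopolicy; do
        [ -e "$f" ] || continue              # glob matched no subsystems
        subsys=$(basename "$(dirname "$f")")
        if [ "$action" = "set-round-robin" ]; then
            echo round-robin > "$f"          # needs root on a real server
        fi
        echo "$subsys: $(cat "$f")"
    done
}
```

Run nvme_iopolicy show to review the current policy, and nvme_iopolicy set-round-robin (as root) to change it. A value written to sysfs this way does not persist across reboots; consult your OS vendor's documentation for the supported way to make the policy permanent, for example through a udev rule.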

The following table summarizes the minimum OS releases and multipathing options that were available when this paper was written.

Table 7. FC-NVMe Linux OS release and multipathing options

| Operating system | PowerPath | Native MP with ‘round-robin’ I/O policy |
|---|---|---|
| SUSE Linux Enterprise | Available[4] | Available[5] |
| Red Hat | Available[6] | Available[7] |
| Oracle Linux | Available[8] | Available[9] |

Note: Refer to the Dell EMC E-Lab Navigator note “Dell EMC PowerMaxOS 5978.444.444 & 5978.479.479 – 32G FC-NVMe Support Matrix” for details on Dell EMC supported configurations.

FC multipathing options

Three multipathing options are available for Oracle on Linux with the FC protocol:

- Dell EMC PowerPath software (for bare metal, or PowerPath/VE for VMware)

- Linux Device Mapper (DM) native multipathing software

- VMware native multipathing

Note: Although iSCSI is also a valid protocol for Oracle databases on PowerMax, it is not covered in this paper.

All three options have been available on FC for many years and provide path failover, load balancing, and high performance.

Device permissions

When the server boots, all devices are owned by the root user by default. However, Oracle ASM devices (‘disks’) must be owned by the Oracle user. Therefore, setting the permissions of the Oracle ASM devices must be part of the boot sequence, which is when Grid Infrastructure and ASM start.

Oracle ASMlib sets the device permissions automatically. When ASMlib is not used, using udev rules is the simplest way to set device permissions during the boot sequence.

Udev rules are added to a text file in the /etc/udev/rules.d/ directory. Rule files are processed in lexical order of their names, so the index number preceding each file name determines the order in which the rules are applied.

The rules must identify the Oracle devices correctly. For example, if all devices with partition 1 can have Oracle permissions, a single generic rule can apply to all such devices. If not all devices can use the same permissions, specify the devices individually, typically by their UUID or WWN.

When devices are identified individually to receive Oracle user permissions, udev rules can also be used to create a user-friendly alias for each device for easy identification. When using Linux Device Mapper, the /etc/multipath.conf file can also be used to create device aliases.

Because there are many ways to identify devices and set their permissions, the following examples are not exhaustive.

Keep in mind that while a single partition for each device is not required for Oracle ASM on Linux, Oracle recommends it, and it provides an easy way to assign Oracle user permissions to all the devices with partition 1.

PowerPath example (both FC and FC-NVMe)

When using PowerPath, we often use the /dev/emcpower notation without user-friendly aliases, because PowerPath pseudo device names persist across reboots and do not change. Therefore, the udev rule can apply generically to partition 1 of all pseudo devices, as shown in the following example:

```
# vi /etc/udev/rules.d/85-oracle.rules
ACTION=="add|change", KERNEL=="emcpower*1", OWNER:="oracle", GROUP:="oracle", MODE="0660"
```

The PowerPath pseudo device naming convention (and therefore the device permission) is the same, regardless of whether you are using FC or FC-NVMe.
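After the rules file is in place, udev can apply it without a reboot by running udevadm control --reload-rules followed by udevadm trigger. A quick way to confirm the result is to compare each device's owner, group, and mode with what the rule requests. The following sketch assumes GNU coreutils stat; the function name check_owner is hypothetical, and the expected mode of 660 mirrors the rules in this section.

```shell
#!/bin/sh
# Sketch: report any device whose owner, group, or mode differs from
# <user>:<group> 660. Uses GNU 'stat -L -c' (-L follows symlinks, so udev
# aliases are checked against the real device node).

check_owner() {
    want_user=$1; want_group=$2; shift 2
    bad=0
    for dev in "$@"; do
        [ -e "$dev" ] || { echo "$dev: missing"; bad=1; continue; }
        actual=$(stat -L -c '%U:%G %a' "$dev")
        if [ "$actual" = "$want_user:$want_group 660" ]; then
            echo "$dev: ok"
        else
            echo "$dev: $actual (expected $want_user:$want_group 660)"
            bad=1
        fi
    done
    return $bad
}
```

For example, check_owner oracle oracle /dev/emcpower*1 reports each PowerPath partition and returns a non-zero status if any device does not match.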

Linux Device Mapper example (FC only)

When you use Device Mapper, there are multiple ways to set pseudo device names or aliases. For example, you can set aliases in the /etc/multipath.conf file, or directly in the udev rules file. The udev rule that sets device permissions depends on how the aliases are set.

The following example assumes that user-friendly aliases are set in the /etc/multipath.conf file (see the Linux Device Mapper example section for instructions). In this case, the udev rule can apply generically to partition 1 of all the aliases, for example when device aliases such as ora_prod_data1p1 or ora_prod_redo3p1 are created:

```
# vi /etc/udev/rules.d/12-dm-permissions.rules
ENV{DM_NAME}=="ora_prod_*p1", OWNER:="oracle", GROUP:="oracle", MODE:="660"
```

Linux native FC-NVMe multipathing example

When you use FC-NVMe with its native multipathing, devices with partition 1 appear with a multipath pseudo name such as /dev/nvmeXXp1. If you do not need aliases, then you can apply a generic udev rule as shown in the following example:

```
# vi /etc/udev/rules.d/12-dm-permissions.rules
KERNEL=="nvme*p1", ENV{DEVTYPE}=="partition", OWNER:="oracle", GROUP:="oracle", MODE:="660"
```
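Each of the generic rules in this section relies on a glob pattern (emcpower*1, ora_prod_*p1, nvme*p1) that selects partition 1 while skipping the whole-disk device. Because udev matches these keys with shell-style patterns, a POSIX case statement gives a reasonable preview of which names a pattern selects. This is an approximation for illustration only (for example, it does not handle udev's | alternatives), and the function name matches is hypothetical.

```shell
#!/bin/sh
# Sketch: preview which device names the example udev patterns select.
# The unquoted $1 in the case pattern is treated as a glob, similar to the
# way udev evaluates KERNEL== and ENV{...}== matches.

matches() {
    # $1 = glob pattern, $2 = device or DM name
    case $2 in
        $1) return 0 ;;
        *)  return 1 ;;
    esac
}

for name in emcpowera emcpowera1 ora_prod_data1 ora_prod_data1p1 nvme0n1 nvme0n1p1 nvme0n1p2; do
    for pat in 'emcpower*1' 'ora_prod_*p1' 'nvme*p1'; do
        if matches "$pat" "$name"; then
            echo "$name matches $pat"
        fi
    done
done
```

Only emcpowera1, ora_prod_data1p1, and nvme0n1p1 are selected; the whole-disk names and the second NVMe partition are not.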

VMware example (FC only)

Because VMware presents devices to the VM as /dev/sd block devices (multipathing operates at the ESXi level), and block device names may change when devices are added or removed, you can specify device permissions for each device separately, based on its UUID (see the VMware native multipathing example section for instructions).

Consistent device names across RAC nodes

When Oracle RAC is used, the same storage devices are shared across the cluster nodes. ASM places its own labels on the devices in the ASM disk group, so matching server device names across RAC nodes is not necessary for ASM. However, consistent names often make storage management operations easier. This section explains how to match server device names.

PowerPath example

To match PowerPath pseudo device names between cluster nodes, follow these steps:

- Use the emcpadm export_mappings command on the first server (cluster node) to create an XML file with the PowerPath configuration:

```
# emcpadm export_mappings -f /tmp/emcp_mappings.xml
```

- Copy the file to the other nodes.

- On the other nodes, import the mapping:

```
# emcpadm import_mappings -f /tmp/emcp_mappings.xml
```

Note: The PowerPath database is kept in the /etc/emcp_devicesDB.idx and /etc/emcp_devicesDB.dat files. These files can be copied from one of the servers to the others, followed by a reboot. However, we recommend the emcpadm export/import method for matching PowerPath device names across servers, because copying the files is a shortcut that overwrites the existing PowerPath mappings on the other servers.

Linux Device Mapper example

Device Mapper (DM) can use UUIDs to identify devices persistently across RAC nodes. While using the UUID is sufficient, you may prefer more convenient aliases (for example, /dev/mapper/ora_data1, /dev/mapper/ora_data2, and so on).

The following example shows how to configure aliases in the /etc/multipath.conf DM configuration file. To find the device UUIDs, use the scsi_id -g /dev/sdXX Linux command. If the command is not already installed, add it by installing the sg3_utils Linux package.

```
# /usr/lib/udev/scsi_id -g /dev/sdb
360000970000198700067533030314633
```

```
# vi /etc/multipath.conf
...
multipaths {
    multipath {
        wwid 360000970000198700067533030314633
        alias ora_data1
    }
    multipath {
        wwid 360000970000198700067533030314634
        alias ora_data2
    }
    ...
}
```
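When many devices need aliases, typing each multipath entry by hand is error-prone. The following sketch generates the multipaths section from a list of WWID and alias pairs, such as those collected with scsi_id. The function name gen_multipaths and the two-column input format are assumptions for illustration; review the output before merging it into /etc/multipath.conf.

```shell
#!/bin/sh
# Sketch: print a 'multipaths' section for /etc/multipath.conf from
# "WWID alias" pairs read on stdin (hypothetical input format).
# Blank lines and lines starting with '#' are skipped.

gen_multipaths() {
    echo "multipaths {"
    while read -r wwid name; do
        case $wwid in ''|'#'*) continue ;; esac
        echo "    multipath {"
        echo "        wwid  $wwid"
        echo "        alias $name"
        echo "    }"
    done
    echo "}"
}

gen_multipaths <<'EOF'
360000970000198700067533030314633 ora_data1
360000970000198700067533030314634 ora_data2
EOF
```

Generating the section from one list, and copying the resulting file to every RAC node, also keeps the aliases identical across the cluster.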

To match aliases across RAC nodes, copy the /etc/multipath.conf configuration file to the other nodes and reboot them. If you prefer to avoid a reboot, follow these steps:

- Set up all multipath devices on one server and then stop multipathing on the other servers:

```
# service multipathd stop
# multipath -F
```

- Copy the multipath configuration from the first server to all other servers. If user-friendly names must be consistent, copy the /etc/multipath/bindings file from the first server to all others. If aliases must be consistent (aliases are set up in multipath.conf), copy the /etc/multipath.conf file from the first server to all others.

- Restart multipathing on the other servers:

```
# service multipathd start
```

VMware native multipathing example

When running Oracle in VMware virtual machines (VMs), the VM devices appear as /dev/sdXX, whether PowerPath/VE or VMware native multipathing is in effect. You can use udev rules to create device aliases that match across RAC nodes and to assign Oracle user permissions to the devices.

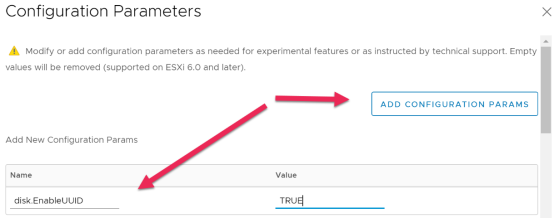

To allow device UUIDs to be identified inside the VM, enable disk.EnableUUID for the VM as follows:

- In vSphere, power off the VM.

- Right-click the VM and choose Edit Settings.

- Choose the VM Options tab.

- Expand Advanced and click Edit Configuration.

- Click Add Configuration Params and add the parameter disk.EnableUUID with the value TRUE, as shown in Figure 27.

Figure 27. Enable UUID device identification

- Restart the VM.

- Identify each device's UUID with the scsi_id command:

Note: In RHEL 7 and above, the scsi_id command is located in /lib/udev/scsi_id. In previous releases, it was located in /sbin/scsi_id.

```
# /lib/udev/scsi_id -g -u -d /dev/sdb
36000c29127c3ae0670242b058e863393
```

- Build a udev rule that sets Oracle user permissions on partition 1 of each device, identified by its UUID. In the following example, the rule also creates an alias (SYMLINK) for the device so that the ASM disk string can reference it.


```
# cd /etc/udev/rules.d/
# vi 99-oracle-asmdevices.rules
KERNEL=="sd*1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="36000c29127c3ae0670242b058e863393", SYMLINK+="ora-data1", OWNER="oracle", GROUP="oracle", MODE="0660"
...
```
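The same approach can generate one rule per device for the VMware case, in the format of the 99-oracle-asmdevices.rules example above. The sketch below assumes RHEL 7 or later (scsi_id at /usr/lib/udev/scsi_id, as in the example rule) and reads UUID and alias pairs from standard input. The function name gen_asm_udev_rules and the input format are hypothetical.

```shell
#!/bin/sh
# Sketch: emit one udev rule per "UUID alias" pair read on stdin, matching
# the format of the 99-oracle-asmdevices.rules example. '$parent' stays
# literal so that udev, not the shell, substitutes the parent device.
SCSI_ID=/usr/lib/udev/scsi_id   # assumed location (RHEL 7 and later)

gen_asm_udev_rules() {
    while read -r uuid name; do
        case $uuid in ''|'#'*) continue ;; esac   # skip blanks and comments
        printf 'KERNEL=="sd*1", SUBSYSTEM=="block", PROGRAM=="%s -g -u -d /dev/$parent", RESULT=="%s", SYMLINK+="%s", OWNER="oracle", GROUP="oracle", MODE="0660"\n' \
            "$SCSI_ID" "$uuid" "$name"
    done
}

gen_asm_udev_rules <<'EOF'
36000c29127c3ae0670242b058e863393 ora-data1
EOF
```

Assuming the shared disks report the same UUIDs on every RAC node, redirecting the output to /etc/udev/rules.d/99-oracle-asmdevices.rules on each node, from the same input list, gives every node the same aliases.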

Block multi-queue (MQ)

In recent Linux kernels, the block I/O layer of the server operating system was rewritten to support NVMe devices and their need for ultra-low latency and higher IOPS. The new design is called block multi-queue, or MQ for short. When SCSI block devices (/dev/sd) are presented to the server, MQ is not enabled by default, because these devices might be backed by legacy spinning hard disk drives (HDDs). If the storage is all-flash (such as PowerMax), you can enable MQ manually for SCSI devices as described in Appendix I. Blk-mq and scsi-mq.

When devices are presented to the server as NVMe devices (/dev/nvme), such as with PowerMax FC-NVMe, MQ is enabled automatically, and no additional changes are needed.

Likely because of the wide adoption of flash media, RHEL 8.0 makes MQ the default even for SCSI (/dev/sd) devices.

In previous testing, we found that MQ provided a significant performance advantage. In more recent testing with newer CPUs, a 32 Gb SAN, and a 4-node Oracle 19c RAC, however, the servers were no longer the bottleneck, and MQ did not appear to provide a noticeable advantage.

In FC environments where the servers are heavily utilized, Dell EMC recommends enabling MQ and testing whether it provides significant benefits for your Oracle FC deployment. Remember that FC-NVMe deployments have MQ enabled automatically.
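Before and after changing the MQ setting, it can help to confirm which I/O path each device is using. On blk-mq devices the kernel exposes a /sys/block/<device>/mq directory with one subdirectory per hardware queue, while devices on the legacy single-queue path have no such directory. The following sketch relies on that behavior; the function name mq_status and the SYSFS_ROOT override are hypothetical.

```shell
#!/bin/sh
# Sketch: report whether each block device uses blk-mq, based on the
# presence of /sys/block/<dev>/mq (one subdirectory per hardware queue).
# SYSFS_ROOT is a hypothetical override for testing; leave it unset normally.
SYSFS_ROOT=${SYSFS_ROOT:-/sys}

mq_status() {
    for dev in "$@"; do
        mqdir="$SYSFS_ROOT/block/$dev/mq"
        if [ -d "$mqdir" ]; then
            nq=0
            for q in "$mqdir"/*; do
                if [ -d "$q" ]; then nq=$((nq + 1)); fi
            done
            echo "$dev: blk-mq ($nq hardware queues)"
        else
            echo "$dev: legacy single-queue path (no mq directory)"
        fi
    done
}
```

For example, mq_status sdb nvme0n1 prints one line per device. On kernels that still offer the scsi-mq switch, cat /sys/module/scsi_mod/parameters/use_blk_mq also shows Y or N for SCSI devices.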