
Introduction


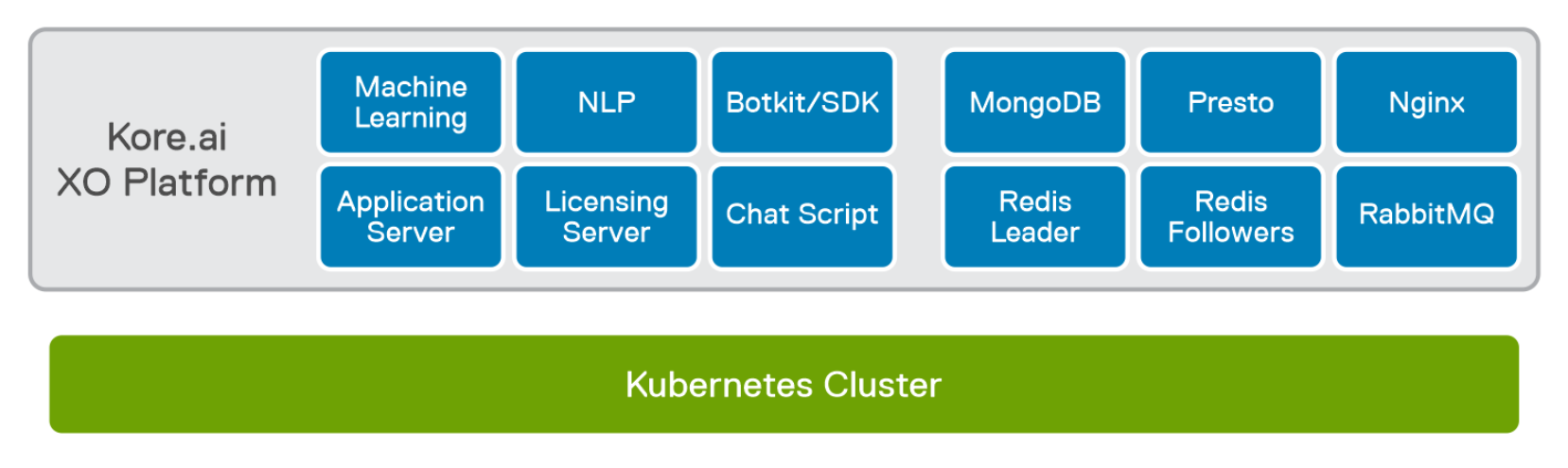

Kore.ai provides an enterprise-ready conversational AI product that data scientists and machine learning engineers use to develop and publish AI applications. It can be deployed either as pods in Kubernetes or as a stand-alone container. This document provides an overview of the platform's architecture and major components. It also describes how enterprises can use each component to elevate and extend the functionality of existing systems, reach more customers, make work easier for employees, move from traditional graphical UIs to a conversational UX, and shorten time to value.

Figure 2. Software architecture for Kore.ai XO Platform

Key components of the Kore.ai XO Platform include:

- Application Server exposes REST API endpoints for the application's subsystems. The REST API provides endpoints for storing and retrieving bot and task definitions. It also manages context information, the encryption service, and the endpoints for webhook, web, and mobile channels. It enables integration with channels such as Twilio, Alexa, and Workplace by Facebook, and with an HTTP listener that handles IVR requests. It is built with Nginx and Node.js.

- IDProxy Server provides security and authentication through SSO and OAuth integration. It exposes an endpoint that is configured as the callback URL for both SSO and OAuth authentication.

- RabbitMQ Server provides an Advanced Message Queuing Protocol (AMQP) message broker for communication between internal subsystems and services.

- Bot Service Runtime processes incoming messages and sends outgoing messages for the bots, web, and mobile channels. It also provides a stand-alone timer service that handles scheduled tasks and user-configured alerts.

- ChatScript is an NLP engine that processes incoming messages and identifies their intent.

- Bots Service Admin is a stand-alone service that pulls enterprise user profiles from LDAP or Active Directory and updates them in the Kore.ai platform. It also executes API requests to backend web services.

- Sandbox provides a JavaScript execution environment to run developer-provided scripts while processing message templates and script nodes.

- Key Server is an API server that manages encryption and decryption of keys.

- Presto fetches analytics data and audit logs. The analytics data is displayed on the dashboard and analyze screens in the UI.

- Machine Learning trains the bot model and predicts intents based on the trained model.

- FAQ Engine predicts answers from knowledge collections.

- BotsServiceAlerts is a service that processes and delivers messages generated by alert task subscriptions.

- ProcessFlowRuntime is a service that provides an environment to run the flows that developers design.

- MongoDB provides a database for the XO Platform.
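The internal subsystems communicate through the RabbitMQ broker rather than by calling one another directly. As a result, a producer only needs to know the name of a queue, not which service consumes from it. The Python sketch below illustrates that decoupling with an in-memory stand-in. It is not RabbitMQ's client API. It also leaves out the exchanges, acknowledgements, and persistence that a real AMQP broker provides. The class name `InMemoryBroker` and the queue name `bot.incoming` are hypothetical.

```python
import queue
from collections import defaultdict
from typing import Any


class InMemoryBroker:
    """Toy stand-in for a message broker (hypothetical, not RabbitMQ's API).

    Producers publish to a named queue and consumers pull from it, so neither
    side needs a direct reference to the other. No persistence, no acks.
    """

    def __init__(self) -> None:
        # One FIFO queue per name, created on first use.
        self._queues: defaultdict[str, queue.Queue] = defaultdict(queue.Queue)

    def publish(self, queue_name: str, message: Any) -> None:
        self._queues[queue_name].put(message)

    def consume(self, queue_name: str) -> Any:
        # Non-blocking read: returns None when the queue is empty.
        try:
            return self._queues[queue_name].get_nowait()
        except queue.Empty:
            return None


broker = InMemoryBroker()
# e.g. a front-end service hands a user message to the bot runtime
broker.publish("bot.incoming", {"user": "u1", "text": "hi"})
print(broker.consume("bot.incoming"))  # {'user': 'u1', 'text': 'hi'}
print(broker.consume("bot.incoming"))  # None (queue drained)
```

Messages come out in the order they went in. Consuming from a drained or unknown queue returns `None` instead of blocking, which keeps the example deterministic.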
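The Bot Service Runtime includes a stand-alone timer service for scheduled tasks and user-configured alerts. The source does not say how that service is implemented. A common approach is a min-heap ordered by due time, and the Python sketch below assumes that approach purely for illustration. `TimerService`, `schedule`, and `run_due` are hypothetical names. The current time is passed in explicitly so that the behavior is deterministic.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import Callable


@dataclass(order=True)
class _Job:
    due_at: float
    seq: int  # insertion order; breaks ties between equal due times
    action: Callable[[], None] = field(compare=False)


class TimerService:
    """Hypothetical scheduler: jobs wait in a min-heap keyed by due time."""

    def __init__(self) -> None:
        self._heap: list[_Job] = []
        self._counter = itertools.count()

    def schedule(self, due_at: float, action: Callable[[], None]) -> None:
        heapq.heappush(self._heap, _Job(due_at, next(self._counter), action))

    def run_due(self, now: float) -> int:
        """Run every job due at or before `now`; return how many ran."""
        fired = 0
        while self._heap and self._heap[0].due_at <= now:
            heapq.heappop(self._heap).action()
            fired += 1
        return fired


log: list[str] = []
timers = TimerService()
timers.schedule(30.0, lambda: log.append("reminder"))
timers.schedule(10.0, lambda: log.append("alert"))
timers.run_due(now=15.0)  # fires only the job due at 10.0
timers.run_due(now=60.0)  # fires the job due at 30.0
print(log)  # ['alert', 'reminder']
```

The heap keeps the earliest job at index 0, so each check costs O(1) and each pop costs O(log n). A production service would also need persistence and a real clock loop.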
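The following Python sketch makes intent identification concrete with a toy matcher. It scores an utterance against sample utterances for each intent using token overlap (Jaccard similarity). It then returns the best-matching intent if that score clears a threshold. This is a deliberately simple stand-in. It is neither ChatScript pattern syntax nor the trained model used by the Machine Learning component. The intent names, sample utterances, and the 0.3 threshold are all hypothetical.

```python
import re
from typing import Optional


def tokenize(text: str) -> set[str]:
    """Lower-case word tokens; punctuation is dropped."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """|a & b| / |a | b|, or 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def identify_intent(utterance: str,
                    intents: dict[str, list[str]],
                    threshold: float = 0.3) -> Optional[str]:
    """Return the intent whose best sample scores >= threshold, else None."""
    tokens = tokenize(utterance)
    best_intent, best_score = None, 0.0
    for intent, samples in intents.items():
        for sample in samples:
            score = jaccard(tokens, tokenize(sample))
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent if best_score >= threshold else None


# Hypothetical intents and training samples.
INTENTS = {
    "CheckBalance": ["what is my account balance", "show my balance"],
    "TransferFunds": ["transfer money to savings", "send money to a friend"],
}
print(identify_intent("Show me my balance", INTENTS))  # CheckBalance
print(identify_intent("tell me a joke", INTENTS))      # None
```

Returning `None` below the threshold mirrors the usual design choice of falling back to a default response instead of forcing a low-confidence match.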