Deploy K8s clusters into Azure Stack Hub user subscriptions

Mon, 24 Jul 2023 15:06:01 -0000


Deploy K8s Cluster into an Azure Stack Hub user subscription

Welcome to Part 2 of a three-part blog series on running containerized applications on Microsoft Azure’s hybrid ecosystem. Part 1 provided the conceptual foundation and necessary context for the hands-on efforts documented in parts 2 and 3. This article details the testing we performed with AKS engine and Azure Monitor for containers in the Dell Technologies labs.

Here are the links to all the series articles:

Part 1: Running containerized applications on Microsoft Azure’s hybrid ecosystem – Provides an overview of the concepts covered in the blog series.

Part 2: Deploy K8s Cluster into an Azure Stack Hub user subscription – Set up an AKS engine client VM, deploy a cluster using AKS engine, and onboard the cluster to Azure Monitor for containers.

Part 3: Deploy a self-hosted Docker Container Registry – Use one of the Azure Stack Hub QuickStart templates to set up a container registry and push images to this registry. Then, pull these images from the registry into the K8s cluster deployed with AKS engine in Part 2.

Introduction to the test lab

Before proceeding with the results of the testing with AKS engine and Azure Monitor for containers, we’d like to begin by providing a tour of the lab we used for testing all the container-related capabilities on Azure Stack Hub. Please refer to the following two tables. The first table lists the Dell EMC for Microsoft Azure Stack hardware and software. The second table details the various resource groups we created to logically organize the components in the architecture. The resource groups were provisioned into a single user subscription.

| Scale Unit Information | Value |
| --- | --- |
| Number of scale unit nodes | 4 |
| Total memory capacity | 1 TB |
| Total storage capacity | 80 TB |
| Logical cores | 224 |
| Azure Stack Hub version | 1.1908.8.41 |
| Connectivity mode | Connected to Azure |
| Identity store | Azure Active Directory |

| Resource Group | Purpose |
| --- | --- |
| demoaksengine-rg | Contains the resources associated with the client VM running AKS engine. |
| demoK8scluster-rg | Contains the cluster artifacts deployed by AKS engine. |
| demoregistryinfra-rg | Contains the storage account and key vault supporting the self-hosted Docker Container Registry. |
| demoregistrycompute-rg | Contains the VM running Docker Swarm and the self-hosted container registry containers and the other supporting resources for networking and storage. |
| kubecodecamp-rg | Contains the VM and other resources used for building the Sentiment Analysis application that was instantiated on the K8s cluster. |

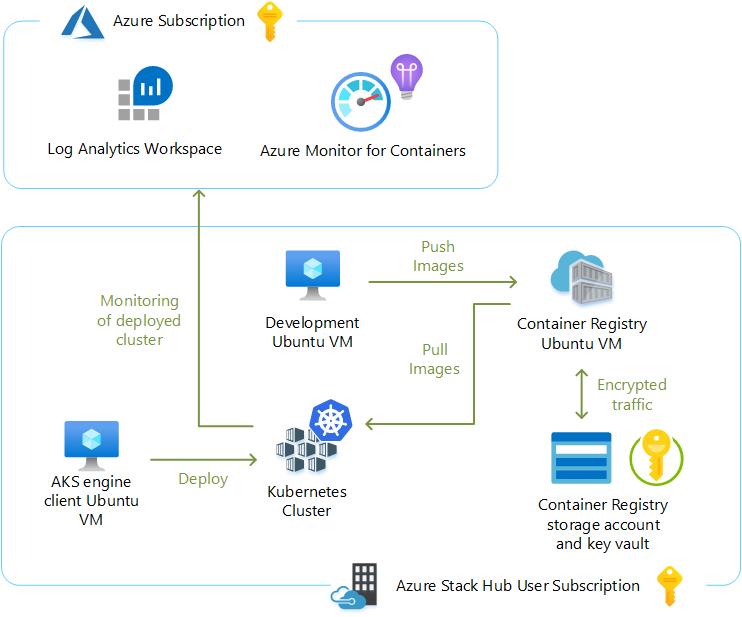

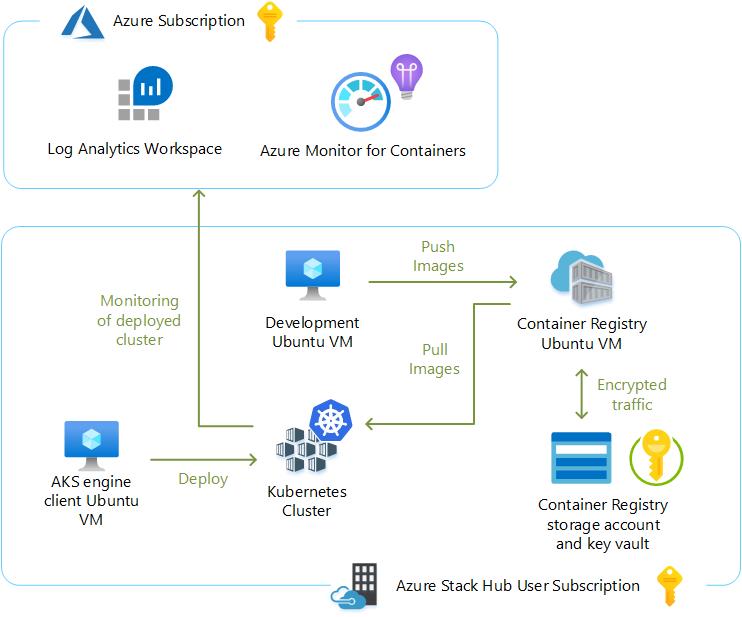

Please also refer to the following graphic for a high-level overview of the architecture.

Prerequisites

All the step-by-step instructions and sample artifacts used to deploy AKS engine can be found in the Azure Stack Hub User Documentation and GitHub. We do not intend to repeat what has already been written – unless we want to specifically highlight a key concept. Instead, we will share lessons we learned while following along in the documentation.

We decided to test the supported scenario whereby AKS engine deploys all cluster artifacts into a new resource group rather than deploying the cluster to an existing VNET. We also chose to deploy a Linux-based cluster using the kubernetes-azurestack.json API model file found in the AKS engine GitHub repository. A Windows-based K8s cluster cannot be deployed by AKS engine to Azure Stack Hub at the time of this writing (November 2019). Do not attempt to use the kubernetes-windows.json file in the GitHub repository, as this will not be fully functional.

Addressing the prerequisites for AKS engine was very straightforward:

- Ensured that the Azure Stack Hub system was completely healthy by running Test-AzureStack.

- Verified sufficient available memory, storage, and public IP address capacity on the system.

- Verified that the quotas embedded in the user subscription’s offers provided enough capacity for all the Kubernetes capabilities.

- Used marketplace syndication to download the appropriate gallery items. We made sure to match the version of AKS engine to the correct version of the AKS Base Image. In our testing, we used AKS engine v0.43.0, which depends on version 2019.10.24 for the AKS Base Image.

- Collected the name and ID of the Azure Stack Hub user subscription created in the lab.

- Created the Azure AD service principal (SPN) through the Public Azure Portal. A link is also provided for creating the SPN programmatically.

- Assigned the SPN to the Contributor role of the user subscription.

- Generated the SSH key and private key file using PuTTY Key Generator (PUTTYGEN) for each of the Linux VMs used during testing. We associated the PPK file extension with PUTTYGEN on the management workstation so the saved private key files are opened within PUTTYGEN for easy copy and pasting. We used these keys with PuTTY and WinSCP throughout the testing.

At this point, we built a Linux client VM for running the AKS engine command-line tool used to deploy and manage the K8s cluster. Here are the specifications of the VM provisioned:

- Resource group: demoaksengine-rg

- VM size: Standard DS1v2 (1 vcpu + 3.5 GB memory)

- Managed disk: 30 GB default managed disk

- Image: Latest Ubuntu 16.04-LTS gallery item from the Azure Stack Hub marketplace

- Authentication type: SSH public key

- Assigned a Public IP address so we could easily SSH to it from the management workstation

- Since this VM was required for ongoing management and maintenance of the K8s cluster, we took the appropriate precautions to ensure that we could recover this VM in case of a disaster. A critical file that needed to be protected on this VM after the cluster was created is the apimodel.json file. This will be discussed later in this blog series.

Our Azure Stack Hub runs with certificates generated from an internal standalone CA in the lab. This means we needed to import our CA’s root certificate into the client VM’s OS certificate store so it could properly connect to the Azure Stack Hub management endpoints before going any further. We thought we would share the steps to import the certificate:

- The standalone CA provides a file with a .cer extension when requesting the root certificate. In order to work with an Ubuntu certificate store, we had to convert this to a .crt file by issuing the following command from Git Bash on the management workstation:

openssl x509 -inform DER -in certnew.cer -out certnew.crt - Created a directory on the Ubuntu client VM for the extra CA certificate in /usr/share/ca-certificates:

sudo mkdir /usr/share/ca-certificates/extra - Transferred the certnew.crt file to the Ubuntu VM using WinSCP to the /home/azureuser directory. Then, we copied the file from the home directory to the /usr/share/ca-certificates/extra directory.

sudo cp certnew.crt /usr/share/ca-certificates/extra/certnew.crt - Appended a line to /etc/ca-certificates.conf.

sudo nano /etc/ca-certificates.conf

Add the following line to the very end of the file:

extra/certnew.crt - Updated certs non-interactively

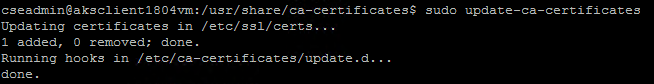

sudo update-ca-certificates

This command produced the following output that verified that the lab’s CA certificate was successfully added:

Note: We learned that if this procedure to import a CA’s root certificate is ever carried out on a server already running Docker, you have to stop and re-start Docker at this point. This is done on Ubuntu via the following commands:

sudo systemctl stop docker

sudo systemctl start docker
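The .cer to .crt conversion in the first step can also be done without OpenSSL. The following is a minimal Python sketch, assuming the .cer file is DER-encoded as in the openssl command above. The function names are ours, chosen for illustration, and the standard-library call only re-encodes the bytes; it does not validate that they form a real certificate.

```python
import ssl
from pathlib import Path


def der_to_pem(der_bytes: bytes) -> str:
    """Wrap DER certificate bytes in a Base64 PEM envelope (no validation)."""
    return ssl.DER_cert_to_PEM_cert(der_bytes)


def convert_cer_to_crt(cer_path: str, crt_path: str) -> None:
    """Read a DER-encoded .cer file and write the PEM-encoded .crt file."""
    pem_text = der_to_pem(Path(cer_path).read_bytes())
    Path(crt_path).write_text(pem_text, encoding="ascii")
```

Calling convert_cer_to_crt("certnew.cer", "certnew.crt") would produce the same kind of PEM file that the Ubuntu certificate store expects in the remaining steps.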

We then SSH’d into the client VM. While in the home directory, we executed the prescribed command in the documentation to download the get-akse.sh AKS engine installation script.

curl -o get-akse.sh https://raw.githubusercontent.com/Azure/aks-engine/master/scripts/get-akse.sh

chmod 700 get-akse.sh

./get-akse.sh --version v0.43.0

Once installed, we issued the aks-engine version command to verify a successful installation of AKS engine.

Deploy K8s cluster

There was one more step that needed to be taken before issuing the command to deploy the K8s cluster. We needed to customize a cluster specification using an example API Model file. Since we were deploying a Linux-based Kubernetes cluster, we downloaded the kubernetes-azurestack.json file into the home directory of the client VM. Though we could have used nano on the Linux VM to customize the file, we decided to use WinSCP to copy this file over to the management workstation so we could use VS Code to modify it instead. Here are a few notes on this:

- By issuing an aks-engine get-versions command, we noticed that cluster versions up to 1.17 were supported by AKS engine v0.43.0. However, the Azure Monitor for containers solution only supported versions 1.15 and earlier. We decided to leave the orchestratorRelease key value in the kubernetes-azurestack.json file at the default value of 1.14.

- Inserted https://portal.rack04.azs.mhclabs.com as the portalURL.

- Used labcluster as the dnsPrefix. This was the DNS name for the actual cluster. We confirmed that this hostname was unique in the lab environment.

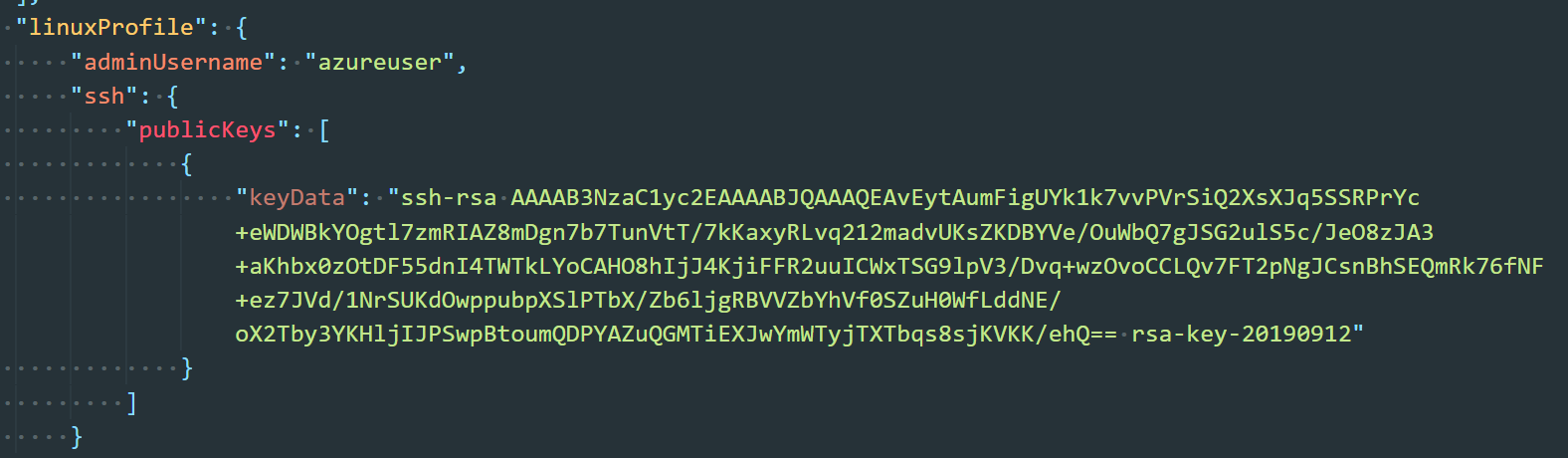

- Left the adminUsername at azureuser. Then, we copied and pasted the public key from PUTTYGEN into the keyData field. The following screen shot shows what was pasted.

- Copied the modified file back to the home directory of the client VM.
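The edits listed above can also be scripted instead of made by hand in an editor. The following Python sketch is one way to do it under stated assumptions: the key paths (customCloudProfile, masterProfile, linuxProfile) are assumed to match the layout of the kubernetes-azurestack.json example file and should be checked against your copy, and both function names are hypothetical.

```python
import copy
import json


def customize_api_model(model: dict, portal_url: str, dns_prefix: str,
                        admin_username: str, ssh_public_key: str) -> dict:
    """Return a copy of the API model with the lab-specific values filled in.

    The key paths below are an assumption about the layout of
    kubernetes-azurestack.json; verify them against the downloaded file.
    """
    updated = copy.deepcopy(model)
    props = updated["properties"]
    props["customCloudProfile"]["portalURL"] = portal_url
    props["masterProfile"]["dnsPrefix"] = dns_prefix
    linux = props["linuxProfile"]
    linux["adminUsername"] = admin_username
    linux["ssh"]["publicKeys"] = [{"keyData": ssh_public_key}]
    return updated


def customize_file(path: str, **values: str) -> None:
    """Apply customize_api_model to a JSON file in place."""
    with open(path, encoding="utf-8") as handle:
        model = json.load(handle)
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(customize_api_model(model, **values), handle, indent=2)
```

Everything not named in the function, including the orchestratorRelease default of 1.14, is left untouched, which mirrors the choice we made above.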

While still in the home directory of the client VM, we issued the command to deploy the K8s cluster. Here is the command that was executed. Remember that the client ID and client secret are associated with the SPN, and the subscription ID is that of the Azure Stack Hub user subscription.

aks-engine deploy \
--azure-env AzureStackCloud \
--location Rack04 \
--resource-group demoK8scluster-rg \
--api-model ./kubernetes-azurestack.json \
--output-directory demoK8scluster-rg \
--client-id xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx \
--client-secret xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx \
--subscription-id xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx

Once the deployment was complete and verified by deploying mysql with Helm, we copied the apimodel.json file to a safe location outside of the Azure Stack Hub scale unit. The file is found in the home directory of the client VM under a subdirectory named after the cluster’s resource group, in this case demoK8scluster-rg. This file was used as input in all of the other AKS engine operations. The generated apimodel.json contains the service principal, secret, and SSH public key used in the input API Model. It also has all the other metadata needed by AKS engine to perform all other operations. If this file gets lost, AKS engine won't be able to configure the cluster down the road.
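Because losing apimodel.json is so costly, it can be worth scripting the copy and checking it. The sketch below is a minimal Python example, assuming the backup destination is a directory reachable from the client VM (for instance a mounted share); the function names and the destination are hypothetical. Since the file contains the service principal secret, the destination should be protected accordingly.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_api_model(output_dir: str, backup_dir: str) -> Path:
    """Copy <output_dir>/apimodel.json to backup_dir and verify the copy."""
    source = Path(output_dir) / "apimodel.json"
    target_dir = Path(backup_dir)
    target_dir.mkdir(parents=True, exist_ok=True)
    target = Path(shutil.copy2(source, target_dir / source.name))
    # Compare digests so a truncated or corrupted copy is caught immediately.
    if sha256_of(source) != sha256_of(target):
        raise IOError(f"Checksum mismatch after copying {source} to {target}")
    return target
```

In our lab the first argument would be the demoK8scluster-rg output directory in the client VM's home directory.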

Onboarding the new K8s cluster to Azure Monitor for containers

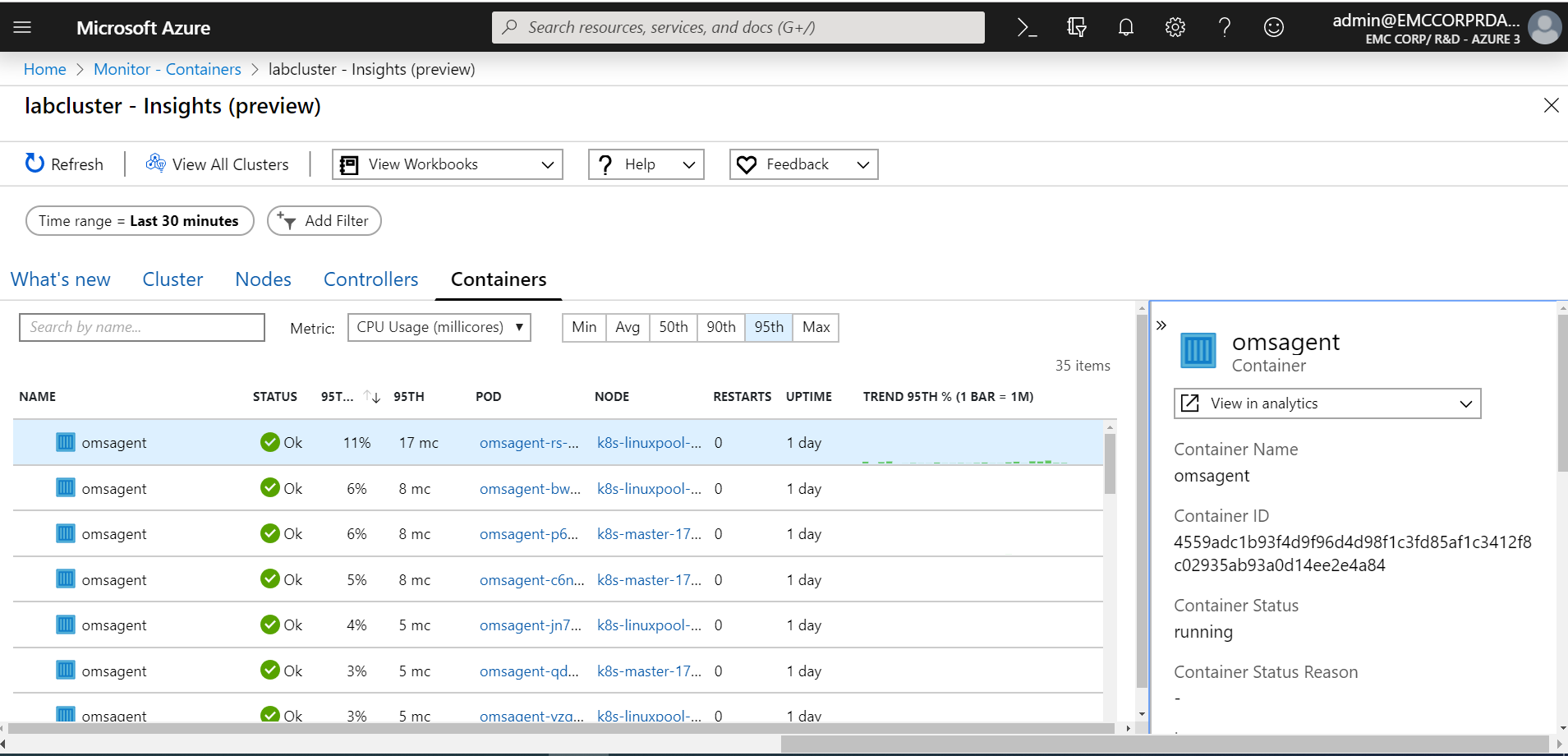

Before introducing our first microservices-based application to the K8s cluster, we preferred to onboard the cluster to Azure Monitor for containers. Azure Monitor for containers not only provides a rich monitoring experience for AKS in Public Azure but also for K8s clusters deployed in Azure Stack using AKS engine. We wanted to see what resources were being used only by the Kubernetes system functions before deploying any applications. The steps we performed in this section were performed on one of the K8s primary nodes using an SSH connection.

The prerequisites were fairly straightforward, but we did make a couple of observations while stepping through them:

- We decided to perform the onboarding using the HELM chart. For this option, the latest version of the HELM client was required. We found that as long as we performed the steps under Verify your cluster in the documentation, we did not encounter any issues.

- Since we were only running a single cluster in our environment, we found we didn’t have to do anything with regard to configuring kubectl or the HELM client to use the K8s cluster context.

- We did not have to make any changes in the environment to allow the various ports to communicate properly within the cluster or externally with Public Azure.

Note: Microsoft also supports the enabling of monitoring on this K8s cluster on Azure Stack Hub deployed with AKS engine using the API definition as an alternative to using the HELM chart. The API definition option doesn’t have a dependency on Tiller or any other component. Using this option, monitoring can be enabled during the cluster creation itself. The only manual step for this option would be to add the Container Insights solution to the Log Analytics workspace specified in the API definition.

We already had a Log Analytics Workspace at our disposal for this testing. We did make one mistake during the onboarding preparations, though. We intended to manually add the Container Insights solution to the workspace but installed the legacy Container Monitoring solution instead of Container Insights. To be on the safe side, we ran the onboarding_azuremonitor_for_containers.ps1 PowerShell script and supplied the values for our resources as parameters. The script skipped the creation of the workspace since we already had one and just installed the Container Insights solution via an ARM template in GitHub identified in the script.

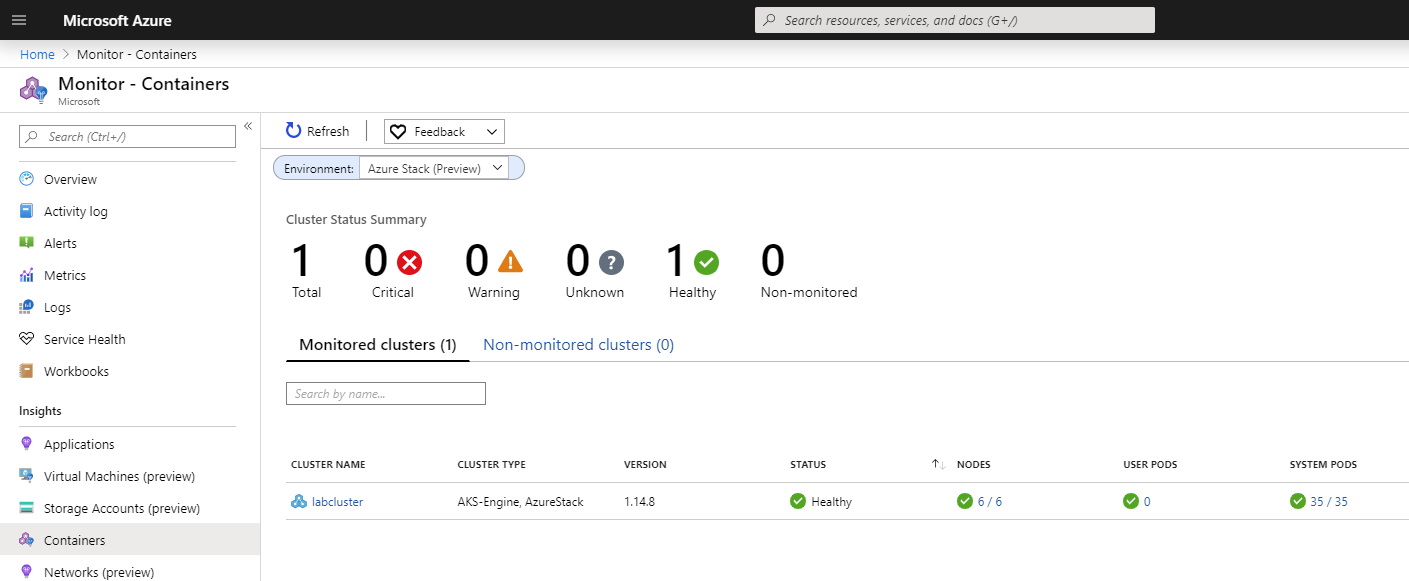

At this point, we could simply issue the HELM commands under the Install the chart section of the documentation. Besides inserting the workspace ID and workspace key, we also replaced the --name parameter value with azuremonitor-containers. We did not observe anything out of the ordinary during the onboarding process. Once complete, we had to wait about 10 minutes before we could go to the Public Azure portal and see our cluster appear. We had to remember to click on the drop-down menu for Environment in the Container Insights section in Azure Monitor and select “Azure Stack (Preview)” for the cluster to appear.

We hope this blog post proves to be insightful to anyone deploying a K8s cluster on Azure Stack Hub using AKS engine. We also trust that the information provided will assist in the onboarding of that new cluster to Azure Monitor for containers. In Part 3, we will step through our experience deploying a self-hosted Docker Container Registry into Azure Stack Hub.

Related Blog Posts

Running containerized applications on Microsoft Azure's hybrid ecosystem - Introduction

Tue, 30 Apr 2024 12:54:21 -0000


Running containerized applications on Microsoft Azure’s hybrid ecosystem

Introduction

A vast array of services and tooling has evolved in support of microservices and container-based application development patterns. One indispensable asset in the technology value stream found in most of these patterns is Kubernetes (K8s). Technology professionals like K8s because it has become the de facto standard for container orchestration. Business leaders like it for its potential to help disrupt their chosen marketplace. However, deploying and maintaining a Kubernetes cluster and its complementary technologies can be a daunting task to the uninitiated.

Enter Microsoft Azure’s portfolio of services, tools, and documented guidance for developing and maintaining containerized applications. Microsoft continues to invest heavily in simplifying this application modernization journey without sacrificing features and functionality. The differentiators of the Microsoft approach are twofold. First, the applications can be hosted wherever the business requirements dictate – i.e. public cloud, on-premises, or spanning both. More importantly, there is a single control plane, Azure Resource Manager (ARM), for managing and governing these highly distributed applications.

In this blog series, we share the results of hands-on testing in the Dell Technologies labs with container-related services that span both Public Azure and on-premises with Azure Stack Hub. Azure Stack Hub provides a discrete instance of ARM, which allows us to leverage a consistent control plane even in environments with no connectivity to the Internet. It might be helpful to review articles rationalizing the myriad of announcements made at Microsoft Ignite 2019 about Microsoft’s hybrid approach from industry experts like Kenny Lowe, Thomas Maurer, and Mary Branscombe before delving into the hands-on activities in this blog.

Services available in Public Azure

Azure Kubernetes Service (AKS) is a fully managed platform service hosted in Public Azure. AKS makes it simple to define, deploy, debug, and upgrade even the most complex Kubernetes applications. With AKS, organizations can accelerate past the effort of deploying and maintaining the clusters to leveraging the clusters as target platforms for their CI/CD pipelines. DevOps professionals only need to concern themselves with the management and maintenance of the K8s agent nodes and leave the management of the master nodes to Public Azure.

AKS is just one of Public Azure’s container-related services. Azure Monitor, Azure Active Directory, and Kubernetes role-based access controls (RBAC) provide the critical governance needed to successfully operate AKS. Serverless Kubernetes using Azure Container Instances (ACI) can add compute capacity without any concern about the underlying infrastructure. In fact, ACI can be used to elastically burst from AKS clusters when workload demand spikes. Azure Container Registry (ACR) delivers a fully managed private registry for storing, securing, and replicating container images and artifacts. This is perfect for organizations that do not want to store container images in publicly available registries like Docker Hub.

Leveraging the hybrid approach

Microsoft is working diligently to deliver the fully managed AKS resource provider to Azure Stack Hub. The first step in this journey is to use AKS engine to bootstrap K8s clusters on Azure Stack Hub. AKS engine provides a command-line tool that helps you create, upgrade, scale, and maintain clusters. Customers interested in running production-grade and fully supported self-managed K8s clusters on Azure Stack Hub will want to use AKS engine for deployment and not the Kubernetes Cluster (preview) marketplace gallery item. This marketplace item is only for demonstration and POC purposes.

AKS engine can also upgrade and scale the K8s cluster it deployed on Azure Stack Hub. However, unlike the fully managed AKS in Public Azure, the master nodes and the agent nodes need to be maintained by the Azure Stack Hub operator. In other words, this is not a fully managed solution today. The same warning applies to the self-hosted Docker Container Registry that can be deployed to an on-premises Azure Stack Hub via a QuickStart template. Unlike ACR in Public Azure, Azure Stack Hub operators must consider backup and recovery of the images. They would also need to deploy new versions of the QuickStart template as they become available to upgrade the OS or the container registry itself.

If no requirements prohibit the sending of monitoring data to Public Azure and the proper connectivity exists, Azure Monitor for containers can be leveraged for feature-rich monitoring of the K8s clusters deployed on Azure Stack Hub with AKS engine. In addition, Azure Arc for Data Services can be leveraged to run containerized images of Azure SQL Managed Instances or Azure PostgreSQL Hyperscale on this same K8s cluster. The Azure Monitor and Azure Arc for Data Services options would not be available in submarine scenarios where there would be no connectivity to Azure whatsoever. In the disconnected scenario, the customer would have to determine how best to monitor and run data services on their K8s cluster independent of Public Azure.

Here is a summary of the articles in this blog post series:

Part 1: Running containerized applications on Microsoft Azure’s hybrid ecosystem – Provides an overview of the concepts covered in the blog series.

Part 2: Deploy K8s Cluster into an Azure Stack Hub user subscription – Set up an AKS engine client VM, deploy a cluster using AKS engine, and onboard the cluster to Azure Monitor for Containers.

Part 3: Deploy a self-hosted Docker Container Registry – Use one of the Azure Stack Hub QuickStart templates to set up a container registry and push images to this registry. Then, pull these images from the registry into the K8s cluster deployed with AKS engine in Part 2.

Deploy a self-hosted Docker Container Registry on Azure Stack Hub

Thu, 22 Jul 2021 10:35:43 -0000



Introduction

Welcome to Part 3 of a three-part blog series on running containerized applications on Microsoft Azure’s hybrid ecosystem. In this part, we step through how we deployed a self-hosted, open-source Docker Registry v2 to an Azure Stack Hub user subscription. We also discuss how we pushed a microservices-based application to the self-hosted registry and then pulled those images from that registry onto the K8s cluster we deployed using AKS engine.

Here are the links to all the series articles:

Part 1: Running containerized applications on Microsoft Azure’s hybrid ecosystem – Provides an overview of the concepts covered in the blog series.

Part 2: Deploy K8s Cluster into an Azure Stack Hub user subscription – Set up an AKS engine client VM, deploy a cluster using AKS engine, and onboard the cluster to Azure Monitor for containers.

Part 3: Deploy a self-hosted Docker Container Registry – Use one of the Azure Stack Hub QuickStart templates to set up a container registry and push images to this registry. Then, pull these images from the registry into the K8s cluster deployed with AKS engine in Part 2.

Here is a diagram depicting the high-level architecture in the lab for review.

There are a few reasons why an organization may want to deploy and maintain a container registry on-premises rather than leveraging a publicly accessible registry like Docker Hub. This approach may be particularly appealing to customers in air-gapped deployments where there is unreliable connectivity to Public Azure or no connectivity at all. This can work well with K8s clusters that have been deployed using AKS engine at military installations or other such locales. There may also be regulatory compliance, data sovereignty, or data gravity issues that can be addressed with a local container registry. Some customers may simply require tighter control over where their images are being stored or need to integrate image storage and distribution tightly into their in-house development workflows.

Prerequisites

The deployment of Docker Registry v2 into an Azure Stack Hub user subscription was performed using the 101-vm-linux-docker-registry Azure Stack Hub QuickStart template. There are two phases to this deployment:

1. Creation of a storage account and key vault in a discrete resource group by running the setup.ps1 PowerShell script provided in the template repo.

2. The setup.ps1 script also created an azuredeploy.parameters.json file that is used in the PowerShell command to deploy the ARM template that describes the container registry VM and its associated resources. These resources get provisioned into a separate resource group.

Be aware that there is another experimental repository in GitHub called msazurestackworkloads for deploying Docker Registry. The main difference is that the code in the msazurestackworkloads repo includes artifacts for creating a marketplace gallery item that an Azure Stack Hub user could deploy into their subscription. Again, this is currently experimental and is not supported for deploying the Docker Registry.

One of the prerequisite steps was to obtain an X.509 SSL certificate in PFX format for loading into key vault. We pointed to its location during the running of the setup.ps1 script. We used our lab’s internal standalone CA to create the certificate, which is the same CA used for deploying the K8s cluster with AKS engine. We thought we’d share the steps we took to obtain this certificate in case any readers aren’t familiar with the process. All these steps must be completed from the same workstation to ensure access to the private key.

We performed these steps on the management workstation:

1. Created an INF file that looked like the following. The subject’s CN is the DNS name we provided for the registry VM’s public IP address.

[Version]

Signature="$Windows NT$"

[NewRequest]

Subject = "CN=cseregistry.rack04.cloudapp.azs.mhclabs.com,O=CSE Lab,L=Round Rock,S=Texas,C=US"

Exportable = TRUE

KeyLength = 2048

KeySpec = 1

KeyUsage = 0xA0

MachineKeySet = True

ProviderName = "Microsoft RSA SChannel Cryptographic Provider"

HashAlgorithm = SHA256

RequestType = PKCS10

[Strings]

szOID_SUBJECT_ALT_NAME2 = "2.5.29.17"

szOID_ENHANCED_KEY_USAGE = "2.5.29.37"

szOID_PKIX_KP_SERVER_AUTH = "1.3.6.1.5.5.7.3.1"

szOID_PKIX_KP_CLIENT_AUTH = "1.3.6.1.5.5.7.3.2"

[Extensions]

%szOID_SUBJECT_ALT_NAME2% = "{text}dns=cseregistry.rack04.cloudapp.azs.mhclabs.com"

%szOID_ENHANCED_KEY_USAGE% = "{text}%szOID_PKIX_KP_SERVER_AUTH%,%szOID_PKIX_KP_CLIENT_AUTH%"

[RequestAttributes]

2. We used the certreq.exe command to generate a CSR that we then submitted to the CA.

certreq.exe -new cseregistry_req.inf cseregistry_csr.req

3. We received a .cer file back from the standalone CA and followed the instructions here to convert this .cer file to a .pfx file for use with the container registry.

Prepare Azure Stack PKI certificates for deployment or rotation

We also needed to have the CA’s root certificate in .crt file format. We originally obtained this during the K8s cluster deployment using AKS engine. This needs to be imported into the certificate store of any device that intends to interact with the container registry.
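When more than one host needs a certificate, the INF file from step 1 can be generated from a template rather than edited by hand. The following Python sketch reproduces the INF content shown above with the subject fields turned into parameters; the template constant and function name are hypothetical, and every fixed setting is copied from our request file rather than recommended as a default.

```python
INF_TEMPLATE = """[Version]
Signature="$Windows NT$"

[NewRequest]
Subject = "CN={fqdn},O={org},L={city},S={state},C={country}"
Exportable = TRUE
KeyLength = 2048
KeySpec = 1
KeyUsage = 0xA0
MachineKeySet = True
ProviderName = "Microsoft RSA SChannel Cryptographic Provider"
HashAlgorithm = SHA256
RequestType = PKCS10

[Strings]
szOID_SUBJECT_ALT_NAME2 = "2.5.29.17"
szOID_ENHANCED_KEY_USAGE = "2.5.29.37"
szOID_PKIX_KP_SERVER_AUTH = "1.3.6.1.5.5.7.3.1"
szOID_PKIX_KP_CLIENT_AUTH = "1.3.6.1.5.5.7.3.2"

[Extensions]
%szOID_SUBJECT_ALT_NAME2% = "{{text}}dns={fqdn}"
%szOID_ENHANCED_KEY_USAGE% = "{{text}}%szOID_PKIX_KP_SERVER_AUTH%,%szOID_PKIX_KP_CLIENT_AUTH%"

[RequestAttributes]
"""


def render_request_inf(fqdn: str, org: str, city: str,
                       state: str, country: str) -> str:
    """Fill the certificate request template for one host name.

    The same FQDN is used for the subject CN and the DNS subject
    alternative name, as in the request file shown above.
    """
    return INF_TEMPLATE.format(fqdn=fqdn, org=org, city=city,
                               state=state, country=country)
```

The rendered text would be saved as the INF file (cseregistry_req.inf in our case) and passed to certreq.exe as in step 2.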

Deploy the container registry supporting infrastructure

We used the setup.ps1 PowerShell script included in the QuickStart template’s GitHub repo for creating the supporting infrastructure for the container registry. We named the new resource group created by this script demoregistryinfra-rg. This resource group contains a storage account and key vault. The registry is configured to use the Azure storage driver to persist the container images in the storage account blob container. The key vault stores the credentials required to authenticate to the registry as a secret and secures the certificate. A service principal (SPN) created prior to executing the setup.ps1 script is leveraged to access the storage account and key vault.

Here are the values we used for the variables in our setup.ps1 script for reference (sensitive information removed, of course). Notice that the $dnsLabelName value is only the hostname of the container registry server and not the fully qualified domain name.

$location = "rack04"

$resourceGroup = "registryinfra-rg"

$saName = "registrystorage"

$saContainer = "images"

$kvName = "registrykv"

$pfxSecret = "registrypfxsecret"

$pfxPath = "D:\file_system_path\cseregistry.pfx"

$pfxPass = "mypassword"

$spnName = "Application (client) ID"

$spnSecret = "Secret"

$userName = "cseadmin"

$userPass = "!!123abc"

$dnsLabelName = "cseregistry"

$sshKey = "SSH key generated by PuttyGen"

$vmSize = "Standard_F8s_v2"

$registryTag = "2.7.1"

$registryReplicas = "5"
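The note about $dnsLabelName is easy to get wrong, so here is a small Python sketch of how the label relates to the full registry name. The label.location.cloudapp.domain pattern is inferred from the names used in our lab, not taken from the template, and the label rule in the regular expression (lowercase letters, digits, and hyphens) is an assumption; the function name is hypothetical.

```python
import re

# Assumed label rule: starts with a letter, then lowercase letters, digits, hyphens.
_LABEL_PATTERN = re.compile(r"^[a-z][a-z0-9-]{1,61}[a-z0-9]$")


def registry_fqdn(dns_label: str, location: str, external_domain: str) -> str:
    """Build the registry's public DNS name from the bare host label."""
    if "." in dns_label:
        raise ValueError("dns_label must be a bare hostname, not an FQDN")
    if not _LABEL_PATTERN.match(dns_label):
        raise ValueError(f"invalid DNS label: {dns_label!r}")
    return f"{dns_label}.{location}.cloudapp.{external_domain}"
```

With our values, registry_fqdn("cseregistry", "rack04", "azs.mhclabs.com") yields the same name that appears in the certificate subject.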

Deploy the container registry using the QuickStart template

We deployed the QuickStart template using a PowerShell command similar to the one indicated in the README.md of the GitHub repo. Again, the azuredeploy.parameters.json file was created automatically by the setup.ps1 script. This was very straightforward. The only thing to mention is that we created a new resource group when deploying the QuickStart template. We could have also selected an existing resource group that did not contain any resources.

Testing the Docker Registry with the Sentiment Analyzer application

At this point, it was time to test the K8s cluster and self-hosted container registry running on Azure Stack Hub from end-to-end. For this, we followed a brilliant blog article entitled Learn Kubernetes in Under 3 Hours: A Detailed Guide to Orchestrating Containers written by Rinor Maloku. This was a perfect introduction to the world of creating a microservices-based application running in multiple containers. It covers Docker and Kubernetes fundamentals and is an excellent primer for anyone just getting started in the world of containers and container orchestration. The name of the application is Sentiment Analyser, and it uses text analysis to ascertain the emotion of a sentence.

Learn Kubernetes in Under 3 Hours: A Detailed Guide to Orchestrating Containers

We won’t share all the notes we took while walking through the article. However, there are a couple of tips we wanted to highlight as they pertain to testing the K8s cluster and new self-hosted Docker Registry in the lab:

- The first thing we did to prepare for creating the Sentiment Analyser application was to set up a development Ubuntu 18.04 VM in a unique resource group in our lab’s user subscription on Azure Stack Hub. We installed Firefox on the VM and Xming on the management workstation so we could test the functionality of the application at the required points in the process. Then, it was just a matter of setting up PuTTY properly with X11 forwarding.

- Before installing anything else on the VM, we imported the root certificate from our lab’s standalone CA. This was critical to facilitate the secure connection between this VM and the registry VM for when we started pushing the images.

- Whenever Rinor’s article talked about pushing the container images to Docker Hub, we instead targeted the self-hosted registry running on Azure Stack Hub. We had to take these steps on the development VM to facilitate those procedures:

- Logged into the container registry using the credentials for authentication that we specified in the setup.ps1 script.

- Tagged the image to target the container registry.

sudo docker tag <image ID> cseregistry.rack04.cloudapp.azs.mhclabs.com/sentiment-analysis-frontend

- Pushed the image to the container registry.

sudo docker push cseregistry.rack04.cloudapp.azs.mhclabs.com/sentiment-analysis-frontend

- Once the images were pushed to the registry, we found out that we could view them from the management workstation by browsing to the following URL and authenticating to the registry:

https://cseregistry.rack04.cloudapp.azs.mhclabs.com/v2/_catalog

The following output was given after we pushed all the application images:

{"repositories":["sentiment-analysis-frontend","sentiment-analysis-logic","sentiment-analysis-web-app"]}

- We noticed that we did not have to import the lab’s standalone CA’s root certificate on the primary node before attempting to pull the image from the container registry. We assumed that the cluster picked up the root certificate from the Azure Stack Hub system, as there was a file named /etc/ssl/certs/azsCertificate.pem on the primary node from where we were running kubectl.

- Prior to attempting to create the K8s pod for the Sentiment Analyser frontend, we had to create a Kubernetes cluster secret. This is always necessary when pulling images from private repositories – whether they are private repos on Docker Hub or privately hosted on-premises using a self-hosted container registry. We followed the instructions here to create the secret:

Pull an Image from a Private Registry

In the section of this article entitled Create a Secret by providing credentials on the command line, we discovered a couple items to note:

- When issuing the kubectl create secret docker-registry command, we had to enclose the password in single quotes because it was a complex password.

kubectl create secret docker-registry regcred --docker-server=cseregistry.rack04.cloudapp.azs.mhclabs.com --docker-username=cseadmin --docker-password='!!123abc' --docker-email=<email address>

We’ve now gotten into the habit of verifying the secret after we create it by using the following command:

kubectl get secret regcred --output="jsonpath={.data.\.dockerconfigjson}" | base64 --decode

- Then, when we came to the modification of the YAML files in the article, we learned just how strict YAML is about indentation. When we added the imagePullSecrets: object, we had to ensure it lined up exactly with the containers: object. Note also that the numbered comments in the right-hand column (# 1 through # 6) did not need to be duplicated.

Here is the content of the file that worked, shown with imagePullSecrets: aligned with containers: as described above:

apiVersion: v1
kind: Pod # 1
metadata:
  name: sa-frontend
  labels:
    app: sa-frontend # 2
spec: # 3
  containers:
    - image: cseregistry.rack04.cloudapp.azs.mhclabs.com/sentiment-analysis-frontend # 4
      name: sa-frontend # 5
      ports:
        - containerPort: 80 # 6
  imagePullSecrets:
    - name: regcred
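For readers who want to sidestep YAML indentation entirely, kubectl also accepts manifests written as JSON. The Python sketch below builds the same pod definition as a dictionary and, separately, the .dockerconfigjson payload that a docker-registry secret such as regcred holds, which is what the verification command above decodes. Both function names are hypothetical, and the email address in any call is a placeholder.

```python
import base64
import json


def docker_config_json(server: str, username: str, password: str, email: str) -> str:
    """Build the .dockerconfigjson payload stored in a docker-registry secret."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    config = {"auths": {server: {"username": username, "password": password,
                                 "email": email, "auth": token}}}
    return json.dumps(config)


def frontend_pod_manifest(registry: str, pull_secret: str) -> dict:
    """Return the sa-frontend pod definition as a plain dictionary."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "sa-frontend", "labels": {"app": "sa-frontend"}},
        "spec": {
            "containers": [{
                "image": f"{registry}/sentiment-analysis-frontend",
                "name": "sa-frontend",
                "ports": [{"containerPort": 80}],
            }],
            # Sibling of "containers", not nested inside a container entry.
            "imagePullSecrets": [{"name": pull_secret}],
        },
    }
```

Writing json.dumps(frontend_pod_manifest(...), indent=2) to a .json file gives a manifest that can be applied with kubectl in the same way as the YAML version, with the nesting made explicit by braces rather than spaces.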

At this point, we were able to observe the fully functional Sentiment Analyser application running on our K8s cluster on Azure Stack Hub. We were not only running this application on-premises in a highly prescriptive, self-managed Kubernetes cluster, but we were also able to do so while leveraging a self-hosted Docker Registry for the transferring of the images. We could also proceed to Azure Monitor for containers using the Public Azure portal to monitor our running containerized application and create thresholds for timely alerting on any potential issues.

Articles in this blog series

We hope this blog post proves to be insightful to anyone deploying a self-hosted container registry on Azure Stack Hub. It has been a great learning experience stepping through the deployment of a containerized application using the Microsoft Azure toolset. There are so many other things we want to try like Deploying Azure Cognitive Services to Azure Stack Hub and using Azure Arc to run Azure data services on our K8s cluster on Azure Stack Hub. We look forward to sharing more of our testing results on these exciting capabilities in the near future.