Dell PowerEdge Servers Unleash Another Round of Excellent Results with MLPerf™ v4.0 Inference

Wed, 27 Mar 2024 15:12:53 -0000

Today marks the unveiling of the MLPerf v4.0 Inference results, which have emerged as an industry benchmark for AI systems. These benchmarks assess system-level performance across state-of-the-art hardware and software stacks. The benchmarking suite covers image classification, object detection, natural language processing, speech recognition, recommenders, medical image segmentation, LLM 6B and LLM 70B question answering, and text-to-image generation, with scenarios that aim to replicate different deployment settings such as the data center and the edge.

Dell Technologies is a founding member of MLCommons™ and has been actively making submissions since the inception of the Inference and Training benchmarks. See our white paper, MLPerf™ Inference v2.1 with NVIDIA GPU-Based Benchmarks on Dell PowerEdge Servers, which introduces the MLCommons Inference benchmark.

Our performance results are strong across the suite and reflect our resolve to deliver high system performance. These improvements provide more performance where it is most needed, for example, for demanding generative AI (GenAI) workloads.

What is new with Inference 4.0?

Inference 4.0 and Dell’s submission include the following:

- Newly introduced Llama 2 question answering and Stable Diffusion text-to-image benchmarks, with submissions across different Dell PowerEdge XE platforms.

- Improved GPT-J (225 percent improvement) and DLRM-DCNv2 (100 percent improvement) performance. Higher GPT-J throughput means faster natural language processing tasks such as summarization. Higher DLRM-DCNv2 throughput means faster, more relevant recommendations, which can boost revenue.

- First-time submission of server results with the recently released PowerEdge R7615 and PowerEdge XR8620t servers with NVIDIA accelerators.

- Intel-based CPU-only results, in addition to the accelerator-based results.

- Results for PowerEdge servers with Qualcomm accelerators.

- Power results showing high performance/watt scores for the submissions.

- Virtualized results on Dell servers with Broadcom.
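To make the percentage figures in the list above concrete: a 225 percent improvement means the new throughput is 3.25 times the baseline, and a 100 percent improvement means the throughput doubled. The following is a minimal sketch of that arithmetic. The function names and the baseline of 100 samples per second are illustrative placeholders, not measured MLPerf results.

```python
def speedup_from_percent_improvement(percent: float) -> float:
    """Convert a percent improvement into a multiplicative speedup factor."""
    return 1.0 + percent / 100.0


def percent_improvement(old: float, new: float) -> float:
    """Percent improvement of the `new` throughput over the `old` throughput."""
    if old <= 0:
        raise ValueError("baseline throughput must be positive")
    return (new - old) / old * 100.0


# Hypothetical baseline throughput in samples/s (an assumption for illustration).
baseline = 100.0

gptj_factor = speedup_from_percent_improvement(225)  # 3.25x the baseline
dlrm_factor = speedup_from_percent_improvement(100)  # 2.0x the baseline

print(f"GPT-J: {gptj_factor:.2f}x, DLRM-DCNv2: {dlrm_factor:.2f}x")
print(f"Round trip: {percent_improvement(baseline, baseline * gptj_factor):.0f} percent")
```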

Overview of results

Dell Technologies delivered 187 data center, 28 data center power, 42 edge, and 24 edge power results. Some of the more impressive results were generated by our:

- Dell PowerEdge XE9680, XE9640, and XE8640 servers with NVIDIA H100 Tensor Core GPUs

- Dell PowerEdge R7515, R750xa, and R760xa servers with NVIDIA L40S and A100 Tensor Core GPUs

- Dell PowerEdge XR7620 and XR8620t servers with NVIDIA L4 Tensor Core GPUs

- Dell PowerEdge R760 server with Intel Emerald Rapids CPUs

- Dell PowerEdge R760 with Qualcomm QAIC100 Ultra accelerators

NVIDIA-based results include the following GPUs:

- Eight-way NVIDIA H100 GPU (SXM)

- Four-way NVIDIA H100 GPU (SXM)

- Four-way NVIDIA A100 GPU (PCIe)

- Four-way NVIDIA L40S GPU (PCIe)

- NVIDIA L4 GPU

These accelerators were benchmarked on different servers such as PowerEdge XE9680, XE8640, XE9640, R760xa, XR7620, and XR8620t servers across data center and edge suites.

Dell contributed about one quarter of the closed data center and edge submissions. This large number of results gives end users an opportunity to make data-driven purchase decisions and to set performance and data center design expectations.

Interesting Dell data points

The most interesting data points include:

- Performance results across different benchmarks are excellent and show that Dell servers meet the increasing need to serve different workload types.

- Among 20 submitters, Dell Technologies was one of the few companies that covered all benchmarks in the closed division for data center suites.

- Compared to other four-way systems, the PowerEdge XE8640 and PowerEdge XE9640 servers earned winning titles across all the benchmarks, including the newly launched Stable Diffusion and Llama 2 benchmarks.

- Compared to other eight-way systems, the PowerEdge XE9680 server earned several winning titles for benchmarks such as ResNet Server, 3D-UNet, BERT-99, and BERT-99.9 Server.

- The PowerEdge XE9680 server delivers the highest performance/watt compared to other submitters with eight-way NVIDIA H100 GPUs for ResNet Server, GPT-J Server, and Llama 2 Offline.

- In the edge benchmarks, the Dell PowerEdge XR8620t server with NVIDIA L4 GPUs outperformed other submissions.

- The PowerEdge R750xa server with NVIDIA A100 PCIe GPUs outperformed other submissions on the ResNet, RetinaNet, 3D-UNet, RNN-T, BERT-99.9, and BERT-99 benchmarks.

- The PowerEdge R760xa server with NVIDIA L40S GPUs outperformed other submissions on the ResNet Server, RetinaNet Server, RetinaNet Offline, 3D-UNet 99, RNN-T, BERT-99, BERT-99.9, DLRM-v2-99, DLRM-v2-99.9, GPTJ-99, GPTJ-99.9, Stable Diffusion XL Server, and Stable Diffusion XL Offline benchmarks.
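The performance/watt comparison mentioned in the list above can be sketched as throughput divided by average system power. This definition is an assumption for illustration, and the system labels and numbers below are made up rather than taken from any submission.

```python
from dataclasses import dataclass


@dataclass
class PowerResult:
    system: str             # label for the system under test (hypothetical)
    throughput: float       # samples or queries per second
    avg_power_watts: float  # average system power during the run

    @property
    def perf_per_watt(self) -> float:
        """Throughput delivered per watt of average system power."""
        if self.avg_power_watts <= 0:
            raise ValueError("average power must be positive")
        return self.throughput / self.avg_power_watts


def rank_by_efficiency(results):
    """Sort results from most to least efficient."""
    return sorted(results, key=lambda r: r.perf_per_watt, reverse=True)


# Made-up numbers for illustration only; not MLPerf results.
results = [
    PowerResult("system-a", throughput=50_000.0, avg_power_watts=5_000.0),
    PowerResult("system-b", throughput=48_000.0, avg_power_watts=4_000.0),
]

for r in rank_by_efficiency(results):
    print(f"{r.system}: {r.perf_per_watt:.1f} samples/s per watt")
```

In this made-up case, the system with the lower raw throughput ranks first because it draws proportionally less power, which is why performance/watt is reported separately from throughput.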

Highlights

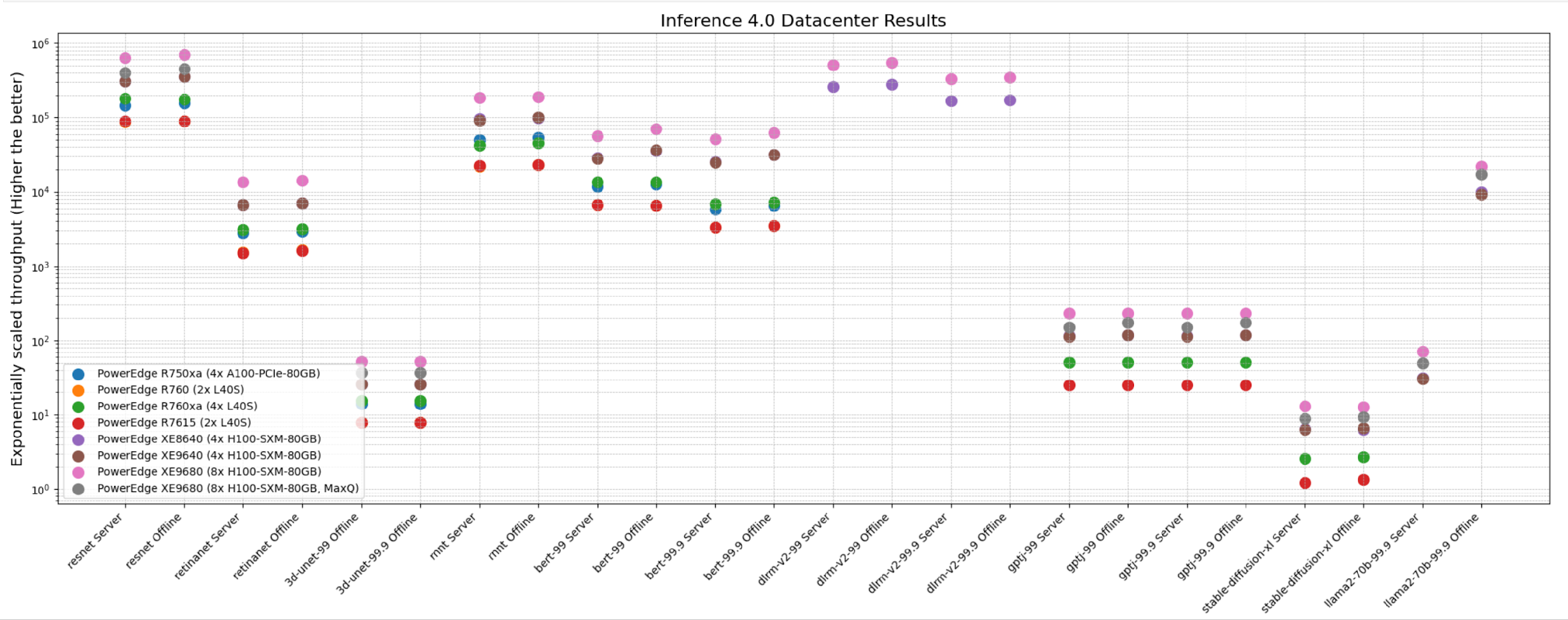

The following figure shows the different Offline and Server performance scenarios in the data center suite. These results provide an overview; follow-up blogs will provide more details about the results.

The following figure shows that these servers delivered excellent performance for all models in the benchmark, such as ResNet, RetinaNet, 3D-UNet, RNN-T, BERT, DLRM-v2, GPT-J, Stable Diffusion XL, and Llama 2. Because the benchmarks operate on very different scales, they are all plotted on a logarithmic y-axis in the following figure:

Figure 1: System throughput for submitted systems for the data center suite.
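The reason for scaling the y-axis of Figure 1 can be illustrated with a short sketch: when throughputs span several orders of magnitude, a linear axis squeezes the smaller values against zero, while a logarithmic axis spreads them out. The model labels, values, and axis limits below are hypothetical and are not read from the figure.

```python
import math

# Hypothetical throughputs (samples/s) spanning several orders of magnitude.
throughputs = {"model-a": 50.0, "model-b": 5_000.0, "model-c": 500_000.0}


def axis_fraction(value: float, lo: float, hi: float, log: bool = False) -> float:
    """Position of `value` along an axis running from `lo` to `hi`, in [0, 1]."""
    if log:
        value, lo, hi = math.log10(value), math.log10(lo), math.log10(hi)
    return (value - lo) / (hi - lo)


# Assumed axis limits for illustration.
lo, hi = 10.0, 1_000_000.0

for name, value in throughputs.items():
    linear = axis_fraction(value, lo, hi)
    logarithmic = axis_fraction(value, lo, hi, log=True)
    print(f"{name}: linear={linear:.4f}, log={logarithmic:.4f}")
```

On the linear axis the two smaller values sit within one percent of the bottom, while on the logarithmic axis the three values are evenly spaced.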

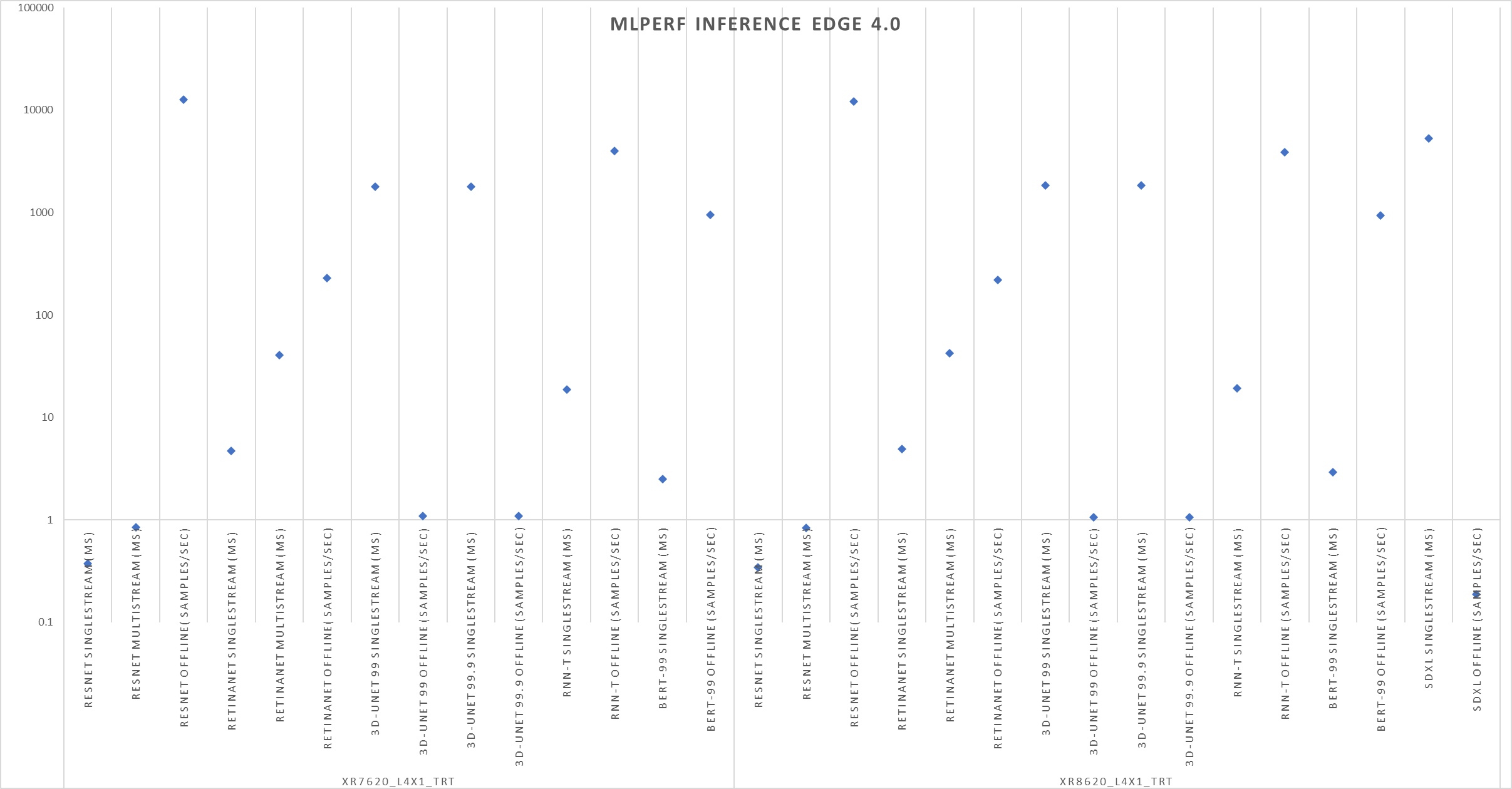

The following figure shows single-stream, multistream, and Offline scenario results for the edge for the ResNet, RetinaNet, 3D-UNet, RNN-T, BERT-99, GPT-J, and Stable Diffusion XL benchmarks. For the single-stream and multistream scenarios, lower latency is better. For the Offline scenario, higher throughput is better.

Figure 2: Overview of edge results with PowerEdge XR7620 and XR8620t servers
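When comparing edge results programmatically, the direction of "better" depends on the scenario: latency scenarios favor smaller values and throughput scenarios favor larger ones. The following sketch encodes that rule. The helper name and sample values are hypothetical.

```python
# Whether a larger value is better for each scenario's primary metric.
HIGHER_IS_BETTER = {
    "Offline": True,        # throughput (samples/s)
    "Server": True,         # throughput (queries/s)
    "SingleStream": False,  # latency
    "MultiStream": False,   # latency
}


def better_value(scenario: str, a: float, b: float) -> float:
    """Return whichever of two metric values is better for the scenario."""
    return max(a, b) if HIGHER_IS_BETTER[scenario] else min(a, b)


# Made-up values for illustration only.
print(better_value("SingleStream", 0.45, 0.60))  # the lower latency wins
print(better_value("Offline", 12_000.0, 9_500.0))  # the higher throughput wins
```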

Conclusion

The preceding results were officially submitted to MLCommons. They are MLPerf-compliant results for the Inference v4.0 benchmark, spanning multiple suites and all of its tasks: image classification, object detection, natural language processing, speech recognition, recommenders, medical image segmentation, LLM 6B and LLM 70B question answering, and text to image. These results show that Dell PowerEdge XE9680, XE8640, XE9640, and R760xa servers deliver high performance for inference workloads. Dell Technologies secured several #1 titles, which makes Dell PowerEdge servers an excellent choice for data center and edge inference deployments. End users can use the breadth of submissions to make server performance and sizing decisions that support their enterprise AI transformation. The results also show Dell’s commitment to delivering higher performance.

MLCommons Results

https://mlcommons.org/en/inference-datacenter-40/

https://mlcommons.org/en/inference-edge-40/

The preceding graphs are MLCommons results for MLPerf IDs 4.0-0025 to 4.0-0035 in the closed data center division, 4.0-0036 to 4.0-0038 in the closed edge division, 4.0-0033 in closed data center power, and 4.0-0037 in closed edge power.
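To look up these entries in the MLCommons results tables, it can help to expand the ID ranges into explicit lists. The following is a minimal sketch. The helper name is hypothetical, and it assumes that the IDs within a range are consecutive and share the same prefix.

```python
def expand_ids(first: str, last: str) -> list[str]:
    """Expand an inclusive MLPerf ID range such as 4.0-0025 to 4.0-0035."""
    prefix_first, num_first = first.rsplit("-", 1)
    prefix_last, num_last = last.rsplit("-", 1)
    if prefix_first != prefix_last:
        raise ValueError("both IDs must share the same round prefix")
    width = len(num_first)  # preserve zero padding, for example 0025
    return [
        f"{prefix_first}-{n:0{width}d}"
        for n in range(int(num_first), int(num_last) + 1)
    ]


datacenter_ids = expand_ids("4.0-0025", "4.0-0035")
edge_ids = expand_ids("4.0-0036", "4.0-0038")

print(len(datacenter_ids), datacenter_ids[0], datacenter_ids[-1])
print(edge_ids)
```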