Dell PowerEdge Servers deliver excellent performance with MLCommons™ Inference 3.1

Mon, 11 Sep 2023 13:18:00 -0000

Today, MLCommons released the latest version (v3.1) of MLPerf Inference results. Dell Technologies has made submissions to the inference benchmark since its version 0.5 launch in 2019. We continue to demonstrate outstanding results across the different models in the benchmark, covering image classification, object detection, natural language processing, speech recognition, recommender systems, medical image segmentation, and LLM summarization. For an introduction to the MLCommons Inference benchmark, see our white paper, MLPerf™ Inference v2.1 with NVIDIA GPU-Based Benchmarks on Dell PowerEdge Servers. Generative AI (GenAI) has taken deep learning computing needs by storm, and there is an ever-increasing need for high-performance, innovative inferencing approaches. This blog summarizes the performance that Dell PowerEdge servers deliver to support end users in their AI inference transformation.

What is new with Inference 3.1?

Inference 3.1 and Dell’s submission include the following:

- The inference benchmark has added two exciting new benchmarks:

  - LLM-based models, such as GPT-J

  - DLRM-V2 with multi-hot encodings using the DLRM-DCNv2 architecture

- Dell’s submission has been expanded to include the new PowerEdge XE8640 and PowerEdge XE9640 servers accelerated by NVIDIA GPUs.

- Dell’s submission includes results of PowerEdge servers with Qualcomm accelerators.

- Besides accelerator-based results, Dell’s submission includes Intel-based CPU-only results.

Overview of results

Dell Technologies submitted 230 results across 20 different configurations. The most impressive results were generated by PowerEdge XE9680, XE9640, XE8640, and R760xa servers with the new NVIDIA H100 PCIe and SXM Tensor Core GPUs, PowerEdge XR7620 and XR5610 servers with NVIDIA L4 Tensor Core GPUs, and the PowerEdge R760xa server with the NVIDIA L40 GPU.

Overall, NVIDIA-based results include the following accelerators:

- (New) Four-way NVIDIA H100 Tensor Core GPU (SXM)

- (New) Four-way NVIDIA L40 GPU

- Eight-way NVIDIA H100 Tensor Core GPU (SXM)

- Four-way NVIDIA A100 Tensor Core GPU (PCIe)

- NVIDIA L4 Tensor Core GPU

These accelerators were benchmarked on different PowerEdge servers, including the XE9680, XE8640, XE9640, R760xa, XR7620, XR5610, and R750xa, across the data center and edge suites.

The large number of results gives end users data to inform system purchase decisions and to set performance and design expectations.

Interesting Dell Datapoints

The most interesting datapoints include:

- The performance numbers on newly released Dell PowerEdge servers are outstanding.

- Among 21 submitters, Dell Technologies was one of the few companies that covered all benchmarks in all closed divisions for data center, edge, and edge power suites.

- The PowerEdge XE9680 system with eight NVIDIA H100 SXM GPUs secures the highest performance titles for the ResNet Server, RetinaNet Server, RNN-T Server and Offline, BERT 99 Server, BERT 99.9 Offline, DLRM-DCNv2 99, and DLRM-DCNv2 99.9 Offline benchmarks.

- The PowerEdge XE8640 system with four NVIDIA H100 SXM GPUs secures the highest performance titles for all the data center suite benchmarks.

- The PowerEdge XE9640 system with four NVIDIA H100 SXM GPUs secures the highest performance titles among liquid-cooled systems for all data center suite benchmarks.

- The PowerEdge XR5610 system with an NVIDIA L4 Tensor Core GPU offers approximately two to three times higher performance per watt than in the last round and secures the highest power efficiency titles for ResNet, RetinaNet, 3D U-Net 99, 3D U-Net 99.9, and BERT 99.
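To make the performance-per-watt comparison in the last bullet concrete, the following is a minimal sketch of how such a ratio could be computed from a throughput figure and an average power reading. The function names and all numbers are hypothetical placeholders chosen for illustration; they are not values from Dell's submission or from the MLCommons results.

```python
def perf_per_watt(throughput, avg_power_watts):
    """Return throughput per watt (for example, samples/s per W)."""
    if avg_power_watts <= 0:
        raise ValueError("average power must be positive")
    return throughput / avg_power_watts


def efficiency_gain(current, previous):
    """Ratio of current-round efficiency to previous-round efficiency.

    Each argument is a (throughput, avg_power_watts) pair.
    """
    return perf_per_watt(*current) / perf_per_watt(*previous)


# Hypothetical (throughput in samples/s, average system power in W) pairs.
# These are illustrative placeholders, not measured MLPerf results.
previous_round = (6000.0, 300.0)   # 20 samples/s per W
current_round = (12500.0, 250.0)   # 50 samples/s per W

gain = efficiency_gain(current_round, previous_round)
print(f"{gain:.2f}x")  # prints "2.50x"
```

With real data, the throughput and power values would come from the published results for the same benchmark and scenario in both rounds, so that the ratio compares like with like.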

Highlights

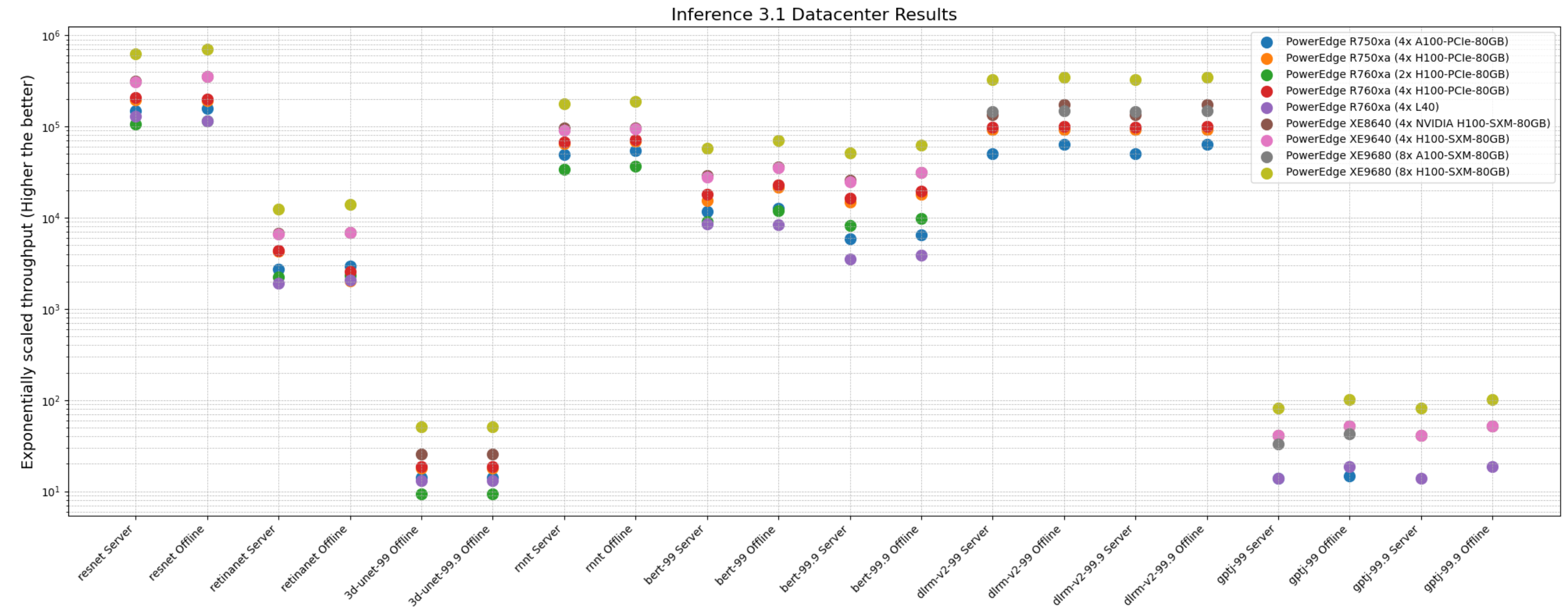

The following figure shows the performance of the different systems for the Offline and Server scenarios in the data center suite. These results provide an overview; future blogs will provide more details about the results.

The figure shows that these servers delivered excellent performance for all models in the benchmark: ResNet, RetinaNet, 3D U-Net, RNN-T, BERT, DLRM-v2, and GPT-J. Note that different benchmarks operate on different scales; they are shown together in the following figure to offer a comprehensive overview.

Figure 1: System throughput for submitted systems for the data center suite

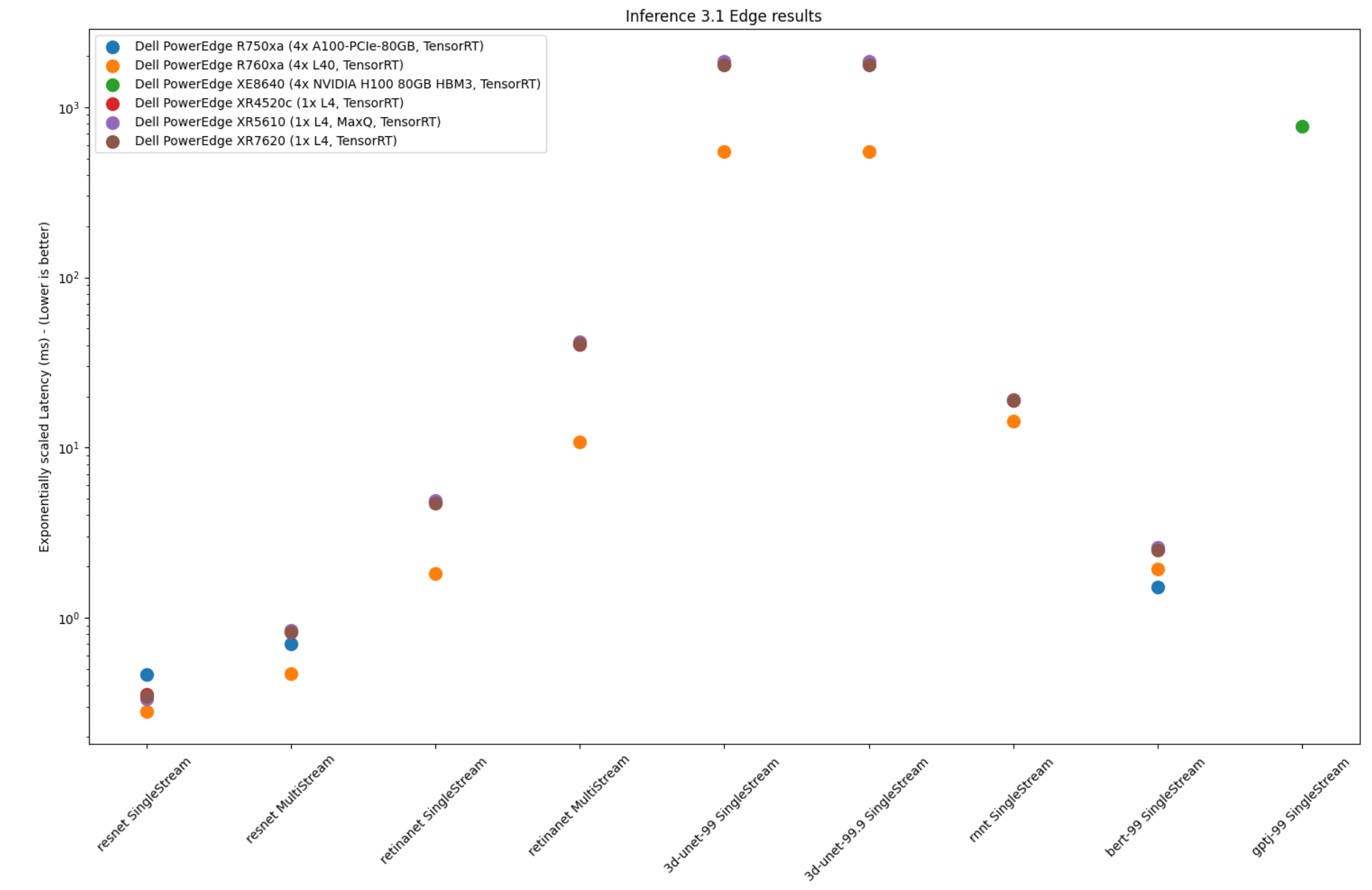

The following figure shows SingleStream and MultiStream scenario results for the edge suite for the ResNet, RetinaNet, 3D U-Net, RNN-T, BERT 99, and GPT-J benchmarks. The lower the latency, the better the result.

Figure 2: Latency of edge systems

Conclusion

We have provided MLCommons-compliant submissions to the Inference 3.1 benchmark across its suites and for all of its tasks: image classification, object detection, natural language processing, speech recognition, recommender systems, medical image segmentation, and LLM summarization. These results indicate that with the newer generation of Dell PowerEdge servers, such as the PowerEdge XE9680, XE8640, XE9640, and R760xa, and newer GPUs from NVIDIA, end users can benefit from higher performance in their data center and edge inference deployments. We have also secured numerous number-one titles, which make Dell PowerEdge servers an excellent choice for data center and edge inference deployments. End users can refer to the results across the various servers to make performance and sizing decisions. With these results, Dell Technologies can help fuel enterprises’ AI transformation, including the effective adoption and expansion of Generative AI.

Future Steps

More blogs that provide an in-depth comparison of the performance of specific models with different accelerators are coming soon. For any questions or requests, contact your local Dell representative.

MLCommons Results

https://mlcommons.org/en/inference-datacenter-31/

https://mlcommons.org/en/inference-edge-31/

The graphs above are based on MLCommons results with MLPerf IDs 3.1-0058 to 3.1-0069 for the closed data center suite, 3.1-0058 to 3.1-0075 for the closed edge suite, and 3.1-0073 for the closed edge power suite.

The MLPerf™ name and logo are trademarks of MLCommons Association in the United States and other countries. All rights reserved. Unauthorized use strictly prohibited. See www.mlcommons.org for more information.