
Dell Validated Design for HPC BeeGFS High Capacity Storage with 16G Servers

Fri, 03 May 2024 22:02:08 -0000


The Dell Validated Design (DVD) for HPC BeeGFS High Capacity Storage is a fully supported, high-throughput, scale-out, parallel file system storage solution. The system is highly available and capable of supporting multi-petabyte storage. This blog discusses the solution architecture, describes how it is tuned for HPC performance, and presents I/O performance using IOZone sequential and MDTest benchmarks.

BeeGFS high-performance storage solutions built on NVMe devices are designed as a scratch storage solution for datasets which are usually not retained beyond the lifetime of the job. For more information, see HPC Scratch Storage with BeeGFS.

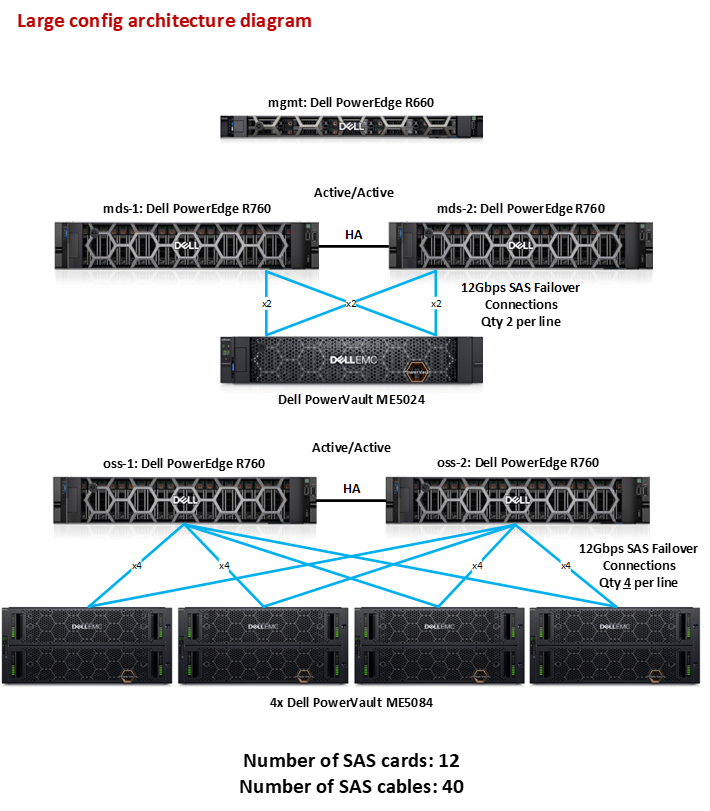

Figure 1. DVD for BeeGFS High Capacity - Large Configuration

The system shown in Figure 1 uses a PowerEdge R660 as the management server and entry point into the system. There are two pairs of R760 servers, each in an active-active high availability configuration. One pair serves as Metadata Servers (MDS) connected to a PowerVault ME5024 storage array, which hosts the Metadata Targets (MDTs). The other pair serves as Object Storage Servers (OSS) connected to up to four PowerVault ME5084 storage arrays, which host the Storage Targets (STs) for the BeeGFS file system. The ME5084 arrays support hard disk drives (HDDs) of up to 22TB. To scale beyond four ME5084s, additional OSS pairs are needed.

This solution uses Mellanox InfiniBand HDR (200 Gb/s) for the data network on the OSS pair, and InfiniBand HDR-100 (100 Gb/s) for the MDS pair. The clients and servers are connected to the 1U Mellanox Quantum HDR Edge Switch QM8790, which supports up to 80 ports of HDR100 using splitter cables, or 40 ports of unsplit HDR200. Additionally, a single switch can be configured to have any combination of split and unsplit ports.
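As a rough illustration of the split/unsplit port budget described above, the following sketch counts how many physical QM8790 ports a mix of HDR and HDR-100 links would occupy. The function name `ports_needed` is hypothetical, and the link counts are taken from the HCA quantities listed in Tables 1 and 2 later in this post; it assumes every HCA port is cabled to the same switch.

```shell
#!/usr/bin/env bash
# Physical switch ports needed for a mix of unsplit HDR and split HDR-100 links.
# Each physical port carries either one HDR link or two HDR-100 links (splitter cable).
ports_needed() {
    local hdr_links=$1 hdr100_links=$2
    # Round up: an odd number of HDR-100 links still occupies a whole physical port.
    echo $(( hdr_links + (hdr100_links + 1) / 2 ))
}

# Testbed link counts (assumes all HCA ports connect to one switch):
#   OSS: 2 servers x 2 HDR HCAs                      = 4 HDR links
#   MDS: 2 servers x 2 HDR-100 HCAs + MGMT 1 + 16 clients = 21 HDR-100 links
used=$(ports_needed 4 21)
echo "physical ports used: ${used} of 40"
```

Under these assumptions the testbed fits comfortably on a single switch, leaving ports free for additional clients or OSS pairs.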

Hardware and Software Configuration

The following tables describe the hardware specifications and software versions validated for the solution.

Table 1. Testbed configuration

| Component | Details |
| --- | --- |
| Management Server | 1x Dell PowerEdge R660 |
| Metadata Servers (MDS) | 2x Dell PowerEdge R760 |
| Object Storage Servers (OSS) | 2x Dell PowerEdge R760 |
| Processor | 2x Intel(R) Xeon(R) Gold 6430 |
| Memory | 16x 16GB DDR5, 4800MT/s RDIMMs – 256 GB |
| InfiniBand HCA | MGMT: 1x Mellanox ConnectX-6 single port HDR-100; MDS: 2x Mellanox ConnectX-6 single port HDR-100; OSS: 2x Mellanox ConnectX-6 single port HDR |
| External Storage Controller | MDS: 2x Dell 12Gb/s SAS HBA per R760 server; OSS: 4x Dell 12Gb/s SAS HBA per R760 server |
| Data Storage Enclosure | Small: 1x Dell PowerVault ME5084; Medium: 2x Dell PowerVault ME5084; Large: 4x Dell PowerVault ME5084. Drive configuration is described in the section Storage Service |
| Metadata Storage Enclosure | 1x Dell PowerVault ME5024 enclosure fully populated with 24 drives. Drive configuration is described in the section Metadata Service |
| RAID controllers | Duplex SAS RAID controllers in the ME5084 and ME5024 enclosures |
| Hard Disk Drives | Per ME5084 enclosure: 84x SAS3 drives supported by ME5084; Per ME5024 enclosure: 24x SAS3 SSDs supported by ME5024 |
| Operating System | Red Hat Enterprise Linux release 8.6 (Ootpa) |
| Kernel Version | 4.18.0-372.9.1.el8.x86_64 |
| Mellanox OFED version | MLNX_OFED_LINUX-5.6-2.0.9.0 |
| Grafana | 10.3.3-1 |
| InfluxDB | 1.8.10-1 |
| BeeGFS File System | 7.4.0p1 |

Solution Configuration Details

The BeeGFS architecture consists of four main services:

- Management Service

- Metadata Service

- Storage Service

- Client Service

There is also an optional BeeGFS Monitoring Service.

Except for the client service which is a kernel module, the management, metadata, and storage services are user space processes. It is possible to run any combination of BeeGFS services (client and server components) together on the same machine. It is also possible to run multiple instances of any BeeGFS service on the same machine. In the Dell High Capacity configuration of BeeGFS, the metadata server runs the metadata services (1 per Metadata Target), as well as the monitoring service and management service. It’s important to note that the management service runs on the metadata server, not on the management server. This ensures that there is a redundant node to fail over to in case of a single server outage. The storage service (only 1 per server) runs on the storage servers.

Management Service

The beegfs-mgmtd service runs on the metadata servers (not the management server) and handles registration of resources (storage, metadata, monitoring) and clients. The mgmtd store is initialized as a small partition on the first metadata target. In a healthy system, the service is started on MDS-1.

Metadata Service

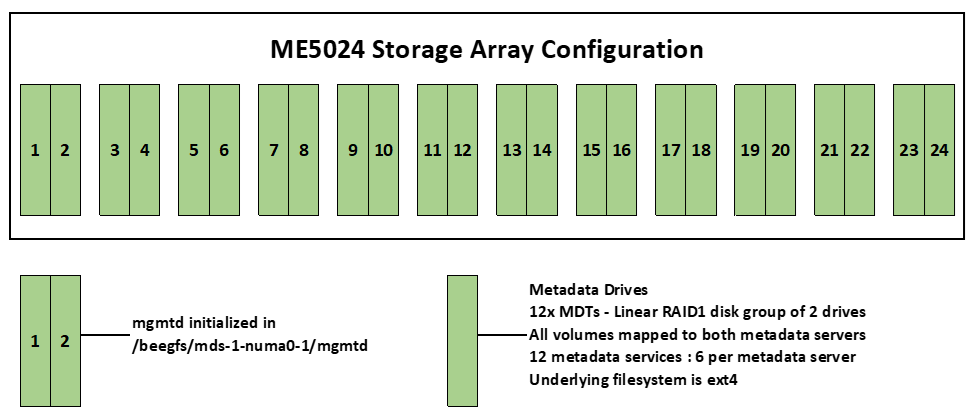

The ME5024 storage array used for metadata storage is fully populated with 24x 960 GB SSDs in this evaluation. These drives are configured in 12x linear RAID1 disk groups of two drives each as shown in Figure 2. Each RAID1 group is a metadata target (MDT).

Figure 2. Fully Populated ME5024 array with 12 MDTs

In BeeGFS, each metadata service handles only a single MDT. Since there are 12 MDTs, there are 12 metadata service instances. Each of the two metadata servers runs six instances of the metadata service. The metadata targets are formatted with an ext4 file system because ext4 performs well with small files and small file operations. Additionally, BeeGFS stores information in extended attributes and directly on the inodes of the file system to optimize performance, both of which work well with the ext4 file system.
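The metadata layout arithmetic above can be checked with a few lines of shell. This is only a worked example of the numbers in the text; the variable names are illustrative.

```shell
#!/usr/bin/env bash
# Metadata layout on the ME5024, using the figures from the text.
drives=24            # SSDs in the fully populated ME5024
drives_per_group=2   # each RAID1 disk group mirrors two drives
mds_servers=2        # active-active metadata server pair

mdts=$(( drives / drives_per_group ))     # one MDT per RAID1 group
per_server=$(( mdts / mds_servers ))      # one metadata service instance per MDT
echo "MDTs: ${mdts}, metadata service instances per server: ${per_server}"
```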

Storage Service

The data storage solution evaluated in this blog is distributed across four PowerVault ME5084 arrays, which make up the large configuration. There are also a medium configuration (two arrays) and a small configuration (a single array), which are not assessed in this document. Linear RAID-6 disk groups of 10 drives (8+2) are created on each array, and a single volume using all the space is created for every disk group. Each ME5084 array has 84 drives, so this results in eight RAID-6 disk groups/volumes per array. The four remaining drives are configured as global hot spares across the array volumes. The storage targets are formatted with an XFS file system because it delivers high write throughput and scalability with RAID arrays.

There are a total of 32x RAID-6 volumes across 4x ME5084 in the base configuration shown in Figure 1. Each of these RAID-6 volumes is configured as a Storage Target (ST) for the BeeGFS file system, resulting in a total of 32 STs.

Each ME5084 array has 84 drives, with drives numbered 0-41 in the top drawer and 42-83 in the bottom drawer. In Figure 3, each drive is labeled 1-8 to signify which RAID-6 disk group it belongs to. The drives marked “S” are configured as global hot spares, which automatically take the place of any failed drive in a disk group.

Figure 3. RAID 6 (8+2) disk group layout on one ME5084
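The disk group layout described in this section can be worked through as follows. The drive size of 22 TB is an assumption (the largest HDD the text says the ME5084 supports), so the capacity figure is illustrative only and excludes file system and formatting overhead.

```shell
#!/usr/bin/env bash
# Storage layout arithmetic for the large configuration.
drives_per_array=84
group_width=10       # RAID-6 8+2
data_per_group=8     # data drives per disk group
arrays=4             # large configuration
drive_tb=22          # ASSUMPTION: largest supported HDD; the tested drive size may differ

groups_per_array=$(( drives_per_array / group_width ))
spares_per_array=$(( drives_per_array - groups_per_array * group_width ))
storage_targets=$(( groups_per_array * arrays ))
data_capacity_tb=$(( storage_targets * data_per_group * drive_tb ))

echo "disk groups per array: ${groups_per_array}"
echo "hot spares per array:  ${spares_per_array}"
echo "storage targets:       ${storage_targets}"
echo "data capacity (TB, before file system overhead): ${data_capacity_tb}"
```

With these inputs the data capacity comes to several petabytes, which is in line with the multi-petabyte claim at the start of this post.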

Client Service

The BeeGFS client module is loaded on all the hosts which require access to the BeeGFS file system. When the BeeGFS module is loaded and the beegfs-client service is started, the service mounts the file systems defined in /etc/beegfs/beegfs-mounts.conf instead of the usual approach based on /etc/fstab. With this approach, the beegfs-client service starts, like any other Linux service, through the service startup script and enables the automatic recompilation of the BeeGFS client module after system updates.
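To make the mount mechanism concrete, the sketch below writes a file in the beegfs-mounts.conf layout, where each line pairs a mount point with the client configuration file to use for it. The helper `write_mounts_conf` is hypothetical, and the example writes to a scratch file rather than the live /etc/beegfs/beegfs-mounts.conf; the mount point /mnt/beegfs matches the path used later in this post.

```shell
#!/usr/bin/env bash
# Write one "<mount point> <client config file>" line in beegfs-mounts.conf style.
write_mounts_conf() {
    local target=$1 mountpoint=$2 client_conf=$3
    printf '%s %s\n' "$mountpoint" "$client_conf" > "$target"
}

# Demonstrate against a temporary file instead of /etc/beegfs/beegfs-mounts.conf.
demo=$(mktemp)
write_mounts_conf "$demo" /mnt/beegfs /etc/beegfs/beegfs-client.conf
cat "$demo"

# On a real client, the service would then be restarted to pick up the change, e.g.:
#   systemctl restart beegfs-client
```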

Monitoring Service

The BeeGFS monitoring service (beegfs-mon.service) collects BeeGFS statistics and provides them to the user, using the time series database InfluxDB. For visualization of data, beegfs-mon-grafana provides predefined Grafana dashboards that can be used out of the box. Figure 4 provides an overview of the main dashboard, showing BeeGFS storage server statistics during a short benchmark run. There are charts that display how much network traffic the servers are handling, how much data is being read and written to the disk, etc.

The monitoring capabilities in this release have been upgraded with Telegraf, allowing users to view system-level metrics such as CPU load (per-core and aggregate), memory usage, process count, and more.

Figure 4. Grafana Dashboard - BeeGFS Storage Server

High Availability

In this release, the high-availability capabilities of the solution have expanded to improve the resiliency of the file system. The high availability software now takes advantage of dual network rings so that it can communicate over both the management Ethernet interface and InfiniBand. Additionally, the primary quorum of decision-making nodes has been expanded to include all five base servers, instead of the previous three (MGMT, MDS-1, MDS-2).
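The effect of growing the quorum from three to five nodes can be illustrated with simple majority arithmetic. This sketch assumes a plain majority-vote scheme with one vote per node; the post does not describe the quorum policy in detail, so treat the numbers as indicative rather than as the behavior of the deployed cluster software.

```shell
#!/usr/bin/env bash
# Majority quorum arithmetic (ASSUMPTION: one vote per node, simple majority).
quorum_size()        { echo $(( $1 / 2 + 1 )); }
tolerated_failures() { echo $(( $1 - ($1 / 2 + 1) )); }

for nodes in 3 5; do
    echo "${nodes} voters: quorum=$(quorum_size "$nodes"), tolerated failures=$(tolerated_failures "$nodes")"
done
```

Under that assumption, a five-node quorum would keep a majority with two nodes down, where a three-node quorum could lose only one.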

Performance Evaluation

This section presents the performance characteristics of the DVD for HPC BeeGFS High Capacity Storage using IOZone sequential and MDTest. More information about performance characterization using IOR N-1, IOZone random, IO500, and StorageBench will be available in an upcoming white paper.

The storage performance was evaluated using the IOZone benchmark, which measured sequential read and write throughput. Table 2 describes the configuration of the PowerEdge C6420 nodes used as BeeGFS clients for these performance studies. These client nodes use a mix of processors because of resource availability; everything else is identical.

Table 2. Client Configuration

| Component | Details |
| --- | --- |
| Clients | 16x Dell PowerEdge C6420 |
| Processor per client | 12 clients: 2x Intel(R) Xeon(R) Gold 6248 CPU @ 2.50GHz; 3 clients: 2x Intel(R) Xeon(R) Gold 6230 CPU @ 2.10GHz; 1 client: 2x Intel(R) Xeon(R) Platinum 8260 CPU @ 2.40GHz |
| Memory | 12x 16GB DDR4 2933MT/s DIMMs – 192GB |
| Operating System | Red Hat Enterprise Linux release 8.4 (Ootpa) |
| Kernel Version | 4.18.0-305.el8.x86_64 |
| Interconnect | 1x Mellanox ConnectX-6 single port HDR-100 adapter |
| OFED Version | MLNX_OFED_LINUX-5.6-2.0.9.0 |

The clients are connected over an HDR-100 network, while the servers are connected with a mix of HDR-100 and HDR. This configuration is described in Table 3.

Table 3. Network Configuration

| Component | Details |
| --- | --- |
| InfiniBand Switch | QM8790 Mellanox Quantum HDR Edge Switch – 1U with 40x HDR 200Gb/s ports (ports can also be split into 2x HDR-100 100Gb/s) |
| Management Switch | PowerSwitch N3248TE-ON – 1U with 48x 1Gb is recommended. Due to resource availability, this DVD was tested with a Dell PowerConnect 6248 Switch – 1U with 48x 1Gb |
| InfiniBand HCA | MGMT: 1x Mellanox ConnectX-6 single port HDR-100; MDS: 2x Mellanox ConnectX-6 single port HDR-100; OSS: 2x Mellanox ConnectX-6 single port HDR; Clients: 1x Mellanox ConnectX-6 single port HDR-100 |

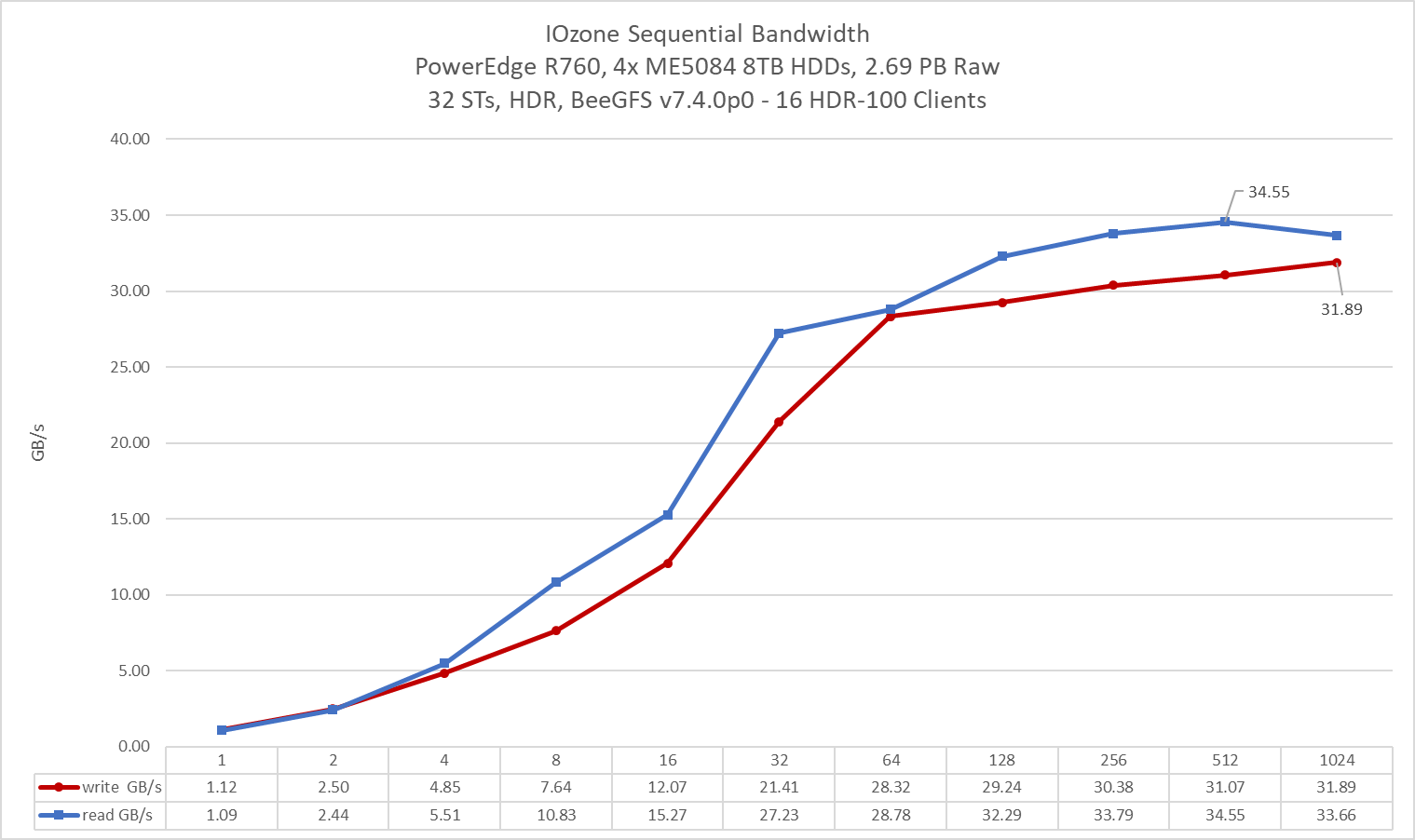

Sequential Reads and Writes N-N

The sequential reads and writes were measured using IOZone (v3.492). Tests were conducted with multiple thread counts starting at one thread and increasing in powers of two, up to 1,024 threads. This test is N-N; one file will be generated per thread. The processes were distributed across the 16 physical client nodes in a round-robin manner so that requests were equally distributed with load balancing.
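The round-robin placement of threads across clients can be expressed through the client list that IOZone reads with `-+m`, in which each line names a client host, its working directory, and the path to the iozone binary on that host. The sketch below generates such a list; the host names (`node001` to `node016`), the working directory, and the binary path are placeholders, not the values used in these tests.

```shell
#!/usr/bin/env bash
# Emit an IOZone -+m client list: "<hostname> <working dir> <path to iozone>",
# one line per thread, cycling through the clients so that consecutive threads
# land on different nodes.
make_threadlist() {
    local threads=$1 clients=$2 workdir=$3 iozone_bin=$4
    local i
    for (( i = 0; i < threads; i++ )); do
        # Host naming scheme is a placeholder for the real client names.
        printf 'node%03d %s %s\n' $(( i % clients + 1 )) "$workdir" "$iozone_bin"
    done
}

# 18 threads over 16 clients: the last lines show the wrap-around to the first nodes.
make_threadlist 18 16 /mnt/beegfs/stripe1 /usr/bin/iozone | tail -n 3
```

Redirecting the output to a file gives a list that can be passed to the benchmark with `-+m`.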

For thread counts two and above, an aggregate file size of 8TB was chosen to minimize the effects of caching from the servers as well as from the BeeGFS clients. For one thread, a file size of 1TB was chosen. Within any given test, the aggregate file size used was equally divided among the number of threads. A record size of 1MiB was used for all runs. The benchmark was split into two commands: a write benchmark and a read benchmark, which are shown below.

iozone -i 0 -c -e -w -C -r 1m -s $Size -t $Thread -+n -+m /path/to/threadlist # write

iozone -i 1 -c -e -C -r 1m -s $Size -t $Thread -+n -+m /path/to/threadlist # read

OS caches were dropped on the servers and clients between iterations, as well as between the write and read tests, by running the following command:

sync; echo 3 > /proc/sys/vm/drop_caches
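The `$Size` and `$Thread` values used in the IOZone commands above follow from dividing the aggregate file size among the threads. The sketch below derives them for the full sweep. It reads "8TB" and "1TB" as binary units (TiB), which is an assumption, and expresses sizes in MiB using IOZone's `m` suffix.

```shell
#!/usr/bin/env bash
# Per-thread IOZone file size in MiB for an aggregate size (MiB) and thread count.
per_thread_mib() { echo $(( $1 / $2 )); }

aggregate_mib=$(( 8 * 1024 * 1024 ))   # 8 TB aggregate, read here as 8 TiB (ASSUMPTION)
single_mib=$(( 1024 * 1024 ))          # 1 TB for the single-thread run, read as 1 TiB

for (( t = 1; t <= 1024; t *= 2 )); do
    if (( t == 1 )); then
        size=$single_mib
    else
        size=$(per_thread_mib "$aggregate_mib" "$t")
    fi
    echo "Thread=${t} Size=${size}m"
done
```

At 1,024 threads each thread writes an 8 GiB file under this reading, while two threads write 4 TiB each.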

Figure 5. N-N Sequential Performance

Figure 5 illustrates the performance scaling of read and write operations. The peak read throughput of 34.55 GB/s is achieved with 512 threads, while the peak write throughput of 31.89 GB/s is reached with 1024 threads. The performance shows a steady increase as the number of threads increases, with read performance beginning to stabilize at 32 threads and write performance at 64 threads. Beyond these points, the performance increases at a gradual rate, maintaining an approximate throughput of 34 GB/s for reads and 31 GB/s for writes. Regardless of the thread count, read operations consistently match or exceed write operations.
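For context, the measured peaks can be set against the raw InfiniBand line rates of the testbed. The sketch below is back-of-the-envelope arithmetic only: it converts nominal link speeds to GB/s without accounting for encoding or protocol overhead, and the helper name `line_rate_gbytes` is illustrative.

```shell
#!/usr/bin/env bash
# Nominal aggregate line rate in GB/s for N links at a given Gb/s
# (ignores encoding and protocol overhead).
line_rate_gbytes() {
    awk -v n="$1" -v gbit="$2" 'BEGIN { printf "%.1f", n * gbit / 8 }'
}

oss_side=$(line_rate_gbytes 4 200)       # 2 OSS x 2 HDR HCAs
client_side=$(line_rate_gbytes 16 100)   # 16 clients x 1 HDR-100 HCA
per_target=$(awk 'BEGIN { printf "%.2f", 34.55 / 32 }')  # peak read spread over 32 STs

echo "OSS-side nominal line rate:    ${oss_side} GB/s"
echo "client-side nominal line rate: ${client_side} GB/s"
echo "peak read per storage target:  ${per_target} GB/s"
```

Since the peak read throughput is well below both nominal figures, the plateau is probably set somewhere other than the InfiniBand fabric, although this arithmetic alone cannot identify where.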

Tuning Parameters

The following tuning parameters were in place while carrying out the performance characterization of the solution.

The default stripe count for BeeGFS is 4; however, the chunk size and number of targets per file (stripe count) can be configured on a per-directory or per-file basis. For all of these tests, the BeeGFS chunk size was set to 1MiB and the stripe count was set to 1, as shown below:

[root@node025 ~]# beegfs-ctl --getentryinfo --mount=/mnt/beegfs/ /mnt/beegfs/stripe1 --verbose

Entry type: directory

EntryID: 0-65D529C1-7

ParentID: root

Metadata node: mds-1-numa1-2 [ID: 5]

Stripe pattern details:

+ Type: RAID0

+ Chunksize: 1M

+ Number of storage targets: desired: 1

+ Storage Pool: 1 (Default)

Inode hash path: 4E/25/0-65D529C1-7

Transparent huge pages were disabled, and the following virtual memory settings were configured on the metadata and storage servers:

/proc/sys/vm/dirty_background_ratio = 5

/proc/sys/vm/dirty_ratio = 20

/proc/sys/vm/min_free_kbytes = 262144

/proc/sys/vm/vfs_cache_pressure = 50

The following tuning options were used for the storage block devices on the storage servers:

IO Scheduler: deadline

Number of schedulable requests: 2048

Maximum amount of read ahead data: 4096
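One way the virtual memory and block device settings above might be applied is sketched below. The script only prints what it would do unless `APPLY=1` is set, and `sdX` is a placeholder for each storage target device. Mapping "number of schedulable requests" to `nr_requests` and "read ahead data" to `read_ahead_kb` is an assumption, as is the use of the transparent huge pages sysfs switch; the post does not show the exact commands that were run.

```shell
#!/usr/bin/env bash
# Dry-run by default: prints each write instead of performing it.
# Set APPLY=1 and run as root on a server to actually apply the values.
set_knob() {
    local path=$1 value=$2
    if [ "${APPLY:-0}" = "1" ]; then
        echo "$value" > "$path"
    else
        echo "would write '${value}' to ${path}"
    fi
}

# Virtual memory settings listed in the text.
set_knob /proc/sys/vm/dirty_background_ratio 5
set_knob /proc/sys/vm/dirty_ratio 20
set_knob /proc/sys/vm/min_free_kbytes 262144
set_knob /proc/sys/vm/vfs_cache_pressure 50

# Transparent huge pages disabled (ASSUMPTION: via the standard sysfs switch).
set_knob /sys/kernel/mm/transparent_hugepage/enabled never

# Block device settings; "sdX" is a placeholder for each storage target device.
dev=${DEV:-sdX}
set_knob "/sys/block/${dev}/queue/scheduler" deadline   # may be listed as mq-deadline on blk-mq kernels
set_knob "/sys/block/${dev}/queue/nr_requests" 2048     # ASSUMED mapping
set_knob "/sys/block/${dev}/queue/read_ahead_kb" 4096   # ASSUMED mapping and unit
```

Values written this way do not survive a reboot, so a production deployment would normally persist them through its own configuration management.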

In addition to the above, the following BeeGFS-specific tuning options were used:

beegfs-meta.conf

connMaxInternodeNum = 96

logLevel = 3

tuneNumWorkers = 4

tuneTargetChooser = roundrobin

tuneUsePerUserMsgQueues = true

beegfs-storage.conf

connMaxInternodeNum = 32

tuneBindToNumaZone =

tuneFileReadAheadSize = 0m

tuneFileReadAheadTriggerSize = 4m

tuneFileReadSize = 1M

tuneFileWriteSize = 1M

tuneFileWriteSyncSize = 0m

tuneNumWorkers = 10

tuneUsePerTargetWorkers = true

tuneUsePerUserMsgQueues = true

tuneWorkerBufSize = 4m

beegfs-client.conf

connRDMABufSize = 524288

connRDMABufNum = 4

Additionally, the ME5084s were initialized with a chunksize of 128k.
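The chunk sizes in this section line up in a way that is worth spelling out: a 128k array chunk across the eight data drives of an 8+2 RAID-6 group gives a 1 MiB full stripe, which equals the 1M BeeGFS chunk size and the 1MiB IOZone record size. The sketch below checks that arithmetic; whether the alignment was a deliberate design goal is not stated in the post.

```shell
#!/usr/bin/env bash
# Compare the RAID full-stripe width with the BeeGFS chunk size.
array_chunk_kib=128     # ME5084 disk group chunk size
data_drives=8           # RAID-6 8+2
beegfs_chunk_kib=1024   # BeeGFS chunk size of 1M

full_stripe_kib=$(( array_chunk_kib * data_drives ))
echo "RAID full stripe: ${full_stripe_kib} KiB"

if (( beegfs_chunk_kib % full_stripe_kib == 0 )); then
    echo "each BeeGFS chunk covers a whole number of RAID stripes"
else
    echo "BeeGFS chunks do not align with RAID stripes"
fi

# A stripe pattern like the one reported earlier could be set on a directory with, e.g.:
#   beegfs-ctl --setpattern --chunksize=1m --numtargets=1 /mnt/beegfs/stripe1
```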

Conclusion and Future Work

This blog presents the performance characteristics of the Dell Validated Design for HPC High Capacity BeeGFS Storage with 16G hardware. This DVD provides a peak performance of 34.6 GB/s for reads and 31.9 GB/s for writes using the IOZone sequential benchmark.

Major improvements have been made in this release to security, high availability, deployment, and telemetry monitoring.

In future projects, we will evaluate other benchmarks including MDTest, IOZone random (random IOP/s), IOR N to 1 (N threads to a single file), NetBench (clients to servers, omitting ME5 devices), and StorageBench (servers to disks directly, omitting network).