Accelerating and Optimizing AI Operations with Infrastructure as Code

Fri, 03 May 2024 12:00:00 -0000

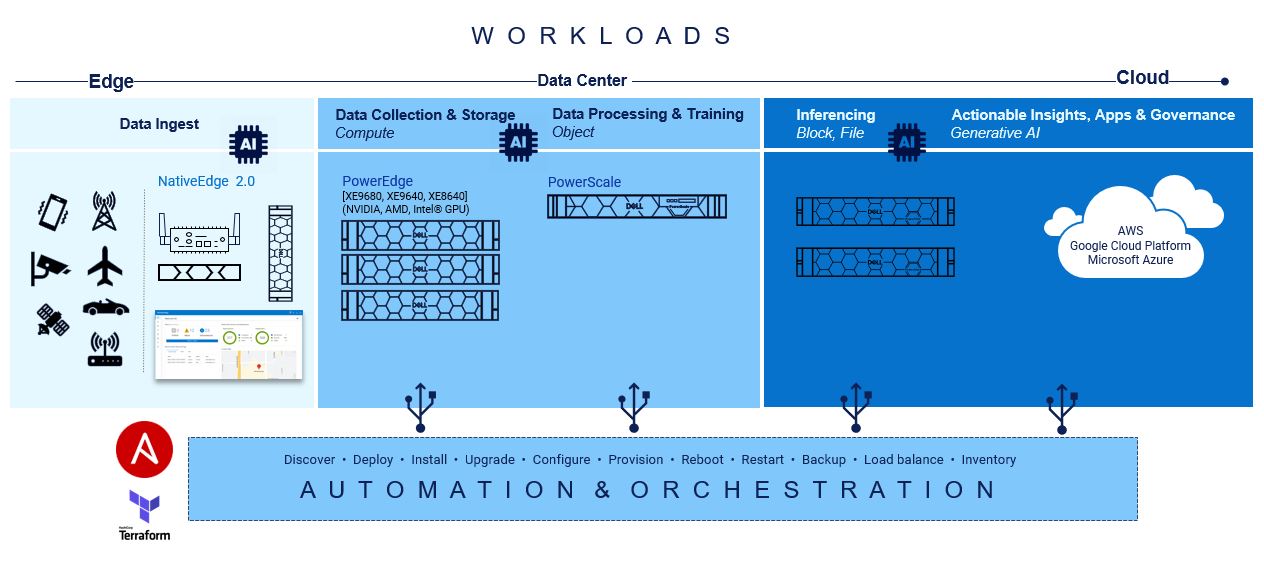

Achieving maturity in a DevOps organization requires overcoming various barriers and following specific steps. The level of maturity attained depends on the short-term and long-term goals set for the infrastructure. In the short term, IT teams must focus on upskilling their staff and integrating tools for containerization and automation throughout the operating lifecycle, from Day 0 to Day 2. Any progress made in scaling up containerized environments and automating processes significantly enhances the long-term economic viability and sustainability of the company. In the long term, maturity involves deploying these solutions across multicloud, multisite landscapes and balancing workloads effectively.

The optimization of your AI applications and, by extension, other high-value workloads hinges upon the velocity, scalability, and efficacy of your infrastructure, as well as the maturity of your DevOps processes. Even before the recent surge in AI, survey results indicated that fewer than 50% of infrastructure operations workflows were automated. Combine that with a twofold increase in application counts, and organizations may struggle to keep up with the pace of change[1].

From compute capabilities to storage density and speed, spanning unstructured, block, and file formats, there exist fundamental elements of automation that are ripe for swift integration to establish a robust foundation. By layering pre-built integration tools and a complementary portfolio of products at each stage, the journey towards ramping up AI can be eased.

There are important considerations regarding the hardware infrastructure components for a generative AI system, including high-performance computing, high-speed networking, and scalable, high-capacity, low-latency storage, to name a few. The infrastructure requirements for AI/ML workloads are dynamic and depend on several factors, including the nature of the task, the size of the dataset, the complexity of the model, and the desired performance levels. There is no one-size-fits-all solution when it comes to GenAI infrastructure, as different tasks and projects may demand unique configurations. Central to the success of generative AI initiatives is the adoption of Infrastructure-as-Code (IaC) principles, which facilitate the automation and orchestration of underlying infrastructure components. By leveraging IaC tools like Red Hat Ansible and HashiCorp Terraform, organizations can streamline the deployment and management of hardware resources, ensuring seamless integration with GenAI workloads.

At the base of this foundation are the Red Hat Ansible modules for Dell, which speed up the provisioning of servers and storage for quick AI application workload mobility.

Creating Ansible playbooks to automate server configuration, provisioning, deployments, and updates is seamless, even while data is being collected. Because Ansible playbooks are declarative and easy to modify, they can be changed in real time without interruption to processes or end users.
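The declarative idea behind a playbook can be sketched in a few lines of Python. This is only an illustration, not how Ansible is implemented: the `Server` class, the `apply_desired_state` function, and the setting names are hypothetical. The sketch shows why re-running the same desired state is safe: only settings that differ are touched, and a second run reports no changes.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    """Hypothetical stand-in for the current state of a managed host."""
    name: str
    settings: dict = field(default_factory=dict)


def apply_desired_state(server: Server, desired: dict) -> dict:
    """Bring `server` in line with `desired` and return only what changed.

    Settings that already match are left untouched, which mirrors how a
    declarative task reports "changed" versus "ok".
    """
    changed = {}
    for key, value in desired.items():
        if server.settings.get(key) != value:
            server.settings[key] = value
            changed[key] = value
    return changed


if __name__ == "__main__":
    # Setting names and values below are illustrative assumptions.
    node = Server("gpu-node-01", {"boot_mode": "Uefi"})
    desired = {"boot_mode": "Uefi", "sriov": "Enabled"}

    print(apply_desired_state(node, desired))  # first run applies the difference
    print(apply_desired_state(node, desired))  # second run changes nothing
```

Because the desired state is plain data, editing it and re-applying it is the whole change workflow, which is the property the paragraph above relies on.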

Compute

On the compute front, a lot goes into configuring servers for the different AI and ML operations:

GPU Drivers and CUDA Toolkit Installation: Install the appropriate GPU drivers for the server's GPU hardware. For example, install the CUDA Toolkit and drivers to enable GPU acceleration for deep learning frameworks such as TensorFlow and PyTorch.

Deep Learning Framework Installation: Install popular deep learning frameworks such as TensorFlow or PyTorch, along with their associated dependencies.

Containerization: Consider using container technologies such as Docker, together with an orchestrator such as Kubernetes, to encapsulate AI workloads and their dependencies into portable and isolated containers. Containerization facilitates reproducibility, scalability, and resource isolation, making it easier to deploy and manage GenAI workloads across different environments.

Performance Optimization: Optimize server configurations, kernel parameters, and system settings to maximize performance and resource utilization for GenAI workloads. Tune CPU and GPU settings, memory allocation, disk I/O, and network configurations based on workload characteristics and hardware capabilities.

Monitoring and Management: Implement monitoring and management tools to track server performance metrics, resource utilization, and workload behavior in real time.

Security Hardening: Ensure server security by applying security best practices, installing security patches and updates, configuring firewalls, and implementing access controls. Protect sensitive data and AI models from unauthorized access, tampering, or exploitation by following security guidelines and compliance standards.
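A few of the items above can be expressed as playbook tasks. The following is a minimal sketch, not a complete or vendor-supplied playbook: it builds an Ansible-style play as plain Python data and prints it as JSON, which YAML parsers generally accept. The module names (`ansible.builtin.package`, `ansible.builtin.pip`, `ansible.posix.sysctl`, `ansible.builtin.service`) are standard Ansible modules, but the package names, the kernel parameter value, the `firewalld` service, and the `gpu_servers` host group are assumptions to adjust for your distribution and inventory.

```python
import json

# Assumed package, framework, and tuning values; adjust for your environment.
GPU_PACKAGES = ["nvidia-driver", "cuda-toolkit"]
FRAMEWORKS = ["torch", "tensorflow"]
KERNEL_PARAMS = {"vm.swappiness": "10"}


def build_playbook(hosts: str) -> list:
    """Return a one-play playbook as plain Python data."""
    tasks = [
        {
            "name": "Install GPU driver and CUDA toolkit",
            "ansible.builtin.package": {"name": GPU_PACKAGES, "state": "present"},
        },
        {
            "name": "Install deep learning frameworks",
            "ansible.builtin.pip": {"name": FRAMEWORKS},
        },
    ]
    # One sysctl task per tuned kernel parameter.
    for key, value in KERNEL_PARAMS.items():
        tasks.append({
            "name": f"Set kernel parameter {key}",
            "ansible.posix.sysctl": {"name": key, "value": value, "state": "present"},
        })
    tasks.append({
        "name": "Ensure the firewall service is running",
        "ansible.builtin.service": {
            "name": "firewalld",
            "state": "started",
            "enabled": True,
        },
    })
    return [{
        "name": "Prepare GPU servers for AI workloads",
        "hosts": hosts,
        "become": True,
        "tasks": tasks,
    }]


if __name__ == "__main__":
    print(json.dumps(build_playbook("gpu_servers"), indent=2))
```

Keeping the package lists and kernel parameters as data at the top means a different server profile only needs different values, not different task logic.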

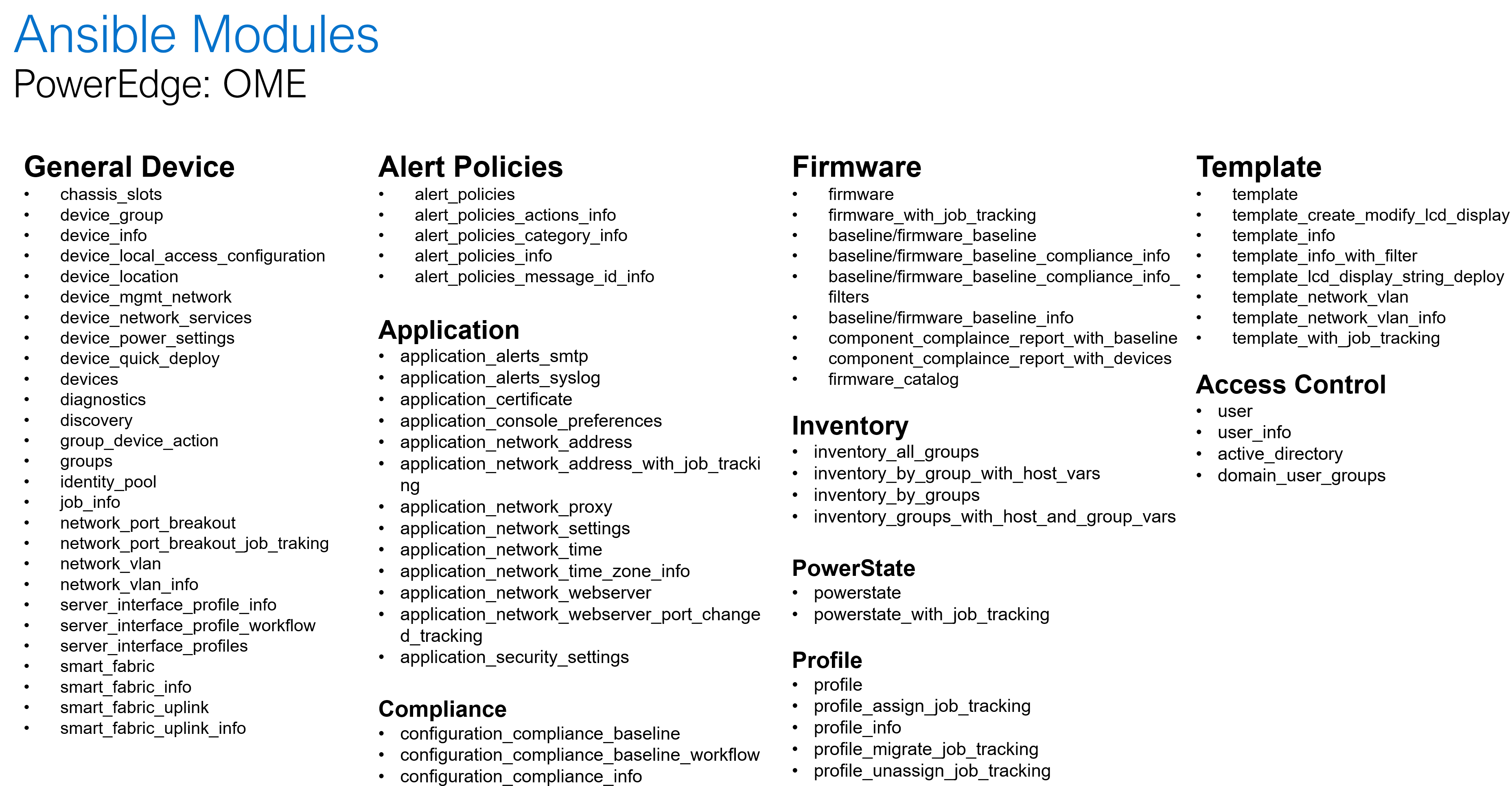

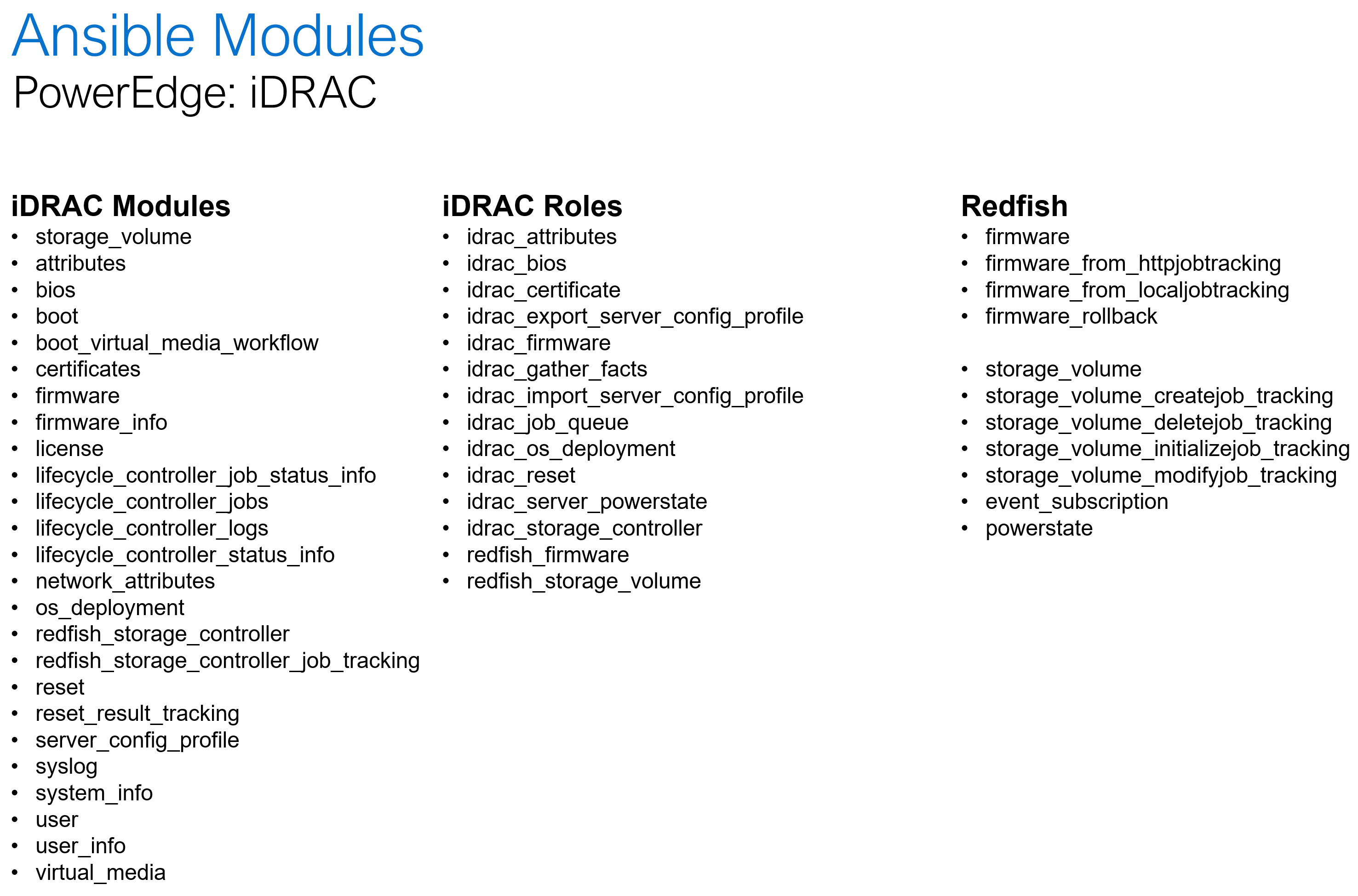

The Dell OpenManage Ansible collection offers modules and roles at both the iDRAC/Redfish interface level and the OpenManage Enterprise (OME) level for configuring servers such as the PowerEdge XE9680, which is designed to collect, develop, train, and deploy large machine learning models such as large language models (LLMs).

The following is a summary of the OME and iDRAC modules and roles as part of the openmanage collection:

Storage

When it comes to AI and storage, the data processing and training phases rely on simple, scalable access to the file systems that hold ever-growing volumes of training data. Unstructured data storage is necessary for AI because it holds the rich context and nuance that is accessed during the building phase. User access requirements also vary widely, and Ansible automation playbooks can help teams change and adapt quickly.

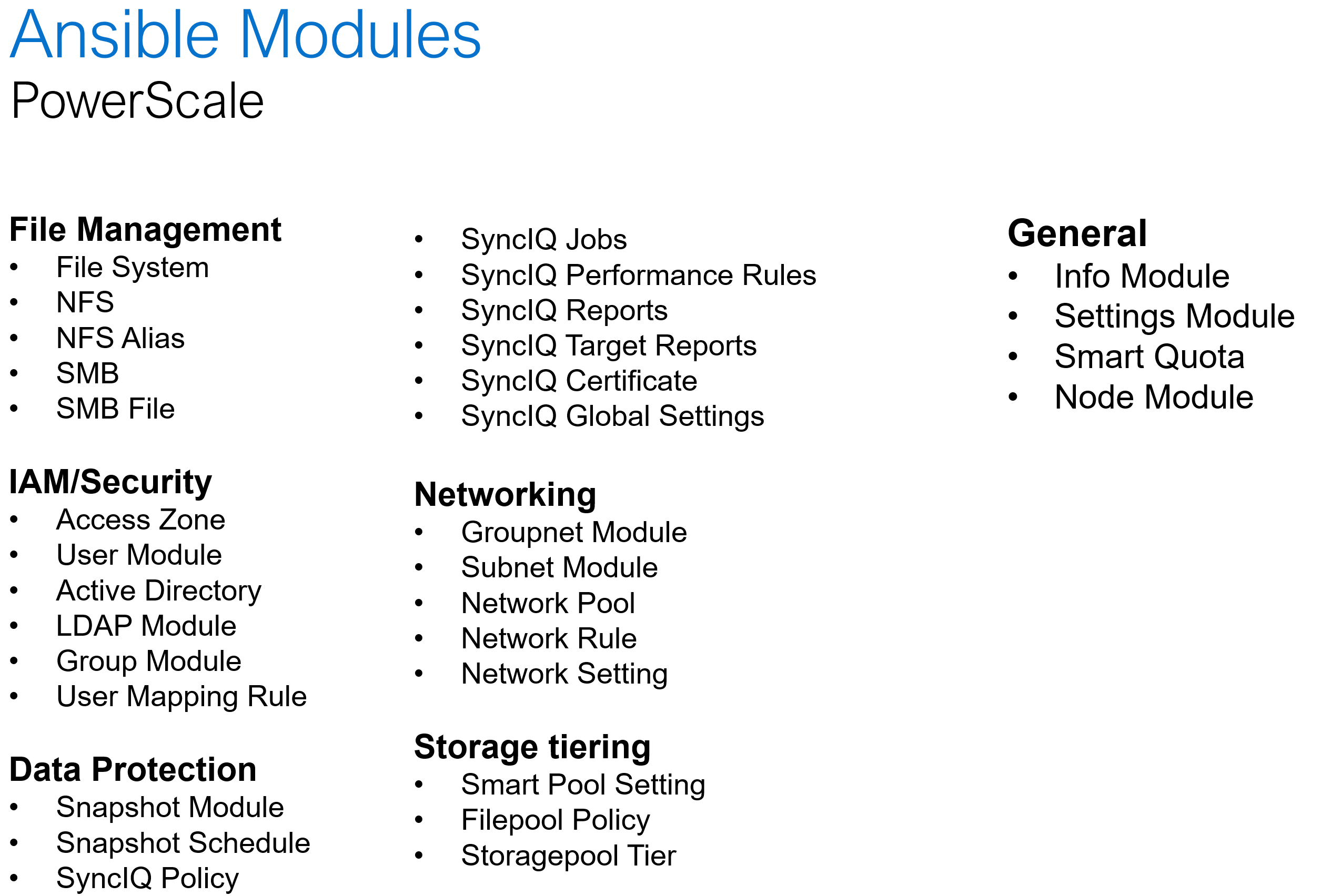

Dell PowerScale is the world’s leading scale-out NAS platform, and it recently became the first Ethernet storage certified on NVIDIA DGX SuperPOD. When it comes to Ansible automation, PowerScale comes with an extensive set of modules that cover a wide range of platform operations:

Software-defined storage

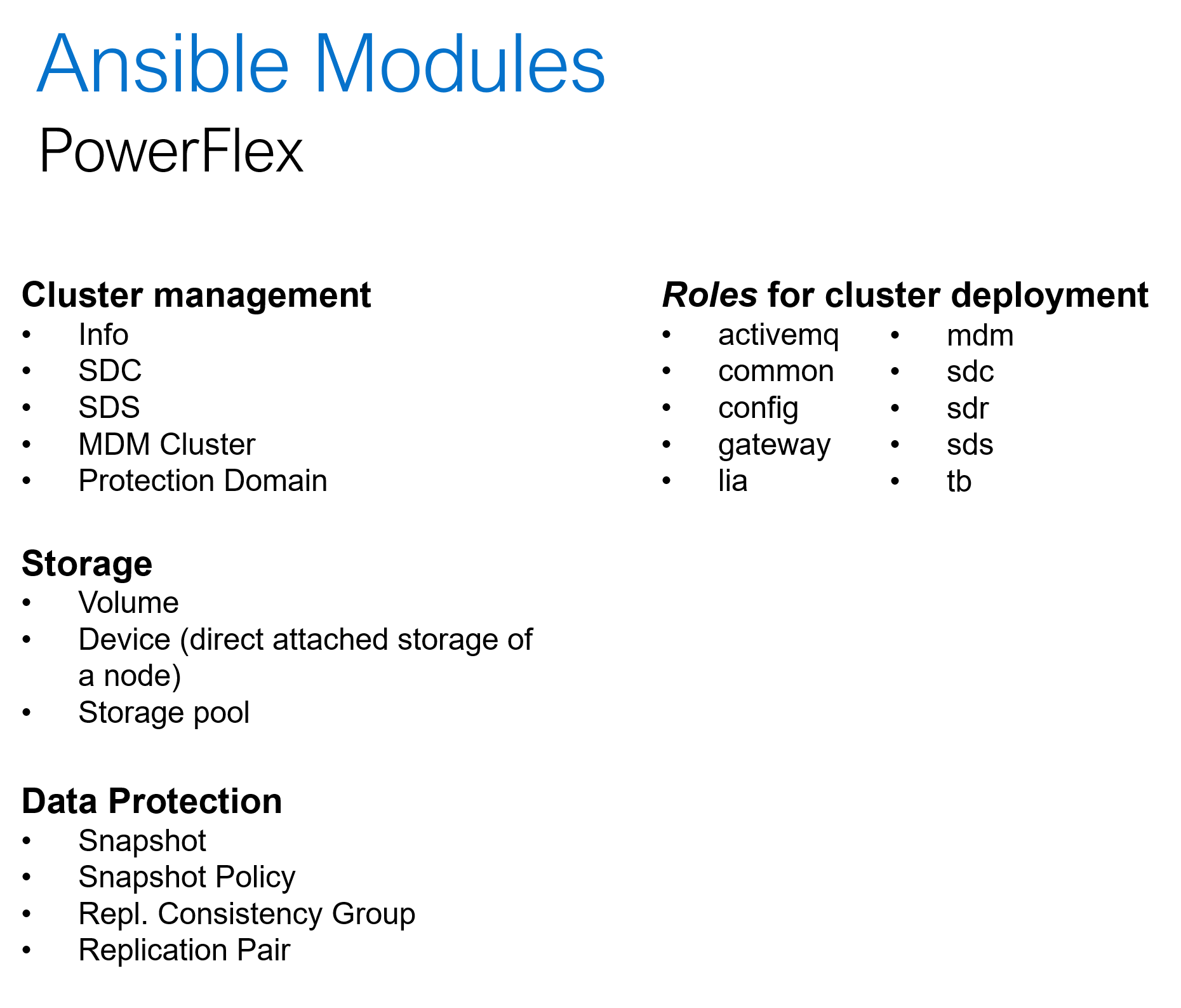

Hyperconverged platforms like PowerFlex offer highly scalable and configurable compute and storage clusters. In addition to common Day 2 tasks like storage provisioning, data protection, and user management, the Ansible collection for PowerFlex can be used for cluster deployment and expansion. Here is a summary of what the Ansible collection for PowerFlex offers:

Conclusion

The one thing agreed upon is that generative AI tools need scale, repeatability, and reliability beyond anything previously built from software and data center infrastructure combined. This is precisely what building infrastructure-as-code practices into a multisite operation is designed to do. From PowerEdge to PowerScale, the level of capacity and performance is unmatched. This allows AI operations and generative AI to absorb, grow, and provide the intelligence that organizations need to be competitive and innovative.

[1] Infrastructure-as-code and DevOps Automation: The Keys to Unlocking Innovation and Resilience, September 2023

Other resources:

GenAI Acceleration Depends on Infrastructure as Code

Authors: Jennifer Aspesi, Parasar Kodati